Exploratory Research on the Potential of Human–AI Interaction for Mental Health: Building and Verifying an Experimental Environment Based on ChatGPT and Metaverse

Abstract

1. Introduction

2. Related Work

2.1. AI as an Emotional Support Companion

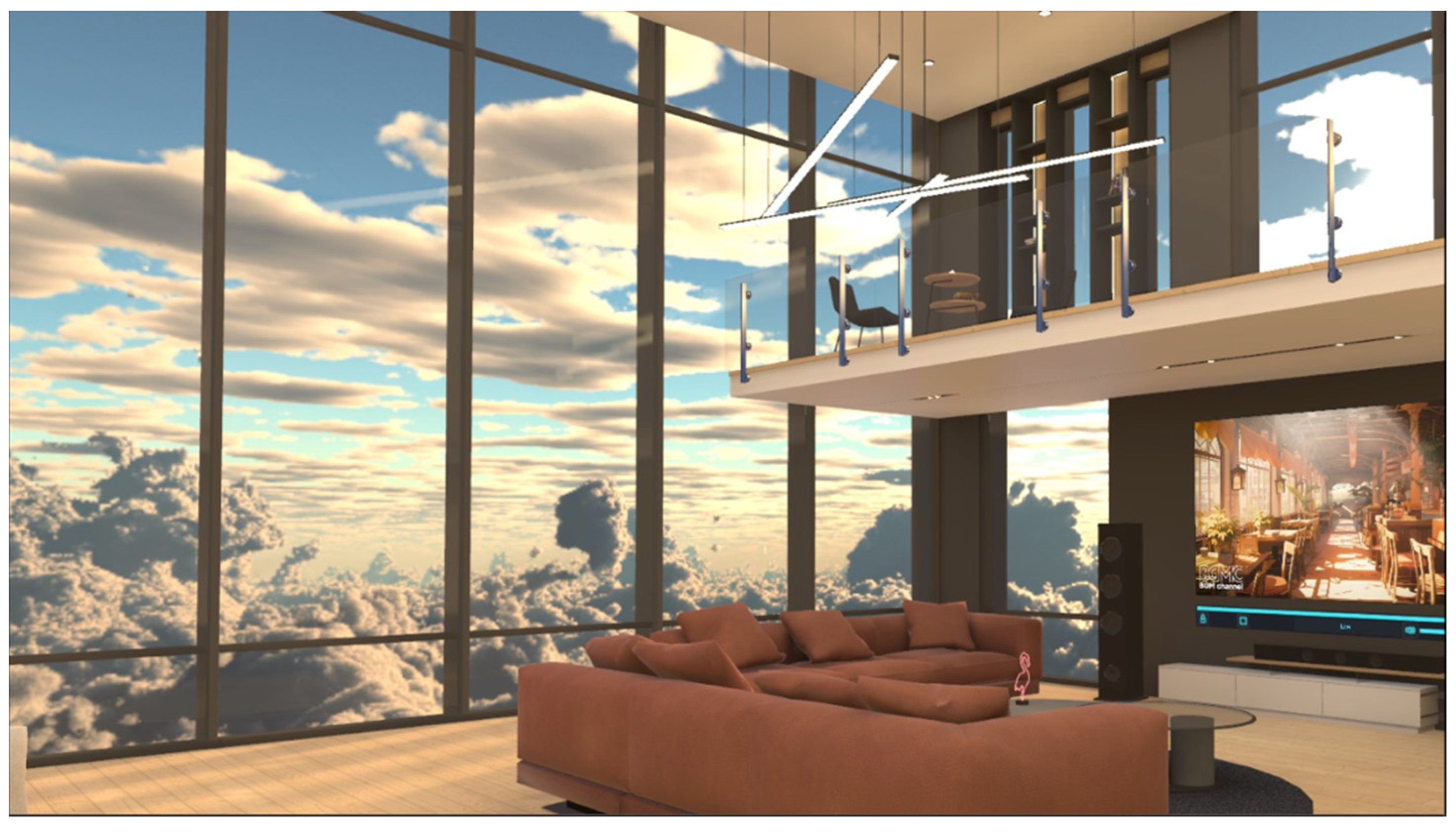

2.2. Metaverse for Avatar-Based Immersive Interactions

2.3. Position of This Study

3. Design and Implementation of an Experimental Environment

3.1. Basic Architecture Based on ChatGPT and Metaverse

3.2. AI Agent Persona and Behavioral Configuration

- Primary Role: The AI agent’s main purpose is to provide comfort and listen empathetically to the user’s concerns.

- Conversational Style: It is instructed to communicate like a gentle and kind friend, using concise responses to maintain a natural conversational rhythm.

- Engagement Strategy: The AI agent actively fosters dialog by occasionally asking open-ended questions to explore topics further.

- Error Handling: It has built-in strategies for politely asking for help when it encounters a potential speech recognition failure.
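As a rough illustration, the four settings above could be collected into a single system prompt. This is a minimal sketch, not the study's actual configuration: the `Persona` class, the `COMPANION` instance, the `build_messages` helper, and the prompt wording are all hypothetical and only paraphrase the list.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Persona:
    """Hypothetical container for the four behavioral settings listed above."""
    primary_role: str
    conversational_style: str
    engagement_strategy: str
    error_handling: str

    def to_system_prompt(self) -> str:
        # Join the settings into one instruction block for the language model.
        return "\n".join([
            f"Role: {self.primary_role}",
            f"Style: {self.conversational_style}",
            f"Engagement: {self.engagement_strategy}",
            f"Error handling: {self.error_handling}",
        ])


# Assumed wording that paraphrases the list; the real prompt is not reproduced here.
COMPANION = Persona(
    primary_role="Provide comfort and listen empathetically to the user's concerns.",
    conversational_style="Speak like a gentle, kind friend and keep responses concise.",
    engagement_strategy="Occasionally ask open-ended questions to explore topics further.",
    error_handling="If the input looks like a speech recognition failure, politely ask for help.",
)


def build_messages(persona: Persona, user_text: str) -> List[Dict[str, str]]:
    """Pair the persona prompt with one user utterance in chat-message form."""
    return [
        {"role": "system", "content": persona.to_system_prompt()},
        {"role": "user", "content": user_text},
    ]
```

Keeping the persona in a structured object rather than a single string would make it easier to vary one setting at a time in later experiments.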

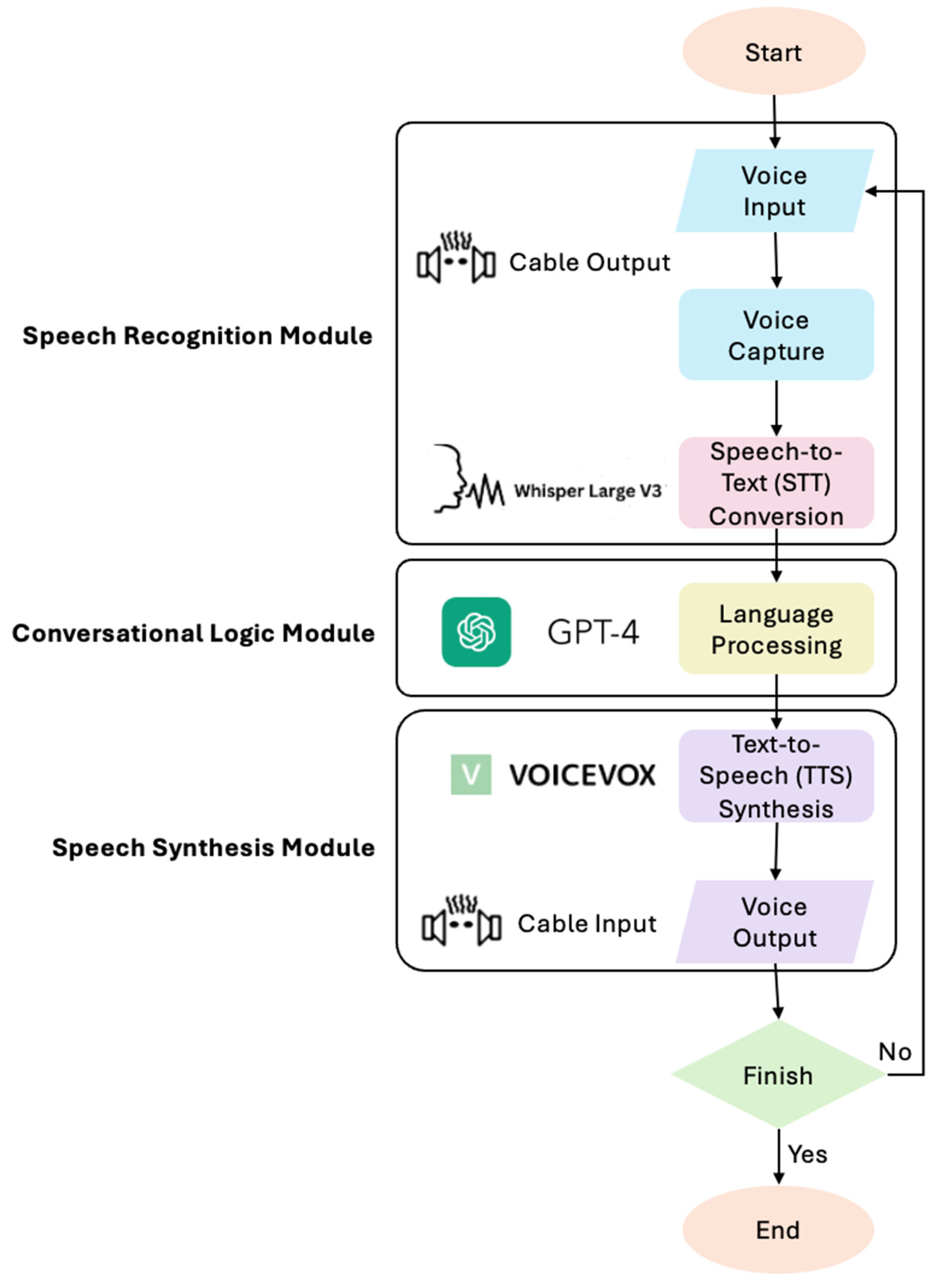

3.3. Functional Modules and Data Processing

3.3.1. Speech Recognition Module

- Voice Input: The process begins when the system receives the user’s voice from the metaverse environment. The audio is routed into the application through the first virtual audio device, the cable output.

- Voice Capture: The incoming audio stream is continuously monitored by a voice activity detection (VAD) algorithm. The monitoring relies on a real-time analysis of the audio waveform’s intensity, calculated using the Root Mean Square (RMS). Recording ceases after a set period of silence is detected, ensuring the complete capture of the user’s utterance.

- Speech-to-Text (STT) Conversion: The recorded audio segment is transcribed by OpenAI’s Whisper Large V3 model [27]. As a state-of-the-art Automatic Speech Recognition (ASR) model, Large V3 is known for its high accuracy and its robustness to varied accents and moderate background noise. This capability is critical for the experiment because it helps the user feel accurately heard and understood, which is a foundation for building a connection and minimizing interactional friction.
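The voice capture step can be sketched as follows. This is a simplified illustration of RMS-based voice activity detection, not the system's actual code: the function names, the threshold of 0.02, and the silence timeout of three frames are assumptions, and audio frames are represented as plain lists of samples in the range -1.0 to 1.0.

```python
import math
from typing import Iterable, List, Sequence


def rms(frame: Sequence[float]) -> float:
    """Root Mean Square intensity of one audio frame."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def capture_utterance(
    frames: Iterable[Sequence[float]],
    threshold: float = 0.02,     # assumed RMS level separating speech from silence
    max_silent_frames: int = 3,  # assumed silence timeout, counted in frames
) -> List[Sequence[float]]:
    """Collect frames from the first loud frame until the silence timeout."""
    recorded: List[Sequence[float]] = []
    silent_run = 0
    for frame in frames:
        loud = rms(frame) >= threshold
        if not recorded and not loud:
            continue  # still waiting for speech to begin
        recorded.append(frame)
        silent_run = 0 if loud else silent_run + 1
        if silent_run >= max_silent_frames:
            break  # silence timeout reached: the utterance is complete
    # Drop the trailing silent frames so only the utterance remains.
    return recorded[: len(recorded) - silent_run]
```

Short pauses inside an utterance are kept because the recording only stops once the silence lasts for the full timeout. The returned frames would then be passed to the speech-to-text step.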

3.3.2. Conversational Logic Module

- Language Processing: The text string from the previous module is sent to GPT-4 [28], a powerful Large Language Model (LLM). This module is responsible for all higher-level cognitive tasks, including understanding the user’s intent, recalling past turns in the conversation to maintain context, and generating empathetic and context-aware responses. The ability to process language allows the AI agent to engage in the kind of supportive, multi-turn dialog that is essential for building a sense of companionship.
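One way to keep past turns available as context is to resend a bounded history with every request. The sketch below is illustrative only: the `Conversation` class and the `max_turns` cap are hypothetical, and `complete` is a stand-in for whatever function actually calls the language model, so no real API is invoked here.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps the system prompt plus the most recent turns as model context."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        if max_turns < 1:
            raise ValueError("max_turns must be at least 1")
        self.system: Message = {"role": "system", "content": system_prompt}
        self.max_turns = max_turns  # assumed cap; one turn = user + assistant message
        self.history: List[Message] = []

    def reply(self, user_text: str, complete: Callable[[List[Message]], str]) -> str:
        """Send the prompt and history to `complete`, then store its answer."""
        self.history.append({"role": "user", "content": user_text})
        answer = complete([self.system, *self.history])
        self.history.append({"role": "assistant", "content": answer})
        # Forget the oldest turns once the cap is exceeded.
        self.history = self.history[-2 * self.max_turns:]
        return answer
```

Capping the history bounds the request size over a 10 min session, at the cost of forgetting the earliest turns.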

3.3.3. Speech Synthesis Module

- Text-to-Speech (TTS) Synthesis: The text response generated by GPT-4 is transformed into a natural-sounding audio file by the VOICEVOX [29] engine, a speech synthesis tool that provides a variety of expressive Japanese voices.

- Voice Output: The synthesized audio is routed back into the metaverse environment through the second virtual audio device, the cable input, which functions as the AI agent’s microphone. This transforms the AI agent from a disembodied text generator into an audible character within the virtual world, allowing the user to hear the voice directly from the AI agent’s avatar.
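The synthesis step might look like the sketch below, which assumes a VOICEVOX engine running locally and exposing its HTTP interface on the default port 50021, with a two-step `audio_query` then `synthesis` flow. The helper names, the base URL, and the speaker ID are assumptions for illustration; the paper does not describe how the engine was called.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "http://127.0.0.1:50021"  # assumed: local VOICEVOX engine, default port


def audio_query_request(text: str, speaker: int, base_url: str = BASE_URL) -> urllib.request.Request:
    """Step 1: ask the engine for synthesis parameters for this text."""
    params = urllib.parse.urlencode({"text": text, "speaker": speaker})
    return urllib.request.Request(f"{base_url}/audio_query?{params}", data=b"", method="POST")


def synthesis_request(query: dict, speaker: int, base_url: str = BASE_URL) -> urllib.request.Request:
    """Step 2: send the parameters back to obtain WAV audio."""
    return urllib.request.Request(
        f"{base_url}/synthesis?speaker={speaker}",
        data=json.dumps(query).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def synthesize(text: str, speaker: int = 1) -> bytes:
    """Return WAV bytes for `text`; requires a running engine."""
    with urllib.request.urlopen(audio_query_request(text, speaker)) as resp:
        query = json.load(resp)
    with urllib.request.urlopen(synthesis_request(query, speaker)) as resp:
        return resp.read()
```

Playing the returned WAV bytes into the second virtual audio device is left out of the sketch, since it depends on the audio library and device names of the host machine.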

4. Experiment Results and Discussion

4.1. Experiment Overview

4.1.1. Participants

4.1.2. Experiment Conditions

- AI-Metaverse Condition: A voice-based conversation with the AI agent in the immersive metaverse environment.

- AI-Text Condition: A text-based conversation with the same AI agent through a chat interface.

- Pre-experiment Questionnaire: First, participants complete a questionnaire that gathers baseline data on their current feelings and their prior experience with AI agents and the metaverse.

- VR Test: Before the AI-Metaverse condition, participants receive instructions on how to use the Meta Quest 2. They then perform a brief body synchronization exercise in the metaverse environment, which involves moving their head and hands and following the AI avatar around the room to establish a sense of presence.

- Interaction Session: Each participant then completes both the 10 min AI-Metaverse session and the 10 min AI-Text session. To ensure privacy and encourage open expression, each participant is alone in the room during the interaction sessions.

- Post-experiment Questionnaire: Following each session, participants complete a detailed questionnaire to assess their user experience in that specific condition.

4.2. Experiment Results

4.2.1. Analysis of User Experience

4.2.2. Analysis Based on Pre-Psychological State

4.2.3. Analysis Based on VR Experience

4.2.4. Analysis Based on Consultation Frequency

4.2.5. Qualitative Analysis of Intention for Future Use

4.3. Discussion

4.3.1. The Potential of Human–AI Interaction on Loneliness Alleviation

4.3.2. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| No. | Item |
|---|---|
| Q1 | Rate your overall satisfaction with the AI-Metaverse Counseling Room service. |
| Q2 | Rate the ease of use of the AI-Metaverse Counseling Room interface. |
| Q3 | Rate the alleviation of your worries after using the AI-Metaverse Counseling Room. |
| Q4 | Rate the reduction of your loneliness after using the AI-Metaverse Counseling Room. |
| Q5 | Rate the extent to which you gained a deeper understanding of your feelings after using the AI-Metaverse Counseling Room. |
| Q6 | Rate the healing effect of the AI-Metaverse environment. |
| Q7 | Rate the AI agent’s response time in the AI-Metaverse environment. |
| Q8 | Rate the naturalness of the conversation with the AI agent in the AI-Metaverse environment. |
| Q8.1 | [For respondents who rated Q8 as scale 5–7 (Not natural) only] What aspects of the conversation felt unnatural? (Multiple selections allowed) |
| Q8.2 | [For respondents who rated Q8 as scale 5–7 (Not natural) only] If the conversation with the AI had been more natural and fluent, do you believe the reduction of loneliness and worries would have been more effective? |
| Q9 | Rate your intention to use this kind of AI-Metaverse Counseling Room in the future. |
| Q9.1 | [For respondents who rated Q9 as scale 1–4 (Intending to use) only] Why would you want to use it again? (Multiple selections allowed, up to three) |
| Q9.2 | [For respondents who rated Q9 as scale 5–7 (Intending not to use) only] Why would you not want to use it again? (Multiple selections allowed, up to three) |
| Q9.3 | [For respondents who rated Q9 as scale 5–7 (Intending not to use) only] How would you like the AI-Metaverse Counseling Room to be improved for you to want to use it? (Multiple selections allowed) |
| Q10 | What emotions did you experience while using the AI-Metaverse Counseling Room? (Multiple selections allowed) |
| Q11 | Regarding this experiment, please provide any advice for service improvement, or any other comments or opinions. (Optional) |

Appendix B

| No. | Item |
|---|---|
| Q1 | Rate your overall satisfaction with the AI-Text Counseling Room service. |
| Q2 | Rate the ease of use of the AI-Text Counseling Room interface. |
| Q3 | Rate the alleviation of your worries after using the AI-Text Counseling Room. |
| Q4 | Rate the reduction of your loneliness after using the AI-Text Counseling Room. |
| Q5 | Rate the extent to which you gained a deeper understanding of your feelings after using the AI-Text Counseling Room. |
| Q6 | Rate the healing effect of the AI-Text environment. |
| Q7 | Rate the AI agent’s response time in the AI-Text environment. |
| Q8 | Rate the naturalness of the conversation with the AI agent in the AI-Text environment. |
| Q8.1 | [For respondents who rated Q8 as scale 5–7 (Not natural) only] What aspects of the conversation felt unnatural? (Multiple selections allowed) |
| Q8.2 | [For respondents who rated Q8 as scale 5–7 (Not natural) only] If the conversation with the AI had been more natural and fluent, do you believe the reduction of loneliness and worries would have been more effective? |
| Q9 | Rate your intention to use this kind of AI-Text Counseling Room in the future. |
| Q9.1 | [For respondents who rated Q9 as scale 1–4 (Intending to use) only] Why would you want to use it again? (Multiple selections allowed, up to three) |
| Q9.2 | [For respondents who rated Q9 as scale 5–7 (Intending not to use) only] Why would you not want to use it again? (Multiple selections allowed, up to three) |
| Q9.3 | [For respondents who rated Q9 as scale 5–7 (Intending not to use) only] How would you like the AI-Text Counseling Room to be improved for you to want to use it? (Multiple selections allowed) |
| Q10 | Assuming an AI agent of the same performance was used in both conditions, which environment would you choose? |
| Q11 | Regarding this experiment, please provide any advice for service improvement, or any other comments or opinions. (Optional) |

References

- WHO. Artificial Intelligence in Mental Health Research: New WHO Study on Applications and Challenges. Available online: https://www.who.int/europe/news/item/06-02-2023-artificial-intelligence-in-mental-health-research--new-who-study-on-applications-and-challenges (accessed on 13 August 2025).

- Hwang, T.J.; Rabheru, K.; Peisah, C.; Reichman, W.; Ikeda, M. Loneliness and social isolation during the COVID-19 pandemic. Int. Psychogeriatr. 2020, 32, 1217–1220.

- Casu, M.; Triscari, S.; Battiato, S.; Guarnera, L.; Caponnetto, P. AI Chatbots for Mental Health: A Scoping Review of Effectiveness, Feasibility, and Applications. Appl. Sci. 2024, 14, 5889.

- Coombs, N.C.; Meriwether, W.E.; Caringi, J.; Newcomer, S.R. Barriers to healthcare access among US adults with mental health challenges: A population-based study. SSM-Popul. Health 2021, 15, 100847.

- Lustgarten, S.D.; Garrison, Y.L.; Sinnard, M.T.; Flynn, A.W. Digital privacy in mental healthcare: Current issues and recommendations for technology use. Curr. Opin. Psychol. 2020, 36, 25–31.

- Stephanidis, C.; Salvendy, G.; Antona, M.; Duffy, V.G.; Gao, Q.; Karwowski, W.; Konomi, S.; Nah, F.; Ntoa, S.; Rau, P.-L.P.; et al. Seven HCI Grand Challenges Revisited: Five-Year Progress. Int. J. Hum.-Comput. Interact. 2025, 41, 11947–11995.

- Mozumder, M.A.I.; Armand, T.P.T.; Imtiyaj Uddin, S.M.; Athar, A.; Sumon, R.I.; Hussain, A.; Kim, H.C. Metaverse for Digital Anti-Aging Healthcare: An Overview of Potential Use Cases Based on Artificial Intelligence, Blockchain, IoT Technologies, Its Challenges, and Future Directions. Appl. Sci. 2023, 13, 5127.

- Furukawa, T.A.; Tajika, A.; Toyomoto, R.; Sakata, M.; Luo, Y.; Horikoshi, M.; Akechi, T.; Kawakami, N.; Nakayama, T.; Kondo, N.; et al. Cognitive behavioral therapy skills via a smartphone app for subthreshold depression among adults in the community: The RESiLIENT randomized controlled trial. Nat. Med. 2025, 31, 1830–1839.

- De Freitas, J.; Oğuz-Uğuralp, Z.; Uğuralp, A.K.; Puntoni, S. AI companions reduce loneliness. J. Consum. Res. 2025, ucaf040.

- Ifdil, I.; Situmorang, D.D.B.; Firman, F.; Zola, N.; Rangka, I.B.; Fadli, R.P. Virtual reality in Metaverse for future mental health-helping profession: An alternative solution to the mental health challenges of the COVID-19 pandemic. J. Public Health 2023, 45, e142–e143.

- Govindankutty, S.; Gopalan, S.P. The Metaverse and Mental Well-Being: Potential Benefits and Challenges in the Current Era. In The Metaverse for the Healthcare Industry; Springer: Cham, Switzerland, 2024.

- Wang, L.; Gai, W.; Jiang, N.; Chen, G.; Bian, Y.; Luan, H.; Huang, L.; Yang, C. Effective Motion Self-Learning Genre Using 360° Virtual Reality Content on Mobile Device: A Study Based on Taichi Training Platform. IEEE J. Biomed. Health Inform. 2024, 28, 3695–3708.

- Cho, S.; Kang, J.; Baek, W.H.; Jeong, Y.B.; Lee, S.; Lee, S.M. Comparing counseling outcome for college students: Metaverse and in-person approaches. Psychother. Res. 2024, 34, 1117–1130.

- Croes, E.A.; Antheunis, M.L.; van der Lee, C.; de Wit, J.M. Digital confessions: The willingness to disclose intimate information to a chatbot and its impact on emotional well-being. Interact. Comput. 2024, 36, 279–292.

- Anmella, G.; Sanabra, M.; Primé-Tous, M.; Segú, X.; Cavero, M.; Morilla, I.; Grande, V.; Mas, A.; Sanabra, M.; Martín-Villalba, I.; et al. Vickybot, a Chatbot for Anxiety-Depressive Symptoms and Work-Related Burnout in Primary Care and Health Care Professionals: Development, Feasibility, and Potential Effectiveness Studies. J. Med. Internet Res. 2023, 25, e43293.

- Liu, A.R.; Pataranutaporn, P.; Maes, P. Chatbot companionship: A mixed-methods study of companion chatbot usage patterns and their relationship to loneliness in active users. arXiv 2024, arXiv:2410.21596.

- Li, H.; Zhang, R.; Lee, Y.C.; Kraut, R.E.; Mohr, D.C. Systematic review and meta-analysis of AI-based conversational agents for promoting mental health and well-being. npj Digit. Med. 2023, 6, 236.

- Lee, A.; Moon, S.; Jhon, M.; Kim, J.; Kim, D.; Kim, J.E.; Park, K.; Jeon, E. Comparative Study on the Performance of LLM-based Psychological Counseling Chatbots via Prompt Engineering Techniques. In Proceedings of the 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), Lisbon, Portugal, 3–6 December 2024; pp. 7080–7082.

- Kim, M.; Lee, S.; Kim, S.; Heo, J.I.; Lee, S.; Shin, Y.B.; Cho, C.H.; Jung, D. Therapeutic Potential of Social Chatbots in Alleviating Loneliness and Social Anxiety: Quasi-Experimental Mixed Methods Study. J. Med. Internet Res. 2025, 27, e65589.

- Park, G.; Chung, J.; Lee, S. Effect of AI chatbot emotional disclosure on user satisfaction and reuse intention for mental health counseling: A serial mediation model. Curr. Psychol. 2023, 42, 28663–28673.

- Thieme, A.; Hanratty, M.; Lyons, M.; Palacios, J.; Marques, R.F.; Morrison, C.; Doherty, G. Designing Human-centered AI for Mental Health: Developing Clinically Relevant Applications for Online CBT Treatment. ACM Trans. Comput.-Hum. Interact. 2023, 30, 1–50.

- Spiegel, B.M.R.; Liran, O.; Clark, A.; Samaan, J.S.; Khalil, C.; Chernoff, R.; Reddy, K.; Mehra, M. Feasibility of combining spatial computing and AI for mental health support in anxiety and depression. npj Digit. Med. 2024, 7, 22.

- Al Falahi, A.; Alnuaimi, H.; Alqaydi, M.; Al Shateri, N.; Al Ameri, S.; Qbea’h, M.; Alrabaee, S. From Challenges to Future Directions: A Metaverse Analysis. In Proceedings of the 2nd International Conference on Intelligent Metaverse Technologies Applications (iMETA), Dubai, United Arab Emirates, 26–29 November 2024; pp. 34–43.

- Wang, X.; Mo, X.; Fan, M.; Lee, L.H.; Shi, B.; Hui, P. Reducing Stress and Anxiety in the Metaverse: A Systematic Review of Meditation, Mindfulness and Virtual Reality. In Proceedings of the Tenth International Symposium of Chinese CHI (Chinese CHI ’22), Guangzhou, China, 22–23 October 2022; Association for Computing Machinery: New York, NY, USA, 2024; pp. 170–180.

- Fang, A.; Chhabria, H.; Maram, A.; Zhu, H. Social Simulation for Everyday Self-Care: Design Insights from Leveraging VR, AR, and LLMs for Practicing Stress Relief. In Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems (CHI ’25), Yokohama, Japan, 26 April–1 May 2025; pp. 1–23.

- VRChat. Available online: https://hello.vrchat.com/ (accessed on 13 August 2025).

- Radford, A.; Kim, J.W.; Xu, T.; Brockman, G.; McLeavey, C.; Sutskever, I. Robust speech recognition via large-scale weak supervision. In Proceedings of the 40th International Conference on Machine Learning (ICML’23), Honolulu, HI, USA, 23–29 July 2023; pp. 28492–28518. Available online: https://dl.acm.org/doi/10.5555/3618408.3619590 (accessed on 12 October 2025).

- OpenAI GPT4. Available online: https://openai.com/ja-JP/api/ (accessed on 13 August 2025).

- VOICEVOX. Available online: https://voicevox.hiroshiba.jp/ (accessed on 13 August 2025).

| Component | Version |
|---|---|
| OS | Microsoft Windows 10 Pro 64bit |
| CPU | AMD Ryzen 7 3700X 8-Core Processor |
| GPU | NVIDIA GeForce RTX 2060 Graphics card |
| Headset | Meta Quest 2 |
| Python | 3.10.11 |
| Visual Studio Code | 1.85.1 |

| Item | Condition | Min | Max | Median | Mode | Range |
|---|---|---|---|---|---|---|
| Overall Satisfaction | AI-Metaverse | 2 | 6 | 3.00 | 3 | 2~6 |
| | AI-Text | 1 | 5 | 3.00 | 2 | 1~5 |
| Ease of Use | AI-Metaverse | 1 | 5 | 2.00 | 1 | 1~5 |
| | AI-Text | 1 | 4 | 2.00 | 2 | 1~4 |
| Perceived Support for Worry | AI-Metaverse | 2 | 7 | 3.00 | 3 | 2~7 |
| | AI-Text | 1 | 6 | 3.00 | 2 | 1~6 |
| Perceived Support for Loneliness | AI-Metaverse | 2 | 5 | 3.00 | 2 | 2~5 |
| | AI-Text | 1 | 6 | 4.00 | 4 | 1~6 |
| Understanding of Feelings | AI-Metaverse | 2 | 7 | 3.00 | 3 | 2~7 |
| | AI-Text | 1 | 3 | 2.00 | 2 | 1~3 |
| Perceived Support for Calming | AI-Metaverse | 1 | 5 | 2.00 | 1 | 1~5 |
| | AI-Text | 1 | 6 | 3.00 | 2 | 1~6 |
| AI Response Time | AI-Metaverse | 3 | 7 | 5.00 | 5 | 3~7 |
| | AI-Text | 3 | 7 | 5.00 | 5 | 3~7 |
| Conversation Naturalness | AI-Metaverse | 2 | 6 | 4.00 | 5 | 2~6 |
| | AI-Text | 1 | 4 | 2.00 | 2 | 1~4 |
| Intention for Future Use | AI-Metaverse | 1 | 5 | 2.00 | 2 | 1~5 |
| | AI-Text | 1 | 7 | 2.00 | 2 | 1~7 |
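The descriptive statistics reported in these tables (minimum, maximum, median, mode, and range of 7-point ratings) can be computed with a few lines of standard-library Python. The sketch below is illustrative: the `summarize` helper is hypothetical, the sample ratings are made-up values rather than study data, and reporting "N/A" when no single most frequent value exists is an assumption about how ties were handled.

```python
from statistics import median, multimode
from typing import Dict, Sequence, Union


def summarize(ratings: Sequence[int]) -> Dict[str, Union[int, float, str]]:
    """Descriptive statistics for one questionnaire item on a 7-point scale."""
    modes = multimode(ratings)  # every value tied for the highest frequency
    return {
        "min": min(ratings),
        "max": max(ratings),
        "median": float(median(ratings)),
        # Assumed convention: no unique mode is reported as "N/A".
        "mode": modes[0] if len(modes) == 1 else "N/A",
        "range": f"{min(ratings)}~{max(ratings)}",
        "n": len(ratings),
    }


# Illustrative ratings only; these are not the participants' responses.
example = summarize([2, 3, 3, 6])
```

With ordinal ratings and small groups, the median and mode are less sensitive to a single extreme response than the mean would be.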

| Item | Group | Min | Max | Median | Mode | N |
|---|---|---|---|---|---|---|
| Overall Satisfaction | Feels Worried | 2 | 5 | 3.00 | 3 | 8 |
| | Does Not Feel Worried | 3 | 6 | 3.00 | 3 | 7 |
| Ease of Use | Feels Worried | 1 | 5 | 2.00 | 1 | 8 |
| | Does Not Feel Worried | 1 | 5 | 2.00 | 2 | 7 |
| Perceived Support for Worry | Feels Worried | 2 | 7 | 3.00 | 3 | 8 |
| | Does Not Feel Worried | 2 | 7 | 3.00 | 3 | 7 |
| Perceived Support for Loneliness | Feels Worried | 2 | 5 | 3.00 | 3 | 8 |
| | Does Not Feel Worried | 2 | 5 | 2.00 | 2 | 7 |
| Intention for Future Use | Feels Worried | 1 | 4 | 3.50 | 4 | 8 |
| | Does Not Feel Worried | 1 | 5 | 2.00 | 2 | 7 |

| Item | Group | Min | Max | Median | Mode | N |
|---|---|---|---|---|---|---|
| Overall Satisfaction | Novice | 2 | 5 | 3.00 | 3 | 8 |
| | Intermediate | 2 | 6 | 3.00 | N/A | 3 |
| | Experienced | 3 | 3 | 3.00 | 3 | 4 |
| Ease of Use | Novice | 1 | 5 | 2.00 | 1 | 8 |
| | Intermediate | 1 | 2 | 1.00 | 1 | 3 |
| | Experienced | 1 | 3 | 2.00 | 2 | 4 |
| Perceived Support for Worry | Novice | 2 | 7 | 3.00 | 3 | 8 |
| | Intermediate | 3 | 7 | 5.00 | N/A | 3 |
| | Experienced | 2 | 4 | 3.50 | 4 | 4 |
| Perceived Support for Loneliness | Novice | 2 | 5 | 3.00 | 2 | 8 |
| | Intermediate | 2 | 5 | 2.00 | 2 | 3 |
| | Experienced | 2 | 3 | 3.00 | 2 | 4 |
| Intention for Future Use | Novice | 1 | 5 | 3.00 | 4 | 8 |
| | Intermediate | 1 | 3 | 1.00 | 1 | 3 |
| | Experienced | 2 | 4 | 2.50 | 2 | 4 |

| Group | Perceived Support | Neutral | Did Not Perceive Support |
|---|---|---|---|
| Novice | 5 | 0 | 3 |
| Intermediate | 2 | 0 | 1 |
| Experienced | 4 | 0 | 0 |

| Item | Group | Min | Max | Median | Mode | N |
|---|---|---|---|---|---|---|
| Overall Satisfaction | Never | 2 | 6 | 3.00 | 2 | 5 |
| | Case-by-case | 3 | 6 | 3.00 | 3 | 6 |
| | Frequently | 3 | 5 | 4.00 | 4 | 4 |
| Ease of Use | Never | 1 | 3 | 1.00 | 1 | 5 |
| | Case-by-case | 1 | 4 | 3.00 | 3 | 6 |
| | Frequently | 1 | 5 | 2.50 | N/A | 4 |
| Perceived Support for Worry | Never | 2 | 7 | 3.00 | 3 | 5 |
| | Case-by-case | 2 | 4 | 3.00 | 3 | 6 |
| | Frequently | 3 | 7 | 4.50 | N/A | 4 |
| Perceived Support for Loneliness | Never | 2 | 3 | 2.00 | 2 | 5 |
| | Case-by-case | 2 | 5 | 3.00 | 3 | 6 |
| | Frequently | 3 | 5 | 5.00 | 5 | 4 |
| Intention for Future Use | Never | 1 | 4 | 1.00 | 1 | 5 |
| | Case-by-case | 2 | 4 | 2.00 | 2 | 6 |
| | Frequently | 3 | 5 | 4.00 | 4 | 4 |

| Group | Perceived Support | Neutral | Did Not Perceive Support |
|---|---|---|---|
| Never | 5 | 0 | 0 |
| Case-by-case | 5 | 0 | 1 |
| Frequently | 1 | 0 | 3 |

| Condition | Intention | Reason (Multiple Selections Allowed) | Count |
|---|---|---|---|
| AI-Metaverse | Use | Attracted by the non-everyday space | 8 |
| | | The AI’s appearance was cute | 8 |
| | | The AI’s voice was cute | 6 |
| | | Can discuss worries you can’t tell people | 5 |
| | | The space was comfortable | 4 |
| | | It had an effect on reducing loneliness | 4 |
| | | There was a sense of immersion | 1 |
| | | Could converse fluently | 1 |
| | Not to Use | The voice recognition was not accurate | 3 |
| | | Don’t intend to consult non-humans | 1 |
| | | Dislike speaking out loud | 1 |
| | | It had no effect on reducing loneliness | 1 |
| AI-Text | Use | The content recognition was accurate | 6 |
| | | Don’t have to speak out loud | 2 |
| | | It had an effect on reducing loneliness | 2 |
| | | It was fun to talk about my hobbies | 1 |
| | Not to Use | Didn’t feel like counseling | 4 |
| | | Don’t intend to consult non-humans | 2 |
| | | Text input was inconvenient | 1 |
| | | Might become aggressive in text | 1 |
| | | It had no effect on reducing loneliness | 1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chung, P.; Cong, R.; Yao, L.; Jin, Q. Exploratory Research on the Potential of Human–AI Interaction for Mental Health: Building and Verifying an Experimental Environment Based on ChatGPT and Metaverse. Appl. Sci. 2025, 15, 11209. https://doi.org/10.3390/app152011209
