A Gradient-Variance Weighting Physics-Informed Neural Network for Solving Integer and Fractional Partial Differential Equations

Abstract

1. Introduction

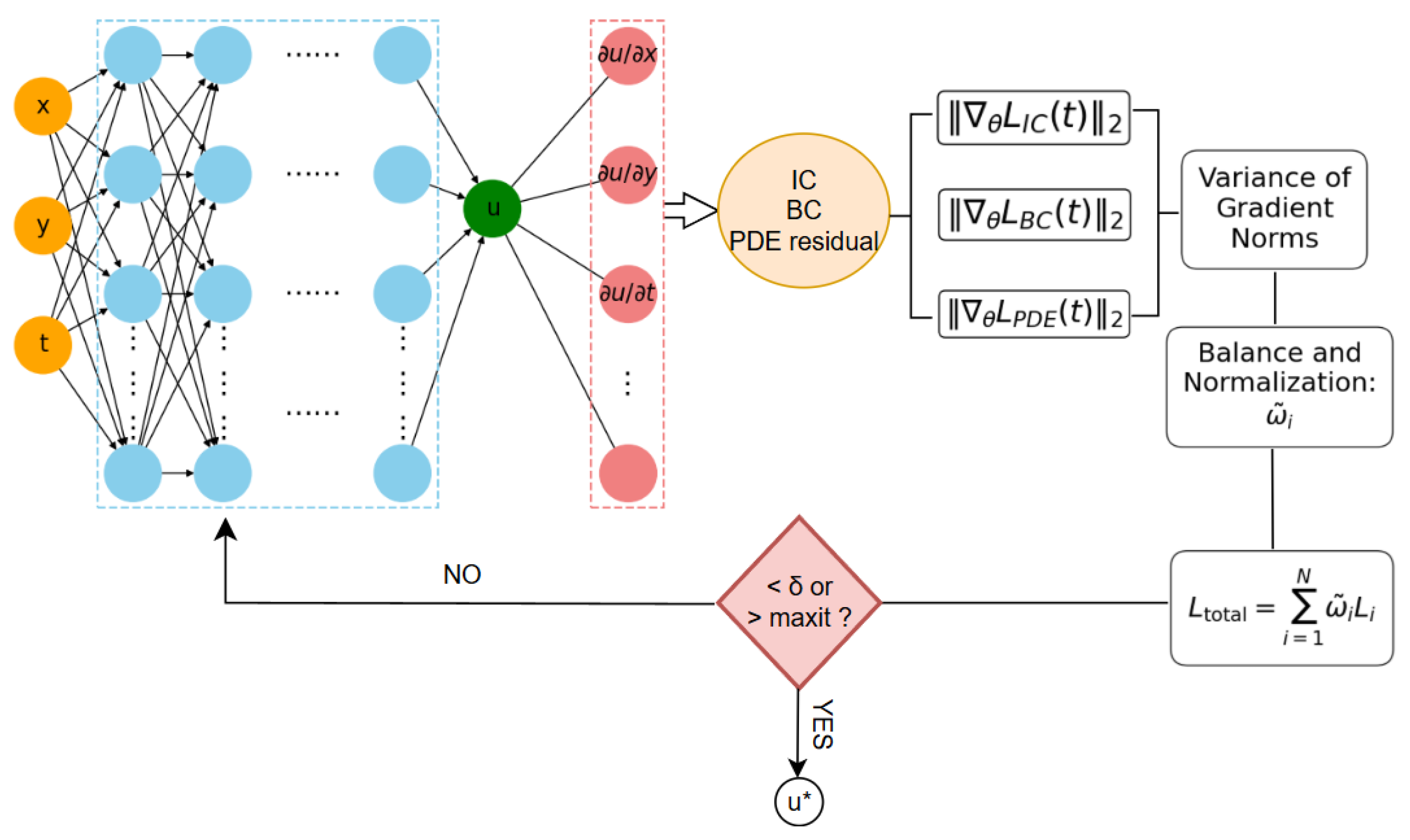

2. Methodology

2.1. Methodology of the Standard Physics-Informed Neural Network

2.2. Methodology of the Gradient-Variance Weighting Physics-Informed Neural Network

2.2.1. Theoretical Formulation

2.2.2. Two-Phase Weighting Strategy

- Warm-up phase: Equal weights are assigned to all N sub-losses, where N is the number of loss terms.

- GVW phase: The full GVW theoretical formulation is applied as described above.
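The two phases can be sketched as a simple epoch-based switch. This is a minimal illustration, not the authors' implementation: the names `two_phase_weights`, `warmup_epochs`, and `adaptive_rule` are hypothetical, the switching condition `epoch < warmup_epochs` is an assumption (the original condition is not reproduced in this section), and the equal weight is assumed to be 1/N.

```python
from typing import Callable, List, Sequence


def two_phase_weights(
    epoch: int,
    warmup_epochs: int,
    n_terms: int,
    adaptive_rule: Callable[[], Sequence[float]],
) -> List[float]:
    """Return one weight per loss term for the given epoch.

    Hypothetical helper: the switch point and the 1/N warm-up value
    are assumptions made for illustration only.
    """
    if epoch < warmup_epochs:
        # Warm-up phase: every sub-loss receives the same weight.
        return [1.0 / n_terms] * n_terms
    # GVW phase: defer to whatever adaptive weighting rule is supplied.
    weights = list(adaptive_rule())
    if len(weights) != n_terms:
        raise ValueError("adaptive_rule must return one weight per loss term")
    return weights


def total_loss(losses: Sequence[float], weights: Sequence[float]) -> float:
    """Weighted sum of the sub-losses (e.g., PDE residual, boundary, initial)."""
    return sum(w * loss for w, loss in zip(weights, losses))


if __name__ == "__main__":
    # Placeholder adaptive rule; a real run would compute these from gradients.
    fixed_rule = lambda: [0.5, 0.3, 0.2]
    for epoch in (0, 4, 5):
        print(epoch, two_phase_weights(epoch, 5, 3, fixed_rule))
```

Passing the adaptive rule as a callable keeps the schedule independent of how the GVW weights are actually computed, so the warm-up logic can be tested on its own.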

1. When the gradient of a particular loss component becomes very small (indicating near convergence) or exhibits high gradient variance (reflecting instability), its corresponding weight is automatically reduced, thereby mitigating potential negative effects on the overall training process.

2. The method enables self-balanced optimization across different tasks (e.g., PDE residual, boundary, and initial conditions) without the need for manual tuning of loss weights.

3. It simultaneously promotes convergence by emphasizing informative gradients and enhances training stability by suppressing variance-dominated components, leading to improved robustness and generalization across a broad spectrum of PDE problems.
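The qualitative behavior listed above can be illustrated with a toy weighting rule. The exact GVW formula is not reproduced in this section, so the score used here, mean gradient norm divided by (1 + variance of the gradient norm), is purely an assumption chosen to show the same tendencies; `illustrative_gvw_weights` and `grad_norm_history` are hypothetical names.

```python
from statistics import fmean, pvariance
from typing import List, Sequence


def illustrative_gvw_weights(
    grad_norm_history: Sequence[Sequence[float]],
) -> List[float]:
    """Toy weights from recent gradient norms of each loss term.

    grad_norm_history[i] is a non-empty list of recent gradient norms
    for loss term i. The scoring rule is an assumption, not the
    formulation from the paper.
    """
    scores = []
    for history in grad_norm_history:
        mean_norm = fmean(history)      # small when the term is near convergence
        variance = pvariance(history)   # large when the term is unstable
        # Score drops if the mean is small or the variance is large.
        scores.append(mean_norm / (1.0 + variance))

    total = sum(scores)
    if total == 0.0:
        # All gradients vanished: fall back to equal weights.
        return [1.0 / len(scores)] * len(scores)
    # Normalize so the weights sum to one.
    return [s / total for s in scores]


if __name__ == "__main__":
    history = [
        [1.0, 1.0, 1.0],        # informative and stable
        [0.01, 0.01, 0.01],     # nearly converged (tiny gradients)
        [0.0, 2.0, 0.0, 2.0],   # same mean as the first term, but unstable
    ]
    print(illustrative_gvw_weights(history))
```

In this toy example the stable, informative term receives the largest weight, the unstable term a smaller one, and the nearly converged term the smallest, which mirrors the first item in the list above.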

3. Experimental Results

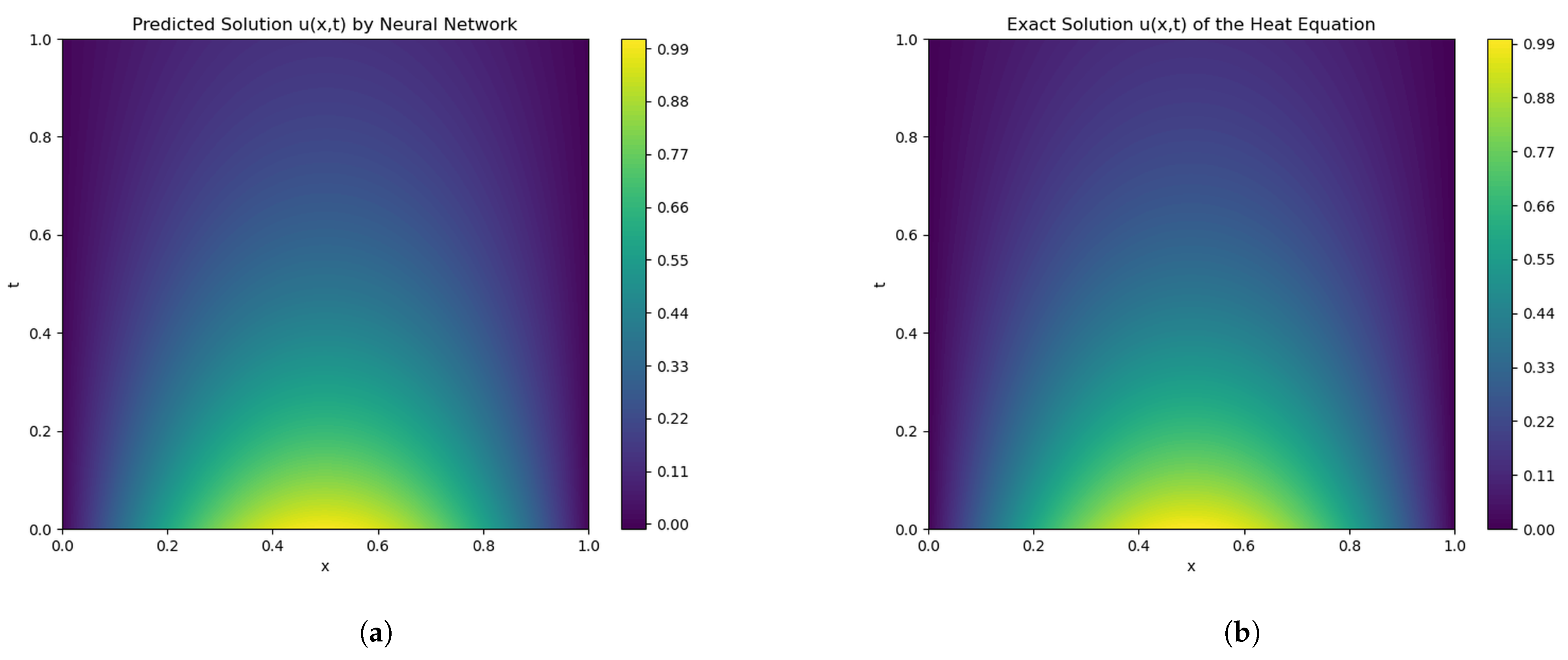

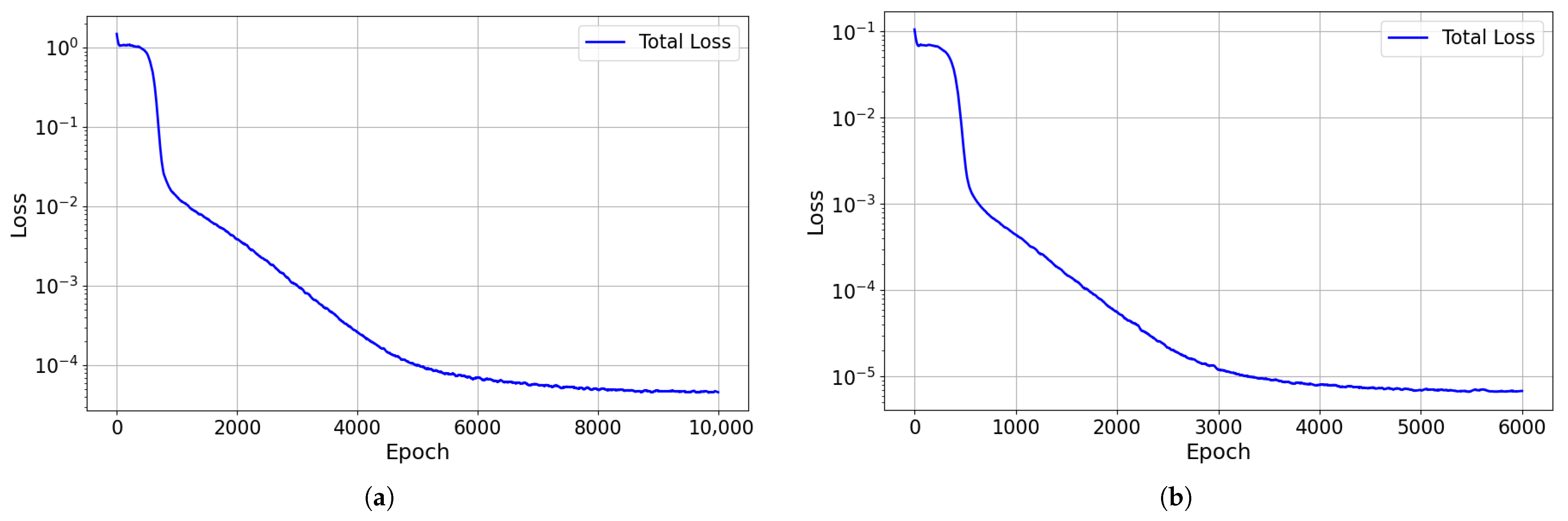

3.1. Heat Conduction Equation

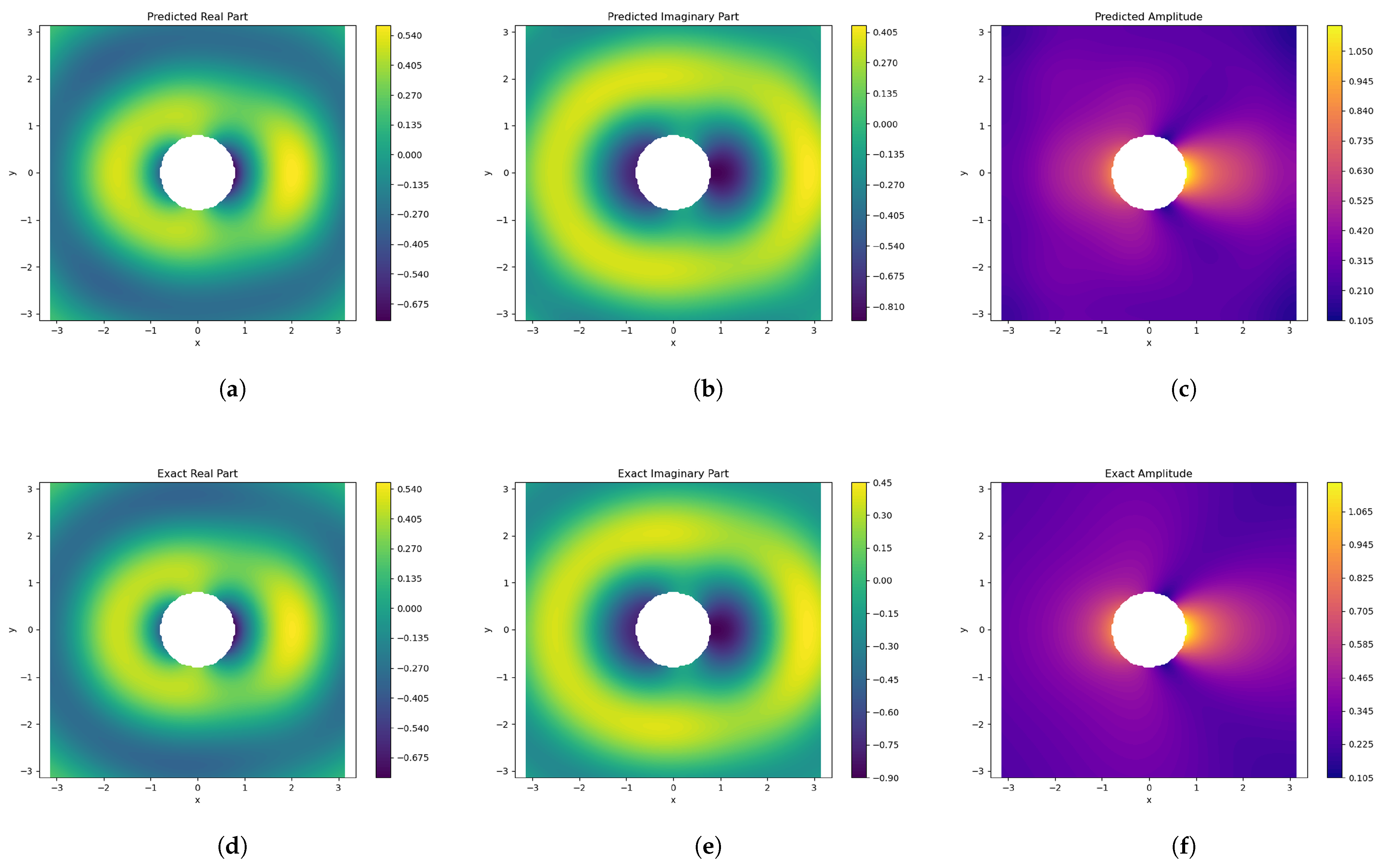

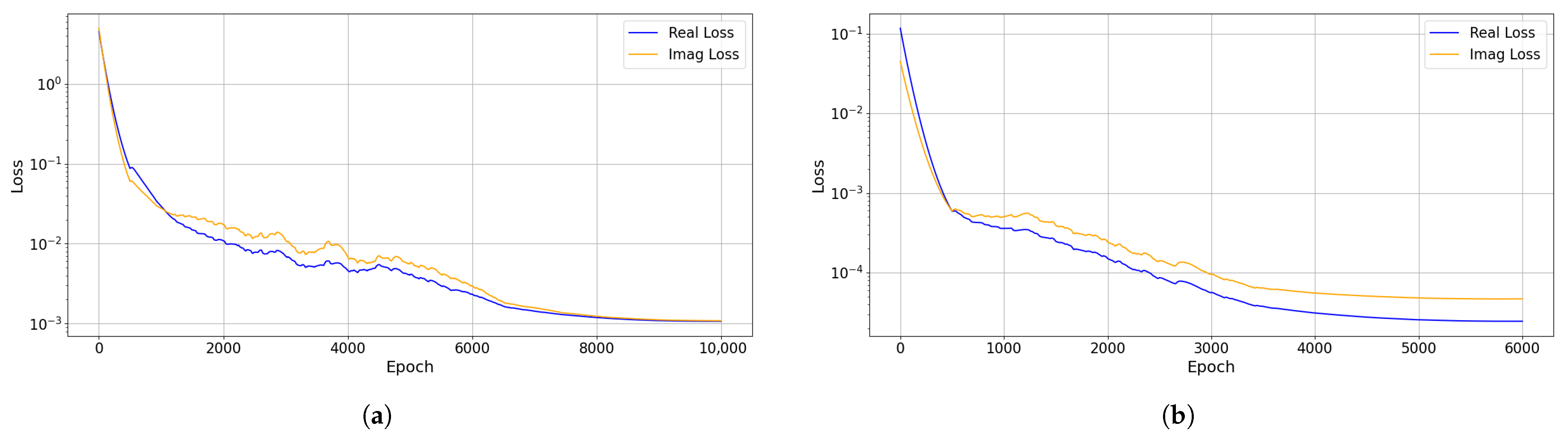

3.2. Two-Dimensional Acoustic Scattering Problem Governed by the Helmholtz Equation

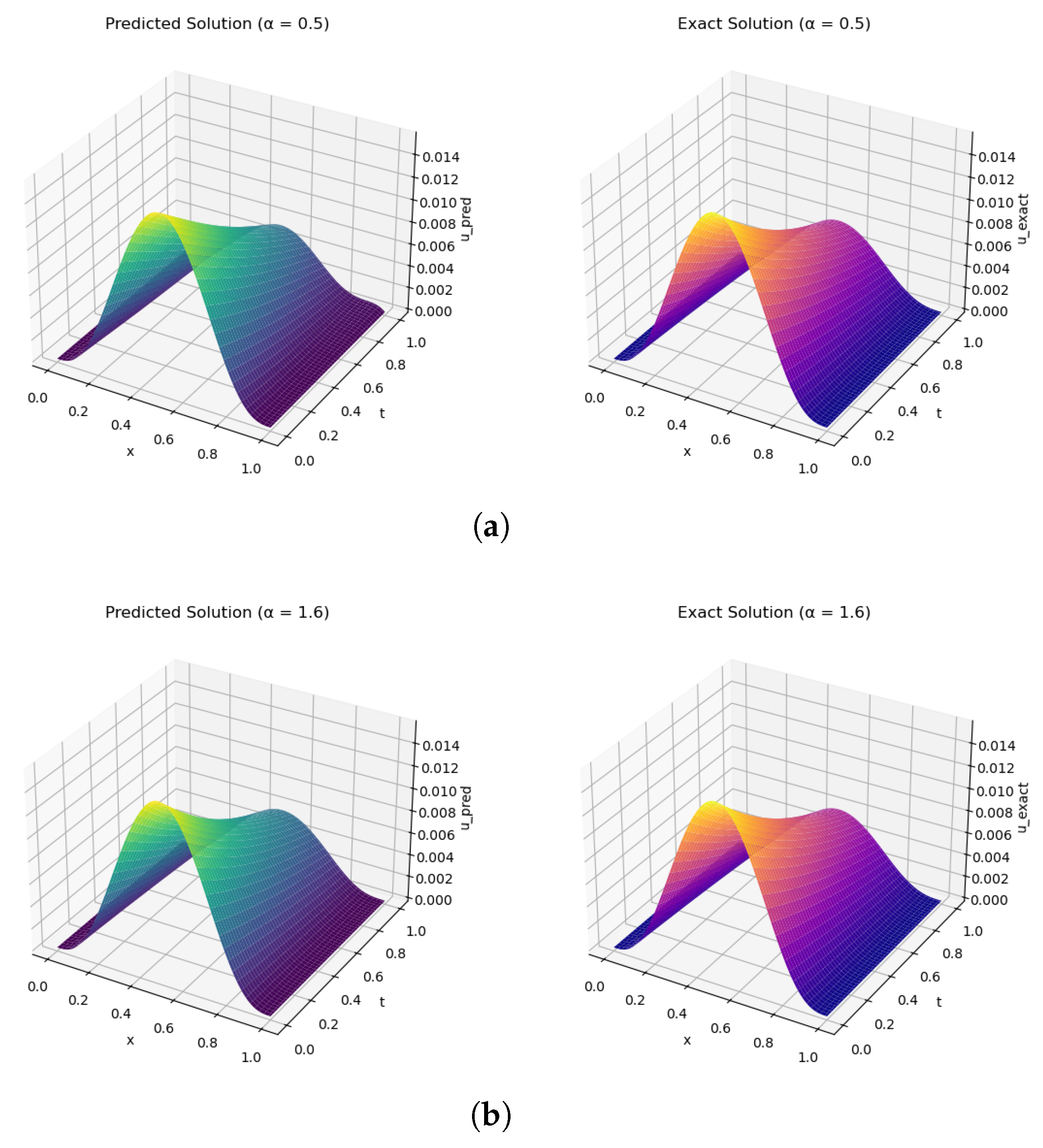

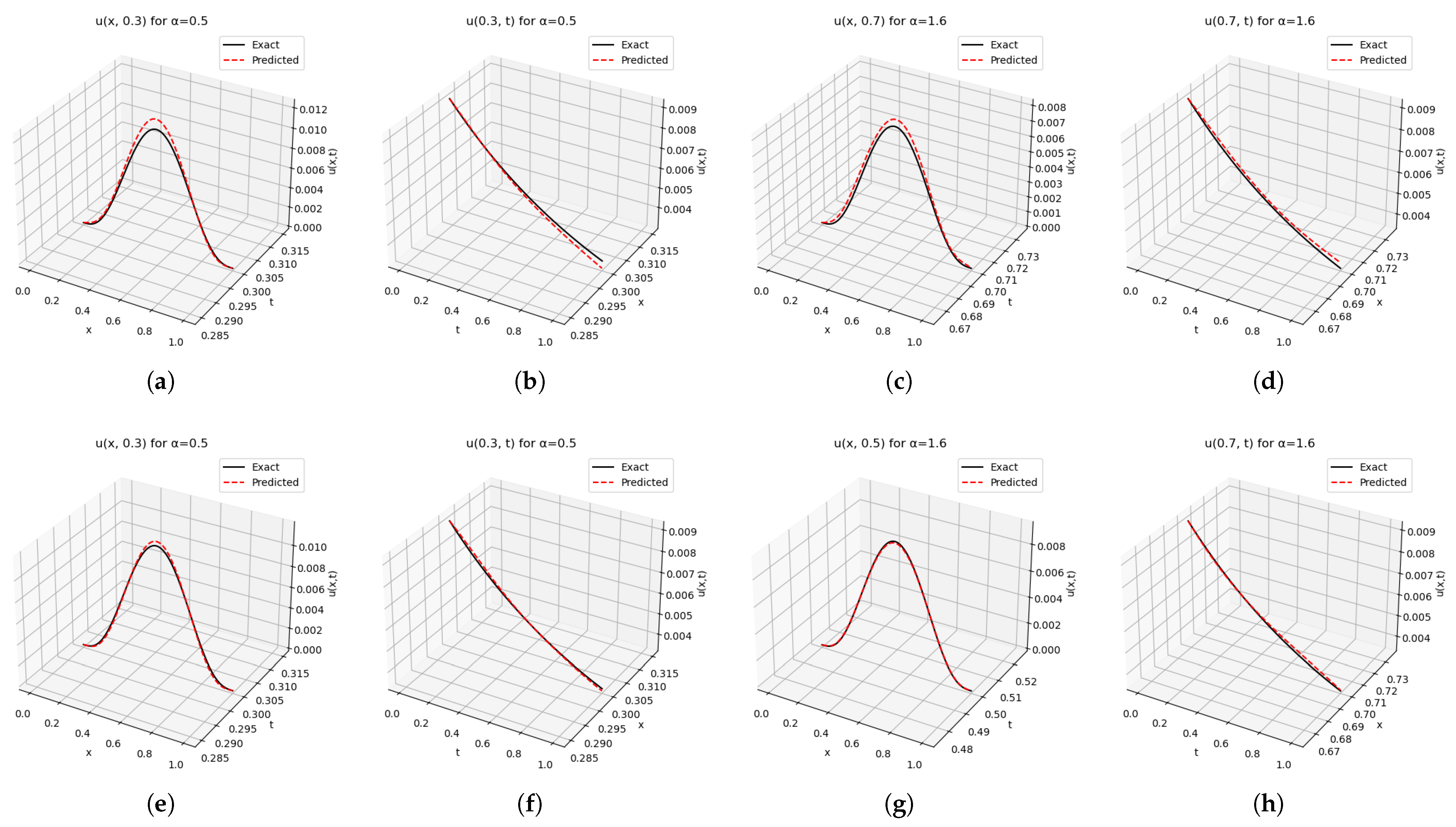

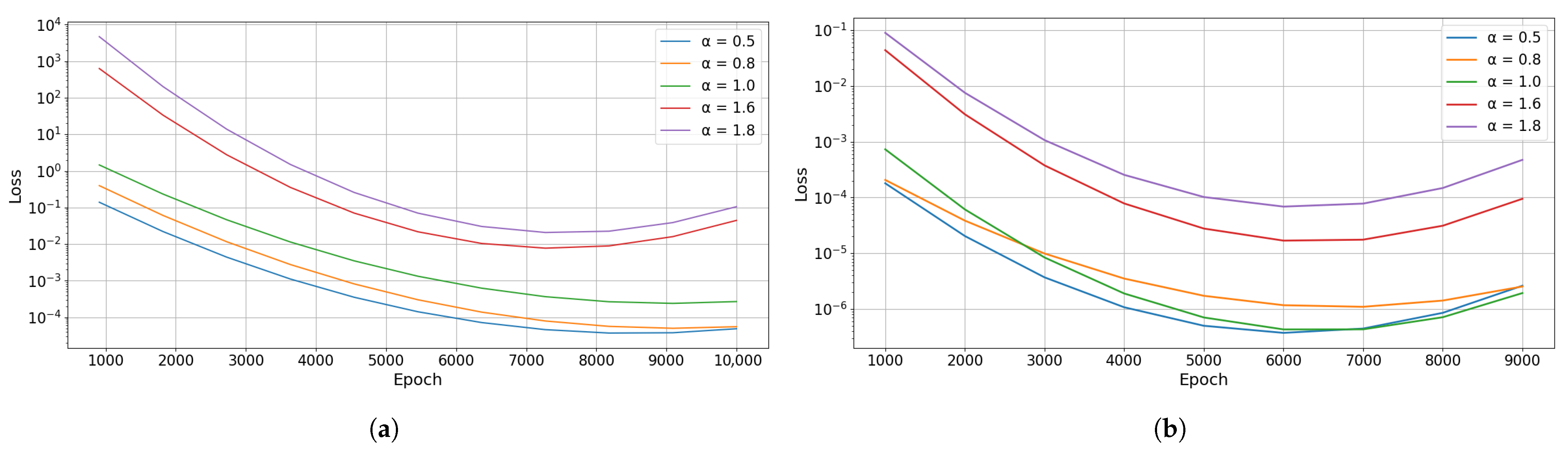

3.3. Time-Fractional Diffusion Equation with Riemann–Liouville Derivatives

4. Discussion

4.1. Performance Improvements and Robustness

4.2. Mechanism of Gradient Variance Weighting

4.3. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Problem | Fractional Order | PINN | GVW-PINN |
|---|---|---|---|
| Heat conduction equation | – | | |
| Helmholtz equation | – | | |
| Fractional diffusion equation | | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Liu, Q.; Zhang, R.; Yue, L.; Ding, Z. A Gradient-Variance Weighting Physics-Informed Neural Network for Solving Integer and Fractional Partial Differential Equations. Appl. Sci. 2025, 15, 11137. https://doi.org/10.3390/app152011137
