Collaborative Real-Time Single-Object Anomaly Detection Framework of Roadside Facilities for Traffic Safety and Management Using Efficient YOLO

Abstract

Featured Application


1. Introduction

2. Related Works

2.1. Road and Roadside Anomaly Detection

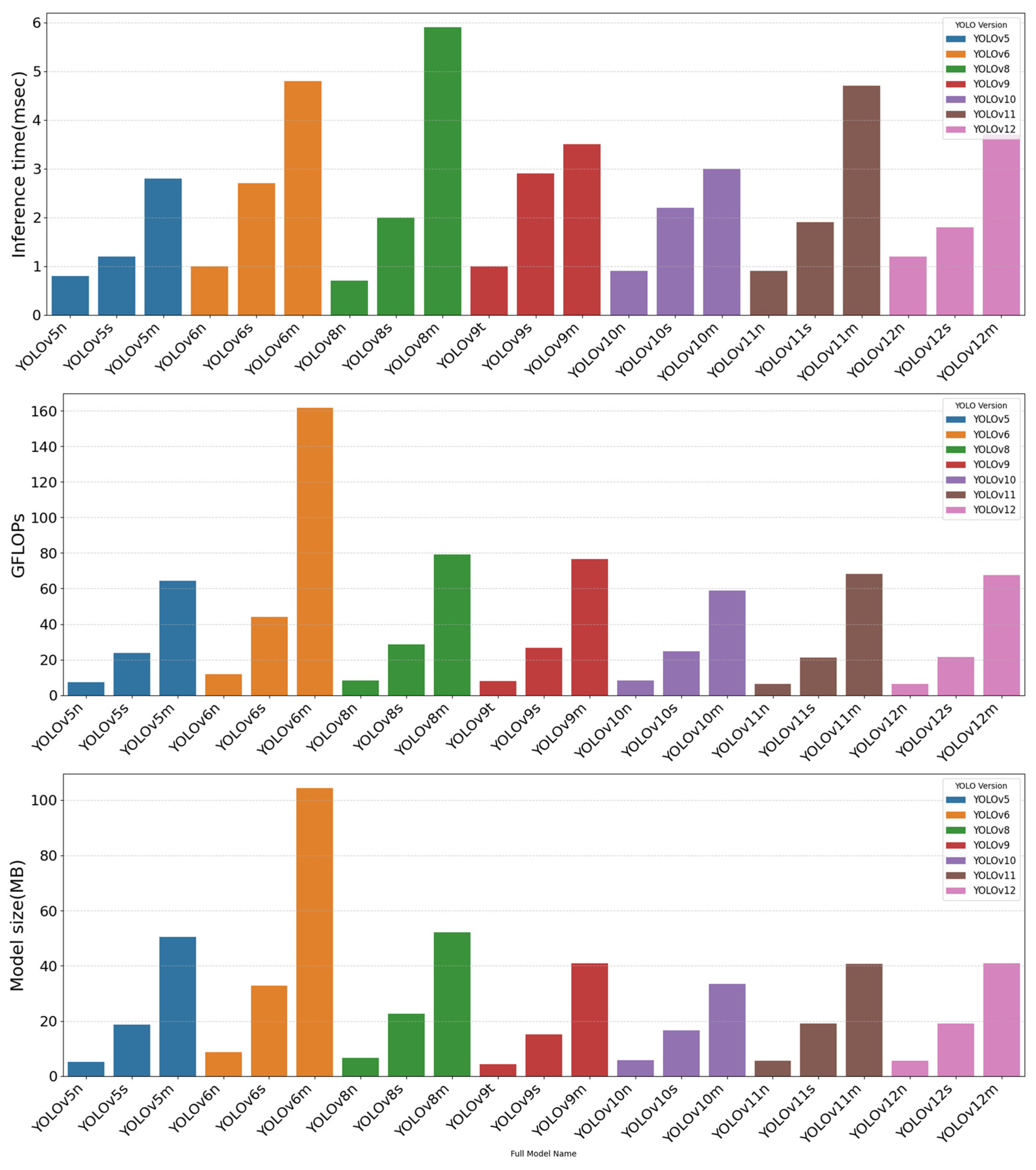

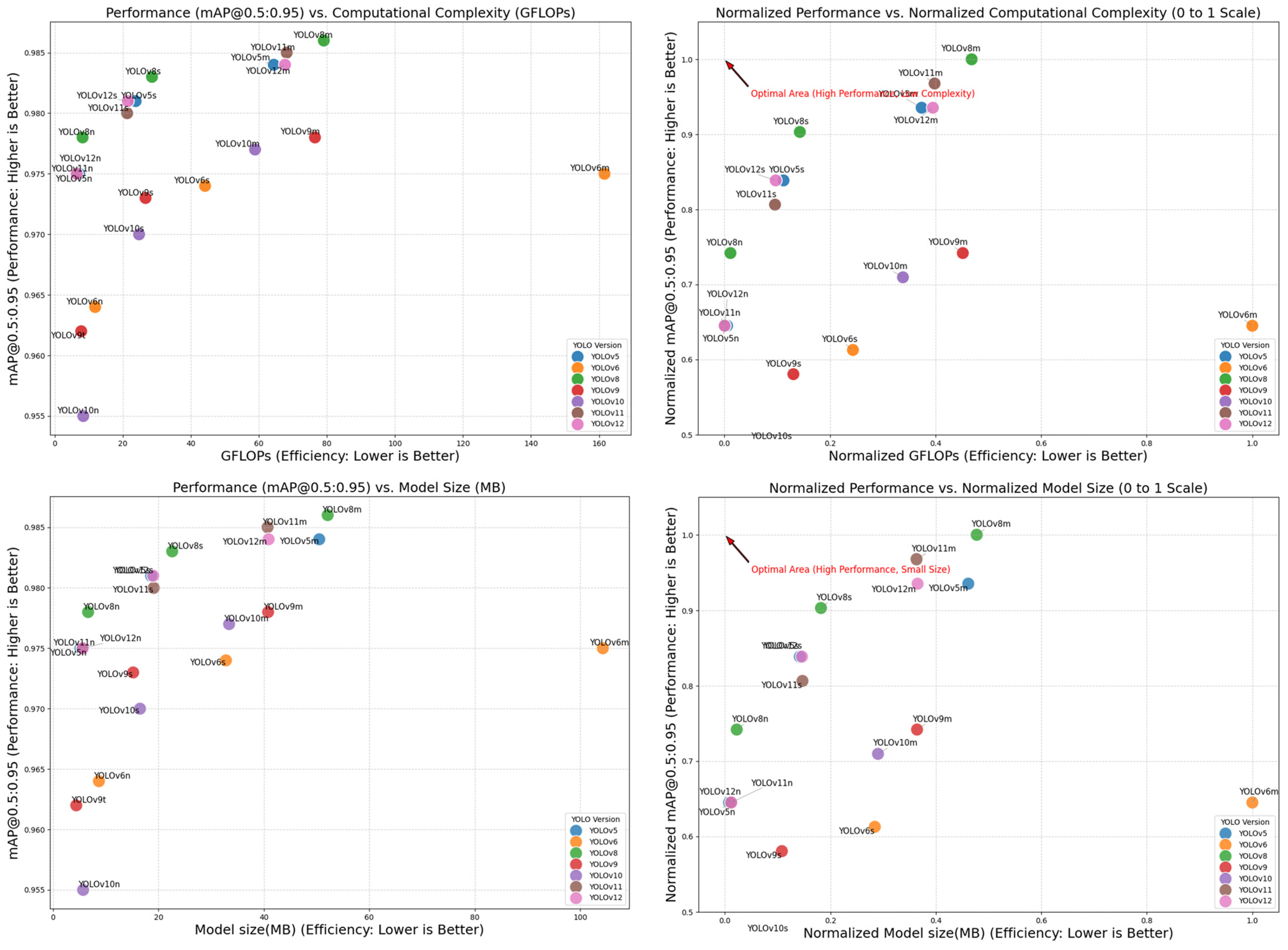

2.2. Comparison of the YOLO Family

2.3. Specialized Single-Object Detection Models for Collaborative Multi-Agent Deployment

- Reduced computational load and model complexity on each device, resulting in faster inference and lower power consumption.

- Improved detection accuracy and robustness for each object type by enabling targeted training on highly specific features.

- Flexibility in deployment, where different agents can be assigned different detection tasks based on situational needs and available resources.
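To illustrate the deployment-flexibility point, the following Python sketch distributes single-object detectors across agents that have different compute budgets. It is a hypothetical example, not the framework's scheduling method: the agent names, their budgets, and the greedy rule (most expensive detector first, placed on the agent with the most free capacity) are assumptions. The per-detector cost of 28.6 GFLOPs is taken from the single-object models in the per-object results table.

```python
from typing import Dict, List, Tuple


def assign_detectors(
    detectors: Dict[str, float], agents: Dict[str, float]
) -> Tuple[Dict[str, List[str]], List[str]]:
    """Greedily place single-object detectors on agents.

    detectors: detector name -> compute cost (GFLOPs per frame)
    agents:    agent name -> compute budget in the same unit (hypothetical)
    Returns the per-agent plan and the detectors that did not fit.
    """
    remaining = dict(agents)
    plan: Dict[str, List[str]] = {name: [] for name in agents}
    unassigned: List[str] = []
    # Place the most expensive detectors first (stable order for equal costs).
    for det, cost in sorted(detectors.items(), key=lambda kv: -kv[1]):
        # Pick the agent with the most free capacity.
        agent = max(remaining, key=remaining.get)
        if remaining[agent] >= cost:
            plan[agent].append(det)
            remaining[agent] -= cost
        else:
            # If the least-loaded agent cannot host it, no agent can.
            unassigned.append(det)
    return plan, unassigned


facilities = ["PE Drum", "PE Barrier", "No Parking Cone", "Traffic Cone",
              "Tubular Marker", "Snow Removal Box", "Sandwich Board Sign", "PE Fence"]
detectors = {name: 28.6 for name in facilities}
agents = {"vehicle_a": 60.0, "vehicle_b": 90.0, "roadside_unit": 30.0}  # hypothetical budgets
plan, unassigned = assign_detectors(detectors, agents)
print(plan)
print(unassigned)
```

With these assumed budgets, six detectors are placed and two remain unassigned, which is the situation in which another agent, or a lighter model variant, would take over the remaining facility types.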

3. Data and Method

3.1. Dataset

3.2. The Proposed Method

3.2.1. Data Collection and Annotation

3.2.2. Model Training and Selection for Deployment on Edge Devices

- Performance (P): This refers to the model’s accuracy in detecting the target objects. For object detection tasks, the mean Average Precision (mAP) is the standard metric used to quantify performance. A higher mAP value indicates greater detection accuracy across all object classes.

- Efficiency (E): This encompasses the computational cost and resource footprint of the model. Key efficiency metrics include the Inference Speed (FPS, Frames Per Second), Model Size (MB), and Computational Cost (GFLOPs). A higher FPS, smaller model size, and lower GFLOPs indicate greater efficiency.

- Hardware Constraint Analysis: The target device’s specifications, including its CPU/GPU/NPU capabilities, available RAM, and storage, are analyzed. These constraints define the acceptable range of model size and computational cost.

- Candidate Pool Generation: A diverse set of lightweight object detection models is trained on the collected dataset. This pool includes models with varying architectures to ensure a wide range of performance-efficiency profiles.

- Empirical Measurement: Each candidate model is tested on the target hardware to measure its actual performance (mAP) and efficiency metrics (FPS, model size). This step is crucial because theoretical estimates, such as GFLOPs, can differ significantly from the speed observed on the device.

- Utility Score Calculation: Using the empirical data, the utility score of each model is calculated as a weighted combination of its performance and efficiency, with pre-defined weights that reflect the project’s priorities.

- Final Selection: The model with the highest utility score is chosen for deployment. This systematic approach ensures that the final selection is not based on a single metric but on a holistic evaluation tailored to the specific application’s needs.
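The text does not spell out the utility formula, so the following Python sketch assumes one common form of the selection steps above: infeasible models are first filtered out by hardware limits, every remaining metric is min-max normalised over the feasible candidates, efficiency E is the unweighted mean of normalised FPS, model size, and GFLOPs, and U = w_p·P + w_e·E. The names `Candidate`, `minmax`, `select_model`, `w_p`, `w_e` and the 25 MB / 300 FPS device limits are hypothetical; the metric values are the measured YOLOv8 and YOLOv11 figures from the model comparison table.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence, Tuple


@dataclass
class Candidate:
    name: str
    map50_95: float  # performance term P (measured mAP@0.5:0.95)
    fps: float       # measured throughput, higher is better
    size_mb: float   # model size, lower is better
    gflops: float    # computational cost, lower is better


def minmax(values: Sequence[float], higher_is_better: bool = True) -> List[float]:
    """Scale values to [0, 1] so that 1 is always the preferred end."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    scaled = [(v - lo) / (hi - lo) for v in values]
    return scaled if higher_is_better else [1.0 - s for s in scaled]


def select_model(cands: Sequence[Candidate], w_p: float, w_e: float,
                 max_size_mb: float, min_fps: float) -> Tuple[str, Dict[str, float]]:
    # Hardware constraint analysis: drop models the device cannot store or run fast enough.
    feasible = [c for c in cands if c.size_mb <= max_size_mb and c.fps >= min_fps]
    if not feasible:
        raise ValueError("no candidate satisfies the hardware constraints")
    perf = minmax([c.map50_95 for c in feasible])
    # Efficiency E: unweighted mean of the three normalised metrics (assumption).
    fps_n = minmax([c.fps for c in feasible])
    size_n = minmax([c.size_mb for c in feasible], higher_is_better=False)
    flops_n = minmax([c.gflops for c in feasible], higher_is_better=False)
    eff = [(f + s + g) / 3 for f, s, g in zip(fps_n, size_n, flops_n)]
    # Utility U = w_p * P + w_e * E; the highest utility wins.
    scores = {c.name: w_p * p + w_e * e for c, p, e in zip(feasible, perf, eff)}
    return max(scores, key=scores.get), scores


# Measured values copied from the YOLOv8 and YOLOv11 rows of the comparison table.
pool = [
    Candidate("YOLOv8n", 0.978, 1428.6, 6.65, 8.2),
    Candidate("YOLOv8s", 0.983, 500.0, 22.6, 28.6),
    Candidate("YOLOv8m", 0.986, 169.5, 52.1, 79.1),
    Candidate("YOLOv11n", 0.975, 1111.1, 5.6, 6.4),
    Candidate("YOLOv11s", 0.980, 526.3, 19.1, 21.3),
    Candidate("YOLOv11m", 0.985, 212.8, 40.7, 68.2),
]
# Hypothetical device limits: at most 25 MB and at least 300 FPS.
print(select_model(pool, 0.5, 0.5, max_size_mb=25, min_fps=300)[0])
print(select_model(pool, 0.9, 0.1, max_size_mb=25, min_fps=300)[0])
```

Because the candidates' mAP values differ only in the third decimal place, min-max scaling stretches those small gaps to the full [0, 1] range; under these assumptions, moving the weights from (0.5, 0.5) to (0.9, 0.1) changes the choice from YOLOv8n to YOLOv8s. Other normalisations, such as dividing by the best value, would weigh the same measurements differently.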

3.2.3. On-Site Detection and Localization

- Intrinsic Camera Parameters (K): These are properties inherent to the camera itself, such as the focal lengths along the two image axes, the principal point, and the skew coefficient. They are typically determined once through camera calibration and model how the camera projects a 3D scene onto the 2D image plane.

- Extrinsic Parameters: These define the camera’s position and orientation (rotation and translation) relative to the global world coordinate system; this is where the GPS and IMU data are integrated. A key assumption is that the road surface is a flat plane on which all detected objects lie. This simplification makes depth recoverable from a single image: an object’s 3D location is the point where the camera ray through its image position intersects the road plane. The GPS sensor is assumed to be at a known, fixed height above the road surface.
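Under the flat-road assumption, a detection can be placed on the map by intersecting the viewing ray of one image point (for example, the bottom centre of the bounding box) with the road plane. The Python sketch below assumes the OpenCV camera convention (x right, y down, z forward), a local east-north-up frame centred below the GPS antenna, a camera mounted directly above that antenna at height h, and a rotation built only from IMU pitch and yaw; all function names and numeric values are hypothetical. It also computes a mean planar Euclidean distance error, which is one plausible reading of the 2DEDE metric listed in Appendix A.

```python
import numpy as np


def intrinsic_matrix(fx, fy, cx, cy, skew=0.0):
    """Pinhole intrinsic matrix K (all entries in pixels)."""
    return np.array([[fx, skew, cx],
                     [0.0, fy, cy],
                     [0.0, 0.0, 1.0]])


def camera_to_world(pitch_deg, yaw_deg=0.0):
    """Rotation from camera axes (x right, y down, z forward) to a local
    east-north-up frame. Assumed convention: pitch > 0 tilts the camera
    towards the road; yaw = 0 looks north, positive yaw turns left (CCW)."""
    t, p = np.radians(pitch_deg), np.radians(yaw_deg)
    # Columns: camera x, y, z axes expressed in world coordinates before yaw.
    r_pitch = np.array([[1.0, 0.0, 0.0],
                        [0.0, -np.sin(t), np.cos(t)],
                        [0.0, -np.cos(t), -np.sin(t)]])
    r_yaw = np.array([[np.cos(p), -np.sin(p), 0.0],
                      [np.sin(p), np.cos(p), 0.0],
                      [0.0, 0.0, 1.0]])
    return r_yaw @ r_pitch


def pixel_to_ground(u, v, K, R_wc, cam_height):
    """Intersect the ray through pixel (u, v) with the road plane z = 0.
    Assumes the camera sits directly above the GPS antenna (hypothetical).
    Returns the (east, north) offset in metres, or None if the ray misses."""
    ray_cam = np.linalg.solve(K, np.array([u, v, 1.0]))  # K^-1 [u, v, 1]^T
    ray_world = R_wc @ ray_cam
    if ray_world[2] >= 0:  # ray is horizontal or points upwards
        return None
    scale = cam_height / -ray_world[2]  # descend exactly cam_height metres
    point = np.array([0.0, 0.0, cam_height]) + scale * ray_world
    return point[:2]


def mean_2d_distance_error(estimates, ground_truth):
    """Mean planar Euclidean distance between estimated and true positions."""
    est = np.asarray(estimates, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    return float(np.mean(np.linalg.norm(est - gt, axis=1)))


K = intrinsic_matrix(1000.0, 1000.0, 960.0, 540.0)  # hypothetical calibration
R = camera_to_world(pitch_deg=30.0)
print(pixel_to_ground(960.0, 540.0, K, R, cam_height=2.0))  # about 3.46 m ahead
```

For the principal point the result reduces to h / tan(pitch), which is a quick sanity check on the geometry; the east and north offsets would then be added to the GPS fix to obtain the object's global position.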

4. Experimental Settings and Evaluation Metrics

4.1. Experimental Environment

4.2. Evaluation Metrics

4.3. Evaluation Results Analysis and Discussion

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Type | Evaluation Metrics | Formulation | Description |
|---|---|---|---|
| Performance (Accuracy) | Precision | | High precision reduces false alarms of object damage, preventing unnecessary inspections and wasted resources. |
| | Recall | | High recall minimizes missed anomalies, ensuring an early response to safety risks. |
| | mAP@0.5 | | A strong mAP@0.5 confirms that damage can be consistently localized and classified under standard inspection criteria. |
| | mAP@0.5:0.95 | | A high mAP@0.5:0.95 demonstrates stable detection of object defects under stricter IoU thresholds, indicating robustness in real-world monitoring. |
| | F1 | | A high F1 score, which balances precision and recall, means the system both avoids false detections and captures true damage, supporting efficient and safe infrastructure management. |
| Efficiency (Lightweight) | FPS | | Indicates whether real-time operation can be guaranteed. |
| | TT (training time) | - | A shorter training time reduces development cost. Training time depends on the dataset size and the model complexity. |
| | IT (inference time) | | A shorter inference time enables real-time detection and faster system response, enhancing safety and efficiency. |
| | Params. | - | More parameters make the model more expressive but also increase computational cost and storage demand. |
| | GFLOPs | - | Lower GFLOPs indicate higher efficiency and faster inference on limited resources. |
| | Model size | - | Model size directly affects deployment cost and storage needs; smaller models suit memory-constrained devices such as mobile and embedded systems and also load and transfer faster. |
| Positioning | 2DEDE | | The smaller the distance error, the more accurately the actual location of the object is estimated. |
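Since the table gives no formulations for the accuracy metrics, the Python sketch below implements the textbook definitions as a reference: precision, recall, and F1 from true-positive, false-positive, and false-negative counts, and all-point interpolated average precision (the PASCAL VOC 2010+ style area under the precision envelope). mAP@0.5 averages AP over classes at an IoU threshold of 0.5, and mAP@0.5:0.95 additionally averages over the ten IoU thresholds from 0.50 to 0.95 in steps of 0.05. Detection frameworks differ in interpolation details (COCO-style evaluation, for instance, samples 101 recall points), so these functions are an illustration, not the exact evaluation code behind the reported results.

```python
import numpy as np


def precision_recall_f1(tp, fp, fn):
    """Detection-level precision, recall and F1 from match counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(recall, precision):
    """All-point interpolated AP for one class at one IoU threshold.
    `recall`/`precision` are cumulative values over detections ranked
    by descending confidence, so recall is non-decreasing."""
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    # Precision envelope: best precision achievable at this recall or higher.
    mpre = np.maximum.accumulate(mpre[::-1])[::-1]
    # Sum rectangle areas wherever recall increases.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


def mean_ap(ap_table):
    """ap_table[t][c]: AP of class c at IoU threshold t; returns the mean.
    One row gives mAP@0.5; ten rows (IoU 0.50 to 0.95) give mAP@0.5:0.95."""
    return float(np.mean(ap_table))


# Two ground-truth objects; the ranked detections are TP, FP, TP.
ap = average_precision(np.array([0.5, 0.5, 1.0]), np.array([1.0, 0.5, 2 / 3]))
print(round(ap, 4))  # 0.8333
print(precision_recall_f1(tp=90, fp=10, fn=10))
```

As a consistency check against the model comparison table, the harmonic mean of the YOLOv10n precision (0.966) and recall (0.958) is 0.962, matching its reported F1.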


| Category | Class | Number of Images | Ratio (%) |
|---|---|---|---|
| PE Drum | Normal | 30,511 | 4.36 |
| | Damaged | 40,946 | 5.84 |
| PE Guardrails | Normal | 55,577 | 7.93 |
| | Damaged | 31,918 | 4.57 |
| No Parking Cone | Normal | 48,027 | 6.86 |
| | Damaged | 31,970 | 4.56 |
| Traffic Cone | Normal | 60,276 | 8.60 |
| | Damaged | 58,462 | 8.34 |
| Tubular Marker | Normal | 72,370 | 10.33 |
| | Damaged | 39,561 | 5.65 |
| Snow Removal Box | Normal | 30,299 | 4.32 |
| | Damaged | 50,643 | 7.23 |
| Sandwich Board Sign | Normal | 41,572 | 5.93 |
| | Damaged | 55,655 | 7.94 |
| PE Fence | Normal | 26,426 | 3.77 |
| | Damaged | 26,430 | 3.77 |
| Total | | 700,643 | 100 |

| Model | Variant | Precision | Recall | F1 | mAP@0.5 | mAP@0.5:0.95 | FPS | IT (ms) | TT (h) | Params | GFLOPs | Model Size (MB) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv5 | n | 0.99 | 0.991 | 0.990 | 0.995 | 0.975 | 1250 | 0.8 | 0.64 | 2,503,854 | 7.2 | 5.2 |
| | s | 0.992 | 0.993 | 0.992 | 0.995 | 0.981 | 833 | 1.2 | 0.65 | 9,112,310 | 23.8 | 18.6 |
| | m | 0.991 | 0.99 | 0.990 | 0.995 | 0.984 | 357 | 2.8 | 0.91 | 25,066,278 | 64.4 | 50.5 |
| YOLOv6 | n | 0.981 | 0.981 | 0.981 | 0.994 | 0.964 | 1000 | 1 | 0.55 | 4,238,342 | 11.9 | 8.7 |
| | s | 0.986 | 0.985 | 0.985 | 0.994 | 0.974 | 370 | 2.7 | 0.66 | 16,306,230 | 44.2 | 32.8 |
| | m | 0.988 | 0.984 | 0.986 | 0.994 | 0.975 | 208 | 4.8 | 1.23 | 51,997,798 | 161.6 | 104.3 |
| YOLOv8 | n | 0.991 | 0.991 | 0.991 | 0.995 | 0.978 | 1428.6 | 0.7 | 0.58 | 3,011,238 | 8.2 | 6.65 |
| | s | 0.993 | 0.993 | 0.993 | 0.995 | 0.983 | 500 | 2 | 0.84 | 11,136,374 | 28.6 | 22.6 |
| | m | 0.99 | 0.992 | 0.991 | 0.995 | 0.986 | 169.5 | 5.9 | 0.94 | 25,857,478 | 79.1 | 52.1 |
| YOLOv9 | t | 0.982 | 0.975 | 0.978 | 0.993 | 0.962 | 1000 | 1 | 1.20 | 2,005,798 | 7.8 | 4.4 |
| | s | 0.984 | 0.985 | 0.984 | 0.994 | 0.973 | 345 | 2.9 | 1.22 | 7,167,862 | 26.7 | 15.2 |
| | m | 0.987 | 0.989 | 0.988 | 0.995 | 0.978 | 285.7 | 3.5 | 1.21 | 20,014,438 | 76.5 | 40.8 |
| YOLOv10 | n | 0.966 | 0.958 | 0.962 | 0.99 | 0.955 | 1111.1 | 0.9 | 0.82 | 2,707,820 | 8.4 | 5.7 |
| | s | 0.978 | 0.968 | 0.973 | 0.993 | 0.97 | 454.5 | 2.2 | 0.88 | 8,067,900 | 24.8 | 16.5 |
| | m | 0.986 | 0.972 | 0.979 | 0.994 | 0.977 | 333 | 3 | 1.14 | 15,314,326 | 58.9 | 33.4 |
| YOLOv11 | n | 0.99 | 0.99 | 0.990 | 0.994 | 0.975 | 1111.1 | 0.9 | 0.69 | 2,590,230 | 6.4 | 5.6 |
| | s | 0.987 | 0.986 | 0.986 | 0.994 | 0.98 | 526.3 | 1.9 | 0.72 | 9,413,574 | 21.3 | 19.1 |
| | m | 0.99 | 0.993 | 0.991 | 0.995 | 0.985 | 212.8 | 4.7 | 0.99 | 20,054,550 | 68.2 | 40.7 |
| YOLOv12 | n | 0.985 | 0.99 | 0.987 | 0.995 | 0.975 | 833.3 | 1.2 | 0.84 | 2,568,422 | 6.5 | 5.6 |
| | s | 0.983 | 0.989 | 0.986 | 0.995 | 0.981 | 556 | 1.8 | 0.90 | 9,253,910 | 21.5 | 19 |
| | m | 0.989 | 0.992 | 0.990 | 0.995 | 0.984 | 270 | 3.7 | 1.24 | 20,139,030 | 67.7 | 40.9 |
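The FPS column in the comparison table is consistent, up to rounding, with FPS = 1000 / IT (ms) for every row (for example, 1000 / 0.7 ≈ 1428.6 for YOLOv8n), which suggests that FPS was derived from the model inference time alone; if so, pre-processing, post-processing, and data transfer are not included. The Python sketch below reproduces that conversion and, as one possible way to read the table, extracts the accuracy-latency Pareto front: the models for which no other model is at least as accurate (mAP@0.5:0.95) and at least as fast while being strictly better in one of the two. The Pareto analysis is an added illustration, not part of the described selection procedure, and the helper names are hypothetical.

```python
from typing import Dict, List, Tuple

# (mAP@0.5:0.95, IT in ms) copied from the comparison table.
MODELS: Dict[str, Tuple[float, float]] = {
    "YOLOv5n": (0.975, 0.8), "YOLOv5s": (0.981, 1.2), "YOLOv5m": (0.984, 2.8),
    "YOLOv6n": (0.964, 1.0), "YOLOv6s": (0.974, 2.7), "YOLOv6m": (0.975, 4.8),
    "YOLOv8n": (0.978, 0.7), "YOLOv8s": (0.983, 2.0), "YOLOv8m": (0.986, 5.9),
    "YOLOv9t": (0.962, 1.0), "YOLOv9s": (0.973, 2.9), "YOLOv9m": (0.978, 3.5),
    "YOLOv10n": (0.955, 0.9), "YOLOv10s": (0.970, 2.2), "YOLOv10m": (0.977, 3.0),
    "YOLOv11n": (0.975, 0.9), "YOLOv11s": (0.980, 1.9), "YOLOv11m": (0.985, 4.7),
    "YOLOv12n": (0.975, 1.2), "YOLOv12s": (0.981, 1.8), "YOLOv12m": (0.984, 3.7),
}


def fps_from_latency(it_ms: float) -> float:
    """Frames per second implied by a per-image inference time in ms."""
    return 1000.0 / it_ms


def dominates(a: Tuple[float, float], b: Tuple[float, float]) -> bool:
    """a dominates b: at least as accurate and as fast, strictly better in one."""
    (acc_a, it_a), (acc_b, it_b) = a, b
    return acc_a >= acc_b and it_a <= it_b and (acc_a > acc_b or it_a < it_b)


def pareto_front(models: Dict[str, Tuple[float, float]]) -> List[str]:
    """Names of non-dominated models, fastest first."""
    front = [name for name, m in models.items()
             if not any(dominates(other, m) for other in models.values())]
    return sorted(front, key=lambda name: models[name][1])


print(round(fps_from_latency(0.7), 1))  # 1428.6
print(pareto_front(MODELS))
```

With these numbers the front is YOLOv8n, YOLOv5s, YOLOv8s, YOLOv5m, YOLOv11m, and YOLOv8m. YOLOv8s, whose parameter count (11,136,374) matches that of the single-object models in the per-object results table, is one of the points on it.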

| Type of Object | Dataset Size (Train/Val.) | Precision | Recall | F1 | mAP@0.5 | mAP@0.5:0.95 | TT (h) | GFLOPs | Params | Model Size (MB) |
|---|---|---|---|---|---|---|---|---|---|---|
| 8 Objects (16 Classes) | 174,633/43,455 | 0.976 | 0.973 | 0.947 | 0.991 | 0.946 | 2.76 | 28.7 | 11,141,792 | 22.6 |
| PE Drum | 17,866/4,493 | 0.979 | 0.967 | 0.973 | 0.992 | 0.951 | 0.28 | 28.6 | 11,136,374 | 22.6 |
| PE Barrier | 22,181/5,642 | 0.977 | 0.98 | 0.978 | 0.993 | 0.962 | 0.35 | 28.6 | 11,136,374 | 22.6 |
| No Parking Cone | 18,333/4,563 | 0.975 | 0.984 | 0.979 | 0.994 | 0.957 | 0.29 | 28.6 | 11,136,374 | 22.6 |
| Traffic Cone | 30,430/7,348 | 0.974 | 0.972 | 0.973 | 0.992 | 0.936 | 0.48 | 28.6 | 11,136,374 | 22.6 |
| Tubular Marker | 16,962/4,316 | 0.959 | 0.953 | 0.95 | 0.988 | 0.892 | 0.27 | 28.6 | 11,136,374 | 22.6 |
| Snow Removal Box | 24,858/6,226 | 0.996 | 0.997 | 0.996 | 0.995 | 0.962 | 0.39 | 28.6 | 11,136,374 | 22.6 |
| Sandwich Board Sign | 39,620/9,699 | 0.993 | 0.993 | 0.993 | 0.995 | 0.983 | 0.63 | 28.6 | 11,136,374 | 22.6 |
| PE Fence | 16,423/4,104 | 0.995 | 0.995 | 0.995 | 0.994 | 0.983 | 0.26 | 28.6 | 11,136,374 | 22.6 |
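To compare the eight single-object models with the unified 16-class model at a glance, the Python sketch below totals their training times and takes the unweighted mean of their mAP values, using the numbers in the table above. Summing training times assumes the models are trained one after another on the same hardware (parallel training would shorten wall-clock time), and treating every object type equally rather than weighting by validation-set size is also an assumption. Because the unified model's mAP is computed jointly over all classes, the comparison is indicative rather than exact.

```python
from statistics import mean

# Per-object rows: (TT in hours, mAP@0.5, mAP@0.5:0.95), copied from the table.
SINGLE_OBJECT = {
    "PE Drum": (0.28, 0.992, 0.951),
    "PE Barrier": (0.35, 0.993, 0.962),
    "No Parking Cone": (0.29, 0.994, 0.957),
    "Traffic Cone": (0.48, 0.992, 0.936),
    "Tubular Marker": (0.27, 0.988, 0.892),
    "Snow Removal Box": (0.39, 0.995, 0.962),
    "Sandwich Board Sign": (0.63, 0.995, 0.983),
    "PE Fence": (0.26, 0.994, 0.983),
}
UNIFIED = (2.76, 0.991, 0.946)  # 8 objects (16 classes)

# Sequential training time of all single-object models (assumption).
total_tt = sum(tt for tt, _, _ in SINGLE_OBJECT.values())
# Unweighted means across object types (assumption).
mean_map50 = mean(m50 for _, m50, _ in SINGLE_OBJECT.values())
mean_map50_95 = mean(m for _, _, m in SINGLE_OBJECT.values())
weakest = min(SINGLE_OBJECT, key=lambda k: SINGLE_OBJECT[k][2])

print(f"training time: {total_tt:.2f} h (single-object) vs {UNIFIED[0]:.2f} h (unified)")
print(f"mAP@0.5: {mean_map50:.4f} vs {UNIFIED[1]:.3f}")
print(f"mAP@0.5:0.95: {mean_map50_95:.4f} vs {UNIFIED[2]:.3f}")
print("lowest mAP@0.5:0.95:", weakest)
```

With the table's values, the eight models together take about 2.95 h versus 2.76 h for the unified model, while their mean mAP@0.5:0.95 is about 0.953 versus 0.946; the Tubular Marker model (0.892) is the clear outlier.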

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kang, J.; Jang, S.; Choi, Y.; Lee, W.; Kim, B. Collaborative Real-Time Single-Object Anomaly Detection Framework of Roadside Facilities for Traffic Safety and Management Using Efficient YOLO. Appl. Sci. 2025, 15, 11139. https://doi.org/10.3390/app152011139

Kang J, Jang S, Choi Y, Lee W, Kim B. Collaborative Real-Time Single-Object Anomaly Detection Framework of Roadside Facilities for Traffic Safety and Management Using Efficient YOLO. Applied Sciences. 2025; 15(20):11139. https://doi.org/10.3390/app152011139

Chicago/Turabian Style: Kang, Jiheon, Soohyen Jang, Yoonyoung Choi, Wooyong Lee, and Byoungkug Kim. 2025. "Collaborative Real-Time Single-Object Anomaly Detection Framework of Roadside Facilities for Traffic Safety and Management Using Efficient YOLO" Applied Sciences 15, no. 20: 11139. https://doi.org/10.3390/app152011139

APA Style: Kang, J., Jang, S., Choi, Y., Lee, W., & Kim, B. (2025). Collaborative Real-Time Single-Object Anomaly Detection Framework of Roadside Facilities for Traffic Safety and Management Using Efficient YOLO. Applied Sciences, 15(20), 11139. https://doi.org/10.3390/app152011139