1. Introduction

The rapid adoption of ChatGPT-4 has accelerated the integration of generative artificial intelligence (GenAI) into higher education. Within months of its release, large language models entered student workflows for ideation, drafting, and revision. GenAI can reduce cognitive load, provide personalized feedback, and support iterative learning [1]. These capabilities build on earlier educational AI systems, such as intelligent tutoring, that have improved student outcomes [2]. However, widespread use raises concerns about academic integrity, epistemic reliability, and ethical governance [3,4].

The perceived utility of GenAI varies with task type, disciplinary norms, task specificity, and user characteristics. Acosta-Enríquez et al. [5] found that perceived ease of use, emotional disposition, and a sense of responsibility are significant predictors of students’ intention to use AI tools in academia. Similarly, Al-Abdullatif and Alsubaie [6] reported that perceived value (encompassing constructs such as usefulness, enjoyment, and affordability) is a principal determinant of engagement, with GenAI literacy serving as a salient moderating variable.

Research in writing studies has emphasized how GenAI is reshaping academic writing practices. Tools such as ChatGPT support the full composition process (ideation, structuring, drafting, and revision) by providing immediate feedback, personalized guidance, and opportunities for iterative refinement [7,8,9,10]. Evidence also shows these tools’ usefulness in drafting sections of scholarly work, such as abstracts, introductions, and theoretical frameworks [11,12], and in enhancing writers’ linguistic accuracy and syntactic complexity [13,14,15]. Explainable AI and alignment with proficiency standards are emerging strategies to increase transparency and pedagogical utility, particularly for second-language writers, who receive targeted support in grammar, collocation, and rhetorical conventions [1], and for language learning instruction [16,17].

At the same time, studies report risks (including plagiarism, overreliance, automation bias, and superficial reasoning) when instructional design is weak, which can erode students’ critical skills, information retention, attention capabilities, and conceptual understanding [18,19]. Because GenAI is not a neutral tool, but rather “generative, opaque, and unstable” [10], scholars call for new pedagogical competencies (e.g., frameworks such as Technological Pedagogical Content Knowledge (TPACK)) to guide its effective classroom use. Rasul et al. [20] underscore the need for careful integration strategies, detailing both the benefits and challenges of deploying ChatGPT in higher education. Van Dis et al. [21] emphasize the importance of strategic research priorities to ensure responsible AI integration, pointing to challenges that extend beyond classroom practice toward broader institutional and ethical frameworks. Likewise, Zheng and Yang [22] outline emerging research trends in AI-enhanced language education and the need to align GenAI tools with sound pedagogical principles. Without careful instructional design, therefore, the pervasive availability of AI may foster superficial reasoning or generic argumentation, undermining originality in student work [21].

The literature also indicates that students predominantly use GenAI for low-stakes or repetitive academic tasks, which allows them to reallocate cognitive resources toward higher-order analytical processes. Barrot [1] and Chan and Hu [8] document this functional displacement; for instance, students in technical fields often employ GenAI for code generation, whereas those in the humanities primarily use it for writing support [23]. Task type also modulates perceived usefulness. Chan and Hu [8] observed that student reliance on ChatGPT intensifies during the preliminary stages of academic work, such as brainstorming or outlining, when immediate feedback can jump-start the creative process. Additionally, individual-level factors, including the user’s stage of academic progression and epistemological orientation, appear to influence patterns of GenAI engagement [9,24]. Yan [25] and Qu and Wang [4] report disciplinary asymmetries in students’ skepticism toward AI assistance, particularly in contexts where institutional policies on GenAI use remain underdeveloped. In contrast, other studies suggest that ChatGPT can function as a motivational scaffold, enhancing creative confidence and learner autonomy [26]. This dichotomy highlights the nuanced role that generative AI plays in learning.

These concerns strengthen the importance of examining final degree projects (FDPs), which combine high-stakes assessment with demands for technical accuracy, disciplinary integration, originality, critical thinking, and rigorous argumentation. Despite extensive research on low-stakes tasks, the role of GenAI in FDPs is underexplored. This study examines final-year students’ perceptions of GenAI’s utility across FDP stages and uses AI-driven linguistic analysis to assess academic maturity and support comparative interpretation. The following objectives guided the development and interpretation of our study:

To assess academic maturity and linguistic complexity in students’ oral discourse using GenAI-based linguistic analysis tools, ensuring participant homogeneity as a methodological foundation for comparative interpretation;

To analyze students’ perceived utility of GenAI across different phases of the final degree project’s development, identifying significant variations based on academic discipline, task type, and individual characteristics;

To explain these patterns through an inductive–deductive thematic analysis of the discussion transcripts, integrating emergent themes with item-level descriptive results within a convergent mixed-methods framework.

2. Materials and Methods

2.1. Participants

The study included final-year undergraduate students enrolled in Tourism, Business Administration and Management, Law, Computer Engineering, Chemical Engineering, Chemistry, History, Musicology, Philosophy, Medicine, and Psychology at a public university in Spain. One student per discipline was selected as a representative case, with a gender distribution of 36.36% male and 63.64% female. While this limited sample does not allow for generalization, it enables an exploratory analysis of cross-disciplinary patterns in GenAI use during final degree project (FDP) development.

2.2. Procedure

The research followed a descriptive, observational design covering the complete FDP cycle, from initial planning through to the final report, implemented over three structured discussion sessions conducted between March and May 2025. To guide design and reporting, we adopted a convergent mixed-methods design (single-phase, concurrent collection of quantitative and qualitative data) following Creswell’s core typology for mixed-methods research [27]. In this approach, numeric responses gathered during the moderated debates (closed-ended items via Kahoot) and textual data from full audio recordings/transcripts of the 60 min group discussions were collected in parallel, analyzed separately, and then integrated at interpretation to compare, corroborate, and extend findings (triangulation). The rationale for mixing is pragmatic: combining scale-based indicators of perceived GenAI utility with participants’ articulated reasoning provides a more comprehensive account than either strand alone.

Each session was structured around a dual-phase format:

Phase 1: 60 min engagement with GenAI tools applied to participants’ ongoing FDPs. In this first phase, students worked on their FDPs through uninterrupted interaction with GenAI tools. Their screens were recorded throughout to capture the information they extracted and how they obtained it, which made it possible to monitor different prompting strategies. Furthermore, the presence of three or more supervisors was mandatory to ensure that students’ issues with the applications were addressed and that GenAI was not used improperly.

Phase 2: 60 min of moderated discussion. In this phase, students answered questions posed by the researchers. Depending on the question content, answers were open (requiring a short text), multiple choice, or given on a 1–5 rating scale. The specific tools used in this phase are described in the following section. Results were displayed after each question to prompt a short debate. This strategy stimulated discussion, enabling participants to reflect on peer-generated insights. The dataset held pre-interaction and post-interaction responses, offering a dynamic view of student perspectives. Conversations were recorded and transcribed for later analysis. The research team included experts in Computer Science, Education, and Psychology to cover all potential areas of interest in the experiment.

The sessions, task types, and debate questions were focused on the different phases of the FDP’s development:

Session 1: Introduction, formulation of objectives, and construction of the theoretical framework.

Session 2: Methodological design, data analysis procedures, and formal aspects of the written dissertation.

Session 3: Synthesis of results, formulation of conclusions, and preparation for oral defense.

2.3. Tools

With a small experimental sample (11 students), it is crucial to ensure that results are not biased by the inclusion of students with atypical characteristics. For that reason, a preliminary part of our study focused on analyzing individual differences between students.

In this preliminary section, we used an AI classification algorithm that measures the academic maturity of students based on written texts. The procedure starts by obtaining the lexical diversity and readability metrics (Type Token Ratio (TTR) and Flesch–Kincaid Grade (FKG)) using the 2021 open-source Python library LinguaF (https://github.com/WSE-research/LinguaF/tree/main, accessed on 12 July 2024; 2024 update). The TTR quantifies lexical diversity by dividing the number of unique words (types) by the total number of words (tokens), with values closer to 1 indicating richer vocabulary use and lower values reflecting repetition. The FKG is a readability index based on average sentence length and syllables per word, designed to approximate the level of education required to understand a text; lower values indicate simpler writing, while higher scores reflect greater syntactic and lexical complexity. These measures served as inputs for a Random Forest regressor trained on data from degree students across the four program years. Even when used without further linguistic analysis, the TTR and FKG provide reliable predictors of writing maturity and sample homogeneity.
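To make the two metrics concrete, the following minimal sketch computes the TTR and the FKG with the Python standard library only. It does not reproduce the LinguaF implementation used in the study: the tokenizer, the sentence splitter, and the vowel-group syllable counter are simplifying assumptions, and all function names are hypothetical. The FKG uses the standard formula, 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59.

```python
import re


def tokenize(text):
    """Lowercase word tokens (ASCII letters and apostrophes only; an assumption)."""
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(text):
    """Unique words (types) divided by total words (tokens)."""
    tokens = tokenize(text)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def count_syllables(word):
    """Rough heuristic: one syllable per group of consecutive vowels, minimum 1."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_kincaid_grade(text):
    """Standard Flesch-Kincaid Grade formula with heuristic syllable counts."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    tokens = tokenize(text)
    if not sentences or not tokens:
        return 0.0
    syllables = sum(count_syllables(t) for t in tokens)
    words_per_sentence = len(tokens) / len(sentences)
    syllables_per_word = syllables / len(tokens)
    return 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59
```

Because the syllable counter is heuristic, FKG values from this sketch may differ slightly from those of a dedicated library; the TTR depends only on tokenization.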

In the discussion sessions, data collection was operationalized through two complementary instruments: the Kahoot platform and the audiovisual recording and transcription of oral discourse. The Kahoot tool facilitated the real-time capture of individual responses to questions, offering a preliminary diagnostic of students’ perceptions and expectations regarding GenAI. We conducted an inductive–deductive thematic analysis of the verbatim transcripts. Two researchers independently coded an initial subset to develop a shared codebook (definitions and examples), resolved discrepancies by consensus, and then applied the finalized codebook to the full corpus using constant comparison. The unit of analysis was a short meaning unit relevant to the research questions. To enhance trustworthiness, we kept an audit trail and performed cross-checks on a random sample. Finally, themes were aligned with item-level descriptive results to integrate qualitative explanations with the quantitative strand.

2.4. Ethical Issues

The research was conducted in strict compliance with the ethical standards governing studies involving human participants. Prior to participation, informed consent was obtained from all students, who were assured of the anonymity and confidentiality of their contributions. No personally identifiable information was collected at any stage. Furthermore, the study received formal ethical clearance from the Ethics Committee of Universidad Autónoma de Madrid under protocol CEI-145-3331.

3. Results and Discussion

3.1. Linguistic Analysis and Academic Maturity Assessment

Figure 1 presents the linguistic parameters derived from transcriptions of students’ interventions during the post-session debate following their first experience with GenAI. We included only the 24 answers that were long enough to yield reliable linguistic metrics. The figure reveals distinct patterns in both lexical diversity and syntactic complexity measures.

TTR values, shown in Figure 1a, demonstrate remarkable homogeneity across all student interventions, with values approaching the theoretical maximum of 1. This consistency suggests that students maintained comparable levels of lexical diversity during their oral contributions, indicating similar vocabulary richness in their spontaneous discourse. In contrast, the FKG values exhibit greater variability, ranging from 5 to 15 (Figure 1b). This heterogeneity is expected and contextually appropriate, as the FKG measures syntactic complexity, which naturally fluctuates in spontaneous informal oral discourse. During debates, speakers must formulate responses extemporaneously, often resulting in occasional false starts and varied sentence structures and complexity levels that differ significantly from carefully constructed written texts.

The AI algorithm combines these linguistic parameters to predict academic maturity, with results presented in Figure 1c. The prediction obtained for every single text is shown to ensure that average values do not hide strong differences. All students’ interventions yielded similar academic maturity predictions, clustering around values slightly above 2 on the 1–4 scale (the scale mirrors the four-year degree structure). While this may initially appear low for fourth-year students, this finding is reasonable when considering the algorithm’s training. The predictive model was developed using written texts produced in an academic environment where students had adequate time for reflection, revision, and error correction. Consequently, lower maturity scores for oral contributions are expected and methodologically sound.

Importantly, the homogeneity of the academic maturity predictions given by our AI algorithm across all participants (Figure 1c) supports our analytical approach. This consistency suggests that students possess comparable levels of academic development, so that response variations can be attributed to factors such as academic discipline or specific task characteristics rather than to individual differences in academic background.
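One simple way to quantify the homogeneity described here is to summarize the spread of the per-text predictions. The sketch below is illustrative only: the study reports homogeneity from Figure 1c and does not state that these statistics were computed, the function name is hypothetical, and the example values are invented placeholders on the 1–4 scale, not the study's data.

```python
from statistics import mean, pstdev


def spread_summary(predictions):
    """Return the mean, population standard deviation, and range of predictions."""
    return {
        "mean": mean(predictions),
        "sd": pstdev(predictions),
        "range": max(predictions) - min(predictions),
    }


# Hypothetical predictions on the 1-4 maturity scale; NOT the study's data.
example_predictions = [2.1, 2.3, 2.2, 2.4, 2.0]
summary = spread_summary(example_predictions)
```

A small standard deviation and range relative to the width of the 1–4 scale would be consistent with a homogeneous sample; what counts as "small" is a judgment the analyst has to justify.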

3.2. Quantitative Assessment of GenAI Utility Across Project Phases

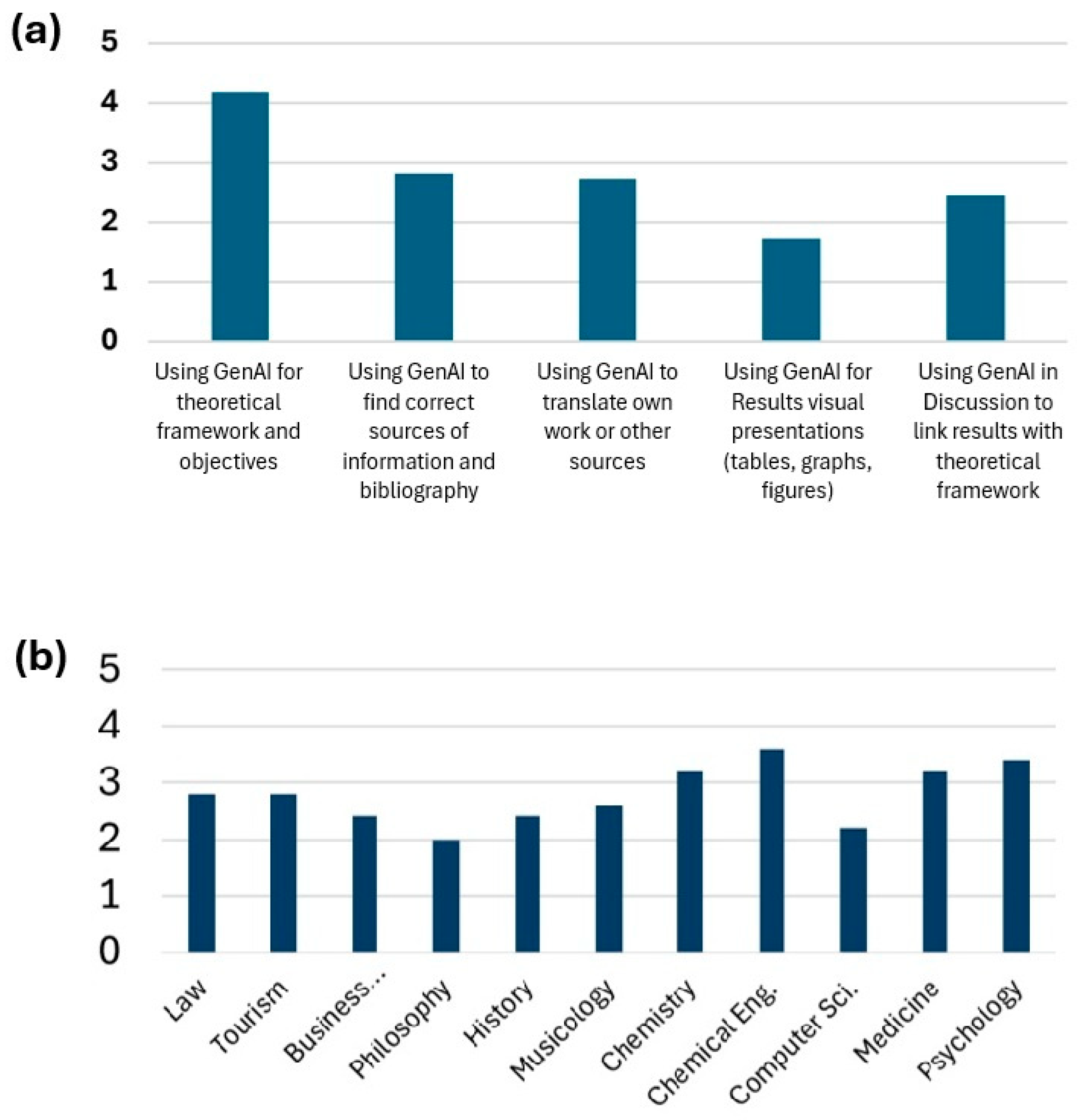

Figure 2a presents the mean scores for the five quantitative questions (1–5 scale) assessing GenAI’s utility across different final degree project phases. The results reveal notable variation in students’ perceptions of the effectiveness of AI assistance depending on the specific project component and, as shown in Figure 2b, on academic discipline.

The theoretical background and objective development received the highest average rating, reflecting students’ recognition of GenAI’s strength in synthesizing existing knowledge and organizing conceptual foundations. This finding aligns with GenAI’s documented usefulness for these fundamental components of academic research projects. Conversely, visual presentation development received the lowest average scores. This disparity likely reflects discipline-specific variations in visualization requirements and familiarity with AI-assisted design tools. Engineering and technical disciplines may have more sophisticated visualization needs that current GenAI tools cannot adequately address, while humanities-focused programs may rely heavily on textual presentations. The intermediate scores for the remaining three project phases suggest moderate perceived utility that varies with specific task characteristics and disciplinary contexts.
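The two aggregations described above (mean score per question and mean score per discipline) can be sketched as follows. The record layout, the helper name `mean_by`, and the ratings themselves are hypothetical placeholders for illustration; they are not the study's data or its analysis code.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical (discipline, question, rating) records on a 1-5 scale;
# NOT the study's data.
ratings = [
    ("Discipline A", "Q1", 5), ("Discipline A", "Q2", 3),
    ("Discipline B", "Q1", 4), ("Discipline B", "Q2", 2),
]


def mean_by(records, key_index):
    """Average the rating (field 2) grouped by the field at key_index."""
    groups = defaultdict(list)
    for record in records:
        groups[record[key_index]].append(record[2])
    return {key: mean(values) for key, values in groups.items()}


by_question = mean_by(ratings, 1)    # one mean per question
by_discipline = mean_by(ratings, 0)  # one mean per discipline
```

With a single student per discipline, each per-discipline mean averages that one student's answers across questions, so it describes an individual case rather than a disciplinary population.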

3.3. Disciplinary Variations and AI Adoption

Figure 2b illustrates notable differences in average responses across academic disciplines. Computer Science and Philosophy students consistently provided the lowest ratings across all questions, whereas Chemical Engineering and Psychology students reported the highest perceived utility. The lower ratings from Computer Science students may reflect a deeper awareness of AI limitations and technical constraints, leading to more critical evaluations, whereas Philosophy students’ skepticism likely stems from norms of original argumentation and critical thinking, where AI support is seen as less appropriate. By contrast, the higher scores in Chemical Engineering and Psychology align with workflows that rely on systematic literature review, methodological scaffolding, and structured analysis that GenAI can readily support. These aggregated contrasts are further explained by our inductive–deductive thematic analysis, which surfaced distinct disciplinary logics across conceptual, methodological, documentary, and communicative tasks.

(a) Epistemic fit and technical literacy. Students in engineering/computing approach GenAI through a procedural–technical lens (precision, reproducibility, code quality), often restricting its role in design-level decisions: “In this case, I’ve been guided by my thesis advisor; that’s why I haven’t used [GenAI] for methodological design.” In contrast, Chemical Engineering emphasizes technical wording, clarification, and idea sourcing: “It’s useful for technical/academic wording, to explain concepts I don’t understand, and to look for bibliography or ideas.” Chemistry adopts a trust-but-verify stance, flagging domain-specific errors: “Sometimes it gets confused when I ask about uncommon things… The reference it gave me had the wrong article title: almost right, but not quite.”

(b) Normative constraints and citation stringency. Fields with strict citation/style regimes (e.g., Law, Vancouver in Medicine) report guarded or selective use for references. As one student notes: “No… given how strict professors are with citations, I don’t trust [GenAI] for that task.” Others limit GenAI to format conversion: “I provided it with all my citations and asked it to convert them to Vancouver, and I copied and pasted what it gave me.”

(c) Conceptual scaffolding versus originality norms. Philosophy students leverage GenAI for clarifying frameworks and structuring, while avoiding conclusion-level guidance: “For the theoretical framework… it clarified the state of the question and gave me useful bibliography… It helped with brainstorming and structuring what I want more clearly.” This helps explain their lower overall ratings despite targeted, upstream benefits.

(d) Managerial/organizational affordances. Business-oriented profiles emphasize organization, synthesis, and alignment across sections: “It was useful to check that what I concluded was related to my objectives and hypotheses.” The Tourism student similarly highlighted drafting support: “Often, I tell it my ideas and it writes them for me.”

The qualitative themes account for the quantitative gradients. The higher utility in Chemical Engineering and Psychology coheres with tasks where GenAI maps onto systematic literature work, analytic scaffolding, and formal presentation. Law (style rigidity) and computing (technical constraints, advisor-led design) show cautious, bounded adoption. Chemistry’s domain-sensitive caution tempers high perceived utility with error-aware oversight. While these patterns are clear at the group level, we note that firm conclusions require examining individual responses without aggregation, as developed in the next section.

3.4. Individual Response Patterns

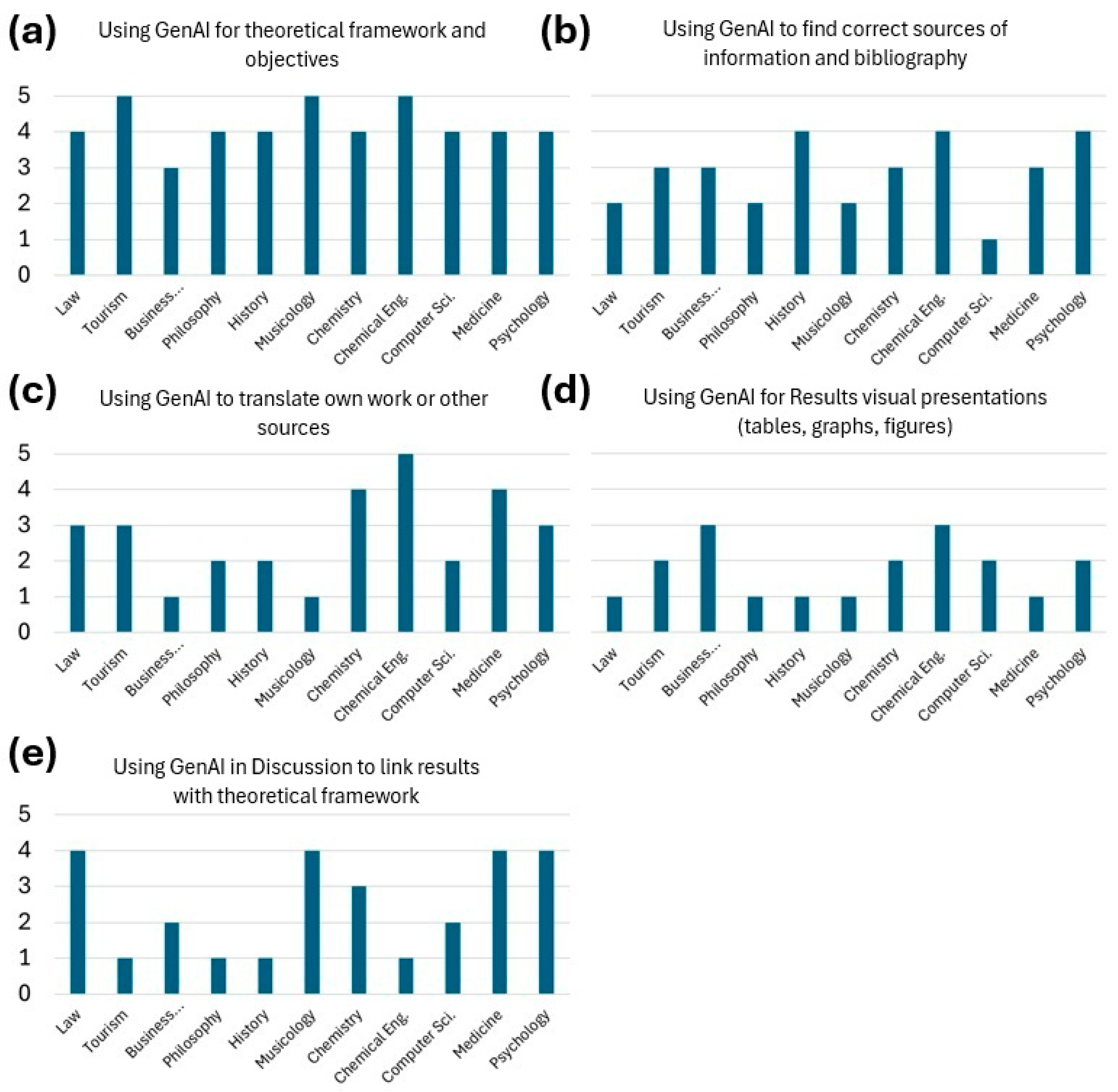

Figure 3 provides a comprehensive view of individual student responses across all quantitative questions throughout the three GenAI sessions. The visualization reveals considerable individual variation, suggesting that personal factors, prior experience, and specific project requirements significantly influence perceived utility.

The scale employed (1–5) represents an interval measure where students understood 1 as maximum disagreement and 5 as maximum agreement with utility statements. This is not a Likert scale in the traditional sense, as it does not link numerical values to fixed subjective perceptions such as “strongly agree” or “strongly disagree”. While we cannot precisely quantify students’ subjective interpretations of intermediate values, the consistent application of endpoint anchors provides reliable comparative data across participants and sessions. We can reasonably assume that students perceive equal intervals between consecutive scale values, treating the measure as an interval scale.

Analysis of Figure 3a reveals minimal variation across disciplines regarding GenAI’s utility for theoretical framework and objective development. This consistency aligns with our previous findings (Figure 2), identifying this as the area of highest perceived AI utility. Only the Business Administration student provided a notably lower score (3/5), which may be attributed to their project’s focus on a specific business model that provided sufficient background information, reducing the perceived need for AI assistance in conceptual development. Still, qualitative comments show targeted use for structuring and clarity in several cases (e.g., “For the theoretical framework… it clarified the state of the question and gave me useful bibliography… it helped me structure what I want more clearly” and “It helped me link one idea to the next in writing” (Philosophy)).

Substantial interdisciplinary differences emerge in Figure 3b, where students evaluated GenAI’s effectiveness for identifying relevant information sources. Scores range from 1 to 4 across different academic areas, reflecting varied experiences and expectations. Post-session discussions revealed that no student expressed complete trust in GenAI for bibliographic research. Some highlighted useful summaries and idea generation (“If I’ve already found some articles, I ask it to summarize them,” (Chemical Engineering) and “It helps me look for more ideas for sources and types of analyses,” (History)), while others explicitly withheld trust for references or noted omissions (“Since professors are very strict with citations, I don’t trust it with that task,” (Law), “The problem is that sometimes it ignores information that is relevant,” (Law), and “Sometimes it gets confused when I ask about uncommon things… the article title wasn’t correct,” (Chemistry)). Students who provided higher ratings acknowledged their skepticism but reported success in training the GenAI with domain-specific expertise to enhance its efficiency. This finding suggests that GenAI utility for bibliography management depends on proper training and domain-specific customization rather than on its use as a standalone application.

Figure 3c demonstrates considerable disciplinary variation in GenAI utility for text translation, with responses influenced by field-specific language requirements. Higher scores were consistently observed in Chemistry, Chemical Engineering, and Medicine, where English-language sources predominate. Qualitative data indicate language mediation as a recurrent use case (“What I ask most is the ‘translation’ of very long texts into easier-to-understand, more synthesized texts,” (Law) and “Targeted help with writing or translation of concepts,” (Chemistry)), alongside style elevation in biomedical contexts (“It rewrote what I had in a more formal way, and more orderly,” (Medicine)). This pattern reflects the practical necessity of translation support in disciplines where the primary literature is published in English, while students may need to produce final projects in their native language (Spanish). Individual factors such as English proficiency levels and institutional requirements for project language also contribute to these variations.

The analysis of GenAI utility for visual elements (graphs, pictures) reveals the lowest overall scores across all project phases (Figure 3d). This finding is particularly pronounced in disciplines where visual elements are less central to academic work, while higher scores correspond to fields where graphical representation is essential, such as Business Administration and Chemical Engineering. Regarding the design of oral presentations for the defense of their projects, qualitative comments emphasize limited reliance on GenAI for visual production, reserving it for organizational cues (“What title to put on each slide and a sense of what to talk about in each” (Medicine)), skepticism about generated assets (“I tried to have it generate a cover but I didn’t like it,” (Chemistry)), and preferences for natural delivery in oral defenses (“I think the oral presentation should be more natural than something provided by AI,” (Law)).

Finally, student evaluations of GenAI’s ability to link conclusions with theoretical frameworks yielded diverse responses (Figure 3e), which mirrored the varied opinions expressed during post-session debates. Students providing lower ratings identified two primary limitations:

GenAI-generated conclusions were often overly simplistic and lacked depth;

GenAI systems demonstrated a tendency toward false positivity, generating optimistic conclusions even when data did not support such interpretations.

Conversely, students with higher ratings, while acknowledging GenAI’s limitations and the need for critical evaluation, appreciated its capacity to suggest novel perspectives that could serve as starting points for more detailed analysis and development (“I only used it as a base to write my own conclusion,” (Chemistry), “Useful to check that what I concluded was related to my objectives and hypotheses,” (Business Administration), and “To explain results I wasn’t expecting, and, in general, to explain what I obtained,” (Psychology)).

These findings collectively indicate that individual experiences with GenAI in academic contexts vary substantially, highlighting the importance of personalized approaches to AI integration in educational settings. Such integration requires a thorough understanding of the needs of each degree program, based on its characteristics, and of the academic maturity that its students can be expected to reach.

4. Discussion and Conclusions

This study examined GenAI applications in final degree project development across disciplines through a longitudinal analysis of the entire project cycle. Participant homogeneity was checked with AI-based linguistic analysis tools [9,12], so that differences in perceived GenAI utility can be attributed to disciplinary contexts or other uncontrolled factors rather than to academic preparedness. Because each discipline was represented by a single case, results should be interpreted as exploratory and hypothesis-generating rather than as evidence of disciplinary effects.

Findings revealed notable variation in GenAI utility across project phases, indicating that its effective integration with traditional learning strategies requires adaptive rather than uniform approaches. Students consistently valued GenAI for theoretical framework and objective development, recognizing its usefulness in literature synthesis and conceptual organization. This aligns with its role as a cognitive amplifier that supports higher-order thinking by automating routine tasks [6,14]. Conversely, participants expressed strong skepticism toward GenAI’s reliability in bibliographic research and source validation, with none fully trusting its bibliographic recommendations, echoing concerns about epistemological limitations and the need for domain-specific training [3,5].

Translation support showed discipline- and proficiency-dependent differences, consistent with earlier findings on the contextual nature of GenAI use [10,12]. Visual element creation had the lowest reported utility, reflecting discipline-specific visualization practices and current limitations in AI design tools [7]. Students were divided on the value of GenAI for synthesizing conclusions, acknowledging its capacity to generate new perspectives while critiquing its tendency to oversimplify and produce biased outputs [3,11].

Overall, results support prior research showing that students primarily use GenAI for preparatory or lower-level tasks, thereby freeing cognitive effort for analysis and argumentation [1,8]. The strong perceived value in theoretical framework development supports the idea that usefulness, enjoyment, and accessibility are key adoption drivers [6]. At the same time, students’ skepticism toward bibliographic outputs indicates their awareness of automation bias and factual inaccuracies [8,21]. This suggests that advanced students critically evaluate AI outputs rather than accepting them passively, aligning with pedagogical calls to reinforce verification and critical reasoning in AI-mediated learning [3,20].

These findings point to the need for discipline-sensitive integration strategies. Rather than promoting uniform adoption, universities should design guidelines that leverage GenAI’s strengths in literature synthesis while reinforcing information literacy and ethical practices [15]. Van Dis et al. [21] stress the importance of clear policies to avoid overreliance and to ensure AI complements rather than replaces human cognition. Similarly, Zheng and Yang [22] highlight the need to align AI use with instructional goals, so that automation supports rather than substitutes for higher-order learning. In practice, this may involve structured training in prompt engineering, systematic verification of outputs, and reflective activities encouraging students to evaluate GenAI contributions critically.

Future research should compare educational levels, from secondary to higher education, to track how perceptions of trust and utility evolve with academic experience. While advanced students in this study questioned bibliographic reliability due to their research training, earlier-stage students may respond differently given limited exposure to conventional research methods [4,13,16]. Our findings suggest that near-graduation students adopt a more critical stance toward AI tools, whereas less experienced students may require guided scaffolding to avoid overreliance.

Because the sample size was limited, further studies with larger and more diverse cohorts are needed to validate these patterns and inform differentiated integration strategies based on student profiles, disciplinary expectations, and maturity levels. Future work will also examine students’ metacognitive profiles in interactions with ChatGPT, comparing prompting and reflective practices across disciplines. Parallel investigations will assess supervisors’ and tutors’ perspectives on GenAI, focusing on its pedagogical potential and ethical implications. Finally, large-scale surveys across the university community are planned to capture student attitudes toward GenAI for academic work, contributing to institutional policies and evidence-based guidelines for responsible integration in higher education.