1. Introduction

In parallel with rapid advances in the industrial Internet of Things, artificial intelligence algorithms, and computational capabilities, deep learning (DL)-based data-driven methodologies have emerged as the predominant paradigm in prognostics and health management (PHM) for rotating machinery [1,2,3]. Bearings are critical components in sectors such as power generation, aerospace, and manufacturing, and they directly affect system reliability and performance. Accurate remaining useful life (RUL) estimation is therefore essential for facilitating predictive maintenance, reducing unplanned downtime, and ultimately extending the operational lifespan of machinery [4,5,6,7,8]. RUL prediction approaches are divided into physics-based models [9,10], which demand detailed mechanical insight and precise simulations, and data-driven models [11,12,13], which use operational data to discern degradation patterns and adapt to complex systems without requiring deep physical domain knowledge. Consequently, data-driven techniques are increasingly favored for handling the large, high-dimensional datasets common in modern predictive maintenance.

Constructing an effective data-driven RUL model entails four stages: sensor data acquisition and preprocessing (e.g., denoising, normalization, dimensionality reduction); degradation feature extraction and health indicator (HI) formulation, which combines signal processing with domain expertise; first prediction time (FPT) detection; and RUL regression using machine learning [14]. HI construction is critical because it determines how faithfully degradation is represented and thus influences prediction accuracy. In this regard, Dong et al. [15] applied principal component analysis to combine features, which were then input into a weighted complex support vector machine model to predict the RUL of bearings. Similarly, Guo et al. [16] introduced a degradation indicator based on empirical mode decomposition (EMD) for predicting the RUL of bearings. Zhang et al. [17] proposed a method that combines multi-scale entropy features into a health indicator for accurate RUL prediction of rolling bearings. Zhao et al. [18] modeled degradation features for RUL prediction of rolling bearings using the derivative of a curve fitted to the maximum power spectral density. Long et al. [19] introduced a method for RUL prediction of rolling bearings that builds the HI from EMD, refined composite multi-scale attention entropy, and dispersion entropy. These studies relied primarily on signal processing techniques to extract features from raw signals and develop degradation indicators. Such methods offer a clear physical interpretation and are easy to validate. However, signal processing-based feature extraction has limitations: the techniques often depend on expert knowledge and experience, may not generalize across equipment types, and can miss implicit nonlinear features present in complex data.

Conversely, DL paradigms automate HI extraction from raw vibration signals. For instance, the authors of [20] pioneered the application of recurrent neural networks to autonomously extract HIs from selected features, creating an early data-driven framework to characterize the deterioration of rolling bearings. Similarly, Ref. [21] utilized a hybrid model combining a convolutional neural network (CNN) and bidirectional gated recurrent units to derive HIs from raw vibration signals for roller bearings. Li et al. [22] used a multi-channel fusion hierarchical vision Transformer with wavelet packet decomposition representations to model degradation features for RUL prediction. Guo et al. [23] proposed a hybrid approach that first constructs a nonlinear HI using complete ensemble empirical mode decomposition with adaptive noise and kernel principal component analysis. They then extracted multi-domain features from vibration signals and applied a dual-channel Transformer network with convolutional attention modules to generate HIs for other bearings. Ping et al. [24] proposed a multi-scale efficient channel attention convolutional neural network and bidirectional gated recurrent unit model for RUL prediction of rolling bearings, extracting multi-scale temporal features from Gramian angular difference field images. Despite these advances, such predominantly supervised techniques require abundant labeled data, which limits their applicability in real-world industrial scenarios where data are largely unlabeled.

To facilitate data-driven automatic construction of HIs, this study investigates unsupervised learning paradigms. Autoencoders provide a viable framework, enabling label-agnostic HI development through feature extraction and degradation trend modeling. Guo et al. [25] proposed an unsupervised HI construction method using a multi-scale convolutional autoencoder optimized by a genetic algorithm, effectively identifying machine degradation through feature learning and data similarity. Ma et al. [26] developed a self-attention convolutional autoencoder, demonstrating enhanced trendability and scale similarity on bearing and milling cutter datasets. Xu et al. [27] proposed an enhanced stacked autoencoder with an exponential weighted moving average for health indicator construction using unlabeled vibration signals, improving noise reduction and eliminating the need for manual feature selection. De et al. [28] developed an LSTM autoencoder with attention for health indicator construction in aircraft systems. Xu et al. [29] developed a multi-scale-multi-head attention mechanism with an automatic encoder–decoder model, extracted multi-scale features from raw vibration signals, and measured the Wasserstein distance between healthy and unhealthy features, which demonstrated superiority over other similarity-based methods and provided a more reliable HI. Qu et al. [30] proposed an unsupervised method for bearing health indicator construction that combines multi-scale convolution and long short-term memory with an autoencoder for feature extraction and uses support vector regression to build health indicators, improving degradation trend detection. Wang et al. [31] developed a denoising Transformer autoencoder for tool condition monitoring, enhancing feature extraction and resistance to noise.

Although autoencoders are extensively employed in existing studies for unsupervised feature learning, they exhibit shortcomings in processing time-series signals, particularly in capturing the temporal dependencies of encoded features. Additionally, conventional HI construction techniques frequently require manual selection and design of dominant features, rendering them inefficient and error-prone, especially with high-dimensional or nonlinear datasets. This reliance on manual feature engineering can lead to inaccurate similarity measurements and diminished HI fidelity. To address these challenges, this paper proposes HCVT-WD, a method for bearing HI construction and RUL prediction. The proposed method has four key components: (1) an unsupervised HCVT-WD autoencoder framework is developed to extract features from raw vibration signals transformed by the fast Fourier transform (FFT), without manual feature engineering or labeling; (2) a CNN and a Vision Transformer (Vi-T) are integrated to capture local spatial patterns and global temporal correlations; (3) the Wasserstein distance is used to quantify the divergence between healthy and degraded feature distributions for robust HI formulation; and (4) an HI-driven RUL prediction pipeline provides accurate forecasts with uncertainty quantification.

The structure of the paper is as follows:

Section 2 focuses on the proposed framework, specifically highlighting the development of HIs using the proposed HCVT-WD model.

Section 3 details the experimental setup, results, and analysis.

Section 4 provides a summary of the key results and proposes possible directions for future studies.

2. Proposed Framework

The overall workflow of the proposed method is illustrated in Figure 1, with the HI construction process detailed in Algorithm 1. In the initial phase, the HCVT-WD model is trained using a healthy subset of the dataset, composed of non-overlapping windows extracted from vibration signals recorded during healthy operation, so that each training instance corresponds to a specific time window of healthy-state data. The training process minimizes the reconstruction error of the network. Once trained, the encoded features from the HCVT-WD are extracted. In the next phase, the trained model is applied to the whole dataset, which consists of both healthy and unhealthy state samples windowed in the same way as the training dataset. The Wasserstein distance (WD) between the combined encoded features of the healthy state and the encoded features of all other states is computed and used as the HI. Finally, the HI is modeled using a CNN-BiLSTM network with Monte Carlo (MC) simulations to predict the RUL and quantify uncertainty.

Algorithm 1: Train the HCVT-WD model and construct the HI

Input: frequency-domain raw vibration signals (healthy and unhealthy states) and initial model parameters.
Build and initialize: HCVT-WD model.
Take the healthy data as the training data and keep the whole dataset for HI construction.
While the stopping criterion is not met do
    Compute the decoded output of the model.
    Minimize the loss between the input and the decoded output.
    Update the parameters of the model.
End while
Obtain the encoded features of the healthy data and of the whole data, along with the trained HCVT-WD model parameters.
Calculate the Wasserstein distance between the two sets of encoded features as the HI.
Detect the FPT using the 3σ criterion (Section 2.3).
Output: HI, FPT.

2.1. HCVT Autoencoder Model

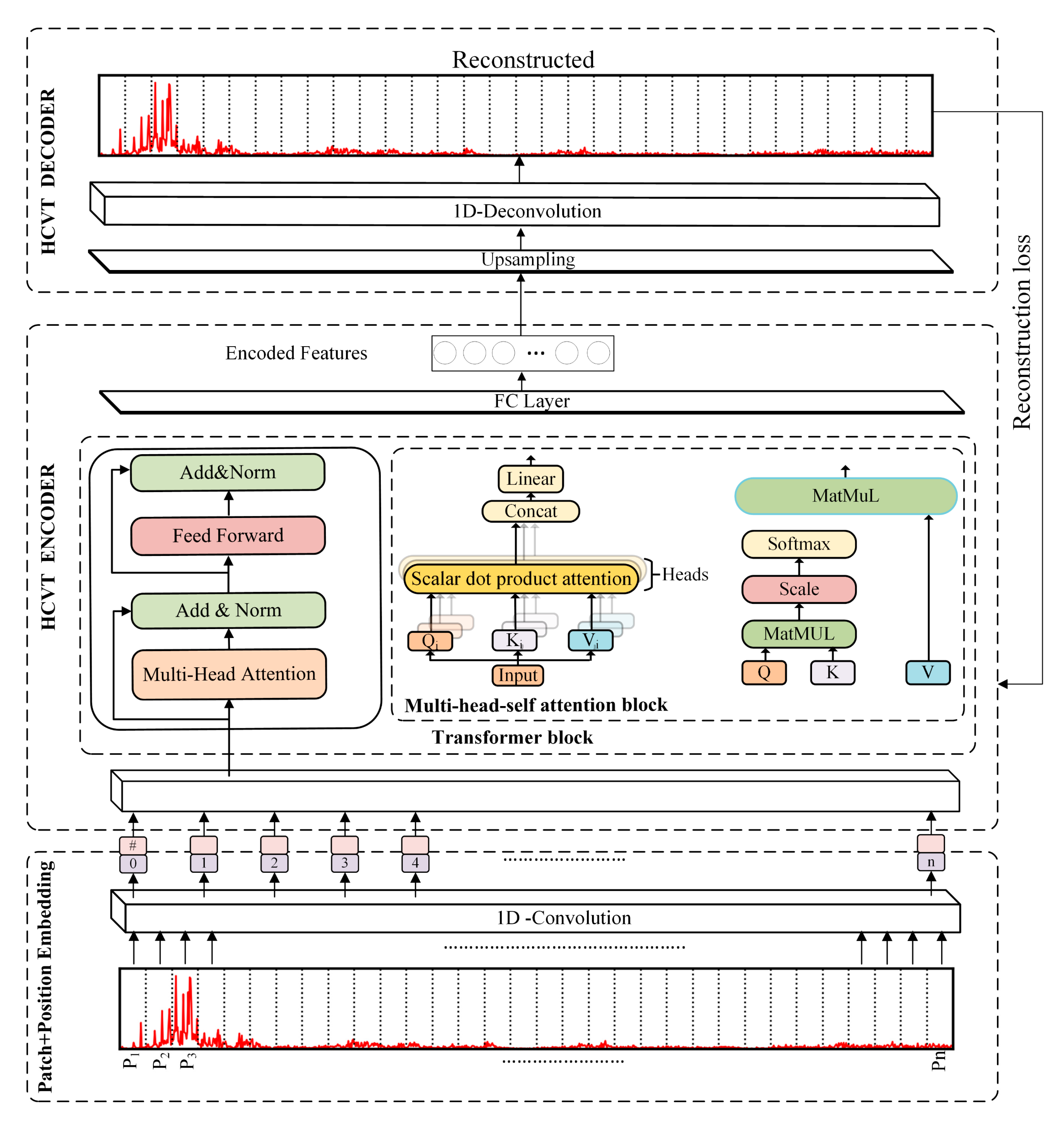

In this section, we employ a hybrid model consisting of a 1D-CNN and a Vi-T as the encoder. The encoder extracts deep features from the inputs using the combined strengths of the CNN and Vi-T architectures, and these encoded features are then upsampled to the original input dimensions using a CNN-based decoder.

2.1.1. 1D-CNN Patch and Position Embedding

To process time series data effectively, the signal is first split into smaller segments. Patch embedding serves this purpose before applying the Vi-T to time series data, as it enables the Transformer model to process both one-dimensional and multi-dimensional time series data effectively.

The raw vibration signals are initially segmented into non-overlapping segments. Denote each segment by $X \in \mathbb{R}^{L \times D}$, where $L$ and $D$ are the length of the segment and the dimension of the input signal, respectively. Each segment is further partitioned into fixed-size patches. Each patch is represented as $x_i \in \mathbb{R}^{P \times D}$, where $P$ is the length of each patch, so that $X = [x_1, x_2, \ldots, x_N]$, where $N = L/P$ is the number of patches. A one-dimensional convolution is then applied to each patch, as shown in Equation (1). The size of the convolution kernel is identical to that of the patch, $P$, and the stride is also $P$, to preserve the temporal dynamics in the raw signals.

$$e_i^k = W_k * x_i + b_k, \quad k = 1, 2, \ldots, K \tag{1}$$

where $W_k$ and $b_k$ represent the weight and bias of the k-th convolutional kernel, respectively. The variable $K$ denotes the number of convolutional kernels, as well as the dimension of the time-series patch embedding. The term $e_i = [e_i^1, \ldots, e_i^K]$ corresponds to the embedding outcome of the i-th patch. The embedding results for the entire set of patches in each sample $X$ are presented in Equation (2).

$$E = [e_1; e_2; \ldots; e_N] \in \mathbb{R}^{N \times K} \tag{2}$$

Since the Vi-T does not inherently recognize the order of patches, positional encoding is added to the patch embeddings to preserve spatial information. For position encoding, each embedded patch is encoded as follows:

$$z_i = e_i + PE_i$$

where the positional encodings $PE_i$ for the i-th patch are defined as follows:

$$PE_{(i,\,2j)} = \sin\!\left(\frac{i}{10000^{2j/d}}\right), \qquad PE_{(i,\,2j+1)} = \cos\!\left(\frac{i}{10000^{2j/d}}\right)$$

where $PE_{(i,\,2j)}$ and $PE_{(i,\,2j+1)}$ represent the positional encodings for the i-th position within the sequence, and $d$ is the dimensionality of the feature vectors.
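The patch embedding and positional encoding steps described above can be illustrated with a minimal pure-Python sketch. It assumes a single-channel input, a convolution whose kernel size and stride both equal the patch length, and the standard fixed sinusoidal encoding; the function names, the random kernel weights, and the sizes (patch length 16, embedding dimension 8) are hypothetical choices for illustration, not the trained model.

```python
import math
import random


def patch_embed(signal, patch_len, weights, biases):
    """Convolve with kernel size == stride == patch_len (non-overlapping patches).

    weights[k] is the k-th kernel (length patch_len), biases[k] its bias.
    Returns N = len(signal) // patch_len embeddings, each of length K.
    """
    n_patches = len(signal) // patch_len
    embeddings = []
    for i in range(n_patches):
        patch = signal[i * patch_len:(i + 1) * patch_len]
        embeddings.append([
            sum(w * x for w, x in zip(kernel, patch)) + b
            for kernel, b in zip(weights, biases)
        ])
    return embeddings


def sinusoidal_encoding(n_positions, dim):
    """Fixed sinusoidal encodings: sine on even indices, cosine on odd indices."""
    pe = [[0.0] * dim for _ in range(n_positions)]
    for pos in range(n_positions):
        for j in range(0, dim, 2):
            angle = pos / (10000 ** (j / dim))
            pe[pos][j] = math.sin(angle)
            if j + 1 < dim:
                pe[pos][j + 1] = math.cos(angle)
    return pe


# Usage with hypothetical sizes: one 512-point spectrum, patch length 16, 8 kernels.
rng = random.Random(0)
signal = [rng.gauss(0.0, 1.0) for _ in range(512)]
patch_len, embed_dim = 16, 8
weights = [[rng.gauss(0.0, 0.1) for _ in range(patch_len)] for _ in range(embed_dim)]
biases = [0.0] * embed_dim

tokens = patch_embed(signal, patch_len, weights, biases)
pe = sinusoidal_encoding(len(tokens), embed_dim)
tokens = [[t + p for t, p in zip(tok, enc)] for tok, enc in zip(tokens, pe)]
print(len(tokens), len(tokens[0]))  # 32 8
```

Because the stride equals the kernel size, each patch contributes exactly one token, so the token order mirrors the order of the patches in the input.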

2.1.2. Vision Transformer Encoder

The patch embeddings, augmented with positional encodings, are subsequently processed through a multi-head self-attention (MHSA) module. This module is built upon the scaled dot-product attention mechanism, which facilitates interactions between all positions in the sequence. The MHSA architecture extends this principle by employing multiple attention heads in parallel. Each head has independent parameters, learns distinct attention patterns, and captures a diverse set of semantic relationships within the data. A schematic of the MHSA mechanism is illustrated in Figure 2.

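The i-th attention head is defined as follows:

$$\mathrm{head}_i = \mathrm{Attention}\left(Q W_i^{Q},\; K W_i^{K},\; V W_i^{V}\right)$$

where $W_i^{Q}$, $W_i^{K}$, and $W_i^{V}$ are learnable weight matrices and $Q$, $K$, and $V$ represent the query, key, and value, respectively. Scaled dot-product attention is computed as follows:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V$$

where $d_k$ denotes the dimensionality of the key vectors. Scaling by $\sqrt{d_k}$ counteracts the small gradients that arise when large dot products saturate the softmax and produces a more stable attention distribution. The output of the MHSA mechanism is defined as follows:

$$\mathrm{MHSA}(Q, K, V) = \mathrm{Concat}\left(\mathrm{head}_1, \ldots, \mathrm{head}_H\right) W^{O}$$

where $H$ is the number of heads and $W^{O}$ represents the trainable output projection weight.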
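As a concrete illustration of the scaled dot-product attention used inside each head, the following pure-Python sketch computes the attention weights and output for small matrices. It covers a single head without the learned projections, and the function names are illustrative only.

```python
import math


def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Return (output, weights) for softmax(Q K^T / sqrt(d_k)) V.

    Q, K, V are lists of rows; K and V must have the same number of rows.
    """
    d_k = len(K[0])
    scores = [
        [sum(q * k for q, k in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in K]
        for q_row in Q
    ]
    weights = [softmax(row) for row in scores]
    output = [
        [sum(w * v_row[c] for w, v_row in zip(w_row, V)) for c in range(len(V[0]))]
        for w_row in weights
    ]
    return output, weights


# A zero query scores every key equally, so the output is the mean of the values.
out, attn = scaled_dot_product_attention([[0.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]],
                                         [[1.0, 2.0], [3.0, 4.0]])
```

Each row of the returned weights sums to one, which is what makes the output a weighted average of the value rows.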

The output of the MHSA, denoted by

, is then passed through an FFN with residual connections and layer normalization:

The FFN transforms each element in the sequence independently. It comprises two fully connected layers with a nonlinear activation between them and is mathematically expressed as follows:

$$\mathrm{FFN}(x) = W_2\, \phi\left(W_1 x + b_1\right) + b_2$$

where $\phi(\cdot)$ is the nonlinear activation, and $W_1$ and $b_1$ are the weight and bias of the first linear layer, which projects the input into a higher-dimensional space. Subsequently, $W_2$ and $b_2$ are the weight and bias of the second linear layer, projecting the transformed features back to the original dimensionality.

The final output of the Vi-T encoder retains the feature representations for all time patches, effectively capturing the temporal dependencies learned within the sequence. These output features are then passed through fully connected (FC) layers with dropout regularization, performing downsampling to obtain the latent features, which are also called the encoded features and are subsequently used for HI creation.


2.1.3. 1D Deconvolution

The decoding process begins by taking the final latent features and progressively reconstructing the original time series data. To achieve this, we first apply FC layers to expand the latent features, followed by a 1D deconvolution operation. The 1D deconvolution, also known as transposed convolution, allows us to upsample the latent representations to the original time series dimensions.

Mathematically, given the latent feature vector $z \in \mathbb{R}^{B \times d_z}$, where $B$ is the batch size and $d_z$ is the size of the latent space, we use FC layers to expand the latent vector back to a higher dimension that matches the size of the Vi-T encoder output:

$$u = \mathrm{FC}(z)$$

The expanded features are then reshaped and passed through the 1D deconvolution layers to reconstruct the original input signal:

$$\hat{x} = \mathrm{Deconv1D}\left(\mathrm{Reshape}(u)\right)$$

During this reconstruction process, the HCVT-WD model is optimized using a composite loss function $\mathcal{L}$ that combines reconstruction accuracy with regularization:

$$\mathcal{L} = \frac{1}{n} \sum_{i=1}^{n} \left\lVert x_i - \hat{x}_i \right\rVert_2^2 + \lambda \sum_{j=1}^{M} \left\lVert \theta_j \right\rVert_2^2$$

The first term calculates the mean squared error (MSE) between the original input signals $x_i$ and their reconstructions $\hat{x}_i$, where $n$ denotes the number of samples and $i$ indexes individual samples. The second term applies regularization to the trainable model parameters $\theta_j$ (with $M$ total parameter tensors), controlled by the coefficient $\lambda$. This regularization prevents overfitting by penalizing large weight values while maintaining model generalizability. The complete optimization procedure, implemented using the Adam optimizer, is detailed in Table 1.
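The composite objective can be illustrated numerically with a short sketch. It assumes that the regularization term is a squared L2 penalty on all trainable parameters, which is one common reading of "penalizing large weight values"; the function name and the toy values are hypothetical.

```python
def composite_loss(inputs, reconstructions, params, lam):
    """MSE reconstruction loss plus an (assumed) squared-L2 weight penalty.

    inputs, reconstructions: lists of equally sized samples (lists of floats).
    params: list of parameter tensors, each flattened to a list of floats.
    lam: regularization coefficient.
    """
    n = len(inputs)
    mse = sum(
        sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
        for x, x_hat in zip(inputs, reconstructions)
    ) / n
    l2 = sum(w * w for tensor in params for w in tensor)
    return mse + lam * l2


# Toy example: one sample with MSE = 2.0 and parameters with squared norm 14.0.
loss = composite_loss([[1.0, 2.0]], [[1.0, 0.0]], [[1.0, 2.0], [3.0]], lam=0.1)
```

In a PyTorch implementation the same penalty is often delegated to the optimizer's weight-decay setting rather than added to the loss explicitly; which of the two the authors used is not stated here.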

2.2. HI Construction

The HI is calculated using the WD between the distribution of encoded test features and the empirical distribution of the healthy training encoded features. The WD measures the minimum cost required to transform one probability distribution into another, quantifying how far the test data deviates from the healthy distribution in the embedded feature space. Due to its ability to effectively quantify distributional shifts, the Wasserstein distance proved to be a more robust metric for constructing an HI compared to other distance measures [32].

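The empirical distribution of healthy training encoded features is defined as follows:

$$P_h = \frac{1}{N_h} \sum_{i=1}^{N_h} \delta_{z_i}$$

where $z_i \in \mathbb{R}^{d_z}$, $i = 1, \ldots, N_h$, are encoded training features having $d_z$ dimensions, and $\delta_{z_i}$ denotes a Dirac delta centered at $z_i$. Similarly, for each test sample $z_j^{\mathrm{test}}$, its distribution is defined as $Q_j = \delta_{z_j^{\mathrm{test}}}$, and finally, the HI for the j-th test sample is computed as follows: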

where

is the L1 normalization across all feature dimensions.
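To make the HI computation concrete, the sketch below uses the fact that the 1-Wasserstein distance between a one-dimensional empirical distribution and a Dirac mass at a point equals the mean absolute deviation of the samples from that point. It then averages the per-dimension distances over the feature dimensions. Treating the dimensions independently and averaging them is an assumption made for illustration, as are the function names; the exact aggregation used by the authors may differ.

```python
def wasserstein_to_point(samples, point):
    """W1 distance between a 1D empirical distribution and a Dirac mass at `point`."""
    return sum(abs(s - point) for s in samples) / len(samples)


def health_indicator(healthy_features, test_feature):
    """Average per-dimension W1 distance between the healthy set and one test feature.

    healthy_features: list of encoded healthy vectors (all of length d).
    test_feature: one encoded test vector of length d.
    """
    d = len(test_feature)
    per_dim = [
        wasserstein_to_point([h[k] for h in healthy_features], test_feature[k])
        for k in range(d)
    ]
    return sum(per_dim) / d


healthy = [[0.0, 0.0], [2.0, 2.0]]
hi_near = health_indicator(healthy, [1.0, 1.0])  # inside the healthy cloud
hi_far = health_indicator(healthy, [5.0, 5.0])   # far from the healthy cloud
```

A test feature that drifts away from the healthy cloud yields a larger value, which is the behavior an HI needs in order to track degradation.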

2.3. FPT Detection

FPT detection based on the $3\sigma$ criterion involves constructing a monitoring interval for the healthy state by calculating the mean $\mu$ and standard deviation $\sigma$ of the initial healthy HI values [33]. A potential fault is indicated when the absolute deviation of the current HI value from the mean exceeds three standard deviations, as expressed below:

$$\left| \mathrm{HI}_t - \mu \right| > 3\sigma$$

However, to minimize false alarms caused by random fluctuations, the FPT is not triggered immediately upon a single exceedance. Instead, it is confirmed only when three consecutive HI values exceed the threshold, ensuring a more reliable and robust detection of early fault conditions.
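The detection rule, including the three-consecutive-exceedance confirmation, can be sketched as follows. The function name and the convention of returning the index of the first sample in the confirming run are assumptions for illustration; returning the last index of the run would be an equally plausible convention.

```python
import statistics


def detect_fpt(hi, n_healthy, k_sigma=3.0, n_consecutive=3):
    """Return the index where n_consecutive exceedances of mu +/- k_sigma*sigma begin.

    mu and sigma are estimated from the first n_healthy HI values.
    Returns None if no confirmed exceedance run is found.
    """
    baseline = hi[:n_healthy]
    mu = statistics.fmean(baseline)
    sigma = statistics.pstdev(baseline)
    run = 0
    for t in range(n_healthy, len(hi)):
        if abs(hi[t] - mu) > k_sigma * sigma:
            run += 1
            if run == n_consecutive:
                return t - n_consecutive + 1
        else:
            run = 0  # an isolated spike does not trigger the FPT
    return None


hi_values = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 5.0, 1.0, 5.0, 5.2, 5.5, 6.0]
fpt = detect_fpt(hi_values, n_healthy=6)
print(fpt)  # 8
```

In this toy series the isolated spike at index 6 is ignored, and the FPT is confirmed only by the run starting at index 8.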

2.4. RUL Prediction and Uncertainty Quantification

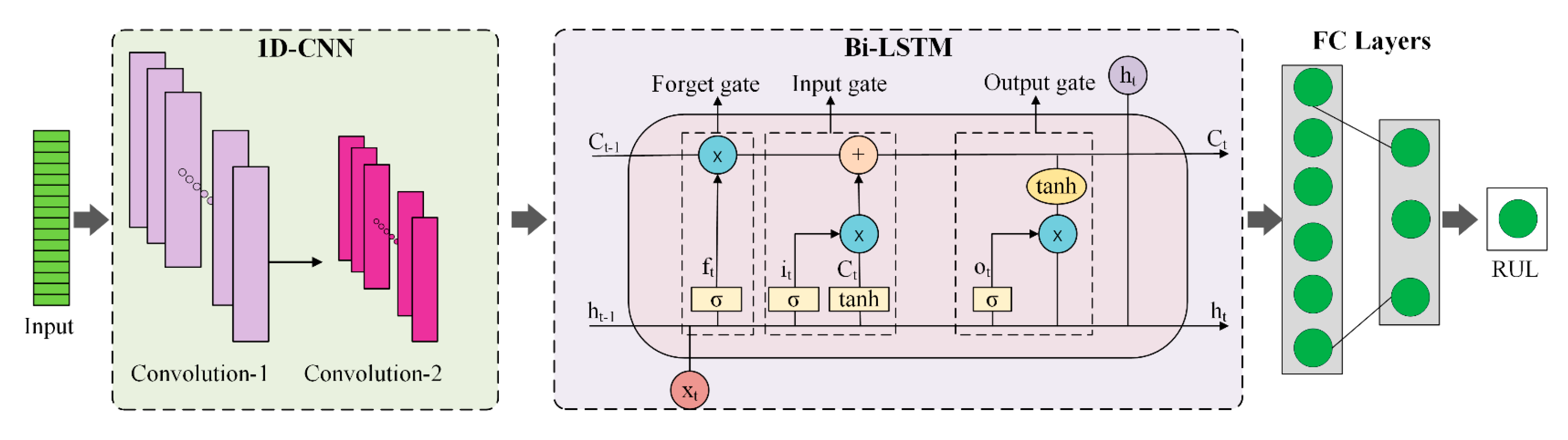

2.4.1. RUL Prediction

The RUL prediction process for bearings begins with estimating the time interval from FPT detection to eventual failure. When the FPT is identified for a test bearing, its true RUL labels can be established based on the actual remaining operational lifespan. The prediction architecture employs a CNN-BiLSTM network [34], as illustrated in Figure 3, and the prediction process is detailed in Algorithm 2. This dual-stage approach enables comprehensive learning of degradation patterns by analyzing both forward and backward sequence relationships. The trained model processes post-FPT HI sequences to establish an accurate mapping between evolving degradation characteristics and remaining lifespan.

Algorithm 2: CNN-BiLSTM network training for prognosis and uncertainty quantification

Input: the HI sequence constructed after the FPT, the RUL labels assigned to each HI value, and initial model parameters.
Build and initialize: CNN-BiLSTM model.
While the stopping criterion is not met do
    Calculate the loss between the true and predicted RUL.
    Update the parameters of the model.
End while
Obtain the RUL via the trained CNN-BiLSTM model parameters.
Perform MC dropout at inference: run S stochastic forward passes, then compute μ as the predicted RUL and σ as the model uncertainty.
Output: RUL prediction with confidence intervals.

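After the convolution operations in the CNN, the deep features are passed into the BiLSTM model. The LSTM network incorporates an input gate $i_t$, a forget gate $f_t$, an output gate $o_t$, and a memory cell $c_t$. The hidden layer uses the input vector $x_t$ and output vector $h_t$ to implement the activation function and weight updates. The core principle for the LSTM cell is as follows:

$$
\begin{aligned}
i_t &= \sigma\left(W_i [h_{t-1}, x_t] + b_i\right) \\
f_t &= \sigma\left(W_f [h_{t-1}, x_t] + b_f\right) \\
o_t &= \sigma\left(W_o [h_{t-1}, x_t] + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c [h_{t-1}, x_t] + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh(c_t)
\end{aligned}
$$

where $\tanh$ is the hyperbolic tangent activation function, and $\tilde{c}_t$ is an intermediate value obtained by applying the $\tanh$ function to both the input and previous information. The function $\sigma$ refers to the sigmoid function, and the terms $W$ and $b$ represent the weight matrices and biases, respectively.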

The hidden states from both forward and backward directions are then concatenated and fed into FC layers to predict the RUL.

2.4.2. Uncertainty Quantification with MC Dropout and KDE

Given an input sequence $x$, our network produces a point prediction $\hat{y}$. Point estimates, however, ignore epistemic and aleatoric sources of uncertainty that arise during equipment operation. To characterize predictive uncertainty without incurring the computational cost of full Bayesian neural networks, we adopt MC dropout as a tractable approximation to Bayesian inference and then recover a full predictive probability density via kernel density estimation (KDE).

Let $\omega$ denote all stochastic weights of the model and $\mathcal{D}$ the training data. The Bayesian predictive distribution is expressed as follows:

$$p(y \mid x, \mathcal{D}) = \int p(y \mid x, \omega)\, p(\omega \mid \mathcal{D})\, d\omega$$

Following the dropout-as-Bayesian-approximation view, we replace the posterior $p(\omega \mid \mathcal{D})$ with a variational distribution $q(\omega)$ induced by dropout masks applied at inference time. Concretely, for each dropout layer $l$, the effective weights are as follows:

$$W_l = M_l \,\mathrm{diag}(m_l), \qquad m_{l,k} \sim \mathrm{Bernoulli}(p_l), \quad k = 1, \ldots, K_{l-1}$$

where $M_l$ are the learned parameters, $p_l$ is the retain probability, and $K_{l-1}$ is the incoming dimensionality. During testing, dropout is kept active, and $S$ stochastic forward passes are performed to generate samples:

$$\hat{y}^{(s)} = f\left(x; \hat{\omega}^{(s)}\right), \qquad s = 1, \ldots, S$$

where $\hat{\omega}^{(s)}$ is defined by the sampled dropout masks. From these samples, the predictive mean and variance are estimated as follows:

$$\mu = \frac{1}{S} \sum_{s=1}^{S} \hat{y}^{(s)}, \qquad \sigma^2 = \frac{1}{S} \sum_{s=1}^{S} \left(\hat{y}^{(s)} - \mu\right)^2$$

These summarize uncertainty for the interval prediction as follows:

$$\left[\mu - z_{\alpha/2}\,\sigma,\; \mu + z_{\alpha/2}\,\sigma\right]$$

where $z_{\alpha/2}$ is the critical value from the standard normal distribution corresponding to the desired confidence level. To capture the full shape of uncertainty, the MC samples are converted into a continuous density with KDE. Let $\{\hat{y}^{(s)}\}_{s=1}^{S}$ be the MC predictions for input $x$. The conditional probability density $\hat{p}(y \mid x)$ is estimated as follows:

$$\hat{p}(y \mid x) = \frac{1}{S h} \sum_{s=1}^{S} \kappa\!\left(\frac{y - \hat{y}^{(s)}}{h}\right)$$

where $\kappa(\cdot)$ is a Gaussian kernel and $h$ is the bandwidth. Selecting the appropriate bandwidth is crucial, as it greatly impacts the smoothness and accuracy of the estimation. A grid search with cross-validation is used to identify the optimal bandwidth, choosing the one that minimizes the mean integrated squared error.
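The post-processing of the MC samples can be sketched in a few lines. The example below assumes the stochastic forward passes have already produced a list of RUL predictions (here simulated with random draws, since no network is included), and it uses a fixed, hypothetical bandwidth rather than the cross-validated one described above. All function names are illustrative.

```python
import math
import random
import statistics


def mc_summary(samples, z=1.96):
    """Predictive mean, standard deviation, and a normal-approximation interval."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples)
    return mu, sigma, (mu - z * sigma, mu + z * sigma)


def gaussian_kde(samples, bandwidth):
    """Return a function y -> estimated density, using a Gaussian kernel."""
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))

    def density(y):
        return norm * sum(
            math.exp(-0.5 * ((y - s) / bandwidth) ** 2) for s in samples
        )

    return density


# Simulated stand-in for S = 100 MC dropout predictions of one normalized RUL value.
rng = random.Random(0)
mc_samples = [rng.gauss(0.5, 0.05) for _ in range(100)]

mu, sigma, (lower, upper) = mc_summary(mc_samples)   # z = 1.96 for a 95% interval
pdf = gaussian_kde(mc_samples, bandwidth=0.02)        # hypothetical bandwidth
```

The interval relies on a normality assumption, whereas the KDE makes no such assumption and can expose skewed or multi-modal predictive distributions.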

3. Experimental Validation and Analysis

3.1. Dataset Preparation

The proposed HI construction and RUL prediction method is validated on the XJTU-SY bearing dataset [35]. As shown in Figure 4, the test rig is designed to collect vibration signals under varying operating conditions. The experiment involved testing 15 LDK UER204 bearings until failure under the controlled operating conditions shown in Table 1. The LDK UER204 bearings were chosen for their stable structural design and consistent performance, making them well-suited for repeatable accelerated degradation experiments and clear observation of the full degradation process. Vibration data were recorded by two accelerometers at a sampling frequency of 25.6 kHz, capturing both vertical and horizontal vibrations, and a 1.28 s sample was recorded every 60 s during testing.

Table 2 further describes the experimental details of the XJTU-SY dataset. In the XJTU-SY bearing dataset, defects on individual bearing components were generated through accelerated degradation tests. During these tests, bearings were continuously operated under heightened load and speed conditions to expedite the wear process and induce failure in a reduced timeframe. The degradation occurred naturally on the inner and outer raceways as well as the rolling elements due to prolonged mechanical stress and friction. The test was concluded when the vibration amplitude surpassed a specified failure threshold (20 g), at which point various types of defects, such as inner race wear, outer race wear, and outer race fracture, were identified.

For this study, only the horizontal vibration signals were considered, as they exhibited clearer trends in the degradation process.

To create the healthy dataset used for network training and validation, the Pauta criterion was employed, as in [36]. The initial healthy dataset was constructed from the first 1 min of operation, which was defined as the baseline normal stage under the assumption that the bearing is healthy at the beginning of its life. The Pauta criterion interval ($\mu \pm 3\sigma$) was then calculated from this set. Each new data point that falls within this range is added to the healthy dataset, and the criterion is updated; a point that falls outside is considered abnormal. The healthy dataset was finalized and locked once a predefined number of consecutive data points (e.g., 500) were classified as abnormal, marking the end of the healthy stage. The healthy data were then randomly split, with 80% used for training and 20% set aside for validation. For example, for Bearing1-1, the total number of samples was 3936, with 1580 samples identified as healthy. Of these healthy samples, 1264 were randomly selected for training, and 316 were used as the validation set. The test set consisted of the run-to-failure samples, which were used to extract the encoded features from the proposed model.
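The incremental healthy-set construction described above can be sketched as follows. The sketch assumes each data point is summarized by a single scalar value and that the mean and standard deviation are recomputed from the current healthy set before each test; the function names, the toy series, and the small abnormal-run limit are hypothetical.

```python
import random
import statistics


def build_healthy_set(values, n_init, max_abnormal_run):
    """Grow a healthy set with the Pauta (3-sigma) criterion.

    The first n_init values seed the set. Each later value is accepted if it lies
    within mu +/- 3*sigma of the current set; the set is locked after
    max_abnormal_run consecutive rejections.
    """
    healthy = list(values[:n_init])
    run = 0
    for v in values[n_init:]:
        mu = statistics.fmean(healthy)
        sigma = statistics.pstdev(healthy)
        if abs(v - mu) <= 3.0 * sigma:
            healthy.append(v)  # the criterion is updated on the next iteration
            run = 0
        else:
            run += 1
            if run >= max_abnormal_run:
                break
    return healthy


def split_indices(n, train_frac=0.8, seed=0):
    """Random train/validation split of n sample indices."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = int(round(n * train_frac))
    return idx[:cut], idx[cut:]


series = [1.0, 1.1, 0.9, 1.0, 1.05, 9.0, 9.5, 1.0, 9.0, 9.1, 9.2]
healthy = build_healthy_set(series, n_init=4, max_abnormal_run=3)
train_idx, val_idx = split_indices(1580)  # sizes match the Bearing1-1 example
print(len(healthy), len(train_idx), len(val_idx))  # 6 1264 316
```

Recomputing the statistics from the full healthy set at every step is quadratic in the number of points; a running-mean update would be preferable for long recordings.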

Following the determination of the normal dataset, the vibration data were segmented into non-overlapping samples of 1024 data points. The FFT was then applied to each sample, and each sample was reduced to 512 points by retaining only the first half of the FFT spectrum. For the CNN patch embedding, the model input was shaped into (128, 1, 512), where 128 represents the batch size, 1 represents the channel dimension, and 512 corresponds to the number of frequency components after the FFT.
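The segmentation and FFT step can be sketched with a small pure-Python radix-2 FFT. The sketch assumes that the retained half spectrum is the magnitude spectrum, which the text does not state explicitly; the function names are illustrative, and a production pipeline would typically use an optimized FFT library instead.

```python
import cmath
import math


def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddled = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddled
        out[k + n // 2] = even[k] - twiddled
    return out


def to_half_spectra(signal, seg_len=1024):
    """Split into non-overlapping segments and keep the first half of each spectrum."""
    n_seg = len(signal) // seg_len
    spectra = []
    for i in range(n_seg):
        segment = signal[i * seg_len:(i + 1) * seg_len]
        spectra.append([abs(c) for c in fft(segment)[:seg_len // 2]])
    return spectra


# Small check: a sine with 4 cycles per 32-point segment peaks at bin 4.
toy = [math.sin(2 * math.pi * 4 * t / 32) for t in range(64)]
toy_spectra = to_half_spectra(toy, seg_len=32)
peak_bin = max(range(16), key=lambda k: toy_spectra[0][k])
print(len(toy_spectra), len(toy_spectra[0]), peak_bin)  # 2 16 4
```

With the dataset settings above, one 1.28 s recording at 25.6 kHz contains 32,768 points and therefore yields 32 segments of 512 frequency components each.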

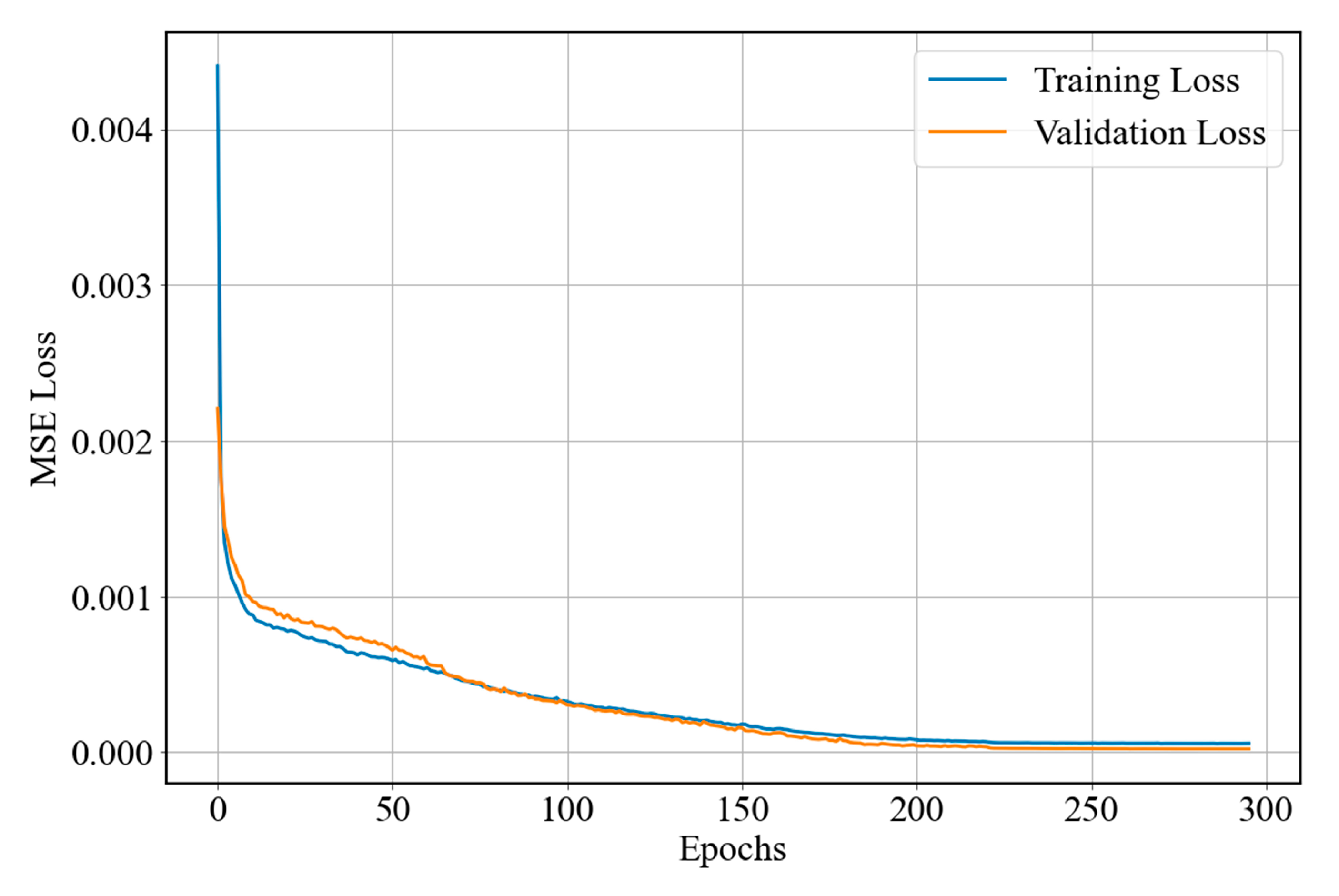

3.2. Structural Parameters and Hyperparameters

The hyperparameters and structural parameters of the proposed model are summarized in Table 3 and Table 4, respectively. The optimal hyperparameters are determined by a grid search based on the reconstruction loss during validation. The model was trained using AdamW as the optimizer for up to 400 epochs, with early stopping employed to avoid overfitting by halting training when the validation loss ceased to improve. The training and validation losses with the optimized parameters are shown in Figure 5. The computational work was done in PyTorch on a computer with an i5 12400F CPU, an NVIDIA RTX 3060 GPU, and 16 GB of RAM.

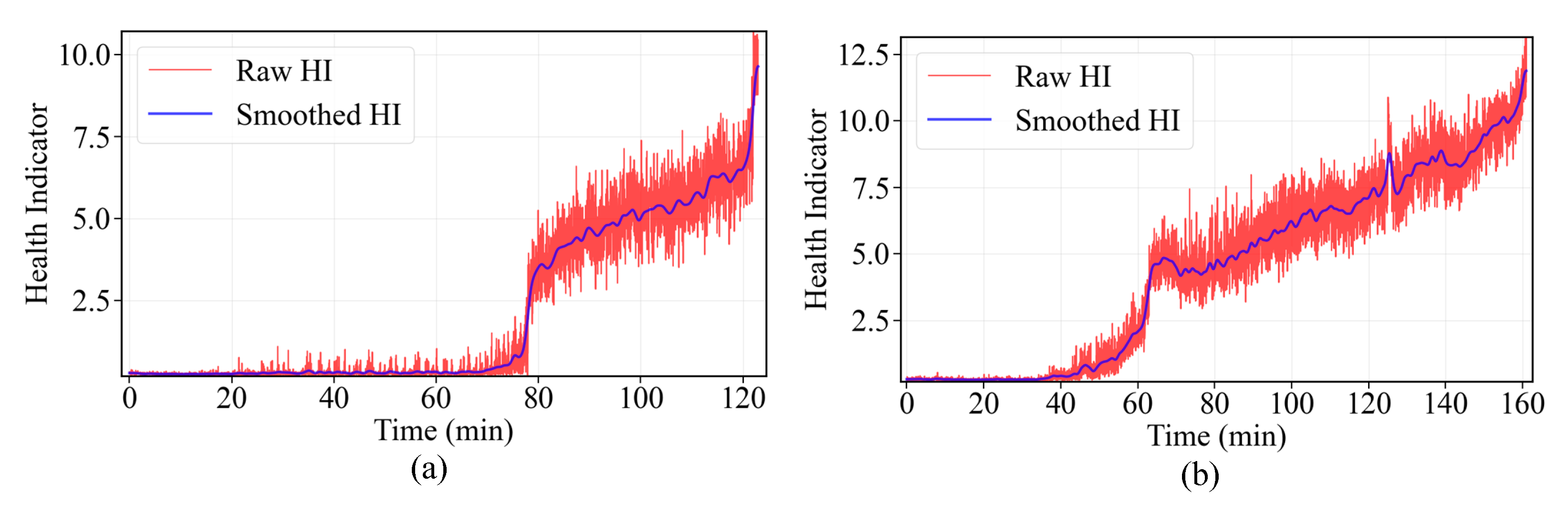

3.3. HI Construction and FPT Detection

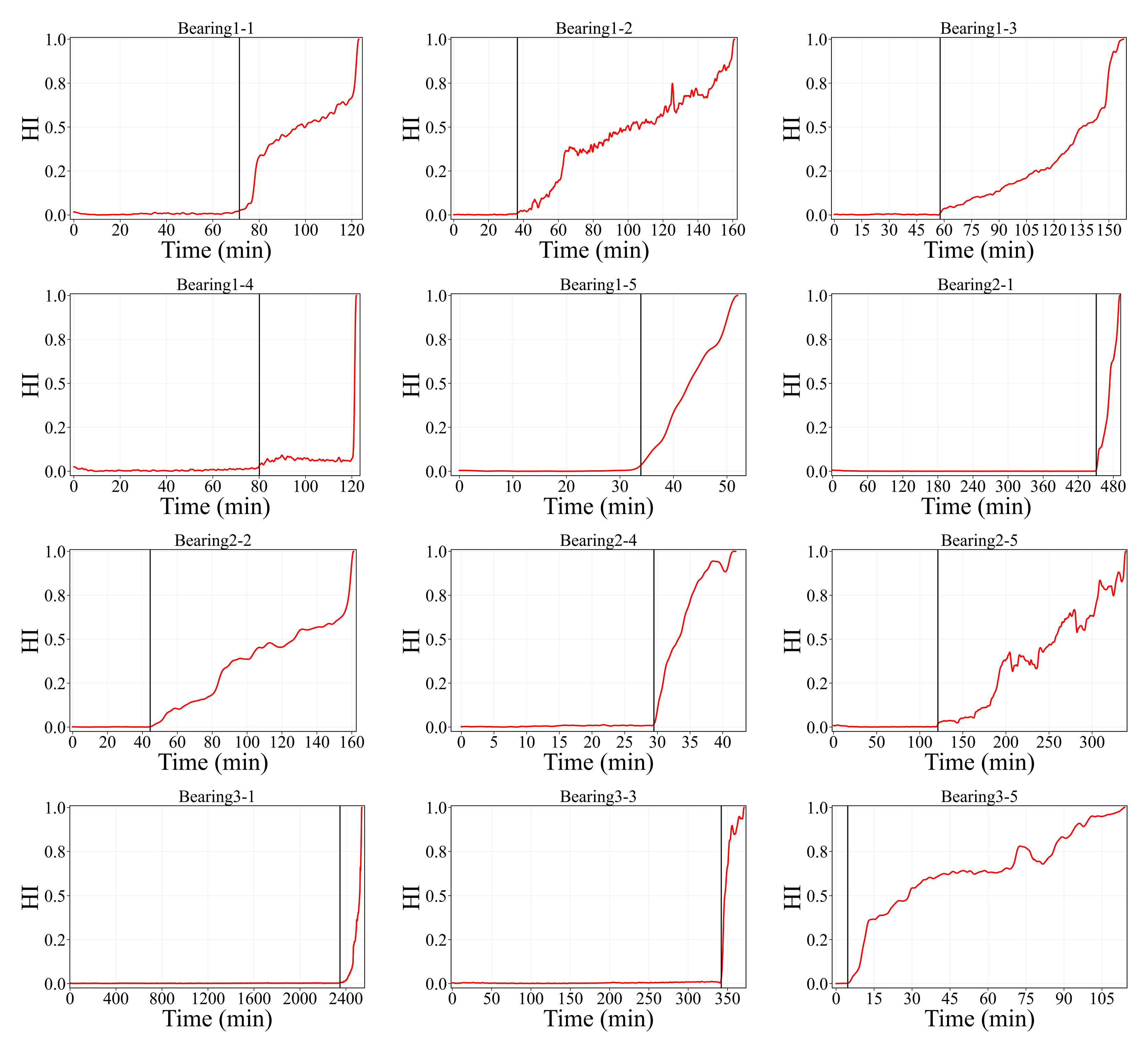

The HCVT-WD model was used to construct the HI for all bearings under various operating conditions. These indicators display a clear trend over time, which is crucial for FPT detection and RUL prediction. To further enhance the clarity of these indicators, Gaussian smoothing was applied to the raw HI, as shown in Figure 6, effectively filtering out noise while preserving the overall degradation trend. The smoothed curves provide a more stable and interpretable representation of bearing degradation, facilitating reliable FPT detection and RUL estimation. The FPT and corresponding HIs for the bearings are illustrated in Figure 7.

3.4. Evaluation Metrics of HIs

This study presents three quantitative metrics: monotonicity (Mo), trendability (Tr), and prognosability (Pr) to evaluate the effectiveness of HI construction, as defined in Equations (1)–(3):

where

represents the rank of the j-th HI within the HI sequence and

denotes the number of samples.

is the value of the HI at the time

.

and

denote the means of the HI values at the initial and failure phases, and

is the standard deviation of the HI values in the failure phase. All three evaluation metrics range between 0 and 1, with values closer to 1 indicating higher-quality health indicators that exhibit more consistent degradation trends, stronger linear relationships with time, and more predictable failure behavior.

To validate the effectiveness of the proposed method, we compared its performance against three state-of-the-art HI construction methods: ensemble stacked autoencoder (ES-AE), deep CNN-auto-encoder–decoder (DCN-AE), and multi-scale CNN-auto-encoder–decoder (MSC-AE), using the aforementioned evaluation metrics. As shown in Figure 8 and Table 5, our method consistently outperforms the other approaches across all evaluation metrics.

While ES-AE is capable of capturing the overall degradation trend, it suffers from noise, which lowers both monotonicity and trendability. DCN-AE and MSC-AE demonstrate better performance by leveraging convolutional feature extraction and multi-scale representations, yet their HIs still exhibit noticeable fluctuations, particularly in the mid-life regions. In contrast, the proposed HCVT-WD achieves superior results through a dual-branch design: the CNN branch effectively filters out local noise from raw vibration signals, while the Vi-T branch captures long-range dependencies and global structural information. The integration of these two branches through skip fusion produces smoother HI curves with reduced oscillations, which explains the markedly higher monotonicity, trendability, and prognosability observed for HCVT-WD.

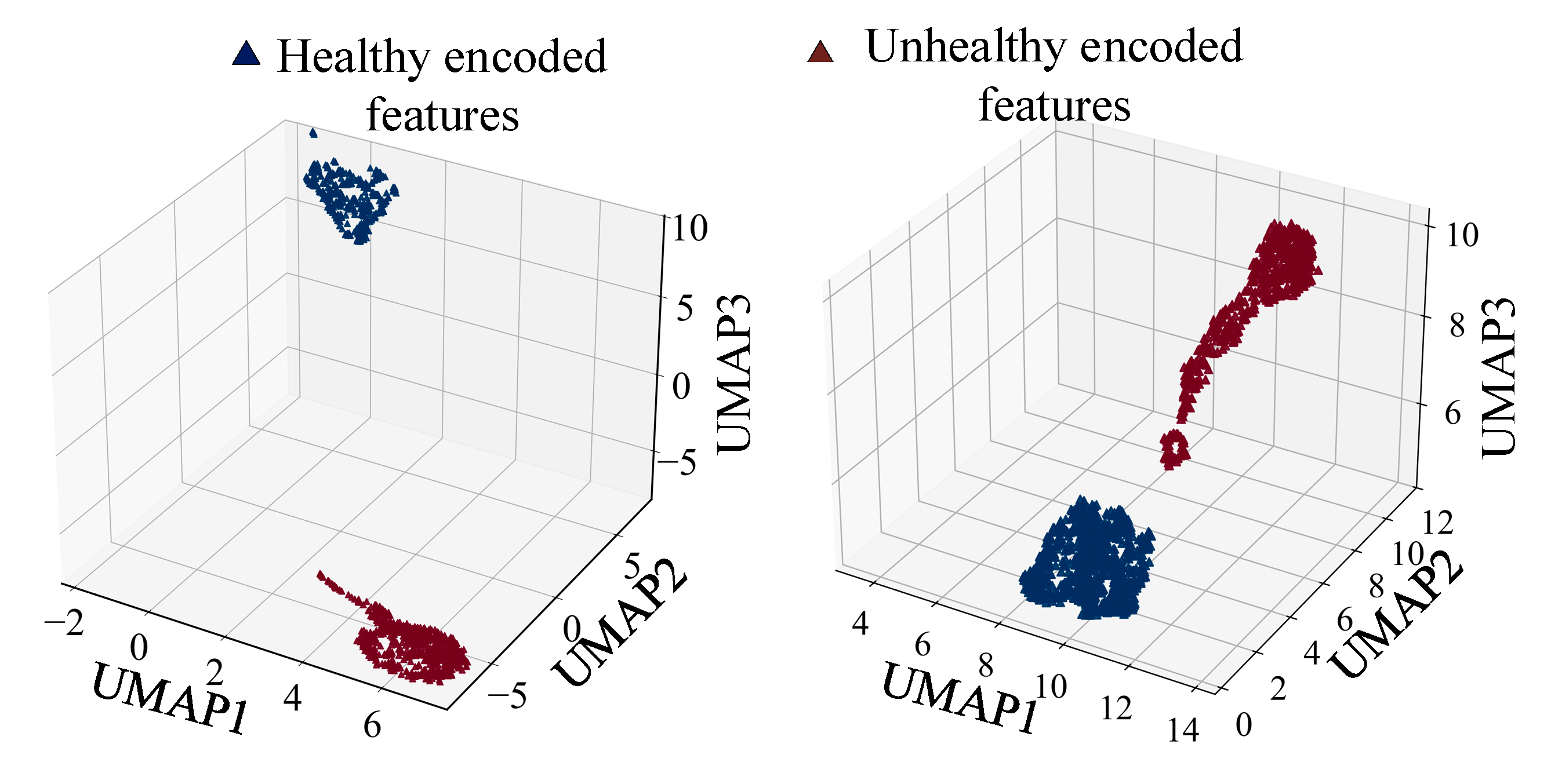

3.5. Visualization Analysis

In the visualization analysis, the healthy and degraded features are clearly separated in the uniform manifold approximation and projection (UMAP) embedding space, even when testing with the full set of healthy and degraded samples, as shown in Figure 9. This separation indicates that the proposed HCVT-WD model effectively segregates the encoded features. Notably, the unhealthy features follow a clear degradation trajectory in the embedding space, reflecting the progressive failure process, while the healthy features remain well clustered. This separation is attributed to the multi-head self-attention mechanism in the Transformer, which enhances discriminative feature learning. The attention maps further support this behavior, as illustrated in Figure 10, where the learned attention weights for three randomly selected testing samples exhibit sparsity in the attention matrices. This sparsity suggests that the proposed module captures dynamic and temporal dependencies within the raw input signals, thereby improving the model’s RUL prediction capability by focusing on the most informative features.

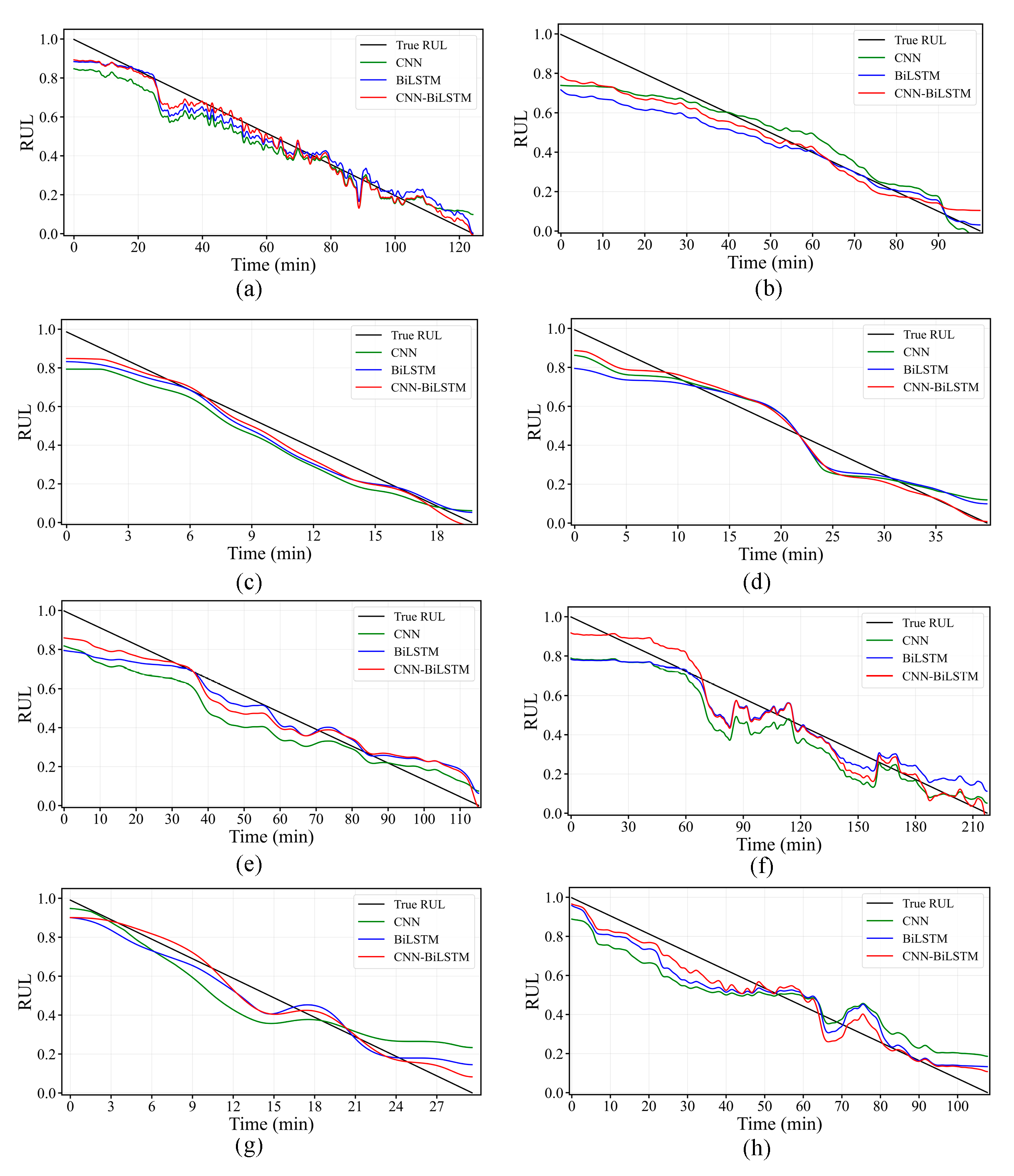

3.6. RUL Estimation and Uncertainty Analysis

The CNN-BiLSTM model, combined with MC dropout as an approximation to Bayesian inference, was employed to map the HIs to the RUL. To predict the RUL for each bearing, the FPT was first identified. After determining the FPT, post-FPT HI values were collected, and RUL labels were assigned. These labels express the remaining life as a percentage of the total time between the FPT and failure for each bearing.

The samples were created with a sliding window of 10 consecutive HI time steps, so that the model can learn how the health of the bearing evolves over time. For each sequence, the RUL label was assigned based on the remaining life at the end of the sequence. Once the sequences were created, the data were randomly split into training and validation sets, with 80% used for training and 20% used for validation.
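The windowing and labeling scheme can be illustrated with a short sketch. It assumes a stride of one time step and a normalized RUL label that decreases linearly from 1 at the FPT to 0 at failure; both the stride and the function name are assumptions made for illustration.

```python
def make_sequences(hi_post_fpt, window=10):
    """Build sliding-window samples and RUL labels from post-FPT HI values.

    The label of each window is the normalized remaining life at the window's
    last time step (1.0 at the FPT, 0.0 at failure). Requires len(hi_post_fpt) >= 2.
    """
    n = len(hi_post_fpt)
    rul = [1.0 - t / (n - 1) for t in range(n)]
    sequences, labels = [], []
    for end in range(window, n + 1):
        sequences.append(hi_post_fpt[end - window:end])
        labels.append(rul[end - 1])
    return sequences, labels


hi_series = [float(v) for v in range(15)]  # toy post-FPT HI values
X, y = make_sequences(hi_series, window=10)
print(len(X), len(X[0]))  # 6 10
```

Labeling each window by its final time step means the model always predicts the remaining life at the most recent observation, which matches how the predictor would be queried online.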

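During the training phase, a leave-one-out cross-validation method was used for each operating condition. In each fold, one bearing was held out as the test set, while the other bearings under the same operating condition were used to train the model. To evaluate the accuracy of the proposed method, two performance metrics, the root mean squared error (RMSE) and the mean absolute error (MAE), were used. These metrics were calculated as follows:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left(y_i - \hat{y}_i\right)^2}, \qquad \mathrm{MAE} = \frac{1}{n} \sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|$$

where $y_i$ and $\hat{y}_i$ represent the actual and predicted RUL values, respectively, and $n$ is the total number of samples.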
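For reference, the two error metrics can be computed with the following short sketch; the function names and the toy values are illustrative only.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between actual and predicted RUL values."""
    n = len(y_true)
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / n)


def mae(y_true, y_pred):
    """Mean absolute error between actual and predicted RUL values."""
    n = len(y_true)
    return sum(abs(a - b) for a, b in zip(y_true, y_pred)) / n


actual = [1.0, 0.5, 0.0]
predicted = [0.9, 0.5, 0.2]
scores = (rmse(actual, predicted), mae(actual, predicted))
```

Because the RMSE squares the errors before averaging, it is never smaller than the MAE and penalizes occasional large deviations more heavily.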

The hyperparameters of the proposed model were carefully tuned, with the optimized values being a batch size of 32, a learning rate of 0.0001, and two LSTM hidden layers with 64 and 32 neurons. A dropout was added after the first hidden layer, and an additional dropout of 0.3 was applied after the final FC layer to implement MC dropout for uncertainty estimation. The prediction results of the proposed methodology across different working conditions are shown in Figure 11. Additionally, separate CNN and BiLSTM prediction networks were constructed for comparison, and the results are presented in Table 6. The performance comparison demonstrates that the CNN-BiLSTM model outperforms these alternatives, achieving superior accuracy in RUL prediction.

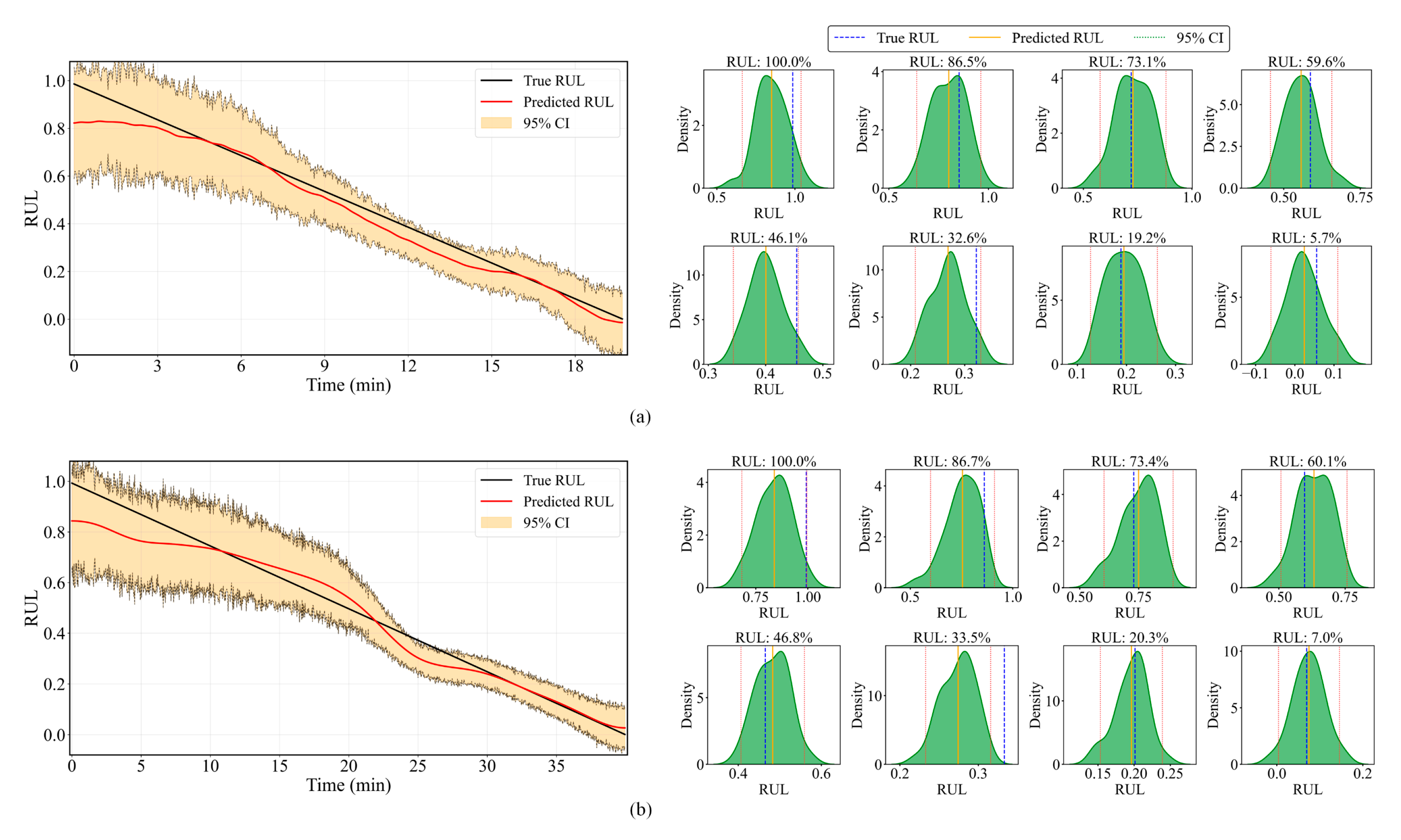

For uncertainty quantification, a dropout rate of 0.3 was kept active during inference (MC dropout). Prediction uncertainty was quantified through statistically derived confidence intervals, as shown in Figure 12. Notably, all RUL predictions remain within the calculated 95% confidence bounds throughout the degradation process. KDE analysis revealed that the predicted RUL distributions are tightly clustered around the true values, with minimal skewness. This combination of accurate point estimation and rigorous uncertainty quantification is valuable for industrial condition-based maintenance, where understanding both the predicted RUL and its associated confidence level is critical for operational decision-making. The consistent improvement over conventional approaches supports the effectiveness of merging convolutional feature extraction with sequential pattern identification in this integrated prognostic framework.

3.7. Ablation Studies on the HCVT-WD Model

This ablation study investigates the impact of CNN patch embedding, patch size, and skip connection fusion. As illustrated in Figure 13a, without CNN patch embedding the reconstruction loss, observed through both the training and validation curves, remains high, fluctuates significantly, and converges slowly. This highlights the critical role of the convolution-based patch embedding. Similarly, with a patch size of 16, the reconstruction loss curves converge more smoothly and rapidly than with patch sizes of 8 and 32, as shown in Figure 13b. Furthermore, introducing skip connection fusion after the transformer block, aimed at enhancing feature reusability and preserving spatial information, markedly improves the reconstruction loss, as shown in Figure 13c. Skip connection fusion enables the decoder to leverage both high-level features from the transformer blocks and low-level features from the encoder, leading to more stable and smoother convergence of the loss curves and more accurate reconstruction of the input data.
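To make the role of patch size concrete, the sketch below shows how a convolutional patch embedding turns a signal into tokens. It assumes a 1D input window and non-overlapping patches (a convolution whose stride equals its kernel size); the 64-sample window and the two hand-written kernels are hypothetical stand-ins for learned filters, and the actual HCVT-WD input layout may differ.

```python
def split_into_patches(signal, patch_size):
    """Cut a 1D signal into non-overlapping patches, dropping any ragged tail."""
    n_patches = len(signal) // patch_size
    return [signal[i * patch_size:(i + 1) * patch_size] for i in range(n_patches)]

def embed_patches(patches, kernels):
    """Project each patch onto every kernel (a stride = kernel-size 1D convolution)."""
    return [
        [sum(k * x for k, x in zip(kernel, patch)) for kernel in kernels]
        for patch in patches
    ]

signal = [float(i) for i in range(64)]  # hypothetical 64-sample window

for patch_size in (8, 16, 32):
    patches = split_into_patches(signal, patch_size)
    # Two illustrative kernels: a mean filter and a first-minus-last difference.
    mean_kernel = [1.0 / patch_size] * patch_size
    diff_kernel = [1.0] + [0.0] * (patch_size - 2) + [-1.0]
    tokens = embed_patches(patches, [mean_kernel, diff_kernel])
    print(patch_size, len(tokens), tokens[0])
```

Smaller patches give the transformer more, shorter tokens, while larger patches give fewer tokens that each summarize a longer stretch of the signal; the ablation above compares this trade-off for sizes 8, 16, and 32.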

3.8. Comparative Experiments with Other State-of-the-Art Methods for RUL Prediction

To further validate the usefulness of our developed method, a comparative analysis was conducted with four alternative models: the memory fusion network (CLSTMF) [39], self-adaptive graph convolutional networks with self-attention (SAGCN-SA) [40], Time Transformer convolutional LSTM (TT-ConvLSTM) [41], and TCN-Transformer [42]. The first three models were designed for feature extraction and direct RUL prediction, whereas the TCN-Transformer model adopted a two-stage degradation process, considering both HI construction and RUL mapping.

The CLSTMF model achieved a lowest RMSE of 0.051, while the TT-ConvLSTM model reached lowest RMSE and MAE values of 0.072 and 0.052, respectively. The TCN-Transformer model performed relatively well, with a lowest RMSE of 0.0549 and MAE of 0.0441. In contrast, our proposed two-stage degradation model yielded the lowest RMSE of 0.0441 and MAE of 0.0321, outperforming these alternatives on the reported metrics. The results of the comparative experiments are summarized in Table 7, where the RMSE and MAE values for each model are provided. They underline the effectiveness of our approach in accurately predicting RUL through the two-stage degradation process, including both point prediction and interval prediction.

4. Conclusions

This study proposed the HCVT-WD framework for constructing HIs in an unsupervised manner and predicting the RUL of bearings. Raw vibration signals are processed by a sequential CNN–vision Transformer architecture, eliminating manual feature engineering while capturing local spatial patterns and long-range temporal dependencies. The HI is defined as the Wasserstein distance between encoded representations of healthy and degraded states, providing a precise metric of degradation severity. Experimental validation on bearing datasets shows that the proposed HI outperforms state-of-the-art methods in monotonicity, trendability, and prognosability. For RUL estimation, the HI is fed into a CNN-BiLSTM regressor that models both temporal and nonlinear degradation dynamics. A Bayesian neural network yields uncertainty-aware predictions with confidence intervals, which support risk-informed maintenance decisions for safety- and mission-critical applications. The HCVT-WD model requires minimal preprocessing and operates without full lifecycle data, making it adaptable to real-world industrial applications. Its capacity to yield robust HIs and quantify predictive uncertainty makes it a promising approach for PHM in complex systems.

Future research will prioritize the development of adaptable HIs across varying operational conditions, validated through extensive testing on heterogeneous datasets to broaden their applicability. Additionally, attention will be directed towards addressing the uncertainty present in both the measurement process and the model’s predictions. This includes the integration of measurement uncertainty as well as other sources of uncertainty, such as model and environmental factors, into the development of more robust and reliable predictive models. Furthermore, future work will focus on fault diagnosis and prognosis through HI construction in a dual-task learning framework, with an emphasis on incorporating zero-fault-shot learning techniques to improve the model’s adaptability to unseen fault types and operational conditions.