4.1. Experiment Settings

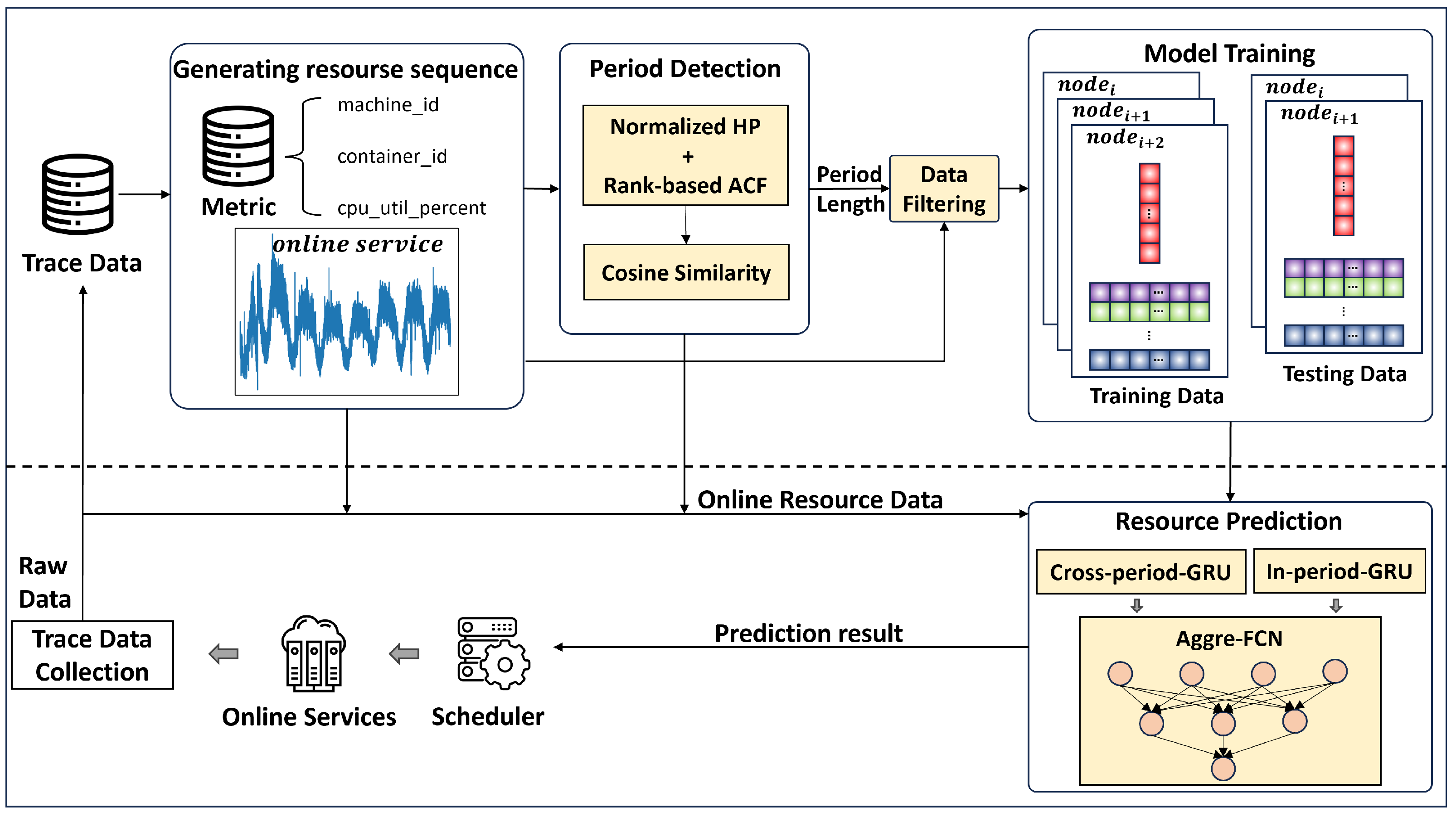

To evaluate the resource prediction capability of PRPOS, we use the Alibaba cluster trace v2018 dataset. Released in 2018, this dataset records the resource usage of over 5000 online services during an eight-day period. Of its six constituent tables, we use two: the metadata table, which contains service metadata, including the status field used to identify normally functioning services, and the usage table, which includes CPU, memory, and disk measurements recorded at 30-s intervals. After selecting 40 services with normal status from the metadata table, we aggregate their resource usage sequences per service from the usage table. We focus on CPU utilization percentage as the primary prediction metric due to its representative nature. For each service, we extract timestamp-sorted CPU utilization percentages to form individual time series, which together constitute our experimental dataset. Each service trains a dedicated resource prediction model using the data partitioning approach described in the "Model Training" subsection in part B of Section 3.3.
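The extraction step can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the column names `container_id`, `time_stamp`, and `cpu_util_percent` are assumptions about the usage table's schema, and the rows are made-up values.

```python
from collections import defaultdict

def build_cpu_series(rows, service_ids):
    """Group usage rows by service and return timestamp-sorted CPU series.

    `rows` is an iterable of dicts (e.g. from csv.DictReader); the column
    names used here are assumptions, not taken from the paper.
    """
    wanted = set(service_ids)
    grouped = defaultdict(list)
    for row in rows:
        if row["container_id"] in wanted:
            grouped[row["container_id"]].append(
                (int(row["time_stamp"]), float(row["cpu_util_percent"]))
            )
    # Sort each service's samples by timestamp and keep only the CPU values.
    return {sid: [cpu for _, cpu in sorted(samples)]
            for sid, samples in grouped.items()}

# Made-up rows for illustration only.
rows = [
    {"container_id": "c_1", "time_stamp": "60", "cpu_util_percent": "12.0"},
    {"container_id": "c_2", "time_stamp": "30", "cpu_util_percent": "40.0"},
    {"container_id": "c_1", "time_stamp": "30", "cpu_util_percent": "10.0"},
    {"container_id": "c_3", "time_stamp": "30", "cpu_util_percent": "99.0"},
]
series = build_cpu_series(rows, ["c_1", "c_2"])
```

Services that were not selected (here `c_3`) are dropped, and each remaining service yields one time series ordered by timestamp.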

To comprehensively evaluate PRPOS’s period detection under scenarios with both trend consistency and magnitude heterogeneity, we construct a synthetic dataset based on statistical characteristics from the Alibaba cluster trace v2018. This dataset enforces trend consistency by maintaining identical resource usage change patterns across consecutive cycles. Periodic patterns are modeled as standard sine waves with period lengths measured in number of 30-s data intervals. The lengths span five ranges ([800–1000), [1000–1200), [1200–1400), [1400–1600), and [1600–1820] intervals), which systematically cover the dominant period length distribution in the dataset. For each of the five configurations, we generate 100 CPU usage percentage sequences, each containing 8 cycles. Magnitude heterogeneity is introduced into these sequences through two key dimensions: baseline levels, representing the steady-state resource occupancy and ranging from 5% to 50%, and fluctuation intensity, characterized by ±15% inter-cycle variations. Additionally, we randomly inject Gaussian noise and outliers into each sequence.
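A generator in the spirit of this description might look like the sketch below. Several details are assumptions made only for illustration: the ±15% variation is applied as a per-cycle scale factor, and the sine amplitude, noise standard deviation, outlier rate, and outlier size are hypothetical values, since the text does not fix them.

```python
import math
import random

def synth_cpu_sequence(period, cycles=8, baseline=20.0, amplitude=5.0,
                       variation=0.15, noise_sigma=0.5, outlier_rate=0.001,
                       outlier_scale=3.0, seed=0):
    """Generate a sine-shaped CPU usage sequence (in percent).

    All default values except `cycles` and `variation` are hypothetical.
    """
    rng = random.Random(seed)
    seq = []
    for _ in range(cycles):
        # Per-cycle magnitude factor drawn from [1 - variation, 1 + variation].
        scale = 1.0 + rng.uniform(-variation, variation)
        for t in range(period):
            value = scale * (baseline
                             + amplitude * math.sin(2 * math.pi * t / period))
            value += rng.gauss(0.0, noise_sigma)      # Gaussian noise
            if rng.random() < outlier_rate:           # occasional outlier spike
                value += outlier_scale * amplitude
            seq.append(min(100.0, max(0.0, value)))   # keep a valid percentage
    return seq

seq = synth_cpu_sequence(period=900)  # 8 cycles of 900 intervals each
```

Every cycle keeps the same sine shape (trend consistency) while its level and swing differ from cycle to cycle (magnitude heterogeneity).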

Our experiments are conducted on a single server equipped with a 4-core 3.3 GHz Intel Core i5-6600 processor, 32 GB of memory, and a 2 TB HDD.

B. Baselines

The experimental baselines were constructed by adapting existing competitive approaches for cloud resource prediction, each employing statistical, machine learning, or deep learning architectures.

ARIMA [6]: Combines wavelet decomposition and ARIMA for short-term trend prediction but struggles with nonlinear and complex patterns.

XGBoost [8]: Uses CNN to optimize tree weights, boosting accuracy but increasing computational complexity.

CNN [62]: Captures local temporal patterns via sliding kernels but fails to model long-term periodic dependencies due to fixed receptive fields.

LSTM [63]: Robust to noise and temporal dependencies but suffers from high computational complexity and data hunger for intricate patterns.

BiLSTM [64]: Enhances context modeling with bidirectional processing but inherits LSTM’s high cost and interpretability issues.

GRU [65]: More parameter-efficient than LSTM but limited in parallelization and long-range dependency modeling.

GRU+Attention [14]: Attention mechanisms alleviate GRU’s long-range issues but introduce full-sequence processing overhead and noise sensitivity.

RPTCN [40]: Combines dilated convolutions and attention for long-range dependencies but struggles with periodic phase shifts and irrelevant feature noise.

VSSW-MSDSS [43]: Dynamically selects among statistical, ML, and DL models via variable-size sliding windows but relies on manual threshold tuning and lacks explicit periodicity modeling.

FISFA [44]: Leverages an SG filter, a FEDformer, and FECAM for spectral patterns but incurs heavy computation and handles periodicity only implicitly.

In addition, we evaluate the accuracy and computational efficiency of our period detection method in PRPOS, comparing it to widely adopted frequency-domain and time-domain baselines.

findFrequency [66]: Estimates the period length from the maximum value in the frequency spectrum.

SAZED [49]: This approach includes the SAZEDopt and SAZEDmaj variants, which combine spectral and autocorrelation analysis for parameter-free seasonality detection with high efficiency. However, it assumes stable amplitudes and is sensitive to outliers, owing to its reliance on raw autocorrelation.

RobustPeriod [20,21]: This leverages wavelet transforms and robust Huber optimization to detect multiple periodicities. It suffers from high computational costs and phase-amplitude decoupling, making it less suitable for magnitude-varying resource patterns.

C. Evaluation Metrics

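To quantify and compare the prediction performance of PRPOS with the ten baselines, we use two performance metrics, MAPE [67] and RMSE [68], defined as follows:

$$\mathrm{MAPE} = \frac{100\%}{n} \sum_{i=1}^{n} \left| \frac{y_i - \hat{y}_i}{y_i} \right|$$

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}$$

where $\hat{y}_i$ and $y_i$ are the predicted value and ground truth of instance $i$, and $n$ is the number of sample instances.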
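Both metrics are straightforward to compute. The sketch below uses the standard definitions of MAPE and RMSE; the sample values are made up for illustration and are not results from the experiments.

```python
import math

def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent (undefined if any y_true is 0)."""
    return 100.0 / len(y_true) * sum(
        abs((t - p) / t) for t, p in zip(y_true, y_pred)
    )

def rmse(y_true, y_pred):
    """Root mean squared error, in the same unit as the inputs."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )

# Made-up CPU utilization values (percent).
y_true = [20.0, 40.0, 50.0]
y_pred = [22.0, 36.0, 50.0]
print(f"MAPE = {mape(y_true, y_pred):.2f}%, RMSE = {rmse(y_true, y_pred):.2f}")
```

MAPE is scale-free, which makes it comparable across services with different baseline levels, whereas RMSE penalizes large individual errors more heavily.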

For period detection evaluation, we use Accuracy to measure the performance of each method.

D. Parameter Settings

In this section, we present the core parameter settings in PRPOS. The detailed parameter settings for each baseline model are provided in Appendix A (Table A1 and Table A2).

The configurable parameters in PRPOS are categorized into three functional groups corresponding to the stages of period detection, sample data selection, and resource prediction modeling. Note that the parameters for the prediction modeling stage are specifically those of the deep learning model, as shown in Table 1.

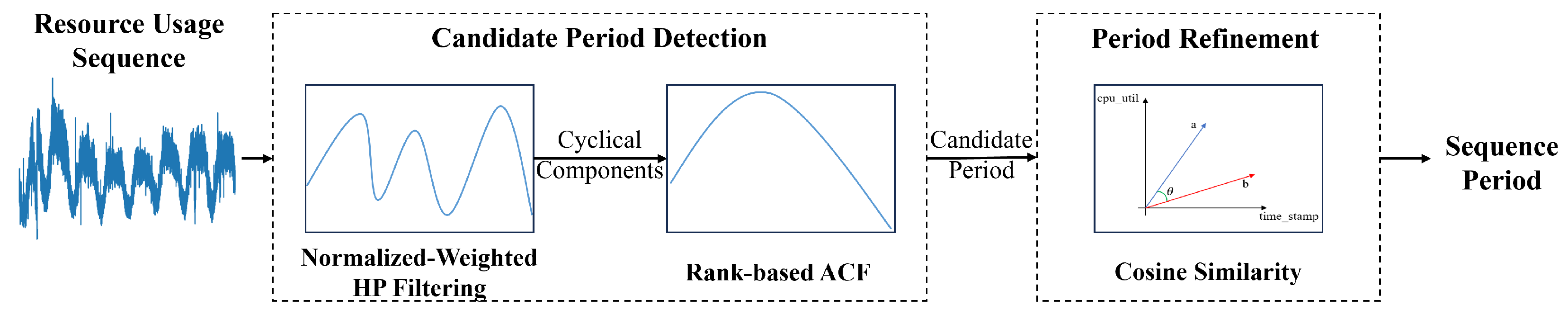

In the period detection stage, the HP filter smoothing parameter is set to 14,400, the classical setting for extracting near-daily periodic trends [25]. The rank-based ACF is computed with two key parameters: the window length and the maximum lag. In our experiments, we set the window length to 3000 and the maximum lag to 4000 so as to fully encompass the characteristic near-daily periodicity of online service CPU usage (6.5–18 h in the Alibaba trace) and its potential harmonics [26]. The cosine similarity threshold is set to 0.8 to enforce strict waveform consistency against the inherent burstiness and noise in online service CPU usage. During period refinement, the step size is set to the minimum sampling interval (30 s) in the trace data to achieve the finest adjustment granularity, while the terminal condition is set to 5 to serve as a robust stopping criterion that distinguishes short-lived noise spikes from a genuine, persistent shift in the periodic pattern of CPU consumption.
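To illustrate why a rank-based ACF tolerates magnitude differences between cycles, the sketch below rank-transforms a series and then computes an ordinary autocorrelation on the ranks. It is a simplified illustration rather than the PRPOS implementation: the HP filtering, windowing, and refinement steps are omitted, and the function names and the lag search range are hypothetical.

```python
import math

def rank_transform(xs):
    """Replace each value by its rank; tied values share the average rank."""
    order = sorted(range(len(xs)), key=lambda i: xs[i])
    ranks = [0.0] * len(xs)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and xs[order[j + 1]] == xs[order[i]]:
            j += 1
        avg = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks

def rank_acf(xs, max_lag):
    """Autocorrelation of the rank-transformed series for lags 0..max_lag."""
    r = rank_transform(xs)
    mean = sum(r) / len(r)
    d = [v - mean for v in r]
    denom = sum(v * v for v in d)
    return [sum(d[t] * d[t + lag] for t in range(len(d) - lag)) / denom
            for lag in range(max_lag + 1)]

def candidate_period(xs, min_lag, max_lag):
    """Lag in [min_lag, max_lag] with the highest rank-based autocorrelation."""
    acf = rank_acf(xs, max_lag)
    return max(range(min_lag, max_lag + 1), key=lambda lag: acf[lag])

# Eight cycles of period 50 whose amplitude changes from cycle to cycle.
amps = [5, 8, 6, 10, 7, 9, 6, 8]
xs = [20 + amps[t // 50] * math.sin(2 * math.pi * (t % 50) / 50)
      for t in range(400)]
period = candidate_period(xs, min_lag=10, max_lag=80)
```

Because ranks depend only on the ordering of values, a cycle with a larger swing does not dominate the correlation, and the peak should fall at or very near lag 50 in this toy series.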

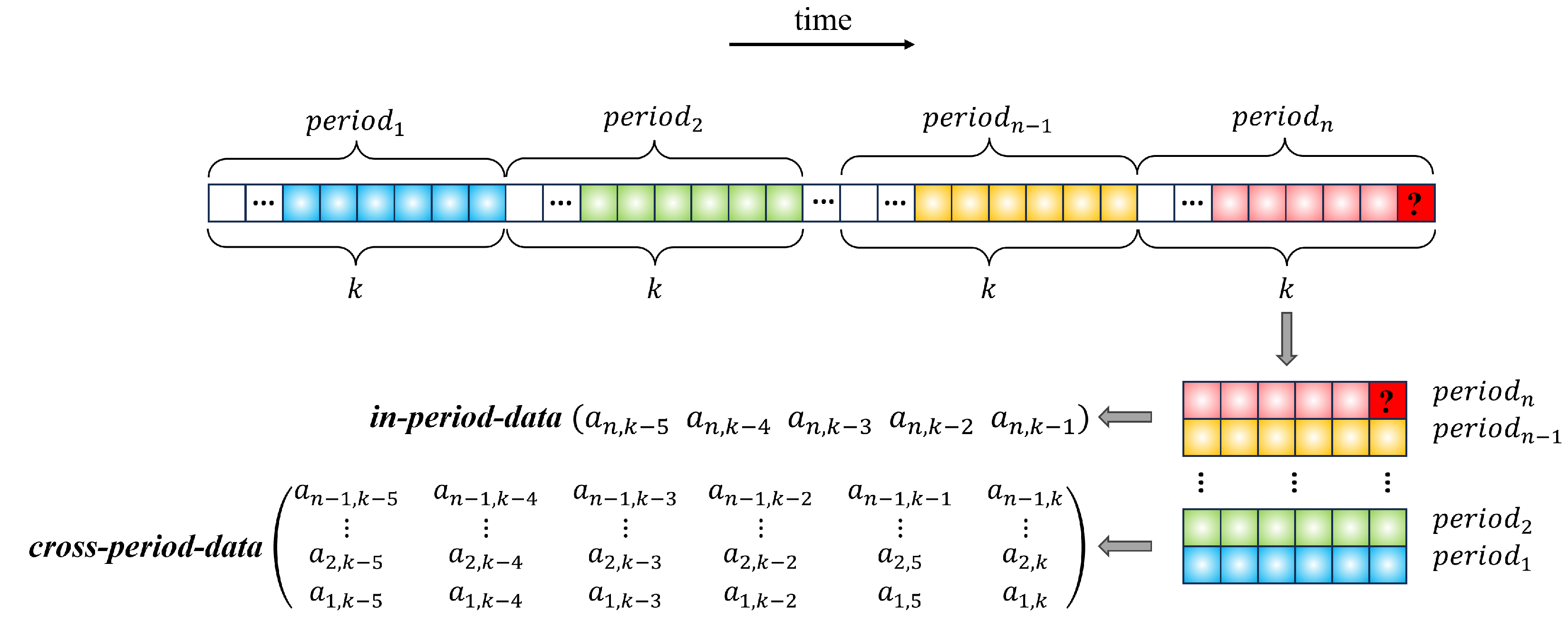

In the sample data selection stage, parameters are set to model the continuous trends and mitigate transient noise in online service’s CPU usage. The input data size is set to 60 to capture essential short-term context. The sampled window size per cycle is set to 10 to provide the resolution needed to represent pattern morphology. The number of historical cycles is set to 5 to offer a robust view of long-term, repeating trends, allowing the model to average out non-repeating noise.
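The selection of 60 input points can be sketched as follows. The alignment rule is an assumption made for illustration: each historical window is taken at the same phase as the most recent window, exactly one, two, and so on up to five periods earlier. The function name `select_samples` is hypothetical.

```python
def select_samples(series, period, window=10, cycles=5):
    """Return the most recent window plus phase-aligned windows from past cycles.

    Assumes each historical window sits a whole number of periods before
    the most recent window (an illustrative alignment rule).
    """
    n = len(series)
    if n < cycles * period + window:
        raise ValueError("series too short for the requested history")
    recent = series[n - window:]
    historical = [series[n - window - k * period: n - k * period]
                  for k in range(cycles, 0, -1)]  # oldest cycle first
    return recent, historical

# Toy series whose values equal their indices, with a period of 15 samples.
series = list(range(100))
recent, historical = select_samples(series, period=15)
total_points = len(recent) + sum(len(h) for h in historical)
```

With the default settings, the model input has 10 + 5 × 10 = 60 points, matching the input data size stated above.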

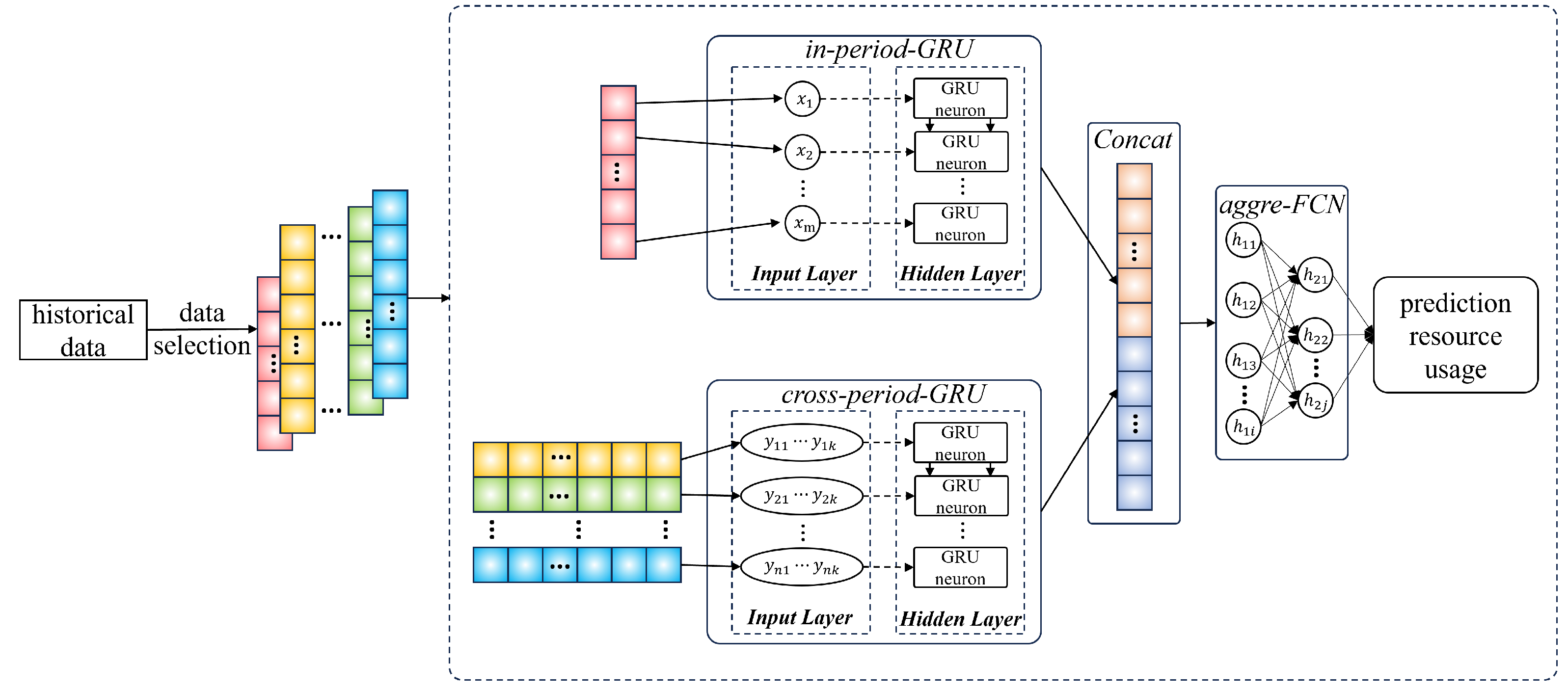

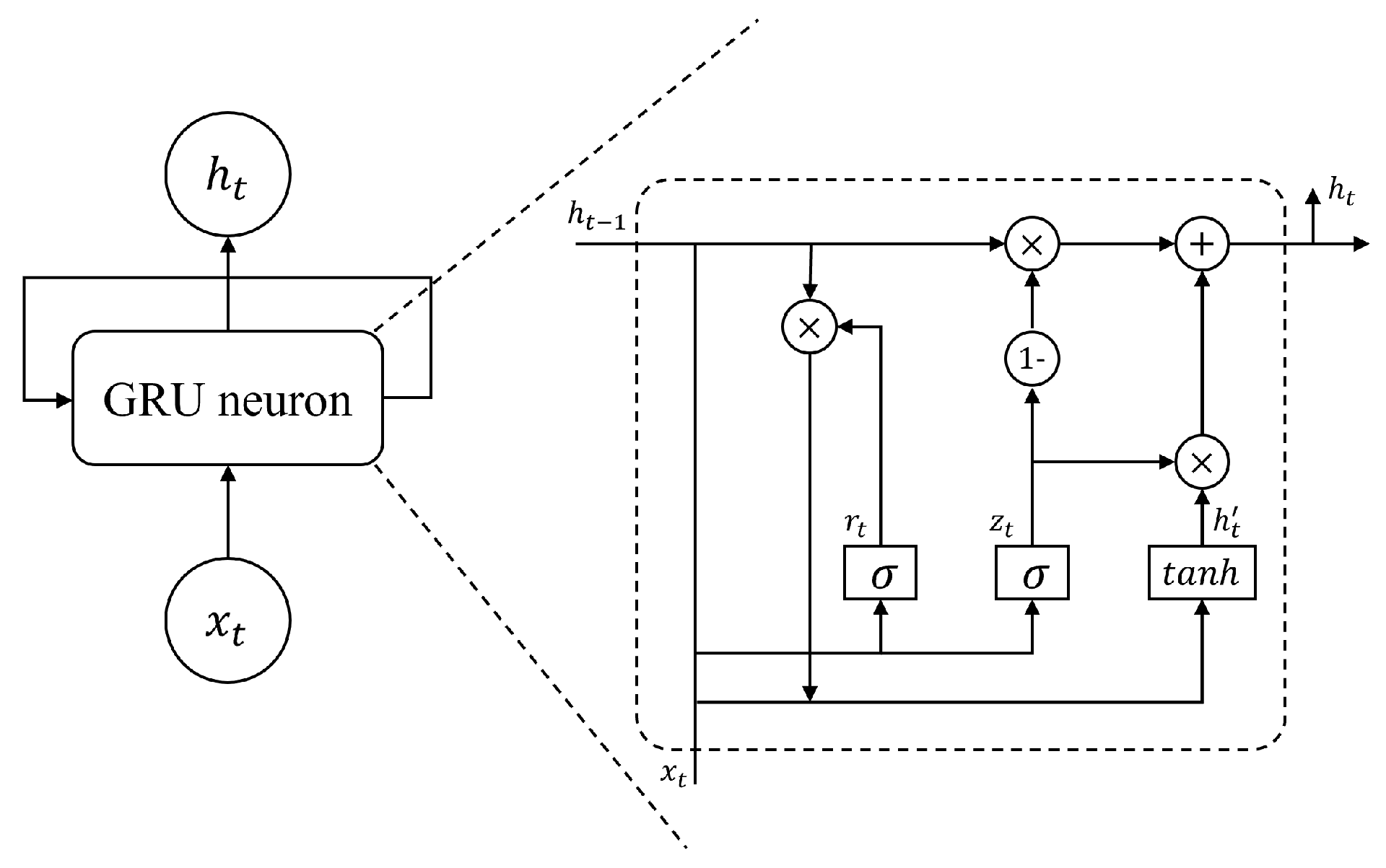

In the resource prediction modeling stage, the model structure is defined by several key parameters. The fully connected network has a depth of 2, with 128 and 64 neurons in its two layers, balancing capacity and stability. The GRU hidden unit number is set to 64 for effective temporal modeling. The core design is the dual-scale GRU, where the in-period-GRU uses a hidden layer depth of 1 to capture high-frequency dynamics, and the cross-period-GRU uses a hidden layer depth of 2 to model complex long-term trends. The learning rate is set to 0.005 to ensure stable convergence.

4.2. Results and Analysis

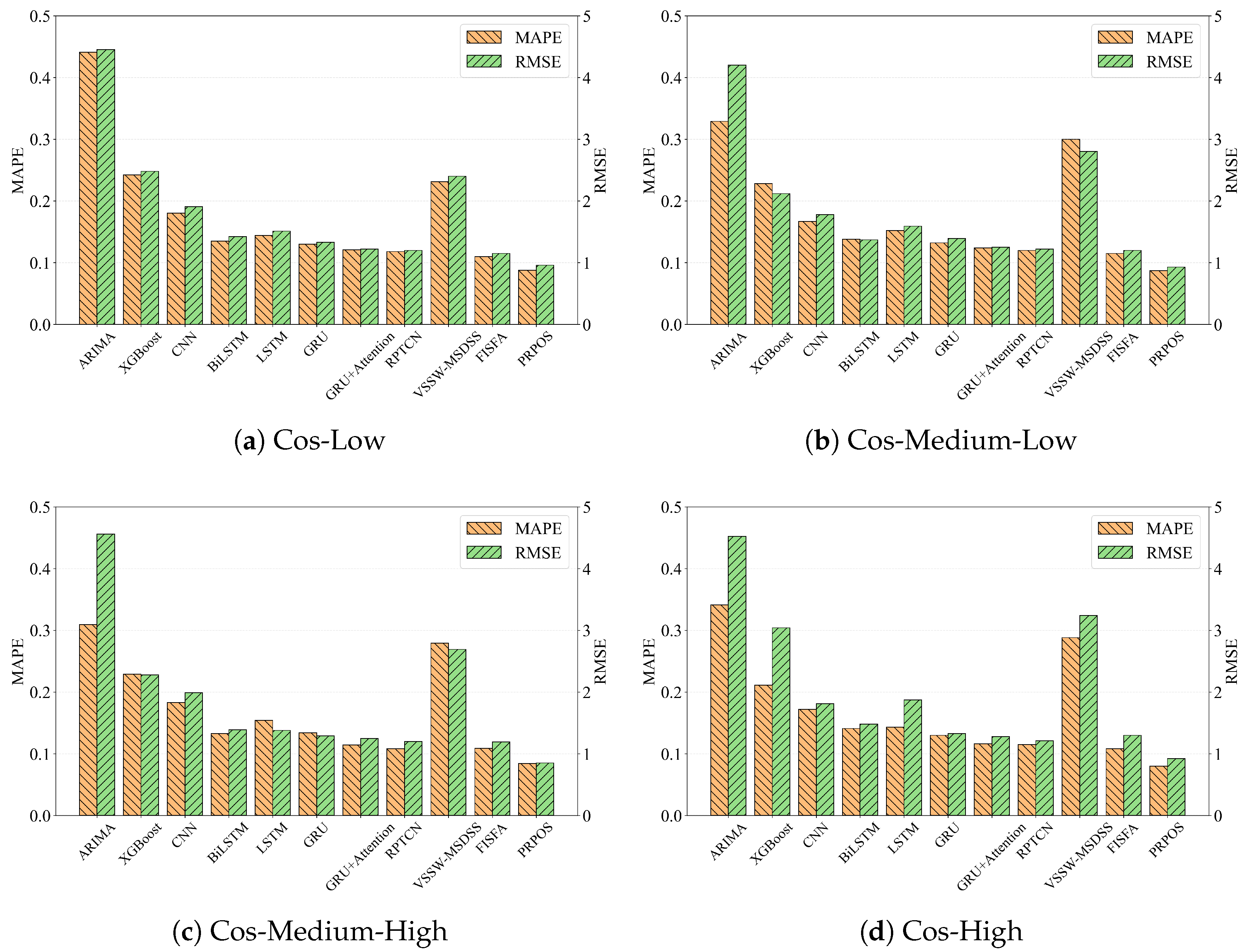

To evaluate the resource prediction performance of PRPOS under diverse utilization patterns, we classified the CPU usage sequences of online services from the Alibaba cluster trace v2018 dataset based on the average cosine similarity of their periodic patterns, obtained through PRPOS’s period detection method. These sequences were grouped into four categories according to similarity ranges: [0.8, 0.85] (Cos-Low), [0.85, 0.9] (Cos-Medium-Low), [0.9, 0.95] (Cos-Medium-High), and [0.95, 1] (Cos-High), each reflecting a distinct periodic characteristic. From each category, ten sequences were randomly selected for experimentation. To ensure a fair comparison, all baseline methods use the 60 most recent data points as input, matching the total historical data volume of PRPOS, which integrates 10 points from the current cycle and 5 historical cycles (10 points each).
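The grouping can be illustrated with the sketch below. It assumes that similarity is computed between consecutive full cycles of raw values and that a value on a shared boundary (for example 0.85) is assigned to the higher group; neither detail is specified in the text.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb)

def average_cycle_similarity(series, period):
    """Mean cosine similarity between consecutive full cycles."""
    cycles = [series[i:i + period]
              for i in range(0, len(series) - period + 1, period)]
    sims = [cosine_similarity(cycles[i], cycles[i + 1])
            for i in range(len(cycles) - 1)]
    return sum(sims) / len(sims)

def similarity_group(sim):
    """Map an average similarity to a group name (boundary handling assumed)."""
    if sim < 0.8:
        return None  # below the range considered in the experiments
    if sim < 0.85:
        return "Cos-Low"
    if sim < 0.9:
        return "Cos-Medium-Low"
    if sim < 0.95:
        return "Cos-Medium-High"
    return "Cos-High"

# Three cycles with the same shape but different magnitudes.
series = [1, 2, 3, 2] + [2, 4, 6, 4] + [3, 6, 9, 6]
group = similarity_group(average_cycle_similarity(series, period=4))
```

Cosine similarity ignores overall scale, so cycles that differ only in magnitude still land in the highest-similarity group.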

Figure 6 illustrates the overall performance of PRPOS and baseline methods. PRPOS consistently outperforms all baselines across all four test groups, achieving the best results in online service resource prediction. Specifically, it attains an average improvement of 45.3% and a maximum of 80% in MAPE, along with an average improvement of 44.3% and a maximum of 81.4% in RMSE. PRPOS achieves superior performance by explicitly modeling periodicity in resource usage. By intelligently incorporating both short-term dependencies (from the current cycle) and long-term dependencies (from historical cycles), PRPOS effectively captures both immediate variations and recurring patterns. This dual-temporal approach proves more accurate than methods that either ignore periodic features or rely solely on transformer-based dependency modeling.

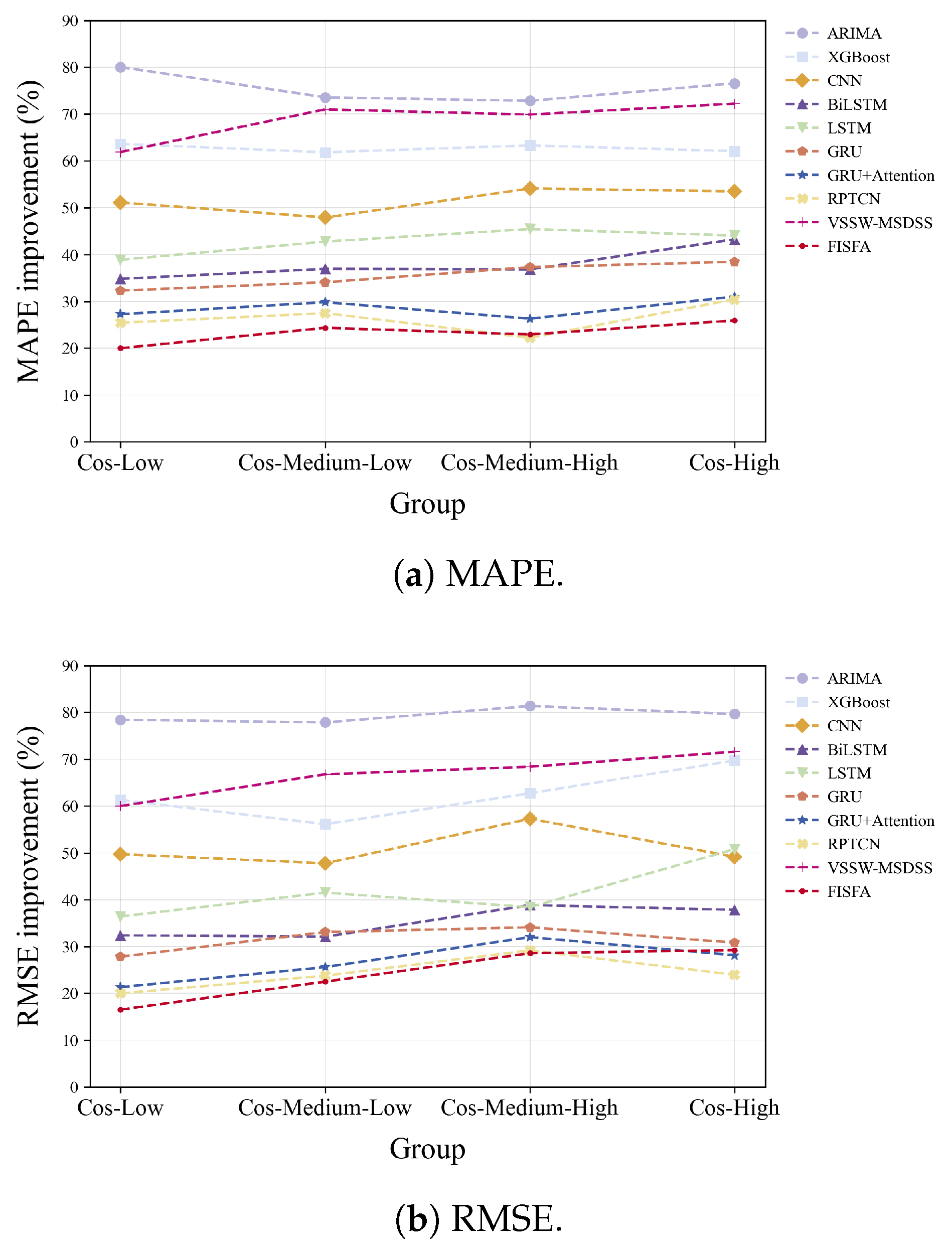

We further observe that the performance improvement of PRPOS becomes more pronounced as the trend similarity across cycles increases. As illustrated in Figure 7, which shows the relative improvement in both MAPE and RMSE over baseline methods, PRPOS achieves significantly greater accuracy gains in groups with higher cosine similarity. For example, compared to the GRU-based model, as similarity increases from [0.8, 0.85] to [0.95, 1], PRPOS attains relative reductions in MAPE ranging from 32.3% to 38.5%, and in RMSE from 27.8% to 30.8%. This trend highlights the advantage of periodic pattern modeling: higher inter-cycle trend consistency implies more stable and predictable resource usage, thereby improving prediction accuracy.
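For reference, a relative reduction of this kind is typically computed as the error decrease divided by the baseline error. The helper below is a sketch of that convention, and the numbers are made up for illustration rather than taken from the figures.

```python
def relative_improvement(baseline_error, model_error):
    """Percentage reduction in error relative to the baseline."""
    return 100.0 * (baseline_error - model_error) / baseline_error

# Hypothetical error values, not results from the experiments.
gain = relative_improvement(baseline_error=0.20, model_error=0.13)
print(f"relative improvement: {gain:.1f}%")
```

A positive value means the model's error is lower than the baseline's, and a negative value means it is higher.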

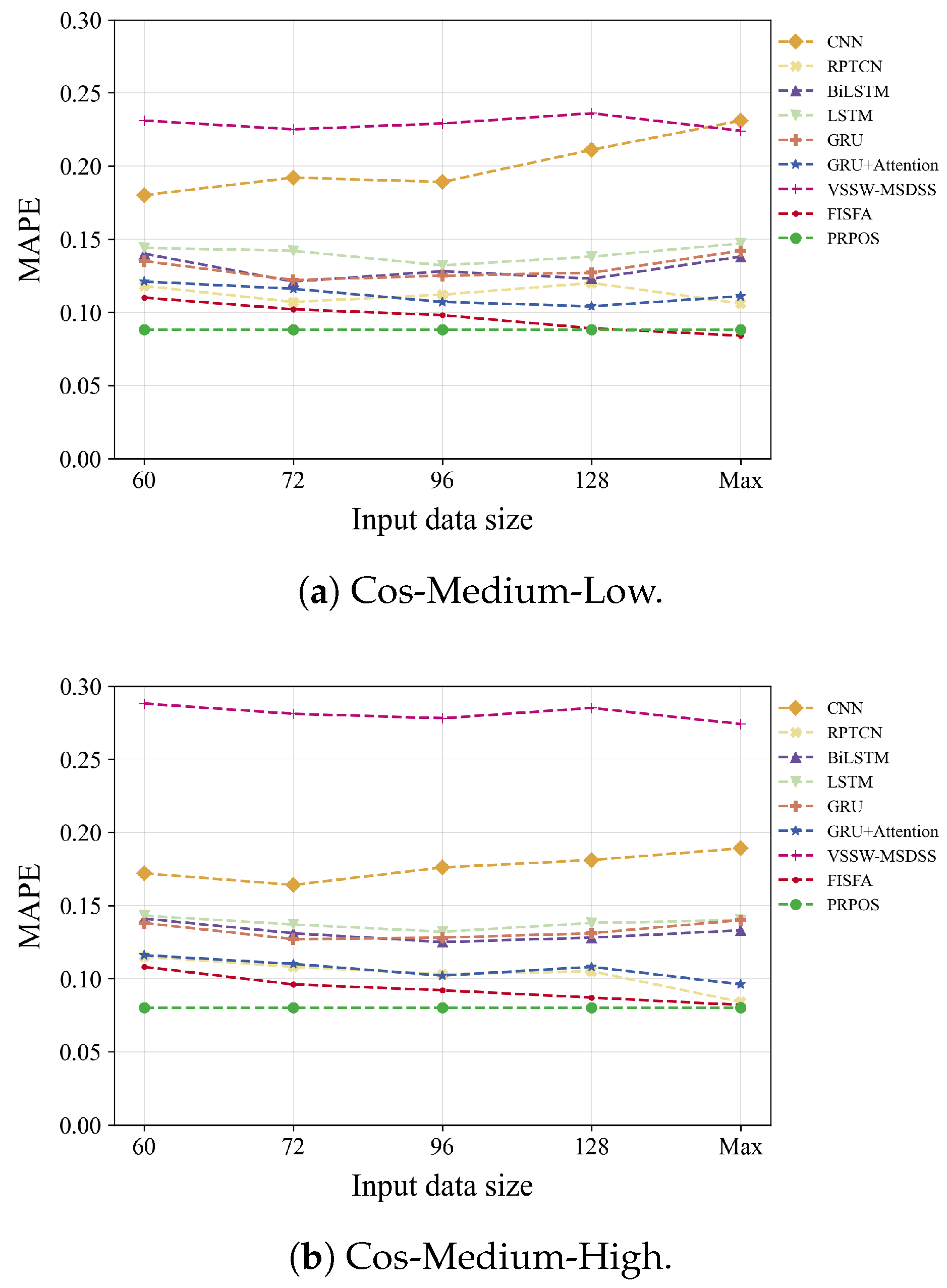

To evaluate the impact of input length on model performance, we maintained PRPOS’s fixed 60-data-point input architecture (comprising the 10 most recent observations plus 5 × 10 phase-aligned historical points) while progressively increasing the sequence lengths of baseline deep learning models to assess their capacity for leveraging extended historical contexts. Specifically, we extended the input length to 72, 96, 128, and the maximum feasible length for all selected baselines except VSSW-MSDSS. Considering the computational constraints during model training, the maximum lengths were configured as 200 for the CNN-based model, 256 for RNN-style models and RPTCN, and 512 for FISFA. We employ the Cos-Medium-Low and Cos-Medium-High service groups for this set of experiments.

As shown in Figure 8, prediction accuracy does not improve monotonically with longer historical windows. PRPOS consistently outperforms baselines even under the same historical data scale. This is because (1) overly short windows in baseline models fail to capture short-term patterns, while (2) excessively long windows introduce noise and outliers that impair prediction. In contrast, PRPOS maintains robust performance by selectively retaining the most relevant historical data and explicitly modeling inter-cycle trends.

Finally, to validate the robustness of PRPOS, we evaluated its predictive performance on the Cos-Low and Cos-High service groups by varying two key parameters: the sampled window size per cycle and the number of historical cycles used for prediction.

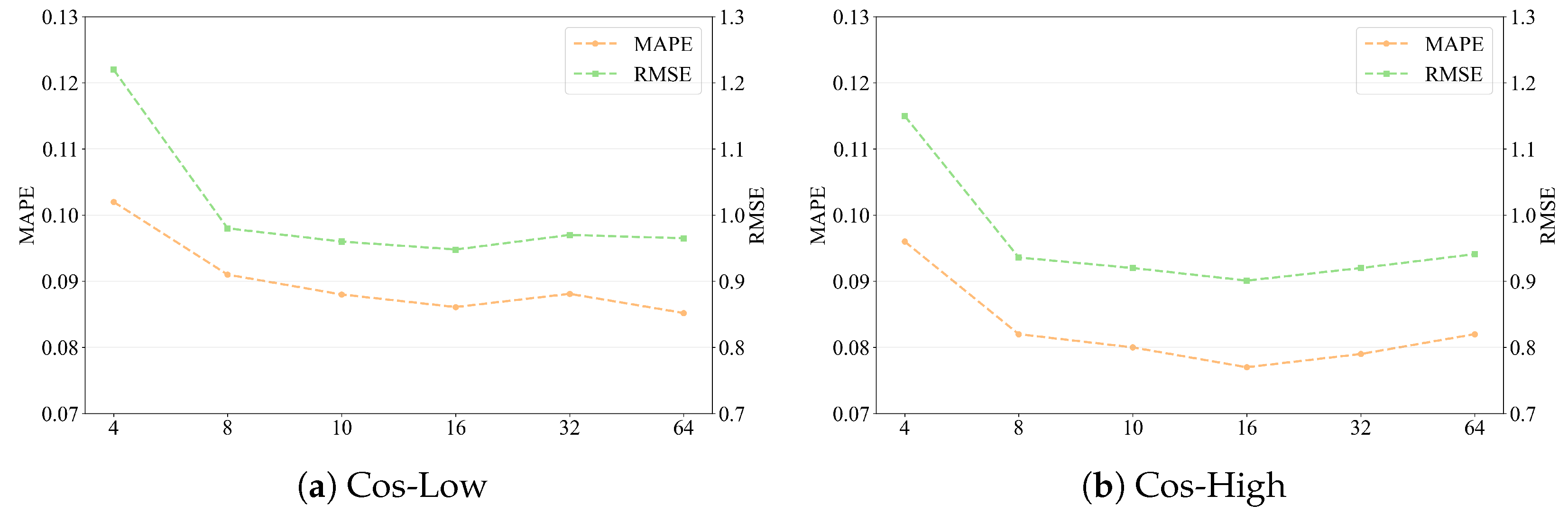

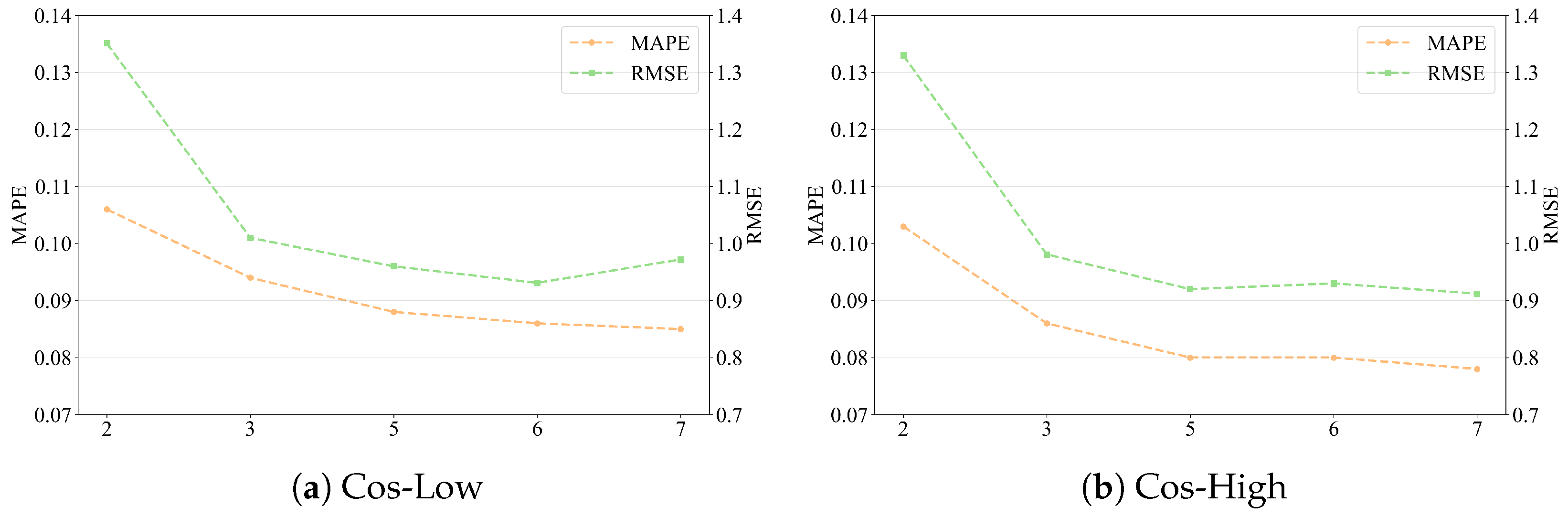

In our experiments, we use the configuration of 10 samples per cycle and 5 historical cycles, which was employed in our preceding experiments, as the baseline. As illustrated in Figure 9 and Figure 10, the model demonstrates notable robustness. Both MAPE and RMSE remained stable as the sample window size per cycle increased from 8 to 64. A noticeable performance degradation occurred only when the window size was drastically reduced to 4, which resulted in a MAPE increase of up to 20% compared to the baseline. For all other configurations, the deviations in MAPE and RMSE were confined within 3.75% and 2.28%, respectively. Similarly, varying the number of historical cycles from 3 to 7 induced marginal deviations within 7.5% for MAPE and 6.63% for RMSE. However, reducing the cycles to 2 caused a sharp performance decline, increasing MAPE and RMSE by approximately 28.75% and 44.57%, respectively. These results confirm that PRPOS exhibits strong robustness across a wide range of parameter settings, provided they are chosen within appropriate bounds.

Furthermore, compared to the baseline configuration, increasing either parameter did not yield significant performance gains. This suggests that the default setup offers a favorable trade-off between prediction accuracy and computational efficiency.

B. Period Detection Performance

In this section, we evaluate PRPOS’s novel period detection method, specifically optimized for time series data characterized by consistent temporal trends and heterogeneous magnitude patterns. The experimental dataset and baseline methods are described in detail in part A of Section 4.1.

Figure 11 summarizes the accuracy of all methods across different period lengths and under varying degrees of magnitude heterogeneity. PRPOS consistently achieves the highest period detection accuracy in all scenarios. By applying normalized HP filtering and rank-based ACF to mitigate inter-cycle magnitude variations, PRPOS significantly outperforms both SAZED and findFrequency across different period lengths, with an average accuracy improvement of 109.6% and a maximum improvement of 240%.

We further analyze two selected subsets from the 500 synthetic resource usage sequences: a light-variation subset (baseline: 5–10%, fluctuation intensity: 1–10% of the baseline) and a heavy-variation subset (baseline: >10%, fluctuation intensity: >10% of the baseline). Our experimental results demonstrate that PRPOS’s period detection method achieves accuracy improvements of 179.7% on average (up to 304.8%) over SAZED and findFrequency on these subsets. Notably, PRPOS achieves a higher accuracy improvement on the heavy-variation group than on the light-variation group, validating the effectiveness of the normalization and rank transformation operations.

Among the four baseline methods, RobustPeriod achieves the best detection performance, matching PRPOS in accuracy thanks to its MODWT decomposition, Huber robust estimation, and joint time–frequency validation. However, computational cost comparisons reveal significant differences. As shown in Table 2, PRPOS maintains sub-second execution times across all period lengths, while RobustPeriod’s runtime grows sharply with longer periods, reaching up to 3788× slower than PRPOS for periods in [1600, 1820]. This efficiency gap stems from their underlying mechanisms: PRPOS leverages low-complexity normalized HP filtering and rank-based ACF, which exploit monotonic cosine similarity to terminate corrections early, whereas RobustPeriod incurs higher overhead from DWT-based frequency decomposition and DFT-based heuristic searches. Notably, findFrequency incurs the lowest computational cost among all methods but suffers from a 2.4-fold reduction in accuracy, attributable to spectral leakage resulting from its simplistic frequency-domain approach.

C. Ablation Study

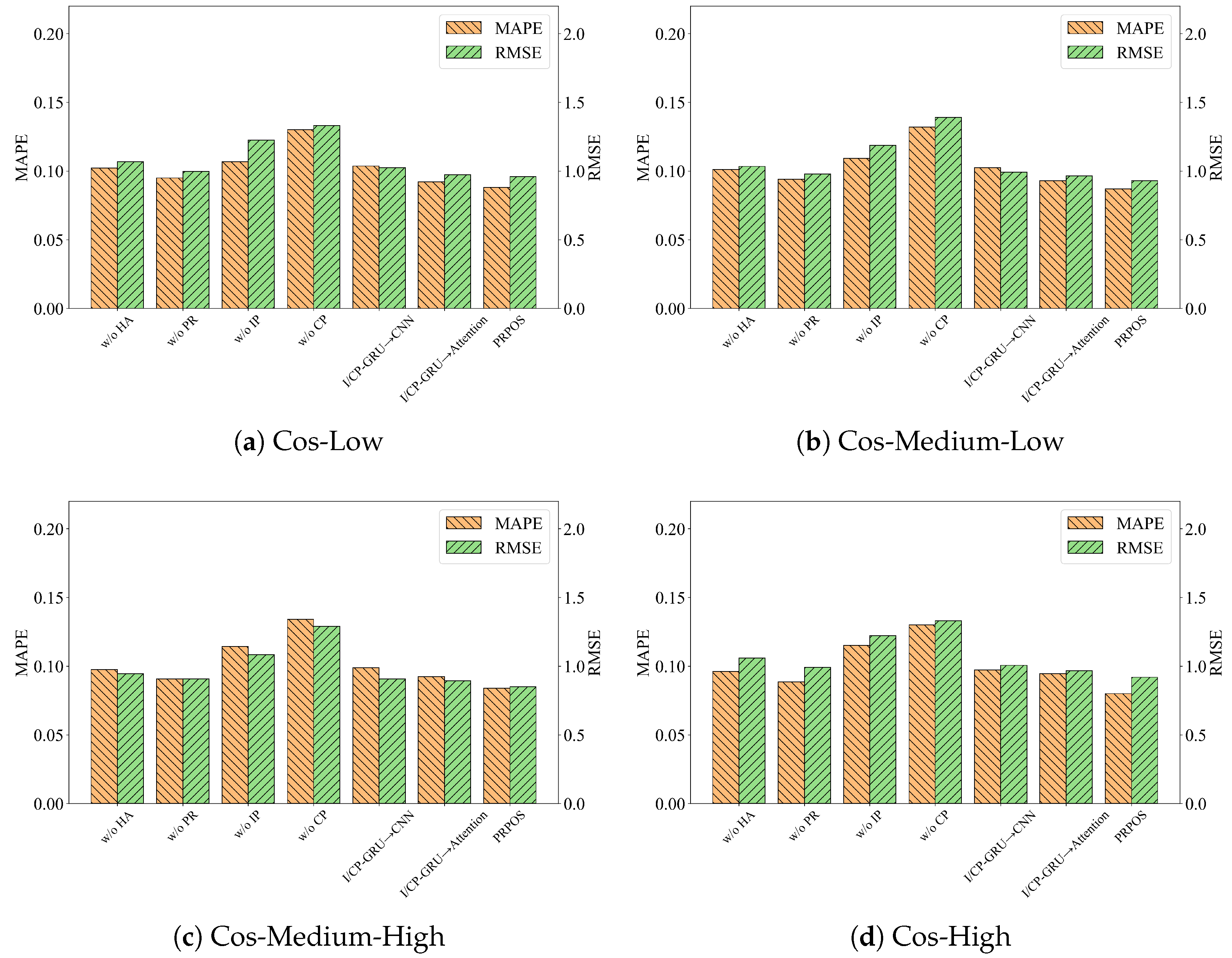

To thoroughly assess the contributions of individual components in the PRPOS prediction model, we perform ablation studies comparing the complete model with its variants, with results detailed in Figure 12. All ablated versions show measurable accuracy degradation compared to the full PRPOS model, which achieves superior performance through its dual capability to perform (1) robust period detection via distribution-resistant normalization and period correction to handle magnitude variations and (2) comprehensive dependency modeling that simultaneously captures both short-term in-period relationships and long-term cross-period patterns across historical sequences.

Effectiveness of the period detection method: We conduct two ablation studies on the three key components in our period detection method. First, replacing normalized HP filtering and rank-based ACF with conventional HP filtering and standard ACF (w/o HA) results in an average increase of 17.17% in MAPE and 12.2% in RMSE across all datasets, confirming the importance of distribution-resistant normalization in reducing period detection noise. Removing the cosine similarity-based refinement component (w/o PR) causes a milder degradation, with MAPE increasing by 8.6% and RMSE by 5.9%, indicating its role in providing additional noise suppression beyond the baseline detector.

Effectiveness of the resource prediction model: To evaluate the effectiveness of our resource prediction model, which consists of in-period and cross-period components, we construct two ablation variants. The w/o IP variant removes the in-period component while retaining the cross-period component’s structure and inputs; this leads to a 31.7% increase in MAPE and a 28.9% rise in RMSE, underscoring the importance of short-term historical patterns. The w/o CP variant removes the cross-period component and extends the in-period component’s input to the latest 60-step history; even with matched input length, this configuration fails to capture long-term periodic features, causing a further 55.4% increase in MAPE and a 46.1% rise in RMSE.

Effectiveness of Sequential Modeling Units: To validate the effectiveness of using GRUs for sequential modeling in both the in-period and cross-period components, we constructed two ablation variants—I/CP-GRU→CNN and I/CP-GRU→Attention—replacing the GRU units with convolution layers and a standard self-attention module (with positional encoding), respectively. Compared to the complete model, the CNN variant shows an average increase of 18.7% in MAPE and 7.3% in RMSE, confirming that GRU outperforms CNN in capturing temporal trends and short-term dependencies. However, replacing GRU with a self-attention mechanism also degrades performance (MAPE: 9.9%, RMSE: 3.8%). This is because our periodicity-based approach splits fixed-length sequences into historical cycles and selects phase-aligned short sub-sequences, negating the self-attention mechanism’s long-sequence weighting. Instead, GRU’s strength in local short-term dependencies prevails. Additionally, GRU’s gating mechanisms adaptively filter noise/outliers for robustness to imperfect data, whereas the attention mechanism is more susceptible to such interference.