Large Language Models for Machine Learning Design Assistance: Prompt-Driven Algorithm Selection and Optimization in Diverse Supervised Learning Tasks

Abstract

1. Introduction

1.1. Related Work

1.2. The Contributions of This Study

- It presents a systematic, comparative performance analysis of several LLM families (OpenAI, GPT, Gemini, Claude, DeepSeek) on real-world tasks, a type of analysis that remains scarce in the literature.

- By integrating hyperparameter tuning (HPT) into the code generation process, it is one of the first studies to examine the effectiveness of LLMs not only in generating solutions but also in calibrating them.

- It extensively studies the impact of two prompt design strategies, few-shot prompting (FSP) and Tree of Thoughts (ToT), on end-to-end ML workflows covering data preprocessing, algorithm selection, and code generation, and it evaluates how well LLMs guided by each strategy produce complete solutions.

- It analyzes the practical applicability of the generated code from multiple angles by comparing its performance with both the public scores of Kaggle participants and solutions written by experts.

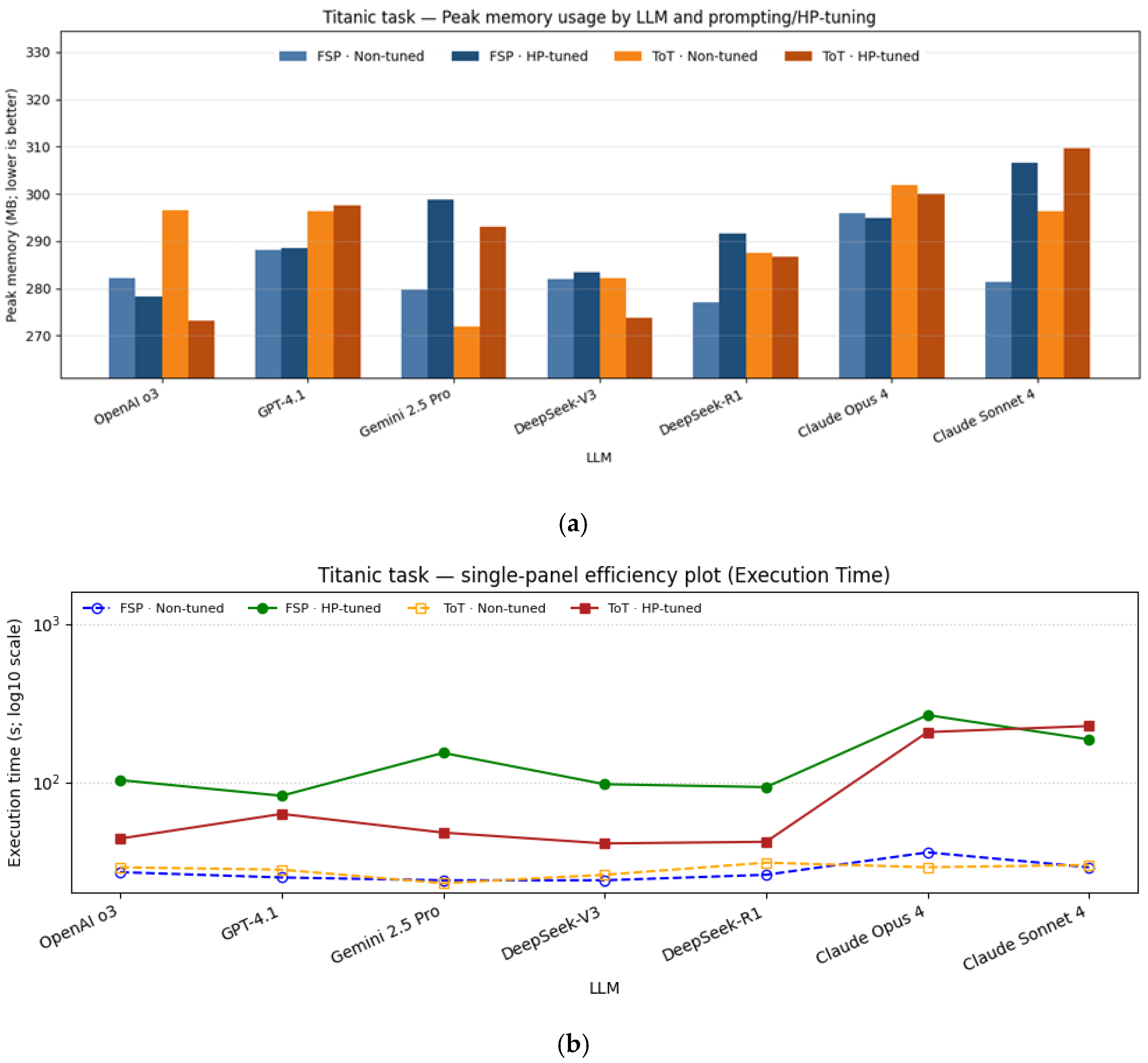

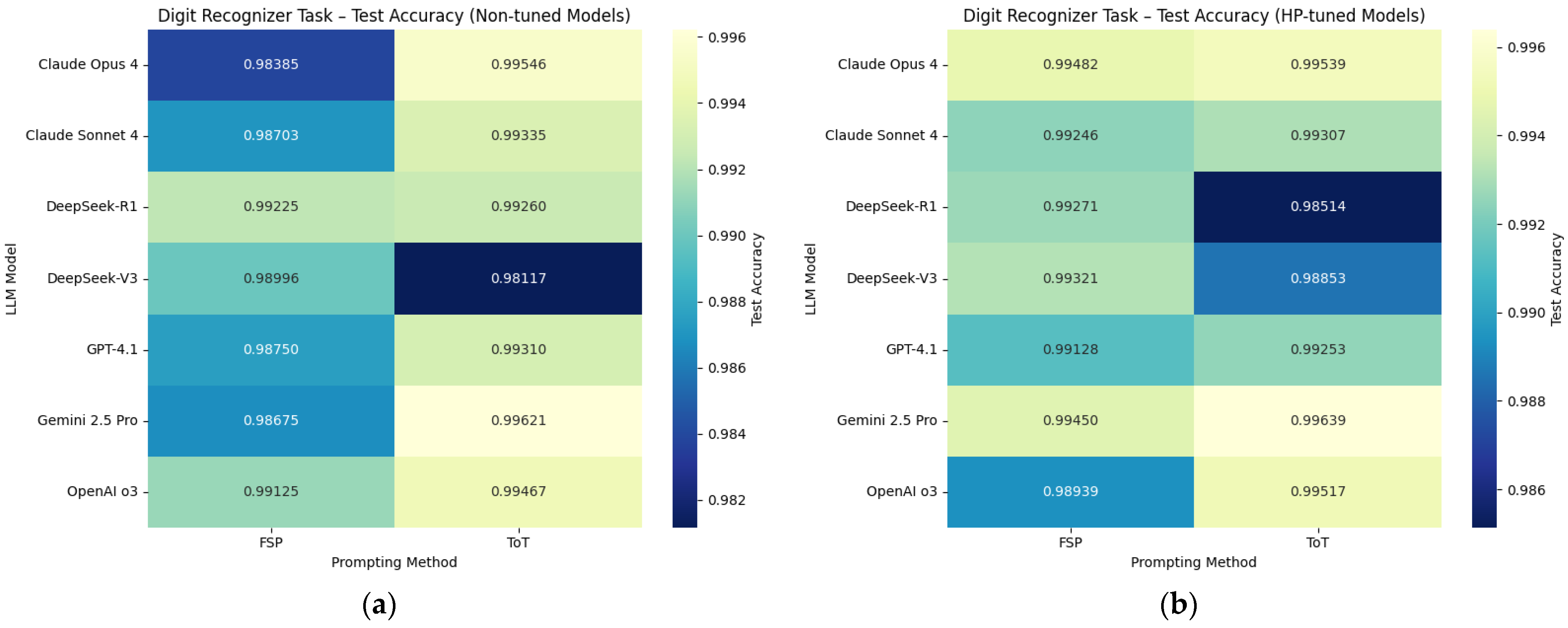

- Performance evaluation is not limited to classical metrics such as accuracy, F1 score, and Root Mean Squared Error (RMSE); it also accounts for resource costs such as execution time and peak memory usage, which are critical in real-world applications.

- Through the analysis of four different task types (spanning tabular, text, and image data and including both classification and regression), it shows in detail where the models perform better or worse depending on the task type.

- It extends the scope of LLM evaluation by including comparisons with established AutoML frameworks, thereby situating LLM-driven code generation within the broader landscape of machine learning.
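The combination of predictive metrics and resource costs described in the contributions above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the helper names (`accuracy`, `f1_binary`, `rmse`, `run_with_cost`) are hypothetical, and `tracemalloc` only tracks memory allocated through the Python allocator, so this is not necessarily the measurement procedure used in this study.

```python
import math
import time
import tracemalloc


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_binary(y_true, y_pred, positive=1):
    """F1 score for a binary task, treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def rmse(y_true, y_pred):
    """Root Mean Squared Error for a regression task."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def run_with_cost(fn, *args, **kwargs):
    """Run `fn` and return (result, elapsed_seconds, peak_bytes).

    Assumption: peak memory is approximated with tracemalloc, which sees
    Python-level allocations only (not memory held by native libraries).
    """
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = fn(*args, **kwargs)
    finally:
        elapsed = time.perf_counter() - start
        _, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return result, elapsed, peak


def toy_pipeline(n):
    """Hypothetical stand-in for an LLM-generated training script."""
    return [i % 2 for i in range(n)]


predictions, seconds, peak_bytes = run_with_cost(toy_pipeline, 1000)
labels = [i % 2 for i in range(1000)]
print(f"accuracy={accuracy(labels, predictions):.3f} "
      f"time={seconds:.4f}s peak={peak_bytes / 1024:.1f} KiB")
```

Wrapping the whole generated script in one call keeps the quality metrics and the cost figures tied to the same run, which is what makes the two kinds of numbers comparable across models.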

2. Materials and Methods

2.1. Machine Learning Tasks for Evaluation

2.2. Selected LLMs for Experimental Evaluation

2.3. Prompt-Driven Code Generation with LLMs

2.4. AutoML Frameworks: Setup and Parameters

2.5. Evaluation Metrics

3. Experiments and Results

4. Discussion

4.1. Prompting Effectiveness by Task Type

4.2. A Practical Comparison of LLM-Generated and Human-Developed Solutions

4.3. Comparison with Prior Literature

4.4. LLM Pipelines vs. Classical AutoML

4.5. General Insights and Practical Recommendations

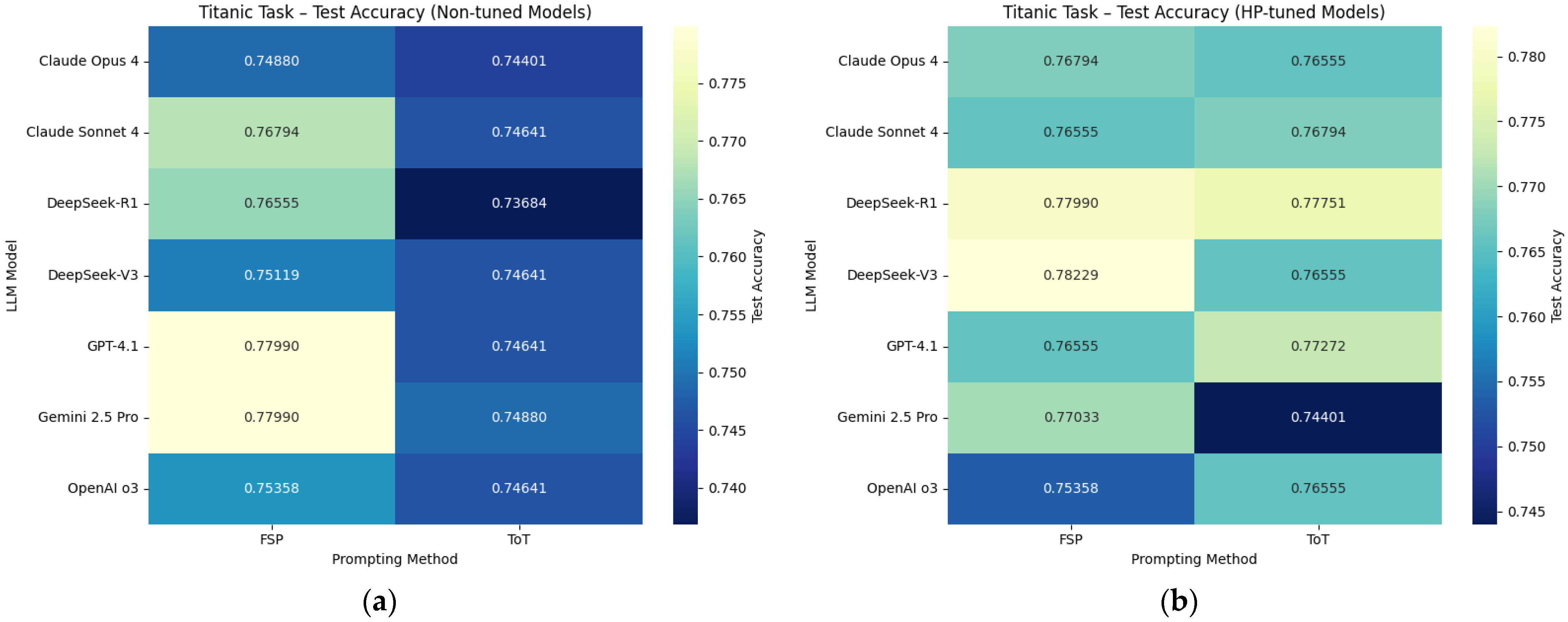

- No prompting technique is universally superior in terms of accuracy, F1 score, or error metrics. FSP offers a strong and stable start without tuning, while ToT often improves substantially with HP-tuning and achieves the best results in some tasks.

- HPT usually increases execution time, but the gains it yields can be large enough to change the ranking.

- There is no uniform trend in memory consumption; differences depend on the interaction of LLM, prompting strategy, and tuning, and they are small in most tasks.

- Among the models, Gemini 2.5 Pro and DeepSeek-V3 often stand out with high accuracy and F1 scores and low RMSE.

- Practical advice: for a fast and stable start, FSP (no tuning) is particularly suitable for tasks with a narrow to medium search space. For tasks with a wide search space, ToT (HP-tuned) can provide better peak performance if the tuning budget is sufficient.

- Comparisons with AutoML frameworks indicate that LLM-based solutions can match or even surpass systematically optimized baselines in several tasks, while AutoML remains valuable for stability, particularly in structured regression problems.

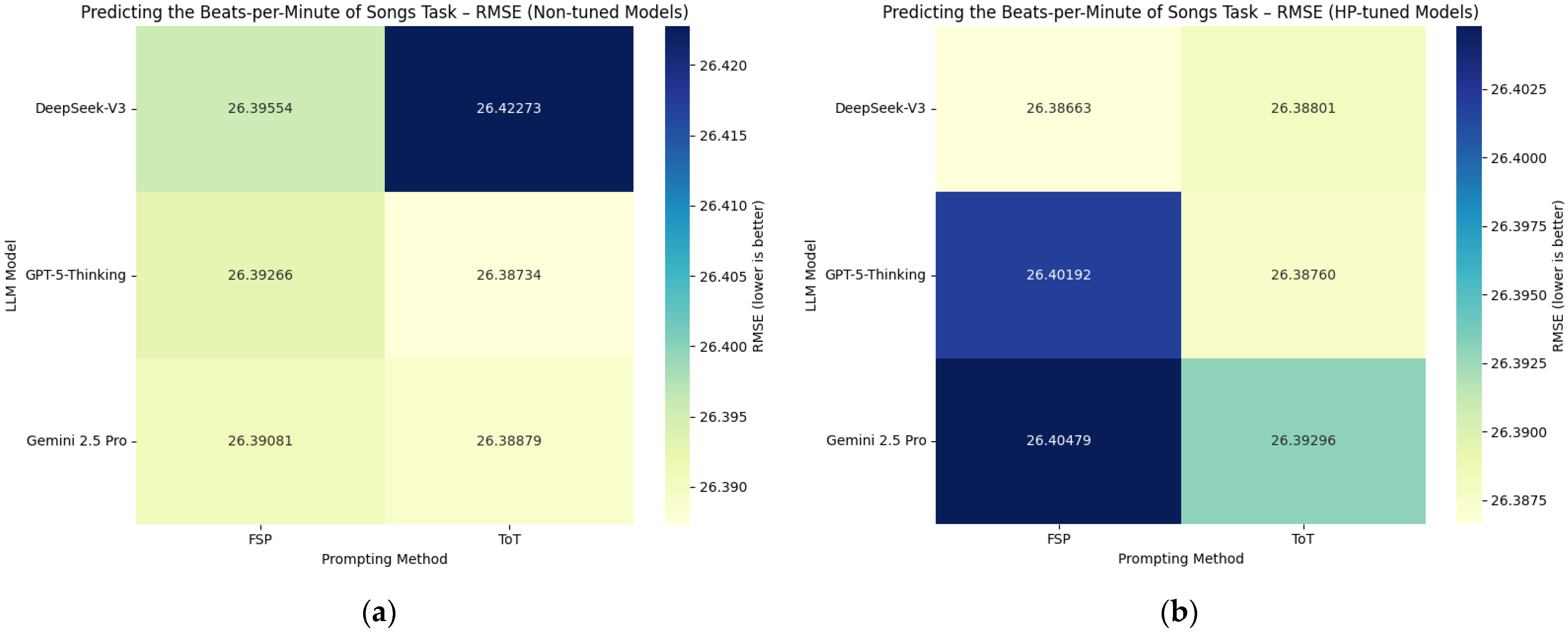

- Results from the most recent and relatively underexplored task (Beats-per-Minute of Songs) demonstrate that LLMs can deliver competitive results even on less-studied datasets, with notable efficiency gains, though their performance remains sensitive to prompting and tuning choices.
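The practical advice in the list above can be written down as a small rule of thumb. The sketch below is hypothetical: the function name, the idea of measuring search-space width as a number of candidate configurations, and both thresholds (`wide_threshold`, `min_budget`) are assumptions introduced for illustration, not values reported in this study. Falling back to FSP when the budget is too small is likewise an assumption.

```python
def recommend_strategy(n_candidates, tuning_budget,
                       wide_threshold=500, min_budget=50):
    """Suggest a prompting/tuning setup from search-space width and budget.

    n_candidates:   number of hyperparameter configurations in the space
    tuning_budget:  number of configurations that can actually be evaluated
    wide_threshold: assumed cut-off above which a space counts as "wide"
    min_budget:     assumed minimum budget that makes tuning worthwhile
    """
    if n_candidates < 1 or tuning_budget < 0:
        raise ValueError("n_candidates must be >= 1 and tuning_budget >= 0")

    is_wide = n_candidates > wide_threshold
    has_budget = tuning_budget >= min_budget

    # Wide space plus enough budget: tuning can pay off in peak performance.
    if is_wide and has_budget:
        return "ToT (HP-tuned)"
    # Otherwise prefer the fast and stable untuned start.
    return "FSP (no tuning)"


print(recommend_strategy(n_candidates=72, tuning_budget=0))
print(recommend_strategy(n_candidates=5000, tuning_budget=200))
```

The thresholds would need to be calibrated per task; the point is only that the choice depends jointly on search-space width and available tuning budget.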

5. Conclusions and Future Work

Supplementary Materials

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| (a) | ||||

| LLM | OpenAI o3 | GPT-4.1 | Gemini 2.5 Pro | |

| Selected Algorithm | Gradient Boosting Classifier | Random Forest Classifier | Random Forest Classifier | |

| Tuned Hyperparameters: | clf__n_estimators: randint(200, 600), clf__learning_rate: uniform(0.01, 0.2), clf__max_depth: randint(2, 5), clf__min_samples_split: randint(2, 20), clf__min_samples_leaf: randint(1, 20), clf__subsample: uniform(0.6, 0.4) | n_estimators: [100, 200], max_depth: [4, 6, 8, None], min_samples_split: [2, 5, 10], min_samples_leaf: [1, 2, 4], class_weight: [‘balanced’] | n_estimators: [100, 200, 300], max_depth: [None, 10, 20], min_samples_split: [2, 5, 10], min_samples_leaf: [1, 2, 4], class_weight: [None, ‘balanced’] | |

| (b) | ||||

| LLM | DeepSeek-V3 | DeepSeek-R1 | Claude Opus 4 | Claude Sonnet 4 |

| Selected Algorithm | Random Forest Classifier | Random Forest Classifier | Random Forest Classifier | Random Forest Classifier |

| Tuned Hyperparameters: | classifier__n_estimators: [100, 200, 300], classifier__max_depth: [None, 5, 10], classifier__min_samples_split: [2, 5, 10], classifier__min_samples_leaf: [1, 2, 4] | classifier__n_estimators: [100, 200, 300], classifier__max_depth: [None, 5, 10], classifier__min_samples_split: [2, 5, 10], classifier__min_samples_leaf: [1, 2, 4] | n_estimators: [100, 200, 300], max_depth: [5, 10, 15, None], min_samples_split: [2, 5, 10], min_samples_leaf: [1, 2, 4], max_features: [‘auto’, ‘sqrt’, ‘log2’] | n_estimators: [100, 200, 300], max_depth: [3, 5, 7, None], min_samples_split: [2, 5, 10], min_samples_leaf: [1, 2, 4], max_features: [‘sqrt’, ‘log2’] |
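To give a sense of how much work a list-valued search space implies, the following Python sketch counts the configurations in two of the grids from part (a) of the table above (the GPT-4.1 and Gemini 2.5 Pro columns). The grids are copied from the table; the assumption that they are searched exhaustively and the fold count `cv_folds=5` are illustrative and not stated in the table.

```python
from math import prod


def grid_size(param_grid):
    """Number of configurations in an exhaustive grid over `param_grid`."""
    return prod(len(values) for values in param_grid.values())


def total_fits(param_grid, cv_folds):
    """Model fits needed when every configuration is cross-validated."""
    return grid_size(param_grid) * cv_folds


# Grid listed for GPT-4.1 (Random Forest Classifier).
gpt41_grid = {
    "n_estimators": [100, 200],
    "max_depth": [4, 6, 8, None],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
    "class_weight": ["balanced"],
}

# Grid listed for Gemini 2.5 Pro (Random Forest Classifier).
gemini_grid = {
    "n_estimators": [100, 200, 300],
    "max_depth": [None, 10, 20],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
    "class_weight": [None, "balanced"],
}

for name, grid in [("GPT-4.1", gpt41_grid), ("Gemini 2.5 Pro", gemini_grid)]:
    # cv_folds=5 is an assumed value for illustration only.
    print(name, grid_size(grid), total_fits(grid, cv_folds=5))
```

Under these assumptions the two grids contain 72 and 162 configurations respectively, which shows how a few extra values per hyperparameter can more than double the tuning cost.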

| (a) | ||||

| LLM | OpenAI o3 | GPT-4.1 | Gemini 2.5 Pro | |

| Selected Algorithm | Random Forest Classifier | Random Forest Classifier | Random Forest Classifier | |

| Tuned Hyperparameters: | clf__n_estimators: [100, 200, 300] clf__max_depth: [None, 10, 20] clf__min_samples_split: [2, 5] | n_estimators: [100, 200, 300, 400] max_depth: [3, 5, 7, 9, None] min_samples_split: [2, 5, 10] min_samples_leaf: [1, 2, 4] max_features: [‘sqrt’, ‘log2’] | n_estimators: [100, 200] max_depth: [5, 10, None] min_samples_split: [2, 5] min_samples_leaf: [1, 2] | |

| (b) | ||||

| LLM | DeepSeek-V3 | DeepSeek-R1 | Claude Opus 4 | Claude Sonnet 4 |

| Selected Algorithm | Random Forest Classifier | Random Forest Classifier | Random Forest Classifier | Random Forest Classifier |

| Tuned Hyperparameters: | classifier__n_estimators: [100, 200] classifier__max_depth: [None, 10, 20] classifier__min_samples_split: [2, 5] | classifier__n_estimators: [100, 200] classifier__max_depth: [5, 10, None] classifier__min_samples_split: [2, 5] classifier__min_samples_leaf: [1, 2] | n_estimators: [100, 200, 300] max_depth: [5, 10, 15, None] min_samples_split: [2, 5, 10] min_samples_leaf: [1, 2, 4] max_features: [‘sqrt’, ‘log2’] | n_estimators: [100, 200, 300] max_depth: [3, 5, 7, 10, None] min_samples_split: [2, 5, 10] min_samples_leaf: [1, 2, 4] max_features: [‘sqrt’, ‘log2’, None] |

| (a) | ||||

| LLM | OpenAI o3 | GPT-4.1 | Gemini 2.5 Pro | |

| Selected Algorithm | XGBRegressor | Blending LightGBM + XGBoost + CatBoost | Blending LGBMRegressor + XGBRegressor + CatBoostRegressor | |

| Tuned Hyperparameters: | model__n_estimators: randint(800, 1600) model__max_depth: randint(3, 7) model__learning_rate: uniform(0.01, 0.09) model__subsample: uniform(0.6, 0.4) model__colsample_bytree: uniform(0.6, 0.4) model__reg_alpha: uniform(0.0, 0.6) model__reg_lambda: uniform(0.3, 1.0) | # LightGBM objective: “regression” metric: “rmse” random_state: SEED learning_rate: trial.suggest_float(“learning_rate”, 0.01, 0.2) num_leaves: trial.suggest_int(“num_leaves”, 20, 80) feature_fraction: trial.suggest_float(“feature_fraction”, 0.6, 1.0) bagging_fraction: trial.suggest_float(“bagging_fraction”, 0.6, 1.0) bagging_freq: trial.suggest_int(“bagging_freq”, 1, 7) min_child_samples: trial.suggest_int(“min_child_samples”, 5, 30) # XGBoost objective: “reg:squarederror” tree_method: “hist” random_state: SEED learning_rate: trial.suggest_float(“learning_rate”, 0.01, 0.2) max_depth: trial.suggest_int(“max_depth”, 3, 10) subsample: trial.suggest_float(“subsample”, 0.6, 1.0) colsample_bytree: trial.suggest_float(“colsample_bytree”, 0.6, 1.0) min_child_weight: trial.suggest_int(“min_child_weight”, 1, 10) # CatBoost loss_function: “RMSE” random_seed: SEED learning_rate: trial.suggest_float(“learning_rate”, 0.01, 0.2) depth: trial.suggest_int(“depth”, 3, 10) l2_leaf_reg: trial.suggest_float(“l2_leaf_reg”, 1, 10) bagging_temperature: trial.suggest_float(“bagging_temperature”, 0, 1) border_count: trial.suggest_int(“border_count”, 32, 255) verbose: 0 | # LightGBM num_leaves: 31 learning_rate: 0.05 n_estimators: 720 max_bin: 55 bagging_fraction: 0.8 bagging_freq: 5 feature_fraction: 0.2319 feature_fraction_seed: 9 bagging_seed: 9 min_data_in_leaf: 6 min_sum_hessian_in_leaf: 11 random_state: SEED n_jobs: −1 # XGBoost learning_rate: 0.05 n_estimators: 600 max_depth: 3 min_child_weight: 0 gamma: 0 subsample: 0.7 colsample_bytree: 0.7 reg_alpha: 0.005 random_state: SEED n_jobs: −1 # CatBoost iterations: 1000 learning_rate: 0.05 depth: 3 l2_leaf_reg: 4 loss_function: ‘RMSE’ eval_metric: ‘RMSE’ random_seed: SEED verbose: 0 | |

| (b) | ||||

| LLM | DeepSeek-V3 | DeepSeek-R1 | Claude Opus 4 | Claude Sonnet 4 |

| Selected Algorithm | XGBRegressor + LGBMRegressor + CatBoostRegressor | XGBRegressor + LGBMRegressor + CatBoostRegressor + Ridge | Meta Model: Ridge; XGBRegressor + LGBMRegressor + CatBoostRegressor | LGBMRegressor + XGBRegressor + CatBoostRegressor |

| Tuned Hyperparameters: | # XGBRegressor: n_estimators: 100, 2000 max_depth: 3, 12 learning_rate: 0.001, 0.1, log = True subsample: 0.6, 1.0 colsample_bytree: 0.6, 1.0 reg_alpha: 0, 10 reg_lambda: 0, 10 The LGBMRegressor and CatBoostRegressor models are trained directly with their default parameters. | # xgb: objective: reg:squarederror n_estimators: 1000 learning_rate: 0.01 max_depth: 3 subsample: 0.8 colsample_bytree: 0.4 random_state: 42 # lgbm: objective: regression n_estimators: 1000 learning_rate: 0.01 max_depth: 3 subsample: 0.8 colsample_bytree: 0.4 random_state: 42 # catboost: iterations: 1000 learning_rate: 0.01 depth: 3 subsample: 0.8 colsample_bylevel: 0.4 random_seed: 42 verbose: 0 # ridge: alpha: 10 random_state: 42 | # XGB n_estimators: trial.suggest_int(‘n_estimators’, 100, 1000) max_depth: trial.suggest_int(‘max_depth’, 3, 10) learning_rate: trial.suggest_float(‘learning_rate’, 0.01, 0.3) subsample: trial.suggest_float(‘subsample’, 0.6, 1.0) colsample_bytree: trial.suggest_float(‘colsample_bytree’, 0.6, 1.0) reg_alpha: trial.suggest_float(‘reg_alpha’, 0, 10) reg_lambda: trial.suggest_float(‘reg_lambda’, 0, 10) random_state: 42 # LGBM n_estimators: trial.suggest_int(‘n_estimators’, 100, 1000) max_depth: trial.suggest_int(‘max_depth’, 3, 10) learning_rate: trial.suggest_float(‘learning_rate’, 0.01, 0.3) num_leaves: trial.suggest_int(‘num_leaves’, 20, 300) feature_fraction: trial.suggest_float(‘feature_fraction’, 0.5, 1.0) bagging_fraction: trial.suggest_float(‘bagging_fraction’, 0.5, 1.0) bagging_freq: trial.suggest_int(‘bagging_freq’, 1, 7) reg_alpha: trial.suggest_float(‘reg_alpha’, 0, 10) reg_lambda: trial.suggest_float(‘reg_lambda’, 0, 10) random_state: 42 verbosity: −1 # CatBoost iterations: trial.suggest_int(‘iterations’, 100, 1000) depth: trial.suggest_int(‘depth’, 4, 10) learning_rate: trial.suggest_float(‘learning_rate’, 0.01, 0.3) l2_leaf_reg: trial.suggest_float(‘l2_leaf_reg’, 1, 10) random_seed: 42 verbose: False | # LGBM objective: “regression” metric: “rmse” boosting_type: “gbdt” num_leaves: trial.suggest_int(“num_leaves”, 10, 300) learning_rate: trial.suggest_float(“learning_rate”, 0.01, 0.3) feature_fraction: trial.suggest_float(“feature_fraction”, 0.4, 1.0) bagging_fraction: trial.suggest_float(“bagging_fraction”, 0.4, 1.0) bagging_freq: trial.suggest_int(“bagging_freq”, 1, 7) min_child_samples: trial.suggest_int(“min_child_samples”, 5, 100) verbosity: −1 random_state: 42 # XGB n_estimators: 1000 learning_rate: 0.05 max_depth: 6 random_state: 42 # CatBoost iterations: 1000 learning_rate: 0.05 depth: 6 verbose: False random_state: 42 |
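The OpenAI o3 column in part (a) of the table above writes its search space with `randint(...)` and `uniform(...)`. Assuming these denote `scipy.stats.randint` and `scipy.stats.uniform` (the usual companions of scikit-learn's randomized search; the table itself does not say so), the second argument of `uniform` is a width rather than an upper bound, and the upper bound of `randint` is exclusive. The sketch below, which uses only the standard library and hypothetical helper names, makes the effective ranges explicit.

```python
import random

# Search space as listed for OpenAI o3 (XGBRegressor), stored as
# (kind, first_arg, second_arg). Assumed semantics (scipy.stats style):
#   uniform(loc, scale) -> values in [loc, loc + scale]
#   randint(low, high)  -> integers in [low, high - 1]
o3_space = {
    "model__n_estimators": ("randint", 800, 1600),
    "model__max_depth": ("randint", 3, 7),
    "model__learning_rate": ("uniform", 0.01, 0.09),
    "model__subsample": ("uniform", 0.6, 0.4),
    "model__colsample_bytree": ("uniform", 0.6, 0.4),
    "model__reg_alpha": ("uniform", 0.0, 0.6),
    "model__reg_lambda": ("uniform", 0.3, 1.0),
}


def effective_range(spec):
    """Return the inclusive (low, high) range implied by a spec."""
    kind, a, b = spec
    if kind == "uniform":
        return (a, a + b)
    if kind == "randint":
        return (a, b - 1)
    raise ValueError(f"unknown distribution kind: {kind}")


def sample(spec, rng):
    """Draw one value with the same semantics, using only the stdlib."""
    kind, a, b = spec
    if kind == "uniform":
        return rng.uniform(a, a + b)
    if kind == "randint":
        return rng.randrange(a, b)
    raise ValueError(f"unknown distribution kind: {kind}")


rng = random.Random(0)
for name, spec in o3_space.items():
    low, high = effective_range(spec)
    print(f"{name}: range [{low}, {high}], example draw {sample(spec, rng)}")
```

Read this way, `uniform(0.6, 0.4)` covers subsample ratios from 0.6 to 1.0, and `uniform(0.3, 1.0)` covers `reg_lambda` values from 0.3 to 1.3 rather than 0.3 to 1.0.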

| (a) | ||||

| LLM | OpenAI o3 | GPT-4.1 | Gemini 2.5 Pro | |

| Selected Algorithm | LGBMRegressor | Lasso + XGBRegressor | Ridge + LGBMRegressor | |

| Tuned Hyperparameters: | num_leaves: [31, 64] learning_rate: [0.05, 0.1] n_estimators: [500, 1000] max_depth: [−1, 10] | # Lasso alpha: np.logspace(−4, 0, 40) # XGB learning_rate: [0.01, 0.03] max_depth: [3, 4] n_estimators: [300, 500] reg_alpha: [0, 0.1] reg_lambda: [0.7, 1.0] subsample: [0.7, 1.0] | # Model 1—Ridge Regression (non-tuned) # Model 2—LightGBM objective: “regression” num_leaves: 31 learning_rate: 0.05 n_estimators: 720 max_bin: 55 bagging_fraction: 0.8 bagging_freq: 5 feature_fraction: 0.2319 feature_fraction_seed: 9 bagging_seed: 9 min_data_in_leaf: 6 min_sum_hessian_in_leaf: 11 random_state: RANDOM_SEED n_jobs: −1 | |

| (b) | ||||

| LLM | DeepSeek-V3 | DeepSeek-R1 | Claude Opus 4 | Claude Sonnet 4 |

| Selected Algorithm | LGBMRegressor + XGBRegressor + CatBoostRegressor | LGBMRegressor | Ridge + Lasso + ElasticNet + XGBoost + LightGBM | XGBoost + LightGBM + Ridge + ElasticNet + Random Forest |

| Tuned Hyperparameters: | # LGBMRegressor n_estimators: trial.suggest_int(‘n_estimators’, 100, 2000) max_depth: trial.suggest_int(‘max_depth’, 3, 12) learning_rate: trial.suggest_float(‘learning_rate’, 0.001, 0.1) subsample: trial.suggest_float(‘subsample’, 0.6, 1.0) colsample_bytree: trial.suggest_float(‘colsample_bytree’, 0.6, 1.0) | n_estimators: (100, 1000) max_depth: (3, 10) learning_rate: (0.001, 0.1, ‘log-uniform’) num_leaves: (10, 100) min_child_samples: (5, 50) subsample: (0.6, 1.0) colsample_bytree: (0.6, 1.0) | # XGBoost max_depth: [3, 4, 5] learning_rate: [0.01, 0.05, 0.1] n_estimators: [300, 500] subsample: [0.8] colsample_bytree: [0.8] # LightGBM num_leaves: [20, 31, 40] learning_rate: [0.01, 0.05, 0.1] n_estimators: [300, 500] subsample: [0.8] colsample_bytree: [0.8] # Ridge Regression alpha: [0.1, 0.5, 1, 5, 10, 20, 50] # Lasso alpha: [0.0001, 0.0005, 0.001, 0.005, 0.01] # ElasticNet alpha: [0.0001, 0.0005, 0.001, 0.005] l1_ratio: [0.3, 0.5, 0.7, 0.9] | # XGBoost n_estimators = 1000, max_depth = 3, learning_rate = 0.05, subsample = 0.8, colsample_bytree = 0.8, reg_alpha = 0.05, reg_lambda = 0.05 # LightGBM n_estimators = 1000, max_depth = 3, learning_rate = 0.05, subsample = 0.8, colsample_bytree = 0.8, reg_alpha = 0.05, reg_lambda = 0.05, verbosity = −1 # Ridge Regression alpha = 10.0 # ElasticNet alpha = 0.005, l1_ratio = 0.9, max_iter = 1000 # Random Forest n_estimators = 300, max_depth = 15, n_jobs = −1 |
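The DeepSeek-R1 column above specifies its learning rate as `(0.001, 0.1, ‘log-uniform’)`. The following standard-library sketch illustrates what a log-uniform prior changes compared with a plain uniform one over the same interval; the function name `sample_log_uniform`, the seed, and the sample size are illustrative assumptions.

```python
import math
import random


def sample_log_uniform(low, high, rng):
    """Draw a value whose logarithm is uniform on [log(low), log(high)]."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


LOW, HIGH = 0.001, 0.1          # learning-rate bounds from the table
N_SAMPLES = 10000               # assumed sample size for the illustration
rng = random.Random(0)          # fixed seed so the run is repeatable

log_draws = [sample_log_uniform(LOW, HIGH, rng) for _ in range(N_SAMPLES)]
uni_draws = [rng.uniform(LOW, HIGH) for _ in range(N_SAMPLES)]

# 0.01 is the geometric midpoint of the interval (sqrt(0.001 * 0.1)).
midpoint = math.sqrt(LOW * HIGH)
frac_log = sum(x < midpoint for x in log_draws) / N_SAMPLES
frac_uni = sum(x < midpoint for x in uni_draws) / N_SAMPLES

print(f"share of draws below {midpoint:.3f}: "
      f"log-uniform={frac_log:.2f}, uniform={frac_uni:.2f}")
```

With a log-uniform prior, about half of the draws fall below 0.01, whereas a plain uniform prior places only about 9% of them there. This is why log scaling is commonly preferred for learning rates that span several orders of magnitude.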

| (a) | ||||

| LLM | OpenAI o3 | GPT-4.1 | Gemini 2.5 Pro | |

| Selected Algorithm | CNN | CNN | CNN | |

| Tuned Hyperparameters: | conv1: [32, 48] conv2: [64, 96] dense: [128, 256] dropout: [0.2, 0.3, 0.4] epochs: [12, 15, 20] batch_size: [64, 128, 256] | conv1_filters: [32, 64] conv2_filters: [64, 128] dense_units: [128, 256] dropout_rate: [0.2, 0.3] batch_size: [64, 128] epochs: [12, 15] | conv_filters: [32, 64] dropout_rates: [0.25, 0.5] | |

| (b) | ||||

| LLM | DeepSeek-V3 | DeepSeek-R1 | Claude Opus 4 | Claude Sonnet 4 |

| Selected Algorithm | CNN | CNN | CNN | CNN + Random Forest Classifier |

| Tuned Hyperparameters: | learning_rate: [0.001, 0.0005, 0.0001] batch_size: [64, 128, 256] epochs: [30, 40, 50] dropout_rate: [0.3, 0.4, 0.5] | dropout_rate: [0.2, 0.3, 0.4] optimizer: [‘adam’, ‘rmsprop’] batch_size: [64, 128] epochs: [20] | epochs: [20, 30] batch_size: [64, 128] model__learning_rate: [0.001, 0.0005] | # CNN epochs: [5, 10, 15] batch_size: [64, 128, 256] model__optimizer: [‘adam’, ‘rmsprop’] # Random Forest n_estimators: [100, 200, 300] max_depth: [10, 20, None] min_samples_split: [2, 5, 10] |

| (a) | ||||

| LLM | OpenAI o3 | GPT-4.1 | Gemini 2.5 Pro | |

| Selected Algorithm | Medium CNN | Simple CNN | CNN | |

| Tuned Hyperparameters: | lr: [1e-4, 5e-3] bs: [64, 128, 256] | lr: [1e-4, 5e-2] batch_size: [64, 128, 256] | lr: [1e-4, 1e-2] dropout_rate: [0.1, 0.6] | |

| (b) | ||||

| LLM | DeepSeek-V3 | DeepSeek-R1 | Claude Opus 4 | Claude Sonnet 4 |

| Selected Algorithm | CNN | CNN | Simple CNN | CNN |

| Tuned Hyperparameters: | learning_rate: 0.0003 epochs: 10 batch_size: 64 | units: [128, 256] dropout: [0.3, 0.4, 0.5] lr: [0.001, 0.0005, 0.0001] | learning_rate: 5e-4 batch_size_train: 128 batch_size_valtest: 256 epochs: 30 | batch_size: 128 learning_rate: 0.0005 epochs: 30 |

| (a) | ||||

| LLM | OpenAI o3 | GPT-4.1 | Gemini 2.5 Pro | |

| Selected Algorithm | TF-IDF + Logistic Regression | TF-IDF + Stacking Classifier | TF-IDF + Logistic Regression | |

| Tuned Hyperparameters: | clf__C: [0.5, 1, 2, 5] | lr__C: [0.5, 1, 2] lgbm__num_leaves: [15, 31] lgbm__learning_rate: [0.05, 0.1] final_estimator__C: [0.5, 1, 2] | # LogisticRegression C: 1.0 solver: ‘liblinear’ random_state: 42 # TfidfVectorizer ngram_range: (1, 2) max_features: 15000 | |

| (b) | ||||

| LLM | DeepSeek-V3 | DeepSeek-R1 | Claude Opus 4 | Claude Sonnet 4 |

| Selected Algorithm | TF-IDF + Logistic Regression | TF-IDF + Logistic Regression | TF-IDF + Logistic Regression/SVM/LGBMClassifier | TF-IDF + Logistic Regression + SVM |

| Tuned Hyperparameters: | clf__C: [0.1, 1, 10] clf__penalty: [‘l1’, ‘l2’] clf__solver: [‘liblinear’] | # TfidfVectorizer ngram_range: [(1, 1), (1, 2)] max_features: [5000, 10000] # LogisticRegression C: [0.1, 1, 10] solver: [‘liblinear’, ‘saga’] | # Logistic Regression (pipe_lr) clf__C: [0.1, 0.5, 1.0, 2.0, 5.0] clf__penalty: [‘l2’] clf__max_iter: [500] # SVM (pipe_svm) clf__C: [0.1, 1.0, 10.0] clf__kernel: [‘rbf’, ‘linear’] clf__gamma: [‘scale’, ‘auto’] # LightGBM (pipe_lgb) clf__n_estimators: [100, 200, 300] clf__num_leaves: [31, 50, 100] clf__learning_rate: [0.05, 0.1, 0.2] clf__min_child_samples: [20, 30] | # LogisticRegression C: [0.1, 1, 10] # SVC C: [0.1, 1, 10] kernel: [‘linear’, ‘rbf’] |

| (a) | ||||

| LLM | OpenAI o3 | GPT-4.1 | Gemini 2.5 Pro | |

| Selected Algorithm | TF-IDF + Logistic Regression | TF-IDF + Logistic Regression | TF-IDF + Logistic Regression | |

| Tuned Hyperparameters: | C: [1, 2, 4, 8] | C: [0.3, 1, 3] penalty: [‘l2’] | C: trial.suggest_loguniform(‘C’, 1e-1, 1e1) solver: ‘liblinear’ penalty: ‘l2’ random_state: 42 | |

| (b) | ||||

| LLM | DeepSeek-V3 | DeepSeek-R1 | Claude Opus 4 | Claude Sonnet 4 |

| Selected Algorithm | TF-IDF + Logistic Regression | TF-IDF + Logistic Regression | TF-IDF + XGBClassifier | XGBoost + SVM + Multinomial Naive Bayes |

| Tuned Hyperparameters: | C: [0.1, 1, 10] penalty: [‘l1’, ‘l2’] solver: [‘liblinear’] | C: [0.1, 1, 10] class_weight: [None, ‘balanced’] | n_estimators: [100, 500] max_depth: [3, 9] learning_rate: [0.01, 0.3] subsample: [0.6, 1.0] colsample_bytree: [0.6, 1.0] min_child_weight: [1, 4] gamma: [0, 0.5] | # XGB max_depth: [4, 6, 8] learning_rate: [0.05, 0.1, 0.15] n_estimators: [100, 200] # SVM C: [0.1, 1, 10] gamma: [‘scale’, ‘auto’] # Naive Bayes alpha: [0.01, 0.1, 1.0] |

| LLM | GPT-5 Thinking | Gemini 2.5 Pro | DeepSeek-V3 |

|---|---|---|---|

| Selected Algorithm | CatBoost/XGBoost/Ridge | LightGBM | LightGBM |

| Tuned Hyperparameters: | # CatBoost depth: [4, 5, 6, 7, 8, 9, 10] learning_rate: [0.03, 0.05, 0.07, 0.10] l2_leaf_reg: [1, 3, 5, 7, 10, 15, 20, 30] bagging_temperature: [0.0, 0.25, 0.5, 1.0] random_strength: [0.5, 1.0, 1.5, 2.0] grow_policy: [‘SymmetricTree’, ‘Depthwise’, ‘Lossguide’] border_count: [64, 128, 254] min_data_in_leaf: [1, 5, 10, 20, 50] n_estimators: [2000, 4000, 8000] # XGBoost xgb__n_estimators: [400, 600, 800, 1200, 1600] xgb__max_depth: [3, 4, 5, 6, 7, 8, 9, 10] xgb__learning_rate: [0.03, 0.05, 0.07, 0.10, 0.15, 0.20] xgb__subsample: [0.6, 0.7, 0.8, 0.9, 1.0] xgb__colsample_bytree: [0.6, 0.7, 0.8, 0.9, 1.0] xgb__min_child_weight: [1, 2, 3, 5, 7, 10] xgb__reg_alpha: [0.0, 1e-8, 1e-6, 1e-4, 1e-3, 1e-2, 1e-1] xgb__reg_lambda: [0.1, 0.5, 1.0, 2.0, 5.0, 10.0] # Ridge rg__alpha: list(np.logspace(−2, 3, 30)) # 0.01 … 1000 | # LightGBM objective: ‘regression_l1’ metric: ‘rmse’ boosting_type: ‘gbdt’ random_state: 42 n_jobs: −1 verbose: −1 n_estimators: int [200, 2000] learning_rate: float [0.01, 0.3] num_leaves: int [20, 300] max_depth: int [3, 12] min_child_samples: int [5, 100] subsample: float [0.6, 1.0] colsample_bytree: float [0.6, 1.0] reg_alpha: logfloat [1e-8, 10.0] reg_lambda: logfloat [1e-8, 10.0] | # LightGBM objective: ‘regression’ metric: ‘mae’ verbosity: −1 boosting_type: ‘gbdt’ random_state: 42 n_estimators: int [100, 1000] learning_rate: logfloat [0.01, 0.3] num_leaves: int [20, 300] max_depth: int [3, 12] min_child_samples: int [5, 100] subsample: float [0.5, 1.0] colsample_bytree: float [0.5, 1.0] reg_alpha: logfloat [1e-8, 10.0] reg_lambda: logfloat [1e-8, 10.0] |

| LLM | GPT-5 Thinking | Gemini 2.5 Pro | DeepSeek-V3 |

|---|---|---|---|

| Selected Algorithm | Ridge (blender, α = 1.0, OOF-based ensemble) | LightGBM | XGBoost |

| Tuned Hyperparameters: | # Ridge alpha: float [1e-3, 1e3] random_state: 42 # ElasticNet alpha: float [1e-3, 1e3] l1_ratio: float [0.01, 0.99] random_state: 42 # ExtraTrees n_estimators: int [200, 800] max_depth: {None, 8, 12, 16, 24} min_samples_split: int [2, 20] min_samples_leaf: int [1, 12] max_features: {“sqrt”, “auto”, 0.5, 0.8} random_state: 42 # HistGradientBoostingRegressor max_depth: int [3, 10] learning_rate: float [0.01, 0.3] l2_regularization: float [1e-8, 10.0] max_leaf_nodes: int [15, 63] random_state: 42 # LightGBM num_leaves: int [15, 255] feature_fraction: float [0.5, 1.0] bagging_fraction: float [0.5, 1.0] bagging_freq: int [0, 7] min_data_in_leaf: int [10, 200] lambda_l1: float [1e-3, 10.0] lambda_l2: float [1e-3, 10.0] learning_rate: float [0.01, 0.2] random_state: 42 # XGBoost max_depth: int [3, 10] min_child_weight: int [1, 20] subsample: float [0.5, 1.0] colsample_bytree: float [0.5, 1.0] reg_alpha: float [1e-3, 10.0] reg_lambda: float [1e-3, 10.0] eta: float [0.01, 0.2] random_state: 42 # CatBoost depth: int [4, 10] learning_rate: float [0.01, 0.2] l2_leaf_reg: float [1.0, 15.0] bagging_temperature: float [0.0, 5.0] random_state: 42 | # LightGBM objective: ‘regression_l1’ metric: ‘rmse’ n_estimators: 2000 learning_rate: 0.01 feature_fraction: 0.8 bagging_fraction: 0.8 bagging_freq: 1 lambda_l1: 0.1 lambda_l2: 0.1 num_leaves: 31 verbose: −1 n_jobs: −1 seed: RANDOM_SEED boosting_type: ‘gbdt’ | # XGBoost n_estimators: int [100, 1000] max_depth: int [3, 10] learning_rate: [0.01, 0.3] subsample: float [0.6, 1.0] colsample_bytree: float [0.6, 1.0] reg_alpha: float [0, 1.0] reg_lambda: float [0, 1.0] random_state: 42 |

References

- Gençtürk, T.H.; Gülağiz, F.K.; Kaya, İ. Detection and segmentation of subdural hemorrhage on head CT images. IEEE Access 2024, 12, 82235–82246. [Google Scholar] [CrossRef]

- Laney, D. 3D Data Management: Controlling Data Volume, Velocity and Variety; META Group Research Note; META Group: Stamford, CT, USA, 2001. [Google Scholar]

- Gandomi, A.; Haider, M. Beyond the hype: Big data concepts, methods, and analytics. Int. J. Inf. Manag. 2015, 35, 137–144. [Google Scholar] [CrossRef]

- Du, X.; Liu, M.; Wang, K.; Wang, H.; Liu, J.; Chen, Y.; Feng, J.; Sha, C.; Peng, X.; Lou, Y. Evaluating large language models in class-level code generation. In Proceedings of the IEEE/ACM 46th International Conference on Software Engineering, New York, NY, USA, 14–20 April 2024; pp. 1–13. [Google Scholar] [CrossRef]

- Li, J.; Li, G.; Zhang, X.; Zhao, Y.; Dong, Y.; Jin, Z.; Li, B.; Huang, F.; Li, Y. EvoCodeBench: An Evolving Code Generation Benchmark with Domain-Specific Evaluations. Adv. Neural Inf. Process. Syst. 2024, 37, 57619–57641. [Google Scholar] [CrossRef]

- Fakhoury, S.; Naik, A.; Sakkas, G.; Chakraborty, S.; Lahiri, S.K. Llm-based test-driven interactive code generation: User study and empirical evaluation. IEEE Trans. Softw. Eng. 2024, 50, 2254–2268. [Google Scholar] [CrossRef]

- Coignion, T.; Quinton, C.; Rouvoy, R. A performance study of llm-generated code on leetcode. In Proceedings of the 28th international conference on evaluation and assessment in software engineering, Salerno, Italy, 18–21 June 2024; pp. 79–89. [Google Scholar] [CrossRef]

- Tambon, F.; Dakhel, A.M.; Nikanjam, A.; Khomh, F.; Desmarais, M.C.; Antoniol, G. Bugs in Large Language Models Generated Code: An Empirical Study. Empir. Software Eng. 2025, 30, 65. [Google Scholar] [CrossRef]

- Li, J.; Li, G.; Li, Y.; Jin, Z. Structured Chain-of-Thought prompting for code generation. ACM Trans. Softw. Eng. Methodol. 2025, 34, 37. [Google Scholar] [CrossRef]

- Khojah, R.; de Oliveira Neto, F.G.; Mohamad, M.; Leitner, P. The impact of prompt programming on function-level code generation. IEEE Trans. Softw. Eng. 2025, 51, 2381–2395. [Google Scholar] [CrossRef]

- Yang, G.; Zhou, Y.; Chen, X.; Zhang, X.; Zhuo, T.Y.; Chen, T. Chain-of-thought in neural code generation: From and for lightweight language models. IEEE Trans. Softw. Eng. 2024, 50, 2437–2457. [Google Scholar] [CrossRef]

- Chen, B.; Zhang, Z.; Langrené, N.; Zhu, S. Unleashing the potential of prompt engineering for large language models. Patterns 2025, 6, 101260. [Google Scholar] [CrossRef]

- Yao, J.; Zhang, L.; Huang, J. Evaluation of Large Language Model-Driven AutoML in Data and Model Management from Human-Centered Perspective. Front. Artif. Intell. Sec. Nat. Lang. Process. 2025, 8, 1590105. [Google Scholar] [CrossRef]

- Fathollahzadeh, S.; Mansour, E.; Boehm, M. Demonstrating CatDB: LLM-based Generation of Data-centric ML Pipelines. In Proceedings of the Companion of the 2025 International Conference on Management of Data, New York, NY, USA, 22–27 June 2025; pp. 87–90. [Google Scholar] [CrossRef]

- Zhao, Y.; Pang, J.; Zhu, X.; Shao, W. LLM-Prompting Driven AutoML: From Sleep Disorder—Classification to Beyond. Trans. Artif. Intell. 2025, 1, 59–82. [Google Scholar] [CrossRef]

- Mulakala, B.; Saini, M.L.; Singh, A.; Bhukya, V.; Mukhopadhyay, A. Adaptive multi-fidelity hyperparameter optimization in large language models. In Proceedings of the 2024 8th International Conference on Computational System and Information Technology for Sustainable Solutions (CSITSS), Bengaluru, India, 7–9 November 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5. [Google Scholar] [CrossRef]

- Zhang, M.R.; Desai, N.; Bae, J.; Lorraine, J.; Ba, J. Using large language models for hyperparameter optimization. In Proceedings of the NeurIPS 2023 Workshop, New Orleans, LA, USA, 16 December 2023. [Google Scholar] [CrossRef]

- Wang, L.; Shi, C.; Du, S.; Tao, Y.; Shen, Y.; Zheng, H.; Qiu, X. Performance Review on LLM for solving leetcode problems. In Proceedings of the 2024 4th International Symposium on Artificial Intelligence and Intelligent Manufacturing (AIIM), Chengdu, China, 20–22 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1050–1054. [Google Scholar] [CrossRef]

- Jain, R.; Thanvi, J.; Subasinghe, A. The evolution of ChatGPT for programming: A comparative study. Eng. Res. Express 2025, 7, 015242. [Google Scholar] [CrossRef]

- Döderlein, J.B.; Kouadio, N.H.; Acher, M.; Khelladi, D.E.; Combemale, B. Piloting Copilot, Codex, and StarCoder2: Hot temperature, cold prompts, or black magic? J. Syst. Softw. 2025, 230, 112562. [Google Scholar] [CrossRef]

- Jamil, M.T.; Abid, S.; Shamail, S. Can LLMs Generate Higher Quality Code Than Humans? An Empirical Study. In Proceedings of the 2025 IEEE/ACM 22nd International Conference on Mining Software Repositories (MSR), Ottawa, ON, Canada, 28–29 April 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 478–489. [Google Scholar] [CrossRef]

- Mathews, N.S.; Nagappan, M. Test-driven development and llm-based code generation. In Proceedings of the 39th IEEE/ACM International Conference on Automated Software Engineering, Sacramento, CA, USA, 27 October–1 November 2024; pp. 1583–1594. [Google Scholar] [CrossRef]

- Ko, E.; Kang, P. Evaluating Coding Proficiency of Large Language Models: An Investigation Through Machine Learning Problems. IEEE Access 2025, 13, 52925–52938. [Google Scholar] [CrossRef]

- Radford, A.; Narasimhan, K. Improving Language Understanding by Generative Pre-Training. OpenAI. 2018. Available online: https://api.semanticscholar.org/CorpusID:49313245 (accessed on 3 August 2025).

- OpenAI. Introducing OpenAI o3 and o4-Mini. OpenAI. 2025. Available online: https://openai.com/index/introducing-o3-and-o4-mini (accessed on 3 August 2025).

- OpenAI. Introducing GPT-4.1 in the API. OpenAI. 2025. Available online: https://openai.com/index/gpt-4-1/ (accessed on 3 August 2025).

- OpenAI. ChatGPT Release Notes. OpenAI Help Center. 2025. Available online: https://help.openai.com/en/articles/6825453-chatgpt-release-notes (accessed on 3 August 2025).

- Comanici, G.; Bieber, E.; Schaekermann, M.; Pasupat, I.; Sachdeva, N.; Dhillon, I.; Blistein, M.; Ram, O.; Zhang, D.; Rosen, E.; et al. Gemini 2.5: Pushing the frontier with advanced reasoning, multimodality, long context, and next generation agentic capabilities. arXiv 2025, arXiv:2507.06261. [Google Scholar] [CrossRef]

- DeepSeek-AI. Deepseek-AI/Organization Card. Hugging Face. Available online: https://huggingface.co/deepseek-ai (accessed on 3 August 2025).

- DeepSeek-AI; Liu, A.; Feng, B.; Xue, B.; Wang, B.; Wu, B.; Lu, C.; Zhao, C.; Deng, C.; Zhang, C.; et al. Deepseek-v3 technical report. arXiv 2024, arXiv:2412.19437. [Google Scholar] [CrossRef]

- DeepSeek-AI; Guo, D.; Yang, D.; Zhang, H.; Song, J.; Zhang, R.; Xu, R.; Zhu, Q.; Ma, S.; Wang, P.; et al. Deepseek-r1: Incentivizing reasoning capability in LLMs via reinforcement learning. arXiv 2025, arXiv:2501.12948. [Google Scholar] [CrossRef]

- Anthropic. Introducing Claude. 2023. Available online: https://www.anthropic.com/index/introducing-claude (accessed on 3 August 2025).

- Anthropic. System Card: Claude Opus 4 & Claude Sonnet 4. Anthropic. 2025. Available online: https://www-cdn.anthropic.com/4263b940cabb546aa0e3283f35b686f4f3b2ff47.pdf (accessed on 3 August 2025).

- Anthropic. Introducing Claude 4. Anthropic. 2025. Available online: https://www.anthropic.com/news/claude-4 (accessed on 3 August 2025).

- Tonmoy, S.M.; Zaman, S.M.; Jain, V.; Rani, A.; Rawte, V.; Chadha, A.; Das, A. A comprehensive survey of hallucination mitigation techniques in large language models. arXiv 2024, arXiv:2401.01313. [Google Scholar] [CrossRef]

- Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-train, prompt, and predict: A systematic survey of prompting methods in natural language processing. ACM Comput. Surv. 2023, 55, 195. [Google Scholar] [CrossRef]

- Sahoo, P.; Singh, A.K.; Saha, S.; Jain, V.; Mondal, S.; Chadha, A. A systematic survey of prompt engineering in large language models: Techniques and applications. arXiv 2024, arXiv:2402.07927. [Google Scholar] [CrossRef]

- Tafesse, W.; Wood, B. Hey ChatGPT: An examination of ChatGPT prompts in marketing. J. Mark. Anal. 2024, 12, 790–805. [Google Scholar] [CrossRef]

- Chang, Y.; Wang, X.; Wang, J.; Wu, Y.; Yang, L.; Zhu, K.; Chen, H.; Yi, X.; Wang, C.; Wang, Y.; et al. A survey on evaluation of large language models. ACM Trans. Intell. Syst. Technol. 2024, 15, 39. [Google Scholar] [CrossRef]

- Lee, Y.; Oh, J.H.; Lee, D.; Kang, M.; Lee, S. Prompt engineering in ChatGPT for literature review: Practical guide exemplified with studies on white phosphors. Sci. Rep. 2025, 15310. [Google Scholar] [CrossRef] [PubMed]

- Debnath, T.; Siddiky, M.N.A.; Rahman, M.E.; Das, P.; Guha, A.K. A comprehensive survey of prompt engineering techniques in large language models. TechRxiv 2025.

- Phoenix, J.; Taylor, M. Prompt Engineering for Generative AI; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2024.

- Saint-Jean, D.; Al Smadi, B.; Raza, S.; Linton, S.; Igweagu, U. A Study of Prompt Engineering Techniques for Code Generation: Focusing on Data Science Applications. In International Conference on Information Technology-New Generations; Springer Nature: Cham, Switzerland, 2025; pp. 445–453.

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. arXiv 2020, arXiv:2005.14165.

- Bareiß, P.; Souza, B.; d’Amorim, M.; Pradel, M. Code generation tools (almost) for free? A study of few-shot, pre-trained language models on code. arXiv 2022, arXiv:2206.01335.

- Xu, D.; Xie, T.; Xia, B.; Li, H.; Bai, Y.; Sun, Y.; Wang, W. Does few-shot learning help LLM performance in code synthesis? arXiv 2024, arXiv:2412.02906.

- Khot, T.; Trivedi, H.; Finlayson, M.; Fu, Y.; Richardson, K.; Clark, P.; Sabharwal, A. Decomposed prompting: A modular approach for solving complex tasks. arXiv 2022, arXiv:2210.02406.

- Suzgun, M.; Scales, N.; Scharli, N.; Gehrmann, S.; Tay, Y.; Chung, H.W.; Chowdhery, A.; Le, Q.V.; Chi, E.H.; Zhou, D.; et al. Challenging BIG-Bench tasks and whether chain-of-thought can solve them. In Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Dublin, Ireland, 22–27 May 2022.

- Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.; Cao, Y.; Narasimhan, K. Tree of thoughts: Deliberate problem solving with large language models. Adv. Neural Inf. Process. Syst. 2023, 36. arXiv:2305.10601.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

- Feurer, M.; Klein, A.; Eggensperger, K.; Springenberg, J.; Blum, M.; Hutter, F. Efficient and robust automated machine learning. Adv. Neural Inf. Process. Syst. 2015, 28, 2962–2970.

- Machine Learning Professorship Freiburg. auto-sklearn—AutoSklearn 0.15.0 documentation. Machine Learning Professorship Freiburg. Available online: https://automl.github.io/auto-sklearn/master/ (accessed on 26 September 2025).

- Erickson, N.; Mueller, J.; Shirkov, A.; Zhang, H.; Larroy, P.; Li, M.; Smola, A. AutoGluon-Tabular: Robust and Accurate AutoML for Structured Data. arXiv 2020, arXiv:2003.06505.

- Truong, A.; Walters, A.; Goodsitt, J.; Hines, K.; Bruss, C.B.; Farivar, R. Towards automated machine learning: Evaluation and comparison of AutoML approaches and tools. In Proceedings of the 2019 IEEE 31st International Conference on Tools with Artificial Intelligence (ICTAI), Portland, OR, USA, 4–6 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1471–1479.

- Tian, J.; Che, C. Automated machine learning: A survey of tools and techniques. J. Ind. Eng. Appl. Sci. 2024, 2, 71–76.

- Baratchi, M.; Wang, C.; Limmer, S.; Van Rijn, J.N.; Hoos, H.; Bäck, T.; Olhofer, M. Automated machine learning: Past, present and future. Artif. Intell. Rev. 2024, 57, 122.

- Quaranta, L.; Azevedo, K.; Calefato, F.; Kalinowski, M. A multivocal literature review on the benefits and limitations of industry-leading AutoML tools. Inf. Softw. Technol. 2025, 178, 107608.

- An, J.; Kim, I.S.; Kim, K.J.; Park, J.H.; Kang, H.; Kim, H.J.; Kim, Y.S.; Ahn, J.H. Efficacy of automated machine learning models and feature engineering for diagnosis of equivocal appendicitis using clinical and computed tomography findings. Sci. Rep. 2024, 14, 22658.

- Wang, J.; Xue, Q.; Zhang, C.W.; Wong, K.K.L.; Liu, Z. Explainable coronary artery disease prediction model based on AutoGluon from AutoML framework. Front. Cardiovasc. Med. 2024, 11, 1360548.

- Shoaib, H.A.; Rahman, M.A.; Maua, J.; Rahman, A.; Mridha, M.F.; Kim, P.; Shin, J. An enhanced deep learning approach to potential purchaser prediction: AutoGluon ensembles for cross-industry profit maximization. IEEE Open J. Comput. Soc. 2025, 6, 468–479.

| No. | Name | Total Teams | Submissions |
|---|---|---|---|
| 1 | Titanic—ML from Disaster | 15,866 | 60,236 |
| 2 | Housing Prices Competition for Kaggle Learn Users | 5966 | 18,933 |
| 3 | House Prices—Advanced Regression Techniques | 4653 | 22,907 |
| 4 | Spaceship Titanic | 2116 | 13,123 |
| 5 | Digit Recognizer | 1630 | 4702 |
| 6 | NLP with Disaster Tweets | 901 | 3520 |
| 7 | Store Sales—Time Series Forecasting | 746 | 2643 |
| 8 | LLM Classification Finetuning | 255 | 1091 |
| 9 | Connect X | 193 | 508 |
| 10 | I’m Something of a Painter Myself | 129 | 315 |

| Dataset | Split | Size | Independent Attributes | Dependent Attribute | Data Type | Task |
|---|---|---|---|---|---|---|
| Titanic | Train | 891 | 10 (+Passenger ID column) | Survived (Yes: 1/No: 0) | Numerical & Categorical | Binary Classification |
| | Test | 418 | | - | | |
| House Price | Train | 1460 | 79 (+ID column) | Sale Price (Continuous) | Numerical & Categorical | Regression |
| | Test | 1459 | | - | | |
| MNIST | Train | 42,000 | 784 pixels | Label (Digit 0–9) | Numerical (flattened 28 × 28 pixels) | Multiclass Classification |
| | Test | 28,000 | | - | | |
| Disaster Tweets | Train | 7613 | 3 (+ID column) | Disaster Relevant: 1/Irrelevant: 0 | Numerical & String | Binary Text Classification (NLP) |
| | Test | 3263 | | - | | |
| Predicting the Beats-per-Minute of Songs | Train | 524,164 | 9 (+ID column) | Beats Per Minute (Continuous) | Numerical | Regression |
| | Test | 174,722 | | - | | |

| Competition | Data Version | Date of Access |

|---|---|---|

| Titanic—ML from Disaster | N/A (competition data) | 3–7 June 2025 |

| House Prices—Advanced Regression Techniques | N/A (competition data) | 7–13 June 2025 |

| Digit Recognizer | N/A (competition data) | 13–26 June 2025 |

| NLP with Disaster Tweets | N/A (competition data) | 15 June–15 July 2025 |

| Predicting the Beats-per-Minute of Songs | N/A (competition data) | 18–25 September 2025 |

| Model | Version | Platform | Context Length | Inference Setting |

|---|---|---|---|---|

| OpenAI o3 | o3 | OpenAI ChatGPT/Web | Up to 200 K | Default |

| GPT-4.1 | gpt-4.1 | OpenAI ChatGPT/Web | Up to 1 M | Default |

| GPT-5 | Thinking (standard) | OpenAI ChatGPT/Web | Up to 400 K | Default |

| Gemini 2.5 Pro | gemini-pro | Google AI Studio | ~1 M | Default |

| DeepSeek-V3 | deepseek-coder-v3-0324 | Hugging Face/Web interface | 128 K | Default |

| DeepSeek-R1 | deepseek-coder-R1 | Hugging Face/Web interface | 128 K | Default |

| Claude Opus 4 | claude-4-opus | Claude AI Web (Anthropic) | Up to 200 K | Default |

| Claude Sonnet 4 | claude-4-sonnet | Claude AI Web (Anthropic) | Up to 200 K | Default |

| Step | FSP Template | ToT Template |

|---|---|---|

| Role Definition | You are a Kaggle Grandmaster and senior MLOps engineer. | You are a Kaggle Grandmaster and senior MLOps engineer. |

| Task Description | Build the best possible, fully-reproducible solution for the “Kaggle House Prices—Advanced Regression Techniques” competition | Build the best possible, fully-reproducible solution for the “Kaggle House Prices—Advanced Regression Techniques” competition |

| Requirements | | |

| Output Constraints | | (step-by-step strategy) |

| Example PLAN Output | | |

| In-Context Examples | Presents representative input-output pairs with full code implementations to clarify the intended output structure. Example 1: Example 2: | Relies on internal reasoning at inference time, without supplying prior example demonstrations. |

| Example Code Block | import pandas as pd, numpy as np from sklearn.linear_model import Ridge … | import pandas as pd, numpy as np from catboost import CatBoostRegressor from xgboost import XGBRegressor … |

| Reasoning Type | Learns from examples. Extracts structure from examples | Thinks iteratively. Evaluates alternatives. Chooses the best solution reasoning. |
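
The structural difference between the two templates in the table above can be sketched in a few lines of Python. This is an illustration only, not the prompts used in the study: the function name `build_prompt`, the ToT instruction wording, and the placeholder examples are assumptions. Only the role and task strings are taken from the table, with the competition name written with a plain hyphen.

```python
from typing import Sequence

# Role and task strings taken from the template table.
ROLE = "You are a Kaggle Grandmaster and senior MLOps engineer."
TASK = (
    "Build the best possible, fully-reproducible solution for the "
    '"Kaggle House Prices - Advanced Regression Techniques" competition'
)

# Hypothetical wording that paraphrases the "Reasoning Type" row for ToT.
TOT_INSTRUCTION = (
    "Before writing code, outline a step-by-step strategy: propose several "
    "candidate approaches, evaluate them, and choose the best one."
)


def build_prompt(strategy: str, examples: Sequence[str] = ()) -> str:
    """Assemble an FSP or ToT prompt from the shared role and task text."""
    parts = [ROLE, TASK]
    if strategy == "FSP":
        if not examples:
            raise ValueError("few-shot prompting needs at least one example")
        # FSP: append worked input-output examples for the model to imitate.
        for i, example in enumerate(examples, start=1):
            parts.append(f"Example {i}:\n{example}")
    elif strategy == "ToT":
        # ToT: no demonstrations; ask for explicit deliberation instead.
        parts.append(TOT_INSTRUCTION)
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return "\n\n".join(parts)


# Placeholder examples stand in for the full code demonstrations.
fsp_prompt = build_prompt("FSP", ["<placeholder example 1>", "<placeholder example 2>"])
tot_prompt = build_prompt("ToT")
```

Both prompts share the same role and task preamble, so any difference in the generated solutions can be attributed to the presence of demonstrations (FSP) or the deliberation instruction (ToT).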

| Setting | Titanic | House Price | Beats per Minute of Songs | NLP with Disaster Tweets | Digit Recognizer |
|---|---|---|---|---|---|
| Framework & version | auto-sklearn 0.15.0, Python 3.10.18 (all tasks) | | | | |
| Core settings: metric | Accuracy | RMSE | RMSE | F1 Score | Accuracy |
| Core settings: seed | 42 (all tasks) | | | | |
| Core settings: memory_limit | 8192 MB (all tasks) | | | | |

| Tasks | Titanic | House Price | Beats per Minute of Songs | NLP with Disaster Tweets | Digit Recognizer | |

|---|---|---|---|---|---|---|

| Framework & version | AutoGluon-Tabular 1.4.0, Python 3.12.x | |||||

| Core settings | Preset | high_quality | ||||

| Metric | Accuracy | RMSE | F1 Score | Accuracy | ||

| time_limit | 7200 s. | 14,400 s. | ||||

| auto_stack | True | |||||

| excluded_model_types | None | |||||

| Seed | 42 | |||||

| ag_args_fit | max_memory_usage_ratio: 0.8, num_gpus: 0 | max_memory_usage_ratio: 0.8, num_gpus: 1 | ||||

| hyperparameter_tune_kwargs | Auto | num_trials: 80 | ||||

| num_stack_levels | - | 0 | ||||

| num_bag_folds | - | 3 | ||||

(a)

| Prompt | Metric | OpenAI o3 | OpenAI o3 (HP-tuned) | GPT-4.1 | GPT-4.1 (HP-tuned) | Gemini 2.5 Pro | Gemini 2.5 Pro (HP-tuned) |
|---|---|---|---|---|---|---|---|
| FSP | CV Acc. | 0.8316 | 0.844 | 0.8260 | 0.8339 | 0.8395 | 0.8384 |
| FSP | Test Acc. | 0.75358 | 0.75358 | 0.77990 | 0.76555 | 0.77990 | 0.77033 |
| FSP | Exec. Time | 27 s | 1 min 43 s | 25 s | 1 min 22 s | 24 s | 2 min 33 s |
| FSP | Memory (MB) | 282.12 | 278.36 | 288.18 | 288.58 | 279.84 | 298.91 |
| FSP | Algorithm | GBC | GBC | RFC | RFC | RFC | RFC |
| ToT | CV Acc. | 0.8137 | 0.8294 | 0.8137 | 0.8406 | 0.8227 | 0.8316 |
| ToT | Test Acc. | 0.74641 | 0.76555 | 0.74641 | 0.77272 | 0.74880 | 0.74401 |
| ToT | Exec. Time | 29 s | 44 s | 28 s | 1 min 3 s | 23 s | 48 s |
| ToT | Memory (MB) | 296.57 | 273.26 | 296.32 | 297.64 | 272.04 | 293.06 |
| ToT | Algorithm | RFC | RFC | RFC | RFC | RFC | RFC |

(b)

| Prompt | Metric | DeepSeek-V3 | DeepSeek-V3 (HP-tuned) | DeepSeek-R1 | DeepSeek-R1 (HP-tuned) | Claude Opus 4 | Claude Opus 4 (HP-tuned) | Claude Sonnet 4 | Claude Sonnet 4 (HP-tuned) |
|---|---|---|---|---|---|---|---|---|---|
| FSP | CV Acc. | 0.79232 | 0.826 | 0.8227 | 0.8328 | 0.8271 | 0.8351 | 0.8339 | 0.8395 |
| FSP | Test Acc. | 0.75119 | 0.78229 | 0.76555 | 0.77990 | 0.74880 | 0.76794 | 0.76794 | 0.76555 |
| FSP | Exec. Time | 24 s | 1 min 37 s | 26 s | 1 min 36 s | 36 s | 4 min 25 s | 29 s | 3 min 6 s |
| FSP | Memory (MB) | 282.03 | 283.47 | 277.07 | 291.67 | 295.98 | 295.02 | 281.41 | 306.54 |
| FSP | Algorithm | RFC | RFC | RFC | RFC | GBC | RFC | RFC | RFC |
| ToT | CV Acc. | 0.813 | 0.8294 | 0.8103 | 0.8350 | 0.8182 | 0.8339 | 0.8159 | 0.8395 |
| ToT | Test Acc. | 0.74641 | 0.76555 | 0.73684 | 0.77751 | 0.74401 | 0.76555 | 0.74641 | 0.76794 |
| ToT | Exec. Time | 26 s | 41 s | 31 s | 42 s | 29 s | 3 min 27 s | 30 s | 3 min 46 s |
| ToT | Memory (MB) | 282.22 | 273.88 | 287.54 | 286.63 | 301.98 | 300.11 | 296.32 | 309.67 |
| ToT | Algorithm | RFC | RFC | RFC | RFC | RFC | RFC | RFC | RFC |

(a)

| Prompt | Metric | OpenAI o3 | OpenAI o3 (HP-tuned) | GPT-4.1 | GPT-4.1 (HP-tuned) | Gemini 2.5 Pro | Gemini 2.5 Pro (HP-tuned) |
|---|---|---|---|---|---|---|---|
| FSP | RMSE | 0.12404 | 0.12802 | 0.12301 | 0.12530 | 0.12007 | 0.12256 |
| FSP | Exec. Time | 1 min 42 s | 7 min 58 s | 32 s | 28 min 13 s | 1 min 43 s | 41 s |
| FSP | Memory (MB) | 438.14 | 299.00 | 273.68 | 842.48 | 454.28 | 420.48 |
| FSP | Algorithm | LGBMR + XGBR + Ridge | XGBR | Ridge + Lasso | LGBMR + XGBR + CBR | LGBMR + XGBR + CBR + Ridge | LGBMR + XGBR + CBR |
| ToT | RMSE | 0.12131 | 0.13098 | 0.13052 | 0.12141 | 0.12161 | 0.12211 |
| ToT | Exec. Time | 5 min 8 s | 5 min 8 s | 22 s | 1 min 48 s | 34 s | 35 s |
| ToT | Memory (MB) | 274.68 | 365.88 | 368.68 | 298.44 | 375.98 | 380.50 |
| ToT | Algorithm | Lasso + ElasticNet + GBR | LGBMR | LGBMR | Lasso + XGBR | Ridge + LGBMR | Ridge + LGBMR |

(b)

| Prompt | Metric | DeepSeek-V3 | DeepSeek-V3 (HP-tuned) | DeepSeek-R1 | DeepSeek-R1 (HP-tuned) | Claude Opus 4 | Claude Opus 4 (HP-tuned) | Claude Sonnet 4 | Claude Sonnet 4 (HP-tuned) |
|---|---|---|---|---|---|---|---|---|---|
| FSP | RMSE | 0.12452 | 0.12508 | 0.12602 | 0.12317 | 0.12691 | 0.12361 | 0.12446 | 0.12487 |
| FSP | Exec. Time | 4 min 48 s | 8 min 28 s | 1 min 26 s | 51 s | 55 s | 22 min 26 s | 44 s | 3 min 18 s |
| FSP | Memory (MB) | 442.81 | 483.73 | 458.61 | 438.46 | 439.98 | 590.97 | 413.82 | 455.34 |
| FSP | Algorithm | XGBR + LGBMR + CBR, Final Model: Ridge | XGBR + LGBMR + CBR | CBR + XGBR + LGBMR | XGBR + LGBMR + CBR + Ridge | CBR + XGBR + LGBMR | Meta Model: Ridge, XGBR + LGBMR + CBR | LGBMR + XGBR + CBR | LGBM + XGBR + CBR |
| ToT | RMSE | 0.13027 | 0.13095 | 0.14649 | 0.12843 | 0.13660 | 0.12340 | 0.13348 | 0.12438 |
| ToT | Exec. Time | 35 s | 4 min 50 s | 43 s | 10 h 41 min 50 s | 40 s | 3 min 16 s | 41 s | 1 min 45 s |
| ToT | Memory (MB) | 363.61 | 437.12 | 280.83 | 383.1 | 309.52 | 393.02 | 417.71 | 425.72 |
| ToT | Algorithm | LGBMR | LGBMR + XGBR + CBR | RFR | LGBMR | Ridge + Lasso + RFR + XGBR | Ridge + Lasso + ElasticNet + XGBR + LGBMR | RFR + XGBR + LGBMR | XGBR + LGBMR + Ridge + ElasticNet + RFR |

(a)

| Prompt | Metric | OpenAI o3 | OpenAI o3 (HP-tuned) | GPT-4.1 | GPT-4.1 (HP-tuned) | Gemini 2.5 Pro | Gemini 2.5 Pro (HP-tuned) |
|---|---|---|---|---|---|---|---|
| FSP | CV Acc. | 0.992857 | 0.9876 | 0.9853 | 0.99 | 0.9872 | 0.9956 |
| FSP | Test Acc. | 0.99125 | 0.98939 | 0.98750 | 0.99128 | 0.98675 | 0.99450 |
| FSP | Exec. Time | 23 min 3 s | 14 min 28 s | 2 min 8 s | 3 min 13 s | 4 min 33 s | 37 min 17 s |
| FSP | Memory (MB) | 3194.35 | 3067.41 | 2772.10 | 2721.93 | 2817.53 | 4200.00 |
| FSP | Algorithm | CNN | CNN | CNN | CNN | CNN | CNN |
| ToT | CV Acc. | 0.992786 | 0.9945714 | 0.9929 | 0.99224 | 0.99569 | 0.9937 |
| ToT | Test Acc. | 0.99467 | 0.99517 | 0.99310 | 0.99253 | 0.99621 | 0.99639 |
| ToT | Exec. Time | 8 min 7 s | 19 min 33 s | 13 min 26 s | 44 min 59 s | 26 min 8 s | 45 min 15 s |
| ToT | Memory (MB) | 2020.12 | 2050.82 | 1760.64 | 2027.67 | 2632.75 | 2451.20 |
| ToT | Algorithm | Small CNN | Medium CNN | Simple CNN | Simple CNN | VGG-like CNN | CNN |

(b)

| Prompt | Metric | DeepSeek-V3 | DeepSeek-V3 (HP-tuned) | DeepSeek-R1 | DeepSeek-R1 (HP-tuned) | Claude Opus 4 | Claude Opus 4 (HP-tuned) | Claude Sonnet 4 | Claude Sonnet 4 (HP-tuned) |
|---|---|---|---|---|---|---|---|---|---|
| FSP | CV Acc. | 0.9860 | 0.9957 | 0.9911 | 0.9895 | 0.9879 | 0.996 | 0.99 | 0.99 |
| FSP | Test Acc. | 0.98996 | 0.99321 | 0.99225 | 0.99271 | 0.98385 | 0.99482 | 0.98703 | 0.99246 |
| FSP | Exec. Time | 6 min 39 s | 13 min 7 s | 5 min 27 s | 9 min 53 s | 8 min 34 s | 12 min 43 s | 2 min 7 s | 27 min 4 s |
| FSP | Memory (MB) | 4143.05 | 2697.58 | 2752.93 | 2714.18 | 3079.88 | 5080.94 | 3073.10 | 2927.02 |
| FSP | Algorithm | CNN | CNN | CNN | CNN | CNN | CNN | CNN | CNN + RFC |
| ToT | CV Acc. | 0.9783 | 0.9926 | 0.9916 | 0.9837 | 0.9948 | 0.9949 | 0.99329 | 0.99314 |
| ToT | Test Acc. | 0.98117 | 0.98853 | 0.99260 | 0.98514 | 0.99546 | 0.99539 | 0.99335 | 0.99307 |
| ToT | Exec. Time | 1 min 48 s | 1 min 42 s | 6 min 26 s | 41 min 1 s | 19 min 55 s | 20 min 25 s | 17 min 29 s | 15 min 42 s |
| ToT | Memory (MB) | 2630.61 | 2154.87 | 2226.44 | 4344.48 | 2198.39 | 2172.88 | 2912.29 | 2912.65 |
| ToT | Algorithm | CNN | CNN | CNN | CNN | Simple CNN | Simple CNN | CNN | CNN |

(a)

| Prompt | Metric | OpenAI o3 | OpenAI o3 (HP-tuned) | GPT-4.1 | GPT-4.1 (HP-tuned) | Gemini 2.5 Pro | Gemini 2.5 Pro (HP-tuned) |
|---|---|---|---|---|---|---|---|
| FSP | CV F1 Score | 0.7927 | 0.7972 | 0.8011 | 0.7808 | 0.83830 | 0.80494 |
| FSP | Test F1 Score | 0.79742 | 0.79405 | 0.79650 | 0.78302 | 0.83695 | 0.79865 |
| FSP | Exec. Time | 28 s | 25 s | 22 s | 8 min 52 s | 25 min 25 s | 22 s |
| FSP | Memory (MB) | 284.97 | 288.98 | 314.32 | 391.31 | 4864.11 | 279.46 |
| FSP | Algorithm | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + Stacking Classifier | Hugging Face Bert-Base-Uncased | TF-IDF + LRC |
| ToT | CV F1 Score | 0.7918 | 0.8141 | 0.7923 | 0.8074 | 0.80008 | 0.80192 |
| ToT | Test F1 Score | 0.79007 | 0.81428 | 0.78915 | 0.80692 | 0.78792 | 0.79926 |
| ToT | Exec. Time | 39 s | 41 s | 35 s | 28 s | 27 s | 47 s |
| ToT | Memory (MB) | 363.88 | 429.84 | 352.58 | 348.71 | 273.73 | 285.75 |
| ToT | Algorithm | TF-IDF + LinearSVC | TF-IDF + LRC | TF-IDF + LinearSVC | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + LRC |

(b)

| Prompt | Metric | DeepSeek-V3 | DeepSeek-V3 (HP-tuned) | DeepSeek-R1 | DeepSeek-R1 (HP-tuned) | Claude Opus 4 | Claude Opus 4 (HP-tuned) | Claude Sonnet 4 | Claude Sonnet 4 (HP-tuned) |
|---|---|---|---|---|---|---|---|---|---|
| FSP | CV F1 Score | 0.7344 | 0.7305 | 0.8024 | 0.8028 | LRC: 0.8007, RFC: 0.7787, XGBC: 0.7906 | 0.8101 | 0.7892 | 0.7950 |
| FSP | Test F1 Score | 0.79895 | 0.79711 | 0.79834 | 0.79681 | 0.79619 | 0.80753 | 0.79313 | 0.79129 |
| FSP | Exec. Time | 36 s | 31 s | 21 s | 1 min 12 s | 1 min 1 s | 49 min 5 s | 31 s | 7 h 8 min 35 s |
| FSP | Memory (MB) | 269.09 | 316.75 | 280.21 | 272.31 | 333.52 | 586.85 | 292.43 | 2221.02 |
| FSP | Algorithm | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + (LRC/RFC/XGBC) | TF-IDF + (LRC/SVM/LGBMC) | TF-IDF + LRC + NB | TF-IDF + LRC + SVM |
| ToT | CV F1 Score | 0.7774 | 0.7316 | 0.7323 | 0.8009 | 0.7887 | 0.7902 | 0.7793 | 0.7760 |
| ToT | Test F1 Score | 0.78976 | 0.79528 | 0.79895 | 0.79773 | 0.78332 | 0.78547 | 0.78945 | 0.77811 |
| ToT | Exec. Time | 1 min 36 s | 25 s | 23 s | 33 s | 32 s | 3 min 68 s | 26 s | 3 h 51 min 51 s |
| ToT | Memory (MB) | 319.79 | 284.76 | 283.12 | 285.93 | 1804.99 | 329.46 | 389.11 | 3875.11 |
| ToT | Algorithm | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + LRC | TF-IDF + LGBMC | TF-IDF + XGBC | TF-IDF + LGBMC | TF-IDF + XGBC + SVM + NB |

| Prompt | Metric | GPT-5 Thinking | GPT-5 Thinking (HP-tuned) | Gemini 2.5 Pro | Gemini 2.5 Pro (HP-tuned) | DeepSeek-V3 | DeepSeek-V3 (HP-tuned) |
|---|---|---|---|---|---|---|---|
| FSP | RMSE | 26.39266 | 26.40192 | 26.39081 | 26.40479 | 26.39554 | 26.38663 |
| FSP | Exec. Time | 3 h 31 min 18 s | 1 h 22 min 16 s | 1 h 24 min 46 s | 15 min 15 s | 35 min 44 s | 9 min 41 s |
| FSP | Memory (MB) | 1123.58 | 957.88 | 791.73 | 606.72 | 905.31 | 697.42 |
| FSP | Algorithm | LGBMR | CBR/XGBR/Ridge | Ridge/RFR/LGBMR/XGBR/CBR | LGBMR | Ridge/RFR/LGBMR/XGBR/CBR | LGBMR |
| ToT | RMSE | 26.38734 | 26.38760 | 26.38879 | 26.39296 | 26.42273 | 26.38801 |
| ToT | Exec. Time | 3 h 35 min 29 s | 2 h 55 min 28 s | 47 s | 1 min 21 s | 46 min 28 s | 23 min 21 s |
| ToT | Memory (MB) | 461.14 | 1987.59 | 684.24 | 631.26 | 480.21 | 1278.91 |
| ToT | Algorithm | Ridge/Lasso/ElasticNet/RFR/GBR/HGBR | Ridge/ElasticNet/ETR/HGBR/LGBMR/XGBR/CBR, Ridge blend TOP_K OOF/TEST preds | LGBMR | LGBMR | RFR | XGBR |

| Tasks | Search/Design Space | Appropriate Prompt Technique | In Practice (General Trend) | In Practice (Best) |
|---|---|---|---|---|
| Titanic | Narrow | FSP | FSP | FSP (HP-tuned) |
| House Prices | Medium | FSP (Usually) | FSP (non-tuned)/ToT (HP-tuned) | FSP (non-tuned) |
| Digit Recognizer | Very Large | FSP | ToT | ToT (HP-tuned) |
| Disaster Tweets | Large | ToT | FSP (non-tuned)/ToT (HP-tuned) | FSP (non-tuned) |
| Beats-per-Minute of Songs | Large | FSP (Usually) | FSP (non-tuned)/ToT (HP-tuned) | FSP (HP-tuned) |

| Task | Best LLM | Item | Best LLM Result | Kaggle Notebooks |
|---|---|---|---|---|
| Titanic | DeepSeek-V3 (FSP (HP-tuned)) | Accuracy | 0.78229 | 0.80143 |
| | | Exec. Time | 1 min 37 s | 5 min 37 s |
| | | Algorithm | RFC | GBT |
| | | Kaggle Leaderboard % | 17.3 | 2.82 |
| House Price | Gemini 2.5 Pro (FSP) | RMSE | 0.12007 | 0.12096 |
| | | Exec. Time | 1 min 43 s | 47 s |
| | | Algorithm | LGBMR + XGBR + CBR + Ridge | Regularized Linear Regression Model |
| | | Kaggle Leaderboard % | 5.25 | 6.84 |
| Digit Recognizer | Gemini 2.5 Pro (ToT (HP-tuned)) | Accuracy | 0.99639 | 0.99028 |
| | | Exec. Time (GPU 100) | 45 min 15 s | 1 h 47 min 7 s |
| | | Algorithm | CNN | CNN |
| | | Kaggle Leaderboard % | 10.4 | 32.7 |
| NLP with Disaster Tweets | Gemini 2.5 Pro (FSP) | F1 Score | 0.83695 | 0.83726 |
| | | Exec. Time (GPU 100) | 25 min 25 s | 5 min 53 s |
| | | Algorithm | Hugging Face Bert-Base-Uncased | Distil BERT |
| | | Kaggle Leaderboard % | 10.1 | 9.3 |
| Beats-per-Minute of Songs | DeepSeek-V3 (FSP (HP-tuned)) | RMSE | 26.38663 | 26.38020 (20 September 2025) |
| | | Exec. Time | 9 min 41 s | - |
| | | Algorithm | LGBMR | - |

| Task | Model | Version | Tuning | Prompting Techniques | Accuracy | Algorithm |
|---|---|---|---|---|---|---|
| Titanic | GPT [23] | 3.5 | No | Preprocess: FSP, Chain of Thought, Specifying Desired Response Format; HPT: FSP, Specifying Desired Response Format | 0.7511 | RFC |
| | GPT [23] | 3.5 | Yes | | 0.7918 | RFC |
| | Gemini [23] | N/A | No | | 0.7655 | XGBoost |
| | Gemini [23] | N/A | Yes | | 0.7583 | XGBoost |
| | Human [23] | - | N/A | - | 0.7966 | XGBoost |
| | Kaggle Notebook | - | Yes | - | 0.80143 | GBTC |
| | GPT | 4.1 | No | Preprocess + Alg. Selection + HPT: FSP, Specifying Desired Response Format | 0.7799 | RFC |
| | GPT | 4.1 | Yes | | 0.76555 | RFC |
| | Gemini | 2.5 Pro | No | | 0.7799 | RFC |
| | Gemini | 2.5 Pro | Yes | | 0.77033 | RFC |
| | GPT | 4.1 | No | Preprocess + Alg. Selection + HPT: ToT, Specifying Desired Response Format | 0.74641 | RFC |
| | GPT | 4.1 | Yes | | 0.77272 | RFC |
| | Gemini | 2.5 Pro | No | | 0.7488 | RFC |
| | Gemini | 2.5 Pro | Yes | | 0.74401 | RFC |
| | Best LLM: DeepSeek | V3 | Yes | Preprocess + Alg. Selection + HPT: FSP, Specifying Desired Response Format | 0.78229 | RFC |
| Digit Recognizer | GPT [23] | 3.5 | No | Classification + HPT: FSP, Specifying Desired Response Format | 0.9863 | CNN |
| | GPT [23] | 3.5 | Yes | | 0.9960 | |
| | Gemini [23] | N/A | No | | 0.9801 | |
| | Gemini [23] | N/A | Yes | | 0.9820 | |
| | Human [23] | - | N/A | - | 0.9834 | |
| | Kaggle Notebook | - | N/A | - | 0.99028 | |
| | GPT | 4.1 | No | Classification + HPT: FSP, Specifying Desired Response Format | 0.98750 | |
| | GPT | 4.1 | Yes | | 0.99128 | |
| | Gemini | 2.5 Pro | No | | 0.98675 | |
| | Gemini | 2.5 Pro | Yes | | 0.99450 | |
| | GPT | 4.1 | No | Classification + HPT: ToT, Specifying Desired Response Format | 0.99310 | |
| | GPT | 4.1 | Yes | | 0.99224 | |
| | Gemini | 2.5 Pro | No | | 0.99569 | |
| | Best LLM: Gemini | 2.5 Pro | Yes | | 0.99639 | |

| Task | Hyperparameter | GPT [23] | DeepSeek-V3 |
|---|---|---|---|
| Titanic | n_estimators | [100, 200, 300] | [100, 200, 300] |
| | max_depth | [None, 10, 20] | [None, 5, 10] |
| | min_samples_split | [2, 5, 10] | [2, 5, 10] |
| | min_samples_leaf | [1, 2, 4] | [1, 2, 4] |
| | max_features | ['auto', 'sqrt'] | - |
| | Model | RFC | RFC |

| Task | Hyperparameter | GPT [23] | Gemini 2.5 Pro |
|---|---|---|---|
| Digit Recognizer | filter | [32, 64] | [32, 64] |
| | units | [64, 128] | [256] |
| | learning_rate | [1 × 10⁻⁵, 1 × 10⁻²] | [1 × 10⁻⁴, 1 × 10⁻²] |
| | dropout | - | [0.1, 0.6] |
| | Model | CNN | CNN |
| | Architecture: Input | Conv2D(32, (3, 3), activation='relu') | Conv2D(32, (3, 3), activation='relu') |
| | Architecture: Hidden | MaxPooling2D((2, 2)); Conv2D(64, (3, 3), activation='relu'); MaxPooling2D((2, 2)); Conv2D(64, (3, 3), activation='relu'); Dense(64, activation='relu') | Conv2D(32, (3, 3), activation='relu'); MaxPooling2D((2, 2)); Dropout(rate=dropout/2); Conv2D(64, (3, 3), activation='relu'); Conv2D(64, (3, 3), activation='relu'); MaxPooling2D((2, 2)); Dropout(rate=dropout/2); Dense(256, activation='relu'); Dropout(rate=dropout) |
| | Architecture: Output | Dense(10, activation='softmax') | Dense(10, activation='softmax') |
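
To make the size of the two Titanic search spaces in the table above concrete, the following sketch enumerates them with the standard library. It assumes an exhaustive grid search and a 5-fold cross-validation; neither is stated in the table, so both are illustrative assumptions, as are the helper names `expand_grid` and `count_fits`.

```python
from itertools import product
from typing import Any, Dict, Iterator, List

# Search spaces transcribed from the Titanic rows of the table
# (scikit-learn spells the first key `n_estimators`).
GPT_REF23_GRID: Dict[str, List[Any]] = {
    "n_estimators": [100, 200, 300],
    "max_depth": [None, 10, 20],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
    "max_features": ["auto", "sqrt"],
}
DEEPSEEK_V3_GRID: Dict[str, List[Any]] = {
    "n_estimators": [100, 200, 300],
    "max_depth": [None, 5, 10],
    "min_samples_split": [2, 5, 10],
    "min_samples_leaf": [1, 2, 4],
}

CV_FOLDS = 5  # assumption: the fold count is not given in the table


def expand_grid(grid: Dict[str, List[Any]]) -> Iterator[Dict[str, Any]]:
    """Yield every hyperparameter combination of an exhaustive grid."""
    keys = list(grid)
    for values in product(*(grid[key] for key in keys)):
        yield dict(zip(keys, values))


def count_fits(grid: Dict[str, List[Any]], cv_folds: int) -> int:
    """Number of model fits an exhaustive search with k-fold CV would need."""
    return sum(1 for _ in expand_grid(grid)) * cv_folds


for name, grid in [("GPT [23]", GPT_REF23_GRID), ("DeepSeek-V3", DEEPSEEK_V3_GRID)]:
    n_candidates = sum(1 for _ in expand_grid(grid))
    print(f"{name}: {n_candidates} candidates, {count_fits(grid, CV_FOLDS)} fits")
```

Under these assumptions the DeepSeek-V3 grid has half as many candidates as the GPT [23] grid (81 versus 162), because it omits `max_features`.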

| Task | Item | Best LLM | auto-sklearn | AutoGluon | Kaggle Notebooks |
|---|---|---|---|---|---|
| Titanic | Configuration | DeepSeek-V3 (FSP & HP-tuned) | | | |
| | Accuracy | 0.78229 | 0.77272 | 0.76315 | 0.80143 |
| | Exec. Time | 1 min 37 s | 59 min 56.5 s | 1 h 37 min 42.7 s | 5 min 37 s |
| | Algorithm | RFC | LinearSVC | ETC (BAG L1 FULL) | GBTC |
| House Price | Configuration | Gemini 2.5 Pro (FSP) | | | |
| | RMSE | 0.12007 | 0.12775 | 0.1181 | 0.12096 |
| | Exec. Time | 1 min 43 s | 1 h 48.5 s | 1 h 36 min 2.1 s | 47 s |
| | Algorithm | LGBMR + XGBR + CBR + Ridge | LinearSVR | LGBMR (BAG L1/T2 FULL) | Regularized Linear Regression Model |
| Digit Recognizer | Configuration | Gemini 2.5 Pro (ToT & HP-tuned) | | | |
| | Accuracy | 0.99639 | 0.98167 | 0.97996 | 0.99028 |
| | Exec. Time (GPU) | 45 min 15 s | 1 h 5 min 23.9 s | 2 h 51 min 3.6 s | 1 h 47 min 7 s |
| | Algorithm | CNN | LinearSVC | RFC (BAG L1 FULL) | CNN |
| NLP with Disaster Tweets | Configuration | Gemini 2.5 Pro (FSP) | | | |
| | F1 Score | 0.83695 | 0.78424 | 0.7919 | 0.83726 |
| | Exec. Time (GPU) | 25 min 25 s | 1 h 9.9 s | 3 h 30 min 58.2 s | 5 min 53 s |
| | Algorithm | Hugging Face Bert-Base-Uncased | PAC | ETC (BAG L1 FULL) | Distil BERT |
| The Beats per Minute of Songs | Configuration | DeepSeek-V3 (FSP & HP-tuned) | | | |
| | RMSE | 26.38663 | 26.39400 | 26.39029 | 26.38020 (20 September 2025) |
| | Exec. Time | 9 min 41 s | 59 min 56.8 s | 2 h 52 min 16.6 s | - |
| | Algorithm | LGBMR | GBR | ETR (BAG L1 FULL) | - |
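
The execution times in the tables are reported as strings such as "1 min 37 s" or "1 h 37 min 42.7 s", which makes direct comparison awkward. The sketch below is one possible way to convert them to seconds and compute a runtime ratio. The helper name `to_seconds` and the regular expression are assumptions introduced for illustration; the two example durations are the Titanic values for the best LLM and auto-sklearn.

```python
import re

# Seconds per unit for the three units that appear in the tables.
_UNIT_SECONDS = {"h": 3600.0, "min": 60.0, "s": 1.0}

# A number followed by a unit; the lookahead keeps "s" from matching
# inside a longer word while still accepting forms like "27s" or "min7".
_TOKEN = re.compile(r"(\d+(?:\.\d+)?)\s*(h|min|s)(?![a-z])")


def to_seconds(duration: str) -> float:
    """Convert a duration such as '1 h 37 min 42.7 s' to seconds."""
    tokens = _TOKEN.findall(duration)
    if not tokens:
        raise ValueError(f"no duration found in {duration!r}")
    return sum(float(value) * _UNIT_SECONDS[unit] for value, unit in tokens)


# Titanic: best LLM pipeline versus auto-sklearn, values from the table.
llm_seconds = to_seconds("1 min 37 s")
automl_seconds = to_seconds("59 min 56.5 s")
ratio = automl_seconds / llm_seconds
print(f"auto-sklearn took about {ratio:.0f}x longer")  # roughly 37x
```

A ratio like this only compares wall-clock time; the AutoML runs were given fixed time budgets, so it should not be read as a measure of search efficiency.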

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kaya Gülağız, F. Large Language Models for Machine Learning Design Assistance: Prompt-Driven Algorithm Selection and Optimization in Diverse Supervised Learning Tasks. Appl. Sci. 2025, 15, 10968. https://doi.org/10.3390/app152010968
