1. Introduction

Visual–inertial odometry (VIO) has been widely adopted as a core component of autonomous navigation systems, enabling accurate estimation of ego-motion by combining visual and inertial measurements [1,2,3]. Among existing systems, methods based on tightly coupled optimization, such as VINS-Mono [4], have demonstrated strong performance and robustness in a variety of scenarios. However, most traditional pipelines are based on hand-crafted features [5,6], which often suffer from limited repeatability and sensitivity to appearance variations, making them vulnerable in visually complex or rapidly changing environments.

Recent advances in deep learning have produced feature extraction methods that outperform traditional descriptors in terms of semantic richness and robustness to appearance changes [7,8,9]. Deep features offer higher-level representations that are more invariant to viewpoint and illumination changes. However, their direct application in simultaneous localization and mapping (SLAM) or VIO systems introduces new challenges [10,11]. Deep features tend to cluster in semantically salient regions and lack temporal stability, which may lead to unstable tracking or degeneracy in optimization-based pipelines.

To address these limitations, a novel VIO system is proposed that leverages a lightweight deep feature extractor in combination with a multi-scale image pyramid and a spatially balanced keypoint selection strategy. Additionally, an optical-flow-based filtering method is introduced, which applies forward–backward consistency checks to reject unstable keypoints. This hybrid front-end is designed to retain the descriptive power of deep features while improving spatial coverage and temporal stability for reliable tracking.

The system is implemented as an extension of VINS-Mono, where the original front-end is replaced by a deep learning-based feature extraction and filtering module. The main contributions of this work are summarized as follows:

A hybrid front-end for visual–inertial odometry is developed by integrating a lightweight deep feature extractor with an image pyramid and grid-based keypoint sampling strategy, ensuring spatial diversity of extracted features.

An optical-flow-based keypoint filtering method is introduced, which employs forward–backward consistency checks to improve temporal stability and reject unreliable features.

The proposed system is implemented to run in real time on standard GPU-equipped hardware and demonstrates competitive trajectory accuracy on the EuRoC MAV dataset under fair conditions.

The remainder of this paper is organized as follows. Section 2 reviews relevant studies, and Section 3 describes the overall architecture of the proposed system and presents the details of the feature extraction and filtering modules. The experimental results and comparisons are discussed in Section 4, and finally, Section 5 concludes the paper.

3. Proposed Method

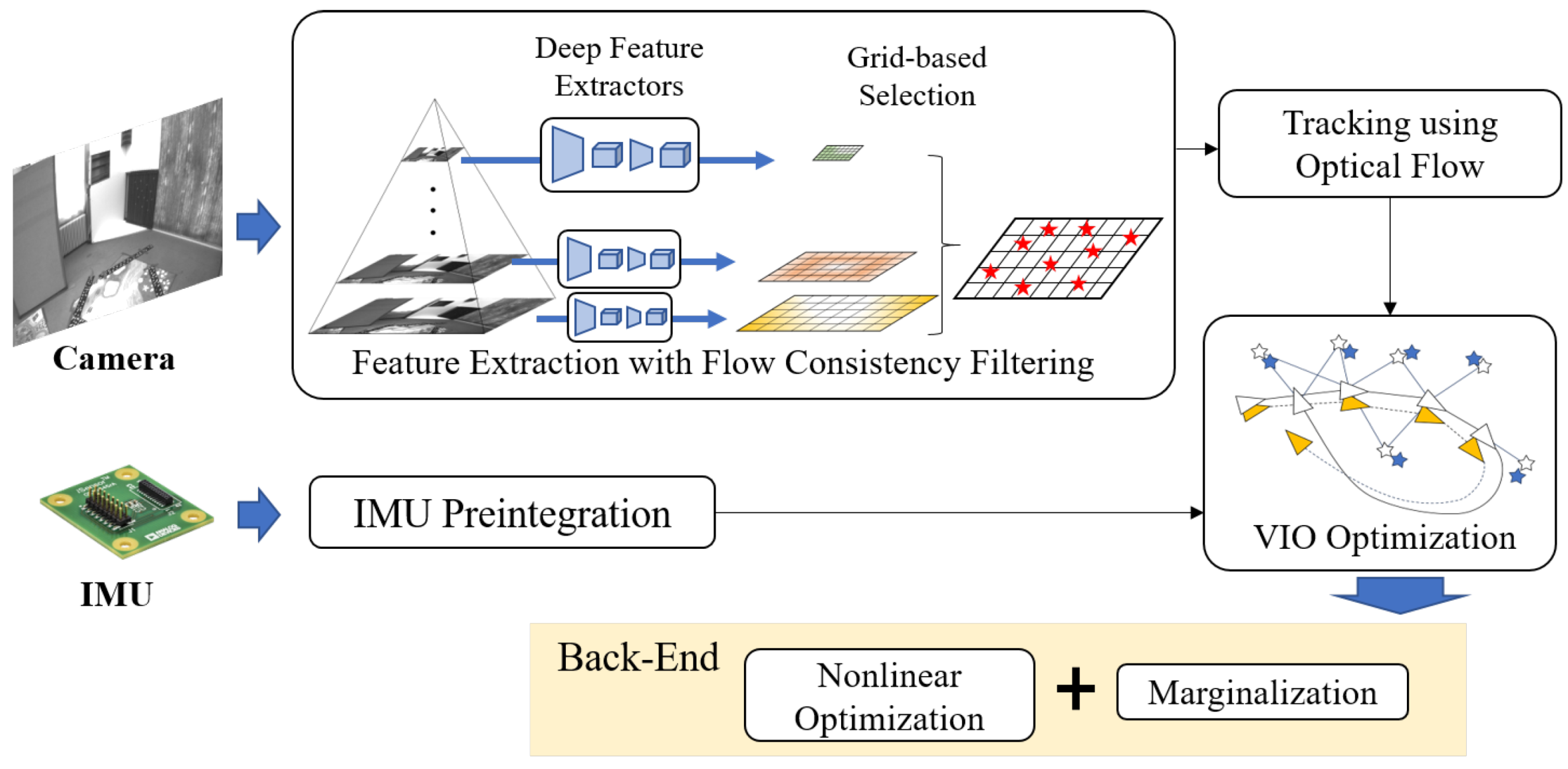

3.1. Overview of the Modified VIO System

The proposed system builds upon the well-established VINS-Mono [4] framework, which tightly integrates visual and inertial measurements through a nonlinear optimization back-end. While the original front-end relies on handcrafted Shi–Tomasi corners tracked with KLT optical flow, our modification introduces a deep-learning-based feature extraction module to improve robustness and accuracy, particularly under challenging visual conditions.

Figure 1 illustrates the architecture of the modified system. The core pipeline of VINS-Mono, including IMU pre-integration and visual–inertial state estimation, is preserved in our system, but loop closure is omitted to focus solely on front-end improvements. This exclusion allows an isolated evaluation of the proposed keypoint extraction and filtering strategies without the influence of global optimization effects. Nevertheless, the proposed front-end is compatible with standard SLAM frameworks and can be integrated into existing systems without modification. The front-end is redesigned to integrate a lightweight deep feature extractor along with a pyramid-based sampling and optical-flow validation strategy for point selection.

Specifically, when the number of successfully tracked points falls below a predefined threshold, the system triggers a re-detection phase. At this point, an image pyramid is constructed, and a deep feature extractor is applied at each scale to generate candidate keypoints. The image is then divided into uniform grid cells, and keypoints are selected within each cell based on confidence scores to ensure spatial diversity. To further improve the stability of the selected points, forward–backward optical-flow consistency is used to filter out unreliable candidates.

The combination of deep feature extraction and optical-flow-consistency filtering provides a complementary balance between semantic robustness and temporal coherence. While previous hybrid front-ends [11,20] mainly focus on spatial matching or residual weighting without explicit temporal regularization, the proposed formulation introduces forward–backward flow consistency directly at the feature level. Deep features offer high-level invariance to viewpoint and illumination changes, whereas geometric flow consistency enforces stable correspondences over time by penalizing bidirectional motion discrepancies. This integration regularizes feature trajectories, reducing the influence of outliers on the optimization back-end and improving the convergence of visual–inertial residual minimization. Consequently, the proposed hybrid front-end achieves greater temporal stability and optimization consistency than conventional appearance-only or purely geometric approaches.
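The re-detection logic described above can be summarized in a short sketch. This is a minimal illustration under our own assumptions, not the authors' implementation: the function name `frontend_step` and the three callables standing in for the pyramid-plus-extractor stage, the grid selection, and the forward–backward filter are hypothetical placeholders, and details such as suppressing new detections near existing tracks are omitted.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Scored = Tuple[Point, float]  # (pixel position, network confidence)


def frontend_step(
    tracked: List[Point],
    min_tracked: int,
    detect_multiscale: Callable[[], List[Scored]],
    grid_select: Callable[[Sequence[Scored]], List[Point]],
    fb_filter: Callable[[List[Point]], List[Point]],
) -> List[Point]:
    """Return the points forwarded to the back-end for the current frame.

    Re-detection runs only when too few tracks survive; otherwise the
    existing tracks are passed through unchanged.
    """
    if len(tracked) >= min_tracked:
        return list(tracked)
    candidates = detect_multiscale()    # deep extractor on every pyramid level
    selected = grid_select(candidates)  # spatially balanced top-N per cell
    fresh = fb_filter(selected)         # drop forward-backward inconsistent points
    return list(tracked) + fresh
```

Keeping the three stages as injected callables mirrors the claim that the modules are extractor-agnostic: swapping the detector only changes `detect_multiscale`.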

This hybrid front-end leverages deep features while maintaining the spatial coverage and stability of traditional multi-scale detectors. The integration is designed to retain the real-time performance of the original system with only minimal additional computational overhead. Because the back-end is left unchanged, this configuration isolates the effect of the proposed front-end improvements. The two modules (pyramid-based sampling and forward–backward flow checks) are extractor-agnostic and can be reused with other lightweight detectors or descriptors with only minor changes to the optimization back-end.

3.2. Deep Accelerated Feature Extraction

To replace the traditional handcrafted feature detector in VINS-Mono, we adopt a deep-learning-based module that generates keypoints and confidence maps from a single image. Since visual–inertial odometry systems require fast and consistent front-end processing to ensure high real-time performance, the feature extractor must be both lightweight and efficient. To meet these requirements, we use XFeat [9], a modular deep local feature framework designed for rapid keypoint and descriptor extraction with minimal computational overhead. Its streamlined architecture makes it well-suited for tightly coupled VIO pipelines, where delays in feature computation can directly degrade system responsiveness.

In our system, the model takes a grayscale image as input and produces a dense keypoint confidence map along with corresponding local descriptors in a single forward pass. The architecture consists of a shared encoder followed by two parallel heads for confidence and descriptor prediction. Unlike other deep detectors that incorporate scale-awareness through multi-scale convolutions or feature fusion, XFeat omits such mechanisms for efficiency. To compensate, we externally apply an image pyramid during inference to recover scale diversity in the extracted keypoints.

The adopted feature extractor achieves high speed and produces distinctive and robust keypoints, but presents two major limitations when deployed in VIO systems. First, due to the heatmap-like nature of the confidence output, keypoints tend to concentrate around localized high-response regions, resulting in poor coverage in homogeneous or distant areas. Second, the spatial distribution of keypoints is often uneven, which can negatively affect the accuracy and stability of pose estimation.

To overcome these limitations, we introduce a custom feature selection strategy, described in Section 3.3. The key idea is to compensate for the lack of scale-awareness and ensure spatial diversity by constructing an external image pyramid and applying grid-based sampling across multiple scales.

3.3. Multi-Scale Feature Selection with Flow-Based Filtering

To address the limitations of uneven spatial distribution and lack of scale-awareness commonly found in learning-based feature detectors, we propose a multi-scale and flow-guided feature selection strategy. This process is triggered when the number of tracked points falls below a predefined threshold, indicating degraded tracking quality.

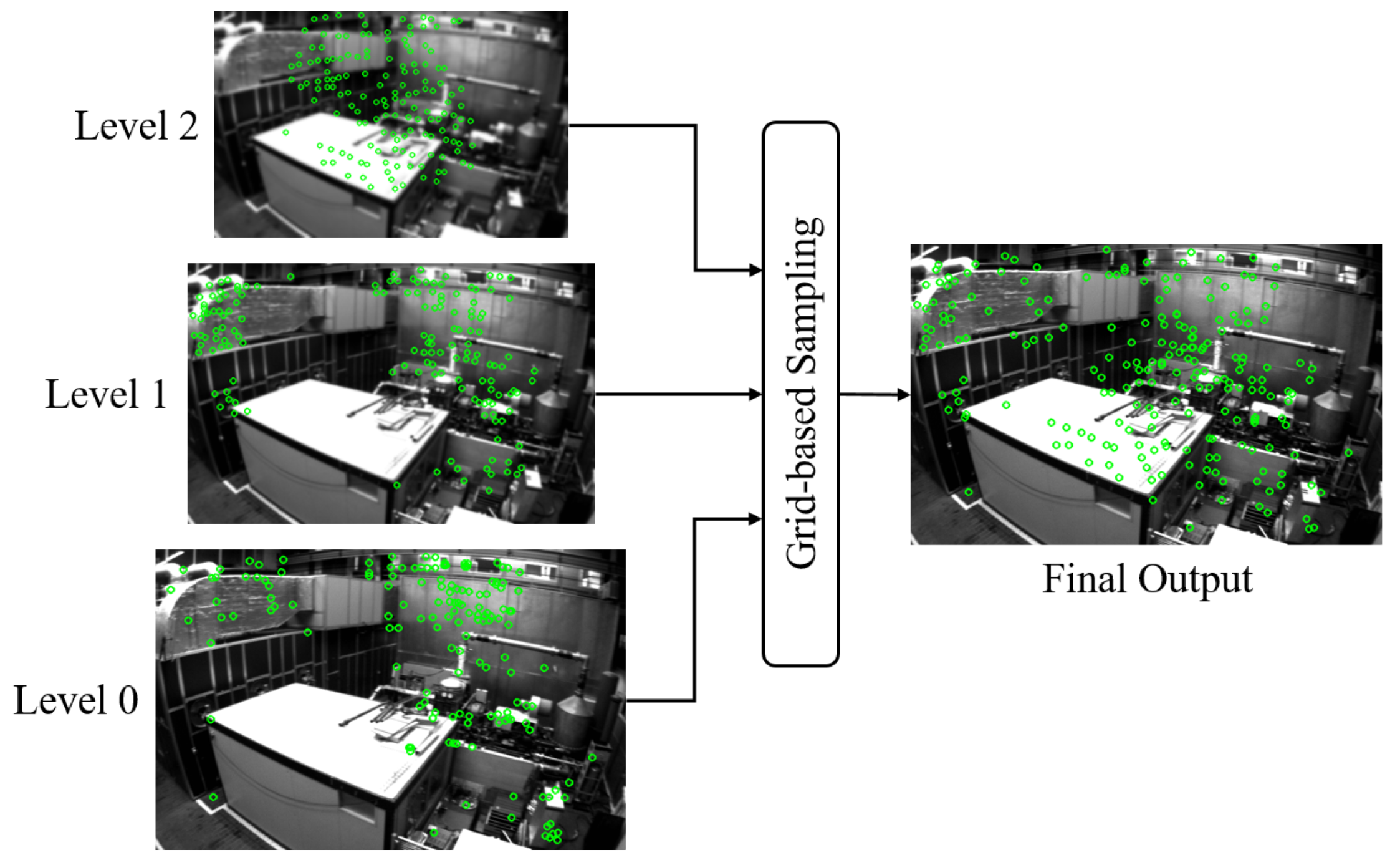

First, we construct an image pyramid from the current grayscale frame. At each pyramid level, a lightweight deep feature extractor is applied to generate dense confidence maps and descriptors. Since the adopted feature extractor omits internal multi-scale processing for efficiency, our use of an explicit image pyramid compensates for the lack of scale diversity in the keypoints.
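A minimal sketch of the pyramid bookkeeping follows. It assumes a factor-of-two downsampling per level (an assumption; the scale factor is not stated here), the three levels shown in Figure 2, and the 752×480 resolution of the EuRoC cameras. It only computes level sizes and maps keypoints detected at a coarser level back to full-resolution pixel coordinates; the image resampling itself and the XFeat forward pass are not shown, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def pyramid_shapes(width: int, height: int, levels: int) -> List[Tuple[int, int]]:
    """(width, height) of each level; level 0 is full resolution.

    Each level halves the previous one, rounding up, which mirrors the
    default output size of OpenCV's pyrDown.
    """
    shapes = [(width, height)]
    for _ in range(1, levels):
        w, h = shapes[-1]
        shapes.append(((w + 1) // 2, (h + 1) // 2))
    return shapes


def to_full_resolution(
    kpts: Sequence[Tuple[float, float]],
    level: int,
    shapes: Sequence[Tuple[int, int]],
) -> List[Tuple[float, float]]:
    """Rescale (x, y) keypoints found at `level` to level-0 pixel coordinates.

    Plain scaling; it ignores the half-pixel offset between pixel-center
    conventions, which a careful implementation may want to handle.
    """
    w0, h0 = shapes[0]
    wl, hl = shapes[level]
    sx, sy = w0 / wl, h0 / hl
    return [(x * sx, y * sy) for x, y in kpts]


shapes = pyramid_shapes(752, 480, 3)
print(shapes)
print(to_full_resolution([(10.0, 20.0)], 1, shapes))
```

Mapping every level to a common full-resolution frame is what allows candidates from different scales to be pooled and filtered with a single set of pixel thresholds.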

To ensure spatial uniformity, each image at every scale level is divided into fixed-size grid cells. Within each grid cell, we select the top-$N$ keypoints based on the confidence score provided by the network. This grid-based sampling helps avoid the typical clustering of keypoints in high-texture regions, which can otherwise lead to unbalanced feature distributions.
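The per-cell top-$N$ rule can be sketched as follows. The cell size, the value of $N$, and the function name `grid_top_n` are illustrative assumptions; the text describes the rule but not its parameter values.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)


def grid_top_n(kpts: List[Keypoint], cell: int, n: int) -> List[Keypoint]:
    """Keep at most `n` highest-confidence keypoints in each `cell` x `cell` block."""
    buckets: Dict[Tuple[int, int], List[Keypoint]] = defaultdict(list)
    for kp in kpts:
        x, y, _ = kp
        buckets[(int(x // cell), int(y // cell))].append(kp)  # integer cell index
    selected: List[Keypoint] = []
    for key in sorted(buckets):  # deterministic cell order
        ranked = sorted(buckets[key], key=lambda k: k[2], reverse=True)
        selected.extend(ranked[:n])  # confidence decides only within a cell
    return selected


kps = [(1.0, 1.0, 0.9), (2.0, 2.0, 0.8), (3.0, 3.0, 0.7), (40.0, 1.0, 0.1)]
print(grid_top_n(kps, cell=32, n=2))
```

In this toy input, the three keypoints clustered in the first 32×32 cell are reduced to the two most confident, while the isolated low-confidence point in the neighbouring cell survives. Favouring coverage over global confidence in this way is exactly what the grid constraint is meant to achieve.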

Figure 2 presents a visualization of the multi-scale feature extraction and selection pipeline. The left column shows the keypoints extracted at three different levels of the image pyramid, each capturing features at different spatial resolutions. It is evident that the spatial distribution and density of keypoints vary significantly across levels, reflecting their complementary nature. The rightmost image displays the final set of selected keypoints after grid-based aggregation. The result demonstrates improved spatial coverage while avoiding over-concentration of keypoints in textured regions. This spatial balancing plays a critical role in improving tracking robustness, especially under challenging conditions such as viewpoint changes or partial occlusions.

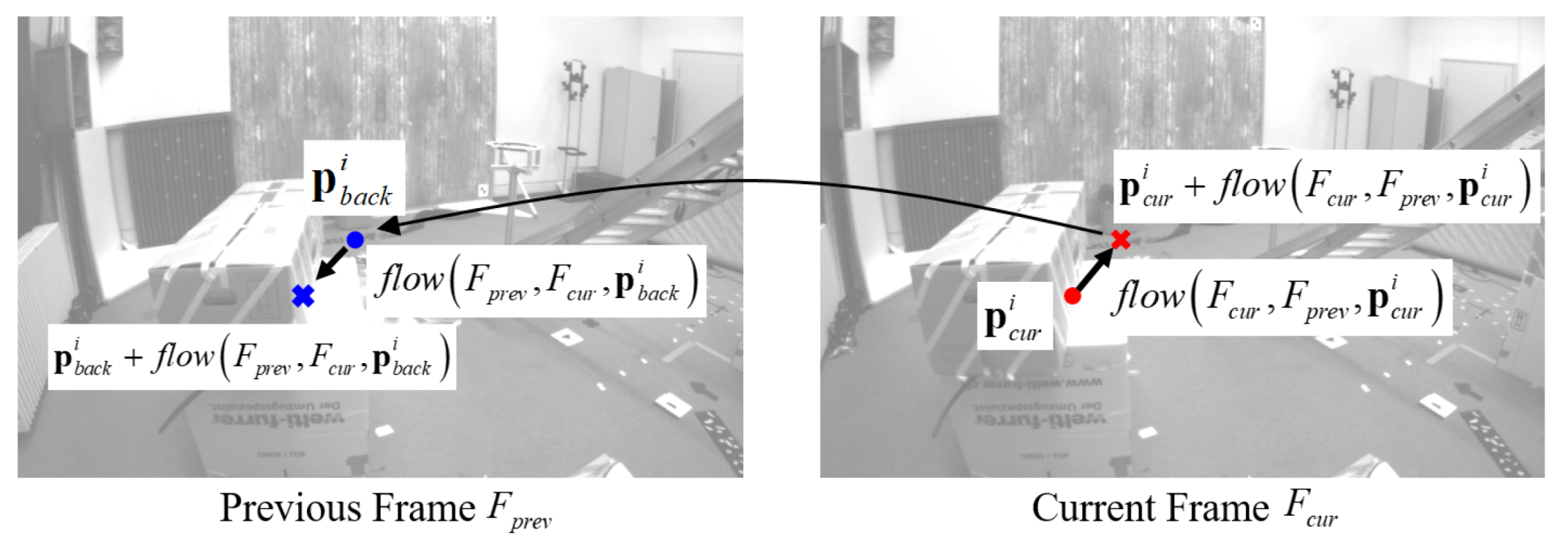

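Following the multi-scale extraction and spatial sampling, we apply a forward–backward flow-consistency check to filter out temporally unstable keypoints. For each candidate $\mathbf{p}_i$ in the current image $I_t$, we compute the forward flow to the previous image $I_{t-1}$ to obtain the corresponding point $\mathbf{p}_i'$:

$$\mathbf{p}_i' = \mathrm{flow}\left(I_t, I_{t-1}, \mathbf{p}_i\right) \tag{1}$$

It should be noted that the function $\mathrm{flow}(\cdot)$ in Equation (1) refers to a sparse optical flow estimated for detected keypoints using the pyramidal Lucas–Kanade (KLT) algorithm [23]. Unlike dense flow estimation, which computes pixel-wise motion fields, this sparse formulation focuses on maintaining accurate trajectories for the selected features that directly contribute to visual–inertial optimization. The approach provides sub-pixel accuracy for individual keypoint motion while ensuring computational efficiency for real-time processing. Consequently, the forward–backward consistency check is applied per keypoint track to validate temporal stability without computing dense flow across the entire image.

We then compute the backward flow from $\mathbf{p}_i'$ in $I_{t-1}$ back to $I_t$ to obtain $\hat{\mathbf{p}}_i$, and measure the discrepancy between the original point and the round-trip result:

$$\hat{\mathbf{p}}_i = \mathrm{flow}\left(I_{t-1}, I_t, \mathbf{p}_i'\right), \qquad e_i = \left\lVert \mathbf{p}_i - \hat{\mathbf{p}}_i \right\rVert_2 \tag{2}$$

Denoting the forward and backward displacements by $\mathbf{f}_{t \to t-1}(\mathbf{p}_i) = \mathbf{p}_i' - \mathbf{p}_i$ and $\mathbf{f}_{t-1 \to t}(\mathbf{p}_i') = \hat{\mathbf{p}}_i - \mathbf{p}_i'$, the round-trip offset is $\hat{\mathbf{p}}_i - \mathbf{p}_i = \mathbf{f}_{t \to t-1}(\mathbf{p}_i) + \mathbf{f}_{t-1 \to t}(\mathbf{p}_i')$. The consistency error can therefore be written equivalently as the norm of the sum of the forward and backward flows, which vanishes for a perfectly consistent track:

$$e_i = \left\lVert \mathbf{f}_{t \to t-1}(\mathbf{p}_i) + \mathbf{f}_{t-1 \to t}(\mathbf{p}_i') \right\rVert_2 \tag{3}$$

Keypoints with $e_i$ exceeding a predefined threshold $\tau$ are rejected to eliminate temporally inconsistent or geometrically unreliable candidates. As shown in Figure 3, this filtering process enhances stability and leads to more reliable tracking by removing temporally inconsistent keypoints. While features are extracted from a multi-scale image pyramid, all keypoint positions and flow estimates are expressed at the highest (full) resolution before the forward–backward consistency error is computed. This ensures that the consistency evaluation benefits from the higher accuracy of full-resolution flow estimation and eliminates the need for level-wise scaling of the threshold.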
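A minimal sketch of the forward–backward test described above follows. It assumes the two flow evaluations are available as callables that map a point to its tracked position in the other image. In practice these would be two calls to a pyramidal Lucas–Kanade tracker, for example OpenCV's `calcOpticalFlowPyrLK` run once in each direction with the images swapped. Such a tracker also returns a per-point status that should be used to drop lost points. The callables, the function name, and the toy flows below are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Point = Tuple[float, float]
FlowFn = Callable[[Point], Point]  # maps a point to its tracked position in the other image


def forward_backward_filter(
    points: List[Point], flow_fwd: FlowFn, flow_bwd: FlowFn, tau: float
) -> Tuple[List[Point], List[float]]:
    """Keep points whose round-trip error e_i = ||p_i - p_hat_i|| is at most tau."""
    kept: List[Point] = []
    errors: List[float] = []
    for p in points:
        p_prev = flow_fwd(p)        # forward flow: I_t -> I_{t-1}
        p_back = flow_bwd(p_prev)   # backward flow: I_{t-1} -> I_t
        e = math.hypot(p[0] - p_back[0], p[1] - p_back[1])
        errors.append(e)
        if e <= tau:
            kept.append(p)
    return kept, errors


# Toy flows: a uniform 1-pixel shift that the backward pass undoes exactly,
# except for points on the right side, whose return trip lands 3 px off.
fwd = lambda p: (p[0] + 1.0, p[1])
bwd = lambda q: (q[0] - 1.0, q[1]) if q[0] < 50 else (q[0] - 1.0, q[1] + 3.0)
kept, errs = forward_backward_filter([(10.0, 10.0), (60.0, 5.0)], fwd, bwd, tau=1.0)
print(kept, errs)
```

Because the check needs only two sparse flow evaluations per candidate, its cost grows with the number of keypoints rather than the number of pixels.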

By combining pyramid-based deep feature extraction, grid-constrained sampling, and temporally consistent filtering, the proposed strategy yields robust, evenly distributed, and geometrically stable keypoints suitable for reliable VIO front-end operation.

3.4. Implementation Details

Our system builds on the open-source VINS-Mono framework, replacing its original feature module with the proposed deep feature extraction and selection pipeline. We adopt the pre-trained XFeat weights for efficient and stable inference. The deep feature extractor is integrated through TorchScript-based deployment, which allows efficient GPU inference without requiring a Python runtime. The image pyramid is constructed on the fly using standard OpenCV functions, and feature extraction is performed independently at each scale level. The confidence threshold and the number of keypoints selected per grid cell are tunable parameters that can be adjusted to reach the desired trade-off between accuracy and computational load.

For tracking, we retain the original optical-flow-based method from VINS-Mono, which uses a pyramidal Lucas–Kanade tracker. Flow-guided filtering is implemented by computing bidirectional flow between frames and measuring the round-trip displacement of each forward–backward match. Points whose inconsistency exceeds a threshold are removed before being passed to the back-end estimator.
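For readers unfamiliar with the tracker, a single-level, single-iteration Lucas–Kanade step for one keypoint can be sketched as below. This is not the pyramidal implementation used by VINS-Mono (OpenCV's `calcOpticalFlowPyrLK`, which adds coarse-to-fine levels, iterative refinement, and a tracking-status output). The synthetic Gaussian-blob images and the function names are hypothetical, chosen only so the example runs without external libraries.

```python
import math
from typing import Callable, Tuple

Image = Callable[[int, int], float]  # intensity lookup I(x, y)


def gaussian_blob(cx: float, cy: float, sigma: float,
                  shift: Tuple[float, float] = (0.0, 0.0)) -> Image:
    """Synthetic image: a Gaussian blob centred at (cx, cy) moved by `shift`."""
    dx, dy = shift

    def intensity(x: int, y: int) -> float:
        return math.exp(-((x - cx - dx) ** 2 + (y - cy - dy) ** 2) / (2.0 * sigma ** 2))

    return intensity


def lucas_kanade_step(img0: Image, img1: Image, px: int, py: int,
                      radius: int) -> Tuple[float, float]:
    """Estimate the displacement (ux, uy) of keypoint (px, py) from img0 to img1.

    Solves the 2x2 normal equations A d = -b, where A is the structure tensor
    of img0 over a square window and b correlates gradients with img1 - img0.
    """
    sxx = sxy = syy = bx = by = 0.0
    for y in range(py - radius, py + radius + 1):
        for x in range(px - radius, px + radius + 1):
            ix = 0.5 * (img0(x + 1, y) - img0(x - 1, y))  # central differences
            iy = 0.5 * (img0(x, y + 1) - img0(x, y - 1))
            it = img1(x, y) - img0(x, y)                  # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            bx += ix * it
            by += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("textureless window: structure tensor is singular")
    # Cramer's rule for [[sxx, sxy], [sxy, syy]] d = -[bx, by]
    ux = (-bx * syy + by * sxy) / det
    uy = (-by * sxx + bx * sxy) / det
    return ux, uy


img0 = gaussian_blob(16.0, 16.0, 4.0)
img1 = gaussian_blob(16.0, 16.0, 4.0, shift=(0.3, -0.2))
print(lucas_kanade_step(img0, img1, 16, 16, 5))  # close to the true shift (0.3, -0.2)
```

The singular-matrix guard reflects why flat regions are hard to track: without gradients in two directions the displacement is not observable. It is also one reason the grid selection still relies on the network's confidence within each cell.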

The feature extraction and selection process is triggered only when the number of tracked points drops below a certain threshold. This strategy helps maintain high tracking quality while reducing unnecessary computation. All modules run in real time on a desktop platform with GPU acceleration, and further details on runtime performance and resource usage are provided in Section 4.

4. Experiment

We evaluate the proposed system on the EuRoC MAV dataset, a widely used benchmark for visual–inertial odometry that provides synchronized stereo images, IMU data, and accurate ground truth for six-degree-of-freedom pose estimation. We compare it against several representative visual–inertial odometry (VIO) systems. VINS-Mono [4] serves as a strong baseline for traditional tightly coupled monocular VIO based on hand-crafted features. In addition, we include OKVIS [12], another classical keyframe-based VIO system that relies on BRISK descriptor matching and stereo input. VINS-Fusion [14,15] extends VINS-Mono to support multiple sensor modalities and provides improved robustness in diverse conditions. Furthermore, we compare our method with two recent learning-based systems: SuperVINS [11], which integrates SuperPoint features with a LightGlue matcher to enhance correspondence robustness within an optimization-based pipeline, and VIO-DualProNet [24], which employs a dual-network fusion of visual and inertial data for end-to-end motion estimation. These recent approaches represent state-of-the-art learning-augmented VIO frameworks and serve as strong references for assessing both the accuracy and computational efficiency of the proposed method.

All experiments are conducted on a desktop equipped with an Intel Core i9-14900K CPU, 32 GB of RAM, and an NVIDIA RTX 5080 GPU. Although the evaluation is performed on a high-end desktop, the proposed system is designed with lightweight modules, including the XFeat-based feature extractor and an efficient filtering scheme. Owing to this design, the method maintains real-time operation and is intended to run reliably on lower-end computing platforms without significant degradation in accuracy. Each method with a publicly available implementation is executed on the same hardware under identical conditions to ensure fairness; for methods without publicly available implementations, the results reported in their original papers are adopted.

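The trajectory accuracy is evaluated using the root-mean-square error (RMSE) of the absolute trajectory error (ATE), computed after aligning the estimated trajectory with the ground truth using a standard SE(3) alignment procedure [25]. The RMSE is defined as

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left\lVert \operatorname{trans}\left(\mathbf{T}_{\mathrm{gt},i}^{-1}\,\hat{\mathbf{T}}_{i}\right)\right\rVert_2^{2}} \tag{4}$$

where $\hat{\mathbf{T}}_i$ denotes the estimated pose at frame $i$ after alignment, $\mathbf{T}_{\mathrm{gt},i}$ denotes the corresponding ground-truth pose, $\operatorname{trans}(\cdot)$ extracts the translational component of a relative pose, and $N$ is the total number of evaluated frames.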
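Once the estimate has been aligned to the ground truth (the alignment step itself is assumed to have been done beforehand and is not shown), the translational part of the relative pose between a ground-truth pose and the aligned estimate is the position difference rotated into the ground-truth frame. Its norm therefore equals the Euclidean distance between the two positions. Under that assumption the RMSE reduces to the sketch below, which takes time-associated, already aligned position lists; the function name is hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def ate_rmse(est_xyz: Sequence[Vec3], gt_xyz: Sequence[Vec3]) -> float:
    """Translational ATE RMSE for aligned, time-associated positions."""
    if len(est_xyz) != len(gt_xyz) or not est_xyz:
        raise ValueError("need two non-empty trajectories of equal length")
    sq = 0.0
    for (ex, ey, ez), (gx, gy, gz) in zip(est_xyz, gt_xyz):
        sq += (ex - gx) ** 2 + (ey - gy) ** 2 + (ez - gz) ** 2  # squared position error
    return math.sqrt(sq / len(est_xyz))


# One frame off by a 3-4-5 triangle, one frame exact: sqrt(25 / 2)
print(ate_rmse([(3.0, 4.0, 0.0), (0.0, 0.0, 0.0)], [(0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]))
```

Because the rotation drops out of the norm, orientation errors do not enter this metric directly; they affect it only through the positions they induce.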

To assess the effectiveness of our contributions, we also report results from an internal ablation: a version of our system that uses deep features extracted from a pretrained network but omits the proposed flow-consistency filtering and spatial sampling steps (denoted as Ours (raw deep features)). Because this variant removes both steps at once, it isolates their combined contribution to the proposed front-end; the individual effect of flow-consistency filtering is examined separately in Section 4.4. In addition, an ablation study is conducted to analyze the impact of key parameters that significantly influence the performance of the proposed system, including the maximum number of tracked features, the grid size for deep feature extraction, the number of pyramid levels, and the flow-consistency threshold. For other parameters related to the camera configuration and sensor noise characteristics, the same values as those used in VINS-Mono are adopted to ensure fairness and consistency across methods. A detailed summary of the IMU noise parameters used in this study is provided in Appendix A (Table A1). All methods are evaluated without loop closure and use monocular input only, ensuring a fair comparison under consistent back-end optimization frameworks.

4.1. Quantitative Results

Table 1 presents the absolute trajectory RMSE for all evaluated methods on the EuRoC MAV dataset. The results reveal that the proposed method achieves robust and consistent tracking performance across diverse scenarios. To provide a clearer quantitative comparison, the table also reports the average percentage improvement of the proposed method over VINS-Mono, which is the primary baseline.

In sequences such as V102 and V201, which involve challenging visual conditions like dynamic illumination or aggressive motion, the proposed method outperforms all baselines. In V102, the RMSE is reduced, outperforming both VINS-Mono and VINS-Fusion. This improvement is attributed to the grid-based keypoint selection, which ensures that features are spatially well-distributed even in regions with low texture or uneven illumination. Similarly, in V201, which involves rapid camera motion and potential motion blur, our method outperforms traditional baselines and ablation variants. The forward–backward flow-consistency filtering is particularly effective here in eliminating unstable keypoints caused by blur or occlusion.

The benefit of the proposed front-end becomes especially clear when comparing it to the Ours (raw deep features) variant. In sequences like V203, where raw deep features alone lead to noticeable degradation, our full method reduces the error by suppressing temporally inconsistent keypoints. This shows the importance of the filtering mechanism, which prevents unstable keypoints from contributing to drift in dynamic scenes.

On the other hand, in more regular and static sequences such as MH03 and MH04, the performance gap between methods is narrower. These sequences offer abundant stable features, making handcrafted features such as the Shi–Tomasi corners used by VINS-Mono sufficient for accurate tracking. Nonetheless, our method maintains competitive accuracy without relying on stereo input or loop closure, demonstrating its general applicability.

It is also worth noting the comparison with VINS-Fusion, a widely adopted visual–inertial odometry framework that extends VINS-Mono by enhancing optimization stability and feature management. Although VINS-Fusion generally serves as a strong baseline in the community, our method shows competitive or even superior results on several sequences. In particular, on V201 and V202, the proposed pipeline outperforms VINS-Fusion, suggesting that the integration of learned features and temporal filtering enhances robustness and consistency even under challenging conditions. Overall, these findings confirm that the proposed approach achieves accuracy comparable to that of state-of-the-art VIO systems while maintaining a lightweight monocular configuration.

Although the proposed system achieves consistent improvements across most sequences, a few challenging cases, such as MH04 and V203, exhibit slightly increased drift. These sequences contain severe motion blur and abrupt illumination changes, which can distort optical-flow estimation and reduce temporal consistency across frames. In such conditions, a small number of temporally inconsistent keypoints may remain even after flow-consistency filtering, resulting in minor localization drift. The system may also experience reduced robustness in environments with repetitive textures or partial occlusion, where spatially balanced sampling cannot fully prevent feature ambiguity or mismatched correspondences. These observations highlight the inherent limitations of deep feature-based front-ends in visually degraded environments and emphasize the need for more adaptive perception strategies. Such effects may be mitigated by photometric normalization, contrast-invariant feature encoding, or adaptive flow-thresholding strategies; future research will also focus on integrating semantic priors and blur- or occlusion-aware feature weighting mechanisms to improve resilience under such challenging conditions.

In contrast to recent deep learning-based approaches, the proposed system exhibits comparable or even superior performance on several sequences, such as MH03, MH04, and V102. While learning-based pipelines such as SuperVINS and VIO-DualProNet show strong results in visually challenging scenarios, they generally rely on heavier network architectures and incur greater computational cost. Our method, on the other hand, achieves competitive accuracy using a lightweight hybrid front-end that combines compact deep features with optical-flow-based filtering. This demonstrates that stable and spatially balanced feature selection can yield robustness on par with more complex learning-based frameworks while preserving real-time efficiency.

Overall, these results indicate that the proposed system not only improves performance in difficult scenarios where traditional features struggle, but also retains robustness and accuracy in favorable conditions. On average, the method achieves an approximately 21% lower trajectory error than VINS-Mono, confirming its effectiveness across both the Machine Hall and Vicon Room sequences. By integrating deep learning-based feature extraction with principled spatial and temporal filtering, the system achieves a well-balanced VIO pipeline suitable for diverse real-world environments.
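The text does not state how the average improvement over VINS-Mono is aggregated, and the two common definitions can give noticeably different numbers. The sketch below computes both, using made-up RMSE values for two hypothetical sequences (not the values of Table 1); the function names are illustrative.

```python
from statistics import mean
from typing import Dict


def mean_relative_improvement(baseline: Dict[str, float], ours: Dict[str, float]) -> float:
    """Average of per-sequence RMSE reductions, in percent."""
    return 100.0 * mean([1.0 - ours[s] / baseline[s] for s in baseline])


def improvement_of_mean(baseline: Dict[str, float], ours: Dict[str, float]) -> float:
    """Reduction of the mean RMSE over all sequences, in percent."""
    base_mean = mean([baseline[s] for s in baseline])
    ours_mean = mean([ours[s] for s in baseline])
    return 100.0 * (1.0 - ours_mean / base_mean)


# Hypothetical RMSE values in metres for two made-up sequences (not Table 1 data).
baseline = {"seq_a": 0.20, "seq_b": 0.10}
ours = {"seq_a": 0.18, "seq_b": 0.06}
print(round(mean_relative_improvement(baseline, ours), 2))  # per-sequence average
print(round(improvement_of_mean(baseline, ours), 2))        # ratio of means
```

With these toy numbers, averaging per-sequence reductions gives 25%, whereas comparing mean RMSEs gives 20%. The first definition weights every sequence equally, while the second is dominated by sequences with large absolute errors, so stating which one is used makes such percentages comparable.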

Taken together, Table 1 and Figure 4 suggest that robustness gains stem from the synergy between spatial coverage (grid-constrained selection across pyramid scales) and temporal consistency (forward–backward flow filtering). The former prevents over-concentration in textured regions and secures motion observability across directions, while the latter removes transient, blur- or occlusion-induced instabilities before they propagate to the optimizer. Notably, the method remains competitive against stereo-enabled baselines while using monocular input only, which is attractive for platforms where additional sensors or calibration effort are impractical.

4.2. Impact of Feature Selection Strategy

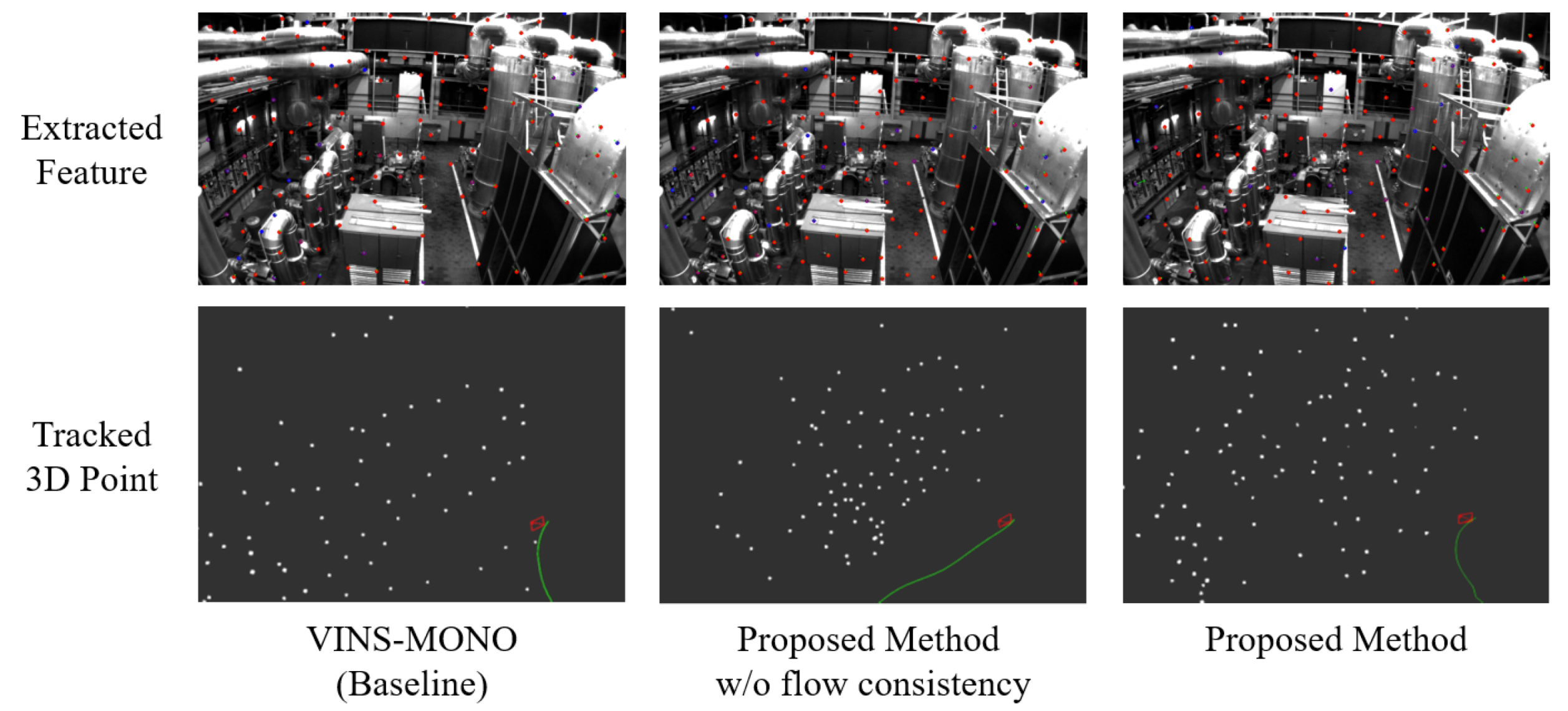

The quality of visual–inertial odometry (VIO) strongly depends on the robustness, spatial distribution, and temporal consistency of selected keypoints. Learned features often exhibit high repeatability and semantic invariance, but they tend to concentrate in highly textured regions, leading to spatial imbalance and potential instability across frames. Our method explicitly addresses these limitations by combining three core strategies: (1) multi-scale deep feature extraction to capture keypoints across different resolutions, (2) grid-based spatial sampling to enforce even distribution, and (3) optical-flow-based consistency filtering to eliminate geometrically unstable or temporally inconsistent points.

Figure 5 provides a qualitative comparison on the MH05 sequence of the EuRoC dataset. The raw deep feature baseline shows a high density of keypoints clustered in certain regions, particularly around textured edges and corners, while large homogeneous regions remain underrepresented. In contrast, our method yields more uniform spatial coverage across the image due to grid-level selection across all scales. This balanced distribution ensures that motion in all directions is adequately observed, reducing the risk of estimation degradation due to local feature dropout. To further verify temporal stability, the keypoint survival rate was analyzed across consecutive frames. The proposed front-end maintained an average survival rate of 78.4%, compared to 62.1% for the baseline without flow-consistency filtering. This confirms that the filtering step effectively enhances feature longevity and tracking stability over time.
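The survival rate is not formally defined in the text. One plausible definition, assumed here, is the fraction of track IDs present in frame $t$ that are still tracked in frame $t+1$, averaged over consecutive frame pairs. A sketch with hypothetical track IDs and a hypothetical function name:

```python
from typing import List, Set


def mean_survival_rate(track_ids_per_frame: List[Set[int]]) -> float:
    """Average fraction of track IDs in frame t that are still tracked in frame t+1."""
    rates = []
    for prev, curr in zip(track_ids_per_frame, track_ids_per_frame[1:]):
        if prev:  # skip frames without any active tracks
            rates.append(len(prev & curr) / len(prev))
    if not rates:
        raise ValueError("need at least two frames, the first of a pair having tracks")
    return sum(rates) / len(rates)


# Frame 0 -> 1 keeps 3 of 4 tracks; frame 1 -> 2 keeps 2 of 4 (IDs 1 and 5).
frames = [{1, 2, 3, 4}, {1, 2, 3, 5}, {1, 5, 6}]
print(mean_survival_rate(frames))
```

Newly detected IDs (such as 5 and 6 above) do not raise the rate, so under this definition the metric isolates track persistence from re-detection frequency.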

Furthermore, the incorporation of flow-consistency filtering significantly improves the temporal reliability of selected keypoints. By enforcing forward–backward consistency, the system effectively discards outliers caused by motion blur, occlusion, or viewpoint-induced descriptor drift. As a result, our method maintains a denser and more stable set of 3D landmarks over time, as visualized in the bottom row of Figure 5.

Together, these strategies demonstrate the importance of a principled front-end design. Instead of relying purely on raw deep features or handcrafted descriptors, our approach leverages the strengths of learned representations while addressing their limitations, resulting in a more robust and consistent VIO pipeline.

However, limitations remain. Flow consistency hinges on accurate correspondences; under severe blur, abrupt exposure changes, or large unmodeled parallax, its rejection power diminishes and some outliers persist. Highly repetitive textures can also stress the grid selection by trading off coverage and descriptor distinctiveness. These cases suggest a need to add complementary cues (e.g., semantics or depth) and scene-adaptive thresholds to further increase robustness.

4.3. Runtime Analysis

To assess the computational efficiency of the proposed front-end, we measured the runtime of its two main components: deep feature extraction (including multi-scale processing and grid-based keypoint selection) and optical-flow-based consistency filtering. On average, the feature extraction stage requires 33.73 ms per frame, covering the computation of multi-scale feature maps, keypoint extraction, and spatial sampling. The flow-consistency step adds 0.62 ms per frame for the forward–backward flow computation and the per-keypoint consistency checks. End-to-end, the front-end takes ms per frame ( FPS), operating within real-time constraints on the evaluated desktop GPU. In addition, a computational resource analysis was performed to evaluate adaptability to embedded platforms. The XFeat extractor requires less than 150 MB of GPU memory during inference and utilizes fewer than 20% of the available CUDA cores on an RTX-class GPU, while the KLT-based optical-flow-consistency module runs entirely on the CPU at real-time rates. This footprint suggests that real-time operation is attainable on embedded platforms with limited computational resources. Further speedups can be achieved through mixed-precision inference or pruning.

The breakdown shows that flow consistency contributes a negligible share of the total (), indicating that latency is dominated by the extractor. In practice, further speedups are therefore most effectively sought on the extraction path (e.g., lighter backbones or fewer pyramid levels) while keeping the lightweight filtering intact. This aligns with the empirical trend that robustness gains come primarily from enforcing spatial coverage and temporal stability rather than from expensive post-processing. Even under reduced-frame-rate conditions, the proposed system maintains stable pose estimation. The flow-consistency filtering enforces temporal smoothness across consecutive frames, while the feature selection strategy prioritizes long-lived correspondences with consistent motion. As a result, the system remains accurate and reliable even when the image sampling frequency is lowered, demonstrating robustness for low-frame-rate cameras or resource-constrained hardware.
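As a rough arithmetic check, and only under our own simplifying assumption that the end-to-end front-end time consists of nothing but the two measured stages (any additional per-frame overhead would lengthen it), the figures combine as follows. These derived values are illustrative and are not the measured end-to-end numbers.

$$t_{\text{front}} \approx 33.73\ \text{ms} + 0.62\ \text{ms} = 34.35\ \text{ms}, \qquad \frac{1000\ \text{ms/s}}{34.35\ \text{ms}} \approx 29.1\ \text{FPS}, \qquad \frac{0.62\ \text{ms}}{34.35\ \text{ms}} \approx 1.8\%$$

Any extra overhead would only shrink the filtering share further, which is consistent with the observation that latency is dominated by the extractor.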

For deployment on embedded or low-power platforms, additional engineering may be required to keep the end-to-end latency within real-time bounds at lower budgets, such as model compression (quantization or pruning), kernel fusion, and pipeline scheduling. Because the proposed modules are decoupled from the back-end, such optimizations can proceed without modifying the estimator.

4.4. Ablation Study

4.4.1. Parameter Sensitivity

To validate the robustness of the proposed method and analyze the impact of key parameters, we conducted an ablation study by varying the maximum number of extracted features, the grid size, and the number of pyramid levels, while comparing the full pipeline against a variant without flow-consistency filtering. As summarized in Table 2, the full pipeline consistently outperforms the variant without filtering across all settings and sequences. When the maximum number of features is limited to 300, the accuracy decreases slightly compared to the default configuration, indicating that the proposed filtering benefits from a sufficient feature pool but remains stable even under reduced density. Larger grid sizes slightly degrade performance due to lower spatial diversity, whereas reducing the number of pyramid levels increases drift on long sequences such as MH05. Overall, the default configuration achieves the best trade-off between accuracy and computational efficiency and maintains strong performance across all tested sequences without dataset-specific tuning. Because the full pipeline outperforms the unfiltered variant at every feature budget, the observed gain is not merely due to higher feature density but stems from the improved robustness of the flow-based filtering mechanism.

4.4.2. Effect of Flow-Consistency Filtering

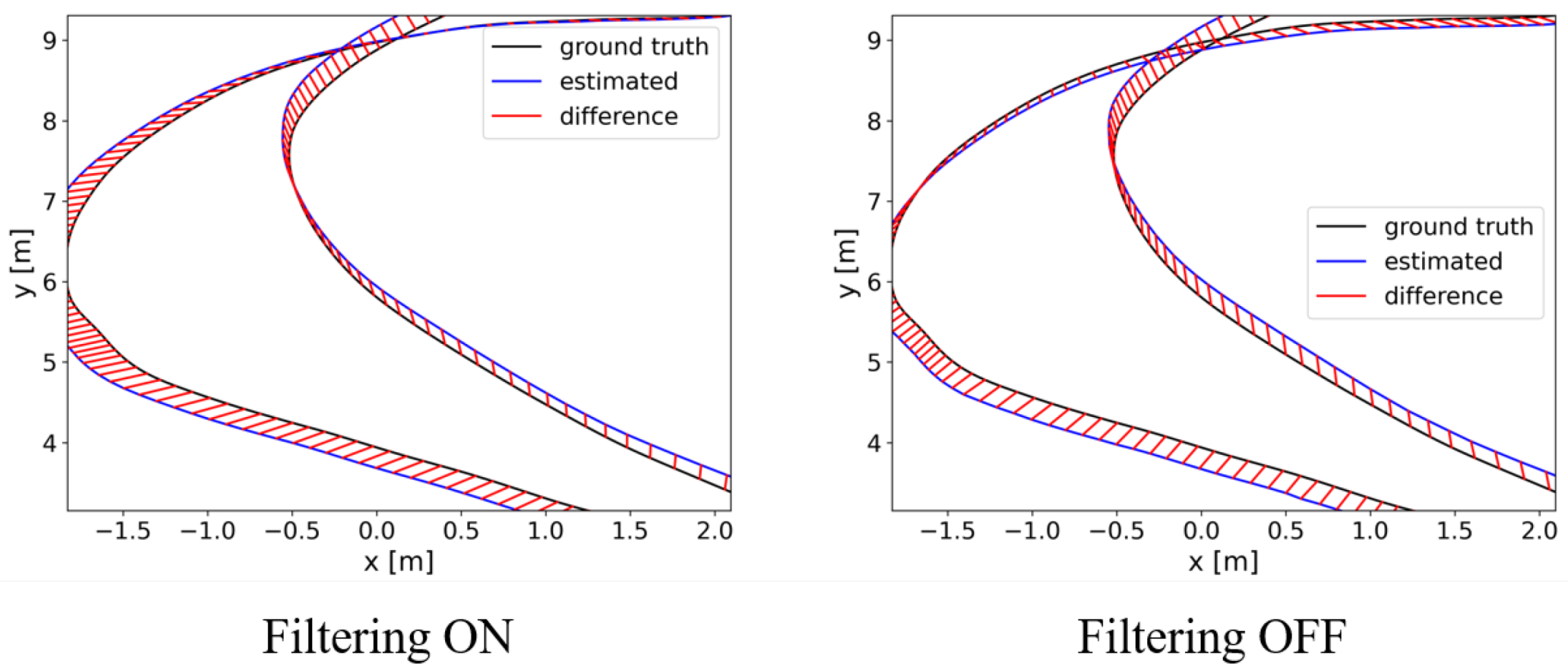

To examine the impact of the proposed filtering module, an ablation experiment was performed on the EuRoC MH04 sequence.

Figure 6 presents side-by-side trajectories estimated with filtering (left) and without filtering (right). Although both results follow a similar overall path, the filtered version shows smoother motion and less local drift, especially in curved regions where deviations are most evident. This visual comparison clearly demonstrates that enforcing bidirectional flow consistency enhances temporal stability in feature tracking.

Table 3 further summarizes the quantitative results, showing that despite a slight reduction in the average number of tracked keypoints, the overall trajectory accuracy improves, confirming the benefit of the filtering step.

4.4.3. Flow-Consistency Threshold

To evaluate the influence of the flow-consistency threshold ($\tau$), an ablation study was conducted on the EuRoC MAV dataset using sequences MH03, MH05, and V202.

Table 4 reports the trajectory RMSE under different threshold settings. Without the consistency check, all sequences show noticeably higher errors due to temporally unstable matches. When a strict threshold is applied, unstable points are successfully filtered out, yielding improved accuracy; however, over-filtering may remove useful keypoints, slightly reducing robustness in dynamic frames. In contrast, a relaxed threshold allows noisy flows to remain, increasing trajectory drift. The threshold adopted as the default for all experiments in this paper achieves the lowest average ATE across sequences in Table 4, providing a good trade-off between rejecting inconsistent matches and preserving sufficient spatial coverage, and thereby balancing precision and stability.

5. Conclusions

We present a visual–inertial odometry (VIO) system that augments a lightweight deep feature extractor with a multi-scale image pyramid, grid-based keypoint selection for spatial coverage, and forward–backward flow-consistency checks for temporal stability. The front-end directly targets two common weaknesses of learned features, namely spatial clustering and frame-to-frame instability, while preserving real-time operation and requiring only modest computational overhead.

Evaluations on the EuRoC MAV benchmark demonstrate that the proposed method achieves up to 19.35% lower RMSE compared with existing feature-based and learning-based VIO systems, showing competitive and robust tracking across diverse scenarios. The method reduces drift in challenging sequences and remains strong even without loop closure or stereo input. This indicates that a simple and principled front-end can substantially stabilize optimization-based VIO. These results support the practicality of incorporating learned perception modules into classical geometric pipelines when paired with lightweight selection and filtering.

The proposed front-end shows practical potential for deployment in real-world robotic and mobile platforms. The lightweight architecture is designed to sustain real-time localization on embedded systems with limited computational resources, although such deployment may require the additional engineering discussed in Section 4.3. These results indicate that the approach provides a solid foundation for reliable visual–inertial navigation in real-world environments. Future research will focus on extending the framework to multi-sensor configurations, including LiDAR, visual, and inertial inputs, and enhancing robustness in dynamic environments such as urban traffic scenes or indoor spaces with moving agents. To this end, semantic and depth cues will be integrated to improve environmental understanding and motion separation. Semantic information will guide keypoint selection toward stable, static regions, while depth priors will refine spatial distribution in texture-sparse or partially occluded areas. Quantitative evaluation will be conducted using metrics such as trajectory RMSE, dynamic-object recall, and scene-level consistency to assess the impact of these extensions. Additional lightweight branches can operate in parallel with the front-end to preserve real-time performance. Further directions include self-supervised and domain-adaptive training for temporal consistency, as well as hardware-aware optimization for efficient embedded deployment.