1. Introduction

The rapid advancement of artificial intelligence in agriculture has created new opportunities for food security and sustainable farming. Modern precision agriculture relies on intelligent advisory systems to provide timely, context-specific recommendations, and the effectiveness of these systems depends on accurately understanding farmers’ natural language queries, which often involve domain-specific terminology and context-dependent expressions. Intent recognition and slot filling play a crucial role in semantic modeling and in building efficient question-answering systems [1,2].

Agricultural question-answering systems face unique challenges that distinguish them from conventional natural language processing applications. First, the agricultural domain contains a large specialized vocabulary, covering crop varieties, growth stages, disease classifications, treatment methods, and environmental factors, that is rarely found in general corpora [3,4,5]. Second, farmers’ queries often exhibit complex semantic structures in which intent and entity information are closely intertwined; for example, the query “What is the growth status during the fruiting stage?” requires both intent recognition and entity extraction [6]. Third, agricultural decision-making requires the integration of textual queries with environmental data such as soil conditions, climate patterns, and seasonal variations in order to provide actionable recommendations.

In natural language understanding research, methods for intent recognition and slot filling are typically categorized into pipeline approaches and joint modeling frameworks [7,8,9]. Pipeline methods treat the two tasks as independent, which can easily lead to error propagation and fails to exploit their inherent interdependencies [10,11]. Joint modeling frameworks alleviate this issue to some extent, yet most still rely on general-purpose language representations, which are insufficient to capture the specialized semantics and terminology of agricultural contexts [12,13]. Although pre-trained language models such as BERT have achieved remarkable success in NLP tasks in recent years [14,15], their performance often degrades in specialized domains like agriculture without domain adaptation strategies. For instance, on a certain agricultural knowledge platform, the daily number of user queries has exceeded several thousand, directly impacting production management and yield prediction, which highlights the urgent need for efficient agricultural question-answering systems. Previous studies have shown that hybrid architectures combining BERT with convolutional neural networks are effective in applications such as rumor detection and text classification [16], while attention mechanisms have proven valuable in selectively focusing on salient information [17]. However, research on systematically integrating these advanced architectures with agricultural domain knowledge remains relatively limited.

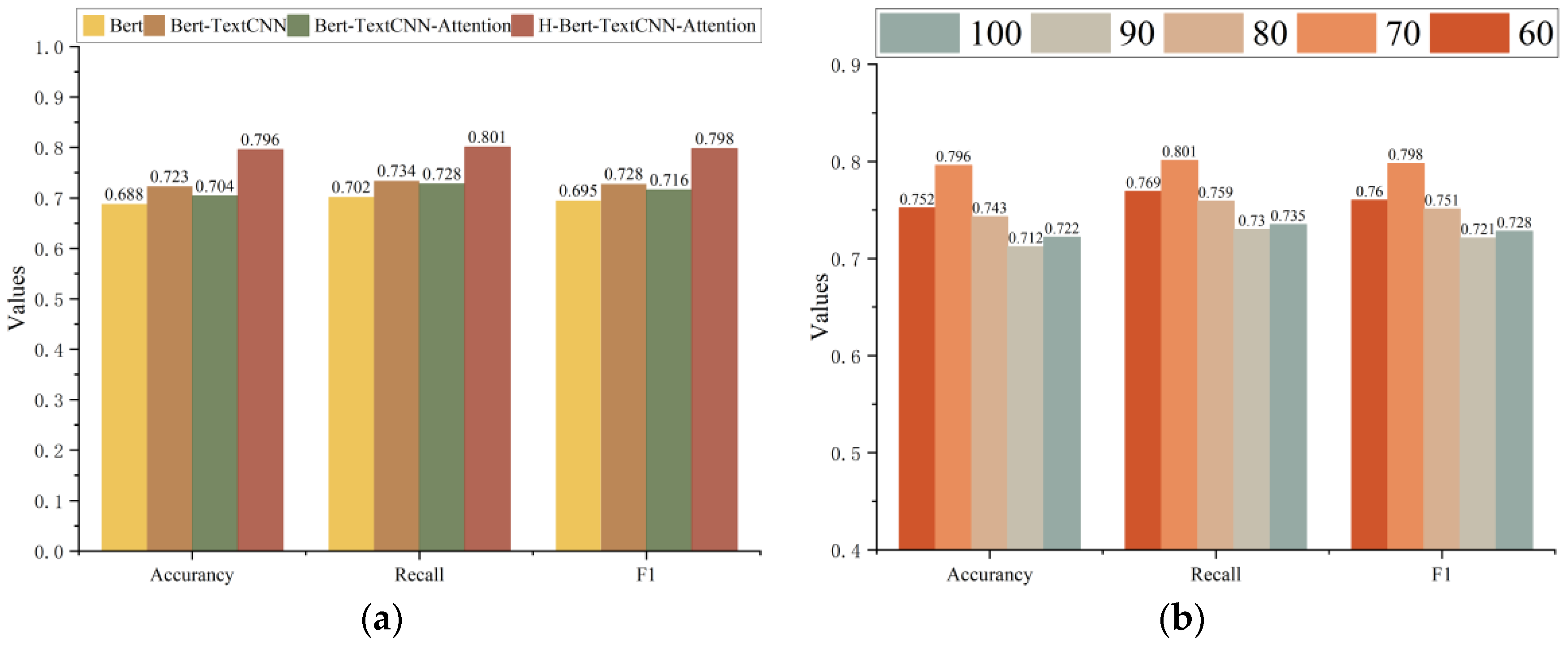

The primary contributions of this work are threefold: (1) We develop a comprehensive framework that systematically incorporates agricultural knowledge—including specialized terminology, growth stage classifications, and environmental parameters—into the neural natural language understanding process. (2) We design a hybrid architecture that combines the strengths of pre-trained language models (BERT) with convolutional feature extraction (TextCNN) and attention mechanisms to capture both global contextual semantics and fine-grained local patterns in agricultural queries. (3) We conduct extensive experiments on a curated agricultural dataset, achieving 79.6% accuracy, 80.1% recall, and 79.8% F1-score, with substantial gains (7–22%) over baseline methods.

To the best of our knowledge, this is one of the first studies to systematically integrate domain-specific agricultural knowledge and environmental parameters into a joint intent detection and slot filling model, thereby demonstrating the effectiveness of agricultural knowledge enhancement for practical deployment in precision agriculture applications.

The remainder of this paper is organized as follows: Section 2 presents the proposed framework and model architecture; Section 3 describes the dataset and experimental setup; Section 4 reports the experimental results and analysis; Section 5 discusses implications and potential future research; and Section 6 concludes the paper.

2. Materials and Methods

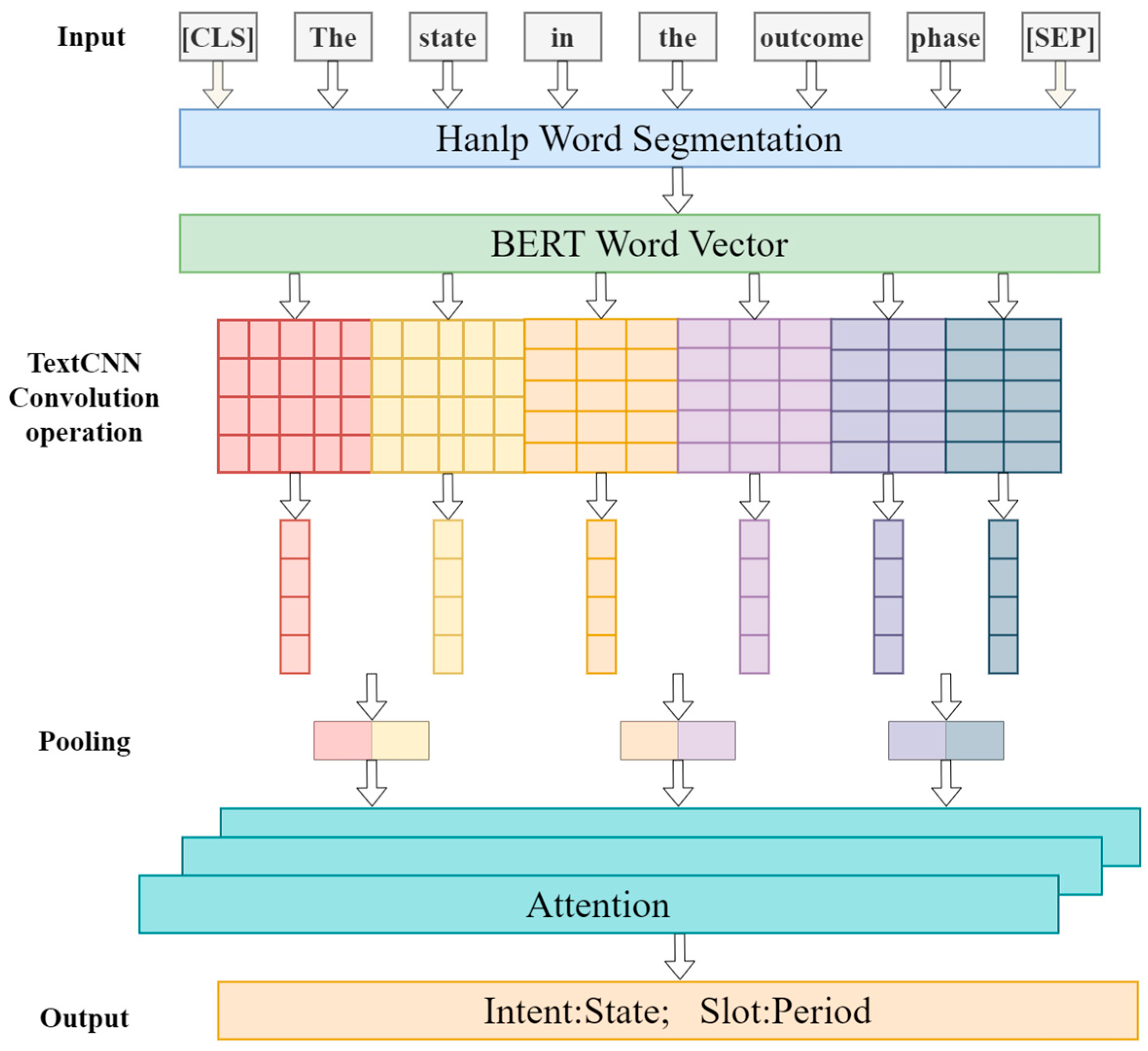

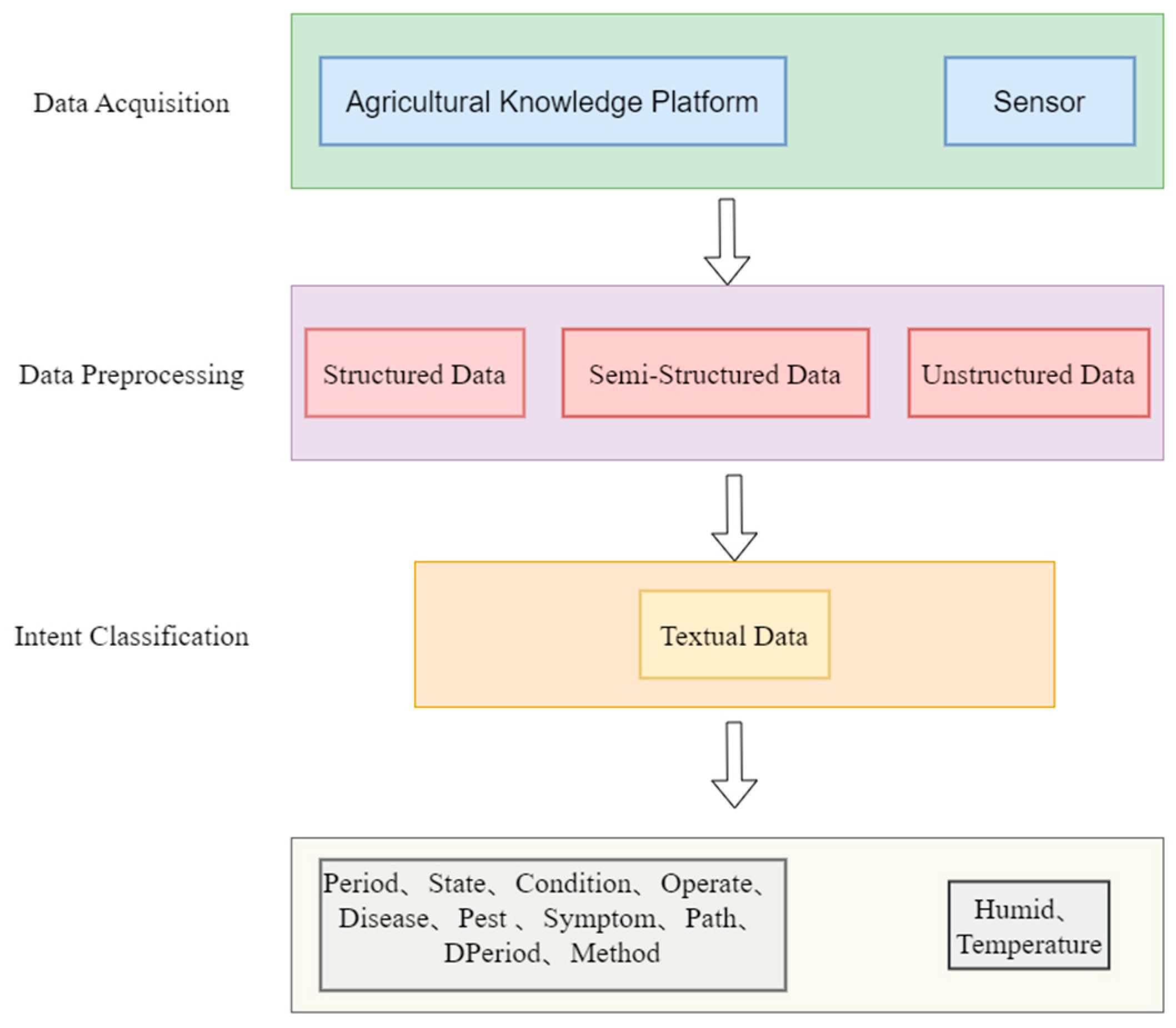

This study proposes a hybrid model integrating HanLP-based word segmentation with an enhanced BERT-TextCNN-AT architecture, whose overall structure is illustrated in Figure 1. The model comprises the following core modules: first, HanLP is employed to perform word segmentation for text representation; subsequently, the BERT model is utilized to generate deep semantic vectors; then, TextCNN is applied to extract multi-scale features; finally, an attention mechanism is introduced to achieve adaptive weighted feature fusion. This architecture provides a complete end-to-end processing pipeline from raw text input to final intent recognition.

2.1. HanLP Word Segmentation

In this study, the HanLP [18] natural language processing toolkit is employed to perform the slot filling task. For the input text, domain-specific vocabulary used in melon cultivation, such as growth stages, pest and disease terms, and growth status, is accurately mapped to the corresponding part-of-speech (POS) categories. During this process, HanLP leverages its built-in annotation model to transform the input text into POS-tagged output, ensuring that each component of the text is assigned an explicit POS label. This process ultimately completes the slot filling task and establishes a solid foundation for subsequent natural language processing tasks.

Here, t denotes the positional index of the token, and c represents the input character sequence.
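The mapping from domain vocabulary to explicit labels described above can be illustrated with a small stand-in. The sketch below is not the HanLP API: it uses a plain forward longest-match lookup over a hand-written lexicon, and the lexicon entries, slot names, and the function `tag_slots` are all hypothetical, chosen only to show the kind of output that dictionary-driven tagging produces.

```python
# Stand-in for dictionary-driven tagging; this is NOT the HanLP API.
# The lexicon entries and slot labels below are hypothetical examples.
SLOT_LEXICON = {
    ("downy", "mildew"): "disease_name",
    ("seedling", "period"): "growth_stage",
    ("fruiting", "stage"): "growth_stage",
    ("growth", "status"): "growth_status",
}
MAX_PHRASE_LEN = max(len(phrase) for phrase in SLOT_LEXICON)


def tag_slots(tokens):
    """Label tokens with BIO slot tags using forward longest-match lookup."""
    tags = ["O"] * len(tokens)
    i = 0
    while i < len(tokens):
        matched = False
        # Try the longest candidate phrase first so multi-word terms win.
        for length in range(min(MAX_PHRASE_LEN, len(tokens) - i), 0, -1):
            phrase = tuple(tok.lower() for tok in tokens[i:i + length])
            slot = SLOT_LEXICON.get(phrase)
            if slot is not None:
                tags[i] = "B-" + slot
                for j in range(i + 1, i + length):
                    tags[j] = "I-" + slot
                i += length
                matched = True
                break
        if not matched:
            i += 1
    return tags


query = "how to control downy mildew during the seedling period".split()
slot_tags = tag_slots(query)
print(list(zip(query, slot_tags)))
```

Trying the longest phrase first keeps compound terms such as "downy mildew" together instead of tagging their parts separately, which is the behavior the text attributes to domain-aware segmentation.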

2.2. BERT Model

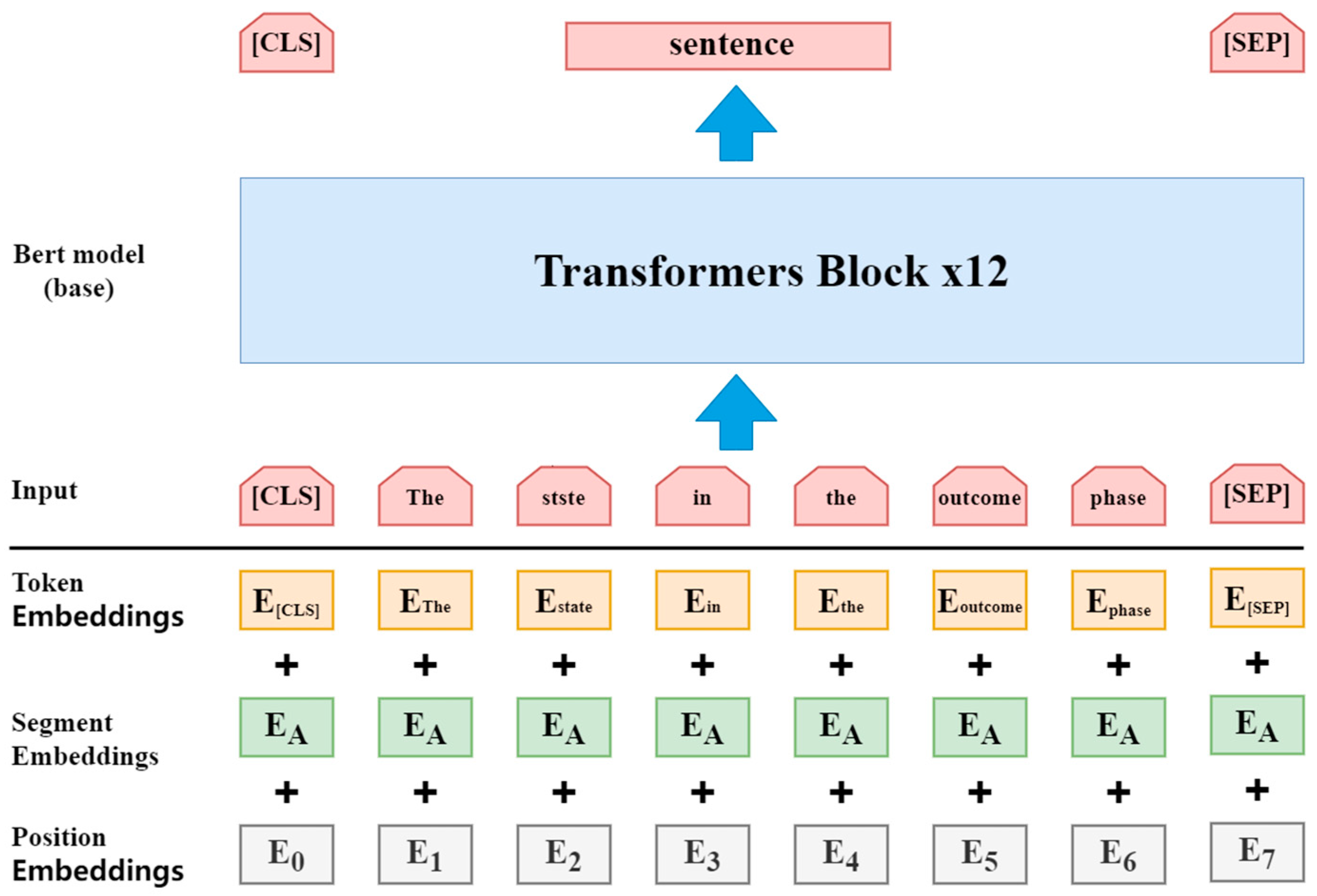

In the intent recognition and slot filling tasks within the domain of melon cultivation, the BERT model primarily serves as the core component for deep semantic representation learning. User queries or utterances related to melon cultivation are first tokenized and encoded into corresponding word vector sequences, with special tokens (such as [CLS] and [SEP]) added to explicitly define the input structure. During the semantic modeling process, BERT maps each token into a context-dependent vector representation, enabling domain-specific terms (e.g., “seedling cultivation,” “fertilization,” and “disease control”) to be represented more accurately in the semantic space. Based on this, the output vectors of BERT can be applied to intent recognition (using the [CLS] vector for classification to determine the user’s intent, such as “disease control inquiry” or “cultivation technique consultation”) and slot filling (using token-level outputs for sequence labeling to identify specific slot information, such as “disease name = downy mildew” and “cultivation stage = seedling period”). These feature vectors are then used as the input for the subsequent TextCNN model. The structure of the BERT model is shown in Figure 2.
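The division of labor between the [CLS] vector and the token-level outputs can be made concrete with a short sketch. It assumes a simplified input with one vector per token and no subword splitting (a real BERT tokenizer would typically produce subword pieces), and the helper names `build_bert_input` and `split_bert_output` are hypothetical; placeholder vectors stand in for actual encoder outputs.

```python
def build_bert_input(tokens):
    """Wrap a tokenized query with the special tokens described in the text."""
    return ["[CLS]"] + list(tokens) + ["[SEP]"]


def split_bert_output(vectors):
    """Separate encoder outputs into an intent vector and slot vectors.

    `vectors` holds one vector per input position, including [CLS] and [SEP].
    """
    if len(vectors) < 2:
        raise ValueError("expected at least the [CLS] and [SEP] positions")
    intent_vector = vectors[0]    # [CLS] position feeds the intent classifier
    slot_vectors = vectors[1:-1]  # real tokens feed sequence labeling
    return intent_vector, slot_vectors


tokens = ["downy", "mildew", "seedling", "period"]
inputs = build_bert_input(tokens)
# Placeholder vectors standing in for real encoder outputs (one per position).
fake_outputs = [[float(i)] * 4 for i in range(len(inputs))]
intent_vec, slot_vecs = split_bert_output(fake_outputs)
print(inputs)
print(len(slot_vecs) == len(tokens))
```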

2.3. TextCNN Model

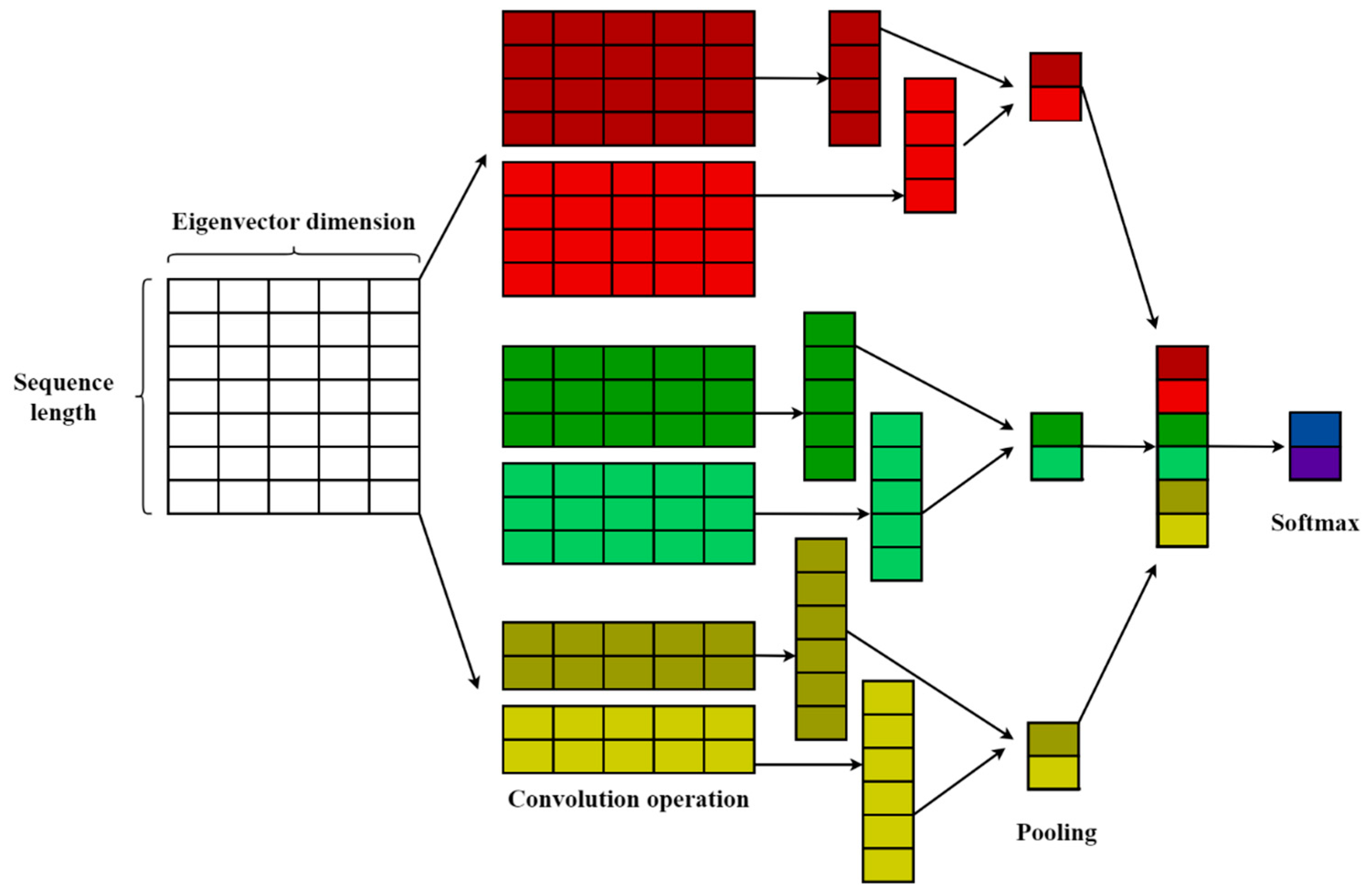

TextCNN applies multiple convolution kernels of different sizes to the sequence feature vectors output by BERT, thereby extracting local semantic features at various granularities, such as phrase-level and clause-level representations. For example, it can capture key information fragments such as “flowering stage control” and “disease management.” Through max-pooling, TextCNN selects the most representative feature values from those extracted by different convolution kernels, enabling the aggregation of critical information and dimensionality reduction, thus enhancing the compactness and discriminative capability of the feature representation. The structure of the TextCNN model is illustrated in Figure 3.

In the architecture of the TextCNN model, the convolutional layer serves as the core feature extraction module, applying multi-dimensional convolution kernels over the text representation matrix through sliding window operations to capture local features. Specifically, the input text is first vectorized into a word embedding matrix, and each convolution kernel is then slid over this matrix.

A single convolution kernel produces one feature activation value at each position. After the kernel has scanned the entire input sequence, these values form a complete feature mapping vector C. To reduce the feature dimensionality, the model applies a pooling operation after the convolutional layer. In practical implementation, the original text sequence is first transformed into a word embedding matrix representation through the embedding layer, after which multiple sets of convolution kernels with varying sizes are applied in parallel, thereby extracting multi-level local semantic features.
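A minimal pure-Python sketch of the sliding-window convolution and pooling steps follows. The notation is assumed rather than taken from the text: the embedding matrix has n rows (tokens) and d columns, each kernel spans h consecutive tokens and all d columns, and a ReLU activation is applied. Max-over-time pooling is used, in line with the max-pooling mentioned earlier in this subsection. The function names and the toy numbers are illustrative only.

```python
def conv_feature_map(embeddings, kernel, bias=0.0):
    """Slide one kernel over the token axis and return the feature map C.

    embeddings: n rows (tokens), each a list of d floats.
    kernel: h rows, each a list of d floats (a window of h tokens).
    """
    n, h = len(embeddings), len(kernel)
    if n < h:
        raise ValueError("sequence is shorter than the kernel window")
    feature_map = []
    for start in range(n - h + 1):
        total = bias
        for offset in range(h):
            row, weights = embeddings[start + offset], kernel[offset]
            total += sum(x * w for x, w in zip(row, weights))
        feature_map.append(max(0.0, total))  # ReLU activation (assumed)
    return feature_map


def text_cnn_features(embeddings, kernels):
    """Max-over-time pooling: keep the largest activation per kernel."""
    return [max(conv_feature_map(embeddings, k)) for k in kernels]


# Toy input: 4 tokens with 2-dimensional embeddings.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
kernel_h2 = [[1.0, 0.0], [0.0, 1.0]]              # window of 2 tokens
kernel_h3 = [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]]  # window of 3 tokens

print(conv_feature_map(embeddings, kernel_h2))
print(text_cnn_features(embeddings, [kernel_h2, kernel_h3]))
```

Because each kernel contributes a single pooled value regardless of sequence length, kernels of different window sizes can be applied in parallel and their outputs concatenated into one fixed-length feature vector.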

2.4. Attention Mechanism

In this study, the traditional TextCNN model is enhanced with an attention mechanism. The attention layer assigns different weights to the feature sequences obtained from the fusion of BERT and TextCNN, with the core idea being to “focus the model on more important parts.” For instance, in the sentence “How to control downy mildew during the flowering stage?”, higher weights are assigned to domain-specific key information such as “downy mildew,” “flowering stage,” and “control.” In terms of model architecture, the attention layer takes the multi-level features extracted by the convolutional neural network and generates an attention-weighted vector representation v. The computation formula is as follows:

Here, Q denotes the query vector, while K and V denote the keys and values, respectively. Finally, the representation vector is passed through a Softmax classifier to output the probability distribution over intent categories. The computation is formulated as follows:
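As a sketch only, and assuming the attention layer follows the widely used scaled dot-product form, the two computations described in this subsection could be written as below. The key dimension d_k, the classifier parameters W_o and b_o, and the predicted distribution ŷ are assumed symbols that do not appear in the text; Q, K, V, and v are used as defined above.

```latex
\begin{aligned}
v &= \operatorname{Attention}(Q, K, V)
   = \operatorname{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V, \\
\hat{y} &= \operatorname{softmax}\left(W_o\, v + b_o\right).
\end{aligned}
```

Under this reading, the first line produces the attention-weighted representation v, and the second maps v to a probability distribution over intent categories.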

5. Discussion

5.1. Performance Analysis and Model Contribution

The proposed H-Bert-TextCNN-AT model achieves an F1-score of 79.8%, outperforming all baseline methods and validating the effectiveness of integrating domain-specific preprocessing with hybrid contextual and local feature extraction. The performance gains can be attributed to three key architectural innovations working synergistically.

First, the integration of HanLP-based word segmentation provides domain-specific preprocessing that effectively addresses agricultural terminology recognition challenges. Unlike general-purpose tokenization methods, HanLP’s domain-aware segmentation enables accurate identification of compound agricultural terms such as “fruiting period” and specialized disease names, which are critical for effective slot filling in agricultural contexts.

Second, the hybrid BERT-TextCNN architecture combines the strengths of contextual understanding and local feature extraction. While BERT provides rich semantic representations that capture long-range dependencies in user queries, TextCNN effectively extracts multi-granularity local features that are particularly important for identifying specific agricultural concepts and entities. This combination addresses the limitation of using either approach independently, as evidenced by the substantial improvement over the individual BERT-TextCNN baseline (79.8% vs. 72.8% F1-score).

Third, the attention mechanism enables adaptive weighting of features, allowing the model to focus on domain-relevant terms such as growth stages, pest names, and control methods. This targeted attention proves particularly valuable in agricultural question-answering scenarios where certain keywords carry disproportionate semantic importance.
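The headline figures can be cross-checked with a few lines of arithmetic. The sketch below assumes that F1 is the harmonic mean of a precision-type measure and recall, and it treats the 79.6% figure (labeled accuracy in the paper) as that precision-type measure; this reading is an assumption, not something the text states. It also computes the gap to the BERT-TextCNN baseline in percentage points.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall (both given in percent)."""
    return 2 * precision * recall / (precision + recall)


reported_first_metric = 79.6  # labeled "accuracy" in the paper
reported_recall = 80.1
reported_f1 = 79.8
baseline_f1 = 72.8            # BERT-TextCNN baseline

implied_f1 = f1_from(reported_first_metric, reported_recall)
gain_points = reported_f1 - baseline_f1

print(round(implied_f1, 1))
print(round(gain_points, 1))
```

Under that assumption the implied value rounds to the reported F1-score. The gap to the BERT-TextCNN baseline is about 7 points, which suggests that the lower end of the reported 7–22% gains is expressed in percentage points rather than as a relative improvement.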

5.2. Comparison with Existing Approaches

When compared to recent work in agricultural natural language processing, our approach demonstrates notable advantages. The agricultural intent detection method proposed by Hao et al. (2023) [13] in “Joint agricultural intent detection and slot filling based on enhanced heterogeneous attention mechanism” achieved similar objectives but focused primarily on heterogeneous attention mechanisms without incorporating domain-specific preprocessing. Our integration of HanLP-based knowledge enhancement provides a more systematic approach to handling agricultural terminology, which directly contributes to the observed performance improvements.

Furthermore, compared to general-purpose joint models evaluated on standard datasets, our domain-specific approach highlights the complexity challenges inherent in specialized domains. The performance gap between general benchmark results and our agricultural domain performance (79.8% F1-score) underscores the necessity of domain-specific enhancements rather than direct application of general-purpose models.

The inclusion of soil temperature and humidity slots in our framework represents a novel contribution to agricultural NLP, extending beyond traditional linguistic features to incorporate environmental context. This multimodal consideration aligns with the practical requirements of precision agriculture systems, where environmental factors significantly influence crop management decisions.

5.3. Limitations and Challenges

Despite the encouraging results, several limitations warrant discussion. First, the dataset size of 8041 queries, while substantial for a domain-specific corpus, remains modest in absolute terms and is of the same order of magnitude as standard benchmarks like SNIPS or ATIS. This limitation potentially affects the model’s ability to capture the full diversity of agricultural terminology and user query patterns.

Second, the current approach focuses specifically on melon cultivation, raising questions about generalizability to other crops and agricultural domains. While the framework architecture is designed to be adaptable, the domain-specific components would require reconfiguration for different agricultural contexts.

Third, the performance ceiling at approximately 80% F1-score suggests remaining challenges in semantic understanding. Error analysis reveals that the model struggles with ambiguous queries and novel terminology combinations not well-represented in the training data.

The computational complexity of the hybrid architecture also presents practical deployment considerations, potentially limiting real-time applications in resource-constrained agricultural environments.

5.4. Implications for Agricultural AI Systems

Despite these limitations, the demonstrated improvements provide valuable insights into the practical deployment potential of the model. The results have important implications for developing intelligent agricultural advisory systems. The effectiveness of domain-specific knowledge enhancement suggests that agricultural AI applications benefit significantly from specialized preprocessing and terminology handling, rather than relying solely on general-purpose language models.

The integration of environmental sensor data (soil temperature and humidity) with linguistic processing represents a step toward comprehensive agricultural decision support systems. This approach aligns with the broader trend toward precision agriculture, where multiple data sources inform farming decisions. Such multi-source integration can further enable predictive analytics for crop yield estimation and early warning systems for pests and diseases. Future agricultural AI systems could build upon this foundation to incorporate additional modalities such as weather data, soil composition, and crop imagery.

The performance improvements over baseline methods validate the potential for deploying such systems in practical agricultural advisory contexts, particularly for addressing common farmer queries about cultivation practices, pest management, and growth optimization. However, the current performance levels suggest that such systems would likely function best as decision support tools rather than autonomous advisory systems.

5.5. Future Research Directions

Several promising directions emerge from this work. First, since this experiment focuses on melon cultivation, its applicability to other crops has not yet been empirically validated. In future research, extending this method to multiple crops and agricultural domains should be a key priority, as it would enhance its practical utility and provide new insights into cross-domain transfer learning in agricultural contexts. Second, improving the model’s handling of multi-intent queries represents an important technical challenge, as real-world agricultural queries often contain multiple related questions.

Third, integration with broader agricultural information systems presents opportunities for enhanced functionality through connecting with comprehensive databases, weather services, and precision agriculture platforms. Fourth, addressing computational efficiency through model compression or edge computing deployment would enhance practical applicability in resource-constrained environments.

Finally, longitudinal evaluation in real agricultural settings would provide valuable insights into practical deployment challenges and system effectiveness in supporting actual farming decisions, crucial for transitioning from research prototypes to production-ready agricultural AI systems.

6. Conclusions

This study tackles the critical challenge of natural language understanding in agricultural question-answering systems by developing an agricultural knowledge-enhanced deep learning framework for joint intent detection and slot filling. Experimental validation on a curated dataset of 8041 melon cultivation queries demonstrates substantial performance improvements. The proposed H-Bert-TextCNN-AT model achieved 79.6% accuracy, 80.1% recall, and 79.8% F1-score, representing significant gains of 7–22% over baseline methods including TextRNN, TextRCNN, TextCNN, and BERT-TextCNN models, validating the effectiveness of integrating specialized agricultural knowledge with hybrid neural architectures for practical agricultural applications.

The key contributions of this work advance the field of agricultural artificial intelligence in three fundamental ways. First, we developed a comprehensive framework that systematically integrates agricultural domain knowledge, specialized terminology, and environmental parameters into neural language understanding processes. Second, we designed a novel hybrid architecture that synergistically combines BERT contextual understanding, TextCNN local feature extraction, and attention-based fusion mechanisms. Third, we demonstrated the superior effectiveness of knowledge enhancement over general-purpose approaches in agricultural contexts. These innovations collectively represent a significant step toward bridging the gap between complex agricultural expertise and accessible intelligent advisory systems.

While the current study focuses on melon cultivation with a domain-specific dataset, the demonstrated improvements provide valuable insights into the broader potential of agricultural language understanding systems. The research contributes to the growing field of precision agriculture by providing a practical framework for developing intelligent advisory systems. However, the applicability of the proposed approach to other crops has not yet been empirically validated. Looking ahead, extending this framework to multiple crop domains is both a critical priority for verifying its generalizability and robustness and an essential step toward broader practical deployment of intelligent agricultural question-answering systems. Further steps include integrating additional environmental modalities for context-aware multimodal agricultural NLP and evaluating deployment effectiveness in real-world settings. In addition, it is necessary to address the dynamic evolution of agricultural vocabularies and the potential impact of regional linguistic variations, and to explore corresponding mitigation strategies such as dynamic lexicon expansion or continual learning approaches. As agricultural digitalization continues to advance, domain-specific natural language understanding systems will play an increasingly critical role in supporting global food security and sustainable farming practices. This research underscores the importance of such systems for shaping the next generation of intelligent agricultural advisory systems at the intersection of artificial intelligence and agricultural science.