1. Introduction

Object detectors deployed in dynamic, real-world environments must recognize not only a fixed set of training classes but also unknown objects that were never seen during training [1]. This challenge is formalized as open-world object detection (OWOD), where a model should detect and flag novel objects as unknown and later learn them incrementally when labels become available. A key difficulty in OWOD is that traditional detectors often misclassify previously unseen objects as one of the known categories or even suppress them as background [2]. The ability to generalize beyond the training distribution is thus essential [3]. Another major obstacle is the long-tailed distribution of object frequencies in open-world data [4]. In large-vocabulary benchmarks such as LVIS, some common classes have abundant examples, while many tail categories have very scarce training data [5]. This long tail poses a severe challenge for state-of-the-art detectors, which tend to struggle on rare categories with few samples [6]. In an open-world setting, models must handle extreme class imbalance alongside distributional shifts (new classes at test time), a combination that makes tail-class and novel objects even harder to detect [7]. Moreover, unknown objects lack ground-truth annotations during training, making it particularly challenging for the model to learn to detect them [8]. These problems lead to missed detections or confusion with known classes, especially for objects from under-represented tail classes or entirely new classes [9].

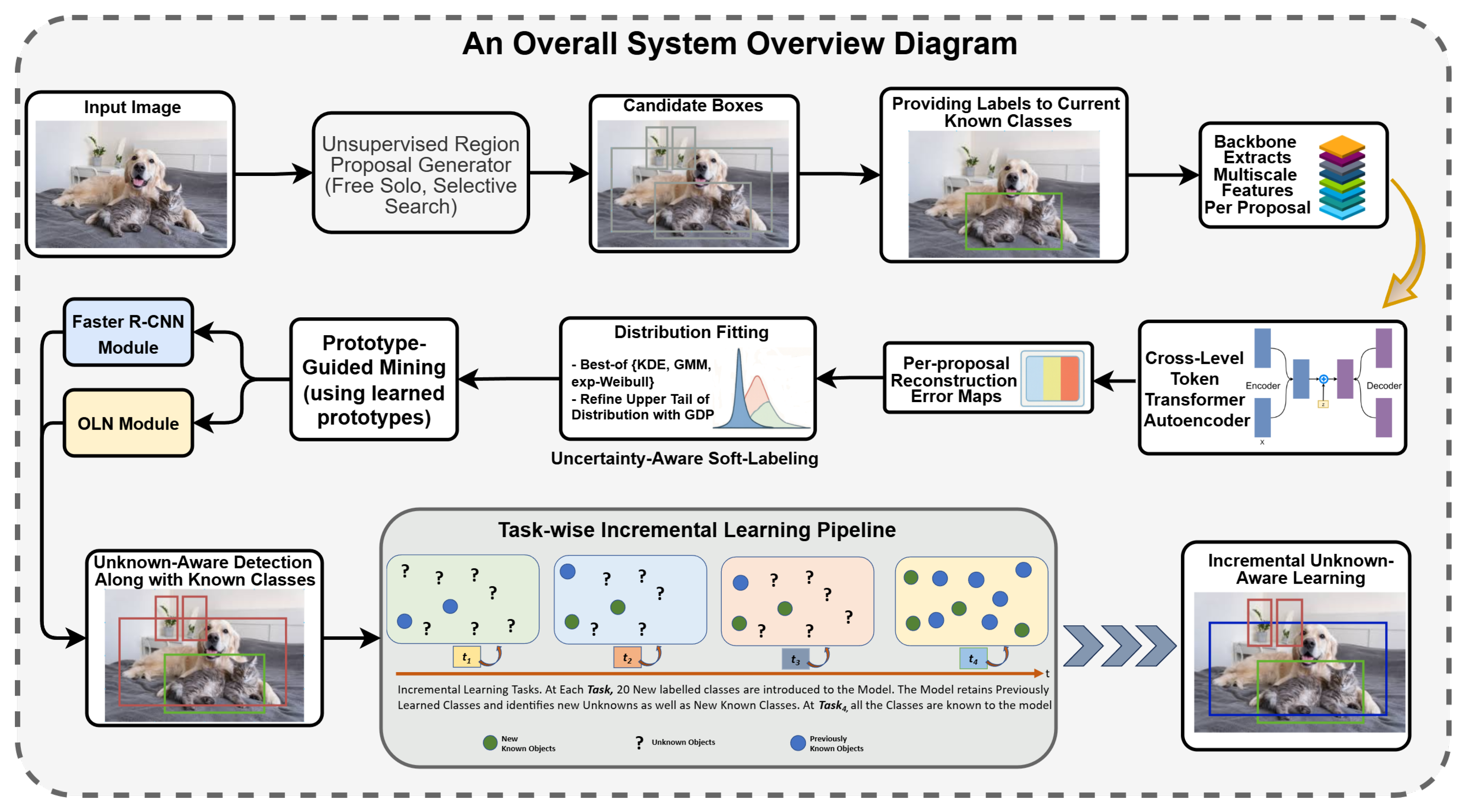

To address these challenges, we propose TAPM (Tail-Calibrated Transformer Autoencoding with Prototype-Guided Mining), a novel framework for open-world object detection. TAPM introduces three key components: (1) Tail-calibrated transformer autoencoding, which uses a self-supervised reconstruction module within a transformer detector to calibrate feature representations toward data-sparse tail classes. By learning to autoencode object features, the model reduces its bias toward head classes and better captures the distinctive patterns of tail-category objects. (2) Prototype-guided mining, which leverages class prototypes (learned representative feature vectors for each class) to guide the discovery of new or hard-to-detect objects. This mechanism finds candidate regions in unlabeled data or background regions that are similar to learned prototypes, effectively revealing potential unknown objects or tail-class instances that the base detector might overlook. (3) Uncertainty-aware soft-labeling, an adaptive pseudo-labeling strategy that assigns soft labels to extracted proposals based on the predictive uncertainty of the model. An overview of the entire framework is shown in Figure 1.

Instead of hard one-hot labels, our method produces probabilistic labels for unknown-object proposals, thereby mitigating the impact of labeling noise and model overconfidence. This uncertainty-aware labeling leads to more stable training on pseudo-unknowns by down-weighting ambiguous cases and up-weighting confident detections. We validate the TAPM approach on large-scale detection benchmarks, MS-COCO [10] and LVIS [5], under challenging open-world evaluation protocols. The results demonstrate that our method significantly improves the detection of rare and unseen objects compared with prior work. In particular, TAPM exhibits robust unknown-object detection, reducing misclassification of novel objects as known or background. These gains yield new state-of-the-art results on OWOD benchmarks, taking us a step closer to reliable open-world object detection at scale. Our main contributions are listed below.

We design a transformer-based autoencoding module that reconstructs object features to calibrate the representation of tail classes, improving detection for rare objects by mitigating the bias toward frequent classes.

We introduce a prototype-guided mining strategy that utilizes learned class prototypes to identify and propose regions containing potential unknown or tail-category objects, thereby providing effective pseudo-supervision for open-world learning.

We develop an uncertainty-driven soft-labeling scheme for pseudo-unknown objects that assigns probabilistic labels based on model confidence. This approach reduces label noise and enables stable incremental learning of new object categories.

Our TAPM framework achieves state-of-the-art open-world object detection results on MS-COCO and LVIS, demonstrating improved unknown-object recall and significantly better performance on long-tailed categories in large-vocabulary settings.

2. Related Work

Open-Set Recognition (OSR) is a foundational paradigm related to OWOD, in which a classifier must reject samples from classes that were not seen during training. Building on this idea, several studies [11,12,13,14] have explored proposal generation strategies designed to improve generalization to novel categories. Scheirer et al. [15] first formalized the open-set classification problem, and Bendale and Boult [16] extended it to open-world classification, which not only detects unknown classes but also updates the model with new classes over time. In computer vision, early work on open-set object recognition and anomaly detection tackled problems such as detecting novel objects in images or robotics scenes [17]. However, these methods were mostly limited to image classification or relied on coarse heuristics for detection. Open-world object detection (OWOD), introduced by Joseph et al. in ORE [1], adapts the open-set concept to the object detection task. ORE [1] formally defined the OWOD problem and proposed the first solution: a two-stage Faster R-CNN-based model that combines contrastive clustering and energy-based scoring to identify unknown objects. Its energy-based unknown identifier reduces the confidence of proposals that do not cluster with any known class, thereby labeling them as unknown. This work demonstrated the feasibility of detecting unknown objects without explicit annotations while also incrementally learning new classes.

Building on ORE, OW-DETR [18] introduced a transformer-based framework for OWOD that operates end to end. It built on Deformable-DETR and introduced a bottom-up, attention-driven pseudo-labeling scheme: region proposals with high objectness scores (measured by feature activation magnitude) were selected and pseudo-labeled as unknown objects for training. OW-DETR also added a dedicated novelty classification head to distinguish unknown objects from background, and a foreground objectness branch to enhance the transfer of knowledge from known to unknown classes. UC-OWOD [19] proposed a similarity-based clustering mechanism that groups different unseen classes into distinct clusters separated from the known classes. Despite their successes, pseudo-labeling approaches face challenges: they can introduce false positives (noise) and are computationally heavy due to iterative re-labeling. These limitations motivated alternative strategies that focus on class-agnostic objectness modeling. Rather than relying on the pseudo-labeling of unknowns, several recent works [2,20,21] model objectness probabilistically to separate unknown objects from background. PROB [22] is a transformer-based OWOD model in this line that incorporates a probabilistic objectness head, removing the need to generate pseudo-unknown labels. PROB extends Deformable-DETR by adding an unknown class and, crucially, decoupling objectness prediction from classification. It learns a multivariate Gaussian distribution in the query embedding space to parameterize an objectness probability for each detected region.

More recently, several studies have explored the open-world object detection problem from other angles. Fang et al. [9] designed an unsupervised discriminative learner that filters true unknowns from pseudo-labels through iterative self-training; however, it struggles in cluttered scenes, where it confuses background with unknown objects. Greer et al. [6] proposed VisLED, a vision–language-guided active learning framework that queries informative 3D samples to better capture rare or unseen categories. Wang et al. [23] introduced OV-DQUO, an open-vocabulary DETR variant that employs wildcard matching and denoising text queries, thereby reducing label bias and enhancing the recognition of novel classes. Xie et al. [24] developed OWSOL, which combines contrastive co-learning and unsupervised clustering to localize novel objects without bounding-box supervision. Fu et al. [25] presented LLMDet, which co-trains detectors with large language models using detailed region- and image-level captions, enhancing the ability to identify unseen categories. Yang et al. [26] proposed a Partial Attribute Assignment framework that formulates attribute selection as a Partial Optimal Transport problem to learn human-interpretable attributes for both known and unknown objects, yielding explainable unknown detection and strong OWOD performance without relying solely on objectness scores.

We follow the RE-OWOD [27] evaluation protocol (IoU matching; WI and A-OSE at a fixed operating point) throughout.

Table 1 summarizes the key differences between the proposed method and the most recent OWOD work. Inside TAPM, we introduce tail-calibrated transformer encoding (TCTE), which equalizes reconstruction pressure with CLT-AE, converts deviations into calibrated evidence via a GPD bulk–tail fit, and applies a prototype gate to reduce head-biased shortcuts. This yields consistent improvements in WI and A-OSE without changes to the detector head or training labels. PROB [22] adds a probabilistic objectness head to Deformable-DETR; we do not add an extra head. Instead, our prototype-guided soft score down-weights head-aligned features even when objectness is high. TAPM lowers WI and A-OSE relative to PROB; ablations in Section 5.12 isolate the effect of the prototype gate versus a pure objectness prior. Objectness-unification methods [2] emphasize a single objectness score, whereas we focus on tail calibration to separate unknowns from background; the two are compatible, since a unified objectness model can feed proposals to our TCTE, which then performs calibration. UC-OWOD [19] aims to classify unknowns via a two-stage pipeline; our goal is cleaner separation of unknowns from background, and our calibrated unknowns can serve as better inputs to such grouping. For label-free discovery, MEPU-OWOD [9] uses an unsupervised discriminative model; our unknown branch is also label-free but bases routing on calibrated reconstruction density (GPD tails) with prototype alignment, and we report FKA/KOR alongside WI/A-OSE at the same operating point. Open-CRB [28] provides an active learning framework for open-world 3D learning with an oracle. Our scope is 2D image detection without human queries; we therefore do not adopt active strategies. Section 5.6 reports the computational cost of the overall method and shows that its inference overhead is negligible relative to other state-of-the-art models.

In contrast to the studies mentioned earlier, which range from energy-based scoring (ORE), transformer-based pseudo-labeling, and objectness-driven proposals to prototype- and attribute-guided approaches, our work addresses a critical gap: the underperformance of open-world detectors on tail categories. We propose TAPM (Tail-Calibrated Transformer Autoencoding with Prototype-Guided Mining), which explicitly recalibrates the feature space for rare classes through a novel cross-level transformer autoencoder (CLT-AE). To ensure reliable unknown discovery, TAPM integrates distribution-tail calibration with GPD refinement and introduces a prototype-guided gating mechanism that filters pseudo-labels by cosine similarity. Additionally, an uncertainty-aware soft-labeling scheme dynamically weights the detection losses. This combination of tail recalibration, prototype-guided mining, and uncertainty calibration enables TAPM to improve both known-class balance and unknown-class detection, setting it apart from existing methods.

3. Proposed Methodology

Open-world object detection (OWOD) requires a detector that simultaneously recognizes labeled classes, flags unfamiliar objects as unknown, and adapts incrementally as new categories emerge. Existing approaches either rely heavily on pseudo-labeling heuristics or overlook the severe class imbalance between head and tail categories. To address these challenges, we propose TAPM (Tail-Calibrated Transformer Autoencoding with Prototype-Guided Mining), a unified framework that integrates reconstruction-driven uncertainty modeling, density-based calibration, and prototype-guided mining into an incremental OWOD pipeline. The training procedure of TAPM is summarized in Algorithm 1, which highlights the end-to-end flow: (i) generation of unsupervised proposals, (ii) feature reconstruction with our cross-level transformer autoencoder (CLT-AE), (iii) scoring of proposals using reconstruction errors, (iv) fitting of level-wise error distributions with the best-fitting statistical model plus GPD tail refinement, (v) maintenance of a prototype vector of known-class features for contrastive gating, (vi) assignment of uncertainty-aware soft labels to proposals, and (vii) optimization of the detector with weighted losses while extending pseudo-unknowns via OLN.

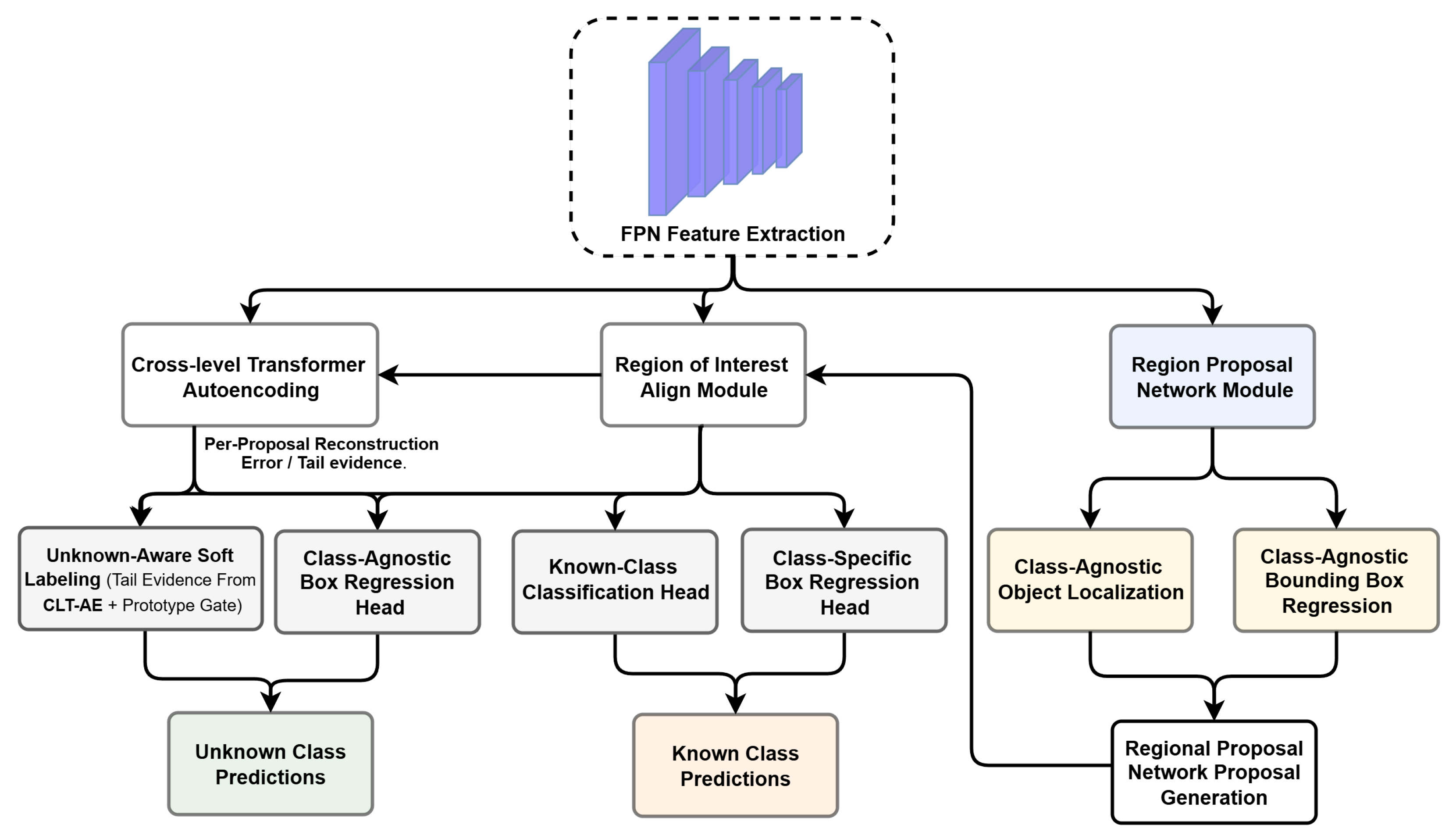

Figure 2 gives a high-level overview of TAPM, illustrating the flow from proposal generation and ROI feature extraction to our tail-calibrated encoding and the known/unknown prediction branches.

Algorithm 1: Proposed framework training with CLT-AE for incremental OWOD.

In the following subsections, we provide a detailed description of each module, which includes the mathematical details of CLT-AE reconstruction, reconstruction error modeling, probabilistic uncertainty estimation, prototype-guided contrastive learning, and incremental training protocol.

3.1. Problem Formulation: Long-Tailed Unsupervised Object Detection

Setting. Let $\mathcal{D}$ be an unlabeled image set drawn from an open world of object categories. For each image $I\in\mathcal{D}$, an unsupervised proposal generator (e.g., Selective Search or FreeSOLO) produces candidate regions $\mathcal{R}(I)=\{r_1,\dots,r_{N_I}\}$ that may contain objects but have no class labels.

Over the dataset, let $\mathcal{R}=\bigcup_{I\in\mathcal{D}}\mathcal{R}(I)$. The open-world label space is $\mathcal{Y}_t=\mathcal{K}_t\cup\{U\}$, where $\mathcal{K}_t$ denotes the currently known classes for task $t$ and $U$ denotes unknown objects. Note that unknown $U$ is distinct from background (clutter or non-object regions); the detector's standard negative class handles background in the classification branch.

Long-tailed class distribution. Let $n_k$ be the (latent) count of proposals in $\mathcal{R}$ that correspond to class $k\in\mathcal{K}_t$, and let $n_U$ be the count for unknowns. Define $N=n_U+\sum_{k\in\mathcal{K}_t}n_k$, $\pi_k=n_k/N$, and $\pi_U=n_U/N$. We call the distribution long-tailed if the class frequencies, sorted in non-increasing order $n_{(1)}\ge n_{(2)}\ge\dots\ge n_{(|\mathcal{K}_t|)}$, are heavy-tailed, e.g., $n_{(j)}\propto j^{-\nu}$ for some $\nu>0$, or, more generally, if they exhibit a large imbalance ratio

$$\rho=\frac{\max_{k}n_k}{\min_{k}n_k}$$

(where the minimum is taken over classes with $n_k>0$). We partition classes into head and tail by using a frequency threshold $\tau$ (or percentile $q$): $\mathcal{H}=\{k\in\mathcal{K}_t: n_k\ge\tau\}$ and $\mathcal{T}=\{k\in\mathcal{K}_t: n_k<\tau\}$. In practice, the severity of imbalance is governed by $\rho$ and by the mass concentrated in $\mathcal{H}$. The counts $n_k$ are latent in the unsupervised setting and are used only for analysis; when needed, we estimate them from benchmark annotations or high-confidence pseudo-labels. As $\rho$ increases, mini-batch sampling and loss aggregation become dominated by head classes, which under-represents $\mathcal{T}$ and exacerbates false-known assignments for unknown proposals ($y=U$).

Detector objective under long tails. Let $\theta$ denote the detector parameters. Each proposal $r\in\mathcal{R}$ has a label $y\in\mathcal{Y}_t$ that is unobserved during training. The goals are to (i) correctly classify and regress known objects ($y\in\mathcal{K}_t$) and (ii) assign unknown objects to $U$ while avoiding false-known assignments. Let $\mathcal{L}_{\text{det}}$ denote the standard detection loss for known classes (classification with background negatives plus box regression), and define an open-set penalty

$$\mathcal{L}_{\text{open}}=\mathcal{L}_{\text{rej}}+\eta\,\mathcal{L}_{\text{conf}},$$

where $\mathcal{L}_{\text{rej}}$ encourages rejecting unknowns and $\mathcal{L}_{\text{conf}}$ regularizes overconfident misclassifications on known classes (e.g., via margin or entropy terms). With class-frequency priors $\pi_k$ and $\pi_U$, the population risk decomposes as

$$R(\theta)=\sum_{k\in\mathcal{K}_t}\pi_k\,\mathbb{E}\bigl[\mathcal{L}_{\text{det}}(r;\theta)\mid y=k\bigr]+\pi_U\,\mathbb{E}\bigl[\mathcal{L}_{\text{open}}(r;\theta)\mid y=U\bigr].$$

In long-tailed regimes, a small $\pi_k$ for $k\in\mathcal{T}$ increases the variance of the corresponding risk estimate and induces bias toward head classes, which under-trains the tail and elevates open-set error (unknowns mistaken for rare known classes).

Unsupervised mining with soft labels. Because $y$ is unavailable at training time, we estimate a soft unknown score $s(r)\in[0,1]$ for each proposal via reconstruction error densities with tail refinement and a prototype-based cosine gate. We then optimize the empirical objective

$$\hat R(\theta)=\frac{1}{|\mathcal{R}|}\sum_{r\in\mathcal{R}}\Bigl[\bigl(1-s(r)\bigr)\,\mathcal{L}_{\text{det}}(r;\theta)+s(r)\,\mathcal{L}_{\text{open}}(r;\theta)\Bigr],$$

where the weight $1-s(r)$ routes background-like proposals to the detector's negative class and emphasizes supervised updates for known classes, whereas the weight $s(r)$ emphasizes rejecting or down-weighting proposals that behave like unknowns. Our approach (Algorithm 1) computes $s(r)$ from level-wise reconstruction error posteriors with generalized Pareto tail refinement and filters it with a cosine-similarity prototype gate; this mitigates the effect of a large $\rho$ by promoting tail-consistent features and suppressing head-biased shortcuts.
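The long-tail quantities used in this formulation (the imbalance ratio over non-empty classes, the head/tail partition at a frequency threshold, and the head mass) can be computed directly from per-class counts. The following is a minimal Python sketch; the function name and the toy counts are illustrative assumptions, not benchmark statistics.

```python
def long_tail_stats(counts, tau):
    """Imbalance ratio rho, head/tail split, and head mass from per-class counts.

    counts: mapping class -> n_k (toy values below; in the paper these counts are
            latent and estimated from annotations or high-confidence pseudo-labels).
    tau:    frequency threshold separating head (n_k >= tau) from tail (n_k < tau).
    """
    nonzero = [n for n in counts.values() if n > 0]
    rho = max(nonzero) / min(nonzero)  # minimum taken over classes with n_k > 0
    head = {k for k, n in counts.items() if n >= tau}
    tail = {k for k, n in counts.items() if n < tau}
    head_mass = sum(counts[k] for k in head) / sum(counts.values())
    return rho, head, tail, head_mass


# Toy counts for illustration only (not benchmark statistics).
toy_counts = {"person": 5000, "car": 1200, "dog": 300, "kite": 40, "yak": 5}
rho, head, tail, head_mass = long_tail_stats(toy_counts, tau=500)
print(rho, sorted(head), sorted(tail), round(head_mass, 3))
# 1000.0 ['car', 'person'] ['dog', 'kite', 'yak'] 0.947
```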

3.2. Tail-Calibrated Transformer Encoding: Modules and Objective

TCTE converts region proposals into object-centric representations that are robust to long-tailed class frequencies. The pipeline follows Algorithm 1: we (A) encode multi-level features with a cross-level transformer autoencoder (CLT-AE) and reconstruct each level, (B) convert per-proposal reconstruction errors into calibrated tail evidence using a bulk–tail density fit, (C) suppress head-biased shortcuts by comparing each proposal with learned known-class prototypes, and (D) combine tail evidence and prototype alignment into a soft unknown score that routes the training signal between open-set rejection and standard detection. Each module is detailed in the following subsections.

3.2.1. Module A: Cross-Level Transformer Autoencoder (CLT-AE)

A backbone processes each image into feature maps $\{F_\ell\}_{\ell=1}^{L}$ at $L$ pyramid levels. The encoder uses cross-level attention to exchange context across levels, while the decoder reconstructs each level, enforcing an object-centric structure rather than relying on head-class shortcuts. For a proposal $r$, we aggregate a compact latent $z_r$ by pooling encoder tokens within the region of $r$ across levels. This latent $z_r$ serves two roles: (i) it stabilizes per-proposal error estimation via consistent content encoding, and (ii) it provides a metric space in which known-class prototypes are well formed. The reconstruction objective couples the levels:

$$\mathcal{L}_{\text{rec}}=\sum_{\ell=1}^{L}\frac{1}{|\Omega_\ell|}\sum_{p\in\Omega_\ell}\bigl\|f_\ell(r)[p]-\hat f_\ell(r)[p]\bigr\|_2^2,$$

where $f_\ell(r)$ is the level-$\ell$ feature of $r$, $\hat f_\ell(r)$ its reconstruction, and $\Omega_\ell$ the spatial grid of level $\ell$. This objective encourages the encoder to represent fine and coarse structures uniformly, even for rare (tail) appearances.

3.2.2. Module B: Reconstruction Error Density with Tail Refinement

For each proposal $r$, we summarize its per-level reconstruction discrepancy $e_\ell(r)$ by averaging absolute errors inside the region. Rather than using a fixed threshold, we model the level-wise error distribution with a light bulk component and a generalized Pareto tail fitted to exceedances over a high threshold (peaks over threshold); this yields a calibrated tail probability $p_\ell(r)\in[0,1]$ that increases with $e_\ell(r)$ and adapts to level-wise statistics. We then combine the level-wise tail probabilities into a single tail-evidence score $T(r)$ (via a noisy-OR or learned weights), which increases when $r$ exhibits structure poorly explained by the current reconstruction model. With the noisy-OR combination,

$$T(r)=1-\prod_{\ell=1}^{L}\bigl(1-p_\ell(r)\bigr). \tag{2}$$
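As a minimal sketch of the noisy-OR combination in Equation (2), the snippet below takes the per-level tail probabilities as given (in TAPM they would come from the bulk–tail fit) and also shows a weighted alternative corresponding to "learned weights". The function names and the example probabilities are hypothetical.

```python
import math


def noisy_or(level_probs):
    """Tail evidence T(r) = 1 - prod_l (1 - p_l(r)) from per-level tail probabilities."""
    if not all(0.0 <= p <= 1.0 for p in level_probs):
        raise ValueError("tail probabilities must lie in [0, 1]")
    return 1.0 - math.prod(1.0 - p for p in level_probs)


def weighted_evidence(level_probs, weights):
    """Alternative combiner: convex combination with non-negative (e.g. learned) weights."""
    total = sum(weights)
    return sum(w * p for w, p in zip(weights, level_probs)) / total


# Hypothetical tail probabilities for one proposal at L = 3 pyramid levels.
probs = [0.1, 0.2, 0.5]
print(round(noisy_or(probs), 4))                      # 0.64
print(round(weighted_evidence(probs, [1, 1, 2]), 4))  # 0.325
```

The noisy-OR is never smaller than the largest single-level probability, so one strongly anomalous level is enough to raise $T(r)$; the weighted average is more conservative.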

3.2.3. Module C: Prototype-Guided Gating

Frequent head classes often dominate long-tailed learning. To counter this, we build a prototype $\mu_k$ for each known class $k$ by averaging the latent $z_r$ over high-confidence proposals of that class. For any proposal $r$, we compute its maximum cosine alignment to the known prototypes; high alignment suggests head-like content, whereas low alignment indicates potential novelty or tail variation poorly captured by the head classes. We use the normalized alignment $a(r)$ as an anti-evidence term for unknownness:

$$a(r)=\frac{1}{2}\Bigl(1+\max_{k}\frac{z_r^{\top}\mu_k}{\|z_r\|\,\|\mu_k\|}\Bigr)\in[0,1]. \tag{3}$$
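The prototype gate can be sketched as follows, assuming class prototypes are plain means of latents whose detection confidence exceeds a hypothetical cut-off `min_conf`, and assuming the normalization $(1+\cos)/2$ that maps the maximum cosine similarity into $[0,1]$. Names and toy values are illustrative.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def build_prototypes(latents, labels, confidences, min_conf=0.8):
    """Class prototypes mu_k: mean latent z_r over proposals with confidence >= min_conf."""
    sums, counts = {}, {}
    for z, y, c in zip(latents, labels, confidences):
        if c < min_conf:
            continue  # only high-confidence known proposals contribute
        acc = sums.setdefault(y, [0.0] * len(z))
        for i, x in enumerate(z):
            acc[i] += x
        counts[y] = counts.get(y, 0) + 1
    return {y: [x / counts[y] for x in acc] for y, acc in sums.items()}


def alignment(z, prototypes):
    """Normalized alignment a(r) = (1 + max_k cos(z, mu_k)) / 2, in [0, 1]."""
    best = max(cosine(z, mu) for mu in prototypes.values())
    return 0.5 * (1.0 + best)


protos = build_prototypes(
    latents=[[1.0, 0.0], [1.0, 0.2], [0.0, 1.0], [5.0, 5.0]],
    labels=["cat", "cat", "dog", "dog"],
    confidences=[0.9, 0.95, 0.85, 0.3],  # the last proposal is ignored (low confidence)
)
print(protos)                          # {'cat': [1.0, 0.1], 'dog': [0.0, 1.0]}
print(alignment([-1.0, 0.0], protos))  # 0.5
```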

3.2.4. Module D: Soft-Label Mining and Training Objective

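Tail calibration emerges when we combine tail evidence with prototype anti-evidence to form a soft unknown score $s(r)$ and then route losses accordingly. Intuitively, $s(r)$ is high when $r$ looks distributionally extreme (Equation (2)) and is poorly aligned with every known-class prototype (Equation (3)). We define

$$s(r)=T(r)\,\bigl(1-a(r)\bigr), \tag{4}$$

where $T(r)\in[0,1]$ is the tail evidence and $a(r)\in[0,1]$ the normalized prototype alignment, and we optimize a balanced empirical risk over the proposal set $\mathcal{R}$ (with detector parameters $\theta$) that emphasizes open-set penalties for high-$s(r)$ proposals while preserving standard detection updates otherwise:

$$\hat R(\theta)=\frac{1}{|\mathcal{R}|}\sum_{r\in\mathcal{R}}\Bigl[w_{\text{det}}\bigl(1-s(r)\bigr)\,\mathcal{L}_{\text{det}}(r;\theta)+w_{\text{open}}\,s(r)\,\mathcal{L}_{\text{open}}(r;\theta)\Bigr].$$

Here, $\mathcal{L}_{\text{det}}$ is the standard detection loss (classification with background negatives plus box regression), and $\mathcal{L}_{\text{open}}$ aggregates unknown-rejection and confidence-regularization terms. The weights $w_{\text{det}}$ and $w_{\text{open}}$ are selected on a small validation split and remain fixed across tasks.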
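A minimal sketch of the soft unknown score and of the loss routing, assuming the product combination $s(r)=T(r)\,(1-a(r))$ and per-proposal loss values that have already been computed. The helper names and numbers are hypothetical; a real detector would compute the two per-proposal losses from its heads.

```python
def soft_unknown_score(tail_evidence, align):
    """s(r) = T(r) * (1 - a(r)): high only if tail evidence is high AND alignment is low."""
    return tail_evidence * (1.0 - align)


def routed_risk(samples, w_det=1.0, w_open=1.0):
    """Balanced empirical risk over proposals.

    samples: iterable of (s, loss_det, loss_open) per proposal, where loss_det and
             loss_open are precomputed per-proposal loss values (hypothetical inputs).
    """
    samples = list(samples)
    total = sum(w_det * (1.0 - s) * l_det + w_open * s * l_open for s, l_det, l_open in samples)
    return total / len(samples)


s_unknownish = soft_unknown_score(0.9, 0.2)  # extreme and poorly aligned
s_headlike = soft_unknown_score(0.9, 0.95)   # extreme but close to a head prototype
print(round(s_unknownish, 3), round(s_headlike, 3))  # 0.72 0.045
print(routed_risk([(0.0, 2.0, 5.0), (1.0, 2.0, 5.0)]))  # 3.5
```

The two scores illustrate the gating effect: with identical tail evidence, a proposal that aligns closely with a known prototype receives a much smaller unknown score and is routed mostly to the standard detection loss.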

3.2.5. Why This Calibrates Tails

In long-tailed regimes, gradients are dominated by head classes, which suppresses learning for rare classes and increases false-positive assignments of unknowns. CLT-AE enforces an object-centric reconstruction objective applied uniformly across head and tail classes. The bulk–tail density fit converts reconstruction deviations into calibrated tail evidence rather than relying on ad hoc thresholds. The prototype gate down-weights spurious alignments to head-class prototypes in the feature space. The soft score in Equation (4) then routes proposals with strong tail evidence to the open-set loss while preserving standard updates for known classes.

3.3. Prototype Guidance: Error Formulation

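Prototype guidance relies on per-batch prototypes from high-confidence known proposals rather than corpus-level counts, so it remains effective when exact dataset statistics are unavailable. Let $y_r$ be the ground-truth label for a proposal $r$ and let $s(r)$ be our soft unknown score. At a fixed decision threshold $\delta$, we predict unknown if $s(r)\ge\delta$ and known otherwise. We summarize operating-point errors with two conditional rates:

$$\varepsilon_U=\Pr\bigl(s(r)<\delta\mid y_r=U\bigr),\qquad \varepsilon_K=\Pr\bigl(s(r)\ge\delta\mid y_r\in\mathcal{K}_t\bigr).$$

Intuitively, $\varepsilon_U$ is the fraction of truly unknown proposals that are not flagged as unknown (they fall below $\delta$), whereas $\varepsilon_K$ is the fraction of truly known proposals that are incorrectly routed to the unknown branch (they reach or exceed $\delta$). In practice, we estimate these rates from the evaluation set as

$$\hat\varepsilon_U=\frac{\bigl|\{r: y_r=U,\ s(r)<\delta\}\bigr|}{\bigl|\{r: y_r=U\}\bigr|},\qquad \hat\varepsilon_K=\frac{\bigl|\{r: y_r\in\mathcal{K}_t,\ s(r)\ge\delta\}\bigr|}{\bigl|\{r: y_r\in\mathcal{K}_t\}\bigr|}.$$

Counts are taken over proposals with ground-truth object labels; background-only regions are excluded. A lower $\varepsilon_U$ aligns with higher U-Recall, while a lower $\varepsilon_K$ correlates with improved WI/A-OSE. In Section 5.10, we visualize the calibration and decision geometry of $s(r)$ and report $\hat\varepsilon_U$ and $\hat\varepsilon_K$ at $\delta$.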
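The two operating-point rates can be estimated with simple counting, as in the sketch below. It assumes ground-truth labels are available for evaluation proposals, uses the string `"U"` as a stand-in for the unknown label, and expects background-only regions to have been removed already; the function name and toy values are hypothetical.

```python
def operating_point_errors(scores, labels, delta):
    """Estimate (eps_U, eps_K) at decision threshold delta.

    scores: soft unknown scores s(r); labels: ground-truth labels, "U" for unknown and
    any other value for a known class. Background-only regions must be excluded beforehand.
    """
    unknown = [s for s, y in zip(scores, labels) if y == "U"]
    known = [s for s, y in zip(scores, labels) if y != "U"]
    eps_u = sum(s < delta for s in unknown) / len(unknown)  # missed unknowns
    eps_k = sum(s >= delta for s in known) / len(known)     # knowns routed to unknown
    return eps_u, eps_k


eps_u, eps_k = operating_point_errors(
    scores=[0.9, 0.4, 0.7, 0.1, 0.6],
    labels=["U", "U", "cat", "dog", "cat"],
    delta=0.5,
)
print(eps_u, round(eps_k, 3))  # 0.5 0.667
```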

3.4. Unsupervised Region Proposal Generation

To discover candidate objects without class bias, we leverage unsupervised region proposal methods. In particular, we use Selective Search and FreeSOLO for class-agnostic proposal generation. Selective Search employs bottom-up superpixel merging to propose object regions, whereas FreeSOLO provides self-supervised instance segmentation, whose masks we convert into bounding boxes. These complementary methods yield a diverse set of region proposals per image, covering both known and unknown objects. We apply non-maximum suppression and size filtering to refine the proposals, resulting in N high-quality candidate regions per image that likely contain an object but have no assigned class. All proposals are treated uniformly in subsequent stages, ensuring that no class-specific heuristics eliminate unknown objects.
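The proposal refinement step (mask-to-box conversion for FreeSOLO-style outputs, size filtering, and greedy non-maximum suppression) is sketched below in plain Python. The IoU threshold, minimum area, `top_n`, and the ranking scores are hypothetical; Selective Search does not output confidences, so some ranking would have to stand in for `scores` in practice.

```python
def mask_to_box(mask):
    """Tight box (x1, y1, x2, y2) around the nonzero pixels of a binary mask (list of rows)."""
    ys = [i for i, row in enumerate(mask) if any(row)]
    xs = [j for row in mask for j, v in enumerate(row) if v]
    if not ys:
        return None  # empty mask: no box
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


def box_area(b):
    return max(0, b[2] - b[0]) * max(0, b[3] - b[1])


def iou(a, b):
    ix = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def filter_proposals(boxes, scores, iou_thr=0.7, min_area=16, top_n=100):
    """Size filtering followed by greedy NMS; returns at most top_n boxes."""
    candidates = [i for i, b in enumerate(boxes) if box_area(b) >= min_area]
    kept = []
    for i in sorted(candidates, key=lambda i: scores[i], reverse=True):
        if all(iou(boxes[i], boxes[j]) < iou_thr for j in kept):
            kept.append(i)  # not overlapping any higher-ranked kept box
        if len(kept) == top_n:
            break
    return [boxes[i] for i in kept]


mask_box = mask_to_box([[0, 0, 0], [0, 1, 1], [0, 1, 0]])  # e.g. a FreeSOLO-style mask
boxes = [(0, 0, 10, 10), (1, 1, 10, 10), (20, 20, 30, 30), (0, 0, 2, 2), mask_box]
scores = [0.9, 0.8, 0.7, 0.95, 0.6]
print(mask_box)                         # (1, 1, 3, 3)
print(filter_proposals(boxes, scores))  # [(0, 0, 10, 10), (20, 20, 30, 30)]
```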

3.5. Multi-Scale Proposal Feature Extraction

We extract features for each proposal with a Feature Pyramid Network (FPN) backbone. Given an input image, the backbone (e.g., ResNet-50 with FPN) produces a pyramid of feature maps $\{F_\ell\}_{\ell=1}^{L}$. For each region $r$, we perform RoIAlign on each pyramid level $\ell$ to obtain a fixed-size feature map $f_\ell(r)\in\mathbb{R}^{C\times S\times S}$ capturing the content of $r$ at scale $\ell$. These multi-scale features $\{f_\ell(r)\}_{\ell=1}^{L}$ preserve both coarse context and fine details. We project each $f_\ell(r)$ to a common embedding dimension $d$ (using $1\times1$ convolutions) and flatten the spatial dimensions, yielding $S^2$ tokens per level. The result is a collection of $LS^2$ tokens $X_r\in\mathbb{R}^{LS^2\times d}$ representing proposal $r$ across all FPN levels. This forms the input to our transformer-based autoencoder, enabling multi-scale fusion.

To ensure consistent assignment of proposals to pyramid levels, we define an area-based bucketing rule. A proposal $r$ with bounding-box area $A_r=w_r h_r$ is mapped to a feature map according to thresholds $\kappa_1<\kappa_2<\dots<\kappa_{L-1}$:

$$\ell(r)=\begin{cases}1, & A_r<\kappa_1,\\ j, & \kappa_{j-1}\le A_r<\kappa_j,\quad j=2,\dots,L-1,\\ L, & A_r\ge\kappa_{L-1}.\end{cases}$$

This function, denoted by $\ell(r)$ with level 1 the finest, guarantees that small proposals align with higher-resolution maps and large proposals with lower-resolution ones; it is used in Algorithm 1 for density indexing and reconstruction-error computation.
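The area-based bucketing rule can be implemented with a binary search over sorted cut-offs. In this sketch, level 1 is the finest map and the cut-offs `kappas` are hypothetical values for $L=4$ levels; the paper's actual thresholds are not stated here.

```python
import bisect


def assign_level(box, thresholds):
    """Area-based bucketing l(r) in {1, ..., L}; level 1 is the finest (highest-resolution) map.

    thresholds: increasing area cut-offs kappa_1 < ... < kappa_{L-1}; a box with
    kappa_{j-1} <= area < kappa_j goes to level j.
    """
    area = (box[2] - box[0]) * (box[3] - box[1])
    return bisect.bisect_right(thresholds, area) + 1


kappas = [32 ** 2, 96 ** 2, 192 ** 2]  # hypothetical cut-offs for L = 4 levels
for side in (20, 32, 50, 100, 300):
    print(side, assign_level((0, 0, side, side), kappas))
# one pair per line: 20 1, 32 2, 50 2, 100 3, 300 4
```

`bisect_right` sends an area exactly equal to a cut-off to the coarser level, which matches the half-open intervals $\kappa_{j-1}\le A_r<\kappa_j$.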

3.6. Cross-Level Transformer Autoencoder

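We design a transformer autoencoder to compress and reconstruct the multi-scale proposal features. A transformer encoder fuses the cross-level tokens of each proposal: we prepend a learnable [PROPOSAL] token $x_{\text{prop}}$ to the proposal's multi-scale token sequence $X_r$ and feed the sequence into $N_{\text{enc}}$ layers of multi-head self-attention (MHSA) and feed-forward networks, producing

$$H_r=\mathrm{Enc}\bigl([x_{\text{prop}};X_r]\bigr).$$

Each self-attention layer computes

$$\mathrm{Attn}(Q,K,V)=\mathrm{softmax}\!\Bigl(\frac{QK^{\top}}{\sqrt{d_k}}\Bigr)V, \tag{6}$$

with $Q$, $K$, and $V$ obtained from linear projections of the inputs. The [PROPOSAL] token attends to the features of all levels, yielding a compact cross-level representation $z_r$ (its output embedding in $H_r$). A transformer decoder uses $z_r$ to reconstruct the original multi-scale features $f_\ell(r)$ via $N_{\text{dec}}$ layers of cross-attention from $z_r$ to level/location query tokens, producing initial reconstructions $\tilde f_\ell(r)$. To preserve fine details, we add attention-gated skip connections: we compute $g_\ell=\sigma\bigl(\mathrm{GAP}(f_\ell(r))\bigr)$ (GAP: global average pooling; $\sigma$: sigmoid) and form the final reconstruction

$$\hat f_\ell(r)=\tilde f_\ell(r)+g_\ell\odot f_\ell(r), \tag{7}$$

where $\odot$ denotes channel-wise multiplication. The autoencoder is trained by minimizing the per-pixel reconstruction loss

$$\mathcal{L}_{\text{rec}}=\sum_{\ell=1}^{L}\frac{1}{|\Omega_\ell|}\sum_{p\in\Omega_\ell}\bigl\|f_\ell(r)[p]-\hat f_\ell(r)[p]\bigr\|_2^2, \tag{8}$$

which forces $z_r$ to capture the essential content of known objects while being less faithful to unknown/background patterns.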
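To make the shapes concrete, the NumPy sketch below implements single-head scaled dot-product attention (Equation (6)) over the [PROPOSAL] token plus the multi-scale tokens, and one reading of the channel-gated skip in Equation (7). It is a simplification under stated assumptions: one layer, one head, no feed-forward network, residuals, or normalization, random toy weights, and a randomly initialized stand-in for the learnable [PROPOSAL] token.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, Wq, Wk, Wv):
    """Single-head scaled dot-product attention, Eq. (6): softmax(QK^T / sqrt(d_k)) V."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    A = softmax(Q @ K.T / np.sqrt(K.shape[-1]), axis=-1)
    return A @ V, A


def gated_skip(f, f_tilde):
    """One reading of Eq. (7): hat f = f_tilde + sigmoid(GAP(f)) * f, one gate per channel.

    f, f_tilde: arrays of shape (C, S, S).
    """
    g = 1.0 / (1.0 + np.exp(-f.mean(axis=(1, 2))))  # GAP over space, then sigmoid
    return f_tilde + g[:, None, None] * f


rng = np.random.default_rng(0)
L, S, d = 2, 2, 8                               # toy sizes: 2 levels, 2x2 RoI grid, d = 8
X_r = rng.normal(size=(L * S * S, d))           # multi-scale tokens of one proposal
x_prop = rng.normal(size=(1, d))                # stand-in for the learnable [PROPOSAL] token
tokens = np.concatenate([x_prop, X_r], axis=0)  # shape (1 + L*S*S, d)
Wq, Wk, Wv = (rng.normal(size=(d, d)) / np.sqrt(d) for _ in range(3))
out, A = self_attention(tokens, Wq, Wk, Wv)
z_r = out[0]                                    # output at the [PROPOSAL] position
f = rng.normal(size=(4, S, S))
print(out.shape, z_r.shape, gated_skip(f, np.zeros_like(f)).shape)  # (9, 8) (8,) (4, 2, 2)
```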

3.7. Reconstruction Error Estimation

After the cross-level autoencoding stage, each proposal $r$ has a set of reconstructed feature maps $\{\hat f_\ell(r)\}_{\ell=1}^{L}$. To quantify how well the autoencoder explains the content of $r$ at each FPN level $\ell$, we compute a location-wise discrepancy between the original feature $f_\ell(r)$ and its reconstruction $\hat f_\ell(r)$. We adopt an $\ell_1$ deviation because it is less sensitive to occasional large activations and yields sharper error maps that align with object boundaries. Formally, letting $p\in\Omega_\ell$ index the spatial grid,

$$E_\ell(r)[p]=\bigl\|f_\ell(r)[p]-\hat f_\ell(r)[p]\bigr\|_1.$$

These per-pixel maps highlight regions that the autoencoder fails to reproduce, which typically arise for out-of-distribution structures (unknown objects or clutter). To summarize the evidence across resolution levels and spatial locations into a single uncertainty score for the proposal, we average the error magnitudes first over the spatial grid and then across levels:

$$e(r)=\frac{1}{L}\sum_{\ell=1}^{L}\frac{1}{|\Omega_\ell|}\sum_{p\in\Omega_\ell}E_\ell(r)[p].$$

Small values of $e(r)$ indicate that the proposal lies on (or near) the manifold of known objects learned by the autoencoder, whereas large values signal content that is poorly reconstructable and thus likely unknown or background. A single threshold on $e(r)$ is brittle because reconstruction errors vary with scale, texture, and context. Instead, we model the distribution of errors for two groups collected during training: (1) proposals aligned to ground-truth known instances, producing samples $\mathcal{E}_K=\{e(r)\}$, and (2) proposals not matching any known instance, producing $\mathcal{E}_B$ (background and potential unknowns). We estimate class-conditional densities for these sets by using three complementary families and select the best one based on validation likelihood or unknown-detection performance.
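The two-step averaging (spatial mean per level, then mean across levels) is easy to get wrong when levels have different resolutions, so here is a small NumPy sketch. Feature maps are assumed to be arrays of shape (C, S, S) per level; the function names and toy arrays are illustrative.

```python
import numpy as np


def error_maps(feats, recons):
    """Per-location l1 error E_l[p] = sum_c |f_l[c, p] - hat f_l[c, p]| for each level l."""
    return [np.abs(f - r).sum(axis=0) for f, r in zip(feats, recons)]


def proposal_error(feats, recons):
    """e(r): mean over the spatial grid of each level, then mean across levels."""
    return float(np.mean([E.mean() for E in error_maps(feats, recons)]))


# Toy proposal with two levels of different resolution (C = 2 channels each).
feats = [np.ones((2, 2, 2)), np.zeros((2, 1, 1))]
recons = [np.zeros((2, 2, 2)), np.full((2, 1, 1), 0.5)]
print([E.shape for E in error_maps(feats, recons)])  # [(2, 2), (1, 1)]
print(proposal_error(feats, recons))                 # 1.5
```

Averaging within each level first gives every pyramid level equal weight in $e(r)$, regardless of how many spatial locations it has.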

3.8. Distribution Fitting for Uncertainty Estimation

Reconstruction errors vary significantly depending on whether a proposal corresponds to a known object, an unknown object, or pure background. A fixed threshold is insufficient to capture this variability, particularly when the error distributions are multi-modal or heavy-tailed. To achieve robust separation, we explicitly fit probabilistic models to the reconstruction errors collected during training. For proposals aligned to labeled ground-truth objects, we obtain a set $\mathcal{E}_K$ of known-class errors, while errors from proposals not overlapping any labeled object form $\mathcal{E}_B$, which includes background and potentially unknown objects. By learning densities $p_K(e)$ and $p_B(e)$ for these two sets, our framework transforms raw errors into calibrated likelihoods that drive pseudo-labeling and unknown discovery.

3.8.1. Kernel Density Estimation (KDE)

As reconstruction errors do not necessarily follow simple parametric laws, we first employ nonparametric Kernel Density Estimation (KDE), which flexibly models arbitrary shapes. This is particularly useful when known-class errors are sharply peaked while background errors are dispersed. Given $n_B$ background errors $\{e_i\}_{i=1}^{n_B}\subset\mathcal{E}_B$, the estimated density is

$$\hat p_B(e)=\frac{1}{n_B h}\sum_{i=1}^{n_B}K\!\Bigl(\frac{e-e_i}{h}\Bigr),$$

where $K$ is a Gaussian kernel and $h$ is the bandwidth controlling smoothness. An analogous estimate $\hat p_K(e)$ is obtained for known-class errors. KDE provides a data-driven baseline that adapts to the empirical structure of reconstruction errors.
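A minimal Gaussian KDE matching the estimator above is shown next. The normal-reference bandwidth rule ($1.06\,\hat\sigma\,n^{-1/5}$) is an assumed default for illustration, since the bandwidth choice is not specified here, and the toy error values are made up.

```python
import math


def gaussian_kde(samples, h):
    """Return p_hat(e) = 1/(n h) * sum_i K((e - e_i) / h) with a standard Gaussian kernel K."""
    n = len(samples)
    norm = 1.0 / (n * h * math.sqrt(2.0 * math.pi))

    def density(e):
        return norm * sum(math.exp(-0.5 * ((e - ei) / h) ** 2) for ei in samples)

    return density


def rule_of_thumb_bandwidth(samples):
    """Normal-reference bandwidth 1.06 * std * n^(-1/5) (an assumed default)."""
    n = len(samples)
    mean = sum(samples) / n
    std = math.sqrt(sum((x - mean) ** 2 for x in samples) / (n - 1))
    return 1.06 * std * n ** (-0.2)


bg_errors = [0.42, 0.55, 0.61, 0.70, 0.95, 1.30]  # toy background errors
p_b = gaussian_kde(bg_errors, rule_of_thumb_bandwidth(bg_errors))
grid_step = 0.001
mass = sum(p_b(i * grid_step) for i in range(-2000, 4000)) * grid_step  # Riemann sum on [-2, 4)
print(round(mass, 3))  # 1.0: the estimate integrates to one
```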

3.8.2. Gaussian Mixture Model (GMM)

While KDE is flexible, it can overfit or underfit depending on the bandwidth. As a smooth and interpretable alternative, we also fit a Gaussian Mixture Model (GMM), which assumes that reconstruction errors arise from a mixture of two latent clusters: low error (known) and high error (background/unknown). Formally, the error density is expressed as

$$p(e)=\omega_1\,\mathcal{N}(e;\mu_1,\sigma_1^2)+\omega_2\,\mathcal{N}(e;\mu_2,\sigma_2^2),$$

where $\omega_1$ and $\omega_2$ ($\omega_1+\omega_2=1$) are mixture weights learned, together with the component means and variances, via expectation–maximization. This parametric approach provides stable boundaries between error regimes, facilitating the probabilistic assignment of proposals.
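The two-component mixture can be fitted with a few lines of expectation–maximization. This 1-D sketch initializes the low- and high-error components from the lower and upper halves of the sorted errors, which is an assumption (k-means or random restarts are common alternatives); the toy data are illustrative.

```python
import math


def normal_pdf(x, mu, var):
    return math.exp(-0.5 * (x - mu) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def fit_gmm2(xs, iters=100, min_var=1e-6):
    """EM for a two-component 1-D Gaussian mixture (component 0 = low error)."""
    xs = sorted(xs)
    n, half = len(xs), len(xs) // 2
    groups = [xs[:half], xs[half:]]  # initial split: lower vs. upper half
    mu = [sum(g) / len(g) for g in groups]
    var = [max(min_var, sum((x - m) ** 2 for x in g) / len(g)) for g, m in zip(groups, mu)]
    w = [0.5, 0.5]
    for _ in range(iters):
        # E-step: responsibility of each component for each sample
        resp = []
        for x in xs:
            p = [w[k] * normal_pdf(x, mu[k], var[k]) for k in range(2)]
            z = sum(p) or 1e-300  # guard against total underflow
            resp.append([pk / z for pk in p])
        # M-step: update mixture weights, means, and variances
        for k in range(2):
            nk = sum(r[k] for r in resp)
            w[k] = nk / n
            mu[k] = sum(r[k] * x for r, x in zip(resp, xs)) / nk
            var[k] = max(min_var, sum(r[k] * (x - mu[k]) ** 2 for r, x in zip(resp, xs)) / nk)
    return w, mu, var


errors = [0.09, 0.10, 0.10, 0.11, 0.12, 0.85, 0.90, 0.95, 1.00, 1.10]  # toy errors
w, mu, var = fit_gmm2(errors)
print([round(x, 2) for x in w], [round(x, 2) for x in mu])
```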

3.8.3. Exponential Weibull with GPD Tail

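Open-world detection must also handle extreme reconstruction errors produced by highly novel or noisy regions. To capture this heavy-tailed behavior, we fit an Exponential Weibull (EW) distribution to the background errors. The EW cumulative distribution function is

$$F_{\text{EW}}(e;\lambda,k,\alpha)=\Bigl[1-\exp\bigl(-(e/\lambda)^{k}\bigr)\Bigr]^{\alpha},\qquad e\ge 0. \tag{13}$$

Equation (13) defines the distribution with scale $\lambda>0$ and shape parameters $k,\alpha>0$; the probability density function follows by differentiation. To further refine the modeling of extreme cases, we augment EW with a Generalized Pareto Distribution (GPD) fitted to the upper tail (e.g., errors beyond the 95th percentile $u$). For an exceedance $y=e-u\ge 0$, the GPD has CDF

$$G_{\xi,\beta}(y)=1-\Bigl(1+\frac{\xi y}{\beta}\Bigr)^{-1/\xi}$$

with shape $\xi\neq 0$ and scale $\beta>0$ (the limit $\xi\to 0$ gives an exponential tail), so that the spliced model is $F(e)=F_{\text{EW}}(u)+\bigl(1-F_{\text{EW}}(u)\bigr)\,G_{\xi,\beta}(e-u)$ for $e>u$. This hybrid treatment ensures that the large errors characteristic of unknowns are not underestimated. Among the three candidates (KDE, GMM, and EW+GPD), our framework selects the most appropriate density model for each error set based on validation likelihood and detection performance. The resulting calibrated densities $p_K$ and $p_B$ provide a principled probabilistic foundation for uncertainty-aware pseudo-labeling, ensuring that ambiguous proposals are weighted appropriately and that extreme unknowns are properly emphasized.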
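The EW bulk and the GPD tail can be combined as below. The EW parameters are hypothetical, and the GPD is fitted with the method of moments (valid for $\xi<1/2$) purely to keep the sketch short; maximum-likelihood fitting, as provided for example by SciPy's `genpareto`, would be a more typical choice.

```python
import math


def ew_cdf(e, lam, k, alpha):
    """Exponentiated Weibull CDF F(e) = [1 - exp(-(e/lam)^k)]^alpha for e >= 0."""
    if e <= 0:
        return 0.0
    return (1.0 - math.exp(-((e / lam) ** k))) ** alpha


def fit_gpd_moments(excesses):
    """Method-of-moments GPD fit on excesses y = e - u (valid for xi < 1/2)."""
    n = len(excesses)
    m = sum(excesses) / n
    v = sum((y - m) ** 2 for y in excesses) / (n - 1)
    xi = 0.5 * (1.0 - m * m / v)
    beta = 0.5 * m * (m * m / v + 1.0)
    return xi, beta


def gpd_sf(y, xi, beta):
    """GPD survival function P(Y > y) for an excess y >= 0."""
    if abs(xi) < 1e-9:
        return math.exp(-y / beta)  # exponential limit as xi -> 0
    base = 1.0 + xi * y / beta
    return 0.0 if base <= 0 else base ** (-1.0 / xi)


def spliced_cdf(e, bulk_cdf, u, xi, beta):
    """Bulk CDF up to threshold u, GPD tail above it: F(u) + (1 - F(u)) * G(e - u)."""
    if e <= u:
        return bulk_cdf(e)
    fu = bulk_cdf(u)
    return fu + (1.0 - fu) * (1.0 - gpd_sf(e - u, xi, beta))


def bulk(e):
    return ew_cdf(e, lam=0.6, k=2.0, alpha=1.5)  # hypothetical fitted EW parameters


xi, beta = fit_gpd_moments([0.0, 2.0])  # toy excesses over u
print(xi, beta)  # 0.25 0.75
u = 1.2
print(abs(spliced_cdf(u, bulk, u, xi, beta) - bulk(u)) < 1e-12)  # True: continuous at u
```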

3.9. Uncertainty-Aware Pseudo-Labeling

Rather than binarizing a proposal with a hard threshold, we compute a posterior-like soft knownness score from the fitted densities. Given an error $e$, the probability that it was generated by the known-class distribution is

$$q(e)=\frac{p_K(e)}{p_K(e)+p_B(e)},$$

where $p_K$ and $p_B$ are the fitted known-class and background error densities. This continuous score naturally down-weights ambiguous cases. During detection training, we weight the loss terms by these confidence scores: for proposals labeled as unknown, we use $1-q(e)$, and for known proposals, we use $q(e)$. In effect, uncertain samples contribute less, which stabilizes pseudo-supervision and reduces noise propagation.
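The soft knownness score and the resulting loss weights can be sketched as follows. The densities here are hypothetical Gaussians standing in for the fitted $p_K$ and $p_B$; `prior_known=0.5` reproduces the equal-prior form $q(e)=p_K/(p_K+p_B)$, and other priors are an optional assumption.

```python
import math


def normal_pdf(x, mu, sd):
    return math.exp(-0.5 * ((x - mu) / sd) ** 2) / (sd * math.sqrt(2.0 * math.pi))


def knownness(e, p_known, p_bg, prior_known=0.5):
    """Posterior-like q(e) = pi_K p_K(e) / (pi_K p_K(e) + pi_B p_B(e)); equal priors by default."""
    num = prior_known * p_known(e)
    den = num + (1.0 - prior_known) * p_bg(e)
    return num / den if den > 0.0 else 0.5  # both densities vanish: maximally uncertain


def loss_weight(e, labeled_unknown, p_known, p_bg):
    """Weight 1 - q(e) for pseudo-unknown proposals and q(e) for known proposals."""
    q = knownness(e, p_known, p_bg)
    return 1.0 - q if labeled_unknown else q


# Hypothetical fitted densities: sharp low-error known mode, broad high-error background.
def p_k(e):
    return normal_pdf(e, 0.1, 0.05)


def p_b(e):
    return normal_pdf(e, 0.8, 0.2)


for e in (0.1, 0.3, 0.9):
    print(e, round(knownness(e, p_k, p_b), 3), round(loss_weight(e, True, p_k, p_b), 3))
```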

3.10. Prototype-Guided Contrastive Learning

To further separate known from unknown proposals in the representation space, we maintain a dynamic prototype $\boldsymbol{\mu}$, the $\ell_2$-normalized mean of confident known RoI embeddings, and align proposal features relative to this prototype. In practice, we update $\boldsymbol{\mu}$ using RoI features extracted from a single FPN level, which provides a stable balance between spatial resolution and semantic abstraction across scales. Drawing features from one level keeps the prototype consistent and prevents any single scale from dominating it throughout incremental training.

For a proposal feature $\mathbf{f}$, we measure the cosine similarity to the prototype and its induced distance:

$$s(\mathbf{f}) = \frac{\mathbf{f}^{\top}\boldsymbol{\mu}}{\lVert\mathbf{f}\rVert_2\,\lVert\boldsymbol{\mu}\rVert_2}, \qquad d(\mathbf{f}) = 1 - s(\mathbf{f}).$$

Let $y \in \{0, 1\}$ indicate known (1) versus unknown (0). We then enforce compactness for known proposals and a margin for unknown ones via

$$\mathcal{L}_{\mathrm{proto}} = y\, d(\mathbf{f}) + (1 - y)\,\max\bigl(0,\; m - d(\mathbf{f})\bigr),$$

where $m$ is a margin. This objective pulls known features toward $\boldsymbol{\mu}$ and pushes unknowns away, creating a wide buffer around the known manifold. The induced distance $d(\mathbf{f})$ also serves as a feature-space anomaly score that complements the reconstruction-error signal.
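A minimal sketch of the prototype bookkeeping and the margin objective follows, written in plain Python for readability. Assumptions: each embedding is $\ell_2$-normalized before it is accumulated, the prototype is the $\ell_2$-normalized running mean, the loss uses an unsquared hinge with a hypothetical margin of 0.5 (the paper's margin value is not reproduced here), and `KnownPrototype`, `cosine_distance`, and `prototype_loss` are illustrative names.

```python
import math


def l2_normalize(v, eps=1e-12):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / (norm + eps) for x in v]


class KnownPrototype:
    """Running l2-normalized mean of confident known RoI embeddings."""

    def __init__(self, dim):
        self.total = [0.0] * dim
        self.count = 0

    def update(self, embeddings):
        for z in embeddings:
            for i, x in enumerate(l2_normalize(z)):
                self.total[i] += x
            self.count += 1

    def vector(self):
        if self.count == 0:
            raise ValueError("prototype has no embeddings yet")
        return l2_normalize([t / self.count for t in self.total])


def cosine_distance(f, mu):
    """d(f) = 1 - cos(f, mu); mu is assumed to be unit-norm already."""
    f_hat = l2_normalize(f)
    return 1.0 - sum(a * b for a, b in zip(f_hat, mu))


def prototype_loss(f, mu, is_known, margin=0.5):
    """Pull known features toward mu; push unknowns beyond the margin."""
    d = cosine_distance(f, mu)
    return d if is_known else max(0.0, margin - d)


proto = KnownPrototype(dim=3)
proto.update([[1.0, 0.1, 0.0], [0.9, -0.1, 0.0]])
mu = proto.vector()
print(prototype_loss([2.0, 0.0, 0.0], mu, is_known=True))   # near 0
print(prototype_loss([0.0, 0.0, 1.0], mu, is_known=False))  # 0.0: already far
```

Unknown features that already lie beyond the margin contribute zero loss, so the hinge only acts on unknowns that drift toward the known manifold.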

3.11. Incremental Open-World Training

We adopt an incremental learning setting. The model is first trained on an initial set of known classes; all other objects are treated as unknown. Then, over subsequent tasks, new classes are introduced with labels, and the detector is fine-tuned without forgetting previous classes. At task $t$, images contain labeled instances of the current classes and unlabeled instances of future classes, and an "unknown" category is kept throughout. During training at task $t$, our reconstruction-based module provides soft labels that flag high-error proposals as unknown, the known-class prototype is updated to include the newly introduced classes, and the prototype-guided contrastive loss refines the feature geometry. New-class heads are initialized and trained with standard cross-entropy on labeled proposals, while old heads are preserved (optionally with distillation). Across tasks, proposals that do not match any current known class are assigned to the unknown category based on reconstruction uncertainty and objectness. When a previously unknown class becomes known, its instances contribute both to supervised learning and to the prototype. By the final task, all classes have been introduced, and the detector has learned incrementally while remaining unknown-aware, enabling continual expansion without manual annotation of unknowns.
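The per-task label routing can be summarized as follows. This is an illustrative sketch, not the authors' code: the knownness and objectness thresholds (0.5 each) and the `Proposal` fields are hypothetical, and in TAPM the unknown assignment is additionally soft-weighted rather than decided by the hard rule shown here.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass
class Proposal:
    labeled_class: Optional[str]  # annotation available in the current task, if any
    knownness: float              # P(known | reconstruction error), in [0, 1]
    objectness: float             # detector objectness score, in [0, 1]


def route_label(p: Proposal, known: Set[str],
                knownness_thr: float = 0.5, objectness_thr: float = 0.5) -> str:
    """Assign a training target to one proposal at the current task."""
    if p.labeled_class is not None and p.labeled_class in known:
        return p.labeled_class
    # Unmatched, object-like, and poorly explained by the known error density.
    if p.knownness < knownness_thr and p.objectness >= objectness_thr:
        return "unknown"
    return "background"


# Hypothetical task schedule: the known set only grows across tasks.
tasks = [{"person", "car"}, {"dog"}, {"kite"}]
known: Set[str] = set()
for t, new_classes in enumerate(tasks, start=1):
    known |= new_classes
    label = route_label(Proposal(None, knownness=0.2, objectness=0.9), known)
    print(t, sorted(known), label)
```

Because the known set only grows, a class that was routed to "unknown" in an early task is routed to its own label once its annotations arrive, matching the behavior described above.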

5. Results and Discussion

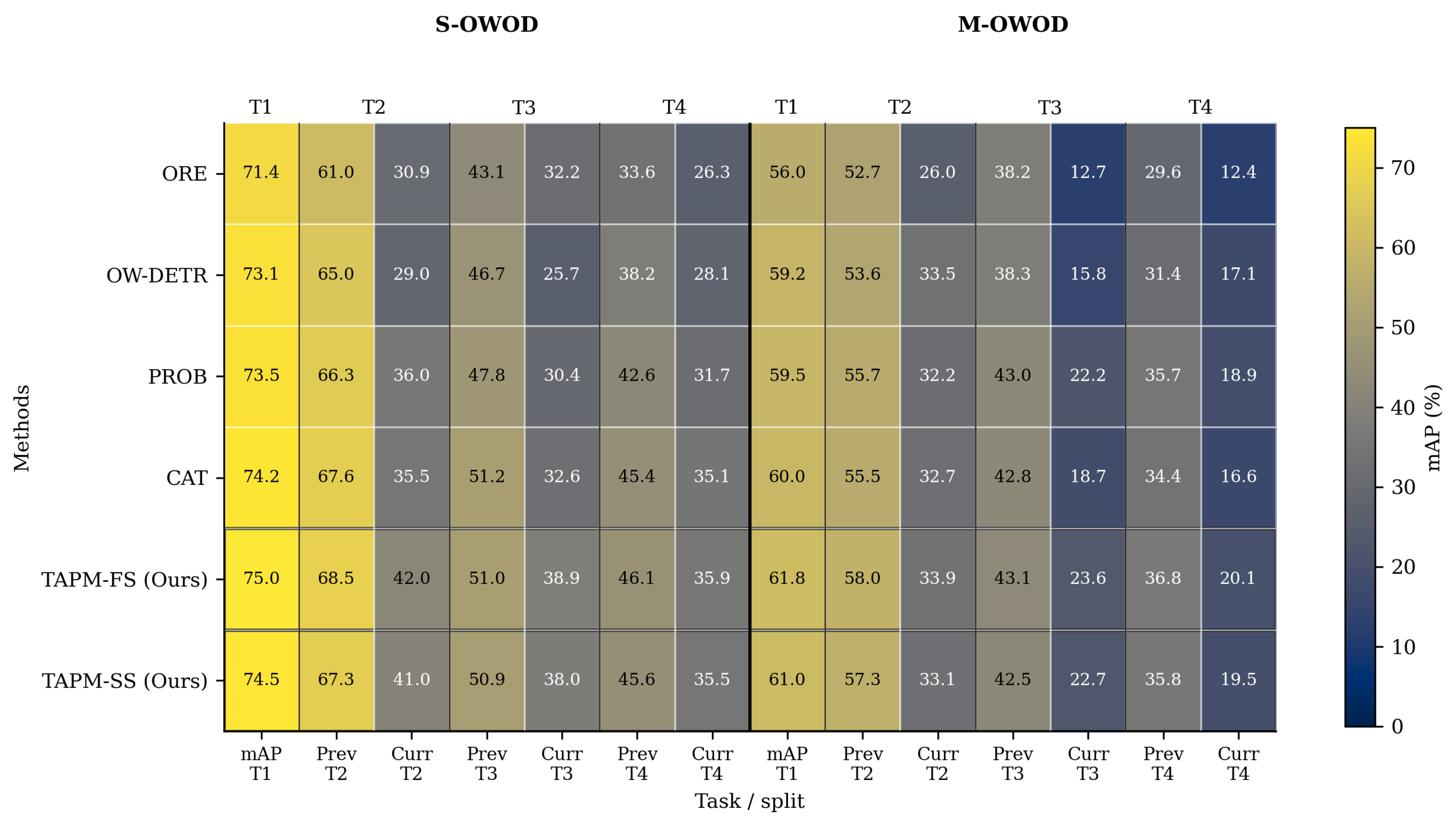

We evaluate TAPM under both superclass-separated (S-OWODB) and superclass-mixed (M-OWODB) settings, reporting incremental performance across tasks (T1–T4).

Table 3 and Table 4 summarize detection quality on known classes (Prev/Curr mAP), discovery ability on unknown objects (U-Recall), and open-set robustness (A-OSE and WI). We compare our results against representative OWOD baselines, including Faster R-CNN (with/without fine-tuning), ORE, OW-DETR, PROB, and CAT. Two proposal generators are considered for TAPM: Selective Search (SS) [32] and FreeSOLO (FS) [33]. Below, we analyze trends by setting and task and relate the gains to the components of TAPM (CLT-AE, density fitting with GPD tails, and prototype-gated soft labels).

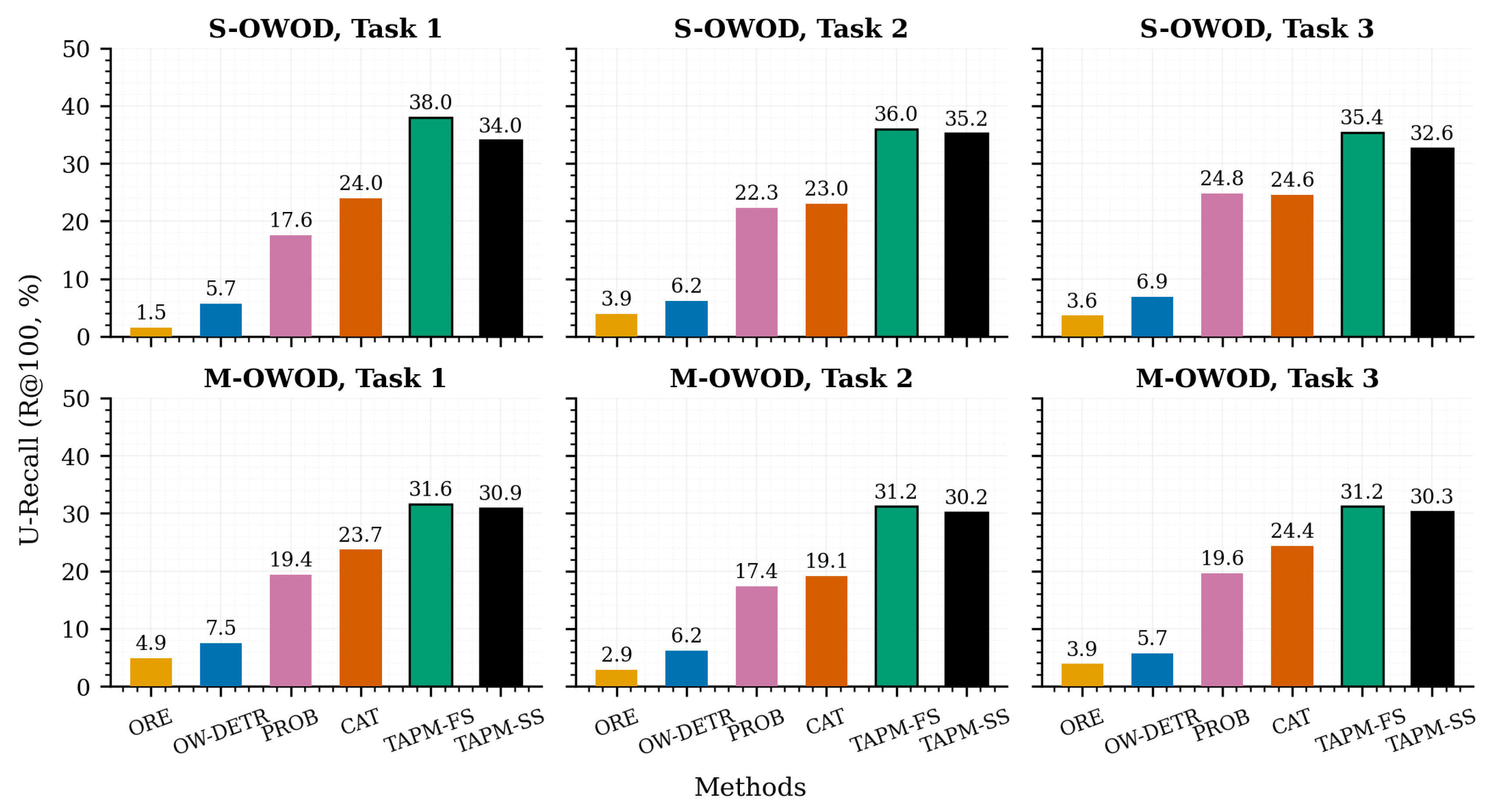

5.1. Overall Trends

Across both benchmarks, TAPM consistently achieves the highest unknown recall while preserving or improving mAP on known categories. On S-OWODB, TAPM-FS raises T1 U-Recall to 38.0 (Table 3), surpassing the strongest baseline (CAT: 24.0) by +14.0 points and PROB (17.6) by +20.4. These gains persist through T2 and T3 (T2 U-Recall: 36.0 vs. PROB 22.3/CAT 23.0; T3 U-Recall: 35.4 vs. PROB 24.8/CAT 24.6), while Curr mAP improves concurrently (T2: 42.0 vs. 36.0/35.5; T3: 38.9 vs. 30.4/32.6). Under M-OWODB, a more complex, label-imbalanced regime, TAPM again leads in unknown recall (T1: 31.6 vs. CAT 23.7; T2: 31.2 vs. 17.4–19.1; T3: 31.2 vs. 19.6–24.4) while keeping known-class performance competitive (T2 Curr mAP: 33.9 vs. 32.2/32.7; T3 Curr mAP: 23.6 vs. 22.2/18.7). Figure 3 shows the Current- and Previous-class mAP across all incremental learning tasks for our method and the previous SOTA methods.

Figure 4 compares our method with the previous SOTA on unknown recall, highlighting its stronger detection of unknowns.

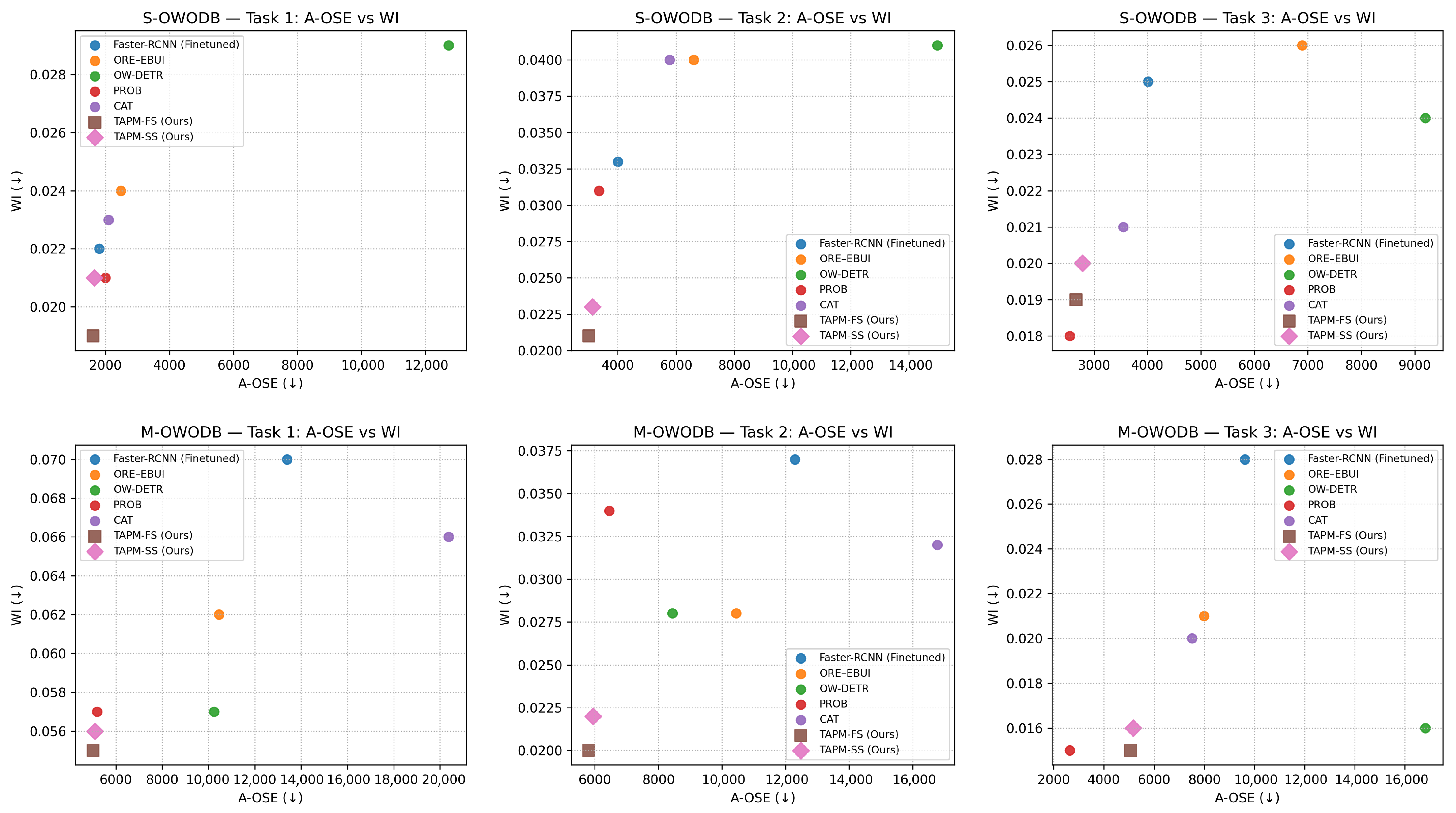

Figure 5 compares the A-OSE and WI of our method with those of the current SOTA methods.

Open-set reliability corroborates these findings. On S-OWODB, TAPM reduces both absolute mistakes on unknowns (A-OSE) and the normalized penalty (WI) in T1 and T2 (e.g., T2: A-OSE 3012 vs. PROB 3358; WI 0.021 vs. 0.031) and remains competitive in T3 (A-OSE 2662 vs. PROB 2546; WI 0.019 vs. 0.018). On M-OWODB, TAPM delivers a substantial WI reduction in T2 (0.020 vs. PROB 0.034) while also lowering A-OSE (5815 vs. 6452). Together, these results indicate that TAPM not only finds more unknowns but also curbs the tendency to mislabel them as known.

5.2. S-OWODB: Incremental Learning with Clear Class Boundaries

In task 1, with no prior knowledge of future classes, TAPM-FS achieves the strongest balance between discovery and precision (U-Recall of 38.0 and mAP of 75.0). Relative to the best baseline (CAT), TAPM improves unknown recall by +14.0 while also nudging mAP upward (+0.8), and its A-OSE/WI are the lowest among all methods. As summarized in Table 4, TAPM-FS and TAPM-SS achieve the lowest A-OSE and WI in most phases of tasks 1–3 on S-OWODB and M-OWODB, indicating fewer unknowns misclassified as known and a smaller precision drop when unknowns are present. The score-based analysis links the probabilistic unknown score and its operating-point error rates directly to detection performance (U-Recall, WI, and A-OSE) at the same operating point; in Table 4, TAPM obtains an A-OSE/WI of 1610/0.019 in T1, reflecting fewer misclassifications of unknowns as known. As new classes arrive in task 2, TAPM maintains strong retention (Prev mAP 68.5) and boosts the learning of current classes (Curr mAP 42.0), outperforming PROB/CAT by +6.0/+6.5 points, respectively. Unknown discovery remains high (U-Recall of 36.0), and open-set errors drop substantially (A-OSE of 3012 and WI of 0.021), indicating that prototype-gated soft labeling effectively suppresses false-known assignments for ambiguous proposals.

In task 3, TAPM continues to lead in U-Recall (35.4) and Curr mAP (38.9), with retention comparable to that of CAT (Prev mAP of 51.0 vs. 51.2). Although PROB attains a slightly lower A-OSE (2546 vs. 2662) and WI (0.018 vs. 0.019), TAPM remains competitive on both while achieving higher recall and Curr mAP. This trade-off suggests that TAPM adopts a more recall-seeking stance while keeping the normalized open-set risk in check. When all classes are revealed in task 4 (no unknowns remain), TAPM sustains top-tier mAP (Prev/Curr: 46.1/35.9), indicating that earlier pseudo-labeling did not erode final supervised learning.

5.3. M-OWODB: Mixed Superclasses and Stronger Imbalance

The mixed-superclass regime intensifies overlaps between known and unknown semantics and amplifies tail effects, yet TAPM remains robust. In task 1, TAPM-FS yields 31.6 U-Recall and 61.8 mAP, improving unknown discovery by +7.9 over CAT with a +1.8 mAP gain. A-OSE and WI also improve (Table 4, T1: 5019/0.055 vs. PROB 5195/0.057), indicating fewer open-set mistakes after normalization. In task 2, TAPM excels in both discovery and stability (U-Recall of 31.2; Prev/Curr mAP of 58.0/33.9), while WI decreases to 0.020 (vs. PROB 0.034). This large drop (∼41% relative) suggests that CLT-AE's error modeling, combined with GPD tail calibration, separates unknowns from knowns better even under mixed superclasses.

In task 3, TAPM secures the best U-Recall (31.2) and Curr mAP (23.6) and matches PROB's WI. However, its A-OSE is substantially higher than PROB's (5059 vs. 2641), suggesting increased residual confusion on especially ambiguous unknowns. If an application prioritizes conservative unknown handling, this can be mitigated in practice by slightly tightening the prototype gate or raising the unknown threshold, typically at a marginal cost in recall. As in S-OWODB, TAPM maintains known-class accuracy in task 4, achieving Prev/Curr mAP of 36.8/20.1 and edging out the alternatives (e.g., PROB: 35.7/18.9), which confirms that our pseudo-labeling strategy does not hinder the final fully supervised phase.

5.4. Impact of Proposal Generator: SS vs. FS

FreeSOLO proposals consistently provide a slight yet reliable edge over Selective Search in both discovery and detection quality. On S-OWODB T1, TAPM-FS improves U-Recall by +4.0 over TAPM-SS (38.0 vs. 34.0) with a slight mAP gain (75.0 vs. 74.5). On M-OWODB T1, the U-Recall gap is smaller but still positive (31.6 vs. 30.9). The pattern repeats across tasks, indicating that denser, segmentation-driven proposals cover unknown instances better and give CLT-AE and the density model richer candidate sets to operate on.

5.5. Why TAPM Works

- (i) CLT-AE for tail calibration. The cross-level transformer autoencoder aligns multi-scale features and reconstructs object-centric structure, so unknowns and atypical tail instances manifest as larger reconstruction errors. This shift makes low-data (tail) modes more separable from background, improving Curr mAP on newly introduced classes (e.g., S-OWODB T2/T3: +6.0 to +8.5 points over PROB/CAT).
- (ii) Best-of density with GPD tails. Selecting the most suitable error model per level (KDE/GMM/EW) and refining the extremes with a GPD tail sharpens the knownness posterior. The large WI reduction in M-OWODB T2 (0.020 vs. 0.034) exemplifies improved calibration where mixed semantics typically blur boundaries.
- (iii) Prototype-gated soft labels. Cosine gating against a running known prototype suppresses background-like or off-manifold proposals, cutting false-known assignments (lower A-OSE/WI) without sacrificing recall. The joint effect with calibrated densities explains why TAPM achieves both higher unknown recall and competitive (often superior) mAP.

5.6. Computational Complexity Analysis

All training experiments were conducted on four NVIDIA RTX A6000 GPUs. Throughput (FPS) and floating-point cost (GFLOPs) are reported per image at inference and are measured under identical pre-/post-processing and input resolution for all methods. The notation “NG” in Table 5 indicates that no explicit external proposal generation stage is required (i.e., DETR-family and other end-to-end detectors); otherwise, the reported training time includes proposal generation where applicable.

Table 5 summarizes training time, inference speed, and computational cost on S-OWODB. Relative to a fine-tuned Faster R-CNN baseline (14 h, 25 FPS, 185 GFLOPs), the proposed TAPM variants increase wall-clock training time moderately to 21 h while keeping inference essentially unchanged (24 FPS) with comparable arithmetic cost (180 GFLOPs). Most of the extra training time comes from CLT-AE reconstruction and the bulk–tail (GPD) fit; at inference, the overhead is negligible (a few lightweight projections and prototype cosine checks per proposal). Rows marked “NG” (CAT and PROB) are DETR-based and thus skip proposal generation but still train for substantially longer (46 h and 40 h) and run slower at inference (18–20 FPS) despite similar GFLOPs, reflecting the higher optimization cost of end-to-end transformer training under our settings. RE-OWOD (30 h, 24 FPS, 180 GFLOPs), MEPU (25 h, 24 FPS, 180 GFLOPs), and UC-OWOD (30 h, 24 FPS, 185 GFLOPs) use Faster R-CNN with proposals and show throughput close to the baseline. OWOBJ is a plugin; the numbers shown reflect a Faster R-CNN host (proposals are required). When attached to a DETR host, proposal generation is not used (NG would apply), and throughput follows the base DETR configuration.

For TAPM-FS/SS, the additional training time arises from the tail-calibrated transformer encoding, specifically (i) the CLT-AE reconstruction objective and (ii) the bulk–tail density calibration. At inference, the prototype gate computes a cosine similarity between each proposal embedding $\mathbf{z} \in \mathbb{R}^{d}$ and $K$ stored prototypes, i.e., $\mathcal{O}(Kd)$ operations per proposal (or $\mathcal{O}(PKd)$ per image with $P$ proposals), which is small relative to the FLOPs of backbone feature extraction. This is consistent with the near parity in FPS and GFLOPs with Faster R-CNN: TAPM-FS/SS delivers the open-set robustness gains reported in Section 5 and Section 5.10 with minimal inference overhead (≈4% lower FPS than Faster R-CNN and similar GFLOPs) and a moderate increase in training time. For completeness, the prototype cache is a $K \times d$ matrix (about $4Kd$ bytes in FP32). By contrast, transformer-based baselines trained end to end require substantially longer optimization and exhibit lower runtime throughput under the same evaluation conditions.
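The gate cost can be checked with simple arithmetic. The helpers below count one multiply and one add per embedding dimension per prototype (ignoring the normalization and the final comparison); the example sizes of P = 1000 proposals, K = 80 prototypes, and d = 1024 dimensions are hypothetical rather than TAPM's actual configuration.

```python
def gate_flops(num_proposals: int, num_prototypes: int, dim: int) -> int:
    """FLOPs for P x K dot products of length d (1 multiply + 1 add each)."""
    return 2 * num_proposals * num_prototypes * dim


def cache_bytes(num_prototypes: int, dim: int, bytes_per_value: int = 4) -> int:
    """Size of the K x d prototype matrix (FP32 by default)."""
    return num_prototypes * dim * bytes_per_value


flops = gate_flops(1000, 80, 1024)
print(f"gate: {flops / 1e9:.3f} GFLOPs "
      f"({100 * flops / 180e9:.2f}% of a 180-GFLOP forward pass)")
print(f"cache: {cache_bytes(80, 1024) / 1024:.0f} KiB")
```

With these hypothetical sizes, the gate costs about 0.16 GFLOPs, under 0.1% of the 180 GFLOPs reported for TAPM in Table 5, and the cache occupies 320 KiB.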

5.7. Discussion

TAPM maintains a high Prev mAP while increasing Curr mAP in T2/T3, indicating that pseudo-unknown mining does not destabilize incremental learning. It is also robust to superclass mixing (M-OWODB): even with heavier class overlap, TAPM maintains the best U-Recall and competitive WI. If an application requires stricter open-set conservatism, a slightly higher prototype threshold or a more conservative posterior cutoff can reduce A-OSE with limited impact on recall. Across two challenging OWOD regimes, TAPM improves unknown-object discovery by large margins while maintaining or enhancing known-class accuracy, and it reduces open-set confusion as measured by WI/A-OSE in most phases. These gains stem from the combination of reconstruction-driven uncertainty, tail-aware density calibration, and prototype-guided filtering.

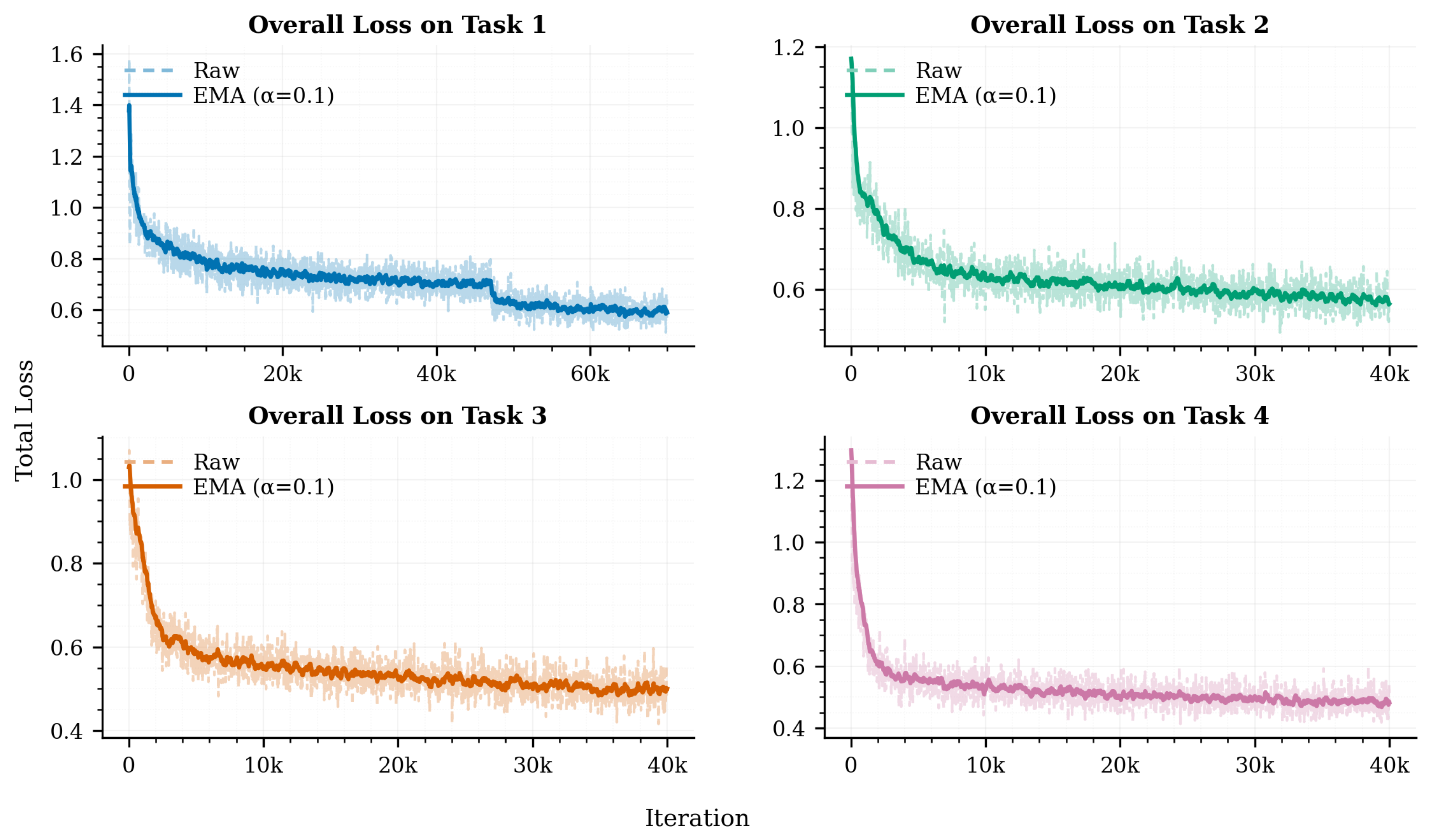

5.8. Training Loss Analysis Across Tasks

Figure 6 presents the training loss trends across incremental tasks 1–4. Both the raw training loss and its exponential moving average (EMA) are shown to better visualize convergence behavior. Across all tasks, the curves follow a consistent pattern: a rapid loss reduction during the early iterations, reflecting the model's quick adaptation to the current task's data, followed by a gradual stabilization phase as training progresses. The EMA curves converge smoothly, indicating a stable optimization process across all incremental tasks.
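For reference, EMA smoothing follows the recursion $s_0 = x_0$ and $s_i = \beta s_{i-1} + (1 - \beta)\,x_i$. The sketch below implements it; the smoothing factor $\beta = 0.9$ is a hypothetical choice (the value used for Figure 6 is not reproduced here), no bias correction is applied, and the loss values are made up for illustration.

```python
def ema(values, beta=0.9):
    """Exponential moving average: s_i = beta * s_{i-1} + (1 - beta) * x_i."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must lie in [0, 1)")
    smoothed = []
    s = None
    for x in values:
        s = x if s is None else beta * s + (1.0 - beta) * x
        smoothed.append(s)
    return smoothed


raw = [1.4, 1.1, 0.9, 0.95, 0.8, 0.75, 0.7, 0.72, 0.65, 0.6]  # illustrative only
print([round(v, 3) for v in ema(raw)])
```

Larger values of $\beta$ give smoother curves at the cost of a longer lag behind the raw loss.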

A task-wise comparison reveals that task 1, which introduces the largest group of classes, begins with the highest loss (around 1.4) and steadily converges to approximately 0.6. By contrast, tasks 2–4 start with lower initial losses (ranging from 1.2 to 1.0), benefiting from knowledge accumulated in previous tasks. These later tasks converge more quickly and reach lower final losses, between 0.55 and 0.45. The final loss also decreases progressively across tasks, with task 4 achieving the lowest overall loss. This suggests that, as classes are introduced incrementally, the model balances previously learned knowledge with new information increasingly well, and it is consistent with the incremental training framework preserving past knowledge while improving optimization efficiency in subsequent tasks.

5.9. Cross-Dataset Generalization Performance of TAPM

To evaluate the generalization ability of the proposed TAPM framework, we conduct experiments on two large-scale benchmarks: LVIS and Objects-365 (Table 6). These datasets differ significantly in their object distributions and annotation densities, making them a strong testbed for assessing robustness beyond the training domain. On LVIS, Faster R-CNN fails to recover any unknown objects (R@100 = 0.0), highlighting its limited open-world capability. CAT and PROB achieve modest recall values (R@100 = 35.2 and 40.5, respectively), but their known-class AP is notably lower than that of TAPM. By contrast, both TAPM-SS and TAPM-FS consistently outperform all baselines, yielding higher AP on known classes (39.3 and 38.5, respectively) while also improving the recall of unknown instances, with TAPM-FS achieving the best overall unknown recall (R@100 = 47.2).

A similar trend is observed on Objects-365, where TAPM surpasses prior approaches in both known-class AP and unknown recall. While Faster R-CNN again fails to generalize to unknowns (R@100 = 0.0), CAT and PROB improve recall to 37.4 and 40.8, respectively, albeit at the cost of reduced AP. TAPM-SS achieves 38.2 AP with 46.9 R@100, while TAPM-FS maintains a competitive AP (37.0) and achieves the highest unknown recall (47.8). These results show that TAPM balances closed-set accuracy and open-set discovery, a crucial property for real-world deployment, and generalizes across datasets better than existing open-world detection baselines. The consistent improvements on both LVIS and Objects-365 support the robustness of our proposal generation and thresholding strategies in handling diverse and previously unseen object categories.

5.10. Prototype Guidance: Graphical and Quantitative Analysis

We evaluate the prototype-guided unknown score from two views. Figure 7 (left) is a reliability plot that bins predictions and compares the bin-mean score with the empirical frequency of unknowns in each bin; Figure 7 (right) shows the decision geometry with the decision boundary overlaid. We then report the conditional operating-point error rates FKA and KOR in Table 7. As Table 7 shows, prototype guidance substantially reduces FKA while preserving KOR, consistent with the boundary shift observed in Figure 7. FKA and KOR are reported at the same operating point used for WI/A-OSE in the main results.
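A reliability plot of this kind can be computed by equal-width binning. The sketch below assumes that the score is a probability that a proposal is unknown and that ground-truth unknown labels are available for evaluation; the ten-bin layout, the example scores, and the function name are hypothetical.

```python
def reliability_bins(scores, is_unknown, n_bins=10):
    """Return (bin-mean score, empirical unknown rate, count) per non-empty bin."""
    sums = [0.0] * n_bins
    positives = [0] * n_bins
    counts = [0] * n_bins
    for s, y in zip(scores, is_unknown):
        if not 0.0 <= s <= 1.0:
            raise ValueError("scores must lie in [0, 1]")
        b = min(int(s * n_bins), n_bins - 1)  # a score of 1.0 goes in the last bin
        sums[b] += s
        positives[b] += int(bool(y))
        counts[b] += 1
    return [
        (sums[b] / counts[b], positives[b] / counts[b], counts[b])
        for b in range(n_bins)
        if counts[b] > 0
    ]


scores = [0.05, 0.10, 0.35, 0.40, 0.85, 0.90, 0.95]
labels = [0, 0, 0, 1, 1, 1, 0]
for mean_score, rate, n in reliability_bins(scores, labels):
    print(f"mean score {mean_score:.2f}  empirical unknown rate {rate:.2f}  n={n}")
```

For a well-calibrated score, each bin's mean score lies close to its empirical unknown rate, i.e., near the diagonal of the reliability plot.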

5.11. Statistical Analysis

We quantify variability and test significance under a matched evaluation protocol. Each method is trained with three independent random seeds; the main tables report mean ± standard deviation for mAP, U-Recall, WI, and A-OSE. To capture evaluation-set uncertainty, we also use an image-level bootstrap. For each method on S-OWODB task 2 (FS pipeline), we draw 10,000 resamples of the test images (with replacement), recompute all metrics on each resample, and report 95% confidence intervals (CIs) via the percentile method (Table 8).

To assess whether TAPM-FS improves over the baselines, we perform paired bootstrap testing: using the same resampled image sets for both methods, we compute for each metric $M$ the per-resample difference

$$\Delta^{(b)} = M^{(b)}_{\text{TAPM-FS}} - M^{(b)}_{\text{comparator}}, \qquad b = 1, \dots, 10{,}000,$$

and report the 95% CI of $\Delta$ (Table 9). Here, the comparator is the baseline method. CIs that exclude zero indicate statistical significance at $\alpha = 0.05$ (two-sided). mAP and U-Recall are reported as percentages, and their differences in percentage points (pp). WI is a unitless precision-drop measure (lower is better). A-OSE is the count of unknown ground-truth objects misclassified as known at the fixed operating point used throughout (a fixed IoU threshold and a fixed known-class recall target).
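The percentile and paired bootstrap procedures can be sketched with the standard library alone. Assumptions: each image contributes a pair (matched unknowns, unknown ground-truth objects), the metric is a pooled unknown recall recomputed on each resample, the percentile interval uses nearest-rank indexing, and the data and function names are illustrative. Metrics such as mAP would instead be recomputed from the resampled detections with the full evaluator.

```python
import random


def pooled_recall(images):
    """U-Recall-style metric: 100 * total matched unknowns / total unknown GT."""
    tp = sum(matched for matched, _ in images)
    gt = sum(total for _, total in images)
    return 100.0 * tp / gt if gt else 0.0


def percentile(sorted_values, q):
    """Nearest-rank percentile of an already sorted list, q in [0, 1]."""
    idx = round(q * (len(sorted_values) - 1))
    return sorted_values[idx]


def paired_bootstrap_ci(per_image_a, per_image_b, metric,
                        n_resamples=10_000, level=0.95, seed=0):
    """CI of metric(A) - metric(B), using the SAME resampled images for both."""
    if len(per_image_a) != len(per_image_b):
        raise ValueError("methods must be evaluated on the same images")
    rng = random.Random(seed)
    n = len(per_image_a)
    deltas = []
    for _ in range(n_resamples):
        idx = [rng.randrange(n) for _ in range(n)]  # resample images with replacement
        delta = (metric([per_image_a[i] for i in idx])
                 - metric([per_image_b[i] for i in idx]))
        deltas.append(delta)
    deltas.sort()
    tail = (1.0 - level) / 2.0
    return percentile(deltas, tail), percentile(deltas, 1.0 - tail)


rng = random.Random(42)
gt = [rng.randint(1, 5) for _ in range(200)]          # synthetic images
method_a = [(rng.randint(0, g), g) for g in gt]        # synthetic detections
method_b = [(max(0, m - 1), g) for m, g in method_a]   # a strictly weaker copy
lo, hi = paired_bootstrap_ci(method_a, method_b, pooled_recall, n_resamples=2000)
print(f"95% CI of delta U-Recall: [{lo:.1f}, {hi:.1f}] pp; "
      f"significant: {lo > 0 or hi < 0}")
```

Pairing matters here: because both methods are scored on identical resamples, image-level difficulty cancels in the difference, which yields a tighter interval than comparing two independent per-method CIs.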

5.12. Ablation Study

Table 10 presents ablations on S-OWODB (task 2). All variants use the same detector and backbone, identical data partitions, and an identical evaluation protocol: greedy assignment at a fixed IoU threshold and a confidence threshold chosen to reach a fixed known-class recall when computing WI and A-OSE (consistent with ORE). The Baseline excludes all components of the proposed tail-calibrated transformer encoding (TCTE), i.e., no CLT-AE, no bulk–tail density calibration, and no prototype gating. The FS and SS blocks differ only in the proposal generator (FreeSOLO vs. Selective Search); all other settings are identical.

Introducing CLT-AE substantially improves detection of unknown instances while preserving known-class accuracy. For the FS configuration, U-Recall and mAP both increase, WI decreases, and A-OSE is reduced from 4007 to 3850. For SS, U-Recall and mAP likewise increase, WI improves, and A-OSE decreases from 4207 to 3920. These results indicate that the reconstruction objective already provides discriminative evidence for unknown objectness without degrading performance on known classes. Converting reconstruction deviations into calibrated evidence yields further reductions in open-set error. For FS, WI decreases further and A-OSE drops from 3850 to 3425, accompanied by higher U-Recall and a modest mAP gain. For SS, WI decreases and A-OSE drops from 3920 to 3550, with improved U-Recall (the corresponding mAP is listed in Table 10). These trends confirm that calibration mitigates over-rejection and reduces misclassifications of unknowns as known.

Augmenting the calibrated model with prototype gating produces the largest additional improvement. For FS, WI decreases further and A-OSE drops from 3425 to 3012, while U-Recall and mAP rise. For SS, WI decreases and A-OSE drops from 3550 to 3130 (the corresponding U-Recall and mAP are listed in Table 10). Relative to the respective baselines, the full models reduce A-OSE by 995 for FS (4007 → 3012) and 1077 for SS (4207 → 3130), and they also lower WI and improve mAP for both proposal generators. Across both proposal generators, the progression is monotonic and consistent with the mechanism-level analysis in Section 5.10: CLT-AE increases the recall of unknowns, the bulk–tail fit calibrates evidence and reduces WI/A-OSE, and prototype guidance further suppresses head-aligned confounders in the feature space, yielding the largest additional reduction in WI and A-OSE while maintaining or improving mAP.

5.13. Qualitative Comparison with SOTA Methods

To complement the quantitative results, we provide a qualitative comparison of the proposed TAPM framework against two SOTA OWOD methods, PROB [22] and CAT [34].

Figure 8 and Figure 9 illustrate diverse open-world scenarios in which the SOTA methods and TAPM are compared side by side, with the baseline (PROB [22] in Figure 8 and CAT [34] in Figure 9) shown in the top row and TAPM in the bottom row of each example. In Figure 8, column A (tennis scene) shows that PROB frequently produces multiple redundant unknown detections around the same subject and even mislabels background regions, whereas TAPM outputs compact, targeted unknown boxes while maintaining high-confidence recognition of the known person. In column B (paddleboarding), PROB [22] marks extensive, ambiguous background, including regions of water and paddle edges, as unknown, whereas TAPM avoids such spurious detections and produces cleaner results, showing robustness to texture-heavy backgrounds. In column C (ski jump), TAPM identifies additional occluded individuals while avoiding the proliferation of background unknowns seen in PROB's outputs. These results highlight TAPM's ability to reduce redundant unknown detections and improve the recall of small or partially visible known objects.

Figure 9 further supports these observations. In the riverside scene (A, left), CAT [34] generates multiple false, confusing unknown boxes inside known-class boxes. TAPM, by contrast, suppresses these false alarms and localizes only semantically plausible unknowns, while detecting known objects such as people and the bird with tighter, non-overlapping bounding boxes. In the alpine snow scene (B, middle), CAT [34] misses many candidate unknown objects, whereas TAPM produces more reliable unknown detections restricted to genuine candidate regions. In the surf sequence (C, right), CAT [34] detects only a limited subset of surfers, missing individuals who are partially occluded or submerged in the wave. Its predictions remain sparse, and uncertain regions are not highlighted, resulting in incomplete coverage of the scene. By contrast, TAPM (bottom) achieves more comprehensive detection, identifying all visible surfers along the wave crest with high confidence. Importantly, TAPM remains restrained in its treatment of background textures: the dynamic water surface is not misclassified as large unknown regions, and only a few small, localized labels appear in ambiguous areas. This balance of recovering additional true positives while avoiding the proliferation of false unknowns demonstrates the improved calibration of TAPM in complex, cluttered environments.

Taken together, the qualitative evidence demonstrates that TAPM consistently achieves three key improvements over PROB [22] and CAT [34]: (1) suppression of background-driven false unknowns, (2) more compact and non-redundant detections, and (3) improved coverage of small or occluded known objects. These visual behaviors align directly with the quantitative trends observed in our experiments, where TAPM achieves higher unknown recall while simultaneously reducing open-set error metrics such as A-OSE and WI.

6. Limitations and Future Work

TAPM delivers consistent gains for open-world detection, yet several practical limitations remain. First, the approach relies on external proposal generators (Selective Search or FreeSOLO); therefore, performance hinges on proposal coverage, which can be fragile for small, thin, or heavily occluded objects. Second, although inference overhead is modest, cross-level transformer encoding and bulk–tail calibration add training time and memory relative to a plain Faster R-CNN (see Section 5.6). Third, the soft routing score requires calibration on a small validation split; under distribution shift, miscalibration may increase WI or A-OSE. Finally, under extreme class imbalance, prototype estimates can be biased toward head classes, potentially suppressing the discovery of rare categories. These constraints are most apparent when proposal recall is low, computational budgets are tight, or the data distribution varies substantially.

For future work, we identify three concrete directions. Real-time and edge deployment: develop lighter cross-level encoders, apply pruning, distillation, and quantization, and adopt approximate prototype search, with deployment to optimized runtimes for streaming video. Proposal-free integration: embed tail calibration into a proposal-free detector (e.g., from the DETR or YOLO families) so that objectness and novelty cues are learned jointly, removing the external proposal stage and improving small-object recall. Vision–language grounding: leverage CLIP-style encoders to initialize or refine class prototypes, use text prompts to guide the discovery and naming of novel clusters, and evaluate language-conditioned unknown recall on large-vocabulary benchmarks. Additional directions include online or conformal calibration to handle distribution shift, drift-aware prototype updates for continual learning, and selective querying to minimize supervision in safety-critical settings. Collectively, these steps target real-time operation, reduced dependence on external proposals, and improved interpretability while maintaining the accuracy demonstrated in this work.