MKF-NET: KAN-Enhanced Vision Transformer for Remote Sensing Image Segmentation

Abstract

1. Introduction

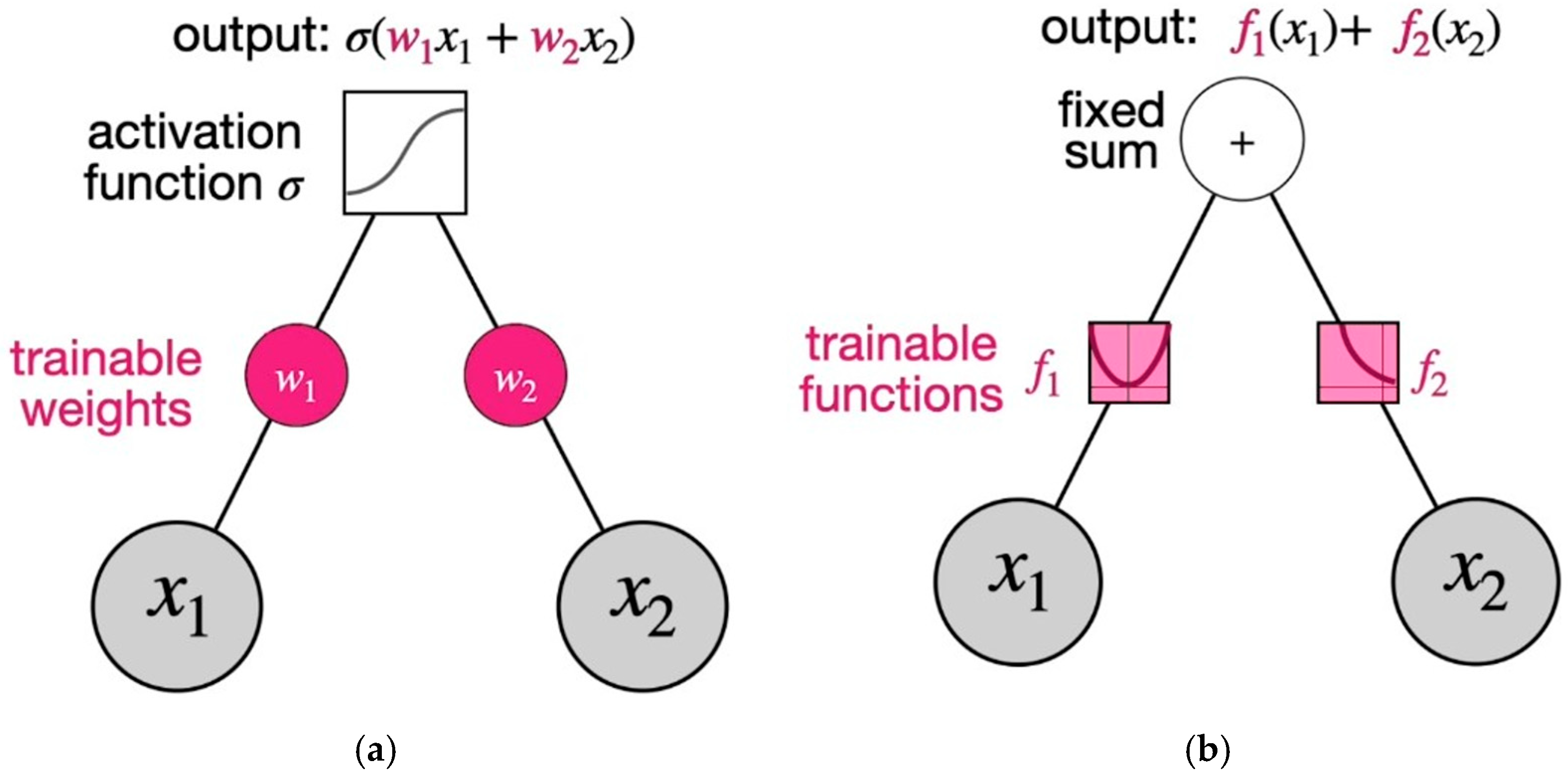

2. Related Work

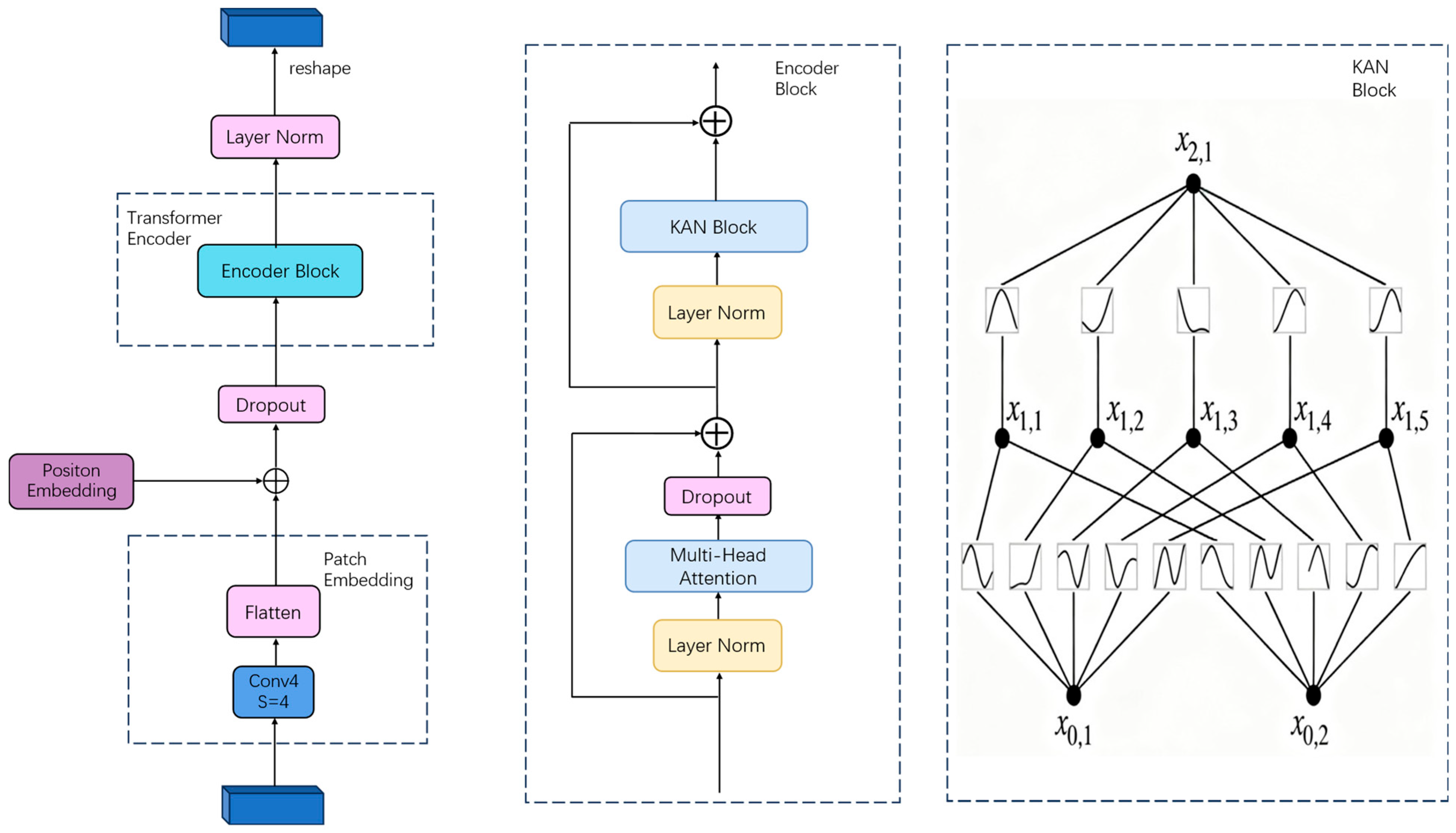

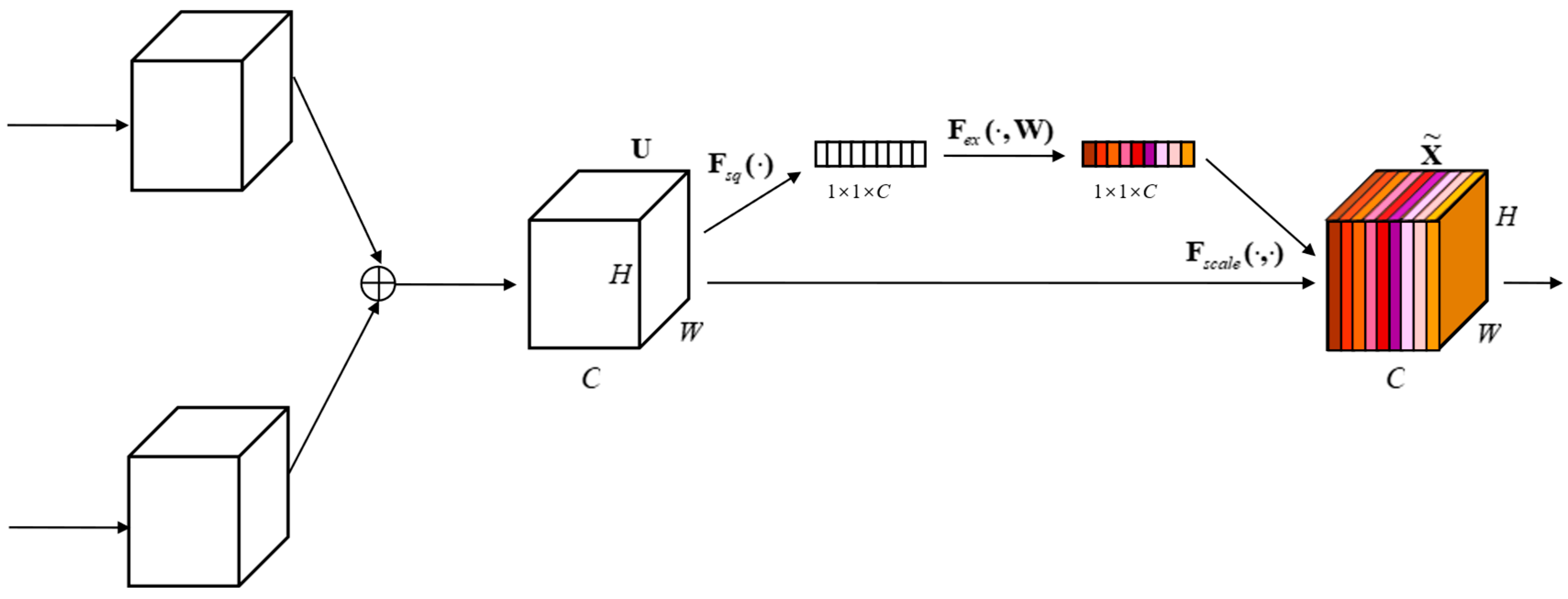

3. Materials and Methods

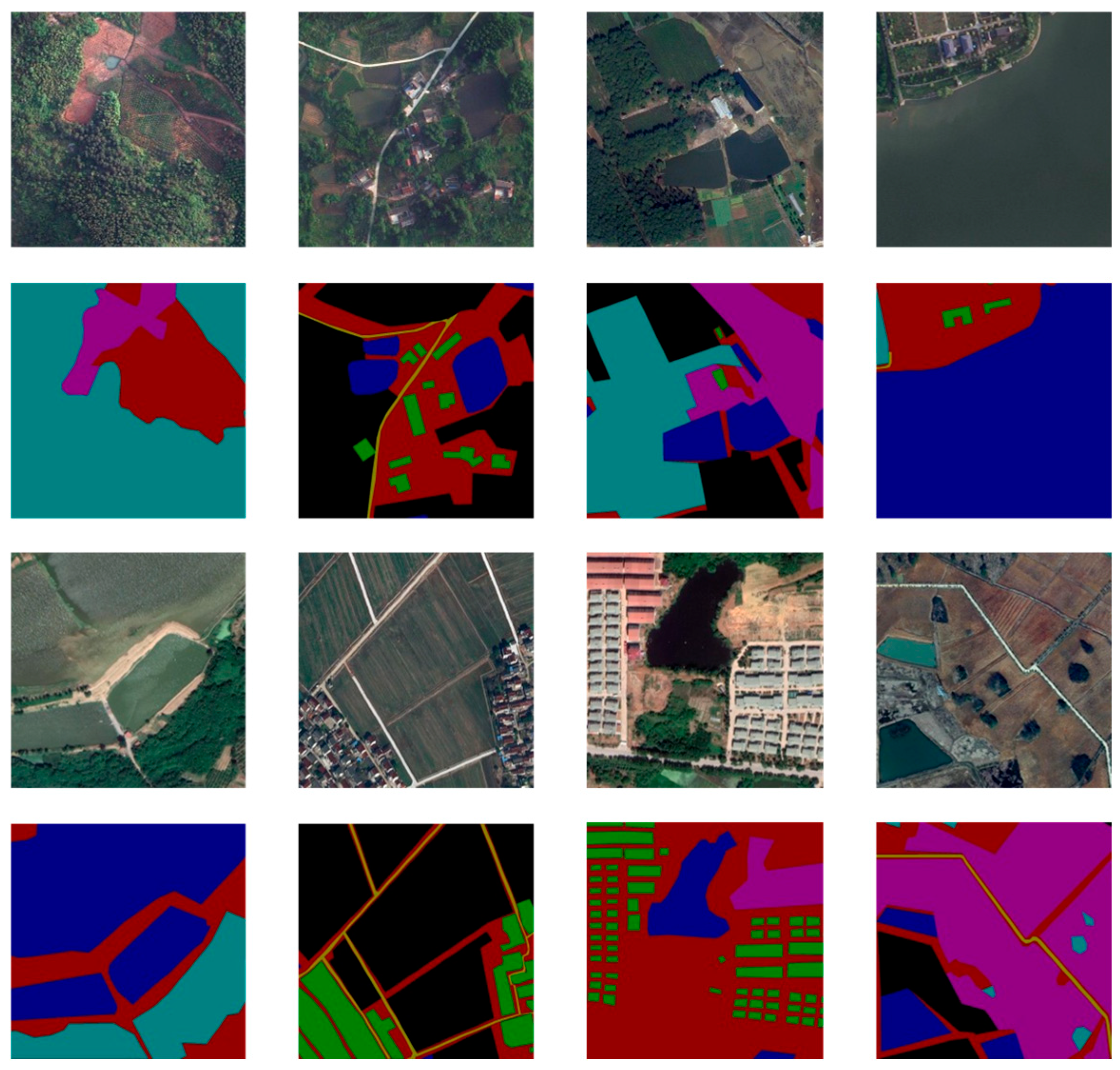

3.1. Data Source and Notes

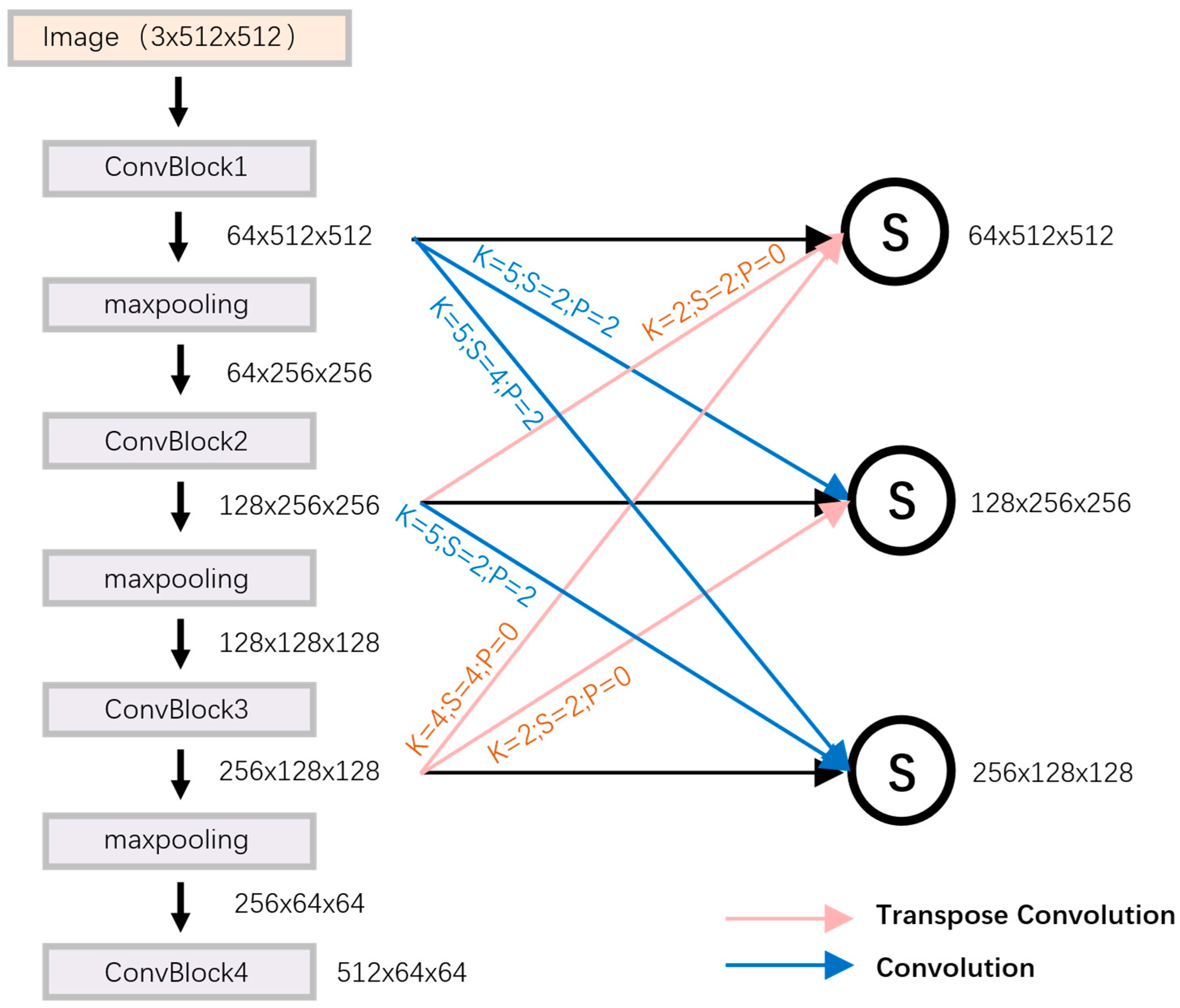

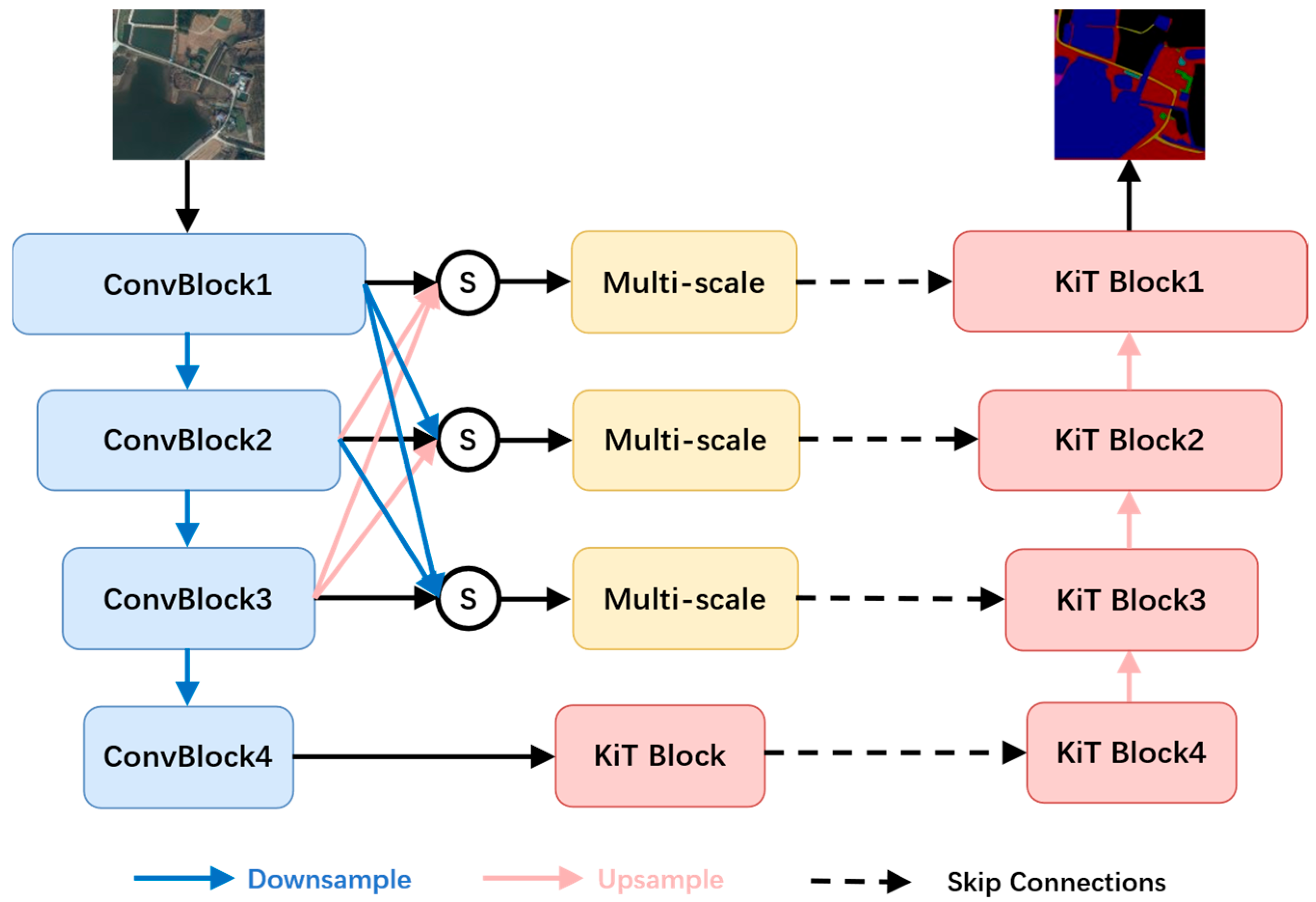

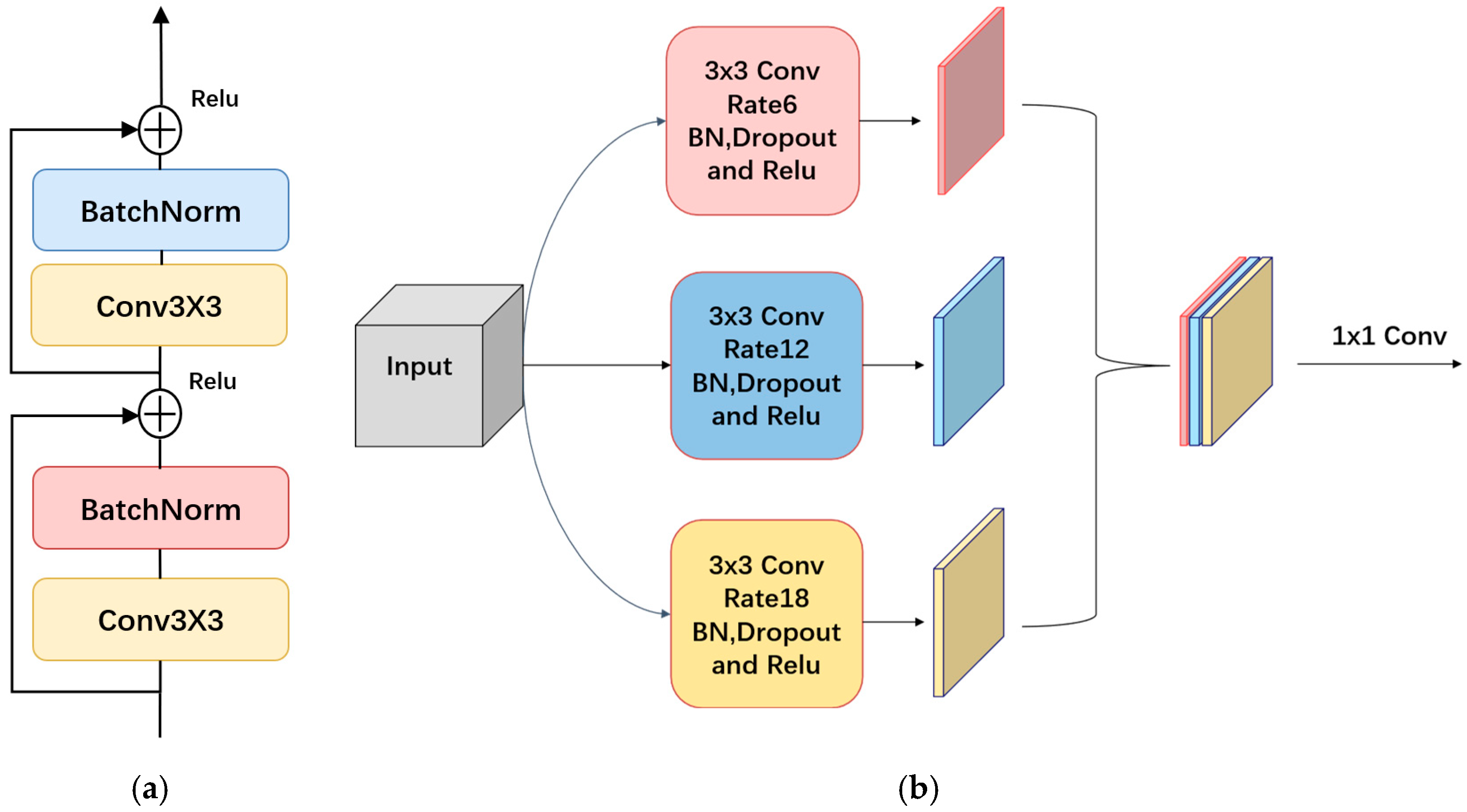

3.2. Models

3.3. Evaluation Indicators

4. Results

4.1. Comparison of Evaluation Parameters of Different Models

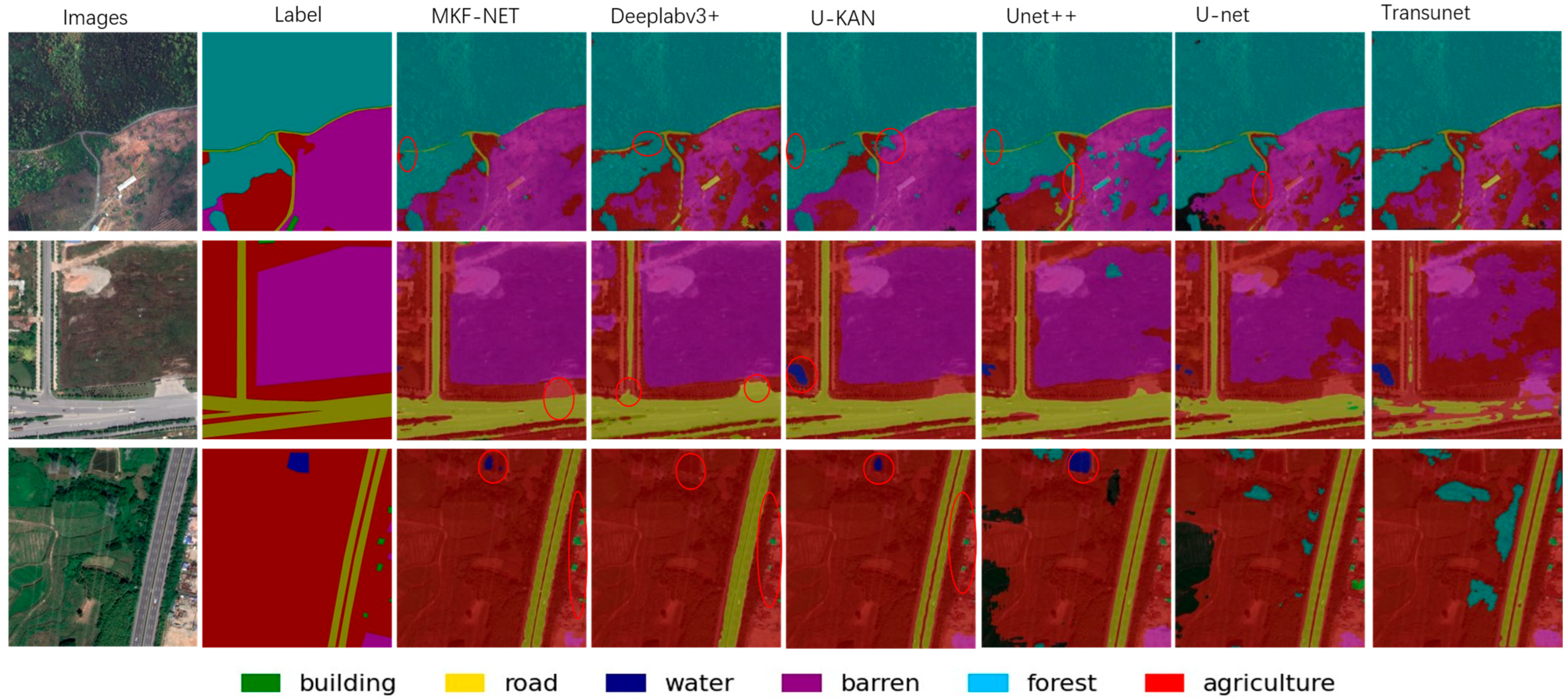

4.2. Segmentation Effects of Different Models

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Navalgund, R.R.; Jayaraman, V.; Roy, P. Remote sensing applications: An overview. Curr. Sci. 2007, 93, 1747–1766. [Google Scholar]

- Huan, H.; Liu, Y.; Xie, Y.; Wang, C.; Xu, D.; Zhang, Y. MAENet: Multiple attention encoder–decoder network for farmland segmentation of remote sensing images. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5. [Google Scholar] [CrossRef]

- Zhang, R.; Chen, J.; Feng, L.; Li, S.; Yang, W.; Guo, D. A refined pyramid scene parsing network for polarimetric SAR image semantic segmentation in agricultural areas. IEEE Geosci. Remote Sens. Lett. 2021, 19, 1–5. [Google Scholar] [CrossRef]

- Jung, H.; Choi, H.-S.; Kang, M. Boundary enhancement semantic segmentation for building extraction from remote sensed image. IEEE Trans. Geosci. Remote Sens. 2021, 60, 1–12. [Google Scholar] [CrossRef]

- Wang, L.; Fang, S.; Meng, X.; Li, R. Building extraction with vision transformer. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–11. [Google Scholar] [CrossRef]

- Xu, C.; Wang, J.; Sang, Y.; Li, K.; Liu, J.; Yang, G. An effective deep learning model for monitoring mangroves: A case study of the Indus Delta. Remote Sens. 2023, 15, 2220. [Google Scholar] [CrossRef]

- Cui, L.; Jing, X.; Wang, Y.; Huan, Y.; Xu, Y.; Zhang, Q. Improved swin transformer-based semantic segmentation of postearthquake dense buildings in urban areas using remote sensing images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 16, 369–385. [Google Scholar] [CrossRef]

- Pal, S.K.; Ghosh, A.; Shankar, B.U. Segmentation of remotely sensed images with fuzzy thresholding, and quantitative evaluation. Int. J. Remote Sens. 2000, 21, 2269–2300. [Google Scholar] [CrossRef]

- Kanopoulos, N.; Vasanthavada, N.; Baker, R.L. Design of an image edge detection filter using the Sobel operator. IEEE J. Solid-State Circuits 1988, 23, 358–367. [Google Scholar] [CrossRef]

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 2009, PAMI-8, 679–698. [Google Scholar] [CrossRef]

- Chandra, M.A.; Bedi, S. Survey on SVM and their application in image classification. Int. J. Inf. Technol. 2021, 13, 1–11. [Google Scholar] [CrossRef]

- Rigatti, S.J. Random forest. J. Insur. Med. 2017, 47, 31–39. [Google Scholar] [CrossRef]

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440. [Google Scholar]

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241. [Google Scholar]

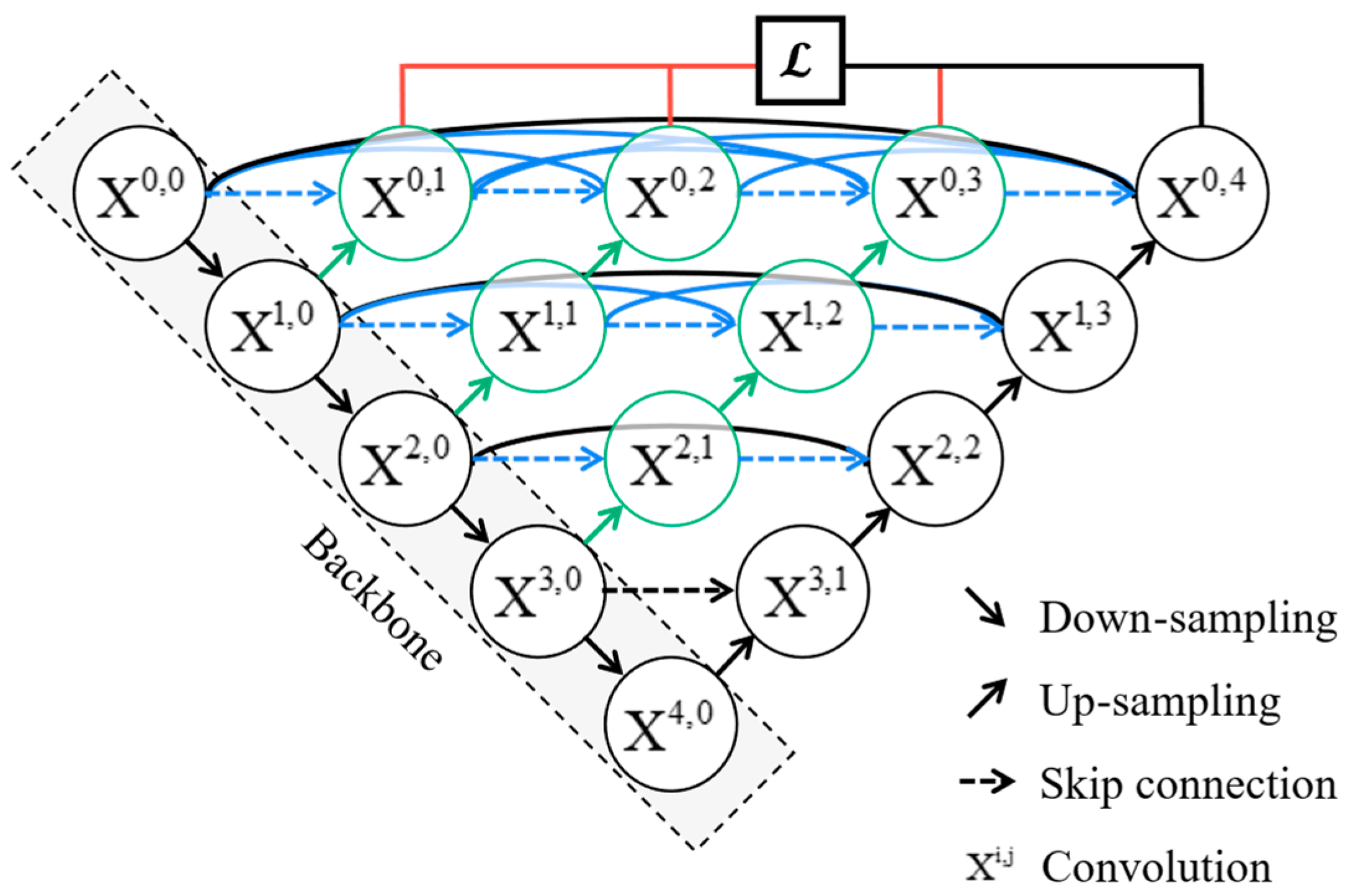

- Zhou, Z.; Rahman Siddiquee, M.M.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Proceedings of the International Workshop on Deep Learning in Medical Image Analysis, Granada, Spain, 20 September 2018; pp. 3–11. [Google Scholar]

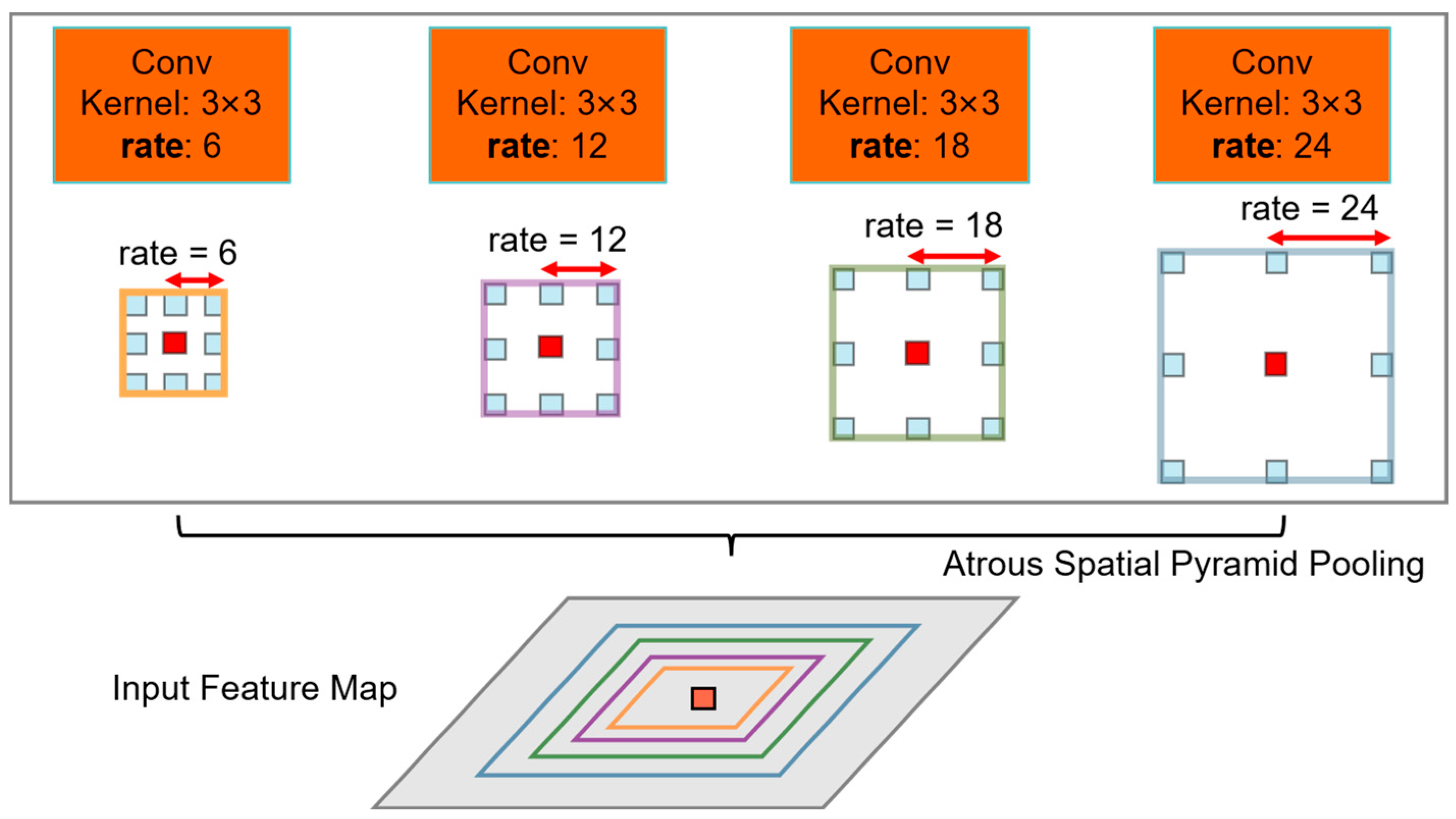

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848. [Google Scholar] [CrossRef]

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587. [Google Scholar] [CrossRef]

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818. [Google Scholar]

- Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljačić, M.; Hou, T.Y.; Tegmark, M. Kan: Kolmogorov-arnold networks. arXiv 2024, arXiv:2404.19756. [Google Scholar] [PubMed]

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890. [Google Scholar]

- Zhao, S.; Feng, Z.; Chen, L.; Li, G. DANet: A Semantic Segmentation Network for Remote Sensing of Roads Based on Dual-ASPP Structure. Electronics 2023, 12, 3243. [Google Scholar] [CrossRef]

- Jia, J.; Song, J.; Kong, Q.; Yang, H.; Teng, Y.; Song, X. Multi-Attention-Based Semantic Segmentation Network for Land Cover Remote Sensing Images. Electronics 2023, 12, 1347. [Google Scholar] [CrossRef]

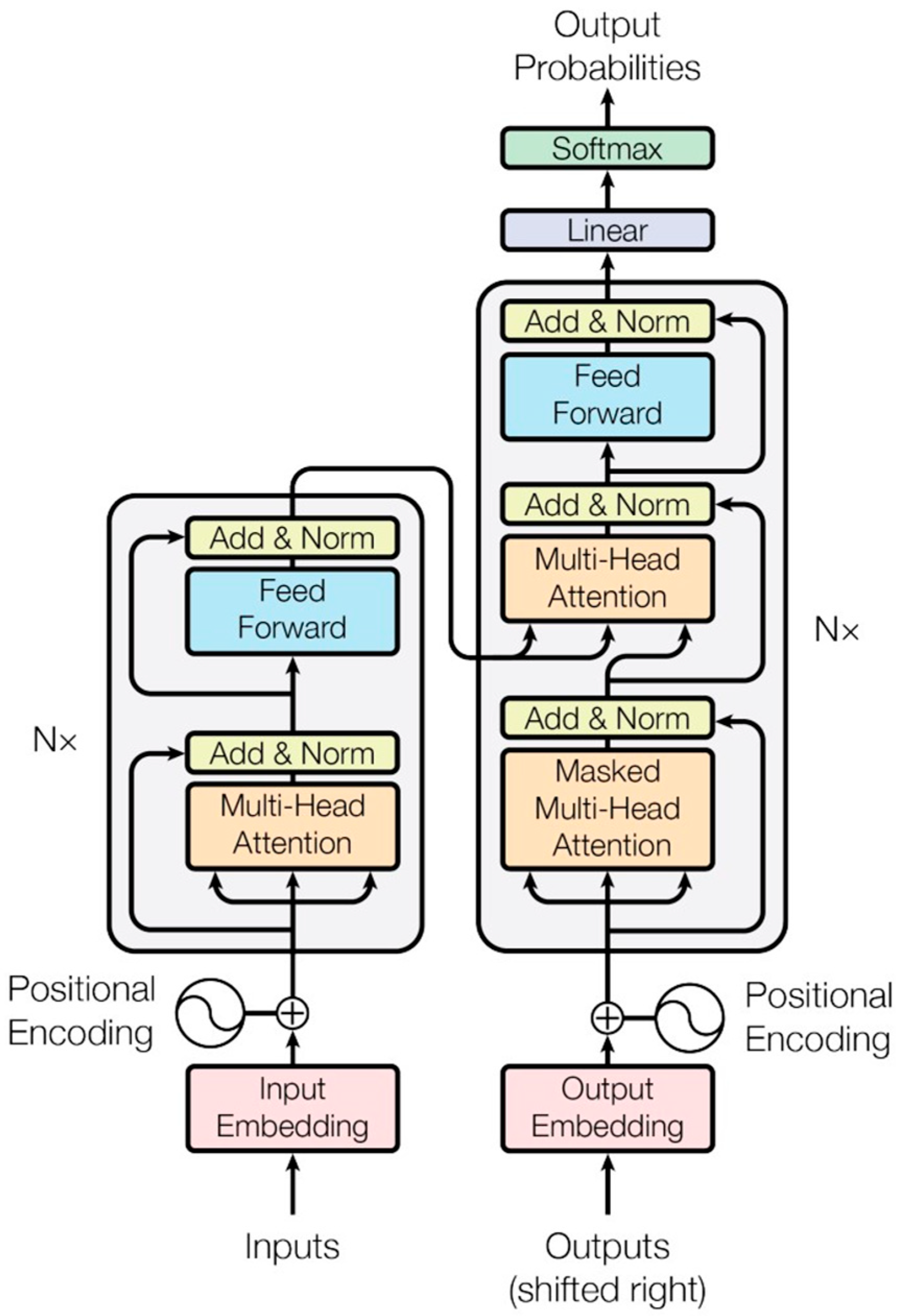

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 1–11. [Google Scholar]

- Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. Transunet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306. [Google Scholar] [CrossRef]

- Liu, B.; Li, B.; Sreeram, V.; Li, S. MBT-UNet: Multi-Branch Transform Combined with UNet for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2024, 16, 2776. [Google Scholar] [CrossRef]

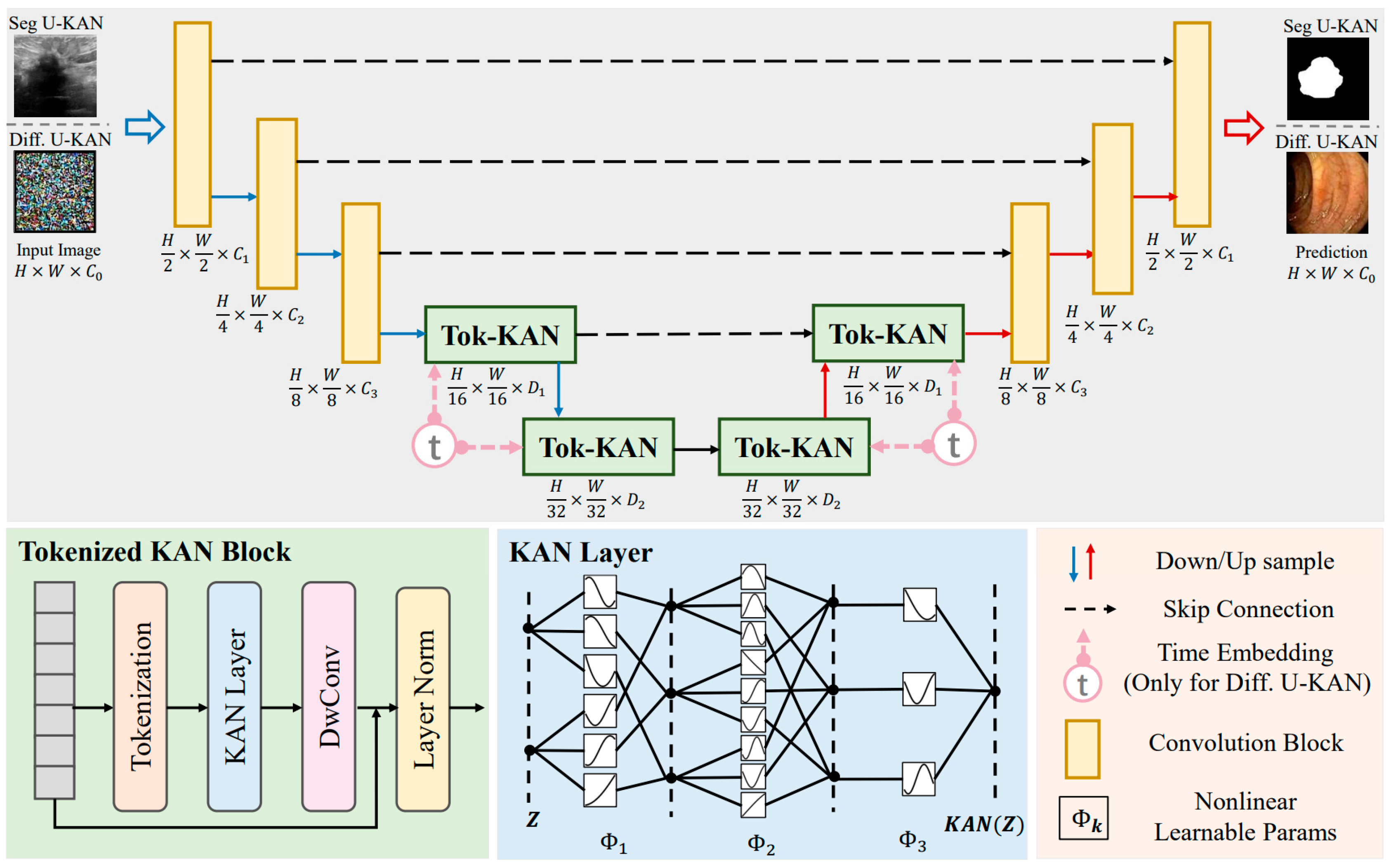

- Li, C.; Liu, X.; Li, W.; Wang, C.; Liu, H.; Liu, Y.; Chen, Z.; Yuan, Y. U-kan makes strong backbone for medical image segmentation and generation. In Proceedings of the Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; pp. 4652–4660. [Google Scholar]

- Wang, J.; Zheng, Z.; Ma, A.; Lu, X.; Zhong, Y. LoveDA: A remote sensing land-cover dataset for domain adaptive semantic segmentation. arXiv 2021, arXiv:2110.08733. [Google Scholar]

- Shorten, C.; Khoshgoftaar, T.M. A survey on image data augmentation for deep learning. J. Big Data 2019, 6, 60. [Google Scholar] [CrossRef]

- Wang, S.; Korolija, I.; Rovas, D. Impact of traditional augmentation methods on window state detection. In Proceedings of the CLIMA 2022 The 14th REHVA HVAC World Congress, Rotterdam, The Netherlands, 22–25 May 2022. [Google Scholar]

- Milletari, F.; Navab, N.; Ahmadi, S.-A. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 565–571. [Google Scholar]

- Raghu, M.; Unterthiner, T.; Kornblith, S.; Zhang, C.; Dosovitskiy, A. Do vision transformers see like convolutional neural networks? Adv. Neural Inf. Process. Syst. 2021, 34, 12116–12128. [Google Scholar]

- Liu, Y.; Zhang, Y.; Wang, Y.; Hou, F.; Yuan, J.; Tian, J.; Zhang, Y.; Shi, Z.; Fan, J.; He, Z. A survey of visual transformers. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 7478–7498. [Google Scholar] [CrossRef] [PubMed]

- Khan, A.; Rauf, Z.; Sohail, A.; Khan, A.R.; Asif, H.; Asif, A.; Farooq, U. A survey of the vision transformers and their CNN-transformer based variants. Artif. Intell. Rev. 2023, 56, 2917–2970. [Google Scholar] [CrossRef]

- Yao, W.; Bai, J.; Liao, W.; Chen, Y.; Liu, M.; Xie, Y. From cnn to transformer: A review of medical image segmentation models. J. Imaging Inform. Med. 2024, 37, 1529–1547. [Google Scholar] [CrossRef] [PubMed]

| Model | Accuracy | Precision | MPA | mIoU |
|---|---|---|---|---|
| U-Net | 76.36% | 79.28% | 70.45% | 58.14% |
| UNet++ | 78.6% | 76.31% | 74.12% | 60.57% |
| TransUNet | 74.64% | 79.28% | 63.66% | 54.71% |
| DeepLabv3+ | 79.06% | 78.48% | 75.04% | 62.65% |
| U-KAN | 78.99% | 76.20% | 74.76% | 61.27% |
| MKF-NET (Ours) | 79.19% | 78.53% | 76.50% | 64.31% |
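
Metrics such as Accuracy, Precision, MPA, and mIoU are commonly derived from a pixel-level confusion matrix. The sketch below uses the usual definitions (overall pixel accuracy, macro-averaged precision, mean per-class pixel accuracy, mean intersection over union). The formulas used for this table are not given here, so these definitions, the function name `segmentation_metrics`, and the toy matrix are assumptions for illustration only.

```python
import numpy as np


def segmentation_metrics(conf):
    """Compute common segmentation metrics from a confusion matrix.

    Assumed layout: conf[i, j] counts pixels whose ground-truth class is i
    and whose predicted class is j.
    """
    conf = np.asarray(conf, dtype=np.float64)
    tp = np.diag(conf)               # correctly classified pixels per class
    gt_total = conf.sum(axis=1)      # ground-truth pixels per class (TP + FN)
    pred_total = conf.sum(axis=0)    # predicted pixels per class (TP + FP)
    union = gt_total + pred_total - tp

    # Classes absent from both prediction and ground truth give 0/0;
    # they become NaN and are skipped by nanmean.
    with np.errstate(divide="ignore", invalid="ignore"):
        class_accuracy = tp / gt_total
        class_precision = tp / pred_total
        class_iou = tp / union

    return {
        "accuracy": tp.sum() / conf.sum(),
        "precision": np.nanmean(class_precision),
        "mpa": np.nanmean(class_accuracy),
        "miou": np.nanmean(class_iou),
    }


# Hypothetical two-class confusion matrix, not data from the paper.
toy = [[8, 2],
       [1, 9]]
metrics = segmentation_metrics(toy)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Under these definitions, accuracy weights every pixel equally, whereas MPA and mIoU weight every class equally, which is one reason a model can rank differently across the columns.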

| Model | Background | Water | Barren | Forest | Agriculture | Road | Building |
|---|---|---|---|---|---|---|---|
| U-Net | 66% | 55% | 67% | 43% | 66% | 58% | 52% |
| UNet++ | 73% | 50% | 69% | 52% | 76% | 56% | 48% |
| TransUNet | 62% | 57% | 65% | 32% | 63% | 55% | 49% |
| DeepLabv3+ | 73% | 57% | 71% | 50% | 79% | 56% | 52% |
| U-KAN | 72% | 54% | 71% | 49% | 74% | 58% | 51% |
| MKF-NET (Ours) | 74% | 60% | 73% | 52% | 75% | 63% | 53% |
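
The per-class table does not name its metric. One plausible reading is that the values are per-class IoU, because each row's mean lands close to the mIoU reported for the same model in the first table. The short check below copies the numbers from both tables; the IoU interpretation and the variable names are assumptions, not something the source states.

```python
# Per-class values (percent), in the column order
# Background, Water, Barren, Forest, Agriculture, Road, Building.
per_class = {
    "U-Net":      [66, 55, 67, 43, 66, 58, 52],
    "UNet++":     [73, 50, 69, 52, 76, 56, 48],
    "TransUNet":  [62, 57, 65, 32, 63, 55, 49],
    "DeepLabv3+": [73, 57, 71, 50, 79, 56, 52],
    "U-KAN":      [72, 54, 71, 49, 74, 58, 51],
    "MKF-NET":    [74, 60, 73, 52, 75, 63, 53],
}

# mIoU column (percent) from the first table.
reported_miou = {
    "U-Net": 58.14,
    "UNet++": 60.57,
    "TransUNet": 54.71,
    "DeepLabv3+": 62.65,
    "U-KAN": 61.27,
    "MKF-NET": 64.31,
}

# Compare the mean of each row with the reported mIoU.
gaps = {}
for model, values in per_class.items():
    row_mean = sum(values) / len(values)
    gaps[model] = abs(row_mean - reported_miou[model])
    print(f"{model}: row mean {row_mean:.2f}%, reported mIoU {reported_miou[model]:.2f}%")

max_gap = max(gaps.values())
print(f"largest gap: {max_gap:.2f} percentage points")
```

Because the per-class values are rounded to whole percentages, a row mean can differ from the true mean by up to half a percentage point, so small gaps are expected even if the interpretation is correct.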

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ye, N.; Xu, Y.-H.; Zhou, W.; Yu, G.; Zhou, D. MKF-NET: KAN-Enhanced Vision Transformer for Remote Sensing Image Segmentation. Appl. Sci. 2025, 15, 10905. https://doi.org/10.3390/app152010905
