1. Introduction

Accidental or malicious releases of toxic industrial chemicals (TICs), including chlorine, sulfur dioxide, and anhydrous ammonia, have repeatedly demonstrated their potential for severe harm to public health, industry, and ecosystems. Large-scale field trials and incident reports alike show that dense gases hug the surface, weave around obstacles, and traverse long distances within minutes. Under such constraints, the practical value of a localization system is decided in the earliest stage: quickly inferring the emission origin guides evacuation corridors, prioritises mobile or fixed sensor deployment, and informs remediation teams. While the conceptual ideal would be a dense, always-on lattice of chemical detectors, the reality is constrained by cost, power, maintenance, and social acceptance; in practice, responders pair sparse measurements with operational dispersion tools such as ALOHA and HPAC to delineate hazard footprints [1,2]. The resulting observability gap motivates methods that can reason from sparse measurements or image-like surrogates of concentration fields, with early mobile-robotics work demonstrating gas-concentration gridmaps from sparse sensors [3].

A natural direction is to treat the gridded concentration map (obtained from fast surrogates, physics simulators, or interpolated sensor mosaics) as a spatiotemporal signal and to pose source localization as a data-driven inference task. Early pipelines based on 3D convolutional neural networks (3D CNNs) or hybrid CNN–LSTM stacks (e.g., ConvLSTM [4]) learn useful local motion features, but their compute and memory grow unfavourably with temporal horizon, cubically for deep 3D CNNs (to achieve large receptive fields) and at least quadratically for CNN–LSTM variants that carry recurrent state over long windows [5,6]. In parallel, optimization and Bayesian inversions remain attractive for small grids [7,8], yet they typically require explicit wind-field estimates and degrade as resolution or domain size increases. More recently, Transformer-based approaches have recast localization as recursive frame prediction: given recent frames, a model iteratively predicts earlier snapshots until the origin emerges [9]. Transformer models have also been widely adapted to vision tasks [10]. Video Vision Transformer (ViViT) further improved single-pass accuracy [11]; however, global self-attention incurs $O(L^2)$ cost in sequence length $L$, which constrains temporal reach and throughput, and prior studies primarily address single-source incidents. Related video backbones such as SlowFast and the Temporal Shift Module (TSM) have also been widely adopted for efficient temporal modelling [12,13]. Recent surveys and designs on efficient video backbones further motivate axis-wise, linear-time operators for streaming use cases (see, e.g., [14]).

State-space sequence models (SSMs) offer a principled path to linear-time sequence modelling without global attention. Mamba replaces global attention with content-aware kernels that propagate a latent state at $O(L)$ cost [15], and visual extensions apply directional scans along image axes to capture spatial dependencies [16,17]. Building on these ideas, we adopt a Visual Mamba-inspired formulation tailored to dispersion grids: rather than implementing explicit associative scans, we realize each axis-wise state update with a one-sided, causal, depthwise operator and learn a convex combination over directions via an upwind gate. This preserves linear scaling, encodes physical causality along the operated axis, and maps cleanly to commodity GPUs through grouped convolutions. In effect, directional depthwise stencils play the role of stable, axis-aligned transports, while the gate adapts to local flow regimes (global/local/hybrid), which we ablate in Section 5.3. Beyond gas release response, we view this combination as a general backbone for inverse inference on transported fields, i.e., spatiotemporal backtracking tasks in environmental monitoring and urban analytics.

Within this design space, we introduce Recursive Backtracking with Visual Mamba (RBVM), a directionally gated visual state-space backbone for source localization. Each residual block applies causal, depthwise sweeps along the two spatial axes and along time, and then fuses them via a learned upwind gate; a lightweight two-layer GELU MLP follows. Small LayerScale coefficients modulate both the directional aggregate and the MLP branch, and a layer-indexed, depth-weighted DropPath schedule stabilizes training across blocks. A 3D-Conv stem embeds the single-channel lattice into C channels, while a symmetric head produces logits for all time slices. Causal padding strictly prevents look-ahead along the operated axis, aligning the inductive bias with advective transport. The six-direction variant adds a backward temporal branch and improves robustness under meandering winds, whereas the five-direction variant (omitting that branch) may be considered for strictly causal regimes. In all cases, computation and memory scale linearly with the spatiotemporal volume and with the number of directions.

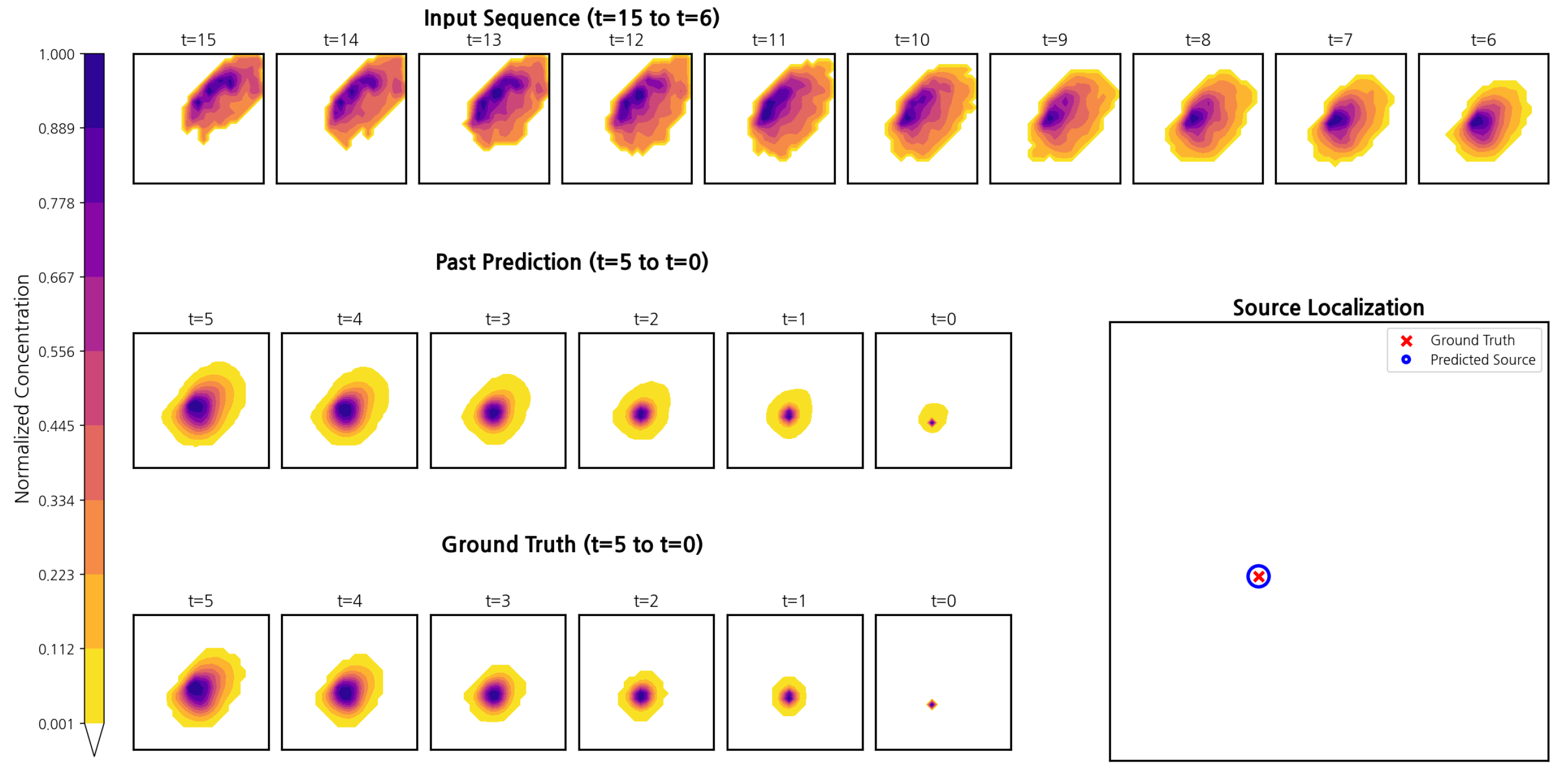

Our inference protocol follows the recursive backtracking paradigm. The model ingests a conditioning window of recent frames and recursively predicts earlier snapshots in reverse chronological order, with dataset-specific choices of window length and backtracking depth (cf. [

9,

11]). The last predicted slice provides the probability map for localization. Training uses a supervised loss that blends per-slice reconstruction MSE with a BCE-with-logits term computed over the backtracked slices (including the oldest), encouraging sharp, well-localized peaks. To further stabilize learning, we maintain a Polyak/EMA [

18] teacher of the student parameters, and, after a short warm-up, add consistency terms (probability-space MSE and logit-space BCE) whose coefficients ramp linearly with epoch [

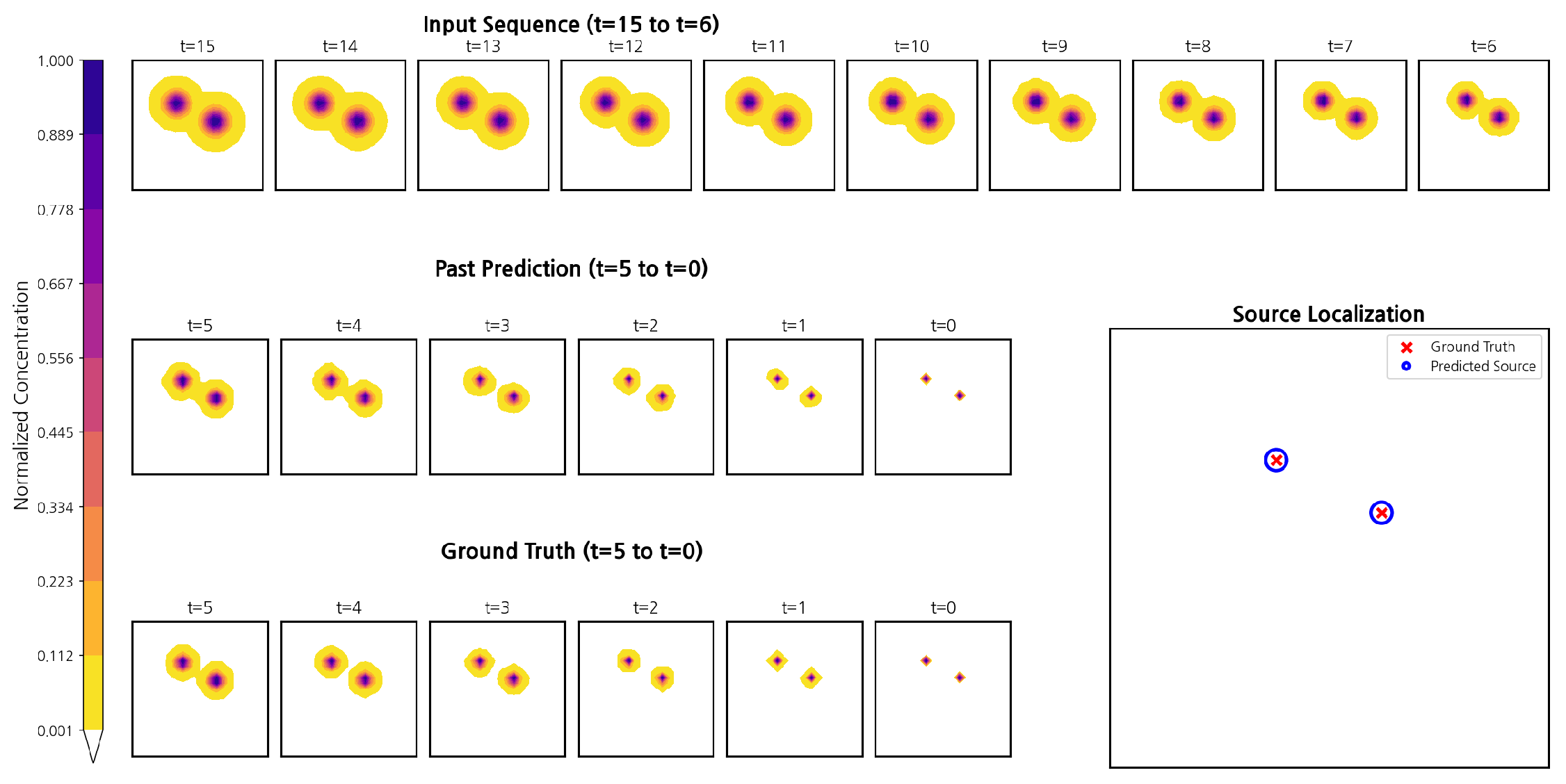

19]. Because dispersion in our synthetic generator is additive, a simple two-peak subtraction applied post hoc to the oldest slice enables zero-shot dual-source localization without retraining.

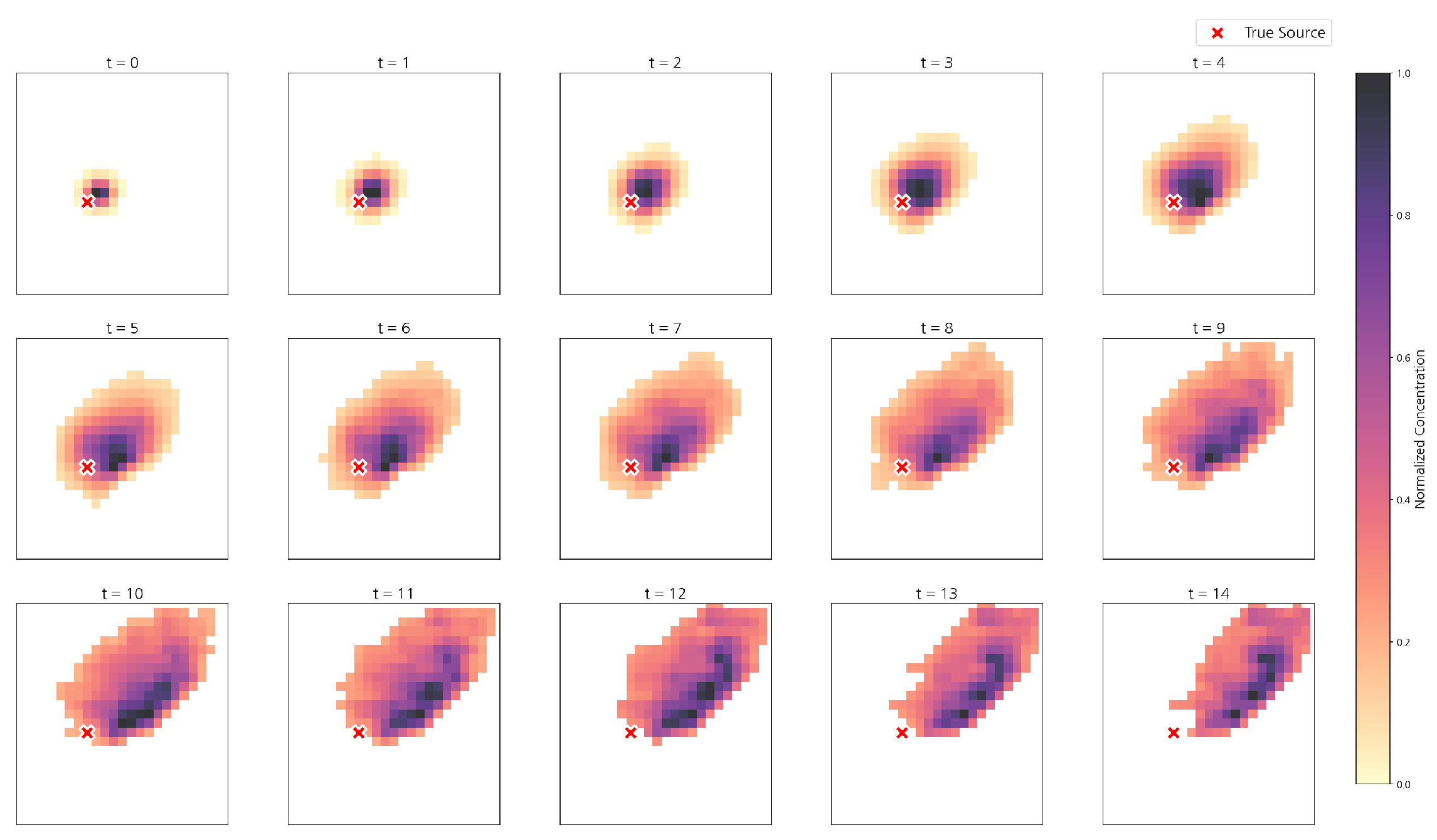

We evaluate on two complementary domains. A controlled synthetic benchmark (flat terrain with stochastic yet strictly causal advection) isolates algorithmic design effects and supplies dual-source sequences for stress testing. In addition, we evaluate the model on diffusion data spanning diverse experimental conditions and meteorological environments, generated via the Nuclear–Biological–Chemical Reporting and Modelling System (NBC_RAMS) [9,11]. A held-out subset of these runs captures urban channelling, shear, and occasional wind reversals. Models are trained only on single-source synthetic sequences and then evaluated on NBC_RAMS as-is, under the same protocol, as in prior work [9,11]. Across both domains, we compare RBVM against strong baselines (3D-CNN, CNN–LSTM, and ViViT) and report geometry-aware localization metrics (RMSE/AED, hit within a Chebyshev radius, Exact) together with model compactness (parameter count).

In summary, this paper makes three contributions in a unified framework. First, it presents a Visual Mamba-inspired, directionally gated visual SSM that aggregates causal depthwise operators via a learned upwind gate, achieving linear-time scaling with a hardware-friendly implementation. Second, it demonstrates robust performance under meandering, urban flows—yielding a favourable accuracy–compactness trade-off relative to 3D-CNN, CNN–LSTM, and ViViT—without resorting to quadratic-cost attention. Third, it shows that a parameter-free, physics-motivated two-peak subtraction enables zero-shot dual-source localization on synthetic stress tests, indicating that RBVM learns a physically meaningful representation rather than a brittle lookup. The remainder of the paper develops the preliminaries on linear-time state-space modelling, details the RBVM architecture and training protocol, specifies datasets and experimental procedures, and reports quantitative and qualitative results before concluding with a brief outlook.

Paper organization. We first lay the groundwork in Section 2, reviewing linear-time state-space modelling, introducing notation, and motivating directional causality for dispersion grids. Next, Section 3 presents RBVM end-to-end: we unpack the block design (causal depthwise operators and DirGate), the stem/head, training objectives and schedules, the inference protocol, and the zero-shot dual-source postprocessing step. We then turn to the experimental setup in Section 4, detailing datasets, splits, preprocessing, the common evaluation protocol, and baseline implementations, ensuring like-for-like comparisons. With the stage set, Section 5 presents the evidence: we begin with the NBC_RAMS field benchmark (Section 5.1), then turn to the synthetic benchmark (Section 5.2), and round out the section with targeted analyses: sensitivity highlights (Section 5.3) and qualitative overlays (Section 5.1 and Section 5.2). Finally, Section 6 distils the main takeaways, limitations, and practical guidance, and Section 7 closes with a brief outlook and opportunities for extension.

Scope and positioning. We address the real-time inverse task on short windows of solver-generated concentration fields under strict latency and input-availability constraints (no winds or solver calls at inference). Directional state-space modelling matches advective transport while retaining linear-time scaling and streaming readiness; physics-based solvers and their adjoint and data assimilation variants solve the forward problem, while we study a compact, directionally causal inverse layer that consumes their rasters at decision time.

Novelty—what is new vs. prior art.

- (i) Directionally causal visual SSM. Axis-wise one-sided, depthwise stencils with an upwind gate (global/local/hybrid), preserving per-axis causality and linear scaling, in contrast to bidirectional scans or quadratic attention.

- (ii) Flipped-time joint decoding. A reverse-index causal temporal design that predicts all backtracked slices in one pass and is trained with BCE-on-logits across slices plus light EMA consistency, limiting exposure bias without iterative rollout.

- (iii) Deployment posture. A learned inverse layer that consumes solver rasters under strict latency without winds or online solver calls, with optional calibration/abstention for operations, complementing (not replacing) physics stacks.

Contributions.

- A directionally causal, depthwise state-space backbone with an upwind gate that preserves linear-time scaling in sequence length and respects per-axis causality.

- On NBC_RAMS and synthetic diffusion, the model improves localization accuracy while keeping a compact parameter budget and linear-time scaling.

- A zero-shot dual-source postprocess with explicit assumptions and lightweight safeguards (non-negative residual clamping, small-radius NMS, uncertainty flag).

3. Methodology

3.1. RBVM: Directionally Gated Visual SSM Backbone

Long-horizon backtracking requires a model that (i) preserves temporal causality, (ii) propagates information efficiently across space and time, and (iii) scales linearly with the sequence extent so that compute and memory remain bounded as the look-back window grows. In dispersion grids, the spatiotemporal signal is moreover anisotropic: advection makes information travel primarily along an upwind/downwind axis that can change over time. These constraints motivate a state-space formulation with directional propagation.

Why Mamba? Transformers provide global context but incur $O(L^2)$ time and memory in sequence length $L$. Three-dimensional CNNs scale linearly but need deep stacks to connect distant voxels; CNN–LSTM hybrids lose parallelism and grow memory with horizon. State-space sequence models such as Mamba replace global attention with content-aware latent dynamics that advance in $O(L)$, enable streaming, and keep memory flat with horizon.

Why Visual Mamba? Backtracking must move information along spatial axes as well as time. Visual Mamba extends SSMs with directional scans on images (and videos), yielding short communication paths across H and W while preserving linear complexity. In dispersion grids, this is a good inductive bias: gaseous filaments meander and split, but transport is largely directional instant-to-instant; directional scans approximate these flows with lightweight operators.

Why advance beyond prior Visual Mamba? Standard visual SSM implementations often use bidirectional (non-causal) passes or associative scans [17,23] that, while powerful, are heavier than needed here and can blur temporal causality. We tailor the formulation to dispersion by (i) enforcing axis-wise causality with one-sided, depthwise operators (e.g., [42,43]); (ii) introducing an upwind gate (DirGate) that learns a convex mixture over directions, routing information along the current flow; and (iii) using a 3D-Conv stem/head for compact I/O, with small LayerScale coefficients and a layer-indexed, depth-weighted DropPath for stable stacking [44,45]. This Mamba-inspired design keeps the linear-time advantage, encodes physical causality, and is hardware-friendly (grouped depthwise ops dominate cost).

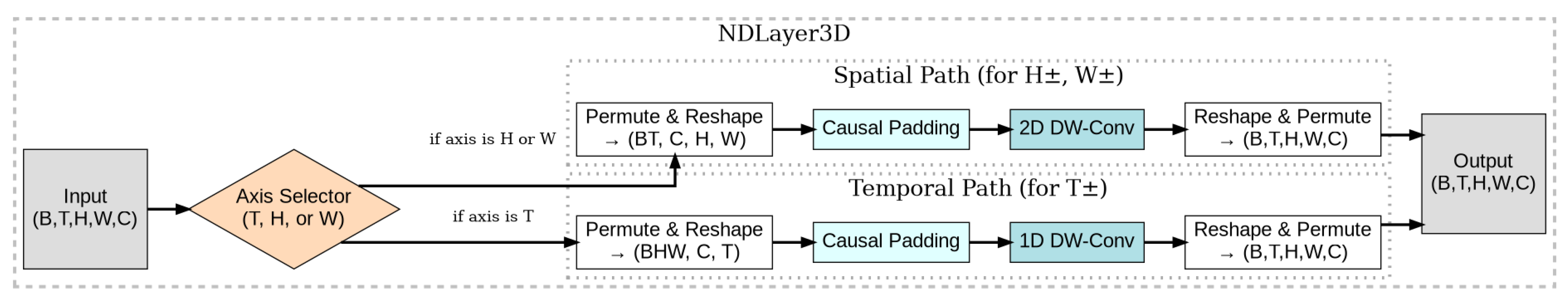

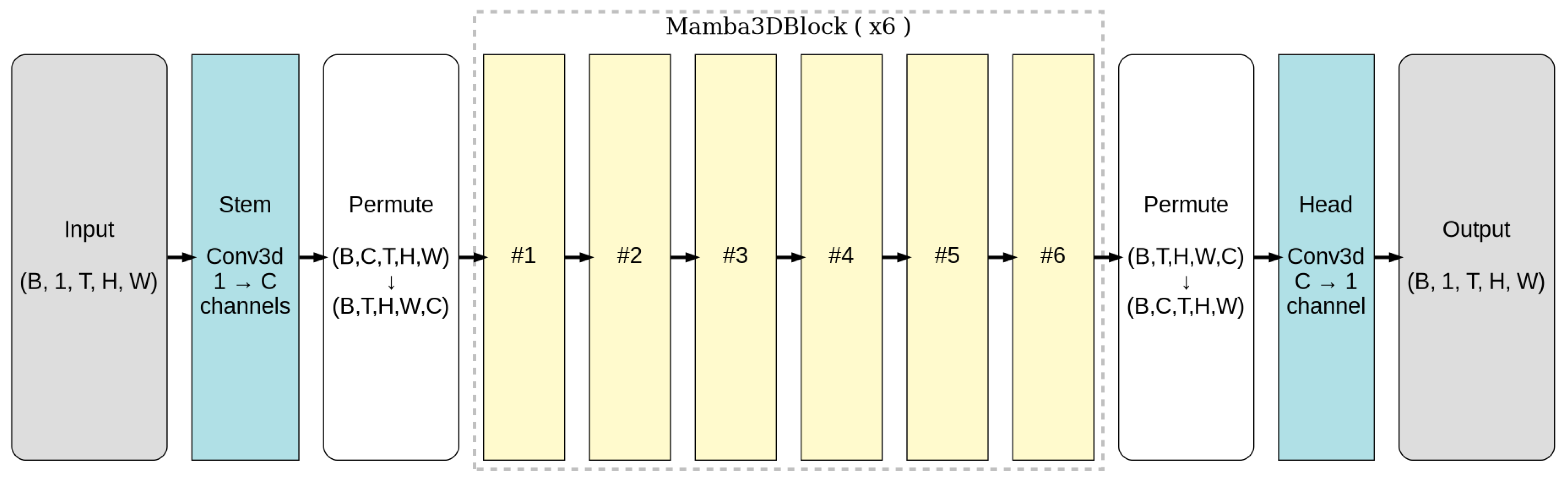

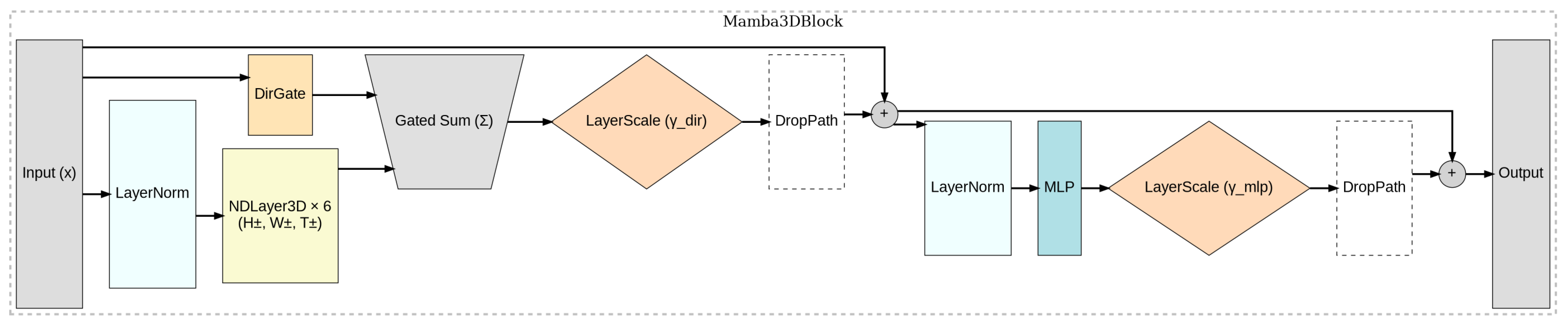

Concretely, we parameterise RBVM as follows. Let the input be a single-channel volume on the $T \times H \times W$ grid; the stem maps it to $C$ channels, and we then operate on channels-last features (pre-norm inside the block). For notational consistency in this section, we denote the channels-last features by $U$.

Model overview. The model comprises a 3D-Conv stem that embeds the single-channel inputs into C channels, a stack of Mamba3D blocks operating in channels-last form, and a 3D-Conv head that maps back to one channel of logits for all T slices; inside each block, six directional NDLayer3D operators are applied with causal padding and mixed by an upwind DirGate. LayerScale and optional DropPath are used on both the directional and MLP branches with residual skips, while NDLayer3D permutes/reshapes to apply 2D depthwise convolutions along space and 1D depthwise convolutions along time before restoring the channels-last layout (Figure 1, Figure 2 and Figure 3).

Operational inputs. The model consumes gridded concentration rasters exported by an upstream dispersion stack (e.g., NBC_RAMS/HPAC-family). No wind vectors are used at inference. The same interface applies to other operational stacks that export concentration fields on a regular grid.

3.1.1. Temporal Causality and Time Flip

We index the conditioning window so that the oldest slice used for evaluation aligns with the causal boundary (via a simple time flip). The two temporal branches (forward and backward) then perform one-sided sweeps on this flipped timeline, with padding applied only on the causal (upwind) side. All temporal operations remain within the observed window; no operator can access frames earlier than the oldest target, and the head unflips the index before producing logits. The time flip is a non-learned re-indexing that preserves gradients to all supervised frames; it does not remove supervision from any FUT index. Normalization statistics are taken from the conditioning window only, so normalization introduces no information leakage either.

3.1.2. Temporal Windowing

Let the conditioning window cover a fixed interval that excludes the first five minutes after release. We apply a time flip so the oldest frame is the decoding target and supervise all backtracked frames within this window. By construction, neither training nor metrics require frames earlier than the start of the window, and excluding the first five minutes avoids near-field transients beyond the scope of this study.

3.1.3. Optional Raster Adaptor for Irregular Sensing

When only sparse/irregular measurements are available, a lightweight adaptor constructs a working raster X together with a co-registered uncertainty/support map U. We can use conservative kernel interpolation (e.g., inverse-distance or Gaussian kernels with mass normalization) or standard kriging to populate X, and define U from local support (e.g., distance-to-sample or posterior variance). The model then consumes the pair (X, U), with uncertainty as an auxiliary channel, and no change to the backbone. At inference, U is folded into the decision rule by tightening abstention thresholds when support is low (higher U). This adaptor runs before the directional sweeps, thus keeping linear-time behaviour and per-axis causality intact. This optional raster adaptation is documented for deployment completeness.

3.1.4. Directional Operators (Causal, Depthwise)

Each operator performs a causal sweep along one axis/direction, propagating information only from the past (or from the upwind side) without peeking ahead. In gas dispersion, this matches physics: under advection, the most informative context at a given cell lies immediately upwind or in the preceding time step. We therefore use one-sided stencils (kernel width 3) with causal padding along the operated axis. Kernels are depthwise (groups equal to the channel count C) so each channel evolves with its own small filter; cross-channel mixing is deferred to the gated fusion and the MLP. This yields short communication paths and excellent hardware efficiency while preserving the state-space flavour of axis-wise propagation. While each operator acts along a single axis (a spatial axis or time), stacking blocks composes these short paths into oblique or curved trajectories; the upwind gate shifts the dominant orientation across blocks and time, and the post-aggregation MLP mixes channels to couple axes. This compositional routing suffices to capture meanders and off-axis transport in our data; if tightly wound eddies dominate, diagonal variants are a drop-in extension that leaves the rest of the stack unchanged.
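As an illustration of the one-sided stencil described above, the following is a minimal pure-Python sketch of a causal, depthwise sweep along a single axis. The function name `causal_depthwise_sweep` and the kernel ordering are illustrative assumptions, not the paper's NDLayer3D implementation, which applies grouped convolutions on tensors.

```python
def causal_depthwise_sweep(x, weights):
    """Apply a one-sided width-k stencil per channel along one axis.

    x:       list of channels, each a list of floats along the operated axis.
    weights: one kernel per channel (depthwise), ordered from the oldest/upwind
             tap to the current tap, e.g. [w(i-2), w(i-1), w(i)] for width 3.
    """
    out = []
    for channel, kernel in zip(x, weights):
        k = len(kernel)
        # Causal padding: zeros are added only on the upwind (past) side.
        padded = [0.0] * (k - 1) + list(channel)
        out.append([
            sum(kernel[j] * padded[i + j] for j in range(k))
            for i in range(len(channel))
        ])
    return out


# An impulse at index 2 can only influence indices 2, 3 and 4 (never 0 or 1).
response = causal_depthwise_sweep([[0.0, 0.0, 1.0, 0.0, 0.0]], [[0.2, 0.3, 0.5]])
```

Because each channel has its own kernel and no term mixes channels, the cost of the sweep is proportional to the number of cells times the kernel width.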

3.1.5. Orientation Extensions

For scenes dominated by rotational or swirling transport, we can extend the direction set with diagonal directions, realized as one-sided, depthwise diagonal stencils implemented as oriented masked kernels with causal support on the upwind half-plane. As with the axis-aligned operators, padding is causal with respect to the operated direction and evaluation index, so per-direction causality is preserved. This change is modular and increases complexity linearly with the number of directions (still linear in the spatiotemporal volume).

Define the direction set as the axis-aligned directions introduced above (our default), with the alternative set used only in sensitivity snapshots. For each direction in the set, we apply a one-sided, depthwise kernel of width 3 with causal padding along the operated axis.
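One way to write such a width-3 operator in symbols is sketched below. The notation is an assumption introduced for illustration: $Y_d$ is the response for direction $d$, $w_d$ its per-channel kernel, $\mathbf{p} = (t, h, w)$ a grid position, $c$ a channel index, and $\mathbf{e}_d$ the unit step along direction $d$; a purely one-dimensional stencil along the operated axis is assumed.

```latex
Y_d[\mathbf{p}, c] \;=\; \sum_{k=0}^{2} w_d[k, c]\; U[\mathbf{p} - k\,\mathbf{e}_d,\; c],
\qquad
U[\mathbf{q}, c] = 0 \ \text{ whenever } \mathbf{q} \text{ lies outside the grid.}
```

Under this form, the output at $\mathbf{p}$ depends only on $\mathbf{p}$ and the two cells immediately upwind of it along $d$, and no term couples different channels.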

3.1.6. Implementation Note

The forward temporal operator processes the sequence in index order (consistent with streaming), while the backward temporal operator adds a branch that helps under meandering winds; the spatial operators use one-sided stencils with padding applied only on the upwind side. We permute/reshape tensors so these become depthwise 1D/2D grouped convolutions on contiguous memory. Across blocks, alternation of sweeps with gate-driven orientation changes yields oblique information flow without breaking per-axis causality. On the flipped timeline, both temporal operators are one-sided with causal padding, so adding the backward branch improves robustness to meanders without introducing bidirectional look-ahead.

3.1.7. Upwind Gate (DirGate)

The five (or six) directional responses are not equally informative at every site. When the plume drifts east, for example, the eastward operator should carry more weight; when winds reverse or channel, the optimal mixture shifts accordingly. DirGate learns this upwind routing as a temperature-controlled softmax over directions. At each block, we normalize features, project to logits, divide by a temperature $\tau$, and apply softmax to obtain a convex mixture. A smaller $\tau$ sharpens the distribution; a larger $\tau$ encourages smoother mixing. We zero-initialize the projection so training starts from a uniform mixture.
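The gate's behaviour at initialization and under different temperatures can be sketched in a few lines. This is a minimal illustration assuming the logits are already available as a plain list; `dir_gate` and `mix` are hypothetical helper names, and the learned projection that produces the logits is omitted.

```python
import math


def dir_gate(logits, temperature=1.0):
    """Temperature-controlled softmax over per-direction logits."""
    scaled = [v / temperature for v in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def mix(responses, weights):
    """Convex combination of scalar directional responses."""
    return sum(w * r for w, r in zip(weights, responses))


uniform = dir_gate([0.0] * 6)                 # zero logits give equal weights
sharp = dir_gate([2.0, 0.0, 0.0], temperature=0.5)
smooth = dir_gate([2.0, 0.0, 0.0], temperature=4.0)
```

With zero-initialized logits every direction receives the same weight, and lowering the temperature concentrates the mixture on the largest logit.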

3.1.8. Formal Definition and Modes

Directional responses are combined by a convex mixture whose weights are obtained from a lightweight summary of the block features U, passed through zero-initialized gate logits W and a temperature-scaled softmax. We support global, local, and hybrid (global prior + local refinement) modes; operators are unchanged across modes and preserve linear complexity. The summary of U is obtained by global pooling in global mode and by the identity or a projection in local mode; W are the gate logits (zero-initialized; uniform start), and the temperature controls mixture entropy.
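Written out, the gate described above could take the following form. The symbols $s(\cdot)$ for the summary, $\tau$ for the temperature, $Y_d$ for the directional responses, $\mathcal{D}$ for the direction set, and $\tilde{Y}$ for the fused output are assumed notation for this sketch; only $U$, $W$, and $g$ are named in the text.

```latex
g \;=\; \operatorname{softmax}\!\left(\frac{W\, s(U)}{\tau}\right),
\qquad
\tilde{Y} \;=\; \sum_{d \in \mathcal{D}} g_d\, Y_d,
\qquad
g_d \ge 0,\quad \sum_{d \in \mathcal{D}} g_d = 1 .
```

With $W = 0$ at initialization the logits vanish and $g_d = 1/|\mathcal{D}|$ for every direction, which is the uniform start mentioned above. In global mode $g$ is one vector per sample, whereas in local mode the same expression would be evaluated per voxel.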

3.1.9. Gating with Additional Orientations

DirGate naturally generalizes to the enlarged direction set by producing convex weights over it. Alternatively, a compact dynamic-orientation variant replaces fixed diagonals with K learned-orientation atoms (small K) and gates over these atoms (global or local mode). Zero-initialized logits and a moderate temperature avoid premature collapse; the added cost is linear in K, and streaming behaviour is unchanged. At higher resolutions, a local DirGate (per-voxel mixtures) can replace or refine the global mixture while keeping linear complexity; an optional patchify/strided stem lets global gating operate on a reduced token grid, with the patch stride chosen to balance latency and spatial fidelity.

To recap, DirGate supports three modes. Global gating predicts a single convex mixture over directions for the whole sample (our default at modest grid sizes). Local gating predicts a mixture per voxel (and time index), enabling spatially varying routing when heterogeneity increases. A hybrid option combines a global prior with local refinement. Logits are zero-initialized (uniform start) and the temperature controls entropy; for local mode, we optionally apply a small spatial smoothing on pre-softmax logits. Switching modes leaves the directional operators unchanged and preserves linear complexity in the spatiotemporal volume (and in the number of directions).

3.1.10. Degeneracy Mitigation

We zero-initialize gate logits (uniform start) and use a moderate temperature to avoid overly sharp mixtures. Small LayerScale coefficients at initialization on both branches prevent any single direction from dominating early; global gating (default at modest grids) further stabilizes routing by averaging over space–time. Local/hybrid variants remain available when heterogeneity increases.

3.1.11. Block, Stem, Head, and Complexity

Each residual block first aggregates directional responses using DirGate and then refines them with a lightweight two-layer GELU MLP. We use pre-norm LayerNorm on both branches so gradients flow through identity skips; small LayerScale coefficients stabilize early training; and a layer–indexed, depth-weighted DropPath regularizes across blocks without adding inference cost. A 3D-Conv stem maps the single-channel lattice to C channels, and a symmetric head maps back to one channel of logits at all time steps. Conceptually, stacking such blocks alternates axis-wise message passing (short, causal stencils) with channel mixing (MLP), so long-range dependencies emerge through depth rather than wide filters.

3.1.12. Block, Stem, and Head (Modular View)

Stem. Conv3D maps the single-channel input to C channels; an optional patchify/strided reduction coarsens the spatial grid before the sweeps.

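Block. Six axis-wise depthwise paths operate on the normalized block input to produce one response per direction; DirGate yields the mixture weights g, and the gated sum is added to the residual stream with LayerScale and optional DropPath.

Residual form. We initialize the LayerScale coefficients of both branches at small values. Small residual scales ease early optimization in deep residual/Transformer-style stacks while preserving capacity.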
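A two-branch residual update consistent with this description can be sketched as follows. The names $\gamma_1$ and $\gamma_2$ for the LayerScale coefficients, $Y_d$ for the directional responses, and $\mathcal{D}$ for the direction set are assumptions made for this sketch; $Y_d$ is taken to be computed from $\operatorname{LN}(U)$, in line with the pre-norm design.

```latex
\begin{aligned}
U' &= U + \operatorname{DropPath}\!\Big(\gamma_1 \odot \sum_{d \in \mathcal{D}} g_d\, Y_d\Big),\\
U_{\mathrm{out}} &= U' + \operatorname{DropPath}\!\Big(\gamma_2 \odot \operatorname{MLP}\big(\operatorname{LN}(U')\big)\Big).
\end{aligned}
```

With small $\gamma_1$ and $\gamma_2$ at initialization, each block starts close to the identity map, which is the usual motivation for LayerScale in deep residual stacks.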

Head. Conv3D maps the features to logits on the cell grid; temporal alignment follows the flip convention in Section 3.1.1. Deployment safeguards (calibration/abstention) are wrappers and do not alter the backbone.

3.1.13. Scaling and Input Reduction

Patchify at input. For large rasters, we optionally apply a non-overlapping patchify (or a strided stem) to coarsen the grid before the directional sweeps; the head symmetrically upsamples to cell logits. Because the operators remain depthwise and one-sided, axis-wise causality and the linear-time profile are preserved. Gating (global/local/hybrid) is orthogonal to this reduction and leaves the directional operators unchanged.

Gating under patchify. Global DirGate operates unchanged on patch tokens; local DirGate (per token) is also supported when heterogeneity increases with resolution. Both retain linear complexity and reuse the same directional responses. Gating granularity does not alter the backbone's cost profile: axis-wise, one-sided depthwise operators remain the dominant term, and the gate adds a per-sample (global) or per-voxel (local) convex mixture whose overhead is linear in volume. When resolution increases, global or hybrid gating operates on the compact token grid produced by the patchify/strided stem, with optional local refinement if sub-grid heterogeneity persists.

3.1.14. Cost Drivers and Permutations

Per-block cost scales linearly in the spatiotemporal volume (and in the number of directions); grouped depthwise convolutions dominate runtime. We keep a channels-last layout throughout the body so most permutations are metadata (stride) changes; only the stem and head swap layouts. In practice, permutation overhead is negligible relative to depthwise stencils on current GPUs. Adding diagonal or learned-orientation stencils increases the per-block constant proportionally to the number of directions/orientation atoms, but does not change the linear dependence on sequence extent; memory overhead grows linearly with the volume and linearly with the number of directional buffers.
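A back-of-the-envelope count makes the scaling argument concrete. The sketch below assumes one multiply-accumulate per kernel tap, per channel, per cell, per direction, and ignores the gate, MLP, stem, and head; the function names and the example grid sizes are illustrative assumptions, not figures from the paper.

```python
def sweep_macs(t, h, w, channels, num_directions=6, kernel_width=3):
    """Multiply-accumulates for the directional depthwise sweeps of one block."""
    return t * h * w * channels * kernel_width * num_directions


def attention_pairs(t, h, w):
    """Number of token pairs scored by global self-attention over all cells."""
    tokens = t * h * w
    return tokens * tokens


# Doubling the temporal extent doubles the sweep cost but quadruples the pair count.
base = sweep_macs(8, 32, 32, channels=16)
longer = sweep_macs(16, 32, 32, channels=16)
```

Adding directions changes only the constant factor: moving from six to eight directions multiplies the sweep count by 8/6 at any volume.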

3.1.15. Tiling with Causal Halos

For very large rasters, we process overlapping tiles with a small causal halo so one-sided stencils remain valid at tile boundaries; logits are then stitched without seams.
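The halo idea can be checked on a single one-dimensional causal sweep. In this sketch (hypothetical helper names, one operator, one channel), each tile is extended by `kernel_width - 1` upwind samples, swept, and the halo outputs are discarded before stitching.

```python
def causal_sweep(x, kernel):
    """One-sided stencil with zero padding on the upwind side only."""
    k = len(kernel)
    padded = [0.0] * (k - 1) + list(x)
    return [sum(kernel[j] * padded[i + j] for j in range(k)) for i in range(len(x))]


def tiled_causal_sweep(x, kernel, tile):
    """Process x in tiles, each extended by a causal halo of upwind samples."""
    halo = len(kernel) - 1
    out = []
    for start in range(0, len(x), tile):
        lo = max(0, start - halo)           # halo reaches back into the previous tile
        chunk = x[lo:start + tile]
        y = causal_sweep(chunk, kernel)
        out.extend(y[start - lo:])          # drop the halo outputs, keep the tile
    return out


signal = [float(i * i % 7) for i in range(23)]
kernel = [0.25, 0.25, 0.5]
full = causal_sweep(signal, kernel)
stitched = tiled_causal_sweep(signal, kernel, tile=5)
```

For this single operator the stitched result matches the untiled one. For a stack of blocks, the receptive field grows with depth, so the halo would need to grow accordingly; the sizes used in the paper are not stated here.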

Compute and memory are dominated by depthwise grouped convolutions and thus scale linearly with the spatiotemporal volume and with the number of directions. DirGate (a single linear projection + softmax) and LayerScale add negligible overhead. This keeps the backbone compact while allowing look-back horizons to extend without quadratic penalties.

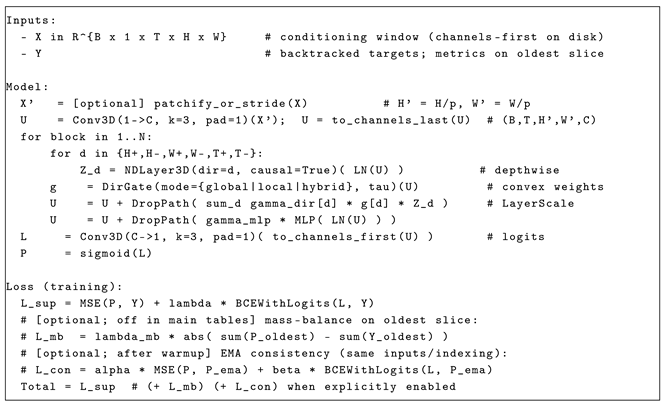

3.1.16. Pseudocode (Forward and One Training Step)

Listing A1 provides ASCII-safe pseudocode for one forward pass (incl. optional patchify) and one training step reflecting the equations. Optional regularizers/safeguards are flagged and disabled in the main tables unless stated. For quick lookup, see Appendix A.

3.2. Training Objective and Schedules

We adopt a finite-horizon backtracking protocol parameterised by the conditioning-window length COND and the backtracking depth FUT (cf. [9,11]). Given a mini-batch of conditioning windows, the network returns logits for the FUT earlier slices. During training we apply a time flip so that a fixed output index corresponds to the oldest slice (the one closest to the release), aligning targets and losses with the evaluation slice used at test time.

3.2.1. Joint Decoding

We decode all backtracked frames jointly from the same conditioning window and supervise the entire FUT stack in parallel. Concretely, the network produces all FUT slices (including the oldest) in one forward pass; we do not feed a predicted slice back as input to predict an earlier one. The temporal operators are one-sided causal sweeps over the latent representation aligned to the observed window (via time flip), not an auto-regressive rollout. This joint design mitigates per-step error accumulation and exposure bias typical of strictly auto-regressive decoders; the "oldest" evaluation slice is a direct head output rather than the result of repeated prediction chaining.

All FUT slices are decoded from the same conditioning window on a flipped index; both temporal branches remain one-sided with respect to the evaluation boundary, so training cannot leak information from frames earlier than the oldest target.

No forgetting via joint supervision. We supervise the entire FUT stack jointly from the same conditioning window, aggregating per-frame terms across all FUT indices (oldest included) in the supervised loss. Because all targets are optimized together, the model does not preferentially fit only the most recent slice; random window sampling across epochs further rehearses every FUT index.

Per-frame min–max normalization yields invariance to unknown affine rescaling (emission strength, sensor gain), which is desirable for localization across heterogeneous sources. The model thus learns shape/transport patterns rather than absolute amplitude; when absolute mass is needed operationally, the optional amplitude channel provides that cue without altering the backbone or loss.

3.2.2. Loss Decomposition

The objective encourages two complementary behaviours: (i) faithful reconstruction of the earlier maps (to learn dynamics, not merely a point label) and (ii) sharp localization on the oldest slice, where the source imprint is most concentrated. Let $P = \sigma(Z)$ denote the predicted probabilities for logits $Z$, and let $Y$ denote the corresponding targets. We blend per-pixel regression (MSE) with a classification-style term (BCE) applied to the logits over all backtracked frames (including the oldest).

Applying BCE on logits improves gradients when probabilities saturate; a scalar weight trades off geometric fidelity and peak sharpness. Losses use reduction="sum" internally and are normalized by batch size in logging.
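The blended objective can be sketched on flat lists of logits and targets. The additive form `mse + lam * bce`, the name `lam`, and its default of 1.0 are assumptions made for this illustration, since the exact weighting is not recoverable from the text; the sum reduction mirrors the description above.

```python
import math


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def bce_with_logits(z, y):
    """Stable binary cross-entropy on a logit: max(z, 0) - z*y + log(1 + exp(-|z|))."""
    return max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))


def supervised_loss(logits, targets, lam=1.0):
    """Sum-reduced MSE on probabilities plus lam times sum-reduced BCE on logits."""
    mse = sum((sigmoid(z) - y) ** 2 for z, y in zip(logits, targets))
    bce = sum(bce_with_logits(z, y) for z, y in zip(logits, targets))
    return mse + lam * bce


good = supervised_loss([4.0, -4.0], [1.0, 0.0])
bad = supervised_loss([-4.0, 4.0], [1.0, 0.0])
```

Computing the BCE term directly on logits keeps it finite even when the corresponding probability rounds to exactly 0 or 1.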

3.2.3. Complementarity of BCE-on-Logits and MSE

Writing $p = \sigma(z)$ for the probability at logit $z$ with target $y$, the logistic loss on logits satisfies $\partial \mathcal{L}_{\mathrm{BCE}} / \partial z = p - y$, while MSE on probabilities gives $\partial \mathcal{L}_{\mathrm{MSE}} / \partial z = 2\,(p - y)\,p\,(1 - p)$ by the chain rule; the latter attenuates near saturation ($p \to 0$ or $1$). We therefore combine the two terms: BCE-on-logits supplies a non-vanishing sharpening signal under saturation, and MSE preserves geometric fidelity elsewhere. For numerical stability, we compute BCE with logits and evaluate MSE on clipped probabilities; post hoc temperature scaling (optional) can further refine calibration without altering the objective.

3.2.4. Class Imbalance and Weighting

Because positives occupy a small fraction of cells on the oldest slice, the dense MSE term prevents trivial all-zero solutions and enforces the global plume shape, while the BCE term focuses learning on the sparse high-probability region and maintains gradients under saturation. For simplicity and robustness across datasets, we use a single weight for all backtracked frames; this setting was stable in our experiments.

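3.2.5. SWA/Polyak Teacher Consistency

Backtracking stacks several predictions; small calibration errors can compound. To stabilize training without affecting inference cost, we maintain a Polyak/EMA teacher (AveragedModel) and add light consistency terms (probability-space MSE and logit-space BCE between student and teacher outputs) after a short warm-up, with coefficients ramped linearly with epoch. The total loss is the sum of the supervised loss and the weighted consistency terms.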

3.2.6. EMA Teacher and Schedule

Let $\theta_S$ be the student parameters and $\theta_T$ the teacher. We update the teacher as $\theta_T \leftarrow \alpha\,\theta_T + (1 - \alpha)\,\theta_S$ with a high decay $\alpha$, so the teacher prediction is a temporally smoothed copy of the student. After a short warm-up, the consistency weights are ramped linearly from 0 to modest values so the EMA target does not act as a strong prior early in training. The teacher uses the same inputs and indexing as the student (including the time flip that makes the oldest slice the target), receives no external wind or solver signals, and is updated with stop-gradient. Thus, the consistency loss improves calibration and temporal coherence across FUT slices without introducing information leakage or exogenous bias.
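The teacher update and the ramp can be sketched on plain lists of parameters. The helper names, the argument names (`warmup_epochs`, `ramp_epochs`, `max_weight`), and the numeric values in the usage lines are assumptions for illustration only; the paper's decay and schedule values are not stated here.

```python
def ema_update(teacher, student, decay):
    """Exponential moving average: teacher <- decay * teacher + (1 - decay) * student."""
    return [decay * t + (1.0 - decay) * s for t, s in zip(teacher, student)]


def consistency_weight(epoch, warmup_epochs, ramp_epochs, max_weight):
    """Zero during warm-up, then a linear ramp up to max_weight."""
    if epoch < warmup_epochs:
        return 0.0
    progress = min(1.0, (epoch - warmup_epochs) / ramp_epochs)
    return max_weight * progress


teacher = [1.0, -2.0]
student = [0.0, 0.0]
teacher = ema_update(teacher, student, decay=0.9)   # moves 10% of the way to the student
weights = [consistency_weight(e, 5, 10, 0.1) for e in range(0, 20)]
```

In a training loop the teacher would be updated after each optimizer step and never receive gradients, matching the stop-gradient description above.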

3.2.7. Robustness Under Meanders

When winds meander or reverse, student and teacher may transiently disagree; keeping the consistency weights modest ensures supervised terms dominate in low-confidence cases, while the EMA acts as a gentle stabilizer rather than a hard constraint.

3.2.8. Inference

At test time, we feed the most recent COND frames and obtain logits for the FUT earlier slices. After the same time flip used in training, the oldest predicted slice is the estimate closest to the release, and the predicted source cell is the spatial argmax of that slice.

We report geometry-aware metrics: Exact hit, hit within a Chebyshev radius, and RMSE (px). Using the same per-frame normalization at train/test time avoids bias from dilution-induced dynamic-range shifts. All FUT slices are produced in a single forward pass from the same conditioning window, so no predicted slice is reused as input at test time; temporal sweeps are one-sided on the flipped index, so no information beyond the window can be accessed.
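The decision rule and metrics can be sketched for a single 2D probability map. The helper names and the default `radius=1` are assumptions for illustration; the tolerance radius used in the paper is not recoverable from the text.

```python
def argmax_cell(grid):
    """Return (row, col) of the largest value; the first maximum wins on ties."""
    best = (0, 0)
    for r, row in enumerate(grid):
        for c, v in enumerate(row):
            if v > grid[best[0]][best[1]]:
                best = (r, c)
    return best


def chebyshev(a, b):
    """Chebyshev (chessboard) distance between two cells."""
    return max(abs(a[0] - b[0]), abs(a[1] - b[1]))


def rmse_px(preds, trues):
    """Root-mean-square Euclidean error in pixels over a set of samples."""
    sq = [(p[0] - t[0]) ** 2 + (p[1] - t[1]) ** 2 for p, t in zip(preds, trues)]
    return (sum(sq) / len(sq)) ** 0.5


def hit(pred, true, radius=1):
    """True when the prediction is within `radius` cells (Chebyshev) of the truth."""
    return chebyshev(pred, true) <= radius


oldest_slice = [[0.1, 0.2, 0.0],
                [0.9, 0.3, 0.0],
                [0.0, 0.1, 0.0]]
pred = argmax_cell(oldest_slice)
```

An exact hit corresponds to `radius=0`, so the two hit-style metrics differ only in the tolerance passed to `hit`.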

3.2.9. Uncertainty and Abstention (Optional)

Alongside the point estimate on the oldest predicted slice, we provide an optional confidence score built from two quantities: (i) the top-two probability margin at the argmax cell and (ii) the predictive entropy. An abstention policy can be enabled at deployment: emit a hard decision only if the margin is at least a margin threshold and the entropy is at most an entropy threshold; otherwise, return uncertain. These thresholds are chosen on a held-out validation set and kept fixed. For reliability, logits may be post hoc calibrated by temperature scaling (logits divided by a scalar temperature $T$, with $T$ fit on validation); when enabled, we recommend reporting the expected calibration error (ECE) on the oldest slice. All of these components are optional knobs for deployment and are disabled in the main tables unless explicitly stated.
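A minimal sketch of such an abstention rule is given below. It assumes that the per-cell logits of the oldest slice are flattened and normalized with a softmax over cells so that margin and entropy are well defined; that normalization, the function names, and the threshold values are assumptions for illustration only.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over flattened cell logits, after dividing by a temperature T."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)                      # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def confidence(logits, temperature=1.0):
    """Return (top-two probability margin, predictive entropy in nats)."""
    probs = softmax(logits, temperature)
    top = sorted(probs, reverse=True)
    margin = top[0] - top[1]
    entropy = -sum(p * math.log(p) for p in probs if p > 0.0)
    return margin, entropy


def decide(logits, min_margin, max_entropy, temperature=1.0):
    """Index of the argmax cell, or None ("uncertain") if either check fails."""
    margin, entropy = confidence(logits, temperature)
    if margin >= min_margin and entropy <= max_entropy:
        return max(range(len(logits)), key=lambda i: logits[i])
    return None


peaked = [8.0, 0.0, 0.0, 0.0]            # one dominant cell
flat = [0.0, 0.0, 0.0, 0.0]              # no preferred cell
hard = decide(peaked, min_margin=0.5, max_entropy=0.5)
soft = decide(flat, min_margin=0.5, max_entropy=0.5)
```

The peaked example passes both checks and yields a hard decision, whereas the flat example has zero margin and maximal entropy and is returned as uncertain. A temperature above 1 flattens the distribution and therefore lowers the margin.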

3.2.10. Stabilizers

We use a DropPath schedule across blocks, an EMA teacher for light consistency after warm-up, and gradient clipping. These discourage sample-idiosyncratic routing and help the gate avoid collapse, without changing the backbone.

3.2.11. Deployment Note

In practice, we keep the validation-selected thresholds fixed at deployment, optionally apply temperature scaling for calibration, and log both the margin and entropy with each prediction. The abstention safeguard remains disabled in the main accuracy tables unless stated.

3.2.12. Optional Stabilizer for Very Low-Wind Plateaus

Episodes with near-stagnant flow can produce broad, low-contrast concentration fields. As an optional stabilizer that leaves the backbone unchanged, we add a light mass-balance term on the oldest slice, and we recommend mixing low- and moderate-wind windows within a mini-batch to avoid collapse onto trivial flat solutions. When used, the mass-balance term is applied after a short warm-up with a small weight tuned on validation.

3.2.13. Conceptual Algorithms (For Clarity)

Algorithms 1 and 2 give compact, ASCII-safe pseudocode for the forward pass (causal directional sweeps + DirGate) and a single training step (BCE-with-logits across backtracked slices; after warm-up, a light EMA-consistency term). These conceptual listings include only the interfaces needed for reproduction and do not alter the backbone.

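| Algorithm 1 Forward pass with directional sweeps and DirGate |
| Input: conditioning window of recent frames |
| Stem: embed the input into feature channels; permute to channels-last |
| For each block: |
| For each direction: one-sided sweep along that direction (depthwise, causal) |
| Compute direction weights (global or local DirGate) |
| Mix the directional outputs with the DirGate weights (convex combination) |
| Residual update with pre-norm LayerNorm, LayerScale, and DropPath |
| Head: permute to channels-first and apply the output projection to produce logits |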
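To make the idea of a one-sided sweep followed by a convex directional mixture concrete, the sketch below works on a single 1D channel with two opposite directions. It is a toy reduction, not the block used in this work: the softmax gate, the shared kernel, and all names are assumptions, and the actual model sweeps several spatial and temporal directions on multi-channel tensors.

```python
import math


def causal_sweep(x, kernel, reverse=False):
    """One-sided filter: output i depends only on x[i], x[i-1], ... .

    With reverse=True the same filter is swept from the other end of the axis.
    """
    seq = x[::-1] if reverse else x
    out = []
    for i in range(len(seq)):
        acc = 0.0
        for k, w in enumerate(kernel):
            if i - k >= 0:               # never read beyond the current position
                acc += w * seq[i - k]
        out.append(acc)
    return out[::-1] if reverse else out


def dir_gate(scores):
    """Softmax over direction scores: non-negative weights that sum to one."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mix_directions(x, kernel, scores):
    """Convex combination of the two opposite sweeps along one axis."""
    sweeps = [causal_sweep(x, kernel), causal_sweep(x, kernel, reverse=True)]
    gate = dir_gate(scores)
    return [sum(g * s[i] for g, s in zip(gate, sweeps)) for i in range(len(x))]


kernel = [0.5, 0.5]                      # hypothetical two-tap kernel
impulse = [1.0, 0.0, 0.0, 0.0]
forward = causal_sweep(impulse, kernel)
backward = causal_sweep(impulse, kernel, reverse=True)
mixed = mix_directions(impulse, kernel, scores=[0.0, 0.0])
```

The forward sweep spreads the impulse only toward higher indices and the reverse sweep only toward lower ones, so a gate that favours one direction effectively selects which side each position may draw information from.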

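| Algorithm 2 One training step |
| Input: conditioning window, targets (time-flipped) |
| Forward: student logits; probs = sigmoid(logits) |
| Loss: BCE-with-logits across all backtracked slices |
| After warm-up: teacher EMA gives teacher predictions; add the weighted consistency term |
| Update: mixed-precision backprop, gradient clipping, EMA update |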
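The loss assembled in one training step can be sketched as follows. The supervised part is a numerically stable BCE-with-logits averaged over every cell of every backtracked slice. The consistency part is written here as a mean squared difference between student and teacher probabilities, which is an assumption for illustration because the exact form of the consistency term is not recoverable from the text; the function names are likewise hypothetical.

```python
import math


def bce_with_logits(logit, target):
    """Stable binary cross-entropy on a raw logit: max(z,0) - z*y + log(1+e^-|z|)."""
    return max(logit, 0.0) - logit * target + math.log1p(math.exp(-abs(logit)))


def sigmoid(z):
    """Logistic function, written to avoid overflow for large |z|."""
    if z >= 0.0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def training_loss(student_logits, targets, teacher_logits, cons_weight):
    """Supervised BCE over all slices plus a weighted consistency term.

    Each argument is a list of slices, and each slice is a flat list of cells.
    The teacher logits are treated as constants (no gradient flows to them).
    """
    sup, cons, count = 0.0, 0.0, 0
    for s_slice, y_slice, t_slice in zip(student_logits, targets, teacher_logits):
        for s, y, t in zip(s_slice, y_slice, t_slice):
            sup += bce_with_logits(s, y)
            cons += (sigmoid(s) - sigmoid(t)) ** 2   # assumed consistency form
            count += 1
    return sup / count + cons_weight * cons / count


student = [[2.0, -1.0], [0.5, -3.0]]      # two slices, two cells each (toy values)
targets = [[1.0, 0.0], [1.0, 0.0]]
teacher = [[1.5, -1.5], [1.0, -2.0]]
loss_warmup = training_loss(student, targets, teacher, cons_weight=0.0)
loss_ramped = training_loss(student, targets, teacher, cons_weight=0.1)
```

During warm-up the weight is zero and the loss reduces to the supervised term; once the weight is ramped up, any disagreement with the teacher adds a small penalty.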

3.3. Dual-Source Stress Test (Zero-Shot)

We frame the dual-source experiment as a stress test under additive transport assumptions. The procedure is parameter-free, its assumptions and safeguards are stated explicitly, and it is intended to probe qualitative behaviour rather than to assert fully general multi-source performance in the absence of solver-backed multi-release data.

Scope and assumptions. We cast dual-source localization as a zero-shot stress test under passive advection–diffusion: (i) approximate linear superposition over the short observation window; (ii) after backtracking, the oldest slice exhibits at least moderate peak separation so that the global maximum is attributable to a single emitter; and (iii) subtraction uses an isotropic template by default, or an oriented/elliptical template when a coarse wind prior is available.

Safeguards. We clamp residuals to be non-negative, apply small-radius NMS before the second pick, and flag “uncertain” when the top-two probability margin on the oldest slice is small, in which case we defer a hard two-source decision.

Optional upgrades (kept off by default). A compact two-Gaussian mixture fit or a short unrolled residual decoder trained on synthetic superpositions can improve deblending under stronger overlap while remaining near-linear at our lattice size and leaving the backbone unchanged.

Real incidents may involve more than one emitter. Because the synthetic generator is additive (linear superposition), we keep the backbone unchanged and apply a lightweight, physics-aware postprocess on the oldest slice. We treat the oldest slice as a superposition of two diffusion kernels and perform a two-peak subtraction: (i) locate the global maximum, subtract a fixed isotropic heat-kernel template scaled by that peak, and clamp to zero; (ii) repeat once on the residual and return the two peak coordinates. This deterministic, parameter-free procedure introduces no learned weights, runs in time linear in the number of grid cells, and provides a practical zero-shot baseline for dual-source localization without retraining.
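The two-peak subtraction can be sketched in a few lines. The isotropic Gaussian used as the heat-kernel template, its width `sigma=1.0`, and the helper names are assumptions for illustration; the text only states that the template is fixed and isotropic.

```python
import math


def heat_kernel(shape, center, sigma):
    """Isotropic Gaussian template with unit peak at `center` (assumed form)."""
    rows, cols = shape
    r0, c0 = center
    return [[math.exp(-((r - r0) ** 2 + (c - c0) ** 2) / (2.0 * sigma ** 2))
             for c in range(cols)] for r in range(rows)]


def argmax2d(grid):
    """Return the (row, col) of the largest value in a 2D grid."""
    cells = ((r, c) for r in range(len(grid)) for c in range(len(grid[0])))
    return max(cells, key=lambda rc: grid[rc[0]][rc[1]])


def two_peak_subtraction(grid, sigma=1.0):
    """Pick the global maximum, subtract a scaled template, clamp, pick again."""
    first = argmax2d(grid)
    peak = grid[first[0]][first[1]]
    template = heat_kernel((len(grid), len(grid[0])), first, sigma)
    residual = [[max(0.0, v - peak * t) for v, t in zip(v_row, t_row)]
                for v_row, t_row in zip(grid, template)]
    second = argmax2d(residual)
    return first, second


# Synthetic oldest slice: two well-separated sources with amplitudes 1.0 and 0.7.
shape = (9, 10)
k1 = heat_kernel(shape, (2, 2), 1.0)
k2 = heat_kernel(shape, (6, 7), 1.0)
oldest = [[a + 0.7 * b for a, b in zip(r1, r2)] for r1, r2 in zip(k1, k2)]
first, second = two_peak_subtraction(oldest, sigma=1.0)
```

On this well-separated toy field the two picks coincide with the two source centres. Each step is a single pass over the grid, which is consistent with the linear-time claim; strongly overlapping lobes would violate the peak-separation assumption stated above and are not handled by this sketch.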

Multi-source extension (documented). For more than two sources, we apply a sequential K-peak procedure on the oldest slice: (1) pick the current global maximum; (2) subtract a scaled template (isotropic by default, oriented if a coarse wind prior is available); (3) clamp residuals to non-negative; (4) apply small-radius NMS; (5) repeat until a residual mass criterion is met. We stop when the residual mass falls below a preset fraction of the original mass or after a maximum number of picks. A minimum-separation guard (≥1 cell) and a top-two margin check prevent over-segmentation. This runs in linear time per pick and leaves the backbone unchanged.
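A compact sketch of the sequential loop with its stopping rule is shown below. The Gaussian template, `sigma`, the residual-mass fraction of 0.1, the cap of four picks, and the NMS radius of one cell are all placeholder assumptions, and the top-two margin check is omitted for brevity.

```python
import math


def pick_sources(grid, sigma=1.0, mass_fraction=0.1, max_picks=4, nms_radius=1):
    """Sequential peak picking with template subtraction, clamping, and NMS."""
    rows, cols = len(grid), len(grid[0])
    residual = [row[:] for row in grid]
    total_mass = sum(map(sum, grid))
    picks = []
    # (5) stop on low residual mass or when the pick budget is exhausted
    while len(picks) < max_picks and sum(map(sum, residual)) > mass_fraction * total_mass:
        # (1) current global maximum of the residual
        cells = ((r, c) for r in range(rows) for c in range(cols))
        r0, c0 = max(cells, key=lambda rc: residual[rc[0]][rc[1]])
        peak = residual[r0][c0]
        if peak <= 0.0:
            break
        picks.append((r0, c0))
        for r in range(rows):
            for c in range(cols):
                d2 = (r - r0) ** 2 + (c - c0) ** 2
                # (2) subtract the scaled template and (3) clamp to non-negative
                value = max(0.0, residual[r][c] - peak * math.exp(-d2 / (2.0 * sigma ** 2)))
                # (4) small-radius NMS: suppress the neighbourhood of the pick,
                # which also enforces a minimum separation between picks
                if max(abs(r - r0), abs(c - c0)) <= nms_radius:
                    value = 0.0
                residual[r][c] = value
    return picks


def synthetic_grid(shape, sources, sigma=1.0):
    """Additive superposition of Gaussian lobes: sources = [((row, col), amplitude)]."""
    rows, cols = shape
    return [[sum(a * math.exp(-((r - r0) ** 2 + (c - c0) ** 2) / (2.0 * sigma ** 2))
                 for (r0, c0), a in sources)
             for c in range(cols)] for r in range(rows)]


field = synthetic_grid((12, 12), [((2, 2), 1.0), ((2, 9), 0.8), ((9, 5), 0.6)])
picks = pick_sources(field)
```

On this toy field the loop stops after three picks because the residual mass drops below the chosen fraction, even though the budget would allow a fourth pick. Each pick costs one pass over the grid.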

Optional refinements (kept off by default). For overlapping lobes, a K-component Gaussian mixture may be fitted from the subtractor initialization (means at picked peaks; amplitudes from subtracted scales; optionally shared covariance) and refined by a few EM steps. Alternatively, a tiny residual decoder (a shallow conv head on the residual) trained on synthetic superpositions improves deblending while preserving the linear-time profile and leaving the directional operators/gating unchanged.

In the multi-source case, we expose a per-pick confidence based on the local top-two margin and the residual mass curve; if either falls below its threshold, the routine halts further picks and flags uncertain. This confidence is combined with predictive entropy into a single actionable score.

7. Conclusions and Future Work

We presented Recursive Backtracking with Visual Mamba (RBVM), a compact, Visual-Mamba-inspired visual state-space framework that infers the origin of a gas release from a short conditioning window. The backbone aggregates causal, depthwise directional operators along the spatial and temporal axes via an upwind gate (DirGate) and uses pre-norm LayerNorm with a small LayerScale and a layer-indexed, depth-weighted DropPath for stable stacking. Because grouped depthwise convolutions dominate the cost, compute and memory scale linearly with the spatiotemporal extent, yielding predictable behaviour as the look-back horizon increases.

Our contributions are threefold and mutually reinforcing. Architecturally, we replace heavy spatiotemporal mixing with axis-wise, one-sided depthwise operators that preserve physical causality, while DirGate learns a convex directional mixture that follows the flow and remains hardware-friendly. In training/inference, a simple recipe—time flip alignment, BCE-with-logits across all backtracked slices (including the oldest), and an EMA-based consistency term—produces sharp, well-calibrated peaks on the evaluation slice without complicating optimization. Empirically, across a field corpus with urban channelling and wind variability and a strictly causal synthetic benchmark, RBVM improves geometry-aware localization metrics with a small parameter budget relative to attention-centric trackers. The six-direction variant (with a backward temporal branch) proves more robust than five directions in settings with meandering winds, and in a dual-source stress test, a parameter-free, physics-aware two-peak subtraction on the oldest slice provides a practical zero-shot extension without changing the backbone.

To maintain clarity and comparability, we scoped evaluation around two complementary settings. Field analyses follow an as-is protocol, and synthetic experiments follow a controlled setup used for sensitivity studies and the dual-source stress test. This design yields clean, like-for-like baselines and forms a springboard for broader field corpora and additional synthetic variants.

Looking ahead, we aim to broaden RBVM’s applicability while preserving its linear-time core. Priorities include handling sparser or irregular observations, strengthening generalization across diverse wind and terrain conditions with physics-aware extensions, scaling to larger domains, and improving multi-source separation—all within a streaming, hardware-friendly pipeline.

By marrying linear-time, directional state-space modelling with visual grid inputs, RBVM offers a practical alternative to attention-centric trackers and advances toward real-time decision support in CBRN emergencies. Beyond this domain, we view RBVM as a compact, directionally causal backbone for inverse inference on transported fields, useful more broadly for spatiotemporal backtracking tasks in environmental monitoring and urban analytics. Future work will pursue controlled comparisons against solver-backed inversions under harmonised I/O and budgets, and explore light hybridisation via auxiliary priors or gating biases while retaining linear-time inference.