Adaptive Ensemble Machine Learning Framework for Proactive Blockchain Security

Abstract

1. Introduction

2. Literature Review

3. Methodology

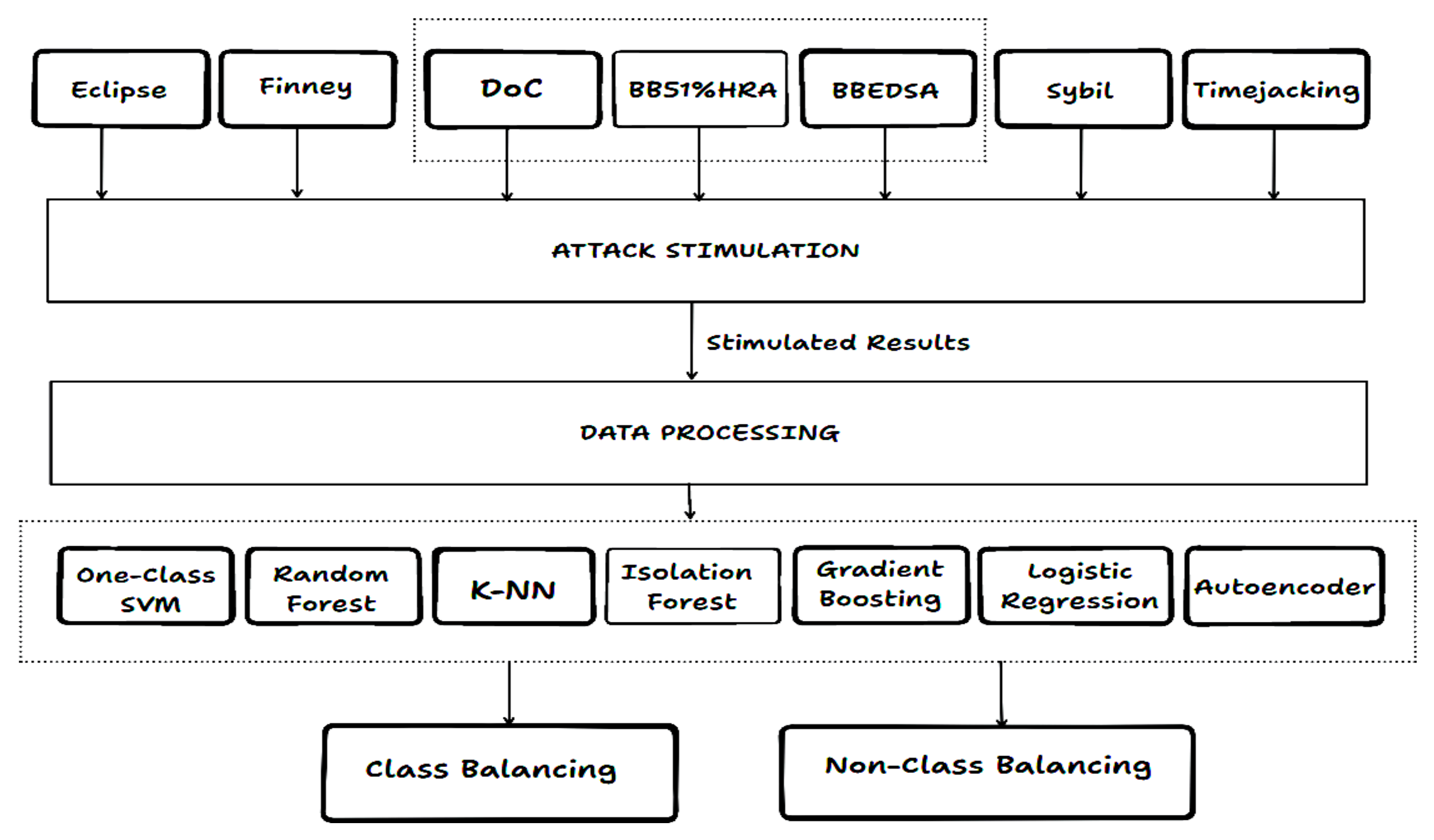

3.1. Research Model

3.2. Blockchain Attacks Used

3.3. Data Collection and Pre-Processing

3.4. Machine Learning and Hybrid Models Used

- One-Class Support Vector Machine (OCSVM) (Unsupervised).

- Random Forests (RF) (Supervised).

- K-Nearest Neighbors (KNN) (Supervised).

- Isolation Forest (IF) (Unsupervised).

- Gradient Boosting (GB) (Supervised).

- Logistic Regression (LR) (Supervised).

- Autoencoder (Unsupervised).

3.5. Experimental Design for Model Evaluation

3.6. Performance Metrics

- Accuracy measures the proportion of correctly classified instances among all samples, but it can be less informative when classes are imbalanced.

- Precision is the ratio of true positives to all positive predictions, indicating how many of the flagged instances are actually positive.

- Recall is the ratio of true positives to all actual positives, reflecting the model’s capacity to detect all positive instances.

- F1 score is the harmonic mean of precision and recall, balancing the two; it is particularly useful for imbalanced datasets.

- Anomaly Detection percentage (AD%) is the proportion of test samples that the model flags as anomalies (reported in the tables as a fraction between 0 and 1), indicating how readily the model raises alerts.
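The definitions above can be made concrete with a minimal sketch. It assumes binary labels where 1 marks an attack (anomaly) and 0 marks normal activity; the function names and the toy labels are illustrative and are not taken from the study's code.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, FP, FN, TN for binary labels (1 = anomaly, 0 = normal)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn


def evaluate(y_true, y_pred):
    """Return accuracy, precision, recall, F1, and the anomaly-flag rate (AD)."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / denom if denom else 0.0,
        # AD: share of all test samples that were flagged as anomalies.
        "ad": (tp + fp) / total,
    }


# Toy example (hypothetical labels, not data from the study).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
metrics = evaluate(y_true, y_pred)
print(metrics)
```

Note that AD depends only on the predictions, so a model that flags every sample reaches AD = 1.00 regardless of how many of those flags are correct.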

3.7. Computer Configuration

4. Results

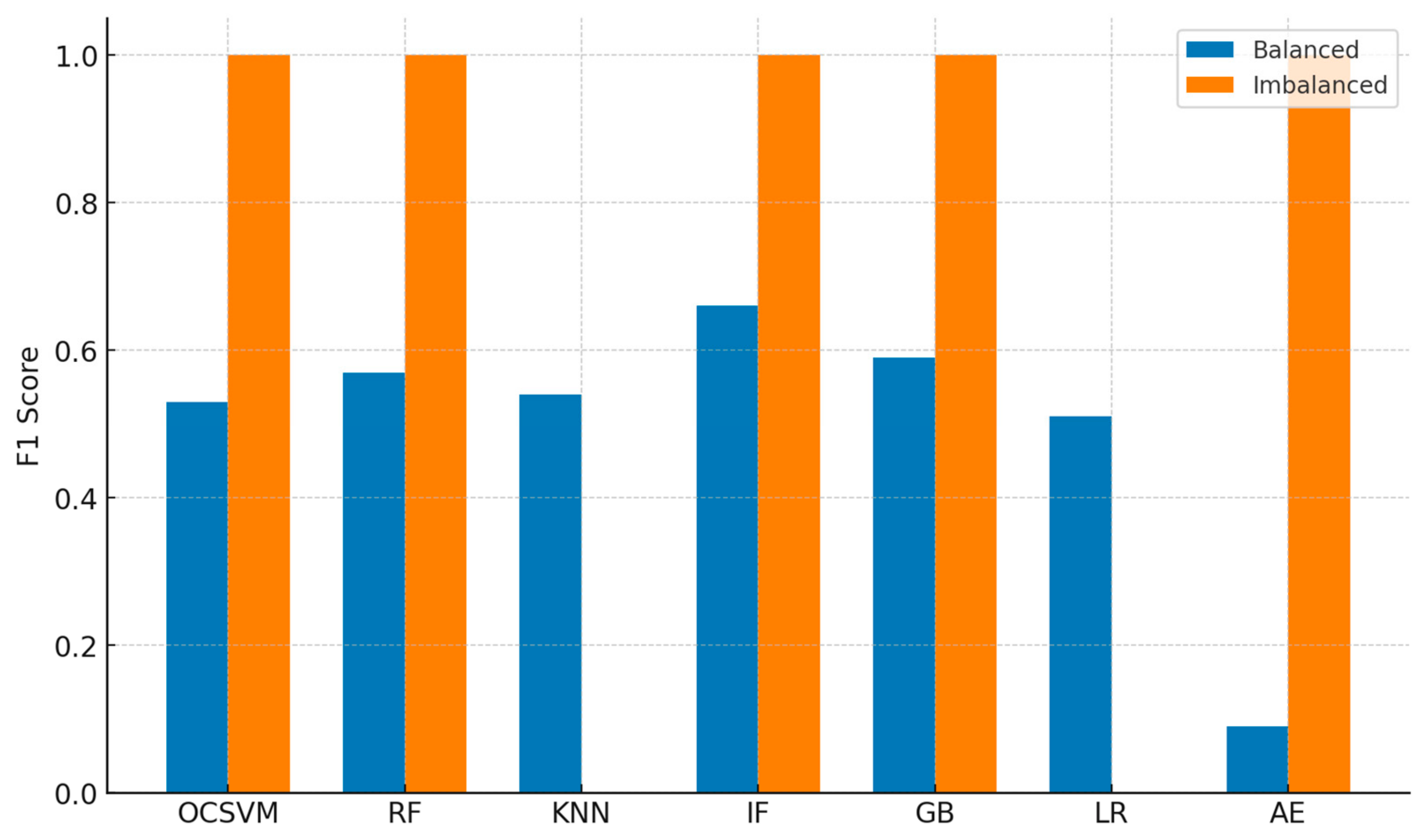

4.1. Initial Single Dataset Analysis (Baseline)

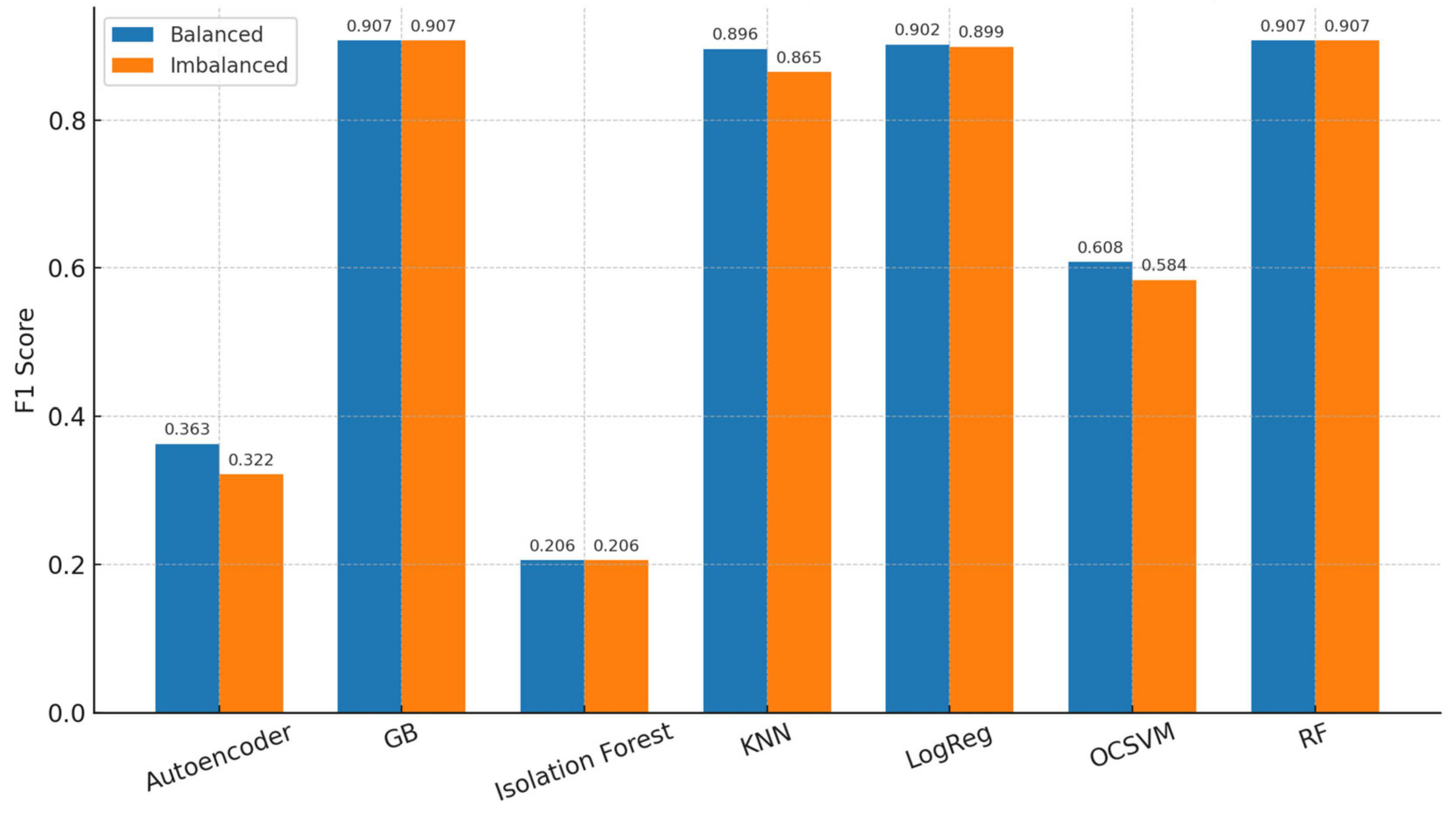

4.2. Cross-Validation (CV) Performance

4.3. Per-Attack Performance

4.4. Threshold Sensitivity (Unsupervised)

4.5. Cross Dataset Validation (Datasets A and B)

4.6. Computational Efficiency

4.7. Combined Models (Ensemble and Adaptive Defense Performance)

4.7.1. Ensemble Models Performance

4.7.2. ASR Policy Effects

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AD% | Anomaly Detection Percentage |
| ADASYN | Adaptive Synthetic Sampling |
| AI | Artificial Intelligence |
| ASR | Adaptive Security Response |
| AutoML | Automated Machine Learning |
| BAR | Block Access Restriction |
| BBA | Black Bird Attack |
| BBEDSA | Black Bird Embedded Double Spending Attack |
| CV | Cross-Validation |
| DoC | Denial-of-Chain |
| F1 | F1 Score |
| FN | False Negative |
| FP | False Positive |
| GB | Gradient Boosting |
| GPU | Graphics Processing Unit |
| HR | Hash Rate |
| IF | Isolation Forest |
| IQR | Interquartile Range |
| KNN | K-Nearest Neighbors |
| LR | Logistic Regression |
| ML | Machine Learning |
| OCSVM | One-Class Support Vector Machine |
| RAM | Random Access Memory |
| RF | Random Forest |
| SD | Standard Deviation |
| SMOTE | Synthetic Minority Oversampling Technique |
| SVM | Support Vector Machine |
| TN | True Negative |
| TP | True Positive |
| VRAM | Video Random Access Memory |

References

1. Ahmed, M.R.; Islam, M.; Shatabda, S.; Islam, S. Blockchain-Based Identity Management System and Self-Sovereign Identity Ecosystem: A Comprehensive Survey. IEEE Access 2022, 10, 113436–113481.

2. Laroiya, C.; Saxena, D.; Komalavalli, C. Applications of Blockchain Technology. In Handbook of Research on Blockchain Technology; Krishnan, S., Balas, V.E., Julie, E.G., Robinson, H., Balaji, S., Kumar, R., Eds.; Academic Press (Elsevier): Cambridge, MA, USA, 2020; pp. 213–243.

3. Guo, H.; Yu, X. A Survey on Blockchain Technology and Its Security. Blockchain Res. Appl. 2022, 3, 100067.

4. Uddin, M.; Obaidat, M.; Manickam, S.; Shams, A.; Dandoush, A.; Ullah, H.; Ullah, S.S. Exploring the Convergence of Metaverse, Blockchain, and AI: Opportunities, Challenges, and Future Research Directions. WIREs Data Min. Knowl. Discov. 2024, 14, e1556.

5. Chen, Y.; Chen, H.; Zhang, Y.; Han, M.; Siddula, M.; Cai, Z. A Survey on Blockchain Systems: Attacks, Defenses, and Privacy Preservation. High-Confid. Comput. 2022, 2, 100048.

6. Zhang, P.; Schmidt, D.C.; White, J.; Lenz, G. Blockchain Technology Use Cases in Healthcare. In Advances in Computers; Zelkowitz, M.V., Ed.; Academic Press: Cambridge, MA, USA, 2018; Volume 111, pp. 1–41.

7. Li, M.; Zeng, L.; Zhao, L.; Yang, R.; An, D.; Fan, H. Blockchain-Watermarking for Compressive Sensed Images. IEEE Access 2021, 9, 56457–56467.

8. Singh, S.; Hosen, A.S.M.S.; Yoon, B. Blockchain Security Attacks, Challenges, and Solutions for the Future Distributed IoT Network. IEEE Access 2021, 9, 13938–13959.

9. Badawi, E.; Jourdan, G.V. Cryptocurrencies Emerging Threats and Defensive Mechanisms: A Systematic Literature Review. IEEE Access 2020, 8, 200021–200037.

10. Grobys, K. When the Blockchain Does Not Block: On Hackings and Uncertainty in the Cryptocurrency Market. Quant. Financ. 2021, 21, 1267–1279.

11. Bard, D.A.; Kearney, J.J.; Perez-Delgado, C.A. Quantum Advantage on Proof of Work. Array 2022, 15, 100225.

12. Junejo, Z.; Hashmani, M.A.; Alabdulatif, A.; Memon, M.M.; Jaffari, S.R.; Abdullah, M.Z. RZee: Cryptographic and Statistical Model for Adversary Detection and Filtration to Preserve Blockchain Privacy. J. King Saud Univ. - Comput. Inf. Sci. 2022, 34, 7885–7910.

13. Agarwal, R.; Barve, S.; Shukla, S.K. Detecting Malicious Accounts in Permissionless Blockchains Using Temporal Graph Properties. Appl. Netw. Sci. 2021, 6, 9.

14. Ali, H.; Ahmad, J.; Jaroucheh, Z.; Papadopoulos, P.; Pitropakis, N.; Lo, O.; Abramson, W.; Buchanan, W.J. Trusted Threat Intelligence Sharing in Practice and Performance Benchmarking through the Hyperledger Fabric Platform. Entropy 2022, 24, 1379.

15. Saad, M.; Spaulding, J.; Njilla, L.; Kamhoua, C.; Shetty, S.; Nyang, D.; Mohaisen, D. Exploring the Attack Surface of Blockchain: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2020, 22, 1977–2008.

16. Badawi, E.; Jourdan, G.V.; Onut, I.V. The “Bitcoin Generator” Scam. Blockchain Res. Appl. 2022, 3, 100084.

17. Bordel, B.; Alcarria, R.; Robles, T. Denial of Chain: Evaluation and Prediction of a Novel Cyberattack in Blockchain-Supported Systems. Future Gener. Comput. Syst. 2021, 116, 426–439.

18. Xing, Z.; Chen, Z. Black Bird Attack: A Vital Threat to Blockchain Technology. Procedia Comput. Sci. 2022, 198, 556–563.

19. Wang, Q.; Li, R.; Zhan, L. Blockchain Technology in the Energy Sector: From Fundamentals to Applications. Comput. Sci. Rev. 2021, 39, 100362.

20. Salle, A.L.; Kumar, A.; Jevtic, P.; Boscovic, D. Joint Modeling of Hyperledger Fabric and Sybil Attack: Petri Net Approach. Simul. Model. Pract. Theory 2022, 122, 102674.

21. Hong, H.; Woo, S.; Park, S.; Lee, J.; Lee, H. CIRCUIT: A JavaScript Memory Heap-Based Approach for Precisely Detecting Cryptojacking Websites. IEEE Access 2022, 10, 95356–95368.

22. Albakri, A.; Mokbel, C. Convolutional Neural Network Biometric Cryptosystem for the Protection of the Blockchain’s Private Key. Procedia Comput. Sci. 2019, 160, 235–240.

23. Dua, M.; Sadhu, A.; Jindal, A.; Mehta, R. A Hybrid Noise Robust Model for Multireplay Attack Detection in ASV Systems. Biomed. Signal Process. Control 2022, 74, 103517.

24. Campos, G.O.; Zimek, A.; Sander, J.; Campello, R.J.G.B.; Micenková, B.; Schubert, E.; Assent, I.; Houle, M.E. On the Evaluation of Unsupervised Outlier Detection: Measures, Datasets, and an Empirical Study. Data Min. Knowl. Discov. 2016, 30, 891–927.

25. Xing, Z.; Chen, Z. Using BAR Switch to Prevent Black Bird Embedded Double Spending Attack. Procedia Comput. Sci. 2022, 198, 829–836.

26. Saxena, D.; Cao, J. Generative Adversarial Networks (GANs): Challenges, Solutions, and Future Directions. ACM Comput. Surv. 2021, 54, 1–42.

27. Mahmood, M.; Dabagh, A. Blockchain Technology and Internet of Things: Review, Challenge and Security Concern. Int. J. Power Electron. Drive Syst. 2023, 13, 718–735.

28. Hamdi, A.; Fourati, L.C.; Ayed, S. Vulnerabilities and Attacks Assessments in Blockchain 1.0, 2.0 and 3.0: Tools, Analysis and Countermeasures. Int. J. Inf. Secur. 2023, 23, 713–757.

29. Allende, M.; León, D.L.; Cerón, S.; Pareja, A.; Pacheco, E.; Leal, A.; Silva, M.D.; Pardo, A.; Jones, D.; Worrall, D.J.; et al. Quantum-Resistance in Blockchain Networks. Sci. Rep. 2023, 13, 5664.

| Feature | Description |
|---|---|
| hash_rate | Computational power assigned to a node |
| malicious_transactions_added | Indicates whether a smart-contract audit allowed a bad transaction through (1 = yes, 0 = no) |
| nodes_after_sybil_attack | Number of nodes remaining after a Sybil attack |
| nodes_after_eclipse_attack | Number of nodes remaining after an Eclipse attack |
| block_added_after_finney | Finney attack success indicator (1 = fraudulent block added, 0 = failed) |
| transaction_authorized_finney | Whether the Finney attack transaction was authorized (1 = yes, 0 = no) |
| blocks_before_attack | Chain length immediately before an attack |
| blocks_after_attack | Chain length immediately after an attack |
| doc_attack_identified | Target variable: DoC attack detection (1 = detected, 0 = not detected) |

| ML Models | Hyperparameters |
|---|---|
| One-Class SVM | kernel: rbf, nu: 0.5, gamma: scale |
| Random Forest | n_estimators: 200, max_depth: 10, criterion: gini |
| KNN | n_neighbors: 5, weights: uniform, algorithm: auto |
| Isolation Forest | n_estimators: 200, max_samples: auto, contamination: 0.1 |
| Gradient Boosting | n_estimators: 150, learning_rate: 0.05, max_depth: 3 |
| Logistic Regression | penalty: l2, C: 1.0, solver: lbfgs |
| Autoencoder | Hidden Layers: [64, 32, 16, 32, 64], Activation: relu, Optimizer: adam, Loss: mse, Epochs: 100, Batch Size: 16 |
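As a rough illustration of how the settings in this table could be wired up, the sketch below stores them as keyword-argument dictionaries. The parameter names match scikit-learn's estimator arguments, but the use of scikit-learn is an assumption of this sketch, as are the names `HYPERPARAMS` and `build_models`. The autoencoder is left out because the deep learning framework is not stated.

```python
# Hyperparameters transcribed from the table above. Mapping them onto
# scikit-learn keyword arguments is an assumption of this sketch.
HYPERPARAMS = {
    "One-Class SVM": {"kernel": "rbf", "nu": 0.5, "gamma": "scale"},
    "Random Forest": {"n_estimators": 200, "max_depth": 10, "criterion": "gini"},
    "KNN": {"n_neighbors": 5, "weights": "uniform", "algorithm": "auto"},
    "Isolation Forest": {"n_estimators": 200, "max_samples": "auto", "contamination": 0.1},
    "Gradient Boosting": {"n_estimators": 150, "learning_rate": 0.05, "max_depth": 3},
    # The L2 penalty is scikit-learn's default, so it is not passed explicitly.
    "Logistic Regression": {"C": 1.0, "solver": "lbfgs"},
}


def build_models():
    """Instantiate the six non-neural models; requires scikit-learn."""
    # Imports are deferred so the table can be inspected without scikit-learn.
    from sklearn.ensemble import (
        GradientBoostingClassifier,
        IsolationForest,
        RandomForestClassifier,
    )
    from sklearn.linear_model import LogisticRegression
    from sklearn.neighbors import KNeighborsClassifier
    from sklearn.svm import OneClassSVM

    classes = {
        "One-Class SVM": OneClassSVM,
        "Random Forest": RandomForestClassifier,
        "KNN": KNeighborsClassifier,
        "Isolation Forest": IsolationForest,
        "Gradient Boosting": GradientBoostingClassifier,
        "Logistic Regression": LogisticRegression,
    }
    return {name: classes[name](**params) for name, params in HYPERPARAMS.items()}
```

Calling `build_models()` returns unfitted estimators keyed by the model names used in the table.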

| Model | Accuracy | Precision | Recall | F1 | AD% |
|---|---|---|---|---|---|
| One-Class SVM | 0.52 | 0.51 | 0.55 | 0.53 | 0.53 |
| Random Forest | 0.50 | 0.49 | 0.65 | 0.57 | 0.65 |
| KNN | 0.52 | 0.50 | 0.59 | 0.54 | 0.57 |
| Isolation Forest | 0.48 | 0.49 | 0.96 | 0.66 | 1.00 |
| Gradient Boosting | 0.49 | 0.48 | 0.76 | 0.59 | 0.76 |
| Logistic Regression | 0.52 | 0.52 | 0.54 | 0.51 | 0.54 |
| Autoencoder | 0.51 | 0.50 | 0.05 | 0.09 | 0.05 |

| Model | Accuracy | Precision | Recall | F1 | AD% |
|---|---|---|---|---|---|
| One-Class SVM | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Random Forest | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| KNN | 0.00 | 0.50 | 0.50 | 0.00 | 0.00 |
| Isolation Forest | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Gradient Boosting | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| Logistic Regression | 0.00 | 0.50 | 0.50 | 0.00 | 0.00 |
| Autoencoder | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |

| Model | F1_Mean | F1_Std | Acc_Mean | Acc_Std | Fit_Median (s) | Pred_Median (s) | Size_Median (MB) |
|---|---|---|---|---|---|---|---|
| Autoencoder | 0.363 | 0.163 | 0.442 | 0.151 | 0.184 | 0.020 | 2.000 |
| Gradient Boosting | 0.907 | 0.055 | 0.878 | 0.078 | 0.416 | 0.003 | 0.168 |
| Isolation Forest | 0.206 | 0.154 | 0.479 | 0.073 | 2.061 | 0.032 | 1.104 |
| KNN | 0.896 | 0.075 | 0.868 | 0.086 | 0.002 | 0.009 | 0.025 |
| Logistic Regression | 0.902 | 0.056 | 0.872 | 0.08 | 0.009 | 0.001 | 0.001 |
| One-Class SVM | 0.608 | 0.195 | 0.591 | 0.138 | 0.003 | 0.002 | 0.008 |
| Random Forest | 0.907 | 0.055 | 0.878 | 0.078 | 1.607 | 0.161 | 0.245 |

| Model | F1_Mean | F1_Std | Acc_Mean | Acc_Std | Fit_Median (s) | Pred_Median (s) | Size_Median (MB) |
|---|---|---|---|---|---|---|---|
| Autoencoder | 0.322 | 0.15 | 0.418 | 0.133 | 0.181 | 0.020 | 2.000 |
| Gradient Boosting | 0.907 | 0.055 | 0.878 | 0.078 | 0.415 | 0.003 | 0.168 |
| Isolation Forest | 0.206 | 0.156 | 0.473 | 0.079 | 2.07 | 0.032 | 1.048 |
| KNN | 0.865 | 0.118 | 0.848 | 0.104 | 0.002 | 0.009 | 0.022 |
| Logistic Regression | 0.899 | 0.054 | 0.867 | 0.078 | 0.009 | 0.001 | 0.001 |
| One-Class SVM | 0.584 | 0.226 | 0.563 | 0.174 | 0.003 | 0.002 | 0.007 |
| Random Forest | 0.907 | 0.055 | 0.878 | 0.078 | 1.604 | 0.161 | 0.245 |

| Model | F1_Mean_Bal | F1_Std_Bal | F1_Mean_Imbal | F1_Std_Imbal | F1_Delta_Bal_Minus_Imbal |
|---|---|---|---|---|---|
| Gradient Boosting | 0.907 | 0.055 | 0.907 | 0.055 | 0.000 |
| Random Forest | 0.907 | 0.055 | 0.907 | 0.055 | 0.000 |
| Logistic Regression | 0.902 | 0.056 | 0.899 | 0.054 | 0.003 |
| KNN | 0.896 | 0.075 | 0.865 | 0.118 | 0.031 |
| One-Class SVM | 0.608 | 0.195 | 0.584 | 0.226 | 0.023 |
| Autoencoder | 0.363 | 0.163 | 0.322 | 0.15 | 0.041 |
| Isolation Forest | 0.206 | 0.154 | 0.206 | 0.156 | −0.001 |

| Model | Paired_T_Pvalue | Wilcoxon_Pvalue | Pairs_N |
|---|---|---|---|
| Autoencoder | N/A | N/A | 35 |
| Gradient Boosting | 0.083 | 0.083 | 35 |
| Isolation Forest | N/A | N/A | 35 |
| KNN | 0.16 | 0.157 | 35 |
| Logistic Regression | 0.044 | 0.046 | 35 |
| One-Class SVM | 1 | 1 | 35 |
| Random Forest | 0.083 | 0.083 | 35 |

| Model | Attack_Type | F1_Mean | F1_Std |
|---|---|---|---|
| Gradient Boosting | DoC | 0.95 | 0.04 |
| Gradient Boosting | Eclipse | 0.94 | 0.05 |
| Gradient Boosting | Hashrate | 0.96 | 0.03 |
| Gradient Boosting | Smart Contract | 0.92 | 0.06 |
| Gradient Boosting | Sybil | 0.93 | 0.05 |
| Gradient Boosting | Finney | 0.60 | 0.12 |
| KNN | DoC | 0.91 | 0.07 |
| KNN | Eclipse | 0.90 | 0.08 |
| KNN | Hashrate | 0.92 | 0.06 |
| KNN | Smart Contract | 0.89 | 0.09 |
| KNN | Sybil | 0.90 | 0.08 |
| KNN | Finney | 0.56 | 0.15 |
| Logistic Regression | DoC | 0.93 | 0.06 |
| Logistic Regression | Eclipse | 0.92 | 0.07 |
| Logistic Regression | Hashrate | 0.94 | 0.05 |
| Logistic Regression | Smart Contract | 0.90 | 0.08 |
| Logistic Regression | Sybil | 0.91 | 0.07 |
| Logistic Regression | Finney | 0.57 | 0.14 |
| Random Forest | DoC | 0.94 | 0.05 |
| Random Forest | Eclipse | 0.93 | 0.06 |
| Random Forest | Hashrate | 0.95 | 0.04 |
| Random Forest | Smart Contract | 0.91 | 0.07 |
| Random Forest | Sybil | 0.92 | 0.06 |
| Random Forest | Finney | 0.58 | 0.13 |

| Model | Best_F1_Mean | Best_F1_Std | Best_Q_Median | Best_Q_IQR |
|---|---|---|---|---|
| Isolation Forest | 0.672 | 0.064 | 50 | 20 |
| One-Class SVM | 0.79 | 0.066 | 50 | 0 |
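One way to read this table is as the outcome of a threshold sweep over anomaly scores. The sketch below assumes that Best_Q denotes a percentile cut-off on those scores, which is not confirmed by the text available here. The grid, the function names, and the toy scores are all hypothetical.

```python
def percentile(values, q):
    """Nearest-rank percentile of `values` for an integer q in 1..100."""
    ordered = sorted(values)
    rank = max(1, (q * len(ordered) + 99) // 100)  # integer ceiling of q*n/100
    return ordered[rank - 1]


def f1_score(y_true, y_pred):
    """F1 for binary labels, with 1 = anomaly."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def best_percentile(scores, y_true, grid=range(10, 100, 10)):
    """Sweep percentile cut-offs and return (best_q, best_f1).

    Samples scoring strictly above the q-th percentile are flagged as anomalies.
    On ties, the lowest q in the grid is kept.
    """
    best_q, best_f1 = None, -1.0
    for q in grid:
        cut = percentile(scores, q)
        y_pred = [1 if s > cut else 0 for s in scores]
        f1 = f1_score(y_true, y_pred)
        if f1 > best_f1:
            best_q, best_f1 = q, f1
    return best_q, best_f1


# Toy anomaly scores (hypothetical): higher means more anomalous.
scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]
y_true = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
print(best_percentile(scores, y_true))
```

Under this reading, repeating the sweep on each fold and taking the median and interquartile range of the selected q would yield columns like Best_Q_Median and Best_Q_IQR.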

| Model | DatasetA_Balanced | DatasetA_Imbalanced | DatasetB_Balanced | DatasetB_Imbalanced |
|---|---|---|---|---|
| Gradient Boosting | 0.893 ± 0.153 | 0.865 ± 0.161 | 0.907 ± 0.055 | 0.907 ± 0.055 |
| KNN | 0.865 ± 0.161 | 0.846 ± 0.164 | 0.896 ± 0.075 | 0.865 ± 0.118 |
| Logistic Regression | 0.912 ± 0.144 | 0.874 ± 0.159 | 0.902 ± 0.056 | 0.899 ± 0.054 |
| Random Forest | 0.893 ± 0.153 | 0.865 ± 0.161 | 0.907 ± 0.055 | 0.907 ± 0.055 |

| Model | Feature Extraction (s) | Fit Time (s) | Predict Time (s) | Peak Memory (MB) | Model Size (MB) |
|---|---|---|---|---|---|
| Logistic Regression | 0.013 | 0.010 | 0.001 | 15 | 0.001 |
| KNN | 0.013 | 0.002 | 0.009 | 18 | 0.025 |
| Gradient Boosting | 0.013 | 0.420 | 0.003 | 95 | 0.170 |
| Random Forest | 0.013 | 1.600 | 0.160 | 120 | 0.245 |
| One-Class SVM | 0.013 | 0.050 | 0.004 | 40 | 0.008 |
| Isolation Forest | 0.013 | 2.050 | 0.032 | 110 | 1.100 |
| Autoencoder | 0.013 | 3.200 | 0.020 | 250 | 2.000 |
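Quantities like those in this table can be collected with standard-library tooling. The sketch below is one possible measurement harness, not the procedure used in the study: `MajorityClassifier` is a hypothetical stand-in model, `profile_model` is an illustrative name, and `tracemalloc` only tracks memory allocated by Python itself, so it would under-report native allocations made by numerical libraries.

```python
import pickle
import time
import tracemalloc


class MajorityClassifier:
    """Hypothetical stand-in model: always predicts the most frequent training label."""

    def fit(self, X, y):
        labels = list(y)
        self.label_ = max(set(labels), key=labels.count)
        return self

    def predict(self, X):
        return [self.label_ for _ in X]


def profile_model(model, X_train, y_train, X_test):
    """Return fit time, predict time, peak traced memory, and serialized size."""
    tracemalloc.start()

    start = time.perf_counter()
    model.fit(X_train, y_train)
    fit_s = time.perf_counter() - start

    start = time.perf_counter()
    model.predict(X_test)
    predict_s = time.perf_counter() - start

    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    # Size of the fitted state only (the attributes set by fit), once pickled.
    size_bytes = len(pickle.dumps(vars(model)))

    return {
        "fit_s": fit_s,
        "predict_s": predict_s,
        "peak_mb": peak_bytes / 1e6,
        "size_mb": size_bytes / 1e6,
    }


report = profile_model(MajorityClassifier(), [[0], [1], [2]], [1, 1, 0], [[3], [4]])
print(report)
```

Repeating the measurement over several runs and reporting the median, as the cross-validation tables do for fit and prediction time, reduces the influence of one-off scheduling noise.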

| Ensemble Models | Accuracy | Precision | Recall | F1 | AD% |
|---|---|---|---|---|---|
| Bagging (RF base) | 0.530 | 0.528 | 0.533 | 0.531 | 0.68 |
| Boosting (GB base) | 0.545 | 0.543 | 0.546 | 0.544 | 0.78 |
| Voting (Hard) | 0.535 | 0.532 | 0.538 | 0.535 | 0.70 |
| Stacking (Meta-LR) | 0.540 | 0.537 | 0.542 | 0.540 | 0.72 |
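For readers unfamiliar with the "Voting (Hard)" row, hard voting assigns each sample the label predicted by the majority of base models. The sketch below illustrates only that rule. The three prediction lists are made-up outputs of hypothetical base models, since the base learners behind this row are not listed here.

```python
from collections import Counter


def hard_vote(predictions):
    """Majority vote across models.

    `predictions` is a list of per-model label lists, all of the same length.
    Ties are broken in favour of the smallest label.
    """
    voted = []
    for labels in zip(*predictions):
        counts = Counter(labels)
        top = max(counts.values())
        voted.append(min(label for label, count in counts.items() if count == top))
    return voted


# Made-up predictions from three hypothetical base models (1 = attack, 0 = normal).
model_a = [1, 0, 1, 1]
model_b = [1, 1, 0, 1]
model_c = [0, 0, 1, 1]
ensemble = hard_vote([model_a, model_b, model_c])
print(ensemble)
```

With an odd number of binary classifiers no ties can occur, so the tie-break only matters for even-sized ensembles or multi-class labels.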

| Model (with ASR) | Accuracy-ASR | Precision-ASR | Recall-ASR | F1-ASR | ASR Effect% |
|---|---|---|---|---|---|
| Random Forest + ASR | 0.962 | 0.960 | 0.963 | 0.961 | 0.97 |
| Gradient Boosting + ASR | 0.945 | 0.944 | 0.947 | 0.946 | 0.95 |
| Isolation Forest + ASR | 0.900 | 0.890 | 0.998 | 0.940 | 0.99 |
| Autoencoder + ASR | 0.870 | 0.860 | 0.873 | 0.865 | 0.88 |
| Logistic Regression + ASR | 0.930 | 0.928 | 0.933 | 0.930 | 0.92 |

| Study | Approach | Limitation | This Study’s Contribution | Models Used | Attacks Considered | Dataset Type | Performance Evaluation |
|---|---|---|---|---|---|---|---|
| [3] | Comprehensive literature review of blockchain threats and vulnerabilities | Conceptual only; no empirical validation or defense model | Moves beyond review by implementing ML-based proactive defense validated on simulated datasets | N/A (survey) | Broad overview of multiple blockchain threats | Literature review | No performance reported |
| [5] | Taxonomy of blockchain attacks and defenses (consensus, network, application) | No empirical testing or prototype | Provides empirical ML evaluation across multiple simulated attack scenarios | N/A (conceptual) | Multiple attack categories in theory | Literature review/taxonomy | No performance reported |
| [17] | Graph-theoretic model to predict/mitigate Denial-of-Chain (DoC) | Focused on a single attack (DoC only) | Extends scope to DoC plus other attacks, embedded in ML-based multi-layer defense | Graph-theory model | DoC (consensus attack) | Simulation | Attack feasibility modeled; no ML results |
| [18] | Simulation of Black Bird 51% attack variant | No counter-measures proposed | Empirically evaluates ML anomaly detection against Black Bird-style attacks within adaptive framework | N/A (attack simulation) | Black Bird (51% attack variant) | Simulation logs | Impact analysis; no defense performance |
| [25] | Block Access Restriction (BAR) to mitigate BBEDSA | Specific to Black Bird double-spend; lacks generalization | Generalizes by applying ML-based anomaly detection across diverse attacks | BAR mechanism | Black Bird embedded double spend | Simulation | Attack prevented in model; no ML metrics |
| [21] | CIRCUIT: JavaScript memory-heap analysis for cryptojacking detection | Narrow scope; limited to cryptojacking | Broadens anomaly detection across diverse blockchain threats, integrating adaptive ML response | ML classifiers for cryptojacking | Cryptojacking | Internet-scale crawl (websites) | Detected ~1800 malicious sites; high precision |
| This Study (2025) | ML-based anomaly detection + Adaptive Security Response (ASR) across multiple attack types | Based on simulated logs only; not yet tested on live blockchain networks | First integrated ML-based proactive defense benchmarked across multiple simulated blockchain attack scenarios | Random Forest, Gradient Boosting, Logistic Regression, KNN, One-Class SVM, Isolation Forest, Autoencoder, Ensembles, ASR | Black Bird (51% and BBEDSA), DoC, Sybil, Eclipse, Finney, Smart Contract | Simulated blockchain logs (Datasets A and B) | Supervised models F1 > 0.90 (balanced); unsupervised complementary (OCSVM F1 ≈ 0.79); Finney hardest (F1 ≈ 0.58); efficiency: RF/GB training < 2 s, inference < 0.2 s |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Omonayajo, B.; Oke, O.A.; Cavus, N. Adaptive Ensemble Machine Learning Framework for Proactive Blockchain Security. Appl. Sci. 2025, 15, 10848. https://doi.org/10.3390/app151910848
