The Preliminaries Subsection lays the groundwork for this study by explaining the data structure, describing the core elements of MCA and Random Forest, and illustrating them with examples. It also connects the manufacturing context to the analytical framework through a conceptual diagram.

2.1.1. Conceptual Framework of the Production Line and Quality Notification Workflow

The data used in this study directly reflect manufacturing issues managed through the quality notification system. These issues include anomalies detected in parts during production, such as representative defects, affected batches, and incidents tied to specific machines or critical production stages. The workflow described in the methodology runs from anomaly detection by the supervisor, through formal reporting by the quality engineer and review and registration in the Information System (IS), to follow-up and closure, so that each deviation is documented and categorized. In this way, notifications serve not only as a control mechanism but also as a structured input that enables analysis of recurrent deviations, prioritization of critical alerts, and a direct connection between the analytical results and industrial process management.

Figure 1 presents a conceptual diagram of the production line and the workflow of quality notifications.

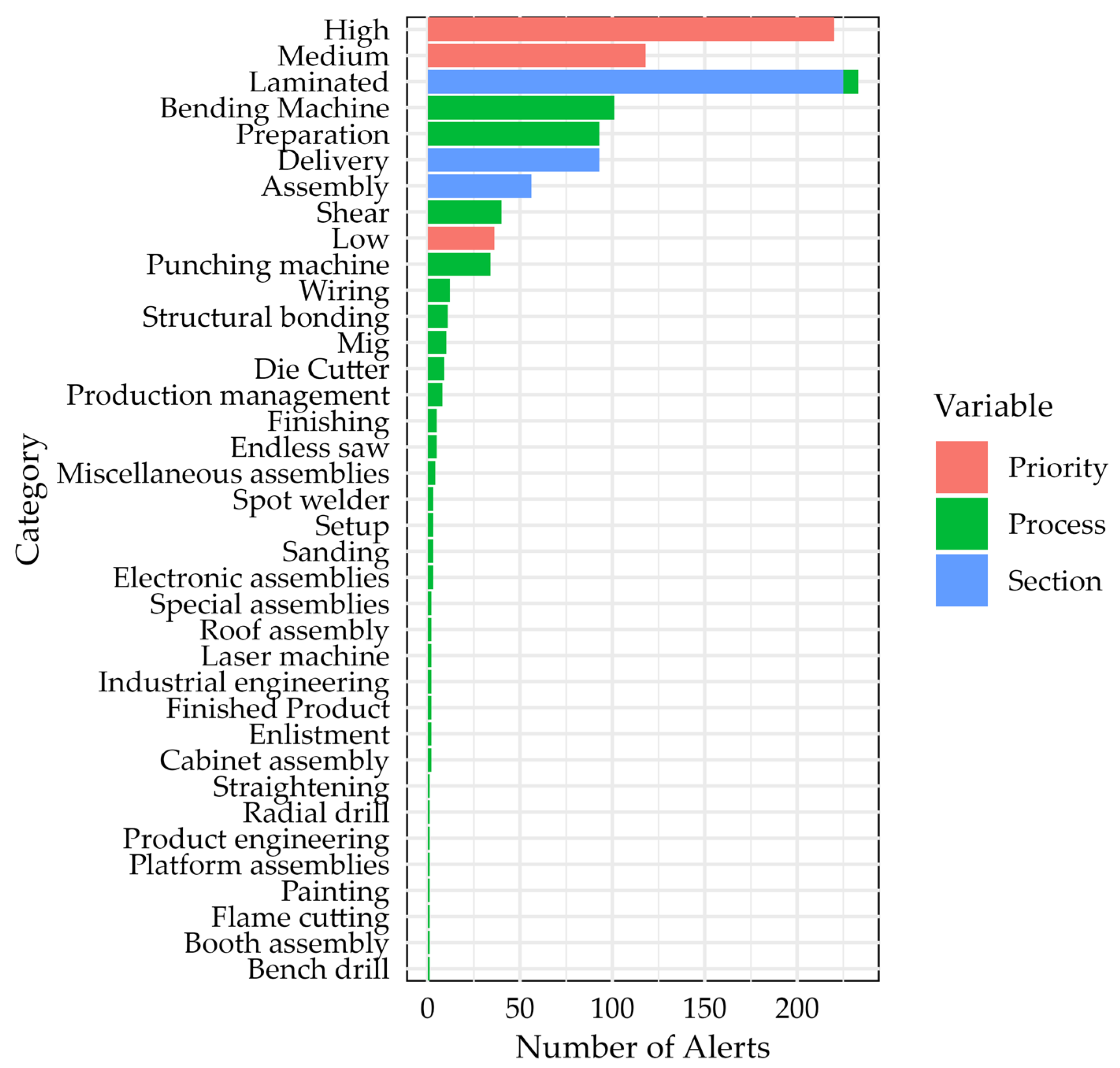

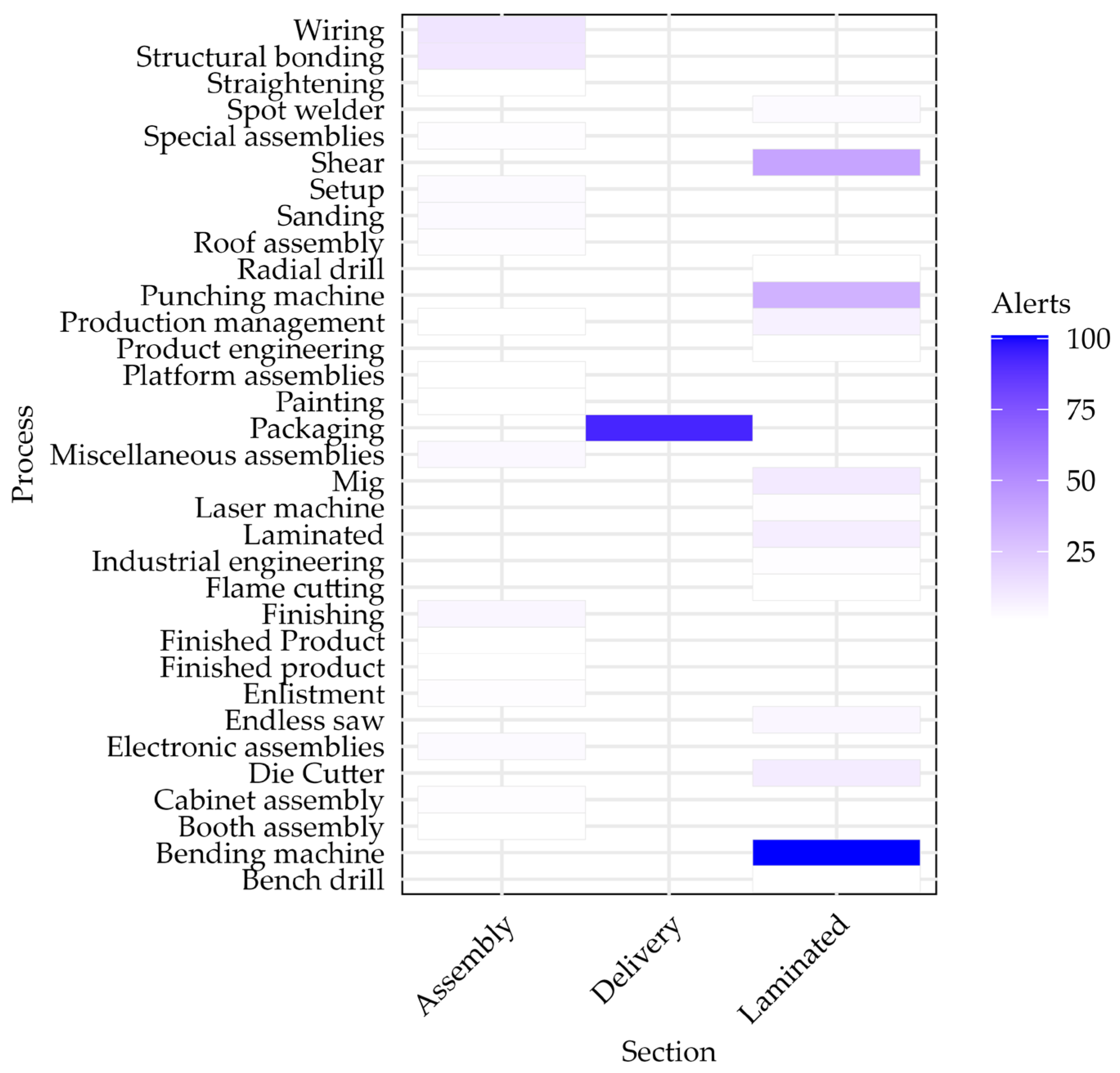

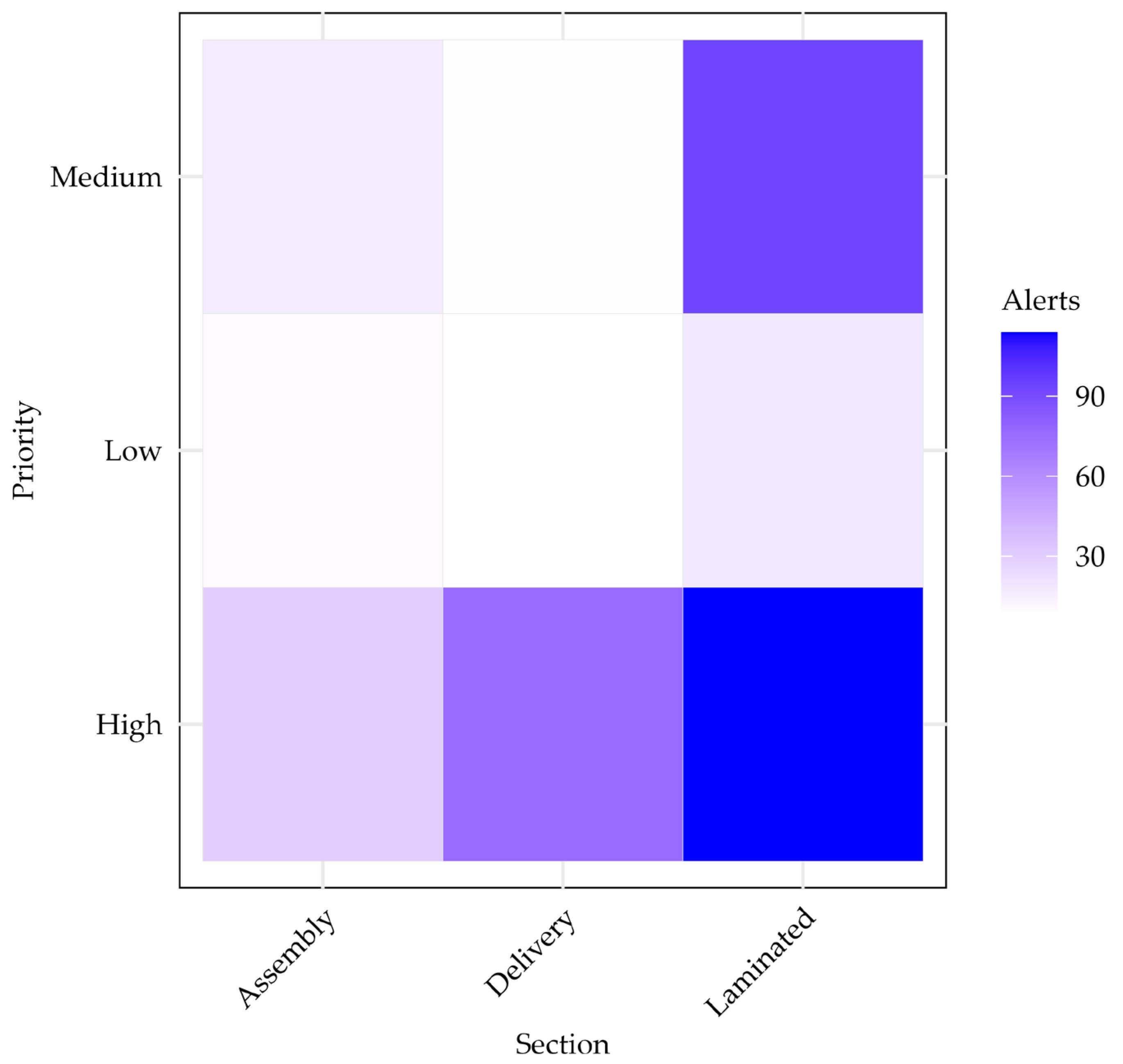

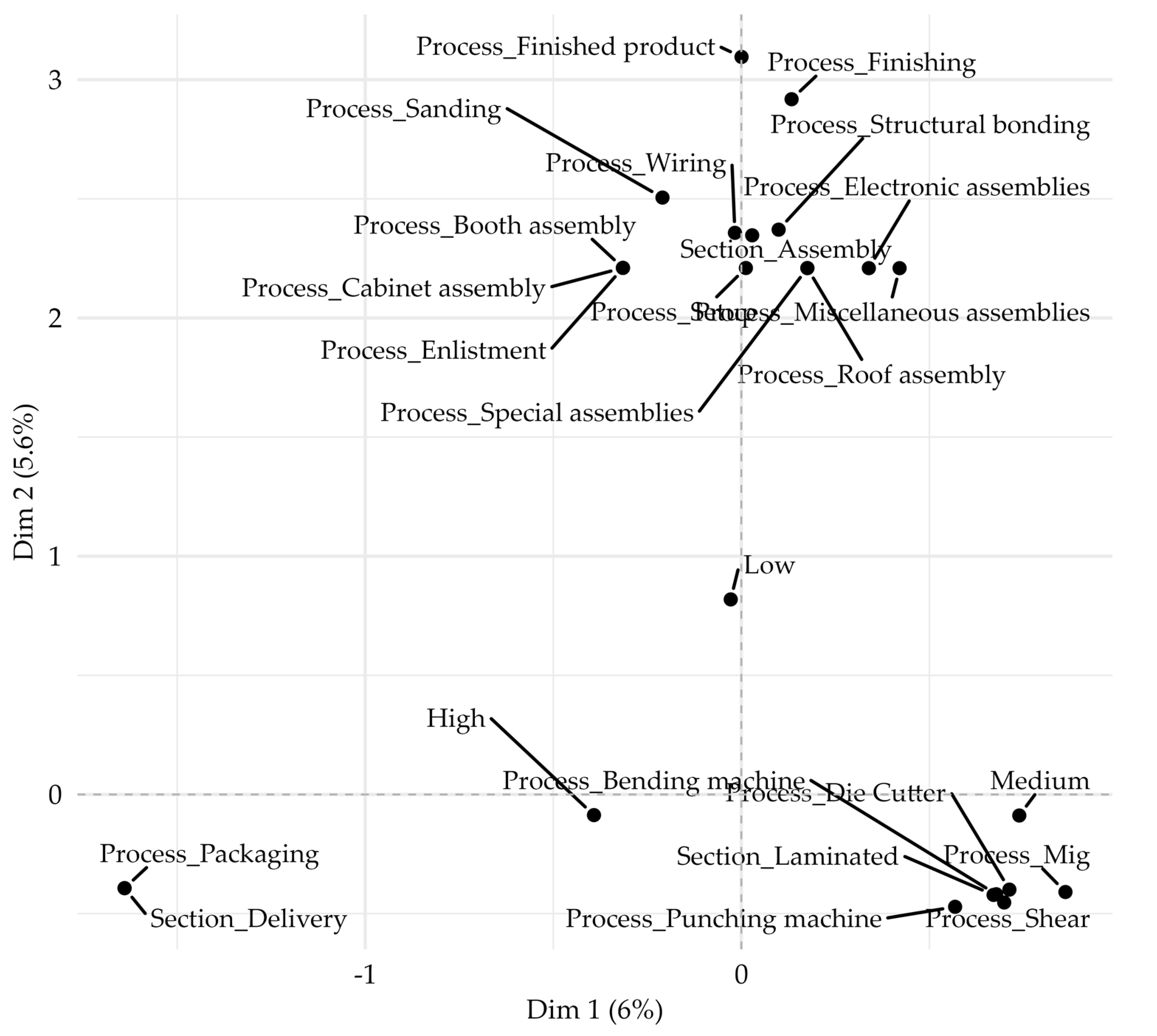

The diagram shows the main operational stages (cutting, welding, assembly, painting, and packaging), the points where alerts may arise, and the subsequent flow of notification, review, and closure in the information system. This representation connects the industrial context with the analytical framework, highlighting how raw production events are transformed into categorical variables (process, section, and priority) for statistical modeling.

2.1.2. MCA and Random Forest: Mathematical Foundations and Pseudocode

Multiple Correspondence Analysis (MCA) is an extension of Simple Correspondence Analysis (SCA) to more than two categorical variables. Its goal is to graphically represent the relationships between categories and observations in a low-dimensional space, preserving as much inertia as possible (inertia plays the role of variance for categorical data). The basic concepts are as follows [18,23].

Suppose we have n observations and J categorical variables with a total of m categories, coded in a complete disjunctive matrix X of size n × m, with ones in the observed categories and zeros elsewhere.
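The coding step described above can be sketched in a few lines of plain Python. This is a minimal illustration rather than the authors' implementation: the function name `disjunctive_matrix` and the dictionary-based record layout are assumptions made here, and the three records mirror the small example used later in this subsection.

```python
def disjunctive_matrix(records, variables):
    """Return (column labels, 0/1 rows) for a list of dict records.

    Hypothetical helper: categories are ordered by first appearance.
    """
    # Collect the categories observed for each variable.
    categories = {v: [] for v in variables}
    for rec in records:
        for v in variables:
            if rec[v] not in categories[v]:
                categories[v].append(rec[v])
    # One column per (variable, category) pair.
    columns = [(v, cat) for v in variables for cat in categories[v]]
    # One row per observation: 1 in the observed category, 0 elsewhere.
    rows = [[1 if rec[v] == cat else 0 for (v, cat) in columns]
            for rec in records]
    return columns, rows


records = [
    {"Process": "Cutting", "Priority": "High"},
    {"Process": "Welding", "Priority": "Low"},
    {"Process": "Cutting", "Priority": "Low"},
]
columns, X = disjunctive_matrix(records, ["Process", "Priority"])
print([cat for _, cat in columns])
for row in X:
    print(row)
```

Each row of the result sums to J (here 2), because every observation takes exactly one category per variable.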

Frequency calculation. Let X be the complete disjunctive matrix of size n × m, where n is the number of observations and m is the number of categories. We define P as the relative frequency matrix. It holds that

$$P = \frac{1}{n} X \tag{1}$$

Row and column profiles. Now, it is possible to obtain the row profiles, $r$, and the column profiles, $c$, as follows: $r_i = \sum_{j=1}^{m} p_{ij}$ and $c_j = \sum_{i=1}^{n} p_{ij}$. These $r$ and $c$ values will then be used as weights to center and normalize distances.

Centering and scaling. Let $r$ and $c$ be the row and column profile vectors, respectively. Let $D_r$ be the diagonal matrix built from $r$, and let $D_c$ be the diagonal matrix built from $c$. Then, the standardized matrix $Z$ is given by

$$Z = D_r^{-1/2} \left( P - r c^{T} \right) D_c^{-1/2} \tag{2}$$

Singular Value Decomposition (SVD). Let $Z$ be the standardized matrix defined in (2), while $U$ and $V$ are the left and right singular vector matrices, respectively, and $\Lambda$ is the diagonal matrix of singular values. Its singular value decomposition is expressed as

$$Z = U \Lambda V^{T} \tag{3}$$

Let $U$, $\Lambda$, and $V$ be the components obtained in decomposition (3). The row coordinates $F$ are

$$F = D_r^{-1/2} U \Lambda$$

and the column coordinates $G$ are

$$G = D_c^{-1/2} V \Lambda$$

Factor coordinates are obtained by projecting rows and columns onto the axes associated with the singular vectors. These are the points plotted in biplots/maps; proximities among categories (and observations) reflect their associations.
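As a short aside on how the inertia retained by each axis is usually quantified: in correspondence analysis the principal inertia of dimension k is the square of the k-th singular value, so the share of inertia attributed to a dimension can be written as below. The symbols $\lambda_k$ (the diagonal entries of $\Lambda$) and $\tau_k$ are notation assumed here for illustration.

```latex
\tau_k = \frac{\lambda_k^{2}}{\sum_{l} \lambda_l^{2}},
\qquad
\sum_{l} \lambda_l^{2} = \lVert Z \rVert_F^{2}
```

The second identity states that the total inertia equals the sum of squared entries of $Z$, so it can be computed before any decomposition is carried out.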

To operationalize these steps, the MCA workflow can be summarized as a sequence of transformations starting from the categorical dataset and ending with the factorial coordinates and explained inertia. The following pseudocode outlines the main stages of the procedure in a concise and reproducible manner:

Input: Categorical table with variables {Process, Section, Priority}

Build complete disjunctive table X

Compute relative frequencies P = X/n

Obtain row (r) and column (c) profiles

Construct standardized residual matrix Z

Apply SVD: Z = U Λ V^T

Project rows and columns onto factorial axes

Output: Factorial coordinates + percentages of inertia (Dim1, Dim2, …)
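The first stages of this procedure can be sketched in plain Python, stopping just before the SVD, which would normally be delegated to a linear algebra library. The function name `standardized_residuals` is hypothetical. One assumption should be noted loudly: the sketch divides X by its grand total n·J, the usual correspondence-analysis convention under which the row and column masses each sum to one; this rescales the P = X/n shorthand above by the constant 1/J.

```python
import math


def standardized_residuals(X):
    """Return (r, c, Z, total_inertia) for a 0/1 indicator matrix X.

    Assumption: P = X / (n * J), i.e. X divided by its grand total.
    Every category must occur at least once, otherwise its column
    mass is zero and the scaling below divides by zero.
    """
    grand_total = sum(sum(row) for row in X)          # equals n * J
    P = [[x / grand_total for x in row] for row in X]
    r = [sum(row) for row in P]                       # row masses
    c = [sum(col) for col in zip(*P)]                 # column masses
    # Standardized residuals: (p_ij - r_i c_j) / sqrt(r_i c_j).
    Z = [[(P[i][j] - r[i] * c[j]) / math.sqrt(r[i] * c[j])
          for j in range(len(c))]
         for i in range(len(r))]
    # Total inertia is the sum of squared standardized residuals.
    total_inertia = sum(z * z for row in Z for z in row)
    return r, c, Z, total_inertia


# Indicator matrix with columns Cutting, Welding, High, Low.
X = [[1, 0, 1, 0],
     [0, 1, 0, 1],
     [1, 0, 0, 1]]
r, c, Z, inertia = standardized_residuals(X)
print(r)
print(c)
print(round(inertia, 6))
```

Under this normalization the total inertia of an indicator matrix with m categories and J variables is m/J − 1, which gives 1 for the four categories and two variables used here. That makes a convenient sanity check before the decomposition step.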

To further clarify the sequence of operations and highlight how categorical information is transformed into factorial coordinates, we provide a simple analytical example with a small dataset. This example illustrates each step of the MCA, from the construction of the disjunctive table to the interpretation of the resulting dimensions.

Consider three observations of two categorical variables:

Process = {Cutting, Welding};

Priority = {High, Low}.

The complete disjunctive structure is shown below:

| Cutting | Welding | High | Low |
|---|---|---|---|
| 1 | 0 | 1 | 0 |
| 0 | 1 | 0 | 1 |
| 1 | 0 | 0 | 1 |

Relative frequencies: $P = X/3$;

Profiles: $r = (2/3, 2/3, 2/3)^T$ and $c = (2/3, 1/3, 1/3, 2/3)^T$.

SVD of the standardized residuals Z produces two main dimensions that reveal the following associations:

Cutting is balanced between High and Low.

Welding is more strongly associated with Low.

This toy example illustrates how MCA maps categorical variables into a geometric space where proximities reflect the strength of associations among categories.

We now briefly review the mathematics behind Random Forest, an ensemble method built from many decision trees. Besides the decision trees themselves, the concepts that make Random Forest a suitable choice are bootstrap sampling, random feature selection, and ensemble voting [24].

- 1.

Decision Trees. The feature space is recursively split into regions using an impurity criterion such as the Gini index or entropy. At each split, the algorithm selects the variable and threshold that optimize the criterion. Let t be a node in a decision tree and K be the number of classes, and let $p_k(t)$ denote the proportion of observations of class k at node t. The Gini index at node t is defined as

$$G(t) = 1 - \sum_{k=1}^{K} p_k(t)^{2}$$

- 2.

Classification in Random Forests. Let B be the total number of trees in the forest, and let $\hat{C}_b(x)$ be the class predicted by tree b for an observation x. The class predicted by the Random Forest, $\hat{C}(x)$, is obtained by majority vote:

$$\hat{C}(x) = \arg\max_{k} \sum_{b=1}^{B} I\left( \hat{C}_b(x) = k \right)$$

where the indicator function $I(\hat{C}_b(x) = k)$ equals 1 if tree b predicted class k, and 0 otherwise.

Prediction in regression with Random Forests. Let $\hat{y}_b(x)$ be the prediction of tree b for observation x, and let B be the total number of trees in the forest. The final prediction $\hat{y}(x)$ is calculated as the average of the individual predictions:

$$\hat{y}(x) = \frac{1}{B} \sum_{b=1}^{B} \hat{y}_b(x)$$

- 3.

Random Feature Selection. At each split, only a random subset of predictors is considered. This decorrelates trees and prevents them from making the same mistakes.

- 4.

Importance of variables. The importance of a variable Xj is quantified by its average contribution to the reduction in node impurity across all decision nodes in which it is utilized within the forest.
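The quantities in the list above are simple enough to write out directly. The following sketch uses hypothetical helper names (`gini`, `majority_vote`, `average_prediction`, `impurity_decrease`) and is meant only to make the definitions concrete; it is not tied to any particular library.

```python
from collections import Counter


def gini(labels):
    """Gini index 1 - sum_k p_k^2 for the (non-empty) labels at a node."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def majority_vote(tree_predictions):
    """Class predicted by most trees; ties go to the class seen first."""
    return Counter(tree_predictions).most_common(1)[0][0]


def average_prediction(tree_predictions):
    """Regression forest output: mean of the per-tree predictions."""
    return sum(tree_predictions) / len(tree_predictions)


def impurity_decrease(parent, children):
    """Gini reduction of a split: parent impurity minus the
    size-weighted impurity of the child nodes."""
    n = len(parent)
    weighted = sum(len(ch) / n * gini(ch) for ch in children)
    return gini(parent) - weighted


parent = ["High", "Low", "High"]
print(gini(parent))                                        # 1 - (4/9 + 1/9)
print(impurity_decrease(parent, [["High", "High"], ["Low"]]))
print(majority_vote(["Low", "High", "Low"]))
print(average_prediction([2.0, 3.0, 4.0]))
```

Averaging `impurity_decrease` over all nodes that split on a given variable, across all trees, gives the impurity-based importance described in item 4.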

The algorithm is conceptualized as an ensemble learning technique that constructs multiple decision trees using bootstrap samples of the training dataset and aggregates their outputs to generate robust predictions. The pseudocode below outlines the fundamental procedural steps, from data partitioning to ensemble prediction, in a clear and reproducible format.

Input: Dataset {Process, Priority} → Quality

- 1.

Choose number of trees T (e.g., T = 3)

- 2.

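For each tree t in 1…T: draw a bootstrap sample of the dataset, then grow a decision tree on it, considering only a random subset of the variables at each split

- 3.

For a new observation x: obtain the class predicted by each of the T trees and return the majority vote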

Output: Predicted class (High or Low quality)
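A deliberately simplified sketch of this procedure is given below. It is not a full Random Forest: each "tree" is a depth-one stump that splits on a single randomly chosen variable (a random feature subset of size one), and all function names are hypothetical. The three rows are those of the small dataset used in this subsection's example.

```python
import random
from collections import Counter


def most_common_label(labels):
    return Counter(labels).most_common(1)[0][0]


def train_stump(sample, features, rng):
    """Depth-one tree: one leaf per category of a random feature."""
    feature = rng.choice(features)            # random feature selection
    by_value = {}
    for row, label in sample:
        by_value.setdefault(row[feature], []).append(label)
    leaves = {v: most_common_label(ls) for v, ls in by_value.items()}
    # Fallback for categories absent from the bootstrap sample.
    fallback = most_common_label([label for _, label in sample])
    return feature, leaves, fallback


def predict_stump(stump, row):
    feature, leaves, fallback = stump
    return leaves.get(row[feature], fallback)


def train_forest(data, features, n_trees, seed=0):
    rng = random.Random(seed)
    forest = []
    for _ in range(n_trees):
        # Bootstrap: draw len(data) rows with replacement.
        sample = [rng.choice(data) for _ in data]
        forest.append(train_stump(sample, features, rng))
    return forest


def predict_forest(forest, row):
    votes = [predict_stump(stump, row) for stump in forest]
    return most_common_label(votes)           # majority vote


data = [
    ({"Process": "Cutting", "Priority": "High"}, "High"),
    ({"Process": "Welding", "Priority": "Low"}, "Low"),
    ({"Process": "Cutting", "Priority": "Low"}, "High"),
]
features = ["Process", "Priority"]
forest = train_forest(data, features, n_trees=3, seed=0)
print(predict_forest(forest, {"Process": "Welding", "Priority": "Low"}))
```

With only three rows, the bootstrap samples differ a lot from one tree to the next, so the prediction depends on the seed. A production model would grow deeper trees and choose the best split among the sampled variables using an impurity criterion such as Gini.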

Similarly to the step-by-step illustration of MCA using a small dataset, we now present a didactic example that demonstrates how a Random Forest model works. Suppose we have a dataset with two categorical variables and one binary label.

| Process | Priority | Quality |
|---|---|---|
| Cutting | High | High |
| Welding | Low | Low |
| Cutting | Low | High |

The Random Forest builds several decision trees. Each tree is trained with a bootstrap sample of the dataset and randomly selects variables at each split. Examples of simplified possible trees are as follows:

Tree 1:

Root: Priority

If High → High

If Low → Low

Tree 2:

Root: Process

If Cutting → High

If Welding → Low

Each new case is classified by majority vote of the trees:

(Process = Welding, Priority = Low)

Tree 1 → Low

Tree 2 → Low

Final prediction: Low quality
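The vote above can be reproduced by writing the two example trees down as lookup tables. The dictionary layout and the names `predict_tree` and `classify` are assumptions made for this sketch.

```python
from collections import Counter

# The two simplified trees of the example, one split each.
tree_1 = {"feature": "Priority", "leaves": {"High": "High", "Low": "Low"}}
tree_2 = {"feature": "Process", "leaves": {"Cutting": "High", "Welding": "Low"}}


def predict_tree(tree, case):
    """Follow the single split of a tree to its leaf label."""
    return tree["leaves"][case[tree["feature"]]]


def classify(trees, case):
    """Return the per-tree votes and the majority class."""
    votes = [predict_tree(tree, case) for tree in trees]
    return votes, Counter(votes).most_common(1)[0][0]


case = {"Process": "Welding", "Priority": "Low"}
votes, prediction = classify([tree_1, tree_2], case)
print(votes, prediction)  # ['Low', 'Low'] Low
```

With an even number of trees a tie is possible: for (Process = Cutting, Priority = Low) the two trees disagree, and this sketch breaks the tie in favor of the first vote, which is an arbitrary choice. Using an odd number of trees avoids ties in binary problems.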