Mobile Data Visualisation Interface Design for Industrial Automation and Control: A User-Centred Usability Study

Abstract

1. Introduction

1.1. Motivation

1.2. Objective

1.3. Structure of This Study

2. Related Work

2.1. Data Visualisation

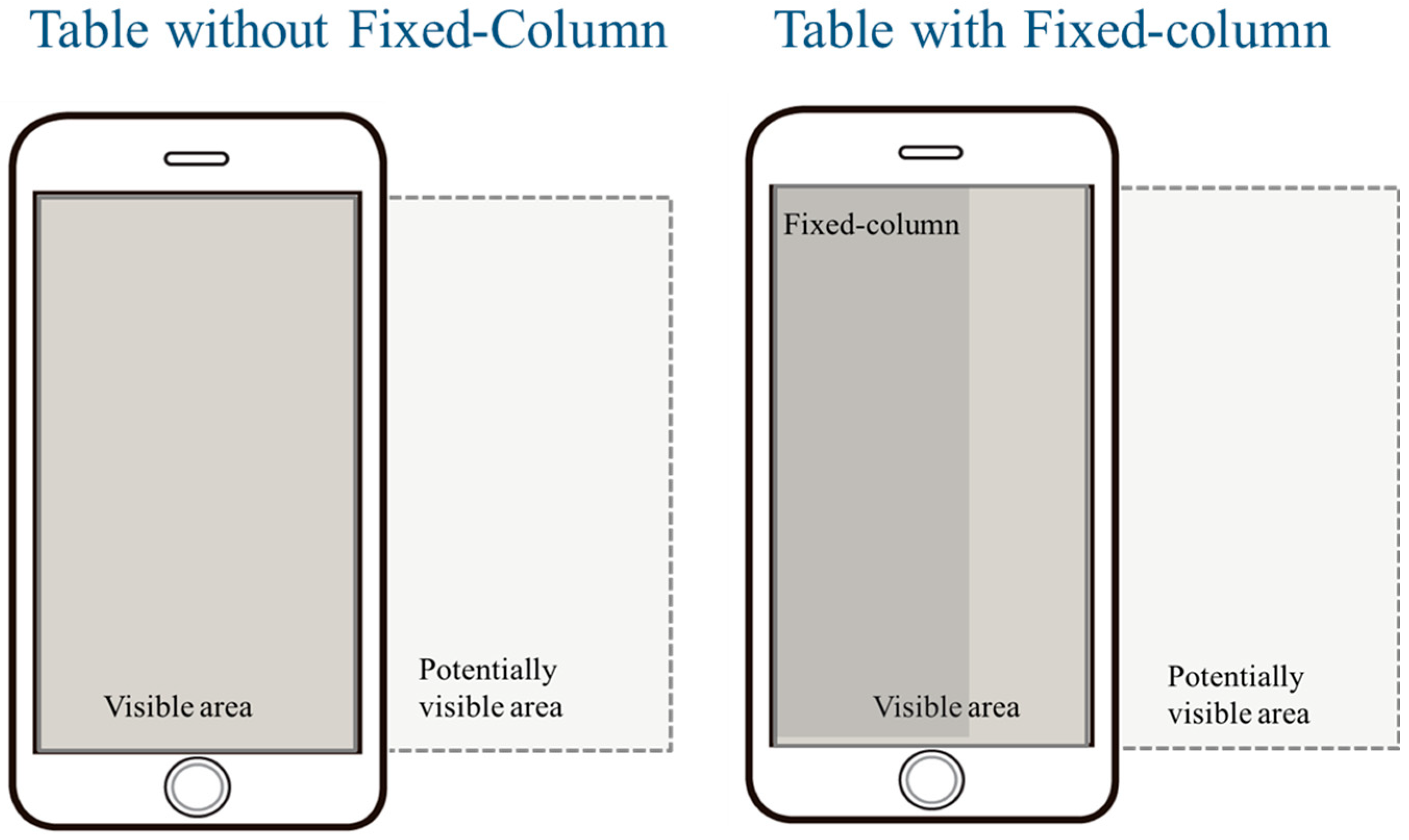

2.1.1. Table

2.1.2. Chart

2.2. Responsive Data Visualisation

2.3. Graphical Perception

2.4. User Interface Design Evaluation

2.4.1. System Usability Scale (SUS)

2.4.2. NASA-Task Load Index (NASA-TLX)

3. Materials and Methods

3.1. Participants

3.2. Apparatus and Questionnaires

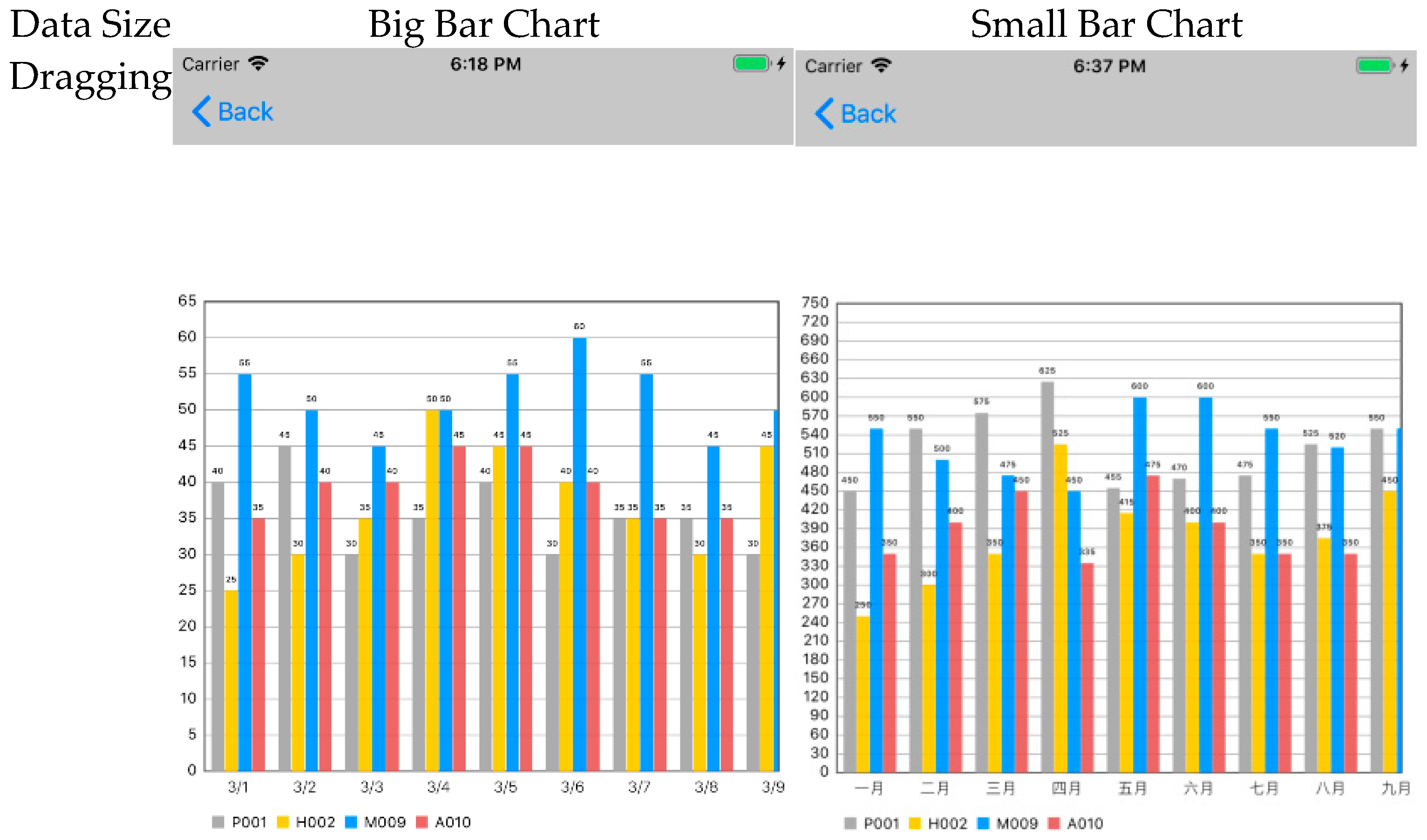

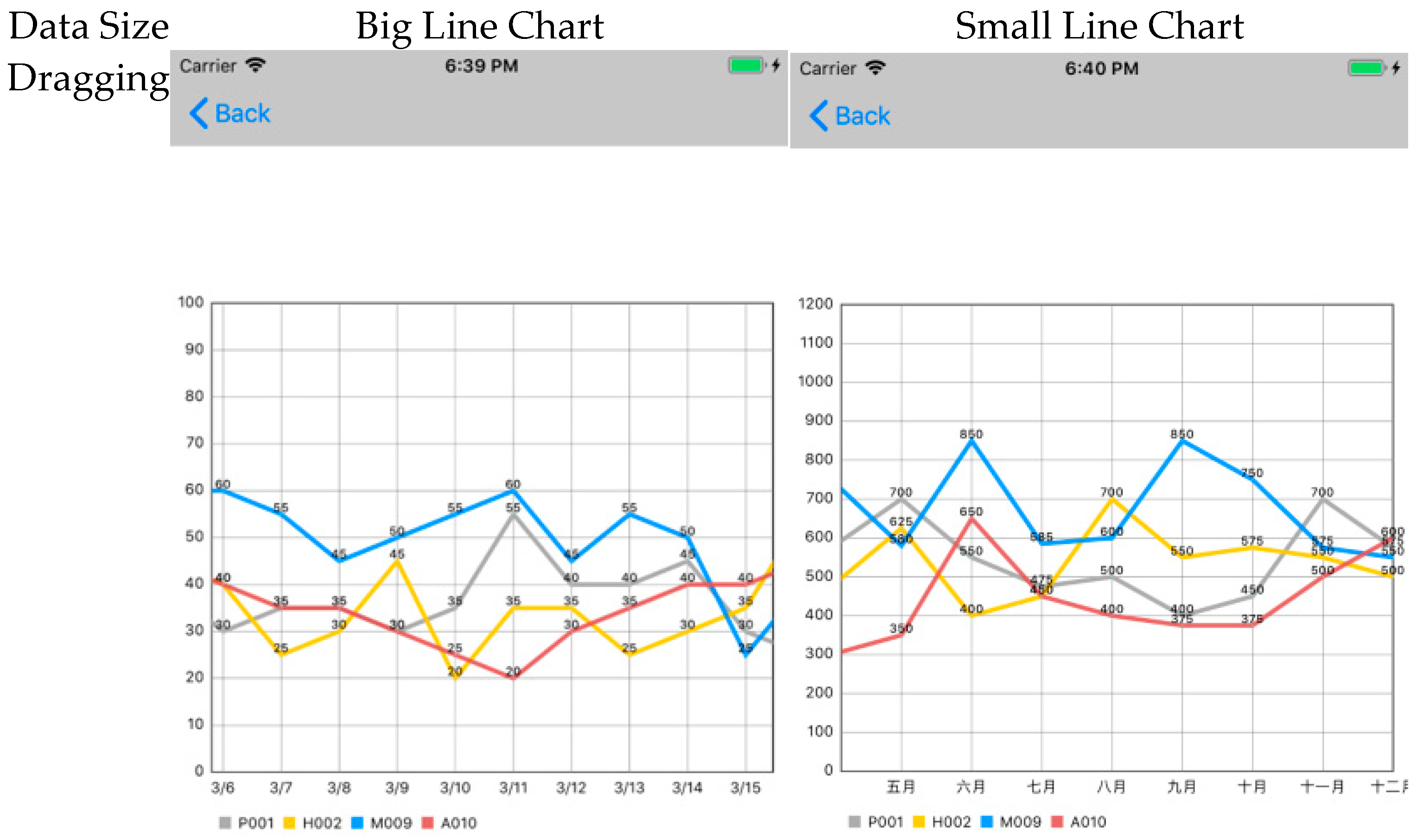

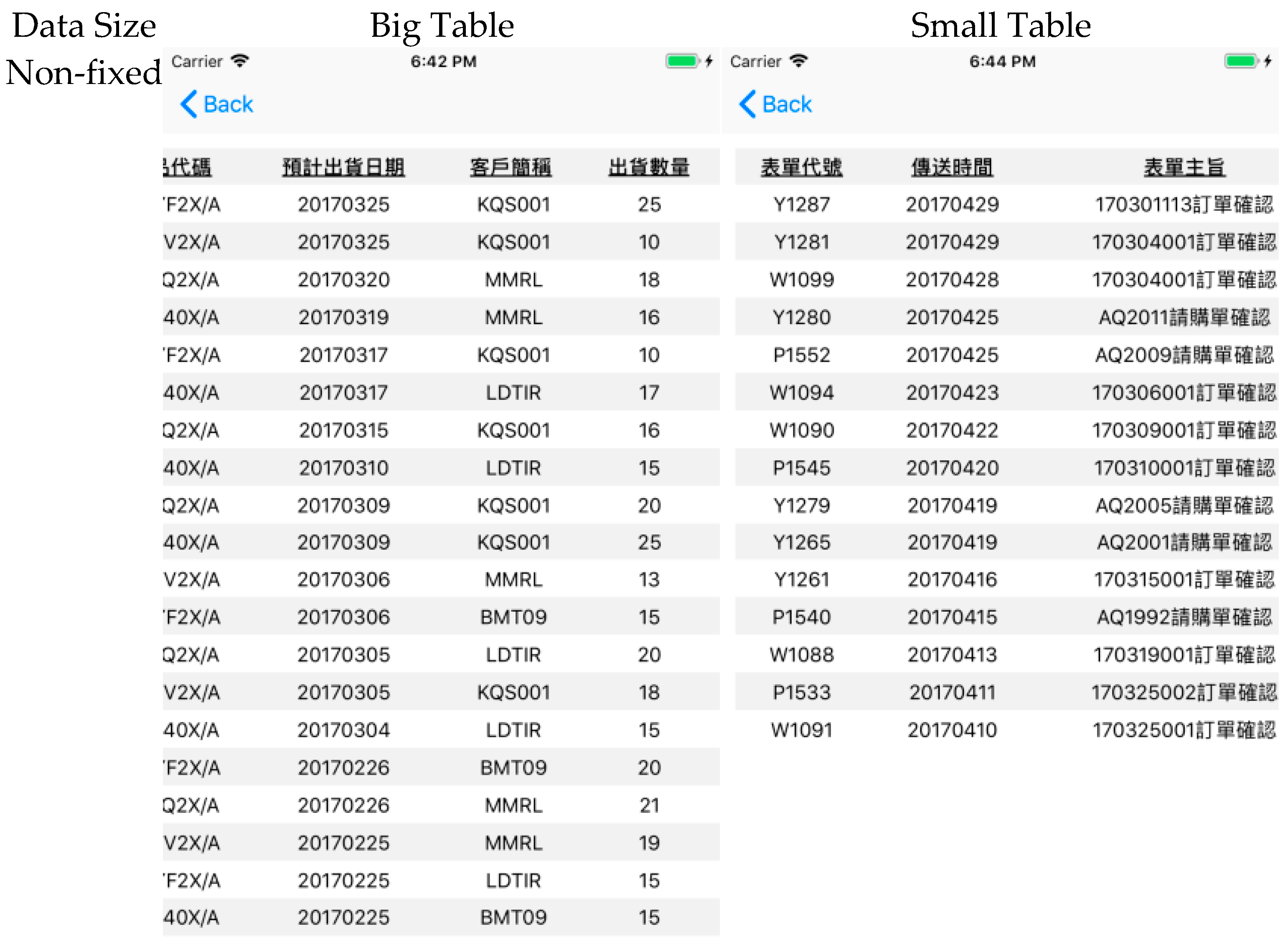

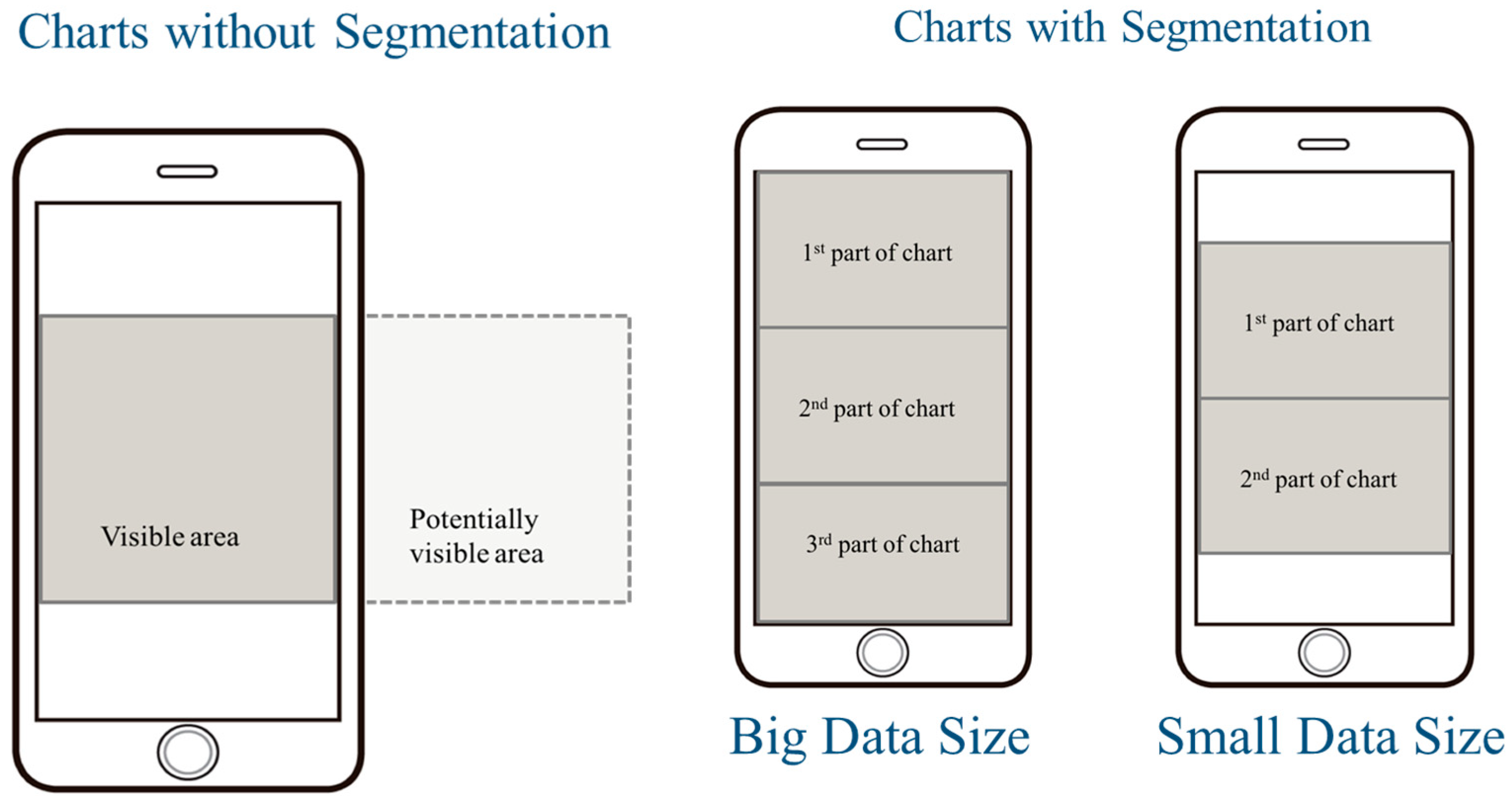

3.3. Experiment Design

3.4. Experiment Procedure

4. Results

4.1. Task Completion Time

4.1.1. Bar Chart

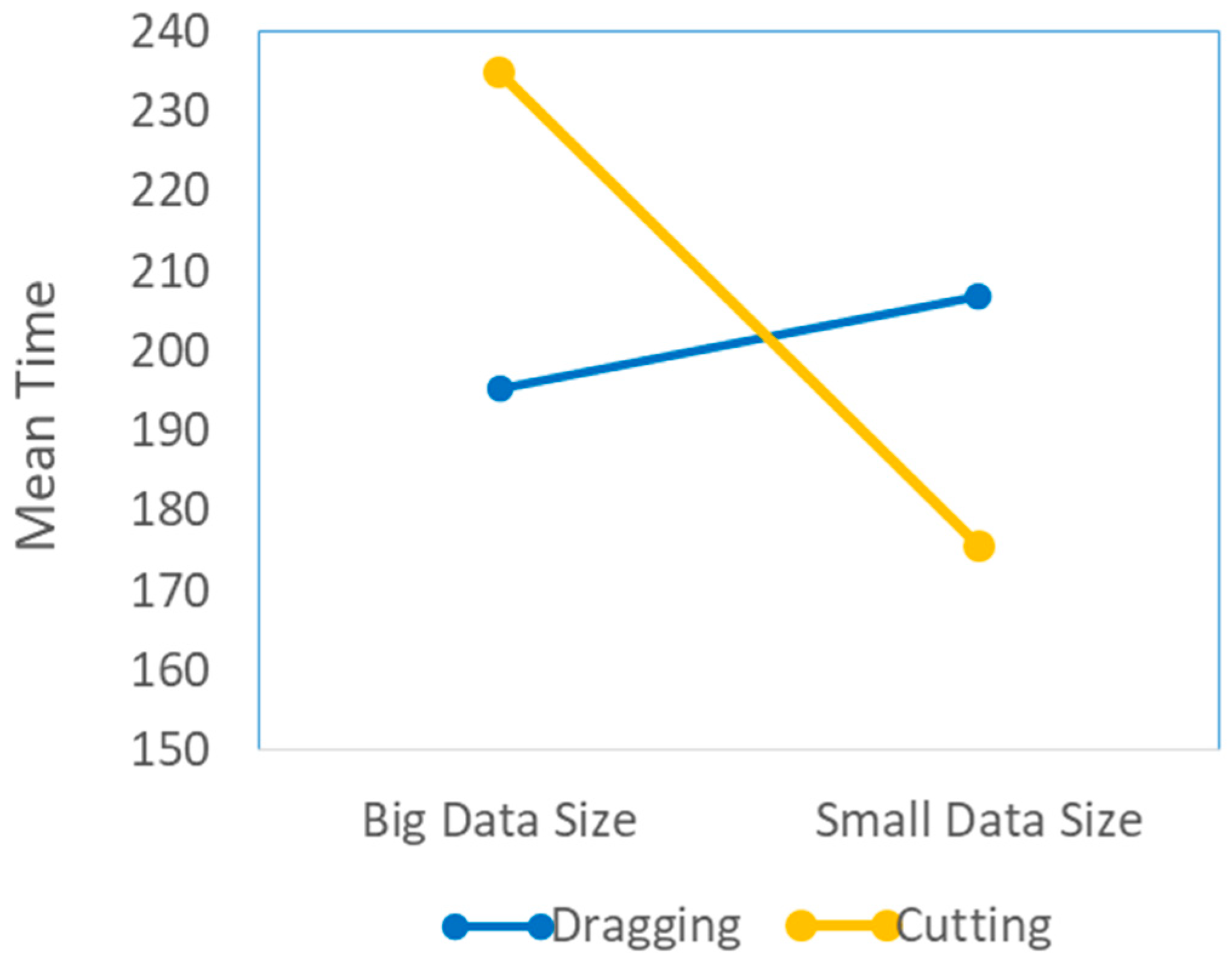

4.1.2. Line Chart

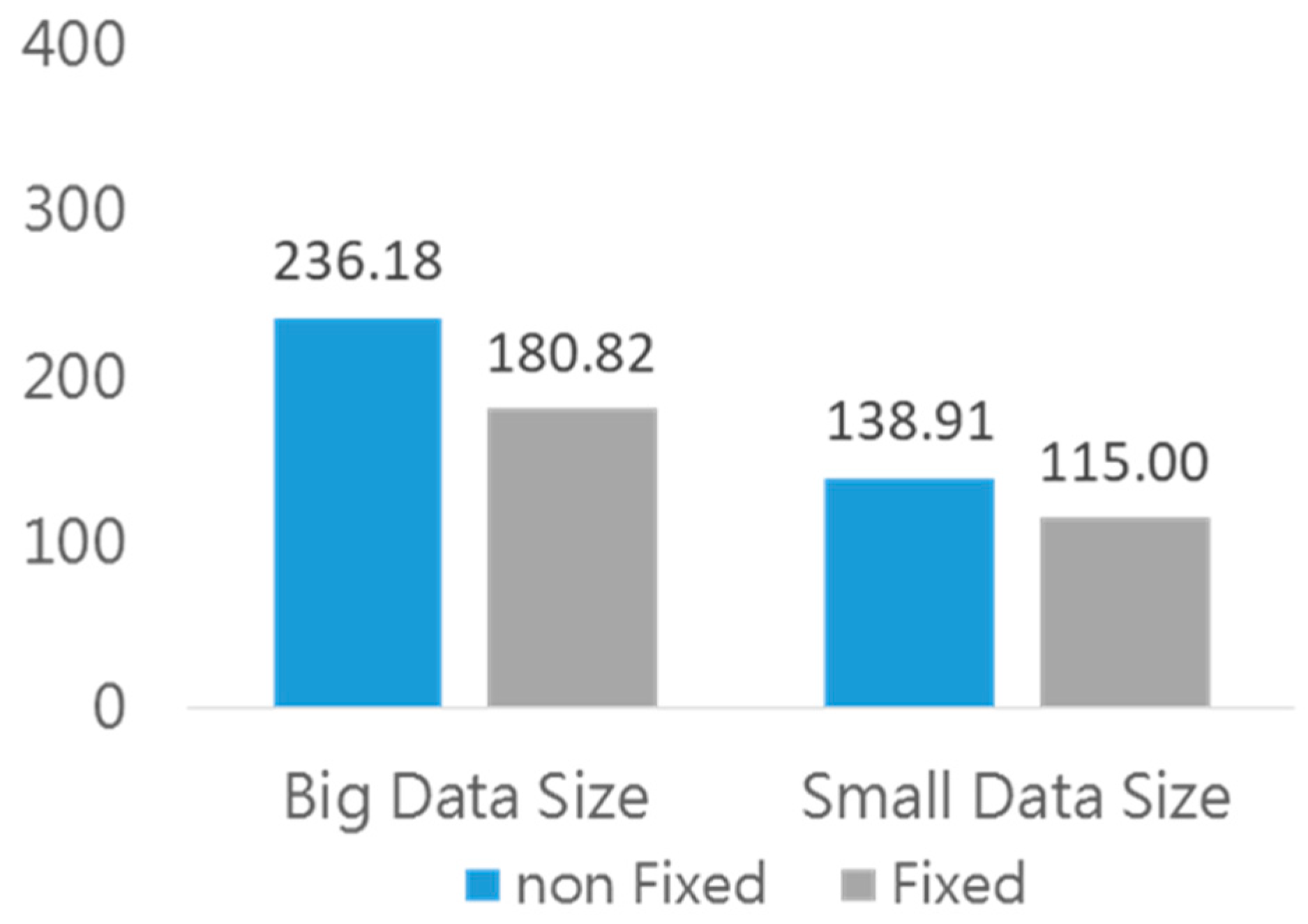

4.1.3. Table

4.2. Usability Subjective Assessment

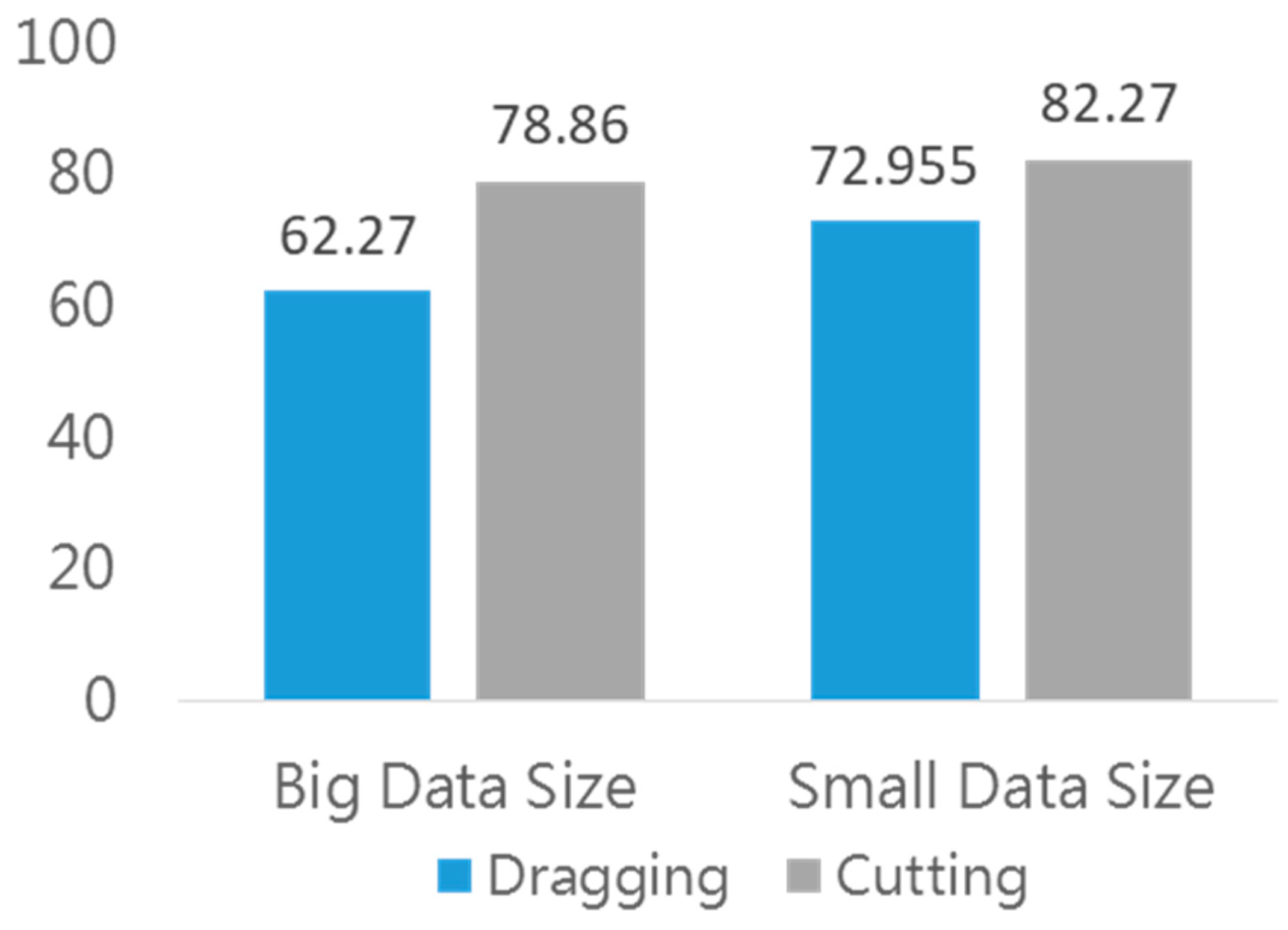

4.2.1. Bar Chart

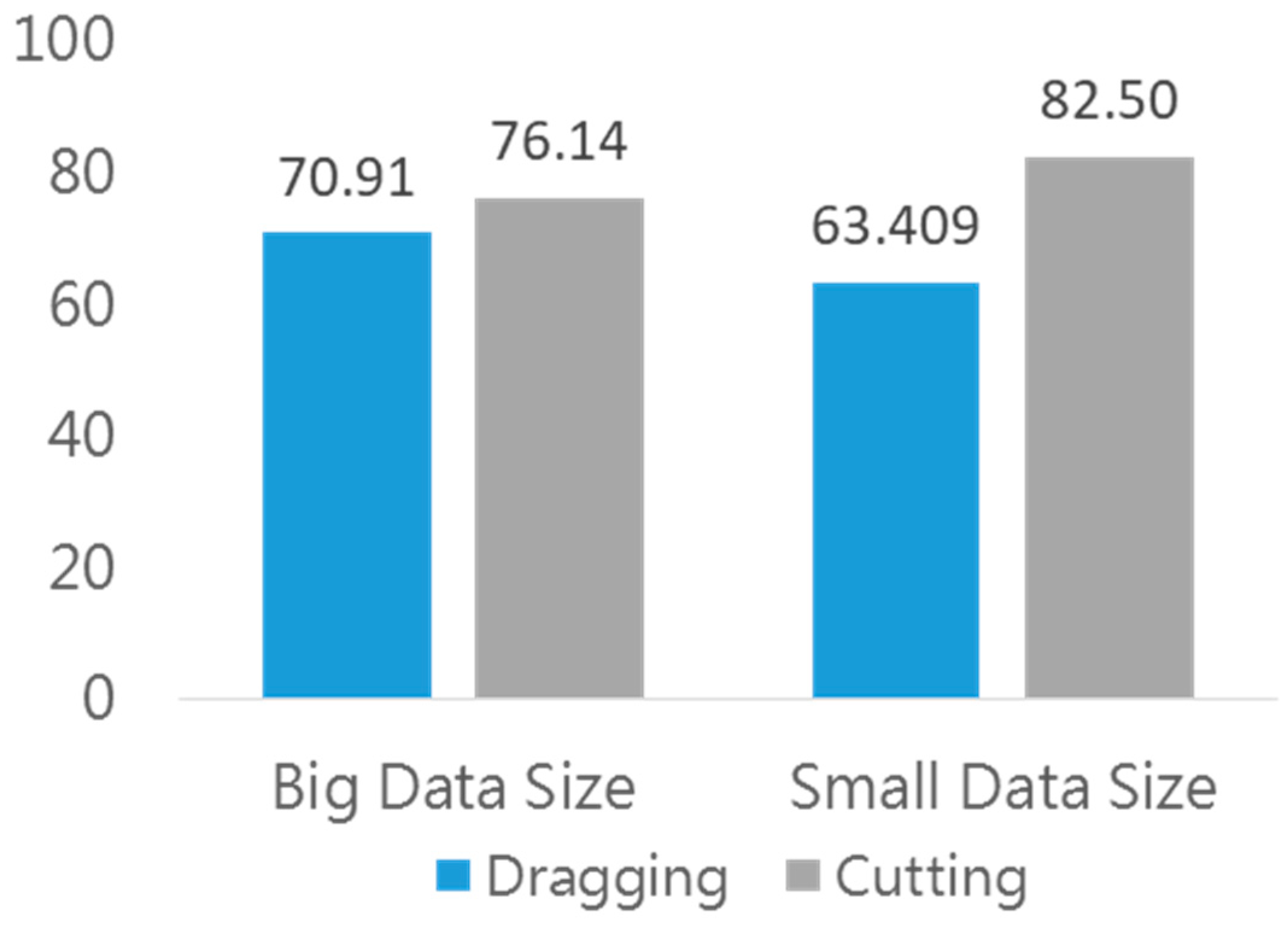

4.2.2. Line Chart

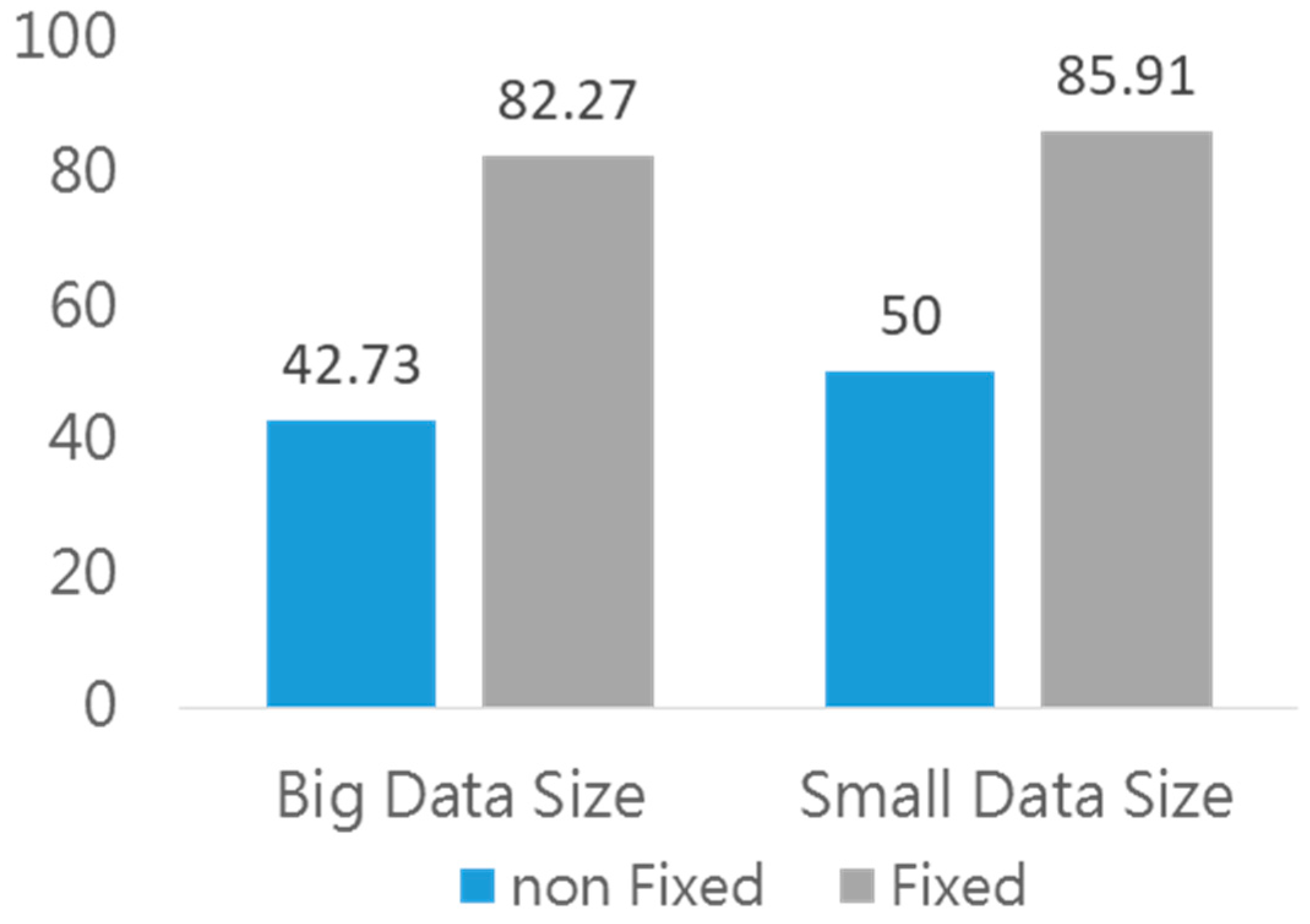

4.2.3. Table

4.2.4. Adjective Rating Scale

4.3. NASA-TLX

4.3.1. Bar Chart

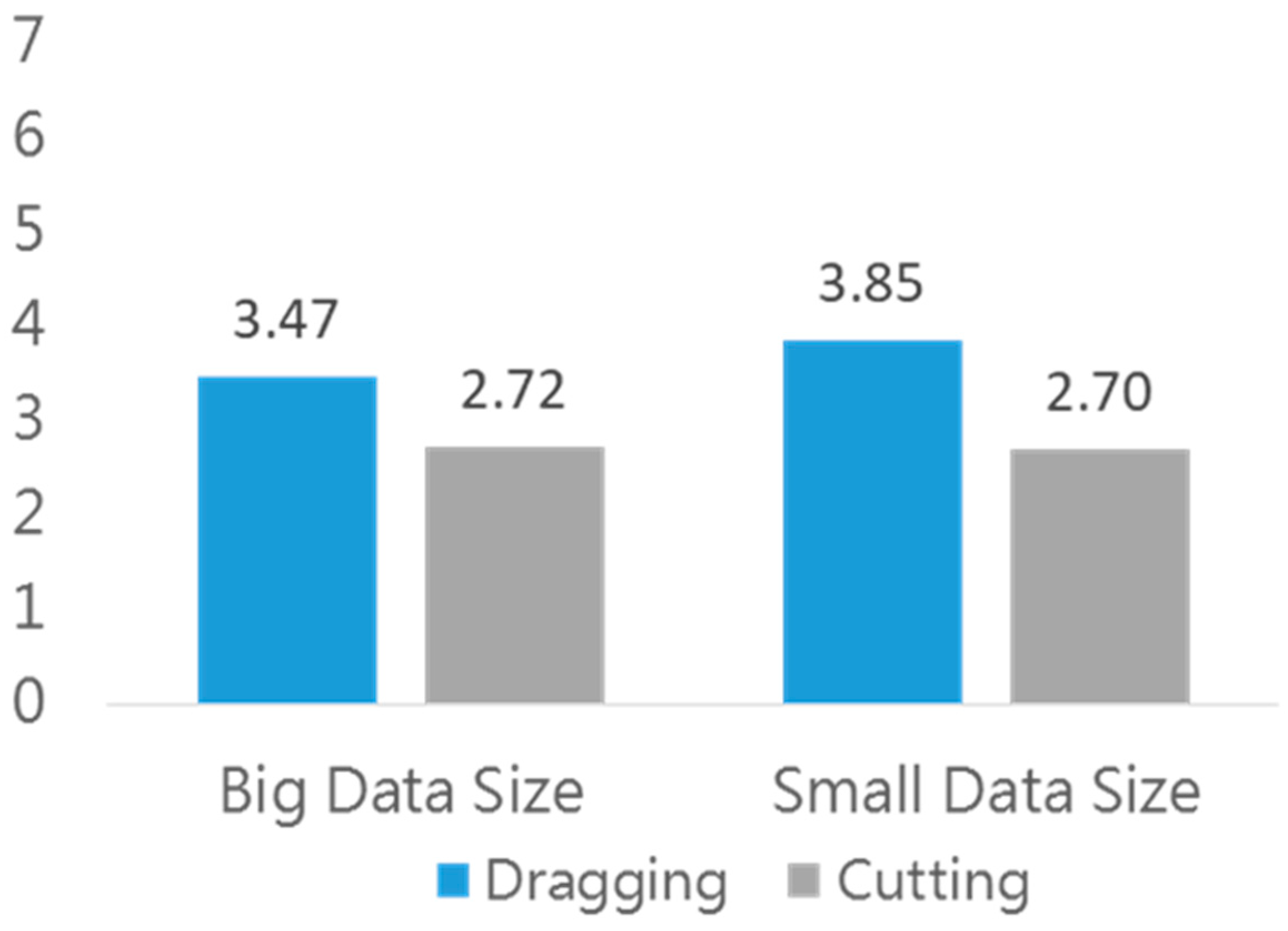

4.3.2. Line Chart

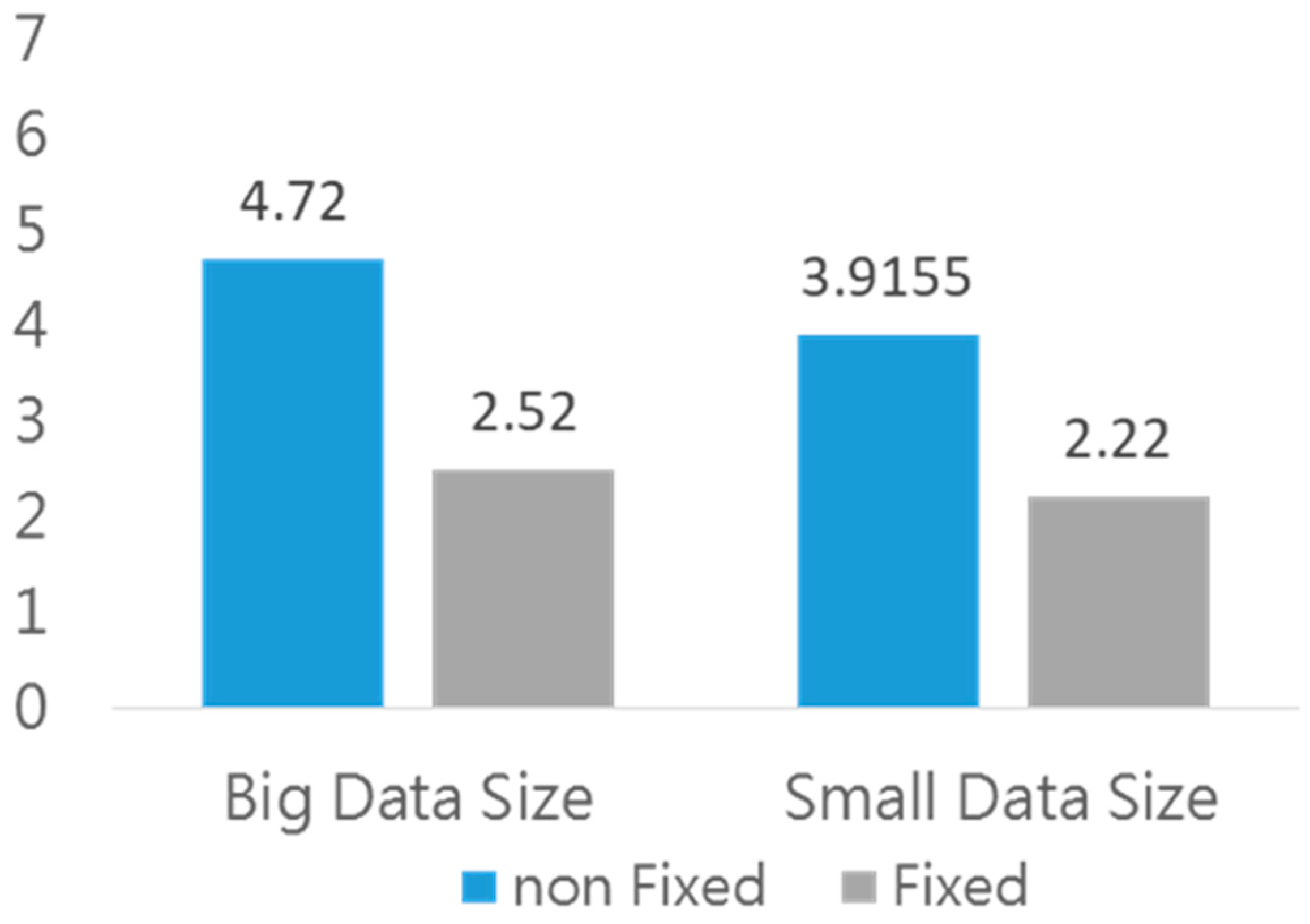

4.3.3. Table

5. Discussion

5.1. The Effect of Segmentation on Performance

5.2. The Effect of Segmentation on Usability

5.3. The Effect of Segmentation on Mental Workload

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. The Diagram of Experimental Interfaces

Appendix B. The Tasks of Experiment

- Bar chart with big data size (30 units)
  - (1) Among the daily inventory levels, which products have inventory below 30? Please record the products and their corresponding dates.
  - (2) Please identify the days on which product H002 had the lowest sales volume of all products that day.
  - (3) Please sort the production volumes on 3/11 from highest to lowest.
  - (4) Please write down which products had an inventory level equal to 60, and on which days.
  - (5) Please identify the days on which two products had a daily production volume exceeding 20 (not including 20), and write down those products as well.
  - (6) Which product had the highest inventory on 3/18?
  - (7) Please observe the daily sales volume from 3/5 to 3/20 and identify the dates on which two or more products had a sales volume below 20 (not including 20), and which products they were.
  - (8) Please write down the sales volume of all products on 3/20.

- Bar chart with small data size (12 units)
  - (1) In which months was the monthly inventory of product M009 below 500?
  - (2) In which months were the monthly production volumes of both P001 and A010 below 300?
  - (3) What was the inventory level of each product in December?
  - (4) Which products had monthly sales exceeding 900, and in which months?
  - (5) In which months were actual sales lower than forecasted sales?
  - (6) What was the ranking of product production volume in September? Please list from highest to lowest.
  - (7) Which months had two or more products with sales volume below 500? Write down the months and products.
  - (8) What was the difference between actual sales and forecasted sales in November?

- Line chart with big data size (30 units)
  - (1) In the historical annual sales volume, what were the year-to-year changes from 1995 to 2000 (rising, falling, or unchanged)?
  - (2) Please write down the sales changes for M009 from 3/25 to 3/27 (rising, falling, or unchanged).
  - (3) Please write down which products had declining production volume from 3/26 to 3/27.
  - (4) Please write down the date ranges in which A010's inventory increased continuously for two or more days.
  - (5) Between 3/5 and 3/20, please identify the date ranges in which the production volumes of both P001 and A010 increased.
  - (6) Between which two years did the historical annual sales volume show the largest decline?
  - (7) Please write down the sales volume changes for all products from 3/21 to 3/22 (rising, falling, or unchanged).
  - (8) Which product had the largest inventory decrease from 3/14 to 3/15?

- Line chart with small data size (12 units)
  - (1) In which month ranges did product P001's inventory decrease?
  - (2) In the same-period sales volume, in which month ranges did 2016 sales increase while 2017 sales decreased?
  - (3) Which products had increased sales volume from August to September?
  - (4) What were the changes in the same-period sales volume from July to August for each year?
  - (5) In which month ranges did the monthly sales volumes of both M009 and A010 decline?
  - (6) Which products had decreased inventory from October to November?
  - (7) In which month did each of the four products have its largest increase in sales volume?
  - (8) For the same-period sales volume change from September to October, which year had the larger magnitude of change? Please write down the amount of increase or decrease.

- Table with big data size (30 units)
  - (1) Which orders were shipped on 3/17? Please record the shipping numbers and status.
  - (2) In order management, which orders were placed on 4/22? Write down the order numbers and amounts.
  - (3) Referring to shipping management, which orders currently have insufficient inventory and are still being prepared? Please write down the shipping numbers.
  - (4) In order management, which orders have amounts greater than 5000? Please record the order numbers and corresponding product quantities.

- Table with small data size (12 units)
  - (1) Which products have an inventory quantity below 10? Please write down the product codes.
  - (2) Which products have an inventory cost higher than 1300? Please record the product codes and corresponding inventory quantities.
  - (3) In the current inventory status, which products have an average cost greater than 100? Please write down the product codes.
  - (4) Which forms have not yet been approved? Please write down the form numbers.


| Factor | Chart | Table |
|---|---|---|
| Data Size | 12 units/30 units | 12 units/30 units |
| Segmentation | Dragging/Cutting | Fixed column/Non-fixed column |

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 326.45 | 78.72 | 23.73 |
| Big | Cutting | 11 | 257.91 | 61.14 | 18.43 |
| Small | Dragging | 11 | 209.00 | 42.69 | 12.87 |
| Small | Cutting | 11 | 189.55 | 46.06 | 13.89 |
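Each descriptive table reports the standard error alongside the standard deviation for n = 11. As a minimal consistency check, assuming the usual definition SE = SD / √n, the reported standard errors of the bar-chart completion times can be recomputed from the standard deviations. The names `standard_error`, `N_PER_CELL` and `bar_chart_tct` are illustrative and not part of the study materials.

```python
import math

def standard_error(sd: float, n: int) -> float:
    """Standard error of the mean: sample standard deviation divided by sqrt(n)."""
    return sd / math.sqrt(n)

N_PER_CELL = 11  # n reported for every cell in the table

# (Std. Deviation, reported Std. Error) per cell, copied from the bar-chart table above
bar_chart_tct = {
    ("Big", "Dragging"): (78.72, 23.73),
    ("Big", "Cutting"): (61.14, 18.43),
    ("Small", "Dragging"): (42.69, 12.87),
    ("Small", "Cutting"): (46.06, 13.89),
}

for cell, (sd, reported_se) in bar_chart_tct.items():
    recomputed = standard_error(sd, N_PER_CELL)
    # Small discrepancies (at most 0.01) are expected because the SDs are themselves rounded
    print(cell, f"recomputed={recomputed:.2f}", f"reported={reported_se:.2f}")
```

The same check applies to every other descriptive table in this section, since all cells share n = 11.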

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 195.18 | 37.61 | 11.34 |
| Big | Cutting | 11 | 234.91 | 52.09 | 15.70 |
| Small | Dragging | 11 | 206.91 | 39.39 | 11.88 |
| Small | Cutting | 11 | 175.46 | 27.48 | 8.28 |
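Because the design crosses data size with segmentation, the line-chart cell means can be summarised as marginal means and as simple effects of segmentation within each data size. The sketch below does this arithmetic only; it assumes equal n in every cell (as reported) and says nothing about statistical significance, which requires the raw data and the authors' ANOVA. In these cell means, the Cutting minus Dragging difference is positive for the big data size and negative for the small one. The helper names are hypothetical.

```python
from statistics import mean

# Cell means of line-chart task completion time, copied from the table above (n = 11 per cell)
line_chart_tct = {
    ("Big", "Dragging"): 195.18,
    ("Big", "Cutting"): 234.91,
    ("Small", "Dragging"): 206.91,
    ("Small", "Cutting"): 175.46,
}

def marginal_means(cells: dict[tuple[str, str], float], axis: int) -> dict[str, float]:
    """Unweighted mean over the other factor; axis 0 = data size, axis 1 = segmentation.
    This equals the weighted marginal mean here only because all cells have equal n."""
    levels = sorted({key[axis] for key in cells})
    return {level: mean(v for k, v in cells.items() if k[axis] == level) for level in levels}

def cutting_minus_dragging(cells: dict[tuple[str, str], float], size: str) -> float:
    """Simple effect of segmentation within one data size."""
    return cells[(size, "Cutting")] - cells[(size, "Dragging")]

print("Data size:", marginal_means(line_chart_tct, 0))
print("Segmentation:", marginal_means(line_chart_tct, 1))
for size in ("Big", "Small"):
    print(size, round(cutting_minus_dragging(line_chart_tct, size), 2))
```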

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 236.18 | 41.96 | 12.65 |
| Big | Cutting | 11 | 180.82 | 37.23 | 11.23 |
| Small | Dragging | 11 | 139.91 | 19.30 | 5.82 |
| Small | Cutting | 11 | 115.00 | 19.40 | 5.85 |
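The mean and standard error in each row are enough to form a t-based 95% confidence interval per cell, mean ± t × SE with n − 1 = 10 degrees of freedom. This is a sketch under stated assumptions: each cell is treated as a separate, approximately normal sample, and the critical value 2.228 is the standard two-sided 95% value of Student's t for 10 degrees of freedom. Row labels are kept exactly as printed in the table; `ci95` and `table_tct` are illustrative names.

```python
# Two-sided 95% critical value of Student's t with n - 1 = 10 degrees of freedom
T_CRIT_95_DF10 = 2.228

def ci95(mean: float, se: float, t_crit: float = T_CRIT_95_DF10) -> tuple[float, float]:
    """t-based 95% confidence interval for a cell mean: mean +/- t_crit * SE."""
    half_width = t_crit * se
    return mean - half_width, mean + half_width

# (Mean, Std. Error) per cell, copied from the table above
table_tct = {
    ("Big", "Dragging"): (236.18, 12.65),
    ("Big", "Cutting"): (180.82, 11.23),
    ("Small", "Dragging"): (139.91, 5.82),
    ("Small", "Cutting"): (115.00, 5.85),
}

for cell, (m, se) in table_tct.items():
    low, high = ci95(m, se)
    print(cell, f"[{low:.1f}, {high:.1f}]")
```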

| Subjective Metrics | Cronbach’s Alpha | Number of Items |
|---|---|---|
| Data Size | 0.926 | 10 |
| Segmentation | 0.823 | 6 |
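Cronbach's alpha summarises the internal consistency of a multi-item scale as α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₜ), where k is the number of items, σ²ᵢ the variance of item i and σ²ₜ the variance of the summed score. The sketch below implements that formula for a respondents × items matrix; the demonstration matrix is hypothetical and is not data from this study.

```python
from statistics import variance

def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents x items score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores).
    Sample variances (n - 1 denominator) are used for both terms; the ratio is the
    same with population variances as long as one convention is applied consistently.
    """
    k = len(scores[0])
    if k < 2 or len(scores) < 2:
        raise ValueError("need at least two items and two respondents")
    item_columns = list(zip(*scores))  # transpose: one tuple of scores per item
    sum_item_var = sum(variance(col) for col in item_columns)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum_item_var / total_var)

# Hypothetical 5 respondents x 3 items, for illustration only
demo = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [3, 3, 3],
    [1, 2, 2],
]
print(round(cronbach_alpha(demo), 3))
```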

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 62.27 | 19.15 | 5.77 |
| Big | Cutting | 11 | 78.86 | 10.92 | 3.29 |
| Small | Dragging | 11 | 72.96 | 23.29 | 7.02 |
| Small | Cutting | 11 | 82.27 | 9.38 | 2.83 |

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 70.91 | 13.00 | 3.92 |
| Big | Cutting | 11 | 76.14 | 18.18 | 5.48 |
| Small | Dragging | 11 | 63.41 | 16.33 | 4.92 |
| Small | Cutting | 11 | 82.50 | 12.94 | 3.90 |

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 42.73 | 18.72 | 5.65 |
| Big | Cutting | 11 | 82.27 | 18.22 | 5.49 |
| Small | Dragging | 11 | 50.00 | 17.99 | 5.43 |
| Small | Cutting | 11 | 81.36 | 25.63 | 7.73 |
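The gap between the two segmentation conditions in the table above can be expressed as a standardised effect size computed from the reported means and standard deviations. The sketch below uses Cohen's d for two equal-sized groups, dividing the mean difference by the root mean square of the two SDs. This treats the cells as independent groups, which is an assumption; if the same participants saw both conditions, a paired effect size based on the raw differences would be more appropriate, and that cannot be derived from these summary values. `cohens_d` is an illustrative name.

```python
import math

def cohens_d(mean_a: float, sd_a: float, mean_b: float, sd_b: float) -> float:
    """Cohen's d for two equal-sized independent groups: mean difference over the pooled SD."""
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return (mean_a - mean_b) / pooled_sd

# SUS mean (SD) from the table above, Cutting versus Dragging within each data size
d_big = cohens_d(82.27, 18.22, 42.73, 18.72)
d_small = cohens_d(81.36, 25.63, 50.00, 17.99)
print("Big:", round(d_big, 2), "Small:", round(d_small, 2))
```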

| Rating | Count | Mean | Std. Deviation |
|---|---|---|---|
| Best imaginable | 16 | 94.84 | 7.77 |
| Excellent | 27 | 81.39 | 15.04 |
| Good | 38 | 74.34 | 11.79 |
| OK | 23 | 67.50 | 9.91 |
| Poor | 17 | 47.79 | 17.11 |
| Awful | 9 | 34.44 | 19.83 |
| Worst imaginable | 1 | 17.50 | NA |
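The adjective rating table appears to pair each adjective with the mean SUS score of the responses that received it. One hypothetical way to attach a verbal label to a condition's mean SUS score is to pick the adjective whose mean is closest. This nearest-mean lookup is an illustrative heuristic, not a procedure stated in the study, and it is coarse because the standard deviations of neighbouring adjectives overlap considerably.

```python
# Mean SUS score per adjective rating, copied from the table above
ADJECTIVE_MEANS = {
    "Best imaginable": 94.84,
    "Excellent": 81.39,
    "Good": 74.34,
    "OK": 67.50,
    "Poor": 47.79,
    "Awful": 34.44,
    "Worst imaginable": 17.50,
}

def nearest_adjective(sus: float) -> str:
    """Hypothetical heuristic: return the adjective whose mean SUS score is closest to `sus`."""
    return min(ADJECTIVE_MEANS, key=lambda adjective: abs(ADJECTIVE_MEANS[adjective] - sus))

for score in (42.73, 62.27, 82.27):
    print(score, nearest_adjective(score))
```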

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 4.04 | 1.05 | 0.32 |
| Big | Cutting | 11 | 2.95 | 1.17 | 0.35 |
| Small | Dragging | 11 | 3.30 | 1.12 | 0.34 |
| Small | Cutting | 11 | 2.58 | 1.09 | 0.33 |
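The NASA-TLX tables report one workload score per participant and condition, but the rating scale and the aggregation procedure are not stated here. The sketch below shows the two common ways of combining the six NASA-TLX subscales, assuming ratings are already on a common scale: the raw (unweighted) TLX is the mean of the six ratings, and the weighted TLX multiplies each rating by the number of times that subscale was chosen in the 15 pairwise comparisons and divides by 15. The example ratings and weights are hypothetical.

```python
SUBSCALES = (
    "Mental Demand", "Physical Demand", "Temporal Demand",
    "Performance", "Effort", "Frustration",
)

def raw_tlx(ratings: dict[str, float]) -> float:
    """Raw (unweighted) TLX: the mean of the six subscale ratings."""
    return sum(ratings[s] for s in SUBSCALES) / len(SUBSCALES)

def weighted_tlx(ratings: dict[str, float], weights: dict[str, int]) -> float:
    """Weighted TLX: weights are tallies from the 15 pairwise comparisons."""
    if sum(weights[s] for s in SUBSCALES) != 15:
        raise ValueError("pairwise-comparison weights must sum to 15")
    return sum(ratings[s] * weights[s] for s in SUBSCALES) / 15

# Hypothetical ratings and weights for one participant, for illustration only
ratings = dict(zip(SUBSCALES, (6, 1, 4, 3, 5, 2)))
weights = dict(zip(SUBSCALES, (5, 0, 3, 1, 4, 2)))
print(raw_tlx(ratings), round(weighted_tlx(ratings, weights), 2))
```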

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 3.47 | 1.13 | 0.34 |
| Big | Cutting | 11 | 2.72 | 1.06 | 0.32 |
| Small | Dragging | 11 | 3.85 | 1.24 | 0.37 |
| Small | Cutting | 11 | 2.70 | 0.79 | 0.24 |

| Data Size | Segmentation | n | Mean | Std. Deviation | Std. Error |
|---|---|---|---|---|---|
| Big | Dragging | 11 | 4.72 | 1.23 | 0.37 |
| Big | Cutting | 11 | 2.52 | 1.14 | 0.34 |
| Small | Dragging | 11 | 3.92 | 1.07 | 0.32 |
| Small | Cutting | 11 | 2.22 | 0.97 | 0.29 |

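| Implications |
|---|
| The bar chart with cutting requires less time than the bar chart with dragging. |
| The line chart with cutting requires more time than the line chart with dragging. |
| The table with a fixed column requires less time than the table with a non-fixed column. |
| The bar chart with cutting receives a higher subjective usability rating than the bar chart with dragging. |
| The line chart with cutting receives a higher subjective usability rating than the line chart with dragging. |
| The table with a fixed column receives a higher subjective usability rating than the table with a non-fixed column. |
| The bar chart with cutting imposes a lower subjective mental workload than the bar chart with dragging. |
| The line chart with cutting imposes a lower subjective mental workload than the line chart with dragging. |
| The table with a fixed column imposes a lower subjective mental workload than the table with a non-fixed column. |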

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cheng, C.-F.; Lin, C.J.; Liu, I.-C. Mobile Data Visualisation Interface Design for Industrial Automation and Control: A User-Centred Usability Study. Appl. Sci. 2025, 15, 10832. https://doi.org/10.3390/app151910832

Cheng C-F, Lin CJ, Liu I-C. Mobile Data Visualisation Interface Design for Industrial Automation and Control: A User-Centred Usability Study. Applied Sciences. 2025; 15(19):10832. https://doi.org/10.3390/app151910832

Chicago/Turabian Style: Cheng, Chih-Feng, Chiuhsiang Joe Lin, and I-Chin Liu. 2025. "Mobile Data Visualisation Interface Design for Industrial Automation and Control: A User-Centred Usability Study" Applied Sciences 15, no. 19: 10832. https://doi.org/10.3390/app151910832

APA Style: Cheng, C.-F., Lin, C. J., & Liu, I.-C. (2025). Mobile Data Visualisation Interface Design for Industrial Automation and Control: A User-Centred Usability Study. Applied Sciences, 15(19), 10832. https://doi.org/10.3390/app151910832