1. Introduction

Optical communication systems face significant challenges due to nonlinear effects, particularly Kerr-induced phase noise [1,2], which severely distorts the constellation diagrams of high-order modulation formats such as m-QAM, increasing the difficulty of accurate symbol classification at the receiver [3,4]. While various digital signal processing techniques have been proposed to mitigate these impairments, including deep learning [5,6,7] and machine learning methods [8,9,10], their hardware implementation faces substantial scalability issues in real-time systems.

Spectral clustering is an unsupervised learning technique that transforms data into a new domain using the eigenvectors of a similarity graph constructed from the input data [11]. The transformation into spectral space often reveals cluster boundaries that are not linearly separable in the original domain [12], making it particularly suitable for identifying structures in distorted constellations affected by nonlinear phase noise. Spectral clustering enables dynamic adaptation to changing channel conditions without requiring labeled data or explicit channel models when applied to streaming m-QAM data [13]. However, the main challenge for real-time hardware deployment has been the computational complexity of the eigenvector decomposition stage.

In [14], the authors present a parallel implementation of the Jacobi algorithm for eigenanalysis and compare its performance on CPUs, GPUs, and FPGAs. Their results show that CPUs are not well suited to this type of computation, even with multi-threaded approaches. The analysis concludes that FPGA implementations deliver the highest computational performance for matrix eigenanalysis using the Jacobi algorithm, though GPUs present a competitive alternative with easier development and better scalability.

To the best of our knowledge, Liu et al. [15] demonstrated the first application of spectral clustering for nonlinear phase noise compensation in 16-QAM systems using offline DSP. Building upon this foundation and our previous work [13] on the algorithm’s response to varying nonlinear phase noise and SNRs (5–20 dB) across 16/32/64-QAM constellations, this work presents the first hardware implementation of a spectral clustering-based demodulator for nonlinear phase noise mitigation.

We present a comprehensive System-on-Chip Field-Programmable Gate Array (SoC-FPGA) implementation of a spectral clustering receiver for m-QAM optical signals, encompassing all algorithmic stages, including affinity matrix construction, eigenvector and eigenvalue computation, and cluster assignment. The system supports real-time data stream processing [16] and includes an integrated demapper stage to resolve labeling ambiguities in windowed data. The core architecture is designed to handle the symbol flow in real time, overcoming the main hardware bottleneck by implementing a parallel Jacobi method for eigenvector computation.

The key contributions of this work include the first complete SoC-FPGA hardware implementation of a spectral clustering receiver for m-QAM signals affected by nonlinear phase noise, encompassing the on-chip computation of eigenvectors via a novel parallel Jacobi method with cyclic scheduling that reduces computational complexity. Additionally, the integration of a Greedy K-means++ initialization strategy enhances clustering stability under severe phase noise conditions. The proposed heterogeneous hardware/software co-design partitions tasks between the ARM CPU and the FPGA fabric to optimize resource utilization. While the processing speeds currently do not meet commercial requirements, the experimental results provide critical insights into memory bandwidth limitations as the fundamental bottleneck for real-time spectral clustering architectures on FPGA platforms. This work validates the robustness of spectral clustering compared to traditional K-means clustering in challenging noise environments and lays the groundwork for future architectural optimizations and scalability improvements.

The rest of this paper is organized as follows: Section 2 presents the spectral clustering algorithm, emphasizing the new eigenvector computation stage and its parallelization strategies. Section 3 describes the hardware/software co-design of the spectral clustering receiver. Section 4 discusses experimental results and performance analysis. Section 5 presents the discussion, and Section 6 presents conclusions and future work.

2. Spectral Clustering Algorithm and Parallelization Strategies

The spectral clustering algorithm consists of two main stages implemented through our heterogeneous CPU/FPGA co-design approach. First, an affinity matrix A is constructed using a radial basis function (RBF) kernel and normalized with the diagonal matrix to obtain the normalized Laplacian L. From L, the top k eigenvectors are computed and stacked into matrix X, which is row-normalized to form matrix Y. The second stage involves applying the K-means algorithm to the rows of the Y matrix, which represents the data transformed into spectral space.
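The first stage described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: it assumes the symmetric normalization $L = D^{-1/2} A D^{-1/2}$ of Ng et al. [11], and the function name `spectral_embedding` and the kernel width `sigma` are hypothetical choices made for the example.

```python
import numpy as np

def spectral_embedding(points, k, sigma=1.0):
    """Map points of shape (n, d) to row-normalized spectral coordinates (n, k)."""
    # Pairwise squared Euclidean distances between all points.
    diff = points[:, None, :] - points[None, :, :]
    sq_dist = np.sum(diff ** 2, axis=-1)

    # RBF affinity matrix A with a zero diagonal (no self-similarity).
    A = np.exp(-sq_dist / (2.0 * sigma ** 2))
    np.fill_diagonal(A, 0.0)

    # Assumed normalization: L = D^{-1/2} A D^{-1/2}, D = diag(row sums of A).
    d_inv_sqrt = 1.0 / np.sqrt(A.sum(axis=1))
    L = A * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

    # eigh returns eigenvalues in ascending order; keep the k largest.
    _, vecs = np.linalg.eigh(L)
    X = vecs[:, -k:]

    # Row-normalize X to unit length to obtain Y.
    return X / np.linalg.norm(X, axis=1, keepdims=True)
```

The rows of the returned matrix are the feature vectors that the second stage passes to K-means. The sketch assumes every point has at least one neighbor with non-negligible affinity, so that no row sum of A is zero.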

In the particular case of QAM constellation analysis, the initialization of the centroids in K-means influences the quality of the final clustering [17,18]. The effect of centroid initialization on clustering performance was systematically evaluated using QAM constellation data affected by nonlinear phase noise, revealing that more sophisticated initialization methods help the algorithm find better groupings more reliably, leading to improved performance in challenging signal conditions.

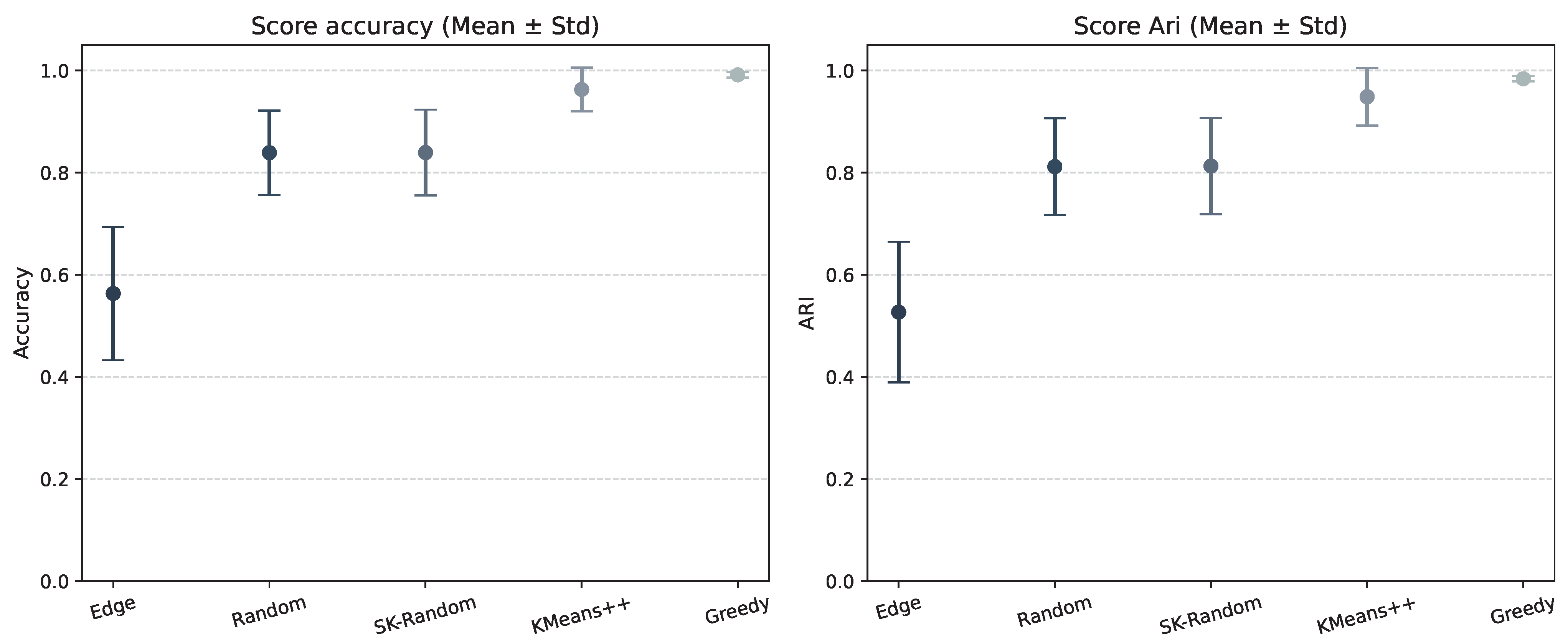

Figure 1 illustrates a comparison of the performance of different initialization methods applied to QAM constellation data. The Greedy K-means++ approach consistently achieves higher mean accuracy and lower variance compared to random, K-means++, and other strategies, indicating more robust and reliable clustering performance under severe phase noise conditions. Centroid initialization has a significant impact on the final clustering outcome, particularly in scenarios with substantial nonlinear distortions, which validates the adoption of Greedy K-means++ in hardware implementations, as it enhances the stability and quality of the spectral clustering receiver.

2.1. Greedy K-Means++ Initialization

Greedy K-means++ utilizes a proportional likelihood selection mechanism to choose new centroids, resulting in a better initial distribution that accelerates the algorithm’s convergence. Unlike the standard K-means++ algorithm (presented in Algorithm 1), which selects a single point as the next centroid at random based on probability, Greedy K-means++ evaluates multiple candidates and performs multiple trials at each sampling step, choosing the optimal centroid among the evaluated options. Greedy K-means++ is presented in Algorithm 2.

Algorithm 1. Standard K-means++ initialization

- 1: Input: A set of data points $X = \{x_1, \dots, x_n\}$, number of clusters $k$.
- 2: Output: A set of $k$ centroids $C = \{c_1, \dots, c_k\}$.
- ▹ Step 1: Choose the first centroid uniformly at random
- 3: Choose the first centroid $c_1$ from $X$ uniformly at random.
- 4: $C \leftarrow \{c_1\}$.
- ▹ Step 2: Choose remaining centroids
- 5: for $j = 2$ to $k$ do
- 6: Let $D$ be an array of size $n$.
- 7: for each point $x_i \in X$ do ▹ Find the squared distance to the nearest centroid in $C$
- 8: $D[i] \leftarrow \min_{c \in C} \lVert x_i - c \rVert^2$.
- 9: end for
- ▹ Choose the next centroid with weighted probability
- 10: Choose the next centroid $c_j$ from $X$ with a probability proportional to $D[i]$.
- 11: i.e., pick $x_i$ with probability $D[i] / \sum_{l=1}^{n} D[l]$.
- 12: $C \leftarrow C \cup \{c_j\}$.
- 13: end for
- 14: return $C$.
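As a rough illustration of the standard K-means++ initialization, the sketch below draws each new centroid with probability proportional to its squared distance from the nearest centroid chosen so far. The function name `kmeans_pp_init` and the use of a NumPy random generator are assumptions made for this example; it also assumes the data contain at least `k` distinct points.

```python
import numpy as np

def kmeans_pp_init(X, k, rng):
    """Return k initial centroids chosen from the rows of X by D^2 sampling."""
    n = X.shape[0]
    # Step 1: first centroid uniformly at random.
    centroids = [X[rng.integers(n)]]
    # D[i]: squared distance from x_i to its nearest chosen centroid.
    D = np.sum((X - centroids[0]) ** 2, axis=1)
    for _ in range(1, k):
        # Step 2: sample the next centroid with probability D[i] / sum(D).
        idx = rng.choice(n, p=D / D.sum())
        centroids.append(X[idx])
        # Refresh the nearest-centroid distances with the new centroid.
        D = np.minimum(D, np.sum((X - X[idx]) ** 2, axis=1))
    return np.array(centroids)
```

A call such as `kmeans_pp_init(Y, 16, np.random.default_rng(0))` would seed 16 centroids for a 16-QAM window, where `Y` stands for the matrix of spectral feature vectors.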

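Algorithm 2. Greedy K-means++ initialization

- 1: Input: A set of data points $X = \{x_1, \dots, x_n\}$, number of clusters $k$.
- 2: Output: A set of $k$ centroids $C = \{c_1, \dots, c_k\}$.
- ▹ Step 1: Initialize the first centroid
- 3: Choose the first centroid $c_1$ uniformly at random from $X$.
- 4: $C \leftarrow \{c_1\}$.
- ▹ Step 2: Calculate initial squared distances
- 5: Let $D$ be an array of size $n$.
- 6: for each point $x_i \in X$ do
- 7: $D[i] \leftarrow \lVert x_i - c_1 \rVert^2$.
- 8: end for
- ▹ Step 3: Iteratively select remaining centroids
- 9: for $j = 2$ to $k$ do
- 10: $\mathit{best\_cost} \leftarrow \infty$.
- 11: $\mathit{best\_candidate} \leftarrow \text{null}$.
- 12: for each potential centroid $x_p \in X$ do
- 13: $\mathit{cost} \leftarrow 0$.
- 14: for each point $x_i \in X$ do
- 15: $d_{\text{new}} \leftarrow \lVert x_i - x_p \rVert^2$.
- 16: $d_{\min} \leftarrow \min(D[i], d_{\text{new}})$.
- 17: $\mathit{cost} \leftarrow \mathit{cost} + d_{\min}$.
- 18: end for
- 19: if $\mathit{cost} < \mathit{best\_cost}$ then
- 20: $\mathit{best\_cost} \leftarrow \mathit{cost}$.
- 21: $\mathit{best\_candidate} \leftarrow x_p$.
- 22: end if
- 23: end for
- 24: $C \leftarrow C \cup \{\mathit{best\_candidate}\}$. ▹ Update distances to the nearest centroid
- 25: for each point $x_i \in X$ do
- 26: $D[i] \leftarrow \min(D[i], \lVert x_i - \mathit{best\_candidate} \rVert^2)$.
- 27: end for
- 28: end for
- 29: return $C$.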

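Greedy K-means++ uses the following initialization procedure:

- 1. Randomly select the first centroid.
- 2. For each subsequent centroid, perform the following:
  - (a) Instead of choosing a single point with probability proportional to $D[i]$ (the squared distance from $x_i$ to its nearest chosen centroid), several random candidates are generated: $S = \{s_1, \dots, s_\ell\}$, each drawn with probability $D[i] / \sum_{l=1}^{n} D[l]$.
  - (b) For each candidate $s \in S$, compute the expected cost: $\phi(s) = \sum_{i=1}^{n} \min\left(D[i], \lVert x_i - s \rVert^2\right)$.
  - (c) Select the candidate that minimizes the cost: $c_j = \arg\min_{s \in S} \phi(s)$.
- 3. Repeat the process until $k$ centroids are obtained.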
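The procedure above can be sketched as follows. This is an illustrative NumPy version under stated assumptions, not the authors' code: the function name `greedy_kmeans_pp_init`, the parameter `n_trials` (the number of candidates per step), and its default value `2 + int(log(k))` are choices made for this example and do not come from the paper.

```python
import numpy as np

def greedy_kmeans_pp_init(X, k, rng, n_trials=None):
    """Greedy K-means++: sample several candidates per step, keep the cheapest."""
    n = X.shape[0]
    if n_trials is None:
        n_trials = 2 + int(np.log(k))  # assumed heuristic, not from the paper
    centroids = [X[rng.integers(n)]]
    # D[i]: squared distance from x_i to its nearest chosen centroid.
    D = np.sum((X - centroids[0]) ** 2, axis=1)
    for _ in range(1, k):
        # (a) Draw several candidate indices by D^2 sampling.
        cand = rng.choice(n, size=n_trials, p=D / D.sum())
        # Squared distances from every candidate to every point: (n_trials, n).
        d_cand = np.sum((X[None, :, :] - X[cand][:, None, :]) ** 2, axis=2)
        # (b) Cost of each candidate: sum_i min(D[i], ||x_i - s||^2).
        new_D = np.minimum(D[None, :], d_cand)
        costs = new_D.sum(axis=1)
        # (c) Keep the candidate with the lowest cost.
        best = int(np.argmin(costs))
        centroids.append(X[cand[best]])
        D = new_D[best]
    return np.array(centroids)
```

Evaluating all candidates in one vectorized step mirrors the idea of scoring several trial centroids at once, which is also the part that lends itself to parallel evaluation.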

2.2. Parallel Jacobi Method: Computation of Eigenvectors and Eigenvalues

The eigenvector computation uses the Jacobi algorithm, which iteratively zeros the largest off-diagonal elements of a symmetric matrix via plane rotations. The classical Jacobi method requires $O(n^2)$ comparisons per iteration to locate the largest off-diagonal element, making it computationally expensive for large matrices. To address this computational challenge, we implement the Cyclic-by-Row strategy, which traverses fixed pairs of rows and columns in each sweep, avoiding the search for the largest off-diagonal element and enabling more predictable hardware implementation.
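A sequential software sketch of the cyclic-by-row Jacobi method may help fix ideas. It is a floating-point NumPy illustration under stated assumptions, not the hardware design: the function name `jacobi_eigh_cyclic`, the sweep limit, and the stopping tolerance are hypothetical, and the rotation angle is computed directly with `arctan` rather than read from a lookup table.

```python
import numpy as np

def jacobi_eigh_cyclic(A, max_sweeps=50, tol=1e-12):
    """Eigen-decomposition of a symmetric matrix by cyclic-by-row Jacobi sweeps.

    Returns (eigenvalues, eigenvectors), with eigenvectors stored as columns.
    """
    A = np.array(A, dtype=float)  # work on a copy
    n = A.shape[0]
    V = np.eye(n)
    for _ in range(max_sweeps):
        # Stop once the off-diagonal part is negligible.
        if np.linalg.norm(A - np.diag(np.diag(A))) < tol:
            break
        # Visit every pair (p, q), p < q, in a fixed row-by-row order.
        for p in range(n - 1):
            for q in range(p + 1, n):
                if A[p, q] == 0.0:
                    continue
                # Small angle that zeroes A[p, q]:
                # tan(2*theta) = 2*A[p, q] / (A[q, q] - A[p, p]).
                diff = A[q, q] - A[p, p]
                if diff == 0.0:
                    theta = 0.25 * np.pi * np.sign(A[p, q])
                else:
                    theta = 0.5 * np.arctan(2.0 * A[p, q] / diff)
                c, s = np.cos(theta), np.sin(theta)
                R = np.array([[c, s], [-s, c]])
                # A <- J^T A J only touches rows and columns p and q.
                A[:, [p, q]] = A[:, [p, q]] @ R
                A[[p, q], :] = R.T @ A[[p, q], :]
                V[:, [p, q]] = V[:, [p, q]] @ R
    return np.diag(A).copy(), V
```

Because the visiting order is fixed in advance, no search over the matrix is needed between rotations, which is the property the cyclic strategy exploits.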

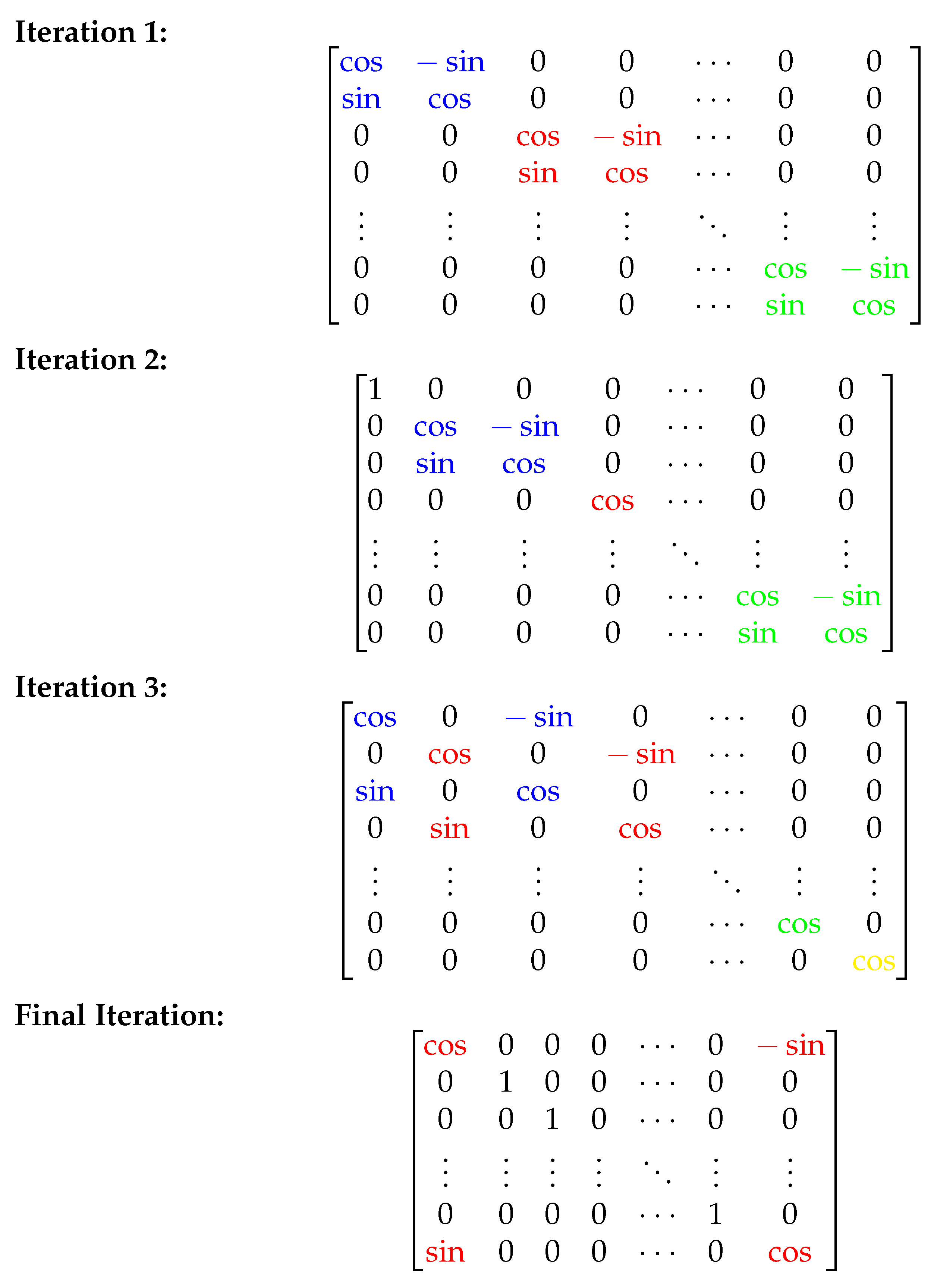

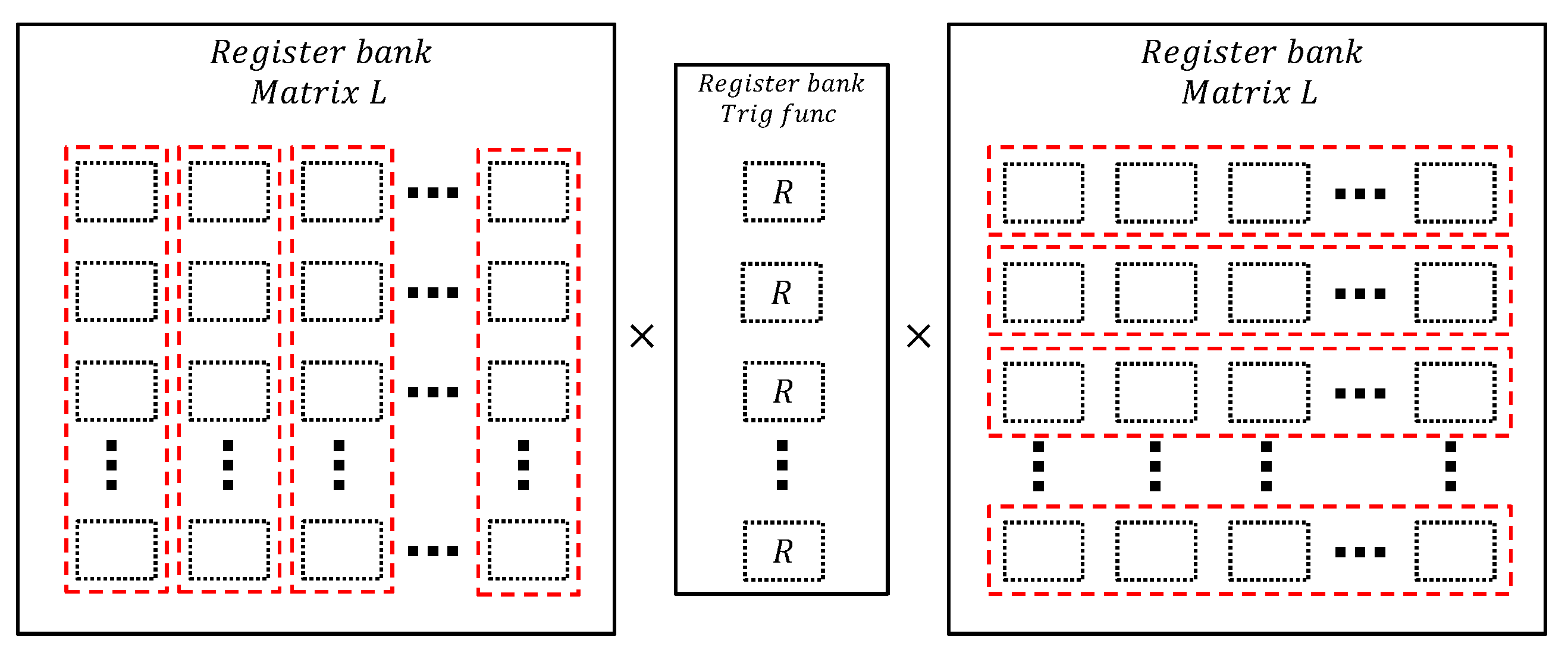

This approach enables parallelization, since each Jacobi rotation only affects two rows and columns $(p, q)$, allowing multiple non-overlapping rotations to be performed simultaneously. The block-diagonal structure illustrated in Figure 2 demonstrates how multiple Jacobi rotations can be executed in parallel across different iterations, significantly accelerating convergence and making the algorithm suitable for FPGA hardware implementation. The parallel execution strategy reduces the overall computational latency while maintaining the numerical stability characteristics essential for accurate eigenvalue decomposition in optical communication systems.
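One way to obtain sets of non-overlapping rotations is a round-robin (tournament) ordering, sketched below. The text does not specify which schedule the hardware uses, so this ordering is an assumption chosen for illustration, and the function name `round_robin_pairs` is hypothetical. For an even matrix dimension n it produces n - 1 rounds of n/2 disjoint index pairs, and all rotations in a round can be applied concurrently.

```python
def round_robin_pairs(n):
    """Return n - 1 rounds, each a list of n // 2 disjoint index pairs (n even)."""
    if n % 2:
        raise ValueError("n must be even")
    idx = list(range(n))
    rounds = []
    for _ in range(n - 1):
        # Pair the i-th entry from the front with the i-th entry from the back.
        rounds.append(
            [tuple(sorted((idx[i], idx[n - 1 - i]))) for i in range(n // 2)]
        )
        # Keep idx[0] fixed and rotate the remaining entries by one place.
        idx = [idx[0]] + [idx[-1]] + idx[1:-1]
    return rounds
```

For n = 4 this yields the rounds [(0, 3), (1, 2)], [(0, 2), (1, 3)], and [(0, 1), (2, 3)], which together cover every off-diagonal pair exactly once per sweep.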

3. Hardware/Software Co-Design of the Spectral Clustering Receiver

A hardware/software co-design approach for spectral clustering on a System-on-Chip FPGA enables designers to flexibly partition tasks between the CPU and FPGA, allowing each processing unit to handle operations best suited to its architectural strengths [19]. This collaborative development approach streamlines the design process, supports parallel development, and enables direct iteration and refinement of partitioning decisions on the reconfigurable platform [20]. The ability to rapidly reprogram the FPGA and profile system performance on chip provides valuable feedback, reduces development time, and increases system flexibility, making co-design especially advantageous for complex embedded applications in optical communications.

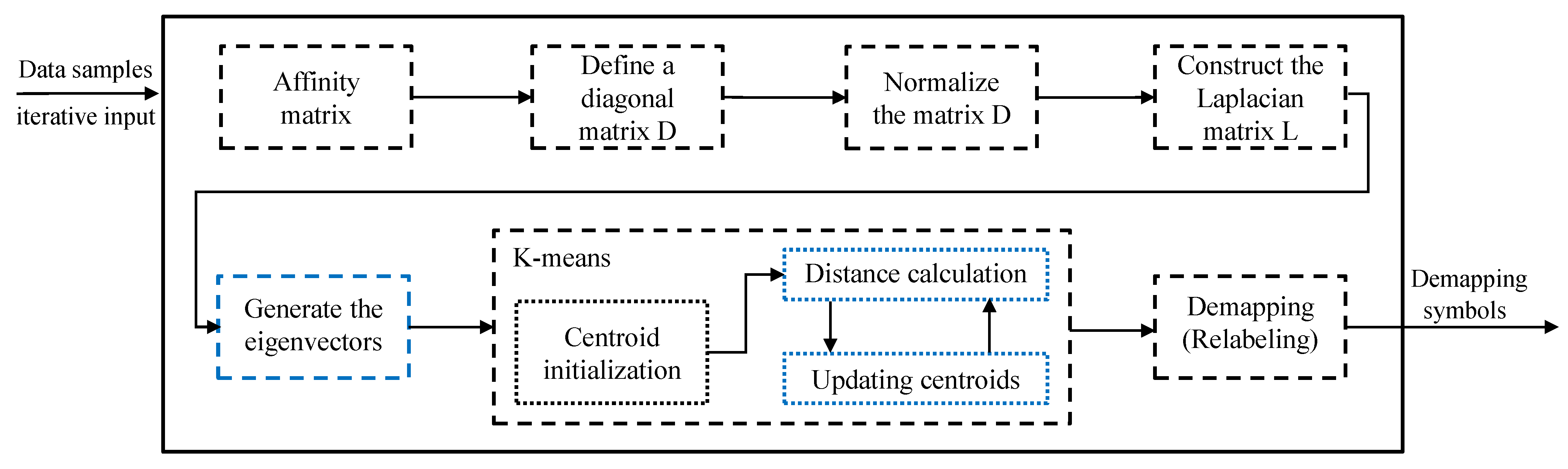

In our implementation, the spectral clustering pipeline is partitioned between the ARM CPU and the FPGA to make the best use of their respective strengths. As shown in Figure 3, the ARM Cortex-A53 CPU is responsible for managing data samples, controlling iterative input, performing affinity matrix construction, defining and normalizing the diagonal matrix, constructing the Laplacian matrix, initializing centroids, and handling the final demapping and relabeling steps. The FPGA, in turn, is dedicated to accelerating the most computationally demanding stages, specifically generating the eigenvectors, calculating distances, and updating centroids during the K-means clustering process. This division of tasks allows for efficient resource utilization and demonstrates the potential for further scaling and optimization in real-time signal processing applications for next-generation optical systems [21].

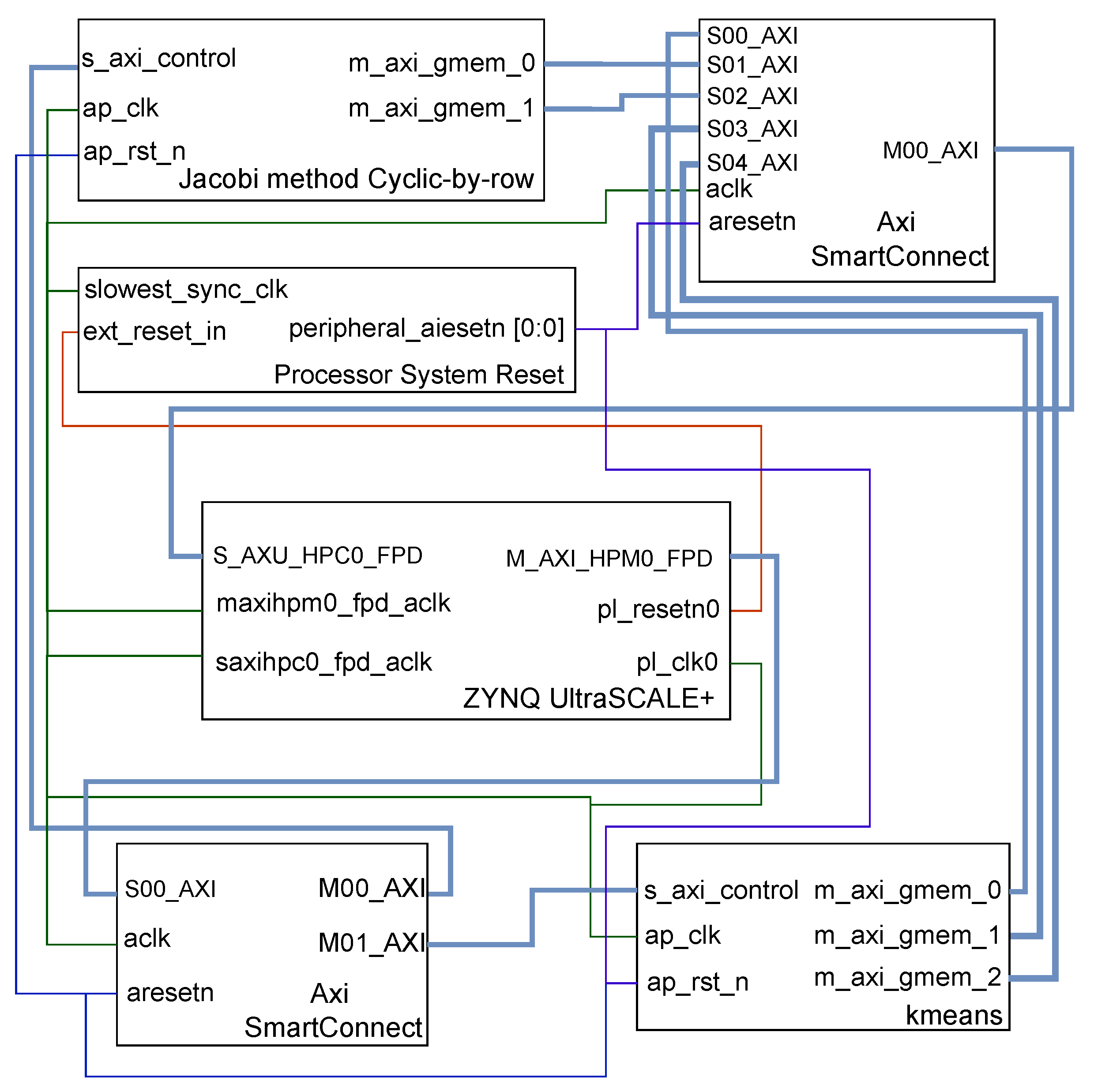

Figure 4 presents a detailed block diagram of the hardware/software co-design architecture implemented on a Zynq UltraScale+ FPGA platform. It shows the interconnection of key components via AXI interfaces and the flow of control and data signals across the system. At the center is the Zynq UltraScale+ MPSoC, integrating a processor system (PS) featuring an ARM Cortex-A53 and programmable logic (PL). The diagram highlights the AXI SmartConnect interconnects that facilitate high-bandwidth communication between the processor and programmable logic blocks. Key functional blocks include the hardware implementations of the parallel Jacobi method and the K-means clustering module. These are connected to the processor system through multiple master and slave AXI interfaces (denoted S00AXI, M00AXI, etc.), which support streaming data transfer and control signaling. The figure also shows memory controllers (m_axi_gmem0, m_axi_gmem1, and m_axi_gmem2) connected to dedicated memory banks accessed by computational units. Additionally, peripheral control signals, reset modules, clock domains, and AXI interconnect layers are depicted to illustrate system synchronization and resource management. Overall, the figure portrays a modular and scalable architecture where computationally heavy tasks are offloaded to FPGA logic, while the ARM processor handles coordination, initialization, and relabeling. The comprehensive AXI interconnect structure enables efficient communication and data streaming necessary for real-time spectral clustering.

The architecture operates in data streaming mode, processing incoming data samples without buffering the entire dataset, which is crucial for high-throughput optical communication systems where minimizing latency and resource utilization is essential. The design incorporates a dedicated demapping block that handles cluster labeling to ensure compatibility with m-QAM constellations, correcting inconsistencies arising from windowed processing in the streaming mode.
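The labeling ambiguity arises because clustering returns arbitrary cluster ids that can change from one window to the next. The text does not detail how the demapping block resolves this, so the sketch below shows only one simple possibility under stated assumptions: each cluster is mapped to the ideal constellation point closest to its mean. The function name `relabel_window` and the representation of symbols as complex numbers are hypothetical.

```python
import numpy as np

def relabel_window(symbols, labels, constellation):
    """Map arbitrary cluster ids to constellation indices for one window.

    symbols: complex array of received symbols in the window.
    labels: integer cluster id per symbol, as returned by the clustering stage.
    constellation: complex array of ideal constellation points.
    """
    mapped = np.empty_like(labels)
    for cid in np.unique(labels):
        mask = labels == cid
        # Mean of the cluster in the I/Q plane.
        center = symbols[mask].mean()
        # Assumed rule: take the index of the nearest ideal point.
        mapped[mask] = np.argmin(np.abs(constellation - center))
    return mapped
```

This nearest-point rule can assign two clusters to the same constellation point when the phase rotation is large, so a practical demapper would likely need an additional one-to-one matching step.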

This section details the implementation’s modular structure, the internal data flow between components, and the strategies employed to optimize the design for FPGA resources.

3.1. Custom Processing Units

In previous work [22], the authors defined custom processing units (CuPUs) to implement complex functions or high-level digital signal processing (DSP) routines, going beyond the simple, clock-independent operations typically associated with processing elements (PEs) in hardware design. Unlike basic arithmetic operations, CuPUs represent complete routines that would require a finite state machine (FSM) and datapath in RTL. The term “custom” emphasizes that these units incorporate specific optimization directives specified by the authors. By executing independent operations, CuPUs enable parallel processing in hardware, supporting improved algorithm performance.

3.2. Parallel Jacobi Method CuPU

The parallel Jacobi rotations are mapped onto FPGA logic blocks, enabling simultaneous updates of matrix elements through the cyclic scheduling approach that ensures all off-diagonal elements are addressed efficiently. This implementation strategy, combined with pipelined arithmetic units for trigonometric operations, enables the practical computation of eigenvectors for moderate-size Laplacian matrices in real-time optical processing scenarios.

In the initial stage of the Jacobi method implementation, a dedicated register bank stores pre-computed values of the sine, cosine, and arctangent functions, each tabulated over a fixed argument range. With these pre-stored trigonometric values, matrix multiplications are performed only in positions where values directly affect the Laplacian matrix L, reducing computational overhead and memory access requirements. To enable parallel execution, a complete register bank partition contains the matrix L, and multiple non-overlapping rotation matrices R are defined, allowing simultaneous application of multiple rotations, as demonstrated in Figure 2. The general scheme of the implementation of the Jacobi method on an FPGA is shown in Figure 5.

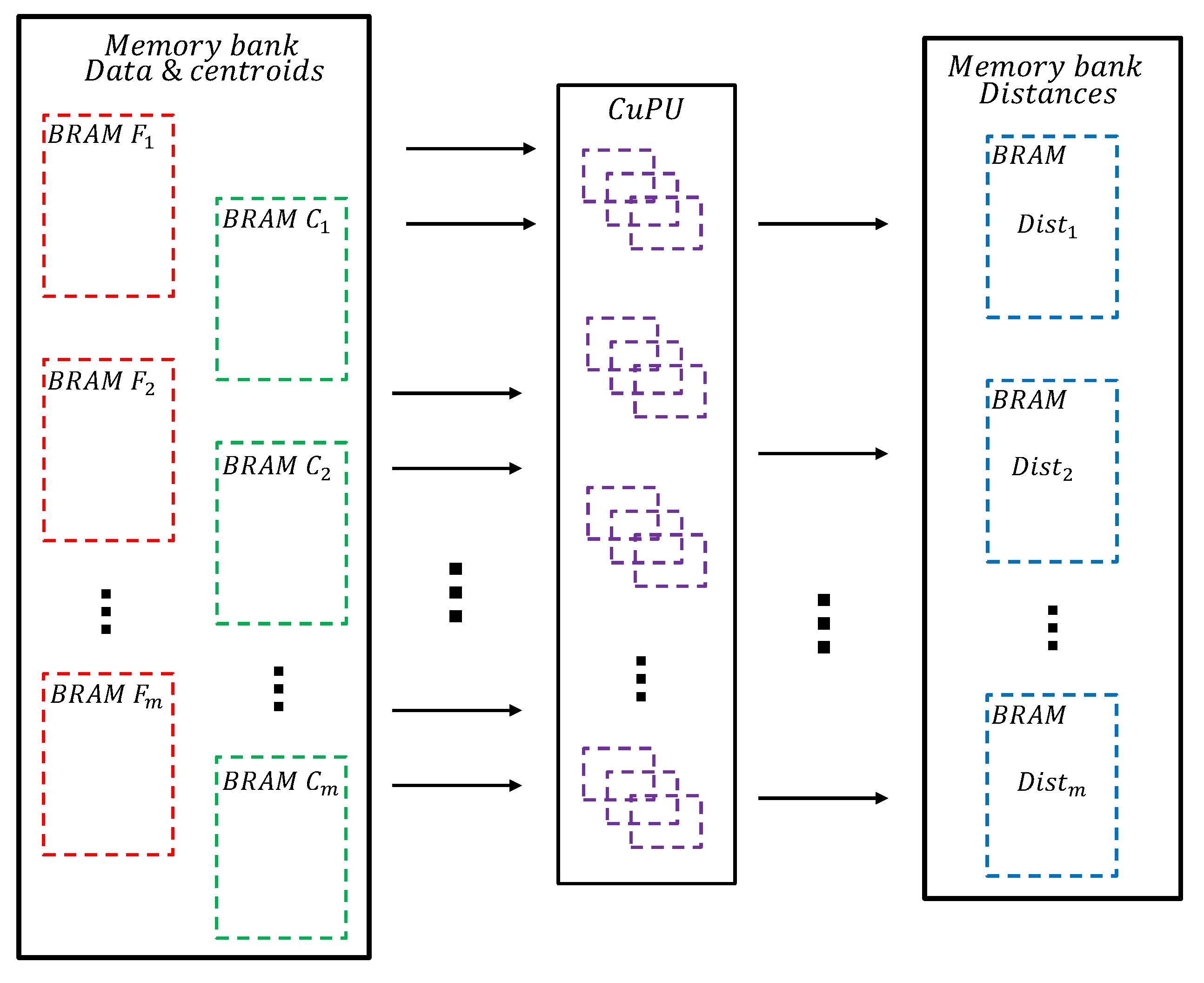

3.3. Euclidean Distance CuPU

After initializing centroid positions on the CPU, the system proceeds to calculate Euclidean distances between each feature vector and the centroids, representing a fundamental step in the clustering process that enables the assignment of each data point to its nearest centroid.

The Euclidean distance between a point $x = (x_1, \dots, x_m)$ and a centroid $c = (c_1, \dots, c_m)$, each with $m$ features, is implemented using the standard formula:

$$d(x, c) = \sqrt{\sum_{j=1}^{m} (x_j - c_j)^2}$$

The Euclidean distance calculation CuPU loads data from dedicated memory banks: each data bank stores specific features of the data, and each centroid bank stores the corresponding features of the centroids. For example, in a 16-QAM constellation represented in eigenvector space where K-means is applied for data grouping, the implementation uses a series of data memory blocks, each containing 300 data points, while the centroid blocks each contain 16 values representing centroid characteristics.

The CuPU performs parallel calculation of Euclidean distances between data points and centroids, storing results in the dedicated distance memory bank, as illustrated in Figure 6.
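In software terms, the distance stage amounts to filling a points-by-centroids matrix. The NumPy sketch below is a functional model only, with a hypothetical function name; it says nothing about how the CuPU schedules memory accesses.

```python
import numpy as np

def euclidean_distances(points, centroids):
    """Return an (n_points, n_centroids) matrix of Euclidean distances."""
    # Broadcast to shape (n_points, n_centroids, n_features).
    diff = points[:, None, :] - centroids[None, :, :]
    return np.sqrt(np.sum(diff ** 2, axis=2))
```

With the sizes quoted in the example above (300 points, 16 features, 16 centroids), the result is a 300 × 16 matrix in which each entry can be computed independently of the others.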

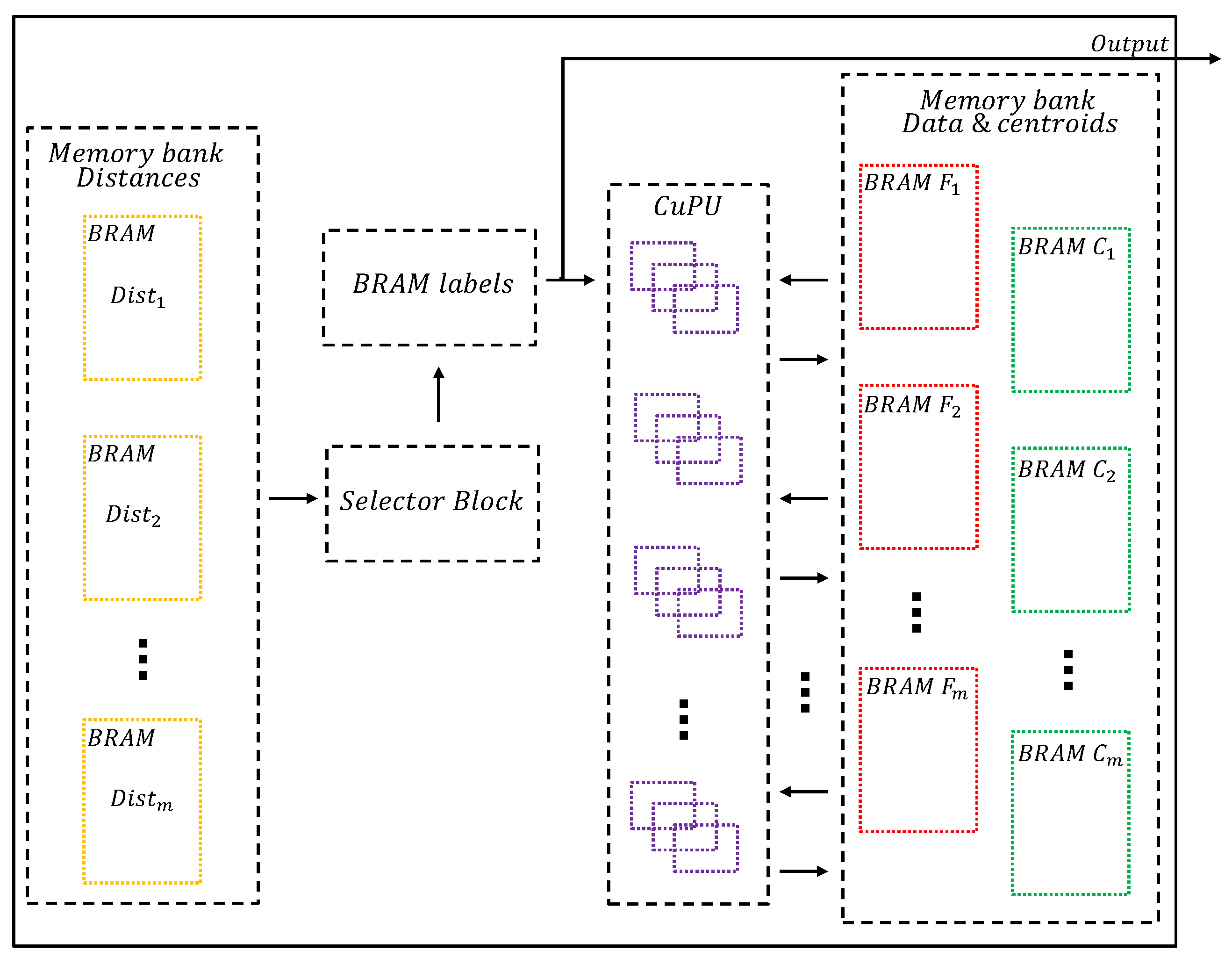

3.4. Centroid Update CuPU

Once labels are assigned based on minimum distance calculations to each centroid, the system enters the centroid update stage, where a selector block accesses corresponding memory addresses in each distance block and compares values to identify the minimum distance. The minimum value is assigned a label indicating which memory block contains the lowest distance, enabling proper cluster assignment. For example, during the first access cycle, the selector block examines the first memory address of each distance block and, if the minimum value resides in the block associated with label 4, assigns label 4 and stores it in the labels BRAM.

The value corresponding to that position represents the characteristics of the first data point in the data blocks and is utilized by the CuPU to calculate means and update centroids accordingly. In the given example, this value updates the centroid associated with label 4, with each entry in the labels BRAM being updated during each iteration as centroids are refined. After the iterative process, these labels represent the final data grouping, as demonstrated in the centroid update CuPU architecture shown in Figure 7.
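A functional model of one labeling and centroid update pass is sketched below. It is a NumPy illustration with a hypothetical function name, and the handling of empty clusters (keeping the previous centroid) is an assumption rather than something stated in the text.

```python
import numpy as np

def assign_and_update(points, centroids):
    """One K-means pass: label each point, then recompute each centroid."""
    dist = np.linalg.norm(points[:, None, :] - centroids[None, :, :], axis=2)
    # Label of a point = index of the centroid at minimum distance.
    labels = np.argmin(dist, axis=1)
    new_centroids = centroids.astype(float)  # astype returns a copy
    for j in range(centroids.shape[0]):
        members = points[labels == j]
        if len(members):  # assumed policy: keep the old centroid if empty
            new_centroids[j] = members.mean(axis=0)
    return labels, new_centroids
```

Repeating this pass until the labels stop changing gives the final grouping.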

4. Experimental Results

The hardware implementation was synthesized using Xilinx Vivado HLS 2023.1 (Advanced Micro Devices—AMD, Inc., Santa Clara, CA, USA) and targeted the MPSoC ZCU104 Evaluation Kit development board (Advanced Micro Devices—AMD, Inc., Santa Clara, CA, USA), which integrates a Zynq UltraScale+ MPSoC featuring both programmable logic and ARM Cortex-A53 cores operating at a 100 MHz clock frequency. The ZCU104 platform, featuring the XCZU7EV device with 504K system logic cells and 11 Mb of total block RAM (BRAM), represents a mid-range FPGA suitable for proof-of-concept development but inherently constrained for high-throughput applications compared to larger platforms.

The experimental evaluation reveals significant insights into the computational and resource requirements of implementing spectral clustering on reconfigurable hardware platforms. The parallel Jacobi implementation required 705,419,050 cycles (7.05 s at 100 MHz) to process a window of 255 symbols, yielding an effective throughput of approximately 36 symbols per second for 16-QAM modulation. Each symbol consists of two 32-bit floating-point values representing the in-phase and quadrature components, corresponding to 16,320 bits per 255-symbol window (roughly 2.3 kbit/s at this rate), see Table 1. While this performance is insufficient for commercial transceivers operating at multi-gigabit rates, it establishes a critical baseline for FPGA-based spectral clustering implementations and exposes fundamental system bottlenecks that limit scalability. To further illustrate the hardware acceleration effect, we compared the execution time of the complete spectral clustering algorithm processing a window of 255 symbols on both a non-parallel CPU (ARM-only) implementation and the heterogeneous ARM+FPGA implementation with parallel Jacobi eigenvector computation. Each timing measurement corresponds to 10 repetitions, accounting for system variability due to the operating system running on the ARM processor. The CPU implementation achieved a mean runtime of 81.89 s with a standard deviation of 0.38 s, while the FPGA-accelerated implementation completed the task in 6.35 s with a standard deviation of 0.01 s, yielding an approximate 12.9× speedup. This significant reduction in processing time demonstrates the effectiveness of FPGA-based parallelization and hardware/software co-design in accelerating the computationally intensive spectral clustering algorithm for real-time optical communication applications.
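The headline figures in this paragraph follow from simple arithmetic on the reported measurements, reproduced here as a short check. The variable names are illustrative; all input values are taken from the text.

```python
cycles = 705_419_050        # reported cycle count for one window
clock_hz = 100e6            # 100 MHz clock
window = 255                # symbols per window
bits_per_symbol = 2 * 32    # in-phase and quadrature, 32-bit floats

latency_s = cycles / clock_hz                 # about 7.05 s per window
symbols_per_s = window / latency_s            # about 36 symbols per second
bits_per_window = window * bits_per_symbol    # 16,320 bits per window
bits_per_s = symbols_per_s * bits_per_symbol  # about 2.3 kbit/s
speedup = 81.89 / 6.35                        # ARM-only vs. ARM+FPGA runtime

print(f"latency: {latency_s:.2f} s, throughput: {symbols_per_s:.1f} symbols/s")
print(f"bits per window: {bits_per_window}, bit rate: {bits_per_s:.0f} bit/s")
print(f"speedup: {speedup:.1f}x")
```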

Table 2 summarizes the comparison of computation times for spectral clustering and K-means.

The resource utilization analysis presented in Table 1 demonstrates that the implementation consumed 140 of 312 available BRAM blocks (44.8% utilization) on the ZCU104 platform, a substantial share of the on-chip memory resources. The DSP slice utilization reached 170 out of 1728 available (9.8%), indicating that computational units were not the primary limiting factor in the system design. This resource distribution pattern highlights the memory-intensive nature of spectral clustering algorithms, where the construction and storage of the affinity matrix dominate system requirements rather than arithmetic computation capabilities. The K-means module was used in spectral clustering, processing 16 features, 16 centroids, and 255 data points after eigenvector computation. Additionally, the K-means algorithm is applied directly to the data as a baseline; in that case, there are only two features (in-phase and quadrature), see Table 3.

Table 4 presents the execution times for spectral clustering modules implemented on the ARM processor within the heterogeneous architecture, with the matrix L construction requiring 18,821.8 μs, representing a significant portion of the total processing time.

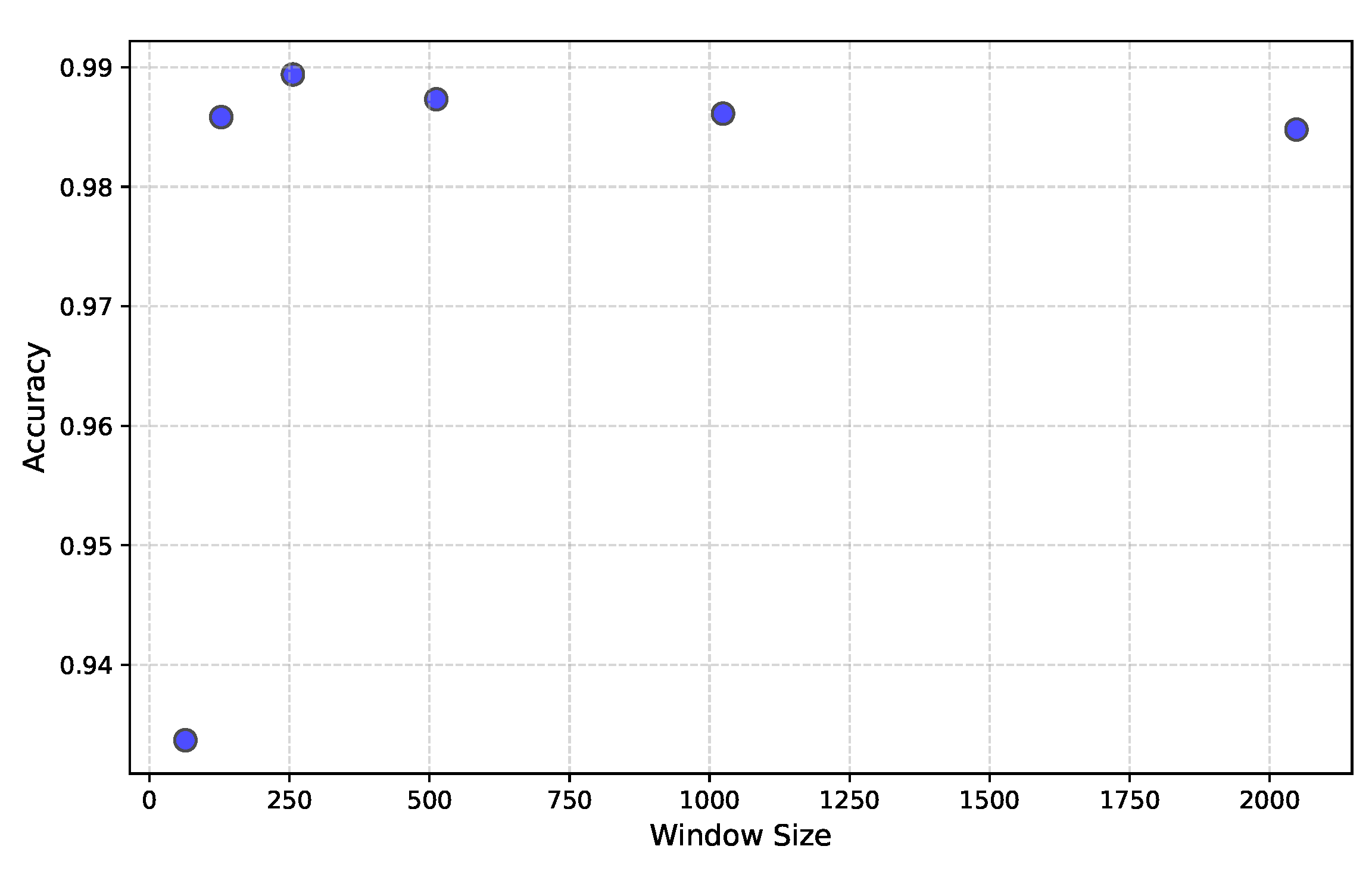

The relationship between window size and clustering accuracy, demonstrated in Figure 8, shows that performance stabilizes for window sizes of approximately 250 symbols or larger, validating the implementation’s design choice of 255 symbols as an optimal trade-off between accuracy and resource utilization given the BRAM constraints.

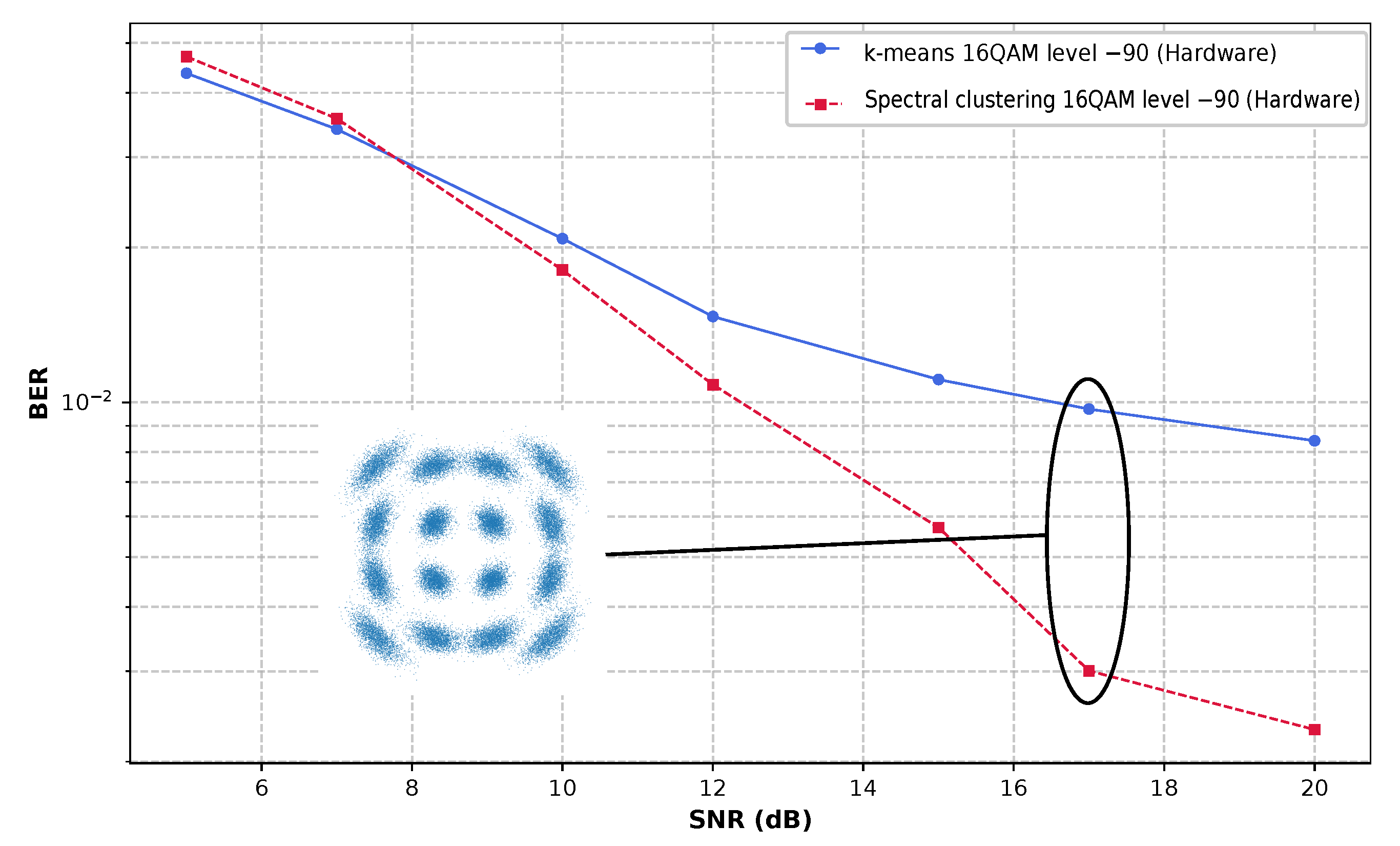

Under severe phase noise conditions (−90 dBc/Hz at a 1 MHz offset), as shown in Figure 9, the spectral clustering algorithm demonstrated superior bit error rate performance compared to K-means for 16-QAM signals, with the performance advantages becoming more pronounced as constellation complexity increased.

A comparative summary of the implemented methods is presented in Table 5. Spectral clustering, as proposed in this work, achieves higher accuracy and demonstrates robustness against nonlinear phase noise, albeit at the cost of increased latency and resource usage due to the complexity of eigenvector decomposition and affinity matrix construction. In contrast, the FPGA-only K-means implementation offers lower latency and reduced resource consumption; however, its accuracy degrades significantly under severe noise conditions, thereby limiting its applicability in nonlinear optical channels. This comparison highlights the trade-off between computational complexity and noise robustness, underscoring the advantages of spectral clustering for advanced optical receiver architectures despite current hardware limitations.

5. Discussion

Our FPGA implementation reveals intrinsic constraints for spectral clustering on reconfigurable hardware platforms, where parallel processing capabilities must be carefully balanced against memory bandwidth limitations imposed by the target device architecture. The ZCU104 platform’s resource constraints, while suitable for algorithm validation and proof-of-concept development, expose fundamental challenges of implementing computationally intensive clustering algorithms in resource-constrained embedded environments.

The 7.05 s processing latency per window primarily stems from memory access patterns rather than computational complexity, as evidenced by the low DSP utilization (9.8%) compared to the considerably higher BRAM utilization (44.8%). This pattern aligns with recent research on FPGA-based matrix computations, which identifies memory bandwidth as the primary bottleneck rather than arithmetic capability in modern reconfigurable computing systems. The parallel Jacobi implementation, while reducing eigenanalysis complexity through spatial parallelization, still faces convergence challenges that scale with matrix dimensions and require an average of 22 iterations for acceptable numerical accuracy.

While recent implementations of machine learning-based nonlinearity compensation have achieved higher throughputs using GPU acceleration or specialized hardware for simpler clustering algorithms, our work demonstrates the feasibility of spectral clustering using heterogeneous CPU/FPGA co-design. Our implementation partitions the algorithm between ARM processors and the FPGA fabric, with the FPGA handling computationally intensive eigenanalysis through parallel Jacobi rotations while the CPU manages data flow and high-level control. This co-design strategy enables efficient resource utilization and provides a practical framework for implementing complex clustering algorithms in embedded optical systems.

The power efficiency characteristics of FPGA implementations, although not explicitly quantified in this work, typically demonstrate advantages over GPU-based alternatives for streaming DSP applications, particularly when algorithms can be spatially parallelized, as demonstrated in our Jacobi rotation scheme. This efficiency advantage becomes more significant in scenarios where sustained processing is required rather than burst computation, making FPGAs attractive for continuous optical signal processing applications.

The identified bottlenecks in memory bandwidth and eigenvalue computation convergence directly inform optimization priorities for future implementations targeting higher-throughput optical systems. Mixed-precision arithmetic could reduce resource overhead while maintaining clustering accuracy, particularly for eigenvalue computation stages where full precision may not be required throughout the entire algorithmic pipeline. The transition to larger FPGA platforms, such as the Virtex UltraScale+ family with integrated high-bandwidth memory, could alleviate memory constraints while providing significantly higher computational resources for more demanding applications.

6. Conclusions and Future Work

This work demonstrates the first complete SoC-FPGA hardware implementation of spectral clustering for optical communication receivers, including on-chip eigenvector and eigenvalue computation, using a parallel Jacobi method integrated within a heterogeneous CPU/FPGA co-design framework. Although the achieved processing speeds of approximately 36 symbols per second do not meet the requirements for commercial optical transceivers, our implementation validates the feasibility and exposes the practical challenges of deploying advanced clustering algorithms in real hardware systems.

The experimental results establish BRAM bandwidth as the fundamental limiter for windowed clustering architectures on FPGA platforms, with 44.8% memory utilization representing a critical constraint that affects both scalability and performance. Our implementation provides comprehensive insights into the memory–compute trade-offs inherent in spectral clustering algorithms, where the construction and storage of the affinity matrix dominate resource requirements rather than arithmetic operations. The superior performance of spectral clustering over K-means under severe phase noise conditions (−90 dBc/Hz at a 1 MHz offset) validates its potential for next-generation optical receivers despite current implementation constraints.

This work provides a valuable reference for future research and optimization in hardware-accelerated optical signal processing, highlighting the essential role of FPGAs as a bridge between algorithm development and the deployment of high-speed optical systems. The comprehensive characterization of performance bottlenecks and resource utilization patterns establishes a foundation for future optimization efforts, guiding the development of next-generation hardware architectures for intelligent optical receivers.

Future work should focus on architectural optimizations, including the use of mixed-precision arithmetic to reduce resource overhead, the exploration of advanced memory architectures to alleviate bandwidth constraints, and the investigation of hybrid algorithmic approaches that combine the strengths of spectral clustering with complementary techniques. The migration to application-specific integrated circuits (ASICs) or larger FPGA platforms with enhanced memory subsystems represents a promising pathway to overcome current throughput limitations while maintaining the algorithmic advantages demonstrated in this work. Additionally, integrating spectral clustering with emerging machine learning techniques could leverage complementary strengths for adaptive nonlinear compensation in next-generation optical communication systems.

Author Contributions

Conceptualization, D.M.-V., M.S.-S. and A.E.C.-O.; methodology, D.M.-V., M.S.-S., A.E.C.-O. and N.G.-G.; software, D.M.-V. and M.S.-S.; validation, D.M.-V., M.S.-S., A.E.C.-O., N.G.-G. and M.B.M.; formal analysis, D.M.-V., M.S.-S. and A.E.C.-O.; investigation, D.M.-V., M.S.-S. and A.E.C.-O.; resources, N.G.-G. and M.B.M.; data curation, D.M.-V. and M.S.-S.; writing—original draft preparation, D.M.-V. and M.S.-S.; writing—review and editing, D.M.-V., M.S.-S., A.E.C.-O., N.G.-G. and M.B.M.; visualization, D.M.-V. and M.S.-S.; supervision, N.G.-G. and M.B.M.; project administration, D.M.-V., N.G.-G. and M.B.M.; funding acquisition, N.G.-G. and M.B.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Office of Naval Research Global (ONRG) and the Air Force Office of Scientific Research/Southern Office of Aerospace Research and Development (AFOSR/SOARD) grant number N62909-24-1-2088, with financial support by the European Regional Development Fund within the Operational Program “Bulgarian national recovery and resilience plan” and the procedure for direct provision of grants “Establishing of a network of research higher education institutions in Bulgaria”, under the Project BG-RRP-2.004-0005 “Improving the research capacity and quality to achieve international recognition and resilience of TU-Sofia”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| SNR | Signal-to-Noise Ratio |
| BER | Bit Error Rate |
| QAM | Quadrature Amplitude Modulation |
| FPGA | Field-Programmable Gate Array |
| SoC | System on Chip |
| ARI | Adjusted Rand Index |
| CPU | Central Processing Unit |
| CuPU | Custom Processing Unit |
| BRAM | Block Random Access Memory |
| DSP | Digital Signal Processing |
| FF | Flip-Flops |
| LUT | Look-Up Table |

References

1. Soman, S.K.O. A tutorial on fiber Kerr nonlinearity effect and its compensation in optical communication systems. J. Opt. 2021, 23, 123502.

2. Aldaya, I.; Giacoumidis, E.; de Oliveira, G.; Wei, J.; Pita, J.L.; Marconi, J.D.; Fagotto, E.A.M.; Barry, L.; Abbade, M.L.F. Histogram Based Clustering for Nonlinear Compensation in Long Reach Coherent Passive Optical Networks. Appl. Sci. 2020, 10, 152.

3. Ali, F.; Shakeel, M.; Ali, A.; Shah, W.; Qamar, M.S.; Ahmad, S.; Ali, U.; Waqas, M. Probing of nonlinear impairments in long range optical transmission systems. J. Opt. Commun. 2023, 44, s1225–s1231.

4. Jin, C.; Shevchenko, N.A.; Wang, J.; Chen, Y.; Xu, T. Wideband Multichannel Nyquist-Spaced Long-Haul Optical Transmission Influenced by Enhanced Equalization Phase Noise. Sensors 2023, 23, 1493.

5. Li, Y.; Chang, H.; Gao, R.; Zhang, Q.; Tian, F.; Yao, H.; Tian, Q.; Wang, Y.; Xin, X.; Wang, F.; et al. End-to-End Deep Learning of Joint Geometric Probabilistic Shaping Using a Channel-Sensitive Autoencoder. Electronics 2023, 12, 4234.

6. Pryamikov, A. Deep learning as a highly efficient tool for digital signal processing design. Light. Sci. Appl. 2024, 13, 248.

7. Wang, D.; Zhang, M. Artificial Intelligence in Optical Communications: From Machine Learning to Deep Learning. Front. Commun. Netw. 2021, 2, 656786.

8. Pan, X.; Wang, X.; Tian, B.; Wang, C.; Zhang, H.; Guizani, M. Machine-Learning-Aided Optical Fiber Communication System. IEEE Netw. 2021, 35, 136–142.

9. Melek, M.M.; Yevick, D. Machine learning compensation of fiber nonlinear noise. Opt. Quantum Electron. 2022, 54, 685.

10. Jain, V.; Bhatia, R. A survey on machine learning schemes for fiber nonlinearity mitigation in radio over fiber system. J. Opt. Commun. 2023, 45, s1157–s1163.

11. Ng, A.Y.; Jordan, M.I.; Weiss, Y. On spectral clustering: Analysis and an algorithm. In Proceedings of the 14th International Conference on Neural Information Processing Systems: Natural and Synthetic, Cambridge, MA, USA, 3–8 December 2001; NIPS’01. pp. 849–856.

12. Sadjadi, F.; Torra, V.; Jamshidi, M. Preprocessed spectral clustering with higher connectivity for robustness in real-world applications. Int. J. Comput. Intell. Syst. 2024, 17, 86.

13. Solarte-Sanchez, M.; Marquez-Viloria, D.; Castro-Ospina, A.E.; Reyes-Vera, E.; Guerrero-Gonzalez, N.; Botero-Valencia, J. m-QAM Receiver Based on Data Stream Spectral Clustering for Optical Channels Dominated by Nonlinear Phase Noise. Algorithms 2024, 17, 553.

14. Torun, M.U.; Yilmaz, O.; Akansu, A.N. FPGA, GPU, and CPU implementations of Jacobi algorithm for eigenanalysis. J. Parallel Distrib. Comput. 2016, 96, 172–180.

15. Liu, X.; Wang, Y.; Xu, H. Nonlinearity Compensation Technique by Spectral Clustering for Coherent Optical Communication System. In Proceedings of the Asia Communications and Photonics Conference/International Conference on Information Photonics and Optical Communications 2020 (ACP/IPOC), Optica Publishing Group, Beijing, China, 24–27 October 2020; p. M4A.291.

16. Bahri, M. Improving IoT Data Stream Analytics Using Summarization Techniques. Ph.D. Thesis, Institut Polytechnique de Paris, Palaiseau, France, 2020.

17. Arthur, D.; Vassilvitskii, S. k-means++: The advantages of careful seeding. In Proceedings of the Eighteenth Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007; SODA’07. pp. 1027–1035.

18. Grunau, C.; Özüdoğru, A.A.; Rozhoň, V.; Tětek, J. A Nearly Tight Analysis of Greedy k-means++. In Proceedings of the 2023 Annual ACM-SIAM Symposium on Discrete Algorithms (SODA), Florence, Italy, 22–25 January 2023; pp. 1012–1070.

19. Cirstea, M.; Benkrid, K.; Dinu, A.; Ghiriti, R.; Petreus, D. Digital Electronic System-on-Chip Design: Methodologies, Tools, Evolution, and Trends. Micromachines 2024, 15, 247.

20. Dewald, F.; Rohde, J.; Hochberger, C.; Mantel, H. Improving Loop Parallelization by a Combination of Static and Dynamic Analyses in HLS. ACM Trans. Reconfigurable Technol. Syst. 2022, 15, 1–31.

21. Jeon, H.B.; Kim, S.M.; Moon, H.J.; Kwon, D.H.; Lee, J.W.; Chung, J.M.; Han, S.K.; Chae, C.B.; Alouini, M.S. Free-Space Optical Communications for 6G Wireless Networks: Challenges, Opportunities, and Prototype Validation. IEEE Commun. Mag. 2023, 61, 116–121.

22. Marquez-Viloria, D.; Castano-Londono, L.; Guerrero-Gonzalez, N. A Modified KNN Algorithm for High-Performance Computing on FPGA of Real-Time m-QAM Demodulators. Electronics 2021, 10, 627.

Figure 1.

Bar chart comparing clustering accuracy and ARI (mean ± standard deviation) for different centroid initialization methods (random, SK-Random, KMeans++, and Greedy), showing that Greedy consistently achieves the highest and most stable performance.

Figure 2.

Illustrative example of parallel Jacobi rotations in different iterations applied on different row/column pairs.

Figure 3.

Task assignment in heterogeneous CPU/FPGA architectures for spectral clustering. CPU-implemented blocks in black and FPGA-implemented blocks in blue.

Figure 4.

Hardware/software co-design architecture of the spectral clustering receiver.

Figure 5.

Implementation of the Jacobi method on an FPGA.

Figure 6.

Hardware block for Euclidean distance calculation using pipelined arithmetic units and memory blocks.

Figure 7.

Centroid update hardware block. The new centroids are calculated by accumulating the points assigned to each cluster and dividing by their count.
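
The update described in this caption can be sketched in software as follows. This is a plain Python illustration of the accumulate-and-divide step, not the hardware design: the function name, the list-based data layout, and the choice to keep the previous centroid for an empty cluster are assumptions made for the example.

```python
def update_centroids(points, labels, centroids):
    """Return new centroids: per-cluster sum of assigned points divided by the count."""
    k = len(centroids)
    dim = len(centroids[0])
    sums = [[0.0] * dim for _ in range(k)]  # per-cluster accumulators
    counts = [0] * k                        # per-cluster point counters

    # Accumulate every point into the cluster it is assigned to.
    for point, label in zip(points, labels):
        counts[label] += 1
        for d in range(dim):
            sums[label][d] += point[d]

    new_centroids = []
    for c in range(k):
        if counts[c] == 0:
            # Assumption: an empty cluster keeps its previous centroid.
            new_centroids.append(list(centroids[c]))
        else:
            new_centroids.append([s / counts[c] for s in sums[c]])
    return new_centroids


# Hypothetical toy data: two points in cluster 0, one in cluster 1, none in cluster 2.
points = [(1.0, 1.0), (3.0, 1.0), (-1.0, -1.0)]
labels = [0, 0, 1]
old_centroids = [(0.0, 0.0), (0.0, 0.0), (5.0, 5.0)]
new_centroids = update_centroids(points, labels, old_centroids)
print(new_centroids)
```

Keeping separate accumulators and counters per cluster mirrors the structure named in the caption; how the division and the empty-cluster case are handled in the actual hardware block is not stated here.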

Figure 8.

Effect of window size on accuracy for streaming data. The accuracy remains stable for window sizes of approximately 150 data points or larger.

Figure 9.

SNR vs. BER performance of spectral clustering and the K-means algorithm for 16-QAM, with nonlinear phase noise of −90 dBc/Hz at a 1 MHz offset.

Table 1.

Latency and resource usage for a window size of 255, the largest window size that can be processed on the ZCU104 board without exceeding the available BRAM resources. The values were obtained from the design synthesis in Vivado.

| Spectral Clustering | Latency (Cycles) | BRAM | DSP | FF | LUT |
|---|---|---|---|---|---|
| Parallel Jacobi method | 705,419,050 | 122.5 | 58 | 11,083 | 13,178 |
| K-means for spectral clustering | 99,074 | 17.5 | 112 | 17,901 | 13,225 |
| AXI Connect and others | Negligible | 0 | 0 | 696 | 850 |
| TOTAL | 705,518,124 | 140 | 170 | 38,364 | 31,092 |

Table 2.

Comparison of execution times and speedup between the non-parallel CPU (ARM-only) implementation and the heterogeneous ARM + FPGA implementation of the spectral clustering algorithm processing a window of 255 symbols. The reported timings correspond to mean values over 10 repetitions, with the associated standard deviation representing measurement variability.

| Architecture | Execution Time (s) | Speedup |
|---|---|---|
| ARM | 81.890330 ± 0.378422 | 1 |
| ARM + FPGA | 6.354489 ± 0.013957 | 13 |
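
The speedup in Table 2 is the ratio of the two mean execution times. A minimal Python sketch of that arithmetic follows, using the values from the table. The function and variable names are illustrative and not taken from the authors' code, and the first-order uncertainty propagation is an added assumption that treats the two timings as independent.

```python
import math


def speedup(t_base, t_accel):
    """Ratio of the baseline mean time to the accelerated mean time."""
    return t_base / t_accel


def speedup_std(t_base, s_base, t_accel, s_accel):
    """First-order propagated standard deviation of the ratio.

    Assumption: the two timing measurements are independent.
    """
    ratio = t_base / t_accel
    return ratio * math.sqrt((s_base / t_base) ** 2 + (s_accel / t_accel) ** 2)


# Mean and standard deviation in seconds, copied from Table 2.
ARM_MEAN, ARM_STD = 81.890330, 0.378422
HET_MEAN, HET_STD = 6.354489, 0.013957

ratio = speedup(ARM_MEAN, HET_MEAN)
ratio_std = speedup_std(ARM_MEAN, ARM_STD, HET_MEAN, HET_STD)
print(f"speedup = {ratio:.2f} +/- {ratio_std:.2f}")
```

The unrounded ratio is about 12.89, which the table reports as 13.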

Table 3.

Resources and latency summary for K-means module.

| Module | Latency (Cycles) | BRAM | DSP | FF | LUT |
|---|---|---|---|---|---|
| K-means | 96,077 | 9.3 | 14 | 4608 | 9216 |

Table 4.

Execution times of the spectral clustering modules that run on the ARM processor within the heterogeneous architecture on the ZCU104 board.

| Process | Average Execution Time (μs) |
|---|---|
| Start to matrix L | 18,821.8 |
| Matrix X to centroid initialization | 6204.5 |
| Relabeling | 188.2 |

Table 5.

Comparison of spectral clustering and K-means implementations.

| Method | Accuracy | Latency | Resource Usage | Notes |
|---|---|---|---|---|
| Spectral clustering (this work) | High | High | High | Robust to nonlinear phase noise |
| K-means | Medium | Low | Low | Not robust under nonlinear phase noise |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).