1. Introduction

In the last decade, computational mechanics has entered into an intensive dialog with machine learning and neural networks. The aim of this synergy is not to replace well-established numerical tools but to complement them: to introduce physical knowledge into data models, accelerate costly computations, create surrogate models, and solve inverse problems under limited measurement information [1,2]. Recent reviews emphasize that combining physics-based and data-driven methods sets new standards of accuracy and computational efficiency, while also structuring engineering practices in the areas of material, flow, and structural modeling [3].

At the center of this shift are physics-informed approaches. Physics-informed neural networks (PINNs) embed the governing equations, together with the initial and boundary conditions, directly in the loss function, enabling the solution of forward and inverse problems without full measurement fields [4,5]. In parallel, operator learning is developing, from the Fourier Neural Operator (FNO) to DeepONet networks, which learn mappings between function spaces and create fast PDE surrogates, independent of mesh resolution [2,5]. For complex geometries and nonlinear couplings, graph neural networks are gaining importance. They represent fields and conservation laws directly on mesh elements or point clouds, which opens the way to multifold acceleration of fluid and solid mechanics simulations.

The integration of computational and data-driven methods brings particularly large benefits in constitutive modeling, multiscale modeling, uncertainty quantification, and inverse analysis. Data at the microstructural level can be compressed into deep material networks, which learn effective constitutive laws and transfer them to structural analyses at the macroscale [6]. In parallel, data-driven mechanics abandons explicit material laws in favor of direct work on datasets consistent with conservation principles, becoming a real alternative to classical FEM in material and structural tasks [3]. Advances in constitutive learning include enforcing polyconvexity and other thermodynamic conditions in neural models, which improves the stability and physical consistency of hyperelasticity descriptions [7]. At the same time, Bayesian variants of PINNs, including B-PINNs, enable reliable uncertainty estimation and robust identification of material parameters from noisy data, while cross-cutting UQ frameworks for scientific machine learning organize the quality metrics, inference strategies, and comparative procedures needed in engineering practice [8,9].

Although physics-informed neural networks (PINNs) and operator learning methods such as the Fourier Neural Operator (FNO) and DeepONet have demonstrated strong capabilities in solving complex mechanics problems, several limitations have also been reported. These include sensitivity to the relative weighting of loss function terms, strong dependence on hyperparameter tuning, and challenges in representing complex geometries or three-dimensional contact conditions. Recent studies suggest possible remedies, such as dynamic loss balancing, variational enforcement of boundary conditions, or domain decomposition strategies. While these techniques mitigate some of the difficulties, they also show that the practical deployment of PINN and operator-learning approaches still requires careful design and transparent reporting of model assumptions.
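To make the role of loss weighting concrete, a generic composite PINN loss can be written as below. This is only an illustrative sketch in assumed notation, not a formula taken from the reviewed works: $u_\theta$ is the network approximation, $\mathcal{N}$ the PDE residual operator, $\mathcal{B}$ the boundary operator with prescribed data $g$, $u_0$ the initial condition, $u^{\mathrm{obs}}$ optional measurements, and $\lambda_r, \lambda_b, \lambda_0, \lambda_d$ the relative weights whose choice the text identifies as a source of sensitivity.

```latex
\mathcal{L}(\theta)
  = \lambda_r \,\frac{1}{N_r}\sum_{i=1}^{N_r}\bigl|\mathcal{N}[u_\theta](x_i,t_i)\bigr|^2
  + \lambda_b \,\frac{1}{N_b}\sum_{j=1}^{N_b}\bigl|\mathcal{B}[u_\theta](x_j,t_j)-g(x_j,t_j)\bigr|^2
  + \lambda_0 \,\frac{1}{N_0}\sum_{k=1}^{N_0}\bigl|u_\theta(x_k,0)-u_0(x_k)\bigr|^2
  + \lambda_d \,\frac{1}{N_d}\sum_{m=1}^{N_d}\bigl|u_\theta(x_m,t_m)-u^{\mathrm{obs}}_m\bigr|^2
```

In this notation, dynamic loss balancing amounts to updating the weights $\lambda$ during training instead of fixing them in advance.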

Surrogates and reduced-order models play an important role, replacing costly computations with fast approximations without losing key physical features. In fluid dynamics, convolutional networks and autoencoders have been shown to reconstruct missing information and improve the resolution of turbulent fields, as well as to create nonlinear reduced models for chaotic flows. Operator approaches such as FNO and DeepONet transfer this idea to the level of learning mappings between functions, combining generalizability with very high computational speedups [2,5]. The common denominator is the ability to combine physical knowledge, data, and uncertainty estimation in a single workflow, which supports applications in design, diagnostics, and control [10].

Data-driven design is developing rapidly, including topology optimization, where generative models such as GANs and diffusion models shorten design time by quickly approximating material distributions and physical fields and by facilitating multi-criteria trade-offs that were previously unattainable within reasonable computation time. From an application perspective, similar techniques are penetrating automotive engineering, for example, in lane detection and event risk assessment, which further motivates the development of methods combining mechanics, computer vision, and uncertainty quantification [10].

Recent advances in data-driven computational mechanics have also highlighted the importance of targeted data enrichment. For example, adaptive sampling strategies have been proposed to systematically expand material databases in regions of high prediction error, thereby improving the robustness of surrogate models in both forward and inverse tasks [11]. Randomized solvers and adaptive, error-driven data augmentation now allow data-driven frameworks to escape local minima and deliver more stable convergence in large-scale applications. These developments emphasize that the reliability of data-driven approaches depends not only on model architecture but also on the design and coverage of the underlying datasets [12].

In parallel, multiscale modeling has increasingly benefited from hybrid strategies coupling reduced-order bases with deep neural surrogates. Recent studies demonstrate that combining POD compression with transformer-based networks or convolutional encoders enables accurate stress–strain predictions at the macroscale while significantly reducing the computational burden of generating representative volume element (RVE) responses [13,14]. This offline–online separation, where the cost of database generation is balanced by notable speedups in macroscale simulations, illustrates how multiscale approaches are moving closer to real-time engineering design environments. At the same time, physics-informed neural networks (PINNs) and operator learning methods such as Fourier Neural Operators (FNOs) and DeepONets are gaining prominence [15].

This article organizes these achievements and focuses on publications from 2015 to 2024. The compilation was prepared from a search in the Scopus database, followed by manual content qualification, and visualizations of concept co-occurrence were produced in VOSviewer. The categories of analysis and the assumptions for data selection correspond to the thematic scope of the Special Issue of Applied Sciences, which concerns intelligent systems and tools for optimal design in mechanical engineering, and to the keywords defined in the article project description, which constitutes our source file, together with query details, sample size, and classification rules.

This review has been prepared to organize the dynamically growing but still fragmented body of research at the intersection of computational mechanics and data-driven methods. In contrast to previous studies focusing on narrow topics, such as PINNs alone, constitutive models, or surrogate methods, this article presents a coherent and multidimensional taxonomy of the entire field. It is based on five axes of analysis, namely categories of computational mechanics tasks, families of ML/NN methods, document types, research geography, and methodological approaches. The foundation is a clearly defined and reproducible query in the Scopus database, covering the TITLE–ABS–KEY fields, with precise domain and language constraints. The distinctive contribution of this work is the combination of thematic synthesis with quantitative analysis, including the use of statistical significance tests (χ²). This made it possible to separate lasting trends from random fluctuations and to indicate where an actual change in research profile occurred (for example, a shift in emphasis toward deterministic methods and material modeling), and where the field structure remained stable.

After the Introduction, Section 2 presents the methodological framework, including the study design, the query applied in the Scopus database, qualification criteria and the publication selection process, the adopted classification scheme, as well as the principles of data extraction and the bibliometric tools used.

Section 3, entitled State of the Art, is divided into four parts. The first part (Section 3.1) discusses computational mechanics and modeling methods, presenting six main task categories and their application in solving differential equations, constitutive modeling, inverse analysis, and surrogate model construction. The second part (Section 3.2) concerns machine learning and neural network methods, characterizing three basic families of approaches and their role as tools for approximating physical phenomena, uncertainty quantification, and inverse design. The third part (Section 3.3) focuses on future directions of development, pointing out, among others, the importance of differentiable solvers, models with physical guarantees, as well as the standardization of validation and uncertainty quantification procedures. The fourth part (Section 3.4) summarizes the findings so far.

Section 4 presents a statistical picture of the research field, showing quantitative results and significance tests regarding the distributions of thematic categories, document types, and publication geography.

Section 5 contains the discussion, which combines qualitative and quantitative conclusions and addresses the limitations and practical implications of the results. The article concludes with Section 6, which presents final conclusions and formulates synthetic recommendations regarding further research and implementation opportunities. This structure guides the reader from the discussion of methods and data, through quantitative and statistical analyses, to practical conclusions.

To reflect the ongoing acceleration of research, the corpus and discussion now include 2025 contributions, particularly on multiscale FE2/UMAT–NN couplings, data-driven computational mechanics, and PINN/operator learning (e.g., recent Comput. Methods Appl. Mech. Eng. articles). This update strengthens both the state-of-the-art synthesis and the forward-looking recommendations.

2. Materials and Methods

Section 2 organizes the methodological framework of the review and guides the reader from the overall research concept to the details of data acquisition, selection, and processing. First, the study design and data source are presented, together with the rationale for choosing Scopus as the sole controlled repository, as well as the specification of temporal, linguistic, and disciplinary scope. Next, the search strategy is outlined, including the full query applied to the title, abstract, and keyword fields, along with imposed restrictions and filters. Subsequent sections describe the inclusion and exclusion criteria and the two-stage selection procedure with reference to the PRISMA scheme, ensuring transparency and replicability of the process. A consistent classification scheme was also introduced, covering computational mechanics categories and ML and NN method classes, and additionally the authors’ affiliation countries, document types, and methodological approaches. The chapter further explains the principles of field extraction from records, the set of comparative measures used, and the bibliometric tools, including term density maps and co-occurrence networks prepared in VOSviewer, with appropriate figure captions and reference to the tool’s source publication. These methodological frames comply with the requirements of the Special Issue of Applied Sciences devoted to intelligent systems and tools for optimal design in mechanical engineering.

In Section 2, a complete and reproducible methodological framework was built, ranging from query design to classification and visualization, which made it possible to form a coherent corpus of publications and prepare the ground for substantive and quantitative analyses in the subsequent parts of the study. The sources and search parameters were defined, clear inclusion and exclusion criteria established, selection documented in the PRISMA scheme, a five-dimensional classification framework introduced, the set of extraction fields and comparative measures determined, and bibliometric tools described together with their limitations. Thanks to this chapter, the subsequent narrative is based on a transparent process and comparable data, which strengthens the credibility of the conclusions of the entire article.

2.1. Research Design and Data Sources

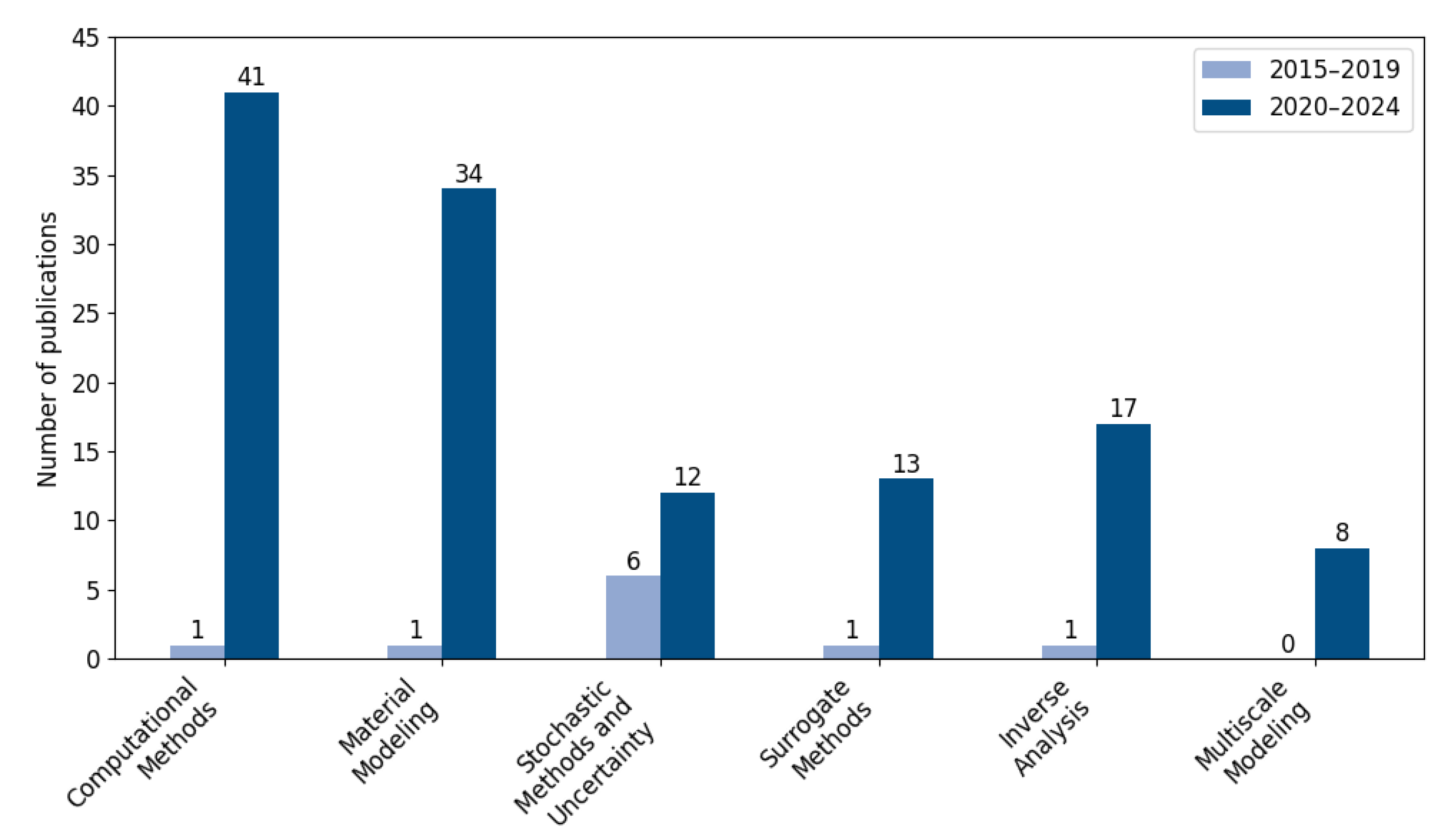

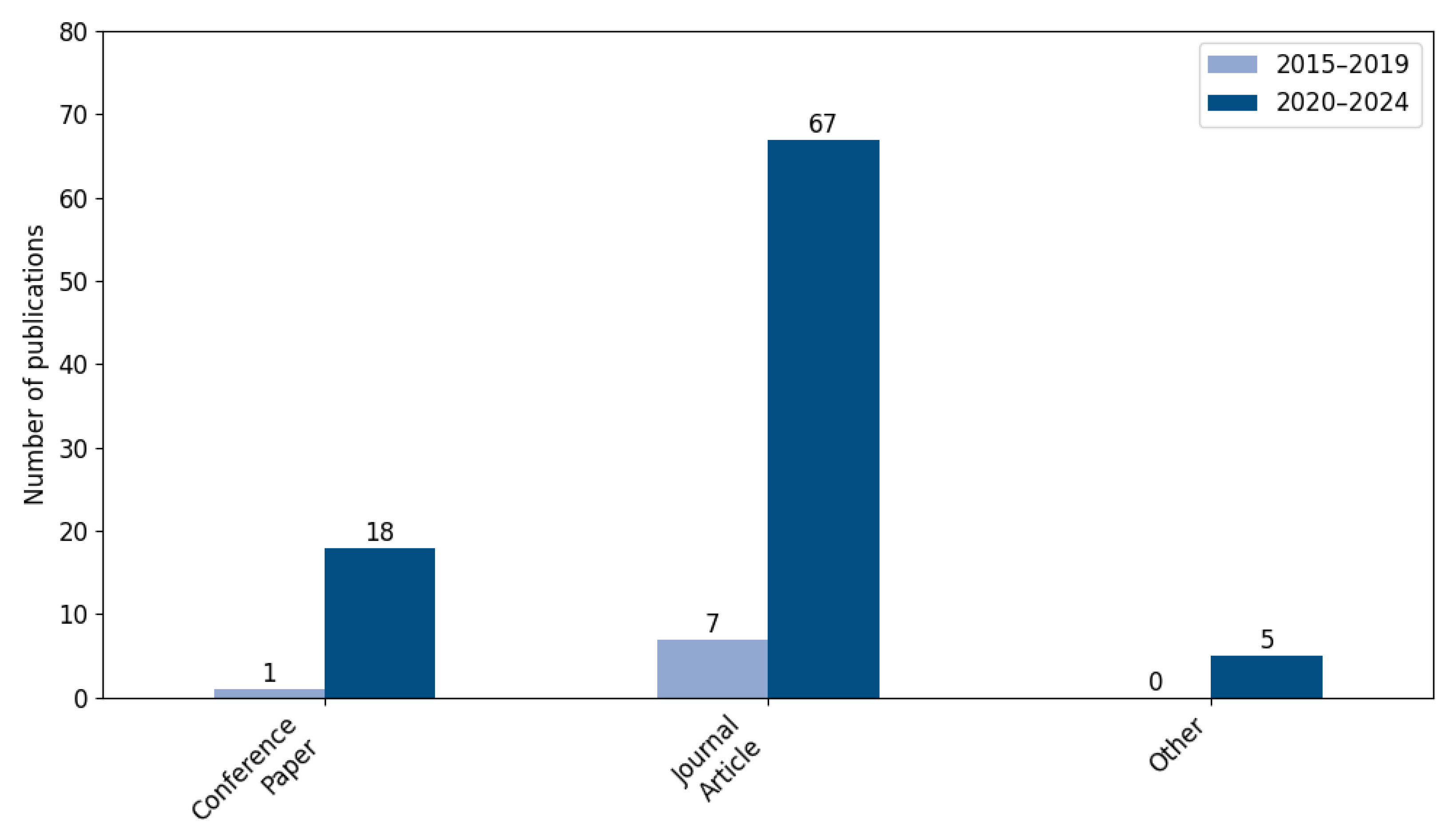

The study was designed as a systematic review with a bibliometric analysis component, aimed at identifying and synthesizing applications of machine learning and neural network methods in combination with computational mechanics methods, particularly in the areas of material modeling, surrogate models, inverse analysis, and uncertainty. The only controlled data source was the Scopus database. The search was conducted in the combined fields of title, abstract, and keywords, and the scope was limited to the years 2015–2024, the English language, and the subject areas Computer Science and Engineering, which ensures metadata consistency and reproducibility of the procedure. In the first step, a query defined around the phrase Computational Mechanics in connection with Machine Learning or Neural Networks returned 109 records. After applying a keyword filter for AI method classes, 101 publications remained, and after removing three out-of-scope items, the final corpus comprised 98 papers, which were subjected to further analysis. These data come from the working file accompanying the article, including the full Scopus query and a description of the constraints, which makes it possible to faithfully reproduce the search.

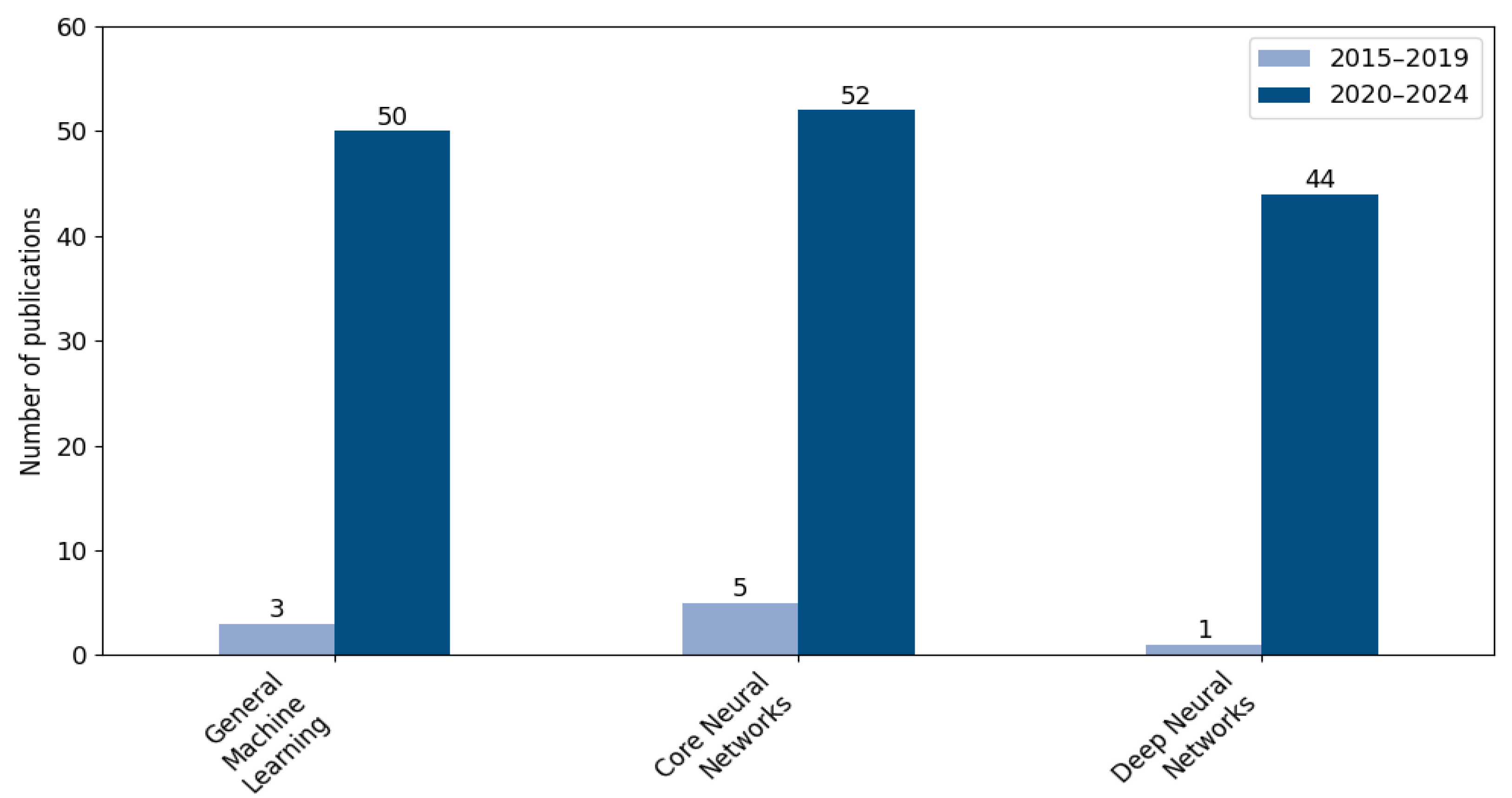

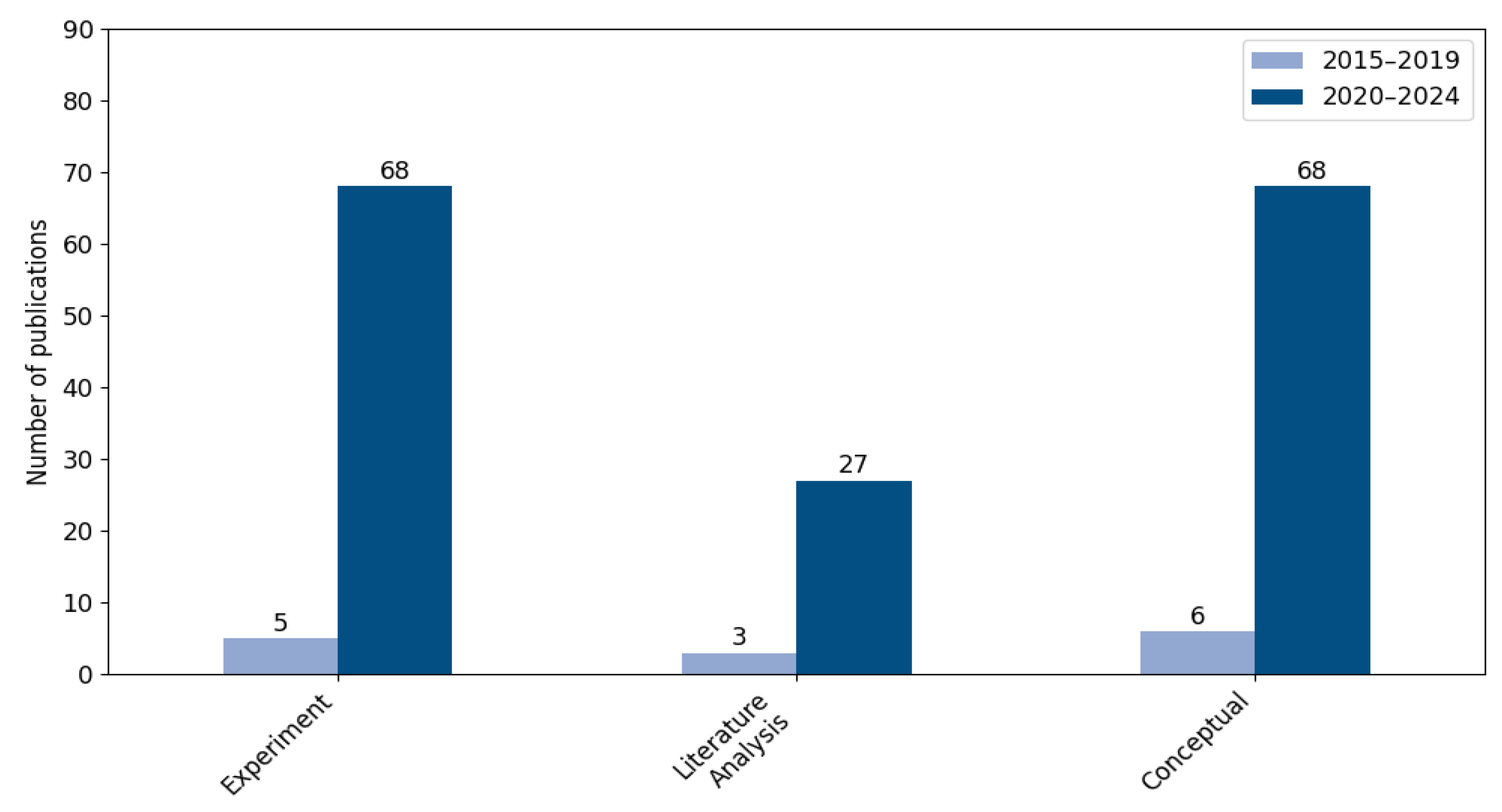

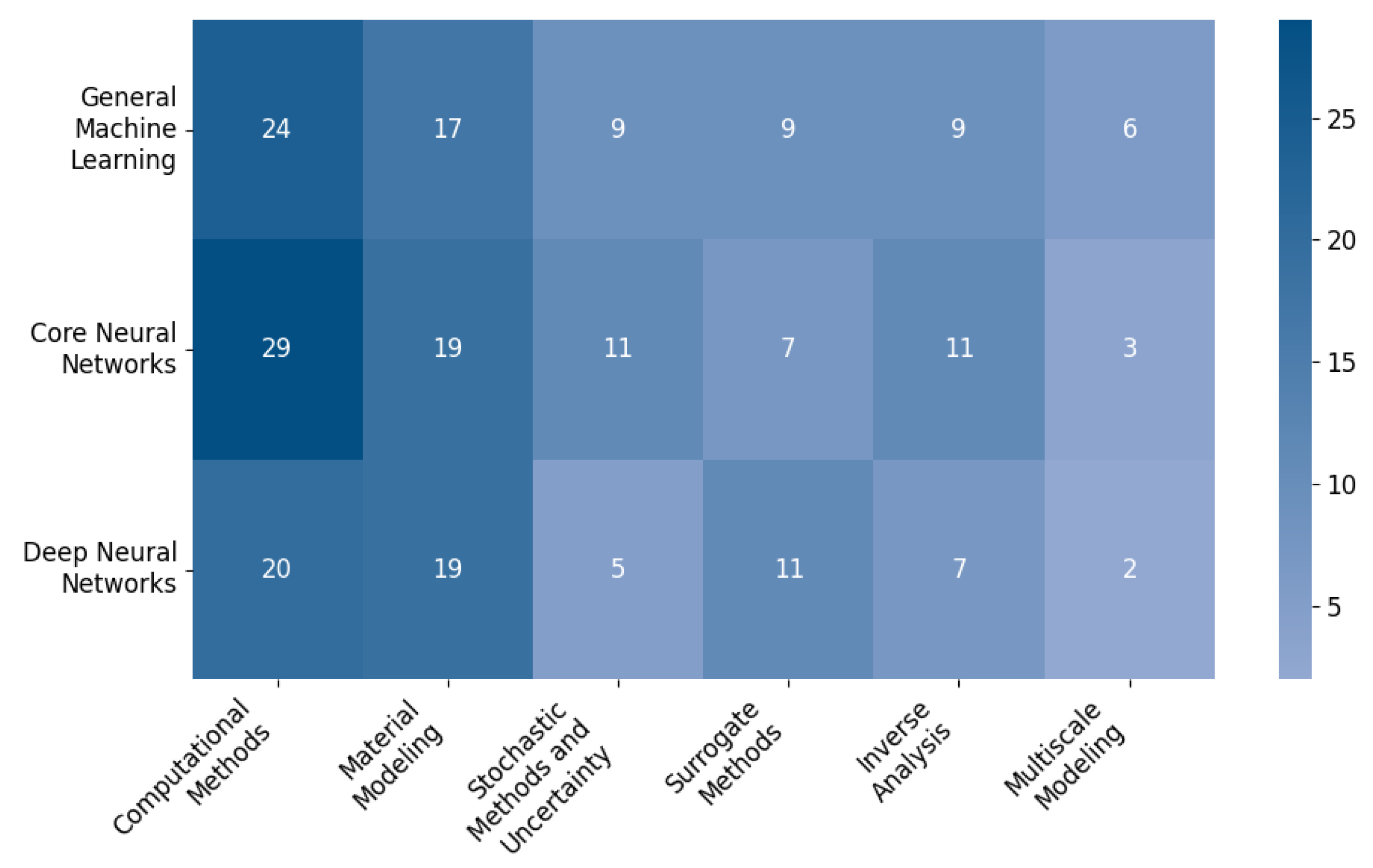

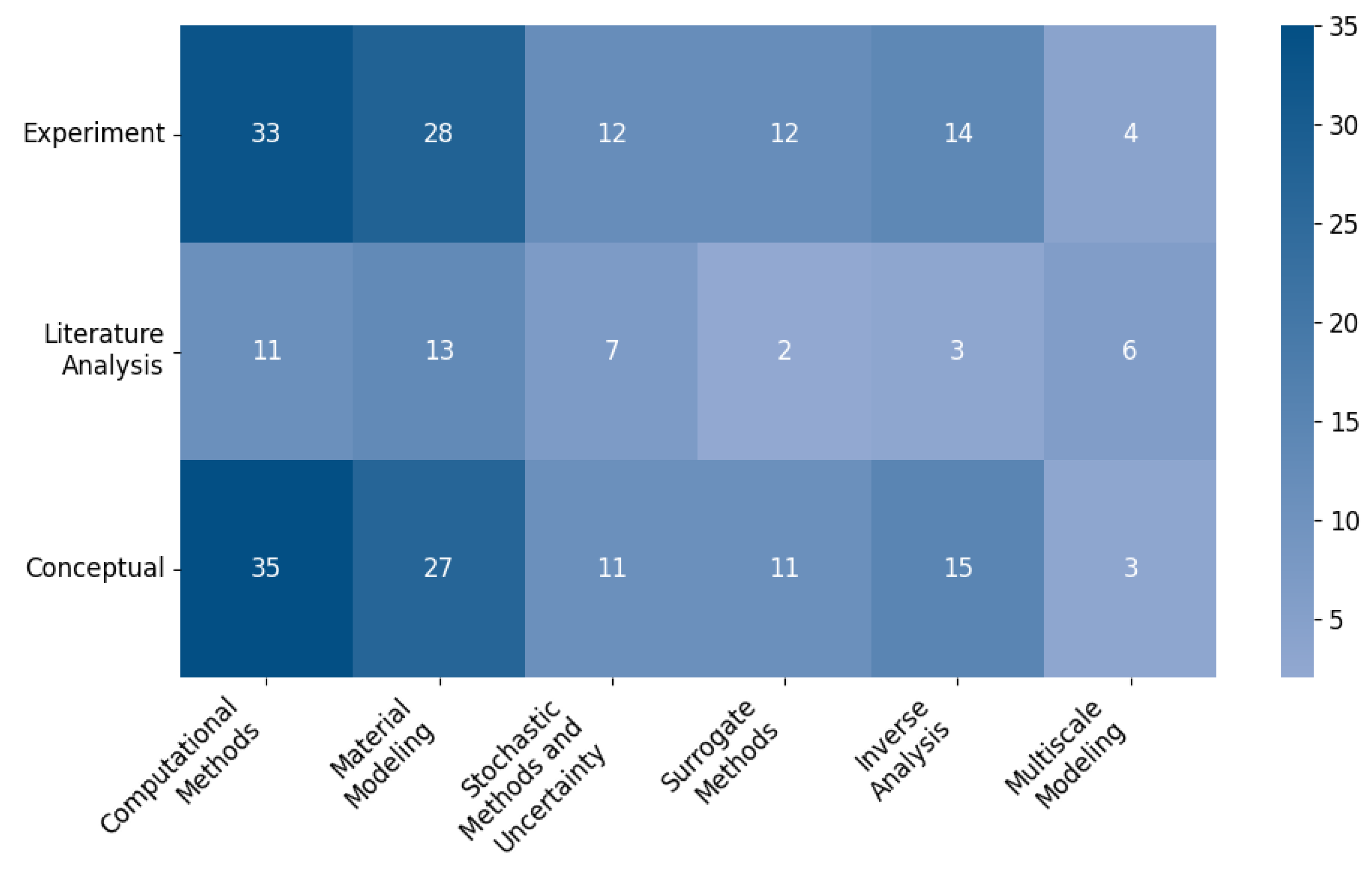

The selection procedure was designed in two stages: First, topical relevance was assessed based on metadata and abstracts, then the full text was analyzed in the case of included or ambiguous items, while in parallel a consistent classification scheme was prepared. Each publication was assigned across five interdependent dimensions: to a category from the group Computational Mechanics Methods and Modeling, namely Computational Methods, Material Modeling, Multiscale Modeling, Surrogate Methods, Stochastic Methods and Uncertainty, Inverse Analysis; to an AI method class, namely Core Neural Networks, Deep Neural Networks, General Machine Learning; to the country of authors’ affiliation based on Scopus data; to the document type, namely Conference Paper, Article, Other; and to the methodological approach, namely Experiment, Literature Analysis, Case Study, Conceptual, determined on the basis of content. This structure enables cross-sectional comparisons and mapping of the research landscape from the perspective of computational mechanics tasks and algorithmic classes.

To ensure transparency and reproducibility, a complete set of materials, including the exact wording of the query with filters, the list of 98 records with metadata (namely authors, affiliations, DOI identifiers, and keywords), and input files for bibliometric visualizations, was archived in the open Zenodo repository under the DOI: https://doi.org/10.5281/zenodo.17116232. To generate term density and keyword co-occurrence maps, the VOSviewer software (version 1.6.20) was used, with each visualization accompanied by the recommended caption indicating the tool’s origin and citing the source publication of VOSviewer. This structured design meets MDPI requirements for methodological transparency, facilitates replication of the search and classification, and provides the basis for a reliable synthesis of results in the subsequent sections of the article.

2.2. Search Strategy

The search strategy was based exclusively on the Scopus database in order to ensure high metadata quality, consistent filtering, and full reproducibility. The results were limited to English-language publications in the subject areas of Computer Science and Engineering, within the years 2015–2024, and the search was conducted jointly in the Title, Abstract, and Keyword fields. This procedure was defined to match the scope of the review, which combines computational mechanics with machine learning and neural network methods.

The search was carried out in two steps. The first step identified literature on computational mechanics in connection with AI in a broad sense, while the second step refined the list using keywords for ML and NN method classes. The following query was applied to the TITLE, ABS, and KEY fields, covering the timespan, language, and two Scopus subject areas, together with a list of keywords describing computational mechanics tasks and methods:

TITLE-ABS-KEY("Computational Mechanics" AND ("Machine Learning" OR "Neural Networks")) AND PUBYEAR > 2014 AND PUBYEAR < 2025 AND (LIMIT-TO (SUBJAREA,"COMP") OR LIMIT-TO (SUBJAREA,"ENGI")) AND (LIMIT-TO (LANGUAGE,"English")) AND (LIMIT-TO (EXACTKEYWORD,"Finite Element Method") OR LIMIT-TO (EXACTKEYWORD,"Inverse Problems") OR LIMIT-TO (EXACTKEYWORD,"Surrogate Modeling") OR LIMIT-TO (EXACTKEYWORD,"Constitutive Models") OR LIMIT-TO (EXACTKEYWORD,"Uncertainty Analysis") OR LIMIT-TO (EXACTKEYWORD,"Stochastic Systems") OR LIMIT-TO (EXACTKEYWORD,"Elastoplasticity") OR LIMIT-TO (EXACTKEYWORD,"Elasticity") OR LIMIT-TO (EXACTKEYWORD,"Surrogate Model") OR LIMIT-TO (EXACTKEYWORD,"Plasticity") OR LIMIT-TO (EXACTKEYWORD,"Multiscale Modeling") OR LIMIT-TO (EXACTKEYWORD,"Mesh Generation") OR LIMIT-TO (EXACTKEYWORD,"Finite Element Analyse") OR LIMIT-TO (EXACTKEYWORD,"Constitutive Modeling") OR LIMIT-TO (EXACTKEYWORD,"Stochastic Models") OR LIMIT-TO (EXACTKEYWORD,"Stress Analysis") OR LIMIT-TO (EXACTKEYWORD,"Degrees Of Freedom (mechanics)") OR LIMIT-TO (EXACTKEYWORD,"Boundary Value Problems"))

Next, a refining keyword filter was added for the ML and NN methods:

AND (LIMIT-TO (EXACTKEYWORD,"Machine Learning") OR LIMIT-TO (EXACTKEYWORD,"Neural Networks") OR LIMIT-TO (EXACTKEYWORD,"Neural-networks") OR LIMIT-TO (EXACTKEYWORD,"Deep Learning") OR LIMIT-TO (EXACTKEYWORD,"Learning Systems") OR LIMIT-TO (EXACTKEYWORD,"Deep Neural Networks") OR LIMIT-TO (EXACTKEYWORD,"Artificial Neural Network") OR LIMIT-TO (EXACTKEYWORD,"Recurrent Neural Networks") OR LIMIT-TO (EXACTKEYWORD,"Convolutional Neural Network") OR LIMIT-TO (EXACTKEYWORD,"Convolutional Neural Networks"))
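Because the two query strings are long and easy to mistype, it may help to assemble them from keyword lists. The following is a minimal sketch, assuming straight ASCII quotes in the Scopus advanced-search syntax; the helper names `limit_to` and `build_query` are hypothetical and are not part of any Scopus tool, while the keyword lists are copied from the queries above.

```python
# Illustrative sketch: build the two-step Scopus query string from lists.
# The helper names are hypothetical; only the keywords come from the article.

MECHANICS_KEYWORDS = [
    "Finite Element Method", "Inverse Problems", "Surrogate Modeling",
    "Constitutive Models", "Uncertainty Analysis", "Stochastic Systems",
    "Elastoplasticity", "Elasticity", "Surrogate Model", "Plasticity",
    "Multiscale Modeling", "Mesh Generation", "Finite Element Analyse",
    "Constitutive Modeling", "Stochastic Models", "Stress Analysis",
    "Degrees Of Freedom (mechanics)", "Boundary Value Problems",
]

ML_KEYWORDS = [
    "Machine Learning", "Neural Networks", "Neural-networks", "Deep Learning",
    "Learning Systems", "Deep Neural Networks", "Artificial Neural Network",
    "Recurrent Neural Networks", "Convolutional Neural Network",
    "Convolutional Neural Networks",
]


def limit_to(field, values):
    """Return a parenthesized, OR-joined group of LIMIT-TO clauses."""
    clauses = [f'LIMIT-TO ({field},"{value}")' for value in values]
    return "(" + " OR ".join(clauses) + ")"


def build_query(include_ml_filter=True):
    """Assemble the base query and, optionally, the ML/NN refinement."""
    parts = [
        'TITLE-ABS-KEY("Computational Mechanics" AND '
        '("Machine Learning" OR "Neural Networks"))',
        "PUBYEAR > 2014",
        "PUBYEAR < 2025",
        limit_to("SUBJAREA", ["COMP", "ENGI"]),
        limit_to("LANGUAGE", ["English"]),
        limit_to("EXACTKEYWORD", MECHANICS_KEYWORDS),
    ]
    if include_ml_filter:
        parts.append(limit_to("EXACTKEYWORD", ML_KEYWORDS))
    return " AND ".join(parts)


if __name__ == "__main__":
    print(build_query(include_ml_filter=False))  # first step
    print(build_query(include_ml_filter=True))   # first step plus ML/NN filter
```

Keeping the keywords in lists makes it straightforward to extend the timespan or add a synonym in a later update of the review without retyping the whole string.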

In the first step, 109 records were obtained; after applying the second filter, 101 records remained; and after manual verification, three out-of-scope items were removed, resulting in a final corpus of 98 publications selected for extraction and classification. The full wording of the queries, the lists of keywords, and the counts at the stages of identification, screening, and qualification are available in the Zenodo repository, which strengthens the transparency and reproducibility of the research procedure.

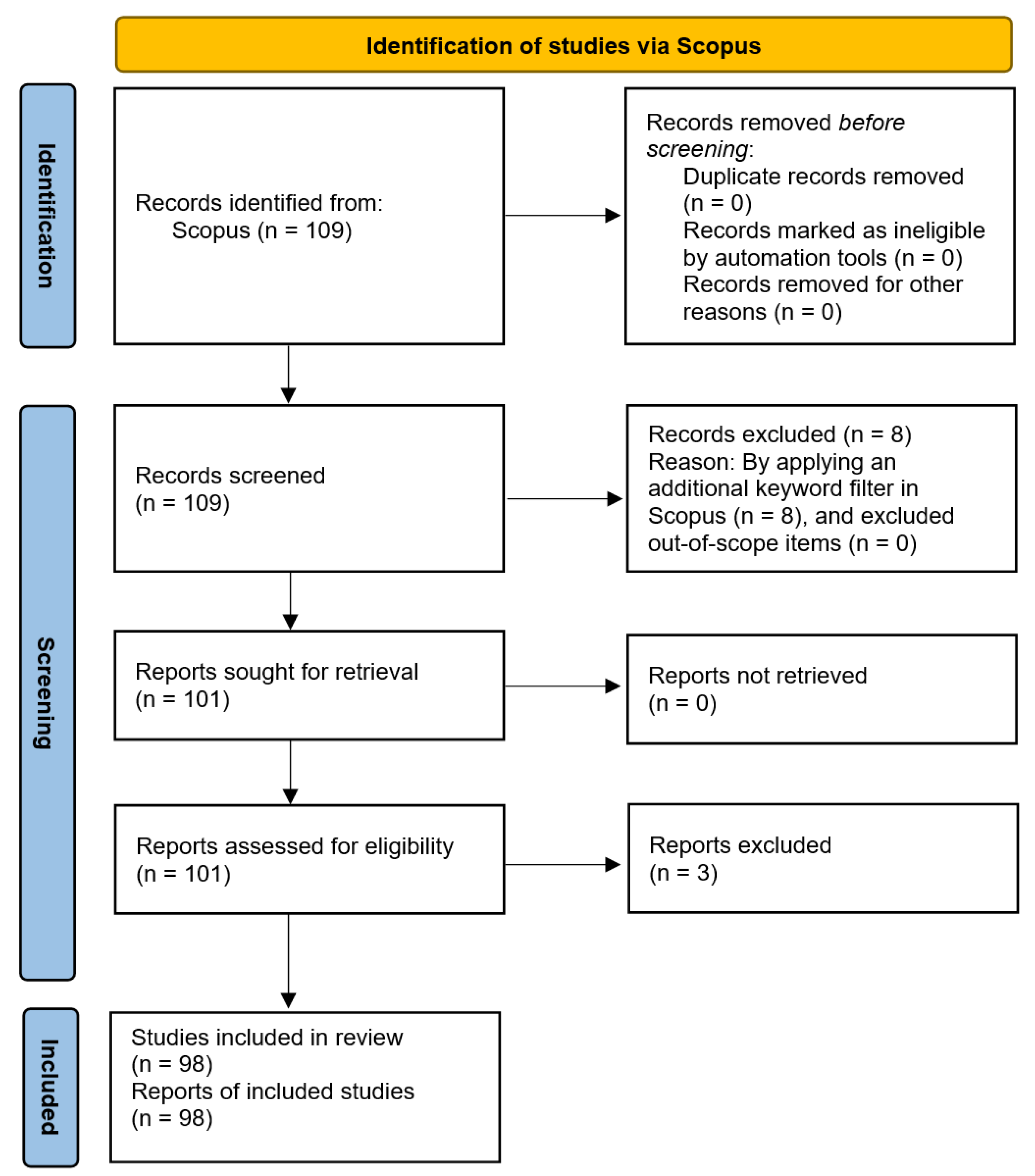

The process of data collection and preparation is illustrated in Figure 1. The diagram has a vertical layout with four sequentially connected blocks and arrows, without color coding.

The top block presents the full Scopus query together with year, subject area, and language restrictions. The next block shows the narrowing through computational mechanics keywords, followed by the block presenting the ML and NN method filter, and beneath it the information on the size of the final corpus, 98 articles. The lower part of the diagram organizes the classification framework used in the subsequent analysis: the computational mechanics tasks (Computational Methods, Material Modeling, Multiscale Modeling, Surrogate Methods, Stochastic Methods and Uncertainty, and Inverse Analysis); the AI method classes (Core Neural Networks, Deep Neural Networks, and General Machine Learning); the categories based on Scopus metadata (authors’ affiliation countries and document types); and the manually determined research methodology (Experiment, Literature Analysis, Case Study, and Conceptual). Thus, the figure provides a concise, black-and-white guide to the query and classification principles applied in this review.

The articles qualified for analysis were described and compiled in the form of working files, which, together with metadata and input files for visualization, were archived in the open Zenodo repository. The repository contains Excel files including the responses to the Scopus queries, with complete metadata (titles, authors, affiliations, DOIs, keywords), as well as the input files used in the bibliometric analyses. Full texts of the articles are not provided there, as they are available online through Scopus and other publisher repositories. This dataset ensures transparency, durability, and reproducibility of the results, and enables reuse of the corpus in future updates. The literature selected for analysis covers references [15–112]. This corresponds to a corpus of 98 publications that met the established substantive and formal criteria, identified through the Scopus search query, a two-stage screening procedure, and keyword normalization.

While the TITLE–ABS–KEY filter ensured high precision of the query, such a strict strategy may also exclude relevant contributions that use alternative terminology. To reduce this bias, we constructed a normalization vocabulary. For example, operator learning is also referred to as neural operators, graph neural networks are also termed GNN, GCN or MGN, and finite element analysis is often used interchangeably with finite element method. Nevertheless, a small number of false negatives were identified. For instance, papers describing neural operator surrogates without explicitly using the phrase operator learning, or studies on graph message-passing networks without the exact term graph neural networks, were not captured by the automatic query. We therefore complemented the Scopus search with targeted manual screening of references in leading review articles and high-impact journals. This procedure increases recall while keeping transparency about the possible bias introduced by strict keyword matching.
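The normalization vocabulary described above can be sketched as a simple lookup table. This is only an illustration under stated assumptions: the mapping contains just the synonym pairs named in the text, the choice of canonical labels is ours, and the function names are hypothetical rather than taken from the authors' working files.

```python
# Illustrative sketch of a normalization vocabulary (variant -> canonical term).
# Only the synonym pairs mentioned in the text are included; the canonical
# labels and the function names are assumptions made for this example.

NORMALIZATION = {
    "neural operators": "operator learning",
    "gnn": "graph neural networks",
    "gcn": "graph neural networks",
    "mgn": "graph neural networks",
    "finite element analysis": "finite element method",
}


def normalize_term(term):
    """Lowercase, unify hyphens and whitespace, then map to a canonical term."""
    key = " ".join(term.lower().replace("-", " ").split())
    return NORMALIZATION.get(key, key)


def normalize_keywords(keywords):
    """Normalize a keyword list and drop duplicates, preserving order."""
    result = []
    for keyword in keywords:
        canonical = normalize_term(keyword)
        if canonical not in result:
            result.append(canonical)
    return result


if __name__ == "__main__":
    raw = ["GNN", "Graph Neural Networks", "Neural Operators",
           "Finite Element Analysis", "Neural-networks"]
    print(normalize_keywords(raw))
```

A lookup of this kind only unifies terms that are already in the retrieved records; it cannot recover papers the query never returned, which is why the manual screening of references remains necessary.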

2.3. Rationale for the Review, Purpose of the Study and Problems of the Study

Previous studies at the intersection of computational mechanics and data-driven methods are fragmented, often focusing on single tasks or algorithm classes, and rarely covering the full spectrum of problems, that is, numerical methods, material modeling, multiscale modeling, surrogate models, stochastic methods and uncertainty, and inverse analysis, in combination with the three families of approaches, that is, machine learning, core neural networks, deep neural networks. There is a lack of a coherent taxonomy that integrates these axes with unified quality and computational cost metrics, as well as a lack of transparent criteria for literature selection and explicit rules for terminology normalization. This review fills these gaps, being based on a clearly defined Scopus query covering the years 2015–2024, the English language, and the subject areas Computer Science and Engineering, with a final corpus of 98 publications after manual scope verification. Five interdependent classification dimensions were adopted, namely computational mechanics tasks, AI method classes, country of authors’ affiliation, document type, and methodology, which enables cross-sectional comparisons and identification of thematic and methodological gaps.

The review distinguishes itself from earlier work in three respects. First, it presents a comprehensive cross-section of applications, linking initial–boundary value problems, constitutive models, and multiscale issues with specific classes of learning architectures, which allows algorithmic solutions to be related to physical requirements and computational constraints. Second, it introduces a bibliometric layer based on explicit metadata and VOSviewer visualizations, which enables quantitative assessment of field dynamics, term co-occurrence, as well as the geographic and document-type distribution. Third, it ensures full reproducibility, providing the exact wording of the query, inclusion and exclusion criteria, the classification structure, and the compiled records deposited together with input files for visualization, which meets editorial transparency requirements and facilitates future updates.

The main objective of the review is to provide synthetic ordering and critical assessment of applications of machine learning and neural networks in computational mechanics, with mapping of tasks against data types, modes of embedding physical knowledge, and computational costs, as well as identification of research priorities relevant to engineering practice, including reliability, scalability, and reproducibility. The study poses the following research questions:

What classes of problems in computational mechanics dominate the literature, which integration patterns with data-driven methods (physics-informed loss functions, operator learning, graph networks) are most frequently applied, and in which configurations do they yield the greatest qualitative benefits?

What data types, geometry and mesh representations, and boundary condition and validation schemes are reported in the analyzed works, and which combinations provide the best compromise between accuracy and computational cost?

To what extent do publications account for uncertainty quantification, verification and validation, and report computational costs, for example, accelerations relative to reference solvers and hardware requirements, and what conclusions follow for engineering practice?

Was there a significant growth trend in the number of publications across the six computational mechanics categories in the years 2015–2024, and what was the cumulative growth rate over the entire period?

Does the structure of methods change over time, that is, does the share of works using deep neural networks increase compared to core neural networks and classical machine learning, and are the observed changes statistically significant?

How does the distribution of document types and authors’ affiliation countries evolve in the studied period: does the share of journal articles increase relative to conference papers, does geographic concentration intensify, and are these trends statistically significant?

The first three questions are substantive in nature, allowing identification of dominant tasks in computational mechanics, revealing thematic gaps, and assessing the readiness of methods for operation in engineering environments, with reliable uncertainty information and the possibility of integration into design and maintenance processes. The remaining three questions are statistical, measuring the popularity of the topic and the dynamics of the research field, including analyses of annual trends, cumulative growth rates, and significance tests of changes in method shares and publication forms. This set of problems structures the practical and quantitative objectives of the review, enabling coherent analysis and unambiguous interpretation of results in the subsequent sections.
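The statistical questions rely on significance tests of changes in category shares. A minimal sketch of a χ² test of independence is given below for a table of two periods by three AI method classes. The counts are invented toy numbers used purely for illustration and are not results of this review; the function names are hypothetical. The closed-form p-value used here is valid only for exactly two degrees of freedom, which is the case for a 2 × 3 table.

```python
import math

# Illustrative sketch of a chi-square test of independence.
# The counts below are toy numbers, NOT data from the reviewed corpus.


def chi_square_independence(table):
    """Return (chi2 statistic, degrees of freedom) for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            chi2 += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return chi2, dof


def p_value_two_dof(chi2):
    """Upper-tail p-value of the chi-square distribution with 2 degrees of freedom."""
    return math.exp(-chi2 / 2.0)


if __name__ == "__main__":
    # Rows: earlier period, later period (hypothetical split).
    # Columns: core NN, deep NN, general ML (hypothetical counts).
    toy_table = [[10, 20, 30],
                 [20, 20, 20]]
    chi2, dof = chi_square_independence(toy_table)
    print(f"chi2 = {chi2:.3f}, dof = {dof}, p = {p_value_two_dof(chi2):.4f}")
```

For tables with other dimensions, the p-value would need the general χ² survival function, for example from a statistics library, rather than the two-degree-of-freedom shortcut used in this sketch.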

The outcome of the substantive component will be an organized taxonomy of tasks and methods, cross-tables of method by task, and evidence cards for representative case studies, which will compile data types, modes of embedding physical knowledge, quality metrics, and computational costs. The quantitative layer will provide VOSviewer maps of term density and co-occurrence, distributions by document type and country of affiliation, as well as conclusions from significance tests, which will allow linking the dynamics of field development with methodological directions and application areas.

The practical value of the review lies in formulating recommendations for designing workflows in computational mechanics, selecting algorithm classes for task types, curating datasets, and reporting uncertainty and computational costs. The reproducibility layer, that is, the explicit search query, classification scheme, and openly available corpus, facilitates updates in subsequent years, supports comparability across research teams, and promotes the transfer of methods into engineering practice.

2.4. Eligibility Criteria

The literature selection was designed to be transparent and reproducible, and a two-stage screening was applied: first, titles, abstracts, and keywords were assessed; then full texts were analyzed in borderline cases. The construction of the criteria follows good reporting practices for reviews in MDPI, including the way selection stages and justifications are presented in the PRISMA style, while strictly reflecting the query parameters adopted in this study: the years 2015–2024, the English language, the subject areas Computer Science and Engineering, the combined search of the Title, Abstract, and Keywords fields, and keyword filters describing computational mechanics tasks and AI method classes. The final corpus after manual verification comprises 98 publications, in accordance with the compilation in the working file.

Works were included for analysis if they simultaneously met substantive and formal conditions. A direct connection to computational mechanics was required in at least one of the six categories (Computational Methods, Material Modeling, Multiscale Modeling, Surrogate Methods, Stochastic Methods and Uncertainty, Inverse Analysis), together with the use of data methods (Machine Learning, Core Neural Networks, Deep Neural Networks); both had to follow from the metadata or the content. Publications in English published in the years 2015–2024 and classified in Scopus under the subject areas Computer Science or Engineering were accepted. Journal articles, conference papers, and items marked as Other, for example, book chapters and review articles, were included if they presented a coherent methodological contribution or synthesized results in a way that allowed unambiguous classification. Access to the full text and a complete set of basic metadata (title, authors, affiliations, DOI identifier, keywords) was required.

Works that failed to meet any one of these conditions were excluded from the corpus. Publications without an ML or NN component were eliminated, as were publications without a direct connection to computational mechanics issues, items outside the accepted years, language, and subject areas, incomplete records, and duplicates identified on the basis of DOI or title. Materials of a non-technical nature were rejected if they did not explicitly contain a component of computational or data methods in the context of equations, constitutive models, or multiscale analyses. Works with inadequate reporting, that is, without a description of data, without quality metrics, or without information enabling the assessment to be reproduced, were rejected as well. In cases where the full text did not allow assignment to any category, the item was marked as out-of-scope.
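Duplicate detection on the basis of DOI or title, as mentioned above, can be sketched as follows. This is a minimal illustration, assuming records are dictionaries with hypothetical `doi` and `title` keys; the normalization rules (lowercasing, stripping the resolver prefix, ignoring punctuation) are our assumptions and not a description of the authors' procedure.

```python
import re

# Illustrative sketch of duplicate removal by DOI or title.
# Record layout and normalization rules are assumptions for this example.


def doi_key(doi):
    """Normalize a DOI: lowercase and strip an optional resolver prefix."""
    if not doi:
        return None
    key = re.sub(r"^https?://(dx\.)?doi\.org/", "", doi.strip().lower())
    return key or None


def title_key(title):
    """Normalize a title: lowercase and collapse non-alphanumeric runs."""
    return re.sub(r"[^a-z0-9]+", " ", (title or "").lower()).strip()


def deduplicate(records):
    """Keep the first record for each distinct DOI or normalized title."""
    seen_dois, seen_titles, kept = set(), set(), []
    for record in records:
        doi = doi_key(record.get("doi"))
        title = title_key(record.get("title"))
        if (doi and doi in seen_dois) or (title and title in seen_titles):
            continue  # duplicate of an earlier record
        if doi:
            seen_dois.add(doi)
        if title:
            seen_titles.add(title)
        kept.append(record)
    return kept


if __name__ == "__main__":
    # Hypothetical records with made-up identifiers.
    sample = [
        {"doi": "10.1000/ABC", "title": "A Study"},
        {"doi": "https://doi.org/10.1000/abc", "title": "A study (reprint)"},
        {"doi": None, "title": "a  study!"},
        {"doi": "10.1000/xyz", "title": "Another Study"},
    ]
    print(len(deduplicate(sample)))
```

Matching on either key is deliberately conservative: it also removes records that share a title but lack a DOI, so borderline cases would still merit a manual check.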

The decision chain corresponds to the stages of the Scopus query. Applying the query and filters yielded 109 records; refining the keyword list for ML and NN methods reduced this to 101 publications; and manual verification of scope compliance excluded three items outside the thematic scope, which set the final count at 98. Justifications for inclusion and exclusion decisions were documented, and multiple assignments of the same item to several classification categories were allowed, which reflects the multidimensionality of the subject. Consistency of the procedure with MDPI good practices and the adopted protocol ensures comparability and reproducibility of the selection in subsequent updates of the review.

2.5. Selection Procedure and Screening

Screening was conducted in two stages: first, titles, abstracts, and keywords were assessed; then full texts of included or ambiguous items were analyzed. Before the actual screening, a short calibration was carried out on a random sample of records to harmonize the interpretation of criteria and the terminology related to computational mechanics and data methods. Two reviewers conducted the assessment independently. Decisions were recorded in a form with three possible outcomes (include, exclude, and unclear), and discrepancies were resolved by consensus and, if necessary, with the involvement of a third person. For transparency, each decision was assigned a reason code, for example, lack of an ML or NN component, outside the scope of computational mechanics, inadequate document type, incomplete metadata, or lack of an assessable method, which later made it possible to compile exclusion categories in the outcome report.

In the title and abstract screening, a minimal set of decision questions was applied: whether the work directly concerned computational mechanics in one of the six categories (Computational Methods, Material Modeling, Multiscale Modeling, Surrogate Methods, Stochastic Methods and Uncertainty, Inverse Analysis), and whether it used data methods understood as machine learning, core neural networks, or deep neural networks. If the answer was positive, the publication was directed to full-text screening; if negative, it was excluded; if ambiguous, it was marked as unclear and also directed to full-text screening. At this stage, multiple assignments of topics and methods were allowed, reflecting the complexity of tasks, and terminological inconsistencies were reduced by normalizing keywords to a reference list, for example, merging neural networks and neural-networks into one class, unifying finite element analysis with finite element method, and merging convolutional neural network and convolutional neural networks.

Deduplication was technically confirmed on the basis of DOI identifiers and titles, and borderline cases of sibling publications, that is conference and journal versions of the same work, were resolved in favor of the version more complete methodologically. Full-text assessment served to verify whether the publication met all substantive and formal criteria, in particular, whether it actually contained a data-method component applied in the context of computational mechanics, whether it reported input data and metrics appropriate to the task, and whether the method description allowed unambiguous classification. For review and conceptual articles, a coherent taxonomy or methodological conclusion referring to the defined axes was required. Lack of access to the full text, incomplete metadata, or inconsistencies between title, keywords, and content resulted in exclusion with the assignment of the appropriate reason code. All decisions at this stage were recorded in a selection log, and disputed classifications were corrected by consensus.
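
The technical part of the deduplication step can be sketched as follows. This is a minimal illustration, assuming each record is a dictionary with `title` and `doi` fields; the function name, the title normalization rule, and the example DOIs are hypothetical and are not taken from the review protocol. The sketch only flags duplicates; choosing the methodologically more complete sibling version remains a manual decision.

```python
def deduplicate(records):
    """Keep the first record for each DOI or normalized title (assumed rule)."""
    seen_dois, seen_titles, unique = set(), set(), []
    for record in records:
        doi = (record.get("doi") or "").strip().lower()
        # Normalize the title: lowercase and keep only letters and digits.
        title = "".join(ch for ch in record["title"].lower() if ch.isalnum())
        if (doi and doi in seen_dois) or title in seen_titles:
            continue  # duplicate by DOI or by title
        if doi:
            seen_dois.add(doi)
        seen_titles.add(title)
        unique.append(record)
    return unique


# Hypothetical records, invented for illustration only.
records = [
    {"title": "Neural Surrogates for Plate Bending", "doi": "10.0000/example.1"},
    {"title": "Neural surrogates for plate bending.", "doi": "10.0000/example.2"},
    {"title": "A Different Study", "doi": "10.0000/EXAMPLE.1"},
    {"title": "Graph Networks for Inverse Problems", "doi": ""},
]
unique = deduplicate(records)
print(len(unique))
```

In this toy input, the second record is dropped because its title matches the first after normalization, and the third because its DOI matches the first up to letter case.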

The flow of records between stages, together with the number of items at each step, is presented in the PRISMA diagram, Figure 2. The methodology followed the PRISMA 2020 guidelines, with the completed checklist provided in the Supplementary Materials [113]. The diagram covers identification, screening, eligibility assessment, and final inclusion.

At the screening stage, all 109 records were assessed; refining the keyword list for ML and NN methods resulted in 101 items forwarded to full-text assessment, with no work lost at the full-text retrieval stage. At the eligibility stage, the full texts of 101 publications were analyzed, three were excluded for substantive reasons, and ultimately 98 works were included, which formed the review corpus. Stage indicators summarize the procedure: retention after screening was 92.7% (101 of 109), full-text exclusions accounted for 3.0% of the reports analyzed (3 of 101), and the proportion of publications included relative to the number of records identified was 89.9% (98 of 109). The dominant cause of exclusions at the initial stage, that is, the refinement of the keyword list, indicates that thematic narrowing was carried out at the metadata level before content assessment, which supports a technically homogeneous corpus for further classification and quantitative analyses.
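
The stage indicators can be recomputed directly from the three record counts reported above. The short check below uses only those counts; the variable and function names are illustrative.

```python
# Record counts reported in the text for each stage of the selection flow.
identified = 109        # records returned by the Scopus query and filters
after_screening = 101   # records kept after refining the ML/NN keyword list
included = 98           # records kept after full-text eligibility assessment


def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100.0 * part / whole, 1)


retention_after_screening = percent(after_screening, identified)
full_text_exclusion_rate = percent(after_screening - included, after_screening)
overall_inclusion_rate = percent(included, identified)

print(retention_after_screening, full_text_exclusion_rate, overall_inclusion_rate)
# prints: 92.7 3.0 89.9
```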

2.6. Classification Scheme

The classification scheme was built on five parallel dimensions, which ensures consistent coding of content and allows for comparability of results in subsequent analyses. Each document may receive more than one label within a given dimension if this follows from its scope or metadata, and variant or synonymous terms were normalized to reference lists, which reduces indexing artifacts and facilitates cross-sectional comparisons.

The first dimension covers areas of computational mechanics. Six groups were distinguished that organize the most frequently occurring tasks: Computational Methods, Material Modeling, Multiscale Modeling, Surrogate Methods, Stochastic Methods and Uncertainty, Inverse Analysis. These groups were assigned corresponding descriptors, including Finite Element Method, Boundary Value Problems, Mesh Generation, Degrees of Freedom, Constitutive Models, Plasticity, Elasticity, Elastoplasticity, Stress Analysis, Surrogate Modeling, Stochastic Systems, Uncertainty Analysis, and Inverse Problems.

The second dimension concerns data methods. Three overarching categories were applied, reflecting the level of complexity of approaches: General Machine Learning, Core Neural Networks, Deep Neural Networks. The first group included, among others, Machine Learning, machine learning, and Learning Systems; the second group included Neural Networks, neural-networks, and Artificial Neural Network; the third group included Deep Learning, Deep Neural Networks, Convolutional Neural Network, Convolutional Neural Networks, and Recurrent Neural Networks. Differences in spelling and lexical variants were merged into parent classes, which enables comparisons between publications.
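
The merging of lexical variants into parent classes can be sketched as a lookup table. The mapping below contains only the terms listed above; the canonicalization rule (lowercasing and treating hyphens as spaces) and the function names are assumptions made for illustration, not the review's actual glossary.

```python
# Variant -> parent class, using the groupings named in the text.
PARENT_CLASS = {
    "machine learning": "General Machine Learning",
    "learning systems": "General Machine Learning",
    "neural networks": "Core Neural Networks",
    "artificial neural network": "Core Neural Networks",
    "deep learning": "Deep Neural Networks",
    "deep neural networks": "Deep Neural Networks",
    "convolutional neural network": "Deep Neural Networks",
    "convolutional neural networks": "Deep Neural Networks",
    "recurrent neural networks": "Deep Neural Networks",
}


def canonical(keyword: str) -> str:
    """Lowercase, turn hyphens into spaces, collapse whitespace (assumed rule)."""
    return " ".join(keyword.lower().replace("-", " ").split())


def data_method_classes(keywords):
    """Return the sorted parent classes matched by a record's keywords."""
    matched = {PARENT_CLASS[canonical(k)] for k in keywords
               if canonical(k) in PARENT_CLASS}
    return sorted(matched)


labels = data_method_classes(
    ["Machine Learning", "neural-networks", "Finite Element Method"]
)
print(labels)
```

Keywords outside the table, such as Finite Element Method here, are simply ignored by this dimension; a record may still receive more than one class.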

The third dimension reflects the geography of output based on authors’ affiliations. The set applied includes Australia, Austria, Canada, China, France, Germany, Greece, India, Luxembourg, United Kingdom, United States, and Others, with all appropriate labels assigned to co-authored publications, which allows for the analysis of international collaboration.

The fourth dimension organizes document types in line with Scopus classification. Article, Conference Paper, and the group Other were included, the latter comprising, among others, review articles and book chapters, provided they met substantive and formal criteria.

The fifth dimension describes the methodological approach, determined on the basis of content and authors’ declarations. Four categories were applied, Experiment, Literature Analysis, Case Study, and Conceptual, which facilitates the assessment of solution maturity and methodological rigor.

This five-dimensional structure enables the construction of cross-tables, for example, data method by mechanics category, the analysis of distributions by document type and authors’ affiliation countries, as well as unambiguous interpretation of bibliometric results in subsequent chapters.
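
A cross-table of data method by mechanics category under multi-label coding can be sketched as below. The three records are hypothetical and invented for illustration only; they are not taken from the corpus, and the field names are assumptions.

```python
from collections import Counter
from itertools import product

# Hypothetical records, invented for illustration only.
records = [
    {"mechanics": ["Inverse Analysis", "Surrogate Methods"],
     "method": ["Deep Neural Networks"]},
    {"mechanics": ["Material Modeling"],
     "method": ["Core Neural Networks", "Deep Neural Networks"]},
    {"mechanics": ["Surrogate Methods"],
     "method": ["Deep Neural Networks"]},
]


def cross_table(records, row_key, col_key):
    """Count each (row label, column label) pair once per record."""
    counts = Counter()
    for record in records:
        for pair in product(set(record[row_key]), set(record[col_key])):
            counts[pair] += 1
    return counts


table = cross_table(records, "method", "mechanics")
for (method, mechanics), count in sorted(table.items()):
    print(f"{method} x {mechanics}: {count}")
```

Because a record may carry several labels per dimension, the cell counts can sum to more than the number of records (here five pairs from three records), which should be kept in mind when reading such tables.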

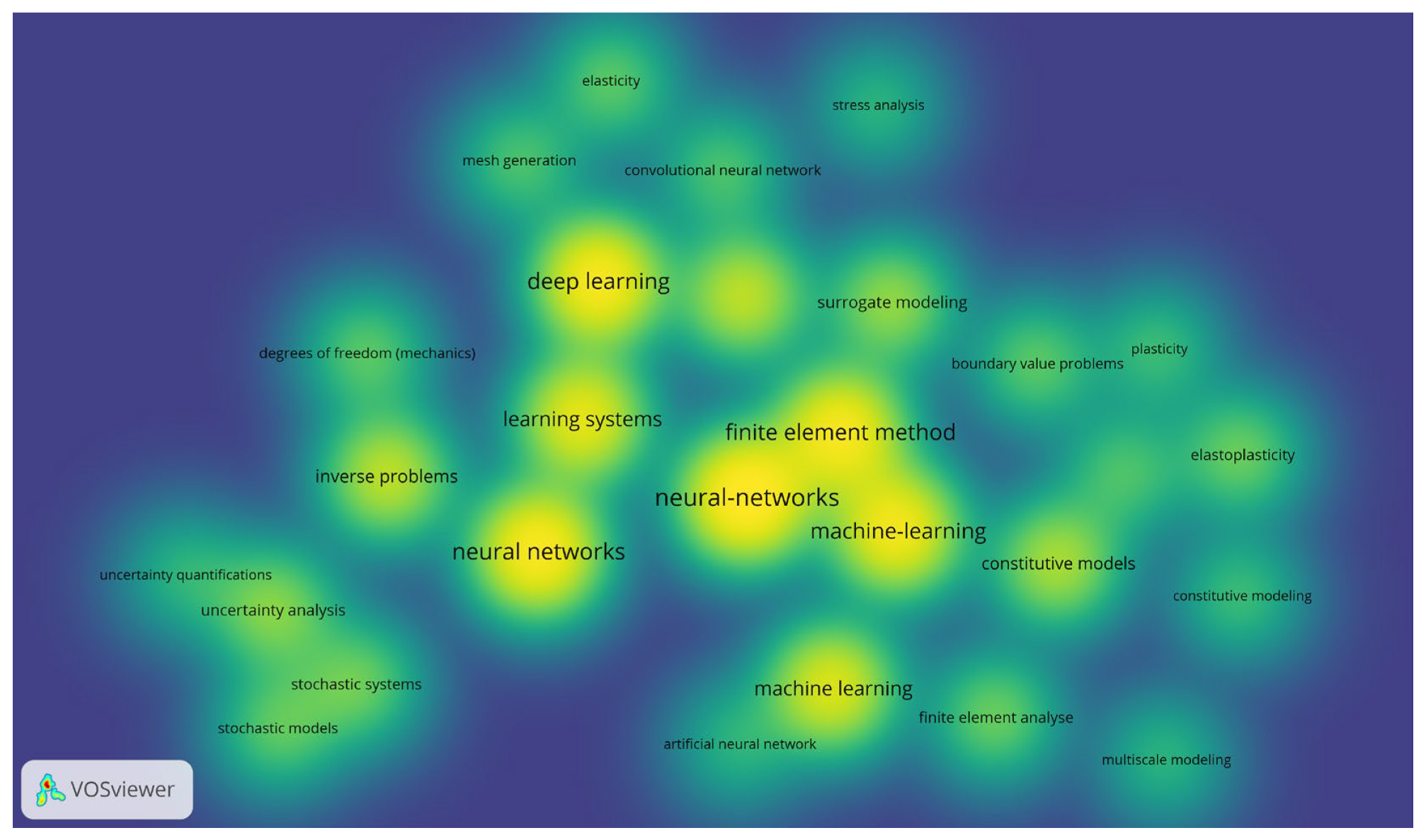

The term density map in Figure 3 shows central clusters around the terms finite element method, neural-networks, machine-learning, and deep learning, as well as medium-density fields related to surrogate modeling, inverse problems, boundary value problems, and uncertainty analysis. Yellow indicates the highest density, that is, the topics most frequently co-occurring in the corpus. Lexical pairs of the same meaning, such as machine learning and machine-learning or neural networks and neural-networks, were merged in the quantitative analysis, although they may appear as separate spots in the density visualization, which does not distort the overall picture of the dominance of core concepts of mechanics and learning methods.

To ensure reproducibility of the five-dimensional classification, explicit labeling rules and conflict resolution procedures were defined. Each record could receive multiple labels within a given dimension, but a single primary label was always assigned when needed for cross tabulation.

Mechanical problem. The label was chosen based on the stated research objective in the title and abstract. When a paper addressed multiple tasks, the primary label corresponded to the problem driving the evaluation protocol or the main result. For example, if a study built a surrogate to estimate parameters, the primary label was Inverse Analysis, while Surrogate Methods was recorded as secondary.

Data class. A distinction was made between simulated and experimental data. The primary label was determined by the dominant source, defined as more than 50% of the dataset used for training and evaluation. If both sources were comparable, the label Dual was assigned and explicitly reported in figure captions.
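
The dominant-source rule can be written as a small function. The text does not quantify when two sources count as comparable, so the `tolerance` parameter below is a hypothetical choice (shares within 0.5 ± 0.05 are treated as comparable), and the function name is illustrative.

```python
def data_class(n_simulated: int, n_experimental: int,
               tolerance: float = 0.05) -> str:
    """Label a dataset by its dominant source.

    `tolerance` is an assumption: if the simulated share lies within
    0.5 +/- tolerance, the sources are treated as comparable ("Dual").
    """
    total = n_simulated + n_experimental
    if total == 0:
        raise ValueError("dataset is empty")
    share_simulated = n_simulated / total
    if abs(share_simulated - 0.5) <= tolerance:
        return "Dual"
    return "Simulated" if share_simulated > 0.5 else "Experimental"


print(data_class(900, 100))  # mostly simulated samples
print(data_class(40, 60))    # mostly experimental samples
print(data_class(50, 50))    # comparable sources
```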

Country. The country label was derived from the first author’s affiliation. In multinational collaborations, a multi-country flag was added. If the first author listed several affiliations, the first institutional country was used.

Document type. The assigned label reflected the publication venue, that is journal article, conference proceedings, preprint or review. If a preprint was later published in a journal, the label journal article was used, and the preprint was listed as secondary.

Methodology. The label reflected the central computational approach. If two approaches were combined, the primary label was the method governing the training objective or inference at deployment. For example, when a graph-based model enforced physics through a penalty, Graph neural networks was primary and Physics-informed was secondary. When boundary conditions were imposed as hard constraints that dominated feasibility, Physics-informed became primary.

Conflict resolution followed a clear precedence: (i) task intent over tool choice, (ii) data source over data format, (iii) venue type over manuscript stage. Borderline cases were adjudicated by two independent reviewers and disagreements resolved by discussion. A random 10% sample was re-labeled after two weeks to assess consistency, yielding agreement above 0.9 in terms of Cohen’s kappa.
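
For readers unfamiliar with the agreement statistic, Cohen’s kappa compares the observed agreement between two labelings with the agreement expected by chance from their label frequencies. The sketch below implements the standard formula; the two label sequences are hypothetical and only illustrate the computation, not the review's actual re-labeling sample.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two equally long sequences of categorical labels."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("label sequences must be non-empty and equally long")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Chance agreement from the marginal label frequencies of each pass.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when only one label is used")
    return (observed - expected) / (1.0 - expected)


# Hypothetical first and second labeling passes of ten records.
first = ["Inverse"] * 4 + ["Surrogate"] * 3 + ["Material"] * 3
second = ["Inverse"] * 4 + ["Surrogate"] * 3 + ["Material"] * 2 + ["Surrogate"]
kappa = cohens_kappa(first, second)
print(round(kappa, 3))
```

In this toy case nine of ten labels agree (observed agreement 0.9) and the chance agreement is 0.34, so kappa is 0.56/0.66, slightly below the raw agreement rate.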

Examples of specific assignments include the following. A study titled Surrogate Modeling for Parameter Identification in Elastography, with synthetic training data and limited experimental validation, was labeled primarily as Inverse Analysis, Simulated, with Surrogate Methods, Experimental as secondary. A paper on mesh-independent field prediction with PINNs on CT-derived geometries based on clinical scans only was labeled Forward Modeling, Experimental, Physics-informed. A conference contribution on Operator Learning for Turbulent Flow Reconstruction, fine-tuning DeepONet on DNS snapshots, was labeled Forward Modeling, Simulated, Operator learning, Conference.

Cross tabulations in Section 4 are computed using primary labels, while secondary labels are used in sensitivity analyses presented in the Zenodo repository.

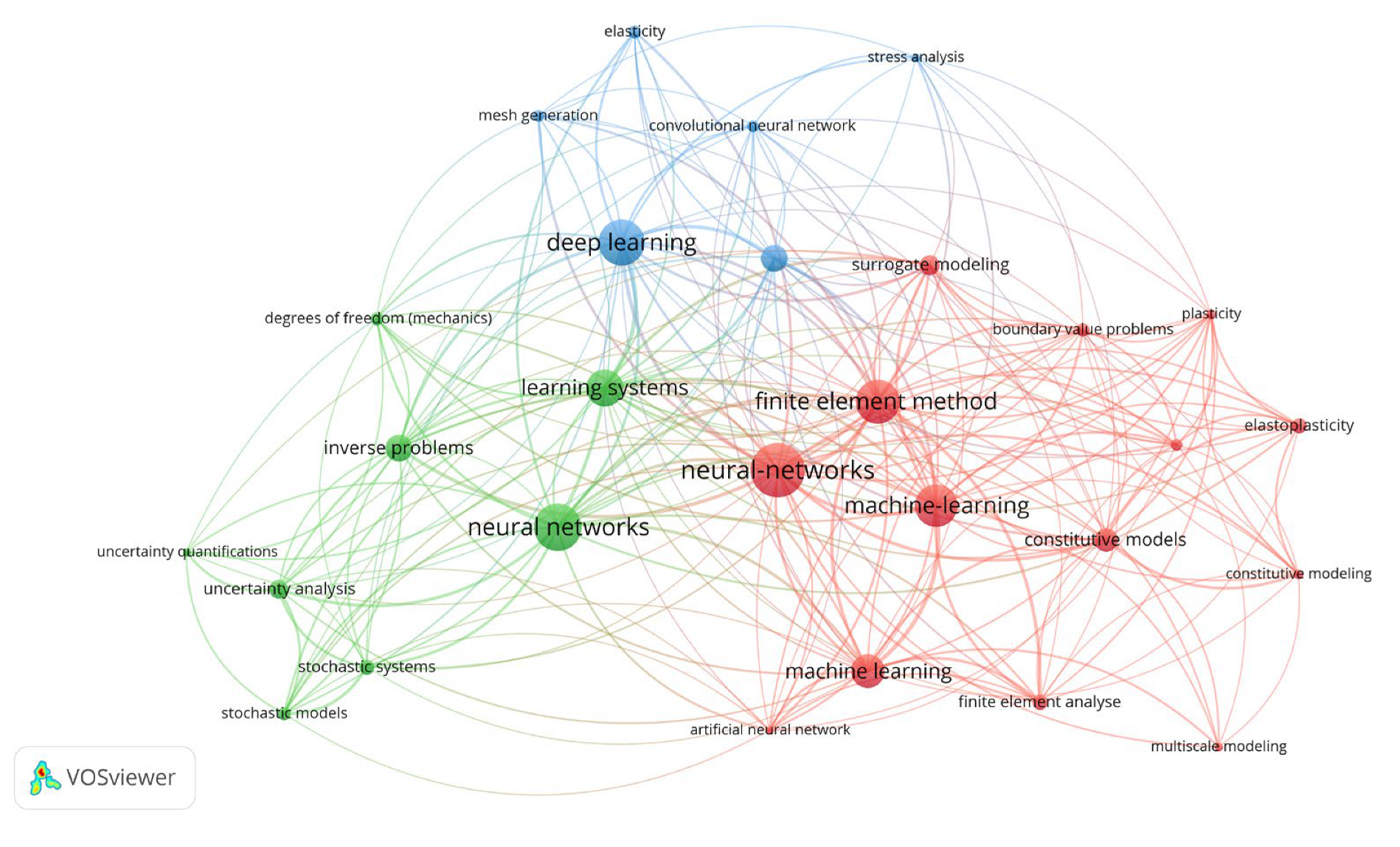

The term co-occurrence network in Figure 4 structures the conceptual space into three distinct clusters. The red cluster brings together machine-learning, finite element method, boundary value problems, and constitutive models, which reflects works combining classical mechanics tasks with ML methods. The green cluster includes neural networks, inverse problems, uncertainty analysis, and stochastic systems, indicating a line of research linking networks with inverse analysis and uncertainty. The blue cluster centers on deep learning and convolutional neural network, accompanied by terms such as mesh generation and elasticity, which confirms the broad applications of deep networks, particularly convolutional ones. The thickness of the edges between nodes illustrates the strength of co-occurrences, with especially visible links between the finite element method and machine-learning, as well as between inverse problems and neural networks.

2.7. Data Extraction and Benchmarks

From each publication, a standardized set of fields was extracted to ensure a comparable characterization of the studies and their results. The computational mechanics task was recorded, that is, Computational Methods, Material Modeling, Multiscale Modeling, Surrogate Methods, Stochastic Methods and Uncertainty, Inverse Analysis, together with the corresponding descriptors, among others Finite Element Method, Boundary Value Problems, Constitutive Models, Surrogate Modeling, Uncertainty Analysis, Inverse Problems. In addition, the data method class was recorded, that is, General Machine Learning, Core Neural Networks, Deep Neural Networks, with normalization of lexical variants, for example, Machine learning and Learning Systems to Machine Learning, Neural-networks to Neural Networks, Convolutional Neural Network and Convolutional Neural Networks to Deep Neural Networks. For clarity, the document type, the country of authors’ affiliation, and the methodological approach, Experiment, Literature Analysis, Case Study, Conceptual, were also included in accordance with the classification rules described in the working materials. This set was supplemented with technical fields, such as the type of equations and boundary conditions represented, geometry and mesh representation, dataset size and splitting, description of the architecture and loss function components, as well as information on computational costs and elements of reproducibility, code and data availability, and DOI identifiers.

Comparative measures were selected to reflect the nature of the tasks. For continuous fields and differential equations, L2 errors, MAE, MSE, and energy consistency indicators were included; for surrogate models, accelerations relative to reference solvers, as well as training and inference times were recorded; for inverse analyses and material identification, parameter errors and credibility measures were reported; and in some works, calibration metrics and uncertainty interval widths were also noted. The results were normalized to the most frequently reported metrics within each task class and compiled in comparative tables, with interpretive commentary linking algorithmic quality to computational costs and resource constraints.
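
For reference, the most frequently reported measures can be written out explicitly. The definitions below are the standard ones (mean absolute error, mean squared error, relative L2 error, and speedup as a ratio of wall-clock times); the function names and the sample values are illustrative and do not come from any reviewed study.

```python
import math


def mae(pred, ref):
    """Mean absolute error between predicted and reference values."""
    return sum(abs(p - r) for p, r in zip(pred, ref)) / len(ref)


def mse(pred, ref):
    """Mean squared error between predicted and reference values."""
    return sum((p - r) ** 2 for p, r in zip(pred, ref)) / len(ref)


def relative_l2(pred, ref):
    """Discrete relative L2 error: ||pred - ref||_2 / ||ref||_2."""
    num = math.sqrt(sum((p - r) ** 2 for p, r in zip(pred, ref)))
    den = math.sqrt(sum(r ** 2 for r in ref))
    return num / den


def speedup(t_reference: float, t_surrogate: float) -> float:
    """Ratio of reference solver time to surrogate inference time."""
    return t_reference / t_surrogate


# Illustrative nodal values of a field (not from any reviewed study).
ref = [3.0, 4.0]
pred = [3.0, 3.0]
print(mae(pred, ref), mse(pred, ref), relative_l2(pred, ref))
print(speedup(120.0, 0.5))
```

Speedups computed this way are only comparable across studies when the hardware and the inclusion or exclusion of training time are reported alongside them.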

The bibliometric layer was implemented using VOSviewer version 1.6.20, and density maps of terms and co-occurrence networks of keywords were prepared to identify thematic clusters and central nodes. Each visualization was accompanied by the recommended caption.

The synthesis of results was carried out along two lines. First, thematic synthesis within the six categories of computational mechanics and three classes of data methods, which allows linking specific tasks with model types and methods of embedding physical knowledge, among others boundary conditions in the loss function, energy constraints, operator learning. Second, quantitative aggregation covering geographic distribution, document types, and methodological approaches, with the possibility of constructing cross-tables, for example, data method by mechanics category, as well as analyzing popularity trends within the corpus.

The description of the procedure remains consistent with transparency guidelines: the Scopus query with filters, the inclusion and exclusion criteria, definitions of classification categories, and the full list of 98 publications with metadata are prepared for release in the Zenodo repository. In this way, the subsection integrates extraction, comparative measures, bibliometrics, and synthesis, which ensures reproducibility of conclusions and enables updating of the review in subsequent editions.

2.8. Limitations

The scope of the review is defined by a single source, the Scopus database, the English language, and the years 2015–2024, as well as a restriction on the areas of Computer Science and Engineering. This choice guarantees consistent metadata and uniform selection criteria, while introducing the risk of omitting publications indexed exclusively in other databases and works in other languages. Indexing delays must also be taken into account, which may result in underestimation of the newest items at the end of the study period.

A further limitation arises from the construction of the search query. EXACTKEYWORD descriptors were used for both the terminology of computational mechanics, for example, Finite Element Method, Surrogate Modeling, Inverse Problems, and data methods, for example, Machine Learning, Neural Networks, Convolutional Neural Network, which increases precision but may remove works that use less common synonyms or a different naming convention. This risk was mitigated by jointly searching the Title, Abstract, and Keywords fields and by normalizing lexical variants. Nevertheless, individual false negatives cannot be ruled out, nor, conversely, can borderline items classified into the corpus.

The selection procedure included independent double screening and consensus decisions. Despite these safeguards, classification along five dimensions, that is, computational mechanics category, data method class, country of affiliation, document type, and methodology, contains an element of expert judgment. Subjectivity concerns in particular multi-topic cases and publications with concise descriptions of data and metrics. A normalization glossary was therefore used and decision justifications were recorded; nonetheless, isolated ambiguities may remain.

A significant barrier to comparisons is the heterogeneity of tasks and metrics. The analyzed works concern different equations and boundary conditions, diverse representations of geometry and meshes, and differing validation protocols. Authors use different error measures, for example, L2, MAE, MSE, and energy indicators, and in surrogate models, they report computational speedups under different hardware configurations. Common benchmarks and consistent descriptions of computational costs are rare, thus there is no basis for a formal meta-analysis of effects, and comparative conclusions are descriptive in nature.

The bibliometric layer is based on keyword co-occurrence analysis and VOSviewer visualizations. The maps are descriptive: they reflect the structure of indexing and term frequency, not the quality of methods or strength of evidence. Results depend on the threshold for including terms, the rules for merging synonyms, and the choice of clustering algorithm; therefore, interpretations of clusters and concept centrality should be related to the substantive context of the review and not treated as causal indicators.

At the external level, publication and selection bias must be considered. Positive results dominate in technical literature, while reports of failures and negative outcomes are rare, which may inflate expected performance measures. The review does not include gray literature and preprints outside Scopus, for example, industrial reports and internal materials, which often contain information on deployments and operational constraints.

The generalizability of the conclusions is limited by environmental and computational differences. Applications of ML and NN in computational mechanics depend on data size and quality, mesh resolution, hardware configuration, for example, GPU, and adopted simplifications, for example, 2D instead of 3D. Many studies rely on synthetic data or limited experiments, and results under industrial conditions may differ from those presented in articles. Some publications do not report prediction uncertainty or full computational costs, which hinders the assessment of risk and scalability.

The above limitations do not invalidate the conclusions of the review, but they delineate the boundaries of their applicability. Subsequent iterations are planned to extend the query to additional databases, including IEEE Xplore and Web of Science, to consider selected languages other than English, to refine the synonym glossary, and to report the classification agreement coefficient. It is recommended to promote open benchmarks, standardized evaluation protocols, and transparent reporting of computational costs and uncertainty, which will enable more rigorous comparisons in future work.

The literature coverage extends through 2025; due to indexing latency in Scopus, some very recent items may not yet be captured at the time of querying. This limitation was mitigated by targeted manual screening of references and the inclusion of 2025 publications identified through journal websites and cross citations.

3. State of the Art

Section 3 constitutes the core of the study and is divided into four complementary subsections.

Section 3.1 presents the main currents of contemporary computational mechanics, grouped into six thematic categories: Computational Methods, Material Modeling, Multiscale Modeling, Surrogate Methods, Stochastic Methods and Uncertainty, and Inverse Analysis. Their role is discussed in solving problems governed by differential equations, in constitutive modeling, in inverse analysis, and in the development of surrogate models.

Section 3.2 focuses on the three main classes of data-driven methods: General Machine Learning, Core Neural Networks, and Deep Neural Networks. Their applications are presented as tools supporting material modeling, approximation of complex physical phenomena, uncertainty quantification, and inverse design.

Section 3.3 outlines future directions of development and synthesizes conclusions from the two preceding subsections, highlighting key avenues for further research, such as the advancement of differentiable solvers, models with physical guarantees, and the standardization of validation and uncertainty quantification procedures. The chapter concludes with Section 3.4, which summarizes the key observations and formulates practical implications for the further development of computational mechanics and data-driven methods.

3.1. Computational Mechanics Methods and Modeling

The starting point of contemporary computational mechanics is the tight coupling of boundary equation discretization with the approximation of physical fields and, increasingly, with machine learning. Mature approaches, from classical FEM to meshfree and variational methods, are now being enriched with neural networks, which either replace selected stages of computation or enter the solver as “components” of numerical methods. This methodological shift is well illustrated by works in which the network becomes part of the method, from the Neural Element Method (NEM), in which neurons construct shape functions and stiffness matrices, creating a bridge between ANNs and the weak and W2 formulations [16], to integrated I-FENN frameworks, where a PINN maps the nonlocal response directly into the definition of element stiffness and its derivatives, in order to drive the nonlinear solver to convergence at a cost comparable to local damage models [17]. Against this backdrop, meshfree EFG formulations with MLS approximation are developing in parallel, here combined with HSDT9 and a lightweight network for instantaneous prediction of FGM plate deflections, which shows a measurable gain in time and accuracy relative to FEM [18]. In the opposite direction are concepts in which FEM integrates an NN as an exchangeable solver component, and the FEMIN frameworks aggressively replace parts of the mesh with a neural model to accelerate crash simulations without loss of fidelity [19]. Yet another line is represented by HiDeNN-FEM, where the network takes over the role of constructing shape functions and r-adaptivity, boosting accuracy and suppressing “hourglass” modes in nonlinear 2D/3D problems [20]. Finally, the differentiable, GPU-accelerated JAX-FEM solver opens an “off the shelf” path to inverse design, because sensitivities here are a by-product of automatic differentiation [21]. These trends, element as network, network as element, and solver as differentiable graph, create a common language of next-generation computational methods.

A special place is occupied by physics-informed neural networks (PINNs) and related energy methods. In elastodynamics, PINNs with mixed outputs (displacements and stresses) and enforced satisfaction of initial and boundary conditions (I/BCs) overcome the known difficulties of classical PINNs, especially for complex boundary conditions [22]. The “meshless + PINN” formulation shows that deep collocation can reliably reproduce the response of elastic, hyperelastic, and plastic materials without generating FEM-labeled data [23]. In optimization applications, the deep energy method (DEM) serves both for solving the forward problem and for formulating a fully self-supervised topology optimization framework, where sensitivities arise directly from the DEM displacement field, so a second “inverse” network becomes unnecessary [24]. PINNTO, in turn, replaces finite element analysis in the SIMP topology optimization loop with a dedicated, energy-based PINN, so that design can proceed without labeled data [25]. In elliptic BVPs, it has been shown that loss-function modifications enable convolutional networks to act as FEM surrogates, with accuracies comparable to Galerkin discretizations [26], while variational PINNs provide more precise identification of material parameters, also for heterogeneous distributions [27].

Today, constitutive modeling is a key arena where physics and data meet. Instead of directly predicting stress, the SPD-NN architecture learns the Cholesky factor of the tangent stiffness, which weakly enforces energy convexity, temporal consistency, and Hill’s criterion, and in practice stabilizes FEM computations with history-dependent materials [28]. The “training with constraints” approach improves hyperelasticity learning by imposing energy conservation, normalization, and material symmetries, which increases solver convergence stability [29]. In classes of models that guarantee polyconvexity from the outset, neural ODEs introduce monotonic derivatives of the energy with respect to invariants, which ensures the existence of minima and successfully transfers to experimental skin data [30]. Deep long-memory networks reproduce viscoplasticity with memory of rate and temperature, satisfying path consistency conditions and capturing history effects in solders [31]. When scale effects are nonlocal, CNN hybrids can learn nonlocal closures without explicitly known submodels, thanks to the convolutional structure that follows from the formal solution of the transport PDE [32]. In geotechnics, a tensorial, physics-encoded formulation respects stress invariants and porosity, maintaining the requirements of isotropic hypoplasticity and readiness for integration in BVP solvers [33]. In turn, geometric DL, graphs, and Sobolev training learn anisotropic hyperelasticity from microstructures, taking care of the smoothness of the energy functional and the correctness of its derivatives [34], and graph embeddings allow interpretable internal variables for multiscale plasticity [35]. In soft materials, conditional networks (CondNN) compactly parameterize the influence of rate, temperature, and filler on full constitutive curves of elastomers [36], and RNN-based descriptions are also integrated into gradient damage frameworks, avoiding localization [37]. A synthetic comparison of “model-free” and “model-based” approaches for computational homogenization explains when constitutive NNs underperform or outperform DDCM with distance minimization or entropy maximization, and how pre- and post-processing costs differ [38].
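
The idea of learning a Cholesky factor instead of the tangent stiffness itself, mentioned above for SPD-NN, rests on a simple algebraic fact: any matrix of the form C = L Lᵀ with a lower-triangular L and a positive diagonal is symmetric positive definite. The sketch below illustrates this for a 2×2 case. The exponential map on the diagonal and the function names are assumptions chosen for illustration; they are not claimed to be the parameterization used in the cited work.

```python
import math


def tangent_from_raw(raw):
    """Map three unconstrained numbers to a 2x2 SPD matrix C = L @ L.T.

    raw = (a, b, c) gives L = [[exp(a), 0], [b, exp(c)]]; the exponential
    (an assumed choice) keeps the diagonal of L strictly positive.
    """
    a, b, c = raw
    l11, l21, l22 = math.exp(a), b, math.exp(c)
    return [[l11 * l11, l11 * l21],
            [l11 * l21, l21 * l21 + l22 * l22]]


def is_spd_2x2(m):
    """Sylvester's criterion for a 2x2 matrix: symmetric, positive minors."""
    det = m[0][0] * m[1][1] - m[0][1] * m[1][0]
    return m[0][1] == m[1][0] and m[0][0] > 0 and det > 0


# Stand-in for raw network outputs (hypothetical values).
C = tangent_from_raw((0.3, -2.0, -1.0))
print(is_spd_2x2(C))
```

Whatever values the raw outputs take, the resulting matrix stays positive definite in exact arithmetic, which is why such a parameterization supports stable tangent operators inside a nonlinear solver.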

Beyond the material laws themselves, flows of information across scales are important. Fully connected networks trained on RVE data of fibrous materials can reproduce energy derivatives with respect to invariants and be plugged in as UMATs in FEM, linking the micro-network with the macro-simulation without painful on-the-fly coupling [39]. CNNs with PCA predict complete stress–strain curves of composites, also beyond the elastic limit, enabling high-throughput design with limited data [40]. In classical FE², a “data-driven” mechanics with adaptive sampling controlled by a DNN has been proposed, which significantly reduces offline cost while maintaining the quality of the macro response [13]. Meta-modeling games with DRL automate the selection of hyperparameters and the “law architecture” for NN-based elastoplasticity in a multiscale approach [41]. A broader perspective on the roles of ML in multiscale modeling, from homogenization to materials design, is outlined by cross-sectional reviews [42,43]. In elastoplastic composites, combining computational homogenization with ANN makes it possible to build DDCM databases more cheaply, yet with high fidelity in 3D tasks [44].

In parallel, an ecosystem of surrogate methods that accelerate analysis is maturing. U-Mesh, a U-Net-type architecture, approximates the nonlinear force–displacement mapping in hyperelasticity and works across many geometries and mesh topologies, with small errors relative to POD [45]. CNNs trained on FEM data solve torsion for arbitrary cross-sections, bypassing laborious discretization [46], and Bayesian operator learning, VB-DeepONet, adds credible a posteriori uncertainties and better generalization than deterministic DeepONet [47]. In fluid–structure interaction, networks take over the role of one of the sub-solvers, shortening cosimulation time without loss of accuracy [48], and in MEMS, NN surrogates enable efficient Bayesian calibration with MCMC despite the cost of FEA [49]. In the domain of general shapes, a multiresolution network interpolated to mesh nodes predicts scalar fields (stresses, temperature) on arbitrary input meshes with R² close to 0.9–0.99, which makes it a realistic alternative to FEM in design loops [50]. Moreover, a cGAN transferred from image processing allows near real-time emulation of FEM responses (deflections, stresses) with 5–10% error after only 200 training epochs [51]. These surrogates are supported by “Sobolev training” techniques with residual weighting, which insert partial derivatives into the loss function, reducing generalization error in linear and nonlinear mechanics [52], and by meshfree NIM hybrids that integrate variational formulations with differentiable programming [53]. Even an apparently “minor” component, such as Gaussian quadrature, can be learned and adapted to the element and material, which translates into savings in stiffness matrix integration [54].
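
The principle behind the Sobolev training mentioned above is that the loss penalizes mismatches in derivatives as well as in values. The sketch below shows the simplest form, a mean squared value error plus a weighted mean squared derivative error. The fixed scalar `weight`, the function name, and the toy data are assumptions for illustration; the residual-weighting scheme of the cited work is not reproduced here.

```python
def sobolev_loss(pred, pred_grad, ref, ref_grad, weight=1.0):
    """Mean squared value error plus weighted mean squared derivative error."""
    n = len(ref)
    value_term = sum((p - r) ** 2 for p, r in zip(pred, ref)) / n
    grad_term = sum((p - r) ** 2 for p, r in zip(pred_grad, ref_grad)) / n
    return value_term + weight * grad_term


# Toy data: reference u(x) = x**2 with u'(x) = 2x, sampled at x = 0, 1, 2,
# compared with a hypothetical model u_hat(x) = x with u_hat'(x) = 1.
xs = [0.0, 1.0, 2.0]
ref = [x ** 2 for x in xs]
ref_grad = [2.0 * x for x in xs]
pred = list(xs)
pred_grad = [1.0 for _ in xs]

loss = sobolev_loss(pred, pred_grad, ref, ref_grad, weight=0.5)
print(round(loss, 4))
```

Here the model matches the reference values at two of three points, yet the derivative term still contributes most of the loss, which is the signal that derivative supervision adds during training.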

The new paradigm does not avoid uncertainty; on the contrary, it models and exploits it. An experimental–numerical program for C40/50–C50/60 concretes combines tests with ANN-based identification of fracture parameters, in order to directly propose stochastic parameter models [

55]. For FGM shells, SVM provides a fast substitute for Monte Carlo in analyzing temperature effects on natural frequencies, with verification against classical MCS [

56]. ROMES frameworks and related error models learn regressions of residual indicators to predict the error of approximate solutions, which provides quality control for nonlinear parametric equations [

57]. Nonparametric probabilistic learning by Soize–Farhat makes it possible to model model-form uncertainty and identify hyperparameters through a statistical inverse problem, and then accelerate it with a predictor–corrector scheme [

58]. When probability distributions are lacking, interval networks (DINNs) propagate interval uncertainties through cascades of models, providing credible prediction ranges [

59]. In laminated composites, ANNs faithfully reproduce first-ply failure statistics when compared directly against Monte Carlo [

60], and in forecasting fatigue crack growth, a selected NN architecture can operate in real-time with uncertain inputs [

61]. In computational turbulence, an elegant, frame-independent representation of stress tensor perturbations by unit quaternions has been proposed, which is crucial for UQ and ML in RANS modeling [

62]. Review syntheses in geotechnics emphasize that input selection and data representation determine AI effectiveness under high material uncertainty [

63].
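
How interval uncertainty can be pushed through a network layer may be easier to see in code. The sketch below uses generic interval arithmetic for one affine layer followed by ReLU; it is not the DINN algorithm of the cited work, and the weights, biases, and input bounds are hypothetical values chosen for illustration.

```python
def affine_interval(lo, hi, weights, bias):
    """Propagate the box [lo, hi] through y = W x + b using interval arithmetic."""
    out_lo, out_hi = [], []
    for row, b in zip(weights, bias):
        y_lo = y_hi = b
        for w, l, h in zip(row, lo, hi):
            # A non-negative weight maps lower bound to lower bound;
            # a negative weight swaps the roles of the two bounds.
            y_lo += w * l if w >= 0 else w * h
            y_hi += w * h if w >= 0 else w * l
        out_lo.append(y_lo)
        out_hi.append(y_hi)
    return out_lo, out_hi

def relu_interval(lo, hi):
    """ReLU is monotone, so it can be applied to each bound separately."""
    return [max(0.0, v) for v in lo], [max(0.0, v) for v in hi]

# Hypothetical two-input, two-neuron layer with interval-valued inputs.
W = [[1.0, -2.0], [0.5, 0.5]]
b = [0.0, -1.0]
x_lo, x_hi = [0.9, 0.1], [1.1, 0.3]

z_lo, z_hi = affine_interval(x_lo, x_hi, W, b)
a_lo, a_hi = relu_interval(z_lo, z_hi)
```

For a single affine layer these bounds are tight for each output; when layers are cascaded, plain interval arithmetic usually becomes conservative because correlations between intermediate quantities are ignored.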

Inverse problems are the natural culmination of this trend. Galerkin graph networks with a piecewise polynomial basis strictly impose boundary conditions and assimilate sparse data, solving forward and inverse tasks in a unified manner on unstructured meshes [

64]. In heat conduction and convection, combining simulation and deep networks allows boundary conditions to be recovered in strongly nonlinear problems from only a few temperature measurements [

65]. In geophysics, deep networks for borehole resistivity inversion require carefully designed loss functions and error control to ensure real-time stability [

66], while alternative migration, learning, and cost-functional methods reveal the positions of cracks in brittle media [

67]. Differentiable approaches, such as JAX FEM, turn full 3D FEA into a component of gradient programming, which automates inverse and topology design [

21,

68]. At material scales, from hierarchical wrinkling to the thermal conductivity of composites, surrogate models and parameter-space planning enable inversion on a minute time scale instead of multi-day searches [

69], and stable determination of liquid metal microfoam with a prescribed thermal conductivity [

70]. In 3D/4D printing, reviews clearly indicate that ML is becoming a primary tool for inverse design of mechanical properties and active shape [

71].

All the above threads also intertwine at the level of theoretical and methodological foundations. BINNs propose a boundary-integral formulation with networks that naturally embed boundary conditions and reduce problem dimensionality [

72]. For PINNs, a priori error estimates have been derived, combining Rademacher analysis with Galerkin least squares, which form a basis for convergence theory [

73]. Reviews of deep learning in mechanics synthesize five roles of DL (substitution, enhancement, discretizations as networks, generative approaches, and RL), organizing the dynamic landscape of methods [

74], while other surveys cover the “classics and the state of the art,” from LSTMs to transformers and hyper-reduction [

75]. In structural practice, deep and physics-informed networks can in places replace FEA in accurately reproducing stress and strain fields, although PINNs show greater generalization capacity [

76], and qualitative inference from networks trained on FEM data can meaningfully support structural assessment [

77]. In topology optimization, DNNs significantly accelerate sensitivity analysis by mapping the sensitivity field from a reduced mesh back to a fine mesh [

78].

Finally, it is worth noting the rapidly developing “games on data.” Data-driven games between the “stress player” and the “strain player” provide nonparametric, unsupervised effective laws that reduce to classical displacement-driven boundary problems [

79]. In turn, a non-cooperative meta-modeling game uses two competing AIs for automatic calibration, validation, and even falsification of constitutive laws, through experiment design and adversarial attacks, in order to realistically describe the range of model applicability [

80]. At the very foundations of fracture micromechanics, diffusion–transformer models are emerging that generalize beyond atomistic databases, predicting crack initiation and dynamics [

81]. Complementing this panorama is a reflection on the role of LLMs in applied mechanics, not only as assistive tools, but as potential interfaces for exploring mechanical knowledge and designing computations [

82].

The article [

83] provides an overview of advances in computational mechanics and numerical simulations, including CFD, gas dynamics, multiscale modeling, and classical discretization tools. The paper [

84] presents the use of artificial neural networks to identify the parameters of poroelastic models, combining the ANN approach with asymptotic homogenization and the finite element method to characterize materials with varying porosity and Poisson's ratio of the solid matrix. The paper [

85] presents a solution to the statistical inverse problem in multiscale computational mechanics using an artificial neural network, combining probabilistic modeling with uncertainty analysis and identification of parameters of heterogeneous materials. The paper [

86] presents an adaptive surrogate model based on the local radial point interpolation method (LRPIM) and directional sampling technique for probabilistic analysis of the turbine disk, demonstrating improvements in computational accuracy and efficiency compared to classical response surface models, Kriging, and neural networks. The article [

87] provides an overview of surrogate modeling techniques used in structural reliability problems, including ANN, kriging, and polynomial chaos in combination with LHS and Monte Carlo simulation, with a focus on reducing computational costs in uncertainty analyses. The paper [

88] proposes a hybrid approach to describe the elastic–plastic behavior of open-cell ceramic foams, combining a homogenized material model with interpolation of FEM simulation results using neural networks, which significantly reduced the computational effort. The paper [

89] presents a method of stochastic neural network-assisted structural mechanics, in which the phase angles of the spectral representation are used as inputs, and the network, trained on a small subset of Monte Carlo samples, accelerates uncertainty calculations regardless of the size of the FEM model.

Within Category Group 1, a coherent architecture of methods is taking shape: (i) hybrid discretizations, with elements and meshes as networks and networks in the solver; (ii) PINNs and energy methods carrying BCs, ICs, and physical conditions in the loss function; (iii) constitutive NNs with structures enforcing thermodynamics and convexity; (iv) multiscale models integrating ANNs, UMAT, and data-driven FE2 approaches; (v) surrogates and operator learning with UQ and credibility; and (vi) inversion based on differentiable simulation. The most urgent tasks include formal error estimates and convergence criteria, especially for 3D PINNs, standardization of surrogate model credibility with an uncertainty measure, and scaling to geometries and meshes of arbitrary topology without loss of computational stability. These directions are already outlined in the cited works, and their further integration foreshadows the next generation of computational methods that will “converse” equally well with physical equations and with data.

To conclude the analysis of methods in

Section 3.1, a summary has been prepared in

Table 1, which organizes the discussed threads into the subcategories Computational Methods, Material Modeling, Multiscale Modeling, Surrogate Methods, Stochastic Methods and Uncertainty, and Inverse Analysis. For each group it presents the leading theme; data types and sensors (for example, computational or FEM fields, DIC, strain gauges, microstructure images); the research task; the models and techniques used (including PINNs or variational PINNs, operator learning with FNO or DeepONet, GNNs, meshfree methods, ROMs or surrogates); and the metrics and implementation requirements (L2, MAE, or MSE, energy measures, enforcement of BCs and ICs, numerical stability, thermodynamic consistency, speedups relative to FEM), together with references [n]. The summary serves as a map of concepts and methods, unifies terminology throughout the chapter, enables comparison of data ranges and algorithms, and provides an assessment of operational readiness, runtime, and approaches to uncertainty, which closes the methodological part and prepares the basis for the synthetic conclusions in

Section 3.3.

The data in

Table 2 show that IM-CNN models operating on arbitrary meshes achieve high field accuracy (R² approximately 0.9 to 0.99), generative cGAN emulators maintain about 5 to 10 percent error with near real-time inference, and differentiable solvers such as JAX-FEM deliver roughly a tenfold speedup at very large numbers of degrees of freedom. EFG-ANN hybrids can reduce computational time by up to about 99.94 percent in specific configurations, CNN plus PCA methods for composites keep mean errors below 10 percent, and machine-learning-based inverse design shortens the exploration of million-scale design spaces from more than ten days to under one minute. Taken together, this confirms that different classes of methods occupy distinct points on the accuracy–cost–robustness trade-off, which we revisit in the synthesis in

Section 3.3.
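
To compare the percentage reductions and wall-clock durations quoted above on a common scale, they can be converted into speedup factors. The symbols r (fractional time reduction) and S (speedup) are introduced here only for this illustration, and the second ratio uses the quoted bounds of ten days and one minute as nominal values.

```latex
S = \frac{t_{\mathrm{ref}}}{t_{\mathrm{new}}} = \frac{1}{1-r},
\qquad
r = 0.9994 \;\Rightarrow\; S = \frac{1}{0.0006} \approx 1667,
\qquad
\frac{10\ \text{days}}{1\ \text{min}}
  = \frac{10 \times 24 \times 60\ \text{min}}{1\ \text{min}} = 14\,400 .
```

Under these assumptions, a 99.94 percent time reduction corresponds to a speedup of roughly 1.7 × 10³, and the inverse-design case corresponds to a factor above about 1.4 × 10⁴, both far larger than the roughly tenfold gain quoted for differentiable solvers.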

Reporting is standardized by explicitly distinguishing the offline cost of generating micromodel databases from the online cost at the macroscale. For multiscale pipelines, the offline profile states the database cardinality, approximate data size and generation time, and the sampling strategy used to cover the material state space. The online profile states the macro mesh size in elements, the accuracy tolerance relative to the reference model, the typical per-step runtime in milliseconds, and the wall-clock speedup at matched accuracy tolerance together with any observed accuracy loss. In practice, UMAT–NN couplings trade a substantial offline investment for stable macro-level solves, data-driven FE2 with adaptive sampling reduces both offline and online burdens by focusing queries in high-value regions of the state space, and deep material networks integrated at the macro level provide large online gains once a suitable database has been curated. Across the studies discussed here, reported speedups range from several times to tens or even hundreds of times at comparable accuracy, which is critical for design loops and parametric studies.
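
The offline and online split described above can be captured in a small record, together with the number of macro steps after which the offline investment is amortized. This is a minimal sketch: the class name `CostProfile`, its fields, and all numbers are hypothetical and do not come from any of the cited studies.

```python
from dataclasses import dataclass

@dataclass
class CostProfile:
    offline_hours: float          # one-off database generation and training
    online_ms_per_step: float     # surrogate runtime per macro step
    reference_ms_per_step: float  # reference solver runtime per macro step

    def online_speedup(self) -> float:
        """Wall-clock speedup per step, ignoring the offline cost."""
        return self.reference_ms_per_step / self.online_ms_per_step

    def break_even_steps(self) -> float:
        """Number of macro steps after which the offline cost is paid back."""
        saved_ms = self.reference_ms_per_step - self.online_ms_per_step
        if saved_ms <= 0:
            return float("inf")  # the surrogate never pays off
        return self.offline_hours * 3_600_000.0 / saved_ms

# Hypothetical numbers: 10 h offline, 5 ms online versus 505 ms reference.
profile = CostProfile(offline_hours=10.0,
                      online_ms_per_step=5.0,
                      reference_ms_per_step=505.0)
speedup = profile.online_speedup()
break_even = profile.break_even_steps()
```

Reporting the break-even count alongside the per-step speedup makes it clearer whether a surrogate is worthwhile for a single analysis or only for design loops and parametric studies.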

Cross-family transfer beyond the training distribution is made explicit by adopting a protocol in which models are trained on one family of shapes or meshes and evaluated on a distinct family, and stress tests are performed at loads between 1.2 and 1.5 times the training envelope. Performance is assessed by standard field errors and by physics-residual monitors that quantify departures from equilibrium or energy balance. Within this protocol, IM-CNN-style surrogates tend to transfer well when boundary conditions and loading patterns remain compatible, whereas residuals can increase when topological differences or mesh-quality gaps are pronounced. U-Mesh-style surrogates offer fast inference across diverse discretizations but benefit from explicit domain control to maintain physical plausibility under extrapolative loads. Reporting both error and residual indicators helps define safe operating ranges for deployment.
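
The two kinds of indicators in this protocol, a field error and a physics-residual monitor, can be sketched on a toy problem. The three-spring chain, the load factor of 1.5, and the "surrogate" displacements below are made-up illustrative values, and the function names are assumptions rather than an established API.

```python
import math

def relative_l2_error(u_pred, u_ref):
    """Relative L2 error of a predicted field against a reference field."""
    num = math.sqrt(sum((p - r) ** 2 for p, r in zip(u_pred, u_ref)))
    den = math.sqrt(sum(r ** 2 for r in u_ref))
    return num / den

def equilibrium_residual(K, u, f):
    """Norm of the out-of-balance force K u - f, relative to the norm of f."""
    r = [sum(k * uj for k, uj in zip(row, u)) - fi for row, fi in zip(K, f)]
    return math.sqrt(sum(v ** 2 for v in r)) / math.sqrt(sum(v ** 2 for v in f))

# Toy reference (assumption): three unit springs in series, fixed at the left
# end, unit load at the free end, so the exact displacements are 1, 2, 3.
K = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 1.0]]
f_train = [0.0, 0.0, 1.0]
u_train = [1.0, 2.0, 3.0]

# Stress test at 1.5 times the training load envelope.
load_factor = 1.5
f_test = [load_factor * v for v in f_train]
u_ref_test = [load_factor * v for v in u_train]
u_surrogate = [1.5, 3.0, 4.2]  # made-up output that underpredicts the tip

field_error = relative_l2_error(u_surrogate, u_ref_test)
physics_residual = equilibrium_residual(K, u_surrogate, f_test)
```

In this toy case the residual is noticeably larger than the field error, which illustrates why reporting both indicators can flag extrapolative predictions that a field metric alone would rate as acceptable.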

Inverse settings require robustness to be documented with respect to additive noise and sensor sparsity. A practical protocol perturbs observations with one to five percent Gaussian noise and reduces sensor density by factors of ten and twenty, while tracking the error in both parameters and reconstructed fields. Accuracy typically degrades more with sensor sparsity than with mild noise, which suggests that penalization alone is insufficient. Effective stabilization combines Sobolev or Tikhonov regularization with physics barriers and, where possible, hard or variational enforcement of boundary conditions. Bayesian or ensemble-based operator learning provides calibrated uncertainty that supports decision making when data are scarce. Reports therefore include the exact noise levels, sensor density and layout, the chosen regularization, and the resulting confidence measures alongside point estimates.
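
A robustness study of this kind can be sketched on a deliberately simple inverse problem: recovering the two parameters of a linear field from noisy, thinned sensor data with Tikhonov regularization toward a prior guess. Everything specific here is an assumption made for illustration, including the linear forward model, the true and prior parameter values, the 200-sensor baseline, the interpretation of the noise level as a fraction of the peak signal, and the regularization weight.

```python
import math
import random

def tikhonov_line_fit(xs, ys, lam=0.0, prior=(0.0, 0.0)):
    """Minimise sum (a + b*x - y)^2 + lam * ||(a, b) - prior||^2 in closed form."""
    n = len(xs)
    sx, sxx = sum(xs), sum(x * x for x in xs)
    sy, sxy = sum(ys), sum(x * y for x, y in zip(xs, ys))
    a11, a12, a22 = n + lam, sx, sxx + lam          # regularised normal matrix
    r1, r2 = sy + lam * prior[0], sxy + lam * prior[1]
    det = a11 * a22 - a12 * a12
    return (r1 * a22 - a12 * r2) / det, (a11 * r2 - a12 * r1) / det

def run_protocol(noise_level, stride, lam, seed=0):
    rng = random.Random(seed)
    a_true, b_true = 2.0, -1.0                      # hypothetical ground truth
    x_full = [i / 199 for i in range(200)]
    x_obs = x_full[::stride]                        # stride 10 or 20 thins the sensors
    clean = [a_true + b_true * x for x in x_obs]
    sigma = noise_level * max(abs(v) for v in clean)
    y_obs = [v + rng.gauss(0.0, sigma) for v in clean]
    a, b = tikhonov_line_fit(x_obs, y_obs, lam=lam, prior=(1.5, -0.5))
    param_err = math.hypot(a - a_true, b - b_true)
    # RMS error of the reconstructed field on the full (unthinned) grid.
    field_err = math.sqrt(
        sum(((a - a_true) + (b - b_true) * x) ** 2 for x in x_full) / len(x_full)
    )
    return {"n_sensors": len(x_obs), "param_err": param_err, "field_err": field_err}

# Noise levels of 1 and 5 percent, sensor density reduced by factors of 10 and 20.
results = {
    (noise, stride): run_protocol(noise, stride, lam=1e-2)
    for noise in (0.01, 0.05)
    for stride in (1, 10, 20)
}
```

Each entry of `results` records the sensor count together with the parameter and field errors, which mirrors the reporting items listed above; a fuller study would repeat each setting over several seeds and add confidence measures.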

3.2. Machine Learning and Neural Networks

This chapter shows how machine learning methods, from classical shallow networks to deep architectures with embedded physical knowledge, are increasingly interwoven with computational mechanics: as approximators of constitutive laws, as fast solvers of differential equations, and as tools for inverse design and uncertainty quantification.

First, it is useful to capture the idea of the “network as a finite element,” which builds a bridge between ANNs and classical discretizations. The author of the Neural Element Method demonstrated that neurons can be used to construct shape functions and then employed in weak and weakened weak formulations, which provides an explicit link between ANNs and FEM or S-FEM. Numerical demonstrations confirmed the feasibility of the approach and opened the way to new loss functions with desirable convexity in machine learning [

16]. In a similar spirit, HiDeNN FEM ties hierarchical DNNs to nonlinear FEM, introducing differentiation blocks, r-adaptivity, and material derivatives, which in 2D and 3D significantly reduces element distortions and increases accuracy; the prospect is smooth integration with any existing solver [

20]. A bolder move is to replace parts of the mesh directly with a network and plug it into the FEM code. The FEMIN platform for crash simulations proposes a temporal TgMLP and an LSTM variant as state predictors, showing that fidelity can be preserved while computation time is reduced; the next step is generalization to multi-material brittle–plastic scenarios [