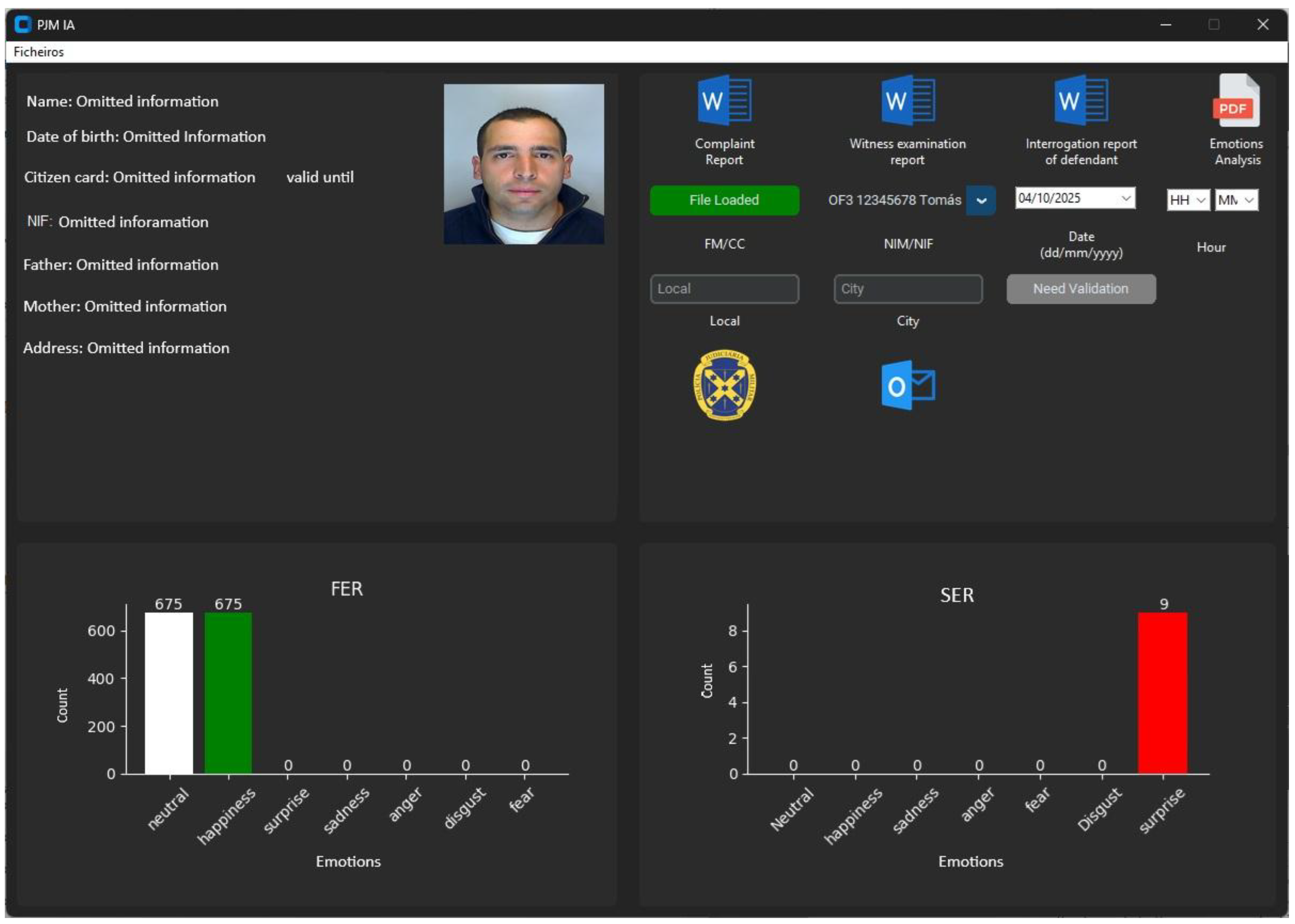

The INTU-AI software was officially launched as a standalone executable (.exe) [52] for the Windows operating system at the end of January 2025. Owing to privacy concerns that made real-world interrogation scenarios unfeasible, system validation was conducted during the PJM inspector training sessions in February and March 2025, involving PJM inspector trainees acting as both interviewees and interviewers. Additional testing is underway, with a focus on system performance, compatibility, security, and data confidentiality, drawing on input from the PJM. Presently, the project is in its preliminary post-deployment stage, which involves corrective maintenance and iterative improvements based on user feedback. Next, we present the results of a questionnaire answered by 23 PJM trainees.

Questionnaire and Results

A 12-question structured questionnaire was developed and administered to assess the system qualitatively [53]. The survey was distributed to 23 instructor officers and investigators from the PJM, with the goal of collecting feedback across several dimensions: usability, existing functionalities, practical utility of the program, user experience, and open-ended qualitative responses. Respondents completed the evaluation individually and anonymously through a shared online link [53].

The assessment instrument employed a 5-point Likert scale [54], shown in Table 5, with items adapted from the validated technology acceptance model [55]. This approach allowed for both quantitative measurement of user perceptions and qualitative analysis of open-ended feedback.

Table 6 provides an overview of the dimensions assessed by the questionnaire administered in this study.

The results obtained from the administered questionnaires are presented in Figure 11, Figure 12, Figure 13 and Figure 14, organized according to the evaluation dimensions described in Table 6. The figures show the percentage of answers at each Likert level. For the Usability dimension, the questions were Q1: “The program’s interface is intuitive and easy to navigate?” and Q2: “The processing time (video/audio analysis) is acceptable for practical use?”. The corresponding results are presented in Figure 11.
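As an illustration of how the per-level percentages shown in the figures can be obtained from raw answers, the following is a minimal sketch. The function name `likert_percentages` and the example answers are hypothetical and are not taken from the study data or from the INTU-AI code base.

```python
from collections import Counter

LIKERT_LEVELS = (1, 2, 3, 4, 5)  # 1 = Strongly Disagree ... 5 = Strongly Agree


def likert_percentages(responses):
    """Return {level: percentage of responses}, rounded to one decimal place."""
    if not responses:
        raise ValueError("no responses to tally")
    counts = Counter(responses)
    total = len(responses)
    return {level: round(100 * counts[level] / total, 1) for level in LIKERT_LEVELS}


# Hypothetical answers from 10 respondents (illustrative only, not study data).
example = [5, 5, 4, 4, 4, 3, 5, 4, 3, 5]
print(likert_percentages(example))
```

Levels that receive no answers are reported as 0.0, so every chart built from the result shares the same five categories.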

Regarding interface usability (Q1), most responses corresponded to levels 5 and 4 on the Likert scale, indicating a high level of acceptance and perceived intuitiveness of the program. However, 26.1% of respondents selected level 3 (Neither Agree nor Disagree), suggesting that some users may have experienced difficulties. This could potentially be improved through an initial training session or guided introduction, as participants only had access to the program’s user manual, without any direct explanation of how the system operates.

For Q2, the results reflect a high level of satisfaction with the processing speed of the video and audio analysis.

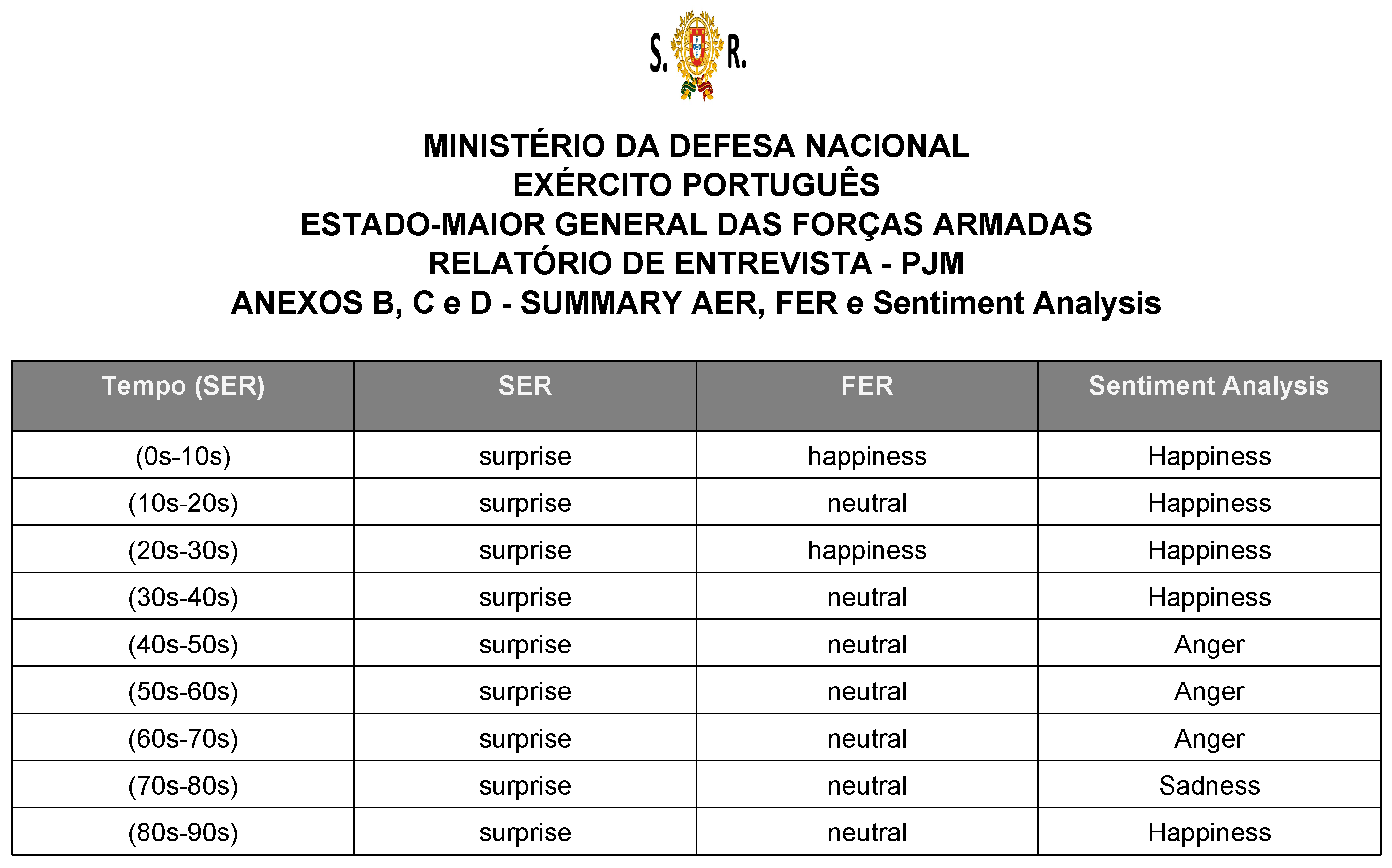

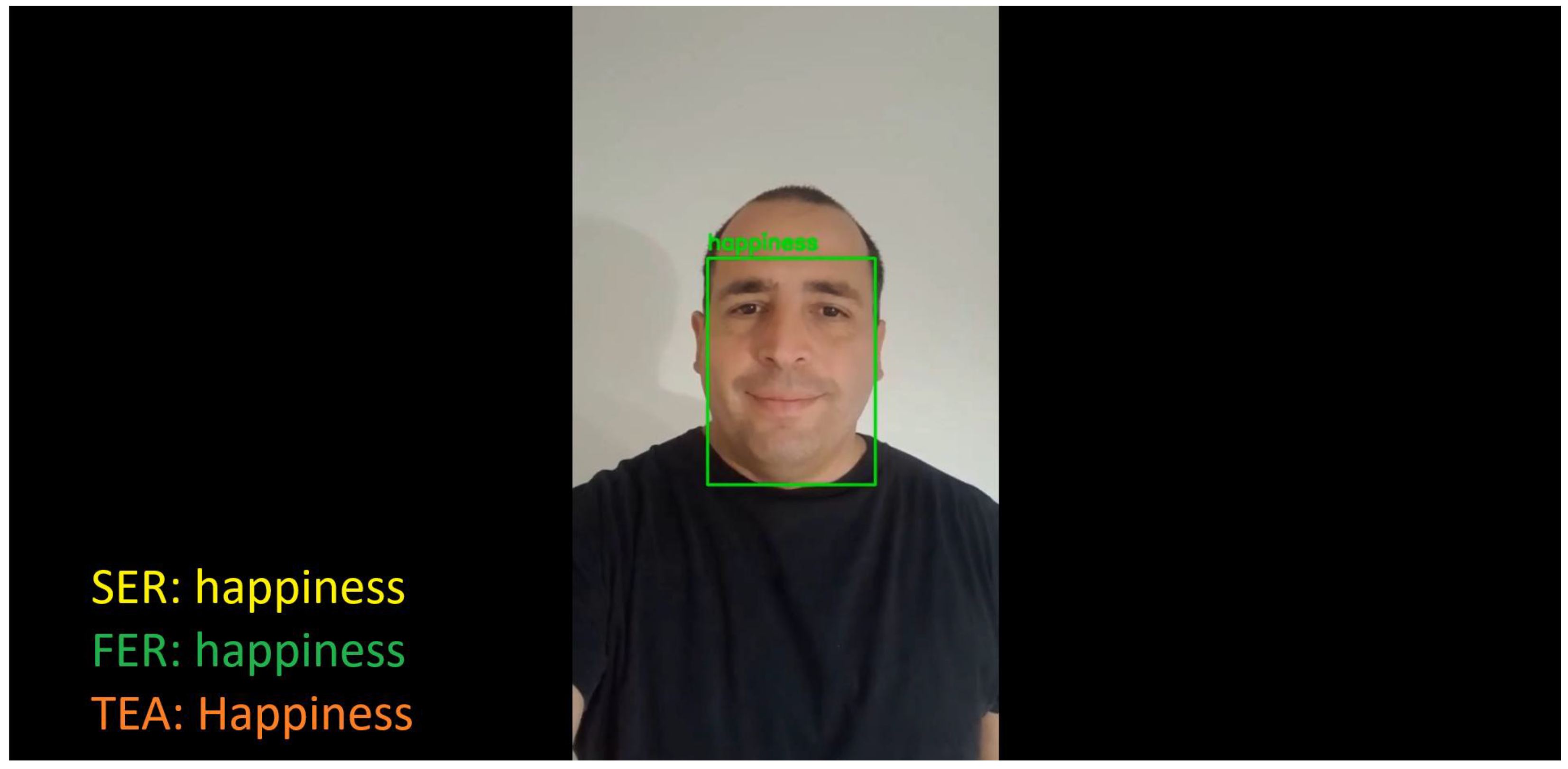

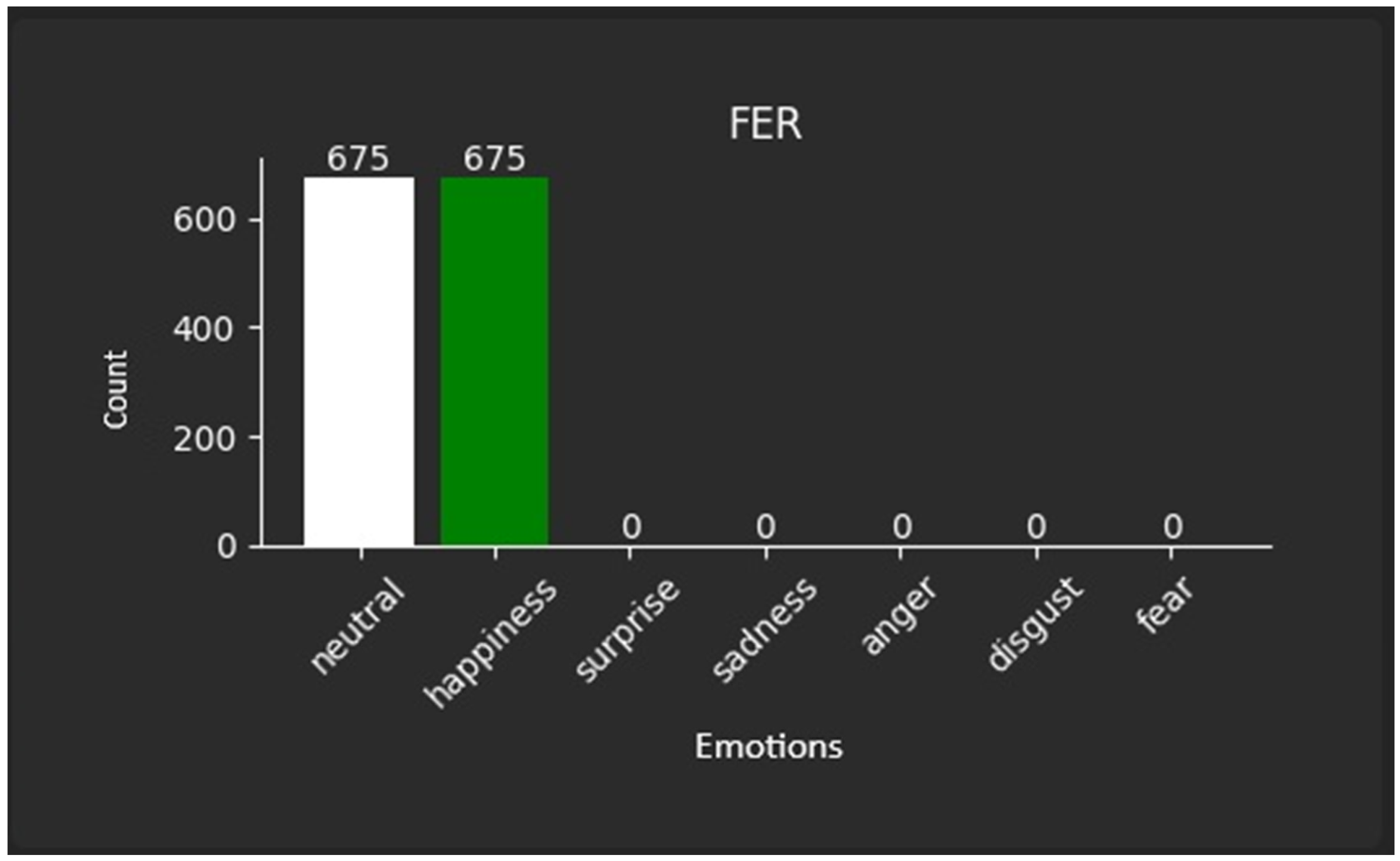

For the functionality dimension, the related questions were Q3: “Emotion recognition tools (FER/SER/TEA) are accurate?”, Q4: “Automatic transcription of audio accurately reflects the spoken content?”, and Q5: “Automatic report generation (PDF/DOCX) meets operational needs?”. Results for this dimension are presented in Figure 12. These results were generally positive. Regarding Q3, responses were more evenly distributed: 34.8% of participants selected “Strongly Agree” (level 5), and 30.4% chose “Agree” (level 4), totaling 65.2% expressing confidence in the model’s ability to classify the three emotion vectors (FER/SER/TEA). Meanwhile, 34.8% of respondents selected “Neither Agree nor Disagree”, reflecting a degree of uncertainty. This neutrality is further clarified in the open-ended responses, where participants expressed skepticism about the accuracy of the models in the context of criminal investigations, suggesting that future models should be trained on domain-specific datasets to increase trust and applicability.

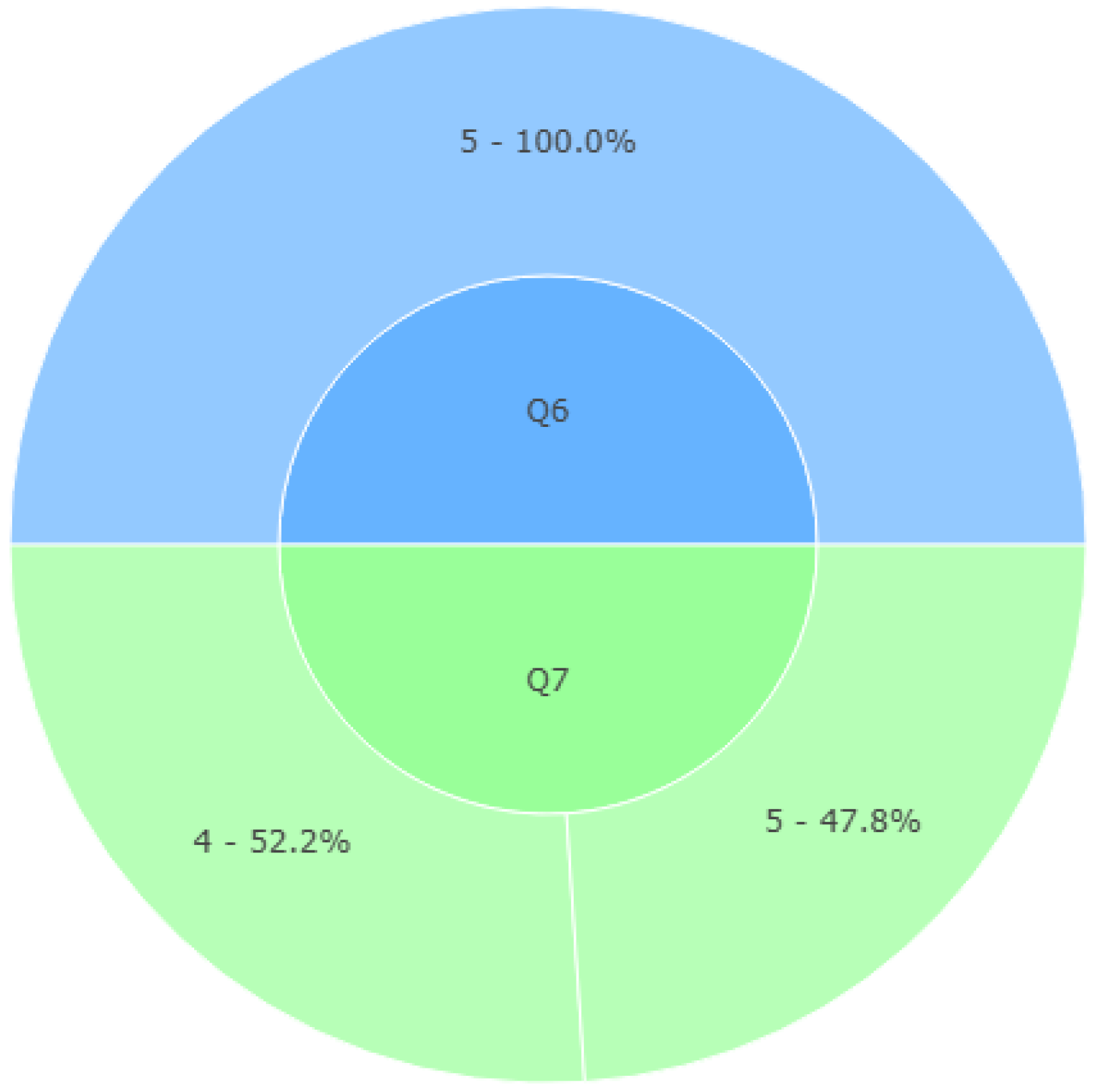

Concerning Q4 (“Automatic transcription of audio accurately reflects the spoken content?”), a majority of respondents (52.2%) strongly agreed that the transcription faithfully reflects the spoken content. The remaining 47.8% were split between Agree (21.7%) and Neither Agree nor Disagree (26.1%). This is consistent with the previously evaluated WER and highlights the absence of speaker diarization in the transcript.
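The percentages reported for Q3 and Q4 are consistent with whole-number counts out of the 23 respondents. The sketch below recovers those counts and the combined share of level 4 and 5 answers. The counts are inferred from the rounded percentages rather than reported in the study, and the helper names `implied_counts` and `top_two_box` are hypothetical.

```python
N_RESPONDENTS = 23  # sample size stated in the text

# Reported percentages per Likert level, taken from the Q3 and Q4 discussion.
reported = {
    "Q3": {5: 34.8, 4: 30.4, 3: 34.8},
    "Q4": {5: 52.2, 4: 21.7, 3: 26.1},
}


def implied_counts(percentages, n=N_RESPONDENTS):
    """Infer whole-number respondent counts from rounded percentages."""
    counts = {level: round(p * n / 100) for level, p in percentages.items()}
    # Sanity checks: the counts must cover all respondents and
    # reproduce the reported percentages when rounded to one decimal.
    assert sum(counts.values()) == n
    for level, p in percentages.items():
        assert round(100 * counts[level] / n, 1) == p
    return counts


def top_two_box(counts, n=N_RESPONDENTS):
    """Share of respondents choosing Agree (4) or Strongly Agree (5)."""
    return round(100 * (counts.get(4, 0) + counts.get(5, 0)) / n, 1)


for question, percentages in reported.items():
    counts = implied_counts(percentages)
    print(question, counts, top_two_box(counts))
```

Under these assumptions, Q3 corresponds to 8, 7, and 8 respondents at levels 5, 4, and 3, and the combined agreement of 65.2% matches the figure given in the text. For Q4 the implied counts are 12, 5, and 6.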

Concerning the last question in the functionality dimension, Q5 (“Automatic report generation (PDF/DOCX) meets operational needs?”), respondents unanimously agreed that the application adequately produces the required reports and meets the operational needs of investigative procedures.

The questions addressing the dimension of Practical Utility were Q6: “The program improves efficiency in writing interrogation reports?” and Q7: “The generated emotion graphs are useful for behavioral analysis?”. Results for this dimension are presented in Figure 13.

The results indicate strong acceptance of the INTU-AI system in terms of its Practical Utility. All respondents agreed that the program improves efficiency in writing interrogation reports (Q6). Some of them selected a rating of 4 on the Likert scale and identified, in open-ended responses, the need for speaker diarization to improve transcription accuracy.

Likewise, for Q7 (“The generated emotion graphs are useful for behavioral analysis?”), the general assessment indicates a positive reception of the tool as an auxiliary resource for investigators, facilitating the tracking of emotional fluctuations during interviews. This graphical depiction of emotional trends was viewed as especially useful for obtaining a comprehensive summary of the subject’s behavioral patterns.

This perspective is reinforced by the testimony of the Head of the Criminal Department of the Military Judicial Police, who stated: “…one of the things I end up identifying is that transcription and writing activities are always very time-consuming (…), which, during the evidentiary phase, may affect the course of the investigation”.

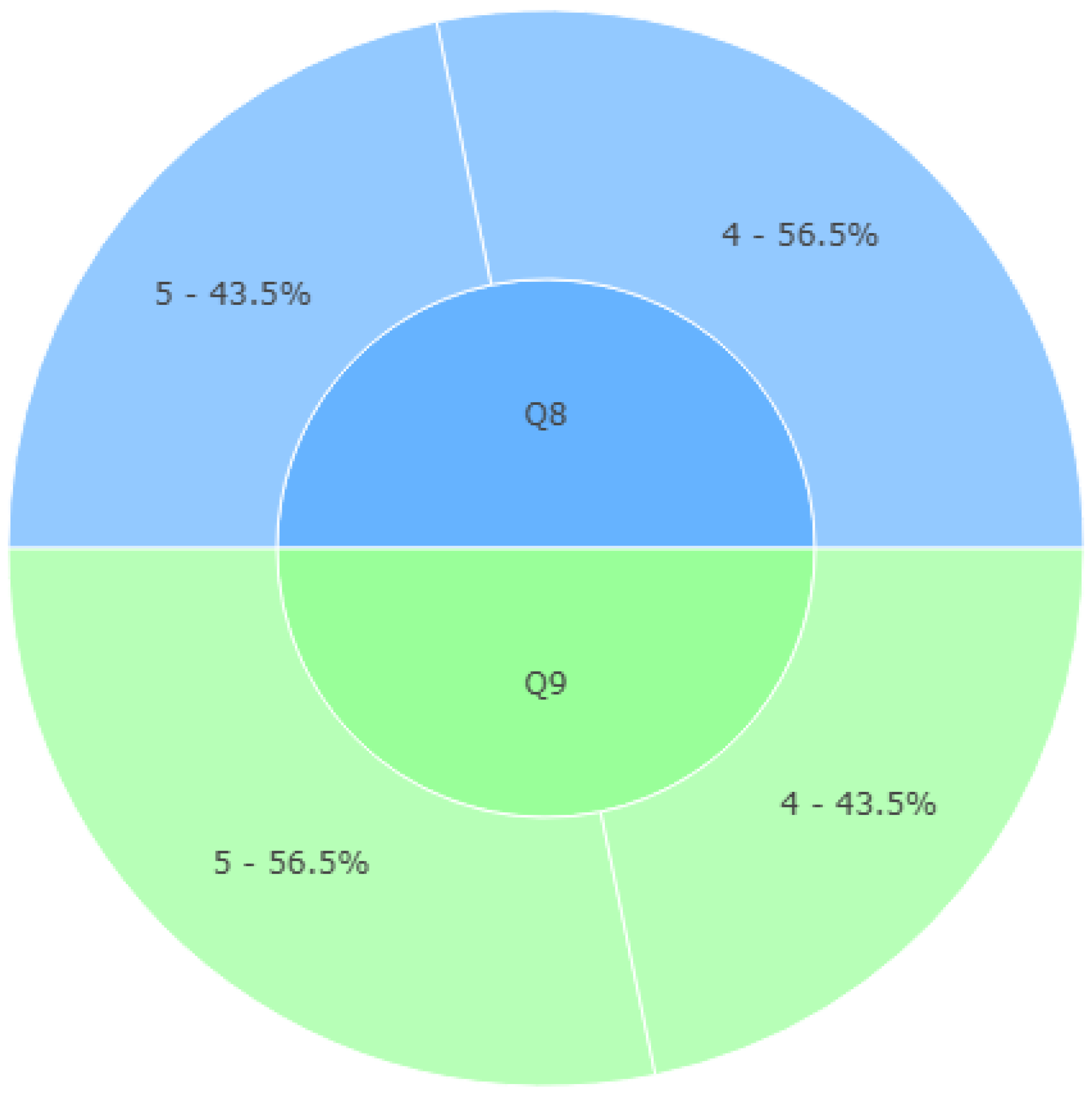

Regarding the questions related to the User Experience dimension, namely Q8: “The program reduces human errors compared to traditional methods?” and Q9: “The integration between video, audio and reporting is well implemented?”, the results are presented in Figure 14. The analysis of the User Experience dimension also yielded positive results, with no responses falling below the level of “Agree” for either question. Regarding Q8, participants expressed agreement that the program contributes to a reduction in human error during the interrogation workflow. In open-ended responses, several users emphasized that correcting a machine-generated transcription is considerably easier than performing the transcription manually.

In the open-ended questions, specifically Q10: “What additional features would be useful?” and Q11: “Describe any difficulties encountered during use.”, the responses broadly converged on a few key suggestions. As reported earlier, participants emphasized the importance of incorporating speaker diarization to improve the clarity of transcriptions by identifying who is speaking at each moment. Additionally, several respondents proposed extending the emotion recognition capabilities beyond FER, SER, and TEA to include other behavioral cues such as hand positioning or body movement, which could enrich the multimodal analysis and provide deeper insights into the interviewee’s emotional and cognitive state.

Overall, the qualitative evaluation of the program (Q12) by the respondents was classified as “Very useful”. This perception is reinforced by the statement of the Head of the Criminal Department of the Military Judicial Police, who noted: “(…) this tool [INTU-AI] (…) is an added value as it allows not only the recording and/or audio (…) but also transcribes and fills in the reports, which greatly facilitates the conduct of the investigation (…) in addition to all this, this investigative support tool assists in reading emotions (…) which may allow us to open new lines of investigation.”