Deep Learning Strategies for Semantic Segmentation in Robot-Assisted Radical Prostatectomy

Abstract

1. Introduction

- We formulate and address the clinically relevant task of pixel-level segmentation of mucosal tissue and suture needles during the vesicourethral anastomosis (VUA) step of robot-assisted radical prostatectomy (RARP), using real annotated endoscopic videos and a deep learning (DL) framework;

- We compare two pipelines employing convolutional- and transformer-based models for the fine-grained segmentation of endoscopic images, assessing the feasibility of applying DL methods to guide the development of objective assessment tools for robotic surgical skills;

- We study the impact of transfer learning, data augmentation, and task-related loss functions on the delineation of subtle structures, and report considerations on latency and reproducibility of the proposed methods.

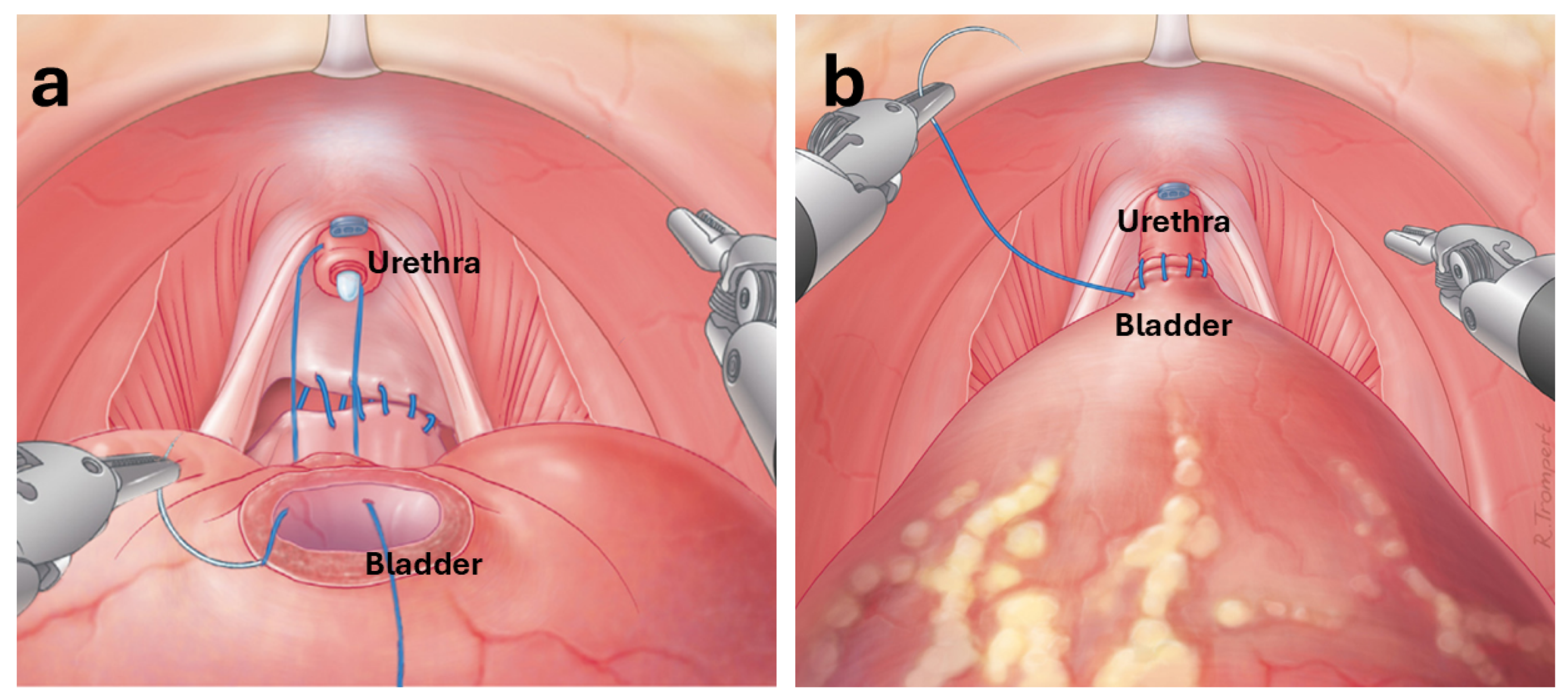

2. Problem Formulation

3. Related Work

3.1. Deep Learning for Suture Quality Assessment

3.2. Deep Learning for Surgical Scene Segmentation

4. Materials and Methods

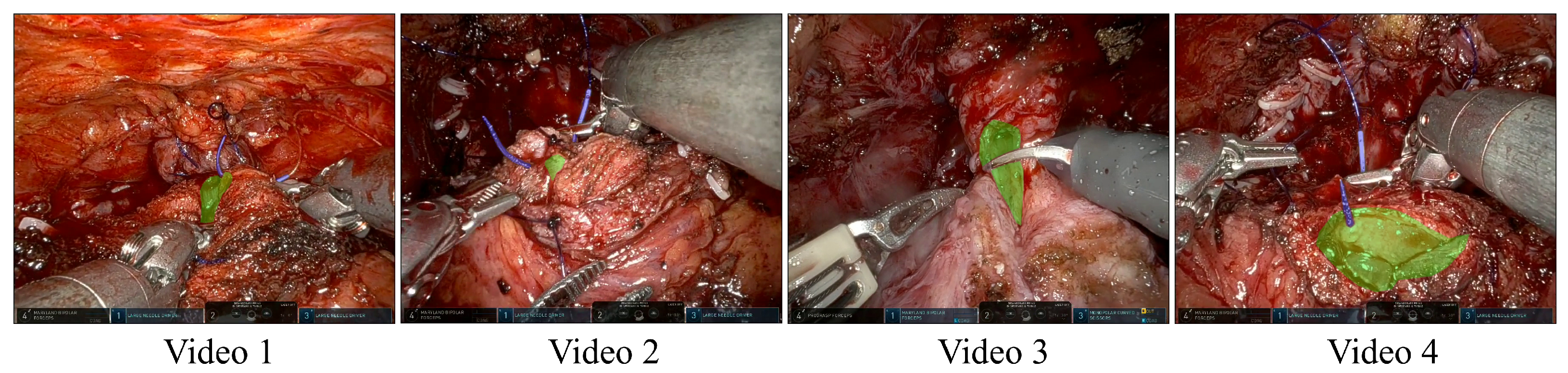

4.1. Data Collection and Preprocessing

4.2. Segmentation Pipelines

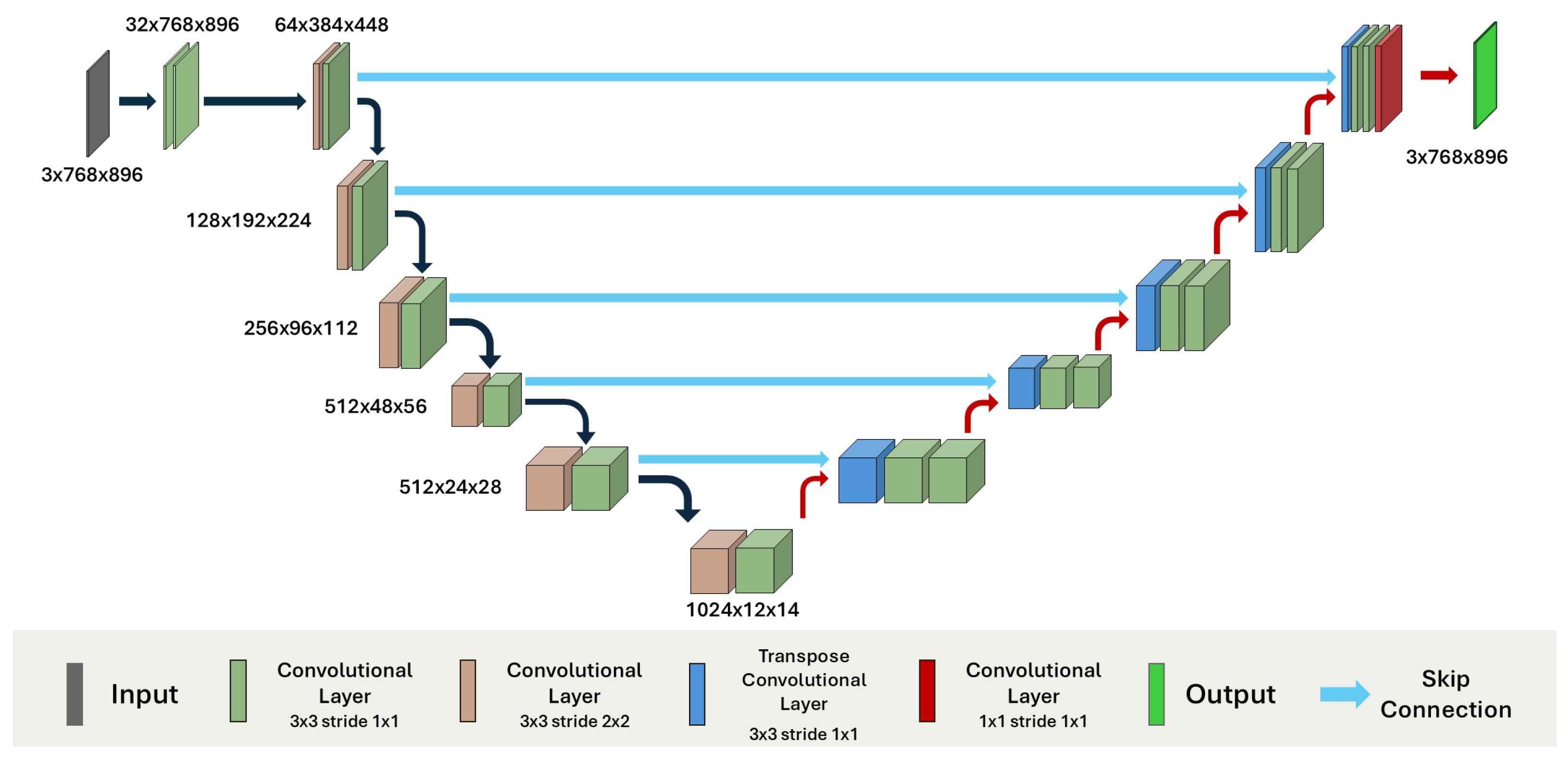

4.2.1. Convolutional Models

- 2D Configuration: The model was trained using individual frames extracted from video sequences. This approach allowed us to leverage a larger number of training samples by treating each frame as an independent input.

- 3D Configuration: In this setting, we trained the model on short video clips treated as volumetric inputs, preserving spatial and temporal continuity across consecutive frames.

The corresponding formulation includes a small constant for numerical stability.
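
For reference, a soft Dice loss with a stabilizing constant, and its sum with cross-entropy (the "CE + Soft Dice" loss listed for nnU-Net in the training-parameter table), can be sketched as follows. This is a minimal binary, pure-Python illustration under stated assumptions: the function names, the flat-list input format, the value of `eps` and the 1:1 weighting of the two terms are hypothetical choices, not the authors' implementation (nnU-Net itself works on multi-class softmax outputs).

```python
import math


def soft_dice_loss(probs, target, eps=1e-6):
    """Soft Dice loss for a single foreground class.

    probs  : predicted foreground probabilities in [0, 1], one per pixel
    target : binary ground-truth labels (0 or 1), one per pixel
    eps    : small constant that keeps the ratio defined when both the
             prediction and the ground truth are empty (hypothetical value)
    """
    intersection = sum(p * g for p, g in zip(probs, target))
    denom = sum(probs) + sum(target)
    dice = (2.0 * intersection + eps) / (denom + eps)
    return 1.0 - dice


def binary_cross_entropy(probs, target, eps=1e-7):
    """Mean pixel-wise binary cross-entropy; probabilities are clipped before log."""
    total = 0.0
    for p, g in zip(probs, target):
        p = min(max(p, eps), 1.0 - eps)
        total += -(g * math.log(p) + (1 - g) * math.log(1.0 - p))
    return total / len(probs)


def ce_plus_soft_dice(probs, target):
    """Unweighted sum of cross-entropy and soft Dice (assumed 1:1 weighting)."""
    return binary_cross_entropy(probs, target) + soft_dice_loss(probs, target)
```

Without `eps`, a frame in which the needle is neither present nor predicted would give 0/0 in the Dice ratio; with it, `soft_dice_loss([0.0, 0.0], [0, 0])` evaluates to a loss of 0.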

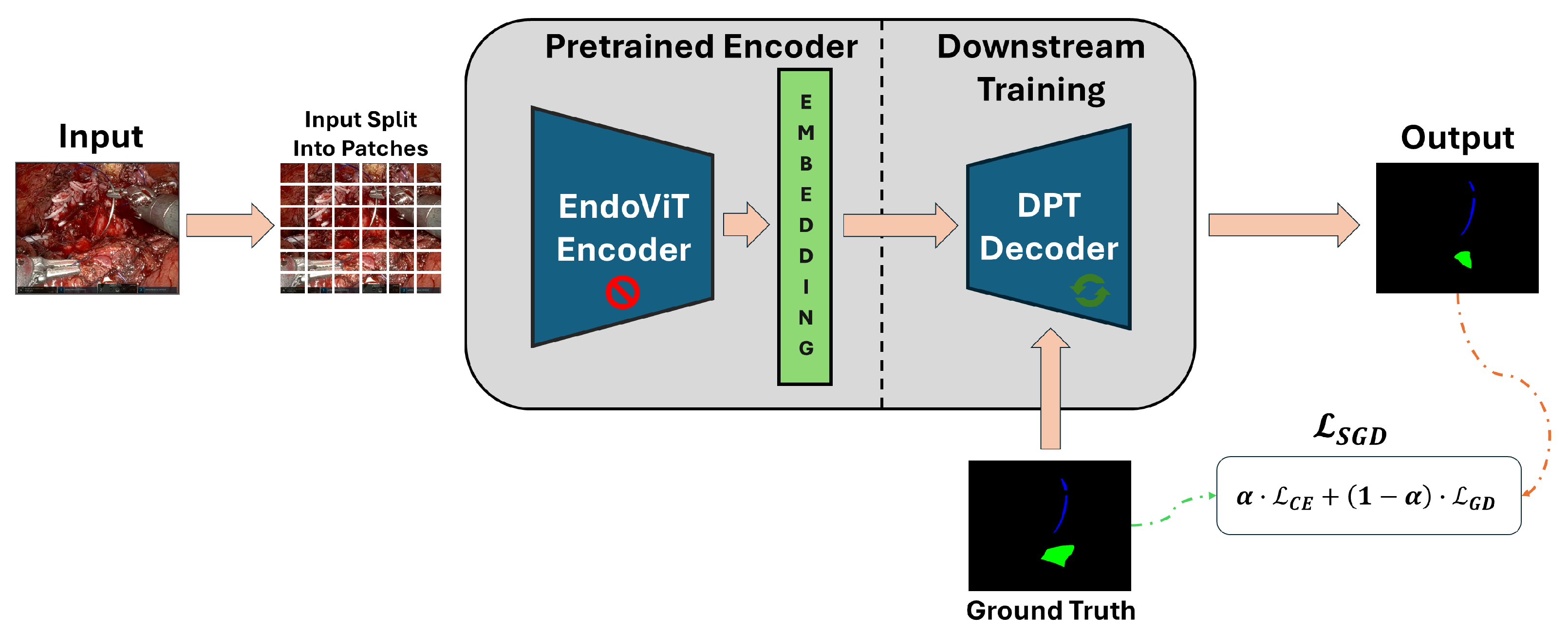
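
The 2D and 3D configurations described above differ mainly in how samples are drawn from a video: the 2D setting treats every frame as an independent sample, while the 3D setting stacks consecutive frames into short clips. The sketch below illustrates both, together with the Z-score normalization listed for all models in the training-parameter table. Frames are represented as flat lists of intensities for brevity; per-frame normalization, `clip_len` and `stride` are hypothetical choices, not the values used in the paper.

```python
import statistics


def zscore(frame):
    """Z-score normalize a flat list of pixel intensities to mean 0 and SD 1."""
    mean = statistics.fmean(frame)
    std = statistics.pstdev(frame)
    if std == 0:
        # A constant frame carries no contrast; map it to all zeros.
        return [0.0] * len(frame)
    return [(x - mean) / std for x in frame]


def make_2d_samples(video):
    """2D configuration: each normalized frame is an independent sample."""
    return [zscore(frame) for frame in video]


def make_3d_clips(video, clip_len=4, stride=2):
    """3D configuration: consecutive normalized frames are stacked into clips.

    clip_len and stride are illustrative values, not the paper's settings.
    A video shorter than clip_len yields no clip.
    """
    frames = [zscore(frame) for frame in video]
    return [
        frames[start:start + clip_len]
        for start in range(0, len(frames) - clip_len + 1, stride)
    ]
```

For a 10-frame video, `make_2d_samples` yields 10 samples whereas `make_3d_clips(video, clip_len=4, stride=2)` yields 4 clips, which illustrates why the 2D setting exposes the network to more training samples.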

4.2.2. Transformer Model

4.3. Training Setup and Evaluation Metrics

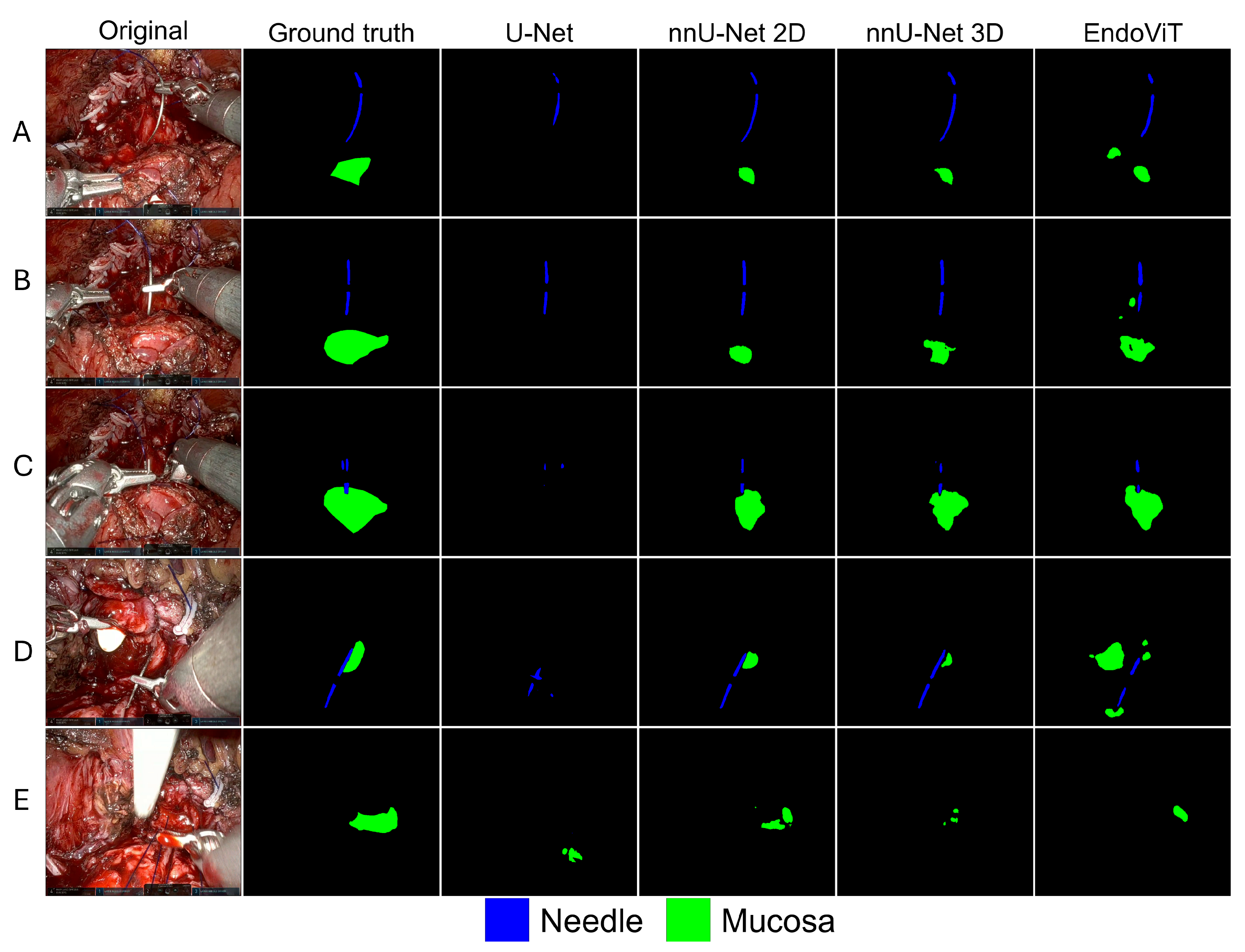

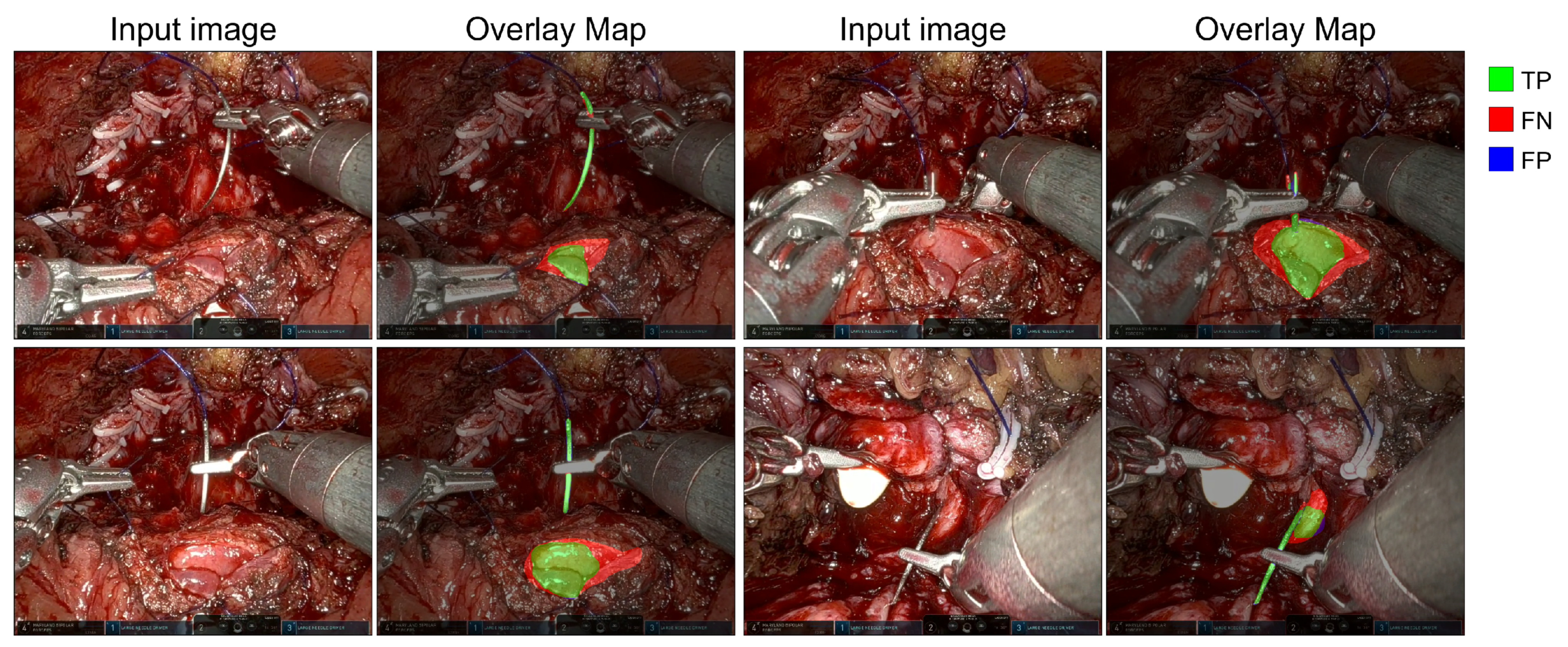

5. Results

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Cornford, P.; van den Bergh, R.C.; Briers, E.; Van den Broeck, T.; Brunckhorst, O.; Darraugh, J.; Eberli, D.; De Meerleer, G.; De Santis, M.; Farolfi, A.; et al. EAU-EANM-ESTRO-ESUR-ISUP-SIOG guidelines on prostate cancer—2024 update. Part I: Screening, diagnosis, and local treatment with curative intent. Eur. Urol. 2024, 86, 148–163.

2. Carbonara, U.; Minafra, P.; Papapicco, G.; De Rienzo, G.; Pagliarulo, V.; Lucarelli, G.; Vitarelli, A.; Ditonno, P. Xi nerve-sparing robotic radical perineal prostatectomy: European single-center technique and outcomes. Eur. Urol. Open Sci. 2022, 41, 55–62.

3. Binder, J.; Kramer, W. Robotically-assisted laparoscopic radical prostatectomy. BJU Int. 2001, 87, 128–132.

4. Carbonara, U.; Srinath, M.; Crocerossa, F.; Ferro, M.; Cantiello, F.; Lucarelli, G.; Porpiglia, F.; Battaglia, M.; Ditonno, P.; Autorino, R. Robot-assisted radical prostatectomy versus standard laparoscopic radical prostatectomy: An evidence-based analysis of comparative outcomes. World J. Urol. 2021, 39, 3721–3732.

5. Burttet, L.M.; Varaschin, G.A.; Berger, A.K.; Cavazzola, L.T.; Berger, M. Prospective evaluation of vesicourethral anastomosis outcomes in robotic radical prostatectomy during early experience in a university hospital. Int. Braz. J. Urol. 2017, 43, 1176–1184.

6. Chapman, S.; Turo, R.; Cross, W. Vesicourethral anastomosis using V-Loc™ barbed suture during robot-assisted radical prostatectomy. Cent. Eur. J. Urol. 2011, 64, 236.

7. Zorn, K.C.; Widmer, H.; Lattouf, J.B.; Liberman, D.; Bhojani, N.; Trinh, Q.D.; Sun, M.; Karakiewicz, P.I.; Denis, R.; El-Hakim, A. Novel method of knotless vesicourethral anastomosis during robot-assisted radical prostatectomy: Feasibility study and early outcomes in 30 patients using the interlocked barbed unidirectional V-LOC180 suture. Can. Urol. Assoc. J. 2011, 5, 188.

8. Hakimi, A.A.; Faleck, D.M.; Sobey, S.; Ioffe, E.; Rabbani, F.; Donat, S.M.; Ghavamian, R. Assessment of complication and functional outcome reporting in the minimally invasive prostatectomy literature from 2006 to the present. BJU Int. 2012, 109, 26–30.

9. Haque, T.F.; Knudsen, J.E.; You, J.; Hui, A.; Djaladat, H.; Ma, R.; Cen, S.; Goldenberg, M.; Hung, A.J. Competency in Robotic Surgery: Standard Setting for Robotic Suturing Using Objective Assessment and Expert Evaluation. J. Surg. Educ. 2024, 81, 422–430.

10. Khan, H.; Kozlowski, J.D.; Hussein, A.A.; Sharif, M.; Ahmed, Y.; May, P.; Hammond, Y.; Stone, K.; Ahmad, B.; Cole, A.; et al. Use of Robotic Anastomosis Competency Evaluation (RACE) tool for assessment of surgical competency during urethrovesical anastomosis. Can. Urol. Assoc. J. 2019, 13, E10–E16.

11. Anderson, D.D.; Long, S.; Thomas, G.W.; Putnam, M.D.; Bechtold, J.E.; Karam, M.D. Objective Structured Assessments of Technical Skills (OSATS) does not assess the quality of the surgical result effectively. Clin. Orthop. Relat. Res. 2016, 474, 874–881.

12. Gumbs, A.A.; Hogle, N.J.; Fowler, D.L. Evaluation of resident laparoscopic performance using global operative assessment of laparoscopic skills. J. Am. Coll. Surg. 2007, 204, 308–313.

13. Mackay, S.; Datta, V.; Chang, A.; Shah, J.; Kneebone, R.; Darzi, A. Multiple Objective Measures of Skill (MOMS): A new approach to the assessment of technical ability in surgical trainees. Ann. Surg. 2003, 238, 291–300.

14. Alibhai, K.M.; Fowler, A.; Gawad, N.; Wood, T.J.; Raîche, I. Assessment of laparoscopic skills: Comparing the reliability of global rating and entrustability tools. Can. Med. Educ. J. 2022, 13, 36–45.

15. Chen, J.; Cheng, N.; Cacciamani, G.; Oh, P.; Lin-Brande, M.; Remulla, D.; Gill, I.S.; Hung, A.J. Objective assessment of robotic surgical technical skill: A systematic review. J. Urol. 2019, 201, 461–469.

16. Lam, K.; Chen, J.; Wang, Z.; Iqbal, F.M.; Darzi, A.; Lo, B.; Purkayastha, S.; Kinross, J.M. Machine learning for technical skill assessment in surgery: A systematic review. NPJ Digit. Med. 2022, 5, 24.

17. Hung, A.J.; Chen, J.; Gill, I.S. Automated performance metrics and machine learning algorithms to measure surgeon performance and anticipate clinical outcomes in robotic surgery. JAMA Surg. 2018, 153, 770–771.

18. Hung, A.J.; Ma, R.; Cen, S.; Nguyen, J.H.; Lei, X.; Wagner, C. Surgeon automated performance metrics as predictors of early urinary continence recovery after robotic radical prostatectomy—A prospective bi-institutional study. Eur. Urol. Open Sci. 2021, 27, 65–72.

19. Shvets, A.A.; Rakhlin, A.; Kalinin, A.A.; Iglovikov, V.I. Automatic instrument segmentation in robot-assisted surgery using deep learning. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 624–628.

20. Kitaguchi, D.; Takeshita, N.; Matsuzaki, H.; Hasegawa, H.; Igaki, T.; Oda, T.; Ito, M. Deep learning-based automatic surgical step recognition in intraoperative videos for transanal total mesorectal excision. Surg. Endosc. 2022, 36, 1143–1151.

21. Luongo, F.; Hakim, R.; Nguyen, J.H.; Anandkumar, A.; Hung, A.J. Deep learning-based computer vision to recognize and classify suturing gestures in robot-assisted surgery. Surgery 2021, 169, 1240–1244.

22. Lajkó, G.; Nagyne Elek, R.; Haidegger, T. Endoscopic image-based skill assessment in robot-assisted minimally invasive surgery. Sensors 2021, 21, 5412.

23. Funke, I.; Mees, S.T.; Weitz, J.; Speidel, S. Video-based surgical skill assessment using 3D convolutional neural networks. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 1217–1225.

24. Lavanchy, J.L.; Zindel, J.; Kirtac, K.; Twick, I.; Hosgor, E.; Candinas, D.; Beldi, G. Automation of surgical skill assessment using a three-stage machine learning algorithm. Sci. Rep. 2021, 11, 5197.

25. Mohammed, E.; Khan, A.; Ullah, W.; Khan, W.; Ahmed, M.J. Efficient Polyp Segmentation via Attention-Guided Lightweight Network with Progressive Multi-Scale Fusion. ICCK Trans. Intell. Syst. 2025, 2, 95–108.

26. Anh, N.X.; Nataraja, R.M.; Chauhan, S. Towards near real-time assessment of surgical skills: A comparison of feature extraction techniques. Comput. Methods Programs Biomed. 2020, 187, 105234.

27. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III 18. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

28. Isensee, F.; Petersen, J.; Klein, A.; Zimmerer, D.; Jaeger, P.F.; Kohl, S.; Wasserthal, J.; Koehler, G.; Norajitra, T.; Wirkert, S.; et al. nnU-Net: Self-adapting Framework for U-Net-Based Medical Image Segmentation. arXiv 2018, arXiv:1809.10486.

29. Batić, D.; Holm, F.; Özsoy, E.; Czempiel, T.; Navab, N. EndoViT: Pretraining vision transformers on a large collection of endoscopic images. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 1085–1091.

30. Gillitzer, R.; Thüroff, J. Technical advances in radical retropubic prostatectomy techniques for avoiding complications. Part II: Vesico-urethral anastomosis and nerve-sparing prostatectomy. BJU Int. 2003, 92, 178–184.

31. Webb, D.R.; Sethi, K.; Gee, K. An analysis of the causes of bladder neck contracture after open and robot-assisted laparoscopic radical prostatectomy. BJU Int. 2009, 103, 957–963.

32. Kumar, S.; Soni, P.K.; Chandna, A.; Parmar, K.; Gupta, P.K. Mucosal coaptation technique for early urinary continence after robot-assisted radical prostatectomy: A comparative exploratory study. Cent. Eur. J. Urol. 2021, 74, 528.

33. Vis, A.N.; van der Poel, H.G.; Ruiter, A.E.; Hu, J.C.; Tewari, A.K.; Rocco, B.; Patel, V.R.; Razdan, S.; Nieuwenhuijzen, J.A. Posterior, anterior, and periurethral surgical reconstruction of urinary continence mechanisms in robot-assisted radical prostatectomy: A description and video compilation of commonly performed surgical techniques. Eur. Urol. 2019, 76, 814–822.

34. Handelman, A.; Keshet, Y.; Livny, E.; Barkan, R.; Nahum, Y.; Tepper, R. Evaluation of suturing performance in general surgery and ocular microsurgery by combining computer vision-based software and distributed fiber optic strain sensors: A proof-of-concept. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 1359–1367.

35. Kil, I.; Eidt, J.F.; Singapogu, R.B.; Groff, R.E. Assessment of Open Surgery Suturing Skill: Image-based Metrics Using Computer Vision. Int. J. Comput. Assist. Radiol. Surg. 2024, 81, 983–993.

36. Yamada, T.; Suda, H.; Yoshitake, A.; Shimizu, H. Development of an Automated Smartphone-Based Suture Evaluation System. J. Surg. Educ. 2022, 79, 802–808.

37. Liu, D.; Li, Q.; Jiang, T.; Wang, Y.; Miao, R.; Shan, F.; Li, Z. Towards unified surgical skill assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9522–9531.

38. Nagaraj, M.B.; Namazi, B.; Sankaranarayanan, G.; Scott, D.J. Developing artificial intelligence models for medical student suturing and knot-tying video-based assessment and coaching. Surg. Endosc. 2023, 37, 402–411.

39. Frischknecht, A.C.; Kasten, S.J.; Hamstra, S.J.; Perkins, N.C.; Gillespie, R.B.; Armstrong, T.J.; Minter, R.M. The objective assessment of experts’ and novices’ suturing skills using an image analysis program. Acad. Med. 2013, 88, 260–264.

40. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

41. Noraset, T.; Mahawithitwong, P.; Dumronggittigule, W.; Pisarnturakit, P.; Iramaneerat, C.; Ruansetakit, C.; Chaikangwan, I.; Poungjantaradej, N.; Yodrabum, N. Automated measurement extraction for assessing simple suture quality in medical education. Expert Syst. Appl. 2024, 241, 122722.

42. Mansour, M.; Cumak, E.N.; Kutlu, M.; Mahmud, S. Deep learning based suture training system. Surg. Open Sci. 2023, 15, 1–11.

43. Lee, D.H.; Kwak, K.S.; Lim, S.C. A Neural Network-based Suture-tension Estimation Method Using Spatio-temporal Features of Visual Information and Robot-state Information for Robot-assisted Surgery. Int. J. Control. Autom. Syst. 2023, 21, 4032–4040.

44. Hoffmann, H.; Funke, I.; Peters, P.; Venkatesh, D.K.; Egger, J.; Rivoir, D.; Röhrig, R.; Hölzle, F.; Bodenstedt, S.; Willemer, M.C.; et al. AIxSuture: Vision-based assessment of open suturing skills. Int. J. Comput. Assist. Radiol. Surg. 2024, 19, 1045–1052.

45. Li, X.; Li, M.; Yan, P.; Li, G.; Jiang, Y.; Luo, H.; Yin, S. Deep learning attention mechanism in medical image analysis: Basics and beyonds. Int. J. Netw. Dyn. Intell. 2023, 2, 93–116.

46. Li, X.; Li, L.; Jiang, Y.; Wang, H.; Qiao, X.; Feng, T.; Luo, H.; Zhao, Y. Vision-Language Models in medical image analysis: From simple fusion to general large models. Inf. Fusion 2025, 118, 102995.

47. Salman, T.; Gazis, A.; Ali, A.; Khan, M.H.; Ali, M.; Khan, H.; Shah, H.A. ColoSegNet: Visual Intelligence Driven Triple Attention Feature Fusion Network for Endoscopic Colorectal Cancer Segmentation. ICCK Trans. Intell. Syst. 2025, 2, 125–136.

48. Allan, M.; Shvets, A.; Kurmann, T.; Zhang, Z.; Duggal, R.; Su, Y.H.; Rieke, N.; Laina, I.; Kalavakonda, N.; Bodenstedt, S.; et al. 2017 robotic instrument segmentation challenge. arXiv 2019, arXiv:1902.06426.

49. Roß, T.; Reinke, A.; Full, P.M.; Wagner, M.; Kenngott, H.; Apitz, M.; Hempe, H.; Mindroc-Filimon, D.; Scholz, P.; Tran, T.N.; et al. Comparative validation of multi-instance instrument segmentation in endoscopy: Results of the ROBUST-MIS 2019 challenge. Med. Image Anal. 2021, 70, 101920.

50. Scheikl, P.M.; Laschewski, S.; Kisilenko, A.; Davitashvili, T.; Müller, B.; Capek, M.; Müller-Stich, B.P.; Wagner, M.; Mathis-Ullrich, F. Deep learning for semantic segmentation of organs and tissues in laparoscopic surgery. In Current Directions in Biomedical Engineering; De Gruyter: Berlin/Heidelberg, Germany, 2020; Volume 6, p. 20200016.

51. Kolbinger, F.R.; Rinner, F.M.; Jenke, A.C.; Carstens, M.; Krell, S.; Leger, S.; Distler, M.; Weitz, J.; Speidel, S.; Bodenstedt, S. Anatomy segmentation in laparoscopic surgery: Comparison of machine learning and human expertise–an experimental study. Int. J. Surg. 2023, 109, 2962–2974.

52. Hong, W.Y.; Kao, C.L.; Kuo, Y.H.; Wang, J.R.; Chang, W.L.; Shih, C.S. CholecSeg8k: A semantic segmentation dataset for laparoscopic cholecystectomy based on Cholec80. arXiv 2020, arXiv:2012.12453.

53. Allan, M.; Kondo, S.; Bodenstedt, S.; Leger, S.; Kadkhodamohammadi, R.; Luengo, I.; Fuentes, F.; Flouty, E.; Mohammed, A.; Pedersen, M.; et al. 2018 robotic scene segmentation challenge. arXiv 2020, arXiv:2001.11190.

54. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

55. Chen, M.; Peng, H.; Fu, J.; Ling, H. AutoFormer: Searching transformers for visual recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 12270–12280.

56. Guo, J.; Li, T.; Shao, M.; Wang, W.; Pan, L.; Chen, X.; Sun, Y. CeDFormer: Community Enhanced Transformer for Dynamic Network Embedding. In Proceedings of the International Workshop on Discovering Drift Phenomena in Evolving Landscapes, Barcelona, Spain, 26 August 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 37–53.

57. Kehinde, T.; Adedokun, O.J.; Joseph, A.; Kabirat, K.M.; Akano, H.A.; Olanrewaju, O.A. Helformer: An attention-based deep learning model for cryptocurrency price forecasting. J. Big Data 2025, 12, 81.

58. Pak, S.; Park, S.G.; Park, J.; Choi, H.R.; Lee, J.H.; Lee, W.; Cho, S.T.; Lee, Y.G.; Ahn, H. Application of deep learning for semantic segmentation in robotic prostatectomy: Comparison of convolutional neural networks and visual transformers. Investig. Clin. Urol. 2024, 65, 551–558.

59. Park, S.G.; Park, J.; Choi, H.R.; Lee, J.H.; Cho, S.T.; Lee, Y.G.; Ahn, H.; Pak, S. Deep Learning Model for Real-time Semantic Segmentation During Intraoperative Robotic Prostatectomy. Eur. Urol. Open Sci. 2024, 62, 47–53.

60. Rocco, F.; Carmignani, L.; Acquati, P.; Gadda, F.; Dell’Orto, P.; Rocco, B.; Casellato, S.; Gazzano, G.; Consonni, D. Early continence recovery after open radical prostatectomy with restoration of the posterior aspect of the rhabdosphincter. Eur. Urol. 2007, 52, 376–383.

61. Oh, N.; Kim, B.; Kim, T.; Rhu, J.; Kim, J.; Choi, G.S. Real-time segmentation of biliary structure in pure laparoscopic donor hepatectomy. Sci. Rep. 2024, 14, 22508.

62. Kamtam, D.N.; Shrager, J.B.; Malla, S.D.; Lin, N.; Cardona, J.J.; Kim, J.J.; Hu, C. Deep learning approaches to surgical video segmentation and object detection: A Scoping Review. Comput. Biol. Med. 2025, 194, 110482.

63. Sudre, C.H.; Li, W.; Vercauteren, T.; Ourselin, S.; Jorge Cardoso, M. Generalised Dice overlap as a deep learning loss function for highly unbalanced segmentations. In Proceedings of the Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support: Third International Workshop, DLMIA 2017, and 7th International Workshop, ML-CDS 2017, Held in Conjunction with MICCAI 2017, Québec City, QC, Canada, 14 September 2017; Proceedings 3. Springer: Berlin/Heidelberg, Germany, 2017; pp. 240–248.

64. Ershad, M.; Rege, R.; Majewicz Fey, A. Automatic and near real-time stylistic behavior assessment in robotic surgery. Int. J. Comput. Assist. Radiol. Surg. 2019, 14, 635–643.

65. Tanzi, L.; Piazzolla, P.; Porpiglia, F.; Vezzetti, E. Real-time deep learning semantic segmentation during intra-operative surgery for 3D augmented reality assistance. Int. J. Comput. Assist. Radiol. Surg. 2021, 16, 1435–1445.

| Study | Objective | Methodology | Limitations |
|---|---|---|---|
| Handelman et al. [34] | Assessment of suture quality and stitching flow in general and ocular surgery | Image processing for end-product sutures | Simulated and experimental setup; not real-time |
| Kil et al. [35]; Yamada et al. [36] | Identification of insertion/extraction points, accuracy, tissue damage, task time | Image- and video-based metrics | Simulated settings; no endoscopic data; focus on open surgery |
| Liu et al. [37]; Funke et al. [23]; Nagaraj et al. [38] | Automated assessment of surgical skill and gestures from video | Multi-path framework (skill aspects), 3D ConvNet (skill classification), CNN-based error detection (instrument/knot) | Focus on gestures; limited datasets; mostly simulated/training settings |
| Frischknecht et al. [39] | Objective assessment of expert vs. novice suturing skills | Image processing for end-product sutures (stitch length, bite size, travel, orientation, symmetry) | Post hoc evaluation; not real-time; tested on a limited sample |
| Noraset et al. [41]; Mansour et al. [42]; Lee et al. [43]; Hoffmann et al. [44] | Automated analysis of sutures for quality and skill assessment | CNN-based approaches: instance segmentation (suture geometry), image classification (success/failure), spatio-temporal models (tension prediction), video classification (skill levels) | Simulated/phantom or limited datasets; lack of endoscopic and clinical variability |

| Study | Objective | Methodology | Limitations |
|---|---|---|---|
| Shvets et al. [19] | Instrument segmentation | CNN-based models | Focus on tools; limited anatomical context |
| Scheikl et al. [50]; Kolbinger et al. [51] | Semantic segmentation of organs/tissues in laparoscopic surgery | CNN- and transformer-based models | Laparoscopic focus; limited data and anatomical coverage |
| Hong et al. [52] | Multi-class segmentation in cholecystectomy | CNN-based models | Laparoscopic focus; not validated in robotic surgery |
| Allan et al. [53] | Tool and anatomy segmentation in kidney transplant | CNN-based models | Porcine data; simpler than human tissues; limited anatomical realism |
| Pak et al. [58]; Park et al. [59] | Segmentation of instruments and organs in RARP | CNN- and transformer-based models; Reinforced U-Net for real-time segmentation | Organ-level focus; limited dataset diversity |

| Video | # Sequences | # Frames | Mucosa (% ± SD) | Needle (% ± SD) |
|---|---|---|---|---|
| 1 | 22 | 1279 | % | % |
| 2 | 11 | 2813 | % | % |
| 3 | 1 | 140 | % | - |
| 4 | 3 | 449 | % | % |
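
Per-video class statistics of the kind reported in the dataset table (the share of pixels labeled as mucosa or needle, as a mean ± SD over frames) can be computed along the lines of the sketch below. It assumes a hypothetical label convention (0 = background, 1 = mucosa, 2 = needle), masks stored as nested lists, all frames of a video included in the average, and the sample standard deviation; the paper does not state these details.

```python
import statistics

# Hypothetical label convention: 0 = background, 1 = mucosa, 2 = needle.
MUCOSA, NEEDLE = 1, 2


def class_percentage(mask, label):
    """Percentage of pixels in one 2D label mask that belong to `label`."""
    total = sum(len(row) for row in mask)
    hits = sum(row.count(label) for row in mask)
    return 100.0 * hits / total


def class_stats(masks, label):
    """Mean and sample SD of the per-frame class percentage over a video."""
    values = [class_percentage(m, label) for m in masks]
    mean = statistics.fmean(values)
    sd = statistics.stdev(values) if len(values) > 1 else 0.0
    return mean, sd
```

For two 2×2 masks `[[0, 1], [1, 2]]` and `[[1, 1], [0, 0]]`, mucosa covers 50% of both frames (50.0 ± 0.0), while the needle covers 25% and 0% (12.5 ± 17.7). A video with no needle pixels at all, as for Video 3, yields 0 for every frame.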

| Parameter | EndoViT | U-Net | nnU-Net 2D | nnU-Net 3D |
|---|---|---|---|---|
| Input resolution | | | | |
| Loss function | Stable Generalized Dice | Dice | CE + Soft Dice | CE + Soft Dice |
| Optimizer | AdamW | RMSprop | SGD | SGD |
| Base learning rate | | | | |
| Weight decay | 0 | | | |
| Drop path rate | 0.1 | 0 | 0 | 0 |
| Batch size | 64 | 8 | 16 | 2 |
| Number of epochs | 20 | 20 | 200 | 200 |
| Normalization | Z-score | Z-score | Z-score | Z-score |
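
The Generalized Dice loss listed for EndoViT weights each class by the inverse square of its ground-truth volume (Sudre et al. [63]), so that a thin structure such as a needle is not swamped by large regions. The sketch below implements that standard formulation in pure Python; it does not reproduce the specific "stable" variant used by the authors. The `eps` term only prevents division by zero and is an assumption: when a class is absent, its weight becomes very large, which is precisely the kind of instability that stabilized variants are designed to address. Under the usual definition, the Generalized Dice Score (GDS) in the results table is one minus this quantity evaluated on hard predictions.

```python
def generalized_dice_loss(probs, onehot, eps=1e-6):
    """Generalized Dice loss (Sudre et al., 2017) for C classes and N pixels.

    probs  : probs[c][n], predicted probability of class c at pixel n
    onehot : onehot[c][n], 1 if pixel n belongs to class c, else 0
    eps    : guards the divisions (hypothetical value); an absent class
             receives a weight of about 1/eps, i.e. a very large weight
    """
    num = 0.0
    den = 0.0
    for p_c, g_c in zip(probs, onehot):
        # Inverse squared ground-truth volume of class c.
        w = 1.0 / (sum(g_c) ** 2 + eps)
        num += w * sum(p * g for p, g in zip(p_c, g_c))
        den += w * (sum(p_c) + sum(g_c))
    return 1.0 - 2.0 * num / (den + eps)
```

A perfect prediction gives a loss close to 0, and a prediction that swaps the two classes gives exactly 1.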

| Models | mIoU | mDice | GDS | IoU Mucosa | Dice Mucosa | IoU Needle | Dice Needle | Inference Time (s/frame) |
|---|---|---|---|---|---|---|---|---|
| U-Net | 0.512 | 0.590 | 0.328 | 0.062 | 0.117 | 0.491 | 0.658 | 0.22 |
| nnU-Net 2D | 0.749 | 0.841 | 0.555 | 0.495 | 0.663 | 0.763 | 0.866 | 0.28 |
| nnU-Net 3D | 0.589 | 0.696 | 0.347 | 0.252 | 0.403 | 0.529 | 0.692 | 0.47 |
| EndoViT | 0.627 | 0.735 | 0.393 | 0.301 | 0.463 | 0.598 | 0.748 | 0.12 |
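
The overlap metrics in the results table follow from per-class pixel counts: IoU = TP / (TP + FP + FN) and Dice = 2TP / (2TP + FP + FN), so that for a single class Dice = 2·IoU / (1 + IoU). The sketch below computes them on flat label maps; the function names and the choice of which labels enter the mean are assumptions (whether the reported mIoU and mDice include the background class is not stated here).

```python
def class_counts(pred, gt, label):
    """True positive, false positive and false negative pixel counts for one class."""
    tp = fp = fn = 0
    for p, g in zip(pred, gt):
        if p == label and g == label:
            tp += 1
        elif p == label:
            fp += 1
        elif g == label:
            fn += 1
    return tp, fp, fn


def iou_and_dice(pred, gt, label):
    """IoU and Dice for one class, or None if the class is absent from both maps."""
    tp, fp, fn = class_counts(pred, gt, label)
    if tp + fp + fn == 0:
        return None
    return tp / (tp + fp + fn), 2 * tp / (2 * tp + fp + fn)


def mean_scores(pred, gt, labels):
    """mIoU and mDice over the chosen labels, skipping classes absent from both maps."""
    scores = [s for s in (iou_and_dice(pred, gt, lab) for lab in labels) if s is not None]
    if not scores:
        return None
    miou = sum(iou for iou, _ in scores) / len(scores)
    mdice = sum(dice for _, dice in scores) / len(scores)
    return miou, mdice


def dice_from_iou(iou):
    """Per-class identity Dice = 2 * IoU / (1 + IoU)."""
    return 2 * iou / (1 + iou)
```

As a consistency check on the per-class columns, `dice_from_iou(0.491)` is about 0.6586, which agrees with the U-Net needle Dice of 0.658 to within rounding of the reported IoU. The identity holds exactly only when both scores come from the same pixel counts; averaging over images or classes generally breaks it, so it should not be expected to hold between mIoU and mDice.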

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sibilano, E.; Delprete, C.; Marvulli, P.M.; Brunetti, A.; Marino, F.; Lucarelli, G.; Battaglia, M.; Bevilacqua, V. Deep Learning Strategies for Semantic Segmentation in Robot-Assisted Radical Prostatectomy. Appl. Sci. 2025, 15, 10665. https://doi.org/10.3390/app151910665

Sibilano E, Delprete C, Marvulli PM, Brunetti A, Marino F, Lucarelli G, Battaglia M, Bevilacqua V. Deep Learning Strategies for Semantic Segmentation in Robot-Assisted Radical Prostatectomy. Applied Sciences. 2025; 15(19):10665. https://doi.org/10.3390/app151910665

Chicago/Turabian Style: Sibilano, Elena, Claudia Delprete, Pietro Maria Marvulli, Antonio Brunetti, Francescomaria Marino, Giuseppe Lucarelli, Michele Battaglia, and Vitoantonio Bevilacqua. 2025. "Deep Learning Strategies for Semantic Segmentation in Robot-Assisted Radical Prostatectomy" Applied Sciences 15, no. 19: 10665. https://doi.org/10.3390/app151910665

APA Style: Sibilano, E., Delprete, C., Marvulli, P. M., Brunetti, A., Marino, F., Lucarelli, G., Battaglia, M., & Bevilacqua, V. (2025). Deep Learning Strategies for Semantic Segmentation in Robot-Assisted Radical Prostatectomy. Applied Sciences, 15(19), 10665. https://doi.org/10.3390/app151910665