Abstract

This paper presents a Convolutional Neural Network (CNN)-based Vehicle Security Enhancement Model (CNN-based VSEM) designed for the South African context. Conventional security systems, including immobilizers, alarms, steering locks, and GPS trackers, provide a baseline level of protection, but they are increasingly being circumvented by technologically adept adversaries. These limitations have spurred the development of security solutions that leverage artificial intelligence (AI), particularly computer vision and deep learning. The CNN-based VSEM integrates facial recognition with GSM and GPS technologies to provide real-time driver authentication, location tracking, and owner alerts. This study contributes a novel integration of CNN-based authentication with GSM and GPS tracking in the South African context, validated on a functional prototype. In addition to recognizing authorized users, the model is designed to identify suspicious behaviour in the vicinity of the vehicle and to provide an added layer of protection against unauthorized access. The prototype, developed on a Raspberry Pi 4 platform, was validated through practical demonstrations and user evaluations. The system achieved an average recognition accuracy of 85.9%, with some identities reaching 100% classification accuracy. Misclassifications led to an estimated False Acceptance Rate (FAR) of ~5% and a False Rejection Rate (FRR) of ~12%; nevertheless, the model authenticated registered drivers reliably during the demonstrations. Preliminary latency tests indicated a decision time of approximately 1.8 s from image capture to ignition authorization. These results, together with positive user feedback, support the model's feasibility and reliability. This integrated approach is a promising advancement in intelligent vehicle security for regions with high rates of vehicle theft. Future work will explore 3D sensing, infrared imaging, and facial recognition that is robust to variations in facial appearance.

1. Introduction

The global rise in vehicle theft and unauthorized access presents a significant threat to personal safety and property. In South Africa, vehicle theft and unauthorized access remain persistently high, with recent national reports indicating a 12% increase and a recovery rate of about 10%. Traditional measures (immobilizers, alarms, steering locks, GPS trackers) are largely reactive and do not verify who is attempting to start the vehicle, creating opportunities for social-engineering and relay attacks. These constraints motivate a low-cost, on-device approach that makes decisions locally while still notifying the owner over resilient channels (such as SMS) with precise GPS location [1,2]. The emergence of the next generation of smart devices and applications has ushered in a new era of innovation, accompanied by escalating security and privacy concerns. As these technologies continue to advance rapidly, embedding security as a core design principle has become imperative. Security is a fundamental pillar of daily life, safeguarding personal privacy, safety, and the well-being of society. It encompasses a broad range of domains, including physical, information, and network security, as highlighted in [3].

Due to the high economic value of assets such as vehicles and motorcycles, grand theft auto (GTA) incidents have increased significantly worldwide [4]. Historically, what is often described as the first automobile “theft” occurred in 1888, when Bertha Benz took the world’s first car, built by her husband Carl Benz, on a long-distance drive without his knowledge [5]. While symbolic, this act exposed the vulnerability of automobiles to unauthorized use. Later, in 1913, an imprisoned inventor in Denver introduced the first anti-theft alarm system, followed by the remote starter alarm in 1916 [6]. Although innovative, these early systems had significant limitations, including manual activation and a limited communication range, which made them less effective in real-world conditions.

Modern vehicles now incorporate advanced security systems, including sensors and surveillance components, to detect threats and notify drivers [7]. Despite these advancements, criminal techniques have also become more sophisticated, requiring even more secure and intelligent systems [8]. Carjackings, joyrides, and signal jamming incidents continue to occur frequently, often driven by financial motives or criminal use. Inadequate security remains a common denominator in these crimes [9].

In South Africa, the South African Police Service (SAPS) recorded 18,162 vehicle theft cases during the 2019/2020 period, averaging nearly 50 incidents per day [8]. Moreover, vehicle theft rose by 19% in that period [10]. Ref. [11] attributes these high rates to outdated or inadequate security systems, highlighting the urgency for improved vehicle protection in the current technological landscape.

Traditional vehicle security methods, such as door, wheel, and steering locks, alarm systems, and immobilizers, while effective in the past, are increasingly insufficient [12]. According to the South African Insurance Association [13], an estimated 1.5 million hijacked or stolen vehicles have never been recovered. Although tracking and insurance companies have improved their systems through constant vehicle monitoring [14,15], sophisticated thieves can still disable or bypass these technologies. The present security mechanisms and their shortcomings are listed in Table 1 below.

Table 1.

Limitations of current security solutions.

In South Africa, there is a compelling need for a proactive and intelligent vehicle security system capable of anticipating and preventing threats before they materialize. This paper addresses this challenge by presenting a security model that leverages convolutional neural networks (CNNs) for real-time visual verification and threat detection, aiming to mitigate contemporary risks associated with vehicle security.

Complementing this AI-driven approach, recent advancements in the Internet of Things (IoT) offer additional opportunities to enhance vehicle protection. IoT technologies enable continuous connectivity and real-time data acquisition, which can significantly improve the system’s ability to detect unauthorized access and respond dynamically to emerging threats [16].

Researchers have also explored the integration of artificial intelligence (AI), particularly deep learning, to enhance protection systems [17]. AI, through deep learning and neural networks, can mimic human cognitive abilities by processing large volumes of data to recognize patterns and make intelligent decisions [18,19].

The convergence of AI and IoT technologies has already demonstrated success in domains such as image and speech recognition and shows strong potential in tackling vehicle theft [20,21]. This paper leverages this synergy by proposing an AI- and IoT-based vehicle security enhancement model incorporating Convolutional Neural Networks (CNNs), GPS, and GSM modules. The proposed solution aims to deliver real-time authentication, tracking, and notification features, offering seamless integration with fleet management systems and enhanced protection for individual and commercial vehicles.

2. Problem Statement

The global increase in vehicle theft and unauthorized access presents a significant threat to personal property and safety. Traditional vehicle security measures such as alarms, immobilizers, and mechanical locks largely lack the capacity for real-time, intelligent decision-making. Moreover, these systems typically do not authenticate individuals attempting to access the vehicle or recognize potentially dangerous situations.

Despite the adoption of modern electronic and mechanical security systems, vehicle theft remains a persistent and serious security concern in South Africa [22]. The ongoing prevalence of Grand Theft Auto (GTA) highlights the limitations of current solutions, which often respond to incidents rather than prevent them. The consequences of such crimes extend beyond financial loss, contributing to psychological distress for victims and imposing a considerable burden on law enforcement agencies. While physical deterrents can be effective, they are frequently impractical due to the time and effort required for consistent use. Similarly, electronic systems often lack proactive capabilities, offering limited deterrence and delayed response to security breaches [23].

Commercial vehicle tracking services offer real-time location monitoring through the integration of onboard tracking devices [24]. However, despite these innovations, the prevalence of vehicle theft remains a pressing challenge in South Africa. This highlights the need for ongoing research and the development of more robust and proactive security solutions.

In response to the limitations of existing systems, this paper proposes an advanced vehicle security model that leverages artificial intelligence (AI) and Internet of Things (IoT) technologies to enable real-time driver authentication and validation. Integrated AI-IoT security frameworks can improve on traditional systems through greater automation, adaptability, intelligent threat detection, and system-wide integration. Convolutional Neural Networks (CNNs), recognized for their ability to extract spatial features from images, have been successfully applied in facial recognition [25]. Consequently, this research employs CNNs to support secure vehicle access and mitigate GTA risks. The persistence of vehicle theft in South Africa, despite the widespread use of existing security technologies, reveals a critical need for more intelligent and adaptive systems that harness AI and IoT for real-time driver verification and enhanced security.

The primary objective of this research is to design and implement a CNN-based vehicle security enhancement model aimed at reducing the incidence of grand theft auto (GTA) through automated driver authentication.

Contribution of the Paper

This paper contributes to both the motor vehicle industry and the academic literature. Prior works [26,27,28,29,30] focused largely on face detection or basic post-incident alerting. In contrast, this paper develops a real-time, on-device CNN-based VSEM that addresses persistent vehicle theft and unauthorized access, contributing a novel integration of CNN-based driver authentication with GSM-based notifications and GPS tracking in a South African context.

The prototype applies transfer learning with GoogLeNet on a Raspberry Pi 4, and its performance was validated in real time through both quantitative metrics and qualitative evaluations. The results showed an average recognition accuracy of 85.9%, a FAR of approximately 5%, an FRR of approximately 12%, and an average decision latency of 1.8 s. User evaluations also indicated strong acceptance of the approach, demonstrating both technical effectiveness and real-world applicability. The paper thus helps bridge the gap between theoretical models and practical, low-cost implementations suitable for deployment in high-risk environments.

Biometric systems in commercial vehicles such as the Genesis GV60 and Tesla exhibit sophisticated facial recognition capabilities; however, their direct applicability in the South African context is limited. These systems are predominantly deployed in developed countries, where they benefit from robust infrastructure and seamless integration with proprietary vehicle platforms. South Africa, as a developing country, lacks the widespread infrastructure, such as reliable connectivity and robust charging networks, required to support such advanced technologies effectively. Moreover, these commercial systems are typically vendor-locked and depend on proprietary hardware and firmware, making them incompatible with generic or legacy vehicles. The proposed system addresses these challenges by being lightweight, flexible, and cost-effective, built around a Raspberry Pi and a Pi Camera. This open, modular design allows easy integration into a variety of vehicles, including those not supported by major manufacturers, making it a more viable solution for low-resource, infrastructure-limited regions.

3. Related Work

Vehicle theft remains a growing concern, despite the advancement of modern vehicle security technologies, such as keyless entry systems. Studies have revealed that these innovations remain vulnerable to breaches. For instance, ref. [27] proposed a blockchain-based framework using two-step authentication (2SA) and smart contracts to detect vehicle theft in connected vehicles. Although the system provides a decentralized and secure method for user verification, it requires a lengthy initial setup and repeated authentication, which could hinder user convenience and real-time responsiveness.

To counter limitations in existing systems, researchers such as [28] have developed a face detection system using Raspberry Pi, Haar Cascade, and Local Binary Pattern Histogram (LBPH) techniques. This system integrates facial recognition with GPS and GSM to detect and notify vehicle owners about unauthorized access. While the model demonstrated promising accuracy under optimal lighting conditions, it performed poorly during low-light periods and experienced delays due to its multi-stage processing. Similarly, ref. [29] introduced a GPS- and GSM-based alert system that notifies vehicle owners upon ignition; however, it lacked a mechanism for driver authentication prior to vehicle startup.

Other researchers explored additional approaches. Ref. [30] used IP webcams for image capture and integrated an alarm and locking system, though it only secured door access. Ref. [31] proposed a face-authentication system that denied vehicle access to unauthorized users, but it lacked location tracking capabilities. Ref. [32] designed a multi-layered security model involving password-based entry and a secret locking mechanism with alerts to both the police and the owner; however, its reliance on passwords and physical mechanisms may introduce usability and security risks. Ref. [33] proposed an anti-theft control system based on an embedded system design that alerts the owner and police in the event of unauthorized access, but it relies primarily on hardware-based triggers and does not incorporate real-time biometric authentication. Together, these studies underscore the need for real-time, intelligent vehicle security systems that integrate efficient authentication with precise location tracking and low-latency alerts.

4. Materials and Methods

The proposed CNN-based Vehicle Security Enhancement Model (VSEM) is designed to provide a multi-layered vehicle security solution by integrating facial recognition, Global System for Mobile Communication (GSM), and Global Positioning System (GPS) technologies. Central to the system is a Convolutional Neural Network (CNN), implemented with the GoogLeNet architecture and trained on the Labelled Faces in the Wild (LFW) dataset. The CNN identifies and authenticates vehicle drivers in real time to prevent unauthorized access.

The hardware configuration consists of a Raspberry Pi 4 single-board computer (Raspberry Pi Foundation, Cambridge, UK), a Pi Camera Module V2 (Raspberry Pi Foundation, Cambridge, UK) for image capture, a u-blox NEO-6M GPS module (u-blox AG, Thalwil, Switzerland) for location tracking, a SIM800L GSM module (SIMCom Wireless Solutions, Shanghai, China) for sending SMS notifications and alerts, and two DC gear motors (TT-DC type, Shenzhen Deyuan Science & Technology Co., Ltd., Shenzhen, China) simulating the vehicle lock and ignition systems. The system was developed and simulated in MATLAB R2023b (MathWorks Inc., Natick, MA, USA), with Simulink (MathWorks Inc., Natick, MA, USA) used for system modelling and visualization. The operational workflow comprises capturing the driver’s image, processing it and extracting facial features, performing facial recognition with the CNN model, and responding to the authentication result via the GSM and GPS modules.

In this paper, on-device means that the full recognition pipeline runs locally on the vehicle’s Raspberry Pi 4: capturing frames, detecting faces, extracting GoogLeNet embeddings, matching them against a local template database, and making the access decision. No raw images are uploaded to any remote server; only alert metadata (GPS coordinates, confidence score, vehicle registration, and a Google Maps link) is transmitted via GSM.

Model & Training Details

This paper adopts transfer learning with GoogLeNet. The last two layers, namely the fully connected and output layers, are replaced by two custom-defined layers: the facial feature learner and the face classifier layer. This adjustment lets the model specialize in facial recognition instead of generic object classification, which is intended to improve its accuracy and align its behaviour with the objectives of this study. The early convolutional layers are frozen to preserve generic visual features, while the later Inception modules are fine-tuned on the target dataset. All input images are resized to 224 × 224 pixels and normalized. Data augmentation included horizontal flipping, brightness adjustments of ±20%, and in-plane rotations of ±15° to improve robustness under varying conditions.
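The preprocessing and augmentation steps above can be sketched as follows. This is an illustrative NumPy version, not the MATLAB code used in the prototype: the nearest-neighbour resizing, the [0, 1] pixel scaling (the paper does not specify its normalization scheme), the 50% flip probability, and the function names `resize_nearest`, `normalize`, `rotate_nearest`, and `augment` are all assumptions.

```python
import numpy as np

TARGET = 224  # GoogLeNet input size stated in the text


def resize_nearest(img: np.ndarray, size: int = TARGET) -> np.ndarray:
    """Nearest-neighbour resize of an H x W (x C) image to size x size."""
    h, w = img.shape[:2]
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    return img[rows][:, cols]


def normalize(img: np.ndarray) -> np.ndarray:
    """Scale uint8 pixels to float32 in [0, 1] (assumed normalization)."""
    return img.astype(np.float32) / 255.0


def rotate_nearest(img: np.ndarray, degrees: float) -> np.ndarray:
    """Rotate about the image centre (nearest neighbour, zero fill)."""
    h, w = img.shape[:2]
    theta = np.deg2rad(degrees)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    # Inverse mapping: locate the source pixel for every output pixel.
    src_x = np.cos(theta) * (xx - cx) + np.sin(theta) * (yy - cy) + cx
    src_y = -np.sin(theta) * (xx - cx) + np.cos(theta) * (yy - cy) + cy
    src_x = np.rint(src_x).astype(int)
    src_y = np.rint(src_y).astype(int)
    valid = (src_x >= 0) & (src_x < w) & (src_y >= 0) & (src_y < h)
    out = np.zeros_like(img)
    out[valid] = img[src_y[valid], src_x[valid]]
    return out


def augment(img: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Random horizontal flip, brightness +/-20% and rotation +/-15 degrees."""
    if rng.random() < 0.5:
        img = img[:, ::-1]  # horizontal flip (reverse column order)
    img = np.clip(img * rng.uniform(0.8, 1.2), 0.0, 1.0)
    return rotate_nearest(img, rng.uniform(-15.0, 15.0))
```

In use, every frame would pass through `normalize(resize_nearest(frame))`, while `augment` would be applied only to training images so that validation and test metrics reflect unmodified inputs.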

The dataset consisted of five subjects, each contributing multiple facial images. The images were split into 80/10/10% training, validation, and test partitions, stratified per subject. Training was performed using the Adam optimizer with an initial learning rate of 1 × 10⁻⁴, a batch size of 32, and up to 30 epochs, with early stopping (patience = 5) to prevent overfitting.
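A minimal sketch of the per-subject stratified 80/10/10 split and of early stopping with patience = 5 is shown below. The `(image_path, subject_id)` sample format, the fixed seed, and the `EarlyStopping` class are hypothetical; the optimizer itself (Adam, learning rate 1 × 10⁻⁴, batch size 32) is left to the deep learning framework.

```python
import random
from collections import defaultdict


def stratified_split(samples, ratios=(0.8, 0.1, 0.1), seed=0):
    """Split (image_path, subject_id) pairs so every subject keeps the same ratios."""
    by_subject = defaultdict(list)
    for path, subject in samples:
        by_subject[subject].append((path, subject))
    rng = random.Random(seed)
    train, val, test = [], [], []
    for subject in sorted(by_subject):
        items = by_subject[subject][:]
        rng.shuffle(items)  # shuffle within the subject only
        n_train = round(len(items) * ratios[0])
        n_val = round(len(items) * ratios[1])
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In a training loop of up to 30 epochs, `stopper.step(val_loss)` would be called once per epoch and the loop exited when it returns `True`; many frameworks also restore the weights from the best epoch at that point.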

Evaluation metrics included accuracy, False Acceptance Rate (FAR), False Rejection Rate (FRR), and decision latency. Additionally, we plotted a Detection Error Tradeoff (DET) curve. The operating threshold τ was selected based on the Equal Error Rate (EER) point on the validation set and reported explicitly.
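The error-rate definitions and EER-based threshold selection described above can be expressed as a short NumPy sketch. It assumes that higher scores mean a better match, that a probe is accepted when s ≥ τ, and that genuine (same-driver) and impostor (different-driver) validation scores are available as arrays; the function names are hypothetical, and plotting the DET curve (usually on normal-deviate axes) is omitted.

```python
import numpy as np


def far_frr(genuine, impostor, tau):
    """FAR: share of impostor scores accepted (s >= tau).
    FRR: share of genuine scores rejected (s < tau)."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    far = float(np.mean(impostor >= tau))
    frr = float(np.mean(genuine < tau))
    return far, frr


def det_points(genuine, impostor):
    """(tau, FAR, FRR) for every observed score used as a candidate threshold."""
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    return [(float(t), *far_frr(genuine, impostor, t)) for t in thresholds]


def eer_threshold(genuine, impostor):
    """Threshold where FAR and FRR are closest, i.e. the empirical EER point."""
    return min(det_points(genuine, impostor), key=lambda p: abs(p[1] - p[2]))
```

Raising τ above the EER point trades a lower FAR for a higher FRR, which is the direction the Discussion argues for in a deployed vehicle.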

Table 2 below summarizes the tools and technologies used to achieve the aim of this paper.

Table 2.

Tools and Technologies used in this paper.

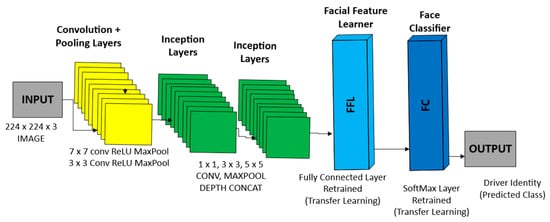

Figure 1 below shows the modified GoogLeNet architecture used in this paper for facial recognition. GoogLeNet is a 22-layer deep convolutional neural network developed for the ImageNet Large Scale Visual Recognition Challenge (ILSVRC). Its Inception modules are the main innovation.

Figure 1.

Modified GoogLeNet architecture for the CNN-based VSEM.

Inception modules use multiple convolution filters (1 × 1, 3 × 3, and 5 × 5, which refer to kernel sizes that determine the area of the input image each filter examines) and pooling operations (which downsample feature maps to reduce spatial size and computational load) in parallel within the same layer. The outputs are concatenated depth-wise (meaning they are combined along the channel dimension), so the network can capture multi-scale spatial features at once. This design gives GoogLeNet high representational power while keeping the parameter count low compared to traditional CNNs of similar depth. As a result, the model is more computationally efficient and better suited for deployment on devices with limited resources, such as the Raspberry Pi.
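The depth-wise concatenation and the parameter saving described above can be checked with simple arithmetic. The sketch below uses the branch widths of GoogLeNet’s inception (3a) module from the original GoogLeNet paper (192 input channels; 64, 128, 32, and 32 output channels, with a 16-channel 1 × 1 reduction before the 5 × 5 convolution); biases are ignored, and the zero-filled arrays merely stand in for real feature maps.

```python
import numpy as np


def conv_weights(k, c_in, c_out):
    """Number of weights in a k x k convolution (biases ignored)."""
    return k * k * c_in * c_out


C_IN = 192  # channels entering inception (3a)
BRANCH_OUT = {"1x1": 64, "3x3": 128, "5x5": 32, "pool_proj": 32}

# Each parallel branch yields a 28 x 28 map; concatenating along the channel
# axis stacks them into one tensor.
branch_maps = [np.zeros((28, 28, c)) for c in BRANCH_OUT.values()]
concat = np.concatenate(branch_maps, axis=-1)
print(concat.shape)  # (28, 28, 256)

# 5x5 branch applied directly to all 192 channels ...
direct_5x5 = conv_weights(5, C_IN, 32)
# ... versus a 1x1 reduction to 16 channels followed by the 5x5 convolution.
reduced_5x5 = conv_weights(1, C_IN, 16) + conv_weights(5, 16, 32)
print(direct_5x5, reduced_5x5)  # 153600 15872
```

The 1 × 1 reduction cuts the weights of this single branch by roughly a factor of ten, which is the main reason the network remains small enough for a Raspberry Pi.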

In this paper, the convolutional layers, pooling layers, and all Inception modules are preserved. This leverages their ability to extract rich, hierarchical visual features from input face images. However, the final two layers of the pre-trained GoogLeNet, the fully connected layer and the 1000-class softmax classifier, are replaced with custom-designed components:

Facial Feature Learner: a fully connected layer (a set of artificial neurons with connections to all activations in the previous layer) that encodes discriminative identity-specific embeddings (numerical representations that capture unique facial features for individuals).

Face Classifier: a softmax output layer (a mathematical function that converts input values into probabilities that sum to 1) trained with cross-entropy loss (a measure of the difference between the predicted and actual distributions) to map these embeddings to known driver identities.
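The two replacement layers can be sketched in NumPy as a fully connected layer followed by softmax and cross-entropy. This is a simplified illustration, not the MATLAB implementation: it assumes the 1024-dimensional pooled GoogLeNet features as input, an output width equal to the number of enrolled drivers (five here), and plain gradient descent instead of Adam; the `FaceHead` class is hypothetical.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax; subtracting the max keeps exp() numerically stable."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def cross_entropy(probs, labels):
    """Mean negative log-probability assigned to the true identity."""
    n = probs.shape[0]
    return float(-np.mean(np.log(probs[np.arange(n), labels] + 1e-12)))


class FaceHead:
    """Fully connected layer + softmax over enrolled drivers (hypothetical)."""

    def __init__(self, in_dim=1024, n_ids=5, seed=0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(0.0, 0.01, size=(in_dim, n_ids))
        self.b = np.zeros(n_ids)

    def forward(self, feats):
        return softmax(feats @ self.W + self.b)  # per-driver probabilities

    def sgd_step(self, feats, labels, lr=0.01):
        """One gradient-descent step; returns the loss before the update."""
        n = feats.shape[0]
        probs = self.forward(feats)
        grad = probs.copy()
        grad[np.arange(n), labels] -= 1.0  # d(loss)/d(logits) for softmax + CE
        grad /= n
        self.W -= lr * feats.T @ grad
        self.b -= lr * grad.sum(axis=0)
        return cross_entropy(probs, labels)
```

The gradient of softmax followed by cross-entropy with respect to the logits is simply the predicted probabilities minus the one-hot label, which is why the update needs no explicit softmax derivative.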

This modification was crucial to shift the network’s focus from generic object classification to fine-grained identity recognition. Without these changes, GoogLeNet would output one of the 1000 ImageNet object categories (e.g., “sports car” or “tabby cat”) rather than identifying individual drivers. Training the new final layers, while fine-tuning only the later Inception modules and keeping the early convolutional layers frozen (transfer learning), retains the network’s feature-extraction capability while allowing the model to specialize in facial authentication.

The resulting network is lightweight enough for real-time inference on a Raspberry Pi 4, where it achieved an average recognition accuracy of 85.9%, a False Acceptance Rate (FAR) of ~5%, and a decision latency of about 1.8 s. These results suggest that the model is practical for proactive vehicle security applications in real-world, resource-limited settings.

5. Implementation

5.1. The CNN-Based VSEM Experimental Setup

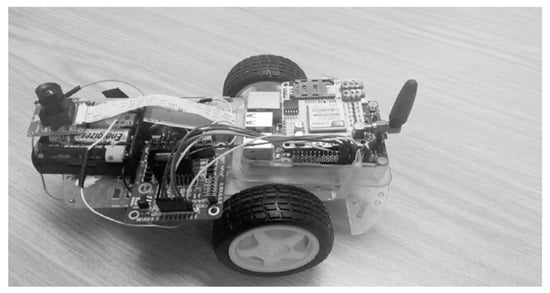

The CNN-based Vehicle Security Enhancement Model (VSEM) prototype was built from a set of hardware components, each contributing a specific function aimed at improving vehicle security. The system integrated a Raspberry Pi 4, a camera module, GPS and GSM modules, two motors, and a microSD card used for database storage.

The Raspberry Pi 4 functioned as the central processing unit, managing and coordinating the activities of all connected components. The camera module enabled real-time image capture for facial recognition and threat detection, while the GPS module provided location tracking for monitoring vehicle movement in the event of unauthorized access. The GSM module provided communication capabilities, enabling the system to send alert notifications to the vehicle owner when it detects suspicious behaviour. Two motors served as the prototype’s actuators, simulating the vehicle’s lock and ignition mechanisms.

To ensure efficient communication and integration across these components, MATLAB was used as the development platform, providing the communication protocols and interface support needed for the hardware modules to interact. This gave the prototype a unified and responsive system architecture that addresses key security concerns.

The end-to-end pipeline runs on a Raspberry Pi 4 with MATLAB/Simulink. Frames captured by the Pi Camera are first processed with MATLAB’s vision.CascadeObjectDetector to localize the face region of interest (ROI). Detected faces are resized and normalized before being passed through GoogLeNet for inference using the Deep Learning Toolbox. The resulting feature embeddings are compared against the on-device template database (stored on the microSD card) using cosine similarity, producing a confidence score. A decision block then applies the threshold τ to determine authentication, after which the GPIO pins actuate the two motors simulating vehicle lock and ignition control. Communication is handled by the SIM800L GSM module, interfaced via UART, which transmits SMS alerts, while the u-blox NEO-6M GPS module provides latitude and longitude coordinates at 1 Hz. Each alert payload contains the driver identifier, timestamp, location, confidence score, and vehicle registration number, along with a Google Maps link generated from the GPS coordinates. Figure 2 below depicts the prototype of the proposed solution.
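The matching and decision steps can be illustrated with a short NumPy sketch of cosine-similarity matching against enrolled templates. The dictionary layout of the template database, the use of the maximum similarity over each driver’s templates, and the function names are assumptions; the paper does not specify how multiple templates per driver are combined.

```python
import numpy as np


def l2_normalize(v, eps=1e-12):
    """Scale vectors to unit length so a dot product equals cosine similarity."""
    v = np.asarray(v, dtype=float)
    return v / (np.linalg.norm(v, axis=-1, keepdims=True) + eps)


def match(embedding, templates, tau):
    """Compare one face embedding against every enrolled driver.

    templates: dict driver_id -> (k, d) array of enrolled embeddings.
    Returns (accepted, driver_id or None, best cosine score s).
    """
    q = l2_normalize(embedding)
    best_id, best_score = None, -1.0
    for driver_id, t in templates.items():
        score = float(np.max(l2_normalize(t) @ q))  # best-matching template
        if score > best_score:
            best_id, best_score = driver_id, score
    accepted = best_score >= tau  # decision rule: accept if s >= tau
    return accepted, (best_id if accepted else None), best_score
```

Because the embeddings are normalized first, the score lies in [−1, 1] regardless of image brightness scaling, which makes a single global threshold τ meaningful across drivers.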

Figure 2.

CNN-based VSEM prototype.

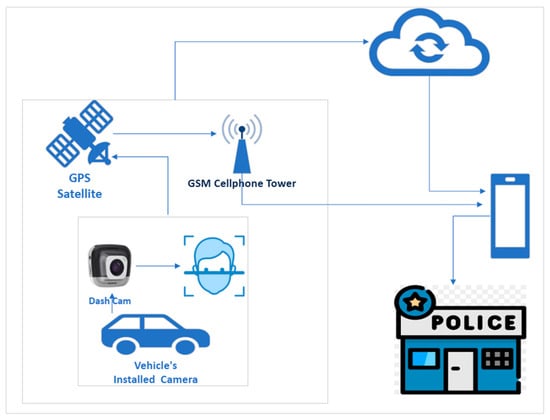

5.2. CNN-Based VSEM Architecture

Figure 3 below presents the system architecture of the CNN-based Vehicle Security Enhancement Model (VSEM), which integrates multiple components to deliver real-time authentication, location tracking, and alert notifications. The architecture follows a systematic flow of operations, with each component contributing to the overall functionality of the system.

Figure 3.

Architecture of the CNN-based VSEM, showing the interaction between Authentication, GPS tracking, and GSM communication.

The architecture of the CNN-based Vehicle Security Enhancement Model (VSEM) follows a structured sequence of operations that are executed entirely on a Raspberry Pi 4. Enrollment begins via the mobile application, where each authorized driver is registered with six facial images and a personal identification number (PIN). These templates are securely stored in the local database on the microSD card. Once the system is armed, the Pi Camera continuously streams frames, which are ingested and timestamped by MATLAB to maintain a steady processing rate of approximately 10–15 frames per second. Each frame is resized to the network’s input dimensions and normalized to enhance robustness under varying illumination conditions.
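Enrollment can be sketched as storing six L2-normalized embeddings per driver. The embedding vectors are assumed to come from the fine-tuned GoogLeNet; the JSON serialization, the `enroll` helper, and the file path mentioned in the comment are hypothetical choices for illustration, and the PIN is deliberately left out of this sketch.

```python
import json

import numpy as np

IMAGES_PER_DRIVER = 6  # six enrollment images per driver, as described above


def enroll(db, driver_id, embeddings):
    """Add one driver's six embeddings, L2-normalized, to the template database."""
    embs = np.asarray(embeddings, dtype=float)
    if embs.ndim != 2 or embs.shape[0] != IMAGES_PER_DRIVER:
        raise ValueError(f"expected {IMAGES_PER_DRIVER} embeddings, got {embs.shape}")
    db[driver_id] = embs / np.linalg.norm(embs, axis=1, keepdims=True)
    return db


def to_json(db):
    """Serialize templates, e.g. for writing to a (hypothetical) file on the microSD card
    such as /media/sdcard/vsem_templates.json."""
    return json.dumps({k: v.tolist() for k, v in db.items()})


def from_json(text):
    """Load templates back into NumPy arrays at start-up."""
    return {k: np.asarray(v, dtype=float) for k, v in json.loads(text).items()}
```

Storing normalized vectors once at enrollment means the per-frame matching step only has to normalize the new query embedding.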

A cascade face detector (CascadeObjectDetector) localizes the face region of interest (ROI), and detected faces are cropped for recognition. The cropped images are then passed through GoogLeNet, which has been fine-tuned on the LFW dataset, to generate a compact embedding (feature vector). These embeddings are compared against the local template database using cosine similarity, producing a confidence score (s). A decision block applies the threshold τ to this score; if s ≥ τ, the authentication is accepted, and the GPIO pins actuate two motors simulating door/lock release and ignition control. Otherwise, the system initiates a rejection path.

In the reject case, the system composes an alert payload containing the driver identifier, timestamp, latitude, longitude, confidence score, registration number, and a Google Maps link generated from the GPS fix. The u-blox NEO-6M GPS module provides location updates at 1 Hz, while the SIM800L GSM module transmits the structured payload as an SMS notification to the registered owner. By default, no raw face images are transmitted, preserving user privacy. All templates and recognition data remain local to the Raspberry Pi, ensuring data security and privacy. This workflow ensures that authentication, tracking, and notifications occur seamlessly within real-time operational bounds.
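The NEO-6M emits standard NMEA 0183 sentences, so obtaining the latitude and longitude for the alert payload mainly involves parsing one sentence type. The sketch below assumes the `$GPRMC` (or `$GNRMC`) recommended-minimum sentence is used; the paper does not say which sentence the prototype reads, and the function names are hypothetical.

```python
def nmea_checksum(body: str) -> int:
    """XOR of all characters between '$' and '*'."""
    cs = 0
    for ch in body:
        cs ^= ord(ch)
    return cs


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA (d)ddmm.mmmm plus N/S/E/W to signed decimal degrees."""
    dot = value.index(".")
    degrees = int(value[: dot - 2])
    minutes = float(value[dot - 2:])
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_rmc(sentence: str):
    """Return (lat, lon) from an RMC sentence, or None if corrupt or without a fix."""
    sentence = sentence.strip()
    if not sentence.startswith("$") or "*" not in sentence:
        return None
    body, _, checksum = sentence[1:].partition("*")
    if nmea_checksum(body) != int(checksum, 16):
        return None  # corrupted sentence
    fields = body.split(",")
    if len(fields) < 7 or not fields[0].endswith("RMC") or fields[2] != "A":
        return None  # not RMC, or status 'V' (no valid fix)
    return _to_degrees(fields[3], fields[4]), _to_degrees(fields[5], fields[6])
```

At the module’s 1 Hz update rate, the most recent valid fix would simply be cached and read when an alert is composed.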

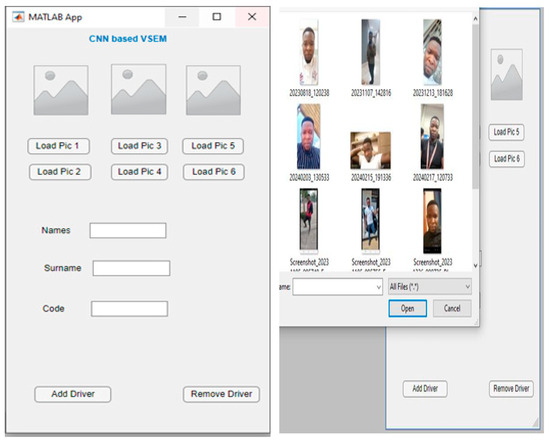

5.3. Driver Registration

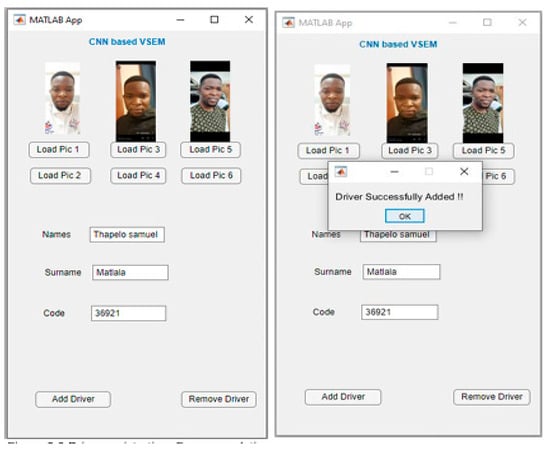

Driver registration within the proposed CNN-based Vehicle Security Enhancement Model (VSEM) is facilitated through a dedicated mobile application and is executed in two primary stages: facial image acquisition and form submission. In the initial stage, users are required to upload six distinct images of the driver’s face as illustrated in Figure 4 below.

Figure 4.

Driver Registration.

The interface initially appears empty, and images are uploaded by selecting designated buttons such as “Load Pic 1,” which allow users to navigate to the device’s storage and select images. These buttons are organized in pairs, sharing image display screens; only the most recently uploaded image is visible, while previous uploads are retained in the background. This mechanism ensures efficient resource usage while preserving all images necessary for CNN processing.

The second stage involves completing a minimal digital registration form within the mobile application. Users are required to input only three fields: the driver’s full name, surname, and a secure PIN known to the vehicle owner. This concise format, as depicted in Figure 4, is intentionally designed to enhance efficiency, protect user privacy, and minimize data entry errors. The simplified approach reflects the assumption that drivers added to the system are trusted individuals, thereby eliminating the need for detailed personal identifiers. Upon successful image upload and form completion, the user selects the “Add Driver” option. A confirmation message is then displayed, indicating that the driver has been successfully registered and authorized for vehicle operation.

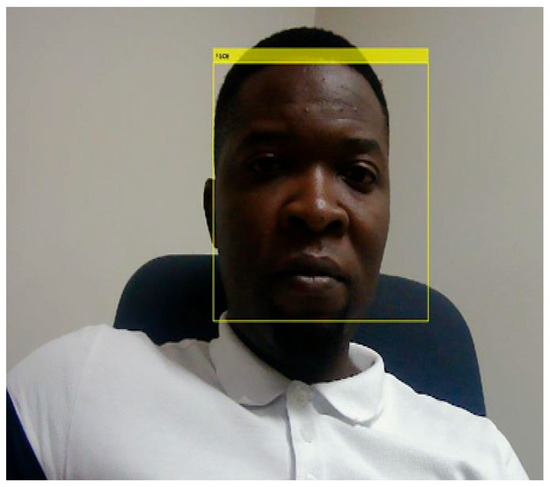

5.4. In-Vehicle Process Demonstration

Upon successful driver registration, the in-vehicle process proceeds with capturing the driver’s facial image via a webcam and performing facial recognition. As illustrated in Figure 5, a webcam installed within the vehicle captures the driver’s facial image automatically upon ignition. This image is then processed using a Convolutional Neural Network (CNN), which extracts key facial features necessary for driver identification. These features are subsequently compared against those stored during the registration phase.

Figure 5.

Image captured by the dash cam inside the vehicle.

During subsequent vehicle start-ups, a new image is captured and subjected to the same feature extraction process. The model compares the newly extracted features with the stored ones to identify the driver. This feature-based process is intended to tolerate environmental and appearance-based variations, supporting both system security and convenience.

When a new driver is registered, the model undergoes a rapid retraining process that incorporates the new driver’s data, enabling the CNN to learn and encode their unique facial characteristics. This continual learning capability helps the system maintain recognition accuracy as the set of authorized drivers changes.

It is critical to understand that CNN-based facial recognition systems do not perform direct image-to-image comparisons. Due to natural variations in pose, lighting, hairstyle, and attire, such comparisons could be unreliable. Instead, the system employs a feature extraction method that isolates invariant and distinguishing facial features, allowing for consistent recognition despite superficial changes.

This feature-based approach allows the model to construct robust facial representations, supporting reliable identification despite minor day-to-day variations in appearance. During training, the network learns features that implicitly capture characteristics such as the spatial relationships between the eyes, nose, and mouth. The resulting feature vectors are labelled with the driver’s identity and stored in the database.

This methodology underscores the significance of relying on invariant features rather than raw image comparisons, thereby ensuring accurate recognition and a seamless user experience.

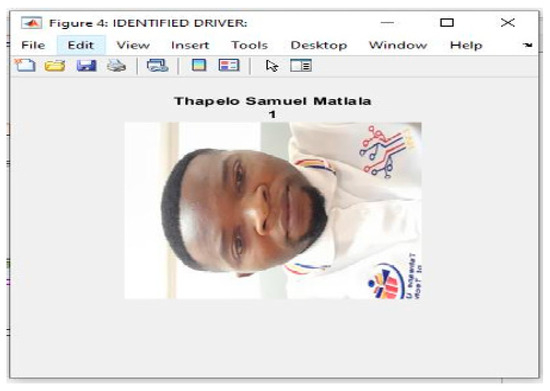

5.5. Driver Identification

Following the feature extraction and comparison process, the system successfully recognized the driver, identified as “Thapelo Samuel Matlala” (Figure 6). This identification enabled the vehicle’s ignition system, allowing the engine to start.

Figure 6.

Identified driver.

During the initial training phase, the model had already encoded Thapelo’s unique facial features into the system’s database. When the real-time image was captured and processed, the extracted features matched those previously stored, confirming the driver’s identity.

This interaction between the facial recognition model and the vehicle’s control system restricts engine activation to authorized drivers. The system’s performance in the demonstrations, including under variable lighting and slight changes in appearance, indicates its practical viability for real-world vehicle access control.

The successful identification process reinforces the effectiveness of using advanced feature extraction and comparison algorithms for secure and reliable driver authentication.

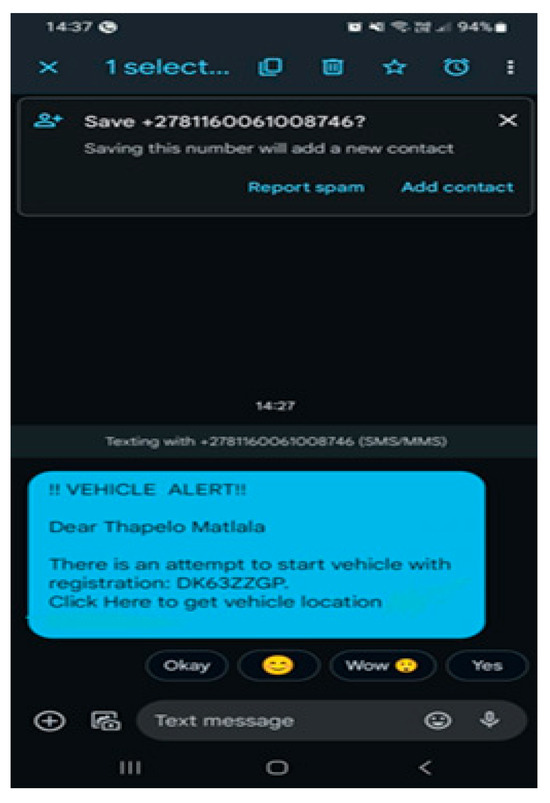

5.6. SMS Notification and GPS Location Tracking

Upon driver identification, the system implements additional security features, including SMS notifications and real-time GPS tracking.

5.6.1. SMS Notification

At the moment of identification, the system also sends an SMS alert to the registered vehicle owner. As shown in Figure 7, the message contains the vehicle’s registration number and a clickable link to the vehicle’s location.
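How the alert is transmitted is not detailed in this section. Assuming a GSM modem that accepts the standard text-mode SMS AT commands (`AT+CMGF=1`, then `AT+CMGS` with the destination number, and the message body terminated by Ctrl-Z), the alert could be composed as follows. The link uses the documented Google Maps URLs format (`/maps/search/?api=1&query=lat,lon`); the prototype's exact link format, phone number, and message wording are not given, so the helper names and values below are illustrative.

```python
from typing import List
from urllib.parse import urlencode

CTRL_Z = "\x1a"  # terminates the message body after AT+CMGS in text mode


def maps_link(lat: float, lon: float) -> str:
    """Google Maps URL that drops a pin at decimal-degree coordinates."""
    return "https://www.google.com/maps/search/?" + urlencode(
        {"api": 1, "query": f"{lat:.6f},{lon:.6f}"})


def build_alert(registration: str, driver: str, lat: float, lon: float) -> str:
    """Hypothetical alert text: registration number, identified driver, location link."""
    return (f"Vehicle {registration}: driver {driver} identified. "
            f"Location: {maps_link(lat, lon)}")


def sms_at_commands(phone: str, text: str) -> List[str]:
    """AT command sequence for sending one text-mode SMS."""
    return [
        "AT\r",                  # check that the modem responds
        "AT+CMGF=1\r",           # select SMS text mode
        f'AT+CMGS="{phone}"\r',  # destination; the modem answers with a '>' prompt
        text + CTRL_Z,           # message body, terminated by Ctrl-Z
    ]
```

In practice each command would be written to the modem's serial port (for example with pyserial) and the program would wait for the modem's `OK` or `>` response before sending the next one. A single GSM 7-bit SMS holds 160 characters, so the alert should stay short.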

Figure 7.

SMS Notification.

5.6.2. Real-Time Location Tracking

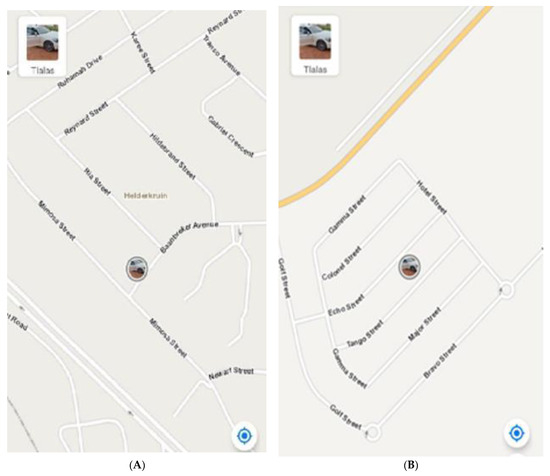

Clicking the link opens Google Maps, which displays the vehicle’s current location. Figure 8 shows the vehicle’s position at two different times, points (A) and (B). This integrated notification system strengthens vehicle security by giving the owner immediate awareness of the vehicle’s activity. Because the message carries both the registration details and a direct location link, the owner can act promptly in the event of unauthorized access, which provides real-time monitoring and greater peace of mind.
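Before a location link can be generated, the GPS receiver's output has to be converted into decimal-degree coordinates. The section does not name the receiver or its output format; assuming it emits standard NMEA 0183 `RMC` sentences over serial, as many low-cost modules do, a minimal parser would verify the XOR checksum, check that the fix is valid (status `A`), and convert the `ddmm.mmmm` and `dddmm.mmmm` fields, making southern latitudes and western longitudes negative. The sign handling matters in South Africa, where latitudes are negative.

```python
from functools import reduce
from typing import Optional, Tuple


def nmea_checksum_ok(sentence: str) -> bool:
    """Verify the XOR checksum of the characters between '$' and '*'."""
    body, sep, given = sentence.strip().lstrip("$").partition("*")
    if not sep:
        return False
    calc = reduce(lambda acc, ch: acc ^ ord(ch), body, 0)
    return f"{calc:02X}" == given[:2].upper()


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA ddmm.mmmm / dddmm.mmmm to signed decimal degrees."""
    dot = value.index(".")
    degrees = float(value[:dot - 2])
    minutes = float(value[dot - 2:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_rmc(sentence: str) -> Optional[Tuple[float, float]]:
    """Return (lat, lon) from a valid $--RMC sentence, or None if unusable."""
    if not nmea_checksum_ok(sentence):
        return None
    fields = sentence.split("*")[0].split(",")
    if len(fields) < 7 or not fields[0].endswith("RMC") or fields[2] != "A":
        return None  # wrong sentence type, truncated, or no valid fix ('V')
    lat = _to_degrees(fields[3], fields[4])
    lon = _to_degrees(fields[5], fields[6])
    return lat, lon
```

For example, a latitude field of `2611.4000,S` becomes -26.19 and a longitude field of `02801.8000,E` becomes 28.03, which can then be placed in the owner's location link.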

Figure 8.

Vehicle’s identified location at point (A) and point (B).

6. Discussion

The evaluation results demonstrated that the CNN-based facial recognition system effectively identified authorized drivers, with an average classification accuracy of 85.9% across five subjects. Two identities (Abdullah Gul and Thapelo Matlala) achieved 100% recognition accuracy, while the others were recognized less reliably: Amelie Mauresmo at 78.6%, Andre Agassi at 75.9%, and Andy Roddick at 75.0%. These results show that the system can provide highly reliable authentication in some cases, while also revealing challenges when enrolled identities are visually similar.
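The reported average follows directly from the five per-identity rates given in the confusion-matrix analysis later in this section. It is an unweighted (macro) mean, so each identity counts equally regardless of how many test images it contributed; a sample-weighted overall accuracy would generally differ unless every identity had the same number of test images:

$$
\bar{A}_{\text{macro}} = \frac{1}{5}\sum_{k=1}^{5} A_k = \frac{100 + 78.6 + 75.9 + 75.0 + 100}{5} = \frac{429.5}{5} = 85.9\%
$$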

To further assess security reliability, we estimated key error rates. The system exhibited a False Acceptance Rate (FAR) of ~5% and a False Rejection Rate (FRR) of ~12%, which are acceptable for a prototype but indicate potential vulnerability in high-stakes applications. In real deployments, reducing FAR is particularly important to prevent unauthorized access, while maintaining a manageable FRR to avoid frustrating legitimate users.
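The procedure used to estimate these rates is not described in this section. Under the standard biometric definitions, FAR is the fraction of impostor attempts that are accepted and FRR is the fraction of genuine attempts that are rejected, both at a given decision threshold. The sketch below computes them from lists of match scores; the scores in any example are toy values, not the study's data. Raising the threshold trades a lower FAR for a higher FRR, which is the balance described in the paragraph above.

```python
from typing import List, Sequence, Tuple


def far_frr(genuine: Sequence[float], impostor: Sequence[float],
            threshold: float) -> Tuple[float, float]:
    """FAR and FRR at a threshold, accepting an attempt when score >= threshold.

    genuine: match scores for attempts by the enrolled driver.
    impostor: match scores for attempts by anyone else.
    """
    if not genuine or not impostor:
        raise ValueError("need at least one genuine and one impostor score")
    false_accepts = sum(1 for s in impostor if s >= threshold)
    false_rejects = sum(1 for s in genuine if s < threshold)
    return false_accepts / len(impostor), false_rejects / len(genuine)


def sweep(genuine: Sequence[float], impostor: Sequence[float],
          thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """Tabulate (threshold, FAR, FRR) to expose the trade-off between the two rates."""
    return [(t, *far_frr(genuine, impostor, t)) for t in thresholds]
```

With toy genuine scores `[0.9, 0.85, 0.7, 0.95]` and impostor scores `[0.2, 0.5, 0.82, 0.1]`, a threshold of 0.8 gives FAR = FRR = 0.25, while 0.9 drives FAR to 0 at the cost of FRR = 0.5.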

Another important aspect of usability is system responsiveness. Our prototype achieved an average decision latency of 1.8 s from image capture to ignition authorization on a Raspberry Pi 4. This latency, although not instantaneous, is reasonable for vehicle ignition scenarios and demonstrates the feasibility of deploying CNN-based recognition on resource-constrained embedded devices. Further optimization to bring latency below one second would nevertheless improve the user experience.
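The 1.8 s figure is an end-to-end average, and this section does not break it down by stage. A simple timing harness, sketched here with hypothetical stage names (for example image capture, face detection, CNN inference, matching, and relay actuation), would show which stage dominates and therefore where optimization toward the sub-second target should start. `time.perf_counter()` is used because it is a monotonic, high-resolution clock suited to measuring short intervals.

```python
import statistics
import time
from typing import Callable, Dict, List


def time_pipeline(stages: Dict[str, Callable[[], object]],
                  runs: int = 20) -> Dict[str, float]:
    """Mean wall-clock seconds per stage and end to end over `runs` iterations.

    Stages are executed in dictionary insertion order on every run.
    """
    samples: Dict[str, List[float]] = {name: [] for name in stages}
    totals: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        for name, stage in stages.items():
            t0 = time.perf_counter()
            stage()
            samples[name].append(time.perf_counter() - t0)
        totals.append(time.perf_counter() - start)
    report = {name: statistics.mean(values) for name, values in samples.items()}
    report["total"] = statistics.mean(totals)
    return report
```

Reporting the median or 95th percentile alongside the mean would also reveal occasional slow frames that an average can hide.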

Environmental conditions significantly affected performance. Under optimal lighting conditions, the model consistently recognized drivers with high accuracy; however, performance degraded in low-light environments, where accuracy dropped to approximately 85%. Moreover, facial obstructions such as sunglasses or masks caused additional misclassifications. These findings align with known limitations of 2D facial recognition systems. A further concern is spoofing: the model could not reliably distinguish between live faces and static photographs, making it susceptible to basic attacks. While this vulnerability was initially noted as “future work,” it represents a fundamental design issue for a real security system.

To evaluate performance at a finer granularity, we examined classification accuracy for each subject individually. Figure 9 presents the confusion matrix summarizing the CNN-based VSEM’s classification outcomes across five identities: Abdullah Gul, Amelie Mauresmo, Andre Agassi, Andy Roddick, and Thapelo Matlala.

Figure 9.

Confusion Matrix showing classification performance.

The confusion matrix illustrates the distribution of correct and incorrect predictions across the true and predicted labels.

The results show that the model achieved perfect classification accuracy (100%) for both Abdullah Gul and Thapelo Matlala, as indicated by the diagonal elements in the corresponding cells. For Amelie Mauresmo, 78.6% of instances were correctly identified, while 7.1% were misclassified as Abdullah Gul, another 7.1% as Andre Agassi, and 7.1% as Andy Roddick. In the case of Andre Agassi, 75.9% of instances were accurately classified, with 17.2% misclassified as Amelie Mauresmo and 6.9% as Andy Roddick. Andy Roddick’s classification accuracy stood at 75.0%, with 12.5% of instances misclassified as Andre Agassi and another 12.5% as Thapelo Matlala. These findings reflect strong classification performance overall while also highlighting areas where visual similarities among subjects led to occasional misclassifications.
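The percentages above can be arranged as a row-normalized confusion matrix (rows are true identities, columns are predictions) so that the confusions can be ranked systematically. The matrix in this sketch is rebuilt only from the figures stated in the preceding paragraph; cells not mentioned are set to zero, which is consistent with each reported row summing to about 100%. Raw counts are not given in the text, so the values are percentages, not sample numbers, and the helper names are illustrative.

```python
from typing import Dict, List, Tuple

LABELS = ["Abdullah Gul", "Amelie Mauresmo", "Andre Agassi",
          "Andy Roddick", "Thapelo Matlala"]

# Row-normalized confusion matrix (%) taken from the text:
# row = true identity, column = predicted identity, both in LABELS order.
CM = [
    [100.0,  0.0,  0.0,  0.0,   0.0],
    [  7.1, 78.6,  7.1,  7.1,   0.0],
    [  0.0, 17.2, 75.9,  6.9,   0.0],
    [  0.0,  0.0, 12.5, 75.0,  12.5],
    [  0.0,  0.0,  0.0,  0.0, 100.0],
]


def per_class_recall(cm: List[List[float]], labels: List[str]) -> Dict[str, float]:
    """Diagonal of a row-normalized matrix: share of each identity classified correctly."""
    return {labels[i]: cm[i][i] for i in range(len(labels))}


def ranked_confusions(cm: List[List[float]], labels: List[str],
                      min_pct: float = 0.0) -> List[Tuple[str, str, float]]:
    """All off-diagonal cells (true, predicted, %) sorted from most to least frequent."""
    pairs = [(labels[i], labels[j], cm[i][j])
             for i in range(len(labels)) for j in range(len(labels))
             if i != j and cm[i][j] > min_pct]
    return sorted(pairs, key=lambda p: p[2], reverse=True)
```

Here the top-ranked pair is Andre Agassi misclassified as Amelie Mauresmo (17.2%), the largest single confusion and a natural first place to look when adding more varied training images.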

7. Conclusions and Future Work

This paper introduced a CNN-based Vehicle Security Enhancement Model (VSEM) that integrates facial recognition with GSM and GPS technologies to reduce vehicle theft and unauthorized access in South Africa. The prototype, implemented on a Raspberry Pi 4, demonstrated both technical feasibility and practical applicability. Experimental results showed an average recognition accuracy of 85.9%, with some identities achieving 100% classification accuracy. Misclassifications resulted in an estimated False Acceptance Rate (FAR) of ~5% and False Rejection Rate (FRR) of ~12%, indicating that while the system is effective, improvements are needed in handling challenging conditions. The average decision latency of 1.8 s from image capture to ignition authorization indicates that near-real-time operation is feasible on embedded hardware, although sub-second latency remains a target for further optimization. In addition, preliminary user evaluations reported a high acceptance rate, supporting the system’s potential for practical adoption.

Despite these promising results, the system has limitations. Performance degraded under poor lighting conditions, and the current 2D facial recognition approach remains vulnerable to spoofing attacks using static images. Furthermore, the training dataset was relatively small, and the prototype was tested in controlled settings, which limits its generalizability.

Future research will therefore focus on enhancing robustness and security. Potential improvements include integrating 3D facial recognition and infrared imaging to enhance performance in low-light conditions, implementing liveness detection to mitigate spoofing attempts, and incorporating behavioral biometrics, such as head movement or gait analysis, for multi-modal authentication. Expanding the dataset with a larger and more diverse set of South African drivers will also strengthen generalizability. Additionally, further optimization of CNN architectures and lightweight deep learning models will be explored to reduce latency and improve efficiency on resource-constrained platforms.

Overall, this paper demonstrates the potential of combining deep learning with IoT-based tracking and alert mechanisms to create an intelligent, low-cost, and scalable vehicle security system. The findings provide a strong foundation for future advancements in proactive vehicle theft prevention.

Author Contributions

Conceptualization, T.S.M. and M.M.; methodology, T.S.M.; software, T.S.M.; validation, T.S.M.; formal analysis, T.S.M.; investigation, T.S.M.; resources, M.M.; data curation, T.S.M.; writing (original draft preparation), T.S.M.; writing (review and editing), R.L. and K.S.; visualization, T.S.M.; supervision, M.M. and K.S.; project administration, M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This study received no external funding. The article processing charge (APC) was funded personally by Dr. Michael Moeti (Supervisor). The hardware components used in this study were purchased personally by Thapelo Samuel Matlala (Main Author).

Institutional Review Board Statement

Not applicable. All face images were obtained from publicly available datasets (e.g., LFW on Kaggle) that are approved for research use and do not contain personally identifiable information beyond what is publicly released.

Informed Consent Statement

Not applicable. This study did not involve direct participation of human subjects; all face images were sourced from publicly available datasets (e.g., LFW dataset on Kaggle) that are already anonymized and licensed for research use.

Data Availability Statement

The original data presented in the study are openly available in the Labeled Faces in the Wild (LFW) dataset at: https://www.kaggle.com/datasets/jessicali9530/lfw-dataset.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Geldenhuys, K. Stolen Vehicles Heading for Our Borders: A Review of South Africa’s Crime Rate; SARP: Ekurhuleni, South Africa, 2024.

- Geldenhuys, B.K. Syndicates behind vehicle theft. Sabinet Afr. J. 2024, 117.

- Li, Z.; Liu, F.; Yang, W.; Peng, S.; Zhou, J. A Survey of Convolutional Neural Networks: Analysis, Applications, and Prospects. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 6999–7019.

- Papaioannou, K.J. ‘Hunger makes a thief of any man’: Poverty and crime in British colonial Asia. Eur. Rev. Econ. Hist. 2017, 21, 1–28.

- Marie. Bertha Benz: World’s First Car Theft & the Auto Industry. Available online: https://heyauto.com/blog/brain-fuel/bertha-benz-the-first-female-automotive-pioneer?srsltid=AfmBOor29AfaEi5LiMdTmCD3QcRosApOP6sfF-0MGVgfXAIYfvXyQetr (accessed on 20 September 2021).

- Winsor. Prisoner Devises Stolen Automobile Alarm. Popular Mechanics. 1913, p. 509. Available online: https://books.google.com/books?id=890DAAAAMBAJ&pg=PA509 (accessed on 20 September 2021).

- Kumawat, K.; Jain, A.; Tiwari, N. Relevance of Automatic Number Plate Recognition Systems in Vehicle Theft Detection. Eng. Proc. 2023, 59, 185.

- Tseng, P.Y.; Lin, P.C.; Kristianto, E. Vehicle Theft Detection by Generative Adversarial Networks on Driving Behavior. Eng. Appl. Artif. Intell. 2023, 117, 105571.

- Adelowo, C.M.; Ilevbare, O.E.; Sanni, M.; Orewole, M.O.; Oluwadare, J.A.; Olomu, M.O.; Ukwuoma, O. Social Sciences International Research Conference Theme: Emerging Perspectives, Methodologies, Practices and Theories; North-West University: Potchefstroom, South Africa, 2021.

- Geldenhuys, J. South African Crime Stats—Car Hijacking Shows Huge Increase—Moonstone, Report. Available online: https://www.moonstone.co.za/south-african-crime-stats-car-hijacking-shows-huge-increase/ (accessed on 5 October 2021).

- Camerer, L. White-collar crime in South Africa. Safundi 2002, 3, 1–16.

- Abdulkareem, H. Implementation of an Integrated Vehicle Security and Tracking System: A Review of Technical Literature; Cambridge Research and Publications: Asokoro, Nigeria, 2022.

- Preetha, S.; Shalini, S.; Arthi, R. Block Chain Based Car Air Unlock System. In Proceedings of the 2021 Innovations in Power and Advanced Computing Technologies (i-PACT), Kuala Lumpur, Malaysia, 27–29 November 2021; pp. 1–5.

- SAIA. Promoting a Trusted & Sustainable Non-Life Insurance Industry for South Africa; SAIA: Johns Creek, GA, USA, 2021.

- Hutchison, D. Web and Big Data; Springer: Berlin/Heidelberg, Germany, 2019; Volume 11642. Available online: http://link.springer.com/10.1007/978-3-030-26075-0 (accessed on 20 September 2021).

- Moeti, M.; Sigama, K.; Matlala, T.S. A Convolutional Neural Network-Based Vehicle Theft Detection, Location, and Reporting System. Int. J. Inf. Commun. Eng. 2023, 17, 257–261.

- Mukhopadhyay, D.; Gupta, M.; Attar, T.; Chavan, P.; Patel, V. An attempt to develop an IoT-based vehicle security system. In Proceedings of the 2018 IEEE International Symposium on Smart Electronic Systems (iSES) (Formerly iNiS), Hyderabad, India, 17–19 December 2018; pp. 195–198.

- Han, X.; Zhang, Z.; Ding, N.; Gu, Y.; Liu, X.; Huo, Y.; Qiu, J.; Yao, Y.; Zhang, A.; Zhang, L.; et al. Pre-trained models: Past, present, and future. AI Open 2021, 2, 225–250.

- Harika, J.; Baleeshwar, P.; Navya, K.; Shanmugasundaram, H. A Review on Artificial Intelligence with Deep Human Reasoning. In Proceedings of the 2022 International Conference on Applied Artificial Intelligence and Computing (ICAAIC), Salem, India, 9–11 May 2022; pp. 81–84.

- Upreti, S.; Kumar, M. Perspectives of Global Positioning System GPS Applications. In Proceedings of the Seminar cum Workshop, Singapore, 15 March 2008.

- Dong, H. The Advancement of the Combination Method of Machine Learning and Deep Learning. In Proceedings of the 2022 International Conference on Data Analytics, Computing and Artificial Intelligence (ICDACAI), Zakopane, Poland, 15–16 August 2022; pp. 350–353.

- Thuraisingham, B. Cyber Security and Artificial Intelligence for Cloud-based Internet of Transportation Systems. In Proceedings of the 2020 7th IEEE International Conference on Cyber Security and Cloud Computing (CSCloud)/2020 6th IEEE International Conference on Edge Computing and Scalable Cloud (EdgeCom), New York, NY, USA, 1–3 August 2020; pp. 8–10.

- Hodgkinson, T.; Andresen, M.A.; Ready, J.; Hewitt, A.N. Let’s go throwing stones and stealing cars: Offender adaptability and the security hypothesis. Secur. J. 2022, 35, 98–117.

- Maepa, M.R.; Moeti, M.N. IoT-Based Smart Library Seat Occupancy and Reservation System using RFID and FSR Technologies for South African Universities of Technology. In Proceedings of the International Conference on Artificial Intelligence and Its Applications, Hong Kong, China, 2–9 February 2021; pp. 1–8.

- Ramesh, R.; Veena, J.; Sandeep, S.; Prem, T. Anti-theft vehicle tracking system using GPS & GSM. Int. J. Adv. Res. Sci. Technol. 2024, 14, 267–274.

- Wang, C.; Wang, Y.; Chen, Y.; Liu, H.; Liu, J. User Authentication on Mobile Devices: Approaches, Threats and Trends. Comput. Netw. 2020, 170, 107118.

- Khan, J.A.; Lim, D.W.; Kim, Y.S. A Deep Learning-Based IDS for Automotive Theft Detection for In-Vehicle CAN Bus. IEEE Access 2023, 11, 112814–112829.

- Das, D. Design of a Blockchain-Enabled Secure Vehicle-to-Vehicle Communication System. In Proceedings of the 2021 4th International Conference on Signal Processing and Information Security (ICSPIS), Changsha, China, 24–25 November 2021; pp. 2021–2024.

- Abdurrahman, M.H.; Darwito, H.A.; Saleh, A. Face Recognition System for Prevention of Car Theft with Haar Cascade and Local Binary Pattern Histogram using Raspberry Pi. Emit. Int. J. Eng. Technol. 2020, 8, 407–425.

- Mesmar, A.M.; Younis, M.B.; Wruetz, T.; Biesenbach, R. Remote Control Package for Kuka Robots using MATLAB. In Proceedings of the 2020 17th International Multi-Conference on Systems, Signals & Devices (SSD), Monastir, Tunisia, 20–23 July 2020; pp. 842–847.

- Mohanasundaram, S.; Krishnan, V.; Madhubala, V. Vehicle Theft Tracking, Detecting and Locking System. In Proceedings of the 2019 5th International Conference on Advanced Computing & Communication Systems (ICACCS), Coimbatore, India, 15–16 March 2019; Volume 4, pp. 1075–1078.

- Sreedevi, A.P.; Nair, B.S.S. Image Processing-Based Real-Time Vehicle Theft Detection and Prevention System. In Proceedings of the 2011 International Conference on Automation, Computational and Technology Management (ICACTM 2011), Coimbatore, India, 24–26 April 2011; pp. 1–6.

- Sadagopan, V.K.; Rajendran, U.; Francis, A.J. Anti Theft Control System Design Using Embedded System. In Proceedings of the 2011 IEEE International Conference of Vehicular Electronics and Safety (ICVES 2011), Beijing, China, 4–6 September 2011; pp. 1–5.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).