1. Introduction

Intrusion detection systems (IDSs) are fundamentally designed to identify unauthorized access, misuse, and attacks on networked systems, whether initiated by external attackers or insider threats [1,2,3]. Conventional IDS approaches often rely on the premise that malicious activities exhibit patterns distinct from normal user behavior and that such anomalies can be reliably detected. Recent advancements in artificial intelligence (AI) have driven the development of autonomous intrusion detection solutions [4,5]. To automate threat detection, researchers have employed diverse AI techniques, such as deep learning models [6,7], tree-based classifiers [8,9], regression-based methods [10,11], and ensemble learning algorithms [12,13].

Most AI-driven intrusion detection techniques, apart from inherently ensemble methods such as random forest, operate as standalone models whose outputs are not combined [14,15]. These models exhibit distinct limitations, including elevated false alarm rates (e.g., some enterprises grapple with over 10,000 daily alerts from AI-powered security tools [16]) or significant missed detections (a critical concern in high-stakes network environments [17]).

Earlier research on AI-based IDS primarily prioritized individual algorithm accuracy rather than leveraging synergistic combinations of multiple techniques. This gap has underscored the necessity of adopting ensemble learning to improve detection robustness [18,19]. Recent efforts have increasingly explored ensemble-based IDS solutions, as seen in studies such as [20,21,22,23,24,25,26,27,28,29,30,31]. Some frameworks target anomaly detection by distinguishing malicious from benign traffic [21,22,24,26,27,29,32], while others classify specific attack types (e.g., DoS, port scans) alongside normal traffic [20,25,28,30,31,33].

Common ensemble strategies include boosting, stacking, and bagging, applied to base models such as decision trees, K-nearest neighbors (KNN), and neural networks. Performance is typically assessed using metrics such as precision, recall, and F1-score, with evaluations conducted on benchmark datasets (e.g., NSL-KDD) or real-world networks (e.g., Palo Alto [29] or real-time systems such as Kitsune [22]).

Notable contributions include dataset generation and benchmarking via ensemble methods [20] and AI model selection through ensemble optimization [24]. However, existing studies often focus narrowly on specific ensemble techniques applied to a small subset of models, leaving broader comparisons across diverse datasets and methodologies unexplored; this limitation may restrict their wider adoption.

This study sought to bridge the identified research gap by systematically evaluating a range of ensemble learning techniques for network intrusion detection systems (NIDS). We implemented multiple standalone AI models alongside both basic and advanced ensemble learning frameworks to assess their effectiveness in the NIDS context. Building upon previous studies such as [20,21,22,23,24,25,26,27,28,29,30,31,32,33], which have explored a variety of ensemble strategies, we organized our proposed framework along the same categories to facilitate a structured analysis.

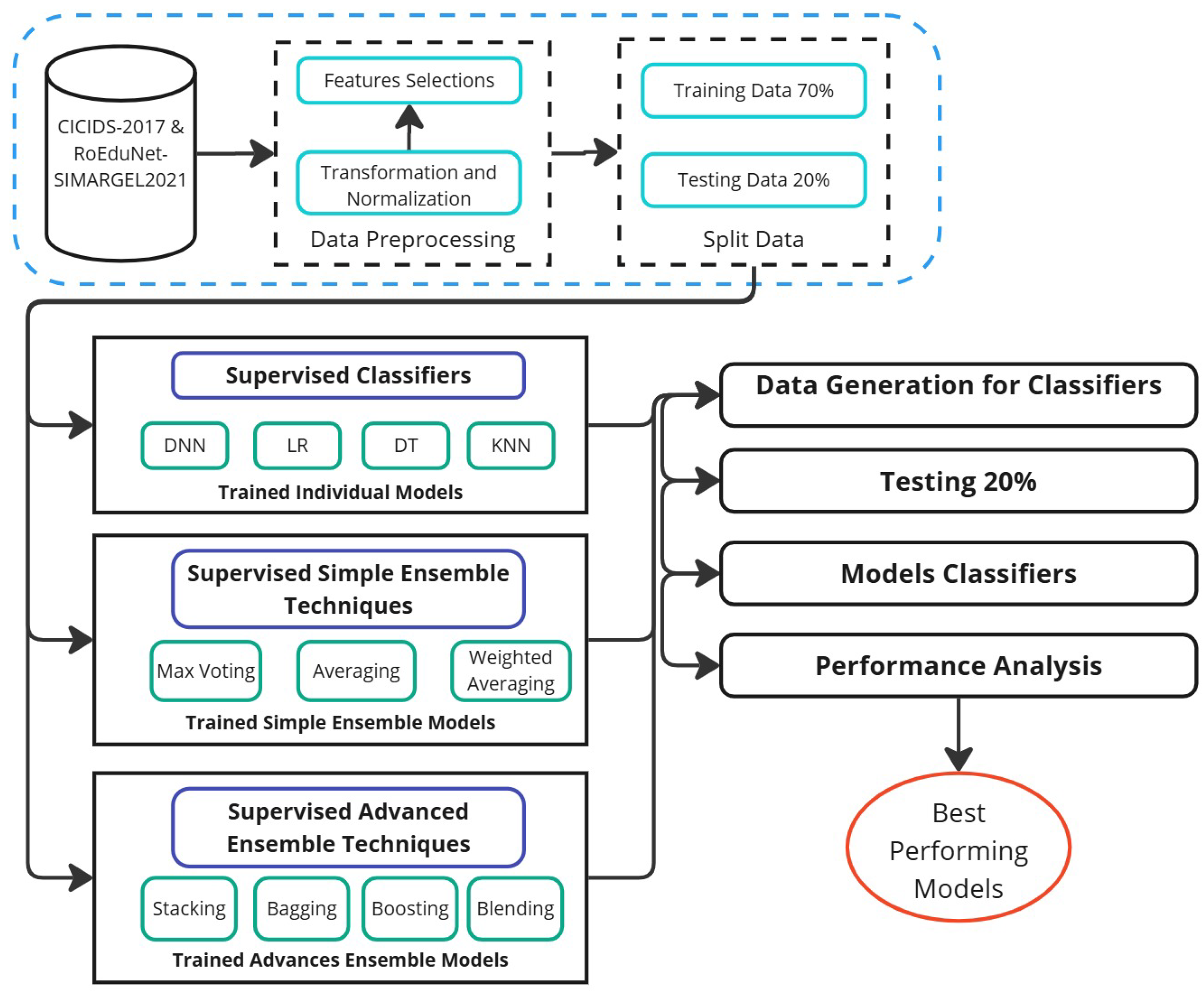

Dataset Preparation: This initial step consisted of loading the two intrusion detection datasets used in this study, CICIDS-2017 [34] and RoEduNet-SIMARGL2021 [35], and preparing them for subsequent analysis.

Feature Reduction: Prior to model development, we employed feature selection techniques to enhance detection accuracy and decrease computational load. Specifically, we used information gain (IG) and K-best selection (based on the ANOVA F-score) to extract the most informative attributes. From these, we generated multiple feature subsets, namely All_features, IG Top-5, IG Top-10, K-best Top-5, and K-best Top-10, which were then applied consistently across all model training pipelines.

Training Individual Models: With the selected features, we developed baseline models including decision trees [8,9], logistic regression [10,11], neural networks [6,7], and K-nearest neighbors. Each model’s performance was measured using standard classification metrics: accuracy, precision, recall, and F1-score.

Basic Ensemble Strategies: We then incorporated simple ensemble techniques, including majority voting, weighted averaging, and mean prediction aggregation. The same evaluation metrics were used to compare their effectiveness with that of the individual models.

Sophisticated Ensemble Techniques: This phase integrated more advanced ensemble learning methods, such as bagging, boosting, stacking, and blending. Random forest [12,13], which relies on aggregating multiple decision trees, is categorized here under bagging-based methods. Again, model evaluation was conducted using accuracy, precision, recall, and F1-score.

Model Comparison and Insights: In the final step, we performed a comprehensive comparison of all individual and ensemble models to determine the most efficient configurations for intrusion detection. We also examined how different feature subsets influenced model performance, helping identify the most effective feature selection approaches for IDS optimization.

Our study introduces a diverse range of ensemble configurations, including techniques such as bagging, stacking, and boosting, applied across various foundational learners such as decision trees, logistic regression, neural networks, random forests, and others. These methodological variations distinguish our contribution from earlier works, as elaborated in Section 2.

To assess the robustness and adaptability of our ensemble framework, we utilized two widely recognized intrusion detection datasets, each offering distinct characteristics. The first is the RoEduNet-SIMARGL2021 dataset [35], compiled under the European Union’s SIMARGL project. This dataset contains live traffic features and simulates real-world network activity, making it particularly appropriate for intrusion detection applications. Notably, few studies have employed ensemble learning extensively on this dataset, highlighting a gap our work aimed to address. The second dataset is CICIDS-2017 [34], developed by the Canadian Institute for Cybersecurity. This benchmark dataset encompasses a range of intrusion types and remains a staple in IDS research.

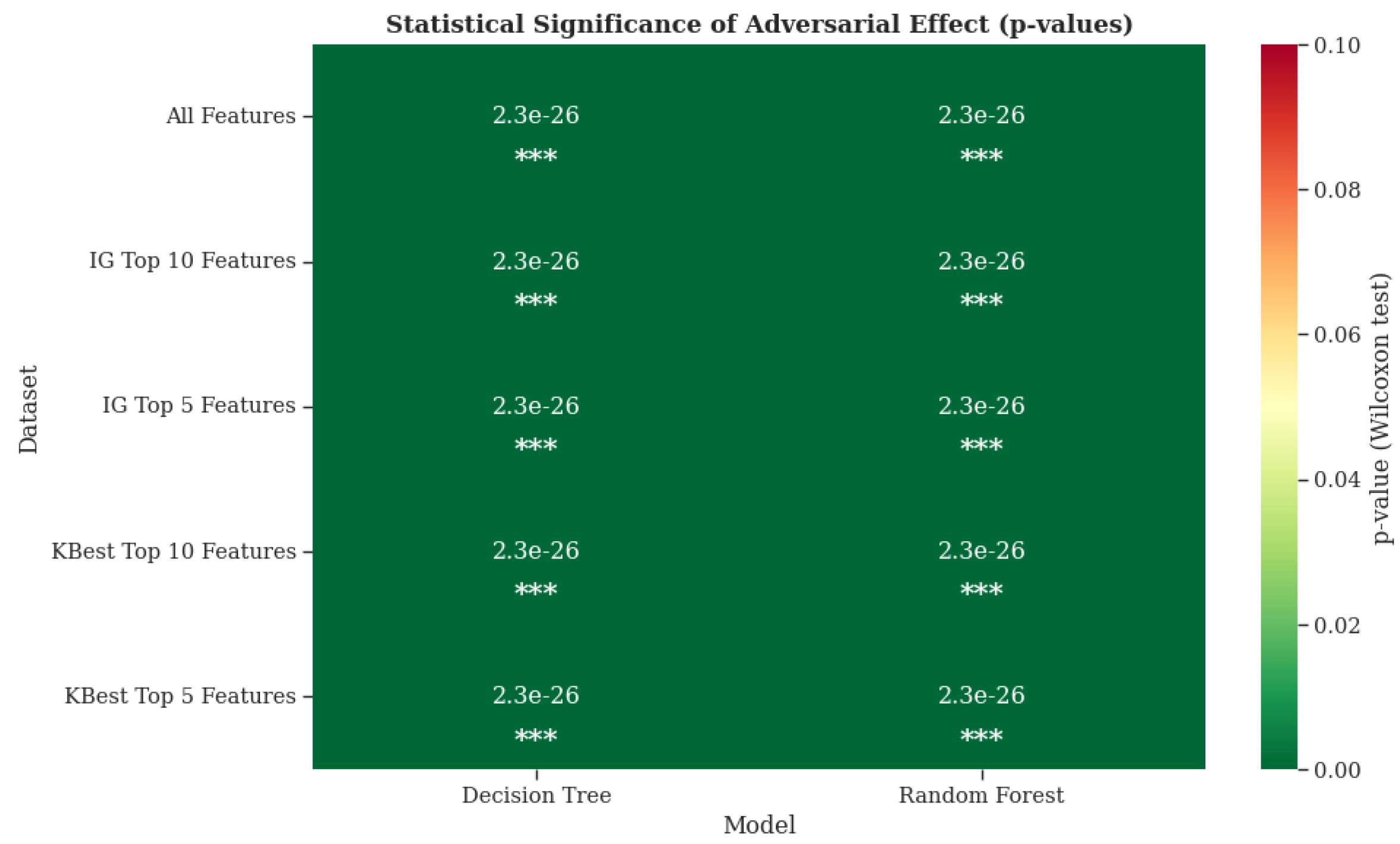

We systematically examined different ensemble strategies across both datasets, incorporating a wide selection of machine learning algorithms: logistic regression (LR), decision tree (DT), K-nearest neighbors (KNN), multi-layer perceptron (MLP), adaptive boosting (ADA), extreme gradient boosting (XGB), CatBoost (CAT), gradient boosting (GB), averaging (Avg), max voting, weighted averaging, and random forest (RF). For each method, we computed a comprehensive set of evaluation metrics on both datasets to measure detection performance. Our analysis covered not only raw metric values but also a comparative ranking of the methods by F1-score, enabling clearer identification of high-performing models. We further grouped the algorithms by their effectiveness in detecting network intrusions under different scenarios, offering an organized view of their relative strengths.

We also performed pairwise paired t-tests on the F1-scores of all models across multiple feature selection settings. These tests were conducted for both datasets, RoEduNet-SIMARGL2021 and CICIDS-2017, to evaluate whether the observed performance differences between models were statistically significant.
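The pairwise significance testing described above can be sketched as follows. This is a minimal illustration rather than the study’s code: it assumes SciPy’s `scipy.stats.ttest_rel`, a hypothetical helper `pairwise_paired_ttests`, a 0.05 significance threshold, and F1-scores stored per model in a fixed order of feature-selection settings. The scores below are random placeholders, not results from this study.

```python
from itertools import combinations

import numpy as np
from scipy.stats import ttest_rel


def pairwise_paired_ttests(f1_by_model, alpha=0.05):
    """Run a paired t-test for every pair of models.

    f1_by_model maps a model name to its F1-scores, one per feature-selection
    setting, listed in the same setting order for every model.
    Returns (model_a, model_b, t_statistic, p_value, significant) tuples.
    """
    rows = []
    for a, b in combinations(sorted(f1_by_model), 2):
        result = ttest_rel(f1_by_model[a], f1_by_model[b])
        p = float(result.pvalue)
        rows.append((a, b, float(result.statistic), p, p < alpha))
    return rows


settings = ["All_features", "IG Top-5", "IG Top-10", "K-best Top-5", "K-best Top-10"]
rng = np.random.default_rng(0)
# Random placeholder scores for illustration only; NOT results from this study.
f1_by_model = {name: rng.uniform(0.90, 1.0, size=len(settings)) for name in ["DT", "RF", "XGB"]}

for row in pairwise_paired_ttests(f1_by_model):
    print(row)
```

Pairing by feature-selection setting is what makes a paired test (rather than an independent-samples test) appropriate here: each pair of F1-scores being compared shares the same feature subset.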

This evaluation framework enables researchers and practitioners to make data-driven choices when selecting ensemble methods for IDS. By offering a detailed comparative analysis, our study contributes to closing the methodological gap in ensemble-based IDS research. Our assessment covered vital performance indicators (accuracy, precision, recall, and F1-score) along with runtime analysis to evaluate operational efficiency, providing a holistic view of each method’s viability in real-world deployment. Our contributions not only benchmark current ensemble approaches but also lay a foundation for future developments in secure and intelligent network defense systems.

Together, these metrics offer a comprehensive view of model performance, especially for the imbalanced datasets common in intrusion detection, where accuracy alone can be misleading. Accuracy provides an overall correctness measure, while precision and recall capture, respectively, how many flagged events are genuine attacks (i.e., avoiding excessive false alarms) and how many actual attacks are detected. The F1-score, the harmonic mean of precision and recall, balances the two, making it well suited to evaluating detection reliability. Runtime analysis complements these metrics by assessing the operational efficiency and scalability of each model, which is critical for real-time deployment scenarios.
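For reference, the standard definitions of these metrics are given below, with TP, TN, FP, and FN denoting true positives, true negatives, false positives, and false negatives, and an attack treated as the positive class (in multi-class settings, per-class values are averaged).

```latex
\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Precision} &= \frac{TP}{TP + FP}, \\[4pt]
\text{Recall} &= \frac{TP}{TP + FN}, &
F_1 &= \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
\end{aligned}
```

As a worked example with hypothetical counts, TP = 90, FP = 10, and FN = 30 give Precision = 0.90, Recall = 0.75, and F1 = 2(0.90)(0.75)/(0.90 + 0.75) = 1.35/1.65 ≈ 0.818. The same formulas show why accuracy alone can mislead on imbalanced data: if 95% of flows are benign, a model that labels every flow as benign reaches 0.95 accuracy while its attack recall is 0.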

In-depth Comparison of Learning Approaches: We performed an extensive comparative study involving a variety of standalone machine learning models and both basic and advanced ensemble strategies applied to intrusion detection tasks.

Multi-Metric Evaluation: The proposed framework was benchmarked using critical performance indicators relevant to cybersecurity, including classification metrics (accuracy, precision, recall, and F1-score) alongside execution time, to assess the efficiency of different learning methods when applied to IDS.

Cross-Dataset Analysis: Our experiments utilized two widely recognized and contrasting IDS datasets—RoEduNet-SIMARGL2021 and CICIDS-2017—to ensure a thorough and diversified performance evaluation in multiple intrusion contexts.

Model Effectiveness Ranking: We present a performance-based ranking of individual and ensemble models, organized in descending order of F1-score, highlighting the comparative strengths and weaknesses of each method.

Advancing Ensemble Learning in IDS Research: By validating the success of various ensemble approaches, our work broadens the scope of ensemble learning techniques in intrusion detection systems and sets the stage for future explorations in the domain.

3. Background and Problem Statement

This section lays the foundation for understanding the landscape of network intrusion detection, the limitations posed by individual AI models, the motivation for using ensemble methods, and the associated evaluation challenges within this domain.

3.1. Categories of Network Intrusions

Network intrusions can be classified using the MITRE ATT&CK framework [37], which provides a comprehensive taxonomy of adversarial tactics and techniques. In our evaluation, we focused on key attack types from this framework:

Normal Traffic: This category represents standard, legitimate network operations without any malicious activity.

Malware/Malware Repository Intelligence [MITRE ATT&CK ID: DS0004]: This category involves analysis of software designed with malicious intent. Identifying characteristics such as code signatures, debugging metadata, and code reuse patterns helps trace malware origin or link it to known threat actors. Shared features may reveal malware sourced from common platforms or providers [38].

PortScan (PS)/Network Service Discovery [MITRE ATT&CK ID: T1046]: Port scanning is a reconnaissance activity used to identify open ports and services. It acts as a precursor to full-scale attacks by revealing vulnerable points in the target system [39].

Denial of Service (DoS)/Network Denial of Service [MITRE ATT&CK ID: T1498]: This attack aims to render services inaccessible by overwhelming the target with traffic or connection requests, ultimately exhausting server resources and causing downtime [40].

Brute Force [MITRE ATT&CK ID: T1110]: This method involves repeated attempts to guess authentication credentials, exploiting weak or common passwords to gain unauthorized access [40].

Web Attack/Initial Access [MITRE ATT&CK ID: TA0001, T1659, T1189]: This technique targets vulnerabilities in web applications to gain unauthorized entry. These attacks exploit misconfigurations, software flaws, or exposed services to infiltrate systems [37,41].

Infiltration/Initial Access [MITRE ATT&CK ID: TA0001]: This category refers to attempts to gain unauthorized entry into systems, typically via phishing or by exploiting exposed services, potentially leading to persistent access.

Botnet/Compromise Infrastructure [MITRE ATT&CK ID: T1584.005, T1059, T1036, T1070]: Botnets consist of compromised devices controlled remotely via scripts, often used for scalable and automated attacks across multiple vectors.

Probe Attack/Surveillance [MITRE ATT&CK ID: T1595]: These intrusions gather intelligence on network topologies and exposed services. Tactics include ping sweeps, DNS zone transfers, and other scanning methods [42,43,44].

3.2. Intrusion Detection Systems

The sophistication of modern cyber threats requires resilient monitoring systems. IDSs serve as the primary line of defense against malicious actors attempting unauthorized access [45,46]. Traditional IDS solutions detect anomalies by observing deviations from normal user behavior [47]. The incorporation of AI models into IDSs over the last decade has substantially improved detection capabilities [48], yet significant gaps remain in achieving trustworthy, explainable, and generalizable solutions.

3.3. Limitations of Individual AI Models

Although machine learning models have demonstrated strong performance in IDS, their individual limitations hinder their broader applicability. These models, such as decision trees (DTs), K-nearest neighbors (KNN), support vector machines (SVMs), and deep neural networks (DNNs), struggle with dataset complexity and often fail to generalize across different types of intrusions. They may exhibit elevated false positive [6] or false negative rates [17], making them unreliable for mission-critical tasks such as real-time intrusion prevention. In addition, base models vary in their computational needs and transparency in decision-making. KNN requires significant memory and can be misled by noise and outliers. Neural networks demand large datasets and may suffer from poor interpretability. Logistic regression offers simplicity but is limited in modeling complex relationships. Decision trees are easy to train and interpret but may overfit the data. These disparities emphasize the difficulty of relying on a single AI model for IDS.

3.4. Need for Ensemble Learning in IDS

To overcome these challenges, ensemble learning methods, such as bagging, boosting, and stacking, combine the strengths of multiple models to enhance overall accuracy and robustness [18,19]. Ensembles help mitigate individual weaknesses by integrating diverse learners, thereby reducing bias, variance, and overfitting risks.

These ensemble strategies are especially beneficial in intrusion detection, where one-size-fits-all models rarely succeed. Through model diversity and collaborative voting or aggregation, ensemble methods provide more resilient and adaptable detection mechanisms, leading to improved identification of sophisticated and evolving cyber threats.

3.5. Key Advantages of Ensemble Methods

Ensemble learning is an evolving discipline in machine learning that focuses on combining multiple learning models to improve overall prediction accuracy and model stability. This approach leverages the diversity of various base models to counteract individual weaknesses, resulting in a more robust predictive system. The most widely adopted ensemble strategies include bagging, boosting, and stacking.

Bagging (Bootstrap Aggregating): This technique involves generating multiple versions of a training dataset by sampling with replacement. Each variant is used to train an independent model instance. The predictions from these models are then aggregated—commonly via majority voting for classification or averaging for regression—to produce a final output. Bagging primarily aims to reduce variance and prevent overfitting by promoting model diversity.

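Boosting: Unlike bagging, boosting adopts a sequential training approach in which each subsequent model is trained to focus on the errors made by the previous ones. In AdaBoost, misclassified samples are assigned greater weight when training the next model; gradient boosting variants instead fit each new model to the negative gradient of the loss with respect to the current predictions (the residuals, in the case of squared error). This progressive correction of mistakes yields a more accurate final ensemble, primarily by reducing bias and, in many cases, variance.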

Stacking: This method introduces a hierarchical learning process in which multiple heterogeneous base models are first trained independently. Their predictions are then fed into a higher-level model, known as a meta-learner or meta-model. The meta-learner synthesizes the outputs of the base models to make the final prediction, thereby capturing intricate dependencies among their predictions.
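The bagging and boosting mechanisms described above can be made concrete with a short sketch. It is illustrative only: it uses scikit-learn decision trees on synthetic data from `make_classification` in place of the IDS datasets, and the helper names (`fit_bagging`, `predict_bagging`, `adaboost_reweight`) are hypothetical. The boosting part shows a single discrete AdaBoost reweighting step for labels in {-1, +1}, not a full boosting loop.

```python
import numpy as np
from sklearn.base import clone
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


# --- Bagging: bootstrap resampling + majority vote ---------------------------
def fit_bagging(base, X, y, n_estimators=25, seed=0):
    rng = np.random.default_rng(seed)
    n = len(X)
    models = []
    for _ in range(n_estimators):
        idx = rng.integers(0, n, size=n)  # draw n row indices with replacement
        models.append(clone(base).fit(X[idx], y[idx]))
    return models


def predict_bagging(models, X):
    votes = np.stack([m.predict(X) for m in models])  # shape (n_estimators, n_samples)
    # Majority vote per sample; assumes labels are non-negative integers.
    return np.array([np.bincount(col).argmax() for col in votes.T])


# --- Boosting: one discrete AdaBoost reweighting step -------------------------
def adaboost_reweight(weights, y_true, y_pred):
    """Labels in {-1, +1}; assumes 0 < weighted error < 0.5."""
    miss = y_true != y_pred
    err = weights[miss].sum() / weights.sum()
    alpha = 0.5 * np.log((1 - err) / err)              # vote weight of this learner
    new_w = weights * np.exp(-alpha * y_true * y_pred)  # grows where y_true != y_pred
    return new_w / new_w.sum(), alpha


X, y = make_classification(n_samples=600, n_features=20, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=0)
bag = fit_bagging(DecisionTreeClassifier(random_state=0), X_tr, y_tr)
bag_acc = (predict_bagging(bag, X_te) == y_te).mean()

w, alpha = adaboost_reweight(np.full(4, 0.25), np.array([1, 1, -1, -1]), np.array([1, 1, -1, 1]))
# The single misclassified sample now carries half of the total weight.
```

In practice, scikit-learn’s `BaggingClassifier` and `AdaBoostClassifier` implement these loops; random forest additionally samples a random subset of features at each split.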

Together, these ensemble methodologies offer powerful mechanisms for enhancing the predictive strength of machine learning models. By aggregating insights from diverse learners, they not only improve performance metrics but also increase model robustness across different tasks and datasets.

Application of Ensemble Learning in Our Framework: In this study, we systematically explored several ensemble learning techniques within the scope of network intrusion detection. Our framework focused primarily on ensemble strategies, built upon foundational base models, for detecting anomalous network activities. To thoroughly assess the efficacy and generalizability of these ensemble approaches, we performed a detailed comparative analysis on two heterogeneous datasets, each characterized by unique traffic patterns and attack profiles.

This evaluation allowed us to investigate how different ensemble learning schemes impact detection accuracy, generalization capability, and computational efficiency. The resulting insights contribute to refining intrusion detection systems and guiding future applications of ensemble learning in cybersecurity.

4. Framework

This research introduces a novel ensemble learning approach designed to enhance detection performance across multiple network security applications. The proposed system provides security professionals with a structured methodology for optimizing threat identification and attack classification processes, ultimately strengthening organizational cyber defense capabilities. As illustrated in Figure 1, our comprehensive methodology evaluates multiple ensemble strategies to determine their effectiveness for modern intrusion detection systems.

4.1. Data Preparation

Both the CICIDS-2017 and RoEduNet-SIMARGL2021 datasets were carefully processed to ensure compatibility with intrusion detection algorithms. The CICIDS-2017 dataset required several cleaning operations: elimination of redundant entries, mean-value imputation for missing data in the “Flow Bytes/s” feature, standardization of feature naming conventions, and numerical conversion of categorical labels through encoding.

The RoEduNet-SIMARGL2021 dataset underwent comparable refinement procedures, including removal of duplicate entries, elimination of non-varying attributes, mean-based imputation for incomplete values, and numerical transformation of categorical variables using ordinal encoding. These preparatory steps improved data integrity for the subsequent machine learning stages.
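The cleaning steps listed for both datasets can be sketched with pandas and scikit-learn as below. This is a minimal sketch under stated assumptions: the function names are hypothetical, the CICIDS-2017 label column is assumed to be called `"Label"`, naming standardization is assumed to mean stripping whitespace from column headers, and treating infinite “Flow Bytes/s” values as missing is an added assumption not stated in the text.

```python
import numpy as np
import pandas as pd
from sklearn.preprocessing import LabelEncoder, OrdinalEncoder


def clean_cicids2017(df: pd.DataFrame, label_col: str = "Label") -> pd.DataFrame:
    df = df.copy()
    # Assumption: naming standardization = stripping whitespace from headers.
    df.columns = df.columns.str.strip()
    # Remove redundant (exactly duplicated) rows.
    df = df.drop_duplicates()
    # Assumption: infinite values are treated as missing before imputation.
    flow = df["Flow Bytes/s"].replace([np.inf, -np.inf], np.nan)
    # Mean-value imputation for "Flow Bytes/s".
    df["Flow Bytes/s"] = flow.fillna(flow.mean())
    # Encode the categorical label as integers.
    df[label_col] = LabelEncoder().fit_transform(df[label_col])
    return df


def clean_simargl2021(df: pd.DataFrame) -> pd.DataFrame:
    # Remove duplicate rows.
    df = df.drop_duplicates().copy()
    # Drop non-varying (constant) attributes.
    df = df.loc[:, df.nunique(dropna=False) > 1].copy()
    # Mean-based imputation for incomplete numeric values.
    num_cols = df.select_dtypes(include="number").columns
    df[num_cols] = df[num_cols].fillna(df[num_cols].mean())
    # Ordinal encoding for the remaining categorical columns (including the label).
    cat_cols = df.select_dtypes(exclude="number").columns
    if len(cat_cols) > 0:
        df[cat_cols] = OrdinalEncoder().fit_transform(df[cat_cols].astype(str))
    return df
```

Deduplication runs before imputation so that the column means are not skewed by repeated rows.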

4.2. Feature Optimization

To maximize detection accuracy while minimizing computational overhead, we applied feature selection using two distinct statistical methods: information gain (which measures the reduction in uncertainty about the class label) and K-best selection with the ANOVA F-score (which compares between-class variance to within-class variance). Each technique independently identified the 10 most discriminative features, with both methods offering complementary perspectives on feature relevance. The finalized feature sets were applied uniformly across all experimental models to maintain evaluation consistency. By evaluating models across multiple feature subsets (e.g., IG Top-5, K-best Top-10), we demonstrated that certain configurations maintain high detection performance even with reduced or abstracted feature sets. This suggests resilience to feature drift and adaptability to unseen attack vectors.
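A sketch of how the five feature subsets (All_features, IG Top-5, IG Top-10, K-best Top-5, K-best Top-10) could be built with scikit-learn follows. Two assumptions are worth flagging: information gain is estimated with `mutual_info_classif`, and K-best uses `SelectKBest` with `f_classif` (the ANOVA F-score). The helper name `build_feature_subsets` and the synthetic data are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectKBest, f_classif, mutual_info_classif


def build_feature_subsets(X, y, ks=(5, 10), random_state=0):
    """Return column-index lists for All_features, IG Top-k and K-best Top-k."""
    # Information gain, estimated here as mutual information with the label (assumption).
    ig_scores = mutual_info_classif(X, y, random_state=random_state)
    ig_ranking = np.argsort(ig_scores)[::-1]  # feature indices, best first
    subsets = {"All_features": list(range(X.shape[1]))}
    for k in ks:
        subsets[f"IG Top-{k}"] = ig_ranking[:k].tolist()
        # K-best with the ANOVA F-score (between-class vs. within-class variance).
        kbest = SelectKBest(score_func=f_classif, k=k).fit(X, y)
        subsets[f"K-best Top-{k}"] = kbest.get_support(indices=True).tolist()
    return subsets


X, y = make_classification(n_samples=500, n_features=20, n_informative=5, random_state=0)
subsets = build_feature_subsets(X, y)
X_ig5 = X[:, subsets["IG Top-5"]]  # the same column subset is reused by every model
```

Because the IG ranking is computed once, the Top-5 subset is always contained in the Top-10 subset, which keeps comparisons between the two sizes consistent.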

4.2.1. CICIDS-2017 Feature Analysis

The feature selection process for CICIDS-2017 employed both information gain and ANOVA F-score methodologies. Table 2 displays the highest-ranked features identified by each approach.

4.2.2. RoEduNet-SIMARGL2021 Feature Analysis

The same feature selection methodology, using both the information gain and ANOVA F-score approaches, was employed for the RoEduNet-SIMARGL2021 dataset. Table 3 summarizes the ten most significant features identified by each selection criterion, demonstrating their discriminative capabilities for attack classification.

4.3. Algorithm Selection and Methodology

This section outlines our approach to selecting both core machine learning algorithms and their ensemble combinations for enhanced intrusion detection performance.

4.3.1. Core Single Classification Algorithms

We considered four fundamentally different machine learning approaches to ensure diverse modeling capabilities:

Decision Tree Classifiers: These hierarchical models offer transparent decision pathways through recursive data partitioning, making them valuable for interpretable security analytics.

K-Nearest Neighbors: This distance-based algorithm classifies network events by comparing them to the most similar historical instances, effectively capturing complex attack patterns through local approximations.

Multilayer Perceptrons: Our neural network implementation utilizes multiple hidden layers to learn sophisticated nonlinear relationships in network traffic data.

Logistic Regression: Serving as our baseline linear model, this algorithm establishes fundamental discriminative boundaries between attack and normal traffic patterns.
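The four core classifiers above can be instantiated and scored as in the following sketch. It is not the authors’ configuration: the hyperparameters (e.g., the MLP’s two hidden layers of 64 and 32 units), the synthetic three-class data, and the weighted averaging of precision, recall, and F1 across classes are all assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, precision_recall_fscore_support
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=2000, n_features=20, n_informative=8, n_classes=3, random_state=0)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=y, random_state=0)

# Scale-sensitive learners (LR, MLP, KNN) get a StandardScaler; trees do not need one.
base_models = {
    "DT": DecisionTreeClassifier(random_state=0),
    "LR": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "MLP": make_pipeline(
        StandardScaler(),
        MLPClassifier(hidden_layer_sizes=(64, 32), max_iter=500, random_state=0),
    ),
    "KNN": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
}


def evaluate(y_true, y_pred):
    # Weighted averaging across classes is an assumption, not stated in the text.
    p, r, f1, _ = precision_recall_fscore_support(y_true, y_pred, average="weighted", zero_division=0)
    return {"accuracy": accuracy_score(y_true, y_pred), "precision": p, "recall": r, "f1": f1}


results = {}
for name, model in base_models.items():
    model.fit(X_tr, y_tr)
    results[name] = evaluate(y_te, model.predict(X_te))
    print(name, {k: round(v, 3) for k, v in results[name].items()})
```

Placing the scaler inside each pipeline ensures it is fit on the training split only, so no test-set statistics leak into training.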

4.3.2. Basic Ensemble Strategies

To combine the strengths of individual models, we use three fundamental aggregation approaches:

Prediction Averaging: This technique synthesizes outputs from multiple classifiers through arithmetic mean computation, effectively smoothing out individual model biases.

Plurality (Majority) Voting: Our voting system determines final classifications by selecting the most frequently predicted class among all constituent models.

Performance-Weighted Combinations: More accurate models were assigned greater influence through empirically determined weighting coefficients, as detailed in Section 5.
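The three basic aggregation rules can be sketched with NumPy on top of already-trained scikit-learn models. The weighting rule used here (each model weighted by its validation macro-F1) is a hypothetical stand-in for the empirically determined coefficients mentioned above, and the helper names and synthetic data are illustrative.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.tree import DecisionTreeClassifier


def max_vote(pred_matrix):
    """pred_matrix: (n_models, n_samples) of non-negative integer labels."""
    # Most frequent label per sample; argmax breaks ties toward the smaller label.
    return np.array([np.bincount(col).argmax() for col in pred_matrix.T])


def average_proba(probas, weights=None):
    """Mean (or weighted mean) of per-model class-probability arrays, then argmax."""
    return np.average(np.stack(probas), axis=0, weights=weights).argmax(axis=1)


X, y = make_classification(n_samples=1500, n_features=20, n_informative=6, n_classes=3, random_state=1)
X_tr, X_tmp, y_tr, y_tmp = train_test_split(X, y, test_size=0.4, stratify=y, random_state=1)
X_val, X_te, y_val, y_te = train_test_split(X_tmp, y_tmp, test_size=0.5, stratify=y_tmp, random_state=1)

models = [DecisionTreeClassifier(max_depth=6, random_state=1), LogisticRegression(max_iter=1000), KNeighborsClassifier()]
for m in models:
    m.fit(X_tr, y_tr)

# Hypothetical weighting rule: weight each model by its validation macro-F1.
weights = np.array([f1_score(y_val, m.predict(X_val), average="macro") for m in models])

probas = [m.predict_proba(X_te) for m in models]
preds = {
    "Max Voting": max_vote(np.stack([m.predict(X_te) for m in models])),
    "Avg": average_proba(probas),
    "Weighted Avg": average_proba(probas, weights=weights),
}
scores = {name: f1_score(y_te, p, average="macro") for name, p in preds.items()}
print(scores)
```

scikit-learn’s `VotingClassifier` offers equivalent behavior through `voting="hard"` (max voting), `voting="soft"` (probability averaging), and its `weights` parameter.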

4.3.3. Advanced Ensemble Methods

To enhance model performance, we employ several advanced ensemble techniques:

Bagging: This method generates multiple bootstrapped datasets, training base learners independently on each. Aggregating their predictions reduces variance and boosts robustness. Random forest, an example of bagging, combines many decision trees to prevent overfitting and maintain consistent performance.

Blending: Blending uses the outputs of various base learners, computed on a held-out validation split, as input features to a meta-learner, improving generalization by leveraging model diversity.

Boosting: Boosting sequentially trains models that focus on correcting previous errors, placing higher weights on misclassified samples to iteratively refine prediction accuracy.

Stacking: This hierarchical technique trains multiple base learners and feeds their predictions into a meta-model, which learns the optimal way to combine them, capturing complex relationships among predictions.
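Stacking and blending differ mainly in how the meta-learner’s training inputs are produced, which the sketch below contrasts. Assumptions: synthetic data, three illustrative base learners, and a shallow decision tree as the meta-model (in line with Section 4.4, where meta-models are typically decision trees). Stacking uses scikit-learn’s `StackingClassifier` with 5-fold out-of-fold predictions, while blending uses a single held-out split; the helper names are hypothetical.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import StackingClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

X, y = make_classification(n_samples=2000, n_features=20, n_informative=6, n_classes=3, random_state=2)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.25, stratify=y, random_state=2)


def make_base_learners():
    return [
        ("dt", DecisionTreeClassifier(max_depth=8, random_state=2)),
        ("lr", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
        ("knn", make_pipeline(StandardScaler(), KNeighborsClassifier())),
    ]


# Stacking: meta-features are out-of-fold predictions from 5-fold cross-validation.
stack = StackingClassifier(
    estimators=make_base_learners(),
    final_estimator=DecisionTreeClassifier(max_depth=4, random_state=2),
    cv=5,
).fit(X_train, y_train)
stack_f1 = f1_score(y_test, stack.predict(X_test), average="macro")

# Blending: base learners see only part of the training data; the meta-learner
# is fit on their predictions for a single held-out "blend" split.
X_base, X_blend, y_base, y_blend = train_test_split(
    X_train, y_train, test_size=0.25, stratify=y_train, random_state=2
)
blend_base = [est.fit(X_base, y_base) for _, est in make_base_learners()]


def meta_features(models, X):
    # Concatenated class probabilities: (n_samples, n_models * n_classes).
    return np.hstack([m.predict_proba(X) for m in models])


blender = DecisionTreeClassifier(max_depth=4, random_state=2).fit(meta_features(blend_base, X_blend), y_blend)
blend_f1 = f1_score(y_test, blender.predict(meta_features(blend_base, X_test)), average="macro")
print(f"stacking macro-F1={stack_f1:.3f}, blending macro-F1={blend_f1:.3f}")
```

Stacking lets every training sample contribute to the meta-learner at the cost of extra fits per fold; blending is cheaper but trains the base learners on less data.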

4.4. Model Development and Training

Our implementation uses Python 3.13 and proceeds from individual base models to simple ensemble methods and, finally, to advanced ensemble techniques. Prior to training, feature selection via information gain and K-best identifies the most informative attributes, reducing complexity and improving performance; we also evaluate models trained on all features. To use computational resources efficiently, TensorFlow’s tf.distribute.MirroredStrategy() enables synchronous multi-GPU training by replicating the model on each GPU and aggregating gradients across replicas, increasing throughput while keeping the replicas consistent.

Individual models: each base learner (decision trees, random forests, MLP neural networks, and logistic regression) is implemented and trained separately using libraries including scikit-learn, TensorFlow, and Keras.

Simple ensemble methods: individual model predictions are combined using averaging, max voting, and weighted averaging ensembles, with training leveraging GPU acceleration.

Advanced ensemble methods: bagging, blending, boosting (AdaBoost, CatBoost, Gradient Boosting, XGBoost), and stacking are implemented using scikit-learn and TensorFlow with multi-GPU support. Bagging uses random forest as a base; blending and stacking train meta-models (typically decision trees) on base learner predictions. Boosting methods follow standard iterative procedures.

4.5. Evaluation Metrics and Model Selection

We assessed models based on accuracy, precision, recall, F1-score, and runtime to balance effectiveness with computational efficiency. Models were chosen for their proven utility in IDS literature and diversity in learning principles, enabling robust comparative analysis. The chosen models encompassed a diverse range of learning paradigms (linear (LR), tree-based (DT, RF, GB variants), instance-based (KNN), and neural networks (MLP)), ensuring broad coverage of algorithmic behavior under different intrusion scenarios. Baseline aggregation is provided by ensemble strategies including Avg, Max Voting, and Weighted Avg, while boosting techniques including ADA, XGB, and CAT aim to improve performance through iterative refinement of errors.
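Runtime can be recorded alongside the accuracy metrics with a simple wall-clock wrapper. This is a minimal sketch assuming `time.perf_counter` timing of separate fit and predict phases, synthetic data, and a hypothetical five-column subset standing in for a selected feature set such as IG Top-5; the helper name `timed_fit_predict` is illustrative.

```python
import time

from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def timed_fit_predict(model, X_train, y_train, X_test):
    """Return predictions plus wall-clock fit and predict times in seconds."""
    start = time.perf_counter()
    model.fit(X_train, y_train)
    fit_seconds = time.perf_counter() - start
    start = time.perf_counter()
    y_pred = model.predict(X_test)
    predict_seconds = time.perf_counter() - start
    return y_pred, fit_seconds, predict_seconds


X, y = make_classification(n_samples=20_000, n_features=40, n_informative=10, random_state=0)
X_tr, X_te, y_tr = X[:15_000], X[15_000:], y[:15_000]
top5 = [0, 1, 2, 3, 4]  # hypothetical stand-in for a selected subset such as IG Top-5

timings = {}
for label, cols in {"All_features": slice(None), "Top-5": top5}.items():
    _, fit_s, pred_s = timed_fit_predict(
        DecisionTreeClassifier(random_state=0), X_tr[:, cols], y_tr, X_te[:, cols]
    )
    timings[label] = (fit_s, pred_s)
    print(f"{label}: fit {fit_s:.3f} s, predict {pred_s:.4f} s")
```

Timing fit and predict separately matters here because retraining cost and inference latency constrain deployment in different ways.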

4.6. Key Network Intrusion Features

Table 4 and Table 5 list and describe important features from the RoEduNet-SIMARGL2021 and CICIDS-2017 datasets, essential for understanding model inputs and their relevance.

Table 6 presents a comparative overview of the two network intrusion datasets used in this study. CICIDS-2017 includes 7 attack categories and 78 features across approximately 2.78 million samples, while RoEduNet-SIMARGL2021 offers a significantly larger scale with over 31 million samples, 3 attack labels, and 29 features. This contrast highlights the diversity in dataset complexity and volume, supporting robust evaluation across varied threat landscapes. While all features listed in Table 7 were used in the initial experiments, highlighting these key characteristics aids interpretability and understanding. We emphasize that we also applied feature selection methods to choose the best features and to test the performance (both accuracy-related and efficiency-related) of both ensemble and single methods under this selection.

7. Conclusions

The fundamental purpose of an intrusion detection tool is to provide a strong safeguard against security threats, and leveraging AI can greatly improve its automation and effectiveness. With the rising frequency of network attacks, significant research has been dedicated to creating AI-based IDS. However, the variety of AI models used for this task, each with unique advantages and limitations, complicates the selection of an optimal model for any specific dataset.

To overcome this issue, hybridizing multiple AI models can lead to substantial gains in overall performance for network intrusion detection. This work addresses this need by assessing a wide array of ensemble techniques for IDS. We conducted an in-depth comparative analysis of standalone models against both simple and advanced ensemble learning architectures. Our methodology included selecting key features, training the base and ensemble models, and then generating performance metrics to offer crucial findings on their effectiveness.

Our findings are based on fourteen different combinations of individual and ensemble models, which utilized techniques such as boosting, stacking, and blending across various base learners. The analysis classified these AI models according to key performance indicators (accuracy, precision, recall, F1-score) and processing time, revealing the strengths of different learning approaches on these datasets. Furthermore, our research offers detailed guidance on selecting the best individual or ensemble ML models for network intrusion detection, tailored to the characteristics of different datasets. Our evaluation was performed on two widely used network intrusion benchmarks, each possessing unique properties.

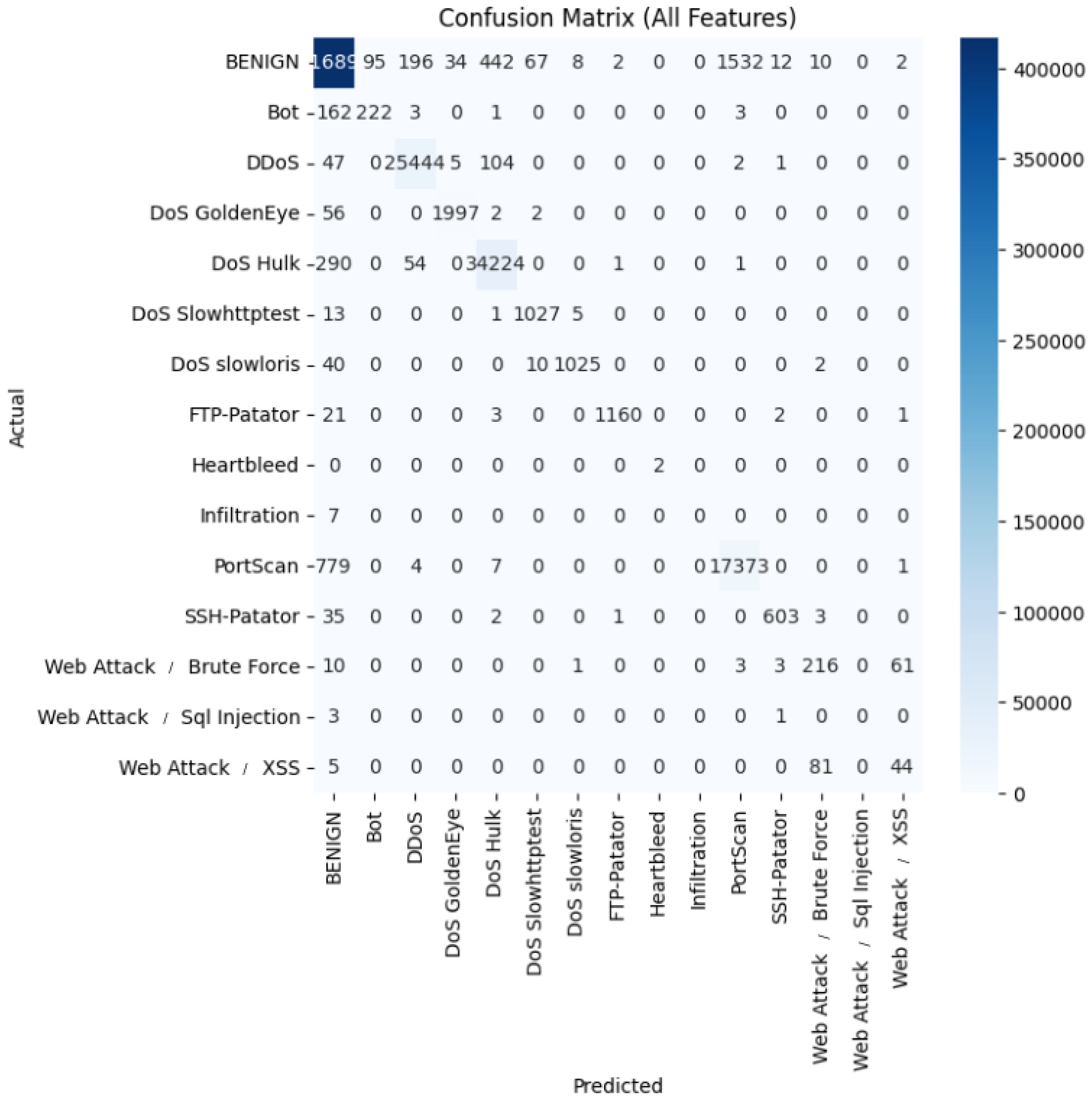

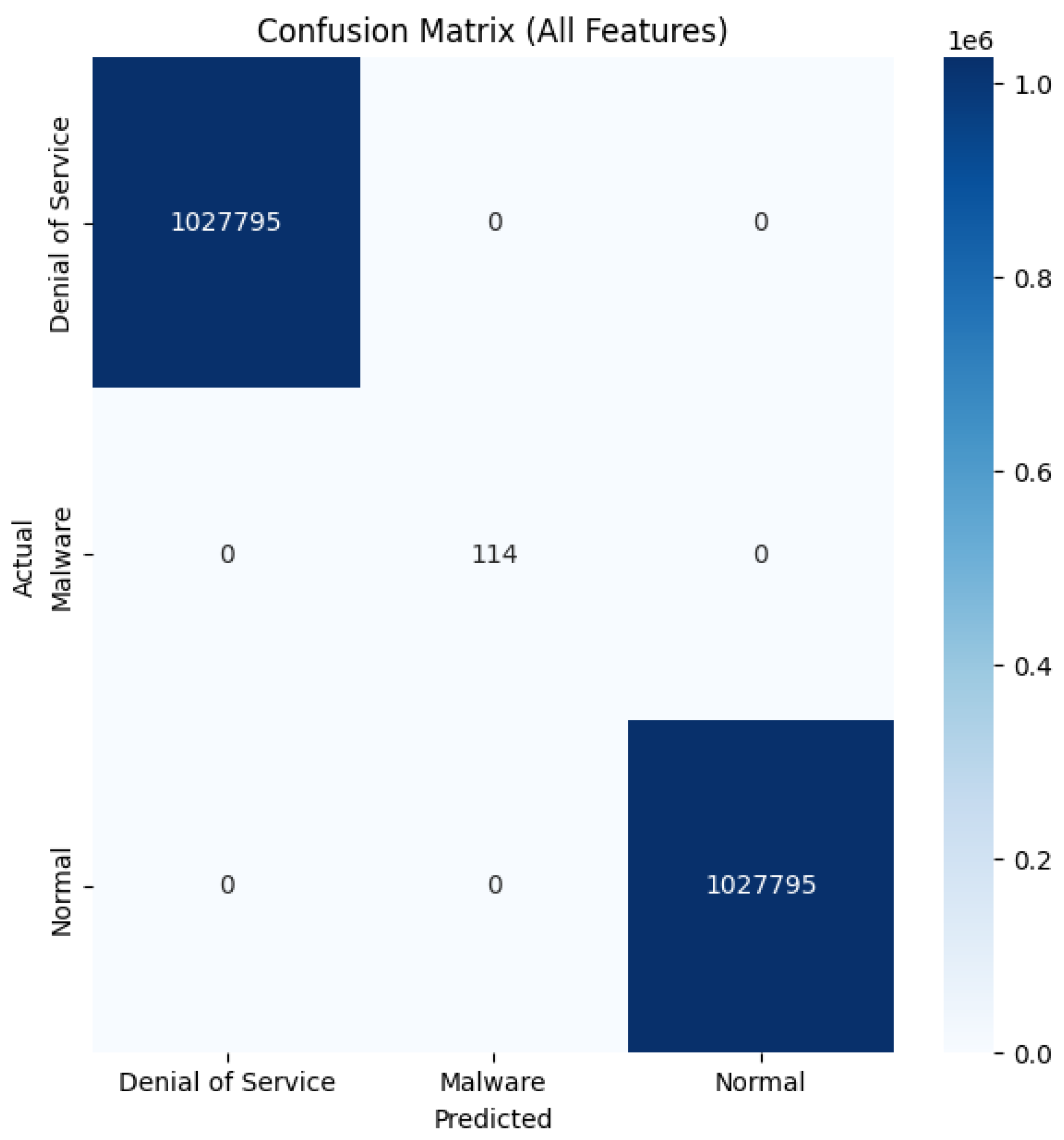

In particular, our framework was tested on the RoEduNet-SIMARGL2021 and CICIDS-2017 datasets and revealed several important findings:

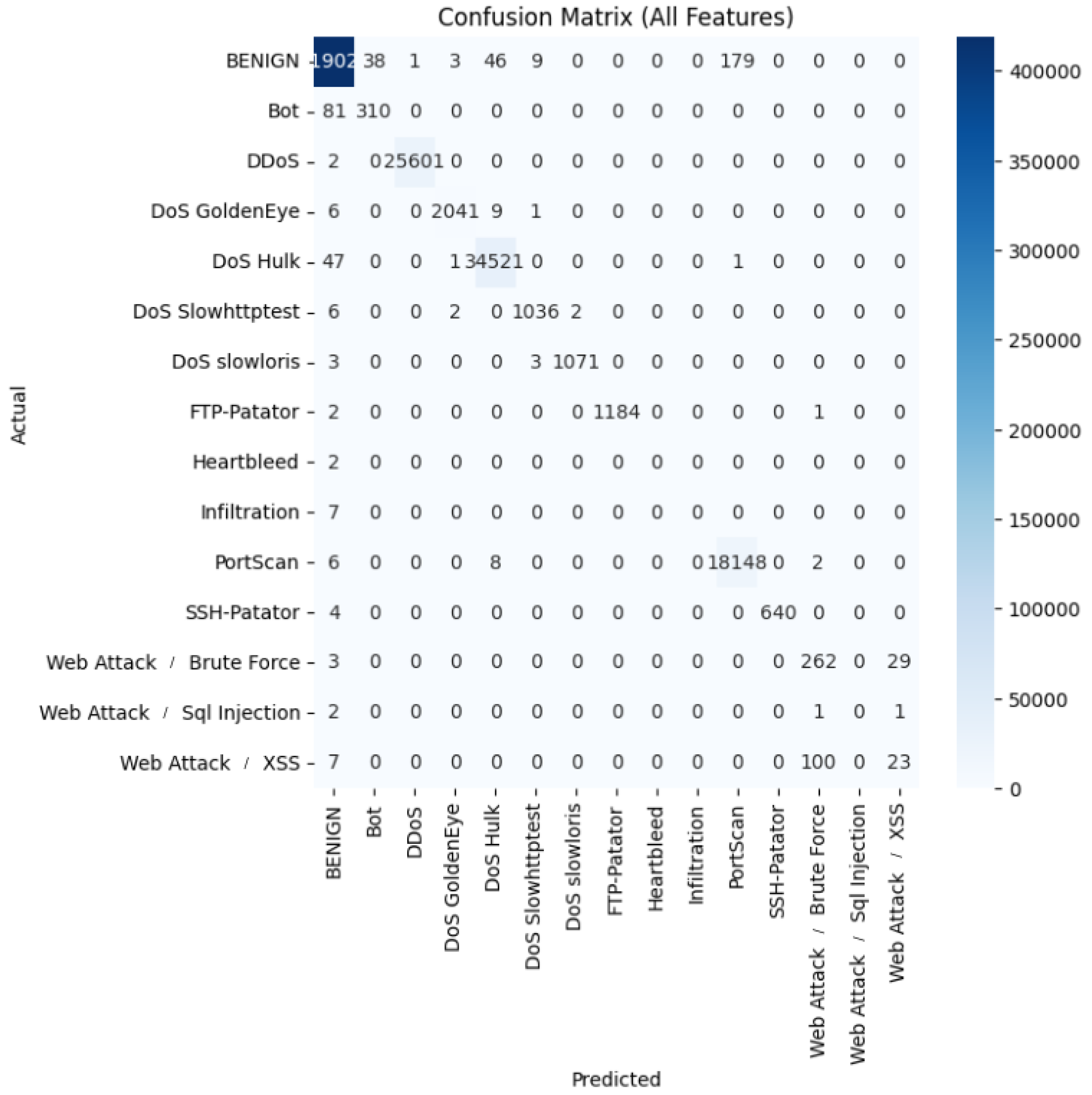

Ensemble Methods Improve Performance: Combining multiple models generally matched or outperformed single models. For example, random forest achieved a perfect score (F1 = 1.0) on RoEduNet-SIMARGL2021, as did the single decision tree, while blending and bagging techniques performed exceptionally well on CICIDS-2017 (F1 > 0.996).

Feature Selection Enhances Computational Efficiency: Using information gain (IG) reduced training time by 70%–94% without sacrificing accuracy. However, ANOVA-based K-best selection sometimes removed critical features, negatively impacting performance.

Speed vs. Accuracy Tradeoffs: Some ensemble methods, such as XGBoost, offered both speed and accuracy, making them ideal for real-time applications. In contrast, others such as stacking and blending were slower but provided higher robustness and accuracy.

Dataset-Specific Performance Variations: Model performance varied by dataset. For example, logistic regression struggled with complex attacks in CICIDS-2017 but performed well on the simpler task with fewer labels in RoEduNet-SIMARGL2021.

We further supported the research community by releasing our source code, establishing a versatile ensemble learning framework tailored for network intrusion detection. This framework can be extended with additional models and datasets. Our analysis also identified top-performing models per dataset and revealed shared and unique behavior patterns among models using confusion matrices that helped explain performance outcomes. This work marks a meaningful step forward in applying ensemble learning to intrusion detection systems. Our thorough experimentation and comparative analysis validate the strength of these methods, offering practical direction for both academic research and real-world cybersecurity applications.

Concept Drift: In dynamic network environments, the statistical properties of traffic data can change over time due to evolving attack strategies and legitimate usage patterns. This phenomenon, known as concept drift, poses a significant challenge to maintaining model accuracy. We acknowledge that periodic retraining or online learning mechanisms may be necessary to adapt to such changes. Future work will explore drift detection techniques and incremental learning strategies to enhance model resilience.

Retraining Costs of Complex Models: As noted in Table 9, advanced ensemble methods such as stacking require substantial computational resources (e.g., over 23,000 s of training time on RoEduNet-SIMARGL2021). While these models offer high accuracy, their retraining cost may be prohibitive in real-time or resource-constrained environments. We suggest that lightweight models such as XGBoost or CatBoost may be more suitable for frequent updates in production settings.

Scalability in High-Throughput Networks: Enterprise networks often generate millions of flows per hour, demanding intrusion detection systems that can scale efficiently. Our framework demonstrates that certain models (e.g., decision trees, logistic regression) offer fast inference times and can be deployed in high-throughput scenarios, although they deliver lower detection performance. Future work can therefore build on our insights to explore how to balance detection accuracy against inference latency and memory footprint, especially for real-time applications.

Integration of Unsupervised Learning: We emphasize that our work focuses exclusively on supervised learning methods, with the main emphasis on a comparative analysis of different ensemble methods. While supervised approaches offer strong performance when labeled data are available, they may fall short in detecting novel or zero-day attacks that are not represented in the training data. We therefore highlight the importance of integrating unsupervised and semi-supervised techniques in future work. These approaches (such as clustering, anomaly detection, and self-training) can enhance the system’s ability to identify previously unseen threats and adapt to evolving attack patterns.