1. Introduction

The world is highly dependent on Internet-based technologies. Malware attacks try to disrupt the normal functioning of this Internet-based world. Consequently, research focused on developing security solutions to protect it is ongoing.

Ransomware is one type of malware attack. Its goal is to encrypt files, making them useless. The victims are required to pay a ransom to regain access to the decrypted files. If the victims do not pay, they can lose all the data that was encrypted. Moreover, the attacker threatens to sell, auction, or publish the data on third-party sites if the ransom is not paid [1]. According to the 2023 Sophos report [2], 66% of 3000 organizations surveyed across 14 countries had been attacked by ransomware. The report revealed that the rate of ransomware attacks remained the same as in the previous year's survey. The education sector was the most likely to have been attacked by ransomware in 2022, with 79% reporting an attack. The experience of our own university is consistent with this finding. According to the 2024 report from the cybersecurity company Sophos [3], 59% of 5000 organizations surveyed across 14 countries had been attacked by ransomware. While the percentage of attacked organizations decreased, the number of surveyed organizations grew from 3000 to 5000. Therefore, this decrease cannot be counted as a true decrease.

According to a report from the cybersecurity company Sophos [4], financial institutions are particularly frequent targets of ransomware attacks. A survey [4] of 592 IT and cybersecurity leaders in the financial services sector found that 65% of them had been affected by ransomware, up from 64% in 2023. Ransomware attacks in the financial sector have increased over the past two years, with only 48% of respondents having been affected by ransomware in 2020. Financial institutions are targeted because they cannot afford to lose their data or experience long-term disruptions, since they are the backbone of the modern economy [5]. They provide a large range of services, varying from savings to investment opportunities. Financial institutions handle large volumes of financial transactions and sensitive user data; if stolen, these data could enable identity theft and financial fraud. Their role is so fundamental that any disturbance to their operations can have a ripple effect across various sectors, affecting the daily lives of ordinary citizens. Therefore, financial institutions are subject to strict regulations [6]. A ransomware attack can lead to non-compliance with these regulations. It is thus crucial for financial institutions to protect themselves from ransomware attacks.

Initially, ransomware detection relied on file signatures to identify malicious files [7]. A signature is a sequence of bytes that uniquely represents a file. The signature is based on strings, code segments, hashes, and other patterns in the binary file. This method is widely adopted by antivirus software for its high precision and ease of implementation. However, it faces challenges, since altering a few lines of code within the file changes the signature of the file. Recycled malware can therefore easily escape signature-based detection. Creating signatures for modified or new malware is a time-consuming process, since it requires an analysis that can take several months. In response, researchers turned their attention to behavior-based detection to identify ransomware [8].
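The fragility of signatures can be illustrated with a minimal sketch. It assumes, purely for illustration, that a signature is a SHA-256 digest of the whole file; real antivirus signatures may combine strings, code segments, and other patterns, and the byte content below is hypothetical.

```python
import hashlib


def signature(data: bytes) -> str:
    """Return a SHA-256 digest, used here as a stand-in for a file signature."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical file content; not a real executable.
original = b"MZ" + bytes(range(64))

# A "recycled" variant that differs from the original in a single bit.
mutated = bytearray(original)
mutated[10] ^= 0x01
variant = bytes(mutated)

# Signature database containing only the known sample.
known_signatures = {signature(original)}

print(signature(original) in known_signatures)  # known sample is matched
print(signature(variant) in known_signatures)   # one-bit variant is missed
```

Under this assumption, any modification of the file, however small, yields a different digest, so the variant is no longer matched.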

Behavior-based detection seeks to uncover the intended malicious actions of a file by analyzing it either at rest (static detection) or during execution (dynamic detection). Machine learning (ML) enables recognition of malicious files by building ML models and comparing file behavior to that of known benign and malicious files. To build ML models, features must be extracted. Static feature extraction approaches identify the static features of ransomware by analyzing its binary code [9]. Static detection does not require execution of the ransomware code. Therefore, static detection is considered a secure approach. The features commonly used for static detection include opcode n-grams, byte sequences, API calls, portable executable (PE) header information, and so on.

Dynamic feature extraction approaches require execution of the ransomware code [10]. The features used for dynamic detection include system calls, API calls, changes in the entropy of input/output data buffers, and so on. Extracting dynamic features can be challenging because many types of ransomware try to evade detection during their operation. Furthermore, it is unclear how to determine the appropriate duration for extracting dynamic features, as behavioral changes may not manifest for some time. As a consequence, dynamic detection significantly increases the time required for detection in comparison with static detection.

Signature-based ransomware detection faces another problem: it may not be effective for detecting zero-day attacks, which are previously unseen threats that differ from the known signatures [11]. Zero-day ransomware attacks exploit new vulnerabilities that have become known but for which patches are not yet available. Such attacks present a serious threat to behavior-based approaches as well, since the training data becomes available only after the attack takes place. Therefore, behavior-based solutions are needed that can detect zero-day attacks using previously acquired knowledge.

Among behavior-based approaches, dynamic feature extraction requires execution of the ransomware code in an isolated environment. Such an approach faces two challenges: the demand for significant time and computational resources, and the risk of ransomware leakage. The advantage of the static method is that it can detect ransomware before the program is launched, which allows timely detection of potential threats, prevents system infection, and significantly saves time and resources [12]. Therefore, the objective of the current research is to develop a static feature extraction approach that can detect zero-day attacks. The proposed approach analyzes the portable executable (PE) header of ransomware samples and extracts static features, since feature extraction from the PE header is a relatively straightforward and fast process compared to the extraction of other static features [13]. ML is then applied to learn from the extracted static features.
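To illustrate why header-based extraction is considered straightforward, the following minimal sketch reads the seven fields of the PE File Header using only fixed offsets. It is not the extraction code used in this work: the function name `parse_file_header` and the synthetic sample bytes are assumptions made for the example.

```python
import struct

# Field names of the 20-byte File Header, in their on-disk order.
FILE_HEADER_FIELDS = (
    "Machine", "NumberOfSections", "TimeDateStamp", "PointerToSymbolTable",
    "NumberOfSymbols", "SizeOfOptionalHeader", "Characteristics",
)


def parse_file_header(data: bytes) -> dict:
    """Hypothetical helper: return the File Header fields of a PE image."""
    if data[:2] != b"MZ":
        raise ValueError("missing DOS signature")
    # e_lfanew (offset 0x3C of the DOS header) points to the PE signature.
    (e_lfanew,) = struct.unpack_from("<I", data, 0x3C)
    if data[e_lfanew:e_lfanew + 4] != b"PE\x00\x00":
        raise ValueError("missing PE signature")
    values = struct.unpack_from("<HHIIIHH", data, e_lfanew + 4)
    return dict(zip(FILE_HEADER_FIELDS, values))


# Synthetic, minimal header built for the example (not a runnable program).
dos_header = bytearray(64)
dos_header[:2] = b"MZ"
struct.pack_into("<I", dos_header, 0x3C, 64)
file_header = struct.pack("<HHIIIHH", 0x014C, 3, 0, 0, 0, 224, 0x0102)
sample = bytes(dos_header) + b"PE\x00\x00" + file_header

features = parse_file_header(sample)
print(features)
```

Because every field sits at a known offset, no disassembly or execution is needed, which is the property the paragraph above relies on.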

The contributions of this paper are as follows:

Providing a systematic literature review of the methods using PE static features;

Forming a comprehensive static feature set that combines many different static features;

Creating and implementing a method based on PE static features that is capable of detecting zero-day ransomware attacks;

Applying the Chi-square test, to the best of our knowledge for the first time, to measure the randomness of the content of a PE section. The Chi-square test showed better performance than the commonly used Shannon entropy;

Applying the Tanimoto coefficient, to the best of our knowledge for the first time in the field of ransomware, to measure the similarity of dynamic link libraries and function names. The formula of the Tanimoto coefficient does not involve calculating the square root of the sum of the squared values of a vector, so it is more computationally efficient than the calculation of cosine similarity;

Using a stacking classifier, since an ensemble classifier performs better than a single classifier;

Providing the experimental results on the publicly available dataset and comparing them with available results of other similar methods.

The remainder of this paper is organized as follows. Section 2 reviews the existing methods that use static PE features to detect ransomware and malware in general. Section 3 presents the proposed method. Section 4 considers its implementation by delivering the results of the experiment and discusses the obtained results in comparison with related works. Finally, Section 5 draws conclusions.

2. Review of Related Work

Recent research has shown progress in detecting ransomware based on the PE file header. The typical approach involves extracting features from the ransomware PE header and classifying them using either ML or deep learning (DL) methods. Manavi and Hamzeh have published three works [14,15,16] in this field. Manavi and Hamzeh [14] proposed a method that extracted the first 1024 bytes of each PE file header and submitted them to an LSTM network for training. The dataset used in the paper included 1000 benign and 1000 ransomware samples. The authors declared that testing was performed on unseen samples; however, they did not explain how the unseen testing samples were obtained. Moreover, they did not clarify why the testing was performed on unseen samples. The standard metrics of accuracy, recall, precision, and F-measure were measured. The total values of the metrics for the whole dataset were provided. The values are similar for all the metrics, at around 93%.

Manavi and Hamzeh [15] developed a method based on constructing a graph with 256 nodes for each ransomware sample. The graph was stored as an adjacency matrix of size 256 × 256. The features were extracted using the eigenvectors and eigenvalues of the matrix: the Power Iteration method was used to find the dominant eigenvector of the obtained matrix. The resulting vector was submitted to a Random Forest ensemble learning classifier for training. Three datasets were used for the experiments. The second dataset was the same as in [14]. A 10-fold cross validation was used in the research. The results obtained for the second dataset are almost the same as in [14], since the provided total values of the four standard metrics are around 93%. In both cases, the values exceed 93%.
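The Power Iteration step can be sketched as follows. This is a generic textbook version, not the implementation of [15]; how the 256 × 256 adjacency matrix is built from a sample is not described here, so the example uses a small hypothetical 2 × 2 matrix instead.

```python
import math


def power_iteration(matrix, iterations=200, tol=1e-12):
    """Approximate the dominant eigenvector of a square matrix (list of rows)."""
    n = len(matrix)
    vec = [1.0 / math.sqrt(n)] * n  # normalized starting vector
    for _ in range(iterations):
        # Multiply the matrix by the current vector.
        nxt = [sum(row[j] * vec[j] for j in range(n)) for row in matrix]
        norm = math.sqrt(sum(v * v for v in nxt))
        if norm == 0.0:
            return vec  # the vector was mapped to zero; stop early
        nxt = [v / norm for v in nxt]
        if max(abs(a - b) for a, b in zip(nxt, vec)) < tol:
            return nxt
        vec = nxt
    return vec


# Hypothetical symmetric matrix with eigenvalues (5 ± sqrt(5)) / 2.
A = [[2.0, 1.0], [1.0, 3.0]]
v = power_iteration(A)

# Rayleigh quotient v^T A v estimates the dominant eigenvalue.
Av = [sum(A[i][j] * v[j] for j in range(2)) for i in range(2)]
dominant_eigenvalue = sum(v[i] * Av[i] for i in range(2))
print(v, dominant_eigenvalue)
```

For a 256 × 256 matrix the same function would return a 256-element vector, which could then serve as the feature vector passed to a classifier.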

Manavi and Hamzeh [16] presented a method that extracted a vector of 1024 bytes from the PE header of each executable file. Each byte of this vector has a value from 0 to 255. The vector is converted to a 32 × 32 grayscale image using a zigzag pattern to increase byte continuity. The image is submitted to a CNN for training, since CNNs are widely used for image recognition. Three datasets were used for the experiments. The first dataset was the same as in [14,15]. A 10-fold cross validation was used in the research. The results obtained for the first dataset are almost the same as in [14,15]. In all cases, the values exceed 93%, but they are very close. In addition, Manavi and Hamzeh compared the proposed network when tested on PE header bytes and on whole file bytes. The accuracy obtained was higher for the PE header bytes than for the whole file bytes. This result indicates that analysis of the PE header should be preferred over analysis of the whole file.
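A possible form of the byte-to-image conversion is sketched below. The exact zigzag pattern of [16] is not specified here, so the sketch assumes a row-wise pattern in which every second row is reversed; the function name `bytes_to_zigzag_image` is hypothetical.

```python
def bytes_to_zigzag_image(header: bytes, side: int = 32):
    """Arrange side*side bytes into rows, reversing every odd row.

    Assumption: "zigzag" means a row-wise back-and-forth traversal, so that
    consecutive bytes stay adjacent in the image at the end of each row.
    """
    if len(header) != side * side:
        raise ValueError("header must contain exactly side * side bytes")
    image = []
    for r in range(side):
        row = list(header[r * side:(r + 1) * side])
        if r % 2 == 1:
            row.reverse()  # odd rows run right to left
        image.append(row)
    return image


# Synthetic 1024-byte vector; each pixel value is in the range 0..255.
header = bytes(i % 256 for i in range(1024))
image = bytes_to_zigzag_image(header)

# Bytes 31 and 32 end up vertically adjacent in the last column.
print(image[0][31], image[1][31])
```

With a plain sequential layout, byte 32 would instead be placed at the start of the second row, far from byte 31, which is the discontinuity the zigzag layout is meant to avoid.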

A method very similar to that of Manavi and Hamzeh [16] was presented by Moreira et al. [17]. The proposed method extracted a PE header of 1024 bytes and converted it to a color image using four distinct patterns: sequential, zigzag, spiral, and diagonal zigzag. For classification, the predefined Xception CNN model was used. Two datasets were used for the experiments. The first dataset was the same as in Manavi and Hamzeh [16], with 1000 benign and 1000 ransomware samples. The second dataset was built by the authors and contained 1134 benign and 1023 ransomware samples, grouped into 25 ransomware families. We would like to express our gratitude to Moreira et al. [17] for making this dataset publicly available. As Cen et al. [18] noted in their survey, there is currently no standard dataset for ransomware classification, unlike in other fields such as image processing and malware classification. The dataset built by Moreira et al. [17] can become a standard dataset for ransomware classification. Moreira et al. [17] used 10-fold cross validation in their research. The accuracy obtained for the first dataset was 93.73%. It is slightly higher than the accuracy obtained by Manavi and Hamzeh [16], but it still did not reach 94%.

Considering the four research works reviewed so far [14,15,16,17], we can make the following observation. All the authors used the same full 1024-byte vector of the PE file header, but different techniques for transforming this vector and different classification techniques. However, almost the same classification result was obtained in all cases. This observation leads us to conclude that the transformation and classification techniques are of minor importance. The most important element is the initial feature vector.

Unlike Manavi and Hamzeh [14,15,16], Moreira et al. [17] considered zero-day ransomware attack detection. To detect zero-day attacks, Sgandurra et al. [19] evaluated the detection of each family in the considered dataset, separating all samples of one family for testing and using all remaining ransomware and goodware to train the model. Moreira et al. [17] noticed that the methodology applied in [19] to detect zero-day attacks is not correct, since the value of the recall metric always repeats the value of the accuracy metric. Such a result, where the accuracy and recall values are equal, can be observed in all of Manavi and Hamzeh’s research studies [14,15,16]. Moreira et al. [17] improved this testing methodology by adding to the test set randomly selected goodware samples, equal in number to the samples of the evaluated family. Zero-day attack detection was performed for the second dataset only. The mean accuracy obtained over all ransomware families in the zero-day attack detection mode was 93.84%, which is much lower than the accuracy of 98.20% obtained without this mode. So, there is room for improvement in detecting zero-day ransomware attacks.
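The reason why accuracy and recall coincide in the original methodology can be shown with a short derivation. It uses the standard definitions, with TP, TN, FP, and FN denoting the numbers of true positives, true negatives, false positives, and false negatives. When the test set contains only ransomware samples, there are no benign samples to classify, so TN = FP = 0:

```latex
\mathrm{Accuracy}
  = \frac{TP + TN}{TP + TN + FP + FN}
  \;\overset{TN = FP = 0}{=}\;
  \frac{TP}{TP + FN}
  = \mathrm{Recall}
```

Adding benign samples to the test set makes TN and FP non-zero in general, so the two metrics no longer coincide by construction.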

Unlike the works considered above [14,15,16,17], other research works [20,21,22,23,24] that investigated ransomware detection and used the PE header for feature extraction did not use the whole 1024-byte vector. Vehabovic et al. [20] investigated a solution for ransomware detection and constructed a “minimalistic” ransomware dataset with 100–120 training samples per class. However, such a dataset can hardly be considered minimalistic, since Moreira et al. [17] constructed a dataset with 13–50 ransomware samples per class and did not call it “minimalistic”. The extracted feature sets, in contrast, were truly minimalistic. A total of four feature sets were created by forming vectors with 5, 7, 10, and 15 parameters. Each subsequent vector extended its predecessor by adding new parameters. The exact parameters were chosen after careful investigation of the parameters of the following sections: File Header, Optional Header, and Section Header. Vehabovic et al. [20] declared early ransomware detection; however, the main attention was concentrated on ransomware detection. Surprisingly, the Random Forest and extreme gradient boosting classifiers obtained an accuracy of more than 90% for a feature set with only five features. Finally, zero-day ransomware detection was performed according to the model of Sgandurra et al. [19], where one ransomware class is excluded from the training and used for the testing. The obtained results were not encouraging, since an accuracy higher than 90% was obtained for only four of the nine classes. Again, the best performing classifiers were Random Forest and extreme gradient boosting.

Deng et al. [21] presented a method using 14 static features from the PE header to detect early ransomware attacks. Features from two sections, File Header and Optional Header, were used. The developed method was the first to apply deep reinforcement learning to PE header static features to detect ransomware. A dataset containing 27,118 goodware samples and 35,367 ransomware samples was built. This is a large dataset; however, it is imbalanced. An imbalanced dataset presents an issue for learning-based classifiers, since they are biased towards the majority class [25]. The mean accuracy of ransomware detection on this dataset was 97.9%. For early ransomware detection, training was carried out on the first dataset and testing was performed on unseen samples contained in a second dataset. The second dataset included 688 ransomware samples and was built in 2016. The mean accuracy obtained for the unseen ransomware samples was 99.3%. This result contradicts the results obtained in the works considered above [17,20], where the accuracy for unseen samples was lower than for trained samples. Moreover, the obtained mean accuracy is very high. This very optimistic result can be explained by the following reasons. Firstly, the dataset of unseen samples was constructed in 2016, whereas training was performed on the most recent ransomware samples. So, the unseen samples were more primitive than the latest samples. Secondly, the training dataset was very large and imbalanced. Thirdly, Deng et al. [21] declared that they will explore zero-day ransomware detection in the future, which amounts to an admission by the authors that such a mode of testing is not suitable for detecting zero-day ransomware.

Cen et al. [22] proposed a method based on zero-shot learning to detect early zero-day ransomware attacks. The developed method was the first to apply zero-shot learning to PE header static features to detect zero-day ransomware. The feature set included 87 features. The dataset used to assess the method was provided by Sgandurra et al. [19]. It included 942 benign software samples and 582 ransomware samples, making it a highly imbalanced dataset. To detect zero-day ransomware, the training dataset included seven different ransomware classes, while the testing dataset included four varying ransomware classes. However, the algorithm for varying the ransomware classes in testing is neither presented nor discussed. The mean accuracy of zero-day ransomware detection was 96.02%. The shortcomings of the presented approach are as follows. Firstly, the dataset used is outdated. Secondly, the dataset is highly imbalanced, yet cross-validation was not applied. Meanwhile, Zahoora et al. [26], who proposed a method using zero-shot learning to detect zero-day ransomware, used 5-fold cross validation. Thirdly, it is not clear whether the tested ransomware classes were varied or not.

Moreira et al. [23] introduced a method that combined various structural features extracted from the PE header to detect zero-day ransomware attacks. The authors determined that N-gram features are unsuitable for detecting zero-day ransomware attacks. The publicly available dataset used for the experiments was obtained from [17]. The dataset was then augmented by adding samples from 15 recent ransomware families and 133 benign samples. This new part of the dataset was made public as well and was used for testing zero-day ransomware attacks. The mean accuracy for the training dataset was 98.41%. The mean accuracy for the testing dataset was 97.53%. The obtained accuracy values are sufficiently high; however, there is still room for improvement, since the feature set used is quite large as well.

Yang et al. [24] provided a method to detect ransomware attacks. The feature set included all the features of N-grams, dynamic link libraries (DLLs), subsystem, and entropy. The model combining all the features had 492,253 features, which means that the feature set is very large. A dataset for the experiments was custom built. It included 1200 ransomware samples and 1200 benign samples. Cross validation was used in testing ransomware samples. The mean accuracy of ransomware detection was 99.77%. The obtained accuracy is very high; however, several limitations of the presented approach can be noticed. Firstly, the very large number of features increases computation time, and a GPU is needed to ease the computational burden. Secondly, the approach is not oriented to zero-day attack detection. Several shortcomings of the presentation can be spotted as well. Firstly, the term “CNN” was mentioned only three times in the paper: in the title, in the section on related work, and in the section on system structure. The structure and parameters of the CNN were neither considered nor presented, “because of the complexity of network layers and structure, we used symbolic structure representation”. Secondly, the data availability statement, “No datasets were generated or analyzed during the current study”, is inaccurate, since the authors assembled 1200 samples of ransomware from 80 different families to construct a dataset for the experiments. Such withholding of information means that the experiments cannot be replicated.

To summarize the review of related works, they can be grouped according to several indicators. One of the most distinguishing indicators is the formation of the feature set for classification. Four research works [14,15,16,17] used the same full 1024-byte vector of the PE file header, but different techniques for transforming this vector and different classification techniques. However, in all cases, almost the same classification result was obtained. Such an observation leads us to conclude that using a 1024-byte PE file header vector as a feature set is not a promising research direction. A more promising direction is to use various combinations of PE file header static features. Another challenging indicator is the dataset used to evaluate the proposed method. We and other researchers [5,10,18,27] have noticed that there is currently no standard dataset for ransomware classification. Therefore, many researchers [14,17,20,21,23,24] have developed their own datasets. However, the results of different works cannot be compared correctly when the datasets are different. Only Moreira et al. [17,23] made their dataset publicly available. This dataset has the potential to become a benchmark dataset for ransomware detection. However, no researchers have taken notice of it yet. We support the use of the dataset provided by Moreira et al. [17,23]. Finally, zero-day ransomware attack detection [14,17,20,22,23] is an important indicator. The testing methodology for zero-day attacks proposed by Moreira et al. [17], in which randomly selected benign samples, equal in number to the samples of the evaluated family, are added to the test set, is preferred, since this methodology ensures correct values of the accuracy and recall metrics.

3. Proposed Method

3.1. Method Overview

In this section, the design of the method is described. Based on the review of related works and techniques in the previous section, we propose a method to detect zero-day ransomware attacks using static analysis. Static analysis can be vulnerable to deceptive techniques such as packing, obfuscation, and polymorphism [18]. However, a comprehensive static analysis is less susceptible to deceptive techniques because it is difficult for malicious developers to apply these evasion techniques to multiple structural features [13]. Therefore, we constructed a comprehensive static feature set by combining several different static features into a single feature set. Section analysis is particularly relevant when dealing with obfuscated or packed binary files [28]. Examining the randomness of each section of a binary file is one of the methods for detecting such content. High randomness values are often associated with encryption and lossless compression functions; therefore, the randomness of a section is high when it contains obfuscated or packed code [28].
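The two randomness measures mentioned in this paper, Shannon entropy and the Chi-square test, can be sketched for a section's byte content as follows. This is a minimal illustration, not the implementation evaluated here: it assumes the Chi-square statistic is computed against a uniform distribution over the 256 byte values, and the two byte strings are synthetic stand-ins for section contents.

```python
import math
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Entropy in bits per byte: 0 for constant data, 8 for uniform bytes."""
    if not data:
        raise ValueError("empty section")
    n = len(data)
    return -sum((c / n) * math.log2(c / n) for c in Counter(data).values())


def chi_square_statistic(data: bytes) -> float:
    """Chi-square statistic against a uniform byte distribution.

    Values near 0 mean the byte histogram is close to uniform (random-looking);
    large values mean the content is strongly structured.
    """
    if not data:
        raise ValueError("empty section")
    expected = len(data) / 256
    counts = Counter(data)
    return sum((counts.get(v, 0) - expected) ** 2 / expected for v in range(256))


# Synthetic stand-ins for section contents (assumptions for the example).
uniform_like = bytes(range(256)) * 16   # every byte value equally frequent
constant = b"A" * 4096                  # a single repeated byte value

for name, content in (("uniform_like", uniform_like), ("constant", constant)):
    print(name, shannon_entropy(content), chi_square_statistic(content))
```

Note that the two measures move in opposite directions: random-looking content gives high entropy but a low Chi-square statistic.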

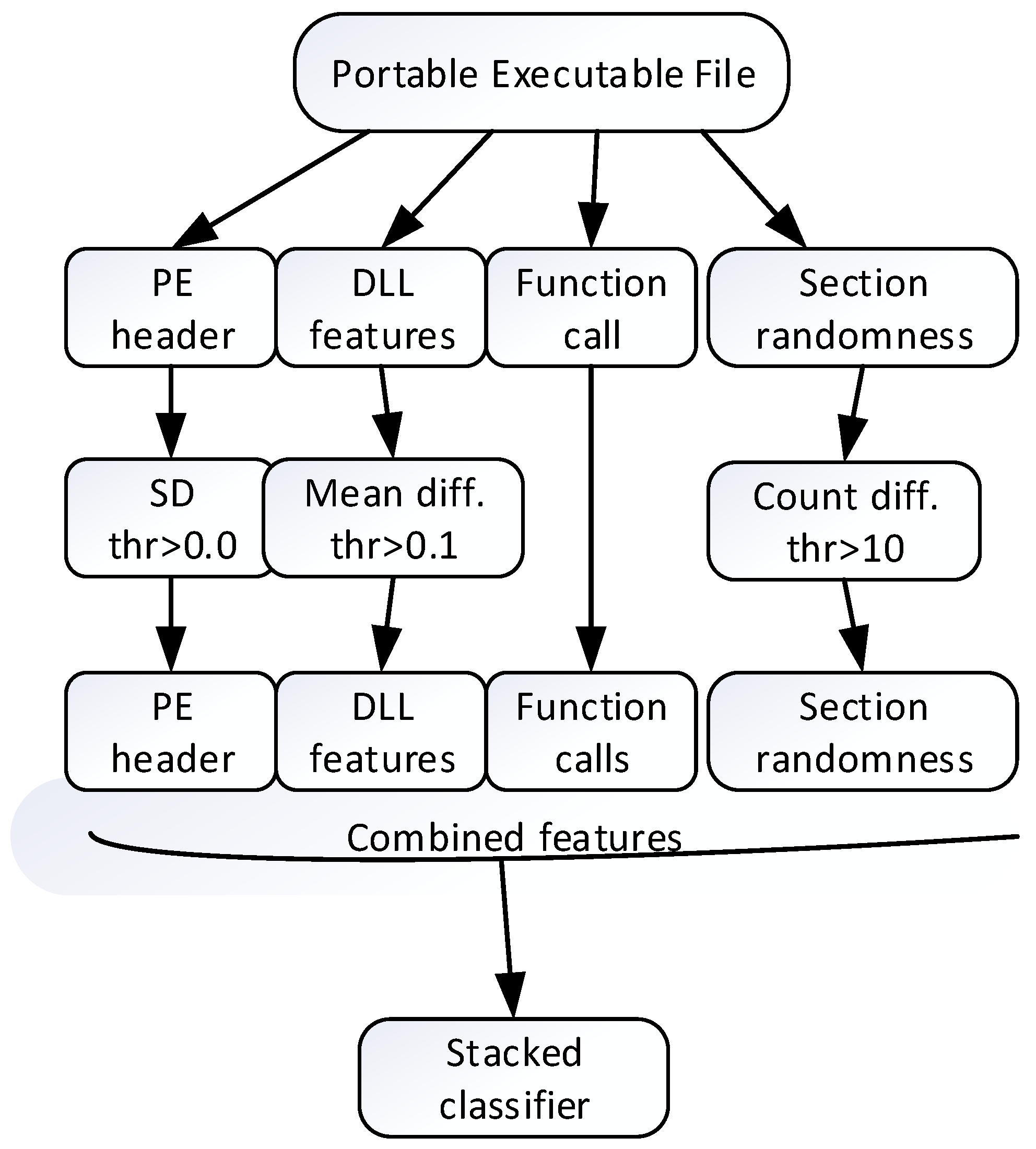

Figure 1 demonstrates the workflow of the proposed static analysis method.

Firstly, valuable structural information is extracted from each PE sample. This information includes the PE header fields, imported DLL features, function calls, and the randomness of section contents. The inclusion of PE header fields, DLL features, and function calls is natural, since these features characterize the software resource. The inclusion of section randomness is intended to detect encrypted and packed malicious files. Therefore, section randomness can play an important role in identifying malicious behavior.

To improve the efficiency of the method, the initial feature set is reduced by thresholding. The use of a threshold enables the elimination of features that carry little information. Several types of threshold were applied, depending on the feature set. A standard deviation (SD) threshold was applied to the PE header. A mean difference threshold was applied to the usage of DLLs in ransomware and benign software. A count difference threshold was applied to the usage of sections in ransomware and benign software. The resulting feature sets are then joined into a single comprehensive static feature set.

To measure the performance of the proposed approach, we employed common machine learning metrics used for binary classification. They are as follows: accuracy, precision, recall, and F-score. These metrics are defined in terms of four values: True Positive (TP), True Negative (TN), False Positive (FP), and False Negative (FN). TP denotes the number of ransomware samples correctly classified as ransomware; TN represents the number of benign samples correctly classified as benign; FP indicates the number of benign samples incorrectly classified as ransomware; and FN represents the number of ransomware samples incorrectly classified as benign. The recall metric was used as the primary metric for model comparison since it is the most important measure for detecting ransomware. To reveal the variability of the performance results, a confidence level of 95% was chosen and a confidence interval was calculated.
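The four metrics and a 95% confidence interval can be computed as in the following sketch. The confusion-matrix counts are made up for the example, and the interval uses the normal approximation for a proportion; the text does not state which interval formula was applied, so this choice is an assumption.

```python
import math


def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict:
    """Accuracy, precision, recall, and F-score from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f_score = (2 * precision * recall / (precision + recall)
               if (precision + recall) else 0.0)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "f_score": f_score,
    }


def proportion_confidence_interval(p: float, n: int, z: float = 1.96):
    """Normal-approximation interval for a proportion p measured on n samples.

    z = 1.96 corresponds to a 95% confidence level (assumed interval type).
    """
    half_width = z * math.sqrt(p * (1.0 - p) / n)
    return max(0.0, p - half_width), min(1.0, p + half_width)


# Hypothetical counts: 50 ransomware and 50 benign test samples.
metrics = classification_metrics(tp=45, tn=48, fp=2, fn=5)
low, high = proportion_confidence_interval(metrics["recall"], n=45 + 5)
print(metrics)
print(low, high)
```

The normal approximation is usually considered reasonable only for sufficiently large samples, which is in line with the minimum test-set size discussed in the next paragraph.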

We applied the zero-day approach to each feature set. The application of this approach relied on two rules: (1) no samples of the tested family were used during model training; (2) benign samples were randomly selected and included in the test set in the same number as the samples of the family being tested. Therefore, when one family was separated for testing, the remaining ransomware families were used for training. When training was complete, the test set of the tested family was extended with the randomly selected benign samples, equal in number to the samples of the family being tested. Some ransomware families had fewer than 15 samples; in these cases, benign samples were added until the test set contained no fewer than 30 samples, to maintain a sample size large enough to calculate the confidence interval [29]. In this case, we obtained some classes that are not completely balanced. To address the issue of class imbalance, we performed ten iterations of stratified K-fold cross validation with K = 10. Following this, the assessment of each family was repeated ten times according to the rules of the zero-day approach, and the mean value of each measured metric was calculated.

To test a specific ransomware family, an equal number of randomly selected benign samples is added to the test dataset. The remaining ransomware families are used for training a classifier. A stacked classifier is used for classification.
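The construction of the training and test sets for one family can be sketched as follows. The function `zero_day_split`, the sample identifiers, and the family names are hypothetical. The sketch also assumes that the benign samples drawn for testing are held out from training, which the description above does not state explicitly.

```python
import random


def zero_day_split(ransomware, benign, family, min_test_size=30, seed=0):
    """Build (train, test) lists of (sample_id, label) pairs for one family.

    ransomware: list of (sample_id, family_name); benign: list of sample ids.
    Label 1 marks ransomware, label 0 marks benign software.
    """
    rng = random.Random(seed)
    test_ransom = [s for s, f in ransomware if f == family]
    train_ransom = [s for s, f in ransomware if f != family]

    # Same number of benign samples as in the family, topped up so that the
    # test set holds at least min_test_size samples for small families.
    n_benign = max(len(test_ransom), min_test_size - len(test_ransom))
    test_benign = rng.sample(benign, n_benign)

    held_out = set(test_benign)  # assumption: test benign are not trained on
    train_benign = [b for b in benign if b not in held_out]

    train = [(s, 1) for s in train_ransom] + [(b, 0) for b in train_benign]
    test = [(s, 1) for s in test_ransom] + [(b, 0) for b in test_benign]
    return train, test


# Hypothetical data: a small family of 10 samples and a larger one of 40.
ransomware = ([(f"r{i}", "FamilyA") for i in range(10)]
              + [(f"q{i}", "FamilyB") for i in range(40)])
benign = [f"b{i}" for i in range(100)]

train, test = zero_day_split(ransomware, benign, family="FamilyA")
print(len(train), len(test))
```

For the 10-sample family, 20 benign samples are drawn so that the test set reaches 30 samples; for a family of 15 or more samples, the test set is balanced.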

Next, we introduce a dataset used for experimentation and we provide the details on each step of the method.

3.2. Dataset

The proposed method was evaluated using the dataset developed by Moreira et al. [23]. This dataset consists of two parts, training and testing. The training part includes 1023 ransomware and 1134 benign samples. The testing part includes 385 ransomware and 133 benign samples. The ransomware of the training part can be divided into 25 different families, and the ransomware of the testing part into 15 different families. The names and the number of samples of the ransomware families are provided in Table 1.

3.3. PE Header

PE is the standard file format for executable files in the Microsoft Windows OS. The PE format covers a wide range of file types, such as executables (.exe), device drivers (.sys), dynamic link libraries (.dll), and several others. A PE file contains many specific characteristics that make it possible to identify the file. Therefore, these characteristics can be used successfully to distinguish between benign and ransomware samples. The PE file contains two main parts: the header and the sections [30]. The header part includes the following components: DOS Header, NT Headers, File Header, Optional Header, and Section Table. The PE header part has a fixed structure, whereas the section part is closely related to the function of the file [31]. We directly use fields from the PE header only. Initially, we selected 85 numerical features from the PE header. During experimentation, we observed that the values of some features are constant across ransomware and benign samples. If the values do not vary, they carry no information for classification. Features with constant values are redundant: they contribute only noise and should be removed. To identify the variability of the values, the standard deviation can be used, since it is a measure of the dispersion of the values of a variable around its mean. A standard deviation of 0 indicates that all the values of the variable are equal. Such a variable does not include information that can be used to differentiate between samples. Therefore, we applied the SD threshold to remove the features with an SD equal to 0. Eight features were removed. The remaining features of the PE header are presented in Table 2.
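The SD threshold can be sketched as below. The function `drop_constant_features` and the three-column example are illustrative assumptions; the feature named "ReservedField" is hypothetical and merely stands for any header field that has the same value in every sample.

```python
import statistics


def drop_constant_features(rows, names):
    """Remove the columns whose standard deviation over all samples is 0."""
    columns = list(zip(*rows))
    keep = [i for i, col in enumerate(columns) if statistics.pstdev(col) > 0]
    kept_names = [names[i] for i in keep]
    reduced = [[row[i] for i in keep] for row in rows]
    return reduced, kept_names


# Hypothetical feature matrix: one row per sample, one column per feature.
names = ["Machine", "NumberOfSections", "ReservedField"]
rows = [
    [332, 3, 0],
    [332, 5, 0],
    [34404, 6, 0],
]

reduced, kept_names = drop_constant_features(rows, names)
print(kept_names)
print(reduced)
```

Here the third column is constant and is removed, while the two varying columns are kept.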

3.4. DLL Features and Function Calls

The section part of the PE file contains many specific sections. One of these is the .idata section, which contains one entry for each imported dynamic link library (DLL). DLLs contain many subroutines that perform common actions. The subroutines are grouped according to the actions performed and joined into a specific DLL. DLLs have the specific property that they are loaded into memory when needed and released from memory when they are no longer needed. Therefore, DLLs are an important part of a program because they ensure efficient use of available memory and resources through dynamic linking. It is possible, but difficult to imagine, that a program would not use DLLs. Consequently, certain characteristics of a program can be determined from the set of DLLs it uses. Analysis of the import table of the .idata section can help identify ransomware.

A total of 576 different DLL instances were found in the dataset used. This is a fairly large number of instances, if every instance were to be considered a separate feature. We decided to form the integrated characteristics of DLLs. Firstly, we counted the total number of different DLL instances used for each dataset sample separately. Next, we decided to determine similarity of DLLs used between separate samples. A common measure of similarity between two vectors is the cosine index [

32,

33]. To measure the similarity between two sets of DLLs, they have to be converted into two vectors. It is possible to use a simple conversion form. The entry of the vector is assigned the value 1, if the DLL is present, the value 0 is assigned in the opposite case. When the vectors hold the binary values, the cosine similarity index can be interpreted in terms of common attributes. A simple variant of cosine similarity can be applied to this scenario. This variant is called the Tanimoto coefficient [

34], and it is defined as follows:

$$T(x, y) = \frac{x \cdot y}{\lVert x \rVert^{2} + \lVert y \rVert^{2} - x \cdot y} \quad (1)$$

The Tanimoto coefficient is the ratio of the number of attributes shared by x and y to the number of attributes possessed by x or y. In this way, the similarity indices are calculated between a specific sample and all the remaining samples of the dataset, which yields a vector of similarity values for each sample. To measure the central tendency of the vector values, the median is calculated, since the median is less affected by outliers and skewed data than the mean.
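For binary vectors, the ratio described above equals the size of the intersection of the two DLL sets divided by the size of their union, so it can be computed directly on sets. The following sketch illustrates the DLL count and the per-sample median similarity. The function names, the toy DLL sets, and the convention of returning 0 for two empty sets are assumptions made for this example.

```python
from statistics import median

def tanimoto(a, b):
    """Tanimoto coefficient of two sets: |a & b| / |a | b|."""
    a, b = set(a), set(b)
    union = len(a | b)
    if union == 0:
        return 0.0  # assumption: two empty import lists count as dissimilar
    return len(a & b) / union

def median_similarity(samples):
    """For each sample, the median Tanimoto similarity to all other samples."""
    result = []
    for i, current in enumerate(samples):
        sims = [tanimoto(current, other)
                for j, other in enumerate(samples) if j != i]
        result.append(median(sims))
    return result

# Hypothetical imported-DLL sets of four samples.
samples = [
    {"kernel32.dll", "user32.dll", "advapi32.dll"},
    {"kernel32.dll", "user32.dll"},
    {"kernel32.dll", "crypt32.dll"},
    {"kernel32.dll", "user32.dll", "gdi32.dll"},
]
dll_counts = [len(s) for s in samples]    # first integrated feature
dll_medians = median_similarity(samples)  # second integrated feature
print(dll_counts, dll_medians)
```

The pairwise comparison is quadratic in the number of samples, which may matter for large datasets.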

The last component of the DLL integrated characteristics is a list of DLLs selected according to a threshold criterion. The idea is to select the DLLs that are used most frequently either by ransomware or by benign software, but not by both. DLLs frequently used by ransomware help to identify ransomware, and DLLs frequently used by benign software help to identify benign software. DLLs frequently used by both do not help to distinguish ransomware from benign software. To implement the idea, a matrix is formed in which the rows correspond to the samples and the columns correspond to the DLLs. An entry of the matrix is assigned the value 1 if the DLL is used by the sample in that row and the value 0 otherwise. This assignment is performed separately for ransomware and benign samples. A mean value is then calculated for every DLL, separately for ransomware and benign software. This value is a relative measure of the frequency of DLL use in the samples. The mean values obtained for the same DLL are then compared between ransomware and benign software. The inclusion criterion is a mean difference higher than 0.1 between the use of the DLL in ransomware and benign software; this is the minimum difference in usage that we require for a DLL to be selected. The selected DLLs are provided in

Table 3. A total of 40 DLLs were selected according to the defined threshold.
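The selection criterion can be illustrated as follows. This is a sketch under stated assumptions: the function name `select_dlls` and the toy samples are hypothetical, and the use of the absolute difference of the two means is our reading of "mean difference higher than 0.1".

```python
def select_dlls(ransom_samples, benign_samples, threshold=0.1):
    """Return {dll: |mean_ransomware - mean_benign|} for DLLs above the threshold.

    Each sample is a set of DLL names. The mean of a DLL's 0/1 column is
    simply the fraction of samples in that class that import the DLL.
    """
    all_dlls = set().union(*ransom_samples, *benign_samples)
    selected = {}
    for dll in sorted(all_dlls):
        mean_r = sum(dll in s for s in ransom_samples) / len(ransom_samples)
        mean_b = sum(dll in s for s in benign_samples) / len(benign_samples)
        diff = abs(mean_r - mean_b)
        if diff > threshold:
            selected[dll] = diff
    return selected

# Hypothetical toy data: four samples per class.
ransom = [
    {"kernel32.dll", "crypt32.dll"},
    {"kernel32.dll", "crypt32.dll", "advapi32.dll"},
    {"kernel32.dll", "advapi32.dll"},
    {"kernel32.dll", "crypt32.dll"},
]
benign = [
    {"kernel32.dll", "user32.dll"},
    {"kernel32.dll", "user32.dll", "gdi32.dll"},
    {"kernel32.dll", "advapi32.dll"},
    {"kernel32.dll", "user32.dll"},
]
selected = select_dlls(ransom, benign)
print(selected)
```

In this toy data, a DLL imported by every sample of both classes has a mean difference of 0 and is therefore excluded, which matches the intent of discarding DLLs that are common to both classes.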

The considered DLLs are just names that group functions. The main operating entities are the functions. A program can be distinguished from others by the functions it calls to perform specific actions, and the list of function calls can reveal the behavior of the program. The collected list of function calls can therefore further improve the capability to distinguish between benign software and ransomware. A total of 9961 different function calls were identified. This would be a very large number of features if every function call were considered a separate feature. We decided to form a single integrated feature of all function calls. We formed it in the same way as for the DLL names, using the Tanimoto coefficient to determine the similarity between the function calls of two separate samples.

3.5. Section Randomness

The section part of the PE file contains many sections, for example the executable code section (.text), the resource handling section (.rsrc), the exception handling section (.pdata), and many others. The section part is closely related to the function of the file. Therefore, the number of sections varies quite substantially between files. Some sections, such as .text, .data, and .rsrc, are present in many files. Others, such as .ndata, .bss, and .itext, are present in only a few files. To measure the randomness of section contents, all the reviewed works [

22,

23,

24] used Shannon entropy. However, several studies [

35,

36,

37,

38] have stressed that Shannon entropy does not make it possible to distinguish reliably between compressed and encrypted files, since both types of files produce similar values. Other standard measures of the randomness of information include the Chi-square test, the Kullback–Leibler distance, and serial byte correlation [

39]. Davies et al. [

39] concluded, after comparing several methods, that the Chi-square test produced the highest accuracy. Moreover, Palisse et al. [

36] and Arakkal et al. [

38] used the Chi-square test instead of Shannon entropy to measure the randomness of file contents. For these reasons, we decided to measure the randomness of section contents using the Chi-square test.

The Chi-square (χ²) test is a statistical goodness-of-fit test. It measures how closely an observed distribution matches the expected distribution [

40]. It is a non-parametric statistical test, which means that no assumptions are made about the distribution of the samples. The observed data sequence is treated as discrete and is arranged in a frequency histogram over the byte values [0, 255]. Formula (2) for calculating the Chi-square statistic is as follows:

$$\chi^{2} = \sum_{i=0}^{255} \frac{(O_i - E_i)^{2}}{E_i} \quad (2)$$

where $O_i$ is the observed value and $E_i$ is the expected value.
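As an illustration, the Chi-square statistic of a byte sequence can be computed as in the sketch below. It assumes that the expected distribution is uniform over the 256 byte values, which is the usual choice when testing for randomness but is not stated explicitly above. The function name `chi_square` and the two toy inputs are hypothetical.

```python
from collections import Counter

def chi_square(data: bytes) -> float:
    """Chi-square statistic of the byte histogram of `data`.

    Assumption: the expected count E_i is the same for every byte value,
    i.e. len(data) / 256 (a uniform expected distribution).
    """
    if not data:
        raise ValueError("cannot compute the statistic of empty data")
    expected = len(data) / 256
    observed = Counter(data)  # iterating over bytes yields integers 0..255
    return sum((observed.get(value, 0) - expected) ** 2 / expected
               for value in range(256))

# Two extreme toy inputs of 4096 bytes each.
perfectly_uniform = bytes(range(256)) * 16  # every byte value occurs 16 times
constant = b"\x00" * 4096                   # a single byte value only

print(chi_square(perfectly_uniform))
print(chi_square(constant))
```

Under this assumption, contents that are close to uniformly distributed, such as encrypted data, yield small values, while highly structured contents yield large values.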

Our method identified 116 different sections in the training data. To be consistent with the zero-day testing methodology, only the sections found in the training part of the dataset were included. Some sections are present in only a few samples. We decided to apply a threshold value for the inclusion of a section in the feature set. The threshold value is the difference between the numbers of ransomware and benign samples that contain the specific section. Because we are targeting zero-day ransomware, sections present in just a few samples are also of interest. Therefore, the threshold value was chosen to be 10. The selected sections are presented in

Table 4. A total of 32 sections were selected according to the defined threshold. We can observe that the chosen sections constitute only 28% of the initial set of sections.

Note that we also examined the sections of the testing part of the dataset. Some new sections, which were not present in the training part, were observed. The sections of the testing part influence the overall frequency of use of the sections. If the sections of both parts of the dataset were considered, the selected sections could differ slightly.

3.6. Choice of Classifier

Once the features are selected, the next step in the classification process is to choose a classifier. A common approach is to employ several typical ML and DL classifiers and to choose the best-performing one to detect new ransomware variants. However, many research works [

23,

41,

42,

43] have shown that ensemble learning techniques perform better than a single classifier. The performance of ensemble classifiers depends on the chosen base classifiers and on how they are combined to produce the final classification result. The three best-known ensemble learning methods [

44] are bagging [

45,

46], boosting [

47,

48], and stacking [

49,

50]. We have chosen the stacking ensemble method since it is a generic framework that combines many ensemble methods, either homogeneous or heterogeneous. The stacking ensemble method employs two levels of learning: base learning and meta-learning. In base learning, the base classifiers are trained on the training dataset. After training, the outputs of the base classifiers form a new dataset for the meta-classifier. The meta-classifier is then trained on this dataset. The trained meta-classifier is used to classify the test set. The main difference between stacking and other ensemble methods is that stacking applies a meta-level, learning-based classification as the final classification. Maniriho et al. [

50] used support vector machines (SVM), logistic regression (LR), and stochastic gradient descent (SGD) as base classifiers, with the CatBoost classifier as the meta-classifier. We start our stacking ensemble method with this combination of classifiers, since Maniriho et al. [

50] were successful in detecting new malware attacks. Moreover, Hancock and Khoshgoftaar [

51] reported in their review that the CatBoost classifier was the most successful when comparing CatBoost, LightGBM, SVM, and logistic regression in multi-class and binary classification tasks for identifying computer network attacks. Of this combination of classifiers, the least known is SGD. Therefore, we explore the possibility of replacing it with other, more frequently used classifiers. These classifiers include Random Forest (RF) [

23,

33,

42], gradient boosting (GB) [

33,

42], and K-nearest neighbor [

22,

43].
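The two-level scheme described in this section can be sketched with scikit-learn's `StackingClassifier`. This is an illustration only, not the configuration used in the experiments: the data are synthetic, the hyperparameters are library defaults, the feature scaling step is our addition, and scikit-learn's `GradientBoostingClassifier` is used as a stand-in meta-classifier so that the sketch depends on a single library (CatBoost would take its place in the setup described above).

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier, StackingClassifier
from sklearn.linear_model import LogisticRegression, SGDClassifier
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic binary data standing in for the static feature set.
X, y = make_classification(n_samples=300, n_features=20,
                           n_informative=8, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0)

# Level 0: base classifiers (SVM, LR, SGD), each with feature scaling.
base_classifiers = [
    ("svm", make_pipeline(StandardScaler(), SVC(random_state=0))),
    ("lr", make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))),
    ("sgd", make_pipeline(StandardScaler(), SGDClassifier(random_state=0))),
]

# Level 1: the meta-classifier is trained on cross-validated outputs
# of the base classifiers (stand-in for CatBoost, see the note above).
stack = StackingClassifier(
    estimators=base_classifiers,
    final_estimator=GradientBoostingClassifier(random_state=0),
    cv=5,
)
stack.fit(X_train, y_train)

predictions = stack.predict(X_test)
accuracy = stack.score(X_test, y_test)
print(f"test accuracy: {accuracy:.3f}")
```

Replacing SGD with another base classifier, such as Random Forest or K-nearest neighbor, only requires changing the corresponding entry of `base_classifiers`.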

5. Conclusions

The main direction of research on ransomware detection is the behavior-based approach. Behavior-based approaches can be static, dynamic, or hybrid, the last combining static and dynamic analysis. The static approach requires the least amount of resources and is the most secure, since it does not require direct execution of the ransomware code. Its advantage is that it can detect ransomware before the program is launched, which allows timely detection of potential threats, prevents system infection, and saves significant time and resources. Therefore, we have developed a static feature extraction approach that can detect zero-day attacks. The proposed approach analyzes the PE header of ransomware samples and extracts the static features, since feature extraction from the PE header is a relatively straightforward and fast process compared to the extraction of other static features. The approach forms a combined comprehensive static feature set that includes the PE header fields, DLL count, DLL average, DLL list, function call average, and a measure of the randomness of section contents. To determine the DLL average, the similarity of the DLLs used was measured between pairs of samples. To the best of our knowledge, this is the first application of the Tanimoto coefficient to measuring the similarity of DLLs in the field of ransomware. The formula of the Tanimoto coefficient does not involve calculating the square root of the sum of the squared values of a vector, so it is more computationally efficient than cosine similarity. The experiments performed allow us to conclude that using the Tanimoto coefficient instead of cosine similarity yields the same performance with a lower margin of error. The same procedure was applied to compute the function call average.

To the best of our knowledge, this is the first application of the Chi-square test to measuring the randomness of the contents of a PE section. The experiments conducted allow us to conclude that using the Chi-square test instead of Shannon entropy yields better performance and a lower margin of error.

Once the feature set is constructed, machine learning is applied to learn from the extracted static features. We have chosen a stacking ensemble method since it is a generic framework that combines many ensemble methods, either homogeneous or heterogeneous. The stacking ensemble method employs two levels of learning: base learning and meta-learning. As base classifiers, we used support vector machines, logistic regression, and Random Forest. The CatBoost classifier was used as the meta-classifier. A comparison of the results showed that our method had a clear advantage over the method that represented the feature set as an image and used a convolutional neural network for classification. For many ransomware families, our method performed slightly better than the method that formed a combined feature set in a similar way to ours and used an ensemble method with soft voting for classification. Moreover, our feature set was much smaller, since we applied threshold values for including a feature in the feature set. The comparison of the results was performed on the same publicly available dataset.