Abstract

Decision Support Systems (DSSs) are increasingly shaping high-performance sport by translating complex time series data into actionable insights for coaches and practitioners. This paper outlines a structured, five-stage DSS development pipeline, grounded in the Schelling and Robertson framework, and demonstrates its application in professional basketball. Using changepoint analysis, we present a novel approach to dynamically quantify Most Demanding Scenarios (MDSs) using high-resolution optical tracking data in this context. Unlike fixed-window methods, this approach adapts scenario duration to real performance, improving the ecological validity and practical interpretation of MDS metrics for athlete profiling, benchmarking, and training prescription. The system is realized as an interactive web dashboard, providing intuitive visualizations and individualized feedback by integrating validated workload metrics with contextual game information. Practitioners can rapidly distinguish normative from outlier performance periods, guiding recovery and conditioning strategies, and more accurately replicating game demands in training. While illustrated in basketball, the pipeline and principles are broadly transferable, offering a replicable blueprint for integrating context-aware analytics and enhancing data-driven decision-making in elite sport.

1. Introduction

Decision-making in professional sports is high-stakes and increasingly complex, requiring coaches and supporting staff to optimize team and player outcomes under conditions of time pressure, uncertainty, and rapidly growing data volume [1,2]. Technological advances have greatly expanded the range of available information, from real-time data streams like GPS tracking and heart rate monitoring to periodic sources such as optical tracking, biomarker assessments, and wellness reports. These datasets span both quantitative measures (e.g., movement kinematics, force plate kinetics, biomarker levels) and qualitative assessments (e.g., athlete self-reports, coaching evaluations), reflecting the multifaceted nature of modern sport [1,3,4].

Because much of the relevant information in sport is dynamic and sequential, time series data have become integral to performance decision-making. Temporal patterns must be considered at both macro levels (e.g., seasonal training cycles, athlete careers) and micro levels within games (e.g., quarters, high-intensity intervals, tactical substitutions). As new time series data sources and monitoring modalities proliferate, practitioners face an escalating challenge: integrating and interpreting a diversity of evolving data streams, often under significant time constraints and with considerable variability in data quality and access [2]. This complexity is amplified by well-established characteristics of human decision-making: judgments are shaped by the volume and reliability of available information (sometimes insufficient, sometimes overwhelming or inaccessible), time pressure, and innate cognitive limitations such as processing capacity and bias [5]. These realities underscore how difficult it is for coaches and staff to keep pace with the expanding sources of information, while balancing immediate competitive pressures with long-term development goals.

Decision Support Systems (DSSs) offer a solution to these challenges by integrating diverse data streams, applying advanced analytics, and employing user-centered design to deliver automated, context-relevant outputs [2]. Effective DSS development also recognizes the important distinction between descriptive (explaining past patterns) and predictive (forecasting future states) modeling in sports analytics, as each requires distinct design priorities, validation strategies, and use-case considerations [6]. Every stage in the DSS pipeline, from initial signal acquisition and data cleaning to feature engineering, context-aware pattern recognition, and practitioner-focused output, presents unique challenges that must be thoughtfully addressed, drawing on both the current literature and practical experience.

To provide a generalizable and scalable approach, this paper builds on the foundational principles of the DSS development framework outlined by Schelling and Robertson (2020) [2]. We extend these principles through a detailed five-step process specifically designed for handling time series data in sport: (1) data collection, (2) data conditioning, (3) feature extraction and integration, (4) pattern recognition and forecasting, and (5) DSS output (see Figure 1). By providing targeted methodological guidance and sport-specific examples at each stage, this paper offers practical steps for developing and deploying DSSs that harness the full potential of time series data in high-performance sport. For each step, we synthesize methodological considerations, review supporting literature and applications in sport, and demonstrate core concepts through a worked example centered on the analysis of Most Demanding Scenarios (MDSs) in basketball. MDSs are brief passages of play in which external-load metrics approach individual maxima. They are typically identified via rolling-peak windows (e.g., 30–300 s) and operationalized as values near a player’s maximum or within upper percentiles (e.g., top 10–20%) across variables like accelerations and high-speed distance [7]. This example illustrates how a complete DSS, spanning high-resolution optical tracking, changepoint-driven peak detection, and interactive dashboard output, can translate complex data into actionable recommendations for practitioners.

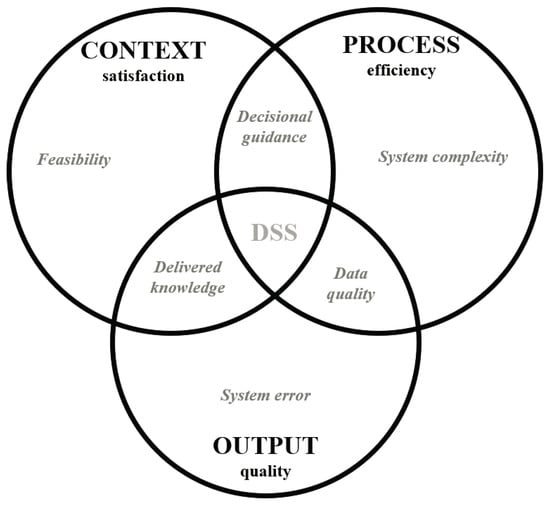

Figure 1.

Venn diagram illustrating the comprehensive DSS evaluation framework from Schelling and Robertson (2020). The three main domains are context satisfaction (fit and feasibility), process efficiency (usability and complexity), and output quality (usefulness and trustworthiness of the recommendations) [2].

This paper reviews key considerations for leveraging time series data in sports performance applications and illustrates their practical use through the design of a DSS that integrates player tracking data. It emphasizes how time series methods can be applied to monitor, interpret, and forecast athlete and team performance, whether capturing real-time metrics (e.g., movement speeds, heart rate, workload during competition) or analyzing retrospective datasets (e.g., wellness reports, force-plate outputs). While the provided example employs player tracking data, the principles and framework described are broadly applicable to any sports performance context where continuous or sequential measurements inform decision-making. Specifically, we address the methodological and practical requirements of each stage:

- Data collection: Selecting appropriate sampling rates for diverse signal types to ensure high data quality and minimize system error.

- Data conditioning: Applying filtering techniques to manage noise, enhance signal quality, and prepare data for quantitative analysis.

- Feature extraction and integration: Combining multi-source continuous and discrete signals to provide a holistic, context-aware athlete profile.

- Pattern recognition and forecasting: Employing both classical statistical and machine learning tools to detect trends, anomalies, and predict future performance.

- DSS output: Translating analysis into actionable recommendations via practitioner-facing interfaces, supporting both immediate decision-making and longer-term strategy.

2. Data Collection

In developing a DSS for time series data, selecting appropriate sampling rates across integrated sources is a foundational design choice. The sampling rate directly sets the temporal resolution, affecting the system’s ability to detect meaningful patterns and rapid performance changes [8]. According to the Nyquist–Shannon theorem, this rate should exceed twice the highest frequency present in the signal, ensuring fast-changing events are accurately captured and preventing misleading aliasing artifacts in the analysis [9,10]. Careful adherence to these principles is essential for high-quality, reliable downstream metrics. Table 1 summarizes sport-specific sampling rate guidelines and anti-aliasing considerations.

Table 1.

Sampling rates and anti-aliasing considerations for various data sources in sports analytics. Values are general guidelines; optimal choices should be tailored to each specific application and measurement goal. GPS (global positioning system), LPS (local positioning system), IMU (inertial measurement unit), HR (heart rate), HRV (heart rate variability), ECG (electrocardiogram), RFD (rate of force development), RPE (rating of perceived exertion), Hz (hertz), LF/HF (low frequency/high frequency power), rMSSD (root mean square of successive differences).

Cyclical or rhythmic signals (such as circadian rhythms) require particular care in sampling strategy. Sampling precisely at the cycle frequency (for instance, exactly every 24 h) can mask meaningful intra-cycle variations (a specialized aliasing problem). Increasing sampling frequency slightly or staggering collection times can help reveal within-cycle variability. Practitioners must balance the value of baseline consistency with the need to detect transient dynamics; in practice, this may involve combining regular fixed-time measurements with strategically timed additional samples.
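The masking effect of sampling exactly at the cycle frequency can be illustrated with a short sketch. The signal below is a hypothetical marker that oscillates with a 24-h period; the mean, amplitude, start time, and the 25-h staggered interval are illustrative assumptions, not values from this study.

```python
import math

def circadian_signal(t_hours, mean=60.0, amplitude=5.0, period_h=24.0):
    """Hypothetical marker (assumed values) oscillating with a 24-h period."""
    return mean + amplitude * math.sin(2 * math.pi * t_hours / period_h)

def sample(interval_h, n_samples, start_h=7.0):
    """Sample the signal every `interval_h` hours, starting at `start_h`."""
    return [circadian_signal(start_h + i * interval_h) for i in range(n_samples)]

fixed = sample(interval_h=24.0, n_samples=14)      # same clock time every day
staggered = sample(interval_h=25.0, n_samples=14)  # drifts 1 h later each day

fixed_range = max(fixed) - min(fixed)
staggered_range = max(staggered) - min(staggered)
print(f"range with fixed-time sampling: {fixed_range:.3f}")
print(f"range with staggered sampling:  {staggered_range:.3f}")
```

With fixed-time sampling every reading falls on the same phase of the cycle, so the within-cycle oscillation is invisible; the staggered schedule walks through the cycle and exposes it, at the cost of a less consistent baseline.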

While high-resolution data collection can be valuable, oversampling beyond what is necessary increases processing and storage demands without yielding further insight. For example, Bardella et al. (2016) found that sampling weight-lifting velocity above ~25 Hz introduced no additional accuracy for certain resistance exercises, underscoring that more data do not always mean better results [17]. As a practical approach to anti-aliasing, high-frequency raw data (e.g., 100 Hz from an accelerometer) are low-pass filtered, either in hardware or software, to remove frequencies above the Nyquist limit of the target rate before downsampling or analysis [18] (see also Section 3).

Aligning sampling rates with each signal’s physiological or mechanical characteristics optimizes data quality and reduces system error, as emphasized by Schelling and Robertson (2020) in the DSS framework [2] (see Figure 1). This approach ensures that downstream processing and calculations, such as velocity, acceleration, or workload estimation, are built on a solid data foundation, ultimately enabling robust, context-aware decision-making.

To illustrate practical data collection, we present a worked example: MDS analysis in basketball within a DSS context [19,20]. MDS identifies peak periods of physical demand during games, using rolling averages of metrics such as distance covered, speed, or accelerations over defined time windows (typically 30 s to 5 min) [7]. Unlike traditional averages, this approach pinpoints the most intense passages of play, which is critical for tailoring training loads and accurately reflecting in-game workload.
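The fixed-window rolling-peak calculation described above can be sketched as follows. This is a minimal illustration on synthetic data; the function name `rolling_peak`, the 5 Hz rate, and the speed values are assumptions made for the example.

```python
def rolling_peak(values, fs_hz, window_s):
    """Return (peak_mean, start_index) of the highest rolling mean.

    values   : per-sample metric (e.g., speed in m/s)
    fs_hz    : sampling rate of `values`
    window_s : rolling window length in seconds
    """
    n = int(round(window_s * fs_hz))
    if n <= 0 or n > len(values):
        raise ValueError("window must cover between 1 and len(values) samples")
    window_sum = sum(values[:n])
    best_sum, best_start = window_sum, 0
    for i in range(n, len(values)):
        window_sum += values[i] - values[i - n]  # slide the window by one sample
        if window_sum > best_sum:
            best_sum, best_start = window_sum, i - n + 1
    return best_sum / n, best_start

# Synthetic 60 s of speed at 5 Hz: steady 2 m/s with a 10-s burst at 6 m/s from t = 20 s.
fs = 5
speed = [2.0] * (60 * fs)
for i in range(20 * fs, 30 * fs):
    speed[i] = 6.0

peaks = {w: rolling_peak(speed, fs, w) for w in (5, 10, 30)}
for w, (peak, start) in peaks.items():
    print(f"{w:>2}-s window: peak mean {peak:.2f} m/s starting at {start / fs:.1f} s")
```

The 30-s window dilutes the 10-s burst with surrounding low-intensity play, which is the limitation of fixed windows that motivates adapting scenario duration to the data.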

In this application, optical tracking is performed using a high-definition camera array, capturing the full basketball court at 25 frames per second (25 Hz). The system localizes all players and the ball in two and three dimensions using synchronized, multi-angle feeds, generating time-stamped positional coordinates for each frame [21]. These are processed to quantify peak physical demands within each player’s on-court stint, with changepoint analysis used to identify periods of maximal activity [22,23]. Analyses used historical, de-identified tracking data handled under standard cybersecurity policies and HIPAA-aligned safeguards for biomedical research [24] (ethics approval: Victoria University HRE 20–204). Reproducibility of our methodology is supported by a fully specified acquisition-to-output workflow; applying the same workflow to equivalent licensed tracking data with standard libraries (e.g., the ruptures package under Python 3.12.5) should yield comparable results, although source code and raw data are contract-restricted.

Because MDS metrics rely on rolling averages or sums of time series variables [7,19,25] (e.g., mean velocity over 12-s windows), sampling beyond a certain frequency is not necessary to preserve analytical fidelity [26]. Here, data are downsampled to 5 Hz, preserving the accuracy of smoothed velocity estimates (R² = 0.9998) while dramatically improving computational efficiency. Velocity is computed from mean displacement over a 0.4-s window (±0.2 s, or ±5 frames at 25 Hz), yielding nearly identical results whether starting from 25 Hz or 5 Hz data. This downsampling reduces computation time by approximately a factor of five for velocity estimation (840 ms vs. 167 ms per player) and more than threefold for metres-per-12-s calculations (408 µs vs. 111 µs per player), with no detectable loss of accuracy. Such efficiency is essential for real-time or near-real-time DSS processing, enabling the timely generation of actionable, high-demand scenario insights.
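A rough sketch of this comparison is given below. The paper does not state how the 25 Hz data were reduced to 5 Hz, so the block averaging used here is an assumption, as is the reading of velocity as the displacement between the frames 0.2 s either side of the frame of interest divided by the elapsed 0.4 s. The trajectory is synthetic, and the function names are hypothetical.

```python
import math

def block_mean_downsample(xy, factor):
    """Average consecutive groups of `factor` (x, y) frames (crude low-pass, then decimate)."""
    out = []
    for i in range(0, len(xy) - factor + 1, factor):
        block = xy[i:i + factor]
        out.append((sum(p[0] for p in block) / factor,
                    sum(p[1] for p in block) / factor))
    return out

def central_speed(xy, fs_hz, half_window_frames):
    """Speed from the displacement between frames t-k and t+k over the elapsed time."""
    k = half_window_frames
    elapsed_s = 2 * k / fs_hz
    return [math.dist(xy[t + k], xy[t - k]) / elapsed_s
            for t in range(k, len(xy) - k)]

# Synthetic 30-s run along the x-axis at 25 Hz; speed varies smoothly around 4 m/s.
fs_raw = 25
xy_25 = [(4.0 * (i / fs_raw) + math.sin(0.5 * i / fs_raw), 0.0)
         for i in range(30 * fs_raw)]
xy_5 = block_mean_downsample(xy_25, factor=5)

speed_25 = central_speed(xy_25, fs_hz=25, half_window_frames=5)  # +/- 0.2 s
speed_5 = central_speed(xy_5, fs_hz=5, half_window_frames=1)     # +/- 0.2 s

mean_25 = sum(speed_25) / len(speed_25)
mean_5 = sum(speed_5) / len(speed_5)
print(f"mean speed from 25 Hz: {mean_25:.3f} m/s, from 5 Hz: {mean_5:.3f} m/s")
```

On a smooth trajectory the two estimates agree closely while the 5 Hz series is one-fifth the length, which is the source of the computational saving; real tracking data with measurement noise should be checked against the raw-rate result in the same way.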

3. Data Conditioning

Signal filtering is an essential step in sports data pipelines, critical for distinguishing true physiological events, such as peak acceleration or rate of force development (RFD), from background variation and sensor noise. For a DSS, trustworthy filtering underpins user confidence, ensuring that performance metrics accurately reflect real changes and not artifacts [27]. Filtering also strengthens validation and reproducibility by enabling outputs to be cross-checked against reference standards, such as aligning filtered force plate data with high-speed motion capture or a validated accelerometer. However, as Ellens et al. (2024) demonstrate, inappropriate filter settings can disregard genuine acceleration events in Global Navigation Satellite System (GNSS) data, reducing the reliability of DSS outputs [28].

No single filtering method is optimal for every sports data stream; techniques differ in mathematical foundation, computational cost, and their ability to preserve sharp events or suppress noise. The choice, among Butterworth [27], moving average [27], exponentially weighted moving average (EWMA) [29], Kalman [30,31], and others, should reflect both the signal’s properties and the desired balance between noise reduction and retention of important features [27]. Table 2 summarizes common filtering approaches and their main applications in sport.

Table 2.

Summary of common filtering methods used in sport performance. For detailed explanations and practical guidelines on signal filtering techniques, refer to Crenna et al. (2021) [27], Winter (2009) [32], and Smith (1997) [33].

Robust filtering and sampling are prerequisites for effective feature extraction in time series analysis. Downsampling without pre-filtering introduces aliasing (misrepresentation of high-frequency content) [8]. Retrospective analyses often use zero-lag filters to preserve event timing, while real-time DSS depends on causal filters that may introduce minor signal lag. Excessive filtering may erode genuine performance spikes, whereas insufficient filtering can amplify noise. Transparent, detailed reporting of all filtering protocols is vital for reproducibility [28], especially as commercial device pipelines are frequently undocumented, sometimes leading to inconsistencies when combining raw and manufacturer-smoothed data [26].
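The timing difference between causal and zero-lag filtering can be seen with a simple moving average. The sketch below uses a synthetic triangular burst; the window length of 11 samples and the function names are illustrative assumptions.

```python
def trailing_mean(x, n):
    """Causal moving average: each output uses only the current and past samples."""
    out = []
    for i in range(len(x)):
        window = x[max(0, i - n + 1): i + 1]
        out.append(sum(window) / len(window))
    return out

def centered_mean(x, n):
    """Zero-lag moving average (odd n): the window is symmetric around each sample."""
    half = n // 2
    out = []
    for i in range(len(x)):
        window = x[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out

# Synthetic triangular burst peaking at sample 50.
signal = [max(0.0, 10.0 - abs(i - 50)) for i in range(101)]
n = 11
causal = trailing_mean(signal, n)
zero_lag = centered_mean(signal, n)

peak_raw = signal.index(max(signal))
peak_causal = causal.index(max(causal))
peak_zero_lag = zero_lag.index(max(zero_lag))
print(peak_raw, peak_causal, peak_zero_lag)
```

The centered filter keeps the peak at its true location but needs future samples, so it suits retrospective analysis; the trailing filter can run live but reports the peak (n - 1) / 2 samples late, and both attenuate the peak height.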

When calculating rates of change, the selection of the time window is also crucial. Short windows, such as 50 ms for early RFD [37], capture rapid phenomena but amplify noise and sampling limitations [28]; longer ones (e.g., 200 ms for RFD or three weeks for workload ratios) smooth variability, improving reliability but potentially masking brief, significant events [38,39,40,41]. The most appropriate window depends on the dynamics of the measured process, required sensitivity, and prevailing signal-to-noise ratio. Implausibly high rates often signal suboptimal filters or window choices, reinforcing the need for careful selection and rigorous filtering, especially during downsampling.
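The window trade-off can be made concrete with a synthetic force trace. In the sketch below, the 1000 Hz sampling rate, the 2000 N/s ramp, and the 5 N Gaussian noise are all assumed values chosen for illustration, and `rate_of_change` is a hypothetical helper.

```python
import random
import statistics

def rate_of_change(signal, fs_hz, window_s):
    """Finite-difference rate over `window_s`: (x[t + n] - x[t]) / elapsed time."""
    n = int(round(window_s * fs_hz))
    return [(signal[i + n] - signal[i]) / (n / fs_hz)
            for i in range(len(signal) - n)]

# Synthetic force trace (assumed values): 2000 N/s ramp with 5 N Gaussian sensor noise.
rng = random.Random(0)  # fixed seed so the example is repeatable
fs = 1000
force = [2.0 * i + rng.gauss(0.0, 5.0) for i in range(500)]

rfd_50ms = rate_of_change(force, fs, 0.050)
rfd_200ms = rate_of_change(force, fs, 0.200)

spread_50ms = statistics.pstdev(rfd_50ms)
spread_200ms = statistics.pstdev(rfd_200ms)
print(f"50-ms window:  mean {statistics.mean(rfd_50ms):.0f} N/s, spread {spread_50ms:.0f} N/s")
print(f"200-ms window: mean {statistics.mean(rfd_200ms):.0f} N/s, spread {spread_200ms:.0f} N/s")
```

Both windows recover a similar average rate, but the same sensor noise is divided by a shorter elapsed time in the 50-ms estimate, so its sample-to-sample spread is larger.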

Within Schelling and Robertson’s (2020) DSS framework, filtering is fundamental to achieving data quality and process efficiency (see Figure 1). Effective filtering minimizes wasted computational effort, improves output reliability, builds practitioner trust, and ensures that all data sources are prepared consistently for advanced analytics and integration.

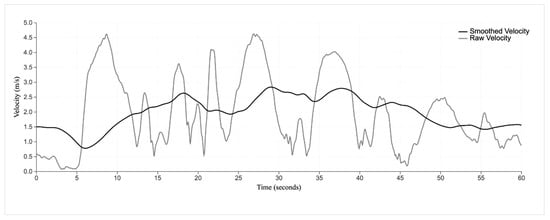

In our practical basketball DSS application, robust data conditioning was essential to ensure reliability and actionability in the velocity and acceleration metrics that drive MDS assessment. As shown in Figure 2, raw velocity time series data were smoothed using a 12-s moving average, a window chosen based on the typical possession durations in professional basketball (i.e., mean chance length in a professional basketball game [42,43,44]). This approach facilitates the detection of meaningful peak demands, though ongoing debate exists regarding optimal window length [40].

Figure 2.

Comparison of a raw velocity time series (lighter black) and a velocity time series that is smoothed over a 12-s window via a moving average (darker black).

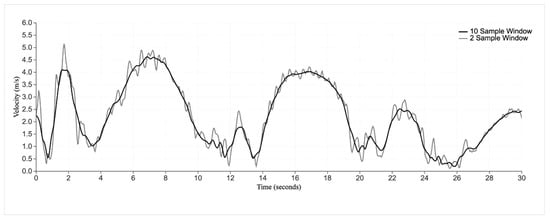

To further enhance transparency and reproducibility, our methodology explicitly computes velocity as the scaled mean displacement over a 0.4-s window (±0.2 s from the frame of interest) [40] (see Formula (1)), which suppresses high-frequency noise while maintaining responsiveness. This explicit definition contrasts with the proprietary, often undocumented filtering pipelines used by some commercial vendors. Figure 3 compares velocity time series computed using different smoothing intervals, demonstrating the impact of methodological choices on outcome sensitivity.

Figure 3.

Comparison of velocity time series data computed as scaled displacement over 10 frames (black) and 2 frames (grey).

Acceleration is derived as the difference between consecutive velocity samples, and acceleration load is calculated as a rolling sum of absolute accelerations over each 12-s interval, following established performance analysis practices [45]. Aggregating over larger temporal windows, coupled with transparent reporting of all filter and smoothing steps, minimizes vendor-driven variability and enhances reproducibility, providing a robust foundation for MDS benchmarking. This highlights the critical link between filtering decisions, feature extraction, and the practical utility of time series analytics in DSS-based sport performance decision-making.
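A minimal sketch of the acceleration-load calculation follows. It takes the description literally, using differences between consecutive velocity samples without rescaling by the sampling interval; the function name, the 5 Hz rate, and the synthetic velocity trace are assumptions for illustration.

```python
def acceleration_load(velocity, fs_hz, window_s=12.0):
    """Rolling sum of absolute sample-to-sample velocity changes over `window_s` seconds."""
    abs_acc = [abs(b - a) for a, b in zip(velocity, velocity[1:])]
    n = int(round(window_s * fs_hz))
    if n > len(abs_acc):
        return []
    running = sum(abs_acc[:n])
    loads = [running]
    for i in range(n, len(abs_acc)):
        running += abs_acc[i] - abs_acc[i - n]  # add the newest change, drop the oldest
        loads.append(running)
    return loads

# Synthetic 30 s at 5 Hz: 15 s of steady running, then 15 s of stop-start movement.
fs = 5
velocity = [3.0 if i < 75 else (4.0 if i % 2 else 2.0) for i in range(150)]
loads = acceleration_load(velocity, fs)
print(f"first window: {loads[0]:.1f}, last window: {loads[-1]:.1f}")
```

Both halves of this trace have the same mean velocity, yet the stop-start half produces a much higher acceleration load, which is why the two metrics are tracked separately.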

4. Feature Extraction and Integration

Integrating multi-source data into a cohesive DSS in sport presents practical, computational, and methodological challenges [46]. Performance datasets commonly include both continuous signals (such as GPS trajectories, accelerometer outputs, and physiological metrics) and discrete events (such as substitutions, fouls, injuries, or context-specific actions). An effective combination of these heterogeneous streams requires robust synchronization, alignment, and data fusion methodologies, beyond just optimizing sampling rates and filtering techniques.

Accurate synchronization of data streams is fundamental for meaningful integration [47]. This ensures, for example, that heart rate and HRV can be interpreted alongside session workloads in endurance sports [48], or that force plate outputs are precisely linked to movement phases in strength training [49]. Similarly, aligning wellness questionnaires and biomarker data enables early detection of recovery issues before they manifest as performance declines [50]. Synchronization challenges arise from differing sensor sampling rates, clock drift, data-packet delays, and risks of aliasing, especially when downsampling high-frequency metrics to match lower-frequency streams [30,51], as discussed in Section 2 and Section 3. Solutions include precise time-stamping at data capture (e.g., GPS time, unified system clocks) and the use of interpolation or resampling. For example, high-frequency accelerometer data may be summarized into 1-s intervals, while lower-frequency measures like wellness scores are interpolated or carried forward [52]. These strategies ensure that physiological or biomechanical events (e.g., heart rate peaks) align accurately with gameplay or tactical episodes [53]. Advanced methods, such as event-driven synchronization using accelerometer-detected collisions or detailed play-by-play markers, can further improve multimodal data alignment [54].
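The two resampling strategies mentioned above (summarizing a fast stream into 1-s intervals and carrying a slow stream forward) can be sketched as follows. The function names and the toy data are hypothetical, and both streams are assumed to share one clock expressed in seconds.

```python
from bisect import bisect_right

def bin_to_seconds(timestamps_s, values):
    """Average high-frequency samples into 1-s bins keyed by whole second."""
    sums, counts = {}, {}
    for t, v in zip(timestamps_s, values):
        sec = int(t)  # floor for non-negative timestamps
        sums[sec] = sums.get(sec, 0.0) + v
        counts[sec] = counts.get(sec, 0) + 1
    return {sec: sums[sec] / counts[sec] for sec in sorted(sums)}

def carry_forward(event_times_s, event_values, query_times_s):
    """For each query time, return the most recent value at or before it (None if none)."""
    out = []
    for q in query_times_s:
        idx = bisect_right(event_times_s, q) - 1
        out.append(event_values[idx] if idx >= 0 else None)
    return out

# Toy 10 Hz accelerometer stream (3 s) and a sparse score recorded at t = 0 s and t = 2 s.
acc_times = [i / 10 for i in range(30)]
acc_values = [float(i) for i in range(30)]
per_second = bin_to_seconds(acc_times, acc_values)

score_times, score_values = [0.0, 2.0], [7, 5]
aligned_scores = carry_forward(score_times, score_values, list(per_second))
print(per_second, aligned_scores)
```

Carrying values forward avoids inventing intermediate readings, whereas interpolation assumes the quantity changes smoothly between measurements; which is appropriate depends on the variable.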

Beyond synchronization, integrating continuous signals (such as player position, heart rate, or IMU data) with discrete contextual information (substitutions, environmental conditions, coaching decisions) requires specialized data fusion approaches. Hybrid feature sets, developed by tagging continuous streams with discrete event labels (such as mapping GPS data to offensive vs. defensive phases), enrich context-driven analysis. Multi-level sensor fusion algorithms, including Kalman and Bayesian filters, are capable of merging information from different abstraction levels, from raw signals to features and final decisions. This enables robust athlete state estimation, even in the presence of noise or missing data [30].
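As a minimal illustration of filter-based state estimation with missing data, the sketch below implements a scalar random-walk Kalman filter. It is a simplified stand-in for the multi-level fusion schemes cited above, and the variance settings and heart-rate values are assumed for the example.

```python
def kalman_1d(measurements, process_var, meas_var, x0=0.0, p0=1.0):
    """Scalar random-walk Kalman filter; `None` entries are treated as missing."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p += process_var            # predict: state assumed unchanged, uncertainty grows
        if z is not None:
            k = p / (p + meas_var)  # Kalman gain
            x += k * (z - x)        # update with the innovation
            p *= (1.0 - k)
        estimates.append(x)
    return estimates

# Hypothetical heart-rate readings (beats/min) with two dropped packets.
hr = [150.0, 152.0, None, None, 149.0, 151.0]
estimates = kalman_1d(hr, process_var=0.5, meas_var=4.0, x0=150.0, p0=4.0)
print([round(e, 2) for e in estimates])
```

During the gap the estimate is held while its uncertainty grows, so the first measurement after the gap is weighted more heavily than it would be in an uninterrupted stream.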

Multi-source integration in real-time environments introduces further challenges, including computational overhead, network latency, and the need for precise sensor synchronization. Efficient integration leverages advanced algorithms and edge computing, with data processed locally on wearable devices or field-side workstations to minimize delay. System load can be reduced by downsampling to the minimum required rate (see Section 2 and Section 3) and using event-driven processing that triggers computation only when necessary. When integrating data from diverse stakeholders (medical staff, analysts, coaches, box score providers), it is crucial to use standardized formats, consistent units, and robust data-sharing protocols. Well-designed Application Programming Interfaces (APIs) are essential for secure, automated data exchange and ensuring seamless interoperability across departments and platforms [2,55].

Effective multi-source integration directly improves both context satisfaction, by leveraging all available contextual information, and process efficiency, by delivering timely, reliable insights within the DSS [2] (see Figure 1). When implemented holistically, this approach creates a comprehensive athlete profile, reduces conflicting recommendations, and builds practitioner trust by ensuring decision-making reflects the full spectrum of relevant information rather than isolated metrics.

In our MDS application, seamless integration of multi-modal datasets is achieved through unified timestamps and universally unique identifiers (UUIDs) for players and games, facilitating precise alignment of physical tracking data with event logs, player designation, and contextual game variables. Player tracking (25 Hz) and play-by-play (25 Hz) data streams, delivered with consistent frame numbers, game-clock values (milliseconds), and ISO 8601-formatted UTC timestamps, support accurate synchronization across modalities. While scoring data are often aggregated at the game or quarter level, detailed play-by-play shot records enable the construction of continuous scoring time series, such as score margin (downsampled to 5 Hz) and minute-by-minute differentials, all retaining consistent frame numbers and timestamps from the original datasets.
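Constructing a continuous score-margin series from discrete shot records can be sketched as below. The record layout (game time in seconds, team label, points) is a hypothetical simplification of a play-by-play feed, and the shots themselves are invented for the example.

```python
def score_margin_series(shots, duration_s, fs_hz=5):
    """Build a step-wise home-minus-away margin sampled at `fs_hz`.

    shots: iterable of (game_time_s, team, points) with team in {"home", "away"}.
    """
    n = int(duration_s * fs_hz) + 1
    deltas = [0] * n
    for t, team, pts in shots:
        idx = min(n - 1, round(t * fs_hz))  # nearest sample to the shot time
        deltas[idx] += pts if team == "home" else -pts
    margin, running = [], 0
    for d in deltas:
        running += d            # cumulative sum turns point events into a step series
        margin.append(running)
    return margin

# Invented shot records for the first minute of play.
shots = [(10.0, "home", 2), (25.0, "away", 3), (40.0, "home", 3)]
margin = score_margin_series(shots, duration_s=60, fs_hz=5)
print(margin[49], margin[50], margin[125], margin[200])
```

Because the margin is indexed on the same 5 Hz grid as the downsampled tracking data, each physical-demand sample can be paired with the score context at that instant by index alone.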

This integration framework enables precise overlay of physical demands (e.g., total distance, velocity, or acceleration load) with play-by-play actions, tactical assignments, and contextual variables, including quarter, score margin, offense/defense status, and possession. Supplementary inputs, such as video review, injury or substitution markers, and post-game RPE or wellness assessments, further enrich the contextual understanding of each MDS episode. By synchronizing and mapping all relevant information to a unified temporal reference, the DSS can characterize high-demand periods not only by physical load but also in relation to tactical, contextual, and athlete state variables, supporting truly comprehensive and context-aware decision-making.

5. Pattern Recognition and Forecasting

Robust time series methods are essential for enabling decision-makers to detect trends, identify anomalies, and forecast future outcomes with confidence [56]. Classical approaches remain foundational and highly interpretable for pattern detection and performance forecasting. Frequency-domain techniques like Fourier analysis decompose signals into separate frequency components, making them effective for identifying recurring patterns, such as weekly workload cycles or seasonal fluctuations [27,28]. Wavelet analysis complements this by revealing localized, time-varying frequency changes, which are particularly valuable for assessing non-stationary biomechanical signals, such as shifts in force output during fatigue or altered gait patterns. For example, wavelet-based techniques have successfully detected subtle changes in athletes with chronic ankle instability [29,30].

Time-domain models also play a key role. ARIMA (Auto-Regressive Integrated Moving Average) models forecast future values by capturing trends and autocorrelations after ensuring data stationarity, providing clear interpretability through slope and seasonal pattern estimates. Bayesian changepoint detection extends these methods by statistically identifying abrupt shifts in measured metrics [57], such as sudden drops in running velocity that may indicate fatigue, injury onset, or tactical adjustments. While these classical tools are generally linear and assume consistent data structure, making them efficient and easy to use, especially with smaller datasets or in coach-facing outputs, they often struggle with the complex, nonlinear interactions and sudden regime changes that characterize real-world sports data, limiting their predictive power in more dynamic contexts [58,59].

Modern machine learning (ML) models excel at capturing the nonlinear and complex relationships found in sports data, where numerous interdependent factors, such as training load, injury history, and tactical context, can interact unpredictably [59]. Deep learning architectures like Recurrent Neural Networks (RNNs), particularly Long Short-Term Memory (LSTM) networks, are well-suited for modeling temporal dependencies in sequential performance data. LSTMs have been applied to predict injuries [60] and performance outcomes [61] from training load histories, learning intricate autoregressive patterns beyond the reach of linear models (though requiring large datasets and careful tuning) [62]. Transformers, which use self-attention mechanisms, have recently demonstrated promise in time series forecasting by learning long-range dependencies [63,64]; for instance, they can relate historical workload patterns and recent recovery trends to current injury risk [65]. Temporal Convolutional Networks (TCNs) also efficiently capture extended temporal dependencies, often with reduced computational complexity compared to RNNs [66].

Unsupervised models, such as autoencoders, including sequence and variational autoencoders, automatically learn lower-dimensional latent representations of time series data [67], simplifying multi-dimensional performance histories and facilitating anomaly detection when current behavior deviates from established patterns. In team sport analytics, Graph Neural Networks (GNNs) have been introduced to model player interactions, enabling the analysis of relational dynamics, such as passing structures or synchronized group movements, that go beyond what sequential models can capture [68].

Despite the promise of advanced ML techniques, current evidence indicates that a hybrid analytical approach is often most effective [69]. In many cases, simple models, such as logistic regression or ARIMA, perform as well as, or better than, complex ML algorithms for tasks like injury prediction, chiefly because they offer greater interpretability, transparency, and lower risk of overfitting [56]. For robust decision support, practitioners are encouraged to use traditional statistical models to establish baseline forecasts and to layer more sophisticated ML approaches for detecting nuanced anomalies or modeling nonlinear risk interactions [56].

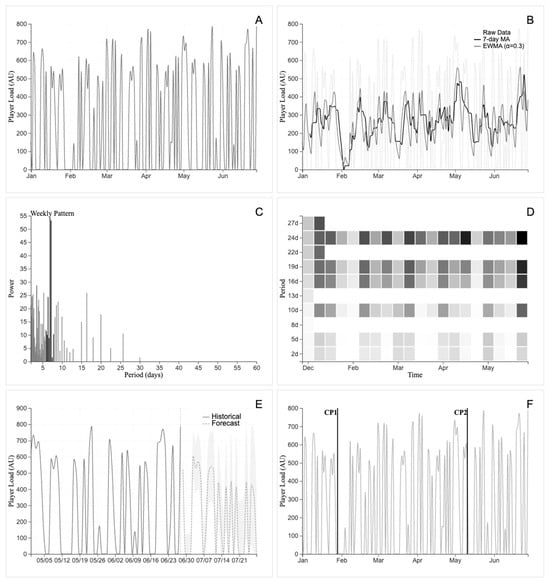

To illustrate the value of combining multiple analytical techniques, Figure 4 presents a suite of time series analyses applied to daily player load data. This example demonstrates how raw fluctuations, smoothing methods, periodicity detection, localized frequency analysis, probabilistic forecasting, and structural change identification combine to provide a comprehensive picture of both short- and long-term training load dynamics.

Figure 4.

(A). Raw time series of daily player load (arbitrary units) recorded over a 6-month period. Each point represents the total external load accumulated in a single day, capturing both training and rest days. The unprocessed series retains all short-term fluctuations and variability inherent to daily training schedules, recovery periods, and random deviations in load, providing the foundational dataset for subsequent time series analyses. (B). Comparison of moving average (7-day window) and exponentially weighted moving average (α = 0.3) smoothing methods applied to player load data. These techniques reduce noise and reveal underlying trends, with EWMA providing greater weight to recent observations and faster adaptation to changes in training patterns. (C). Power spectral density reveals dominant periodic patterns in training load. The frequency spectrum identifies the strength of various cyclical components, with particular emphasis on weekly (7-day) and bi-weekly (14-day) training periodization patterns commonly observed in structured athletic programs. The dominant peak at 7-day period (highlighted in black) confirms weekly training structure. Secondary peaks indicate longer periodization cycles. (D). Continuous wavelet transform provides localized frequency analysis, revealing how periodic patterns evolve temporally. This time-frequency representation is particularly valuable for detecting non-stationary behavior in training loads, such as changes in periodization strategy or adaptations to competition schedules. Darker regions indicate stronger periodic components at specific temporal locations. (E). Auto-Regressive Integrated Moving Average model captures temporal dependencies and trends to provide probabilistic forecasts. The model incorporates historical patterns and seasonal components to predict future training loads with confidence intervals, enabling proactive load management and training plan optimization. ARIMA forecast (green dashed line) with 95% confidence intervals (shaded region). Historical data (blue solid line) provides the model training period. Vertical dashed line separates observed from predicted values. (F). Statistical inference method for identifying significant structural breaks in time series data. The algorithm detects abrupt changes in the underlying data, potentially indicating training phase transitions, injury periods, competition blocks, or other physiological or strategic modifications in training approach. Changepoint detection results overlaid on raw time series. Black vertical lines indicate statistically significant breaks (p < 0.05) in the data generation process.
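The two smoothers compared in panel (B) can be sketched in a few lines. The 7-day window and α = 0.3 follow the caption; the step change in daily load is synthetic and chosen only to make the difference in responsiveness visible.

```python
def moving_average(x, window=7):
    """Trailing mean over the last `window` observations (shorter at the start)."""
    out = []
    for i in range(len(x)):
        chunk = x[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out

def ewma(x, alpha=0.3):
    """Exponentially weighted moving average: new = alpha * value + (1 - alpha) * previous."""
    out = [x[0]]
    for v in x[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out

# Synthetic daily load: 14 days at 300 AU followed by 14 days at 500 AU.
load = [300.0] * 14 + [500.0] * 14
ma = moving_average(load, window=7)
ew = ewma(load, alpha=0.3)

for day in (13, 15, 20):
    print(f"day {day}: MA {ma[day]:.1f}, EWMA {ew[day]:.1f}")
```

Two days after the step, the EWMA has moved further toward the new level than the 7-day average; the moving average, however, reaches the new level exactly once its window has passed the step, while the EWMA approaches it asymptotically.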

Regardless of the analytical approach, all forecasting, trend analysis, and anomaly detection tools must be contextualized within the specific athlete and team environment. To maintain output quality and practitioner trust, models should be regularly validated, recalibrated, and audited for ongoing relevance. Outputs, such as team win probabilities or player readiness metrics, should include confidence intervals, actionable thresholds, and transparent explanations of key drivers to enhance decisional guidance and on-field usability. Incorporating adaptive modeling frameworks that allow the DSS to update dynamically with new data and practitioner feedback further supports ongoing context satisfaction [2].

In our MDS application, pattern detection and forecasting are achieved by segmenting each player’s time series using changepoint detection. The example DSS employs the Python library ruptures [22] to identify shifts in physical output, such as changes in acceleration load or velocity, within individual player stints. Each stint is partitioned into segments of at least 12 s, aligning with the moving average window defined in Section 2. Practitioners can tailor the segmentation by specifying the number of segments or by setting a penalty, which acts as a regularization mechanism: it assigns a cost to each additional changepoint and thereby guards against overfitting. For our analysis, a fixed penalty is used to ensure consistent fitting across all scenarios, enabling fair comparisons between player stints.
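To make the role of the penalty and the minimum segment length concrete, the following is a minimal plain-Python sketch of penalized least-squares segmentation (optimal partitioning). It is not the ruptures implementation and not necessarily the search method used in the example DSS; the function name, the squared-error cost, the penalty value, and the assumption of one sample per second (so that `min_size=12` corresponds to 12 s) are all illustrative.

```python
from itertools import accumulate


def detect_changepoints(signal, penalty, min_size):
    """Return segment end indices (the last one is len(signal)).

    Minimizes: sum of within-segment squared error + penalty per changepoint,
    subject to every segment holding at least `min_size` samples.
    """
    n = len(signal)
    # Prefix sums let us evaluate any segment's squared error in O(1).
    s1 = [0.0] + list(accumulate(signal))
    s2 = [0.0] + list(accumulate(x * x for x in signal))

    def cost(a, b):
        total = s1[b] - s1[a]
        return (s2[b] - s2[a]) - total * total / (b - a)

    inf = float("inf")
    best = [inf] * (n + 1)   # best[t]: minimal penalized cost of signal[:t]
    best[0] = -penalty       # so the first segment carries no changepoint cost
    prev = [0] * (n + 1)     # prev[t]: start index of the last segment ending at t
    for t in range(min_size, n + 1):
        for s in range(0, t - min_size + 1):
            if best[s] == inf:
                continue     # signal[:s] cannot be split into valid segments
            candidate = best[s] + cost(s, t) + penalty
            if candidate < best[t]:
                best[t], prev[t] = candidate, s

    # Walk back through the stored segment starts.
    ends, t = [], n
    while t > 0:
        ends.append(t)
        t = prev[t]
    return ends[::-1]


# Hypothetical 60 s stint sampled at 1 Hz with two shifts in intensity.
stint = [1.0] * 20 + [5.0] * 20 + [2.0] * 20
print(detect_changepoints(stint, penalty=5.0, min_size=12))  # -> [20, 40, 60]
```

Raising `penalty` yields fewer, longer segments; lowering it yields more, shorter ones. This exhaustive search is quadratic in stint length, which is acceptable for a sketch; ruptures offers penalized search methods (for example `Pelt`, configured with `min_size` and called with `predict(pen=...)`) that are better suited to long series.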

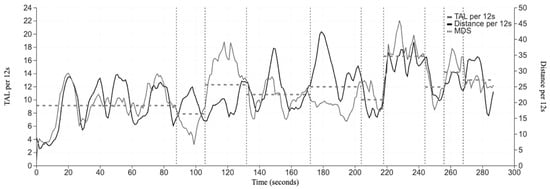

Figure 5 illustrates changepoint detection in a single basketball stint, showing how the method divides the stint into shorter segments for clearer interpretation and performance review. These segments are summarized to identify the Most Demanding Scenario within each stint, defined as the period where the weighted average of a player’s total acceleration load and velocity is maximized. Unlike studies limited to fixed-duration windows, changepoint detection produces scenarios of varying duration, allowing a more nuanced depiction of high-demand periods in team sports [70].

Figure 5.

Changepoint detection for a single stint during a basketball game.
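Once a stint has been segmented, selecting its Most Demanding Scenario reduces to scoring each segment and keeping the maximum. The sketch below assumes that both series are already on comparable scales and uses equal weights; the function name, the weights, and the use of segment means are hypothetical choices for illustration, since the exact weighting is a design decision of the DSS.

```python
from statistics import fmean


def most_demanding_segment(tal, velocity, ends, w_tal=0.5, w_vel=0.5):
    """Return (start, end, score) of the segment with the highest weighted average.

    `ends` holds segment end indices as produced by a changepoint routine,
    with the last entry equal to the series length.
    """
    best = None
    start = 0
    for end in ends:
        score = w_tal * fmean(tal[start:end]) + w_vel * fmean(velocity[start:end])
        if best is None or score > best[2]:
            best = (start, end, score)
        start = end
    return best


# Hypothetical, pre-scaled series for one stint with three segments.
tal = [1] * 10 + [4] * 5 + [2] * 10
velocity = [2] * 10 + [6] * 5 + [3] * 10
print(most_demanding_segment(tal, velocity, ends=[10, 15, 25]))  # -> (10, 15, 5.0)
```

Because segment boundaries come from the data, the returned scenario here lasts 5 samples, whereas a fixed-window method would force every scenario to share one duration.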

To aid interpretation, unsupervised machine learning techniques are used to cluster changepoint-based segments by duration and key performance metrics, such as total acceleration load (TAL) and very high-speed distance (VHSD, defined as distance covered above 18 km/h) [71]. Gaussian Mixture Models (GMMs) are used for clustering, since their probabilistic assignments represent segments with mixed characteristics more accurately than hard cluster assignments [72]. Descriptive labels are then added to each cluster to enhance interpretability and facilitate targeted queries for further analysis. This approach enables practitioners to identify MDS segments with distinct profiles, such as those characterized by high-intensity mechanical loads or those dominated by elevated VHSD, thus supporting more granular and actionable insights.
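The idea of probabilistic (soft) cluster assignment can be illustrated with a deliberately simplified sketch: a two-component, one-dimensional Gaussian mixture fitted by expectation-maximization in plain Python. The DSS clusters on several features at once, so the single feature, the two components, the initialization at the minimum and maximum, and the variance floor used here are all assumptions made to keep the example short.

```python
import math


def fit_gmm_1d(values, n_iter=50):
    """Fit a two-component 1-D Gaussian mixture with EM.

    Returns (weights, means, variances, responsibilities), where
    responsibilities[i][k] is the probability that values[i] belongs to component k.
    """
    n = len(values)
    overall_mean = sum(values) / n
    overall_var = sum((v - overall_mean) ** 2 for v in values) / n
    means = [min(values), max(values)]       # simple deterministic start
    variances = [overall_var, overall_var]
    weights = [0.5, 0.5]
    resp = []
    for _ in range(n_iter):
        # E-step: posterior probability of each component for each value.
        resp = []
        for v in values:
            dens = [
                weights[k]
                * math.exp(-((v - means[k]) ** 2) / (2 * variances[k]))
                / math.sqrt(2 * math.pi * variances[k])
                for k in range(2)
            ]
            total = sum(dens)
            resp.append([d / total for d in dens])
        # M-step: re-estimate weights, means, and variances from the posteriors.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / n
            means[k] = sum(r[k] * v for r, v in zip(resp, values)) / nk
            var_k = sum(r[k] * (v - means[k]) ** 2 for r, v in zip(resp, values)) / nk
            variances[k] = max(var_k, 1e-6)  # floor avoids a collapsed component
    return weights, means, variances, resp


# Hypothetical segment durations in seconds: short bursts vs. longer efforts.
durations = [10, 12, 11, 13, 30, 32, 31, 29]
weights, means, variances, resp = fit_gmm_1d(durations)
labels = ["short burst" if r[0] >= r[1] else "sustained effort" for r in resp]
```

Each row of `resp` sums to 1, so a segment lying between the two groups would receive a split assignment rather than being forced into one cluster. The descriptive labels are attached afterwards, as in the text.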

6. Decision Support System Output

To be effective, a DSS output must demonstrate context satisfaction, output quality, and process efficiency [2] (see Figure 1). Context satisfaction is achieved when insights are tailored to the practical, evolving needs of practitioners. For example, an Australian football DSS might recommend substitutions by factoring in player fatigue, fouls, opponent matchups, and the current game situation, giving coaches a data-driven means to optimize lineups during key moments [73]. In soccer, systems that integrate player workload, opposition style, and weather can generate individualized tapering or hydration recommendations, offering acute and strategic guidance [74].

Output quality hinges on accuracy, interpretability, and transparency. For instance, an American football DSS might provide play-choice probabilities as risk-adjusted, data-driven visualizations with confidence intervals and supporting rationale, helping coaches make informed decisions [75]. In elite cycling, readiness dashboards that merge force-velocity profiling and HRV data can flag under-recovery with clear probability and uncertainty indicators, enabling precise adaptation of training and competition plans [76].

Process efficiency demands insights that are timely and actionable, delivered in formats that enhance workflow. Modern DSS platforms leverage real-time dashboards, customizable reports, and alerting, such as live-streamed pitching analytics in baseball for immediate intervention [77]. Integrating hydration sensors and daily wellness questionnaires can flag elevated risk for dehydration or overtraining in time for preventive support [78]. In winter sports, merging power output with environmental data enables real-time adjustments to pacing and equipment as conditions change [79]. Tennis DSSs now offer instant, data-backed tactical briefings between games, synthesizing tracking and physiology with opponent patterning [80].

Collectively, these examples underscore the practical value and momentum of sport DSS. The next step is a systematic integration of interactive tools into daily workflows, fully embedding actionable, context-sensitive recommendations when and where they are most needed. The example presented in this manuscript corresponds to a male professional basketball player, with data collected during an official in-game situation using an optical tracking system. Although only one player is shown here for illustrative purposes, this approach has already been applied across thousands of players and matches in professional basketball. Therefore, the present example should not be understood as a representative sample but rather as a practical demonstration of the procedure.

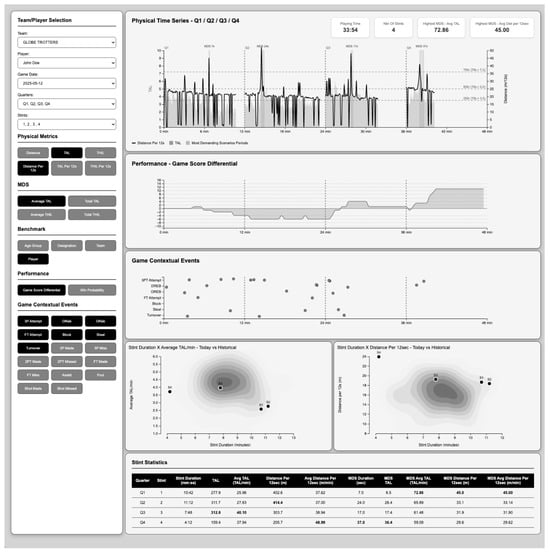

A practical deployment of our DSS for MDS analysis is embodied in a browser-based dashboard built as a single-page application using Vue.js for a responsive user interface and D3.js for dynamic, customizable visualizations. Data are securely served via a cloud-hosted REST API, enabling filtered, up-to-date access without needing local software. The interface supports focused queries by game date, team, player, quarter, and stint, with quick toggles for key physical metrics, MDS outcomes, and benchmarking, helping users rapidly isolate relevant workloads and contextual features (see Figure 6).

Figure 6.

Interactive post-game dashboard showing individualized physical time series, performance trends, contextual events, comparative MDS (Most Demanding Scenarios) analysis, and player statistics panels. Key metrics and filters include TAL (total acceleration load), THL (total high-intensity load), VHSD (very high-speed distance), SPRD (sprint distance), M60s (meters covered per 60 s), alongside contextual game events, enabling comprehensive exploration and benchmarking of peak basketball demands.
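The filtered access pattern offered by the REST API can be sketched as a small helper that turns the user's filter selections into a query string. The endpoint URL and parameter names below are hypothetical placeholders and do not describe the deployed API.

```python
from urllib.parse import urlencode


def build_stint_query(base_url, **filters):
    """Build a GET URL from the selected filters, skipping any left unset (None)."""
    params = {key: value for key, value in filters.items() if value is not None}
    # Sorting keeps the URL stable regardless of the order filters were chosen in.
    query = urlencode(sorted(params.items()))
    return f"{base_url}?{query}" if query else base_url


# Hypothetical endpoint and filter names.
url = build_stint_query(
    "https://example.invalid/api/stints",
    game_date="2025-01-15",
    player="P01",
    quarter=2,
    stint=None,  # not selected, so omitted from the request
)
print(url)
# -> https://example.invalid/api/stints?game_date=2025-01-15&player=P01&quarter=2
```

Keeping the filters in the URL lets a given dashboard view be reproduced or shared by link without any local software.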

The dashboard’s central “Physical Time Series” panel displays TAL and distance covered by a player throughout an entire game, clearly identifying each stint. It also highlights detected MDS (shaded regions), while panel headers summarize total playing time, and the average load (TAL) and distance (m/12 s) for the highest MDS. Directly below, a score differential time series situates player load within the broader narrative of team performance. The contextual event raster aligns key technical-tactical actions, including 3-point attempts, steals, rebounds, blocks, and fouls, so users can connect workload peaks to game moments. Bottom panels compare stint durations and intensities (TAL/min, m/12 s) against historical player benchmarks using density and scatter plots, facilitating rapid identification of outliers and normative trends. The statistical summary table enables immediate lookup and export of all key calculations, supporting post-game review and ongoing athlete monitoring.
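The benchmarking step can also be expressed numerically. The dashboard itself relies on density and scatter plots; the z-score rule below, its threshold of 2, and the function name are assumptions used only to illustrate how a stint might be flagged against a player's own history.

```python
from statistics import mean, stdev


def benchmark_stint(value, history, z_threshold=2.0):
    """Compare one stint intensity with the player's historical values.

    Returns the z-score and whether it meets the (assumed) outlier threshold.
    """
    mu = mean(history)
    sd = stdev(history)  # sample standard deviation; needs at least two values
    z = (value - mu) / sd if sd > 0 else 0.0
    return {"z": z, "outlier": abs(z) >= z_threshold}


# Hypothetical TAL/min values from a player's previous stints.
history = [10, 12, 11, 13, 9]
print(benchmark_stint(15, history)["outlier"])    # -> True
print(benchmark_stint(11.5, history)["outlier"])  # -> False
```

A percentile rank against the historical distribution would serve the same purpose and may be preferable when stint intensities are skewed.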

Throughout, the dashboard interface is designed for maximum clarity and utility, employing a minimal and uncluttered layout, with contextual event highlighting and interactive features like zoom and tooltips for detailed exploration. The modular sidebar allows efficient selection and filtering by team, player, period, and physical metric, supporting both targeted and holistic analysis. This architecture supports accessible, team-wide review while streamlining post-game workflow, effectively bridging complex time series insights with actionable, individualized feedback for training design and periodization. By translating advanced analytics into clear, context-sensitive decisions, the dashboard functions as a robust evidence-based tool for modern sport practitioners.

7. Limitations of the Study

The following limitations pertain to the worked example, whose primary purpose was to demonstrate the application of the proposed time series DSS concepts rather than to produce definitive, generalizable estimates. The case focuses on a single sport and organization, which enhances ecological validity but limits external generalization across leagues and contexts. Despite efficiency gains (e.g., downsampling), ingesting high-frequency tracking data, performing changepoint segmentation, and clustering segments remain computationally demanding for near-real-time use without suitable infrastructure. Findings are also sensitive to design choices (e.g., changepoint penalty and minimum segment length; variable selection and weighting in the MDS score), and formal sensitivity analyses were beyond scope. Effective deployment requires practitioner training to interpret segmented stints and MDS outputs. Contractual restrictions on raw tracking data and code constrain open replication, although methods and parameters are fully specified. Future work should extend validation to other sports and organizations, conduct robustness and longitudinal impact studies, optimize pipelines for live workflows, and enhance explainability and usability while releasing anonymized feature tables and configuration files where permissible.

8. Conclusions

This study advances time series-driven DSS by refining a five-stage pipeline that links rigorous data handling to context-aware pattern detection and practitioner-oriented delivery. A novel application of changepoint analysis to MDS assessment adapts scenario duration to the data rather than imposing fixed windows, improving ecological validity and yielding actionable insights for post-game review and individualized training.

For practitioners, the main message is pragmatic: a dashboarded, changepoint-based MDS workflow can translate complex tracking streams into timely, interpretable guidance that aligns with staff workflows.

For researchers, the central contribution is conceptual and methodological: a sport-agnostic pipeline and rationale for changepoint-based MDS, including explicit parameterization and reproducibility notes, that can be tested across settings and linked to performance and health outcomes.

Together, the framework and the basketball-MDS DSS provide a replicable blueprint for embedding adaptive, transparent time series analytics into elite-sport decision making.

Author Contributions

Conceptualization, X.S. and B.S.; methodology, X.S. and B.S.; software, B.S. and V.A.; validation, X.S., B.S. and S.R.; formal analysis, X.S. and S.R.; investigation, X.S. and B.S.; resources, X.S.; data curation, X.S.; writing—original draft preparation, X.S.; writing—review and editing, E.A.-P.-C., B.S., C.S. and S.R.; visualization, X.S., V.A. and B.S.; supervision, S.R.; project administration, X.S.; funding acquisition, E.A.-P.-C. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets presented in this article are not readily available because they are owned by the league and the players and are subject to contractual confidentiality obligations that prohibit external sharing. This paper is methodology-driven rather than data-dependent; all calculation details and software packages are provided in the manuscript to ensure transparency and reproducibility. Requests to access the datasets cannot be accommodated. Procedural inquiries should be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| DSS | Decision Support Systems |
| ECG | Electrocardiogram |
| IMU | Inertial Measurement Unit |
| HR | Heart Rate |
| HRV | Heart Rate Variability |
| HF | High Frequency Power |
| Hz | Hertz |
| LPS | Local Positioning System |
| MDS | Most Demanding Scenarios |
| RFD | Rate of Force Development |
| RPE | Rating of Perceived Exertion |
| rMSSD | Root Mean Square of Successive Differences |

References

1. Kolar, E.; Biloslavo, R.; Pišot, R.; Veličković, S.; Tušak, M. Conceptual Framework of Coaches’ Decision-Making in Conventional Sports. Front. Psychol. 2024, 15, 1498186.

2. Schelling, X.; Robertson, S. A Development Framework for Decision Support Systems in High-Performance Sport. Int. J. Comp. Sci. Sport 2020, 19, 1–23.

3. Torres-Ronda, L.; Schelling, X. Critical Process for the Implementation of Technology in Sport Organizations. Strength Cond. J. 2017, 39, 54–59.

4. Stein, M.; Janetzko, H.; Seebacher, D.; Jäger, A.; Nagel, M.; Hölsch, J.; Kosub, S.; Schreck, T.; Keim, D.; Grossniklaus, M. How to Make Sense of Team Sport Data: From Acquisition to Data Modeling and Research Aspects. Data 2017, 2, 2.

5. Tversky, A.; Kahneman, D. Judgment Under Uncertainty: Heuristics and Biases. Science 1974, 185, 1124–1131.

6. Shmueli, G. To Explain or to Predict? Stat. Sci. 2010, 25, 289–310.

7. Pérez-Chao, E.A.; Portes, R.; Gómez, M.Á.; Parmar, N.; Lorenzo, A.; Jiménez-Sáiz, S.L. A Narrative Review of the Most Demanding Scenarios in Basketball: Current Trends and Future Directions. J. Hum. Kinet. 2023, 89, 231–245.

8. Rico-González, M.; Los Arcos, A.; Nakamura, F.Y.; Moura, F.A.; Pino-Ortega, J. The Use of Technology and Sampling Frequency to Measure Variables of Tactical Positioning in Team Sports: A Systematic Review. Res. Sports Med. 2020, 28, 279–292.

9. Shannon, C.E. Communication in the Presence of Noise. Proc. IRE 1949, 37, 10–21.

10. Hasegawa-Johnson, M. Lecture 6: Sampling and Aliasing; University of Illinois Urbana-Champaign: Urbana-Champaign, IL, USA, 2021; Available online: https://courses.grainger.illinois.edu/ece401/fa2021/lectures/lec06.pdf (accessed on 1 January 2025).

11. Johnston, R.J.; Watsford, M.L.; Kelly, S.J.; Pine, M.J.; Spurrs, R.W. Validity and Interunit Reliability of 10 Hz and 15 Hz GPS Units for Assessing Athlete Movement Demands. J. Strength Cond. Res. 2014, 28, 1649–1655.

12. Fernández-Valdés, B.; Jones, B.; Hendricks, S.; Weaving, D.; Ramirez-Lopez, C.; Whitehead, S.; Toro-Román, V.; Trabucchi, M.; Moras, G. Comparison of Mean Values and Entropy in Accelerometry Time Series from Two Microtechnology Sensors Recorded at 100 vs. 1000 Hz During Cumulative Tackles in Young Elite Rugby League Players. Sensors 2024, 24, 7910.

13. Kwon, O.; Jeong, J.; Kim, H.B.; Kwon, I.H.; Park, S.Y.; Kim, J.E.; Choi, Y. Electrocardiogram Sampling Frequency Range Acceptable for Heart Rate Variability Analysis. Healthc. Inform. Res. 2018, 24, 198–206.

14. Beckham, G.; Suchomel, T.; Mizuguchi, S. Force Plate Use in Performance Monitoring and Sport Science Testing. New Stud. Athlet 2014, 29, 25–37.

15. Alenzi, A.R.; Alzhrani, M.; Alanazi, A.; Alzahrani, H. Do Different Two-Dimensional Camera Speeds Detect Different Lower-Limb Kinematics Measures? A Laboratory-Based Cross-Sectional Study. J. Clin. Med. 2025, 14, 1687.

16. Saw, A.E.; Main, L.C.; Gastin, P.B. Monitoring the Athlete Training Response: Subjective Self-Reported Measures Trump Commonly Used Objective Measures: A Systematic Review. Br. J. Sports Med. 2016, 50, 281–291.

17. Bardella, P.; Carrasquilla García, I.; Pozzo, M.; Tous-Fajardo, J.; Saez de Villareal, E.; Suarez-Arrones, L. Optimal Sampling Frequency in Recording of Resistance Training Exercises. Sports Biomech. 2017, 16, 102–114.

18. Augustus, S.; Amca, A.M.; Hudson, P.E.; Smith, N. Improved Accuracy of Biomechanical Motion Data Obtained During Impacts Using A Time-Frequency Low-Pass Filter. J. Biomech. 2020, 101, 109639.

19. García, F.; Schelling, X.; Castellano, J.; Martín-García, A.; Pla, F.; Vázquez-Guerrero, J. Comparison of the Most Demanding Scenarios During Different in-Season Training Sessions and Official Matches in Professional Basketball Players. Biol. Sport 2022, 39, 237–244.

20. Vázquez-Guerrero, J.; Ayala, F.; Garcia, F.; Sampaio, J. The Most Demanding Scenarios of Play in Basketball Competition from Elite Under-18 Teams. Front. Psychol. 2020, 11, 2020.

21. Makar, P.; Silva, A.F.; Oliveira, R.; Janusiak, M.; Parus, P.; Smoter, M.; Clemente, F.M. Assessing the Agreement between a Global Navigation Satellite System and an Optical-Tracking System for Measuring Total, High-Speed Running, and Sprint Distances in Official Soccer Matches. Sci. Prog. 2023, 106, 368504231187501.

22. Truong, C.; Oudre, L.; Vayatis, N. Selective Review of Offline Change Point Detection Methods. Signal Process. 2020, 167, 107299.

23. Teune, B.; Woods, C.; Sweeting, A.; Inness, M.; Robertson, S. A Method to Inform Team Sport Training Activity Duration with Change Point Analysis. PLoS ONE 2022, 17, e0265848.

24. Arellano, A.M.; Dai, W.; Wang, S.; Jiang, X.; Ohno-Machado, L. Privacy Policy and Technology in Biomedical Data Science. Annu. Rev. Biomed. Data Sci. 2018, 1, 115–129.

25. Novak, A.R.; Impellizzeri, F.M.; Trivedi, A.; Coutts, A.J.; McCall, A. Analysis of the Worst-Case Scenarios in an Elite Football Team: Towards a Better Understanding and Application. J. Sports Sci. 2021, 39, 1850–1859.

26. Malone, J.J.; Lovell, R.; Varley, M.C.; Coutts, A.J. Unpacking the Black Box: Applications and Considerations for Using GPS Devices in Sport. Int. J. Sports Physiol. Perform. 2017, 12 (Suppl. 2), S218–S226.

27. Crenna, F.; Rossi, G.B.; Berardengo, M. Filtering Biomechanical Signals in Movement Analysis. Sensors 2021, 21, 4580.

28. Ellens, S.; Carey, D.L.; Gastin, P.B.; Varley, M.C. Accuracy of GNSS-Derived Acceleration Data for Dynamic Team Sport Movements: A Comparative Study of Smoothing Techniques. Appl. Sci. 2024, 14, 10573.

29. Murray, N.; Gabbett, T.; Townshend, A.; Blanch, P. Calculating Acute: Chronic Workload Ratios Using Exponentially Weighted Moving Averages Provides a More Sensitive Indicator of Injury Likelihood Than Rolling Averages. Br. J. Sports Med. 2017, 51, 749.

30. Wundersitz, D.W.T.; Gastin, P.B.; Richter, C.; Robertson, S.J.; Netto, K.J. Validity of a Trunk-Mounted Accelerometer to Assess Peak Accelerations During Walking, Jogging and Running. Eur. J. Sport Sci. 2015, 15, 382–390.

31. Zihajehzadeh, S.; Loh, D.; Lee, T.; Hoskinson, R.; Park, E. A Cascaded Kalman Filter-Based GPS/MEMS-IMU Integration for Sports Applications. Measurement 2015, 73, 200–210.

32. Winter, D.A. Biomechanics and Motor Control of Human Movement, 4th ed.; Wiley: Hoboken, NJ, USA, 2009.

33. Smith, S.W. The Scientist and Engineer’s Guide to Digital Signal Processing; California Technical Publishing: San Diego, CA, USA, 1997.

34. Addison, P.S. The Illustrated Wavelet Transform Handbook: Introductory Theory and Applications in Science, Engineering, Medicine and Finance, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2017.

35. Siangphoe, U.; Wheeler, D.C. Evaluation of the Performance of Smoothing Functions in Generalized Additive Models for Spatial Variation in Disease. Cancer Inform. 2015, 14 (Suppl. 2), 107–116.

36. Robertson, D.G.E.; Caldwell, G.E.; Hamill, J.; Kamen, G.; Whittlesey, S.N. Research Methods in Biomechanics, 2nd ed.; Human Kinetics: Champaign, IL, USA, 2014.

37. Andersen, L.L.; Aagaard, P. Influence of Maximal Muscle Strength and Intrinsic Muscle Contractile Properties on Contractile Rate of Force Development. Eur. J. Appl. Physiol. 2006, 96, 46–52.

38. Kozinc, Ž.; Pleša, J.; Djurić, D.; Šarabon, N. Comparison of Rate of Force Development between Explosive Sustained Contractions and Ballistic Pulse-Like Contractions During Isometric Ankle and Knee Extension Tasks. Appl. Sci. 2022, 12, 10255.

39. Gabbett, T.J. The Training-Injury Prevention Paradox: Should Athletes be Training Smarter and Harder? Br. J. Sports Med. 2016, 50, 273–280.

40. Buchheit, M.; Eriksrud, O. Maximal Locomotor Function in Elite Football: Protocols and Metrics for Acceleration, Speed, Deceleration, and Change of Direction Using a Motorized Resistance Device. Sport. Perform. Sci. Rep. 2024, 1, 238.

41. Williams, S.; West, S.; Cross, M.J.; Stokes, K.A. Better Way to Determine the Acute:Chronic Workload Ratio? Br. J. Sports Med. 2017, 51, 209–210.

42. Zhang, F.; Yi, Q.; Dong, R.; Yan, J.; Xu, X. Inner Pace: A Dynamic Exploration and Analysis of Basketball Game Pace. PLoS ONE 2025, 20, e0320284.

43. Mandić, R.; Jakovljević, S.; Erčulj, F.; Štrumbelj, E. Trends in NBA and Euroleague Basketball: Analysis and Comparison of Statistical Data from 2000 to 2017. PLoS ONE 2019, 14, e0223524.

44. Jiménez, M.A.; Vaquero, N.A.C.; Suarez-Llorca, C.; Pérez-Turpin, J. The Impact of Offensive Duration on NBA Success: A Comparative Analysis of Jordan’s Chicago Bulls and Curry’s Golden State Warriors. J. Hum. Kinet. 2025, 96, 225–233.

45. Delaney, J.A.; Duthie, G.M.; Thornton, H.R.; Scott, T.J.; Gay, D.; Dascombe, B.J. Acceleration-Based Running Intensities of Professional Rugby League Match Play. Int. J. Sports Physiol. Perform. 2016, 11, 802–809.

46. Aguileta, A.A.; Brena, R.F.; Mayora, O.; Molino-Minero-Re, E.; Trejo, L.A. Multi-Sensor Fusion for Activity Recognition—A Survey. Sensors 2019, 19, 3808.

47. Wild, T.; Wilbs, G.; Dechmann, D.; Kohles, J.; Linek, N.; Mattingly, S.; Richter, N.; Sfenthourakis, S.; Nicolaou, H.; Erotokritou, E.; et al. Time Synchronisation for Millisecond-Precision on Bio-Loggers. Mov. Ecol. 2024, 12, 71.

48. Vesterinen, V.; Nummela, A.; Heikura, I.; Laine, T.; Hynynen, E.; Botella, J.; Häkkinen, K. Individual Endurance Training Prescription with Heart Rate Variability. Med. Sci. Sports Exerc. 2016, 48, 1347–1354.

49. Thompson, S.; Lake, J.; Rogerson, D.; Ruddock, A.; Barnes, A. Kinetics and Kinematics of the Free-Weight Back Squat and Loaded Jump Squat. J. Strength Cond. Res. 2022, 37, 1–8.

50. Reichel, T.; Hacker, S.; Palmowski, J.; Boßlau, T.; Frech, T.; Tirekoglou, P.; Weyh, C.; Bothur, E.; Samel, S.; Walscheid, R.; et al. Neurophysiological Markers for Monitoring Exercise and Recovery Cycles in Endurance Sports. J. Sports Sci. Med. 2022, 21, 446–457.

51. Rhudy, M. Time Alignment Techniques for Experimental Sensor Data. Int. J. Comput. Sci. Eng. Surv. (IJCSES) 2014, 5, 1–14.

52. Oppenheim, A.V.; Schafer, R.W.; Buck, J.R. Discrete-Time Signal Processing, 2nd ed.; Prentice-Hall: Englewood Cliffs, NJ, USA, 1999.

53. Fridman, L.; Brown, D.E.; Glazer, M.; Angell, W.; Dodd, S.; Jenik, B.; Terwilliger, J.; Patsekin, A.; Kindelsberger, J.; Ding, L.; et al. Automated Synchronization of Driving Data Using Vibration and Steering Events. Pattern Recognit. Lett. 2016, 75, 9–15.

54. Khargonekar, P.P.; Dahleh, M.A. Kalman Filtering, Sensor Fusion, and Eye Tracking; University of California, Irvine: Irvine, CA, USA, 2020.

55. IBM. IBM SPSS Modeler CRISP-DM Guide. Available online: https://www.ibm.com/support/knowledgecenter/en/SS3RA7_15.0.0/com.ibm.spss.crispdm.help/crisp_overview.htm (accessed on 4 February 2019).

56. Leckey, C.; van Dyk, N.; Doherty, C.; Lawlor, A.; Delahunt, E. Machine Learning Approaches to Injury Risk Prediction in Sport: A Scoping Review with Evidence Synthesis. Br. J. Sports Med. 2025, 59, 491–500.

57. Adams, R.P.; MacKay, D.J.C. Bayesian Online Changepoint Detection. arXiv 2007, arXiv:0710.3742.

58. Turner, J.; Mazzoleni, M.; Little, J.A.; Sequeira, D.; Mann, B. A Nonlinear Model for the Characterization and Optimization of Athletic Training and Performance. Biomed. Human Kinet. 2017, 9, 82–93.

59. Jianjun, Q.; Isleem, H.F.; Almoghayer, W.J.K.; Khishe, M. Predictive Athlete Performance Modeling with Machine Learning and Biometric Data Integration. Sci. Rep. 2025, 15, 16365.

60. Sadr, M.; Khani, M.; Tootkaleh, S. Predicting Athletic Injuries with Deep Learning: Evaluating CNNs and RNNs for Enhanced Performance and Safety. Biomed. Signal Process. Control 2025, 105, 107692.

61. Sarkar, T.; Kumar, D. Hybrid Transformer-LSTM Model for Athlete Performance Prediction in Sports Training Management. Informatica 2025, 49, 527–543.

62. Siami-Namini, S.; Tavakoli, N.; Siami Namin, A. A Comparison of ARIMA and LSTM in Forecasting Time Series. In Proceedings of the 17th IEEE International Conference on Machine Learning and Applications, Orlando, FL, USA, 17–20 December 2018.

63. Hao, J.; Liu, F. Improving Long-Term Multivariate Time Series Forecasting with A Seasonal-Trend Decomposition-Based 2-Dimensional Temporal Convolution Dense Network. Sci. Rep. 2024, 14, 1689.

64. Wen, Q.; Zhou, T.; Zhang, C.; Chen, W.; Ma, Z.; Yan, J.; Sun, L. Transformers in Time Series: A Survey. In Proceedings of the Thirty-Second International Joint Conference on Artificial Intelligence (IJCAI-23), Macao SAR, China, 19–25 August 2023.

65. Zhu, J.; Ye, Z.; Ren, M.; Ma, G. Transformative Skeletal Motion Analysis: Optimization of Exercise Training and Injury Prevention through Graph Neural Networks. Front. Neurosci. 2024, 18, 1353257.

66. Singh, V.; Singh, S. A Separable Temporal Convolutional Networks Based Deep Learning Technique for Discovering Antiviral Medicines. Sci. Rep. 2023, 13, 13722.

67. Cai, B.; Yang, S.; Gao, L.; Xiang, Y. Hybrid Variational Autoencoder for Time Series Forecasting. Knowl.-Based Syst. 2023, 281, 111079.

68. Xenopoulos, P.; Silva, C. Graph Neural Networks to Predict Sports Outcomes. arXiv 2021, arXiv:2207.14124.

69. Kulakou, S.; Ragab, N.; Midoglu, C.; Boeker, M.; Johansen, D.; Riegler, M.; Halvorsen, P. Exploration of Different Time Series Models for Soccer Athlete Performance Prediction. Eng. Proc. 2022, 18, 37.

70. Yung, K.K.; Teune, B.; Ardern, C.L.; Serpiello, F.R.; Robertson, S. A Change-Point Method to Detect Meaningful Change in Return-To-Sport Progression in Athletes. Int. J. Sports Physiol. Perform. 2024, 19, 943–948.

71. Puente, C.; Abián-Vicén, J.; Areces, F.; López, R.; Del Coso, J. Physical and Physiological Demands of Experienced Male Basketball Players During a Competitive Game. J. Strength Cond. Res. 2017, 31, 956–962.

72. Reynolds, D. Gaussian Mixture Models. In Encyclopedia of Biometrics; Springer: Berlin/Heidelberg, Germany, 2015.

73. Johnston, R.D.; Black, G.M.; Harrison, P.W.; Murray, N.B.; Austin, D.J. Applied Sport Science of Australian Football: A Systematic Review. Sports Med. 2018, 48, 1673–1694.

74. Guerrero-Calderón, B.; Klemp, M.; Morcillo, J.A.; Memmert, D. How Does the Workload Applied During the Training Week and the Contextual Factors Affect the Physical Responses of Professional Soccer Players in the Match? Int. J. Sports Sci. Coach. 2021, 16, 994–1003.

75. Brill, R.S.; Yee, R.; Deshpande, S.K.; Wyner, A.J. Moving from Machine Learning to Statistics: The Case of Expected Points in American Football. arXiv 2024, arXiv:2409.04889.

76. Javaloyes, A.; Sarabia, J.M.; Lamberts, R.P.; Moya-Ramon, M. Training Prescription Guided by Heart-Rate Variability in Cycling. Int. J. Sports Physiol. Perform. 2019, 14, 23–32.

77. Adlou, B.; Wilburn, C.; Weimar, W. Motion Capture Technologies for Athletic Performance Enhancement and Injury Risk Assessment: A Review for Multi-Sport Organizations. Sensors 2025, 25, 4384.

78. Halson, S.L. Monitoring Training Load to Understand Fatigue in Athletes. Sports Med. 2014, 44 (Suppl. 2), S139–S147.

79. Sands, W.A.; McNeal, J.R. The Puzzle of Monitoring Training Load in Winter Sports–A Hard Nut to Crack. Curr. Issues Sport Sci. (CISS) 2024, 5, 1–17.

80. Crespo, M.; Martínez-Gallego, R.; Filipcic, A. Determining the Tactical and Technical Level of Competitive Tennis Players Using a Competency Model: A Systematic Review. Front. Sports Act. Living 2024, 6, 1406846.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).