Integrating Fitbit Wearables and Self-Reported Surveys for Machine Learning-Based State–Trait Anxiety Prediction

Abstract

1. Introduction

- Examining the potential of ML algorithms applied to wearable-derived physiological and behavioral data for classifying state anxiety in individuals.

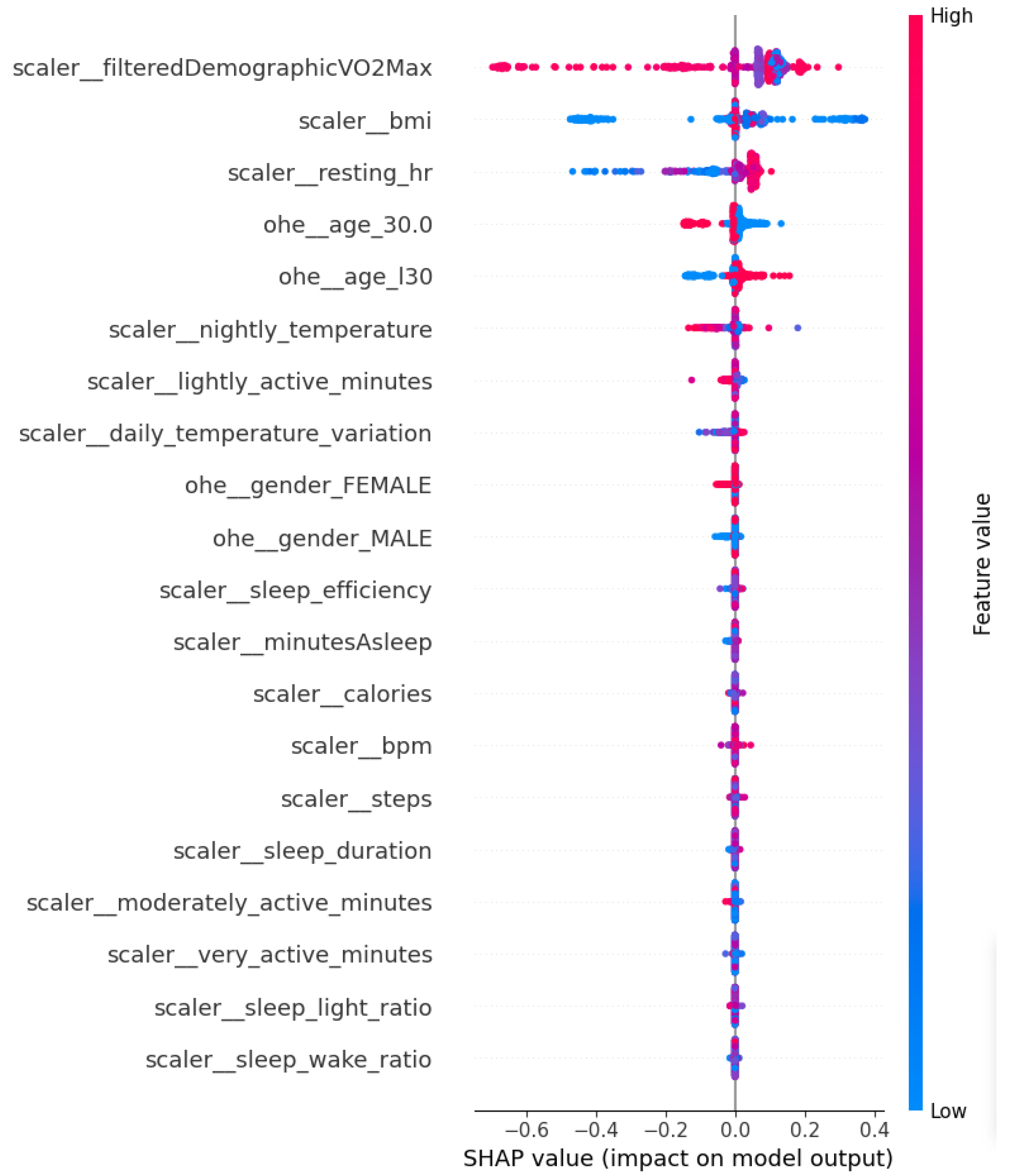

- Investigating the role of explainability with Shapley values in identifying important variables in naturalistic settings.

- Releasing the code for the robust preprocessing and machine learning workflow at XXX, to facilitate the development of predictive models for mental well-being from unobtrusive wearable data.
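As a brief illustration of the Shapley-value idea named in the contributions, the sketch below computes exact Shapley values for a toy linear model by enumerating every feature coalition. The weights, instance, and baseline are hypothetical and are not taken from the study; absent features are simply replaced by a baseline value, which is one common convention rather than the workflow used in the paper.

```python
from itertools import combinations
from math import factorial

# Hypothetical toy model: a linear score over three features.
WEIGHTS = [2.0, 1.0, -3.0]
BASELINE = [0.0, 0.0, 0.0]   # assumed reference values for "absent" features
INSTANCE = [1.0, 2.0, 0.5]   # assumed instance to explain


def model(x):
    return sum(w * v for w, v in zip(WEIGHTS, x))


def coalition_value(present):
    """Model output when only the features in `present` take the instance value."""
    x = [INSTANCE[i] if i in present else BASELINE[i] for i in range(len(INSTANCE))]
    return model(x)


def shapley_values(value_fn, n_features):
    """Exact Shapley values: weighted average of marginal contributions."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


phi = shapley_values(coalition_value, len(INSTANCE))
print(phi)
```

For a linear model each attribution reduces to the weight times the feature's deviation from the baseline, and the attributions sum to the difference between the model output for the instance and for the baseline. Exact enumeration grows exponentially with the number of features, so practical tools rely on approximations or model-specific algorithms.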

2. Methodology

2.1. Dataset

2.2. Preprocessing

2.3. Machine Learning Models

3. Results

4. Discussion

4.1. Research Implications

4.2. Comparison with Related Work

4.3. Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| STAI | State–Trait Anxiety Inventory |
| CB | CatBoost |
| GNB | Gaussian Naïve Bayes |
| k-NN | k-Nearest Neighbors |
| LGB | Light Gradient Boosting |
| LR | Logistic Regression |
| ML | Machine Learning |
| RF | Random Forest |
| SVM | Support Vector Machine |
| XGB | Extreme Gradient Boosting |

| Feature Name | Non-Anxiety (398) | Anxiety (1493) | Description |
|---|---|---|---|
| BMI † | | | Body Mass Index, collected as survey data. |
| Steps | | | Number of steps taken by an individual. |
| Demographic VO2Max & | | | Maximum oxygen uptake, estimated every 2 days. |
| Resting HR & | | | Heart rate at rest, baseline every 4 days. |
| BPM | | | Heart rate during general activity. |
| Daily temperature variation † | | | Measure of daily skin temperature variation from a 3-day baseline. |
| Nightly temperature | | | Measure of night-time skin temperature variation from a 3-day baseline. |
| Lightly active minutes | | | Time spent in light physical activity, calculated through METs *. |
| Moderately active minutes † | | | Time spent in moderately active physical activity, calculated through METs *. |
| Very active minutes | | | Time spent in very active physical activity, calculated through METs *. |
| Calories | | | Number of calories burned. |
| Sedentary minutes | | | Time spent unengaged in physical activity. |
| Mindfulness session | | | Number of mindfulness sessions. |
| Light sleep ratio | | | Ratio of time spent in light sleep. |
| REM sleep ratio | | | Ratio of time spent in REM sleep. |
| Deep sleep ratio | | | Ratio of time spent in deep sleep. |
| Sleep duration | | | Total duration of time spent in bed in milliseconds. |
| Minutes To Fall Asleep | | | Number of minutes for individual to fall asleep. |
| Minutes Asleep | | | Total minutes spent asleep. |
| Minutes After Wakeup † | | | Number of minutes individual spent awake during sleep session. |
| Minutes Awake † | | | Total minutes spent awake. |
| Sleep wake ratio | | | Ratio of time spent awake. |
| Sleep efficiency | | | Ratio of time spent asleep. |

| Feature | Category | Non-Anxiety (398) | Anxiety (1493) | p-Value (Chi-Square) |
|---|---|---|---|---|
| Age | ≥30 | 161 | 816 | |
| Age | <30 | 237 | 677 | |
| Gender | Female | 58 | 544 | |
| Gender | Male | 340 | 949 | |
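The chi-square comparison behind this table can be sketched from the counts alone. The snippet below is a minimal illustration, assuming a Pearson chi-square test of independence on each 2×2 table without a continuity correction; whether the authors applied a correction is not stated here, so the resulting values should not be read as the reported p-values. The function name `chi_square_2x2` is introduced only for this example.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table (no continuity correction)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-square survival function is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Rows are categories; columns are (Non-Anxiety, Anxiety), copied from the table.
age = [[161, 816], [237, 677]]
gender = [[58, 544], [340, 949]]

for name, table in (("Age", age), ("Gender", gender)):
    chi2, p = chi_square_2x2(table)
    print(f"{name}: chi2 = {chi2:.2f}, p = {p:.3g}")
```

The closed-form p-value holds only for one degree of freedom, which is the case for a 2×2 table; larger tables would need a general chi-square survival function.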

| Model | Acc | Sen | Sp | F1-Score | PPV | NPV | AUC |
|---|---|---|---|---|---|---|---|
| k-NN | 0.71 ± 0.03 | 0.88 ± 0.06 | 0.20 ± 0.06 | 0.82 ± 0.02 | 0.78 ± 0.07 | 0.38 ± 0.24 | 0.56 ± 0.08 |
| LR | 0.98 ± 0.04 | 1.00 ± 0 | 0.84 ± 0.23 | 0.99 ± 0.02 | 0.97 ± 0.04 | 1.00 ± 0 | 1.00 ± 0 |
| SVM | 0.89 ± 0.07 | 1.00 ± 0 | 0.53 ± 0.15 | 0.93 ± 0.05 | 0.87 ± 0.08 | 0.99 ± 0.01 | 0.92 ± 0.10 |
| GNB | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 |
| RF | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0.01 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 |
| XGB | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 |
| CB | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 |
| LGB | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 | 1.00 ± 0 |
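For readers less familiar with the column headings, the sketch below shows how accuracy (Acc), sensitivity (Sen), specificity (Sp), F1-score, positive predictive value (PPV), and negative predictive value (NPV) follow from the four cells of a binary confusion matrix, taking the anxiety class as positive. The counts are hypothetical and chosen only for illustration; AUC is omitted because it depends on ranked scores rather than a single confusion matrix.

```python
def classification_metrics(tp, fp, tn, fn):
    """Derive threshold-based metrics from binary confusion-matrix counts.

    Assumes every denominator is non-zero; a production version would guard
    against empty classes or empty predictions.
    """
    sensitivity = tp / (tp + fn)          # true positive rate (recall)
    specificity = tn / (tn + fp)          # true negative rate
    ppv = tp / (tp + fp)                  # precision
    npv = tn / (tn + fn)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    f1 = 2 * ppv * sensitivity / (ppv + sensitivity)  # harmonic mean of PPV and Sen
    return {
        "Acc": accuracy,
        "Sen": sensitivity,
        "Sp": specificity,
        "F1": f1,
        "PPV": ppv,
        "NPV": npv,
    }


# Hypothetical counts, not taken from the study.
metrics = classification_metrics(tp=8, fp=7, tn=3, fn=2)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

With a majority positive class, sensitivity and F1 can stay high while specificity is low, which is why the table reports specificity and NPV alongside accuracy.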

| Target Confidence (1 − α) | Avg. Set Size | Empirical Coverage |
|---|---|---|
| 0.95 | 0.900 | 0.701 |
| 0.85 | 0.603 | 0.484 |
| 0.75 | 0.442 | 0.332 |
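The two quantities in this table can be illustrated with a generic split-conformal sketch. It assumes a nonconformity score of one minus the predicted class probability and a finite-sample-corrected quantile on a calibration set; this is a common textbook formulation and is not claimed to be the exact procedure or library configuration used in the study. All function names and numbers below are hypothetical.

```python
import math


def conformal_threshold(cal_scores, alpha):
    """Finite-sample-corrected (1 - alpha) quantile of the calibration scores."""
    n = len(cal_scores)
    rank = math.ceil((n + 1) * (1 - alpha))  # 1-based rank in the sorted scores
    if rank > n:
        return float("inf")  # too few calibration points: keep every label
    return sorted(cal_scores)[rank - 1]


def prediction_set(probs, qhat):
    """Keep every label whose score 1 - p does not exceed the threshold."""
    return {label for label, p in enumerate(probs) if 1.0 - p <= qhat}


def evaluate(pred_sets, y_true):
    """Average prediction-set size and fraction of sets containing the true label."""
    n = len(y_true)
    avg_size = sum(len(s) for s in pred_sets) / n
    coverage = sum(y in s for s, y in zip(pred_sets, y_true)) / n
    return avg_size, coverage


# Hypothetical calibration scores (1 - probability of the true class).
cal_scores = [0.05, 0.1, 0.2, 0.3, 0.4, 0.6, 0.9]
qhat = conformal_threshold(cal_scores, alpha=0.25)

# Hypothetical test-time class probabilities and labels.
test_probs = [[0.7, 0.3], [0.5, 0.5], [0.2, 0.8]]
y_test = [0, 1, 0]
sets = [prediction_set(p, qhat) for p in test_probs]
avg_size, coverage = evaluate(sets, y_test)
print(sets, avg_size, coverage)
```

Under this formulation a prediction set may be empty when no label clears the threshold, which is how an average set size below one can arise.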

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Velu, A.; Ramesh, J.; Ahmed, A.; Ganguly, S.; Aburukba, R.; Sagahyroon, A.; Aloul, F. Integrating Fitbit Wearables and Self-Reported Surveys for Machine Learning-Based State–Trait Anxiety Prediction. Appl. Sci. 2025, 15, 10519. https://doi.org/10.3390/app151910519