Efficient Sparse Quasi-Newton Algorithm for Multi-Physics Coupled Acid Fracturing Model in Carbonate Reservoirs

Abstract

1. Introduction

2. Mathematical Model and Discretization

2.1. Hydrodynamic Model of Carbonate Acid Fracturing

2.1.1. Mass Conservation Equation

2.1.2. Momentum Conservation Equation

2.1.3. Acid Transport Equation

2.2. Finite Volume Method Discretization

2.2.1. Discretization of the Mass Conservation Equation

2.2.2. Discretization of the Momentum Conservation Equation

3. Sparse Quasi-Newton Methods

- (1)

- The nonlinear mapping is continuously differentiable in an open convex set.

- (2)

- There exists a solution in this set at which the Jacobian is nonsingular.

- There exists a constant such that the Jacobian satisfies a Lipschitz condition at this solution.

3.1. Sparse Quasi-Newton Update Methods

3.2. Computation of the Step Length

3.3. Sparse Quasi-Newton Algorithm

- Step 1: Given an initial point, a symmetric positive definite initial matrix, line search parameters, and a convergence tolerance, initialize the iteration counter.

- Step 2: Compute the residual at the current iterate. If the convergence criterion is satisfied, stop; otherwise, go to Step 3.

- Step 3: Compute the search direction via (13).

- Step 4: Compute the step size via (23)–(25).

- Step 5: Update the iterate using (12).

- Step 6: Update the quasi-Newton matrix via (21), increment the iteration counter, and return to Step 2.
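The six steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation. The test system (Broyden's tridiagonal function), the scaled-identity initial matrix, the backtracking rule standing in for (23)–(25), and all function names are hypothetical choices made for this example. The matrix update is the classical row-wise Schubert form, which may differ in detail from the paper's update (21). Dense storage is used for readability only.

```python
import numpy as np


def broyden_tridiagonal(x):
    """Illustrative test system (an assumption, not the acid fracturing model)."""
    xp = np.concatenate(([0.0], x, [0.0]))  # zero values outside the domain
    return (3.0 - 2.0 * x) * x - xp[:-2] - 2.0 * xp[2:] + 1.0


def tridiagonal_pattern(n):
    """Boolean mask of the entries allowed to be nonzero in the matrix."""
    idx = np.arange(n)
    return np.abs(idx[:, None] - idx[None, :]) <= 1


def schubert_update(B, s, y, pattern):
    """Row-wise sparse Broyden (Schubert) update that keeps the pattern."""
    S = pattern * s                       # row i: s restricted to row i's pattern
    denom = np.einsum("ij,ij->i", S, S)   # squared norm of each restricted step
    resid = y - B @ s                     # secant residual per row
    scale = np.divide(resid, denom, out=np.zeros_like(resid), where=denom > 0)
    return B + scale[:, None] * S


def sparse_quasi_newton(F, x0, B0, pattern, tol=1e-10, max_iter=100):
    x, B = x0.astype(float).copy(), B0.astype(float).copy()   # Step 1
    Fx = F(x)
    for k in range(max_iter):
        norm_Fx = np.linalg.norm(Fx)
        if norm_Fx <= tol:                                     # Step 2
            return x, k
        d = np.linalg.solve(B, -Fx)                            # Step 3
        alpha = 1.0                                            # Step 4 (assumed rule)
        F_new = F(x + alpha * d)
        while np.linalg.norm(F_new) > (1.0 - 1e-4 * alpha) * norm_Fx and alpha > 1e-6:
            alpha *= 0.5
            F_new = F(x + alpha * d)
        s = alpha * d
        x = x + s                                              # Step 5
        B = schubert_update(B, s, F_new - Fx, pattern)         # Step 6
        Fx = F_new
    return x, max_iter


n = 50
pattern = tridiagonal_pattern(n)
x, iters = sparse_quasi_newton(
    broyden_tridiagonal, -np.ones(n), 7.0 * np.eye(n), pattern
)
print(iters, np.linalg.norm(broyden_tridiagonal(x)))
```

Each row of the updated matrix satisfies the secant condition while entries outside the sparsity pattern stay zero. A production code would store only the nonzero entries and replace the dense solve with a sparse factorization or an iterative solver.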

3.4. Parallel Computation of Quasi-Newton Matrix and GPU Acceleration Techniques

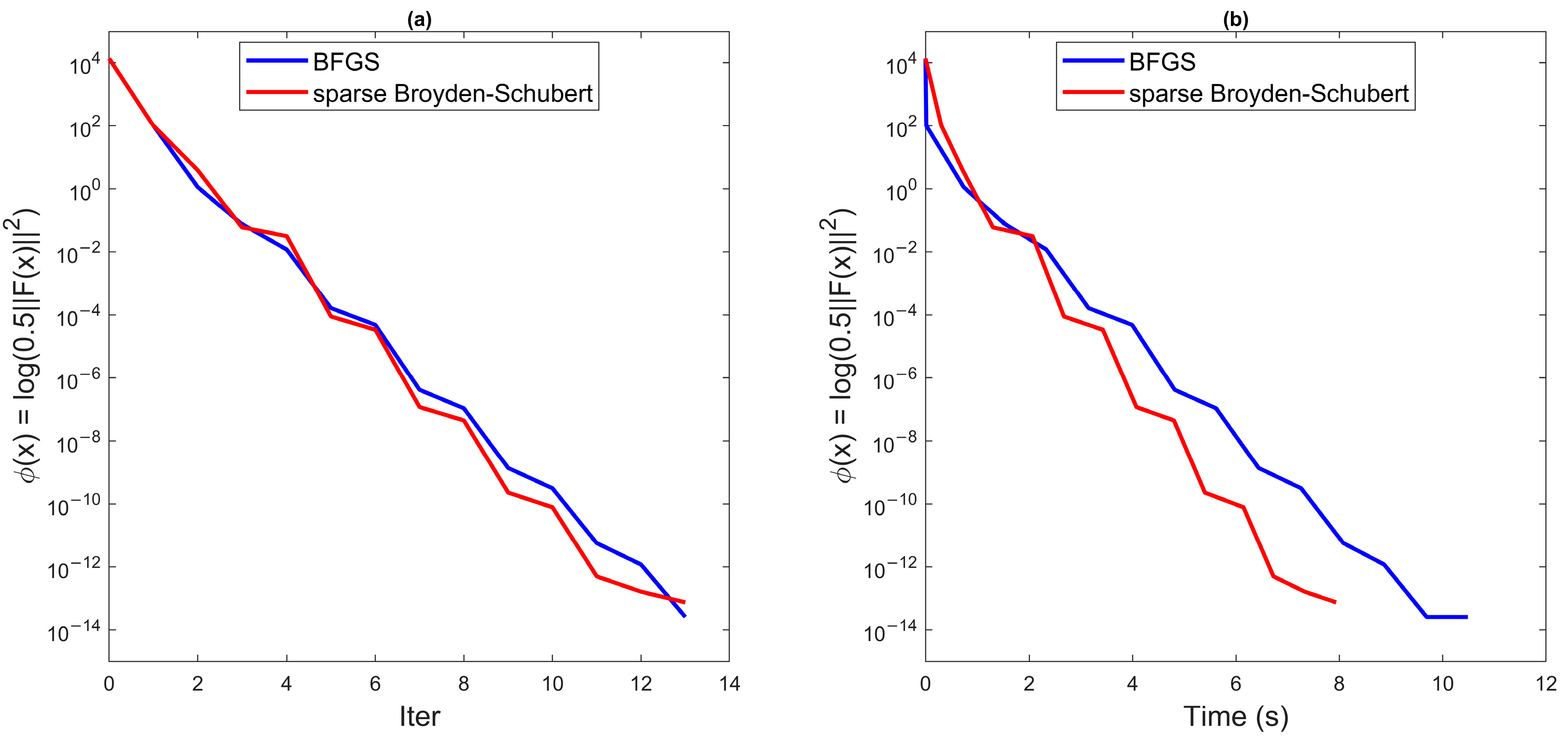

4. Numerical Experiments

4.1. Acid Fracturing Coupling Model

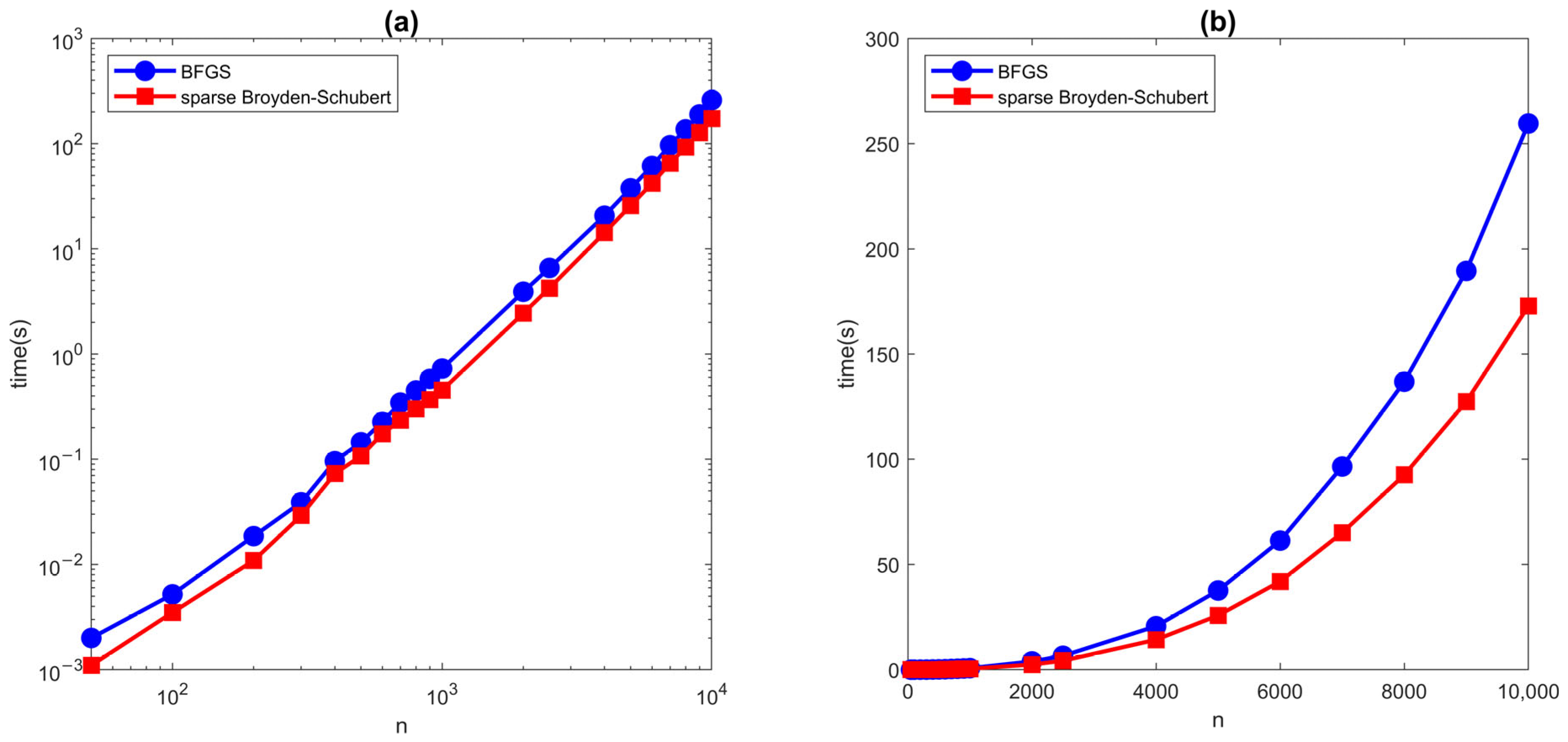

4.2. Nonlinear Heat Conduction Multi-Physics Coupling Model

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dim | BFGS Iterations | BFGS Tcpu (s) | Sparse Broyden–Schubert Iterations | Sparse Broyden–Schubert Tcpu (s) |
|---|---|---|---|---|
| 50 | 14 | 0.0020 | 13 | 0.0011 |
| 100 | 15 | 0.0052 | 13 | 0.0035 |
| 200 | 15 | 0.0186 | 13 | 0.0109 |
| 300 | 15 | 0.0390 | 13 | 0.0292 |
| 400 | 15 | 0.0960 | 13 | 0.0731 |
| 500 | 15 | 0.1450 | 13 | 0.1074 |
| 600 | 15 | 0.2264 | 13 | 0.1743 |
| 700 | 15 | 0.3456 | 13 | 0.2345 |
| 800 | 15 | 0.4504 | 13 | 0.3022 |
| 900 | 15 | 0.5803 | 13 | 0.3694 |
| 1000 | 15 | 0.7275 | 13 | 0.4533 |
| 2000 | 15 | 3.9113 | 13 | 2.4461 |
| 2500 | 15 | 6.5859 | 13 | 4.2408 |
| 4000 | 14 | 20.6561 | 13 | 14.2332 |
| 5000 | 14 | 37.6264 | 13 | 25.7713 |
| 6000 | 14 | 61.3392 | 13 | 41.9309 |
| 7000 | 14 | 96.5590 | 13 | 65.1102 |
| 8000 | 14 | 136.8498 | 13 | 92.5859 |
| 9000 | 14 | 189.5147 | 13 | 127.4359 |
| 10,000 | 14 | 259.5736 | 13 | 172.7991 |
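To read the timing columns above as a relative saving, the ratio of BFGS time to sparse Broyden–Schubert time can be computed directly. The short sketch below does this for three rows copied from the table. The choice of rows and the variable names are illustrative assumptions.

```python
# (dimension, BFGS Tcpu in s, sparse Broyden-Schubert Tcpu in s),
# copied from the table above
rows = [
    (50, 0.0020, 0.0011),
    (1000, 0.7275, 0.4533),
    (10000, 259.5736, 172.7991),
]

# Ratio > 1 means the sparse method needed less CPU time than BFGS.
speedup = {dim: t_bfgs / t_sparse for dim, t_bfgs, t_sparse in rows}

for dim, ratio in speedup.items():
    print(f"n = {dim:>6}: BFGS / sparse time ratio = {ratio:.2f}")
```

For these rows the ratio stays between about 1.5 and 1.8, so the sparse update saves roughly a third or more of the CPU time in this experiment.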

| Dim | Method | | | | |
|---|---|---|---|---|---|
| 50 | BFGS | 21.3780 | 2.0820 × 10⁻⁷ | 27 | 0.6830 |
| 50 | Broyden–Schubert | 21.3780 | 4.1610 × 10⁻⁸ | 27 | 0.7430 |
| 100 | BFGS | 30.1160 | 1.5070 × 10⁻⁷ | 29 | 0.6590 |
| 100 | Broyden–Schubert | 30.1160 | 3.9490 × 10⁻⁸ | 27 | 0.7570 |
| 200 | BFGS | 42.5090 | 1.6970 × 10⁻⁷ | 29 | 0.6670 |
| 200 | Broyden–Schubert | 42.5090 | 3.7350 × 10⁻⁸ | 27 | 0.7720 |
| 300 | BFGS | 52.0290 | 1.7890 × 10⁻⁷ | 29 | 0.6720 |
| 300 | Broyden–Schubert | 52.0290 | 3.6850 × 10⁻⁸ | 27 | 0.7800 |
| 400 | BFGS | 60.0580 | 1.8650 × 10⁻⁷ | 29 | 0.6760 |
| 400 | Broyden–Schubert | 60.0580 | 3.7110 × 10⁻⁸ | 27 | 0.7850 |
| 500 | BFGS | 67.1340 | 1.9120 × 10⁻⁷ | 29 | 0.6790 |
| 500 | Broyden–Schubert | 67.1340 | 3.7730 × 10⁻⁸ | 27 | 0.7890 |
| 600 | BFGS | 73.5320 | 1.9330 × 10⁻⁷ | 29 | 0.6810 |
| 600 | Broyden–Schubert | 73.5320 | 3.8510 × 10⁻⁸ | 27 | 0.7910 |
| 700 | BFGS | 79.4170 | 1.9340 × 10⁻⁷ | 29 | 0.6840 |
| 700 | Broyden–Schubert | 79.4170 | 3.9340 × 10⁻⁸ | 27 | 0.7940 |
| 800 | BFGS | 84.8940 | 1.9220 × 10⁻⁷ | 29 | 0.6860 |
| 800 | Broyden–Schubert | 84.8940 | 4.0170 × 10⁻⁸ | 27 | 0.7950 |
| 900 | BFGS | 90.0390 | 1.9010 × 10⁻⁷ | 29 | 0.6890 |
| 900 | Broyden–Schubert | 90.0390 | 4.0960 × 10⁻⁸ | 27 | 0.7970 |
| 1000 | BFGS | 94.9050 | 1.8740 × 10⁻⁷ | 29 | 0.6910 |
| 1000 | Broyden–Schubert | 94.9050 | 4.1700 × 10⁻⁸ | 27 | 0.7980 |
| 2000 | BFGS | 134.1900 | 1.4810 × 10⁻⁷ | 29 | 0.7110 |
| 2000 | Broyden–Schubert | 134.1900 | 4.6820 × 10⁻⁸ | 27 | 0.8070 |
| 2500 | BFGS | 150.0230 | 1.2660 × 10⁻⁷ | 29 | 0.7200 |
| 2500 | Broyden–Schubert | 150.0230 | 4.8330 × 10⁻⁸ | 27 | 0.8090 |
| 4000 | BFGS | 189.7550 | 1.5590 × 10⁻⁷ | 27 | 0.7750 |
| 4000 | Broyden–Schubert | 189.7550 | 5.1050 × 10⁻⁸ | 27 | 0.8160 |
| 5000 | BFGS | 212.1490 | 1.1350 × 10⁻⁷ | 27 | 0.7910 |
| 5000 | Broyden–Schubert | 212.1490 | 5.2090 × 10⁻⁸ | 27 | 0.8200 |
| 6000 | BFGS | 232.3940 | 9.3630 × 10⁻⁸ | 27 | 0.8010 |
| 6000 | Broyden–Schubert | 232.3940 | 5.2830 × 10⁻⁸ | 27 | 0.8220 |
| 7000 | BFGS | 251.0120 | 8.4680 × 10⁻⁸ | 27 | 0.8080 |
| 7000 | Broyden–Schubert | 251.0120 | 5.3380 × 10⁻⁸ | 27 | 0.8250 |
| 8000 | BFGS | 268.3410 | 8.0460 × 10⁻⁸ | 27 | 0.8120 |
| 8000 | Broyden–Schubert | 268.3410 | 5.3810 × 10⁻⁸ | 27 | 0.8270 |
| 9000 | BFGS | 284.6170 | 7.8430 × 10⁻⁸ | 27 | 0.8150 |
| 9000 | Broyden–Schubert | 284.6170 | 5.4140 × 10⁻⁸ | 27 | 0.8290 |
| 10,000 | BFGS | 300.0120 | 7.7540 × 10⁻⁸ | 27 | 0.8180 |
| 10,000 | Broyden–Schubert | 300.0120 | 5.4420 × 10⁻⁸ | 27 | 0.8310 |

| Dim | BFGS Iterations | BFGS Tcpu (s) | Sparse Broyden–Schubert Iterations | Sparse Broyden–Schubert Tcpu (s) |
|---|---|---|---|---|
| 500 | 6 | 0.0124 | 3 | 0.0076 |
| 1000 | 6 | 0.0544 | 3 | 0.0349 |
| 2000 | 6 | 0.2532 | 4 | 0.2096 |
| 2500 | 6 | 0.4003 | 4 | 0.3378 |
| 4000 | 6 | 1.2043 | 4 | 1.0850 |
| 5000 | 7 | 2.4818 | 4 | 1.8392 |
| 6000 | 7 | 3.7230 | 4 | 2.8919 |
| 7500 | 7 | 5.4478 | 4 | 4.3431 |
| 8000 | 7 | 7.6342 | 4 | 6.1400 |
| 9000 | 7 | 10.0049 | 4 | 8.1452 |
| 10,000 | 7 | 13.4487 | 4 | 10.7674 |
| 20,000 | 7 | 81.1970 | 4 | 69.0125 |
| 30,000 | 7 | 275.0223 | 4 | 221.1731 |
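One way to summarize how the CPU times above grow with problem size is a two-point estimate of the exponent p in a power law Tcpu ∝ nᵖ. The sketch below applies it to the 10,000 and 30,000 rows of the table. The power-law model, the choice of rows, and the helper name `scaling_exponent` are assumptions made for illustration, and a two-point estimate is only a rough indicator.

```python
import math


def scaling_exponent(n1, t1, n2, t2):
    """Two-point estimate of p in t ~ n**p (hypothetical helper)."""
    return math.log(t2 / t1) / math.log(n2 / n1)


# Timings in seconds copied from the 10,000 and 30,000 rows above.
p_bfgs = scaling_exponent(10_000, 13.4487, 30_000, 275.0223)
p_sparse = scaling_exponent(10_000, 10.7674, 30_000, 221.1731)

print(f"BFGS exponent:                    {p_bfgs:.2f}")
print(f"Sparse Broyden-Schubert exponent: {p_sparse:.2f}")
```

Both estimates come out at roughly 2.7 to 2.8. Over this size range the two methods therefore scale similarly, and the sparse variant's advantage appears as a smaller constant factor.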

| Dim | Method | | | | |
|---|---|---|---|---|---|
| 500 | BFGS | 0.2630 | 6.279 × 10⁻⁶ | 11 | 0.968 |
| 500 | Broyden–Schubert | 0.2630 | 1.163 × 10⁻⁵ | 7 | 1.433 |
| 1000 | BFGS | 0.3720 | 8.932 × 10⁻⁶ | 11 | 0.967 |
| 1000 | Broyden–Schubert | 0.3720 | 1.568 × 10⁻⁵ | 7 | 1.439 |
| 2000 | BFGS | 0.5270 | 1.267 × 10⁻⁵ | 11 | 0.967 |
| 2000 | Broyden–Schubert | 0.5270 | 5.633 × 10⁻⁷ | 9 | 1.528 |
| 2500 | BFGS | 0.5890 | 1.417 × 10⁻⁵ | 11 | 0.967 |
| 2500 | Broyden–Schubert | 0.5890 | 5.657 × 10⁻⁷ | 9 | 1.540 |
| 4000 | BFGS | 0.7450 | 1.794 × 10⁻⁵ | 11 | 0.967 |
| 4000 | Broyden–Schubert | 0.7450 | 5.726 × 10⁻⁷ | 9 | 1.564 |
| 5000 | BFGS | 0.8330 | 1.8740 × 10⁻⁷ | 13 | 0.971 |
| 5000 | Broyden–Schubert | 0.8330 | 5.771 × 10⁻⁷ | 9 | 1.576 |
| 6000 | BFGS | 0.9120 | 3.008 × 10⁻⁶ | 13 | 0.971 |
| 6000 | Broyden–Schubert | 0.9120 | 5.814 × 10⁻⁷ | 9 | 1.585 |
| 7000 | BFGS | 0.9850 | 3.250 × 10⁻⁶ | 13 | 0.971 |
| 7000 | Broyden–Schubert | 0.9850 | 5.858 × 10⁻⁷ | 9 | 1.593 |
| 8000 | BFGS | 1.0530 | 3.474 × 10⁻⁶ | 13 | 0.971 |
| 8000 | Broyden–Schubert | 1.0530 | 5.901 × 10⁻⁷ | 9 | 1.599 |
| 9000 | BFGS | 1.1170 | 3.686 × 10⁻⁶ | 13 | 0.971 |
| 9000 | Broyden–Schubert | 1.1170 | 5.943 × 10⁻⁷ | 9 | 1.605 |
| 10,000 | BFGS | 1.1780 | 3.885 × 10⁻⁶ | 13 | 0.971 |
| 10,000 | Broyden–Schubert | 1.1780 | 5.986 × 10⁻⁷ | 9 | 1.610 |
| 20,000 | BFGS | 1.6650 | 5.496 × 10⁻⁶ | 13 | 0.971 |
| 20,000 | Broyden–Schubert | 1.6650 | 6.391 × 10⁻⁷ | 9 | 1.641 |
| 30,000 | BFGS | 2.0400 | 6.732 × 10⁻⁶ | 13 | 0.971 |
| 30,000 | Broyden–Schubert | 2.0400 | 6.772 × 10⁻⁷ | 9 | 1.658 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, M.; Chen, Z. Efficient Sparse Quasi-Newton Algorithm for Multi-Physics Coupled Acid Fracturing Model in Carbonate Reservoirs. Appl. Sci. 2025, 15, 10436. https://doi.org/10.3390/app151910436
