1. Introduction

In recent years, anomaly detection in digital environments has become a crucial research topic [1,2,3]. Anomalies such as spam messages and fake news can lead to serious issues, including breaches of privacy, social disruption, and the degradation of information reliability. While various approaches have been explored to address these challenges, traditional anomaly detection models are often constrained by their need to be specifically trained for individual tasks. This requirement consumes significant time and resources and limits their flexibility in addressing a diverse range of domains and emerging problems.

In this context, Attention mechanisms [4] and Transformer-based Large Language Models [5,6,7,8] (LLMs) have opened new possibilities. In particular, the application of Prompt Engineering [9] in LLMs holds potential for achieving superior performance and efficiency compared to traditional anomaly detection techniques. Prompt Engineering optimizes input data to enable LLMs to better understand complex problems and generate appropriate responses, thereby reducing the need for extensive task-specific training. Moreover, since Prompt Engineering does not require separate training processes for specific tasks, it significantly reduces the costs and time associated with training while offering greater flexibility and adaptability across a variety of tasks and scenarios. Although research on utilizing Prompt Engineering for anomaly detection is still in its early stages, its diverse implementations invite a survey-based analysis to identify best practices and emerging trends.

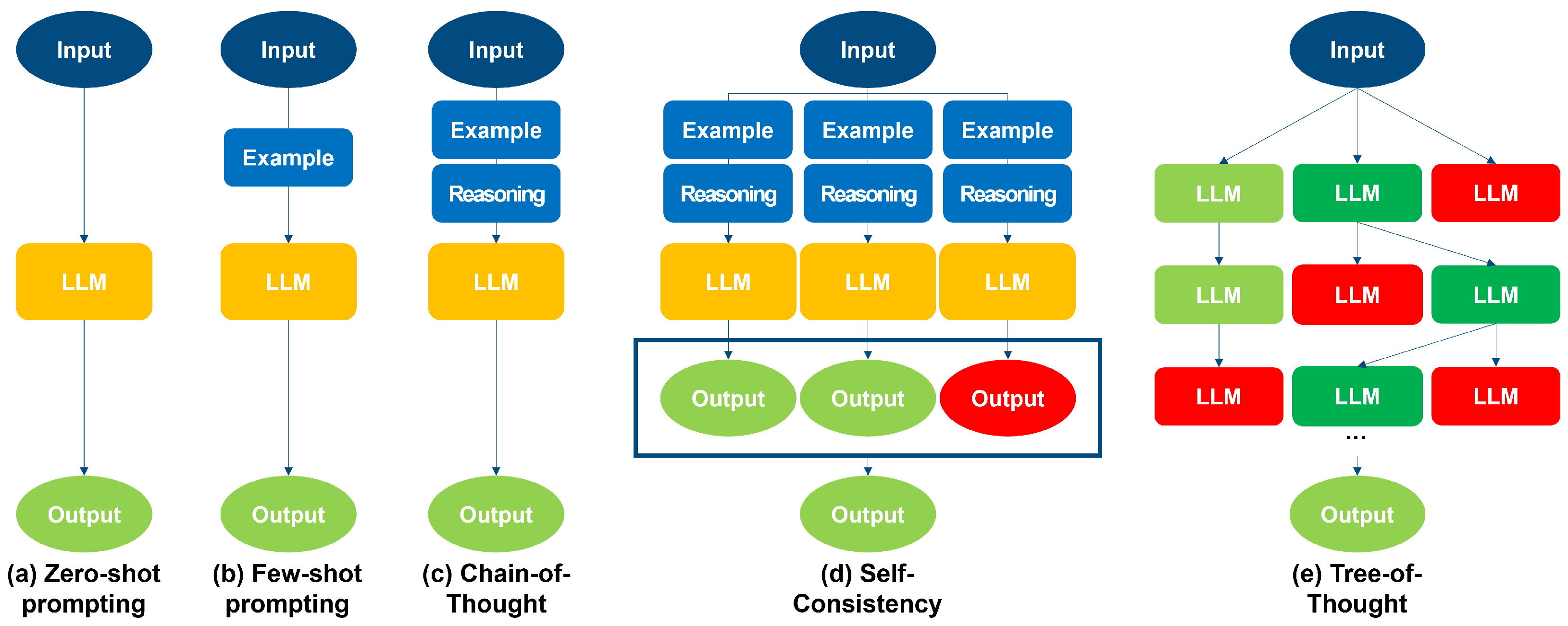

This paper provides a comprehensive survey of how Prompt Engineering techniques can enhance anomaly detection performance, particularly in the context of spam messages and fake news. Specifically, we compare and analyze various Prompt Engineering techniques, such as Zero-shot prompting [10], Few-shot prompting [6,11], Chain-of-Thought (CoT) prompting [12], Self-Consistency (SC) [13], and Tree-of-Thought (ToT) prompting [14,15,16], to evaluate how each technique contributes to anomaly detection. In addition, we provide a brief overview of advanced Prompt Engineering methods applicable to anomaly detection, including Self-Refine [17], Retrieval-Augmented Generation [18], and Judgment of Thought [19], which have the potential to further refine detection accuracy and robustness. Through this analysis, we aim to identify the potential and limitations of Prompt-Engineering-based anomaly detection and suggest directions for future research.

This study makes several significant contributions to the field of anomaly detection using Prompt Engineering as a transformative paradigm. First, by synthesizing and critically examining a wide range of Prompt Engineering techniques, it offers valuable guidance for researchers in selecting the most appropriate methods for specific anomaly detection scenarios. Second, by highlighting emerging advanced approaches, the survey broadens the applicability of generative AI beyond traditional text-based anomaly detection. Third, it lays a foundation for future research by identifying key challenges and potential improvements, thereby guiding the development of next-generation AI technologies for more effective anomaly detection.

3. Prompt Design for Anomaly Detection: Exploring Its Effectiveness

In this section, we describe how prompts were designed for each Prompt-Engineering method for our experiments. The prompts were crafted to align with the characteristics and objectives of each methodology, aiming to maximize their effectiveness in anomaly detection. The content within the angle brackets (<>) varies depending on the task (e.g., spam detection, fake news detection, harmful comment detection). All user prompts and outputs are as follows:

Zero-shot Prompting involves providing the model with a task description and generating responses without any additional task-specific training. For anomaly detection, the zero-shot prompt is designed to enable the model to identify anomalies in a general context. This prompt guides the model to determine whether a sentence is spam based on its characteristics and context. The strength of zero-shot prompting lies in the model’s reliance on pre-existing knowledge, allowing it to utilize a broad domain of knowledge. Our zero-shot prompt design for anomaly detection is as follows:

Few-shot Prompting provides the model with a few examples to guide it in generating the correct responses. This approach is advantageous for enhancing the model’s accuracy by providing context and patterns. By providing specific examples, the model can better understand the task and improve detection accuracy. Our few-shot prompt design for anomaly detection is as follows:

System prompt: “You are a helpful <task> detection expert. Look at the given examples, analyze the given text, and respond with “<anomaly>” if the input is an anomaly or “<normal>” if it is normal.”

Example:

<example text>, Classification: <anomaly>/<normal>

<example text>, Classification: <anomaly>/<normal>

…
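As an illustration, the few-shot template above could be filled in programmatically along the following lines. This is a minimal sketch, not the code used in the experiments: the function name `build_few_shot_messages`, the chat-style `role`/`content` message layout, and the trailing `Input:` line that carries the text to classify are all assumptions made for this example.

```python
from typing import Dict, List, Tuple


def build_few_shot_messages(
    task: str,
    anomaly_label: str,
    normal_label: str,
    examples: List[Tuple[str, str]],
    input_text: str,
) -> List[Dict[str, str]]:
    """Fill the few-shot template and return chat-style messages.

    `examples` holds (example text, label) pairs. The "Input:" suffix is an
    assumed layout; the template in the text does not specify it.
    """
    system_prompt = (
        f"You are a helpful {task} detection expert. "
        "Look at the given examples, analyze the given text, and respond with "
        f'"{anomaly_label}" if the input is an anomaly or '
        f'"{normal_label}" if it is normal.'
    )
    # One line per demonstration, in the "<example text>, Classification: <label>" form.
    example_lines = [f"{text}, Classification: {label}" for text, label in examples]
    user_prompt = "Example:\n" + "\n".join(example_lines) + f"\n\nInput: {input_text}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


# Usage with two made-up demonstrations (not taken from the datasets).
messages = build_few_shot_messages(
    task="spam",
    anomaly_label="spam",
    normal_label="ham",
    examples=[("Win a prize now", "spam"), ("See you at 5", "ham")],
    input_text="Call me later",
)
```

Keeping the demonstrations as plain (text, label) pairs makes it straightforward to vary the number of shots without touching the template itself.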

CoT prompting encourages the model to think through problems step-by-step. This method is useful for tasks requiring logical reasoning and deep analysis. This prompt encourages the model to break down the analysis process into steps, enabling more accurate anomaly detection. Our CoT prompt design for anomaly detection is as follows:

System prompt: “You are a helpful <task> detection expert. Look at the given examples and reasoning, analyze the given text, and respond with “<anomaly>” if the input is an anomaly or “<normal>” if it is normal.”

Example:

<example text>, Classification: <anomaly>/<normal> Reasoning: <reasoning>

<example text>, Classification: <anomaly>/<normal> Reasoning: <reasoning>

…

SC improves reliability by generating multiple responses and selecting the most consistent one. This method reduces the impact of random errors and inconsistencies, allowing the model to provide a more trustworthy final response. The design for SC in anomaly detection uses the same prompt as CoT, repeated multiple times, and selects the most consistent response as the final output.
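The selection step of SC can be sketched as a simple majority vote over repeated samples. The sketch below is illustrative only: `classify_once` is a hypothetical callable standing in for one CoT-prompted model call that returns a label string, and the lowercase normalization and tie-breaking rule are assumptions rather than details given in the text.

```python
from collections import Counter
from typing import Callable, List


def self_consistent_label(
    classify_once: Callable[[str], str],
    text: str,
    n_responses: int = 3,
) -> str:
    """Sample the classifier n_responses times and return the majority label."""
    if n_responses < 1:
        raise ValueError("n_responses must be at least 1")
    # Normalize whitespace and case so that "Spam " and "spam" count as one vote.
    votes: List[str] = [classify_once(text).strip().lower() for _ in range(n_responses)]
    # most_common(1) returns the most frequent label; on a tie, the label
    # that was encountered first wins.
    return Counter(votes).most_common(1)[0][0]


# Usage with a deterministic stub in place of a real model call.
canned = iter(["spam", "ham", "Spam "])
final_label = self_consistent_label(lambda _text: next(canned), "Free entry!", n_responses=3)
```

An odd number of responses avoids ties in a two-class setting, which matches the response counts of 3, 5, and 7 used in this study.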

ToT prompting is a method that creates a tree structure to explore various reasoning paths. This method is particularly useful for tasks that require complex decision-making processes. To apply ToT to anomaly detection, we adopted the single-prompt ToT approach described by Hulbert et al. [16]. This approach guides the model to explore multiple reasoning paths, enhancing anomaly detection capabilities through comprehensive analysis. By designing prompts tailored to highlight anomalous patterns or behaviors, Prompt Engineering is expected to improve the anomaly detection performance of LLMs. Our ToT prompt design for anomaly detection is as follows:

System prompt: “Imagine three different <task> detection experts are answering the given input. In the first step, all three experts write down their thoughts and then share them with the group. Then, all the experts move on to the next step and discuss. If any expert realizes a mistake at any point, that expert leaves. Continue the discussion until all three experts agree on the input. If all experts finish the discussion, output the final decision.”

4. Experiment

4.1. Experimental Setting

In this section, we describe the experimental setup designed to evaluate the effectiveness of various Prompt-Engineering techniques for anomaly detection. The primary focus of the experiment is anomaly detection in textual data, such as spam detection and fake news detection. For the experiments, we used publicly available datasets related to each anomaly detection task. For spam detection, we utilized the SMS Spam dataset (SMS) [26], which contains labeled spam and legitimate (non-spam) SMS messages. For fake news detection, we employed the Fake News Corpus dataset (Fake) [27], consisting of labeled fake and real news articles, and for toxic comment detection, we adopted the Toxic Comment dataset (Toxic) [28].

The experiments were conducted using state-of-the-art large language models based on the Transformer architecture, specifically GPT-3.5-turbo and GPT-4o (GPT-4omni). The model parameters were set to their default configurations: temperature and Top P at 1, and Frequency penalty and Presence penalty at 0. Prompts were designed according to the characteristics and goals of each Prompt-Engineering technique evaluated. The techniques assessed include Zero-shot prompts, which provide only the task description without additional training; Few-shot prompts, which provide the model with a few examples to guide correct responses; CoT prompts, which encourage the model to think step-by-step; SC prompts, which generate multiple responses and select the most consistent one; and ToT prompts, which explore various reasoning paths through a tree structure. The number of examples used in Few-shot prompting and CoT prompting was 10 for the SMS Spam and Toxic Comment datasets, and 4 for the Fake News Corpus dataset. The number of responses generated in SC was set to 3 for all datasets.
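For reproducibility, the settings described above can be collected in one place. The following is a sketch under stated assumptions: the keyword names follow the convention of the OpenAI Chat Completions API, the model identifier strings are assumed, and `build_request` is a hypothetical helper that only assembles request arguments without contacting any service.

```python
from typing import Any, Dict, List

# Decoding parameters as stated in the text (the API defaults).
DEFAULT_DECODING: Dict[str, float] = {
    "temperature": 1.0,
    "top_p": 1.0,
    "frequency_penalty": 0.0,
    "presence_penalty": 0.0,
}

# Number of in-context examples for Few-shot and CoT prompting, per dataset.
N_EXAMPLES: Dict[str, int] = {"SMS": 10, "Toxic": 10, "Fake": 4}

# Number of sampled responses for Self-Consistency (all datasets).
SC_RESPONSES: int = 3

# Assumed API identifiers for the two evaluated models.
MODELS: List[str] = ["gpt-3.5-turbo", "gpt-4o"]


def build_request(model: str, messages: List[Dict[str, str]]) -> Dict[str, Any]:
    """Assemble keyword arguments for a chat-completion style request."""
    return {"model": model, "messages": messages, **DEFAULT_DECODING}


# Usage: the resulting dict could be passed as keyword arguments to a client call.
request = build_request("gpt-4o", [{"role": "user", "content": "example input"}])
```

Because the temperature is left at 1, repeated calls can return different labels for the same input, which is the variation that SC aggregates over.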

The evaluation procedure consisted of four stages: prompt design, model execution, response collection, and performance evaluation. Specific prompts were designed for each anomaly detection task and technique, and these were used to run the GPT-3.5-turbo and GPT-4o models on the preprocessed datasets. The models’ responses to each input text were collected and compared against the labeled data to assess performance. The performance of each Prompt-Engineering technique was measured using accuracy and F1 score. Accuracy represents the proportion of instances where the model’s prediction matches the actual label, indicating how often the model makes correct predictions across the entire dataset. F1 score, the harmonic mean of precision and recall, is particularly important for imbalanced datasets, which are common in anomaly detection tasks. It evaluates the balance between how precisely the model identifies the positive class (precision) and how many of the actual positive instances it detects (recall). In tasks such as spam detection or fake news detection, where missing certain instances can lead to significant issues, the F1 score plays a crucial role in providing a comprehensive assessment of the model’s performance.
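The two metrics can be computed directly from the collected predictions. The sketch below is a plain-Python illustration rather than the evaluation code used in the study; the function name and the `positive` argument (the label treated as the positive class) are assumptions, since the text does not state which class was treated as positive.

```python
from typing import Sequence, Tuple


def accuracy_and_f1(
    y_true: Sequence[str],
    y_pred: Sequence[str],
    positive: str,
) -> Tuple[float, float]:
    """Return (accuracy, F1) for a binary labeling task."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if (precision + recall) else 0.0
    return accuracy, f1


# Usage on four made-up predictions: one true positive, one false positive,
# one false negative, and one true negative.
acc, f1 = accuracy_and_f1(
    ["spam", "ham", "ham", "spam"],
    ["spam", "ham", "spam", "ham"],
    positive="spam",
)
```

Because F1 ignores true negatives, it can differ noticeably from accuracy when the two classes are imbalanced, which is why both are reported.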

4.2. Experimental Results

In this section, we present the experimental results evaluating the effectiveness of various Prompt-Engineering techniques using the GPT-3.5-Turbo and GPT-4o models for tasks such as SMS spam detection, fake news detection, and toxic comment detection. The performance of each technique is assessed based on accuracy and F1 score. The summary of the performance of different Prompt-Engineering techniques across anomaly detection tasks is shown in Table 1. A comparative analysis of the results reveals that Prompt-Engineering techniques have the potential to significantly improve anomaly detection performance in both GPT-3.5-Turbo and GPT-4o models.

In the SMS Spam detection dataset, a comparison between prompt techniques reveals that the CoT and SC techniques were particularly effective in boosting performance. While both models achieved similar accuracy and F1 scores in Zero-shot and Few-shot learning, the application of CoT and SC techniques resulted in a significant performance increase, with GPT-4o achieving 96% accuracy and an F1 score of 0.98. In contrast, GPT-3.5-Turbo, despite showing improvements, still lagged slightly behind, with a 94% accuracy and an F1 score of 0.96 for the same techniques. This suggests that although both models benefited from CoT and SC, GPT-4o was able to leverage these techniques more efficiently, demonstrating a greater performance boost, particularly in tasks requiring complex reasoning.

In the Fake News detection dataset, the performance gap between prompt techniques was also noticeable. In Zero-shot and Few-shot learning, the differences between GPT-3.5-Turbo and GPT-4o were minimal. However, when CoT and SC techniques were applied, GPT-4o demonstrated superior performance, achieving 90% accuracy and an F1 score of 0.91 with CoT and 86%/0.87 with SC. GPT-4o also excelled in Few-shot learning, achieving 92% accuracy and an F1 score of 0.92, indicating that even with only a few in-context examples and no task-specific training, the model could deliver high-level performance. This suggests that CoT and SC techniques played a critical role in enhancing model performance in fake news detection tasks, with GPT-4o particularly benefiting from their application, resulting in more accurate predictions.

In the Toxic dataset, the differences between prompt techniques were even more pronounced. While GPT-4o already outperformed GPT-3.5-Turbo in Zero-shot and Few-shot learning, the performance gap widened significantly with the application of CoT and SC techniques. With CoT and SC, GPT-4o achieved 87% accuracy and an F1 score of 0.93, significantly outperforming GPT-3.5-Turbo’s 79% accuracy and 0.87 F1 score. This highlights GPT-4o’s ability to better handle complex reasoning tasks using CoT and SC techniques, particularly in tasks like toxic content detection. The SC technique, which generates multiple answers and selects the most consistent one, was especially effective in boosting performance in tasks requiring nuanced text analysis.

In contrast, the ToT (Tree-of-Thought) technique did not lead to performance improvements as significant as those of CoT and SC. In spam and fake news detection, the performance gains were less pronounced, with ToT showing more modest improvements primarily in the toxic dataset. While GPT-4o still outperformed GPT-3.5-Turbo with ToT, the substantial differences seen in CoT and SC were not replicated here.

In conclusion, CoT and SC techniques were instrumental in improving performance across both models, with GPT-4o showing the greatest benefit, especially in tasks requiring complex reasoning. While ToT led to more modest performance improvements, it still maintained consistent gains across datasets. These results highlight the importance of advanced prompt techniques in enhancing model performance, particularly in state-of-the-art models like GPT-4o.

To contextualize our findings, we compare them with reported state-of-the-art results from the literature. On the SMS Spam Collection dataset, traditional machine learning approaches such as SVM and ensemble methods have typically achieved 90–95% accuracy [29], which is comparable to our 96% accuracy with GPT-4o using reasoning-based prompting. In fake news detection, deep learning methods such as CNNs and LSTMs report accuracies in the 80–90% range [30], which aligns with our results using CoT and Few-shot prompting. For toxic comment detection, neural architectures like CNN-GRU models reach F1 scores of about 0.80–0.85 [31], consistent with the F1 0.87–0.93 achieved in our prompting-based experiments.

The superior performance of Chain-of-Thought (CoT) prompting compared to Few-shot prompting can be attributed to its reasoning process. By explicitly generating intermediate steps, CoT allows the model to consider contextual cues and logical consistency before reaching a decision. This step-by-step reasoning mitigates errors caused by surface-level similarities, which Few-shot prompting is more prone to. For example, in spam detection, Few-shot prompting occasionally misclassified benign messages containing promotional keywords, whereas CoT was able to reason about the overall intent and correctly classify them as non-spam.

Although our ablation study was limited to 30/8 examples for Few-shot prompting and 7 responses for Self-Consistency, the results suggest a clear saturation trend. Performance gains became marginal beyond 20/6 in Few-shot and 5 responses in SC, indicating that additional increases would likely yield diminishing returns. A more extensive investigation of these saturation points remains an important task for future research.

4.3. Ablation Study

In the ablation study, as summarized in Table 2, we explore the effect of varying the number of examples in Few-shot prompts and the number of responses generated in SC prompts on model performance. These experiments were conducted using the GPT-3.5-Turbo and GPT-4o models across tasks such as SMS spam detection, fake news detection, and toxic comment detection. The table illustrates how performance varied with changes in shot counts for Few-shot learning and the number of generated responses in the SC technique.

For Few-shot learning, we adjust the number of shots for each task with different configurations for each dataset (e.g., 10/4, 20/6, and 30/8). In the SMS spam detection task, increasing the number of shots from 10 to 30 led to a notable improvement in both models. GPT-4o, in particular, improved from 95% accuracy and 0.97 F1 score with 10 shots to 96% accuracy and 0.98 F1 score with 30 shots. Similarly, GPT-3.5-Turbo also showed a slight improvement, increasing from 92%/0.94 to 93%/0.96 under the same conditions. This suggests that while both models benefit from additional Few-shot examples, GPT-4o demonstrates a more consistent and significant performance boost as the number of examples increases.

In the fake news detection task, however, the impact of increasing the number of Few-shot examples is less pronounced. Note that for this dataset the 10-, 20-, and 30-shot configurations correspond to 4, 6, and 8 examples, respectively. GPT-4o’s performance peaked at 92% accuracy and 0.92 F1 score with 10 shots but slightly decreased as more shots were introduced, stabilizing at 88% accuracy and 0.89 F1 score with 30 shots. This could indicate that for certain tasks like fake news detection, adding more Few-shot examples does not always correlate with improved performance. GPT-3.5-Turbo followed a similar trend, reaching its highest performance with 20 shots (86% accuracy and 0.87 F1 score), but remaining stable even as the number of shots increased to 30.

In the toxic comment detection task, the trend diverges more clearly between the two models. GPT-4o exhibited a substantial improvement as the number of Few-shot examples increased, with its accuracy rising from 81% to 88% and its F1 score improving from 0.89 to 0.93 as shots increased from 10 to 30. On the other hand, GPT-3.5-Turbo showed mixed results. Although its performance improved initially from 67% accuracy and 0.79 F1 score to 84%/0.91 with 20 shots, it declined slightly when the shot count was increased to 30, indicating that the model might struggle with overfitting or noise when provided with too many examples.

The SC technique, which varies the number of generated responses (3, 5, and 7), had a more consistent impact on both models. In the SMS spam detection task, GPT-4o maintained its peak performance (96% accuracy and 0.98 F1 score) regardless of the number of responses generated, while GPT-3.5-Turbo’s performance also remained stable at 95%/0.97. This suggests that for simpler tasks like SMS spam detection, generating more responses in the SC technique has a diminishing return after a certain point.

In the fake news detection task, GPT-4o showed a slight performance increase with more SC responses, rising from 88%/0.89 with 5 responses to 90%/0.91 with 7 responses. This pattern was not observed in GPT-3.5-Turbo, which remained constant at 86%/0.87 across all response configurations. For more nuanced tasks like fake news detection, GPT-4o appears to benefit more from the additional consistency checks in the SC approach compared to GPT-3.5-Turbo.

For toxic comment detection, the SC technique yielded more significant results. GPT-4o maintained its high performance (87% accuracy and 0.93 F1 score) with 5 and 7 responses, while GPT-3.5-Turbo exhibited a slight improvement with 5 responses, achieving 82% accuracy and 0.89 F1 score. These results indicate that the SC technique is particularly effective for more complex tasks, with both models benefiting from generating more responses and ensuring higher consistency, though GPT-4o consistently outperforms GPT-3.5-Turbo across configurations.

In summary, the ablation study reveals that increasing the number of Few-shot examples and SC responses can lead to improved performance, but the extent of improvement depends on the task and model. GPT-4o generally shows more resilience and gains with additional Few-shot examples and SC responses, while GPT-3.5-Turbo tends to experience diminishing returns or even slight declines with higher shot counts. The SC technique is particularly effective for both models in complex tasks, but its impact varies depending on the task’s complexity and the model used.
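The configurations covered by the ablation can be enumerated as a small grid. This is only a sketch of the experimental design as described in the text: the model identifier strings, the dataset keys, and the `ablation_runs` generator are assumptions for illustration, and the Fake News shot counts of 4, 6, and 8 are read from the 10/4, 20/6, and 30/8 configurations.

```python
from itertools import product
from typing import Dict, Iterator, List, Tuple

MODELS: List[str] = ["gpt-3.5-turbo", "gpt-4o"]  # assumed API identifiers

# Few-shot example counts per dataset (SMS and Toxic: 10/20/30, Fake: 4/6/8).
FEW_SHOT_COUNTS: Dict[str, List[int]] = {
    "SMS": [10, 20, 30],
    "Toxic": [10, 20, 30],
    "Fake": [4, 6, 8],
}

# Number of sampled responses for Self-Consistency, shared by all datasets.
SC_RESPONSE_COUNTS: List[int] = [3, 5, 7]


def ablation_runs() -> Iterator[Tuple[str, str, str, int]]:
    """Yield (model, dataset, technique, setting) for every ablation run."""
    for model, dataset in product(MODELS, FEW_SHOT_COUNTS):
        for shots in FEW_SHOT_COUNTS[dataset]:
            yield (model, dataset, "few-shot", shots)
        for n_responses in SC_RESPONSE_COUNTS:
            yield (model, dataset, "self-consistency", n_responses)


# Usage: materialize the grid before scheduling the runs.
runs = list(ablation_runs())
```

With two models, three datasets, and three settings for each of the two techniques, the grid contains 36 runs in total.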

The results of this experiment demonstrate that adjusting the configuration of Few-shot and SC prompts can optimize model performance. Through a detailed analysis of each technique, we have closely examined how these adjustments influence the overall performance.

First, the analysis of the effect of the number of examples in Few-shot prompts reveals that the impact varies depending on the task. In the SMS spam detection task, both GPT-4o and GPT-3.5-Turbo showed steady improvements as the number of examples increased. Notably, GPT-4o’s performance continued to improve as the number of examples increased from 10 to 30, indicating that the model can better leverage additional examples to optimize its performance. In contrast, in the fake news detection task, increasing the number of examples led to a slight decline in performance. This suggests that for tasks like fake news detection, adding more examples does not necessarily correlate with improved performance. GPT-3.5-Turbo, for instance, saw little to no performance improvement beyond 20 shots, suggesting that exceeding a certain threshold of examples may not contribute to further gains and could potentially introduce noise or complexity that hinders performance.

In the toxic comment detection task, the trend diverged more clearly between the two models. GPT-4o exhibited substantial performance improvements as the number of Few-shot examples increased, with its accuracy and F1 score steadily rising until 30 shots. On the other hand, GPT-3.5-Turbo showed an initial improvement up to 20 examples, but its performance began to decline as the number of examples increased to 30. This suggests that an excessive number of examples may lead to overfitting or confusion in the model, particularly for GPT-3.5-Turbo, highlighting the importance of selecting an optimal number of examples for each task.

The analysis of the SC prompts showed that increasing the number of generated responses was especially effective for more complex tasks. In the SMS spam detection task, increasing the number of responses from 3 to 7 had little impact on performance for both models. However, for tasks such as fake news and toxic comment detection, the increase in responses resulted in noticeable performance gains. GPT-4o, in particular, exhibited significant improvements when the number of SC responses increased from 5 to 7, indicating that the SC technique helps the model produce more consistent and accurate outputs in complex tasks. In contrast, GPT-3.5-Turbo displayed more limited improvements in certain tasks, suggesting that its architecture may not fully capitalize on the benefits of the SC technique to the same extent as GPT-4o.

Overall, this study demonstrates that Few-shot and SC techniques are crucial for optimizing model performance, and their effectiveness varies depending on both the task and the model. GPT-4o, in particular, showed greater sensitivity to these techniques, resulting in more substantial performance gains, whereas GPT-3.5-Turbo exhibited more constrained improvements. These findings underscore the importance of fine-tuning advanced Prompt-Engineering techniques to maximize the performance of state-of-the-art models like GPT-4o, particularly in more complex tasks.

4.4. Limitation of Single Prompt ToT

The ToT prompt simulates a problem-solving process where multiple “experts” collaborate to reach a consensus decision. This method is designed to enhance the model’s reasoning capabilities by exploring multiple reasoning paths. However, experimental results indicated that the performance of ToT, particularly in spam detection tasks, was somewhat lower compared to other prompt techniques. To explain this in detail, we will examine an input example and its corresponding ToT-based output from Table 3.

The example illustrates a significant limitation of the Single Prompt ToT. In this case, the input message was actually a legitimate message, not spam, but the ToT process mistakenly classified it as spam. Several factors contributed to this misclassification. The experts in the ToT process primarily relied on specific keywords related to drug activities (“gram”, “eighth”, “second gram”). This reliance led to a biased interpretation of the message’s context, resulting in a false positive. Although ToT aims to strengthen reasoning by considering multiple perspectives, it can fall short in situations where a detailed understanding of context is crucial. The model’s experts failed to recognize that the terms could be used in a legitimate context.

Moreover, the collaborative nature of ToT can lead to consensus bias, where the experts reinforce the initial interpretation and do not sufficiently explore alternative explanations. In this example, all three experts quickly agreed on the drug-related interpretation, failing to consider other possibilities. The model’s experts automatically interpreted potentially cryptic language as an indicator of spam. However, in real-world applications, cryptic language can be used legitimately, and overgeneralization can lead to frequent misclassifications.

This example demonstrates that, while the ToT prompt attempts to leverage collaborative reasoning, it is vulnerable to biases and can misclassify messages when contextual cues are misinterpreted. Over-reliance on specific keywords and a lack of detailed understanding can result in false positive decisions. These findings suggest that the ToT prompt, in its current form, needs enhancements that improve its contextual understanding and mitigate consensus bias in order to classify messages more accurately.

Beyond the illustrative example provided, our observations indicate that the limitations of Tree-of-Thought (ToT) are not confined to isolated cases. In multiple instances, the simulated experts exhibited consensus bias, converging too quickly on an early interpretation without sufficient exploration of alternatives. We also observed keyword over-reliance, where certain trigger terms (e.g., “gram”, “deal”) led to misclassification even in benign contexts. These recurring patterns suggest that, while ToT aims to enhance reasoning through structured collaboration, in practice, it can amplify biases when contextual understanding is shallow. This explains its lower performance relative to CoT and Self-Consistency and highlights the need for improved mechanisms to diversify reasoning paths in future work.

5. Conclusions

In this study, we present a comprehensive survey of Prompt-Engineering techniques for anomaly detection. We review and synthesize insights from empirical evaluations using the GPT-3.5-Turbo and GPT-4o models, comparing methods such as Zero-shot prompting, Few-shot prompting, Chain-of-Thought prompting, Self-Consistency prompting, and Tree-of-Thought prompting. Additionally, we conducted an ablation study to investigate how variations in the number of examples in Few-shot prompting and the number of responses in Self-Consistency prompting influence performance.

Our survey not only aggregates performance trends from recent experiments but also contextualizes these findings within the broader landscape of anomaly detection research. By integrating empirical evidence with established literature, we highlight the strengths and limitations of each Prompt-Engineering strategy and identify key areas for further exploration.

We also acknowledge that our evaluation is limited to a small number of monolingual datasets, and broader validation across multilingual and multi-domain benchmarks remains an important direction for future research.

Future research should further investigate the scalability and adaptability of Prompt-Engineering techniques across diverse domains and anomaly detection tasks beyond text data. In particular, studies focusing on real-time data streams, large-scale datasets, and multilingual environments are essential to advance the field. Such efforts will be instrumental in elucidating how Prompt Engineering can effectively address practical challenges and guide the development of next-generation AI technologies.