A Hybrid Semi-Supervised Tri-Training Framework Integrating Traditional Classifiers and Lightweight CNN for High-Resolution Remote Sensing Image Classification

Abstract

1. Introduction

- Hybrid Tri-Training with Heterogeneous Classifiers: We construct a tri-training ensemble that integrates traditional classifiers with a lightweight CNN to exploit their complementary strengths. While traditional models are more robust under limited supervision, CNNs excel at capturing high-level semantic and spatial features. This heterogeneous design enhances decision diversity compared to homogeneous ensembles and improves robustness in label-scarce scenarios by jointly assigning pseudo-labels and iteratively refining predictions.

- Landscape Metric-Guided Relearning: To mitigate pseudo-label noise and enhance spatial consistency, we introduce a relearning module based on landscape metrics. These metrics quantify spatial composition and configuration at the regional level, enabling more effective capture of structural semantics and improved delineation of land cover boundaries.

- Uncertainty-Aware Pseudo-Label Selection (UPS): To further improve classification around class boundaries, we incorporate an uncertainty-aware strategy for pseudo-label refinement. By selectively including low-confidence samples near decision edges, the model achieves more accurate boundary recognition and greater overall robustness.

2. Methodology

2.1. Overview of the Proposed Framework

- Iterative Landscape Metric Relearning (ILMR) (Section 2.2): This module enhances spatial structural awareness by extracting landscape-level metrics—such as compactness and shape complexity—from intermediate classification maps. These metrics are then used to guide the generation and refinement of pseudo-labels, particularly in ambiguous or spatially fragmented regions.

- Uncertainty-Aware Pseudo-Label Selection (UPS) (Section 2.3): To mitigate confirmation bias and error accumulation, a dual-threshold mechanism is employed. It filters pseudo-labeled samples based on inter-classifier consensus and predictive uncertainty, so that only reliable samples are incorporated into the labeled dataset.

- Tri-Training with Hybrid Base Learners (Section 2.4): The above modules are integrated into an iterative tri-training scheme in which traditional classifiers and lightweight CNN models cooperatively refine predictions and expand the labeled set. In this way, the learners complement one another while exploiting spatial-structural information.

2.2. Iterative Landscape Metric Relearning (ILMR)

- Mean patch size (MPS): Reflects average patch area;

- Largest patch index (LPI): Quantifies the dominance of the largest patch;

- Edge density (ED): Indicates fragmented or boundary regions;

- Mean shape index (MSI): Measures the geometric complexity of patches;

- (See Appendix A for definitions and computations of all eight metrics.)
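The following Python sketch computes several of these class-level metrics (NP, MPS, AREA_SD, LPI, ED) for one class inside a window of a coarse classification map. It is a minimal sketch under stated assumptions, not the authors' implementation. Patches are treated as 4-connected, and areas are measured in pixels. ED counts only edges inside the window, so the window border is excluded. The helper names `label_patches`, `class_metrics`, and `window_features` are hypothetical. `window_features` uses the 9 × 9 window reported in Section 3.1 and pads the map at the image border.

```python
from collections import deque

import numpy as np


def label_patches(mask):
    """Label 4-connected patches of True pixels; returns (labels, number_of_patches)."""
    rows, cols = mask.shape
    labels = np.zeros((rows, cols), dtype=int)
    n = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r, c] and labels[r, c] == 0:
                n += 1
                labels[r, c] = n
                queue = deque([(r, c)])
                while queue:  # breadth-first flood fill of one patch
                    y, x = queue.popleft()
                    for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                        if 0 <= ny < rows and 0 <= nx < cols and mask[ny, nx] and labels[ny, nx] == 0:
                            labels[ny, nx] = n
                            queue.append((ny, nx))
    return labels, n


def class_metrics(class_map, k):
    """NP, MPS, AREA_SD, LPI and ED of class k; areas in pixels (assumed units)."""
    mask = class_map == k
    labels, n = label_patches(mask)
    total_area = class_map.size
    # Edge length: cell sides shared by a class-k pixel and a pixel of another class.
    edges = np.sum(mask[:, 1:] != mask[:, :-1]) + np.sum(mask[1:, :] != mask[:-1, :])
    if n == 0:
        return {"NP": 0, "MPS": 0.0, "AREA_SD": 0.0, "LPI": 0.0, "ED": 0.0}
    areas = np.bincount(labels.ravel())[1:]  # pixel count of each patch
    return {
        "NP": n,
        "MPS": float(areas.mean()),
        "AREA_SD": float(areas.std()),
        "LPI": float(100.0 * areas.max() / total_area),
        "ED": float(edges / total_area),
    }


def window_features(class_map, r, c, size=9):
    """Metrics of the centre pixel's class in a size x size window (edge-padded map)."""
    half = size // 2
    padded = np.pad(class_map, half, mode="edge")
    window = padded[r:r + size, c:c + size]  # centred on original pixel (r, c)
    return class_metrics(window, class_map[r, c])
```

For example, in the 4 × 4 map `[[1, 1, 0, 0], [1, 1, 0, 2], [0, 0, 0, 2], [3, 0, 1, 1]]`, class 1 forms two patches of 4 and 2 pixels. This gives NP = 2, MPS = 3, LPI = 25%, and ED = 7/16, because 7 cell sides separate class 1 from other classes. In an ILMR-style workflow, such per-pixel window metrics would be appended to each sample's features before relearning.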

2.3. Uncertainty-Aware Pseudo-Label Selection (UPS)

- The other two classifiers agree on a label;

- certainty , but at least one classifier’s certainty .
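The threshold symbols in the rule above did not survive extraction. The sketch below therefore shows only one plausible reading of the dual-threshold selection for the classifier being updated, given the class-probability outputs of the other two. The names `ups_select`, `tau_high`, and `tau_low`, their default values, and the exact comparisons are all assumptions.

- A sample is treated as reliable when both classifiers agree and both are highly confident.
- It is treated as a boundary candidate when they agree and are not both highly confident, but at least one of them exceeds the lower threshold.

```python
import numpy as np


def ups_select(proba_a, proba_b, tau_high=0.9, tau_low=0.6):
    """Hypothetical dual-threshold rule for the classifier being updated.

    proba_a, proba_b: (n_samples, n_classes) probabilities from the other two
    classifiers, with columns in the same class order.
    Returns the agreed labels and the indices of reliable and boundary samples.
    """
    proba_a = np.asarray(proba_a, dtype=float)
    proba_b = np.asarray(proba_b, dtype=float)
    label_a, label_b = proba_a.argmax(axis=1), proba_b.argmax(axis=1)
    conf_a, conf_b = proba_a.max(axis=1), proba_b.max(axis=1)
    agree = label_a == label_b
    # Reliable: both classifiers agree and both are highly confident.
    reliable = agree & (conf_a >= tau_high) & (conf_b >= tau_high)
    # Boundary candidate: agreement, not reliable, but at least one passes tau_low.
    boundary = agree & ~reliable & (np.maximum(conf_a, conf_b) >= tau_low)
    return label_a, np.flatnonzero(reliable), np.flatnonzero(boundary)
```

Under this reading, the boundary set holds the lower-confidence, near-edge samples described in the Introduction. This text does not show how the paper weights or further filters those samples.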

2.4. Tri-Training with Hybrid Base Learners

| Algorithm 1: Semi-supervised Land Cover Classification via Tri-training with hybrid base learners |
|---|
| Input: Labeled dataset: ; Unlabeled dataset: ; Ensemble of base classifiers: ; High/low confidence thresholds: and ; Max iterations: T |
| Procedure: |
| 1. Initialize pseudo-label set |
| 2. For each iteration up to T do |
| 2.1. Train/update base classifiers on |
| 2.2. Initialize temporary sets , |
| 2.3. For each do |
| (a) Obtain predictions , labels and probabilities |
| (b) Compute agreement score and confidence bounds , |
| (c) If and , then add to |
| (d) Else if and , add to |
| 2.4. Generate coarse land cover map using |
| 2.5. Extract landscape metrics from patches in the coarse map |
| 2.6. Augment samples with corresponding metrics |
| 2.7. Generate structural pseudo-labels for via ILMR module |
| 2.8. Update pseudo-label set: labels |
| 2.9. Optionally update thresholds and adaptively |
| 3. End For |
| Output: Trained hybrid classifier ensemble and final pseudo-label map. |
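The core loop of Algorithm 1 can be sketched in Python as follows. This is a deliberately simplified, hypothetical sketch. It keeps only the agreement-plus-high-confidence rule and omits the boundary set, the ILMR step and threshold adaptation. The toy `CentroidClassifier` stands in for the CNN, RF and LORSAL learners; each instance trains on a different feature subset to mimic learner diversity. Any learner exposing `fit(X, y)`, `predict_proba(X)` and `classes_` could be plugged in instead.

```python
import numpy as np


class CentroidClassifier:
    """Toy base learner: softmax over negative distances to class centroids."""

    def __init__(self, features):
        self.features = list(features)  # feature subset used by this learner

    def fit(self, X, y):
        Xf = X[:, self.features]
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([Xf[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict_proba(self, X):
        Xf = X[:, self.features]
        dist = np.linalg.norm(Xf[:, None, :] - self.centroids_[None, :, :], axis=2)
        logits = dist.min(axis=1, keepdims=True) - dist  # shifted for a stable softmax
        p = np.exp(logits)
        return p / p.sum(axis=1, keepdims=True)


def tri_train(learners, X_lab, y_lab, X_unl, tau_high=0.9, max_iter=5):
    """Simplified tri-training: learner k is refit on the labeled data plus the
    unlabeled samples on which the other two learners agree with high confidence."""
    for clf in learners:
        clf.fit(X_lab, y_lab)
    for _ in range(max_iter):
        updates = []
        for k in range(3):
            i, j = [m for m in range(3) if m != k]
            p_i = learners[i].predict_proba(X_unl)
            p_j = learners[j].predict_proba(X_unl)
            y_i = learners[i].classes_[p_i.argmax(axis=1)]
            y_j = learners[j].classes_[p_j.argmax(axis=1)]
            keep = (y_i == y_j) & (p_i.max(axis=1) >= tau_high) & (p_j.max(axis=1) >= tau_high)
            updates.append((X_unl[keep], y_i[keep]))
        # Refit only after all selections, so each learner is taught by the previous round's peers.
        for clf, (X_p, y_p) in zip(learners, updates):
            clf.fit(np.vstack([X_lab, X_p]), np.concatenate([y_lab, y_p]))
    return learners


def ensemble_predict(learners, X):
    """Average the learners' class probabilities (assumes identical classes_)."""
    proba = np.mean([clf.predict_proba(X) for clf in learners], axis=0)
    return learners[0].classes_[proba.argmax(axis=1)]
```

Computing all three pseudo-label sets before refitting follows classic tri-training: no learner is taught by a peer that was already updated in the same round.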

3. Experimental Parameters and Datasets

3.1. Parameter Settings

1. Base classifiers: To ensure model diversity and complementary strengths, three different classifiers were integrated: a lightweight Convolutional Neural Network (CNN), Random Forest (RF), and Logistic Regression via Splitting and Augmented Lagrangian (LORSAL). The CNN was employed to capture hierarchical semantic and spatial–contextual representations. It was trained with the Adam optimizer at a learning rate of 0.001, a batch size of 32, and a maximum of 100 epochs. The detailed CNN architecture is provided in Section 3.2. RF was adopted for its robustness under small-sample conditions, with 200 trees constructed to balance computational efficiency and classification accuracy [35]. LORSAL was included for its stability and interpretability in high-dimensional feature spaces. Its regularization parameter was set to λ = 0.001 and the maximum number of iterations to 1000, consistent with standard practice in a previous study [36]. By combining these classifiers within the tri-training framework, the system exploits their complementary advantages, enriching decision diversity and improving generalization across heterogeneous landscapes.

2. ILMR: A window size of 9 × 9 pixels was used, so that the window captures local detail while still characterizing the neighborhood extent (e.g., the spatial pattern and arrangement of the land-cover classes).

3. Training: For each class, 50 samples were randomly selected from the reference map to train the classification model; the remaining reference samples were reserved for accuracy assessment.

4. Accuracy assessment: Overall accuracy (OA), computed from the confusion matrix, was used for the quantitative assessment.
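The sampling and evaluation protocol above can be sketched as follows. These are hypothetical helpers, not the authors' code. They assume the reference map is an integer array in which 0 marks unlabeled pixels. Besides OA, the sketch also computes Cohen's kappa, which appears in the ablation results.

```python
import numpy as np


def split_reference(ref_map, n_per_class=50, seed=0, ignore=0):
    """Draw n_per_class random training pixels per class; all other labeled pixels are test pixels."""
    rng = np.random.default_rng(seed)
    flat = ref_map.ravel()
    picked = []
    for c in np.unique(flat):
        if c == ignore:  # assumed code for unlabeled pixels
            continue
        idx = np.flatnonzero(flat == c)
        picked.append(rng.choice(idx, size=min(n_per_class, idx.size), replace=False))
    train_idx = np.sort(np.concatenate(picked)) if picked else np.empty(0, dtype=int)
    test_idx = np.setdiff1d(np.flatnonzero(flat != ignore), train_idx)
    return train_idx, test_idx


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows are reference classes, columns are predicted classes."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def overall_accuracy(cm):
    """Fraction of test pixels on the diagonal of the confusion matrix."""
    return np.trace(cm) / cm.sum()


def cohen_kappa(cm):
    """Agreement beyond chance: (p_o - p_e) / (1 - p_e)."""
    n = cm.sum()
    p_o = np.trace(cm) / n                            # observed agreement (= OA)
    p_e = (cm.sum(axis=0) @ cm.sum(axis=1)) / n ** 2  # agreement expected by chance
    return (p_o - p_e) / (1 - p_e)
```

For instance, reference labels `[0, 0, 1, 1]` predicted as `[0, 1, 1, 1]` give OA = 0.75 and kappa = 0.5. The chance agreement in that case is (1·2 + 3·2)/16 = 0.5.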

3.2. CNN Architecture

3.3. Datasets

- QB dataset: Acquired by the QuickBird satellite, this dataset contains 1123 × 748 pixels, covering four spectral bands with a spatial resolution of 2.4 m (Figure 3a);

- WV-2 dataset: Captured by the WorldView-2 high-spatial-resolution (HSR) sensor, this dataset comprises eight multispectral bands at a 2-m spatial resolution, with an image size of 600 × 520 pixels (Figure 3b);

- GE-1 dataset: Provided by the GeoEye-1 satellite, the images consist of 908 × 607 pixels, offering four spectral bands at a 2-m spatial resolution (Figure 3c);

- ZY-3 dataset: Acquired by ZY-3, China’s first civilian high-resolution mapping satellite, this dataset contains 651 × 499 pixels with four spectral bands and a spatial resolution of 5.8 m (Figure 3d).

4. Results and Discussion

1. Comparison of Classification Performance. Table 2 reports the overall accuracy (OA) of each method on the four datasets (QB, WV-2, GE-1, and ZY-3), given as mean ± standard deviation over five independent runs. The proposed tri-training framework achieves the highest OA on every dataset. It improves on the strongest baseline (CNN) by 1.68 (WV-2) to 3.59 (GE-1) percentage points, and on RF by 4.84 (WV-2) to 9.95 (ZY-3) percentage points.

- 2.

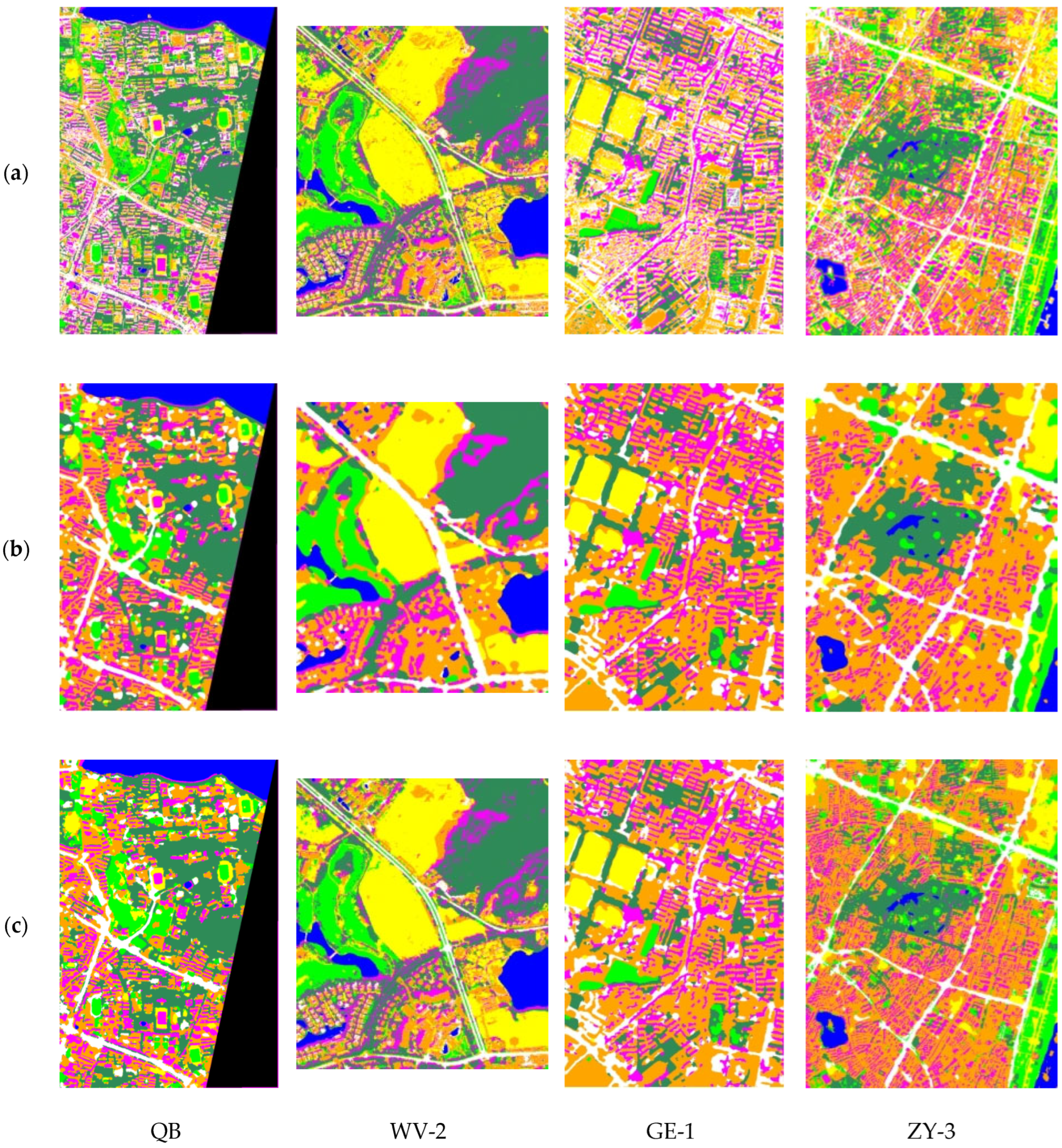

- Visual Inspection of Classification Maps. Figure 4 presents the classification maps produced by the proposed method across the four datasets. Compared with the raw output (Figure 4a), where evident misclassifications occur among spectrally similar classes (e.g., roads, buildings, and soil) and salt-and-pepper noise is prominent due to the inclusion of unreliable pseudo-labels, the proposed method achieves significantly cleaner and more coherent results. While the relearning-landscape method (Figure 4b) effectively reduces salt-and-pepper noise by extracting spatial regularities, it suffers from over-smoothing, leading to blurred object boundaries.

3. Ablation Study. To assess the individual contributions of the proposed modules (ILMR, UPS, and hybrid tri-training), we conducted an ablation study on the QB dataset. This dataset was chosen because its complex urban landscape makes salt-and-pepper noise and pixel-level inconsistencies more pronounced. The following model variants were compared:

- Model1-baseline: A traditional tri-training framework with homogeneous classifiers, without ILMR or UPS, which suffers from unrefined pseudo-labeling and is prone to noise;

- Model2-ILMR only: Incorporating ILMR for spatial regularization, but without UPS, to assess its effect on improving spatial coherence;

- Model3-UPS only: Incorporating UPS for pseudo-label filtering, but without ILMR, to assess its effect on reducing label noise;

- Model4-Full model (Ours): The complete framework integrating both ILMR and UPS, to verify the synergistic effect between the two modules.

- 4.

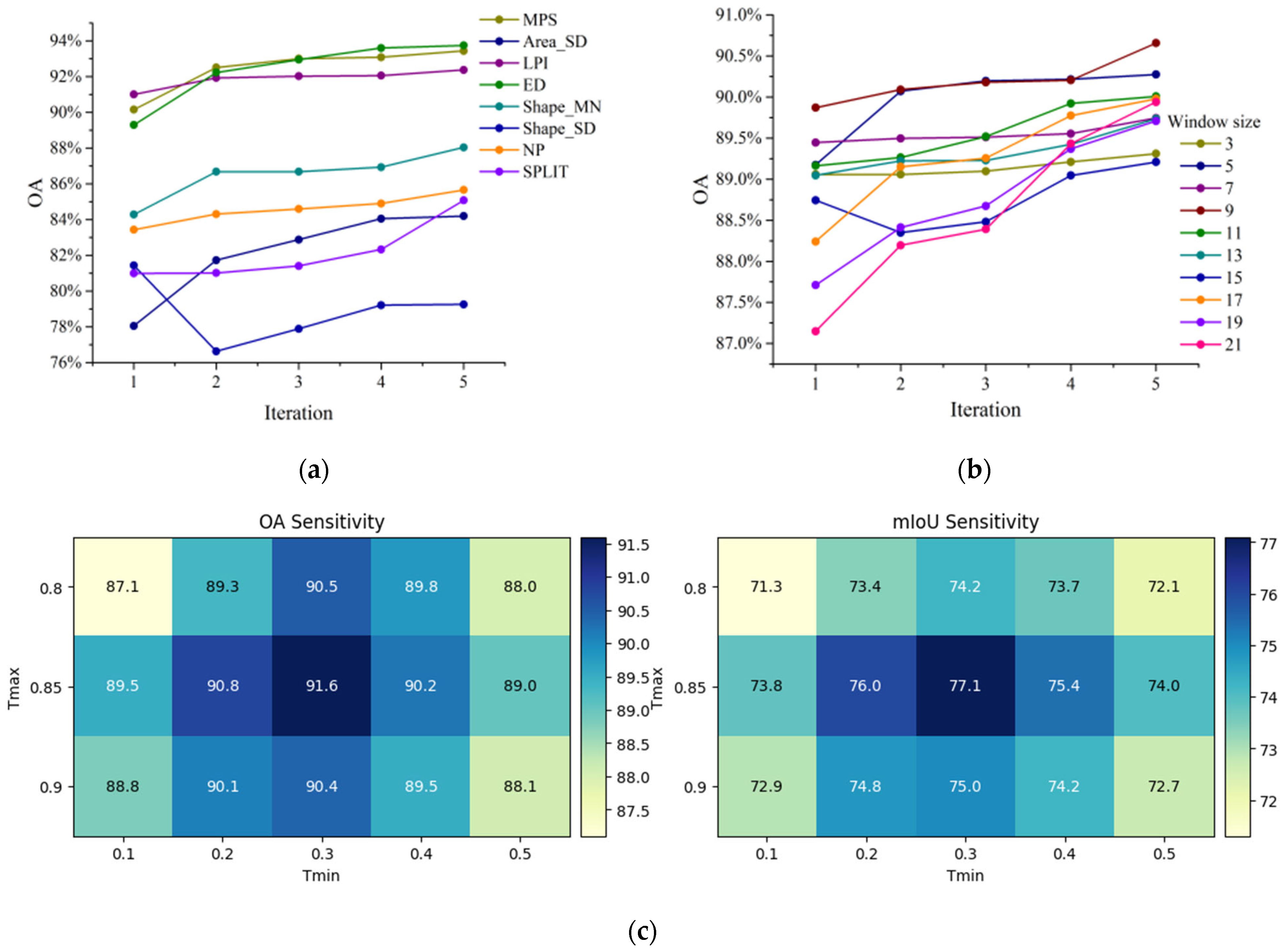

- Parameter Sensitivity Analysis. To further assess the robustness and adaptability of the proposed framework, a comprehensive sensitivity analysis was performed on key parameters. As illustrated in Figure 5, this analysis provides deeper insights into the model’s behavior under varying configurations and assists in identifying optimal parameter settings for practical applications. Specifically, eight widely used landscape metrics (as listed in Table A1 in Appendix A) were evaluated during the relearning-landscape process using the GE-1 dataset, which features representative urban landscape characteristics. Figure 5a presents the classification results obtained by individually incorporating each landscape metric during the relearning stage. The model achieves convergence within 3–4 iterations, indicating efficient adaptation. Among the tested metrics, Edge Density (ED), Largest Patch Index (LPI), and Mean Patch Size (MPS) yielded the highest classification accuracies, highlighting their effectiveness in capturing spatial structural information.

5. Computational Efficiency and Practical Implications. We compared the proposed framework with several state-of-the-art deep learning models, namely DeepLabV3+ [37], HRNet [38], and SegFormer [39]. As Table 5 shows, our method has far fewer parameters (1.4 M versus 13.1–39.8 M) and needs less training time (95 min versus 115–138 min) and inference time (3.2 s versus 9.8–22.1 s). Its OA (91.62%) is comparable to or higher than that of these models (89.21–91.57%).

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Metrics | Formula | Main Content |
|---|---|---|
| Mean patch size (MPS) | is the area of patch i; n is the number of patches for class i | |
| Standard deviation of area (AREA_SD) | is the area of patch i | |
| Largest patch index (LPI) | is the area of patch i; A is the total landscape area (m²) | |
| Edge density (ED) | E is the total length of edges in the landscape, and A is the total landscape area (m²) | |
| Mean shape index (SHAPE_MN) | is the perimeter of land-cover patch i, is the area of the land-cover patch, and n is the number of patches within the landscape | |
| Standard deviation of shape index (SHAPE_SD) | is the perimeter of land-cover patch i, is the area of the land-cover patch, and n is the number of patches within the landscape | |
| Number of patches (NP) | is the number of patches for class i | |
| Splitting index (SPLIT) | is the area of patch i; A is the total landscape area (m²) | |
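The formula column above did not survive extraction. For reference, the standard FRAGSTATS class-level definitions that match the variable descriptions are given below. This rendering is an assumption and was not recovered from the source. Here $a_i$ and $p_i$ denote the area and perimeter of patch $i$, and $s_i$ is its raster shape index. Unit factors may differ from the paper's implementation; for example, FRAGSTATS reports ED in m/ha, which multiplies $E/A$ by $10^4$.

$$
\begin{aligned}
\mathrm{MPS} &= \frac{1}{n}\sum_{i=1}^{n} a_i, &
\mathrm{AREA\_SD} &= \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(a_i-\mathrm{MPS}\right)^2},\\
\mathrm{LPI} &= \frac{\max_i a_i}{A}\times 100, &
\mathrm{ED} &= \frac{E}{A},\\
\mathrm{SHAPE\_MN} &= \frac{1}{n}\sum_{i=1}^{n} s_i,\quad s_i=\frac{0.25\,p_i}{\sqrt{a_i}}, &
\mathrm{SHAPE\_SD} &= \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(s_i-\mathrm{SHAPE\_MN}\right)^2},\\
\mathrm{NP} &= n, &
\mathrm{SPLIT} &= \frac{A^2}{\sum_{i=1}^{n} a_i^2}.
\end{aligned}
$$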

References

1. Fayaz, M.; Nam, J.; Dang, L.M.; Song, H.-K.; Moon, H. Land-cover classification using deep learning with high-resolution remote-sensing imagery. Appl. Sci. 2024, 14, 1844.

2. Tang, Y.; Hu, X.; Ke, T.; Zhang, M. Semantic Segmentation of High Resolution Remote Sensing Imagery via an End-to-End Graph Attention Network with Superpixel Embedding. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2025, 18, 7236–7252.

3. Han, W.; Zhang, X.; Wang, Y.; Wang, L.; Huang, X.; Li, J.; Wang, S.; Chen, W.; Li, X.; Feng, R. A survey of machine learning and deep learning in remote sensing of geological environment: Challenges, advances, and opportunities. ISPRS J. Photogramm. Remote Sens. 2023, 202, 87–113.

4. Valjarević, A.; Morar, C.; Brasanac-Bosanac, L.; Cirkovic-Mitrovic, T.; Djekic, T.; Mihajlović, M.; Milevski, I.; Culafic, G.; Luković, M.; Niemets, L.; et al. Sustainable land use in Moldova: GIS & remote sensing of forests and crops. Land Use Policy 2025, 152, 107515.

5. Wang, D.; Chen, L.; Gong, F.; Zhu, Q. Maximizing Depth of Graph-Structured Convolutional Neural Networks with Efficient Pathway Usage for Remote Sensing. Tsinghua Sci. Technol. 2025, 30, 1940–1953.

6. Xue, Y.; Li, L.; Wang, Z.; Jiang, C.; Liu, M.; Wang, J.; Sun, K.; Ma, H. RFCNet: Remote sensing image super-resolution using residual feature calibration network. Tsinghua Sci. Technol. 2022, 28, 475–485.

7. Liu, M.; Liu, J.; Hu, H. A novel deep learning network model for extracting lake water bodies from remote sensing images. Appl. Sci. 2024, 14, 1344.

8. He, J.; Gong, B.; Yang, J.; Wang, H.; Xu, P.; Xing, T. ASCFL: Accurate and speedy semi-supervised clustering federated learning. Tsinghua Sci. Technol. 2023, 28, 823–837.

9. Zhou, L.; Duan, K.; Dai, J.; Ye, Y. Advancing perturbation space expansion based on information fusion for semi-supervised remote sensing image semantic segmentation. Inf. Fusion 2025, 117, 102830.

10. Liu, B.; Zhan, C.; Guo, C.; Liu, X.; Ruan, S. Efficient remote sensing image classification using the novel STConvNeXt convolutional network. Sci. Rep. 2025, 15, 8406.

11. Li, Q.; Chen, Y.; He, X.; Huang, L. Co-training transformer for remote sensing image classification, segmentation, and detection. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5606218.

12. Zhang, Y.; Song, X.; Hua, Z.; Li, J. CGMMA: CNN-GNN multiscale mixed attention network for remote sensing image change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 7089–7103.

13. Nezhad, S.A.; Tajeddin, G.; Khatibi, T.; Sohrabi, M. Self-supervised learning framework for efficient classification of endoscopic images using pretext tasks. PLoS ONE 2025, 20, e0322028.

14. Lian, Z.; Zhan, Y.; Zhang, W.; Wang, Z.; Liu, W.; Huang, X. Recent Advances in Deep Learning-Based Spatiotemporal Fusion Methods for Remote Sensing Images. Sensors 2025, 25, 1093.

15. Bai, H.; Ren, C.; Huang, Z.; Gu, Y. A dynamic attention mechanism for road extraction from high-resolution remote sensing imagery using feature fusion. Sci. Rep. 2025, 15, 17556.

16. Pham, P.; Nguyen, L.T.; Pedrycz, W.; Vo, B. Deep learning, graph-based text representation and classification: A survey, perspectives and challenges. Artif. Intell. Rev. 2023, 56, 4893–4927.

17. Zhao, S.; Chen, Z.; Xiong, Z.; Shi, Y.; Saha, S.; Zhu, X.X. Beyond Grid Data: Exploring graph neural networks for Earth observation. IEEE Geosci. Remote Sens. Mag. 2024, 13, 175–208.

18. Hua, W.; Sun, N.; Liu, L.; Ding, C.; Dong, Y.; Sun, W. Semi-supervised hybrid contrastive learning for PolSAR image classification. Knowl.-Based Syst. 2025, 311, 113078.

19. Wang, Y.; Liu, Z.; Jin, Y.; Wang, X.; Xu, L.; Wang, L.; Yu, J.; Dai, W.; Gao, J.; Zhang, F. Interpreting Spatiotemporal Dynamics of Ulva prolifera Blooms in the Southern Yellow Sea Using an Attention-Enhanced Transformer Framework. Environ. Pollut. 2025, 384, 226999.

20. Yang, R.; Zhong, Y.; Su, Y. Self-Supervised Joint Representation Learning for Urban Land-Use Classification With Multi-Source Geographic Data. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5608021.

21. Yang, X.; Song, Z.; King, I.; Xu, Z. A survey on deep semi-supervised learning. IEEE Trans. Knowl. Data Eng. 2022, 35, 8934–8954.

22. de Oliveira, W.D.G.; Berton, L. A systematic review for class-imbalance in semi-supervised learning. Artif. Intell. Rev. 2023, 56, 2349–2382.

23. Tarekegn, A.N.; Ullah, M.; Cheikh, F.A. Deep learning for multi-label learning: A comprehensive survey. arXiv 2024, arXiv:2401.16549.

24. Xu, H.; Liu, L.; Bian, Q.; Yang, Z. Semi-supervised semantic segmentation with prototype-based consistency regularization. Adv. Neural Inf. Process. Syst. 2022, 35, 26007–26020.

25. Zhou, Z.-H.; Li, M. Tri-training: Exploiting unlabeled data using three classifiers. IEEE Trans. Knowl. Data Eng. 2005, 17, 1529–1541.

26. Saito, K.; Ushiku, Y.; Harada, T. Asymmetric tri-training for unsupervised domain adaptation. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; pp. 2988–2997.

27. Tan, K.; Zhu, J.; Du, Q.; Wu, L.; Du, P. A novel tri-training technique for semi-supervised classification of hyperspectral images based on diversity measurement. Remote Sens. 2016, 8, 749.

28. Han, X.; Huang, X.; Li, J.; Li, Y.; Yang, M.Y.; Gong, J. The edge-preservation multi-classifier relearning framework for the classification of high-resolution remotely sensed imagery. ISPRS J. Photogramm. Remote Sens. 2018, 138, 57–73.

29. Nugroho, H.; Pramudito, W.A.; Laksono, H.S. Gray Level Co-Occurrence Matrix (GLCM)-based Feature Extraction for Rice Leaf Diseases Classification. Bul. Ilm. Sarj. Tek. Elektro 2024, 6, 392–400.

30. Li, Y.; Jin, W.; Qiu, S.; He, Y. Multiscale spatial-frequency domain dynamic pansharpening of remote sensing images integrated with wavelet transform. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5408315.

31. Lu, Q.; Xie, Y.; Wei, L.; Wei, Z.; Tian, S.; Liu, H.; Cao, L. Extended attribute profiles for precise crop classification in UAV-borne hyperspectral imagery. IEEE Geosci. Remote Sens. Lett. 2024, 21, 2500805.

32. Liu, R.; Liao, J.; Liu, X.; Liu, Y.; Chen, Y. LSRL-Net: A level set-guided re-learning network for semi-supervised cardiac and prostate segmentation. Biomed. Signal Process. Control 2025, 110, 108062.

33. Geiß, C.; Taubenböck, H. Object-based postclassification relearning. IEEE Geosci. Remote Sens. Lett. 2015, 12, 2336–2340.

34. Huang, X.; Zhang, L. An SVM ensemble approach combining spectral, structural, and semantic features for the classification of high-resolution remotely sensed imagery. IEEE Trans. Geosci. Remote Sens. 2012, 51, 257–272.

35. Huang, X.; Han, X.; Zhang, L.; Gong, J.; Liao, W.; Benediktsson, J.A. Generalized differential morphological profiles for remote sensing image classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1736–1751.

36. Li, J.; Huang, X.; Gamba, P.; Bioucas-Dias, J.M.; Zhang, L.; Benediktsson, J.A.; Plaza, A. Multiple feature learning for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2014, 53, 1592–1606.

37. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

38. Wang, J.; Sun, K.; Cheng, T.; Jiang, B.; Deng, C.; Zhao, Y.; Liu, D.; Mu, Y.; Tan, M.; Wang, X. Deep high-resolution representation learning for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 3349–3364.

39. Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. Adv. Neural Inf. Process. Syst. 2021, 34, 12077–12090.

| Class | QB | WV-2 | GE-1 | ZY-3 |
|---|---|---|---|---|
| Buildings | 18,296 | 11,578 | 20,074 | 15,818 |
| Roads | 5103 | 5356 | 3187 | 13,564 |
| Trees | 17,415 | 14,086 | 5370 | 6154 |
| Grass | 9179 | 7417 | 4098 | 3065 |
| Water | 16,614 | 11,209 | - | 3928 |
| Soil | 3709 | 22,189 | 18,249 | 4659 |
| Shadow | 4378 | 1427 | 1330 | 4722 |

Table 2. Overall accuracy (OA, %) of each method on the four datasets, reported as mean ± standard deviation over five independent runs.

| Method | QB | WV-2 | GE-1 | ZY-3 |
|---|---|---|---|---|
| RF | 86.21 ± 0.45 | 88.12 ± 0.39 | 84.75 ± 0.53 | 82.30 ± 0.61 |
| LORSAL | 87.40 ± 0.47 | 89.01 ± 0.42 | 86.20 ± 0.48 | 83.95 ± 0.57 |
| CNN | 89.75 ± 0.36 | 91.28 ± 0.31 | 88.32 ± 0.45 | 88.77 ± 0.40 |
| Ours | 91.62 ± 0.29 | 92.96 ± 0.27 | 91.91 ± 0.34 | 92.25 ± 0.35 |

| Comparison | QB | WV-2 | GE-1 | ZY-3 |
|---|---|---|---|---|
| Ours vs. RF | 2.4 × 10⁻⁴ | 1.8 × 10⁻⁴ | 3.2 × 10⁻⁴ | 4.7 × 10⁻⁴ |
| Ours vs. LORSAL | 3.1 × 10⁻³ | 2.7 × 10⁻³ | 2.9 × 10⁻³ | 3.4 × 10⁻³ |
| Ours vs. CNN | 1.9 × 10⁻² | 2.2 × 10⁻² | 2.5 × 10⁻² | 1.8 × 10⁻² |

| Variant | OA (%) | Kappa | Highlights |
|---|---|---|---|
| Model1 | 82.6 | 0.76 | Easily trapped by boundary noise and fragmented predictions |
| Model2 | 85.2 | 0.81 | Improved spatial coherence and patch integrity |
| Model3 | 84.3 | 0.79 | Reduced label noise, but residual fragmentation remains |
| Model4 | 91.6 | 0.91 | Synergy between spatial and uncertainty modules |

Table 5. Accuracy and computational cost of the proposed method compared with deep learning segmentation models.

| Method | OA (%) | Parameters (M) | Training Time (min) | Inference Time (s) |
|---|---|---|---|---|
| DeepLabV3+ | 89.21 ± 0.41 | 39.8 | 125 | 18.5 |
| HRNet | 90.35 ± 0.35 | 28.5 | 138 | 22.1 |
| SegFormer | 91.57 ± 0.30 | 13.1 | 115 | 9.8 |
| Proposed Tri-training | 91.62 ± 0.29 | 1.4 | 95 | 3.2 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, X.; Niu, Y.; He, C.; Zhou, D.; Cao, Z. A Hybrid Semi-Supervised Tri-Training Framework Integrating Traditional Classifiers and Lightweight CNN for High-Resolution Remote Sensing Image Classification. Appl. Sci. 2025, 15, 10353. https://doi.org/10.3390/app151910353
