GNN-MFF: A Multi-View Graph-Based Model for RTL Hardware Trojan Detection

Abstract

1. Introduction

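- We propose a multi-view graph modeling framework that jointly represents register-transfer level (RTL) code as an abstract syntax tree (AST) and a data flow graph (DFG). Each graph is processed by a dedicated graph neural network (GNN) architecture tailored to its structure, a graph attention network (GAT) for the AST and a graph convolutional network (GCN) for the DFG, so that the model captures both the syntactic structure and the logical dependencies of an RTL design.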

- We design a multi-head attention-based feature fusion strategy to effectively align and integrate heterogeneous features from AST and DFG, enhancing the model’s capacity to detect subtle and abnormal logic behaviors across perspectives.

- We construct an extended hardware Trojan (HT) dataset based on Trust-Hub [17] that covers diverse HT variants, and evaluate our model on it. Our method achieves an average F1-score of 97.08% in detecting previously unseen HTs, exceeding the F1-scores of most of the state-of-the-art methods we compare against.
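The fusion idea in the second contribution can be illustrated with a minimal sketch. It assumes that each view has already been pooled into a fixed-length graph embedding, treats the AST and DFG embeddings as a two-token sequence, and applies multi-head scaled dot-product self-attention followed by mean pooling. The function names, the identity query/key/value projections, the mean pooling, and the example vectors are assumptions made for illustration rather than the exact GNN-MFF configuration; a trained model would use learned projection matrices.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def split_heads(vec, num_heads):
    """Split one embedding into num_heads equal-width slices."""
    width = len(vec) // num_heads
    return [vec[h * width:(h + 1) * width] for h in range(num_heads)]


def fuse_views(ast_vec, dfg_vec, num_heads=2):
    """Fuse two graph-level embeddings with multi-head self-attention.

    Assumption: identity Q/K/V projections (no learned weights), so the
    sketch stays dependency-free and deterministic.
    """
    if len(ast_vec) != len(dfg_vec) or len(ast_vec) % num_heads:
        raise ValueError("embeddings must share a length divisible by num_heads")
    width = len(ast_vec) // num_heads
    ast_heads = split_heads(ast_vec, num_heads)
    dfg_heads = split_heads(dfg_vec, num_heads)
    fused = []
    for h in range(num_heads):
        tokens = [ast_heads[h], dfg_heads[h]]  # two-token sequence: AST, DFG
        attended = []
        for query in tokens:
            # Scaled dot-product score of this query against both views.
            scores = [
                sum(q * k for q, k in zip(query, key)) / math.sqrt(width)
                for key in tokens
            ]
            weights = softmax(scores)
            # Attention output: weighted sum of the two view slices.
            attended.append([
                sum(w * tok[j] for w, tok in zip(weights, tokens))
                for j in range(width)
            ])
        # Mean-pool the two attended tokens, then concatenate across heads.
        fused.extend((a + d) / 2 for a, d in zip(attended[0], attended[1]))
    return fused


ast_embedding = [0.9, 0.1, 0.4, 0.3]  # hypothetical pooled AST features
dfg_embedding = [0.2, 0.8, 0.5, 0.7]  # hypothetical pooled DFG features
fused_embedding = fuse_views(ast_embedding, dfg_embedding, num_heads=2)
print(fused_embedding)
```

Because each attention output is a convex combination of the two view slices, every fused component lies between the corresponding AST and DFG values. In a full model, a classifier head would consume `fused_embedding`.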

2. Related Work

2.1. Traditional Trojan Detection

2.2. Machine Learning-Based Trojan Detection

2.3. Graph-Based Trojan Detection

3. Methodology

3.1. Threat Model

3.2. Graph Construction

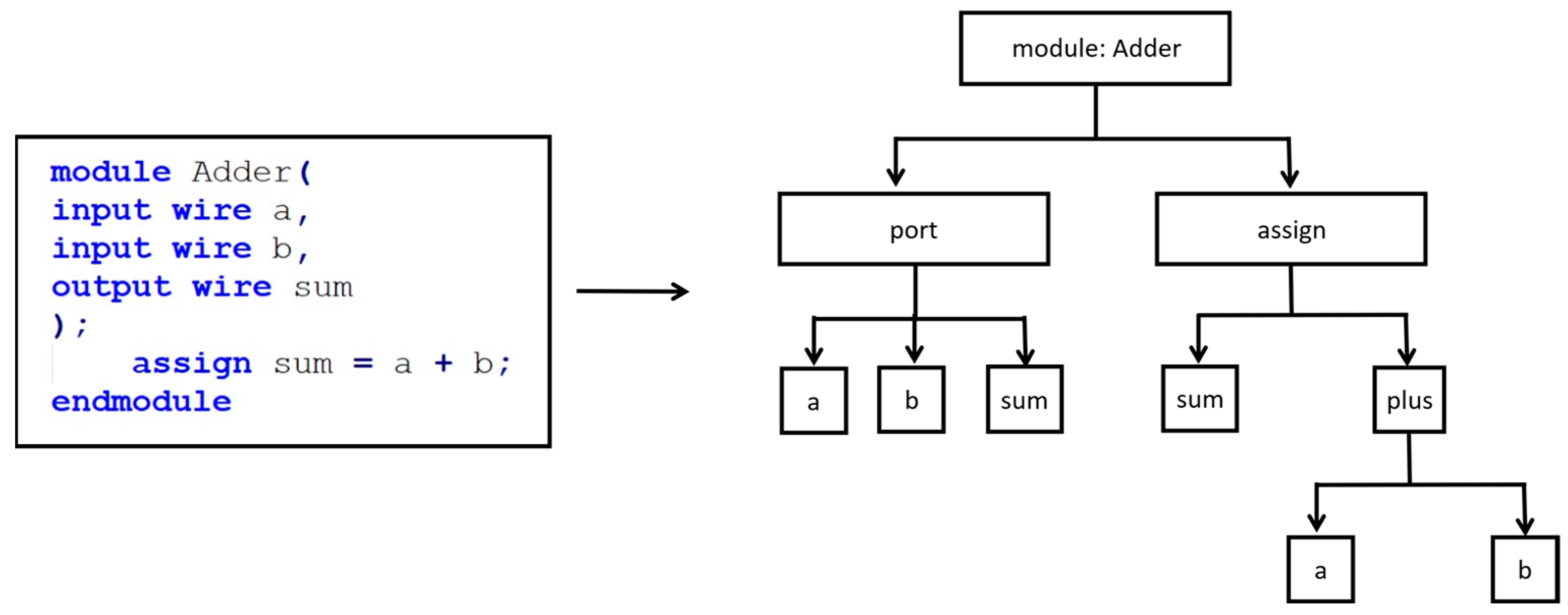

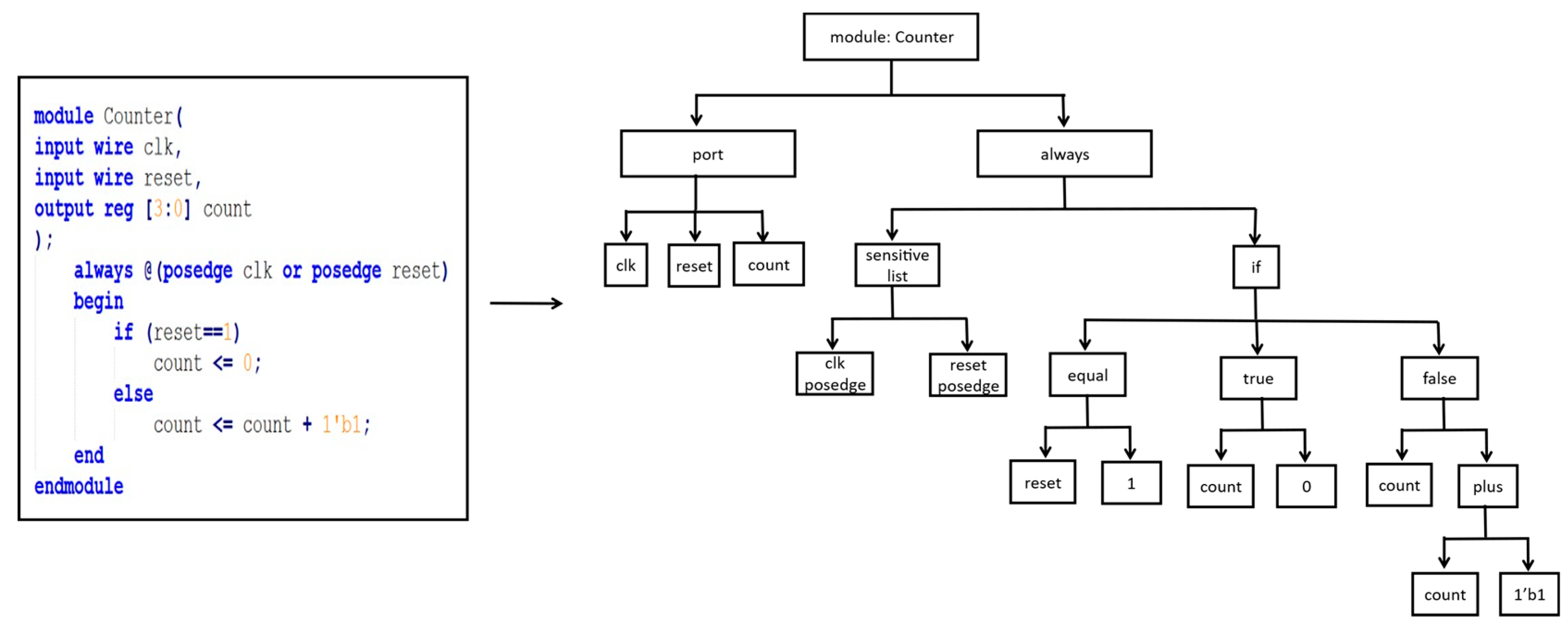

3.2.1. AST Generation

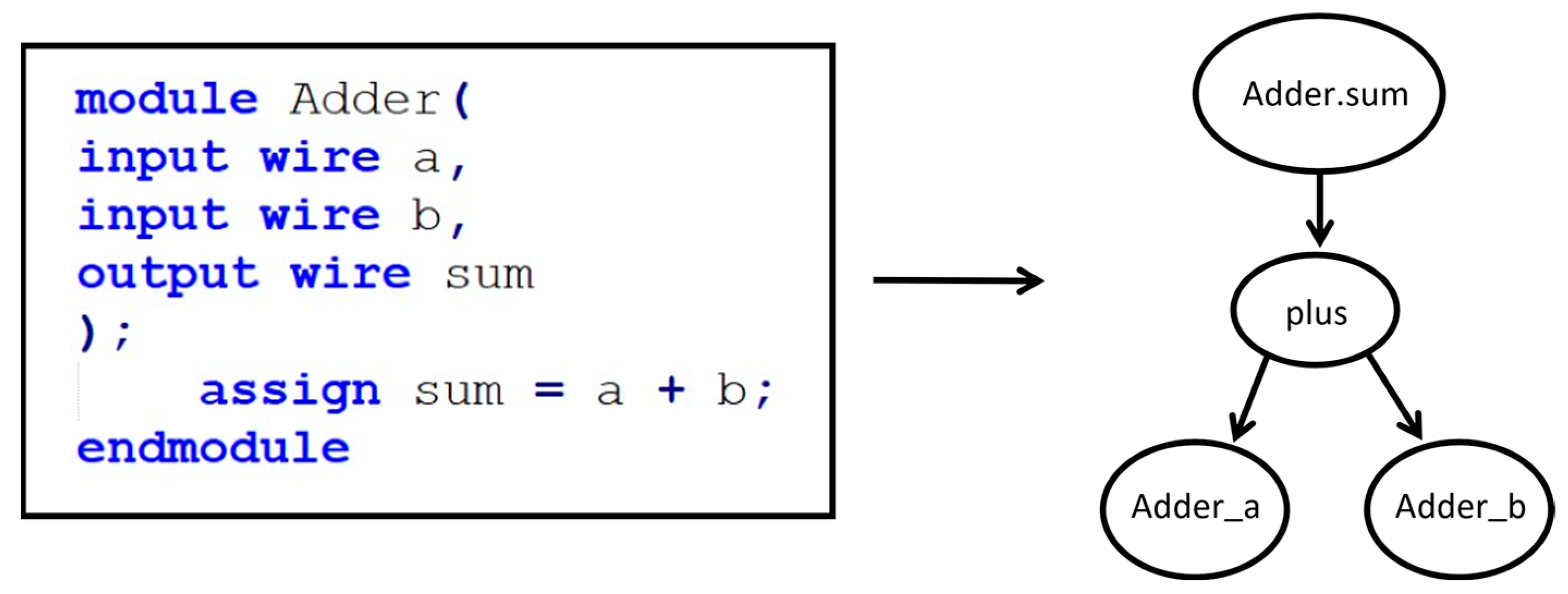

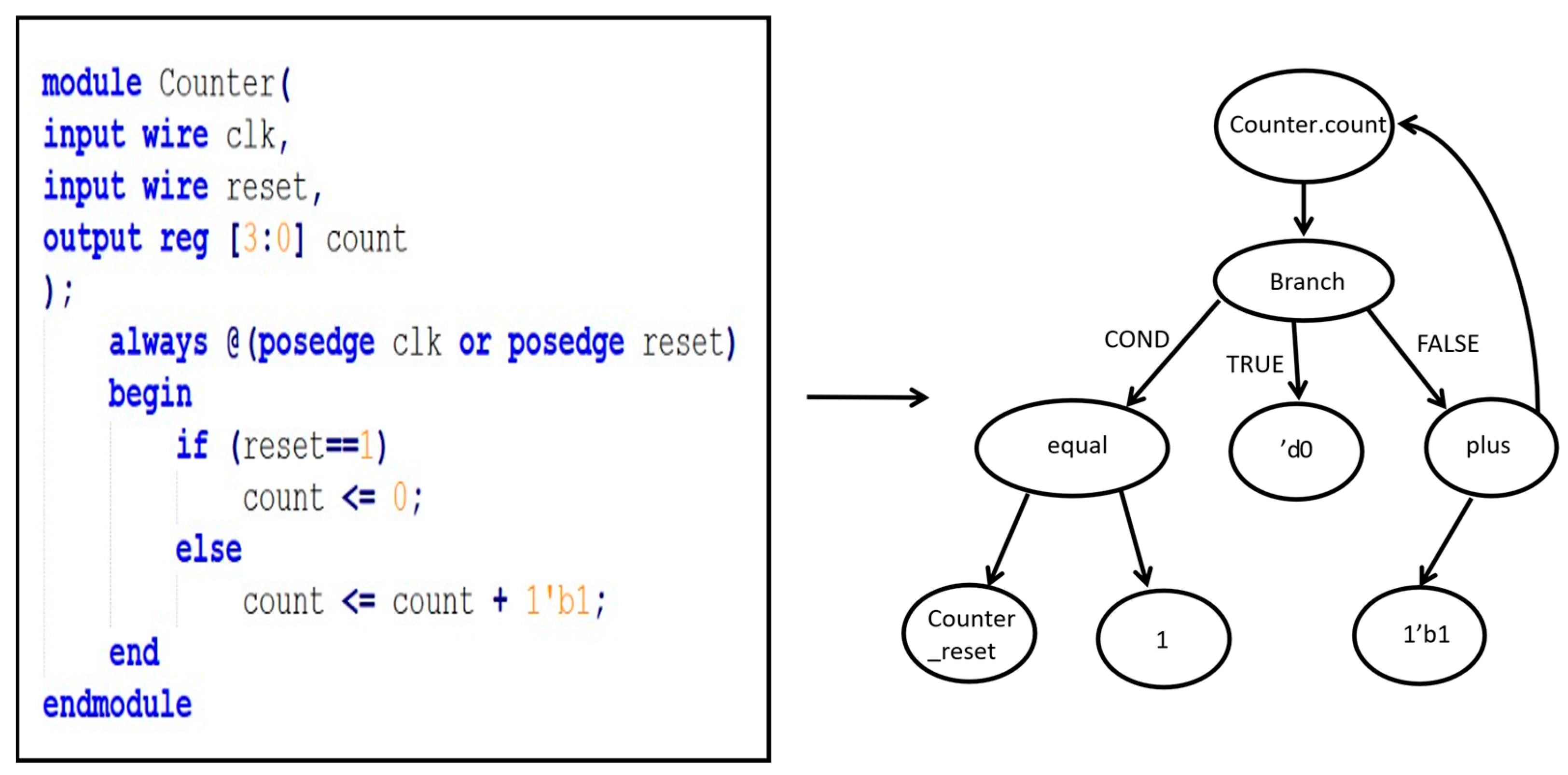

3.2.2. DFG Generation

3.3. Graph Feature Extraction

3.3.1. GAT-Based Feature Extraction for AST

3.3.2. GCN-Based Feature Extraction for DFG

3.4. Feature Fusion

3.5. Prediction Results

4. Evaluation

4.1. Dataset Creation

4.2. Experimental Setting

4.3. Experimental Results and Analysis

4.4. Comparison with State of the Art

4.5. Ablation Study

5. Conclusions

6. Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Sullivan, D.; Biggers, J.; Zhu, G.; Zhang, S.; Jin, Y. FIGHT-metric: Functional identification of gate-level hardware trustworthiness. In Proceedings of the ACM/EDAC/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 2–5 June 2014; pp. 1–4.

2. Hicks, M.; Finnicum, M.; King, S.T.; Martin, M.M.K.; Smith, J.M. Overcoming an Untrusted Computing Base: Detecting and Removing Malicious Hardware Automatically. In Proceedings of the IEEE Symposium on Security and Privacy, Oakland, CA, USA, 16–19 May 2010; pp. 159–172.

3. Oya, M.; Shi, Y.; Yanagisawa, M.; Togawa, N. A score-based classification method for identifying Hardware-Trojans at gate-level netlists. In Proceedings of the Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 9–13 March 2015; pp. 465–470.

4. Islam, S.A.; Mime, F.I.; Asaduzzaman, S.M.; Islam, F. Socio-network Analysis of RTL Designs for Hardware Trojan Localization. In Proceedings of the International Conference on Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 18–20 December 2019; pp. 1–6.

5. He, J.; Guo, X.; Meade, T.; Dutta, R.G.; Zhao, Y.; Jin, Y. SoC interconnection protection through formal verification. Integration 2019, 64, 143–151.

6. Hu, W.; Ardeshiricham, A.; Gobulukoglu, M.S.; Wang, X.; Kastner, R. Property Specific Information Flow Analysis for Hardware Security Verification. In Proceedings of the IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Diego, CA, USA, 5–8 November 2018; pp. 1–8.

7. Nahiyan, A.; Sadi, M.; Vittal, R.; Contreras, G.; Forte, D.; Tehranipoor, M. Hardware trojan detection through information flow security verification. In Proceedings of the IEEE International Test Conference (ITC), Fort Worth, TX, USA, 31 October–2 November 2017; pp. 1–10.

8. Wang, J.; Li, Y. RDAMS: An Efficient Run-Time Approach for Memory Fault and Hardware Trojans Detection. Information 2021, 12, 169.

9. Zargari, A.H.A.; AshrafiAmiri, M.; Seo, M.; Pudukotai Dinakarrao, S.M.; Fouda, M.E.; Kurdahi, F. CAPTIVE: Constrained Adversarial Perturbations to Thwart IC Reverse Engineering. Information 2023, 14, 656.

10. Yang, J.; Zhang, Y.; Hua, Y.; Yao, J.; Mao, Z.; Chen, X. Hardware Trojans Detection Through RTL Features Extraction and Machine Learning. In Proceedings of the Asian Hardware Oriented Security and Trust Symposium (AsianHOST), Shanghai, China, 16–18 December 2021; pp. 1–4.

11. Choo, H.S.; Ooi, C.-Y.; Inoue, M.; Ismail, N.; Moghbel, M.; Kok, C.H. Register-Transfer-Level Features for Machine-Learning-Based Hardware Trojan Detection. IEICE Trans. Fundam. Electron. Commun. Comput. Sci. 2020, E103.A, 502–509.

12. Sutikno, S.; Putra, S.D.; Wijitrisnanto, F.; Aminanto, M.E. Detecting Unknown Hardware Trojans in Register Transfer Level Leveraging Verilog Conditional Branching Features. IEEE Access 2023, 11, 46073–46083.

13. Meng, X.; Hassan, R.; Dinakarrao, S.M.P.; Basu, K. Can Overclocking Detect Hardware Trojans? In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Daegu, Republic of Korea, 22–28 May 2021; pp. 1–5.

14. Muralidhar, N.; Zubair, A.; Weidler, N.; Gerdes, R.; Ramakrishnan, N. Contrastive Graph Convolutional Networks for Hardware Trojan Detection in Third Party IP Cores. In Proceedings of the IEEE International Symposium on Hardware Oriented Security and Trust (HOST), Washington, DC, USA, 12–15 December 2021; pp. 181–191.

15. Yasaei, R.; Yu, S.Y.; Faruque, M.A.A. GNN4TJ: Graph Neural Networks for Hardware Trojan Detection at Register Transfer Level. In Proceedings of the Design, Automation & Test in Europe Conference & Exhibition (DATE), Grenoble, France, 1–5 February 2021; pp. 1504–1509.

16. Zhang, H.; Fan, Z.; Zhou, Y.; Li, Y. B-HTRecognizer: Bit-Wise Hardware Trojan Localization Using Graph Attention Networks. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2024, 44, 2240–2252.

17. Trust-Hub.org. Available online: https://www.trust-hub.org/benchmarks (accessed on 19 September 2025).

18. Yu, W.; Wang, Y. An efficient methodology for hardware Trojan detection based on canonical correlation analysis. Microelectron. J. 2021, 115, 105162.

19. Huang, Z.; Quan, W.; Chen, Y.; Jiang, X. A Survey on Machine Learning Against Hardware Trojan Attacks: Recent Advances and Challenges. IEEE Access 2020, 8, 10796–10826.

20. Zareen, F.; Karam, R. Detecting RTL Trojans using Artificial Immune Systems and High Level Behavior Classification. In Proceedings of the Asian Hardware Oriented Security and Trust Symposium (AsianHOST), Hong Kong, China, 17–18 December 2018; pp. 68–73.

21. Han, T.; Wang, Y.; Liu, P. Hardware Trojans Detection at Register Transfer Level Based on Machine Learning. In Proceedings of the IEEE International Symposium on Circuits and Systems (ISCAS), Sapporo, Japan, 26–29 May 2019; pp. 1–5.

22. Hassan, R.; Meng, X.; Basu, K.; Dinakarrao, S.M.P. Circuit Topology-Aware Vaccination-Based Hardware Trojan Detection. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2023, 42, 2852–2862.

23. Utyamishev, D.; Partin-Vaisband, I. Knowledge Graph Embedding and Visualization for Pre-Silicon Detection of Hardware Trojans. In Proceedings of the 2022 IEEE International Symposium on Circuits and Systems (ISCAS), Austin, TX, USA, 27 May–1 June 2022; pp. 180–184.

24. Popryho, Y.; Pal, D.; Partin-Vaisband, I. NetVGE: Netwise Hardware Trojan Detection at RTL Using Variable Dependency and Knowledge Graph Embedding. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2025.

25. Opencores. Available online: https://opencores.org/ (accessed on 19 September 2025).

| Test Circuit | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| AES | 85.71 | 100 | 85.19 | 92.00 |
| RS232 | 93.33 | 100 | 92.86 | 96.30 |
| PIC | 100 | 100 | 100 | 100 |
| DES | 100 | 100 | 100 | 100 |
| Average | 94.76 | 100 | 94.51 | 97.08 |
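As a consistency check on the table above, the following sketch recomputes each F1-score from precision and recall using F1 = 2PR/(P + R), assuming that the P and R columns denote precision and recall. It also illustrates that the reported average F1-score matches the mean of the per-circuit F1-scores (a macro-average) rather than the F1-score of the averaged precision and recall. The variable and function names are illustrative.

```python
# Per-circuit precision and recall (%), copied from the table above.
results = {
    "AES": (100.0, 85.19),
    "RS232": (100.0, 92.86),
    "PIC": (100.0, 100.0),
    "DES": (100.0, 100.0),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (both in percent)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


per_circuit_f1 = {name: f1_score(p, r) for name, (p, r) in results.items()}
macro_f1 = sum(per_circuit_f1.values()) / len(per_circuit_f1)

# Alternative (not what the table reports): F1 of the averaged P and R.
mean_p = sum(p for p, _ in results.values()) / len(results)
mean_r = sum(r for _, r in results.values()) / len(results)
f1_of_means = f1_score(mean_p, mean_r)

for name, value in per_circuit_f1.items():
    print(f"{name}: F1 = {value:.2f}%")
print(f"Mean of per-circuit F1: {macro_f1:.2f}%")
print(f"F1 of mean P and R:     {f1_of_means:.2f}%")
```

The per-circuit values round to the F1-scores in the table, and their mean reproduces the reported 97.08%. Computing F1 from the averaged precision and recall instead gives a slightly higher figure (about 97.18%), so the two averaging conventions should not be mixed when comparing methods.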

| Test Circuit | Train Time (s) | Test Time (s) |
|---|---|---|
| AES | 670.19 | 3.91 |
| RS232 | 871.16 | 4.44 |
| PIC | 1031.28 | 4.98 |
| DES | 971.52 | 4.94 |
| Average | 886.04 | 4.57 |

| Method | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| GNN-MFF | 94.76 | 100 | 94.51 | 97.08 |
| GNN4TJ [15] | NA | 92.3 | 96.6 | 94 |
| B-HTRecognizer [16] | 99.70 | 84.14 | 93.41 | 86.71 |
| Circuit-topology-aware [22] | 93.15 | 93.35 | 91.38 | 91.28 |
| NetVGE [24] | NA | 93 | 98 | 95.4 |
| Conditional Branching [12] | 96.57 | 100 | 78.79 | 88.13 |
| Overclock [13] | 87.58 | 89.33 | 87 | 88 |
| Artificial immune system [20] | 86.23 | 85.53 | 87.19 | 86.32 |
| Socio-network [4] | 97.3 | 98.2 | 97.9 | 98.0 |
| Information flow analysis [7] | NA | NA | 100 | NA |

| Method | Accuracy (%) | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|---|
| AST only | 83.63 | 97.73 | 84.53 | 90.00 |
| DFG only | 73.63 | 87.73 | 80.95 | 82.63 |
| Feature concatenation | 88.63 | 100 | 88.10 | 93.08 |
| AST + DFG (GAT only) | 92.20 | 98.96 | 92.73 | 95.63 |
| AST + DFG (GCN only) | 89.64 | 96.99 | 91.80 | 94.22 |
| GNN-MFF | 94.76 | 100 | 94.51 | 97.08 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, S.; Zhou, S.; Xue, P.; Kong, L.; Wang, J. GNN-MFF: A Multi-View Graph-Based Model for RTL Hardware Trojan Detection. Appl. Sci. 2025, 15, 10324. https://doi.org/10.3390/app151910324