Research on Influencing Factors of Users’ Willingness to Adopt GAI for Collaborative Decision-Making in Generative Artificial Intelligence Context

Abstract

1. Introduction

2. Literature Review

3. Research Model and Hypotheses

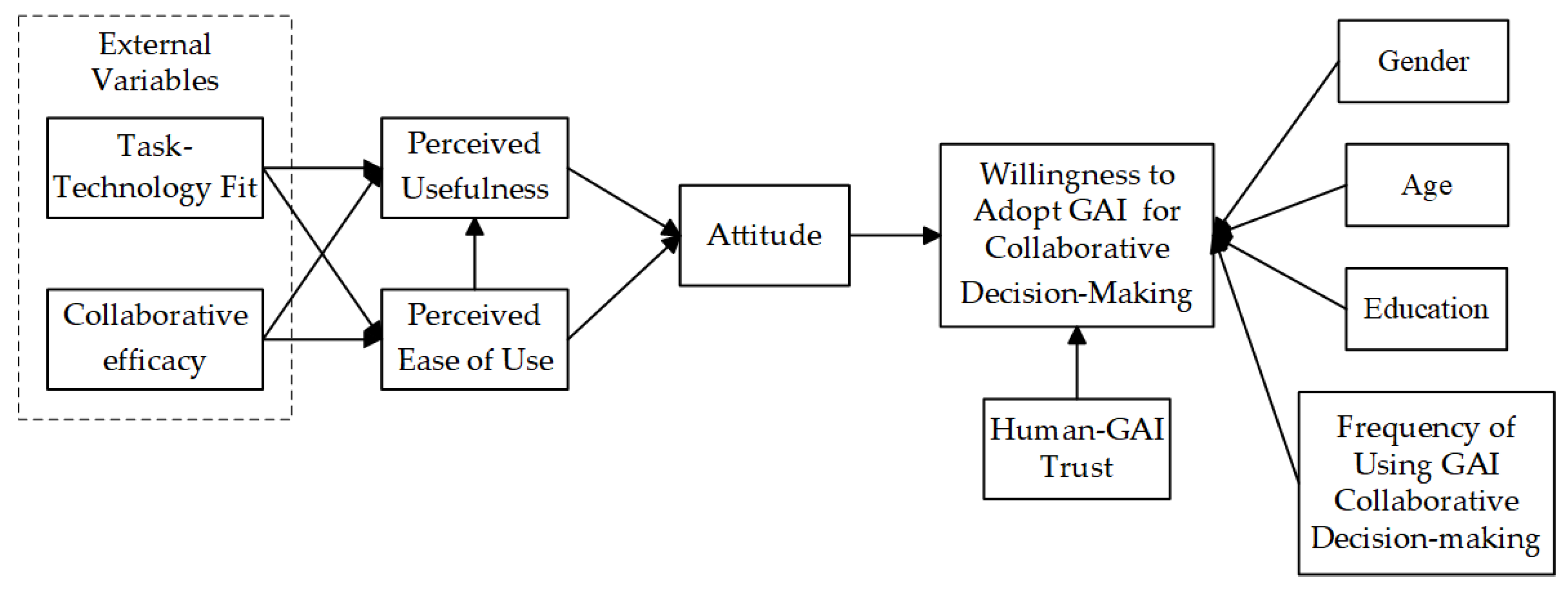

3.1. Research Model

3.2. Research Hypotheses

3.2.1. Perceived Usefulness and Perceived Ease of Use

3.2.2. Attitude

3.2.3. Human–GAI Trust

3.2.4. Task–Technology Fit

3.2.5. Collaborative Efficacy

4. Methodology

4.1. Sampling and Data Collection

4.2. Measures

4.3. Analysis Methods

5. Results

5.1. Reliability and Validity Analyses

5.2. Assessment of the Structural Model

5.3. Fuzzy-Set Qualitative Comparative Analysis

5.3.1. Calibration

5.3.2. Analysis of Necessary Conditions

5.3.3. Analysis of Sufficient Conditions

- (1) Perceived value-driven. Path 1 (PU * ATT * CE) includes perceived usefulness, attitude toward use, and collaborative efficacy as core conditions. This configuration aligns with the theoretical pathway of “Collaborative Efficacy → Perceived Usefulness → Attitude toward Use” and has a raw coverage of 0.557, the highest among the four identified pathways. Path 1 indicates that when users perceive high usefulness and collaborative efficacy in GAI-supported decision-making, and simultaneously hold a positive attitude toward its use, their willingness to adopt GAI for collaborative decision-making is significantly enhanced. A favorable perception of GAI’s utility and collaborative capacity, coupled with a positive behavioral attitude, therefore directly drives users’ willingness to adopt GAI for collaborative decision-making.

- (2) Functional compensation-driven. Path 2 (PU * ~HAT * TTF * CE) includes perceived usefulness, task–technology fit, and collaborative efficacy as core conditions, while human–GAI trust is absent as a peripheral condition. This configuration suggests that even when users exhibit low levels of trust in GAI-supported decision-making, they may still develop a willingness to adopt GAI for collaboration. This willingness is driven by the combined benefits of decision-making effectiveness and task fit; in such cases, technological strengths may outweigh the lack of trust. This reflects a dynamic compensation mechanism, in which the functional value of GAI helps to offset deficits in trust during collaborative decision-making. When GAI demonstrates strong decision support capabilities and enables synergistic improvements in task performance, users may rely on capability-based trust in GAI rather than psychological trust, thus increasing their willingness to collaborate. This pathway also underscores the configurational nature of user behavior: willingness to engage in human–GAI collaborative decision-making is shaped by the interplay of multiple factors. Although the SEM results indicate that human–GAI trust has a direct and positive influence on collaborative willingness, the fsQCA findings reveal a more nuanced picture. In certain contexts, the combination of perceived usefulness, task–technology fit, and collaborative efficacy can compensate for low trust and still promote willingness to engage in human–GAI collaborative decision-making.

- (3) Trust in technology-driven. Path 3 (PU * PEU * HAT * TTF) and Path 4 (PU * PEU * HAT * CE) both feature perceived usefulness, perceived ease of use, and human–GAI trust as core conditions. These are supplemented by task–technology fit in Path 3 and collaborative efficacy in Path 4, which also serve as core conditions. The two paths exhibit similar raw coverage values (0.517 for Path 3 and 0.514 for Path 4), indicating comparable explanatory power. These configurations suggest that when users perceive high levels of usefulness, ease of use, and trust in GAI-supported decision-making, and when either task–technology fit or collaborative efficacy is also high, they are highly likely to exhibit a strong willingness to adopt GAI for collaboration. Both pathways highlight the synergistic effect between trust and technological factors in shaping collaborative decision-making behavior, but they emphasize different aspects of the decision-making context. Path 3 focuses on whether GAI can deliver personalized and efficient solutions that align with specific task requirements, emphasizing the role of task adaptation. In contrast, Path 4 centers on the benefit orientation of the collaboration process, indicating that users are more inclined to participate in GAI-assisted decision-making when they are convinced that it can lead to tangible performance gains.
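As an illustration of how a configuration such as Path 2 (PU * ~HAT * TTF * CE) is evaluated, the following minimal Python sketch computes the fuzzy-set membership of each case in the configuration and the resulting sufficiency consistency and raw coverage. It uses the standard fuzzy-set definitions (minimum for conjunction, 1 − x for negation); the three cases and the function names are hypothetical and are not taken from the study data.

```python
from typing import Dict, List, Tuple

def config_membership(case: Dict[str, float],
                      present: List[str],
                      absent: List[str]) -> float:
    """Fuzzy AND over a configuration: the minimum of the memberships,
    using 1 - x for conditions that must be absent (negated)."""
    scores = [case[c] for c in present] + [1.0 - case[c] for c in absent]
    return min(scores)

def consistency_and_coverage(cases: List[Dict[str, float]],
                             outcome: str,
                             present: List[str],
                             absent: List[str]) -> Tuple[float, float]:
    """Sufficiency consistency = sum(min(x, y)) / sum(x);
    raw coverage = sum(min(x, y)) / sum(y)."""
    x = [config_membership(c, present, absent) for c in cases]
    y = [c[outcome] for c in cases]
    overlap = sum(min(xi, yi) for xi, yi in zip(x, y))
    return overlap / sum(x), overlap / sum(y)

# Hypothetical calibrated memberships for three illustrative cases (NOT study data).
cases = [
    {"PU": 0.9, "HAT": 0.2, "TTF": 0.8, "CE": 0.7, "WACD": 0.8},
    {"PU": 0.6, "HAT": 0.4, "TTF": 0.7, "CE": 0.9, "WACD": 0.5},
    {"PU": 0.3, "HAT": 0.9, "TTF": 0.2, "CE": 0.4, "WACD": 0.4},
]

# Path 2: PU * ~HAT * TTF * CE
cons, cov = consistency_and_coverage(cases, "WACD", ["PU", "TTF", "CE"], ["HAT"])
print(f"consistency={cons:.3f}, raw coverage={cov:.3f}")
```

In this toy example, a case with low trust but high usefulness, fit, and efficacy scores highly on the configuration, which is the compensation pattern described for Path 2.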

5.3.4. Robustness Analysis

6. Conclusions, Discussion, and Implications

6.1. Conclusions

- (1) The SEM results show that task–technology fit, collaborative efficacy, perceived usefulness, perceived ease of use, attitude, and human–GAI trust all have significant positive effects on willingness to adopt GAI for collaborative decision-making. Complementing this, the fsQCA results identify perceived usefulness as a core condition in all four high-willingness pathways, while collaborative efficacy appears as a core condition in three of them. These findings underscore the central role of perceived usefulness and collaborative efficacy in shaping collaborative decision-making willingness. In particular, users’ positive perceptions of GAI in terms of decision support, decision quality, and decision efficiency emerge as core conditions for strengthening willingness to collaborate in decision-making.

- (2) The fsQCA results identify four high-willingness configuration pathways for adopting GAI in collaborative decision-making, which can be grouped into three categories. The perceived value-driven pathway aligns with the theoretical model, emphasizing the role of collaborative efficacy in shaping users’ assessments of GAI’s usefulness in collaborative decision-making [48]. Users who developed positive cognitions regarding GAI’s practical value, collaborative efficacy, and attitudes toward its use exhibited significantly greater willingness to collaborate. The functional compensation-driven pathway demonstrates that the combination of the external variables in the theoretical model (task–technology fit and collaborative efficacy) with perceived usefulness can compensate for users’ lack of human–GAI trust, leading decision-makers to accept collaboration primarily on functional grounds. This finding further confirms the central role of collaborative efficacy and perceived usefulness in shaping collaborative decision-making willingness. Finally, the trust in technology-driven pathway establishes a strong-drive configuration for collaborative decision-making willingness, grounded in perceived usefulness, perceived ease of use, and human–GAI trust, in combination with either task–technology fit or collaborative efficacy. Together, these pathways reveal that users may adopt preferences that are either task-oriented or outcome-oriented, depending on the decision-making context.

6.2. Discussion

6.3. Implications

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Variables | Indicators | Measurement Items | Source |
|---|---|---|---|
| Perceived Usefulness | PU1 | GAI effectively integrates the strengths of humans and GAI, providing real-time feedback that enhances the quality of collaborative decision-making. | [23,30] |
| | PU2 | GAI significantly improves the speed and efficiency of decision-making. | |
| | PU3 | The intelligent analysis and multimodal support offered by GAI enable me to make optimal decisions. | |
| Perceived Ease of Use | PEU1 | When learning to use GAI, I am able to quickly adapt to its interaction methods and personalized features. | [23,30] |
| | PEU2 | Using GAI for collaborative decision-making is straightforward for me. | |
| | PEU3 | When using GAI for decision support, I can readily find solutions or obtain assistance if issues arise. | |
| Attitude | ATT1 | I consider the use of GAI in collaborative decision-making to be necessary. | [32] |
| | ATT2 | I consider the use of GAI in collaborative decision-making to be wise. | |
| | ATT3 | I consider the use of GAI in collaborative decision-making to be worthwhile. | |
| | ATT4 | I believe that GAI performs exceptionally well in collaborative decision-making. | |
| Human–GAI Trust | HAT1 | I trust GAI to safeguard my personal information (e.g., personal data, preferences, decision history). | [25,40] |
| | HAT2 | I believe that the information resources provided by GAI are accurate, reliable, and thoroughly verified, thereby supporting better decision-making. | |
| | HAT3 | I believe that GAI’s decisions comply with ethical standards, laws, and regulations, and that it can explain the rationale for its decisions. | |
| | HAT4 | I believe that GAI’s decision-making process is transparent and that its outputs are explainable. | |
| Task–Technology Fit | TTF1 | I believe that GAI can adjust its outputs in real time based on my feedback to meet my decision-making needs. | [26] |
| | TTF2 | I believe that the information provided by GAI effectively supports the completion of my decision-making tasks. | |
| | TTF3 | I believe that the information and recommendations from GAI are highly aligned with, and practical for, my decision-making objectives. | |
| Collaborative Efficacy | CE1 | I believe that collaborating with GAI facilitates efficient completion of decision-making tasks, and that GAI can continuously optimize its recommendations in real time based on my feedback. | [50,51] |
| | CE2 | I believe that GAI can enhance decision-making quality through active learning, thereby significantly shortening the decision-making cycle. | |
| | CE3 | I believe that GAI reduces my information-processing burden in complex decision-making tasks. | |
| | CE4 | I believe that collaborating with GAI strengthens my judgment and decision-making abilities, particularly in dynamic and complex decision-making environments. | |
| Willingness to Adopt GAI for Collaborative Decision-Making | WACD1 | I am willing to collaborate with GAI in the decision-making process. | [25,32] |
| | WACD2 | I intend to adopt GAI for collaborative decision-making in future complex decision-making tasks. | |
| | WACD3 | I look forward to jointly optimizing decision-making outcomes through the use of GAI. | |

References

1. Wu, J.; Gan, W.; Chen, Z.; Wan, S.; Lin, H. AI-Generated Content (AIGC): A Survey. arXiv 2023, arXiv:2304.06632.
2. Aickelin, U.; Maadi, M.; Khorshidi, H.A. Expert–Machine Collaborative Decision Making: We Need Healthy Competition. IEEE Intell. Syst. 2022, 37, 28–31.
3. Hao, X.; Demir, E.; Eyers, D. Exploring Collaborative Decision-Making: A Quasi-Experimental Study of Human and Generative AI Interaction. Technol. Soc. 2024, 78, 102662.
4. Rahwan, I.; Cebrian, M.; Obradovich, N.; Bongard, J.; Bonnefon, J.-F.; Breazeal, C.; Crandall, J.W.; Christakis, N.A.; Couzin, I.D.; Jackson, M.O.; et al. Machine Behaviour. Nature 2019, 568, 477–486.
5. Wang, F.-Y.; Yang, J.; Wang, X.; Li, J.; Han, Q.-L. Chat with ChatGPT on Industry 5.0: Learning and Decision-Making for Intelligent Industries. IEEE/CAA J. Autom. Sin. 2023, 10, 831–834.
6. Haesevoets, T. Human-Machine Collaboration in Managerial Decision Making. Comput. Hum. Behav. 2021, 119, 106730.
7. Dwivedi, Y.K.; Kshetri, N.; Hughes, L.; Slade, E.L.; Jeyaraj, A.; Kar, A.K.; Baabdullah, A.M.; Koohang, A.; Raghavan, V.; Ahuja, M.; et al. “So What If ChatGPT Wrote It?” Multidisciplinary Perspectives on Opportunities, Challenges and Implications of Generative Conversational AI for Research, Practice and Policy. Int. J. Inf. Manag. 2023, 71, 102642.
8. Yu, T.; Tian, Y.; Chen, Y.; Huang, Y.; Pan, Y.; Jang, W. How Do Ethical Factors Affect User Trust and Adoption Intentions of AI-Generated Content Tools? Evidence from a Risk-Trust Perspective. Systems 2025, 13, 461.
9. Coeckelbergh, M. Narrative Responsibility and Artificial Intelligence. AI Soc. 2023, 38, 2437–2450.
10. Puerta-Beldarrain, M.; Gómez-Carmona, O.; Sánchez-Corcuera, R.; Casado-Mansilla, D.; López-de-Ipiña, D.; Chen, L. A Multifaceted Vision of the Human-AI Collaboration: A Comprehensive Review. IEEE Access 2025, 13, 29375–29405.
11. Wamba, S.F.; Queiroz, M.M.; Trinchera, L. The Role of Artificial Intelligence-Enabled Dynamic Capability on Environmental Performance: The Mediation Effect of a Data-Driven Culture in France and the USA. Int. J. Prod. Econ. 2024, 268, 109131.
12. Banh, L.; Strobel, G. Generative Artificial Intelligence. Electron. Mark. 2023, 33, 63.
13. Silva, M.; Santos, E.; Alves, K.; Silva, H.; Pedrosa, F.; Valença, G.; Brito, K. Using generative AI for simplifying official documents in the public accounts domain. In Proceedings of the Workshop de Computação Aplicada em Governo Eletrônico (WCGE), Brasilia, Brazil, 21–25 July 2024; SBC: São Paulo, Brazil, 2024; pp. 246–253.
14. Rao, V.M.; Hla, M.; Moor, M.; Adithan, S.; Kwak, S.; Topol, E.J.; Rajpurkar, P. Multimodal Generative AI for Medical Image Interpretation. Nature 2025, 639, 888–896.
15. Li, L.; Liu, Y.; Jin, Y.; Cheng, T.C.E.; Zhang, Q. Generative AI-Enabled Supply Chain Management: The Critical Role of Coordination and Dynamism. Int. J. Prod. Econ. 2024, 277, 109388.
16. Osborne, M.R.; Bailey, E.R. Me vs. the Machine? Subjective Evaluations of Human- and AI-Generated Advice. Sci. Rep. 2025, 15, 3980.
17. Chen, Y.; Liu, T.X.; Shan, Y.; Zhong, S. The Emergence of Economic Rationality of GPT. Proc. Natl. Acad. Sci. USA 2023, 120, e2316205120.
18. Binz, M.; Schulz, E. Using Cognitive Psychology to Understand GPT-3. Proc. Natl. Acad. Sci. USA 2023, 120, e2218523120.
19. Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Inf. Syst. 2025, 43, 42:1–42:55.
20. Huang, Q.; Dong, X.; Zhang, P.; Wang, B.; He, C.; Wang, J.; Lin, D.; Zhang, W.; Yu, N. OPERA: Alleviating Hallucination in Multi-Modal Large Language Models via over-Trust Penalty and Retrospection-Allocation. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 13418–13427.
21. Zhang, Y.; Gosline, R. Human Favoritism, Not AI Aversion: People’s Perceptions (and Bias) toward Generative AI, Human Experts, and Human–GAI Collaboration in Persuasive Content Generation. Judgm. Decis. Mak. 2023, 18, e41.
22. Celiktutan, B.; Klesse, A.-K.; Tuk, M.A. Acceptability Lies in the Eye of the Beholder: Self-Other Biases in GenAI Collaborations. Int. J. Res. Mark. 2024, 41, 496–512.
23. Davis, F.D.; Bagozzi, R.P.; Warshaw, P.R. User Acceptance of Computer Technology: A Comparison of Two Theoretical Models. Manag. Sci. 1989, 35, 982–1003.
24. Goodhue, D.L.; Thompson, R.L. Task-Technology Fit and Individual Performance. MIS Q. 1995, 19, 213–236.
25. Shata, A.; Hartley, K. Artificial Intelligence and Communication Technologies in Academia: Faculty Perceptions and the Adoption of Generative AI. Int. J. Educ. Technol. High. Educ. 2025, 22, 14.
26. Al-Emran, M.; Al-Sharafi, M.A.; Foroughi, B.; Al-Qaysi, N.; Mansoor, D.; Beheshti, A.; Ali, N. Evaluating the Influence of Generative AI on Students’ Academic Performance through the Lenses of TPB and TTF Using a Hybrid SEM-ANN Approach. Educ. Inf. Technol. 2025, 30, 17557–17587.
27. Zhou, J.; Zhang, H. Factors Influencing University Students’ Continuance Intentions towards Self-Directed Learning Using Artificial Intelligence Tools: Insights from Structural Equation Modeling and Fuzzy-Set Qualitative Comparative Analysis. Appl. Sci. 2024, 14, 8363.
28. Rapp, A.; Curti, L.; Boldi, A. The Human Side of Human-Chatbot Interaction: A Systematic Literature Review of Ten Years of Research on Text-Based Chatbots. Int. J. Hum.-Comput. Stud. 2021, 151, 102630.
29. Choung, H.; David, P.; Ross, A. Trust in AI and Its Role in the Acceptance of AI Technologies. Int. J. Hum.–Comput. Interact. 2023, 39, 1727–1739.
30. Belanche, D.; Casaló, L.V.; Flavián, C. Artificial Intelligence in FinTech: Understanding Robo-Advisors Adoption among Customers. Ind. Manag. Data Syst. 2019, 119, 1411–1430.
31. Wang, Y.-J.; Wang, N.; Li, M.; Li, H.; Huang, G.Q. End-Users’ Acceptance of Intelligent Decision-Making: A Case Study in Digital Agriculture. Adv. Eng. Inform. 2024, 60, 102387.
32. Lee, J.; Kim, J.; Choi, J.Y. The Adoption of Virtual Reality Devices: The Technology Acceptance Model Integrating Enjoyment, Social Interaction, and Strength of the Social Ties. Telemat. Inform. 2019, 39, 37–48.
33. Dietvorst, B.J.; Simmons, J.P.; Massey, C. Algorithm Aversion: People Erroneously Avoid Algorithms after Seeing Them Err. J. Exp. Psychol. Gen. 2015, 144, 114–126.
34. Jussupow, E.; Benbasat, I.; Heinzl, A. An Integrative Perspective on Algorithm Aversion and Appreciation in Decision-Making. MIS Q. 2024, 48, 1575–1590.
35. Ivanov, S.; Webster, C. Automated Decision-Making: Hoteliers’ Perceptions. Technol. Soc. 2024, 76, 102430.
36. Brüns, J.D.; Meißner, M. Do You Create Your Content Yourself? Using Generative Artificial Intelligence for Social Media Content Creation Diminishes Perceived Brand Authenticity. J. Retail. Consum. Serv. 2024, 79, 103790.
37. Shin, D. The Effects of Explainability and Causability on Perception, Trust, and Acceptance: Implications for Explainable AI. Int. J. Hum.-Comput. Stud. 2021, 146, 102551.
38. Mårell-Olsson, E.; Bensch, S.; Hellström, T.; Alm, H.; Hyllbrant, A.; Leonardson, M.; Westberg, S. Navigating the Human–Robot Interface—Exploring Human Interactions and Perceptions with Social and Telepresence Robots. Appl. Sci. 2025, 15, 1127.
39. Logg, J.M.; Minson, J.A.; Moore, D.A. Algorithm Appreciation: People Prefer Algorithmic to Human Judgment. Organ. Behav. Hum. Decis. Process. 2019, 151, 90–103.
40. Keding, C.; Meissner, P. Managerial Overreliance on AI-Augmented Decision-Making Processes: How the Use of AI-Based Advisory Systems Shapes Choice Behavior in R&D Investment Decisions. Technol. Forecast. Soc. Change 2021, 171, 120970.
41. Hyun Baek, T.; Kim, M. Is ChatGPT Scary Good? How User Motivations Affect Creepiness and Trust in Generative Artificial Intelligence. Telemat. Inform. 2023, 83, 102030.
42. Grewal, D.; Benoit, S.; Noble, S.M.; Guha, A.; Ahlbom, C.-P.; Nordfält, J. Leveraging In-Store Technology and AI: Increasing Customer and Employee Efficiency and Enhancing Their Experiences. J. Retail. 2023, 99, 487–504.
43. Benbya, H.; Strich, F.; Tamm, T. Navigating Generative Artificial Intelligence Promises and Perils for Knowledge and Creative Work. J. Assoc. Inf. Syst. 2024, 25, 23–36.
44. Liu, D.; Luo, J. College Learning from Classrooms to the Internet: Adoption of the YouTube as Supplementary Tool in COVID-19 Pandemic Environment. Educ. Urban Soc. 2022, 54, 848–870.
45. Bandura, A. Self-Efficacy Mechanism in Human Agency. Am. Psychol. 1982, 37, 122.
46. Sehgal, P.; Nambudiri, R.; Mishra, S.K. Teacher Effectiveness through Self-Efficacy, Collaboration and Principal Leadership. Int. J. Educ. Manag. 2017, 31, 505–517.
47. Tan, J.; Wu, L.; Ma, S. Collaborative Dialogue Patterns of Pair Programming and Their Impact on Programming Self-Efficacy and Coding Performance. Br. J. Educ. Technol. 2024, 55, 1060–1081.
48. Li, T.; Zhan, Z.; Ji, Y.; Li, T. Exploring Human and AI Collaboration in Inclusive STEM Teacher Training: A Synergistic Approach Based on Self-Determination Theory. Internet High. Educ. 2025, 65, 101003.
49. Zhenlei, Y.; Song, L.; Minyi, D.; Qiang, H. Assessing Knowledge Anxiety in Researchers: A Comprehensive Measurement Scale. PeerJ 2024, 12, e18478.
50. Shaw, J.D.; Zhu, J.; Duffy, M.K.; Scott, K.L.; Shih, H.-A.; Susanto, E. A Contingency Model of Conflict and Team Effectiveness. J. Appl. Psychol. 2011, 96, 391–400.
51. Shahzad, M.F.; Xu, S.; Zahid, H. Exploring the Impact of Generative AI-Based Technologies on Learning Performance through Self-Efficacy, Fairness & Ethics, Creativity, and Trust in Higher Education. Educ. Inf. Technol. 2025, 30, 3691–3716.
52. Ragin, C.C. Redesigning Social Inquiry: Fuzzy Sets and Beyond; University of Chicago Press: Chicago, IL, USA, 2008.
53. Xie, X.; Wang, H. How Can Open Innovation Ecosystem Modes Push Product Innovation Forward? An fsQCA Analysis. J. Bus. Res. 2020, 108, 29–41.
54. Cronbach, L.J. Coefficient Alpha and the Internal Structure of Tests. Psychometrika 1951, 16, 297–334.
55. Podsakoff, P.M.; MacKenzie, S.B.; Lee, J.-Y.; Podsakoff, N.P. Common Method Biases in Behavioral Research: A Critical Review of the Literature and Recommended Remedies. J. Appl. Psychol. 2003, 88, 879–903.
56. Huang, Z. Research on Innovation Capability of Regional Innovation System Based on Fuzzy-Set Qualitative Comparative Analysis: Evidence from China. Systems 2022, 10, 220.
57. Pappas, I.O.; Woodside, A.G. Fuzzy-Set Qualitative Comparative Analysis (fsQCA): Guidelines for Research Practice in Information Systems and Marketing. Int. J. Inf. Manag. 2021, 58, 102310.
58. Castro, F.G.; Kellison, J.G.; Boyd, S.J.; Kopak, A. A Methodology for Conducting Integrative Mixed Methods Research and Data Analyses. J. Mix. Methods Res. 2010, 4, 342–360.
59. Trist, E.L.; Bamforth, K.W. Some Social and Psychological Consequences of the Longwall Method of Coal-Getting. Hum. Relat. 1951, 4, 3–38.

| Variables | Indicators | Factor Loading | Alpha | CR | AVE |
|---|---|---|---|---|---|
| Perceived Usefulness | PU1 | 0.787 | 0.842 | 0.842 | 0.640 |
| | PU2 | 0.815 | | | |
| | PU3 | 0.797 | | | |
| Perceived Ease of Use | PEU1 | 0.806 | 0.852 | 0.853 | 0.658 |
| | PEU2 | 0.825 | | | |
| | PEU3 | 0.803 | | | |
| Attitude | ATT1 | 0.834 | 0.893 | 0.893 | 0.677 |
| | ATT2 | 0.82 | | | |
| | ATT3 | 0.817 | | | |
| | ATT4 | 0.819 | | | |
| Human–GAI Trust | HAT1 | 0.808 | 0.878 | 0.878 | 0.644 |
| | HAT2 | 0.788 | | | |
| | HAT3 | 0.794 | | | |
| | HAT4 | 0.819 | | | |
| Task–Technology Fit | TTF1 | 0.83 | 0.842 | 0.842 | 0.640 |
| | TTF2 | 0.789 | | | |
| | TTF3 | 0.78 | | | |
| Collaborative Efficacy | CE1 | 0.791 | 0.879 | 0.879 | 0.645 |
| | CE2 | 0.803 | | | |
| | CE3 | 0.799 | | | |
| | CE4 | 0.819 | | | |
| Willingness to Adopt GAI for Collaborative Decision-Making | WACD1 | 0.804 | 0.844 | 0.844 | 0.644 |
| | WACD2 | 0.826 | | | |
| | WACD3 | 0.777 | | | |
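The CR and AVE columns can be reproduced from the factor loadings with the conventional formulas for standardized loadings: AVE is the mean squared loading, and CR is (Σλ)² / ((Σλ)² + Σ(1 − λ²)). The sketch below assumes that these conventional formulas apply here (the text does not state which were used) and uses illustrative function names; it checks the Perceived Usefulness row.

```python
from typing import Sequence

def ave(loadings: Sequence[float]) -> float:
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(l * l for l in loadings) / len(loadings)

def composite_reliability(loadings: Sequence[float]) -> float:
    """Composite reliability, assuming standardized loadings so that each
    indicator's error variance is 1 - loading**2."""
    total = sum(loadings)
    error = sum(1.0 - l * l for l in loadings)
    return total ** 2 / (total ** 2 + error)

# Loadings of PU1-PU3 as reported in the table above.
pu = [0.787, 0.815, 0.797]
print(round(ave(pu), 3), round(composite_reliability(pu), 3))  # 0.64 0.842
```

Both values agree with the reported AVE (0.640) and CR (0.842) for Perceived Usefulness.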

| | PU | PEU | ATT | HAT | TTF | CE | WACD |
|---|---|---|---|---|---|---|---|
| PU | 0.800 | | | | | | |
| PEU | 0.659 | 0.811 | | | | | |
| ATT | 0.501 | 0.488 | 0.823 | | | | |
| HAT | 0.335 | 0.295 | 0.583 | 0.802 | | | |
| TTF | 0.401 | 0.357 | 0.623 | 0.466 | 0.800 | | |
| CE | 0.501 | 0.448 | 0.367 | 0.403 | 0.303 | 0.803 | |
| WACD | 0.641 | 0.576 | 0.531 | 0.536 | 0.506 | 0.627 | 0.803 |
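A matrix of this form is usually read with the Fornell–Larcker criterion: discriminant validity is supported when each diagonal entry exceeds every correlation in its row and column. The sketch below assumes that the diagonal holds the square root of each construct's AVE (the values are consistent with the reported AVEs) and that the off-diagonal entries are inter-construct correlations; the function name is illustrative.

```python
from typing import List, Tuple

labels = ["PU", "PEU", "ATT", "HAT", "TTF", "CE", "WACD"]

# Lower triangle of the matrix above; the last entry of each row is the diagonal.
lower = [
    [0.800],
    [0.659, 0.811],
    [0.501, 0.488, 0.823],
    [0.335, 0.295, 0.583, 0.802],
    [0.401, 0.357, 0.623, 0.466, 0.800],
    [0.501, 0.448, 0.367, 0.403, 0.303, 0.803],
    [0.641, 0.576, 0.531, 0.536, 0.506, 0.627, 0.803],
]

def fornell_larcker_violations(names: List[str],
                               tri: List[List[float]]) -> List[Tuple[str, str, float]]:
    """Return every construct pair whose correlation is not strictly below
    the smaller of the two diagonal entries."""
    violations = []
    for i, row in enumerate(tri):
        for j in range(i):
            if row[j] >= min(tri[i][i], tri[j][j]):
                violations.append((names[i], names[j], row[j]))
    return violations

print(fornell_larcker_violations(labels, lower))  # [] means no pair violates the criterion
```

The largest correlation in the matrix (0.659, between PU and PEU) is below both corresponding diagonal entries, so no pair is flagged.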

| Fitting Index | χ²/df | GFI | CFI | TLI | IFI | RMSEA |
|---|---|---|---|---|---|---|
| Recommended value | <3 | >0.90 | >0.90 | >0.90 | >0.90 | <0.08 |
| Actual value | 2.149 | 0.909 | 0.943 | 0.933 | 0.944 | 0.049 |

| Variables | Full Membership Threshold (95%) | Crossover (50%) | Full Non-Membership Threshold (5%) |
|---|---|---|---|
| PU | 4.67 | 4.33 | 1.67 |
| PEU | 4.67 | 4.00 | 1.67 |
| ATT | 4.75 | 4.00 | 1.50 |
| HAT | 4.75 | 4.00 | 1.50 |
| TTF | 4.67 | 4.00 | 1.67 |
| CE | 4.61 | 3.75 | 1.50 |
| WACD | 4.67 | 4.00 | 1.67 |
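To show how three anchors of this kind turn a raw scale score into a fuzzy membership, the sketch below implements the widely used direct calibration method, in which the full-membership, crossover, and full non-membership anchors are mapped to log-odds of +3, 0, and −3 and then passed through a logistic function. That this particular method was applied here is an assumption, and the function name `calibrate` is illustrative.

```python
import math

def calibrate(x: float, full: float, cross: float, non: float) -> float:
    """Direct calibration: scale the deviation from the crossover so that the
    full-membership anchor maps to log-odds +3 and the full non-membership
    anchor to -3, then convert log-odds to a membership score in (0, 1)."""
    if x >= cross:
        log_odds = 3.0 * (x - cross) / (full - cross)
    else:
        log_odds = 3.0 * (x - cross) / (cross - non)
    return 1.0 / (1.0 + math.exp(-log_odds))

# Anchors for PU from the table above.
pu = {"full": 4.67, "cross": 4.33, "non": 1.67}

for raw in (1.67, 3.00, 4.33, 4.67):
    print(raw, round(calibrate(raw, **pu), 3))
```

With these anchors, a PU score at the crossover (4.33) receives a membership of 0.5, while scores at the upper and lower anchors receive roughly 0.95 and 0.05, matching the percentile labels in the column headers.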

| Variables | Consistency | Coverage |
|---|---|---|
| PU1 | 0.75232 | 0.82156 |
| ~PU1 | 0.562701 | 0.655996 |
| PEU1 | 0.776609 | 0.765062 |
| ~PEU1 | 0.49985 | 0.659075 |
| ATT1 | 0.742434 | 0.773669 |
| ~ATT1 | 0.554145 | 0.680871 |
| HAT1 | 0.756919 | 0.770243 |
| ~HAT1 | 0.553277 | 0.69964 |
| TTF1 | 0.770015 | 0.765279 |
| ~TTF1 | 0.524931 | 0.684112 |
| CE1 | 0.798244 | 0.797816 |
| ~CE1 | 0.501553 | 0.648865 |
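A necessity table of this kind is typically screened against a consistency cutoff; 0.9 is the conventional value in fsQCA practice and is assumed here rather than taken from the text. The sketch also assumes that the first numeric column of the table is consistency. It lists any condition that reaches the cutoff and reports the strongest candidate.

```python
NECESSITY_THRESHOLD = 0.9  # conventional cutoff; an assumption, not stated in the text

# First numeric column of the table above (assumed to be consistency).
consistency = {
    "PU1": 0.75232, "~PU1": 0.562701,
    "PEU1": 0.776609, "~PEU1": 0.49985,
    "ATT1": 0.742434, "~ATT1": 0.554145,
    "HAT1": 0.756919, "~HAT1": 0.553277,
    "TTF1": 0.770015, "~TTF1": 0.524931,
    "CE1": 0.798244, "~CE1": 0.501553,
}

# Conditions that would count as necessary for high willingness on their own.
necessary = [name for name, value in consistency.items()
             if value >= NECESSITY_THRESHOLD]
strongest = max(consistency, key=consistency.get)

print("necessary conditions:", necessary)
print("highest consistency:", strongest, consistency[strongest])
```

Under this cutoff, no single condition (present or absent) qualifies as necessary; the highest value is for CE1 at about 0.798, which is why the analysis of combinations of conditions is informative.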

| Causal Conditions | S1 | S2 | S3 | S4 |
|---|---|---|---|---|
| PU | ⬤ | ⬤ | ⬤ | ⬤ |
| PEU | | | ⬤ | ⬤ |
| ATT | ⬤ | | | |
| HAT | | ⊗ | ⬤ | ⬤ |
| TTF | | ⬤ | ⬤ | |
| CE | ⬤ | ⬤ | | ⬤ |
| Consistency | 0.930 | 0.958 | 0.949 | 0.954 |
| Raw coverage | 0.557 | 0.380 | 0.517 | 0.514 |
| Unique coverage | 0.042 | 0.012 | 0.044 | 0.019 |
| Overall consistency | 0.918 | | | |
| Overall coverage | 0.650 | | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Deng, J.; Wu, F.; Qi, J. Research on Influencing Factors of Users’ Willingness to Adopt GAI for Collaborative Decision-Making in Generative Artificial Intelligence Context. Appl. Sci. 2025, 15, 10322. https://doi.org/10.3390/app151910322
