Abstract

In today’s fast-paced release cycles, mobile app user reviews offer a valuable source for tracking the evolution of user needs. At the core of these needs lies a structure of interdependencies—some enhancements are only relevant in specific usage contexts, while others may conflict when implemented together. Identifying these relationships is essential for anticipating feature interactions, resolving contradictions, and enabling context-aware, user-driven planning. The present work introduces an ontology-enhanced AI framework for predicting whether the requirements mentioned in reviews are interdependent. The core component is a Bidirectional Encoder Representations from Transformers (BERT) classifier retrained within a large-language-model-driven active learning loop that focuses on instances with uncertainty. The framework integrates contextual and structural reasoning; contextual analysis captures the semantic intent and functional role of each requirement, enriching the understanding of user expectations. Structural reasoning relies on a domain-specific ontology that serves as both a knowledge base and an inference layer, guiding the grouping of requirements. The model achieved strong performance on annotated banking app reviews, with a validation F1-score of 0.9565 and an area under the ROC curve (AUC) exceeding 0.97. The study results contribute to supporting developers in prioritizing features based on dependencies and delivering more coherent, conflict-free releases.

1. Introduction

Requirements Engineering (RE) represents a critical phase in the software development lifecycle, as it systematically captures, analyzes, and validates user needs to guide the specification of system functionalities. Its role extends beyond technical translation, functioning as a strategic process that ensures alignment between developed software and the evolving expectations of users and business stakeholders [1,2]. The precision in eliciting and prioritizing requirements directly influences the relevance, quality, and usability of the final product [3,4]. Empirical studies underscore that effective requirements engineering contributes to optimizing development resources, reducing project uncertainty, and enhancing stakeholder satisfaction [1], thereby establishing RE as a key determinant of project success in contemporary software practice. In light of its foundational significance, recent research has increasingly explored user-generated content as a rich and scalable source for identifying real-world requirements. Mobile app stores have emerged as a dynamic repository of user needs, offering a continuous stream of changing expectations that are reflected in user reviews. These reviews, rich in insights, reflect real-world user experiences, feature requests, and pain points, offering a direct line to understanding what users truly seek in their mobile applications [3,5].

With the advancement of Artificial Intelligence (AI), the shift from traditional Machine Learning (ML) to sophisticated Natural Language Processing (NLP) has significantly improved the extraction of actionable insights from user reviews [1,6,7]. Early ML techniques enabled basic sentiment detection and keyword-based classification, laying the groundwork for more complex analyses. With the advent of deep-learning-based NLP models such as BERT, it became feasible to perform nuanced classification of user feedback by intent, type, and domain, thereby capturing the contextual and semantic richness embedded in natural language. More recently, the emergence of Large Language Models (LLMs) such as the Generative Pre-Trained Transformer (GPT) has further expanded the generative potential of these systems, enabling more coherent synthesis of feedback, automated summarization, and interactive dialog generation [6]. Despite these advancements, one critical challenge remains largely underexplored: the discovery of latent dependencies among user-stated requirements. While current models excel at extracting and classifying individual requirements, they often fail to identify how these requirements influence or constrain each other, a gap that hampers holistic understanding and optimal decision making in software evolution [1]. This emphasis on isolated requirement extraction neglects the intricate web of relationships between different user needs [1,7]. Manual identification of these dependencies is resource-intensive, demanding significant time and effort from analysts, and is susceptible to the biases and subjective interpretations of those involved. A sophisticated natural language processing technique is therefore necessary to capture and predict these dependencies effectively from dynamically evolving user reviews.
Recent work has demonstrated that transformer-based models, particularly BERT, have the potential to accurately interpret nuanced linguistic patterns. As a binary classifier, BERT reliably distinguishes dependent from independent requirement pairs, harnessing deep contextual embeddings that uncover subtle semantic signals often overlooked by traditional classifiers [4,8,9,10].

To address these challenges, this study introduces a framework that integrates knowledge graphs with multiple AI-driven components, most notably large language models and BERT-based classifiers. Central to the framework’s architecture is an LLM-driven active learning loop, which facilitates iterative refinement of the classification model through cycles of prediction, uncertainty-based instance selection, expert annotation, and retraining [11,12]. This adaptive mechanism ensures that the system remains responsive to the evolving landscape of user needs as expressed in app store feedback. The proposed framework thus enhances the accuracy, scalability, and adaptability of requirement dependency extraction in dynamic software environments. In addition, the BERT classifier combines contextual reasoning and structural reasoning to enhance prediction capabilities. Contextual reasoning leverages semantic dimensions, including requirement intent, functional or non-functional type, and domain-specific relevance, which are essential for accurately distinguishing subtle variations in user needs and ensuring that predictions align with the contextual meaning of user feedback [13]. Structural reasoning relies on structural relations computed between requirements, allowing the model to uncover implicit hierarchical relationships and directional dependencies that are often overlooked in purely semantic analyses, thereby improving the consistency and accuracy of predicted dependency relations [14,15,16]. However, the accuracy and adaptability of BERT depend substantially on the availability of labeled training data and the ability to respond promptly to continuously evolving user-generated requirements.

In the realm of RE, ontologies provide cognitive and structural mappings of domain-specific knowledge that demonstrate utility across a spectrum of tasks, including facilitating requirements elicitation by providing a structured vocabulary and semantic context for understanding stakeholder needs, enabling more precise and unambiguous specification of requirements through the formal definition of concepts and relationships, as well as supporting conflict management by identifying inconsistencies and overlaps between requirements [17,18,19]. Dependency analysis frameworks leverage ontologies to represent various dependency types between requirements and to facilitate conflict detection throughout the software lifecycle. Furthermore, a knowledge graph represents the structural relationships between requirements, providing a valuable resource for identifying dependencies that may not be immediately apparent from the text of reviews [14].

The proposed framework integrates large language models in three key scenarios, creating a robust and coherent AI-driven approach. First, LLMs facilitate the initial extraction and generation of structured requirement statements from unstructured, user-generated reviews, significantly reducing the manual effort required and enabling more consistent representation of evolving user needs [3]. Second, within an active learning loop, LLMs replace human evaluators by estimating model-based uncertainty and selectively identifying the most uncertain requirement pairs for retraining the BERT classifier. With an automated active-learning process, the classifier can be continuously refined while requiring minimal human intervention, thereby enhancing its responsiveness to evolving requirements and lowering annotation costs [20,21]. Third, LLMs play a pivotal role in ontology construction by automatically proposing new domain concepts, relations, and structural refinements, allowing the ontology to remain aligned with emerging software features and user expectations [22,23]. Our study investigates two research questions. RQ1: To what extent can knowledge integration, through contextual and structural reasoning, enhance the prediction performance of requirement dependencies extracted from unstructured user feedback? RQ2: To what extent can iterative refinement through dynamic knowledge feedback improve the learning efficiency of the dependency prediction process?

In summary, our research contributes a comprehensive and scalable solution to the challenge of automating requirement dependency analysis from dynamic user-generated content. By integrating contextual reasoning, which captures the intent, type, and domain of each requirement, with ontology-driven structural reasoning, the proposed framework offers a holistic understanding of inter-requirement relationships. The incorporation of a large-language-model-based active learning loop ensures that both the classifier and the ontology evolve in alignment with shifting user needs, enabling continuous refinement of prediction accuracy. This dual-layer reasoning not only improves the interpretability and reliability of dependency detection but also enhances the coherence of release planning decisions. Moreover, the framework’s fully automated pipeline overcomes the scalability constraints of manual analysis, enabling development teams to harness the vast and growing volume of app-store feedback effectively. By explicitly modeling inter-requirement dependencies, the proposed framework provides developers with richer decision-making inputs, which contribute to more coherent and optimized release roadmaps for user-centered software development.

2. Literature Review

The increasing complexity of software systems and the dynamic nature of user expectations necessitate robust methods for managing requirements throughout the software development lifecycle [7]. A critical aspect of effective requirement management involves accurately identifying and understanding the dependencies that exist between various requirements, particularly those elicited directly from customer feedback [24]. Such understanding is crucial for effective decision making in release planning, resource allocation, and ensuring system coherence [24]. However, capturing these dependencies remains inherently challenging due to the informal and often ambiguous nature of customer input, which can obscure subtle interconnections between desired functionalities or constraints [25]. The proliferation of natural language processing techniques offers promising avenues for automated extraction and analysis of such dependencies from unstructured textual feedback [26]. However, the inherent ambiguities and nuances within natural language often pose significant hurdles for fully automated dependency identification, underscoring the need for advanced approaches that can capture more nuanced semantic relationships [27]. The complexity of this challenge is further exacerbated by the volume and velocity of feedback in agile development environments, highlighting the need for scalable and precise methodologies [1,3,7,28,29].

The following section evaluates the current state of automated dependency extraction from natural language, focusing on the application of AI and ontological methods to provide a comprehensive understanding of their effectiveness and limitations. Recent research has focused on automating the identification of dependencies between software requirements using natural language processing techniques. In this context, the authors of [4] proposed a two-stage approach using NLP and weakly supervised learning to extract binary dependencies and their types. In addition, the authors of [30] developed a method of identifying dependencies in evolutionary requirements, extracting relations such as PART OF, AND, OR, and XOR. Another study presented two content-based recommendation approaches, one using document classification techniques and another based on Latent Semantic Analysis. Their evaluation showed that Random Forest with probabilistic features achieved the best prediction quality (F1 0.89) [31]. Similarly, the authors of [32] implemented an approach to identify required dependencies using supervised classification techniques, with Random Forest classifiers achieving an F1-score of ~82%. These studies demonstrate the potential of NLP and machine learning techniques in automating the complex task of identifying requirement dependencies. A series of studies by the same authors has made notable contributions to advancing the extraction of requirement dependencies. In their first work, the authors of [15] proposed a method utilizing part-of-speech features and an enhanced stacking ensemble learning model to improve extraction performance. Building upon this foundation, their subsequent study [11] developed an integrated active learning approach to optimize sample selection for dependency extraction. In addition, the authors of [24] addressed value-related dependencies by mining user preferences and incorporating them into the release planning process. 
To improve requirement analysis, the authors of [18] introduced an ontology-driven framework for domain-specific requirements, incorporating formal scenario descriptions and dependency graphs. These approaches aim to overcome challenges in traditional requirement engineering methods, such as manual analysis inefficiencies, heterogeneous model interoperability, and reusability across domains. By leveraging machine learning, semantic techniques, and user preferences, these studies demonstrate significant improvements in requirement dependency extraction accuracy and analysis capabilities.

The inherent difficulty in detecting requirement dependencies stems from their natural language documentation, which often leads to incomplete or incorrect identification, thereby reducing release quality and necessitating substantial rework [14]. Moreover, the subjective interpretation of natural language requirements by different stakeholders can lead to inconsistencies in identifying dependencies, further complicating the development process. Such manual analysis is time-consuming and effort-intensive, underscoring the critical need for automated solutions to enhance efficiency and accuracy in requirement management [4,29,30,33,34]. To address these challenges, advanced AI techniques, specifically machine learning and natural language processing, have been increasingly employed to automate the detection of dependencies, offering a more scalable and precise alternative to manual methods [11,14]. Natural language processing has shown promise in identifying semantic dependencies between requirements; however, much of the existing research remains theoretical or focuses on pairwise analyses, overlooking the dynamic interplay of multiple requirements with varying degrees of dependency [4]. A significant challenge lies in the lack of robust evaluation methods for these approaches, often relying on simple case studies rather than comprehensive comparative assessments [26].

Despite these advancements, a significant gap persists in the extensive integration of ontological knowledge with AI methods to enrich the semantic understanding of dependencies, moving beyond mere syntactic or lexical associations to capture deeper conceptual relationships [4]. This integration is vital for mitigating the inherent verbosity, ambiguity, and inconsistency often found in natural language requirements, which traditionally complicate the selection of appropriate system architectures and the evaluation of alternative designs [30]. Further, while NLP techniques are critical for processing natural language requirements, their effectiveness can be significantly bolstered by integrating ontological frameworks that provide a structured and formal representation of domain knowledge, enabling a deeper, semantically aware analysis of dependencies [19,35]. This structured approach can help to formalize the often-informal knowledge embedded within natural language requirements, thereby facilitating more precise and automated dependency detection [14,18]. Furthermore, the integration of domain-specific ontologies can help resolve ambiguities and inconsistencies present in natural language, providing a standardized framework for interpreting and classifying various types of dependencies and thereby enhancing the precision of automated tools [18].

Our study investigates how artificial intelligence, particularly natural language processing, can be synergistically combined with ontological reasoning to overcome these limitations, thus providing a robust framework for automated dependency detection in software requirements. Natural language processing, a subfield of artificial intelligence, enables computers to comprehend, interpret, and generate human language, which is crucial for transforming raw textual data into structured, analyzable information [2,3,28]. Moreover, while recent advances in LLMs and BERT-based architectures have improved the extraction of requirement-related information [3,6,20,36,37], there is no unified framework that integrates semantic features (such as intent, domain, and requirement type) with structural reasoning powered by ontological knowledge to detect requirement dependencies from mobile app reviews. This gap motivates our proposed ontology-enhanced AI framework, which combines semantic analysis with structural dependency modeling to uncover the hidden architecture of user needs directly from natural language feedback.

In parallel, recent studies have explored strategies to reduce distributional bias in large language models. For example, the authors of [38] proposed an imitation-learning-based approach (DaD-DAgger) to mitigate exposure bias in LLM distillation, showing that combining teacher outputs (soft labels) with ground-truth data (hard labels) yields substantial improvements in predictive accuracy and generative quality. Their work underscores the importance of addressing discrepancies between training and deployment conditions. Building on this line of research, our framework targets a different but related challenge: dependency bias in requirement engineering. Unlike exposure bias in autoregressive text generation, dependency bias emerges when labeled data do not fully capture the semantic and structural relations underlying user requirements. By integrating ontology-driven structural features with LLM-driven active learning, we aim to enhance generalizability and robustness in requirement dependency detection.

3. Methodology

The methodology adopted in this study is grounded in our previously proposed framework [39], which outlines a set of structured criteria for calculating requirement priorities—one of which involves detecting inter-requirement dependencies. While the prior framework offered a conceptual basis for prioritization that includes inter-requirement dependency as one of its key components, this aspect has not yet been empirically validated. The proposed methodology introduces an ontology-enhanced AI framework specifically designed to predict such dependencies directly from customer feedback. It leverages an LLM-driven active learning loop to iteratively train and refine a BERT classifier, ensuring adaptive and accurate detection of relational links between features or requirements. As part of this extension, the framework integrates contextual understanding with structural reasoning to derive meaningful relationships from unstructured user-generated content [3], reflecting a refined methodological direction tailored to the goals of this investigation. The robust architecture of the framework allows continuous improvement and adaptation, making it highly suitable for dynamic environments where customer needs and product features evolve rapidly. Its methodological backbone relies on several interconnected stages to achieve accurate dependency prediction and effective visualization.
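To make the idea of uncertainty-focused selection in the active learning loop concrete, the following minimal sketch ranks requirement pairs by how close the classifier's predicted dependency probability is to 0.5 and returns the most ambiguous ones for relabeling. This is a common margin-style heuristic shown purely for illustration; the function name `select_uncertain`, the pair identifiers, and the probability values are assumptions and are not taken from the framework itself, whose uncertainty estimation is LLM-driven.

```python
# Illustrative sketch only: margin-based uncertainty sampling for a binary
# dependency classifier. Names and values here are hypothetical.

def select_uncertain(pair_probs: dict[tuple[str, str], float], k: int) -> list[tuple[str, str]]:
    """Return the k requirement pairs whose predicted dependency
    probability is closest to 0.5 (i.e., the most ambiguous pairs)."""
    ranked = sorted(pair_probs, key=lambda pair: abs(pair_probs[pair] - 0.5))
    return ranked[:k]


# Hypothetical classifier outputs: P(dependent) for each requirement pair.
example_probs = {
    ("r1", "r2"): 0.95,
    ("r1", "r3"): 0.52,
    ("r2", "r3"): 0.40,
    ("r3", "r4"): 0.05,
}
to_relabel = select_uncertain(example_probs, k=2)
```

In such a loop, the selected pairs would be sent for annotation and then added to the training set before the classifier is retrained.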

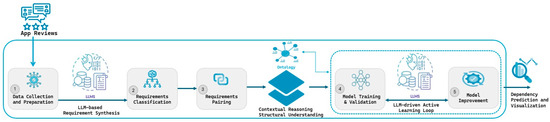

As illustrated in Figure 1, the research workflow is composed of stages that systematically process raw customer feedback and convert it into structured insights. These insights support the identification and classification of requirements and enable the prediction of complex interdependencies among them. The following subsections elaborate on each stage, offering a detailed account of the data processing pipeline and the advanced techniques integrated into the framework.

Figure 1. The framework workflow for automated requirement dependency prediction from app reviews.

3.1. Data Collection and Preparation

This foundational step is critical for ensuring the quality and relevance of the input data, which directly affects the accuracy and efficacy of subsequent analytical stages, particularly in a deep learning context where model performance is sensitive to input data characteristics. A key part of this step is gathering large quantities of user-generated content from diverse sources, accounting for volume, velocity, and variety, which supports data quality and coverage and helps reduce biases in user feedback. This study selected the mobile banking domain for several reasons. First, the financial technology (FinTech) sector in Saudi Arabia has witnessed rapid growth and widespread digital adoption in recent years, leading to a surge in mobile banking usage. Consequently, user reviews in this domain are both voluminous and rich in requirement-related feedback. Second, banking applications often serve a broad spectrum of user needs, ranging from transactional services to security and user experience, which results in highly diverse and detailed feedback. This diversity enables the extraction of both functional and non-functional requirements, making the dataset particularly suitable for semantic and structural analysis of dependencies. Third, as banking applications are mission-critical systems, users tend to express more precise and task-oriented feedback, often reporting concrete issues, feature gaps, or usability concerns. As a result, user reviews in this context tend to be more issue-focused, feature-specific, and valuable for guiding requirement engineering decisions. By focusing on this context, our dataset offers high ecological validity and ensures the practical relevance of the proposed framework to real-world and user-centered applications.
The dataset employed in this study comprises 8816 user reviews collected over a seven-year period (2018–2025), providing a temporally diverse snapshot of user feedback that reflects evolving expectations and system maturity. The reviews were drawn from 12 widely used mobile banking applications in Saudi Arabia, with data sourced from both the Apple App Store and the Google Play Store. In the initial phase, the AppBot platform was utilized to extract historical reviews, primarily from the Apple App Store; however, to improve recency and ensure coverage across platforms, this was complemented by manual retrieval of more recent reviews from both marketplaces. All reviews were authored in English, consistent with the bilingual (Arabic–English) interfaces commonly offered by Saudi banking applications and the frequent tendency of users to provide feedback in English. On average, the reviews contained 10.25 words, with the longest extending to 158 words and the shortest comprising a single word. In terms of lexical characteristics, the average word length was 4.37 characters, reflecting the generally concise and direct style of user feedback. The primary objective of this study was to maximize the extraction of user requirements irrespective of the application source. Accordingly, the dataset preserves its natural distribution, which mirrors real-world market dynamics, whereby leading applications, such as those of Al Rajhi Bank and Saudi National Bank, generate the largest share of reviews. Retaining this distribution ensured that the dataset captured the full breadth of user needs, with review frequency itself serving as an indicator of both application popularity and user engagement. Consequently, balancing across applications was not enforced, as the study’s focus was on requirement diversity rather than cross-application comparability.

The collected user reviews underwent a comprehensive preprocessing pipeline to ensure compatibility with downstream language understanding models. This pipeline was implemented in Python (3.12.11) using NumPy and Pandas for data handling, scikit-learn for preprocessing utilities, and the HuggingFace BERT tokenizer for subword-level tokenization. As part of the normalization process, all text was converted into lowercase, punctuation and special characters were removed, and sentence segmentation was applied. Filtering steps excluded duplicate reviews, non-informative entries (e.g., trivial comments such as “very good”, “Worst bank ever!!”, or “Too nice”), and reviews shorter than three words. These procedures reduced the dataset from 8816 to 6487 reviews, thereby enhancing its quality and representativeness. After preprocessing, the refined dataset was passed to a strict LLM-based prompt filtering phase, in which large language models are guided to validate and retain only content that is semantically relevant to requirements. The detailed methodology of requirement extraction is described in the subsequent section.
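The normalization and filtering steps described above can be sketched in a few lines of standard-library Python. This is a simplified illustration under stated assumptions, not the pipeline used in the study: the helper names `normalize` and `filter_reviews` are hypothetical, sentence segmentation and BERT tokenization are omitted, and the detection of non-informative entries is approximated only by the minimum-length rule (so a three-word comment such as “Worst bank ever!!” would need an additional rule that is not shown here).

```python
import re

# Illustrative sketch only; helper names and regex rules are assumptions.

def normalize(text: str) -> str:
    """Lowercase the text, replace punctuation and special characters
    with spaces, and collapse repeated whitespace."""
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def filter_reviews(reviews: list[str], min_words: int = 3) -> list[str]:
    """Drop reviews shorter than min_words and exact duplicates
    (compared after normalization), preserving the original order."""
    seen: set[str] = set()
    kept: list[str] = []
    for raw in reviews:
        clean = normalize(raw)
        if len(clean.split()) < min_words:
            continue  # too short to carry a requirement
        if clean in seen:
            continue  # duplicate of an earlier review
        seen.add(clean)
        kept.append(clean)
    return kept


sample = [
    "Very good",
    "The app crashes when I open transfers!",
    "the app crashes when I open transfers",
    "Too nice",
]
cleaned = filter_reviews(sample)
```

Comparing duplicates after normalization, rather than on the raw strings, means that reviews differing only in case or punctuation are treated as the same entry.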

3.2. Requirement Extraction and Classification

The extraction of requirements from user-generated content has become a complex task in requirements engineering, particularly in the context of mobile app store reviews. Traditional manual elicitation techniques are often inadequate for processing such unstructured and large-scale feedback. Recent advances in natural language processing and deep learning have enabled the automation of this task through the use of LLMs, which are capable of capturing complex semantics and generating coherent textual outputs. The adoption of LLMs in requirement extraction marks a shift from simple keyword-based or classification techniques to models capable of understanding context and generating requirements in a format consistent with engineering standards.

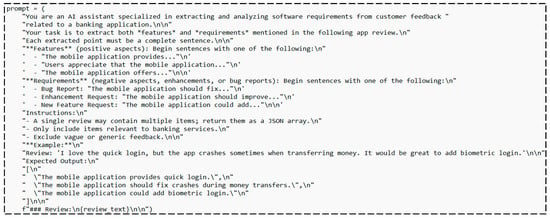

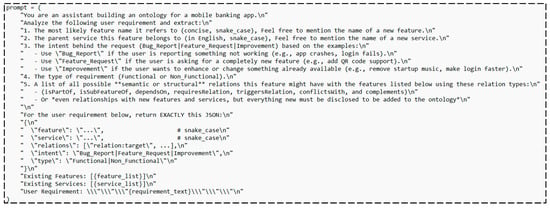

This study adopts a generative modeling paradigm for requirement extraction, using LLMs to synthesize requirement statements from raw user reviews. Recent empirical evidence informs this direction. For instance, the authors of [3] demonstrated the effectiveness of a generative model (GEMMA-7B) in producing requirements from app store feedback with high performance (Accuracy = 92.00%, F1 = 91.39). Similarly, recent studies have demonstrated that users often perceive requirements generated by ChatGPT (versions 3.5 or 4) as transparent, self-contained, and consistent. In particular, the authors of [40] evaluated ChatGPT’s ability to elicit software requirements and found that its outputs were frequently rated by experts as understandable and logically structured, albeit with occasional gaps in traceability and completeness. Moreover, Hymel and Johnson [20] conducted a comparative study between GPT-4 and human analysts, reporting that LLM-generated requirements achieved higher alignment scores and comparable completeness while being generated at a fraction of the time and cost. Guided by these empirical insights, our framework integrates a generative LLM into the requirement extraction pipeline to automatically generate both functional and non-functional requirements in a clear and meaningful way. To ensure consistent model behavior, a tailored prompt was designed to define the extraction targets for each user review clearly. To reach the final prompt design, we conducted a series of iterative refinements on a representative subset of the dataset, testing alternative formulations to balance coverage and precision. The final version was selected because it consistently yielded high-quality extractions aligned with diverse requirement types. It includes sentence patterns for positive features and different types of requirements, such as bugs, improvements, and new feature requests, and gives an example to show the expected output.
The prompt ensures that the responses are structured as a JSON array and filters out unrelated or unclear feedback. Figure 2 presents the complete prompt.

Figure 2. Prompt for LLM-based requirement extraction: (*) emphasis; (**) key terms.
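Because the prompt asks the model to respond with a JSON array, the raw LLM output has to be validated before it enters the rest of the pipeline. The sketch below shows one defensive way to do this. The function name `parse_extraction` and the field names `category` and `requirement` are hypothetical placeholders, since the exact output schema is defined by the prompt in Figure 2 and is not reproduced here.

```python
import json

# Illustrative sketch only; the field names are assumed, not the paper's schema.

def parse_extraction(response_text: str) -> list[dict]:
    """Parse an LLM response that is expected to be a JSON array of
    requirement objects. Malformed responses yield an empty list, and
    items without a non-empty 'requirement' string are discarded."""
    try:
        data = json.loads(response_text)
    except json.JSONDecodeError:
        return []
    if not isinstance(data, list):
        return []
    items = []
    for item in data:
        if not isinstance(item, dict):
            continue
        value = item.get("requirement")
        if isinstance(value, str) and value.strip():
            items.append(item)
    return items


example_response = (
    '[{"category": "bug", "requirement": "Fix the crash on login"},'
    ' {"category": "bug", "requirement": "  "}]'
)
parsed = parse_extraction(example_response)
```

Returning an empty list on malformed output keeps a single bad response from interrupting batch processing; a production pipeline might instead log the failure or retry the request.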

Upon successful extraction, the identified user-stated requirements undergo a multi-label classification process that categorizes them according to predefined typologies. User feedback often embeds a mix of functional requirements, non-functional expectations, defect reports, and feature enhancement suggestions, making manual categorization labor-intensive and error-prone. To address this challenge, our study adopts a BERT-based deep learning approach to automatically classify requirements along three key dimensions: requirement type (functional vs. non-functional), requirement intent (defect fix, service request, feature improvement), and requirement domain (e.g., performance, security, availability). This approach is grounded in recent empirical studies that confirm BERT’s ability to capture the contextual and semantic nuances required for fine-grained requirement classification. BERT-based models have demonstrated superior performance in categorizing both functional and non-functional requirements, often outperforming traditional methods through fine-tuning and transfer learning [1,36]. Another study [3] showed that BERT significantly outperforms traditional classifiers in identifying requirement intent, such as bug reports and feature requests, from app reviews, achieving over 92% accuracy. These findings confirm BERT’s effectiveness in capturing the implicit purpose behind user feedback, making it well suited to intent-based requirement classification. In addition, the authors of [41] demonstrated that transformer-based models significantly outperform traditional NLP methods in classifying software requirements, particularly in accurately distinguishing non-functional domains such as performance, security, and usability, making them well suited for fine-grained domain categorization in requirements engineering.
This classification leverages the contextual embeddings generated by the BERT model, enabling a robust understanding of the nature and scope of each requirement within the broader product ecosystem. This systematic categorization is vital for organizing the vast array of extracted requirements into manageable and analytically useful groups, facilitating a more granular analysis of their interrelationships and implications for product development. Then, the classification informs the subsequent stages of dependency prediction.

For each user review u in the review corpus, a set of candidate requirements 𝓡u is extracted using a generative model. Each extracted requirement ri is then enriched through three independent BERT-based classifiers, each fine-tuned to predict one of the following attributes: the intent (e.g., feature request or bug report), the requirement type (functional or non-functional), and the domain (e.g., security, performance). Each classifier employs a dense output layer with softmax activation to produce a categorical probability distribution, from which the most likely label is selected. In Equation (1), Extract(u) denotes the extraction function that identifies candidate requirements ri from the review, Intent(ri) represents the purpose of a requirement, Type(ri) classifies it as functional or non-functional, and Domain(ri) assigns it to a relevant domain. The set 𝓡u thus captures all enriched requirements associated with review u, each annotated with its semantic and structural properties.

$$\mathcal{R}_u = \{(r_i, \mathrm{Intent}(r_i), \mathrm{Type}(r_i), \mathrm{Domain}(r_i)) \mid r_i \in \mathrm{Extract}(u)\} \tag{1}$$
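The enrichment step can be illustrated with a minimal sketch. It assumes that each of the three classifiers has already produced raw logits for a requirement; the label inventories, the `EnrichedRequirement` type, and the example logits are hypothetical stand-ins, not the trained BERT heads themselves.

```python
import math
from dataclasses import dataclass

# Label sets loosely based on the text; the exact inventories are assumptions.
INTENT_LABELS = ["defect fix", "service request", "feature improvement"]
TYPE_LABELS = ["functional", "non-functional"]
DOMAIN_LABELS = ["performance", "security", "availability"]


@dataclass(frozen=True)
class EnrichedRequirement:
    """One element of the enriched set: a requirement plus its three labels."""
    text: str
    intent: str
    req_type: str
    domain: str


def softmax(logits):
    """Numerically stable softmax over a list of raw scores."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pick_label(logits, labels):
    """Return the label with the highest softmax probability."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda k: probs[k])
    return labels[best]


def enrich(text, intent_logits, type_logits, domain_logits):
    """Attach the most likely intent, type, and domain to a requirement."""
    return EnrichedRequirement(
        text=text,
        intent=pick_label(intent_logits, INTENT_LABELS),
        req_type=pick_label(type_logits, TYPE_LABELS),
        domain=pick_label(domain_logits, DOMAIN_LABELS),
    )


# Hypothetical logits standing in for the outputs of the three classifiers.
example = enrich(
    "App crashes when logging in with fingerprint",
    intent_logits=[2.1, 0.3, -0.5],
    type_logits=[1.7, -0.2],
    domain_logits=[0.1, 1.9, 0.4],
)
```

Keeping the three heads independent, as described above, means that each attribute can be retrained or relabeled without touching the other two.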

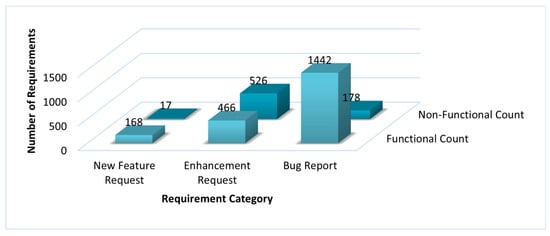

The dataset comprises 2797 extracted requirements, distributed across three major categories: Bug Reports (1620), Enhancement Requests (992), and New Feature Requests (185). As shown in Figure 3, these requirements are further classified into functional and non-functional dimensions, with noticeable variation across categories (e.g., 89% of bug reports are functional, while 53% of enhancement requests are non-functional). This natural imbalance reflects real-world user feedback patterns, where error reporting and incremental improvements dominate over novel feature requests. Importantly, this skew does not bias the dependency detection task, since the classification is ultimately binary (dependency vs. no dependency), and the integration of contextual features with ontology-based structural reasoning ensures robustness against uneven category sizes.

Figure 3. Distribution of requirement types.

3.3. Requirement Pairing

Following the classification, requirements are systematically paired to identify potential interdependencies, leveraging sophisticated algorithms that consider both their semantic content and classified attributes to infer logical connections. This pairing process is critical for mapping out the intricate web of relationships between diverse requirements, laying the groundwork for a comprehensive understanding of how changes or implementations in one area might impact others within the product ecosystem. To exhaustively model potential dependencies among requirements, our study adopts a pairwise comparison strategy, where each requirement is evaluated in relation to all others. This method aligns with contemporary practices in requirement dependency extraction, where ML models assess relational features between every possible pair. For instance, the authors of [15] implemented a stacked ensemble (P-Stacking) that processes POS, TF-IDF, and Word2Vec features from requirement pairs, demonstrating significant improvements in F1-scores for dependency detection and validating the effectiveness of comprehensive pairwise feature modeling.

To explore dependencies between user-stated requirements, all pairwise combinations of requirements extracted from each user review are constructed. Let 𝓡u be the enriched set of requirements from review u; the pairwise set is defined as follows:

$$\mathcal{P}_u = \{(r_i, r_j) \mid r_i, r_j \in \mathcal{R}_u,\ i \neq j\} \tag{2}$$

Formulation (2) enables the systematic evaluation of directional dependencies among co-occurring requirements within the same contextual source, forming the basis for subsequent dependency prediction. If the number of extracted requirements in a review u is nu = |𝓡u|, then the number of generated pairs is

$$|\mathcal{P}_u| = n_u \times (n_u - 1)$$
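The ordered pairing and its count can be checked with a short sketch. It assumes that requirements are paired by position, so that (ri, rj) and (rj, ri) are distinct candidates; the helper name `ordered_pairs` and the identifiers R1 to R4 are illustrative.

```python
from itertools import permutations


def ordered_pairs(requirements):
    """Return all ordered pairs (r_i, r_j) with i != j, pairing by position."""
    return list(permutations(requirements, 2))


reqs = ["R1", "R2", "R3", "R4"]
pairs = ordered_pairs(reqs)

# Four requirements give 4 * 3 = 12 directional candidates.
pair_count = len(pairs)
```

Because both directions are kept, a later classifier can assign different dependency scores to (R1, R2) and (R2, R1).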

This systematic pairing forms the basis for constructing a dependency matrix, thereby enhancing the overall robustness of the system architecture. The accuracy of this process directly influences the reliability of subsequent dependency prediction and visualization, enabling a proactive approach to managing complex interdependencies in product development. Aggregating across the dataset yields millions of requirement pairs, which is logically consistent with the objective of modeling directional dependencies, as each requirement can potentially influence or be influenced by others. Although the raw pair count is large, active learning and ontology-guided filtering are employed to narrow the focus to the most meaningful candidate pairs, thereby ensuring both computational feasibility and semantic relevance.

3.4. Model Training and Validation

The initial phase of training began with a small, manually labeled subset representing 10% of the user feedback data. Annotation was initially carried out using Amazon Mechanical Turk (MTurk), where multiple annotators provided independent labels for requirement pairs based on logical and functional dependencies. To ensure consistency and reliability, disagreements were first resolved through majority voting, while any remaining conflicts were adjudicated by two domain experts, who are also the authors of this study. With complementary expertise in software requirements engineering, natural language processing, and mobile banking systems, the authors ensured that the resulting consensus yielded a high-confidence reference set. This reference set was subsequently used to fine-tune a pre-trained BERT model and establish the baseline performance for the framework.

The training process is grounded in a hybrid multi-input architecture that combines language understanding with structural and contextual reasoning to enhance the prediction of inter-requirement dependencies. The model ingests three types of input features. First, contextualized textual embeddings are extracted from BERT by concatenating each requirement pair (ri, rj) into a single input sequence. These embeddings capture the semantic relationships, linguistic nuances, and discourse-level dependencies between the textual descriptions of the requirements. Second, a set of structured features is introduced to incorporate domain-informed signals derived from the underlying ontology and graph-based reasoning. Third, contextual features are used to capture semantic intent and to quantify the functional alignment and stylistic similarity between requirement pairs. This triadic feature design enables the model to reason jointly over linguistic content, structural proximity, and semantic consistency. The structural and contextual reasoning components are detailed below.
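The fusion of the three feature groups can be illustrated with a minimal sketch. It assumes simple concatenation followed by a single logistic output unit; the function name `fuse_and_score`, the toy three-dimensional embedding (BERT-base produces 768-dimensional vectors), and all weights are hypothetical and only stand in for a learned joint classification layer.

```python
import math


def fuse_and_score(text_embedding, structural, contextual, weights, bias):
    """Concatenate the three feature groups and apply one logistic unit."""
    features = list(text_embedding) + list(structural) + list(contextual)
    if len(features) != len(weights):
        raise ValueError("weights must match the fused feature length")
    logit = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-logit))


# Toy inputs: a 3-d "embedding", two structural scores, three contextual flags.
embedding = [0.2, -0.1, 0.4]
structural = [1.0 / 3.0, 0.2]
contextual = [1.0, 0.0, 1.0]
weights = [0.5, -0.3, 0.8, 1.2, 0.9, 0.7, 0.1, 0.6]  # hypothetical values

dependency_probability = fuse_and_score(
    embedding, structural, contextual, weights, bias=-1.0
)
```

The output is a probability in (0, 1) that can be compared against a decision threshold.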

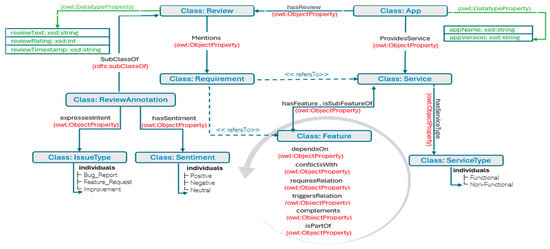

Structural Reasoning: To enable structured reasoning about mobile application requirements, a domain-specific ontology was developed to formalize the hierarchical, semantic, and relational structure of the services and features.

Figure 4 presents the proposed ontology architecture, which was initially manually designed to establish a semantic foundation linking user reviews to software services, features, and their interrelationships. The ontology introduces a review class that stores the feedback content, rating, and timestamp, and it connects each review to one or more requirements. To enrich these requirements with contextual meaning, the model uses a review annotation class that captures the user’s intent and sentiment. Each requirement explicitly refers to a service offered by an app, which includes metadata such as its name and version. The ontology categorizes services into functional or non-functional types and associates each service with relevant features. The model arranges features hierarchically through structural relations such as hasFeature, isSubFeatureOf, and isPartOf, and it defines semantic links between them—such as dependsOn, requiresRelation, conflictsWith, complements, and triggersRelation—to capture dynamic and logical dependencies. This manual design enables the ontology to capture compositional relationships between features, allowing for a structured and interpretable representation of requirement interactions that support reasoning, prediction, and visualization tasks.

Figure 4. The ontology architecture.

Structural reasoning in the proposed framework is operationalized by combining two ontology-based similarity metrics that reflect both hierarchical positioning and topological proximity between requirements. These metrics—depth-based similarity and shortest-path similarity—jointly model the structural characteristics embedded in a graph-encoded domain ontology. To estimate whether a source requirement ri depends on a target rj, the model first locates the shortest semantic path between their corresponding nodes in the ontology. If a valid path exists, the model calculates the dependency strength using the following normalized score:

This formulation aligns with established approaches in network analysis that utilize the inverse shortest-path length to infer the relational strength between nodes. Recent studies have demonstrated the effectiveness of such metrics in diverse applications, including similarity-based link prediction and structural proximity estimation in complex networks [42,43].
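A minimal sketch of an inverse shortest-path score follows. It assumes the form 1 / (1 + d), where d is the hop count between the two ontology nodes, and returns 0 when no path exists; the exact normalization used in the framework may differ. The toy adjacency map reuses concept names from the ontology example in this paper, but its edges are hypothetical.

```python
from collections import deque


def shortest_path_length(graph, source, target):
    """Breadth-first search; returns the hop count or None if unreachable."""
    if source == target:
        return 0
    seen = {source}
    queue = deque([(source, 0)])
    while queue:
        node, dist = queue.popleft()
        for neighbour in graph.get(node, ()):
            if neighbour == target:
                return dist + 1
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, dist + 1))
    return None


def path_similarity(graph, source, target):
    """Assumed score 1 / (1 + d); 0.0 when no path connects the nodes."""
    d = shortest_path_length(graph, source, target)
    return 0.0 if d is None else 1.0 / (1.0 + d)


# Hypothetical undirected fragment of the ontology (adjacency lists).
toy_ontology = {
    "transfer_points": ["loyalty_points_services", "manage_beneficiaries"],
    "loyalty_points_services": ["transfer_points", "points_redemption"],
    "points_redemption": ["loyalty_points_services"],
    "manage_beneficiaries": ["transfer_points"],
    "sms_email_alerts": [],
}

two_hop_score = path_similarity(
    toy_ontology, "transfer_points", "points_redemption"
)
```

Under this assumed form, nodes two hops apart score 1/3, and the score decays as the path grows longer.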

As part of the structural reasoning component, an ontology-based method is employed to estimate the hierarchical relatedness between pairs of requirements. Each requirement is semantically aligned to a corresponding concept within a domain ontology that encodes structural relationships such as ‘isPartOf’ and ‘isSubFeatureOf’. To quantify structural proximity, the depth of each requirement’s ancestors is computed using a traversal function, which iteratively explores parent nodes and assigns a depth score based on their distance from the original requirement node. Given two requirements ri and rj, their respective ancestor sets Anc(ri) and Anc(rj) are extracted. The degree of structural similarity is then determined by identifying shared ancestors A = Anc(ri) ∩ Anc(rj) and computing their Lowest Common Ancestor (LCA). The depth-based similarity score follows the formulation

This formulation favors requirements that share close common ancestors, capturing latent structural dependencies within the ontology; such a depth-based metric supports finer-grained inference beyond surface-level textual similarity. The approach aligns with recent ontology-driven methods in requirements engineering, where structured reasoning has proven effective in enhancing traceability and dependency modeling [44].
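The ancestor traversal and Lowest Common Ancestor (LCA) lookup can be sketched as follows. The scoring form 1 / (1 + di + dj), where di and dj are the distances from each requirement to the LCA, is an assumption chosen so that closer common ancestors score higher; it is not taken from the framework. The `parents` map and its concept names are hypothetical.

```python
def ancestors_with_distance(parents, node):
    """Map each ancestor of `node` to its minimum hop distance from `node`."""
    distances = {}
    frontier = [(node, 0)]
    while frontier:
        current, dist = frontier.pop()
        for parent in parents.get(current, ()):
            # Keep only the shortest distance found for each ancestor.
            if parent not in distances or dist + 1 < distances[parent]:
                distances[parent] = dist + 1
                frontier.append((parent, dist + 1))
    return distances


def depth_similarity(parents, r_i, r_j):
    """Assumed score 1 / (1 + d_i + d_j) measured at the closest shared ancestor."""
    anc_i = ancestors_with_distance(parents, r_i)
    anc_j = ancestors_with_distance(parents, r_j)
    shared = set(anc_i) & set(anc_j)
    if not shared:
        return 0.0
    lca = min(shared, key=lambda a: (anc_i[a] + anc_j[a], a))
    return 1.0 / (1.0 + anc_i[lca] + anc_j[lca])


# Hypothetical child -> parents map (isPartOf / isSubFeatureOf edges).
parents = {
    "transfer_points": ["loyalty_points_services"],
    "points_redemption": ["loyalty_points_services"],
    "loyalty_points_services": ["banking_app"],
    "manage_beneficiaries": ["transfers"],
    "transfers": ["banking_app"],
}

sibling_score = depth_similarity(parents, "transfer_points", "points_redemption")
cousin_score = depth_similarity(parents, "transfer_points", "manage_beneficiaries")
```

In this toy hierarchy, the two loyalty features share a direct parent and therefore score higher than features that meet only at the root.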

Contextual Reasoning: Traditional methods for identifying requirement dependencies often rely on shallow lexical or syntactic similarity, which limits their ability to capture deeper semantic relationships or user-driven intent. To overcome this limitation, the proposed approach adopts a structured reasoning process that considers whether a source requirement ri depends on a target rj based on their contextual alignment. This alignment reflects not only their functional classification and domain-specific category (e.g., performance, usability) but also the nature of their annotated intent, such as issue reporting, enhancement, or feature addition. Rather than treating each requirement in isolation, the approach emphasizes how distinct yet causally related intents—particularly when originating from the same domain—may indicate a stronger likelihood of interdependence. For instance, a complaint about a system limitation followed by a request for enhancement may represent a natural progression of refinement within the same functional area. Empirical studies have shown that such intent signals embedded in user feedback often reflect underlying causal and hierarchical relationships that traditional similarity-based models fail to capture [3]. Moreover, prior work on causal requirement reasoning has shown that nearly one-third of requirement artifacts contain explicit or implicit causal markers, which, when systematically modeled, can enhance traceability and prioritization [45]. By incorporating these patterns into a principled evaluation process, the model enables a more nuanced and context-aware estimation of whether ri depends on rj. This is particularly valuable in large-scale user feedback scenarios, where subtle intent cues are essential for distinguishing between co-supportive, conflicting, or independent features. As such, this methodology offers a scalable and cognitively informed foundation for automated requirement analysis and release planning.
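One way to turn this contextual alignment into numeric features is sketched below. The three features (same domain, same type, and an intent-progression flag) and the set `PROGRESSION_PAIRS` are hypothetical illustrations of the idea, not the feature set used in the framework.

```python
# Hypothetical (source intent, target intent) pairs treated as a causal
# progression, e.g. an enhancement request that builds on a reported problem.
PROGRESSION_PAIRS = {
    ("enhancement request", "bug report"),
    ("new feature request", "enhancement request"),
}


def contextual_features(req_i, req_j):
    """Return [same_domain, same_type, progression] for the ordered pair."""
    same_domain = float(req_i["domain"] == req_j["domain"])
    same_type = float(req_i["type"] == req_j["type"])
    # The progression flag fires only for related intents in the same domain.
    progression = float(
        same_domain == 1.0
        and (req_i["intent"], req_j["intent"]) in PROGRESSION_PAIRS
    )
    return [same_domain, same_type, progression]


complaint = {
    "intent": "bug report",
    "type": "functional",
    "domain": "performance",
}
request = {
    "intent": "enhancement request",
    "type": "non-functional",
    "domain": "performance",
}

forward = contextual_features(request, complaint)
backward = contextual_features(complaint, request)
```

The features are direction-sensitive: the enhancement request is flagged as building on the complaint, but not the reverse.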

During training, the dataset was initially split into 80% training and 20% validation, with the validation set kept fixed across all active learning iterations to ensure comparability. We utilized the pre-trained BERT-base (uncased) model together with its default WordPiece tokenizer, as implemented in Hugging Face. The model was fine-tuned directly on the constructed dataset of requirement pairs, without additional domain adaptation, as prior studies have shown that fine-tuning BERT-base on task-specific data is sufficient to capture the contextual and semantic nuances of user feedback. The contextual embeddings generated by BERT were fused with the transformed numerical features and passed to a joint classification layer. Model optimization was performed using the AdamW optimizer (learning rate = 2 × 10⁻⁵, weight decay = 0.03), with gradient clipping and warm-up scheduling to ensure stable convergence. To mitigate class imbalance, we applied a hybrid strategy: at the data level, majority-class downsampling (Label = 0) was performed when composing augmented training batches, and at the loss level, either Binary Cross-Entropy (BCE) with class weights or Focal Loss was employed, depending on the experiment. Validation was conducted at each epoch, with multiple threshold values being evaluated and the one yielding the highest F1-score being selected.
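The threshold selection step can be illustrated with a small sketch. It assumes a fixed grid of candidate thresholds and picks the one with the highest validation F1-score; the function names, the grid, and the toy probabilities are hypothetical.

```python
def f1_at_threshold(probs, labels, threshold):
    """F1-score of the positive class when predicting 1 for p >= threshold."""
    preds = [1 if p >= threshold else 0 for p in probs]
    tp = sum(1 for y, yhat in zip(labels, preds) if y == 1 and yhat == 1)
    fp = sum(1 for y, yhat in zip(labels, preds) if y == 0 and yhat == 1)
    fn = sum(1 for y, yhat in zip(labels, preds) if y == 1 and yhat == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def best_threshold(probs, labels, candidates):
    """Return the candidate threshold with the highest F1 (first one on ties)."""
    return max(candidates, key=lambda t: f1_at_threshold(probs, labels, t))


# Toy validation predictions and gold labels (hypothetical values).
val_probs = [0.9, 0.8, 0.55, 0.45, 0.3, 0.1]
val_labels = [1, 1, 1, 0, 0, 0]
grid = [0.3, 0.4, 0.5, 0.6, 0.7]

chosen = best_threshold(val_probs, val_labels, grid)
```

Because the validation set is held fixed across iterations, thresholds chosen this way remain comparable from one loop to the next.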

All experiments were executed in a GPU-enabled environment (NVIDIA Tesla T4, 16 GB VRAM, and 25 GB RAM). On average, fine-tuning a single training loop (3–5 epochs, batch size: 16–32) required approximately 20–30 min, while completing a full active learning cycle with GPT-4-assisted annotation required an additional 1–2 h depending on the batch size. The framework is modular and scalable; ontology-based structural reasoning is computationally lightweight, BERT fine-tuning benefits from GPU acceleration, and LLM annotation was managed in controlled batches to ensure feasibility. This integrated architecture, which combines structural and contextual signals, enables the model to generalize effectively across varied requirement scenarios and supports more accurate and interpretable dependency prediction.

3.5. Model Improvement

To enhance the predictive performance of the proposed framework, an LLM-driven active learning loop was implemented to iteratively refine the fine-tuned BERT classifier while minimizing manual annotation effort. Rather than depending exclusively on human labels, the framework leveraged a large language model, GPT-4, to assist in classifying instances that the model was most uncertain about. After each iteration, the classifier produced probability scores for unlabeled data, and uncertainty was quantified using binary entropy, defined as follows:

$$\mathrm{Entropy}(p) = -p \log p - (1 - p) \log(1 - p)$$

where p denotes the predicted probability of the positive class, and Entropy reaches its maximum when p = 0.5, indicating that the model is least certain and is effectively guessing. Accordingly, unlabeled instances with probabilities in the range [0.40–0.60]—representing the uncertainty zone—were considered the most ambiguous and were forwarded to GPT-4 for automated labeling. The annotation batch size was adjusted adaptively across cycles; larger batches (≈400 pairs) were used in the initial iterations to accelerate learning, while smaller batches (≈200 pairs) were employed in later cycles to improve efficiency and focus on the most uncertain samples. To ensure model robustness and avoid introducing class imbalance during refinement, a class-balancing technique was applied to the newly labeled samples prior to their integration into the training data. These balanced annotations were then merged with the existing labeled set, and the model was retrained, completing a full refinement cycle. This iterative process allowed the framework to progressively improve classification performance by focusing annotation efforts only on the most informative samples, enabling efficient learning while maintaining minimal human supervision.
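The uncertainty-based selection can be sketched as follows. The sketch assumes base-2 logarithms (so that the entropy peaks at 1 for p = 0.5) and ranks the instances inside the [0.40, 0.60] zone by entropy before truncating to the batch size; the ranking step, the function names, and the toy probabilities are assumptions.

```python
import math


def binary_entropy(p):
    """Binary entropy in bits; defined as 0 at the endpoints p = 0 and p = 1."""
    if p <= 0.0 or p >= 1.0:
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))


def select_uncertain(probs, low=0.40, high=0.60, batch_size=400):
    """Indices with probability in [low, high], most uncertain first."""
    in_zone = [i for i, p in enumerate(probs) if low <= p <= high]
    in_zone.sort(key=lambda i: binary_entropy(probs[i]), reverse=True)
    return in_zone[:batch_size]


# Toy positive-class probabilities for six unlabeled pairs.
unlabeled_probs = [0.05, 0.41, 0.50, 0.58, 0.93, 0.62]
to_annotate = select_uncertain(unlabeled_probs, batch_size=2)
```

Only the selected indices would be forwarded for LLM labeling; confident predictions outside the zone are left untouched.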

In parallel, the domain ontology—which serves as the foundation for structural reasoning—was incrementally updated using LLM-generated prompts that were reviewed and validated by domain experts.

The ontology construction prompt (see Figure 5) was not arbitrarily designed but was the result of several iterative refinements conducted on a representative subset of the dataset to ensure both accuracy and coverage. Through these experiments, we arrived at a prompt structure capable of consistently extracting candidate concepts from user requirements, including features, services, and potential semantic or structural relations. While the prompt ensured consistency in the format of extracted suggestions, the outputs were not directly integrated into the ontology. Instead, they were treated as candidate updates and subjected to systematic expert validation to ensure semantic correctness and banking-domain relevance. To further illustrate this process, Figure 6 presents a concrete example of the human-in-the-loop validation step. For the requirement “Implement a feature to transfer points between family and friends”, the LLM generated multiple candidate relations (e.g., isPartOf: loyalty_points_services, complements: points_redemption, dependsOn: points_for_card_spending, requiresRelation: manage_beneficiaries, triggersRelation: sms_email_alerts). The reviewer partially approved these suggestions, retaining only those consistent with the ontology structure and discarding irrelevant ones. This interactive step highlights how automated discovery by LLMs is balanced with expert oversight to reduce potential noise or bias.

Figure 5. Prompt for LLM-based ontology construction update. (#) comments; (*) bullet points; (**) key terms for emphasis.

Figure 6. Human-in-the-loop ontology update.

The framework establishes a self-improving loop by integrating LLM-driven active learning, refined prompt design, and expert-guided ontology validation. This hybrid and incremental strategy ensures semantic coherence, accuracy, and domain relevance while continuously enriching the ontology. As a result, the framework significantly enhances its ability to capture and reason about complex interdependencies among user-review requirements.

To evaluate model refinement across active learning iterations, our study measured performance improvement using standard classification metrics, including the F1-score, accuracy, and recall. After each training loop, these metrics were recalculated on a fixed validation set to capture the incremental gains attributable to the newly added LLM-labeled instances and ontology updates. To assess the effectiveness of knowledge refinement over successive active learning loops, the ratio of performance improvement (ΔF1-score) to the number of LLM-based interventions and ontology updates was monitored. This ratio served as a practical indicator of knowledge-driven learning efficiency, capturing how much predictive gain was achieved per unit of refinement effort. While this metric is not formally established in prior work, its underlying principle aligns with the efficiency-oriented evaluations adopted in recent active learning studies, particularly in low-resource and annotation-constrained settings [12,21]. By tracking this ratio across loops, the analysis provided insights not only into the extent of model improvement but also into how efficiently that improvement was achieved, highlighting the role of intelligent data selection and dynamic ontology enrichment in minimizing annotation cost while maximizing model performance.
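The efficiency ratio can be made concrete with a short sketch. It assumes that refinement effort is the simple sum of LLM-labeled instances and ontology updates in a loop; that aggregation, the function name, and the example counts are hypothetical.

```python
def learning_efficiency(f1_before, f1_after, n_llm_labels, n_ontology_updates):
    """F1 gain per unit of refinement effort for one active learning loop."""
    effort = n_llm_labels + n_ontology_updates
    if effort <= 0:
        raise ValueError("refinement effort must be positive")
    return (f1_after - f1_before) / effort


# Hypothetical loop: F1 rises from 0.80 to 0.85 after 400 LLM labels
# and 100 ontology updates, i.e. 0.05 / 500 gain per intervention.
ratio = learning_efficiency(0.80, 0.85, n_llm_labels=400, n_ontology_updates=100)
```

Comparing this ratio across loops shows whether later, smaller annotation batches still buy a proportionate improvement.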

4. Results

This section presents the experimental results in alignment with the research questions, focusing first on the effectiveness of knowledge integration on model performance (RQ1), followed by an analysis of the learning efficiency during the iterative refinement process (RQ2). To support this evaluation, a series of comparative experiments were conducted across three progressively enhanced model configurations. The first serves as a baseline, employing a standard BERT-based classifier trained without any contextual or structural augmentation. The second configuration integrates domain-specific ontology and contextual signals but excludes the active learning component, representing a static knowledge-enhanced setup. The final configuration comprises the whole framework, incorporating an LLM-driven active learning loop that iteratively refines the classifier through uncertainty-guided feedback and dynamic knowledge enrichment. This comparative design enables a systematic evaluation of the individual and combined contributions of contextual reasoning, structural domain knowledge, and adaptive learning dynamics to both predictive performance and knowledge-driven efficiency.

4.1. Impact of Knowledge Integration on Dependency Prediction

The initial evaluation of the baseline model, a standard BERT-based binary classifier trained exclusively on raw textual pairs, reveals important limitations in its predictive behavior. Despite leveraging pre-trained contextual embeddings, the model achieved an F1-score of 0.766, with high recall (0.8738) but substantially lower precision (0.6818). This imbalance indicates that while the model is effective at capturing a large proportion of true dependencies, it also produces a high rate of false positives, reflecting an overgeneralized decision boundary. The elevated validation loss (0.8738) and the modest AUC (0.8738) further support this interpretation, suggesting that the model struggles to clearly distinguish between dependent and non-dependent requirement pairs in the absence of explicit structural or semantic signals.

To investigate the effect of incorporating domain knowledge, a knowledge-enriched configuration was implemented in the first loop, where context-awareness and structural-relation cues, derived from a domain-specific ontology and from the fine-tuned BERT model, were integrated into the training process. This modification led to a substantial improvement in predictive performance. The model’s F1-score increased to 0.8641, and more importantly, the precision and recall became fully balanced (both at 0.8641). This transition reflects a significant enhancement in the model’s ability to avoid both false positives and false negatives, resulting in more calibrated and reliable predictions. Furthermore, the validation loss dropped to 0.0298, indicating that the addition of structured knowledge not only improved output quality but also facilitated faster and more stable convergence during training. The AUC rose to 0.9141, confirming that the model had developed stronger discriminative capabilities across a range of decision thresholds.
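As a quick consistency check on the reported figures, the F1-score is the harmonic mean of precision and recall. The sketch below recomputes it for the baseline (precision 0.6818, recall 0.8738) and for the first loop (both 0.8641); the helper name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


baseline_f1 = f1_score(0.6818, 0.8738)
loop1_f1 = f1_score(0.8641, 0.8641)
```

The baseline value rounds to 0.766, and equal precision and recall give an F1-score equal to both, which matches the reported 0.8641.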

These findings highlight the value of incorporating contextual and ontological knowledge into the predictive process, even in the absence of iterative refinement. The model configuration in the first loop, enhanced with structural relations and semantic reasoning, exhibits a markedly improved ability to interpret nuanced relationships between requirement pairs. Compared with the baseline, it more effectively captures latent dependencies that would otherwise be overlooked or misclassified. This outcome affirms the central principle of the proposed framework: accurate and efficient dependency prediction from unstructured user reviews depends not only on linguistic patterns but also on the integration of functional relationships and intent-aware understanding.

4.2. Efficiency Gains Through Active Learning and Iteration

Beyond the initial accuracy improvements obtained through static knowledge integration, the proposed framework demonstrates substantial gains in learning efficiency through iterative refinement and active learning. As shown in Table 1, the transition from Loop#2 to Loop#4 reveals consistent improvements in both performance metrics and training dynamics. In Loop#2, the model achieved an F1-score of 0.9091. While these results marked a clear advancement over the static configuration, the efficiency of this gain—when evaluated in terms of the ratio of performance improvement (ΔF1-score) to the number of LLM-based interventions and ontology updates—remained moderate.

| Finding 1 Knowledge integration through contextual awareness and structural relations led to a clear improvement in dependency prediction performance, raising the F1-score from 0.766 to 0.8641 and achieving balanced precision and recall, even without iterative refinement. |

Table 1. Performance of the proposed models compared with the baseline across different loops.

Importantly, Loop#4 demonstrated not only the highest F1-score (0.9565) and validation loss reduction (0.0128) but also the most efficient use of refinement effort, with a significantly smaller number of interventions. This loop yielded the largest single-step performance gain (ΔF1 = 0.0327) relative to the amount of refinement effort invested—indicating an improvement in knowledge-driven learning efficiency of over eightfold compared with Loop#3. These findings suggest that, as the model matures, it becomes increasingly effective at selecting the most informative instances for annotation and knowledge injection, thereby maximizing learning gains while minimizing intervention cost.

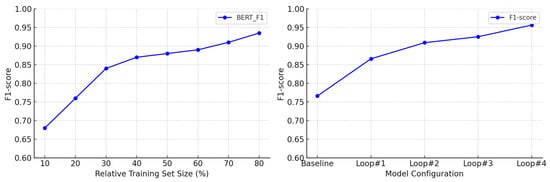

Figure 7 collectively illustrates the complementary roles of the training volume and knowledge-guided refinement in enhancing model performance. In the left plot, the learning curve depicts the impact of increasing training data volume on model performance. As the relative training set size increases from 10% to 80%, the F1-score steadily improves. Notably, the model surpasses an F1-score of 0.91 at 70% data utilization and approaches 0.935 at 80%, reflecting strong learning capacity with moderate data volumes. The right plot shows the progression of performance across successive refinement loops within the proposed framework. Starting from a baseline model trained only on textual input, each loop incrementally incorporates contextual and structural knowledge, resulting in consistent performance gains. Notably, Loop#1 (static integration) achieves a significant jump over the baseline, while subsequent loops (Loop#2 to Loop#4) demonstrate further improvement through selective, knowledge-efficient updates. Together, these visualizations provide evidence for both the scalability and adaptability of the proposed framework in handling dependency prediction with minimal supervision.

Figure 7. Impact of data scaling and knowledge-guided refinement on model performance.

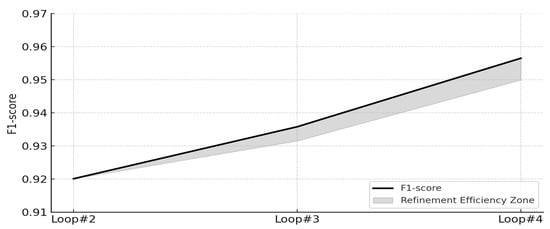

The results depicted in Figure 8 illustrate the progression of model performance and refinement efficiency across active learning loops. While the F1-score increases consistently from Loop#2 to Loop#4, the relative performance gain per unit of annotation and knowledge update effort becomes most pronounced in the final loop. At the same time, the learning curve indicates that performance improvements eventually plateau, reflecting stable generalization rather than overfitting. This trend confirms that the model not only becomes more accurate but also more selective in identifying the most informative instances for annotation and ontology enrichment. Overall, the analysis affirms the effectiveness of integrating domain knowledge and adaptive selection mechanisms in enhancing learning efficiency during iterative refinement.

Figure 8. F1-score progression and refinement efficiency across active learning loops.

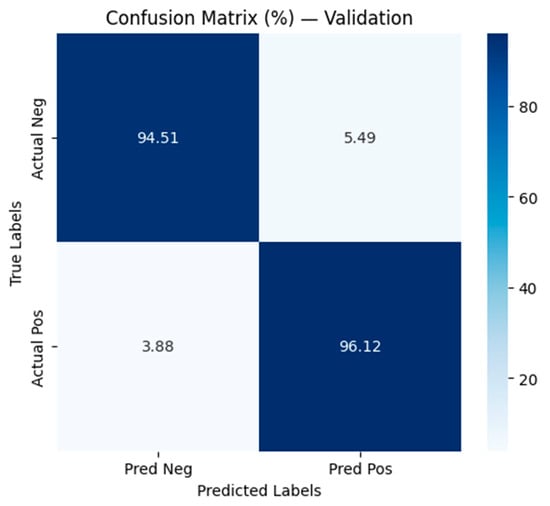

The final model’s classification performance demonstrates both precision and consistency, as evidenced by the normalized confusion matrix applied to the validation set (see Figure 9). The model correctly identified 96.12% of truly dependent requirement pairs (true positives) while misclassifying only 3.88% as non-dependent (false negatives). Similarly, 94.51% of actual non-dependent pairs were accurately predicted, with a 5.49% false positive rate. This distribution reflects a high degree of sensitivity and specificity, indicating that the model is capable of capturing subtle dependency patterns while avoiding over-generalization. Moreover, the relatively low error rates on both classes validate the model’s balanced decision boundaries and reinforce the strong F1 performance reported in previous evaluations. This level of reliability is particularly critical in requirement dependency prediction, where false positives may introduce unnecessary constraints between unrelated features, while false negatives risk overlooking essential dependencies. Both errors can undermine the integrity of release planning and compromise the overall coherence of software evolution.

Figure 9. Confusion matrix: Validation of the performance of the dependency prediction model.

This robust performance further justified terminating the iterative refinement process at Loop#4, as the model exhibited clear signs of convergence in both predictive accuracy and refinement efficiency. Although each loop introduced measurable gains, the improvement from Loop#3 to Loop#4 was relatively modest in terms of the F1-score, yet it required significantly fewer interventions. Notably, Loop#4 attained the highest F1-score (0.9565) and an optimal balance between precision and recall, indicating the model’s maturity in capturing complex dependencies. Additionally, the ratio of performance gain to refinement effort showed a marked increase, suggesting that the model had become increasingly selective and effective in utilizing new annotations and ontology updates. Further refinement beyond this stage would likely incur higher costs with minimal added value, making Loop#4 a methodologically sound and practically efficient endpoint for the active learning cycle.

| Finding 2 The LLM-driven active learning approach substantially improved the framework’s learning efficiency through iterative refinement. In the fourth refinement cycle, the model achieved its highest F1-score (0.9565) while requiring notably fewer annotations and update efforts. This outcome highlights the effectiveness of targeted, knowledge-guided feedback in maximizing predictive performance with minimal supervision, demonstrating that the model becomes increasingly selective and impactful in leveraging new information across refinement cycles. |

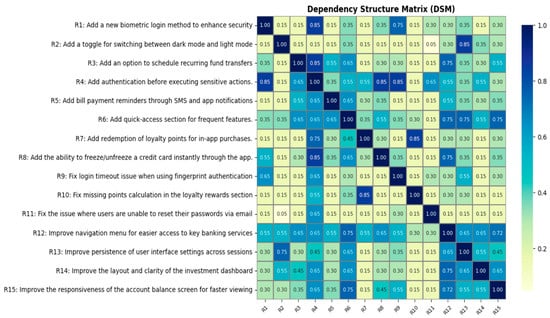

To illustrate the full capacity of the proposed framework, a 15 × 15 dependency structure matrix was generated, as seen in Figure 10, synthesizing insights derived from contextual reasoning, ontological structure, and LLM-driven iterative refinement. This matrix captures the pairwise dependency scores among 15 user-generated requirements, each processed through a pipeline that is not only context-aware but also structurally informed. The framework’s contextual awareness allows it to interpret nuanced signals from natural language feedback—such as intent, function, and domain cues—enabling the model to recognize implicit dependencies that extend beyond surface-level lexical similarity. For example, strong associations such as (R2–R13), (R7–R10), and (R8–R4) reveal clusters of requirements that are semantically or functionally aligned, suggesting that they contribute to a shared user objective or functional goal. These high-scoring links reflect not just textual correlation but also latent causal and structural logic that emerges from understanding user intent and domain semantics. Meanwhile, weak associations such as (R2–R11) or (R9–R10) point to modular, independent features that can be addressed in isolation, facilitating more efficient resource allocation and lower development risk.

Figure 10. Dependency Structure Matrix (DSM) from user reviews.
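
As a minimal sketch of how such a matrix could be assembled from pairwise classifier outputs, the following Python example fills a 15 × 15 matrix and lists the strongest links. Symmetry of the scores, the 0.8 threshold, the function names, and the scores themselves are assumptions for illustration; the scores are placeholders and not the values shown in Figure 10.

```python
def build_dsm(n, pair_scores):
    """Build an n x n matrix from {(i, j): score}, using 1-based requirement ids."""
    dsm = [[0.0] * n for _ in range(n)]
    for (i, j), score in pair_scores.items():
        dsm[i - 1][j - 1] = score
        dsm[j - 1][i - 1] = score  # assumption: dependency scores are symmetric
    return dsm


def strong_links(dsm, threshold=0.8):
    """Return (Ri, Rj, score) for each pair scoring at least threshold, strongest first."""
    n = len(dsm)
    links = [
        (f"R{i + 1}", f"R{j + 1}", dsm[i][j])
        for i in range(n)
        for j in range(i + 1, n)
        if dsm[i][j] >= threshold
    ]
    return sorted(links, key=lambda link: link[2], reverse=True)


# Placeholder scores for illustration only; NOT the values in Figure 10.
pair_scores = {
    (2, 13): 0.90,
    (7, 10): 0.85,
    (4, 8): 0.82,
    (2, 11): 0.10,
    (9, 10): 0.05,
}

dsm = build_dsm(15, pair_scores)
for left, right, score in strong_links(dsm):
    print(left, right, score)
```

Pairs below the threshold, such as the placeholder entries for R2 and R11 or R9 and R10, are left out of the list, which corresponds to treating them as candidates for independent implementation.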

Importantly, this matrix is more than a static visualization—it embodies the dynamic nature of the proposed model. Through its iterative learning process, driven by selective refinement and feedback loops, the model continuously adapts to new data and evolving user expectations. As users’ needs shift over time, the system remains responsive; newly emerging requirements, domain concepts, or semantic patterns can be integrated without reengineering the entire framework. This adaptability ensures that the dependency analysis remains current and actionable, even in rapidly evolving application domains. Furthermore, the architecture is inherently scalable; while the visualization here is limited to 15 requirements for clarity, the model is capable of processing substantially larger sets, maintaining both efficiency and interpretability.

By translating fragmented user input into structured dependency knowledge—grounded in both context and structure—the matrix offers a practical decision-support artifact. It aids stakeholders in identifying influential requirements, resolving conflicts, prioritizing release bundles, and planning modular deployments. Ultimately, this demonstration reinforces the framework’s core contribution: providing an intelligent, scalable, and continuously adaptive mechanism to support real-world software development and evolution through precise, context-aware dependency understanding.

5. Discussion

This study explores unstructured user reviews as a central data source for predicting requirement dependencies—an area that remains underexplored in the existing literature, which predominantly focuses on structured requirement documents or stakeholder-defined inputs. In contrast, app reviews reflect the lived experiences and evolving expectations of users, capturing functional demands, usability concerns, and enhancement suggestions naturally and dynamically. To the best of our knowledge, this is the first study to address the challenge of dependency prediction in this context by integrating contextual semantics, structural ontology reasoning, and iterative refinement through large language models. This shift—from developer-curated requirements to raw, user-generated feedback—introduces a unique set of complexities, including ambiguous phrasing, inconsistent terminology, and rapidly changing user needs. While traditional models often perform well in controlled settings, they typically lack the architectural flexibility needed to interpret informal, evolving inputs. To address this gap, the proposed framework employs a dual-layered reasoning strategy: contextual understanding is enhanced through the analysis of requirement intent and semantic similarity, while structural insight is derived from ontology-based metrics that capture hierarchical and functional relationships. These signals are further refined through an LLM-driven active learning loop, in which the model iteratively identifies uncertain predictions, receives automated labels from the LLM, and retrains accordingly. This feedback-driven pipeline enables the model not only to make accurate predictions but also to adapt over time—continuously aligning its internal representations with the emerging concerns, expectations, and priorities expressed by users. 
This dynamic adaptability represents a meaningful advancement over prior approaches and reinforces the framework’s relevance to real-world, user-centered software development.

The experimental findings demonstrate a consistent and significant improvement in dependency prediction performance as a result of the proposed framework’s integration of contextual and structural reasoning mechanisms. Notably, the iterative learning strategy supported by LLM feedback loops resulted in a gradual yet robust enhancement of the model’s predictive capabilities across multiple evaluation metrics, including the F1-score, AUC, and precision. These results provide empirical support for the claim that leveraging both semantic context and ontological structure can yield more accurate and interpretable representations of requirement dependencies—particularly those derived from unstructured, natural language app reviews. The substantial performance gains observed across successive refinement loops further highlight the effectiveness of dynamic knowledge infusion in reinforcing the classifier’s ability to discern complex inter-requirement relationships within noisy, user-generated content. Collectively, these outcomes underscore the potential of the proposed hybrid AI-driven approach in addressing the intricacies inherent in extracting and analyzing requirement dependencies from informal, real-world feedback sources.

The results for the first research question highlight the central role that contextual and structural reasoning played in enhancing the accuracy and robustness of dependency prediction. By incorporating deep semantic understanding of requirement intent, functional categories, and domain relevance, the model was able to interpret nuanced distinctions between user needs that are often obscured by the variability and ambiguity of natural language. Such integration proved particularly effective in uncovering hidden relationships between user statements that, on the surface, appear unrelated. For example, the model correctly identified a dependency between the complaint “the transfer fails whenever I try to send money abroad” and the enhancement request “please add an option to track international transfers.” While one reflects a transactional error and the other a feature request, both point to a shared concern regarding the reliability and visibility of international transfer functions. Even in more subtle cases, such as linking the grievance “I get a notification that my card was blocked after failed ATM withdrawal, but there was no explanation why” to the suggestion “please allow users to view real-time status updates for their cards in the app”, the framework demonstrated an ability to infer contextual dependencies rooted in user frustration and the desire for greater transparency. These findings underscore the framework’s capacity to move beyond the superficial lexical similarity that constrains many conventional approaches and to capture deeper semantic relationships. This depth of reasoning enables more accurate and meaningful modeling of user needs, ultimately supporting more informed and responsive software planning. In addition, structural reasoning further reinforced prediction quality by incorporating ontological features that quantify the relational distance between requirements.
Metrics such as path similarity, ancestor depth, and directional alignment helped the model capture latent hierarchies and implicit dependencies, even in cases where surface-level textual similarity was weak or misleading. Together, these reasoning layers provided a more comprehensive representation of inter-requirement relationships, leading to the observed improvements across all evaluation criteria. The complementary integration of contextual and structural signals thus emerged as a key driver of performance gains in this domain.
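
To make these structural features concrete, the following Python sketch computes two of them on a toy hierarchy. The ontology, the concept names, and the specific definitions are assumptions for illustration: path similarity is taken as the inverse of one plus the shortest path length through the lowest common ancestor, and ancestor depth as the depth of that ancestor. The exact formulas used by the framework are not restated in this section, and directional alignment is omitted for that reason.

```python
# Hypothetical toy banking ontology (child -> parent); not the paper's ontology.
PARENT = {
    "Transfers": "Banking",
    "Cards": "Banking",
    "InternationalTransfer": "Transfers",
    "TransferTracking": "Transfers",
    "CardStatus": "Cards",
}


def ancestors(node):
    """Return the path from node up to the root, starting with node itself."""
    path = [node]
    while path[-1] in PARENT:
        path.append(PARENT[path[-1]])
    return path


def depth(node):
    """Number of edges between node and the root."""
    return len(ancestors(node)) - 1


def lowest_common_ancestor(a, b):
    """Return the deepest concept shared by both ancestor paths, or None."""
    seen = set(ancestors(a))
    for node in ancestors(b):
        if node in seen:
            return node
    return None


def path_similarity(a, b):
    """1 / (1 + shortest path length between a and b through their common ancestor)."""
    lca = lowest_common_ancestor(a, b)
    if lca is None:
        return 0.0
    distance = (depth(a) - depth(lca)) + (depth(b) - depth(lca))
    return 1.0 / (1.0 + distance)


pair = ("InternationalTransfer", "TransferTracking")
print(path_similarity(*pair), depth(lowest_common_ancestor(*pair)))
```

Two concepts under the same parent, such as the transfer failure and transfer tracking example discussed above, score higher on both features than concepts that only meet at the root, even when their review texts share few words.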

The performance of our framework was further enhanced by incorporating iterative refinement strategies, where uncertain predictions identified by the model were selectively reviewed and improved through LLM-assisted feedback. Rather than relying on static supervision or exhaustive manual labeling, the proposed framework adopted an LLM in an active learning strategy. The uncertain requirement pairs identified by the classifier were processed within LLM-driven active learning loops, where the large language model functioned as a labeler. For instance, the model initially struggled to link the complaint “Sometimes it just logs me out without asking—especially when I switch to another app for a second” with the request “Please enable re-authentication when switching back to the app, especially for sensitive actions like transfers.” Although these statements lacked lexical similarity, their latent relationship emerged more clearly through repeated exposure and LLM-supported contextualization. Over time, the classifier learned to associate session interruption events with security enhancement requests, capturing a dependency that is subtle and semantically indirect, requiring a deeper contextual understanding beyond surface similarity. This mechanism replaced manual annotation by enabling the LLM to assign dependency labels to selected high-uncertainty pairs. This process enabled the efficient generation of additional training data without human intervention while maintaining semantic consistency and contextual awareness. The impact of this LLM-guided refinement was evident across successive training loops. A subsequent iteration using LLM-labeled data produced noticeable and consistent improvements in the F1-score, precision, and AUC. These gains underscore the effectiveness of the proposed active learning strategy, which combines uncertainty-based selection with automated, knowledge-infused labeling to enhance the model’s generalization capability progressively. 
Importantly, this iterative refinement process extended beyond the model’s parameter updates to include ongoing enhancements to the supporting ontology. As the system encountered novel expressions and emerging conceptual relations, the LLM contributed to structural updates, such as introducing new nodes, redefining hierarchies, or expanding relation types. This co-evolution of the model and ontology ensured that the framework’s semantic and structural reasoning capabilities remained aligned with the evolving language of user feedback.
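
The selection and labeling step of such a loop can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the framework’s implementation: uncertainty is measured as closeness of the predicted dependency probability to 0.5, the LLM call is replaced by a stub, retraining is left out, and all names and probabilities are hypothetical.

```python
def select_uncertain(probabilities, k):
    """Return the ids of the k pairs whose predicted probability is closest to 0.5."""
    ranked = sorted(probabilities, key=lambda pid: abs(probabilities[pid] - 0.5))
    return ranked[:k]


def stub_llm_labeler(pair_id):
    """Stand-in for the LLM call; a real system would prompt a model with both reviews."""
    return 1  # always answers "dependent", purely for illustration


def refinement_step(probabilities, labeler, labeled_pool, k=2):
    """Label the k most uncertain pairs and add them to the training pool."""
    for pair_id in select_uncertain(probabilities, k):
        labeled_pool[pair_id] = labeler(pair_id)
    return labeled_pool


# Hypothetical classifier outputs: P(dependent) for four requirement pairs.
probabilities = {"p1": 0.97, "p2": 0.52, "p3": 0.08, "p4": 0.41}

pool = refinement_step(probabilities, stub_llm_labeler, labeled_pool={})
print(sorted(pool))
```

Confidently classified pairs such as `p1` and `p3` are never sent to the labeler, so the labeling budget is spent only where the classifier is least sure. In a full loop, the classifier would be retrained on the enlarged pool before the next round of selection.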

As shown in Table 2, the current findings not only align with prior research in requirement dependency extraction but also contribute to its advancement by addressing limitations in adaptability, supervision, and knowledge integration. Early efforts, such as those in [46], demonstrated the feasibility of detecting functional dependencies through semantic clustering of natural language artifacts, achieving F1-scores of up to 75% by leveraging Doc2Vec embeddings combined with density-based or fuzzy clustering techniques. These unsupervised methods offered scalable alternatives to manual analysis, particularly in contexts lacking formal specification structures. Building on this direction, the authors of [11] introduced an integrated active learning framework that combined PV-DM embeddings with an ensemble learning model and a multi-criteria sample selection strategy. This approach yielded measurable gains in the weighted F1-score (+2.71%) while reducing the annotation cost by 46%, illustrating the efficiency of iterative refinement guided by uncertainty and semantic variation. Notably, the authors of [14] advanced this integration further by combining ontology-based reasoning with active learning mechanisms, allowing the model to benefit from structured domain knowledge while dynamically refining its predictions through iterative annotation. This hybridization enabled more context-aware dependency detection without fully relying on static ontological rules. Similarly, the authors of [16] leveraged ontology and semantic similarity for clustering-based extraction, achieving high precision (up to 89.2%) but remaining limited in adaptability due to fixed structures. Other hybrid models, such as those of [15,31], incorporated TF-IDF, POS tagging, and ensemble classification to enhance accuracy (e.g., F1-score of 89.7% in [31]), yet they lacked contextual awareness or learning adaptation. 
More recently, BERT-based models [9,45] achieved higher performance levels (F1-scores up to 93%), but they rely on batch learning, and the absence of user-driven feedback adaptation constrains their flexibility.

Table 2. A comparative overview of recent studies related to the discovery of requirement dependencies.

The inherently dynamic and continuously evolving nature of user needs presents a significant challenge to traditional requirement analysis approaches, particularly those grounded in static, batch-based learning paradigms. While such models have demonstrated the capacity to capture complex semantic relationships, especially within large-scale systems, they often fall short in terms of adaptability. Their inability to incorporate real-time user feedback or assimilate evolving domain knowledge limits their effectiveness in dynamic environments. Moreover, dependency reasoning in these approaches tends to remain implicit, lacking explicit integration with structural cues or domain-specific ontologies. In contrast, our framework exhibits clear advantages in its adaptive capability, improving across successive refinement loops and reaching an F1-score above 0.95 by the final loop. More importantly, its design embraces user-centric adaptability, treating requirement dependency prediction not as a one-time inference task but as a dynamic, learning-driven process shaped by ongoing user interaction. This perspective marks a paradigm shift in requirements engineering, underscoring the importance of scalable, context-aware models in supporting modern software evolution.

6. Threats to Validity

Despite the promising results achieved by the proposed framework, several threats to validity must be acknowledged to contextualize the findings and guide future research.

6.1. Internal Validity

Internal validity may be influenced by factors related to the data annotation process, ontology construction, and the iterative integration of knowledge. Although annotation was guided by predefined criteria and supported by domain expertise, the inherently subjective nature of interpreting requirement dependencies, particularly in ambiguous or context-sensitive user feedback, could introduce variability or bias. Furthermore, the construction and incremental updating of the domain ontology during the active learning loops combined manual and semi-automatic steps. While this mirrors realistic development conditions, it may affect the consistency of dependency representation across iterations. Additionally, the dynamic behavior of the LLM in suggesting ontology updates introduces a non-deterministic element, which, while valuable for capturing emergent knowledge, may also lead to variation in the quality or scope of added concepts. These factors collectively present a threat to the internal consistency and repeatability of the experimental process.

6.2. Construct Validity