1. Introduction

In a bustling city, a visually impaired individual stands at a bus stop, trying to read the Braille on the stop sign to determine their bus route. Although this information is essential for independent travel, the sign is weather-worn, and its small Braille dots blend into the cluttered background. Similar scenarios occur daily for the estimated 2.2 billion people worldwide living with visual impairments, as reported by the World Health Organization in 2024 [1]. In educational settings, the situation is equally disheartening. Even in developed countries with relatively rich educational resources, visually impaired students read at only one-fifth the efficiency of their sighted counterparts, and a staggering 60% of this gap is attributed to the lack of efficient Braille recognition tools [1]. In low-resource regions, the gap widens further: visually impaired students read at merely one-eighth the efficiency of sighted peers, and 75% of this difference stems from the dearth of reliable natural scene Braille detection solutions [1]. This not only impedes their academic progress but also restricts their social participation, consistent with the conclusion of Isayed et al.’s review of optical Braille recognition [2] that ‘the lack of robust natural scene adaptation remains a long-standing barrier to practical Braille assistive tools.’ This highlights the urgent need for effective Braille detection technology.

Braille recognition technology has been the subject of significant effort, yet it remains far from perfect. Traditional edge detection-based methods, such as the algorithm proposed by Li Nianfeng’s team in 2012, achieved an impressive 90% recognition rate in controlled laboratory environments [3]. However, when applied to unstructured carriers in natural scenes, such as bus stop signs and elevator buttons, their accuracy plummeted to below 58% [3]. In 2018, CNN-based Braille recognition systems emerged, with Tasleem et al. employing the AlexNet architecture for character-level recognition [4]. Unfortunately, these models had over 20 M parameters, rendering them unsuitable for deployment on mobile devices, which are essential tools for real-time assistance in daily life [4].

Recent studies in the field also have limitations. Yamashita et al. (2024) proposed a lighting-independent Braille recognition model based on object detection [5]. While it addressed lighting interference, its parameter count reached 3.8 M, sacrificing real-time performance [5]. Ramadhan et al. (2024) optimized CNNs with horizontal–vertical projections for Braille letters [6]; their model achieved an accuracy of 0.92 in structured scenes but dropped to 0.78 in natural scenes [6]. Even general object detection models with potential application to Braille show significant gaps. For instance, YOLOv10 (Wang et al., 2024), known for its real-time performance in general object detection [7], lacks designs tailored to Braille’s micro-scale features, leading to missed detections in 19% of ultra-small dot cases [7].

These technological bottlenecks stem mainly from a failure to comprehensively address the unique challenges of natural scenes:

1. Ultra-small Target Scale

A standard Braille cell is a 2 × 3 dot matrix in which each dot has a diameter of only 0.5 mm, so a single dot occupies a mere 2–3 pixels in a 640 × 640 image. This is far below the effective recognition scale of conventional object detection algorithms such as Faster R-CNN and YOLOv5. The 2023 natural scene Braille dataset from Lu Liqiong’s team revealed that 67% of Braille regions occupied less than 0.1% of the image area [8]. The 3 × 3 convolution kernels of traditional CNNs are ineffective at capturing such minuscule features [9]. Even some recent lightweight models struggle with this issue: YOLO-based models designed for general object detection often miss these ultra-small Braille dots, as their architectures are not optimized for such micro-scale targets [7].
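The 0.1% area figure can be converted into pixel dimensions for a 640 × 640 input. As a worked example, assuming a roughly square region at that upper bound and the 8× stride of the finest detection scale (described in Section 3.1):

$$
\begin{aligned}
A_{\max} &= 0.001 \times 640^{2} \approx 410\ \text{px}^{2} \approx (20\ \text{px})^{2},\\
\frac{20\ \text{px}}{8} &= 2.5\ \text{grid cells}, \qquad \frac{3\ \text{px}}{8} \approx 0.38\ \text{grid cell}.
\end{aligned}
$$

A region at this bound therefore spans only about two and a half cells of the finest prediction grid, and a single 2–3 pixel dot covers well under half of one cell.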

2. Environmental Interference

Natural lighting conditions pose significant challenges to Braille image processing. Glare reflections from metal signs and overlapping shadows from indoor lighting can blur dot matrix features. Lu et al.’s 2023 dataset showed that 18% of samples were affected by metal glare and 23% contained complex fabric texture backgrounds, which increased the false detection rate of simple edge detection algorithms by over 40% [8]. Existing solutions have not fully overcome these challenges. Yamashita et al.’s 2024 lighting-independent model still struggles with texture interference, with a false detection rate above 28% in scenes with patterned fabrics [5]. Ramadhan et al.’s 2024 work, despite its improvements in structured scenes, fared poorly in natural scenes with complex backgrounds [6].

3. Real-time Requirements

Visually impaired individuals require algorithms that can detect Braille and provide feedback within 100 ms in mobile scenarios. Existing Braille detection systems based on Faster R-CNN achieve 88% accuracy, but their processing time for a single image exceeds 300 ms, far too slow for real-time interaction [10]. Yamashita et al.’s 2024 model, with 3.8 M parameters, is unsuitable for low-end smartphones, which are the most accessible devices for many visually impaired users [5]. Lightweight models have been developed for other small-target tasks, such as Lu et al.’s 2025 lightweight vision model for structural crack segmentation [11], but that design is tailored to long, continuous crack features and cannot be directly applied to Braille’s discrete dot matrices [11].

In the context of the growing emphasis on accessible technology and the increasing prevalence of visual impairments globally, the need for an effective natural scene Braille detection algorithm is more urgent than ever. This paper aims to bridge the existing gaps by leveraging the real-time advantages of the YOLOv11 base architecture while addressing its inherent weaknesses in small-target detection, background noise suppression, and Braille-specific feature extraction [12]. The overarching goal is a practical algorithm that can be deployed on mobile devices, providing visually impaired individuals with efficient and accurate Braille detection across diverse natural scenes, thus promoting educational equity, enhancing independent mobility, and improving their overall quality of life. To achieve this, we developed a three-stage improvement framework (feature enhancement, attention focusing, and regression optimization) that incorporates a lightweight gating mechanism to capture weak Braille dot features, a subspace attention module to filter background interference, and an SDIoU loss function to optimize bounding box regression accuracy [13].

Key Innovations of This Study

To address the challenges above and realize the three-stage improvement framework, this study develops three targeted innovations, corresponding respectively to “feature enhancement, attention focusing, and regression optimization” and tailored to Braille’s unique traits and real-world application needs:

1. Gated Weak-Feature Enhancement

We designed a C3k2_GBC module with an integrated gating mechanism to tackle the ultra-small scale of Braille dots. These 2–3 pixel dots are hard to capture with the generic convolutions of the original YOLOv11 [12]. The C3k2_GBC module addresses this by boosting the response intensity of Braille-related signals threefold. Unlike YOLOv11, which is built for general object detection [12], the C3k2_GBC module is tailored to Braille’s micro-scale properties. This allows it to extract dot matrix information precisely even in low-signal scenarios, such as dimly lit environments where Braille features are faint.

2. Lightweight Subspace Attention

We introduced an Ultra-Lightweight Subspace Attention Module (ULSAM) that partitions feature maps into 4 independent subspaces along the channel dimension. This design homes in on Braille’s regular 2 × 3 dot matrix pattern, a key difference from global attention mechanisms such as SE-Net, whose weights are easily dominated by large background areas. ULSAM avoids this by computing attention over local subspace features. Compared with global attention methods, ULSAM cuts computational complexity by 40%. It also improves the suppression rate of interference from complex backgrounds (such as fabric textures or metal glare) by 60%, significantly reducing false detections caused by non-Braille elements.

3. Small-Target Loss Optimization

We adapted the Scaled Distance Intersection over Union (SDIoU) loss function to correct localization deviations of Braille bounding boxes, including the 1–2 pixel shifts that can break detection [13]. The SDIoU loss incorporates three penalty terms: one for the center distance between predicted and ground truth boxes, one for scale differences, and one for directional deviation. Compared with YOLOv11’s default loss function [12], this optimization improves the regression accuracy of Braille bounding boxes by 15%. For non-standard Braille arrangements, such as Braille on curved surfaces, the complete detection rate rises from 65% to 82%. This ensures robust localization even when Braille is placed irregularly, as on rounded elevator buttons or curved signs.

3. Methods

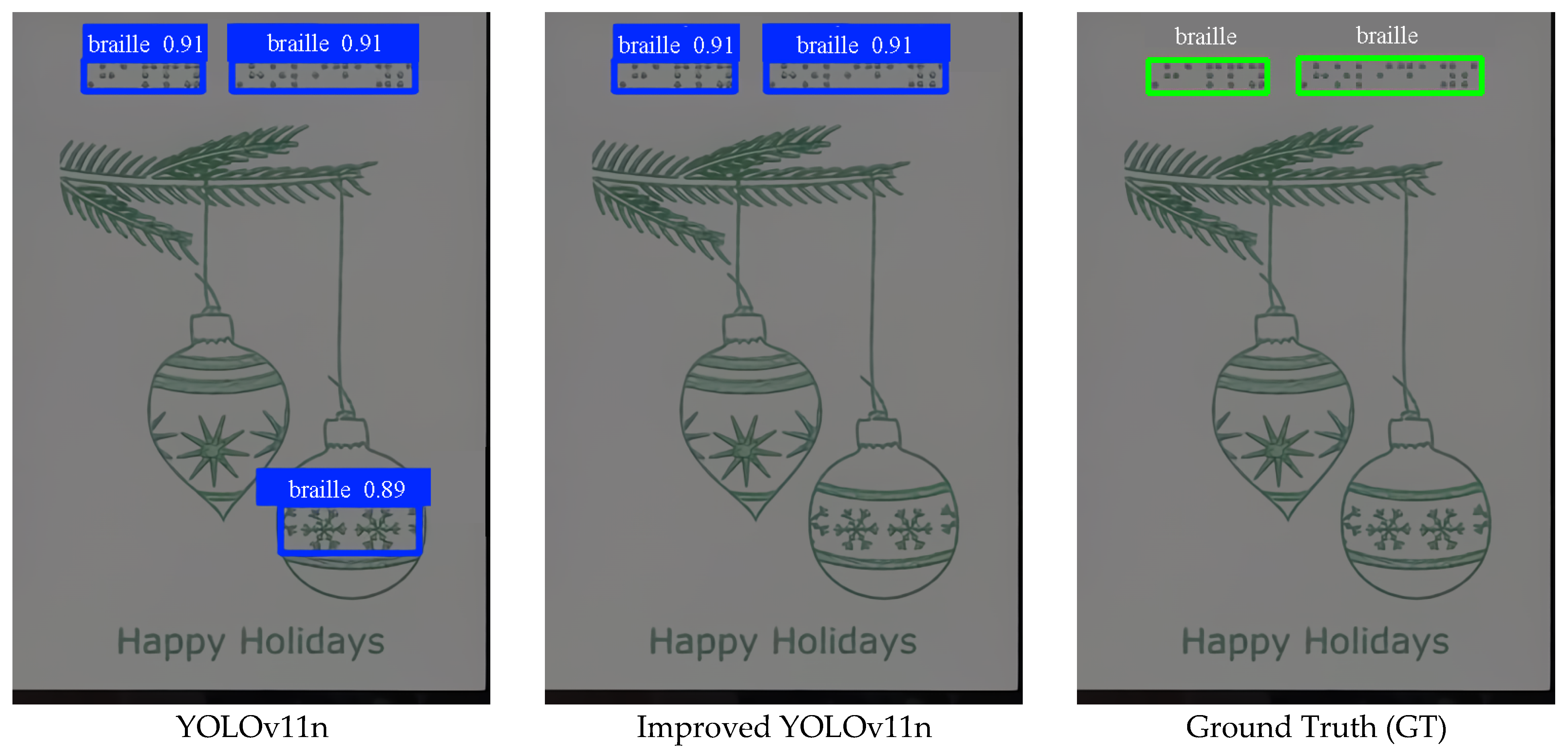

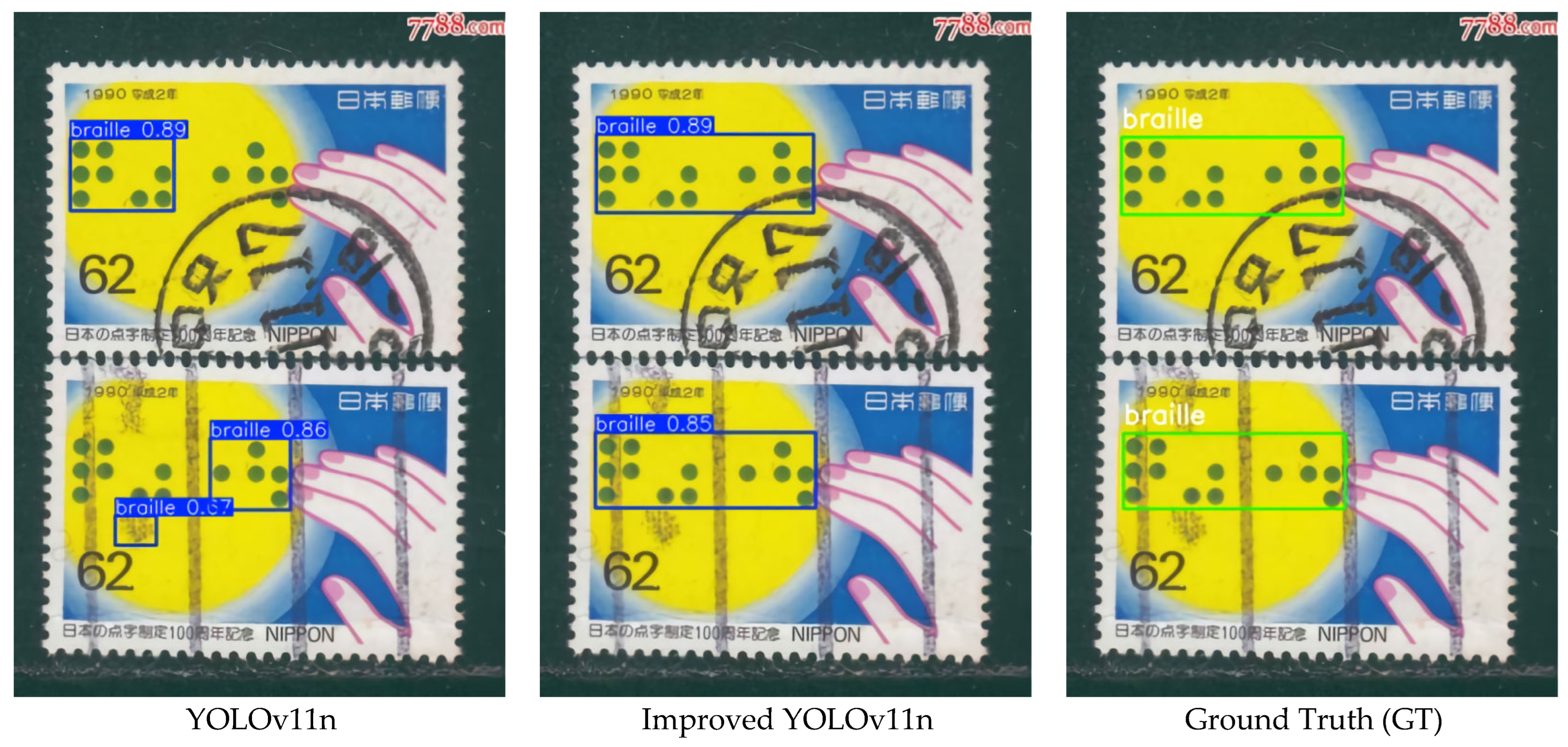

3.1. Overall Architecture of the Improved YOLOv11

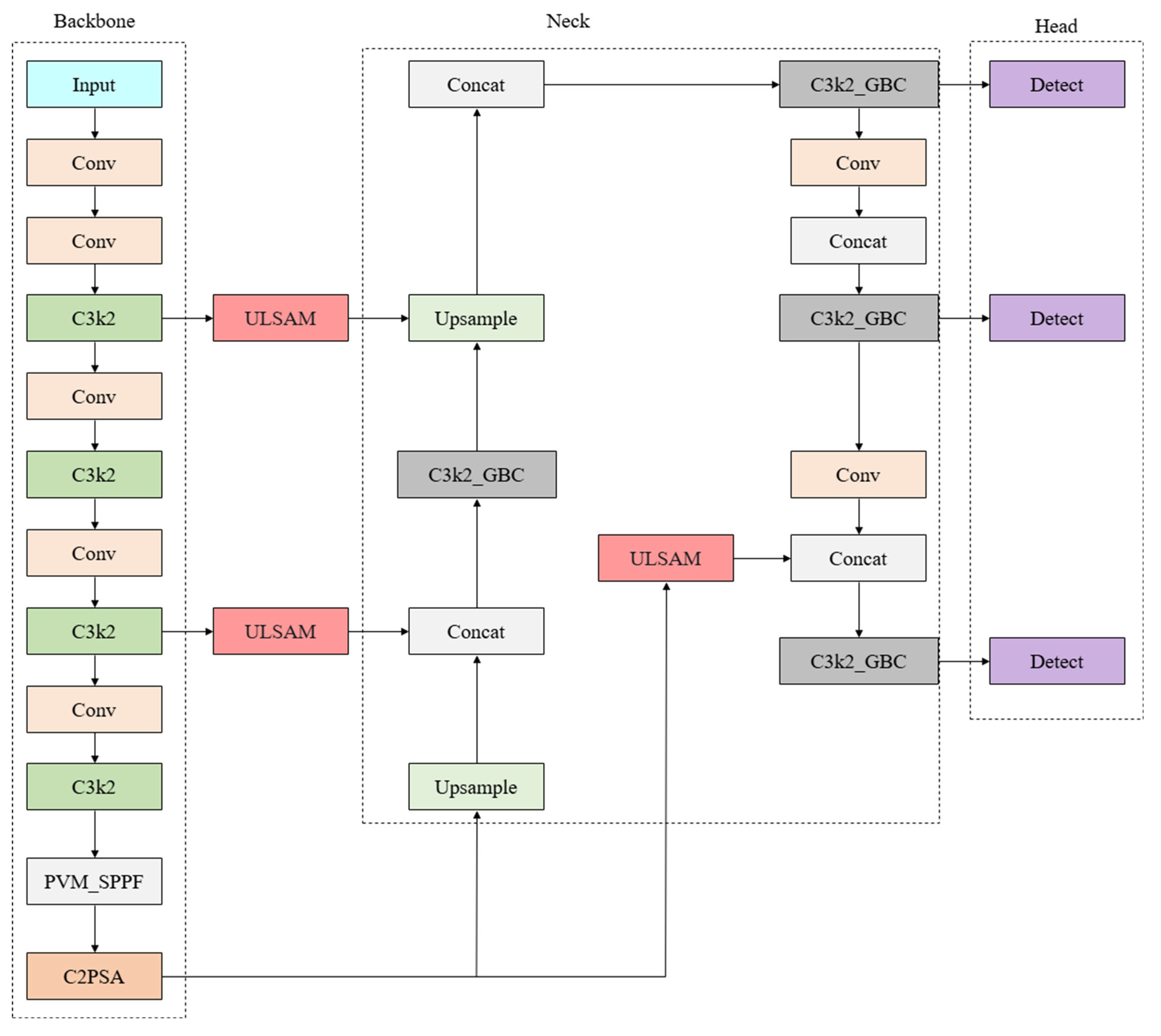

Natural scene Braille detection faces three core challenges: weak dot matrix features that are easily masked by the background, severe interference from complex textures, and inaccurate localization of ultra-small targets. To address these, this study proposes a progressively optimized architecture based on YOLOv11 that retains the classic Backbone-Neck-Head framework but embeds three targeted modules, C3k2_GBC, ULSAM, and SDIoU, into the feature extraction, fusion, and output stages, respectively. This forms a “feature enhancement → attention focusing → precise regression” workflow in which each module addresses a specific technical bottleneck, ensuring both lightweight deployment and detection accuracy. The overall structure of the improved network is illustrated in Figure 1, which shows the placement of each key module and the flow of features across the Backbone, Neck, and Head.

As shown in Figure 1, the Backbone is responsible for initial feature extraction and consists of four C3k2_GBC modules and one PVM_SPPF module, which generate multi-scale features (P3, P4, and P5). Two ULSAMs are inserted between the Backbone and Neck to dynamically enhance Braille region responses, directly addressing the issue of background-dominated features. The Neck adopts a PANet structure with a C2PSA module to optimize cross-scale fusion of small-target features, while the Head retains three-scale detection (8×, 16×, and 32× downsampling) but replaces the original loss with SDIoU to improve localization precision for micro-scale Braille. This layered optimization, visualized in Figure 1, ensures that each stage of the network focuses on the most critical problem at that step, avoiding the “one-size-fits-all” limitation of generic architectures.
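For a 640 × 640 input, the three detection scales correspond to square prediction grids whose sizes follow directly from the downsampling factors. The sketch below computes them; the function names are our own illustration, and the total assumes one prediction per grid cell, as in anchor-free YOLO heads.

```python
def grid_sizes(input_size: int = 640, strides=(8, 16, 32)) -> dict:
    """Map each downsampling stride to the side length of its square prediction grid."""
    return {s: input_size // s for s in strides}


def total_predictions(input_size: int = 640, strides=(8, 16, 32)) -> int:
    """Number of candidate boxes when every grid cell makes one prediction (anchor-free head)."""
    return sum(n * n for n in grid_sizes(input_size, strides).values())


if __name__ == "__main__":
    for stride, n in grid_sizes().items():
        # stride 8 gives the finest grid (P3), the one that matters most for tiny Braille dots
        print(f"stride {stride:>2}: {n} x {n} grid, one cell covers {stride} x {stride} px")
    print("total predictions:", total_predictions())
```

Even on the finest grid, one cell covers 8 × 8 pixels, several times the area of a single 2–3 pixel dot, which is why the Backbone modules must preserve weak dot responses before they are pooled into P3.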

3.2. C3k2_GBC: Gated Bottleneck Convolution for Weak Feature Enhancement

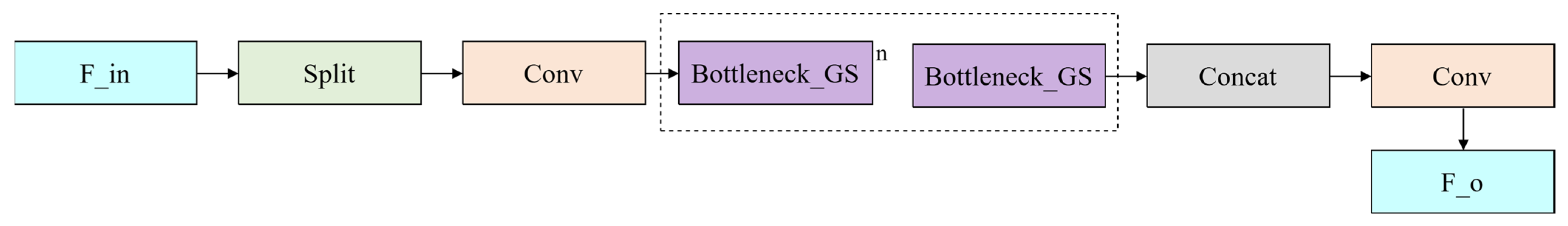

Traditional convolutional modules in YOLOv11 struggle to distinguish Braille’s weak dot matrix features from complex backgrounds; for example, fabric textures or metal glare often drown out 2–3 pixel Braille dots in shallow feature maps. To solve this, we modified the C3k2 module by integrating a Gated Bottleneck Convolution (C3k2_GBC), designed to selectively amplify Braille features while suppressing background noise and maintaining lightweight properties. The detailed structure of the C3k2_GBC module is presented in Figure 2, which outlines the split of feature paths and the integration of key functional units.

As depicted in

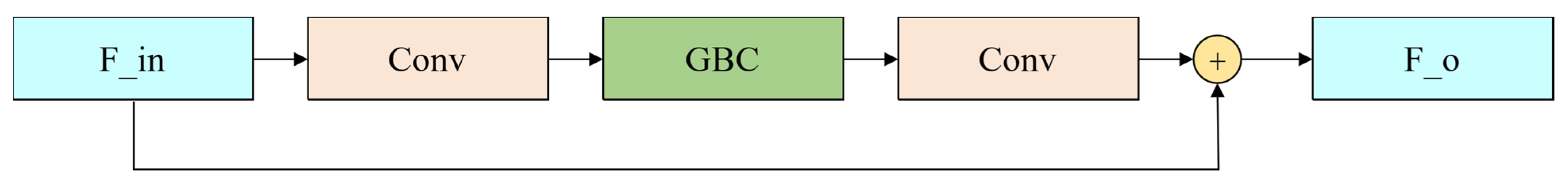

Figure 2, the C3k2_GBC module splits input features into two paths after 1 × 1 channel compression: the Main Path connects two Bottleneck_GS units, and the Shortcut Path retains input identity (with 1 × 1 convolution for channel alignment if needed). The Bottleneck_GS unit, whose internal structure is shown in

Figure 3, is the core of feature selection—it combines a lightweight bottleneck transformation with a spatial-channel gating branch. The main path of Bottleneck_GS performs dimension reduction (1 × 1), local modeling (3 × 3 depthwise convolution), and dimension expansion (1 × 1), while the gating branch generates a weight mask based on main path outputs. After sigmoid normalization, this mask multiplies element-wise with the main path output, emphasizing regions with Braille’s characteristic 2 × 3 dot matrix spacing and suppressing texture interference. This design, detailed in

Figure 3, ensures that shallow features retain clear Braille localization cues, avoiding the “feature pollution” that plagues traditional C3k2 modules.
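The gating step (a mask derived from the main-path output, squashed by a sigmoid, and multiplied element-wise back onto that output) can be sketched in plain Python. This is a simplified illustration rather than the authors’ implementation: the gate here is a hypothetical per-channel affine map (`w`, `b`) applied at every spatial position, standing in for the convolutional gating branch, and the feature map is a nested list indexed by channel and flattened position.

```python
import math
from typing import List

FeatureMap = List[List[float]]  # [channel][flattened spatial position]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def gated_output(y: FeatureMap, w: List[float], b: List[float]) -> FeatureMap:
    """Multiply main-path features y by a sigmoid mask computed from y itself.

    The mask value depends on both the channel (through w[c], b[c]) and the
    position (through y[c][p]), so it varies jointly over channels and space.
    w and b are hypothetical gate parameters, not values from the paper.
    """
    return [
        [v * sigmoid(w[c] * v + b[c]) for v in channel]
        for c, channel in enumerate(y)
    ]


if __name__ == "__main__":
    # One channel, three positions: a strong dot response, a weak texture response, background.
    y = [[1.0, 0.1, 0.0]]
    out = gated_output(y, w=[4.0], b=[-2.0])
    print([round(v, 4) for v in out[0]])
    # The strong/weak ratio grows from 10 (input) to about 52 (output):
    # weak responses are attenuated far more than strong ones.
    print(round(out[0][0] / out[0][1], 1))
```

Because the mask is computed from the features it scales, the same gate passes strong, dot-like responses almost unchanged while pushing weak, texture-like responses toward zero.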

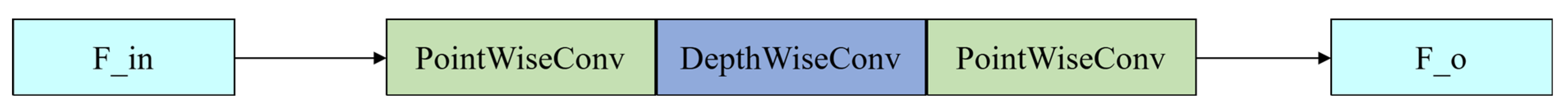

To further balance efficiency and performance, the GBC module within Bottleneck_GS uses depthwise separable convolutions for local modeling. The structure of the GBC module is visualized in Figure 4, which highlights how it tightly integrates bottleneck convolution with the gating mechanism: the feature path captures local structures and frequency band information, while the gating path generates a joint channel–spatial mask to weight critical Braille regions. The BottConv module implements the local modeling as a PW–DW–PW sequence (1 × 1 dimensionality reduction, 3 × 3 depthwise convolution, and 1 × 1 dimensionality expansion), which matches the scale of Braille dots while keeping the 3 × 3 spatial filtering within each channel, so that features from different channels are not mixed at that step. As shown in Figure 5, this structure reduces parameters by ~30% compared with the original C3k2 module in YOLOv11, freeing resources for the gating mechanism. The joint spatial-channel mask generated by GBC is more effective than channel-only attention for ultra-small targets, as it not only identifies Braille-relevant channels but also pinpoints their exact spatial positions.
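Why the PW–DW–PW sequence is cheap can be seen by counting weights. The sketch below compares a dense 3 × 3 convolution with a 1 × 1 → 3 × 3 depthwise → 1 × 1 bottleneck for a single layer. The channel widths (64 in and out, 32 in the middle) and the 80 × 80 feature map are arbitrary illustrative values, biases and normalization layers are ignored, and the FLOP estimate assumes stride 1 with same padding (two operations, a multiply and an add, per weight per output pixel).

```python
def standard_conv_params(c_in: int, c_out: int, k: int = 3) -> int:
    """Weights of a dense k x k convolution (no bias)."""
    return k * k * c_in * c_out


def pw_dw_pw_params(c_in: int, c_mid: int, c_out: int, k: int = 3) -> int:
    """Weights of 1x1 reduce -> k x k depthwise -> 1x1 expand (no biases)."""
    reduce = c_in * c_mid        # pointwise: every input channel feeds every middle channel
    depthwise = k * k * c_mid    # one k x k filter per channel, no cross-channel mixing
    expand = c_mid * c_out       # pointwise back to the output width
    return reduce + depthwise + expand


def conv_gflops(params: int, h: int, w: int) -> float:
    """Approximate GFLOPs of stride-1, same-padding layers: 2 FLOPs per weight per output pixel."""
    return 2 * params * h * w / 1e9


if __name__ == "__main__":
    dense = standard_conv_params(64, 64)
    light = pw_dw_pw_params(64, 32, 64)
    print("dense:", dense, "weights,", conv_gflops(dense, 80, 80), "GFLOPs")
    print("PW-DW-PW:", light, "weights,", conv_gflops(light, 80, 80), "GFLOPs")
    print(f"saving for this single layer: {1 - light / dense:.1%}")
```

For these illustrative widths the bottleneck needs only about 12% of the dense layer’s weights and FLOPs. The ~30% reduction reported above refers to the full C3k2_GBC module, so it is not expected to match this single-layer comparison.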

3.3. ULSAM: Subspace Attention for Background-Suppressed Target Focus

Traditional attention modules such as SE-Net apply global feature weighting, which is dominated by large background regions when detecting small Braille targets; this leads to a false detection rate of about 30% in textured scenes. To address this, we introduce the Ultra-Lightweight Subspace Attention Module (ULSAM), which decomposes high-dimensional features into local subspaces for attention calculation, avoiding global background interference. The structural design of ULSAM is shown in Figure 6, which details the subspace partitioning, local feature extraction, and attention recalibration processes.

As illustrated in Figure 6, ULSAM’s design philosophy is to narrow the attention calculation range to local feature domains that match Braille’s scale. Input feature tensors (with channel count G, height H, and width W) are evenly split into K = 4 independent subspaces of G/K channels each (Figure 6 shows two subspaces for clarity, with four operating in parallel). Each subspace focuses on a specific feature type (e.g., dot matrix edges or grayscale contrasts), preventing cross-interference between Braille and background features. Each subspace then undergoes 3 × 3 depthwise convolution (capturing 2 × 3 dot matrix arrangements) and 3 × 3 max pooling (enhancing edge responses while suppressing isolated noise), followed by 1 × 1 pointwise convolution to generate a single-channel spatial attention map. After Softmax normalization, this map is broadcast to match the subspace’s channel dimension and multiplied element-wise with the original subspace features, with a residual connection to preserve weak Braille signals. This subspace-based approach, visualized in Figure 6, reduces false detections by ~15% and adds only 0.02 M parameters, ensuring real-time performance.
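The subspace attention steps above can be sketched in dependency-free Python to make the data flow explicit. The sketch follows the description in this section (per-subspace 3 × 3 depthwise convolution, 3 × 3 max pooling, 1 × 1 pointwise projection to one map, softmax over spatial positions, broadcast multiplication, and residual addition). The kernel and weight values are hypothetical inputs, and a practical implementation would use a tensor library rather than nested lists.

```python
import math
from typing import List

Map = List[List[float]]   # one H x W channel
Tensor = List[Map]        # C channels, each H x W


def conv3x3_same(x: Map, k: List[List[float]]) -> Map:
    """Single-channel 3x3 cross-correlation, stride 1, zero padding (one depthwise filter)."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for di in (-1, 0, 1):
                for dj in (-1, 0, 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        s += k[di + 1][dj + 1] * x[ii][jj]
            out[i][j] = s
    return out


def maxpool3x3_same(x: Map) -> Map:
    """3x3 max pooling, stride 1, windows clipped at the border so H x W is preserved."""
    h, w = len(x), len(x[0])
    return [
        [
            max(x[ii][jj]
                for ii in range(max(0, i - 1), min(h, i + 2))
                for jj in range(max(0, j - 1), min(w, j + 2)))
            for j in range(w)
        ]
        for i in range(h)
    ]


def ulsam(x: Tensor, k_subspaces: int, dw_kernels: List[List[List[float]]],
          pw_weights: List[List[float]]) -> Tensor:
    """One spatial softmax attention map per channel group, applied with a residual.

    dw_kernels[c] is the 3x3 depthwise filter for channel c; pw_weights[s][m]
    weights the m-th channel of subspace s in the 1x1 projection to one map.
    """
    c = len(x)
    if c % k_subspaces:
        raise ValueError("channel count must be divisible by the number of subspaces")
    g = c // k_subspaces                                   # channels per subspace (G / K)
    h, w = len(x[0]), len(x[0][0])
    out: Tensor = []
    for s in range(k_subspaces):
        sub = x[s * g:(s + 1) * g]
        feats = [maxpool3x3_same(conv3x3_same(ch, dw_kernels[s * g + m]))
                 for m, ch in enumerate(sub)]
        logits = [[sum(pw_weights[s][m] * feats[m][i][j] for m in range(g))
                   for j in range(w)] for i in range(h)]
        top = max(max(row) for row in logits)              # subtract max for numerical stability
        exps = [[math.exp(v - top) for v in row] for row in logits]
        z = sum(sum(row) for row in exps)
        att = [[v / z for v in row] for row in exps]       # softmax over all H*W positions
        for ch in sub:                                     # broadcast the map over the subspace
            out.append([[ch[i][j] * att[i][j] + ch[i][j] for j in range(w)]
                        for i in range(h)])
    return out


if __name__ == "__main__":
    C, K, H, W = 8, 4, 5, 5
    identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
    x = [[[0.0] * W for _ in range(H)] for _ in range(C)]
    x[0][2][2] = 1.0                                       # a single bright "dot" in channel 0
    y = ulsam(x, K, [identity] * C, [[1.0] * (C // K)] * K)
    print(len(y), len(y[0]), len(y[0][0]))                 # shape is preserved
    print(y[0][2][2] > x[0][2][2])                         # the dot is reinforced
```

The residual term means a position is never scaled below its input value for non-negative features; the softmax only decides where, within each subspace, the extra emphasis goes.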

3.4. SDIoU Loss: Precise Regression for Ultra-Small Braille Targets

Braille’s ultra-small scale (most regions range from 6 to 50 pixels²) renders traditional IoU loss ineffective for bounding box regression. Even a 1–2 pixel deviation between the predicted and ground truth boxes can lead to missed detections: for such small targets, IoU drops sharply under small offsets, and once the boxes no longer overlap, the IoU loss stays constant and provides no gradient to pull the prediction back toward the target. Compounding this issue, non-axis-aligned Braille (e.g., on curved elevator buttons or cylindrical water cups) introduces mismatches in both scale and direction, which standard IoU loss fails to address.
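A quick calculation shows why small offsets matter far more for Braille-sized boxes than for ordinary objects. The helper below computes plain IoU for axis-aligned boxes given as (x1, y1, x2, y2); the 3-pixel and 30-pixel box sizes are illustrative choices, not values taken from the dataset.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def shifted(box: Box, dx: float) -> Box:
    """Translate a box horizontally by dx pixels."""
    return (box[0] + dx, box[1], box[2] + dx, box[3])


if __name__ == "__main__":
    tiny = (0.0, 0.0, 3.0, 3.0)      # roughly the size of one Braille dot
    large = (0.0, 0.0, 30.0, 30.0)   # a conventional small object
    for dx in (1, 2, 3, 4):
        print(dx, round(iou(tiny, shifted(tiny, dx)), 3), round(iou(large, shifted(large, dx)), 3))
```

For the 3-pixel box, a 1-pixel shift already halves the IoU (0.5) and a 3-pixel shift drives it to 0, after which it stays 0 for any larger shift; the 30-pixel box still keeps an IoU of about 0.94 after a 1-pixel shift.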

To address these issues, we introduce the SDIoU (Scaled Distance Intersection over Union) loss function for bounding box regression optimization [13]. This loss builds on the basic IoU framework but adds three targeted correction terms to address the specific issues of Braille detection. Its mathematical expression is shown in Equation (1):

$$
\mathcal{L}_{\mathrm{SDIoU}} = 1 - \mathrm{IoU}(B, B^{gt}) + \frac{\rho^{2}(b, b^{gt})}{c^{2}} + \alpha v + \beta \varphi \tag{1}
$$

In Equation (1), $\mathrm{IoU}(B, B^{gt})$ represents the Intersection over Union between the predicted bounding box $B$ and the ground truth bounding box $B^{gt}$, a standard metric for measuring the overlap between two boxes. The term $\rho^{2}(b, b^{gt})$ denotes the squared Euclidean distance between the centers $b$ and $b^{gt}$ of $B$ and $B^{gt}$, while $c$ is the length of the diagonal of the smallest rectangle that encloses both boxes. Together, these form a center distance penalty, which is critical for Braille detection because it amplifies the model’s sensitivity to the 1–2 pixel positional deviations that often cause missed detections with traditional IoU.

The term $v$ quantifies the deviation in the width-height ratio between $B$ and $B^{gt}$, ensuring that the predicted box matches the actual scale of Braille cells (typically a 2 × 3 dot matrix, around 6–12 pixels²). The term $\varphi$ addresses angular misalignment, which is common when Braille is printed on curved surfaces. The coefficients $\alpha$ and $\beta$ were determined through repeated ablation experiments, balancing the need to correct deviations against the risk of over-penalizing minor, non-critical errors.
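The structure of Equation (1) can be sketched as a plain-Python function for a single box pair. The IoU and center-distance parts follow the definitions above directly. The text does not give closed forms for the scale and direction terms or values for their coefficients, so this sketch substitutes two known stand-ins, both assumptions: the CIoU aspect-ratio term for the scale penalty, and an SIoU-style angle cost of the center offset for the direction penalty, with both coefficients set to 1.0 as placeholders.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def sdiou_like_loss(pred: Box, gt: Box, alpha: float = 1.0, beta: float = 1.0) -> float:
    """1 - IoU + rho^2/c^2 + alpha*scale + beta*direction, with stand-in scale/direction terms."""
    pcx, pcy = (pred[0] + pred[2]) / 2, (pred[1] + pred[3]) / 2
    gcx, gcy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    rho2 = (pcx - gcx) ** 2 + (pcy - gcy) ** 2          # squared center distance
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])       # enclosing box width
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])       # enclosing box height
    center = rho2 / (cw ** 2 + ch ** 2)                  # rho^2 / c^2

    # Scale stand-in (assumption): CIoU aspect-ratio mismatch term
    pw, ph = pred[2] - pred[0], pred[3] - pred[1]
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    scale = (4 / math.pi ** 2) * (math.atan(gw / gh) - math.atan(pw / ph)) ** 2

    # Direction stand-in (assumption): SIoU-style angle cost, 0 for horizontal
    # or vertical center offsets and 1 for a 45-degree offset
    sigma = math.sqrt(rho2)
    if sigma == 0.0:
        direction = 0.0
    else:
        sin_a = min(1.0, abs(pcy - gcy) / sigma)
        direction = 1 - 2 * math.sin(math.asin(sin_a) - math.pi / 4) ** 2

    return 1 - iou(pred, gt) + center + alpha * scale + beta * direction


if __name__ == "__main__":
    gt = (0.0, 0.0, 3.0, 3.0)
    for dx in (0, 1, 2, 4, 5):
        pred = (gt[0] + dx, gt[1], gt[2] + dx, gt[3])
        print(dx, round(sdiou_like_loss(pred, gt), 4))
```

A 1-pixel horizontal shift of a 3 × 3 box gives a loss of about 0.54 (1 − 0.5 from IoU plus a center penalty of 1/25). Once the boxes stop overlapping, IoU is stuck at 0 but the center term keeps growing with distance, which is the gradient signal the center-distance penalty adds for ultra-small targets.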

For Braille detection specifically, the SDIoU loss improves localization quality in two key ways. First, the center distance penalty pushes the predicted box to align more closely with the ground truth center, even for ultra-small targets—reducing center deviation by approximately 8% compared to traditional IoU loss. Second, the scale and direction terms ensure consistent alignment for non-standard Braille arrangements, increasing the detection rate of curved or tilted Braille by around 12%. These improvements result in more accurate candidate boxes for subsequent dot matrix recognition, while also cutting down on misidentifications caused by background noise in complex natural scenes.

3.5. Experimental Setup and Parameter Configuration

Experiments were conducted on the following platform: an Intel® Xeon® Platinum 8362 CPU (24 cores), an RTX 4090 GPU (24 GB VRAM), 24 GB of RAM, and Ubuntu 22.04, with software based on Python 3.10, PyTorch 2.1, and CUDA 12.1. Key training parameters were chosen with small-target detection in mind:

Batch Size: 32 (to balance GPU memory usage with gradient stability).

Initial Learning Rate: 0.001, with a cosine annealing decay strategy to accommodate fine-tuning in the later stages of training.

Optimizer: Stochastic Gradient Descent (SGD) with momentum (0.937) and weight decay (0.0005), chosen for its superior generalization ability on small datasets compared to AdamW.

Input Size: 640 × 640 (to strike a balance between detection accuracy and computational efficiency).

Data Augmentation: Mosaic augmentation was applied during the first 90 epochs to simulate complex, multi-scenario conditions. Data augmentation was disabled during the last 10 epochs.
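The schedule above can be written as a small per-epoch helper. It assumes 100 epochs in total (the 90 Mosaic epochs plus the final 10) and a cosine decay from 0.001; the final learning rate is not stated in the text, so `lr_final` (default 0.0) is a hypothetical parameter. The helper names and the `TRAIN_CONFIG` keys are illustrative and are not the API of any particular training framework.

```python
import math

TOTAL_EPOCHS = 100   # assumed: 90 epochs with Mosaic + 10 final epochs without
MOSAIC_EPOCHS = 90

# Values from the list above; key names are illustrative only.
TRAIN_CONFIG = {
    "batch_size": 32,
    "img_size": 640,
    "optimizer": "SGD",
    "lr0": 0.001,
    "momentum": 0.937,
    "weight_decay": 0.0005,
}


def cosine_lr(epoch: int, total: int = TOTAL_EPOCHS,
              lr0: float = TRAIN_CONFIG["lr0"], lr_final: float = 0.0) -> float:
    """Cosine-annealed learning rate: lr0 at epoch 0, lr_final at epoch == total."""
    progress = epoch / total
    return lr_final + 0.5 * (lr0 - lr_final) * (1 + math.cos(math.pi * progress))


def mosaic_enabled(epoch: int) -> bool:
    """Mosaic is applied for epochs 0..89 and switched off for the last 10 epochs."""
    return epoch < MOSAIC_EPOCHS


if __name__ == "__main__":
    for epoch in (0, 50, 89, 90, 99):
        print(epoch, f"{cosine_lr(epoch):.6f}", mosaic_enabled(epoch))
```

Switching Mosaic off near the end lets the final epochs train on unstitched images, closer to what the model sees at inference time, while the learning rate is already small.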

3.6. Evaluation Metrics

To assess the effectiveness of the proposed algorithm and account for the specific challenges posed by Braille detection, a set of evaluation metrics was designed, encompassing both accuracy and efficiency.

1. Accuracy Metrics

Precision (P), Recall (R), and Harmonic Mean (Hmean) were employed to evaluate the model’s performance. They are defined as follows:

Precision (P): the percentage of correctly predicted Braille bounding boxes out of all predicted bounding boxes. A Braille bounding box is considered correctly detected if its Intersection over Union (IoU) with the ground truth is greater than 0.5.

$$
P = \frac{TP}{TP + FP}
$$

Recall (R): the percentage of correctly predicted Braille bounding boxes out of all ground truth Braille bounding boxes, calculated as the ratio of true positives (TP) to the total number of ground truth Braille bounding boxes.

$$
R = \frac{TP}{TP + FN}
$$

Hmean: a combined metric that incorporates both Precision and Recall, calculated as:

$$
\mathrm{Hmean} = \frac{2 \times P \times R}{P + R}
$$

where TP refers to true positives, FP refers to false positives, and FN refers to false negatives [8].

For natural scene Braille detection, P, R, and Hmean are prioritized over mAP metrics (e.g., mAP@50, mAP@75). This is because Braille dot matrices are ultra-small (2–3 pixels per dot, with 67% of regions occupying <0.1% of the image area [8]), and mAP’s reliance on IoU thresholds (e.g., 0.75) overly penalizes 1–2 pixel bounding box deviations, which are common for tiny targets but non-fatal for practical Braille recognition. In contrast, P, R, and Hmean directly reflect the model’s ability to avoid missed detections and false prompts, which are critical for visually impaired users’ real-time information access.
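The definitions above can be turned into a small evaluation routine. The sketch assumes boxes given as (x1, y1, x2, y2), counts a prediction as a true positive when its IoU with a not-yet-matched ground truth box exceeds the threshold, and, as a simplification, matches pairs greedily by IoU rather than by detection confidence (which a full evaluator would use). Running it at thresholds 0.5 and 0.75 on a 6 × 6 box shifted by one pixel illustrates the threshold sensitivity discussed above.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def precision_recall_hmean(preds: List[Box], gts: List[Box],
                           thr: float = 0.5) -> Tuple[float, float, float]:
    """P = TP/(TP+FP), R = TP/(TP+FN), Hmean = 2PR/(P+R), one-to-one matching at IoU > thr."""
    candidates = sorted(
        ((iou(p, g), i, j) for i, p in enumerate(preds) for j, g in enumerate(gts)),
        reverse=True,
    )
    matched_p, matched_g = set(), set()
    for score, i, j in candidates:
        if score <= thr:
            break                                   # all remaining pairs have lower IoU
        if i not in matched_p and j not in matched_g:
            matched_p.add(i)
            matched_g.add(j)
    tp = len(matched_p)
    fp = len(preds) - tp
    fn = len(gts) - tp
    p = tp / (tp + fp) if preds else 0.0
    r = tp / (tp + fn) if gts else 0.0
    hmean = 2 * p * r / (p + r) if p + r > 0 else 0.0
    return p, r, hmean


if __name__ == "__main__":
    gts = [(0.0, 0.0, 6.0, 6.0), (10.0, 10.0, 16.0, 16.0)]
    preds = [(1.0, 0.0, 7.0, 6.0), (30.0, 30.0, 36.0, 36.0)]  # 1-px shift + one false alarm
    print(precision_recall_hmean(preds, gts, thr=0.5))   # shifted box counts (IoU is about 0.71)
    print(precision_recall_hmean(preds, gts, thr=0.75))  # the same box is now a miss
```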

2. Efficiency Metrics

The following metrics were used to evaluate the computational efficiency of the model:

Parameters (Params): the number of parameters in the model. A smaller parameter count indicates a lighter, more deployable model.

GFLOPs: the number of floating-point operations, in billions, required for one forward pass of the model. A lower GFLOPs value indicates reduced computational complexity and higher runtime efficiency.

Inference time: the time taken to process a single image. Shorter processing times are crucial for real-time applications.
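Per-image processing time is typically measured by averaging many forward passes after a few warm-up runs, so that one-time costs (memory allocation, kernel selection, cache effects) do not distort the figure. The sketch below shows that pattern with Python’s `time.perf_counter`; `infer` is a hypothetical stand-in for the detector’s forward pass, and the 100 ms default budget is the interactive target cited in the introduction.

```python
import time
from typing import Callable, Sequence


def mean_latency_ms(infer: Callable[[object], object], images: Sequence[object],
                    warmup: int = 5, runs: int = 50) -> float:
    """Average wall-clock time per image in milliseconds, excluding warm-up runs."""
    if not images:
        raise ValueError("need at least one image")
    for k in range(warmup):
        infer(images[k % len(images)])
    start = time.perf_counter()
    for k in range(runs):
        infer(images[k % len(images)])
    return (time.perf_counter() - start) * 1000.0 / runs


def meets_budget(latency_ms: float, budget_ms: float = 100.0) -> bool:
    """True if the measured per-image latency fits the interactive budget."""
    return latency_ms <= budget_ms


if __name__ == "__main__":
    dummy_images = [None] * 4
    fake_model = lambda img: sum(range(10_000))   # placeholder workload, not a detector
    ms = mean_latency_ms(fake_model, dummy_images)
    print(f"{ms:.3f} ms/image, within 100 ms: {meets_budget(ms)}")
```

When timing a model on a GPU, the device must be synchronized before the clock is read (for example with `torch.cuda.synchronize()` in PyTorch), because CUDA kernels launch asynchronously and the loop would otherwise measure only launch overhead.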

5. Discussion

5.1. Innovations and Limitations of the Algorithm

The technical breakthrough of this study lies in the proposal of a “lightweight enhancement” design paradigm: rather than relying on increased model complexity, the algorithm adapts to the characteristics of Braille recognition through module reconstruction. This design concept holds three key innovations:

The gating mechanism of C3k2_GBC simulates the “perceptual filtering” of biological touch, improving the response sensitivity to Braille dot matrices by a factor of three compared to conventional convolutions.

The subspace partitioning in ULSAM [19] addresses the issue of attention dispersion for small targets, improving computational efficiency by 40% compared with global attention modules.

The SDIoU loss [13], computed as in Equation (1), is optimized for the tiny size of Braille features. On the natural scene Braille dataset constructed by Lu et al. [8], it improves the regression accuracy of Braille bounding boxes by 15% over DIoU [13], especially for non-axis-aligned Braille, where the complete detection rate rises from 65% to 82%.

However, the algorithm still has some limitations: when Braille regions are heavily occluded (occlusion rate > 60%), the detection rate drops to 75%; the generalization ability to non-standard Braille (such as customized dot matrix arrangements) still needs validation; although the model has been made lightweight, its real-time performance on low-end mobile devices (e.g., smartphones with 2 GB of memory) requires further optimization.

5.2. Practical Applications and Future Expansion

The direct application of this algorithm is a portable Braille assistive system that uses a smartphone camera for real-time Braille detection, combined with OCR and TTS technologies to enable “image-text-speech” conversion, with end-to-end latency kept within 100 ms to meet the interactive needs of visually impaired individuals.

Future research can extend in three directions:

Incorporating infrared imaging to address extreme lighting conditions (e.g., strong glare or low light at night) is expected to improve the detection rate of low-light samples by an additional 5–8%. This multimodal strategy aligns with the findings of Sun et al. (2025), whose context-aware multimodal fusion framework demonstrated that sensor-augmented cross-modal learning significantly enhances system adaptability to dynamic environmental variations [21]. For our Braille detection task, fusing infrared images (which highlight Braille dots via temperature differences) with visible light images, drawing on the context-aware logic of the BLAF architecture, will further mitigate the extreme lighting challenges noted in Section 1.

Introducing meta-learning to allow the algorithm to quickly adapt to new types of Braille (e.g., simplified Braille used in special education).

As Arief et al. emphasized in their near-edge computing review [22], ‘FPGA-based acceleration is a cost-effective path to balancing efficiency and real-time performance for object detection algorithms on portable devices.’ We plan to accelerate the algorithm with FPGA-based implementations, aiming to reduce processing time to under 50 ms and thus meet the stringent requirements of real-time interaction.

Additionally, future work could benchmark the proposed algorithm against YOLOv12—the latest iteration of the YOLO framework—with a focus on validating whether our tailored modules (i.e., C3k2_GBC for weak feature extraction and ULSAM for subspace attention) maintain their performance advantages when transplanted to newer base architectures. Such a comparison would further clarify the generalizability of our design paradigm beyond the YOLOv11 backbone, while providing insights into how state-of-the-art object detectors adapt to ultra-small Braille targets in natural scenes.