Scoping Review of ML Approaches in Anxiety Detection from In-Lab to In-the-Wild

Abstract

1. Introduction

1.1. Defining Anxiety

1.2. Measuring Anxiety

i. Traditional Measures

ii. Behavioral Measures

iii. Physiological Measures

1.3. Inducing Anxiety

1.4. Detecting Anxiety

2. Review of Anxiety Detection Using ML

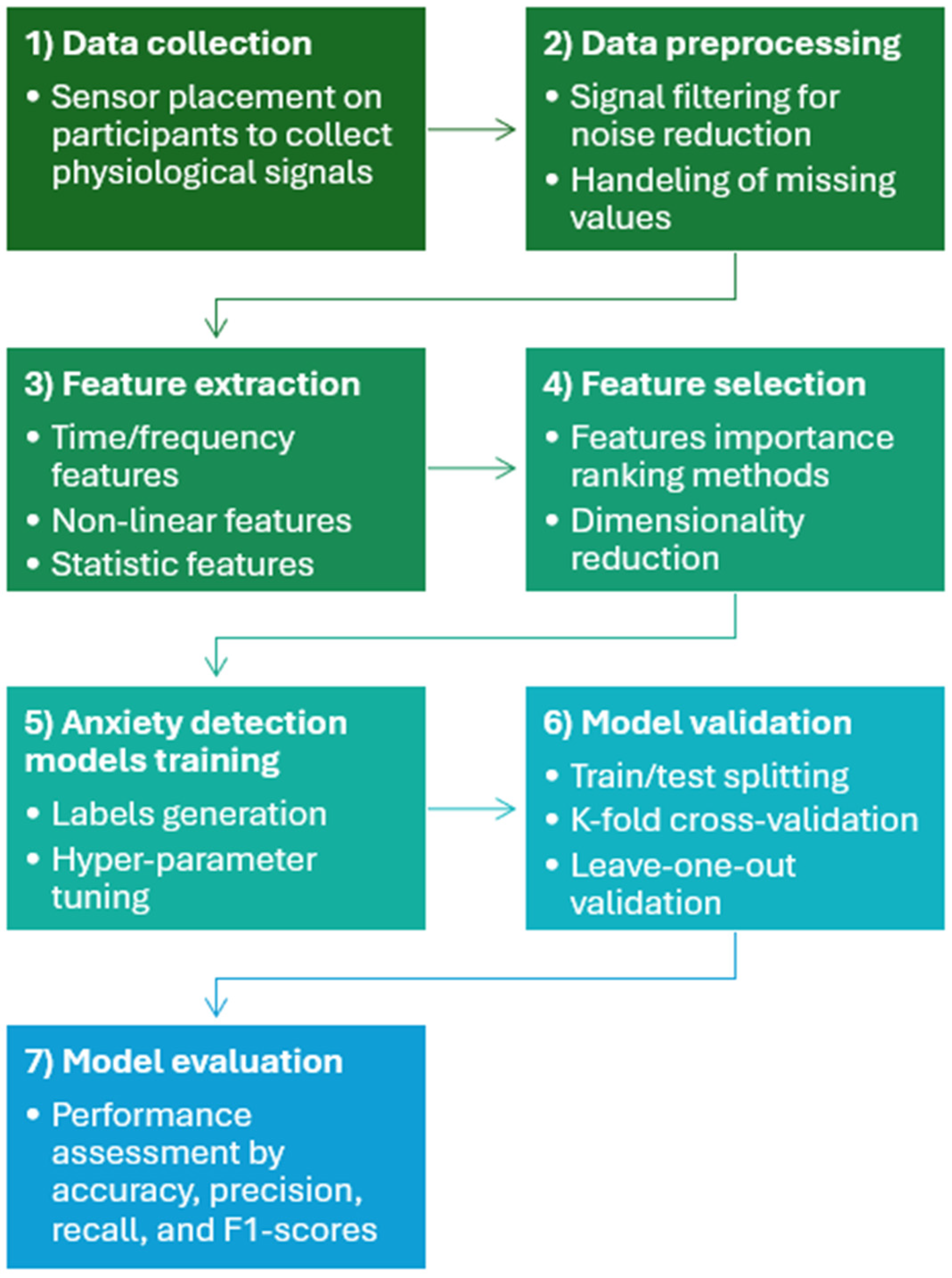

2.1. Methods

2.2. ML Models and Architectures

2.2.1. FB Models

2.2.2. E2E Models

3. Results

3.1. ML Techniques and Performances

3.2. Open Datasets for Anxiety Detection

3.3. Model Performances Based on Stressor Types

3.3.1. Social Stressors

3.3.2. Mental Stressors

3.3.3. Physical Stressors

3.3.4. Emotional Stressors

3.3.5. Driving Stressors

3.3.6. Daily-Life Stressors

4. Discussion

4.1. Summary

4.2. Key Observations

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| CASE | Continuously Annotated Signals of Emotion |
| CNN | Convolutional Neural Network |
| DT | Decision Tree |
| E2E | End-to-End |
| ECG | Electrocardiogram |
| EDA | Electrodermal Activity |
| EEG | Electroencephalogram |
| EMG | Electromyography |
| FB | Feature-Based |
| FCN | Fully Convolutional Neural Network |
| GCN | Graph Convolutional Network |
| HF | High Frequency |
| HPA | Hypothalamic–Pituitary–Adrenal axis |
| HRV | Heart Rate Variability |
| IAPS | International Affective Picture System |
| kNN | k-Nearest Neighbors |
| LDA | Linear Discriminant Analysis |
| LF | Low Frequency |
| LR | Linear Regression |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| MLP | Multilayer Perceptron |
| PPG | Photoplethysmography |
| RESP | Respiration |
| RF | Random Forest |
| RMSSD | Root Mean Square of Successive Differences |
| RNN | Recurrent Neural Network |
| SCWT | Stroop Color and Word Test |
| STAI | State–Trait Anxiety Inventory |
| SVM | Support Vector Machine |
| SWELL-KN | Smart Reasoning for Well-being at Home and at Work—Knowledge Work |
| TEMP | Temperature |
| TSST | Trier Social Stress Test |
| WESAD | Wearable Stress and Affect Detection |

References

- Manderscheid, R.W.; Ryff, C.D.; Freeman, E.J.; McKnight-Eily, L.R.; Dhingra, S.; Strine, T.W. Evolving Definitions of Mental Illness and Wellness. Prev. Chronic Dis. 2010, 7, A19. [Google Scholar]

- Salari, N.; Hosseinian-Far, A.; Jalali, R.; Vaisi-Raygani, A.; Rasoulpoor, S.; Mohammadi, M.; Rasoulpoor, S.; Khaledi-Paveh, B. Prevalence of Stress, Anxiety, Depression among the General Population during the COVID-19 Pandemic: A Systematic Review and Meta-Analysis. Glob. Health 2020, 16, 57. [Google Scholar] [CrossRef]

- Canals, J.; Voltas, N.; Hernández-Martínez, C.; Cosi, S.; Arija, V. Prevalence of DSM-5 Anxiety Disorders, Comorbidity, and Persistence of Symptoms in Spanish Early Adolescents. Eur. Child Adolesc. Psychiatry 2019, 28, 131–143. [Google Scholar] [CrossRef]

- Wittchen, H.U.; Jacobi, F.; Rehm, J.; Gustavsson, A.; Svensson, M.; Jönsson, B.; Olesen, J.; Allgulander, C.; Alonso, J.; Faravelli, C.; et al. The Size and Burden of Mental Disorders and Other Disorders of the Brain in Europe 2010. Eur. Neuropsychopharmacol. 2011, 21, 655–679. [Google Scholar] [CrossRef]

- Celano, C.M.; Daunis, D.J.; Lokko, H.N.; Campbell, K.A.; Huffman, J.C. Anxiety Disorders and Cardiovascular Disease. Curr. Psychiatry Rep. 2016, 18, 101. [Google Scholar] [CrossRef] [PubMed]

- Segerstrom, S.C.; Miller, G.E. Psychological Stress and the Human Immune System: A Meta-Analytic Study of 30 Years of Inquiry. Psychol. Bull. 2004, 130, 601–630. [Google Scholar] [CrossRef] [PubMed]

- Thomas, K.C.; Ellis, A.R.; Konrad, T.R.; Holzer, C.E.; Morrissey, J.P. County-Level Estimates of Mental Health Professional Shortage in the United States. Psychiatr. Serv. 2009, 60, 1323–1328. [Google Scholar] [CrossRef]

- Satiani, A.; Niedermier, J.; Satiani, B.; Svendsen, D.P. Projected Workforce of Psychiatrists in the United States: A Population Analysis. Psychiatr. Serv. 2018, 69, 710–713. [Google Scholar] [CrossRef] [PubMed]

- Althubaiti, A. Information Bias in Health Research: Definition, Pitfalls, and Adjustment Methods. J. Multidiscip. Healthc. 2016, 9, 211–217. [Google Scholar] [CrossRef]

- Julian, L.J. Measures of Anxiety. Arthritis Care 2011, 63, S467–S472. [Google Scholar] [CrossRef]

- Dziezyc, M.; Gjoreski, M.; Kazienko, P.; Saganowski, S.; Gams, M. Can We Ditch Feature Engineering? End-to-End Deep Learning for Affect Recognition from Physiological Sensor Data. Sensors 2020, 20, 6535. [Google Scholar] [CrossRef] [PubMed]

- Mentis, A.F.A.; Lee, D.; Roussos, P. Applications of Artificial Intelligence−machine Learning for Detection of Stress: A Critical Overview. Mol. Psychiatry 2023, 29, 1882–1894. [Google Scholar] [CrossRef]

- Spielberger, C.D. Theory and Research on Anxiety; Spielberger, C.D., Ed.; Academic Press Inc.: Oxford, UK, 1966; ISBN 9781483258362. [Google Scholar]

- Daviu, N.; Bruchas, M.R.; Moghaddam, B.; Sandi, C.; Beyeler, A. Neurobiological Links between Stress and Anxiety. Neurobiol. Stress 2019, 11, 100191. [Google Scholar] [CrossRef]

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders, 5th ed.; American Psychiatric Association: Washington, DC, USA, 2013. [Google Scholar]

- Spielberger, C.D. Notes and Comments Trait-State Anxiety and Motor Behavior. J. Mot. Behav. 1971, 3, 265–279. [Google Scholar] [CrossRef]

- Spielberger, C.D.; Gonzalez-Reigosa, F.; Martinez-Urrutia, A.; Natalicio, L.F.S.; Natalicio, D.S. The State-Trait Anxiety Inventory. Rev. Interam. Psicol. J. Psychol. 1971, 5, 3–4. [Google Scholar]

- Wiedemann, K. Anxiety and Anxiety Disorders. In International Encyclopedia of the Social & Behavioral Sciences; Elsevier: Amsterdam, The Netherlands, 2001; pp. 560–567. [Google Scholar]

- Duval, E.R.; Javanbakht, A.; Liberzon, I. Neural Circuits in Anxiety and Stress Disorders: A Focused Review. Ther. Clin. Risk Manag. 2015, 11, 115–126. [Google Scholar] [CrossRef]

- Ding, Y.; Cao, Y.; Duffy, V.G.; Wang, Y.; Zhang, X. Measurement and Identification of Mental Workload during Simulated Computer Tasks with Multimodal Methods and Machine Learning. Ergonomics 2020, 63, 896–908. [Google Scholar] [CrossRef] [PubMed]

- Mozos, O.M.; Sandulescu, V.; Andrews, S.; Ellis, D.; Bellotto, N.; Dobrescu, R.; Ferrandez, J.M. Stress Detection Using Wearable Physiological and Sociometric Sensors. Int. J. Neural Syst. 2017, 27, 1650041. [Google Scholar] [CrossRef]

- Sandulescu, V.; Dobrescu, R. Wearable System for Stress Monitoring of Firefighters in Special Missions. In Proceedings of the 2015 E-Health and Bioengineering Conference, EHB 2015, Iasi, Romania, 19–21 November 2015; pp. 1–4. [Google Scholar]

- Schmidt, P.; Dürichen, R.; Reiss, A.; Van Laerhoven, K.; Plötz, T. Multi-Target Affect Detection in the Wild: An Exploratory Study. In Proceedings of the Proceedings-International Symposium on Wearable Computers, ISWC, London, UK, 9–13 September 2019; pp. 211–219. [Google Scholar]

- Vaz, M.; Summavielle, T.; Sebastião, R.; Ribeiro, R.P. Multimodal Classification of Anxiety Based on Physiological Signals. Appl. Sci. 2023, 13, 6368. [Google Scholar] [CrossRef]

- Jiao, Y.; Wang, X.; Liu, C.; Du, G.; Zhao, L.; Dong, H.; Zhao, S.; Liu, Y. Feasibility Study for Detection of Mental Stress and Depression Using Pulse Rate Variability Metrics via Various Durations. Biomed. Signal Process. Control 2023, 79, 104145. [Google Scholar] [CrossRef]

- Bystritsky, A.; Kronemyer, D. Stress and Anxiety: Counterpart Elements of the Stress/Anxiety Complex. Psychiatr. Clin. N. Am. 2014, 37, 489–518. [Google Scholar] [CrossRef]

- Taschereau-Dumouchel, V.; Michel, M.; Lau, H.; Hofmann, S.G.; LeDoux, J.E. Putting the “Mental” Back in “Mental Disorders”: A Perspective from Research on Fear and Anxiety. Mol. Psychiatry 2022, 27, 1322–1330. [Google Scholar] [CrossRef]

- Beck, A.T.; Epstein, N.; Brown, G.; Steer, R.A. An Inventory for Measuring Clinical Anxiety: Psychometric Properties. J. Consult. Clin. Psychol. 1988, 56, 893–897. [Google Scholar] [CrossRef] [PubMed]

- Demetriou, C.; Ozer, B.U.; Essau, C.A. Self-Report Questionnaires. Encycl. Clin. Psychol. 2015, 1–6. [Google Scholar] [CrossRef]

- Arikian, S.R.; German, J.M. A Review of the Diagnosis, Pharmacologie Treatment, and Economic Aspects of Anxiety Disorders. Prim. Care Companion J. Clin. Psychiatry 2001, 3, 110–117. [Google Scholar] [CrossRef]

- Weinberger, D.A.; Schwartz, G.E.; Davidson, R.J. Low-Anxious, High-Anxious, and Repressive Coping Styles: Psychometric Patterns and Behavioral and Physiological Responses to Stress. J. Abnorm. Psychol. 1979, 88, 369–380. [Google Scholar]

- Kaplan, R.; Saccuzzo, D. Psychological Testing: Principles, Applications, and Issues; Cengage Learning: Boston, MA, USA, 1982; ISBN 9781337517065. [Google Scholar]

- Ancillon, L.; Elgendi, M.; Menon, C. Machine Learning for Anxiety Detection Using Biosignals: A Review. Diagnostics 2022, 12, 1794. [Google Scholar] [CrossRef] [PubMed]

- Kim, H.G.; Cheon, E.J.; Bai, D.S.; Lee, Y.H.; Koo, B.H. Stress and Heart Rate Variability: A Meta-Analysis and Review of the Literature. Psychiatry Investig. 2018, 15, 235–245. [Google Scholar] [CrossRef]

- Giannakakis, G.; Grigoriadis, D.; Giannakaki, K.; Simantiraki, O.; Roniotis, A.; Tsiknakis, M. Review on Psychological Stress Detection Using Biosignals. IEEE Trans. Affect. Comput. 2022, 13, 440–460. [Google Scholar] [CrossRef]

- Merletti, R.; Aventaggiato, M.; Botter, A.; Holobar, A.; Marateb, H.; Vieira, T.M.M. Advances in Surface EMG: Recent Progress in Detection and Processing Techniques. Crit. Rev. Biomed. Eng. 2010, 38, 305–345. [Google Scholar] [CrossRef]

- Hidaka, O.; Yanagi, M.; Takada, K. Mental Stress-Induced Physiological Changes in the Human Masseter Muscle. J. Dent. Res. 2004, 83, 227–231. [Google Scholar] [CrossRef] [PubMed]

- Wijsman, J.; Grundlehner, B.; Penders, J.; Hermens, H. Trapezius Muscle EMG as Predictor of Mental Stress. Trans. Embed. Comput. Syst. 2013, 12, 1–20. [Google Scholar] [CrossRef]

- Tsai, C.M.; Chou, S.L.; Gale, E.N.; Mccall, W.D. Human Masticatory Muscle Activity and Jaw Position under Experimental Stress. J. Oral Rehabil. 2002, 29, 44–51. [Google Scholar] [CrossRef]

- Turpin, G.; Grandfield, T. Electrodermal Activity. Encycl. Stress 2007, 899–902. [Google Scholar] [CrossRef]

- Ren, P.; Barreto, A.; Huang, J.; Gao, Y.; Ortega, F.R.; Adjouadi, M. Off-Line and on-Line Stress Detection through Processing of the Pupil Diameter Signal. Ann. Biomed. Eng. 2014, 42, 162–176. [Google Scholar] [CrossRef]

- Palanisamy, K.; Murugappan, M.; Sazali, Y. Descriptive Analysis of Skin Temperature Variability of Sympathetic Nervous System Activity in Stress. J. Phys. Ther. Sci. 2012, 24, 1341–1344. [Google Scholar] [CrossRef]

- Long, N.; Lei, Y.; Peng, L.; Xu, P.; Mao, P. A Scoping Review on Monitoring Mental Health Using Smart Wearable Devices. Math. Biosci. Eng. 2022, 19, 7899–7919. [Google Scholar] [CrossRef]

- Gedam, S.; Paul, S. A Review on Mental Stress Detection Using Wearable Sensors and Machine Learning Techniques. IEEE Access 2021, 9, 84045–84066. [Google Scholar] [CrossRef]

- Vizer, L.M.; Zhou, L.; Sears, A. Automated Stress Detection Using Keystroke and Linguistic Features: An Exploratory Study. Int. J. Hum. Comput. Stud. 2009, 67, 870–886. [Google Scholar] [CrossRef]

- Allen, A.P.; Kennedy, P.J.; Dockray, S.; Cryan, J.F.; Dinan, T.G.; Clarke, G. The Trier Social Stress Test: Principles and Practice. Neurobiol. Stress 2017, 6, 113–126. [Google Scholar] [CrossRef]

- Scarpina, F.; Tagini, S. The Stroop Color and Word Test. Front. Psychol. 2017, 8, 557. [Google Scholar] [CrossRef]

- Tulen, J.H.M.; Moleman, P.; van Steenis, H.G.; Boomsma, F. Characterization of Stress Reactions to the Stroop Color Word Test. Pharmacol. Biochem. Behav. 1989, 32, 9–15. [Google Scholar] [CrossRef]

- Beh, W.-K.; Wu, Y.-H.; Wu, A.-Y. MAUS: A Dataset for Mental Workload Assessmenton N-Back Task Using Wearable Sensor. arXiv 2021, arXiv:2111.02561. [Google Scholar] [CrossRef]

- Lovallo, W. The Cold Pressor Test and Autonomic Function: A Review and Integration. Psychophysiology 1975, 12, 268–282. [Google Scholar] [CrossRef]

- Lang, P.J.; Bradley, M.M.; Cuthbert, B.N. International Affective Picture System (IAPS): Technical Manual and Affective Ratings. NIMH Cent. Study Emot. Atten. 1997, 39–58. Available online: https://acordo.net/acordo/wp-content/uploads/2020/08/instructions.pdf (accessed on 8 September 2025).

- Magaña, V.C.; Pañeda, X.G.; Garcia, R.; Paiva, S.; Pozueco, L. Beside and behind the Wheel: Factors That Influence Driving Stress and Driving Behavior. Sustainability 2021, 13, 4775. [Google Scholar] [CrossRef]

- Chung, W.-Y.; Chong, T.-W.; Lee, B.-G. Methods to Detect and Reduce Driver Stress: A Review. Int. J. Automot. Technol. 2019, 20, 1051–1063. [Google Scholar] [CrossRef]

- Pasha, S.T.; Halder, N.; Badrul, T.; Setu, J.H.; Islam, A.; Alam, M.Z. Physiological Signal Data-Driven Workplace Stress Detection Among Healthcare Professionals Using BiLSTM-AM and Ensemble Stacking Models. In Proceedings of the Advances in Science and Engineering Technology International Conferences ASET, Abu Dhabi, United Arab Emirates, 3–5 June 2024; pp. 1–10. [Google Scholar] [CrossRef]

- Gjoreski, M.; Luštrek, M.; Gams, M.; Gjoreski, H. Monitoring Stress with a Wrist Device Using Context. J. Biomed. Inform. 2017, 73, 159–170. [Google Scholar] [CrossRef] [PubMed]

- Chandra, V.; Sethia, D. Machine Learning-Based Stress Classification System Using Wearable Sensor Devices. IAES Int. J. Artif. Intell. 2024, 13, 337–347. [Google Scholar] [CrossRef]

- Ahmad, Z.; Rabbani, S.; Zafar, M.R.; Ishaque, S.; Krishnan, S.; Khan, N. Multilevel Stress Assessment from ECG in a Virtual Reality Environment Using Multimodal Fusion. IEEE Sens. J. 2023, 23, 29559–29570. [Google Scholar] [CrossRef]

- Agarwal, S.; Sharma, S.; Faisal, K.N.; Sharma, R.R. Induced Stress Identification Using EEG: A Framework Based on MVMD and Machine Learning. In Proceedings of the 2024 IEEE International Students’ Conference on Electrical, Electronics and Computer Science SCEECS, Bhopal, India, 24–25 February 2024; pp. 1–5. [Google Scholar] [CrossRef]

- Akella, A.; Singh, A.K.; Leong, D.; Lal, S.; Newton, P.; Clifton-Bligh, R.; McLachlan, C.S.; Gustin, S.M.; Maharaj, S.; Lees, T.; et al. Classifying Multi-Level Stress Responses from Brain Cortical EEG in Nurses and Non-Health Professionals Using Machine Learning Auto Encoder. IEEE J. Transl. Eng. Health Med. 2021, 9, 1–9. [Google Scholar] [CrossRef]

- Abd Al-Alim, M.; Mubarak, R.; Salem, N.M.; Sadek, I. A Machine-Learning Approach for Stress Detection Using Wearable Sensors in Free-Living Environments. Comput. Biol. Med. 2024, 179, 108918. [Google Scholar] [CrossRef]

- AlShorman, O.; Masadeh, M.; Heyat, M.B.B.; Akhtar, F.; Almahasneh, H.; Ashraf, G.M.; Alexiou, A. Frontal Lobe Real-Time EEG Analysis Using Machine Learning Techniques for Mental Stress Detection. J. Integr. Neurosci. 2022, 21, 20. [Google Scholar] [CrossRef]

- Arya, L.; Chowdhary, H.; Agrawal, I.; Sreedevi, I. Towards Accurate Stress Classification: Combining Advanced Feature Selection and Deep Learning. In Proceedings of the 2023 3rd IEEE International Conference on Software Engineering and Artificial Intelligence, SEAI 2023, Xiamen, China, 16–18 June 2023; pp. 47–52. [Google Scholar]

- Badr, Y.; Al-Shargie, F.; Tariq, U.; Babiloni, F.; Al Mughairbi, F.; Al-Nashash, H. Classification of Mental Stress Using Dry EEG Electrodes and Machine Learning. In Proceedings of the 2023 Advances in Science and Engineering Technology International Conferences, ASET 2023, Dubai, United Arab Emirates, 20–23 February 2023. [Google Scholar]

- Badr, Y.; Al-Shargie, F.; Tariq, U.; Babiloni, F.; Al-Mughairbi, F.; Al-Nashash, H. Mental Stress Detection and Mitigation Using Machine Learning and Binaural Beat Stimulation. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–5. [Google Scholar] [CrossRef]

- Bahameish, M.; Stockman, T.; Requena Carrión, J. Strategies for Reliable Stress Recognition: A Machine Learning Approach Using Heart Rate Variability Features. Sensors 2024, 24, 3210. [Google Scholar] [CrossRef] [PubMed]

- Beh, W.-K.; Wu, Y.-H.; Wu, A.-Y. Robust PPG-Based Mental Workload Assessment System Using Wearable Devices. IEEE J. Biomed. Health Inform. 2023, 27, 2323–2333. [Google Scholar] [CrossRef]

- Bobade, P.; Vani, M. Stress Detection with Machine Learning and Deep Learning Using Multimodal Physiological Data. In Proceedings of the 2020 Second International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 15–17 July 2020; pp. 51–57. [Google Scholar] [CrossRef]

- Campanella, S.; Altaleb, A.; Belli, A.; Pierleoni, P.; Palma, L. A Method for Stress Detection Using Empatica E4 Bracelet and Machine-Learning Techniques. Sensors 2023, 23, 3565. [Google Scholar] [CrossRef]

- Cui, Z.; Ma, Y.; Ma, M.; Huang, R.; Du, B. Towards a Lightweight Stress Prediction Model: A Study on Dimension Reduction and Individual Models in HRV Analysis. In Proceedings of the 2023 IEEE 29th International Conference on Parallel and Distributed Systems (ICPADS), Ocean Flower Island, China, 17–21 December 2023; pp. 1709–1716. [Google Scholar] [CrossRef]

- Cruz, A.P.; Pradeep, A.; Sivasankar, K.R.; Krishnaveni, K. A Decision Tree Optimised SVM Model for Stress Detection Using Biosignals. In Proceedings of the 2020 International Conference on Communication and Signal Processing (ICCSP), Chennai, India, 28–30 July 2020; pp. 841–845. [Google Scholar]

- Dalmeida, K.M.; Masala, G.L. Hrv Features as Viable Physiological Markers for Stress Detection Using Wearable Devices. Sensors 2021, 21, 2873. [Google Scholar] [CrossRef]

- Delmastro, F.; Di Martino, F.; Dolciotti, C. Cognitive Training and Stress Detection in MCI Frail Older People through Wearable Sensors and Machine Learning. IEEE Access 2020, 8, 65573–65590. [Google Scholar] [CrossRef]

- Erkus, E.C.; Purutcuoglu, V.; Ari, F.; Gokcay, D. Comparison of Several Machine Learning Classifiers for Arousal Classification: A Preliminary Study. In Proceedings of the 2020 Medical Technologies Congress (TIPTEKNO), Antalya, Turkey, 19–20 November 2020; pp. 1–7. [Google Scholar]

- Fernandez, J.; Martínez, R.; Innocenti, B.; López, B. Contribution of EEG Signals for Students’ Stress Detection. IEEE Trans. Affect. Comput. 2025, 16, 1235–1246. [Google Scholar] [CrossRef]

- Giannakakis, G.; Pediaditis, M.; Manousos, D.; Kazantzaki, E.; Chiarugi, F.; Simos, P.G.; Marias, K.; Tsiknakis, M. Stress and Anxiety Detection Using Facial Cues from Videos. Biomed. Signal Process. Control 2017, 31, 89–101. [Google Scholar] [CrossRef]

- Hag, A.; Al-Shargie, F.; Handayani, D.; Asadi, H. Mental Stress Classification Based on Selected Electroencephalography Channels Using Correlation Coefficient of Hjorth Parameters. Brain Sci. 2023, 13, 1340. [Google Scholar] [CrossRef]

- Han, H.J.; Labbaf, S.; Borelli, J.L.; Dutt, N.; Rahmani, A.M. Objective Stress Monitoring Based on Wearable Sensors in Everyday Settings. J. Med. Eng. Technol. 2020, 44, 177–189. [Google Scholar] [CrossRef]

- Henry, J.; Lloyd, H.; Turner, M.; Kendrick, C. On the Robustness of Machine Learning Models for Stress and Anxiety Recognition from Heart Activity Signals. IEEE Sens. J. 2023, 23, 14428–14436. [Google Scholar] [CrossRef]

- Jahanjoo, A.; Taherinejad, N.; Aminifar, A. High-Accuracy Stress Detection Using Wrist-Worn PPG Sensors. In Proceedings of the 2024 IEEE International Symposium on Circuits and Systems (ISCAS), Singapore, 19–22 May 2024; pp. 1–5. [Google Scholar] [CrossRef]

- Abdul Kader, L.; Al-Shargie, F.; Tariq, U.; Al-Nashash, H. One-Channel Wearable Mental Stress State Monitoring System. Sensors 2024, 24, 5373. [Google Scholar] [CrossRef]

- Kalra, P.; Sharma, V. Mental Stress Assessment Using PPG Signal a Deep Neural Network Approach. IETE J. Res. 2023, 69, 879–885. [Google Scholar] [CrossRef]

- Kim, N.; Seo, W.; Kim, S.; Park, S.M. Electrogastrogram: Demonstrating Feasibility in Mental Stress Assessment Using Sensor Fusion. IEEE Sens. J. 2021, 21, 14503–14514. [Google Scholar] [CrossRef]

- Kim, H.; Kim, M.; Park, K.; Kim, J.; Yoon, D.; Kim, W.; Park, C.H. Machine Learning-Based Classification Analysis of Knowledge Worker Mental Stress. Front. Public Health 2023, 11, 1302794. [Google Scholar] [CrossRef]

- Kim, N.; Lee, S.; Kim, J.; Choi, S.Y.; Park, S.M. Shuffled ECA-Net for Stress Detection from Multimodal Wearable Sensor Data. Comput. Biol. Med. 2024, 183, 109217. [Google Scholar] [CrossRef]

- Konar, D.; De, S.; Mukherjee, P.; Roy, A.H. A Novel Human Stress Level Detection Technique Using EEG. In Proceedings of the 2023 International Conference on Network, Multimedia and Information Technology (NMITCON), Bengaluru, India, 1–2 September 2023; pp. 1–6. [Google Scholar] [CrossRef]

- Kurniawan, H.; Maslov, A.V.; Pechenizkiy, M. Stress Detection from Speech and Galvanic Skin Response Signals. In Proceedings of the CBMS 2013—26th IEEE International Symposium on Computer-Based Medical Systems, Porto, Portugal, 20–22 June 2013; pp. 209–214. [Google Scholar]

- Lingelbach, K.; Bui, M.; Diederichs, F.; Vukelic, M. Exploring Conventional, Automated and Deep Machine Learning for Electrodermal Activity-Based Drivers’ Stress Recognition. In Proceedings of the 2021 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Melbourne, Australia, 17–20 October 2021; pp. 1339–1344. [Google Scholar]

- Liu, Y.; Li, H.; Wang, J.; Zhang, H.; Zheng, X. Psychological Stress Detection Based on Heart Rate Variability. In Proceedings of the International Conference on Electronic Information Engineering and Computer Science (EIECS 2022), Changchun, China, 16–18 September 2022; Yue, Y., Ed.; SPIE: Cergy, France, 2023; Volume 12602, p. 150. [Google Scholar]

- Mamdouh, M.; Mahmoud, R.; Attallah, O.; Al-Kabbany, A. Stress Detection in the Wild: On the Impact of Cross-Training on Mental State Detection. In Proceedings of the 2023 40th National Radio Science Conference (NRSC), Giza, Egypt, 30 May–1 June 2023; pp. 150–158. [Google Scholar] [CrossRef]

- Marthinsen, A.J.; Galtung, I.T.; Cheema, A.; Sletten, C.M.; Andreassen, I.M.; Sletta, Ø.; Soler, A.; Molinas, M. Psychological Stress Detection with Optimally Selected EEG Channel Using Machine Learning Techniques. CEUR Workshop Proc. 2023, 3576, 53–68. [Google Scholar]

- Mevlevioğlu, D.; Tabirca, S.; Murphy, D. Real-Time Classification of Anxiety in Virtual Reality Therapy Using Biosensors and a Convolutional Neural Network. Biosensors 2024, 14, 131. [Google Scholar] [CrossRef]

- Meteier, Q.; Capallera, M.; Ruffieux, S.; Angelini, L.; Abou Khaled, O.; Mugellini, E.; Widmer, M.; Sonderegger, A. Classification of Drivers’ Workload Using Physiological Signals in Conditional Automation. Front. Psychol. 2021, 12, 596038. [Google Scholar] [CrossRef] [PubMed]

- Meteier, Q.; De Salis, E.; Capallera, M.; Widmer, M.; Angelini, L.; Abou Khaled, O.; Sonderegger, A.; Mugellini, E. Relevant Physiological Indicators for Assessing Workload in Conditionally Automated Driving, Through Three-Class Classification and Regression. Front. Comput. Sci. 2022, 3, 775282. [Google Scholar] [CrossRef]

- Meteier, Q.; Capallera, M.; de Salis, E.; Angelini, L.; Carrino, S.; Widmer, M.; Abou Khaled, O.; Mugellini, E.; Sonderegger, A. A Dataset on the Physiological State and Behavior of Drivers in Conditionally Automated Driving. Data Br. 2023, 47, 109027. [Google Scholar] [CrossRef]

- Naegelin, M.; Weibel, R.P.; Kerr, J.I.; Schinazi, V.R.; La Marca, R.; von Wangenheim, F.; Hoelscher, C.; Ferrario, A. An Interpretable Machine Learning Approach to Multimodal Stress Detection in a Simulated Office Environment. J. Biomed. Inform. 2023, 139, 104299. [Google Scholar] [CrossRef] [PubMed]

- Plarre, K.; Raij, A.; Hossain, S.M.; Ali, A.A.; Nakajima, M.; Al’Absi, M.; Ertin, E.; Kamarck, T.; Kumar, S.; Scott, M.; et al. Continuous Inference of Psychological Stress from Sensory Measurements Collected in the Natural Environment. In Proceedings of the 10th ACM/IEEE International Conference on Information Processing in Sensor Networks, IPSN’11, Chicago, IL, USA, 12–14 April 2011; pp. 97–108. [Google Scholar]

- Rajendran, V.G.; Jayalalitha, S.; Adalarasu, K.; Thalamalaichamy, M. Analysis and Classification of EEG Data When Playing Video Games and Relax Using EEG Biomarkers. AIP Conf. Proc. 2024, 3180, 040002. [Google Scholar] [CrossRef]

- Sandulescu, V.; Andrews, S.; Ellis, D.; Bellotto, N.; Mozos, O.M. Stress Detection Using Wearable Physiological Sensors. Lect. Notes Comput. Sci. (Incl. Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinform.) 2015, 9107, 526–532. [Google Scholar]

- Setz, C.; Arnrich, B.; Schumm, J.; La Marca, R.; Tr, G.; Ehlert, U. Discriminating Stress From Cognitive Load Using a Wearable EDA Device. Technology 2010, 14, 410–417. [Google Scholar] [CrossRef]

- Sharisha Shanbhog, M.; Medikonda, J.; Rai, S. Unsupervised Machine Learning Approach for Stress Level Classification Using Electrodermal Activity Signals. In Proceedings of the 2024 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 12–14 July 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Shaposhnyk, O.; Yanushkevich, S.; Babenko, V.; Chernykh, M.; Nastenko, I. Inferring Cognitive Load Level from Physiological and Personality Traits. In Proceedings of the 2023 International Conference on Information and Digital Technologies (IDT), Zilina, Slovakia, 20–22 June 2023; pp. 233–242. [Google Scholar]

- Siam, A.I.; Gamel, S.A.; Talaat, F.M. Automatic Stress Detection in Car Drivers Based on Non-Invasive Physiological Signals Using Machine Learning Techniques. Neural Comput. Appl. 2023, 35, 12891–12904. [Google Scholar] [CrossRef]

- Silva, E.; Aguiar, J.; Reis, L.P.; Sá, J.O.E.; Gonçalves, J.; Carvalho, V. Stress among Portuguese Medical Students: The EuStress Solution. J. Med. Syst. 2020, 44, 45. [Google Scholar] [CrossRef]

- Souchet, A.D.; Lamarana Diallo, M.; Lourdeaux, D. Acute Stress Classification with a Stroop Task and In-Office Biophilic Relaxation in Virtual Reality Based on Behavioral and Physiological Data. In Proceedings of the 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Sydney, Australia, 16–20 October 2023; pp. 537–542. [Google Scholar] [CrossRef]

- Subhani, A.R.; Mumtaz, W.; Saad, M.N.B.M.; Kamel, N.; Malik, A.S. Machine Learning Framework for the Detection of Mental Stress at Multiple Levels. IEEE Access 2017, 5, 13545–13556. [Google Scholar] [CrossRef]

- Swapnil, S.S.; Nuhi-Alamin, M.; Rahman, K.M.; Sarkar, A.K.; Siam, M.Z.H. An Ensemble Approach to Classify Mental Stress Using EEG Based Time-Frequency and Non-Linear Features. In Proceedings of the 2024 3rd International Conference on Advancement in Electrical and Electronic Engineering (ICAEEE), Gazipur, Bangladesh, 25–27 April 2024; pp. 1–6. [Google Scholar] [CrossRef]

- Toshnazarov, K.; Lee, U.; Kim, B.H.; Mishra, V.; Najarro, L.A.C.; Noh, Y. SOSW: Stress Sensing with Off-the-Shelf Smartwatches in the Wild. IEEE Internet Things J. 2024, 11, 21527–21545. [Google Scholar] [CrossRef]

- Troyee, T.G.; Chowdhury, M.H.; Khondakar, M.F.K.; Hasan, M.; Hossain, M.A.; Hossain, Q.D.; Ali Akber Dewan, M. Stress Detection and Audio-Visual Stimuli Classification from Electroencephalogram. IEEE Access 2024, 12, 145417–145427. [Google Scholar] [CrossRef]

- Troyee, T.G.; Karim Khondakar, M.F.; Hasan, M.; Chowdhury, M.H. A Comparative Analysis of Different Preprocessing Pipelines for EEG-Based Mental Stress Detection. In Proceedings of the 2024 6th International Conference on Electrical Engineering and Information & Communication Technology (ICEEICT), Dhaka, Bangladesh, 2–4 May 2024; pp. 370–375. [Google Scholar] [CrossRef]

- Xing, M.; Fitzgerald, J.M.; Klumpp, H. Classification of Social Anxiety Disorder With Support Vector Machine Analysis Using Neural Correlates of Social Signals of Threat. Front. Psychiatry 2020, 11, 144. [Google Scholar] [CrossRef] [PubMed]

- Zhu, L.; Spachos, P.; Ng, P.C.; Yu, Y.; Wang, Y.; Plataniotis, K.; Hatzinakos, D. Stress Detection Through Wrist-Based Electrodermal Activity Monitoring and Machine Learning. IEEE J. Biomed. Health Inform. 2023, 27, 2155–2165. [Google Scholar] [CrossRef]

- Benchekroun, M.; Chevallier, B.; Beaouiss, H.; Istrate, D.; Zalc, V.; Khalil, M.; Lenne, D. Comparison of Stress Detection through ECG and PPG Signals Using a Random Forest-Based Algorithm. In Proceedings of the 2022 44th annual international conference of the IEEE engineering in medicine & Biology society (EMBC), Glasgow, UK, 11–15 July 2022; pp. 3150–3153. [Google Scholar] [CrossRef]

- Chauhan, A.R.; Akhil; Kumar, S. Analysing Effectiveness of Different Physiological Biomarkers in Detecting Stress. In Proceedings of the 2023 IEEE World Conference on Applied Intelligence and Computing (AIC), Sonbhadra, India, 29–30 July 2023; pp. 71–75. [Google Scholar] [CrossRef]

- Dahal, K.; Bogue-Jimenez, B.; Doblas, A. Global Stress Detection Framework Combining a Reduced Set of HRV Features and Random Forest Model. Sensors 2023, 23, 5220. [Google Scholar] [CrossRef]

- Gazi, A.H.; Lis, P.; Mohseni, A.; Ompi, C.; Giuste, F.O.; Shi, W.; Inan, O.T.; Wang, M.D. Respiratory Markers Significantly Enhance Anxiety Detection Using Multimodal Physiological Sensing. In Proceedings of the 2021 IEEE EMBS International Conference on Biomedical and Health Informatics (BHI), Athens, Greece, 27–30 July 2021; pp. 1–4. [Google Scholar]

- Gjoreski, M.; Gjoreski, H.; Luštrek, M.; Gams, M. Continuous Stress Detection Using a Wrist Device-in Laboratory and Real Life. In Proceedings of the UbiComp 2016 Adjunct—Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; pp. 1185–1193. [Google Scholar]

- Iyer, G.G.; Udhayakumar, R.; Gopakumar, S.; Karmakar, C. Optimizing Temporal Segmentation of Multi-Modal Non-EEG Signals for Human Stress Analysis. In Proceedings of the 2024 IEEE International Conference on Artificial Intelligence in Engineering and Technology (IICAIET), Kota Kinabalu, Malaysia, 26–28 August 2024; pp. 494–499. [Google Scholar] [CrossRef]

- Morshed, M.B.; Rahman, M.M.; Nathan, V.; Zhu, L.; Bae, J.; Rosa, C.; Mendes, W.B.; Kuang, J.; Gao, A. Core Body Temperature and Its Role in Detecting Acute Stress: A Feasibility Study. In Proceedings of the ICASSP 2024-2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; pp. 1606–1610. [Google Scholar] [CrossRef]

- Pinge, A.; Bandyopadhyay, S.; Ghosh, S.; Sen, S. A Comparative Study between ECG-Based and PPG-Based Heart Rate Monitors for Stress Detection. In Proceedings of the 2022 14th International Conference on COMmunication Systems & NETworkS (COMSNETS), Bangalore, India, 4–8 January 2022; pp. 84–89. [Google Scholar] [CrossRef]

- Quadir, M.A.; Bhardwaj, S.; Verma, N.; Sivaraman, A.K.; Tee, K.F. IoT-Based Mental Health Monitoring System Using Machine Learning Stress Prediction Algorithm in Real-Time Application. Lect. Notes Electr. Eng. 2023, 1021 LNEE, 249–263. [Google Scholar] [CrossRef]

- Rashid, N.; Mortlock, T.; Faruque, M.A. Al Stress Detection Using Context-Aware Sensor Fusion from Wearable Devices. IEEE Internet Things J. 2023, 10, 14114–14127. [Google Scholar] [CrossRef]

- Schmidt, P.; Reiss, A.; Duerichen, R.; Van Laerhoven, K. Introducing WESAD, a Multimodal Dataset for Wearable Stress and Affect Detection. In Proceedings of the 20th ACM International Conference on Multimodal Interaction, Boulder, CO, USA, 16–20 October 2018; pp. 400–408. [Google Scholar] [CrossRef]

- Karthikeyan, P.; Murugappan, M.; Yaacob, S. EMG Signal Based Human Stress Level Classification Using Wavelet Packet Transform. In Communications in Computer and Information Science; Springer: Berlin/Heidelberg, Germany, 2012; Volume 330, pp. 236–243. ISBN 9783642351969.

- Mazlan, M.R.B.; Sukor, A.S.B.A.; Adom, A.H.B.; Jamaluddin, R.B.; Awang, S.A.B. Investigation of Different Classifiers for Stress Level Classification Using PCA-Based Machine Learning Method. In Proceedings of the 2023 19th IEEE International Colloquium on Signal Processing & Its Applications (CSPA), Kedah, Malaysia, 3–4 March 2023; pp. 168–173.

- Wijsman, J.; Grundlehner, B.; Liu, H.; Hermens, H.; Penders, J. Towards Mental Stress Detection Using Wearable Physiological Sensors. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBS, Boston, MA, USA, 30 August–3 September 2011; pp. 1798–1801.

- Kader, L.A.; Yahya, F.; Tariq, U.; Al-Nashash, H. Mental Stress Assessment Using Low in Cost Single Channel EEG System. In Proceedings of the 2023 Advances in Science and Engineering Technology International Conferences, ASET 2023, Dubai, United Arab Emirates, 20–23 February 2023.

- Jain, A.; Kumar, R. Machine Learning Based Anxiety Detection Using Physiological Signals and Context Features. In Proceedings of the 2024 2nd International Conference on Advancement in Computation & Computer Technologies (InCACCT), Gharuan, India, 2–3 May 2024; pp. 116–121.

- Sim, D.Y.Y.; Chong, C.K. Effects of Dimension Reduction Methods on Boosting Algorithms for Better Prediction Accuracies on Classifications of Stress EEGs. In Proceedings of the 2023 6th International Conference on Electronics and Electrical Engineering Technology (EEET), Nanjing, China, 1–3 December 2023; pp. 49–54.

- Choi, J.; Ahmed, B.; Gutierrez-Osuna, R. Development and Evaluation of an Ambulatory Stress Monitor Based on Wearable Sensors. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 279–286.

- Mozafari, M.; Goubran, R.; Green, J.R. A Fusion Model for Cross-Subject Stress Level Detection Based on Transfer Learning. In Proceedings of the 2021 IEEE Sensors Applications Symposium, SAS 2021-Proceedings, Sundsvall, Sweden, 23–25 August 2021; pp. 1–6.

- Masrur, N.; Halder, N.; Rashid, S.; Setu, J.H.; Islam, A.; Ahmed, T. Performance Analysis of Ensemble and DNN Models for Decoding Mental Stress Utilizing ECG-Based Wearable Data Fusion. In Proceedings of the 2024 IEEE International Black Sea Conference on Communications and Networking (BlackSeaCom), Tbilisi, Georgia, 24–27 June 2024; pp. 276–279.

- Adarsh, V.; Gangadharan, G.R. Mental Stress Detection from Ultra-Short Heart Rate Variability Using Explainable Graph Convolutional Network with Network Pruning and Quantisation. Mach. Learn. 2024, 113, 5467–5494.

- Al-Shargie, F.; Badr, Y.; Tariq, U.; Babiloni, F.; Al-Mughairbi, F.; Al-Nashash, H. Classification of Mental Stress Levels Using EEG Connectivity and Convolutional Neural Networks. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–5.

- Appriou, A.; Cichocki, A.; Lotte, F. Modern Machine-Learning Algorithms: For Classifying Cognitive and Affective States From Electroencephalography Signals. IEEE Syst. Man Cybern. Mag. 2020, 6, 29–38.

- Shirley Benita, D.; Shamila Ebenezer, A.; Susmitha, L.; Subathra, M.S.P.; Jeba Priya, S. Stress Detection Using CNN on the WESAD Dataset. In Proceedings of the 2024 International Conference on Emerging Systems and Intelligent Computing (ESIC), Bhubaneswar, India, 9–10 February 2024; pp. 308–313.

- Chatterjee, D.; Dutta, S.; Shaikh, R.; Saha, S.K. A Lightweight Deep Neural Network for Detection of Mental States from Physiological Signals. Innov. Syst. Softw. Eng. 2022, 20, 405–412.

- Mortensen, J.A.; Mollov, M.E.; Chatterjee, A.; Ghose, D.; Li, F.Y. Multi-Class Stress Detection Through Heart Rate Variability: A Deep Neural Network Based Study. IEEE Access 2023, 11, 57470–57480.

- Zontone, P.; Affanni, A.; Piras, A.; Rinaldo, R. Convolutional Neural Networks Using Scalograms for Stress Recognition in Drivers. In Proceedings of the 2023 31st European Signal Processing Conference (EUSIPCO), Helsinki, Finland, 4–8 September 2023; pp. 1185–1189.

- Dhaouadi, S.; Ben Khelifa, M.M. A Multimodal Physiological-Based Stress Recognition: Deep Learning Models’ Evaluation in Gamers’ Monitoring Application. In Proceedings of the 2020 International Conference on Advanced Technologies for Signal and Image Processing, ATSIP 2020, Sousse, Tunisia, 2–5 September 2020; pp. 1–6.

- Praveenkumar, S.; Karthick, T. Automatic Stress Recognition System with Deep Learning Using Multimodal Psychological Data. In Proceedings of the 2022 International Conference on Electronic Systems and Intelligent Computing, ICESIC 2022, Chennai, India, 22–23 April 2022; pp. 122–127.

- Uddin, J. An Autoencoder Based Emotional Stress State Detection Approach Using Electroencephalography Signals. J. Inf. Syst. Telecommun. 2023, 11, 24–30.

- Eisenbarth, H.; Chang, L.J.; Wager, T.D. Multivariate Brain Prediction of Heart Rate and Skin Conductance Responses to Social Threat. J. Neurosci. 2016, 36, 11987–11998.

- Onim, M.S.H.; Thapliyal, H. Predicting Stress in Older Adults with RNN and LSTM from Time Series Sensor Data and Cortisol. In Proceedings of the 2024 IEEE Computer Society Annual Symposium on VLSI (ISVLSI), Knoxville, TN, USA, 1–3 July 2024; pp. 300–306.

- Rashmi, C.R.; Shantala, C.P. Cognitive Stress Recognition During Mathematical Task and EEG Changes Following Audio-Visual Stimuli for Relaxation. In Proceedings of the 2023 International Conference on Sustainable Communication Networks and Application (ICSCNA), Theni, India, 15–17 November 2023; pp. 612–617.

- Tigranyan, S.; Martirosyan, A. Breaking Barriers in Stress Detection: An Inter-Subject Approach Using ECG Signals. In Proceedings of the 2024 IEEE 48th Annual Computers, Software, and Applications Conference (COMPSAC), Osaka, Japan, 2–4 July 2024; pp. 1850–1855.

- Amin, M.; Ullah, K.; Asif, M.; Shah, H.; Mehmood, A.; Khan, M.A. Real-World Driver Stress Recognition and Diagnosis Based on Multimodal Deep Learning and Fuzzy EDAS Approaches. Diagnostics 2023, 13, 1897.

- Barki, H.; Chung, W.Y. Mental Stress Detection Using a Wearable In-Ear Plethysmography. Biosensors 2023, 13, 397.

- Fan, T.; Qiu, S.; Wang, Z.; Zhao, H.; Jiang, J.; Wang, Y.; Xu, J.; Sun, T.; Jiang, N. A New Deep Convolutional Neural Network Incorporating Attentional Mechanisms for ECG Emotion Recognition. Comput. Biol. Med. 2023, 159, 106938.

- Huynh, L.; Nguyen, T.; Nguyen, T.; Pirttikangas, S.; Siirtola, P. StressNAS: Affect State and Stress Detection Using Neural Architecture Search. In Adjunct Proceedings of the 2021 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2021 ACM International Symposium on Wearable Computers, Virtual, 21–26 September 2021; ACM: New York, NY, USA, 2021; pp. 121–125.

- Ragav, A.; Krishna, N.H.; Narayanan, N.; Thelly, K.; Vijayaraghavan, V. Scalable Deep Learning for Stress and Affect Detection on Resource-Constrained Devices. In Proceedings of the 18th IEEE International Conference on Machine Learning and Applications, ICMLA 2019, Boca Raton, FL, USA, 16–19 December 2019; pp. 1585–1592.

- Başaran, O.T.; Can, Y.S.; André, E.; Ersoy, C. Relieving the Burden of Intensive Labeling for Stress Monitoring in the Wild by Using Semi-Supervised Learning. Front. Psychol. 2023, 14, 1293513.

- Halder, N.; Setu, J.H.; Rafid, L.; Islam, A.; Amin, M.A. Smartwatch-Based Human Stress Diagnosis Utilizing Physiological Signals and LSTM-Driven Machine Intelligence. In Proceedings of the 2024 Advances in Science and Engineering Technology International Conferences (ASET), Abu Dhabi, United Arab Emirates, 3–5 June 2024; pp. 1–8.

- Tanwar, R.; Singh, G.; Pal, P.K. FuSeR: Fusion of Wearables Data for StrEss Recognition Using Explainable Artificial Intelligence Models. In Proceedings of the 2023 14th International Conference on Computing Communication and Networking Technologies (ICCCNT), Delhi, India, 6–8 July 2023; pp. 1–6.

- Shrestha, A.; Mahmood, A. Review of Deep Learning Algorithms and Architectures. IEEE Access 2019, 7, 53040–53065.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A Review of Recurrent Neural Networks: LSTM Cells and Network Architectures. Neural Comput. 2019, 31, 1235–1270.

- Koldijk, S.; Sappelli, M.; Verberne, S.; Neerincx, M.A.; Kraaij, W. The SWELL Knowledge Work Dataset for Stress and User Modeling Research. In Proceedings of the 16th International Conference on Multimodal Interaction, Istanbul, Turkey, 12–16 November 2014; pp. 291–298.

- Gjoreski, M.; Kolenik, T.; Knez, T.; Luštrek, M.; Gams, M.; Gjoreski, H.; Pejović, V. Datasets for Cognitive Load Inference Using Wearable Sensors and Psychological Traits. Appl. Sci. 2020, 10, 3843.

- Zyma, I.; Tukaev, S.; Seleznov, I.; Kiyono, K.; Popov, A.; Chernykh, M.; Shpenkov, O. Electroencephalograms during Mental Arithmetic Task Performance. Data 2019, 4, 14.

- Ghosh, R.; Deb, N.; Sengupta, K.; Phukan, A.; Choudhury, N.; Kashyap, S.; Phadikar, S.; Saha, R.; Das, P.; Sinha, N.; et al. SAM 40: Dataset of 40 Subject EEG Recordings to Monitor the Induced-Stress While Performing Stroop Color-Word Test, Arithmetic Task, and Mirror Image Recognition Task. Data Brief 2022, 40, 107772.

- Healey, J.A.; Picard, R.W. Detecting Stress during Real-World Driving Tasks Using Physiological Sensors. IEEE Trans. Intell. Transp. Syst. 2005, 6, 156–166.

- Haouij, N.E.; Poggi, J.M.; Sevestre-Ghalila, S.; Ghozi, R.; Jadane, M. AffectiveROAD System and Database to Assess Driver’s Attention. In Proceedings of the 33rd Annual ACM Symposium on Applied Computing, Pau, France, 9–13 April 2018; pp. 800–803.

- Koelstra, S.; Mühl, C.; Soleymani, M.; Lee, J.-S.; Yazdani, A.; Ebrahimi, T.; Pun, T.; Nijholt, A.; Patras, I. DEAP: A Database for Emotion Analysis Using Physiological Signals. IEEE Trans. Affect. Comput. 2012, 3, 18–31.

- Sharma, K.; Castellini, C.; van den Broek, E.L.; Albu-Schaeffer, A.; Schwenker, F. A Dataset of Continuous Affect Annotations and Physiological Signals for Emotion Analysis. Sci. Data 2019, 6, 196.

- Hosseini, S.; Gottumukkala, R.; Katragadda, S.; Bhupatiraju, R.T.; Ashkar, Z.; Borst, C.W.; Cochran, K. A Multimodal Sensor Dataset for Continuous Stress Detection of Nurses in a Hospital. Sci. Data 2022, 9, 255.

- Birjandtalab, J.; Cogan, D.; Pouyan, M.B.; Nourani, M. A Non-EEG Biosignals Dataset for Assessment and Visualization of Neurological Status. In Proceedings of the 2016 IEEE International Workshop on Signal Processing Systems (SiPS), Dallas, TX, USA, 26–28 October 2016; pp. 110–114.

- Sah, R.K.; Cleveland, M.J.; Habibi, A.; Ghasemzadeh, H. Stressalyzer: Convolutional Neural Network Framework for Personalized Stress Classification. In Proceedings of the 2022 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; pp. 4658–4663.

- Ding, Y.; Liu, J.; Zhang, X.; Yang, Z. Dynamic Tracking of State Anxiety via Multi-Modal Data and Machine Learning. Front. Psychiatry 2022, 13, 757961.

- Jiao, Y.; Wang, X.; Zhao, L.; Dong, H.; Du, G.; Zhao, S.; Liu, Y.; Liu, C.; Wang, D.; Liang, W. An Improved Sequence Coding-Based Gray Level Co-Occurrence Matrix for Mild Stress Assessment. Biomed. Signal Process. Control 2024, 95, 106357.

- Ribeiro, G.; Postolache, O.; Ferrero, F. A New Intelligent Approach for Automatic Stress Level Assessment Based on Multiple Physiological Parameters Monitoring. IEEE Trans. Instrum. Meas. 2024, 73, 3342218.

- Akbas, A. Evaluation of the Physiological Data Indicating the Dynamic Stress Level of Drivers. Sci. Res. Essays 2011, 6, 430–439.

- Bh, S.; Neelima, K.; Deepanjali, C.; Bhuvanashree, P.; Duraipandian, K.; Rajan, S.; Sathiyanarayanan, M. Mental Health Analysis of Employees Using Machine Learning Techniques. In Proceedings of the 2022 14th International Conference on COMmunication Systems and NETworkS, COMSNETS 2022, Bangalore, India, 4–8 January 2022; pp. 1–6.

- Saganowski, S.; Perz, B.; Polak, A.G.; Kazienko, P. Emotion Recognition for Everyday Life Using Physiological Signals From Wearables: A Systematic Literature Review. IEEE Trans. Affect. Comput. 2023, 14, 1876–1897.

- Dedovic, K.; Duchesne, A.; Andrews, J.; Engert, V.; Pruessner, J.C. The Brain and the Stress Axis: The Neural Correlates of Cortisol Regulation in Response to Stress. Neuroimage 2009, 47, 864–871.

- Stuart, T.; Hanna, J.; Gutruf, P. Wearable Devices for Continuous Monitoring of Biosignals: Challenges and Opportunities. APL Bioeng. 2022, 6, 021502.

- Boudreaux, B.D.; Hebert, E.P.; Hollander, D.B.; Williams, B.M.; Cormier, C.L.; Naquin, M.R.; Gillan, W.W.; Gusew, E.E.; Kraemer, R.R. Validity of Wearable Activity Monitors during Cycling and Resistance Exercise. Med. Sci. Sports Exerc. 2018, 50, 624–633.

- Smets, E.; De Raedt, W.; Van Hoof, C. Into the Wild: The Challenges of Physiological Stress Detection in Laboratory and Ambulatory Settings. IEEE J. Biomed. Health Inform. 2019, 23, 463–473.

- Can, Y.S.; Gokay, D.; Kılıç, D.R.; Ekiz, D.; Chalabianloo, N.; Ersoy, C. How Laboratory Experiments Can Be Exploited for Monitoring Stress in the Wild: A Bridge between Laboratory and Daily Life. Sensors 2020, 20, 838.

- Choi, J.B.; Hong, S.; Nelesen, R.; Bardwell, W.A.; Natarajan, L.; Schubert, C.; Dimsdale, J.E. Age and Ethnicity Differences in Short-Term Heart-Rate Variability. Psychosom. Med. 2006, 68, 421–426.

- Graves, B.S.; Hall, M.E.; Dias-Karch, C.; Haischer, M.H.; Apter, C. Gender Differences in Perceived Stress and Coping among College Students. PLoS ONE 2021, 16, e0255634.

- Mueller, A.; Strahler, J.; Armbruster, D.; Lesch, K.P.; Brocke, B.; Kirschbaum, C. Genetic Contributions to Acute Autonomic Stress Responsiveness in Children. Int. J. Psychophysiol. 2012, 83, 302–308.

- Ellis, B.J.; Jackson, J.J.; Boyce, W.T. The Stress Response Systems: Universality and Adaptive Individual Differences. Dev. Rev. 2006, 26, 175–212.

- McEwen, B.S. The Neurobiology of Stress: From Serendipity to Clinical Relevance. Brain Res. 2000, 886, 172–189.

- Grissom, N.; Bhatnagar, S. Habituation to Repeated Stress: Get Used to It. Neurobiol. Learn. Mem. 2009, 92, 215–224.

- Alkurdi, A.; He, M.; Cerna, J.; Clore, J.; Sowers, R.; Hsiao-Wecksler, E.T.; Hernandez, M.E. Extending Anxiety Detection from Multimodal Wearables in Controlled Conditions to Real-World Environments. Sensors 2025, 25, 1241.

- Alkurdi, A.; Clore, J.; Sowers, R.; Hsiao-Wecksler, E.T.; Hernandez, M.E. Resilience of Machine Learning Models in Anxiety Detection: Assessing the Impact of Gaussian Noise on Wearable Sensors. Appl. Sci. 2025, 15, 88.

- Kothgassner, O.D.; Goreis, A.; Bauda, I.; Ziegenaus, A.; Glenk, L.M.; Felnhofer, A. Virtual Reality Biofeedback Interventions for Treating Anxiety: A Systematic Review, Meta-Analysis and Future Perspective. Wien. Klin. Wochenschr. 2022, 134, 49.

- Gradl, S.; Wirth, M.; Zillig, T.; Eskofier, B.M. Visualization of Heart Activity in Virtual Reality: A Biofeedback Application Using Wearable Sensors. In Proceedings of the 2018 IEEE 15th International Conference on Wearable and Implantable Body Sensor Networks, BSN 2018, Las Vegas, NV, USA, 4–7 March 2018; Volume 2018-January, pp. 152–155.

- Tricco, A.C.; Lillie, E.; Zarin, W.; O’Brien, K.K.; Colquhoun, H.; Levac, D.; Moher, D.; Peters, M.D.J.; Horsley, T.; Weeks, L.; et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann. Intern. Med. 2018, 169, 467–473.

| Database | Search String |
|---|---|
| PubMed | (“machine learning”) AND “anxiety” NOT (“depression” OR “Autism” OR Stroke OR “depressive” OR phobia) |
| IEEE Xplore | (“machine learning”) AND ((“psychological stress” OR “mental stress” OR “emotional stress” OR “mental workload” OR “stressful”) OR “anxiety”) |
| Scopus | TITLE-ABS-KEY (“machine learning” AND (“psychological stress” OR “mental stress” OR “emotional stress” OR “mental workload” OR “cognitive workload” OR “Cognitive stress” OR “anxiety”)) AND NOT TITLE-ABS (review OR survey OR scoping OR autism OR autistic OR diabetic) AND NOT TITLE (treatment OR suicide OR surgery OR depression OR depressed OR “anxiety disorders” OR vaccine OR child OR children OR cells OR glycemia OR tumor OR tremor OR gender OR wealth OR “mental illness” OR disorder OR “management system” OR “intelligence” OR disease) AND (LIMIT-TO (PUBSTAGE, “final”)) AND (LIMIT-TO (DOCTYPE, “ar”) OR LIMIT-TO (DOCTYPE, “cp”)) |

| E2E Models | References |
|---|---|
| CNN | [11,23,57,69,146,147,148] |
| FCN | [11,149] |
| Inception Time | [11] |
| LSTM | [150,151,152] |
| Multi-ResNet | [11] |
| ResNet | [57,87,149] |
| Encoder | [11] |
| Time CNN | [11] |
| CNN-LSTM | [11,146,151] |
| MLP | [11,124,149,151] |
| MLP-LSTM | [151] |
| RF and kNN | [56] |
| SVM, kNN, NB, LDA | [73] |
| Boost | [153] |

| Stressor Type | References |
|---|---|
| Social Stressors | [11,21,22,24,57,59,67,68,75,78,79,95,96,98,99,100,107,111,113,114,118,119,120,121,122,127,129,131,132,135,136,140,143,145,148,149,150,152,153,166] |
| Mental Stressors | [42,55,56,58,63,64,65,66,68,69,72,74,75,77,80,81,82,83,85,86,87,89,90,95,96,97,99,101,103,104,105,106,107,108,109,111,112,115,116,117,118,119,123,124,125,126,128,129,130,132,133,134,137,139,144,147,152,167,168] |
| Physical Stressors | [22,57,61,72,77,84,96,107,111,119,152,169] |
| Emotional Stressors | [73,75,76,77,78,84,91,110,115,134,141,153] |
| Driving Stressors | [62,70,71,87,88,92,93,94,102,138,146,170] |
| Daily-Life Stressors | [23,54,55,60,77,96,107,116,151] |

| Condition | References |
|---|---|
| Laboratory setting | [11,21,22,24,55,56,57,58,59,61,63,64,65,66,67,68,69,72,73,74,75,77,78,79,80,81,82,83,84,85,86,87,89,90,92,93,94,95,96,97,98,99,100,101,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,126,127,128,129,130,131,132,133,134,135,136,137,139,140,143,144,145,147,148,149,150,152,153,166,167,168,169] |
| Semi-wild setting | [62,70,71,88,102,138,146,170] |
| In-the-wild setting | [23,54,55,60,77,96,107,116,151] |
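The condition table can be tallied to quantify how heavily the reviewed work leans on laboratory data. The following is a minimal Python sketch, not part of the review's method: the reference numbers are copied from the table, while `GROUPS` and `summarize` are hypothetical names introduced here for illustration. Because some references appear under more than one setting, the sketch reports per-setting entries and unique studies separately.

```python
from __future__ import annotations

from itertools import combinations

# Reference numbers per setting, copied from the condition table.
# GROUPS is a hypothetical name used only for this sketch.
GROUPS: dict[str, list[int]] = {
    "Laboratory setting": [
        11, 21, 22, 24, 55, 56, 57, 58, 59, 61, 63, 64, 65, 66, 67, 68, 69,
        72, 73, 74, 75, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 89, 90,
        92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 103, 104, 105, 106, 107,
        108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121,
        122, 123, 124, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136,
        137, 139, 140, 143, 144, 145, 147, 148, 149, 150, 152, 153, 166, 167,
        168, 169,
    ],
    "Semi-wild setting": [62, 70, 71, 88, 102, 138, 146, 170],
    "In-the-wild setting": [23, 54, 55, 60, 77, 96, 107, 116, 151],
}


def summarize(groups: dict[str, list[int]]) -> dict:
    """Return per-setting counts, references shared by two settings, and the unique total."""
    sets = {name: set(refs) for name, refs in groups.items()}
    counts = {name: len(refs) for name, refs in sets.items()}
    # A reference may be listed under more than one setting; record each shared pair.
    overlaps = {}
    for a, b in combinations(sets, 2):
        shared = sets[a] & sets[b]
        if shared:
            overlaps[(a, b)] = sorted(shared)
    # Union of all settings gives the number of distinct references in the table.
    unique_total = len(set().union(*sets.values()))
    return {"counts": counts, "overlaps": overlaps, "unique": unique_total}


if __name__ == "__main__":
    summary = summarize(GROUPS)
    for name, n in summary["counts"].items():
        print(f"{name}: {n} entries ({n / summary['unique']:.0%} of unique studies)")
    for (a, b), refs in summary["overlaps"].items():
        print(f"Listed under both {a!r} and {b!r}: {refs}")
    print(f"Unique studies: {summary['unique']}")
```

Run against the table as given, this reports 93 laboratory, 8 semi-wild, and 9 in-the-wild entries. Five references ([55], [77], [96], [107], [116]) are listed under both the laboratory and in-the-wild settings, giving 105 unique studies, of which roughly 89% are listed under the laboratory setting.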

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

He, M.; Alkurdi, A.; Clore, J.L.; Sowers, R.B.; Hsiao-Wecksler, E.T.; Hernandez, M.E. Scoping Review of ML Approaches in Anxiety Detection from In-Lab to In-the-Wild. Appl. Sci. 2025, 15, 10099. https://doi.org/10.3390/app151810099

He M, Alkurdi A, Clore JL, Sowers RB, Hsiao-Wecksler ET, Hernandez ME. Scoping Review of ML Approaches in Anxiety Detection from In-Lab to In-the-Wild. Applied Sciences. 2025; 15(18):10099. https://doi.org/10.3390/app151810099

Chicago/Turabian Style: He, Maxine, Abdulrahman Alkurdi, Jean L. Clore, Richard B. Sowers, Elizabeth T. Hsiao-Wecksler, and Manuel E. Hernandez. 2025. "Scoping Review of ML Approaches in Anxiety Detection from In-Lab to In-the-Wild" Applied Sciences 15, no. 18: 10099. https://doi.org/10.3390/app151810099

APA Style: He, M., Alkurdi, A., Clore, J. L., Sowers, R. B., Hsiao-Wecksler, E. T., & Hernandez, M. E. (2025). Scoping Review of ML Approaches in Anxiety Detection from In-Lab to In-the-Wild. Applied Sciences, 15(18), 10099. https://doi.org/10.3390/app151810099