1. Introduction

Cyberbullying is a serious public health problem, primarily affecting children and adolescents worldwide [1]. The growing global adoption of the Internet and the rise of social media have exacerbated this problem, eliminating time and space limitations for perpetrators and creating new, borderless virtual environments for cyberbullying [2].

The digital environment presents a series of distinctive characteristics that intensify the severity of this phenomenon. Unlike in-person bullying, victims can be reached anytime and anywhere, which increases their vulnerability. The anonymity offered by digital platforms encourages aggression, as perpetrators perceive a lower likelihood of being identified and punished. The ability to hide behind false identities gives bullies the opportunity to use language and content that they would rarely use in face-to-face interactions. As a result, anonymity contributes significantly to an increase in inappropriate and risky behaviors among adolescents in virtual environments [3].

Cyberbullying is caused by a combination of individual, social, and technological factors. The most common individual-level factors include high levels of aggression, low self-control, limited awareness of the consequences of bullying, previous experiences of victimization, low moral character, ethical justification mechanisms, inappropriate parenting styles, and a higher incidence among males. At the social level, the negative influence of peer groups reinforces bullying behavior. Although less studied, technological factors such as anonymity and online disinhibition make it easier for bullies to act without fear of being discovered or held accountable [4].

Personal characteristics, such as personality traits and awareness of cyberbullying, were not significantly linked to attitudes toward these actions. In contrast, psychological factors, such as self-esteem, internalizing behaviors, and antisocial behaviors, play a crucial role in shaping attitudes supportive of cyberbullying. Subjective norms have also been shown to have a strong positive effect on these attitudes. Another important finding is that social media use acts as a moderator that strengthens the link between the intention to engage in cyberbullying and actual behavior [5].

Recent research has concentrated on using artificial intelligence to detect cyberbullying. For example, the study in [6] introduces a practical framework for identifying cyberbullying in Roman Urdu and spotting abusive profiles on Twitter. The study systematically compared six traditional classifiers (SVM, NB, DT, LR, RF, and GB) under various embedding schemes and categorized user profiles as Normal, Suspicious, or Abusive according to the likelihood of abusive tweets. In their evaluation, using an 80/20 data split and robust training techniques (k-fold cross-validation and early stopping), a GRU model combined with lexical normalization achieved 97% accuracy and a 97% F1 score, surpassing the traditional classifiers. Other studies have compared various machine learning techniques and highlighted the effectiveness of logistic regression, a supervised statistical model that estimates the probability of a binary outcome from predictor variables; it achieved an average accuracy of 90.57% and a best F1 score of 0.9280 when detecting cyberbullying on platforms such as Twitter [7].

Another study proposed a semi-synthetic dataset that captures not only aggressive content but also key dimensions of cyberbullying, such as repetition, intent to harm, and peer relationships, thereby addressing limitations of previous datasets. To validate this approach, the researchers fine-tuned the RoBERTa Base model, an optimized variant of Bidirectional Encoder Representations from Transformers (BERT), which processes language by analyzing text bidirectionally to capture nuanced context. RoBERTa improves on BERT mainly by training on larger datasets for longer periods, which yields better performance on text classification tasks. The model achieved an F1 score of up to 0.87 in cyberbullying detection [8].

Furthermore, techniques based on natural language processing (NLP) have proven effective for the early and accurate detection of cyberbullying. The study in [9] introduced a methodology for detecting cyberbullying on Twitter that combines NLP and machine learning techniques. The research used a dataset of 39,870 tweets categorized into five groups: religion, age, gender, ethnicity, and no cyberbullying. The authors applied a series of preprocessing steps, including tokenization, normalization, emoji cleaning, and the removal of links and stop words, followed by TF-IDF for feature extraction. Five classifiers were evaluated with hyperparameter tuning and the synthetic minority oversampling technique (SMOTE) for class balancing: Random Forest (RF), Support Vector Machine (SVM), Logistic Regression (LR), Naïve Bayes (NB), and K-Nearest Neighbors (KNN). With an 80/20 data split, RF performed best, achieving an accuracy of 94.2% with precision, recall, and F1 scores of 0.94. SVM and LR reached accuracies of 93.3% and 92.9%, respectively, both with an F1 score of approximately 0.92, while NB and KNN recorded accuracies of 85.3% and 73.7%, respectively. The study also presented ROC curves for RF and a per-class analysis, with performance of 98.5% for age, 97.4% for ethnicity, 94.9% for religion, 89.7% for non-cyberbullying, and 83.3% for gender. These findings highlight the efficacy of RF on short-text data and underscore the role of normalization and class balancing in improving recall for minority classes.

The magnitude of cyberbullying is reflected in its global impact. According to a report by the NGO World Cyberbullying Stats, Spain tops the world list with approximately 2,050,000 cases, followed by Mexico with 1,950,000 and the United States with 1,900,000. Argentina ranks fourth with 1,850,000 cases and Italy fifth with 1,750,000; these figures include adults, youth, adolescents, and children [10].

Therefore, the main purpose of this study was to evaluate the structural quality of the CyberBullying University Students Dataset [11]. Although this dataset has been used to analyze the relationships between beliefs in a just world, empathy, and cyberbullying experiences in university students [12], no specific review of its quality has been conducted to date. This study seeks to fill this gap by applying data-quality assessment metrics.

2. Related Works

In recent years, cyberbullying research has evolved considerably, encompassing multiple approaches and methodologies. Some studies have focused on the social, academic, and psychological consequences for victims, while others have developed automatic cyberbullying detection systems using machine learning and natural language processing techniques. Ref. [13] explored the impact of cyberbullying and cyberstalking on university students and staff during the COVID-19 pandemic using a semi-structured survey of 34 participants. The sample was predominantly female (73.5%), with a median age of 38.5 years, and 64.7% were academic staff. In total, 47.1% reported experiencing cyberbullying, 29.4% reported cyberstalking, and 23.5% experienced cyberbullying. A total of 35.3% reported changes in their behavior (e.g., reducing online activity and purchasing protective devices). The authors applied nonparametric tests (Mann–Whitney U test) and a logistic model to explain the likelihood of behavioral change. The model showed a reasonable fit (pseudo-R² ≈ 0.36–0.39; significant Wald χ²) and moderate predictive power: at a threshold of 0.28, sensitivity was 83.3% and specificity 72.7% (overall correct classification 76.5%); at a threshold of 0.27, sensitivity was 91.7% and specificity 63.6% (correct classification 73.5%). The most strongly associated factors were the experience of in-person harassment or stalking (odds ratio ≈ 15–19, p < 0.05), anger toward the victim, and receipt of threatening messages (OR = 2.17, p < 0.05). The study quantifies key prevalences and provides empirical evidence useful for prioritizing institutional prevention and support measures. The authors acknowledge the small sample size and limited generalizability; nevertheless, the results can guide the design of interventions and future research in university settings. Similarly, Ref. [14] analyzed how different types of victimization (social, verbal, physical, and cyber) affect the academic performance of adolescent students and found that social victimization has a particularly significant impact. Finally, Ref. [15] analyzed the inverse relationship between affective empathy and participation in cyberbullying among high school students, finding that lower levels of empathy were associated with greater participation in the phenomenon.

Other studies have focused on the development and use of datasets for the automatic detection of cyberbullying. Ref. [16] evaluated machine learning and transfer learning techniques on the AMiCA dataset, using textual representations and psychometric features to improve the classification models. Ref. [17] investigated the influence of cyberbullying on the academic, social, and emotional development of university students, using its own dataset to measure the prevalence of cyberbullying and the means used to perpetrate it. Finally, Ref. [18] presented a supervised method for cyberbullying detection in text, comparing two feature extractors (TF-IDF and Count Vectorizer) and a set of traditional classifiers on a corpus of approximately 18,000 preprocessed tweets. Decision Tree, Linear SVC, Logistic Regression, Random Forest, Naïve Bayes, AdaBoost, Bagging, and SGD were evaluated using accuracy, F1, and training time. The Count Vectorizer consistently outperformed TF-IDF: with TF-IDF, the best configuration achieved 95.46% accuracy and an F1 of 0.9650 (Decision Tree), whereas with the Count Vectorizer, the best performance was 97.63% accuracy and an F1 of 0.9816, also with Decision Tree. Linear SVC and SGD offered competitive alternatives with good trade-offs between accuracy and time (Linear SVC: accuracy 97.25%, F1 0.9785; SGD: accuracy 95.77%, F1 0.9668). Overall, the choice of extractor influenced performance more than the choice of classifier, and the authors concluded that the Count Vectorizer combined with simple models provides an efficient and highly accurate detector, suitable for automatic moderation on social networks.

The reviewed studies can be grouped into two broad categories. On the one hand, some studies applied machine learning models to public or previously existing datasets [16,18], using corpora and structured sets of texts collected from social networks and digital platforms. On the other hand, other studies generated and analyzed their own datasets based on surveys or specific measurement instruments [13,14,15,17].

Despite advances in the construction and application of datasets in various contexts, few studies have specifically addressed the structural assessment of dataset quality. A relevant case is the CyberBullying University Students Dataset [11]. This dataset was collected in Germany from a sample of 615 university students and includes variables on beliefs in a just world, empathy, experiences of academic justice, and participation in cyberbullying behaviors. The same authors subsequently used this dataset to analyze the relationships between the measured variables [11]. However, to date, no formal review has assessed the quality of this dataset from a technical perspective, which is the primary contribution of this study.

Table 1 shows a comparison of different studies.

3. Materials and Methods

This section describes the materials and methods used in this research, as shown in Figure 1.

3.1. Dataset Description

Dataset name: Cyberbullying among University Students [11]. The dataset was derived from a study of 615 German university students (after excluding 48 participants with no access to electronic devices). Participants were divided into two temporal subsamples (sample 1: in-person survey before the pandemic; sample 2: online survey during the pandemic). Each row corresponds to a student, and the columns record the responses to standardized questionnaires and background data. The questionnaire included questions about device ownership (smartphone, computer, Internet access), browsing habits (daily hours online, use of social media, WhatsApp version 2.22.2.72, etc.), demographics, and academic aspects (study program, federal state). For example, at the end of the questionnaire, students were asked whether they owned a smartphone or computer, whether they had Internet access, and how many hours a day they spent online [11,19].

The main categories of the variables in the dataset are listed below.

Sociodemographic and technological context variables: These include gender, age, subsample membership, study program, and the federal state. In addition, access to the following devices and services was recorded: smartphone, computer, Internet access, social media account, WhatsApp use, and average daily hours spent online.

Flexible social perspective-taking tasks: four items (pt1–pt4). Each task assessed the ability to adopt others’ perspectives by reading contextual statements. Responses were coded in a numerical range (e.g., values between 1 and 9 appear in the data).

Self-reported psychological scales: Several subscales of personal traits and attitudes, all in Likert format with varying response ranges.

Specifically:

- Perspective-taking tendency: Items persta1–persta5 and pereng6–pereng10 measure cognitive dispositions to adopt the perspective of others.

- Empathy: Items emp1–emp4 from the Interpersonal Scale assess empathic concern for others.

- Beliefs in a Just World: Two subscales, general (gbjw1–gbjw6) and personal (pbjw1–pbjw7), quantify the degree to which an individual believes that the world is fair to everyone or to themselves.

- General self-efficacy: Items sef1–sef10 measure belief in one's own efficacy in the face of challenges.

- Social desirability: Items sd1–sd10 assess the tendency to respond in a socially desirable manner.

These scales, described in the study documentation, allow for the quantification of psychological aspects relevant to social interaction.

Perceived justice in the academic environment: Two additional scales of academic experience. The Teacher Justice Scale (lj1–lj10) captures the perceived fairness of the teacher's actions toward the student, while the Peer Justice Scale (sj1–sj6) assesses perceived fairness among students. These variables reflect the climate of justice.

Cyberbullying experiences: Two sets of items that capture experiences of online violence. Items cbv1–cbv11 record the frequency of experiences as a victim of cyberbullying (e.g., insults or harassment through digital media), while cbp1–cbp11 record the frequency of such acts as a perpetrator. Each item is typically answered on a frequency scale (e.g., never, sometimes, often) coded numerically. These variables allow the identification of students exposed to online violence, either as victims or as aggressors.

Total number of variables in the dataset: 100.

Number of excluded variables: 2 (study, state); these were not relevant for training the model.

Number of variables used for training: 98.

Total number of instances: 615.

3.2. Preprocessing

Definition of the target variable: The target variable was derived from the cyberbullying-experience items cbv1–cbv11 and cbp1–cbp11. Equation (1) (general bullying) defines the target for each student j:

$$y_j = \mathbb{I}\left( \bigvee_{c \in S} x_{j,c} \right) \qquad (1)$$

where $x_{j,c}$ is the response of student j to item c, and:

S = V ∪ P: the set of columns starting with "cbv" (V, victim) and "cbp" (P, perpetrator).

⋁: the logical OR operator applied across all columns in S.

$\mathbb{I}$: the indicator function mapping True/False to 1/0.
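A minimal NumPy sketch of Equation (1) follows. It is an illustration under stated assumptions rather than the authors' code: the function name `bullying_target` and the example columns are hypothetical, and it assumes that a response of 0 means "never", that any non-zero response counts as True, and that missing responses count as False, since the numeric coding of the frequency scale is not specified here.

```python
import numpy as np


def bullying_target(X, columns):
    """Binary target y_j = I(OR over c in S of x_jc), S = 'cbv*' and 'cbp*' columns.

    Hypothetical coding assumptions: 0 means "never", any non-zero response
    counts as True, and missing responses (NaN) count as False.
    """
    S = [i for i, name in enumerate(columns) if name.startswith(("cbv", "cbp"))]
    items = np.nan_to_num(np.asarray(X, dtype=float)[:, S], nan=0.0)
    # Logical OR across the selected columns, then indicator True/False -> 1/0.
    return np.any(items != 0, axis=1).astype(int)


columns = ["age", "cbv1", "cbp1", "pt1"]  # hypothetical subset of the 100 columns
X = np.array([
    [20, 0, 0, 5],
    [21, 2, 0, 3],
    [22, 0, np.nan, 1],
    [23, 0, 1, 9],
])
print(bullying_target(X, columns))  # [0 1 0 1]
```

If the scale coded "never" as 1 instead of 0, the comparison `items != 0` would have to be adapted; otherwise every student would be labeled Bullying.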

Categorical variables, represented as text, were transformed into numeric variables to facilitate processing using machine-learning models. To assess the performance of the classifiers, stratified k-fold cross-validation (k = 5) was employed to provide a robust estimation of the model’s generalization capability. Given the class imbalance in the target variable (bullying vs. non-bullying), the synthetic minority oversampling technique (SMOTE) was utilized to achieve class balance. In each iteration of the k-fold, the following procedure was implemented to prevent information leakage: (1) the dataset was partitioned into training and test sets according to the fold; (2) within the training set, missing values were imputed using the mean (SimpleImputer), and a StandardScaler was applied; (3) SMOTE was applied exclusively to the scaled training set to generate synthetic samples of the minority class; and (4) the classifier was trained using the augmented training set, and the validation (test) set of the fold was evaluated without any modification (i.e., without SMOTE, using the same imputer and scaler fitted on the training set).

Figure 2 shows the confusion matrix of a decision tree evaluated using this protocol.

SMOTE was configured with k_neighbors = 5. To address cases where the minority class within a fold had very few samples (fewer than six), the k_neighbors parameter was automatically set to a lower value to allow for the generation of synthetic samples, as suggested by the standard implementation. Random seeds were fixed to ensure reproducible results. Model performance was evaluated using the accuracy, precision, recall, F1 score, and area under the ROC curve (ROC-AUC). All reported metrics correspond to the mean and standard deviation calculated over k folds of the cross-validation process. To prevent overfitting during the model evaluation, the hyperparameters of each classifier were defined a priori and kept fixed across all iterations, thus avoiding any within-fold optimization processes that could artificially inflate the metrics.
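The fold-wise protocol described above can be sketched with scikit-learn as follows. This is a hedged illustration, not the code used in the study: the data come from `make_classification` rather than from the dataset, the decision tree uses default hyperparameters instead of those in Table 2, and `smote_oversample` and `evaluate_fold_protocol` are hypothetical helpers. `smote_oversample` is a minimal hand-written SMOTE (each synthetic point lies on the segment between a minority sample and one of its k nearest minority neighbors) that lowers k when the minority class is small; the imbalanced-learn package provides a tested `SMOTE` and a `Pipeline` that applies samplers only during fitting.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.impute import SimpleImputer
from sklearn.metrics import f1_score, roc_auc_score
from sklearn.model_selection import StratifiedKFold
from sklearn.neighbors import NearestNeighbors
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier


def smote_oversample(X, y, k_neighbors=5, seed=0):
    """Minimal SMOTE: add interpolated minority samples until both classes are equal."""
    rng = np.random.default_rng(seed)
    classes, counts = np.unique(y, return_counts=True)
    minority = classes[np.argmin(counts)]
    X_min = X[y == minority]
    n_new = counts.max() - counts.min()
    if n_new == 0 or len(X_min) < 2:
        return X, y
    # Lower k when the minority class is tiny: k neighbors need k + 1 samples.
    k = min(k_neighbors, len(X_min) - 1)
    _, idx = NearestNeighbors(n_neighbors=k + 1).fit(X_min).kneighbors(X_min)
    base = rng.integers(0, len(X_min), size=n_new)        # random minority samples
    neigh = idx[base, rng.integers(1, k + 1, size=n_new)]  # skip column 0 (normally the sample itself)
    gap = rng.random((n_new, 1))                           # interpolation factor in [0, 1)
    X_new = X_min[base] + gap * (X_min[neigh] - X_min[base])
    return np.vstack([X, X_new]), np.concatenate([y, np.full(n_new, minority)])


def evaluate_fold_protocol(X, y, n_splits=5, seed=0):
    """Leak-free stratified k-fold: imputer, scaler, and SMOTE see training folds only."""
    skf = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    f1s, aucs = [], []
    for train_idx, test_idx in skf.split(X, y):                       # (1) partition
        imputer = SimpleImputer(strategy="mean").fit(X[train_idx])      # (2) fit on train only
        scaler = StandardScaler().fit(imputer.transform(X[train_idx]))
        X_tr = scaler.transform(imputer.transform(X[train_idx]))
        X_te = scaler.transform(imputer.transform(X[test_idx]))
        X_tr, y_tr = smote_oversample(X_tr, y[train_idx], seed=seed)    # (3) training fold only
        clf = DecisionTreeClassifier(random_state=seed).fit(X_tr, y_tr)  # (4) train
        f1s.append(f1_score(y[test_idx], clf.predict(X_te)))            # untouched test fold
        aucs.append(roc_auc_score(y[test_idx], clf.predict_proba(X_te)[:, 1]))
    # Mean and population standard deviation (np.std default, ddof=0) over the folds.
    return np.mean(f1s), np.std(f1s), np.mean(aucs), np.std(aucs)


X, y = make_classification(n_samples=615, n_features=20, weights=[0.7, 0.3], random_state=0)
X[::50, 0] = np.nan  # a few missing values so that the imputer has work to do
print("F1 %.3f ± %.3f | ROC-AUC %.3f ± %.3f" % evaluate_fold_protocol(X, y))
```

Fitting the imputer and scaler inside the loop, rather than once on the full dataset, is what keeps the statistics of each test fold out of training.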

Table 2 shows the hyperparameters used in each model.

To compare the performance of the classifiers, the Friedman test was applied to the per-fold metrics (stratified cross-validation, k = 5). When the Friedman test was significant, pairwise comparisons between classifiers were performed using the paired Wilcoxon signed-rank test, and the Holm correction was applied to control the type I error rate across multiple comparisons. In addition to p-values, effect sizes were reported as paired Cohen's d values. Statistical comparisons were based on the F1 and ROC-AUC values obtained per fold.
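A sketch of this comparison with SciPy follows: Friedman omnibus test, pairwise paired Wilcoxon signed-rank tests, Holm adjustment, and paired Cohen's d. The per-fold scores and helper names are hypothetical and are not the values obtained in this study. The example illustrates a property relevant to this design: with only five folds and no ties, the exact two-sided Wilcoxon test (which SciPy uses by default for such small samples) cannot return a p-value below 2/2⁵ = 0.0625, so even a model that wins in every fold (here DecisionTree vs RandomForest, d ≈ 1.9) cannot reach p < 0.05, and Holm's adjustment over three pairs raises that value to 0.1875.

```python
from itertools import combinations

import numpy as np
from scipy.stats import friedmanchisquare, wilcoxon


def holm_adjust(pvals):
    """Holm step-down adjusted p-values, returned in the input order."""
    p = np.asarray(pvals, dtype=float)
    m = len(p)
    adjusted = np.empty(m)
    running_max = 0.0
    for rank, i in enumerate(np.argsort(p)):
        running_max = max(running_max, (m - rank) * p[i])  # keep adjusted p monotone
        adjusted[i] = min(1.0, running_max)
    return adjusted


def paired_cohens_d(a, b):
    """Paired Cohen's d: mean of the differences / SD of the differences."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    return diff.mean() / diff.std(ddof=1)


def compare_classifiers(scores, alpha=0.05):
    """scores: {model name: per-fold metric values}, all computed on the same folds."""
    names = list(scores)
    chi2, p_friedman = friedmanchisquare(*(scores[n] for n in names))
    if p_friedman >= alpha:
        return chi2, p_friedman, []  # post hoc tests only after a significant omnibus test
    pairs = list(combinations(names, 2))
    raw = [wilcoxon(scores[a], scores[b]).pvalue for a, b in pairs]
    adj = holm_adjust(raw)
    d = [paired_cohens_d(scores[a], scores[b]) for a, b in pairs]
    return chi2, p_friedman, list(zip(pairs, raw, adj, d))


# Hypothetical per-fold F1 values (k = 5), NOT the values obtained in this study.
SCORES = {
    "DecisionTree": [0.99, 0.98, 1.00, 0.99, 0.97],
    "RandomForest": [0.98, 0.96, 0.97, 0.95, 0.92],
    "KNN": [0.40, 0.35, 0.30, 0.38, 0.33],
}
chi2, p_f, rows = compare_classifiers(SCORES)
print(f"Friedman chi2 = {chi2:.3f}, p = {p_f:.4f}")
for (a, b), p_raw, p_holm, d in rows:
    print(f"{a} vs {b}: p = {p_raw:.4f}, Holm p = {p_holm:.4f}, d = {d:.2f}")
```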

3.3. Supervised Learning Models

3.3.1. Random Forest

This ensemble method constructs T independent decision trees, each trained on a bootstrap sample with random feature selection, and combines their predictions by voting (classification) or averaging (regression). This approach reduces variance compared with a single tree and mitigates overfitting [20]. In other words, Random Forest combines multiple decision trees, each trained on a different subset of data and features; aggregating their outputs through voting or averaging reduces the risk of errors and overfitting that can occur with a single tree, leading to more reliable predictions. The associated equations are as follows, where $h_t(\mathbf{x})$ denotes the prediction of tree t:

- Prediction (regression):

$$\hat{y}(\mathbf{x}) = \frac{1}{T} \sum_{t=1}^{T} h_t(\mathbf{x})$$

- Prediction (majority vote):

$$\hat{y}(\mathbf{x}) = \arg\max_{c} \sum_{t=1}^{T} \mathbb{I}\left(h_t(\mathbf{x}) = c\right)$$
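The voting rule can be illustrated with a small hand-built forest on top of scikit-learn's `DecisionTreeClassifier`. This is a sketch on synthetic data with hypothetical helper names (`fit_forest`, `predict_majority`) and an assumed T = 25, not the configuration used in the study. Note that scikit-learn's own `RandomForestClassifier` combines trees by averaging their predicted class probabilities (soft voting) rather than counting hard votes as done here.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier


def fit_forest(X, y, n_trees=25, seed=0):
    """Train T trees, each on a bootstrap sample, with a random feature subset at each split."""
    rng = np.random.default_rng(seed)
    trees = []
    for t in range(n_trees):
        idx = rng.integers(0, len(X), size=len(X))  # n rows drawn with replacement
        tree = DecisionTreeClassifier(max_features="sqrt", random_state=t)
        trees.append(tree.fit(X[idx], y[idx]))
    return trees


def predict_majority(trees, X):
    """Hard majority vote over the T trees, for 0/1 labels."""
    votes = np.stack([tree.predict(X) for tree in trees])  # shape (T, n_samples)
    # With 0/1 labels, class 1 wins when more than half of the trees vote for it.
    return (votes.mean(axis=0) > 0.5).astype(int)


X, y = make_classification(n_samples=300, n_features=10, random_state=0)
trees = fit_forest(X, y)
print("training accuracy:", (predict_majority(trees, X) == y).mean())
```

An odd T avoids ties between the two classes.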

3.3.2. Logistic Regression

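This linear model for binary class probability applies the logistic (sigmoid) function to the linear predictor and is typically trained by maximum likelihood [21], as shown in the following equation:

$$P(y = 1 \mid \mathbf{x}) = \sigma\left(\beta_0 + \boldsymbol{\beta}^\top \mathbf{x}\right) = \frac{1}{1 + e^{-(\beta_0 + \boldsymbol{\beta}^\top \mathbf{x})}}$$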

The logistic regression model uses a special function called the logistic or sigmoid function to predict the probability of an outcome belonging to one of the two categories. This function transforms a linear combination of input variables into a value between 0 and 1, representing the probability. The model is usually trained by finding the parameters that make the observed data most likely to occur, a method known as maximum-likelihood estimation.
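Maximum-likelihood training can be sketched in a few lines of NumPy by gradient ascent on the mean log-likelihood. The learning rate, iteration count, helper names, and synthetic two-cluster data are assumptions for illustration; unlike this unregularized sketch, scikit-learn's `LogisticRegression` applies L2 regularization by default.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(X, y, lr=0.1, n_iter=2000):
    """Maximum-likelihood estimate of (beta_0, beta) by gradient ascent, no regularization."""
    Xb = np.hstack([np.ones((len(X), 1)), X])  # column of ones for the intercept beta_0
    beta = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Xb @ beta)                 # P(y = 1 | x) under the current beta
        beta += lr * Xb.T @ (y - p) / len(y)   # gradient of the mean log-likelihood
    return beta


def predict_proba(beta, X):
    return sigmoid(beta[0] + X @ beta[1:])


rng = np.random.default_rng(0)
X = np.vstack([rng.normal(-1.0, 1.0, (100, 2)), rng.normal(1.0, 1.0, (100, 2))])
y = np.array([0] * 100 + [1] * 100)
beta = fit_logistic(X, y)
print("beta:", beta.round(3))
print("accuracy:", ((predict_proba(beta, X) > 0.5) == y).mean())
```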

3.3.3. Decision Tree

This model recursively partitions the feature space into homogeneous regions based on an impurity measure (Gini or entropy) and assigns a single prediction to each leaf. The main equations of this model are as follows, where $p_{c\mid t}$ is the proportion of class c at node t, $I(\cdot)$ is the chosen impurity measure (e.g., G), and a split sends $N_{t_L}$ of the $N_t$ samples at node t to the left child $t_L$ and $N_{t_R}$ to the right child $t_R$:

- Gini impurity for a partition:

$$G(t) = 1 - \sum_{c=1}^{C} p_{c\mid t}^{2}$$

- Information gain/impurity reduction during splitting:

$$\Delta I = I(t) - \frac{N_{t_L}}{N_t} I(t_L) - \frac{N_{t_R}}{N_t} I(t_R)$$

Decision trees work by repeatedly dividing data into smaller groups based on specific features, aiming to create groups that are as similar as possible. This process continues until the tree reaches its endpoints, called leaves, where the predictions are made. At each step, the model computes the impurity reduction of candidate splits and selects the most effective one, so that similar items are grouped together [22].
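The impurity measures and the impurity reduction used to score a split can be checked on a toy node with two labels of each class. The function names are hypothetical; the labels reuse the class names of this study only for readability.

```python
import math
from collections import Counter


def gini(labels):
    """Gini impurity G(t) = 1 - sum_c p_c^2 of the labels at a node."""
    n = len(labels)
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def entropy(labels):
    """Entropy in bits, the alternative impurity measure."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def impurity_decrease(parent, left, right, impurity=gini):
    """Delta I = I(t) - N_L/N_t * I(t_L) - N_R/N_t * I(t_R)."""
    n = len(parent)
    return impurity(parent) - len(left) / n * impurity(left) - len(right) / n * impurity(right)


node = ["Bullying", "Bullying", "No Bullying", "No Bullying"]
print(gini(node))                                            # 0.5
print(impurity_decrease(node, node[:2], node[2:]))           # 0.5 (pure children)
print(impurity_decrease(node, node[::2], node[1::2]))        # 0.0 (no improvement)
print(impurity_decrease(node, node[:2], node[2:], entropy))  # 1.0
```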

3.3.4. K-Nearest Neighbors (K-NN)

This lazy learning method assigns a test point to the majority class (or, for regression, the average value) of its k nearest neighbors according to a distance metric. The main equation is the classification rule, where $\mathcal{N}_k(\mathbf{x})$ denotes the k training points closest to $\mathbf{x}$:

- Classification rule:

$$\hat{y}(\mathbf{x}) = \arg\max_{c} \sum_{i \in \mathcal{N}_k(\mathbf{x})} \mathbb{I}(y_i = c)$$

K-Nearest Neighbors is a straightforward machine learning technique that classifies new data points based on the characteristics of their closest neighbors. It examines the k data points closest to the new one and assigns the category held by the majority of those neighbors. The method is considered "lazy" because it does not build a general model during training but instead makes decisions at prediction time using the stored data [23].
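A minimal pure-Python version of the classification rule, using Euclidean distance and hypothetical toy points, is shown below. Because the rule depends on raw distances, features measured on different scales should be standardized first, as the StandardScaler step of the evaluation protocol does.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Majority class among the k training points nearest to x (Euclidean distance)."""
    ranked = sorted(zip(train_X, train_y), key=lambda pair: math.dist(pair[0], x))
    top_k = [label for _, label in ranked[:k]]
    # most_common breaks count ties by first occurrence, i.e. in favor of the nearer label.
    return Counter(top_k).most_common(1)[0][0]


train_X = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["No Bullying"] * 3 + ["Bullying"] * 3
print(knn_predict(train_X, train_y, (0.5, 0.5)))  # No Bullying
print(knn_predict(train_X, train_y, (5.5, 5.5)))  # Bullying
```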

3.3.5. Support Vector Classifier

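This model searches for a hyperplane that maximizes the margin between classes, allowing for violations (slack variables), and uses kernels to separate the classes in higher-dimensional spaces if necessary. One of the main equations is the soft-margin optimization problem:

$$\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \ \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{n} \xi_i \quad \text{s.t.} \quad y_i\left(\mathbf{w}^\top \phi(\mathbf{x}_i) + b\right) \ge 1 - \xi_i, \quad \xi_i \ge 0$$

where C > 0 controls the trade-off between margin width and violations, $\phi$ is the feature map induced by the kernel, and the variables $\xi_i$ are called slack variables and measure the error committed at point $(\mathbf{x}_i, y_i)$.

The Support Vector Classifier is a machine learning technique that attempts to find the best way to separate different groups of data. It does this by looking for a line (or a hyperplane in higher dimensions) that creates the widest possible gap between the groups. The method is flexible: it tolerates some errors and uses kernel functions to handle complex data that might not be separable in its original form [24].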
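For a fixed hyperplane (w, b), the smallest slack values that satisfy the constraints are ξ_i = max(0, 1 − y_i(w·x_i + b)), i.e., the hinge loss. The sketch below computes them and the primal objective for a linear kernel (φ taken as the identity), with labels coded as −1/+1; the hyperplane, points, and function names are hypothetical.

```python
def slack_variables(w, b, X, y):
    """Smallest feasible slacks xi_i = max(0, 1 - y_i (w . x_i + b)), labels y_i in {-1, +1}."""
    slacks = []
    for x_i, y_i in zip(X, y):
        margin = y_i * (sum(w_j * x_j for w_j, x_j in zip(w, x_i)) + b)
        slacks.append(max(0.0, 1.0 - margin))
    return slacks


def soft_margin_objective(w, b, X, y, C=1.0):
    """Primal objective 0.5 * ||w||^2 + C * sum_i xi_i for a fixed (w, b)."""
    return 0.5 * sum(w_j * w_j for w_j in w) + C * sum(slack_variables(w, b, X, y))


w, b = (1.0, 0.0), 0.0
X = [(2.0, 0.0), (0.5, 0.0), (-1.0, 0.0), (0.5, 0.0)]
y = [+1, +1, -1, -1]
print(slack_variables(w, b, X, y))        # [0.0, 0.5, 0.0, 1.5]
print(soft_margin_objective(w, b, X, y))  # 2.5
```

A point with slack between 0 and 1 lies inside the margin but on the correct side; a slack above 1 (the last point) means it is misclassified.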

3.3.6. Linear Discriminant Analysis (LDA)

This method assumes Gaussian classes with the same covariance matrix; it projects the data onto a direction that maximizes the separation between classes relative to the within-class variance [25]. The equations include:

- Two-class solution:

$$\mathbf{w} \propto S_W^{-1}\left(\boldsymbol{\mu}_1 - \boldsymbol{\mu}_0\right)$$

where $S_W$ is the within-class (intra-class) covariance and $\boldsymbol{\mu}_i$ are the class means.
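The two-class solution can be computed directly with NumPy, as sketched below on two synthetic Gaussian clusters with equal covariance (an assumption matching the model's premise; the function name is hypothetical). Solving the linear system avoids forming the inverse of S_W explicitly.

```python
import numpy as np


def fisher_direction(X0, X1):
    """Two-class LDA direction w proportional to S_W^{-1} (mu_1 - mu_0), unit length."""
    mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)
    # Within-class scatter; it differs from the pooled covariance only by a constant
    # factor, which does not change the direction of w.
    S_w = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)
    w = np.linalg.solve(S_w, mu1 - mu0)  # solve S_w w = (mu_1 - mu_0) instead of inverting
    return w / np.linalg.norm(w)


rng = np.random.default_rng(0)
X0 = rng.normal([0.0, 0.0], 1.0, size=(200, 2))
X1 = rng.normal([4.0, 0.0], 1.0, size=(200, 2))
w = fisher_direction(X0, X1)
print("direction:", w.round(3))
print("mean projections:", (X0 @ w).mean().round(2), (X1 @ w).mean().round(2))
```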

3.3.7. Multi-Layer Perceptron (MLP)

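The MLP is a multilayer neural network with nonlinear activation functions; its weights are adjusted by minimizing a loss function using backpropagation (gradient descent through the composition of layers) [26]. The main equations of this method are as follows:

- Forward propagation for layer l:

$$\mathbf{a}^{(l)} = g^{(l)}\left(W^{(l)} \mathbf{a}^{(l-1)} + \mathbf{b}^{(l)}\right), \qquad \mathbf{a}^{(0)} = \mathbf{x}$$

- Loss function (binary cross-entropy):

$$\mathcal{L} = -\frac{1}{n} \sum_{i=1}^{n} \left[ y_i \log \hat{y}_i + (1 - y_i) \log\left(1 - \hat{y}_i\right) \right]$$

where $g^{(l)}$ is the activation function of layer l and $\hat{y}_i$ is the network output for sample i.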
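The forward-propagation equation and the loss can be sketched in NumPy as follows. The architecture (one hidden layer of 8 ReLU units and a sigmoid output), the random weights, the data, and the function names are assumptions for illustration; backpropagation, which computes the gradients of this loss, is omitted.

```python
import numpy as np


def relu(z):
    return np.maximum(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(X, layers):
    """Forward pass a^(l) = g(W^(l) a^(l-1) + b^(l)); columns of X are samples.

    ReLU is used in the hidden layers and a sigmoid at the single output unit.
    """
    a = X
    for l, (W, b) in enumerate(layers):
        z = W @ a + b
        a = sigmoid(z) if l == len(layers) - 1 else relu(z)
    return a


def binary_cross_entropy(y, y_hat, eps=1e-12):
    """Mean binary cross-entropy; clipping avoids log(0)."""
    p = np.clip(y_hat, eps, 1.0 - eps)
    return -np.mean(y * np.log(p) + (1 - y) * np.log(1 - p))


rng = np.random.default_rng(0)
layers = [
    (rng.normal(0, 0.5, (8, 3)), np.zeros((8, 1))),  # hidden layer: 3 inputs -> 8 units
    (rng.normal(0, 0.5, (1, 8)), np.zeros((1, 1))),  # output layer: 8 units -> 1 probability
]
X = rng.normal(size=(3, 4))  # 4 samples with 3 features each
y = np.array([[0, 1, 0, 1]])
y_hat = forward(X, layers)
print(y_hat.shape, binary_cross_entropy(y, y_hat))
```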

3.3.8. XGBoost (Extreme Gradient Boosting)

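XGBoost is an efficient and scalable implementation of tree boosting (an additive tree model), with regularization of the tree functions and practical optimizations (sparsity-aware and cache-aware). It is widely used in competitions and industrial applications. The regularized objective of gradient tree boosting is one of its most relevant equations:

$$\mathcal{L} = \sum_{i} l\left(y_i, \hat{y}_i\right) + \sum_{k} \Omega(f_k), \qquad \Omega(f) = \gamma T + \frac{1}{2} \lambda \lVert \mathbf{w} \rVert^{2}, \qquad \hat{y}_i = \sum_{k=1}^{K} f_k(\mathbf{x}_i)$$

where each $f_k$ is a regression tree, T is the number of leaves of a tree, $\mathbf{w}$ is its vector of leaf weights, and $\gamma$ and $\lambda$ are regularization parameters.

XGBoost is a powerful machine learning technique that combines multiple simple decision trees to create a more accurate model. It incorporates regularization to prevent overfitting and to improve performance, making it particularly effective for complex datasets. XGBoost has gained popularity in both competitive data science challenges and real-world applications owing to its ability to produce highly accurate predictions while maintaining computational efficiency [27].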
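With a second-order approximation of the loss, boosting gives closed forms for the optimal weight of a leaf, w* = −G/(H + λ), and for the gain of a split, where G and H are the sums of the first and second derivatives of the loss over the samples in a leaf. The sketch below evaluates them for the logistic loss on four hypothetical samples; λ and γ play the roles of the regularization terms in Ω(f), and the function names are illustrative.

```python
import math


def logistic_grad_hess(y, margin):
    """First and second derivatives of the log loss w.r.t. the raw score (margin) of one sample."""
    p = 1.0 / (1.0 + math.exp(-margin))
    return p - y, p * (1.0 - p)


def leaf_weight(G, H, lam=1.0):
    """Optimal leaf value w* = -G / (H + lambda)."""
    return -G / (H + lam)


def split_gain(GL, HL, GR, HR, lam=1.0, gamma=0.0):
    """Loss reduction from splitting one leaf into left/right children, minus gamma."""
    def score(G, H):
        return G * G / (H + lam)
    return 0.5 * (score(GL, HL) + score(GR, HR) - score(GL + GR, HL + HR)) - gamma


# Four samples, all starting at margin 0 (p = 0.5); the first two are positive.
labels = [1, 1, 0, 0]
grads = [logistic_grad_hess(y, 0.0) for y in labels]
GL, HL = sum(g for g, _ in grads[:2]), sum(h for _, h in grads[:2])
GR, HR = sum(g for g, _ in grads[2:]), sum(h for _, h in grads[2:])
print(leaf_weight(GL, HL), leaf_weight(GR, HR))  # about +0.667 and -0.667
print(split_gain(GL, HL, GR, HR))                # about 0.667
```

A larger γ makes splits with small gains unprofitable (negative gain), which is how the γT term in Ω(f) prunes trees.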

SHapley Additive ExPlanations (SHAP)

The SHAP method uses Shapley values (derived from game theory) to attribute to each feature its fair contribution to the prediction of a model f. It produces a local additive explanation model of the form:

$$g(z') = \phi_0 + \sum_{i=1}^{M} \phi_i z'_i$$

where $z' \in \{0, 1\}^M$, M is the number of simplified input features, and $\phi_i \in \mathbb{R}$ [28].

SHAP is a technique that helps explain how different factors contribute to a model's prediction. It borrows ideas from game theory to determine how much each feature influences the final result, and it produces a simple explanation in which the contributions of the individual factors add up to the model's output [28]. In future implementations, SHAP could also be used to help determine which model yields the best predictions.
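The Shapley values behind this additive model can be computed exactly for a tiny model by enumerating all feature subsets, which makes the additivity property visible: the φ_i sum to f(x) minus the baseline prediction φ_0. This is a didactic sketch; representing "absent" features by a fixed baseline is an assumption, the model is hypothetical, and the 2^M cost of enumeration is why tree-specific algorithms such as the one behind TreeExplainer are used in practice.

```python
from itertools import combinations
from math import factorial


def exact_shapley(f, x, baseline):
    """Exact Shapley values of f at x; 'absent' features take their baseline value.

    Cost grows as 2^M, so this is only feasible for a handful of features.
    """
    M = len(x)

    def value(present):
        return f([x[i] if i in present else baseline[i] for i in range(M)])

    phi = []
    for i in range(M):
        others = [j for j in range(M) if j != i]
        total = 0.0
        for size in range(M):
            weight = factorial(size) * factorial(M - size - 1) / factorial(M)
            for S in combinations(others, size):
                total += weight * (value(set(S) | {i}) - value(set(S)))
        phi.append(total)
    return phi


def model(z):  # hypothetical model with an interaction between features 1 and 2
    return 2 * z[0] + 3 * z[1] * z[2]


x, base = [1, 1, 1], [0, 0, 0]
phi = exact_shapley(model, x, base)
print([round(p, 10) for p in phi])                     # [2.0, 1.5, 1.5]
print(round(sum(phi), 10), model(x) - model(base))     # 5.0 5
```

The interaction term is split equally between features 1 and 2, as the symmetry property of Shapley values requires.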

To train the supervised learning models, the target was encoded as two classes, No Bullying and Bullying, as shown in Table 3.

3.4. Metrics

The following evaluation metrics were used.

Precision: proportion of true positives out of all positive predictions.

Sensitivity (recall): proportion of true positives out of all actual positive cases.

F1-score: harmonic mean of precision and recall, which balances both metrics.

Accuracy: overall proportion of correct predictions.

Specificity: proportion of actual negative cases that the algorithm classifies correctly; it expresses how well the model detects the negative class.

The ROC curve is a graphical representation that evaluates the performance of a binary classification model. It plots sensitivity (true positive rate) on the Y-axis against 1 − specificity (false positive rate) on the X-axis as the classification threshold applied to a continuous score is varied. The area under the curve (AUC) quantifies the model's ability to distinguish between the two classes analyzed (e.g., Bullying vs. No Bullying). An AUC of 1.0 indicates perfect separation between the classes, while a value of 0.5 reflects chance-level performance [29].
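These definitions can be computed from the four cells of a binary confusion matrix, and the AUC can be obtained without drawing the curve as the probability that a randomly chosen positive receives a higher score than a randomly chosen negative (the normalized Mann–Whitney U statistic). The counts, scores, and function names below are hypothetical.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Precision, recall, specificity, accuracy, and F1 from a binary confusion matrix."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)            # sensitivity, true positive rate
    specificity = tn / (tn + fp)       # true negative rate
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "specificity": specificity,
            "accuracy": accuracy, "f1": f1}


def roc_auc(y_true, scores):
    """AUC = P(random positive scores above random negative); ties count 1/2."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(confusion_metrics(tp=45, fp=5, fn=10, tn=40))
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```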

3.5. Analysis of the Importance of Variables and Their Correlation with Prediction

Variables prefixed with "cbp" and "cbv" (e.g., cbp2, cbp1, cbv1, cbv2, and cbv10) were identified as the most influential in predicting the bullying class with the Random Forest model (see Figure 5). Given the naming convention (cbp* denotes perpetration questions and cbv* denotes victimization questions), responses indicating higher levels of perpetration or victimization raise the predicted probability that an observation is classified as bullying. For instance, cbp2 had the highest SHAP importance, and the positive correlation between its values and its SHAP values (approximately +0.74) indicates that higher values of cbp2 substantially increased its contribution to the bullying prediction score. Similarly, cbv1 and cbv2 exhibited high positive correlations, with higher values for these questions driving the prediction toward the positive class. Conversely, certain features (e.g., pbjw7 and sef6) showed negative correlations with their SHAP values (pbjw7: r ≈ −0.63; sef6: r ≈ −0.79), meaning that higher values for these questions lowered the probability of bullying assigned by the model. Depending on the content of these questions (a review of the questionnaire is recommended), they may correspond to protective factors or constructs inversely associated with the probability of bullying, as identified by the model.
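The importance ranking and the correlations between feature values and SHAP values discussed in this section can be summarized as sketched below. The sketch assumes a hypothetical `shap_values` array of shape (n_samples, n_features) holding the positive-class SHAP values (for example, extracted from the output of SHAP's `TreeExplainer`) aligned with the feature matrix `X`; the synthetic data and feature names are placeholders, not results of this study.

```python
import numpy as np


def shap_summary(X, shap_values, feature_names):
    """Rank features by mean |SHAP| and report Pearson corr(feature value, SHAP value)."""
    mean_abs = np.abs(shap_values).mean(axis=0)
    rows = []
    for j, name in enumerate(feature_names):
        # The sign of r gives the direction in which the feature pushes the prediction.
        r = np.corrcoef(X[:, j], shap_values[:, j])[0, 1]
        rows.append((name, float(mean_abs[j]), float(r)))
    return sorted(rows, key=lambda row: row[1], reverse=True)


rng = np.random.default_rng(0)
X = rng.random((200, 3))
shap_values = X * np.array([2.0, -1.0, 0.1])  # synthetic stand-in for real SHAP output
for name, importance, r in shap_summary(X, shap_values, ["f_pos", "f_neg", "f_weak"]):
    print(f"{name}: mean|SHAP| = {importance:.3f}, corr = {r:+.2f}")
```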

4. Results

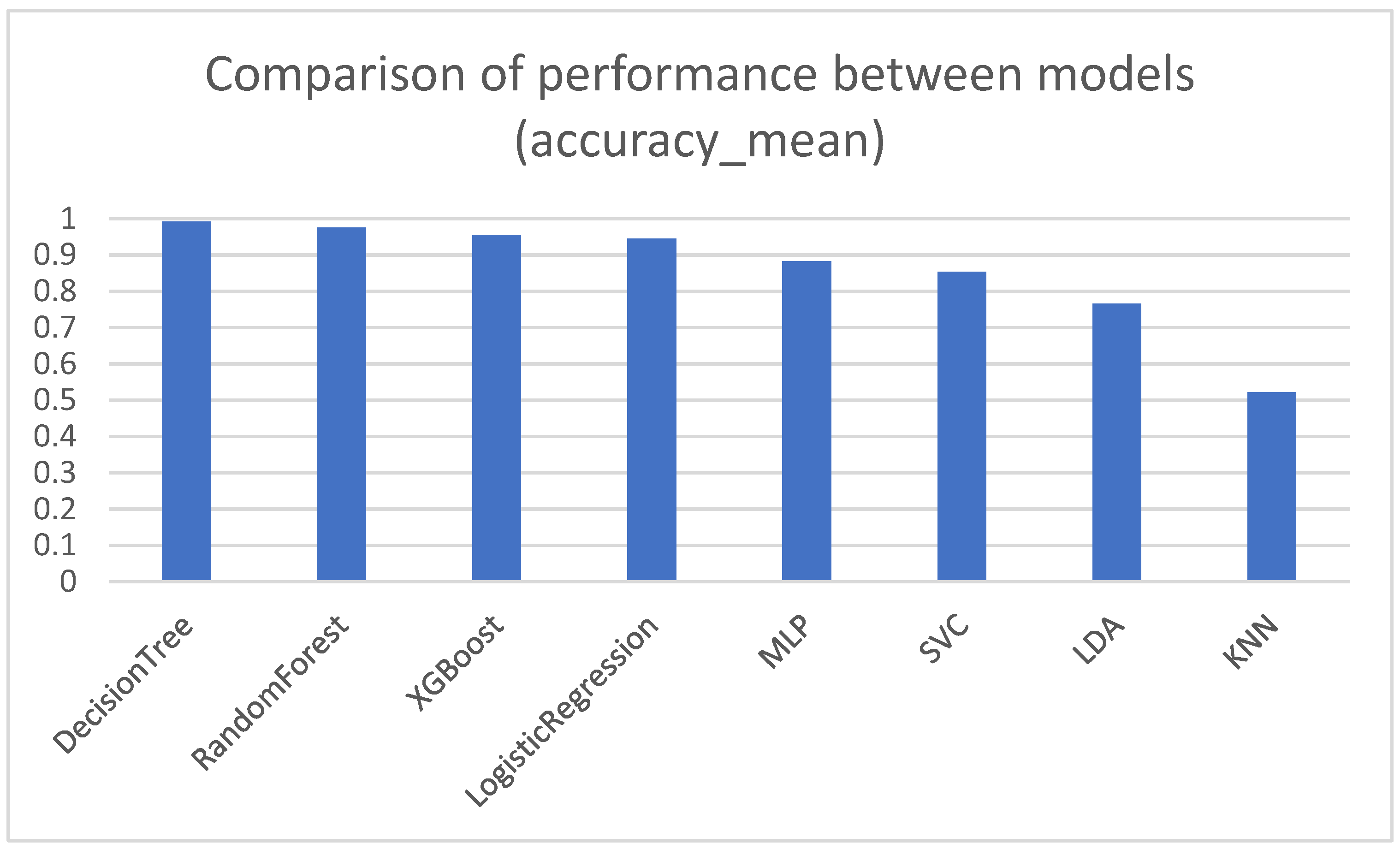

Eight classification models were evaluated to detect cyberbullying in a sample of university students. The models were compared using standard evaluation metrics (precision, recall, F1 score, and accuracy), as shown in Figure 3 and Table 4.

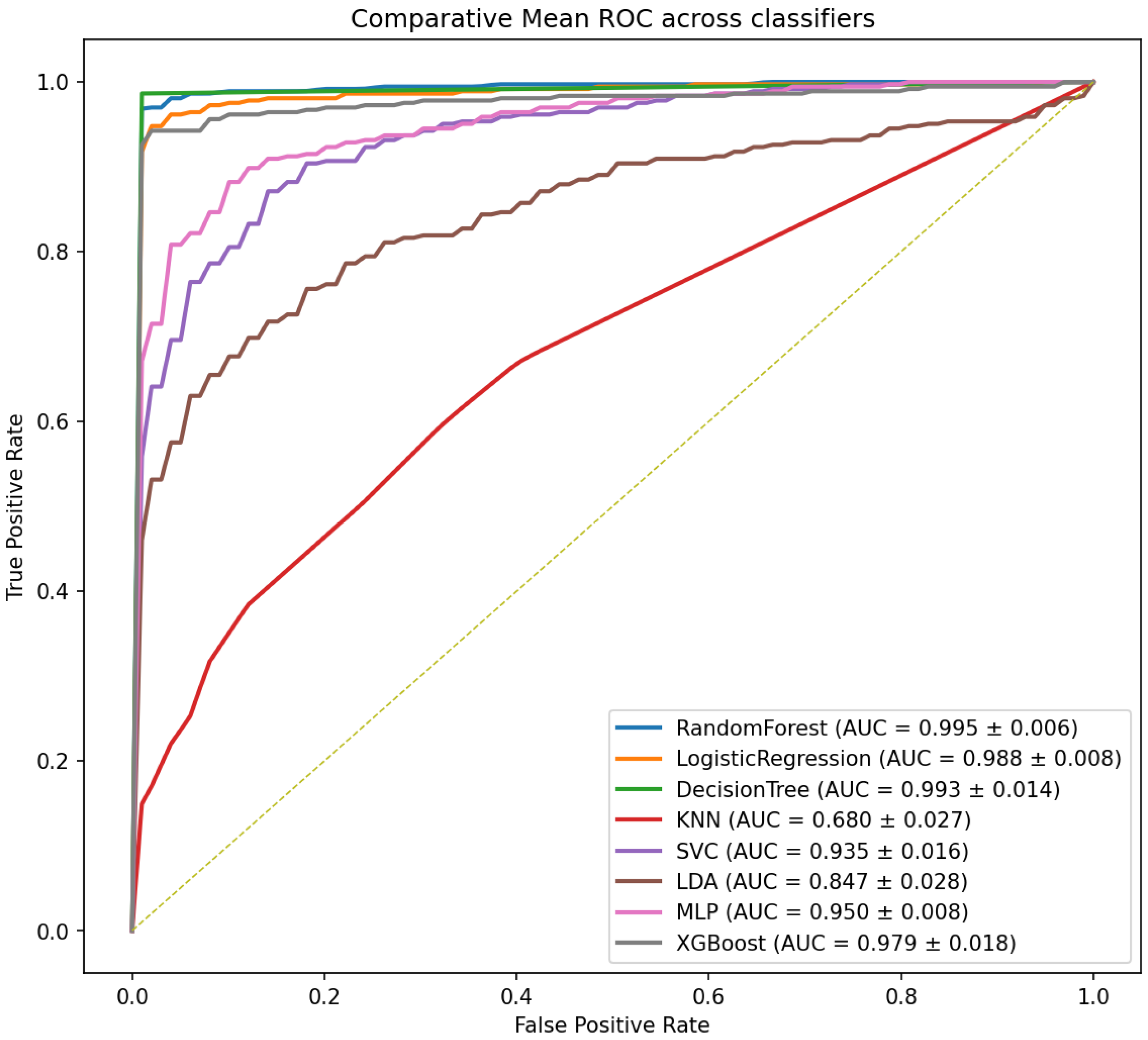

For the F1 metric, the Friedman test [30] showed overall differences among the eight classifiers evaluated (χ² = 33.923, p = 1.8 × 10⁻⁵). Similarly, for ROC-AUC (Figure 4), the Friedman test was significant (χ² = 32.106, p = 3.9 × 10⁻⁵). However, after pairwise Wilcoxon comparisons with Holm correction, no pairwise comparison reached significance (p < 0.05). In terms of practical magnitude, effect sizes were also quantified using paired Cohen's d [31,32]. This combination of results suggests the presence of overall differences between models; however, the lack of significant pairs after correction indicates that individual differences are not robust given the small number of folds (k = 5).

SHAP values computed with TreeExplainer on the Random Forest model were used to elucidate the contribution of each feature to the prediction of bullying. Ordered by mean SHAP value, the most influential variables were cbp2, cbv1, cbv2, cbp1, and cbp10, as illustrated in Figure 5. In general, responses indicating higher levels of perpetration or victimization were associated with a higher probability of bullying. Conversely, certain variables (e.g., pbjw7 and sef6) were negatively associated with the prediction, suggesting that they may function as protective factors or be inversely related to the bullying label in this dataset.

The Decision Tree model demonstrated superior performance, achieving an average accuracy of 99.1% with exceptional evaluation metrics: precision of 100%, recall of 98.6%, and F1-score of 99.2%. These metrics indicate the model’s high efficacy in accurately classifying both the positive and negative instances of cyberbullying. Furthermore, the area under the ROC curve (ROC-AUC) of 0.993 corroborates its substantial predictive capability, rendering it a reliable tool for the development of preventive monitoring systems within educational contexts.

The Random Forest model also performed well, with an average accuracy of 97.6%, precision of 99.2%, and F1-score of 97.9%. This performance, together with its ROC-AUC of 0.995, makes it one of the most reliable and consistent alternatives for predicting cyberbullying.

The XGBoost model ranked third and performed competitively, achieving an average accuracy of 95.4%, a precision of 99.1%, a recall of 93.1%, and an F1-score of 96%, with an ROC-AUC of 0.979. These metrics reflect a favorable balance between sensitivity and specificity, which makes the model appealing in scenarios where minimizing false negatives is crucial.

Logistic Regression demonstrated robust performance with an accuracy of 94.5%, precision of 98.9%, recall of 91.8%, and F1-score of 95.1%. Additionally, its ROC-AUC of 0.988 indicates that despite its simplicity, this model yields highly competitive results.

Conversely, the Multilayer Perceptron (MLP) model exhibited moderate performance, with an accuracy of 88.3% and an F1-score of 89.8%, reflecting acceptable performance, but inferior to decision tree-based methods. In contrast, the SVC model yielded more limited results, with an accuracy of 85.4% and an F1-score of 86.9%. Although its precision was relatively high (91.8%), its recall of 82.7% suggests some challenges in detecting positive cyberbullying cases.

Linear Discriminant Analysis (LDA) demonstrated intermediate performance, with an accuracy of 76.6%, a precision of 90%, a recall of 68.2%, and an F1-score of 77.4%, indicating a higher false-negative rate compared to the more robust models.

Finally, the KNN model performed the worst, with an average accuracy of 52.2% and an F1-score of 35.8%, primarily owing to its low recall (22.5%). This suggests that the model encountered significant difficulties in adequately identifying positive cyberbullying cases.

In summary, the results indicate that the Decision Tree and Random Forest models are the most effective for this dataset, with metrics exceeding 97% in all categories and an excellent ROC curve performance. Notably, the Decision Tree has emerged as the most suitable option owing to its high capacity to accurately identify both cyberbullying and non-bullying cases, making it a valuable tool for the prevention and early detection of this phenomenon in educational institutions. Within the framework of institutional strategies to prevent and mitigate cyberbullying, this model possesses features that can be integrated into a support tool for early detection and timely intervention. However, it is recommended to validate these results using independent data and expand the dataset to ensure the robustness of the model in real-world and diverse scenarios.

5. Discussion

The results showed that tree-based models, particularly Decision Tree and Random Forest, achieved very high performance on conventional metrics (accuracy, precision, recall, F1, and ROC-AUC), with the Decision Tree obtaining the highest mean value in our experiment (see Table 3). However, this apparent superiority requires some qualification. Although the Friedman test indicated overall differences between the classifiers for F1 and ROC-AUC ($\chi^2_{\text{F1}} = 33.923$, $p = 1.8 \times 10^{-5}$; $\chi^2_{\text{ROC-AUC}} = 32.106$, $p = 3.9 \times 10^{-5}$), Holm-corrected pairwise Wilcoxon comparisons did not identify any statistically significant pair after controlling for multiple comparisons. This pattern suggests that, although there is evidence of overall differences between the models, the pairwise differences are not sufficiently robust under the current evaluation design (k = 5 folds), which calls for caution before declaring an outright winner.
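For readers less familiar with the Friedman test: it ranks the classifiers within each fold (block) and asks whether the rank sums differ more than chance would allow, referring the statistic to a $\chi^2$ distribution with $k - 1$ degrees of freedom, where $k$ is the number of classifiers. The sketch below computes the statistic from a fold-by-classifier score table using made-up F1 values; it omits the tie correction, whereas SciPy's `scipy.stats.friedmanchisquare` applies one and also returns the p-value. It illustrates the test, not the authors' exact code.

```python
def average_ranks(scores: list[float]) -> list[float]:
    """Rank classifiers within one fold: 1 = highest score; tied scores share the average rank."""
    order = sorted(range(len(scores)), key=lambda j: -scores[j])
    ranks = [0.0] * len(scores)
    start = 0
    while start < len(order):
        end = start
        # Extend the run of tied scores.
        while end + 1 < len(order) and scores[order[end + 1]] == scores[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # convert 0-based positions to 1-based ranks
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def friedman_statistic(score_table: list[list[float]]) -> float:
    """Friedman chi-square statistic without tie correction.

    score_table[b][j] is the score (e.g. F1) of classifier j on fold b.
    Under H0 it is approximately chi-square with k - 1 degrees of freedom.
    """
    n = len(score_table)     # blocks = CV folds
    k = len(score_table[0])  # treatments = classifiers
    rank_sums = [0.0] * k
    for fold_scores in score_table:
        for j, rank in enumerate(average_ranks(fold_scores)):
            rank_sums[j] += rank
    return 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)


# Hypothetical F1 scores: 5 folds x 3 classifiers.
table = [
    [0.98, 0.95, 0.88],
    [0.97, 0.96, 0.90],
    [0.99, 0.94, 0.87],
    [0.96, 0.97, 0.89],
    [0.98, 0.95, 0.91],
]
print(round(friedman_statistic(table), 3))  # 8.4
```

A significant Friedman result only says that the classifiers are not all equivalent; identifying which pairs differ requires the post hoc pairwise tests discussed in the text.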

The SHAP interpretability analysis identified the questions related to victimization and perpetration (items cbv* and cbp*, respectively) as the most influential for predicting the bullying label. The positive correlation between the scores on these items and their SHAP values indicated that higher responses pushed the model's output toward the positive class. In contrast, some psychological scales (e.g., pbjw7 and sef6) showed negative correlations, suggesting that certain traits or beliefs may act as protective factors or be inversely related to the estimated probability of cyberbullying. These observations are consistent with previous theories and findings linking experiences of victimization and perpetration to the likelihood of involvement in cyberbullying episodes.

This study also has limitations. Using SMOTE to balance the classes mitigates imbalance; however, it introduces synthetic observations that can distort the true population distribution and, in some cases, inflate metrics if the models are not externally validated. In addition, with k = 5, each pairwise test has only five paired observations (one per fold), which reduces the statistical power of the tests and partly explains the absence of significant pairs after correction for multiple comparisons. Finally, the sample came from German universities and may contain cultural or contextual biases; generalization to other populations or settings requires external validation.
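The effect of having only five paired fold scores can be made concrete. Under the exact Wilcoxon signed-rank test, the null distribution gives equal probability to each of the $2^5 = 32$ sign patterns, so the smallest attainable two-sided p-value is $2/32 = 0.0625$, reached only when one classifier beats the other in every fold. If the exact test was used, no pair could therefore fall below 0.05 even before the Holm correction. The sketch below, with made-up scores and hypothetical function names, computes the exact p-value by enumeration and applies the Holm step-down adjustment; it assumes no zero or tied differences, which a full implementation (e.g. `scipy.stats.wilcoxon`) would handle.

```python
from itertools import product


def wilcoxon_exact_p(x: list[float], y: list[float]) -> float:
    """Exact two-sided Wilcoxon signed-rank p-value for paired fold scores.

    Sketch assumptions: no zero differences and no tied |differences|.
    """
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    ranks = [0] * n
    for rank, i in enumerate(sorted(range(n), key=lambda j: abs(d[j])), start=1):
        ranks[i] = rank
    total = n * (n + 1) // 2
    w_plus = sum(r for r, di in zip(ranks, d) if di > 0)
    observed = min(w_plus, total - w_plus)
    # Under H0 each of the 2**n sign patterns over ranks 1..n is equally likely.
    extreme = 0
    for signs in product((0, 1), repeat=n):
        w = sum(r for r, s in zip(range(1, n + 1), signs) if s)
        if min(w, total - w) <= observed:
            extreme += 1
    return extreme / 2 ** n


def holm_adjust(pvalues: list[float]) -> list[float]:
    """Holm step-down adjusted p-values, returned in the original order."""
    m = len(pvalues)
    adjusted = [0.0] * m
    running_max = 0.0
    for step, i in enumerate(sorted(range(m), key=lambda j: pvalues[j])):
        running_max = max(running_max, min(1.0, (m - step) * pvalues[i]))
        adjusted[i] = running_max
    return adjusted


# Best case for five folds: classifier A beats B in every fold (hypothetical scores).
a = [0.99, 0.98, 0.97, 0.99, 0.96]
b = [0.90, 0.92, 0.89, 0.95, 0.91]
p = wilcoxon_exact_p(a, b)
print(p)                         # 0.0625 = 2 / 2**5
print(holm_adjust([p] * 21)[0])  # 1.0, e.g. for 21 pairwise comparisons among 7 classifiers
```

Repeated cross-validation (e.g. 5 × 5) would increase the number of paired scores, although repeated folds are not independent, so corrected tests are usually preferred in that setting.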

In terms of practical applications, the models could serve as human-in-the-loop support systems for early detection, that is, as automatic recommendations and filters that alert guidance teams, not as autonomous decision-making tools. Operational use should include oversight by psychology or student-services professionals, clear review procedures, and privacy and consent protocols.
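One way to encode the "alert, don't decide" principle is to let the model output only a review queue entry. The sketch below is entirely hypothetical (class names, the threshold, and the consent flag are illustrative assumptions, not part of the study): cases without consent or below the threshold are dropped, and flagged cases are queued with their score and the most influential items so that a professional can review them. Nothing in the code triggers an intervention.

```python
from dataclasses import dataclass, field


@dataclass
class ReviewItem:
    case_ref: str             # pseudonymous reference, not a student's identity
    score: float              # model probability for the cyberbullying class
    top_features: list[str]   # e.g. items with the largest |SHAP| values, shown to the reviewer


@dataclass
class ReviewQueue:
    """Hypothetical triage step: the model only proposes cases; professionals decide."""
    threshold: float = 0.5
    items: list[ReviewItem] = field(default_factory=list)

    def triage(self, case_ref: str, score: float, top_features: list[str], consented: bool) -> bool:
        # No consent or a low score: nothing is stored and nothing is triggered.
        if not consented or score < self.threshold:
            return False
        # Otherwise the case is queued for human review; no automatic action is taken here.
        self.items.append(ReviewItem(case_ref, score, top_features))
        return True


queue = ReviewQueue(threshold=0.7)
queue.triage("case-001", 0.91, ["cbv3", "cbp2"], consented=True)
queue.triage("case-002", 0.95, ["cbv1"], consented=False)
queue.triage("case-003", 0.40, [], consented=True)
print(len(queue.items))  # 1
```

The threshold trades reviewer workload against missed cases and would need to be set with the guidance team, ideally using calibrated probabilities.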

6. Conclusions

The main objective of this study was to evaluate the suitability and structural quality of a survey-based dataset on cyberbullying among university students for use in supervised machine learning systems, and to compare the predictive performance and interpretability of several standard classifiers on that dataset. To that end, we curated and documented a clean version of the Cyber Bullying among University Students dataset (n = 615, originally 100 variables; 98 variables used for modeling), defined a clear target variable that combines victimization and perpetration items (cbv1–cbv11 and cbp1–cbp11), and implemented a reproducible modeling pipeline that addresses missing data, categorical encoding, class imbalance (SMOTE applied only to training folds), feature scaling, stratified k-fold evaluation (k = 5), and model interpretability (SHAP).
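The key safeguard in this pipeline is that oversampling happens inside each training split, so no synthetic row derived from a test respondent can leak into evaluation. The following minimal sketch illustrates that ordering with a simplified, pure-Python SMOTE-style interpolation and a round-robin stratified split; it is not the imbalanced-learn implementation, and all function names are hypothetical. In practice, placing `imblearn.over_sampling.SMOTE` inside an `imblearn.pipeline.Pipeline` achieves the same effect, because the resampling step runs only when the pipeline is fitted on training data.

```python
import random


def stratified_folds(labels: list[int], k: int, seed: int = 0) -> list[list[int]]:
    """Assign indices to k folds class by class (round-robin) so each fold keeps the class ratio."""
    rng = random.Random(seed)
    folds: list[list[int]] = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        members = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(members)
        for pos, i in enumerate(members):
            folds[pos % k].append(i)
    return folds


def smote_like(minority: list[list[float]], n_new: int, k_neighbors: int = 5,
               seed: int = 0) -> list[list[float]]:
    """SMOTE-style synthesis (needs >= 2 minority rows): interpolate towards a nearest minority neighbour."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        b = rng.randrange(len(minority))
        base = minority[b]
        others = [row for j, row in enumerate(minority) if j != b]
        others.sort(key=lambda row: sum((u - v) ** 2 for u, v in zip(base, row)))
        neighbour = rng.choice(others[:k_neighbors])
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append([u + gap * (v - u) for u, v in zip(base, neighbour)])
    return synthetic


def cv_splits_with_train_only_oversampling(X, y, k=5, seed=0):
    """Yield (X_train, y_train, X_test, y_test); synthetic rows are added to X_train only."""
    for f, test_idx in enumerate(stratified_folds(y, k, seed)):
        test_set = set(test_idx)
        X_train = [X[i] for i in range(len(y)) if i not in test_set]
        y_train = [y[i] for i in range(len(y)) if i not in test_set]
        counts = {c: y_train.count(c) for c in set(y_train)}
        minority_cls = min(counts, key=counts.get)
        n_new = max(counts.values()) - counts[minority_cls]
        minority = [row for row, lab in zip(X_train, y_train) if lab == minority_cls]
        X_train += smote_like(minority, n_new, seed=seed + f)
        y_train += [minority_cls] * n_new
        # The scaler and classifier would be fitted on (X_train, y_train) only;
        # the test fold keeps its original, untouched rows.
        yield X_train, y_train, [X[i] for i in test_idx], [y[i] for i in test_idx]


# Hypothetical imbalanced toy data: 10 positive and 30 negative respondents, two features.
X = [[float(i), float(i % 7)] for i in range(40)]
y = [1 if i < 10 else 0 for i in range(40)]
for X_tr, y_tr, X_te, y_te in cv_splits_with_train_only_oversampling(X, y, k=5):
    print(len(X_tr), y_tr.count(1), y_tr.count(0), len(X_te), sum(y_te))
```

Each test fold keeps the original 1:3 class ratio, while each training split is balanced with synthetic minority rows.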

From the modeling experiments, the tree-based methods (Decision Tree and Random Forest) yielded the highest predictive scores on this dataset, while the other methods (Logistic Regression, MLP, SVC, LDA, and KNN) showed varied performance profiles. However, the statistical comparison across classifiers (Friedman test; paired Wilcoxon tests with Holm correction) indicates that, although there are global differences, no pairwise difference remained significant after multiple-comparison correction, a result likely influenced by the CV design (k = 5) and the finite sample size. Model performance must therefore be interpreted with caution: exceptionally high metrics for a given classifier may reflect dataset idiosyncrasies (informative overlap and redundancy among the cbv/cbp items) rather than generalizable superiority.

Interpretability analyses using SHAP identified the cbv* and cbp* items (victimization and perpetration) as the primary drivers of the predictions. Several psychological scales (e.g., some perceived justice and self-efficacy items) were negatively associated with the predicted bullying probability, suggesting potential protective factors. These findings align with the theoretical literature and help explain what the models have learned, supporting the use of explainability as part of any deployment pipeline.
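The two quantities behind these statements can be separated: global importance (mean absolute SHAP value per item) and direction (the correlation between an item's response values and its SHAP values, positive for risk-like items and negative for protective-looking ones). The sketch below assumes that a respondents-by-features matrix of SHAP values has already been computed (for example with the `shap` package) and uses Pearson correlation for the direction; the choice of Pearson, the helper names, and all numbers are illustrative assumptions.

```python
from math import sqrt


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation; returns 0.0 if either input is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def shap_summary(features: dict[str, list[float]],
                 shap_values: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Per feature: (mean |SHAP| = global importance, corr(feature value, SHAP value) = direction)."""
    summary = {}
    for name, values in features.items():
        contributions = shap_values[name]
        importance = sum(abs(s) for s in contributions) / len(contributions)
        summary[name] = (importance, pearson(values, contributions))
    # Sort by importance, most influential first.
    return dict(sorted(summary.items(), key=lambda kv: -kv[1][0]))


# Toy responses of four respondents and hypothetical SHAP values for two items.
features = {"cbv1": [1, 2, 3, 4], "sef6": [1, 2, 3, 4]}
shap_vals = {"cbv1": [-0.30, -0.05, 0.20, 0.45], "sef6": [0.08, 0.03, -0.02, -0.07]}
for item, (importance, direction) in shap_summary(features, shap_vals).items():
    print(item, round(importance, 3), round(direction, 2))
```

A negative direction describes what the model learned, not a causal protective effect; that interpretation still rests on the theoretical literature.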

Finally, while the dataset and pipeline provide a solid basis for exploratory and methodological work on automated cyberbullying detection, we recommend the following before operational deployment: (a) validation on independent external samples, including data from other institutions or cultural contexts, (b) the use of repeated cross-validation or nested CV when hyperparameter selection is introduced, (c) careful monitoring of fairness across subgroups, and (d) human-in-the-loop review for any intervention triggered by automated detection. In summary, this study contributes both a documented, model-ready dataset and a reproducible evaluation framework that advance the methodological foundations for responsible cyberbullying detection at the university level.

7. Future Works

To strengthen this work, future research should pursue the following lines:

External validation: Evaluate the models on datasets from other universities or on samples collected in different cultural contexts to verify their robustness and generalizability.

Repeated cross-validation and nested validation: Perform repeated CV (e.g., 5 × 5) and/or nested validation to optimize hyperparameters and obtain more stable uncertainty estimates.

Calibration assessment: Check probabilistic calibration (calibration plots and Brier score) to ensure that the predicted probabilities accurately reflect the observed frequency.

Bias and fairness audit: Analyze performance by subgroups (gender, age, major) and apply fairness metrics to detect and correct bias.

Multivariate/multimodal models: Integrate textual (if messages/content are available), temporal, or social network information with psychometric data to improve sensitivity and better explain the dynamics of the phenomenon.

Longitudinal studies: If possible, data should be collected at multiple points in time to study temporal predictors and develop models capable of early detection and prognosis.

Pilot implementation with human supervision: Deploy a prototype in a controlled environment (e.g., a department or guidance service) to measure the actual impact, false-alarm rate, and operational burden.
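Two of the checks listed above, calibration assessment and a subgroup audit, reduce to simple computations on held-out predictions. The sketch below computes the Brier score, an equal-width reliability table (mean predicted probability versus observed positive rate per bin), and recall for the cyberbullying class within each subgroup. The predictions and group labels are invented, and the function names are hypothetical; scikit-learn offers `sklearn.metrics.brier_score_loss` and `sklearn.calibration.calibration_curve` for the first two.

```python
def brier_score(y_true: list[int], p_pred: list[float]) -> float:
    """Mean squared gap between predicted probability and the 0/1 outcome (lower is better)."""
    return sum((p - y) ** 2 for y, p in zip(y_true, p_pred)) / len(y_true)


def calibration_bins(y_true: list[int], p_pred: list[float], n_bins: int = 5):
    """Equal-width bins: (mean predicted probability, observed positive rate, count) per non-empty bin."""
    bins: list[list[tuple[int, float]]] = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, p_pred):
        bins[min(int(p * n_bins), n_bins - 1)].append((y, p))  # p == 1.0 goes to the last bin
    return [
        (sum(p for _, p in b) / len(b), sum(y for y, _ in b) / len(b), len(b))
        for b in bins if b
    ]


def recall_by_group(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict[str, float]:
    """Recall for the cyberbullying class within each subgroup (e.g. gender, age band, major)."""
    hits: dict[str, int] = {}
    positives: dict[str, int] = {}
    for y, y_hat, g in zip(y_true, y_pred, groups):
        if y == 1:
            positives[g] = positives.get(g, 0) + 1
            hits[g] = hits.get(g, 0) + int(y_hat == 1)
    return {g: hits[g] / positives[g] for g in positives}


# Hypothetical held-out predictions for six respondents.
y_true = [1, 1, 0, 1, 0, 1]
p_pred = [0.92, 0.35, 0.10, 0.81, 0.55, 0.66]
y_pred = [int(p >= 0.5) for p in p_pred]
groups = ["f", "f", "f", "m", "m", "m"]

print(round(brier_score(y_true, p_pred), 4))
print(calibration_bins(y_true, p_pred))
print(recall_by_group(y_true, y_pred, groups))  # {'f': 0.5, 'm': 1.0}
```

With a real test set, bins would need enough respondents each to be informative, and subgroup gaps should be reported with their sample sizes.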

8. Limitations/Generalizability

Generalizability and cultural limitations. This study was based on a single survey dataset collected from German university students. Consequently, our results reflect the patterns and response distributions of this specific population and may not generalize directly to student populations in other countries, languages, or cultural contexts. Cultural differences can affect both the prevalence and expression of cyberbullying behaviors and the interpretation of questionnaire items, producing different response styles (e.g., acquiescence, social desirability) and factor structures. To guard against over-optimistic conclusions, we applied stratified cross-validation, SMOTE only within the training folds, and stability checks (repeated CV and bootstrap confidence intervals). However, we emphasize that external validation on independently collected samples (other universities, countries, or translated survey waves) and formal tests of measurement invariance (e.g., configural, metric, and scalar invariance across groups) are required to establish transportability. Until such validation is performed, any operational deployment should be local, human-supervised, and preceded by fairness audits across demographic subgroups.
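As an illustration of one such stability check, the sketch below computes a percentile bootstrap confidence interval for a classifier's mean score. The text does not state what was resampled (fold scores or test-set predictions), so this sketch assumes fold-level scores, and the values are invented. With only five fold scores there are at most 126 distinct resamples (multisets of size 5), so such an interval is coarse and ignores variability within folds; resampling individual test predictions would usually give a more informative interval.

```python
import random
from statistics import mean


def bootstrap_ci(values: list[float], n_boot: int = 2000, alpha: float = 0.05,
                 seed: int = 0) -> tuple[float, float]:
    """Percentile bootstrap confidence interval for the mean of `values`."""
    rng = random.Random(seed)
    n = len(values)
    # Resample with replacement and record the mean of each resample.
    boot_means = sorted(mean(rng.choices(values, k=n)) for _ in range(n_boot))
    lo = boot_means[int(round(alpha / 2 * n_boot))]
    hi = boot_means[int(round((1 - alpha / 2) * n_boot)) - 1]
    return lo, hi


# Hypothetical per-fold F1 scores for one classifier (k = 5).
fold_f1 = [0.95, 0.97, 0.96, 0.98, 0.94]
print(bootstrap_ci(fold_f1))
```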