1. Introduction

Against the backdrop of global energy transition and the urgent need to address environmental challenges, accelerating the integration of renewable energy has become a critical strategy for sustainable development. However, the inherent uncertainty of renewable energy sources such as wind power introduces significant complexities to power system scheduling, posing challenges to the stable and economic operation of the grid [1]. Effectively accommodating fluctuations in renewable energy output while coordinating multiple resources (conventional generators, energy storage systems, and demand response programs) to achieve optimal interaction remains a key issue in modern power system operation.

To tackle these challenges, artificial intelligence (AI) methods, especially deep reinforcement learning (DRL), have emerged as promising alternatives to traditional model-based approaches, thanks to their data-driven adaptability in handling dynamic uncertainties without over-reliance on precise physical models [2]. Recent explorations of AI in real-time optimal power flow [3] not only expand its application scope in power systems but also provide technical references for AI-driven scheduling optimization.

Research on optimal power system scheduling has received extensive attention. For microgrid scenarios involving multi-energy resources, reference [4] proposed a day-ahead economic optimal scheduling method considering time-of-use electricity prices. At the building scale, reference [5] developed a day-ahead optimization model for smart homes with photovoltaic–thermal (PVT) systems, integrating battery/boiler storage and load scheduling to balance cost and comfort. Extending to integrated energy systems, reference [6] proposed a data-driven distributed robust self-scheduling method for integrated energy production units participating in day-ahead electricity–gas joint markets. Reference [7] constructed a battery charging–discharging model and derived an economic scheduling strategy for systems with energy storage using dynamic programming. Reference [8] characterized the uncertainty of new energy generation based on fuzzy sets, adopted a distributed robust optimization method to handle chance constraints, and managed the energy of islanded microgrids. Reference [9] addressed the uncertainty in renewable energy and load forecasting by building a two-stage robust optimization model with uncertainty adjustment parameters, which was solved using the column-and-constraint generation algorithm. Reference [10] incorporated wind power uncertainty into the uncertainty set, iteratively solved the adaptive robust optimization model using the column-and-constraint generation algorithm, and ensured the feasibility of the scheduling strategy through a two-level robustness solution. However, most of these studies are confined to day-ahead scheduling and fail to consider real-time fluctuations or prediction errors. More critically, they rely heavily on precise physical models, and their computational efficiency declines sharply as system scale expands (e.g., multi-source energy storage integration), making them unsuitable for dynamic intra-day scenarios.

To better adapt to the dynamic characteristics of renewable energy and load fluctuations across different time horizons, research has increasingly focused on multi-time scale scheduling frameworks. Reference [11] proposed a two-stage scheduling model for integrated energy systems (IESs) based on distributionally robust adaptive MPC, improving day-ahead–intra-day coordination via dual-loop feedback. Reference [12] considered the participation of distributed renewable energy and distributed energy storage in microgrid scheduling, establishing an intra-day optimal scheduling model for active distribution networks based on improved deep reinforcement learning. Reference [13] analyzed the power and energy balance mechanism of multi-interactive distribution networks with source-grid-load-storage coordination, and constructed a source-grid-load-storage coordinated optimal scheduling model. Reference [14] proposed a four-stage scheduling model (day-ahead, intra-day, ultra-short-term, and ultra-ultra-short-term) optimized via Gurobi, incorporating buffer boundaries for global coordination. Reference [15] developed a multi-time scale rolling scheduling model based on “robust plans” and “robust operation zones” using robust optimization. While these models improve temporal coordination, they still depend on predefined physical constraints and neglect demand-side flexibility, limiting their ability to handle high-renewable penetration.

With the advancement of artificial intelligence, DRL has emerged as a promising solution for uncertain environments [16,17], leveraging its adaptability and flexibility in dynamic scenarios [18,19]. Reference [20] adopted the deep Q-learning algorithm for optimal scheduling of energy management, and the simulation results showed that reinforcement learning algorithms can improve energy utilization and scheduling performance. Reference [21] employed a two-layer RL model, where the upper layer considers the energy state of the entire scheduling cycle to generate charging and discharging strategies for energy storage, and the lower layer only considers the current operating cost, using mixed-integer linear programming to solve the current scheduling actions and operating costs. Reference [22] considered energy storage systems and realized robust economic scheduling of virtual power plants using the deep deterministic policy gradient algorithm based on scenario datasets generated by generative adversarial networks. Reference [23] evaluated seven DRL algorithms for energy management, highlighting their potential in complex scheduling scenarios.

Beyond electrochemical and pumped hydro storage, reference [24] integrated tidal range power stations into day-ahead scheduling, demonstrating flexible coordination of renewable energy with unique storage characteristics. Reference [25] further explored gravity energy storage (GES) in day-ahead scheduling, using distributionally robust optimization to handle price uncertainty. However, the above studies have two critical limitations: (1) they rarely consider the synergistic coordination of multi-source energy storage (e.g., pumped hydro storage + electrochemical storage) and dual-type demand response (price-based + incentive-based); (2) they lack mechanisms to prioritize critical state variables (e.g., wind power forecasts, energy storage state of charge), leading to suboptimal decision-making in multi-variable, multi-time scale scenarios.

The limitations of existing methods directly affect practical operation of high-renewable power systems: traditional day-ahead models cannot adjust to sudden wind surges; over-reliance on conventional generator ramping increases fuel/penalty costs; and poor multi-source coordination raises grid instability risks. For grids like East China’s (plagued by renewable integration challenges), these issues hinder carbon reduction and grid stability—urgently requiring a more adaptive framework.

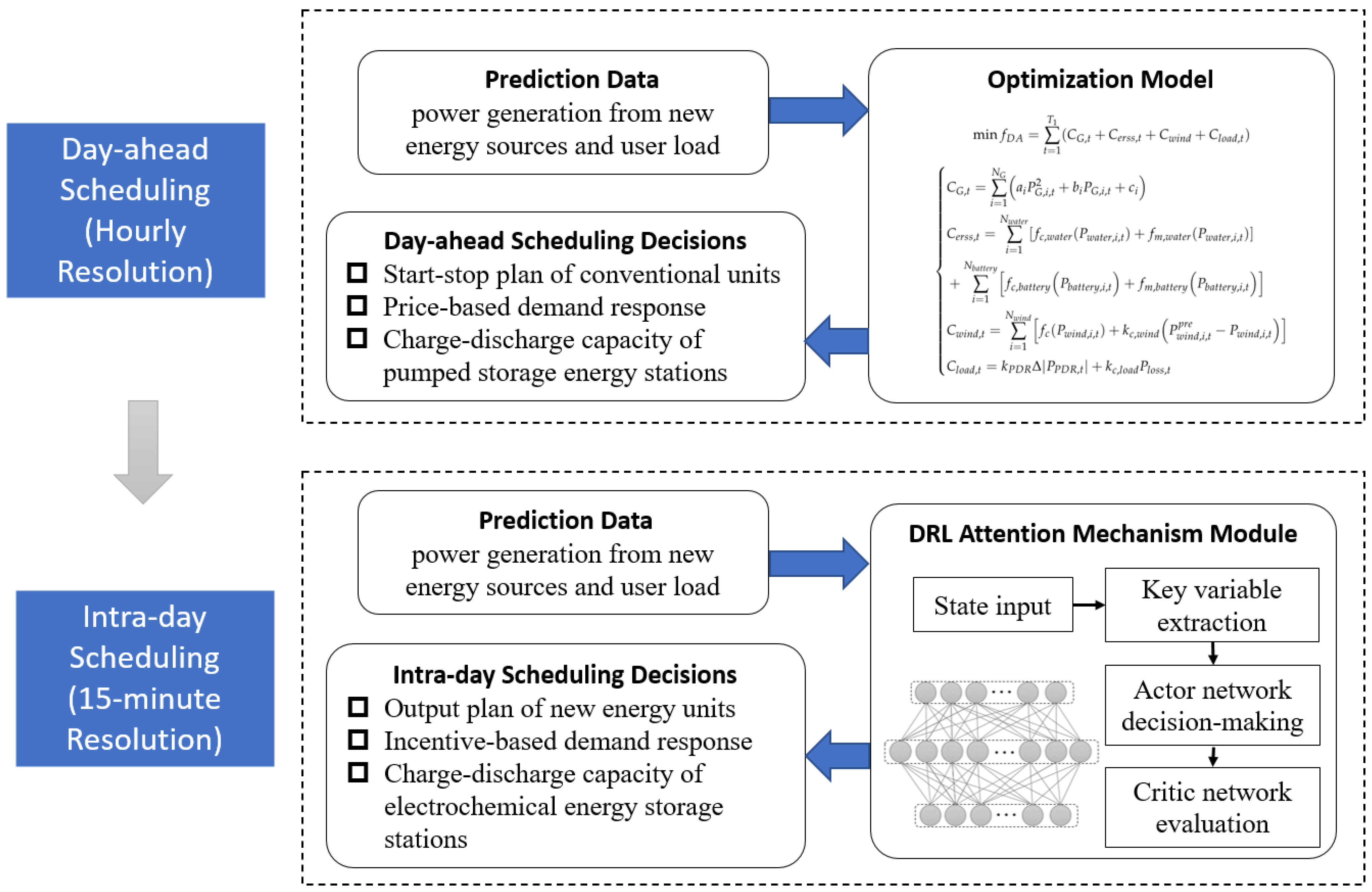

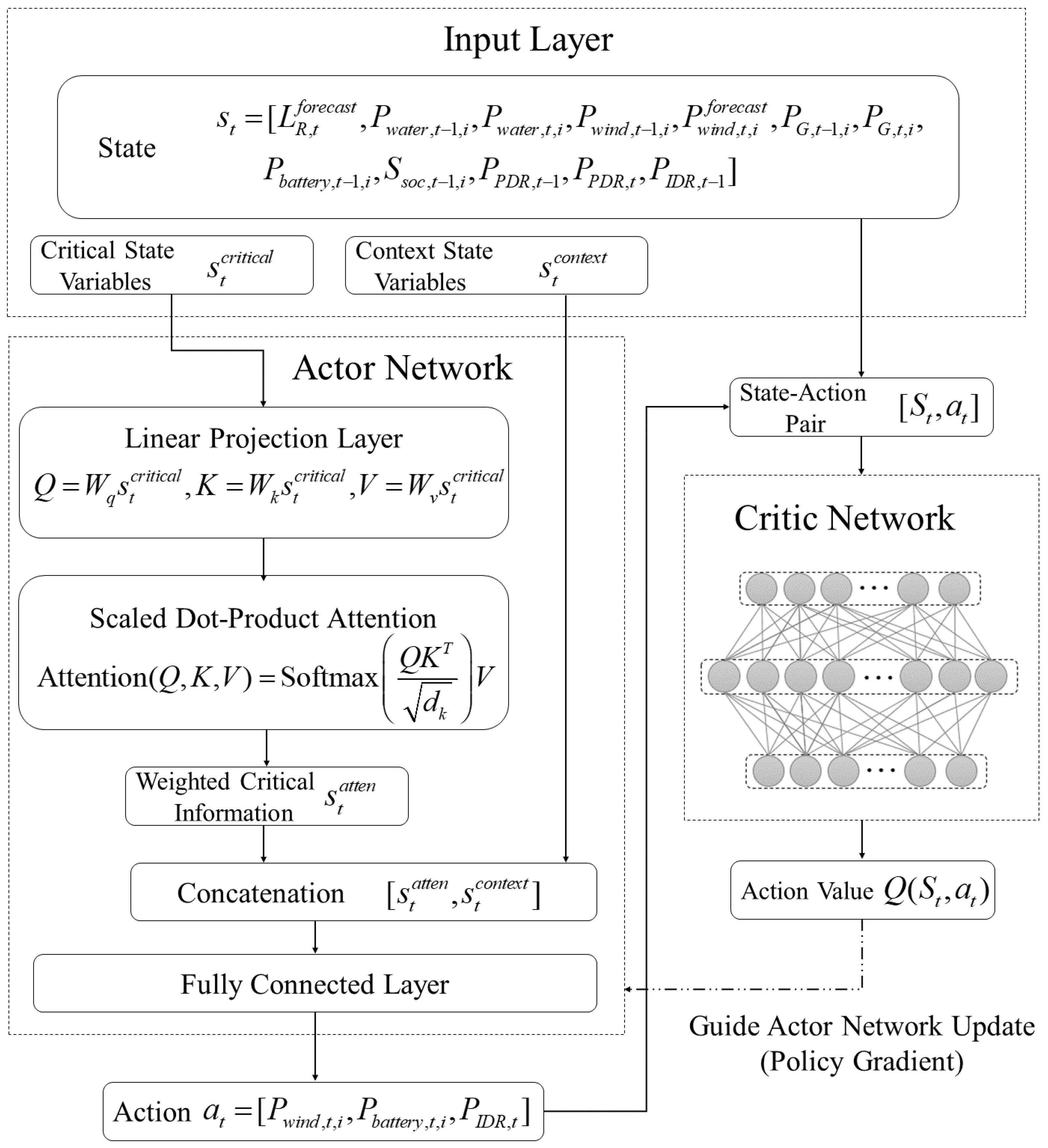

To address the above gaps, this study develops a multi-time scale coordination scheduling framework integrated with attention-enhanced DRL, balancing cost minimization and renewable utilization while improving real-time adaptability. The proposed framework combines two core stages: day-ahead scheduling (hourly resolution), which optimizes conventional generators, pumped hydro storage, electrochemical energy storage, and price-based demand response (PDR) to minimize total operational costs; and intra-day rolling scheduling (15 min resolution, 4 h horizon), which adjusts wind power, battery operation, and incentive-based demand response (IDR) in real time. Notably, the intra-day stage uses an attention-based Actor–Critic DRL algorithm that dynamically weights critical variables (e.g., wind forecast errors, storage state of charge (SOC)) to correct deviations, overcoming the lack of targeted decision-making in existing DRL methods.
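The two time scales described above can be illustrated with a short sketch. It only shows how hourly day-ahead values might be mapped onto 15 min steps and how 4 h rolling windows could be indexed. The function names, the zero-order hold, and the truncation of windows at the end of the day are assumptions made for illustration; they are not taken from the paper's model.

```python
from typing import List, Tuple

STEP_MIN = 15   # intra-day resolution stated in the text
HORIZON_H = 4   # intra-day look-ahead horizon stated in the text

STEPS_PER_HOUR = 60 // STEP_MIN                # 4 steps per hour
STEPS_PER_DAY = 24 * STEPS_PER_HOUR            # 96 steps per day
STEPS_PER_WINDOW = HORIZON_H * STEPS_PER_HOUR  # 16 steps per window


def rolling_windows(steps_per_day: int = STEPS_PER_DAY,
                    window: int = STEPS_PER_WINDOW) -> List[Tuple[int, int]]:
    """Return one (start, end) index pair per 15 min step, end exclusive.

    Assumption: windows that would run past midnight are truncated at
    the day boundary instead of wrapping into the next day.
    """
    return [(t, min(t + window, steps_per_day)) for t in range(steps_per_day)]


def hourly_to_quarter_hourly(day_ahead_plan: List[float]) -> List[float]:
    """Expand hourly day-ahead values to 15 min steps.

    Assumption: each hourly value is held constant for its four steps.
    """
    return [value for value in day_ahead_plan for _ in range(STEPS_PER_HOUR)]
```

In such a scheme the intra-day stage would re-solve at every step over the window returned for that step and apply only the first action before rolling forward.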

The rest of the paper is organized as follows. The multi-time scale coordination scheduling model is proposed in Section 2. Section 3 presents the reinforcement learning-based optimization framework. Section 4 verifies the effectiveness and advantages of the proposed method with simulation results. Finally, the conclusion of this paper is presented in Section 5.

4. Case Study

To address constrained renewable energy absorption, this study selects a regional power grid in East China, which faces significant new energy integration hurdles, as a real-world testbed to validate the proposed scheduling framework. The grid’s infrastructure includes six traditional thermal units stationed at nodes 1, 2, 5, 8, 11, and 13, with detailed parameters documented in Table 1. Node 2 hosts a 400 MW wind farm alongside a 50 MW/200 MW·h electrochemical storage system, while node 8 accommodates a 100 MW/400 MW·h pumped storage facility [28].
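The storage ratings above imply a few simple quantities that are useful when reading the scheduling results. The sketch below derives them from the stated power and energy ratings only. The class and method names are hypothetical, and conversion losses are ignored because the text in this section does not give efficiencies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StorageUnit:
    """Rated power and energy of a storage asset (values from the text)."""
    name: str
    power_mw: float
    energy_mwh: float

    def duration_h(self) -> float:
        """Hours of operation at rated power, ignoring losses (assumption)."""
        return self.energy_mwh / self.power_mw

    def energy_per_step_mwh(self, step_min: int = 15) -> float:
        """Largest energy that can move in one scheduling step at rated power."""
        return self.power_mw * step_min / 60


battery = StorageUnit("electrochemical", power_mw=50.0, energy_mwh=200.0)
pumped = StorageUnit("pumped", power_mw=100.0, energy_mwh=400.0)

WIND_FARM_MW = 400.0  # wind farm rating at node 2, from the text

# Share of the wind farm rating that both storage units could absorb together.
combined_share = (battery.power_mw + pumped.power_mw) / WIND_FARM_MW
```

Both units have the same 4 h duration at rated power, so under this simplified view they differ in scale and response speed rather than in energy-to-power ratio.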

Operational assumptions are set as follows: PDR adjustments are capped at 10% of total load, and IDR deployment does not exceed 5% of total load. For streamlined calculations, fixed compensation cost coefficients for IDR are employed, as specified in

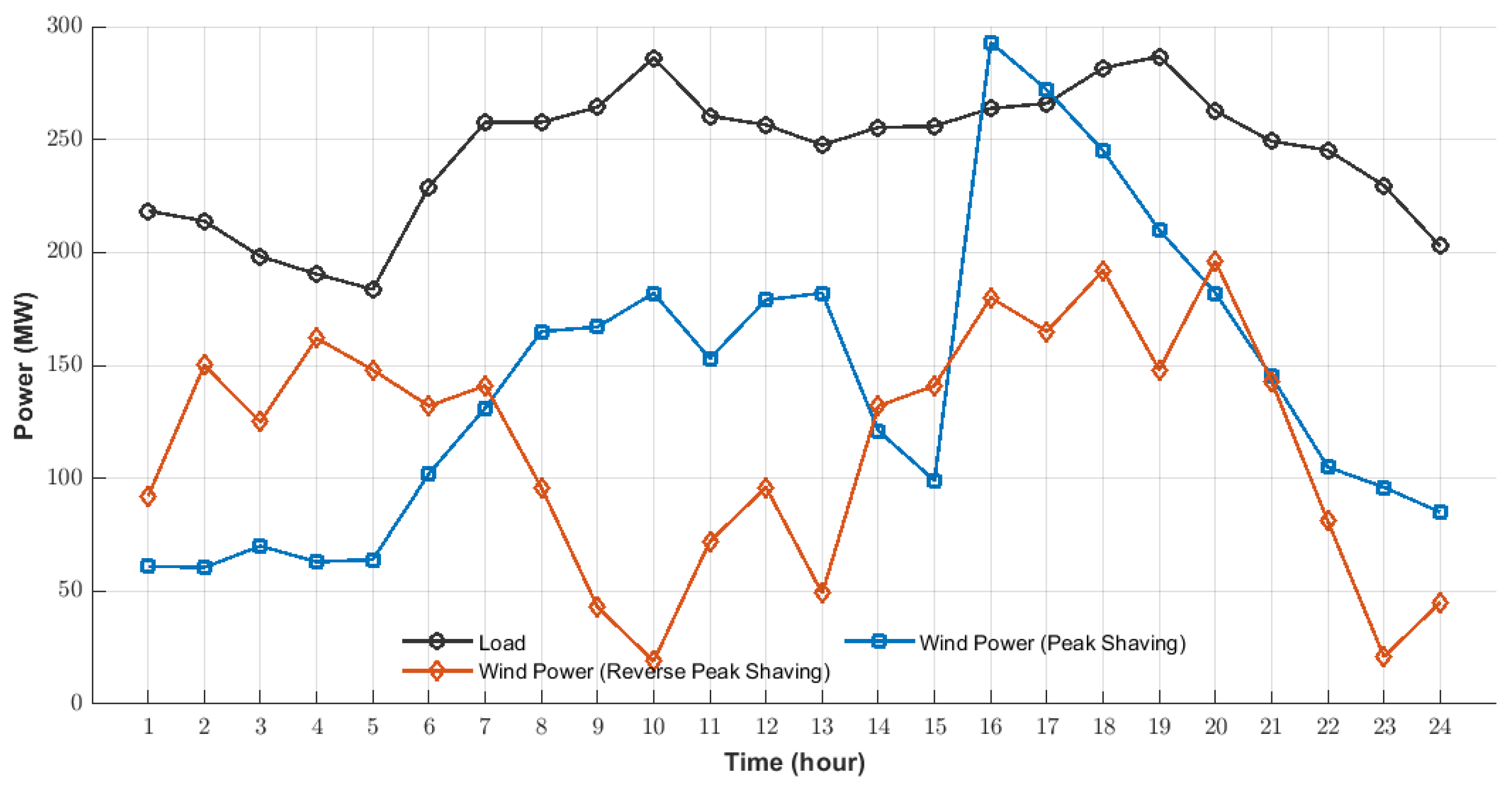

Table 2. Load and wind power dynamics are visualized in

Figure 3, which captures load fluctuations alongside wind power variations under both scenarios. To ensure the reproducibility of the DRL implementation, detailed parameters of the training process and computational setup are specified as follows: the learning rate is set to 0.001 for both the Actor and Critic networks to balance training convergence speed and stability; the Adam optimizer is adopted, with momentum parameters

= 0.9 and

= 0.9 to adaptively adjust the learning rate for different parameters; the discount factor

is used to emphasize short-term operational rewards while retaining consideration for long-term system stability; training is terminated when the average reward of 20 consecutive episodes stabilizes within a 2% fluctuation range, ensuring the learned policy is robust and convergent; and experiments are conducted on a server equipped with an Intel Core i5-11400F and 16 GB RAM with the software environment built using Python 3.8 and PyTorch 1.10 to provide sufficient computational resources for DRL model training and inference. The DRL model undergoes offline training only once. The training is conducted using sufficient historical operational data, which covers typical seasonal, daily, and load fluctuation scenarios.
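The stopping rule described above can be read in more than one way. The following sketch shows one possible interpretation: training stops once the spread (maximum minus minimum) of the last 20 episode rewards is at most 2% of the magnitude of their mean. The class name and this exact definition of "fluctuation range" are assumptions, not the paper's implementation.

```python
from collections import deque


class RewardPlateauStopper:
    """Signal convergence when recent episode rewards stop fluctuating."""

    def __init__(self, window: int = 20, tolerance: float = 0.02) -> None:
        self.window = window        # number of consecutive episodes (from the text)
        self.tolerance = tolerance  # 2% fluctuation range (from the text)
        self.rewards = deque(maxlen=window)

    def update(self, episode_reward: float) -> bool:
        """Record one episode reward and return True if training may stop."""
        self.rewards.append(episode_reward)
        if len(self.rewards) < self.window:
            return False  # not enough episodes yet
        mean = sum(self.rewards) / self.window
        if mean == 0:
            return False  # relative spread is undefined for a zero mean
        spread = max(self.rewards) - min(self.rewards)
        return spread / abs(mean) <= self.tolerance
```

A training loop would call `update` once per episode and break when it returns `True`. Using the absolute value of the mean keeps the test meaningful when rewards are negative costs.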

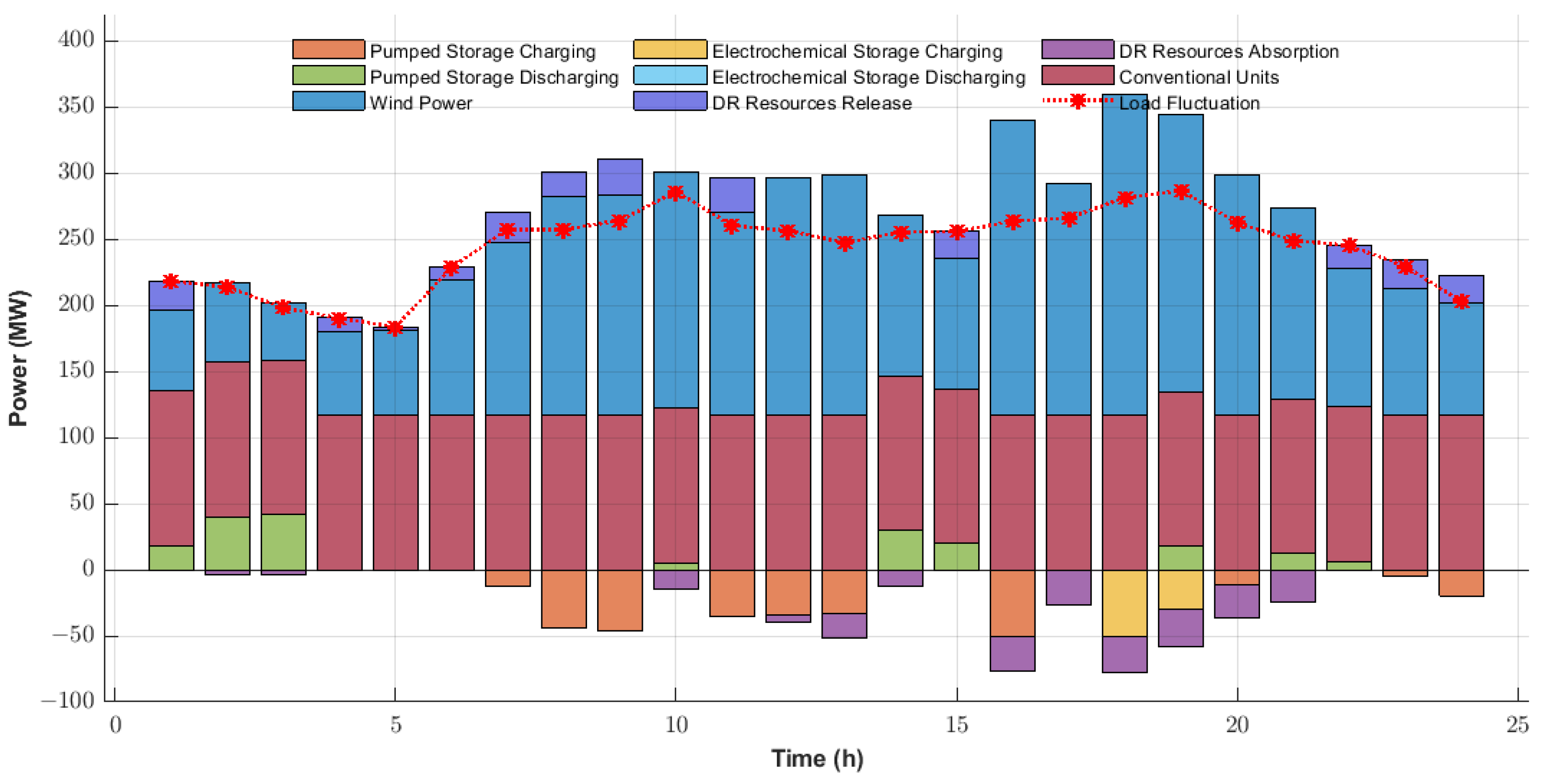

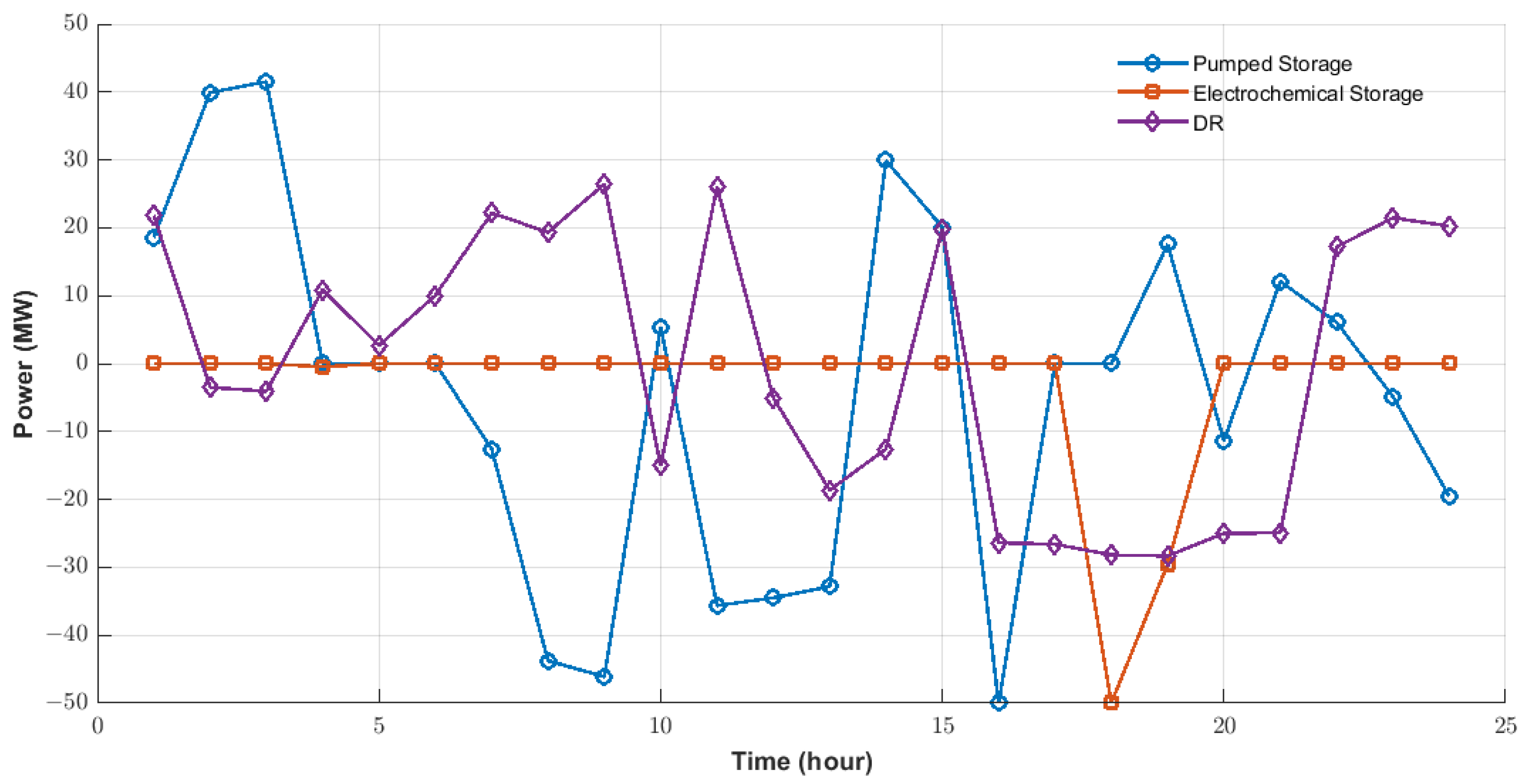

Scheduling schemes for both scenarios are detailed in Figure 4 and Figure 5, where each curve represents the cumulative output of preceding components plus the contribution of the current unit or DR resource. Notably, the red curve (denoting load fluctuations) maintains balance with the combined outputs of conventional units, storage systems, and wind power across all periods. Meanwhile, Figure 6 and Figure 7 illustrate the operational plans for pumped storage, electrochemical storage, and demand response resources across the two scenarios. A closer look at DR resource behavior reveals distinct diurnal roles: daytime usage focuses on peak shaving and damping wind power volatility, while nighttime operations shift to valley filling. This division aligns with the contrasting patterns observed in wind power and load interactions. In reverse peak shaving scenarios, wind power generation surges during early morning (2:00–6:00) and evening (16:00–21:00), periods when non-DR loads are low. Here, active DR resource activation and storage charging effectively boost wind power absorption. Conversely, during peak shaving, wind power output aligns more closely with load curves, peaking at midday (10:00–14:00) and afternoon (16:00–19:00). The high non-DR load during these windows reduces the need for DR resources compared to reverse peak shaving at the same times.
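The stacked presentation used in the scheduling figures can be reproduced with a running sum, as in the sketch below. The component names and all numbers are hypothetical and serve only to show the construction. Storage charging is represented as negative output, which is a sign convention assumed here.

```python
from typing import Dict, List


def stacked_curves(components: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Return cumulative curves in the insertion order of `components`.

    Each returned curve equals the sum of all preceding components plus
    the contribution of the current one, period by period.
    """
    n_periods = len(next(iter(components.values())))
    running = [0.0] * n_periods
    stacked: Dict[str, List[float]] = {}
    for name, output in components.items():
        running = [r + x for r, x in zip(running, output)]
        stacked[name] = running
    return stacked


def is_balanced(top_curve: List[float], load: List[float],
                tol: float = 1e-6) -> bool:
    """Check that the topmost stacked curve matches the load in every period."""
    return all(abs(s - d) <= tol for s, d in zip(top_curve, load))


# Hypothetical two-period example in MW (not data from the paper).
example = {
    "thermal": [300.0, 320.0],
    "wind": [100.0, 50.0],
    "storage": [20.0, -10.0],  # negative value means charging (assumed convention)
}
curves = stacked_curves(example)
```

With these numbers the top curve is `[420.0, 360.0]`, so `is_balanced(curves["storage"], [420.0, 360.0])` holds, which mirrors the load curve coinciding with the combined output in the figures.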

From the perspective of practical applicability, the proposed framework aligns well with the operational characteristics of the East China regional grid, where wind power is concentrated in coastal areas and load peak–valley differences are high. As shown in Figure 5 and Figure 7, the framework activates IDR and charges electrochemical storage to absorb surplus wind power. Regarding computational scalability, the attention mechanism’s ability to prioritize critical variables reduces redundant calculations.

The framework’s design allows straightforward extension to solar PV integration. PV’s uncertainty can be incorporated by adding “PV forecast error” to the attention mechanism’s critical variable set (Equation (28) in Section 3.3). For dynamic demand response, replacing the fixed DR participation rate with a “user response elasticity model” would further enhance performance.

To explore the practical effectiveness of dual energy storage systems in improving wind power utilization, reducing curtailment rates, and saving costs, this study designed comparative experiments targeting two typical scenarios: wind power peak shaving and reverse peak shaving. By constructing three differentiated operational schemes, the research systematically analyzed the impact mechanisms of energy storage integration forms and scheduling time scales on overall performance: The first scheme is the “no-storage benchmark mode”, which relies entirely on day-ahead scheduling plans without incorporating any energy storage equipment or implementing dynamic adjustments across multiple time scales such as intra-day, serving as a basic reference. The second scheme is the “single pumped storage mode”, which, while retaining the day-ahead scheduling framework, only introduces pumped storage stations for regulation, focusing on examining the operational boundaries of a single energy storage type. The third scheme, the “hybrid energy storage multi-scale mode”, represents the core strategy proposed in this study. It combines pumped storage with electrochemical energy storage and embeds a day-ahead-intra-day coordinated multi-time scale scheduling architecture to achieve complementary advantages of different energy storage characteristics. The DRL architecture, specifically the attention-based Actor–Critic network, is applied to all three schemes.

The experimental results (as shown in Table 3) clearly demonstrate the performance differences among the three schemes. The “no-storage benchmark mode” has obvious shortcomings in wind power absorption, especially in reverse peak shaving scenarios. During the high wind power periods, the limited call volume of demand response resources fails to match the large-scale wind power output, leading to severe curtailment and insufficient system flexibility to meet fluctuations. The “single pumped storage mode”, although an improvement over the benchmark scheme, is constrained by the inherent slow adjustment speed of pumped storage. It struggles to quickly respond to instantaneous fluctuations in wind power and load during reverse peak shaving, resulting in nearly identical curtailment rates in peak shaving and reverse peak shaving scenarios, with limited optimization effects.

In contrast, the “hybrid energy storage multi-scale mode” exhibits significant advantages: the rapid response capability of electrochemical energy storage can compensate for the lag in pumped storage adjustment, while the large-capacity characteristic of pumped storage can support energy balance over long time scales, forming efficient synergy. Combined with the refined regulation of demand response resources, this mode not only drastically reduces curtailment rates in both scenarios but also achieves a moderate reduction in system operation costs by minimizing unnecessary power redundancy and adjustment losses.

To isolate the impact of the attention mechanism, a head-to-head comparison with the “DRL without attention” model is performed. The attention-enhanced model reduces wind curtailment and lowers operational costs. This improvement is attributed to the attention mechanism’s ability to weight wind power forecast errors and energy storage SOC more heavily than other variables, enabling proactive correction of prediction deviations that would otherwise lead to curtailment or load shedding.
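The idea of weighting some state variables more heavily than others can be illustrated with a generic softmax attention step. This is not the paper's attention formulation from Section 3.3, which is not reproduced in this section. The feature names and scores below are hypothetical, and in a trained model the scores would be produced by learned network layers.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the maximum before exponentiating."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(features: List[float],
           scores: List[float]) -> Tuple[List[float], List[float]]:
    """Return attention weights and the features rescaled by those weights."""
    weights = softmax(scores)
    weighted = [w * f for w, f in zip(weights, features)]
    return weights, weighted


# Hypothetical normalized state: wind forecast error, storage SOC, load, price.
state = [0.30, 0.55, 0.80, 0.40]
# Hypothetical relevance scores; higher means more attention.
relevance = [2.0, 1.5, 0.2, 0.1]
weights, weighted_state = attend(state, relevance)
```

Because softmax weights are positive and sum to one, raising the score of the wind forecast error necessarily lowers the relative influence of the remaining inputs on the downstream Actor and Critic layers.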

To evaluate the robustness of the framework under parameter fluctuations, sensitivity tests are conducted on key variables affecting scheduling outcomes. Focusing on IDR compensation costs, a critical parameter in demand response programs, we simulate adjustments of ±10% (consistent with practical policy and subsidy variation ranges of 8–12%), modifying the baseline IDR cost (150 CNY/(MW·h)) to 135 CNY/(MW·h) and 165 CNY/(MW·h). The results indicate that even with 10% cost variations, the total operational cost changes by only 0.8–1.2%. This stability arises from the framework’s “baseline + dynamic supplement” DR coordination: when IDR costs increase, the system automatically reduces IDR activation and increases battery discharge to maintain balance, avoiding over-reliance on expensive demand response. Conversely, lower IDR costs trigger increased IDR utilization, reducing energy storage cycling losses. This adaptive adjustment mechanism eliminates the need for precise parameter calibration, enhancing practical applicability.
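The perturbed cost levels used in the sensitivity test follow directly from the baseline, as the short sketch below shows. The helper names are hypothetical. The second function only illustrates how a relative change in total cost could be computed and does not reproduce any result from the paper.

```python
from typing import Tuple

BASELINE_IDR_COST = 150.0  # CNY/(MW·h), baseline from the text
PERTURBATION = 0.10        # 10% variation from the text


def perturbed_costs(baseline: float, relative_change: float) -> Tuple[float, float]:
    """Return the (lower, upper) cost levels for a symmetric perturbation."""
    return baseline * (1 - relative_change), baseline * (1 + relative_change)


def relative_change_pct(new_value: float, reference: float) -> float:
    """Percentage change of `new_value` with respect to `reference`."""
    return 100.0 * (new_value - reference) / reference


low_cost, high_cost = perturbed_costs(BASELINE_IDR_COST, PERTURBATION)
```

Here `low_cost` and `high_cost` evaluate to approximately 135 and 165 CNY/(MW·h), matching the two test levels stated above.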

The superiority of the proposed framework stems from three synergistic mechanisms: (1) the attention mechanism dynamically prioritizes critical variables (e.g., wind power forecast errors and energy storage SOC), enabling the agent to allocate computational focus to high-impact factors during decision-making; (2) hybrid energy storage (pumped hydro + electrochemical) leverages the high capacity of pumped storage and rapid response of batteries, reducing reliance on conventional generator ramping; and (3) coordinated DR scheduling (day-ahead PDR + intra-day IDR) enhances demand-side flexibility, with PDR establishing cost-effective baselines and IDR addressing real-time mismatches.

In summary, integrating dual energy storage technologies with a multi-time scale scheduling framework can more effectively address the uncertainty in new energy generation forecasts compared to a single pumped storage scheme. It establishes a more flexible and reliable balancing mechanism for the “source-grid-load-storage” system, thereby significantly enhancing new energy absorption capacity and providing strong support for the economical and stable operation of power grids with high proportions of renewable energy.