1. Introduction

Pneumonia, a severe respiratory infection usually caused by bacteria, viruses, fungi, or, less commonly, parasites, is characterized by inflammation of the alveoli in one or both lungs. The disease fills the alveoli with fluid or pus, producing symptoms such as fever, chills, chest discomfort, a cough that brings up phlegm or pus, and difficulty breathing. Its severity ranges from mild to fatal, and it most often strikes people who are already vulnerable, such as the very young, the elderly, and individuals with compromised immunity or chronic conditions [1]. The most common causes are the bacterium Streptococcus pneumoniae and viruses such as influenza and SARS-CoV-2, the virus that causes COVID-19. Successful disease control and the prevention of complications such as pleural effusion, lung abscess, and respiratory failure rely on efficient diagnosis through clinical assessment and laboratory examination. Chest X-ray imaging is the most common and cost-effective diagnostic test for pneumonia; however, these images can be difficult to interpret correctly because of subtle visual cues, features that overlap with other pulmonary diseases, and inter-observer variation between radiologists [2].

In recent years, numerous studies have explored machine learning methods for classifying pneumonia from chest X-ray images, with the aim of improving diagnostic accuracy and supporting clinical decision-making [3]. In [4], pneumonia was detected using region-of-interest features with multilayer perceptron, random forest, and logistic regression classifiers; the multilayer perceptron obtained an accuracy of 0.95. Another study compared the naïve Bayes (NB), k-nearest neighbor (kNN), and support vector machine (SVM) classifiers for detecting pneumonia in children [5]; the SVM, using the selected features correlation, average deviation, difference variance, and standard deviation, gave an accuracy of 0.77. In [6], features were extracted from chest X-ray images using the 2D discrete wavelet transform and fed to artificial neural network, random forest, kNN, and SVM classifiers; the random forest performed best, with an accuracy of 0.97. In [7], researchers extracted Haar, shape, and texture features from chest X-rays, and the SVM achieved an accuracy of 0.69.

Many researchers have also employed convolutional neural network (CNN) models for pneumonia classification. In [8], researchers applied a parameter optimization method to a 19-layer CNN, which achieved an accuracy of 0.96. In [9], the researchers used four deep transfer learning models; DenseNet201 achieved an accuracy of 0.98 for classifying images as normal or pneumonia-affected and an accuracy of 0.95 for distinguishing viral from bacterial pneumonia. In [10], the researchers used DenseNet169 for feature extraction and an SVM for classification, achieving an accuracy of 0.74. In [11], the researchers designed a customized CNN model to identify pneumonia in chest radiographs, which achieved a validation accuracy of 0.93. The ResNet50 model has previously been used for pneumonia detection in [12,13,14]. In [12], researchers added several layers to ResNet50 to develop an improved ResNet50 model that classified pneumonia chest X-ray images with an accuracy of 97%. In [13], researchers developed a ResNet50 model for pneumonia detection and obtained an accuracy of 98.9%. In [14], researchers used a ResNet50 model to classify pneumonia chest X-ray images and achieved an accuracy of 88.88% and a precision, recall, and F1 score of 83%.

Traditional machine learning approaches often face limitations in capturing subtle and complex patterns in chest X-ray images. In contrast, boosting combines the outputs of multiple weak learners to create a strong learner, thereby enhancing the predictive performance. The most commonly used boosting methods are natural gradient boosting (NGBoost), adaptive boosting (AdaBoost), and extreme gradient boosting [

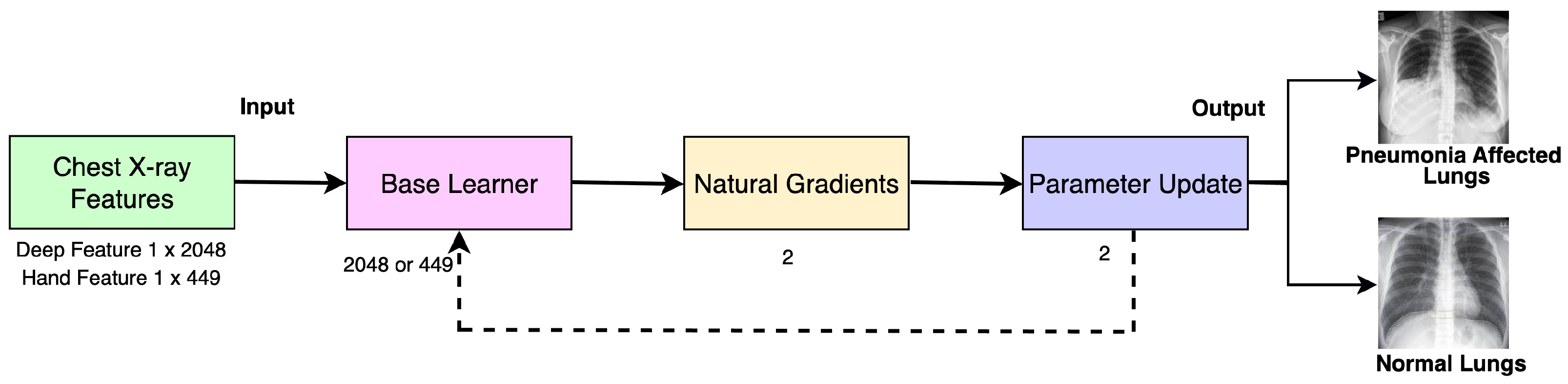

15]. Amongst these, NGBoost is a powerful machine learning model that estimates both predictions and associated uncertainties [

16]. NGBoost is a probabilistic boosting method, known for its flexibility, interpretability, and better performance in dealing with complex patterns [

17]. NGBoost exhibits successful achievements across multiple fields like electrical engineering [

18], financial prediction [

19], and construction technology [

20]. In the field of medical image analysis, NGboost is used in [

17,

21]. In [

21], the researchers used NGBoost classifiers for brain tumor detection. The classifier produced an accuracy of 98.54%. In [

17], researchers extracted Xception-based deep features and fed it to NGboost classifier, to classify Monkeypox Skin Lesion Dataset (MSLD). The model gave an accuracy of 98.53%.

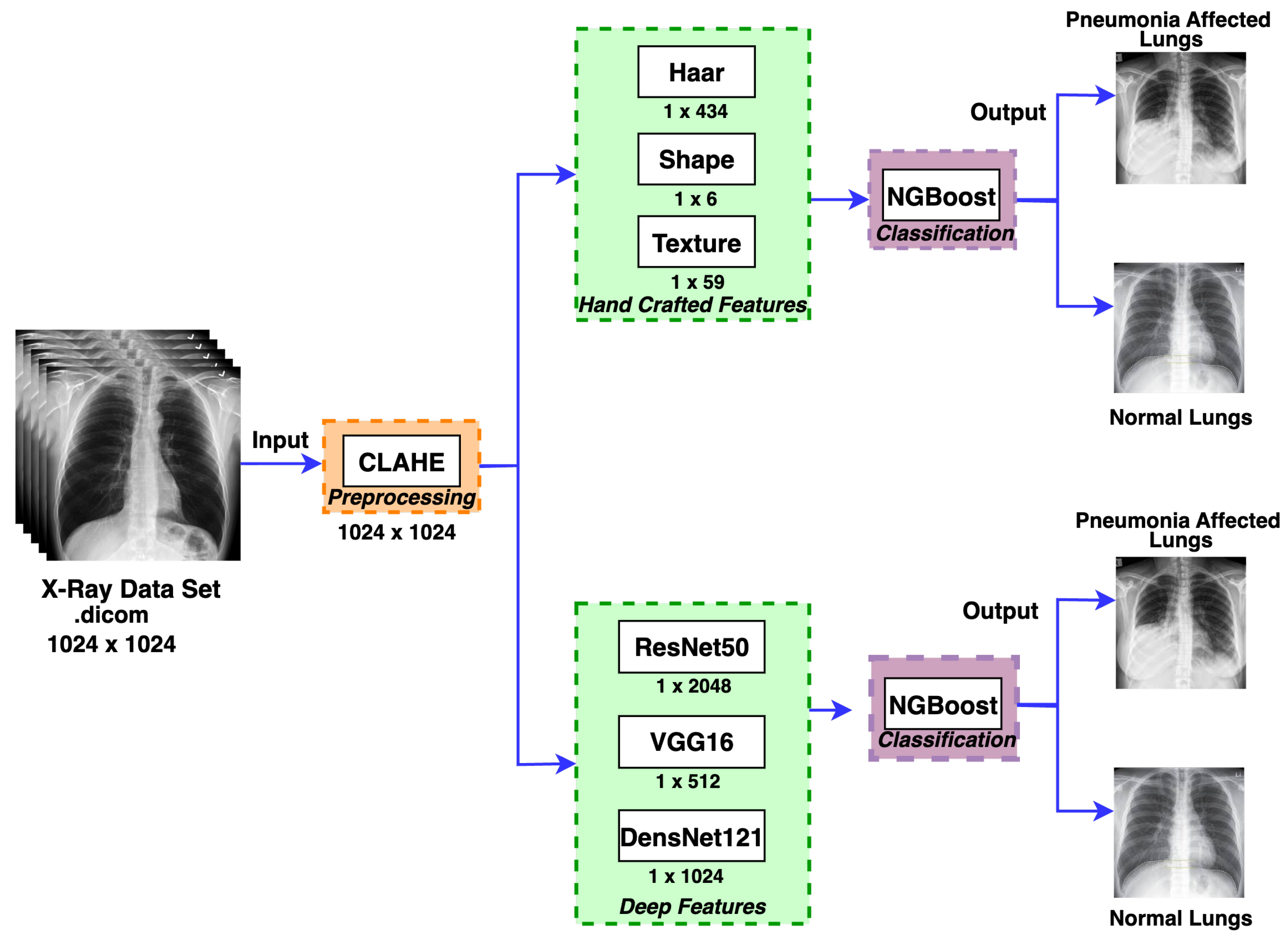

In the current study, commonly used handcrafted features, namely Haar, shape, and texture features, are extracted and fed as input to an NGBoost classifier. Additionally, deep features are extracted using widely used CNN models, namely ResNet50, DenseNet121, and VGG16. The experiments were conducted on 26,684 chest X-ray images from the stage 2 RSNA challenge, which were drawn from the “ChestX-ray8” hospital-scale chest X-ray image database [22].

The paper is structured as follows:

Section 2 describes the materials and methodology used in the study,

Section 3 presents the obtained results, and

Section 4 draws conclusions based on the obtained results.

3. Results

Experiments are conducted on the stage 2 RSNA pneumonia dataset, which consists of 26,684 chest X-ray images, of which 6012 are pneumonia-affected and 20,672 are normal. The dataset is divided into 70% training data and 30% testing data. This work analyzes handcrafted and deep features in combination with the NGBoost classifier. This section contains subsections on the parameter configuration and optimization of the proposed model, the results for handcrafted features, the results for deep features, and a comparison of handcrafted and deep features with the NGBoost classifier.
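The split sizes and class balance implied by these counts can be checked with a few lines of Python. This is a minimal sketch that assumes the 70/30 split was stratified by class, which the text does not state; the function name `stratified_split_sizes` is hypothetical. It also makes the class imbalance explicit: about 22.5% of the images are pneumonia cases, so on a test set with the same ratio a classifier that always predicts "normal" would reach roughly 0.77 accuracy, and accuracy is therefore best read alongside precision, recall, specificity, and F1 score.

```python
def stratified_split_sizes(class_counts, train_fraction=0.7):
    """Return {label: (n_train, n_test)}, keeping each class's share in both splits.

    Assumption: the 70/30 split is stratified by class (not stated in the text).
    """
    sizes = {}
    for label, n in class_counts.items():
        n_train = round(n * train_fraction)  # nearest whole number of images
        sizes[label] = (n_train, n - n_train)
    return sizes


counts = {"pneumonia": 6012, "normal": 20672}
total = sum(counts.values())  # 26,684 images in total
sizes = stratified_split_sizes(counts)
for label, (n_train, n_test) in sizes.items():
    print(f"{label}: train={n_train}, test={n_test}, share={counts[label] / total:.3f}")

# Accuracy of a trivial classifier that always answers "normal".
majority_baseline = counts["normal"] / total
print(f"majority-class baseline accuracy: {majority_baseline:.3f}")
```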

3.1. Parameter Configuration and Optimization

Parameter selection plays a vital role in improving the performance of any machine learning or deep learning model [8]. The proposed framework for pneumonia chest X-ray classification employs a deep learning model for feature extraction and NGBoost for classification. The NGBoost classifier and the deep learning models (ResNet50, DenseNet121, and VGG16) were trained with different parameter settings, and the optimal parameters were selected. ResNet50, DenseNet121, and VGG16 were initially trained with a learning rate of 0.1, which was progressively decreased to 0.001. A learning rate of 0.01 was selected for the model design, since accuracy was marginally lower for the other learning rates. To select the optimal number of epochs, the models were initially trained for 20 epochs, with the count incremented by 10 in each iteration; the best accuracy was obtained at 40 epochs. Additionally, the models were trained with different combinations of activation function and optimizer, namely sigmoid and Adam, tanh and Adam, sigmoid and RMSprop, and tanh and RMSprop. The combination of the sigmoid activation function and the Adam optimizer gave better results for the ResNet50 model, whereas the combination of the sigmoid activation function and the RMSprop optimizer gave better results for the VGG16 and DenseNet121 models. The final optimized parameters of the deep learning models are shown in Table 2.
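The tuning procedure above can be organized as a search over candidate settings. The sketch below is a hedged illustration, not the authors' code: it assumes an exhaustive grid (the text suggests the parameters may instead have been varied one at a time), assumes the intermediate learning rate and an upper bound of 50 epochs, and uses a hypothetical `train_and_evaluate` callback in place of real CNN training. `toy_scorer` is a made-up stand-in so that the example runs.

```python
from itertools import product


def grid_search(train_and_evaluate, learning_rates, epoch_counts, act_opt_pairs):
    """Evaluate every combination and return (best_score, best_config).

    `train_and_evaluate` is a hypothetical callback that trains one model with the
    given settings and returns its validation accuracy.
    """
    best_score, best_config = float("-inf"), None
    for lr, epochs, (activation, optimizer) in product(learning_rates, epoch_counts, act_opt_pairs):
        config = {"learning_rate": lr, "epochs": epochs,
                  "activation": activation, "optimizer": optimizer}
        score = train_and_evaluate(**config)
        if score > best_score:  # keep the first configuration with the top score
            best_score, best_config = score, config
    return best_score, best_config


# Candidate values; the intermediate learning rate and the 50-epoch cap are assumptions.
learning_rates = [0.1, 0.01, 0.001]
epoch_counts = [20, 30, 40, 50]
act_opt_pairs = [("sigmoid", "adam"), ("tanh", "adam"),
                 ("sigmoid", "rmsprop"), ("tanh", "rmsprop")]


def toy_scorer(learning_rate, epochs, activation, optimizer):
    """Made-up stand-in for real training, peaking at lr=0.01, 40 epochs, sigmoid + Adam."""
    score = 1.0 - abs(learning_rate - 0.01) - abs(epochs - 40) / 100
    return score + (0.01 if (activation, optimizer) == ("sigmoid", "adam") else 0.0)


best_score, best_config = grid_search(toy_scorer, learning_rates, epoch_counts, act_opt_pairs)
print(best_score, best_config)
```

Since each model in this study selected its own activation and optimizer, the search would be run once per backbone with its own `train_and_evaluate`.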

Similarly, parameters of the NGBoost classifier, namely the learning rate and the number of estimators, were also optimized. The NGBoost classifier was trained with learning rates from 0.1 to 0.001, and the best accuracy was obtained with a learning rate of 0.01. The NGBoost classifier was also trained with different numbers of estimators: the number was initially set to 300 and incremented by 200 up to 700. At 700 estimators, accuracy declined marginally, so 500 estimators was selected as the optimal value. A regression tree is used as the base learner, since it effectively captures non-linear relationships in the data and is computationally efficient. The final optimized parameters of the NGBoost classifier are shown in Table 3.
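To make the NGBoost update concrete for this binary task, the sketch below implements natural gradient boosting from scratch for a Bernoulli outcome with a logit parameter theta, using the negative log-likelihood (log score) as the scoring rule. For this one-parameter distribution the natural gradient is the ordinary gradient p - y divided by the Fisher information p(1 - p), and the predicted probability is itself the full predictive distribution. It is a simplified illustration under stated assumptions, not the authors' implementation or the `ngboost` package: depth-1 regression stumps stand in for the regression-tree base learner, the line search simply halves the step scale until the total score stops increasing, and the class name `TinyNGBoostBernoulli` is hypothetical. The defaults mirror the selected learning rate of 0.01 and 500 estimators; in practice, the `ngboost` Python package provides an `NGBClassifier` for this purpose.

```python
import math


def _sigmoid(t):
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def _log_score(theta, y):
    """Negative Bernoulli log-likelihood with logit theta: log(1 + e^theta) - y * theta."""
    return max(theta, 0.0) + math.log1p(math.exp(-abs(theta))) - y * theta


def _fit_stump(X, targets):
    """Least-squares regression stump: (feature, threshold, left_value, right_value)."""
    n = len(targets)
    total_sum, total_sq = sum(targets), sum(t * t for t in targets)
    best = (float("inf"), 0, float("inf"), total_sum / n, total_sum / n)  # constant fallback
    for j in range(len(X[0])):
        order = sorted(range(n), key=lambda i: X[i][j])
        left_sum = left_sq = 0.0
        for k in range(1, n):  # left side = the k smallest values of feature j
            t = targets[order[k - 1]]
            left_sum += t
            left_sq += t * t
            lo, hi = X[order[k - 1]][j], X[order[k]][j]
            if lo == hi:
                continue  # no threshold separates equal values
            right_sum, right_sq = total_sum - left_sum, total_sq - left_sq
            sse = (left_sq - left_sum ** 2 / k) + (right_sq - right_sum ** 2 / (n - k))
            if sse < best[0]:
                best = (sse, j, (lo + hi) / 2, left_sum / k, right_sum / (n - k))
    return best[1:]


def _predict_stump(stump, x):
    j, threshold, left, right = stump
    return left if x[j] <= threshold else right


class TinyNGBoostBernoulli:
    """Natural gradient boosting for a Bernoulli outcome with logit parameter theta."""

    def __init__(self, n_estimators=500, learning_rate=0.01):
        self.n_estimators = n_estimators
        self.learning_rate = learning_rate

    def fit(self, X, y):
        p0 = min(max(sum(y) / len(y), 1e-6), 1 - 1e-6)
        self.theta0 = math.log(p0 / (1 - p0))  # marginal MLE as the starting point
        self.stages = []  # fitted (stump, scale) pairs
        theta = [self.theta0] * len(y)
        for _ in range(self.n_estimators):
            # Natural gradient = gradient (p - y) divided by Fisher information p(1 - p).
            nat_grad = []
            for t, yi in zip(theta, y):
                p = min(max(_sigmoid(t), 1e-6), 1 - 1e-6)
                nat_grad.append((p - yi) / (p * (1 - p)))
            stump = _fit_stump(X, nat_grad)
            step = [_predict_stump(stump, x) for x in X]
            # Line search: halve the scale until the total log score does not increase.
            base = sum(_log_score(t, yi) for t, yi in zip(theta, y))
            scale = 1.0
            while scale > 1e-4 and sum(
                _log_score(t - scale * s, yi) for t, s, yi in zip(theta, step, y)
            ) > base:
                scale /= 2
            self.stages.append((stump, scale))
            theta = [t - self.learning_rate * scale * s for t, s in zip(theta, step)]
        return self

    def predict_proba(self, x):
        """Predicted probability of the positive class (pneumonia) for one feature vector."""
        theta = self.theta0 - sum(
            self.learning_rate * scale * _predict_stump(stump, x) for stump, scale in self.stages
        )
        return _sigmoid(theta)


# Usage on a tiny separable toy set (larger learning rate so a few rounds suffice).
X_toy = [[float(i)] for i in range(20)]
y_toy = [0] * 10 + [1] * 10
model = TinyNGBoostBernoulli(n_estimators=30, learning_rate=0.1).fit(X_toy, y_toy)
print(model.predict_proba([2.0]), model.predict_proba([17.0]))
```

Fitting each base learner to the natural gradient rather than the raw gradient rescales the step by the local curvature p(1 - p), which is the feature that distinguishes NGBoost from ordinary gradient boosting on the same loss.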

3.2. Handcrafted Features

Handcrafted features, namely Haar, shape, and texture features, were extracted from the input chest X-ray images and used as input to machine learning classifiers, namely support vector machine, naive Bayes, decision tree, and NGBoost. In experiments using individual features, the maximum accuracy of 0.71 was obtained for Haar features with the NGBoost classifier. Additional experiments were conducted using the concatenated Haar, shape, and texture features, and the results are presented in Table 4. From Table 4, it is observed that the decision tree obtained an accuracy of 0.62, naive Bayes an accuracy of 0.65, and the support vector machine an accuracy of 0.69. In comparison, NGBoost obtained the highest accuracy of 0.72, with a precision of 0.70, recall of 0.72, specificity of 0.75, and F1 score of 0.71.
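The text does not specify which Haar features were used. Assuming Viola-Jones-style Haar-like rectangle features (Haar wavelet coefficients are another common reading), the sketch below computes them with an integral image, so that each rectangle sum costs four table lookups regardless of its size. All function names are hypothetical, and the non-overlapping window layout in `haar_feature_vector` is an illustrative choice; the resulting vector would then be concatenated with the shape and texture features.

```python
def integral_image(img):
    """Summed-area table with a zero border: ii[r][c] = sum of img[0:r][0:c]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for r in range(h):
        row_sum = 0
        for c in range(w):
            row_sum += img[r][c]
            ii[r + 1][c + 1] = ii[r][c + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def haar_horizontal(ii, top, left, height, width):
    """Two-rectangle edge feature: left half minus right half (width assumed even)."""
    half = width // 2
    return rect_sum(ii, top, left, height, half) - rect_sum(ii, top, left + half, height, half)


def haar_vertical(ii, top, left, height, width):
    """Two-rectangle edge feature: top half minus bottom half (height assumed even)."""
    half = height // 2
    return rect_sum(ii, top, left, half, width) - rect_sum(ii, top + half, left, half, width)


def haar_feature_vector(img, window):
    """Illustrative layout: both edge features on non-overlapping square windows."""
    ii = integral_image(img)
    features = []
    for top in range(0, len(img) - window + 1, window):
        for left in range(0, len(img[0]) - window + 1, window):
            features.append(haar_horizontal(ii, top, left, window, window))
            features.append(haar_vertical(ii, top, left, window, window))
    return features


# A 4x4 image with a bright left half and a dark right half (a vertical edge).
img = [[1, 1, 0, 0] for _ in range(4)]
print(haar_feature_vector(img, window=4))
```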

3.3. Deep Features

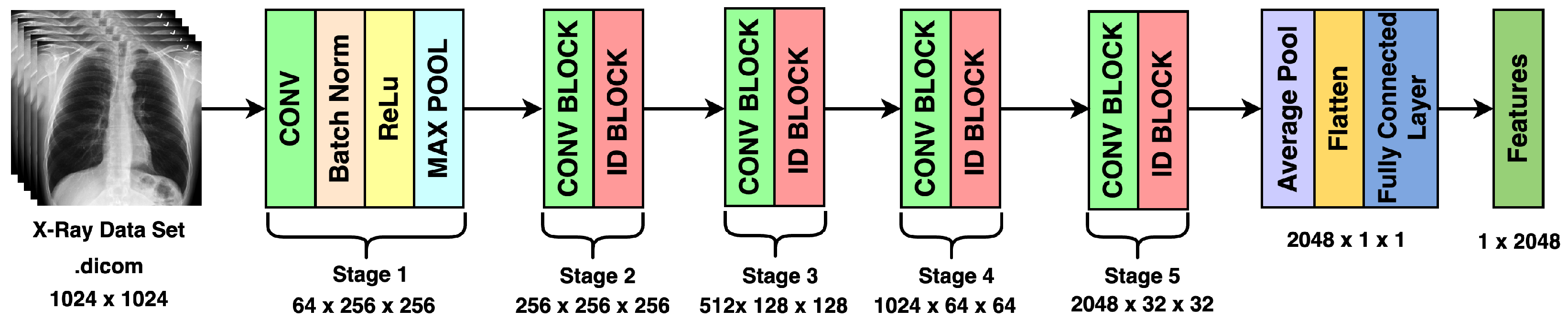

Experimental analysis using handcrafted features with NGBoost provided a maximum accuracy of 0.72. To further improve classification accuracy, this work explores the role of deep features in pneumonia classification. The preprocessed images were given as input to the ResNet50, VGG16, and DenseNet121 models, and their performance is shown in Table 5. From Table 5, it is seen that the ResNet50 model gave an accuracy of 0.87, precision of 0.85, recall of 0.86, specificity of 0.89, and F1 score of 0.86. The DenseNet121 model gave an accuracy of 0.79, precision of 0.76, recall of 0.70, specificity of 0.85, and F1 score of 0.75. The VGG16 model gave an accuracy of 0.78, a precision, recall, and F1 score of 0.76 each, and a specificity of 0.82.

From Table 5, it is observed that the ResNet50 model achieved better accuracy than the other models. Further, features from the fully connected layer of the ResNet50 model were fed to the support vector machine, decision tree, naive Bayes, and NGBoost classifiers, and the obtained results are presented in Table 6. From Table 6, it is seen that ResNet50 features with the NGBoost classifier gave an accuracy of 0.98, precision of 0.96, recall and F1 score of 0.97, and specificity of 0.97. The support vector machine classifier gave an accuracy of 0.88, precision of 0.87, recall of 0.86, specificity of 0.92, and F1 score of 0.87. The naive Bayes classifier gave an accuracy and precision of 0.82, recall of 0.79, specificity of 0.89, and F1 score of 0.80. The decision tree classifier gave an accuracy and precision of 0.78, recall of 0.76, F1 score of 0.77, and specificity of 0.82. Thus, moving from handcrafted to deep features substantially increases accuracy, both for the basic machine learning classifiers and for the NGBoost classifier.
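The five metrics reported throughout this section all follow from the confusion-matrix counts. The sketch below treats pneumonia as the positive class; the text does not state whether precision, recall, and F1 score are computed for the pneumonia class alone or averaged over both classes, so the reported values may follow a different convention. The function names are hypothetical.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) with `positive` (e.g. pneumonia) as the positive class."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive:
            if t == positive:
                tp += 1
            else:
                fp += 1
        elif t == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, tn, fn


def binary_metrics(tp, fp, tn, fn):
    """Accuracy, precision, recall (sensitivity), specificity and F1 score."""
    def ratio(a, b):
        return a / b if b else 0.0  # define 0/0 as 0 to avoid division errors

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": ratio(tn, tn + fp),
        "f1": ratio(2 * precision * recall, precision + recall),
    }


y_true = [1] * 10 + [0] * 10
y_pred = [1] * 8 + [0] * 2 + [1] * 1 + [0] * 9
print(binary_metrics(*confusion_counts(y_true, y_pred)))
```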

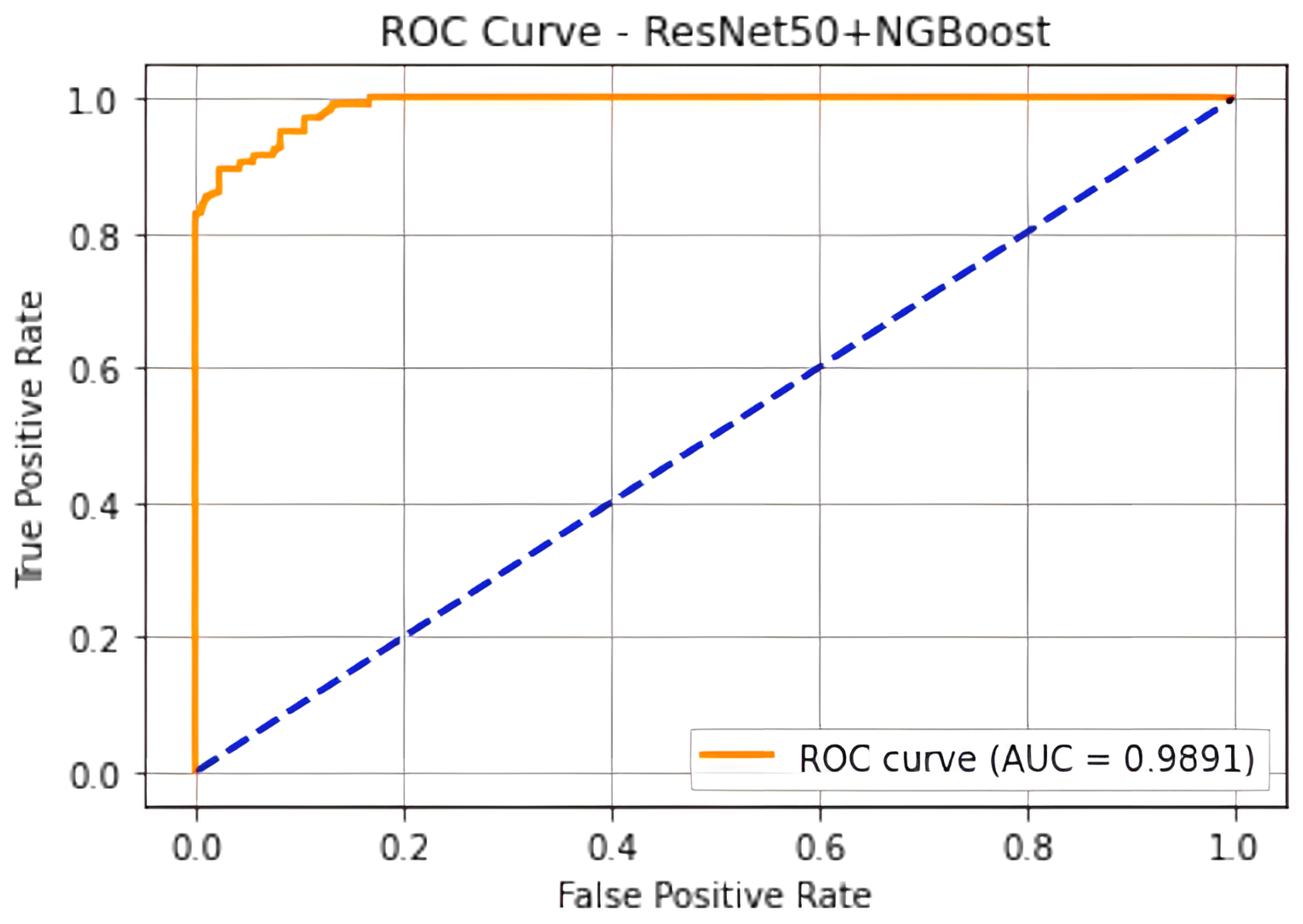

The ROC curve of the ResNet50 features with the NGBoost classifier is shown in Figure 4. From Figure 4, it is observed that the area under the curve (AUC) for ResNet50 features with the NGBoost classifier is 0.99.
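The AUC summarizes the ROC curve as the probability that a randomly chosen pneumonia image receives a higher score than a randomly chosen normal image. The sketch below computes it with the rank-based (Mann-Whitney U) formula, averaging ranks over tied scores. It assumes labels are 1 for pneumonia and 0 for normal and that the scores are the classifier's predicted pneumonia probabilities; the function name is hypothetical.

```python
def roc_auc(scores, labels):
    """AUC via the Mann-Whitney U statistic; labels are 1 (pneumonia) or 0 (normal)."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1  # extend the block of tied scores
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # 1-based average rank of the tie block
        i = j + 1
    rank_sum_pos = sum(r for r, y in zip(ranks, labels) if y == 1)
    # Positive-negative pairs won by the positive, with ties counted as 1/2.
    u = rank_sum_pos - n_pos * (n_pos + 1) / 2
    return u / (n_pos * n_neg)


print(roc_auc([0.9, 0.8, 0.4, 0.3, 0.5, 0.1], [1, 1, 1, 0, 0, 0]))
```

Sorting once makes this O(n log n), which matters for a test set of roughly 8000 images, where comparing every positive-negative pair directly would take millions of comparisons.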

To analyze the stability of the NGBoost classifier with high-dimensional input from the ResNet50 model, features were extracted from the average pooling layer, the fully connected layer, and a customized dense layer of size 512, and each set was given as input to the NGBoost classifier. The average pooling layer features have a dimension of 2048 and gave an accuracy of 0.97. The fully connected layer features have a dimension of 1000 and gave an accuracy of 0.98. With a dense layer of size 512 added after the fully connected layer, the accuracy was 0.98. Since all three configurations achieved similar results irrespective of feature dimension, the NGBoost classifier can be considered stable with respect to input dimensionality.

3.4. Comparison of Handcrafted and Deep Features with NGBoost Classifier

A comparative analysis of handcrafted and deep features with the NGBoost classifier was carried out in this study, and the results are shown in Table 7. Additional experiments were also conducted by combining the concatenated handcrafted features with the ResNet50-based features for the NGBoost classifier; these results are also shown in Table 7. From Table 7, it is observed that the concatenated handcrafted features gave an accuracy of 0.72, precision of 0.70, recall of 0.72, specificity of 0.75, and F1 score of 0.71. The ResNet50 deep features gave an accuracy of 0.98 and a precision, recall, specificity, and F1 score of 0.97 each. Thus, deep features are more robust than handcrafted features when used with the NGBoost classifier for classifying pneumonia in chest X-ray images. The combined handcrafted and deep features gave an accuracy of 0.94, precision of 0.92, recall of 0.87, specificity of 0.93, and F1 score of 0.89. Combining handcrafted and deep features therefore decreased accuracy slightly relative to deep features alone, which may indicate overfitting due to the larger number of features.
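Feature-level fusion of the two feature sets amounts to concatenating each image's handcrafted and deep feature vectors. The text does not say whether the blocks were rescaled first; the sketch below assumes each block is standardized column-wise before concatenation, so that the ResNet50 features and the handcrafted features are on comparable scales. The function names are hypothetical, and the means and standard deviations are estimated on the training split only and reused for the test split to avoid leaking test information.

```python
import statistics


def fit_standardizer(rows):
    """Per-column mean and population standard deviation, estimated on training rows."""
    cols = list(zip(*rows))
    return [statistics.fmean(c) for c in cols], [statistics.pstdev(c) for c in cols]


def standardize(rows, means, stds):
    """Apply z-scores; constant columns (std 0) map to 0.0."""
    return [[(v - m) / s if s > 0 else 0.0 for v, m, s in zip(row, means, stds)]
            for row in rows]


def fuse_features(hand_train, deep_train, hand_test, deep_test):
    """Standardize each block with training statistics, then concatenate per image."""
    h_stats = fit_standardizer(hand_train)
    d_stats = fit_standardizer(deep_train)

    def fuse(hand, deep):
        if len(hand) != len(deep):
            raise ValueError("both feature blocks must describe the same images")
        return [h + d for h, d in zip(standardize(hand, *h_stats), standardize(deep, *d_stats))]

    return fuse(hand_train, deep_train), fuse(hand_test, deep_test)


hand_train, deep_train = [[1.0, 10.0], [3.0, 10.0]], [[0.0], [2.0]]
hand_test, deep_test = [[2.0, 10.0]], [[4.0]]
fused_train, fused_test = fuse_features(hand_train, deep_train, hand_test, deep_test)
print(fused_train, fused_test)
```

The fused width is the sum of the two block widths, so with 1000 fully connected ResNet50 features the classifier sees more than 1000 inputs per image, which is the growth in dimensionality the text links to possible overfitting.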

To show the significance of the proposed work, a performance comparison between the proposed approach and existing state-of-the-art approaches was carried out.

Table 8 shows the results of the comparison with existing studies on pneumonia detection. For instance, in [30], a pretrained VGG model was used with limited training samples, achieving an accuracy of 0.96. In [31], an ensemble of five deep learning models achieved an accuracy of 0.86. In [32], researchers developed a residual CNN to classify pneumonia chest images, which achieved an accuracy of 0.85. A CNN with the ReLU activation function was deployed in [33] and achieved an accuracy of 0.92. A ResNet18 model with CycleGAN was developed in [34] and achieved an accuracy of 0.92. In [35], a model called PneuNet was developed based on the Vision Transformer and achieved an accuracy of 0.94. In [36], ResNet34 and a Vision Transformer were combined to classify pneumonia chest X-ray images, achieving an accuracy of 0.94. In [37], a hybrid of a CNN and a Swin Transformer was used to classify chest X-ray images as pneumonia-positive or pneumonia-negative, achieving an accuracy of 0.98. Compared with these methods, the proposed approach of ResNet50 deep features with the NGBoost classifier achieves an accuracy of 0.98, which matches or exceeds the accuracies reported in the literature.

4. Conclusions

In this study, the NGBoost classifier, a probabilistic ensemble model, is employed to classify chest X-rays as pneumonia-affected or normal. The dataset used in this study was taken from the stage 2 RSNA pneumonia challenge and consists of 26,684 images. The images are preprocessed using contrast limited adaptive histogram equalization (CLAHE) to enhance their contrast and brightness. Haar, shape, and texture features were extracted as handcrafted features. For deep features, ResNet50, DenseNet121, and VGG16 models were implemented, and features were extracted from the fully connected layer of these models. The NGBoost classifier was trained on both handcrafted and deep features. The analysis shows that deep features extracted from the ResNet50 model produced an accuracy of 0.98, precision of 0.96, and recall, F1 score, and specificity of 0.97, whereas the concatenated handcrafted features produced an accuracy of 0.72, precision of 0.70, recall of 0.72, F1 score of 0.71, and specificity of 0.75. These experimental results indicate that deep features play a major role in pneumonia chest X-ray classification. One limitation of this work is its reliance on X-ray images; a higher-resolution imaging modality such as computed tomography may be preferable. In the future, the proposed approach can be applied to the classification of other lung diseases such as tuberculosis, bronchitis, and lung cancer.