Abstract

The exponential growth in wireless communication demands intelligent and adaptive spectrum-sharing solutions, especially within dynamic and densely populated 6G Cognitive Radio Networks (CRNs). This paper introduces a novel hybrid framework combining the Non-dominated Sorting Genetic Algorithm II (NSGA-II) with Proximal Policy Optimisation (PPO) for multi-objective optimisation in spectrum management. The proposed model balances spectrum efficiency, interference mitigation, energy conservation, collision rate reduction, and QoS maintenance. Evaluation on synthetic and ns-3 datasets shows that the NSGA-II + PPO hybrid consistently outperforms the random, greedy, and stand-alone PPO strategies, achieving higher cumulative reward, perfect fairness (Jain’s Fairness Index = 1.0), robust hypervolume convergence (65.1%), up to 12% reduction in PU collision rate, 20% lower interference, and approximately 40% improvement in energy efficiency. These findings validate the framework’s effectiveness in promoting fairness, reliability, and efficiency in 6G wireless communication systems.

1. Introduction

Rapid advancements in wireless communication technology are driving the evolution from 5G to 6G networks, which are expected to make the Internet of Everything (IoE) feasible. The IoE describes a vast network in which locations, people, and things are connected and can exchange services. The evolution toward 6G is being shaped by a convergence of global trends, including emerging business models, intelligent technologies, sustainability imperatives, and social responsibility [1]. As a next-generation communication paradigm, 6G is expected to enable transformative applications such as Industry 5.0, Smart Grid 2.0, Holographic Telepresence (HT), Extended Reality (XR) [2,3], Unmanned Aerial Vehicles (UAVs), and even deep-sea and space exploration [4]. Its vision extends beyond connectivity to encompass large-scale innovation, widespread Artificial Intelligence (AI), and the development of massive digital twins. By 2030, 6G is projected to have redefined the global economic landscape, contributing trillions of dollars through enabling technologies and services [5]. These advancements will drive progress across key sectors including manufacturing, healthcare, agriculture, and transportation [6]. More broadly, 6G promises to revolutionise modern civilisation, setting new standards for efficiency, innovation, and hyper-connectivity [7].

As evidenced in Table 1, the transition to 6G represents a substantial advancement over 5G, particularly in spectrum range, data rates, latency, architectural flexibility, embedded intelligence, and energy efficiency [8].

Table 1.

Comparison of 5G and 6G technologies.

More importantly, 6G can be envisioned as a vast, distributed neural network that seamlessly integrates computing, communication, and sensing. This convergence ushers in an era where everything is perceptive, interconnected, and intelligent, effectively dissolving the boundaries between the physical, biological, and digital realms. Consequently, the development of 6G networks faces substantial challenges in traffic and network engineering. According to the International Telecommunication Union (ITU), global mobile data traffic is projected to reach 5 zettabytes by 2030 [9]. To manage this exponential surge, driven by next-generation applications, effective traffic engineering (TE) becomes indispensable. Key obstacles include spectrum scarcity, energy efficiency constraints, and persistent security vulnerabilities, all of which must be addressed to ensure sustainable and resilient network operations [10]. In addition, other studies [11,12] highlight a broader set of technical challenges, encompassing resource allocation, spectrum management, waveform design, channel modelling, interference mitigation, energy optimisation, security enhancement, and the integration of Artificial Intelligence (AI). Spectrum scarcity and under-utilisation remain key barriers to the deployment of 6G networks. Integrating Cognitive Radio Networks (CRNs) into 6G architectures offers a promising solution by enabling Dynamic Spectrum Access, allowing Secondary Users to utilise idle frequencies when primary mobile components are inactive [13,14,15,16]. In 6G CRNs, resource allocation emphasises fairness, leveraging multi-channel communication to ensure equitable access regardless of individual channel capacity [17]. Notably, spectrum management, a specialised subset of resource allocation, plays a pivotal role in optimising radio frequency usage, underscoring its critical importance in achieving efficient and sustainable 6G network performance. 
Spectrum management (SM) is a foundational element of modern wireless communication infrastructure, encompassing the precise allocation, regulation, and coordination of radio frequency resources [15]. As spectrum demand continues to rise, competition for specific frequency bands intensifies, making efficient spectrum utilisation critical.

Effective spectrum management is driven by several imperatives: mitigating harmful interference to protect frequencies reserved for essential services, identifying opportunities to enhance spectral efficiency, and enabling the development and deployment of emerging technologies within adaptable regulatory frameworks. These efforts collectively contribute to lowering the cost of telecommunications equipment and fostering innovation [18].

In the 6G context, the spectrum management process is premised on a layered framework. The layered spectrum framework comprises four layers, namely, the policy and regulatory authorities layer, the spectrum management systems layer, components of the spectrum management layer, and the spectrum management technologies layer [15]. Across all the layers, the framework is designed to safeguard privacy and security in spectrum management processes, while harnessing Artificial Intelligence to enhance prediction, planning, real-time allocation, and enforcement. To realise diverse 6G use cases, networks must integrate a suite of cutting-edge technologies, including Machine Learning (ML), Artificial Intelligence (AI), Cognitive Radio Networks (CRNs), post-quantum cryptography, and IoT-driven automation [19]. These technologies collectively support the dynamic, intelligent, and secure operation of next-generation wireless systems. Cognitive radio (CR), in particular, plays a pivotal role in enhancing spectrum efficiency, improving network resilience, and enabling novel applications in 6G [20]. Achieving these outcomes requires robust mechanisms for dynamic spectrum allocation and interference mitigation, especially in environments characterised by spectrum congestion.

Moreover, the convergence of deep learning and CRNs presents a promising opportunity to further optimise 6G networks. This synergy facilitates autonomous, real-time spectrum management and significantly enhances the overall intelligence of network operations [21].

To support the envisioned capabilities, several transformative technologies must converge. These technologies include networked sensing, native AI, integrated Non-Terrestrial Networks (NTNs), extreme connectivity, native sustainability, and dependability. Native AI means that AI will be incorporated into communication functionalities from the beginning of system design through the development, management, and operation of systems to achieve the desired performance improvements [22]. Currently, to make AI native 6G a reality, MITRE is collaborating with NVIDIA [23]. This is achieved by integrating AI into 6G networks to address a variety of challenges, including improving service delivery and optimising spectrum availability to support the expansion of wireless connectivity. Native AI and networked sensing are closely intertwined and will provide critical synergy for the intelligent networks of the future. By investigating radio wave transmission, reflection, and scattering, the communication system as a whole can act as a sensor to sense and comprehend the physical world, eventually offering a wide range of new services. Furthermore, 6G’s Key Performance Indicators (KPIs), like transmission reliability, data rate, and communication latency, are subject to increased demands due to recently developed extreme connectivity [24]. The 6G system will pose significant challenges to trustworthiness, due to several factors: access through billions of endpoints and millions of generally untrustworthy sub-networks; architectural disaggregation and open interfaces; a heterogeneous cloud environment; and the integration of open-source and multi-vendor software. There will probably be an increase in both AI/ML-based attacks and attacks against AI/ML-based ideas [25]. By the 2030s, reliable wireless networks will underpin a wide range of applications for both consumers and industries. 
To address the environmental impact of ICT, 6G must evolve into an energy-efficient, adaptable digital framework that supports diverse sectors. Its sustainability should be approached holistically, integrating energy optimisation, resource stewardship, life cycle management, and social responsibility. Real-time sensing and contextual awareness will enhance service delivery and cost-effectiveness, while foundational pillars such as extreme connectivity, Integrated Non-Terrestrial Networks (NTNs), native AI, and trustworthiness will collectively enable and shape emerging 6G use cases. Key envisioned applications for 6G include mixed reality, global connectivity, autonomous mobility, spatial analytics, mission-critical services, large-scale digital twins, and AI-enhanced communication [26].

In designing a 6G Cognitive Radio Network (CRN), we considered three competing objectives: spectrum efficiency, interference reduction, and energy consumption. These goals are inherently conflicting, making optimisation a complex task.

Wireless network optimisation often treats performance metrics, such as interference mitigation or spectrum efficiency, as independent targets. However, single-objective optimisation presents a significant limitation: by focusing on improving one metric, it may inadvertently degrade other critical aspects of network performance. This challenge arises from the intrinsic interdependence among network objectives, where enhancing one can negatively impact another [27].

For instance, efforts to reduce interference and minimise energy consumption frequently conflict with the pursuit of high spectrum efficiency (SE) [28,29].

Optimising 6G networks presents significant challenges, particularly when multiple, conflicting objectives must be addressed simultaneously [30]. As noted in [31], multi-objective optimisation involves enhancing network performance and efficiency while navigating diverse constraints and performance metrics.

To improve spectrum management, this study adopted a multi-objective approach targeting three key metrics: spectrum efficiency, interference mitigation, and energy consumption. The proposed solution introduces a novel hybrid framework that integrates the Non-dominated Sorting Genetic Algorithm II (NSGA-II) [32] with Proximal Policy Optimisation (PPO) for enhanced multi-objective optimisation. Despite its effectiveness, NSGA-II exhibits several limitations, including poor scalability, high computational complexity [33], limited real-time processing capabilities, and difficulty handling many-objective problems [34]. These shortcomings highlight the potential for integrating deep learning and Reinforcement Learning (RL) techniques to overcome existing constraints and advance intelligent spectrum management in 6G networks [35].

Key Contributions

Our key contributions are summarised as follows:

- Implementation of NSGA-II: We employ the Non-dominated Sorting Genetic Algorithm II (NSGA-II) to generate Pareto-optimal solutions that balance three conflicting objectives: spectrum efficiency, interference reduction, and energy consumption. This formulation captures the trade-offs between maximising spectrum utilisation, minimising unwanted signal overlap (interference), and reducing energy expenditure during network operation.

- Integration of PPO for real-time learning: We incorporate Proximal Policy Optimisation (PPO), a Reinforcement Learning (RL) algorithm, to enable real-time policy adaptation using NSGA-II-guided rewards. PPO is particularly suited for training intelligent agents in complex 6G Cognitive Radio Network environments, allowing for dynamic responses to changing channel conditions.

- Hybrid framework evaluation: We benchmark the performance of our hybrid NSGA-II + PPO framework against existing baseline techniques. While NSGA-II [27] is recognised for producing diverse Pareto-optimal solutions in multi-objective settings, it operates offline and lacks responsiveness to real-time changes. Conversely, PPO [36] adapts policies online but is traditionally limited to single-objective optimisation and may converge prematurely when faced with competing goals.

The strength of our approach lies in the synergy between NSGA-II and PPO. NSGA-II provides a rich set of high-quality, Pareto-guided initial solutions, which define the search space for PPO. PPO then refines these solutions in real time, maintaining multi-objective performance while adapting to dynamic network conditions. This hybrid strategy achieves both long-term optimality and short-term adaptability, capabilities that neither method can fully realise in isolation. The rest of the paper is structured as follows: Section 2 presents the related work, which audits the range of efforts put towards multi-objective optimisation of 6G networks. Section 3 details the system model illustrating the configuration of a Cognitive Radio Network within a 6G environment. The evaluation of the 6G Cognitive Radio Network (CRN) is presented in Section 4, with the paper concluding in Section 5.

2. Related Work

Wireless technologies are evolving at an unprecedented pace, driven by escalating demands for reliable and high-efficiency communication. This momentum has catalysed the development of Sixth Generation (6G) networks. However, critical challenges, such as constrained spectrum resources, increasing user density, and pervasive interference, underscore the importance of Dynamic Spectrum Access as a foundational enabler for effective deployment of 6G Cognitive Radio Networks (CRNs).

For example, ref. [37] proposed a Dynamic Spectrum Access (DSA) system that enables Secondary Users to exploit underutilised channels via environmental sensing. While this approach improves spectrum efficiency, it demands precise sensing and robust coordination. Similarly, ref. [38] examined DSA in satellite–terrestrial networks, emphasising the challenges of managing overlapping domains and addressing inefficient coordination mechanisms. Cognitive NOMA (CR-NOMA) techniques, explored by [38,39], facilitate simultaneous multi-user frequency sharing through power-level differentiation. These methods yield notable throughput gains but introduce complexities in power allocation and fairness, particularly in decentralised deployments.

Table 2 synthesises recent Machine Learning (ML) approaches to spectrum sharing in Cognitive Radio Networks (CRNs), outlining both technical strengths, such as enhanced accuracy, adaptability, and incentive mechanisms, and persistent limitations, including insufficient empirical validation, scalability issues, and limited deployment realism. Despite methodological diversity spanning SVM, DRL, PPO, and MAPPO, many studies remain confined to theoretical or simulation-based evaluations, revealing a gap between algorithmic innovation and practical applicability. Recurring challenges, especially in adversarial robustness, urban deployment, and fairness, underscore the need for interdisciplinary, field-tested ML frameworks tailored to real-world CRN environments.

Table 2.

Condensed ML-based spectrum sharing in CRNs with gaps.

Hybrid Deep Reinforcement Learning (DRL) approaches have recently emerged to overcome the limitations of traditional and ML-only models in 6G CRNs by combining adaptive learning with multi-objective optimisation and evolutionary strategies. These hybrid frameworks offer promising solutions for decentralised, scalable, and real-time spectrum sharing in complex and dynamic conditions. For instance, ref. [48] applied DRL agents at access points within cell-free MIMO networks to facilitate inter-operator spectrum coordination with low signalling overhead. However, the effects of large-scale MNO densification remain unstudied. Huang et al. [49] developed DRL-based autonomous spectrum access strategies for D2D-enabled IoT networks, achieving high throughput without prior access rules but lacking scalability assessments. Ref. [50] improved spectrum access and power control with DRL in CRNs, reducing collisions and improving rewards, but they did not evaluate robustness under interference and mobility. The hybrid discrete-continuous action space DRL algorithms proposed by [51] deliver near-optimal throughput with reduced sensing overhead but require standardised benchmarking and scalability studies.

Multi-agent Deep Reinforcement Learning (DRL) methods, such as MADDPG, have advanced cooperative spectrum sensing by reducing communication overhead [52]. Concurrently, federated learning and graph convolutional networks have enabled scalable learning in integrated satellite-terrestrial networks [53]. Distributed Q-learning frameworks for secure spectrum sharing in ultra-dense networks [54], along with consensus-based Reinforcement Learning for decentralised spectrum management [55], offer promising decentralised solutions though their performance under adversarial and interference-prone conditions remains under-explored. MARL architectures addressing partial observability and large action spaces demonstrate improved convergence, yet they still lack real-world validation [56]. Meanwhile, blockchain-enhanced evolutionary algorithms propose secure resource optimisation, but they require deeper integration with learning models to achieve practical viability [53].

Collectively, these hybrid DRL frameworks exhibit enhanced adaptiveness, throughput, and energy efficiency. However, they continue to face challenges in scalability, generalisability, convergence speed, and sensitivity to the environmental uncertainties typical of 6G scenarios. The proposed NSGA-II + PPO model addresses these limitations by coupling Pareto-optimal evolutionary searching with actor-critic policy learning within a modular, empirically validated simulation environment.

Table 3 presents a compact overview of DRL-based spectrum sharing strategies in 6G CRNs, spanning domains such as cell-free MIMO, D2D IoT, and energy-aware networks. While each method demonstrates strengths in scalability, throughput, and collision mitigation, common limitations persist, namely insufficient robustness, limited scalability analysis, and lack of real-world adaptability. These gaps underscore the need for empirical validation and hybrid benchmarking.

Table 3.

Compact summary of DRL-based spectrum sharing in 6G CRNs.

Recent intelligent algorithms, such as those used for monitoring tower pumping units under varying operational conditions, illustrate the importance of tracking critical parameters to maintain system efficiency and reliability. These monitoring systems rely on algorithms that process multiple data streams to detect anomalies and anticipate failures, thereby enhancing operational resilience. Many employ Machine Learning, particularly neural networks, to model normal behaviour and flag deviations indicative of potential faults [57]. This concept of process monitoring can be generalised to network design, enabling applications such as anomaly detection for interference and security, predictive analytics for resource allocation, and state-based optimisation for spectrum sharing.

A concrete application of this concept to 6G CRNs involves modelling the network as a dynamic environment with multiple operational states, such as high-traffic, low-interference, or low-power modes. Optimisation algorithms can then be used to derive effective policies for spectrum sharing and power control, guiding the network toward its optimal state in response to real-time conditions. An alternative framework proposed in [58] advocates for decentralised learning across domains, where federated Reinforcement Learning enables scalable spectrum-sharing policies across distributed CRN nodes while preserving privacy and adaptability.

A related multi-agent DRL framework based on Stackelberg game theory [59] addresses hierarchical resource allocation and task offloading in mobile edge computing (MEC) environments. Their model demonstrates strong adaptability and scalability in vehicular networks that share key characteristics with 6G CRNs, such as high mobility, latency sensitivity, and decentralised control. Although both approaches aim to balance competing objectives under dynamic conditions, our hybrid NSGA-II + PPO framework focuses specifically on multi-objective spectrum sharing, employing Pareto-guided policy refinement rather than leader-follower coordination.

3. System Model

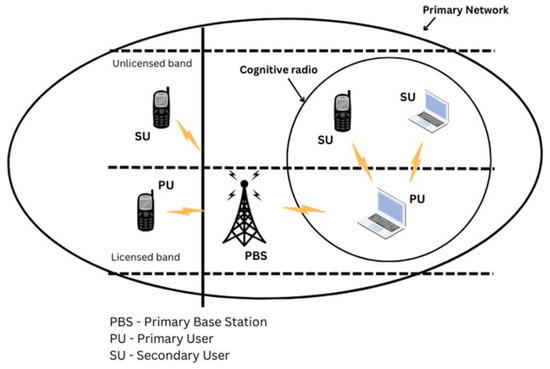

The system model depicts a 6G CRN environment comprising N authorised Primary Users (PUs) and M unlicensed Secondary Users (SUs). Each PU holds exclusive rights to a spectrum band, while SUs opportunistically access idle channels without causing interference to active PUs. Secondary users must continuously sense and vacate bands when PUs return. We assumed error-free and latency-free sensing in the design of our system model. Infrastructure-based and ad hoc CRN architectures are considered, with licensed and unlicensed spectrum access enabling coexistence between primary and secondary transmissions. In contrast to 5G, which operates mainly below 100 GHz and is optimised for enhanced broadband services and massive connectivity, 6G will extend into the sub-THz spectrum and is being designed with ultra-reliable, low-latency communication and AI-native control in mind [17,19]. These new characteristics change how spectrum sharing must be modelled. For example, sub-THz transmissions experience high propagation losses, and denser network deployments require more careful interference management and near-real-time decision-making. To reflect these realities, our formulation explicitly incorporates both interference and energy consumption in scenarios that reflect ultra-dense deployments and dynamic sub-THz channels, as illustrated in Figure 1.

Figure 1.

Cognitive radio network architecture.

Dynamic spectrum allocation governs the simultaneous assignment of Secondary Users (SUs) to available spectrum bands, enabling efficient spectrum sharing while adhering to interference and quality-of-service constraints. This allocation is represented using a binary decision variable:

$$x_{i,j} \in \{0, 1\}, \quad i = 1, \dots, M, \; j = 1, \dots, N,$$

where $x_{i,j} = 1$ indicates that SU $i$ is assigned channel $j$, and $x_{i,j} = 0$ otherwise.

System performance is evaluated through the following metrics:

- Signal-to-Interference-plus-Noise Ratio (SINR):

  $$\gamma_{i,j} = \frac{P_{i,j}\, G_{i,j}}{\sum_{k \neq i} P_{k,j}\, G_{k,j} + I_{j}^{\mathrm{PU}} + N_0 B_j},$$

  where $P_{i,j}$ is the transmission power of SU $i$ on band $j$, $G_{i,j}$ the channel gain for SU $i$, $P_{k,j}$ and $G_{k,j}$ denote the power and channel gain of interfering SUs, $I_{j}^{\mathrm{PU}}$ is the interference from active PUs, $N_0$ the noise spectral density, and $B_j$ is the bandwidth of band $j$. $N_0$ describes how noise power is distributed over frequency: it is the noise power per unit bandwidth, commonly expressed in watts per hertz (W/Hz), which is dimensionally equivalent to joules (J). $B_j$ is the frequency range over which the receiver on band $j$ can process a received signal.

- Objective Function 1, Spectrum Efficiency (SE): measures the aggregate achievable throughput across Secondary Users.

- Objective Function 2, Interference to Primary Users (IL): quantifies the total interference induced by secondary transmissions on PUs.

- Objective Function 3, Energy Consumption per data rate (EC): captures energy efficiency by measuring the power consumed per unit data rate.
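As an illustration of how these metrics can be evaluated for a candidate allocation, the following Python sketch computes the SINR and the three objectives. The objective expressions are assumptions made for this example only: SE is taken as the sum of $\log_2(1 + \mathrm{SINR})$ over assigned SU-band pairs, IL as the sum of transmit power multiplied by an SU-to-PU gain, and EC as total transmit power divided by total rate, with the rate on band $j$ taken as $B_j \log_2(1 + \mathrm{SINR})$. The function names and the `g_pu` gain matrix are hypothetical.

```python
import math


def sinr(i, j, x, p, g, pu_interference, n0, bandwidth):
    """SINR of SU i on band j; only SUs assigned to band j interfere."""
    co_channel = sum(
        x[k][j] * p[k][j] * g[k][j] for k in range(len(x)) if k != i
    )
    noise = n0 * bandwidth[j]  # noise power = spectral density x bandwidth
    return p[i][j] * g[i][j] / (co_channel + pu_interference[j] + noise)


def objectives(x, p, g, g_pu, pu_interference, n0, bandwidth):
    """Return (SE, IL, EC) for allocation x under the assumed formulas."""
    se = il = power = rate = 0.0
    for i in range(len(x)):
        for j in range(len(x[0])):
            if not x[i][j]:
                continue
            eff = math.log2(1.0 + sinr(i, j, x, p, g, pu_interference, n0, bandwidth))
            se += eff                      # bits/s/Hz on this link
            rate += bandwidth[j] * eff     # bits/s on this link
            il += p[i][j] * g_pu[i][j]     # interference seen by the PU (assumed model)
            power += p[i][j]
    ec = power / rate if rate > 0 else math.inf
    return se, il, ec


# Two SUs on two separate bands, unit powers, gains, noise, and bandwidths.
x = [[1, 0], [0, 1]]
p = [[1.0, 0.0], [0.0, 1.0]]
g = [[1.0, 1.0], [1.0, 1.0]]
g_pu = [[0.5, 0.5], [0.5, 0.5]]
se, il, ec = objectives(x, p, g, g_pu, [0.0, 0.0], 1.0, [1.0, 1.0])
```

In this toy case each SU sees an SINR of 1, so SE is 2 bits/s/Hz, IL is 1.0, and EC is 1.0 (power per unit rate).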

3.1. Multi-Objective Optimisation Problem Formulation

A multi-objective optimisation problem seeks to optimise multiple conflicting objectives simultaneously. Formally, it can be expressed as

$$\min_{\mathbf{x}} \; \mathbf{F}(\mathbf{x}) = \big[ f_1(\mathbf{x}), f_2(\mathbf{x}), \dots, f_k(\mathbf{x}) \big],$$

subject to constraints, where $\mathbf{x}$ is the decision vector and $f_1, \dots, f_k$ are the $k$ objective functions. A solution is considered Pareto-optimal if no other feasible solution can improve one objective without simultaneously degrading at least one other [60]. The collection of all such solutions constitutes the Pareto front, which captures the inherent trade-offs among competing objectives. In the context of spectrum sharing for 6G Cognitive Radio Networks (CRNs), objectives such as maximising throughput, minimising interference, and reducing energy consumption often conflict, making Pareto-based approaches, such as NSGA-II, particularly well-suited to navigating these multi-objective challenges.
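The dominance relation behind Pareto optimality can be made concrete with a short Python sketch. It assumes all objectives are expressed as minimisation, so spectrum efficiency is entered with a negative sign; the sample objective vectors are invented for illustration.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and strictly better in one."""
    return all(ai <= bi for ai, bi in zip(a, b)) and any(
        ai < bi for ai, bi in zip(a, b)
    )


def pareto_front(points):
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical objective vectors: (-SE, IL, EC), all to be minimised.
candidates = [
    (-4.0, 2.0, 1.0),
    (-3.0, 1.0, 1.0),
    (-3.0, 2.5, 1.5),  # worse than the first candidate in every objective
    (-5.0, 3.0, 0.8),
]
front = pareto_front(candidates)
```

Here the third candidate is dominated and dropped, while the other three remain because each is best in at least one trade-off direction.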

Efficient spectrum allocation in Cognitive Radio Networks (CRNs) requires balancing inherently conflicting objectives. Prioritising spectrum efficiency alone can lead to elevated interference and increased energy consumption, while minimising interference or power usage may constrain spectrum utilisation and reduce network capacity. These trade-offs naturally motivate the formulation of the problem as a multi-objective optimisation task:

$$\max \; \mathrm{SE}, \qquad \min \; \mathrm{IL}, \qquad \min \; \mathrm{EC},$$

subject to the following constraints:

$$\begin{aligned}
\gamma_{i,j} &\ge \gamma_{\mathrm{th}}, && \forall i, j \text{ with } x_{i,j} = 1, \\
\sum_{j=1}^{N} P_{i,j} &\le P_{\max}, && \forall i, \\
\sum_{i=1}^{M} x_{i,j}\, I_{i,j} &\le I_{\mathrm{th}}, && \forall j, \\
\sum_{j=1}^{N} x_{i,j} &\le 1, && \forall i, \\
x_{i,j} &\in \{0, 1\}, && \forall i, j,
\end{aligned}$$

where the SINR threshold ensures reliable communication, the power budget restricts transmission energy, the interference constraint protects PUs, and the allocation limit restricts each SU to at most one channel.

The first constraint, $\gamma_{i,j} \ge \gamma_{\mathrm{th}}$, enforces a minimum link quality for SU $i$ on band $j$: the signal must be sufficiently strong relative to noise and interference. This maintains Quality of Service (QoS), particularly in demanding real-time applications such as autonomous systems or video streaming. The second constraint, $\sum_{j} P_{i,j} \le P_{\max}$, requires that the total transmission power allocated by SU $i$ across all frequency bands does not exceed the threshold $P_{\max}$. It prevents over-consumption of power, promoting an energy-aware network, and it also supports QoS and interference control by balancing signal quality against interference to nearby users or systems. The third constraint, $\sum_{i} x_{i,j} I_{i,j} \le I_{\mathrm{th}}$, where $I_{i,j}$ denotes the interference that SU $i$ causes on band $j$, ensures that the total interference from all selected Secondary Users on each band stays within the permissible limit $I_{\mathrm{th}}$. It prevents harmful interference with Primary Users (PUs), maintaining coexistence and encouraging spectrum efficiency while preserving signal integrity; it also acts as a key boundary in optimisation models for spectrum allocation and supports regulatory compliance and spectrum etiquette. The fourth constraint, $\sum_{j} x_{i,j} \le 1$, applies to Secondary Users and enforces mutual exclusivity: each SU must commit to at most one channel from the available set, which avoids cross-band interference.

The final constraint, $x_{i,j} \in \{0, 1\}$, is a binary domain constraint. Binary decision variables of this kind are the backbone of the optimisation logic, controlling user–band assignments, interference mitigation, and fairness guarantees.
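A feasibility check over these five constraints might look like the sketch below. It is illustrative only: the function name, the constraint labels, and the precomputed `sinr` and `su_interf` matrices (per-SU, per-band SINR and SU-to-PU interference) are assumptions, and the thresholds are passed in rather than taken from the paper.

```python
def constraint_violations(x, p, sinr, su_interf, sinr_min, p_max, i_max):
    """Return the names of violated constraints; an empty list means feasible."""
    n_su, n_band = len(x), len(x[0])
    violated = set()
    for i in range(n_su):
        if any(v not in (0, 1) for v in x[i]):
            violated.add("binary")               # x[i][j] must be 0 or 1
        if sum(x[i]) > 1:
            violated.add("one_channel_per_su")   # at most one band per SU
        if sum(p[i]) > p_max:
            violated.add("power_budget")         # total power across bands
        if any(x[i][j] and sinr[i][j] < sinr_min for j in range(n_band)):
            violated.add("sinr")                 # QoS threshold on assigned bands
    for j in range(n_band):
        total = sum(x[i][j] * su_interf[i][j] for i in range(n_su))
        if total > i_max:
            violated.add("pu_interference")      # protection of the PU on band j
    return sorted(violated)


p = [[0.5, 0.0], [0.0, 0.5]]
sinr = [[10.0, 0.0], [0.0, 10.0]]
su_interf = [[0.1, 0.0], [0.0, 0.1]]

ok = constraint_violations([[1, 0], [0, 1]], p, sinr, su_interf, 5.0, 1.0, 0.2)
bad = constraint_violations([[1, 1], [0, 1]], p, sinr, su_interf, 5.0, 1.0, 0.2)
```

Returning the violated constraint names rather than a single boolean makes it easier to build penalty terms or repair operators inside an evolutionary search.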

Following [60], the objective was to determine the trade-offs represented by the Pareto-optimal front of non-dominated solutions among spectrum efficiency, interference, and energy consumption.

3.2. Hybrid NSGA-II + PPO Framework

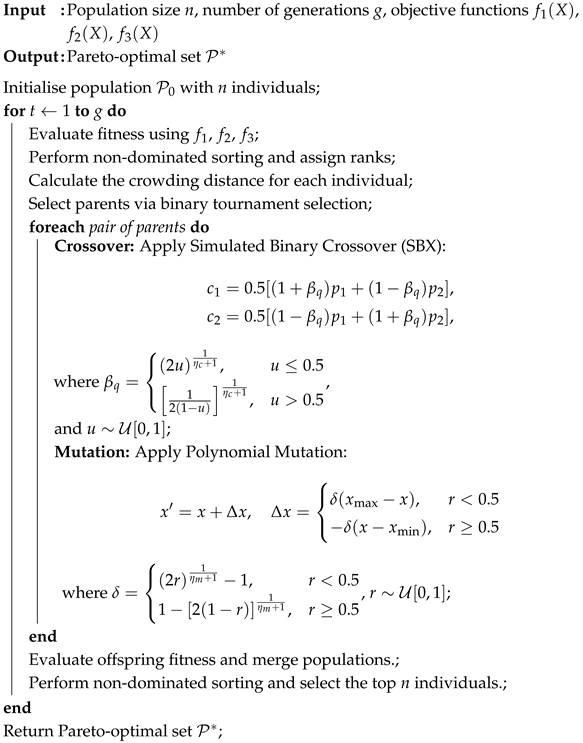

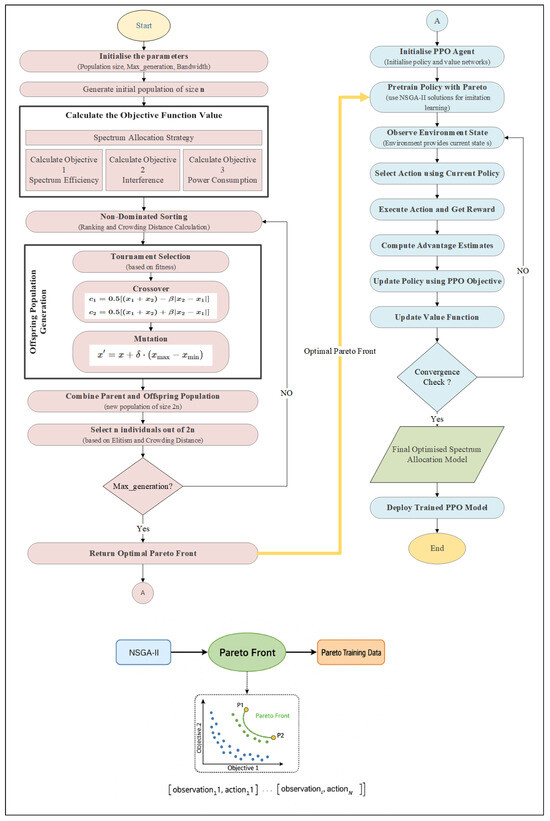

A modular two-stage hybrid framework is proposed to tackle the multi-objective spectrum sharing challenge in dynamic 6G Cognitive Radio Networks (CRNs). The first stage utilises NSGA-II to generate a diverse set of Pareto-optimal spectrum allocation solutions, simultaneously optimising the key objectives: spectrum efficiency, interference mitigation, and energy consumption. These steps are detailed in Algorithm 1. NSGA-II begins by performing a fast non-dominated sorting process, organising the population into Pareto fronts according to dominance relations. A solution is considered non-dominated if no other solution is at least as good in every objective and strictly better in at least one. Within each front, a crowding distance measure estimates how close a solution is to its neighbours, favouring those in less crowded regions to maintain diversity. Selection is carried out through a binary tournament that prioritises rank first and crowding distance second, after which Simulated Binary Crossover (SBX) and Polynomial Mutation operators generate new candidate solutions. Iterating this process gradually yields a well-distributed Pareto front that balances competing objectives.
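The ranking steps described above (fast non-dominated sorting, crowding distance, and the crowded tournament comparison) can be sketched in plain Python as follows. This is a generic textbook-style illustration with all objectives minimised, not the implementation used in this work; the function names and sample objective vectors are hypothetical, and SBX and Polynomial Mutation are omitted.

```python
import math


def dominates(a, b):
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def fast_non_dominated_sort(objs):
    """Group solution indices into Pareto fronts (front 0 is the best)."""
    n = len(objs)
    beaten = [[] for _ in range(n)]   # solutions that p dominates
    n_dominators = [0] * n            # how many solutions dominate p
    fronts = [[]]
    for p in range(n):
        for q in range(n):
            if dominates(objs[p], objs[q]):
                beaten[p].append(q)
            elif dominates(objs[q], objs[p]):
                n_dominators[p] += 1
        if n_dominators[p] == 0:
            fronts[0].append(p)
    while fronts[-1]:
        nxt = []
        for p in fronts[-1]:
            for q in beaten[p]:
                n_dominators[q] -= 1
                if n_dominators[q] == 0:
                    nxt.append(q)
        fronts.append(nxt)
    return fronts[:-1]                # drop the trailing empty front


def crowding_distance(objs, front):
    """Larger distance means a less crowded region of the front."""
    dist = {i: 0.0 for i in front}
    for m in range(len(objs[0])):
        ordered = sorted(front, key=lambda i: objs[i][m])
        lo, hi = objs[ordered[0]][m], objs[ordered[-1]][m]
        dist[ordered[0]] = dist[ordered[-1]] = math.inf  # keep boundary points
        if hi == lo:
            continue
        for prev, cur, nxt in zip(ordered, ordered[1:], ordered[2:]):
            dist[cur] += (objs[nxt][m] - objs[prev][m]) / (hi - lo)
    return dist


def crowded_better(a, b, rank, dist):
    """Tournament rule: lower rank wins; ties go to the larger crowding distance."""
    if rank[a] != rank[b]:
        return a if rank[a] < rank[b] else b
    return a if dist[a] >= dist[b] else b


objs = [(1, 4), (2, 3), (3, 2), (4, 1), (3, 4), (4, 4)]
fronts = fast_non_dominated_sort(objs)
dist = crowding_distance(objs, fronts[0])
```

For the six sample points, the first four form the leading front and the remaining two fall into successive fronts; within the leading front the two extreme points receive infinite crowding distance so that they are always retained.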

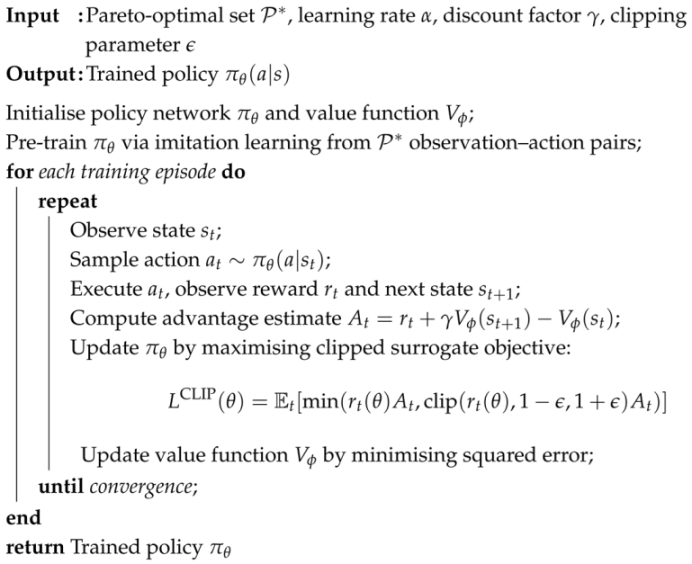

In the second stage, Proximal Policy Optimisation (PPO) is employed to refine these Pareto-optimal solutions into adaptive policies capable of making real-time spectrum access decisions. The PPO training procedure is outlined in Algorithm 2. PPO is a policy-gradient Reinforcement Learning algorithm designed to enhance training stability and efficiency. It employs an actor-critic architecture, where the actor defines the policy $\pi_\theta(a_t \mid s_t)$ and the critic estimates the state-value function $V_\phi(s_t)$.

In our implementation, the state $s_t$ captures the essential network parameters, including channel availability, observed interference levels, and the energy status of Secondary Users. Conditioned on this state, the actor network generates an action $a_t$, representing decisions related to spectrum access and power allocation. The critic network provides a baseline by evaluating the current state, thereby guiding the actor’s policy updates.

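The optimisation process is driven by PPO’s clipped surrogate objective, which restricts the probability ratio of new and old policies to remain within a trust region:

$$L^{\mathrm{CLIP}}(\theta) = \mathbb{E}_t\!\left[ \min\!\left( r_t(\theta)\, \hat{A}_t,\; \operatorname{clip}\!\left(r_t(\theta),\, 1 - \epsilon,\, 1 + \epsilon\right) \hat{A}_t \right) \right],$$

where $r_t(\theta) = \pi_\theta(a_t \mid s_t) / \pi_{\theta_{\mathrm{old}}}(a_t \mid s_t)$ is the policy ratio, $\hat{A}_t$ is the advantage estimate, and $\epsilon$ is the clipping parameter. This mechanism prevents large, destabilising jumps in policy behaviour and promotes more consistent learning. In our hybrid framework, PPO does not start from scratch; instead, it builds on the Pareto-optimised solutions provided by NSGA-II, enabling faster convergence to robust, high-performing policies in dynamic spectrum environments.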
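To show how the clipping acts on individual samples, the sketch below evaluates the standard PPO clipped surrogate for a small batch of policy ratios and advantage estimates. The function name and the clipping parameter value of 0.2 are assumptions chosen for illustration; the value used in this work is not stated here.

```python
def clipped_surrogate(ratios, advantages, eps=0.2):
    """Batch mean of min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    terms = []
    for r, a in zip(ratios, advantages):
        clipped_r = max(1.0 - eps, min(r, 1.0 + eps))
        terms.append(min(r * a, clipped_r * a))
    return sum(terms) / len(terms)


# Ratio 1.5 with a positive advantage is capped at 1.2;
# ratio 0.5 with a negative advantage is floored at 0.8.
value = clipped_surrogate([1.5, 0.5, 1.0], [1.0, -1.0, 2.0])
```

The three per-sample terms are 1.2, -0.8, and 2.0, so the batch objective is 0.8. In both clipped cases the policy gains nothing from moving the ratio further from 1, which is what keeps each update close to the previous policy.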

Algorithm 1: NSGA-II for multi-objective spectrum allocation.

The sequential integration of NSGA-II’s global, multi-objective search with PPO’s local policy refinement balances thorough exploration of the complex solution space with efficient exploitation and adaptability to dynamic network conditions. This synergy enables robust, scalable, and flexible spectrum sharing in 6G CRNs.

Figure 2 presents the end-to-end flowchart of the hybrid framework, illustrating the interaction between the NSGA-II evolutionary search, policy pre-training via imitation learning, and subsequent Reinforcement Learning fine-tuning within the cognitive radio environment.

Figure 2.

End-to-end flowchart of the proposed hybrid NSGA-II + PPO framework for dynamic spectrum allocation in 6G CRNs.

The proposed hybrid framework integrates Proximal Policy Optimisation (PPO) for adaptive policy refinement with NSGA-II for multi-objective spectrum allocation. While this combination enhances decision quality and optimisation performance, it introduces substantial computational overhead during both the learning and allocation phases.

Algorithm 2: Proximal Policy Optimisation (PPO) for policy refinement.

- NSGA-II (evolutionary search complexity): Population Dynamics: NSGA-II operates over multiple generations with a sizeable population, demanding repeated evaluations of each individual across all objectives. This gives rise to complexity for non-dominated sorting, where n is the population size and M is the number of objectives.

- PPO (Reinforcement Learning stability): In Algorithm 2, bootstrapping PPO from the Pareto-optimal solutions adds a front-loaded cost, because the policy is first pre-trained by imitation learning over diverse state-action pairs.
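The quadratic cost of non-dominated sorting noted above comes from comparing every pair of individuals on every objective. The following is a minimal sketch of the standard fast non-dominated sort, assuming all objectives are minimised; the function names are illustrative and do not correspond to our released code.

```python
def dominates(a, b):
    """True if a is no worse than b on every objective and strictly better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def fast_non_dominated_sort(objectives):
    """Group individuals (given as objective vectors) into Pareto fronts.

    Returns a list of fronts, each a list of indices into `objectives`.
    The double loop performs n^2 comparisons of M objectives each.
    """
    n = len(objectives)
    dominated_sets = [[] for _ in range(n)]  # indices that individual p dominates
    domination_count = [0] * n               # how many individuals dominate p
    fronts = [[]]

    for p in range(n):
        for q in range(n):
            if p == q:
                continue
            if dominates(objectives[p], objectives[q]):
                dominated_sets[p].append(q)
            elif dominates(objectives[q], objectives[p]):
                domination_count[p] += 1
        if domination_count[p] == 0:
            fronts[0].append(p)  # nobody dominates p: first front

    i = 0
    while fronts[i]:
        next_front = []
        for p in fronts[i]:
            for q in dominated_sets[p]:
                domination_count[q] -= 1
                if domination_count[q] == 0:
                    next_front.append(q)
        i += 1
        fronts.append(next_front)

    return fronts[:-1]  # drop the trailing empty front
```

For the five two-objective points (1, 5), (2, 3), (4, 1), (3, 4), and (5, 5), the first three are mutually non-dominated and form the first front, (3, 4) forms the second, and (5, 5) forms the third.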

In simulation settings, this hybrid algorithmic design is generally preferred due to the trade-off between computational cost and policy robustness. However, to reduce runtime bottlenecks, scaling to real-time or hardware-constrained deployments will necessitate the use of surrogate models, parallelisation techniques, or algorithmic simplification.

Ultimately, enabling Dynamic Spectrum Access, intelligent interference management, and ultra-reliable low-latency communication is essential for advanced applications such as smart agriculture, autonomous vehicles, and industrial IoT. The 6G Cognitive Radio Network (CRN) system model plays a pivotal role in translating these capabilities into real-world deployments.

By integrating adaptive algorithms with real-time spectrum sensing, CRNs facilitate efficient utilisation of underused frequency bands, ensuring scalable and resilient connectivity in densely populated environments. This paradigm bridges theoretical innovation with practical infrastructure by promoting regulatory flexibility, optimising energy consumption, and aligning with global trends in intelligent edge services and sustainable network design.

4. Evaluation

4.1. Experimental Setup

A controlled experimental environment was configured to rigorously evaluate the proposed hybrid framework, which integrates NSGA-II and PPO for dynamic spectrum sharing in 6G Cognitive Radio Networks (CRNs):

- Dataset description: The experiments utilised a composite dataset comprising approximately 15,000 samples generated from a Python-based spectrum simulator and the ns-3 network simulator to reflect both synthetic and realistic CRN behaviours.

- Generation: The synthetic dataset was generated using a customised Python 3.10 script to evaluate the proposed model. Each record represented a discrete time slot in which three Secondary Users (SUs) contended for access to five spectrum channels, with the number of channels equal to the number of Primary Users (PUs), under varying spatial and interference conditions. The dataset captured PU and SU coordinates, PU activity states, SU access requests, transmit power levels, channel gains, SINR, interference levels, throughput, and energy consumption. By modelling diverse network topologies, PU activity patterns, and SU requests, it reflected the stochastic spectrum usage of 6G Cognitive Radio Networks, enabling the GA-DRL framework to learn allocation strategies that optimised throughput, mitigated interference, and ensured fairness. The dataset was stored in CSV format.

- Training: Training and evaluation were performed using Google Colab Pro with NVIDIA T4 GPUs, leveraging PyTorch 2.7.1 for PPO and custom Python implementations for NSGA-II. The environment simulated sub-6 GHz operation across five channels, with dynamic Primary and Secondary User interactions modelled per time slot. The evaluation consisted of 30 independent episodes of 512 steps each, following 30,000 PPO training time-steps. Baseline comparisons included random allocation, greedy heuristics, and stand-alone PPO. The key hyperparameters are summarised in Table 4.

Table 4. Hyperparameter configuration.

Regarding the hyperparameter configuration, a mutation probability of 10% preserves genetic diversity without destabilising convergence; it is a standard heuristic in evolutionary algorithms for improving solution diversity in multi-objective spaces and avoiding premature convergence. Using 10 epochs per update provides sufficient gradient refinement without over-fitting to the sampled trajectories, especially in dynamic spectrum environments. To prevent oscillations during Reinforcement Learning fine-tuning, the PPO learning rate (see Table 4) is chosen to give steady updates to the policy and value networks. The PPO clip range ($\epsilon$) is set to a recommended default (see Table 4); it limits the size of each policy update and so prevents harmful shifts in the action distribution, particularly in multi-user environments such as CRNs. The PPO batch size is set to 64 to balance the reliability of the gradient estimate against computational efficiency.

4.2. Results and Analysis

4.2.1. Convergence and Multi-Objective Optimisation

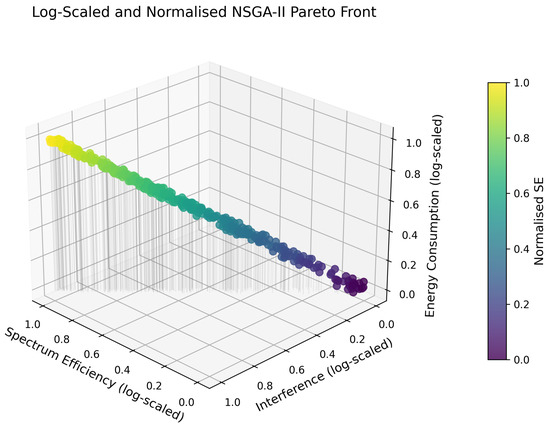

Figure 3 depicts the log-scaled and normalised Pareto front obtained by the NSGA-II algorithm. This front represents a diverse collection of the best trade-off options between the three key objectives: spectrum efficiency, interference, and energy consumption. Because these objectives are conflicting, improving one often comes at the expense of another, so the Pareto front highlights the best possible compromises the optimisation can achieve.

Figure 3.

Log-scaled and normalised NSGA-II Pareto front.

The hypervolume metric was used to quantify how well the NSGA-II optimiser performed, as depicted in Figure 4. The hypervolume measures the size of the region of objective space that is dominated by the Pareto-front solutions, relative to a predefined reference point [60]. Intuitively, a larger hypervolume means that the Pareto front covers more of the objective space with high-quality solutions, indicating better convergence and diversity.

Figure 4.

Hypervolume convergence across NSGA-II generations.

In this study, since all the objectives were normalised to a standard scale and log-transformed for stability, the hypervolume value of approximately 0.65 (or 65%) suggests that the NSGA-II algorithm found a broad and well-distributed set of high-performing solutions close to the actual Pareto-optimal front. This strong convergence reflects effective exploration and exploitation of the solution space, making the optimiser capable of providing diverse spectrum sharing policies that balance efficiency, interference, and energy use under different network conditions.
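To make the hypervolume metric concrete, the sketch below estimates it by Monte Carlo sampling for a front of any dimension. It is an illustration under stated assumptions, not the routine used to produce Figure 4: all objectives are assumed to be minimised, every front point is assumed to lie at or below the reference point, and the function name, sample count, and seed are hypothetical.

```python
import numpy as np


def hypervolume_mc(front, ref_point, n_samples=200_000, seed=0):
    """Monte Carlo estimate of the hypervolume dominated by `front`.

    Assumes minimisation and that every front point is <= ref_point.
    """
    front = np.asarray(front, dtype=float)
    ref = np.asarray(ref_point, dtype=float)

    # Every dominated point lies in the box between the component-wise
    # minimum of the front and the reference point, so sample only there.
    lower = front.min(axis=0)
    rng = np.random.default_rng(seed)
    samples = rng.uniform(lower, ref, size=(n_samples, front.shape[1]))

    # A sample is dominated if at least one front point is no worse than it
    # on every objective.
    dominated = np.zeros(n_samples, dtype=bool)
    for point in front:
        dominated |= np.all(samples >= point, axis=1)

    box_volume = float(np.prod(ref - lower))
    return box_volume * float(dominated.mean())
```

With objectives normalised to the unit interval and the reference point at (1, 1, 1), the returned value can be read directly as the fraction of the unit cube covered by the front. A single solution at (0.5, 0.5, 0.5), for example, dominates a cube of volume 0.125.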

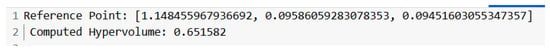

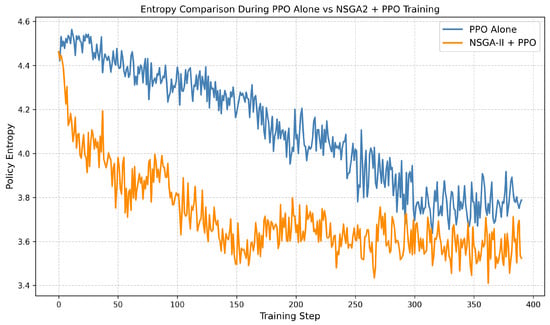

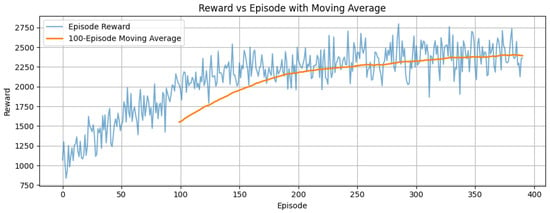

4.2.2. Learning Behaviour and Policy Stability

Figure 5 shows the policy entropy progression during training for the PPO alone and the NSGA-II + PPO hybrid agents. The hybrid model achieved an earlier and steadier reduction in entropy, reflecting a more efficient balance between exploration and exploitation. This behaviour was driven by the Pareto-informed pre-training, which guided the agent towards more purposeful exploration from the start.

Figure 5.

Entropy progression during training: PPO vs. NSGA-II + PPO.
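The entropy tracked in Figure 5 is the Shannon entropy of the policy's action distribution. The sketch below assumes a categorical policy over the five channels (an assumption about the action space made for illustration) and uses a hypothetical helper name.

```python
import numpy as np


def categorical_entropy(probs):
    """Shannon entropy (in nats) of a categorical action distribution."""
    probs = np.asarray(probs, dtype=float)
    # Zero-probability actions contribute nothing and would make log() fail.
    nonzero = probs[probs > 0]
    return float(-np.sum(nonzero * np.log(nonzero)))
```

A uniform policy over five channels has the maximum entropy of ln 5 (about 1.61 nats), whereas a policy that always selects the same channel has zero entropy, so a falling entropy curve indicates that the agent is committing to specific allocations.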

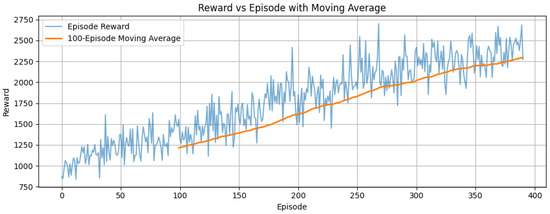

Correspondingly, Figure 6 presents the episode reward over training, including a 100-episode moving average to smooth out short-term fluctuations [61]. The moving-average curve in Figure 7 shows that the hybrid agent attained higher rewards more rapidly and converged with greater stability than PPO alone (Figure 8). This smoothed view highlights the accelerated and more consistent learning dynamics enabled by the hybrid framework, which benefits from structured initialisation based on multi-objective optimisation.

Figure 6.

Average reward over training episodes.

Figure 7.

Episode reward vs. episode number with 100-episode moving average for NSGA-II + PPO hybrid.

Figure 8.

Episode reward vs. episode number with 100-episode moving average for PPO alone.
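The 100-episode moving average used in Figures 7 and 8 can be computed as follows. This is a minimal sketch with a hypothetical function name; it returns only the fully overlapping windows, so the smoothed curve is 99 points shorter than the raw reward series.

```python
import numpy as np


def moving_average(rewards, window=100):
    """Simple moving average over `window` consecutive episode rewards."""
    rewards = np.asarray(rewards, dtype=float)
    if len(rewards) < window:
        # Not enough episodes to fill a single window.
        return np.array([])
    kernel = np.ones(window) / window
    # mode="valid" keeps only positions where the window fits entirely.
    return np.convolve(rewards, kernel, mode="valid")
```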

4.2.3. Performance Comparison

Table 5 summarises the comparative evaluation metrics for all the strategies. The hybrid framework performed best on energy efficiency, interference, PU collisions (22.88%), and hypervolume (65.2%), but trailed stand-alone PPO on average reward (3433.73 vs. PPO’s 4024.45) and spectrum utilisation (48.83% vs. PPO’s 56.82%). The greedy and random strategies, used as baselines, revealed poor adaptability and consistently weak performance across all the metrics. While PPO excels in maximising reward and utilisation, it lacks sensitivity to operational constraints. In contrast, the hybrid NSGA-II + PPO framework delivers a more balanced and socially responsible solution, better aligned with the goals of sustainable and inclusive spectrum access: it combines a competitive average reward with high fairness (Jain’s Fairness Index = 1.0), reduced interference, lower PU collision rates, and superior energy efficiency, confirming its multi-objective optimisation efficacy.

Table 5.

Performance comparison of spectrum sharing strategies.

4.2.4. Channel Usage and Fairness

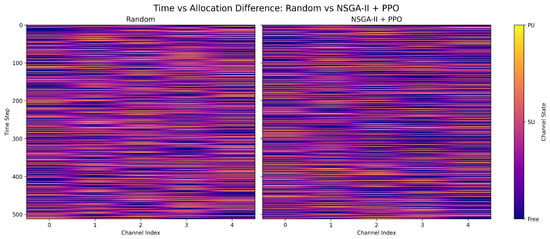

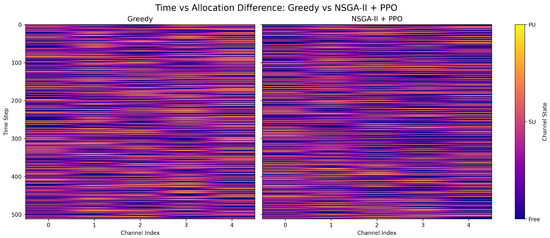

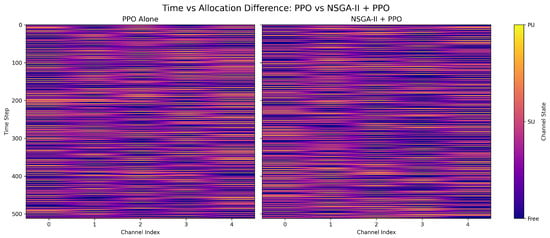

Figure 9, Figure 10 and Figure 11 depict the channel allocation patterns of the four spectrum sharing strategies over time. All three figures shared a consistent design, using the same time-step axis, channel index, and colour-coded scheme to represent PU/SU activity. This uniformity naturally resulted in a strong visual similarity between the figures. The random and greedy methods showed irregular and skewed usage, leading to channel congestion and inefficient spectrum utilisation. PPO alone improved allocation focus but exhibited channel overuse and potential contention hotspots.

Figure 9.

Channel usage: random vs. NSGA-II + PPO.

Figure 10.

Channel usage: greedy vs. NSGA-II + PPO.

Figure 11.

Channel usage: PPO vs. NSGA-II + PPO.

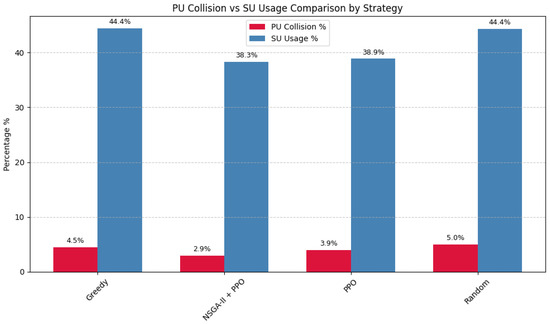

The NSGA-II + PPO hybrid model distributed Secondary User transmissions more evenly across all the channels (see Figure 12), reducing channel usage variance by approximately 18% compared to PPO alone. This balanced allocation directly contributed to a lower Primary User collision rate, as evidenced by the 2.9% collision rate observed for the hybrid model versus 3.9% for PPO alone and the higher rates for the greedy and random strategies. The hybrid model thus maintained competitive Secondary User channel usage alongside low collision rates, which highlights its effectiveness in balancing throughput maximisation and interference minimisation, both vital for reliable and fair spectrum sharing in dynamic 6G CRNs.

Figure 12.

PU collision vs. SU usage percentage comparison by strategy.

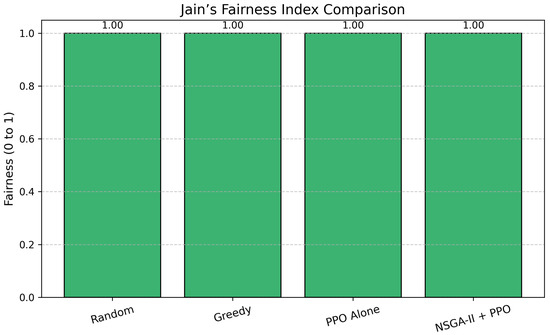

Fairness, evaluated through Jain’s Fairness Index (see Figure 13), remained consistently high (near 1.0) across all the strategies. This indicates that all the spectrum-sharing techniques, including the hybrid model, give Secondary Users equal access to the available channels. The Fairness Index was comparable across the methods, likely because fairness enters the optimisation process as a constraint or secondary objective rather than as a primary driver of the learning algorithm. The hybrid model’s ability to maintain fairness alongside reduced collisions and efficient channel usage underscores its practical viability for real-world Cognitive Radio Networks.

Figure 13.

Jain’s Fairness Index comparison of strategies.
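For reference, Jain’s Fairness Index for allocations $x_1, \dots, x_n$ is $J = (\sum_i x_i)^2 / (n \sum_i x_i^2)$, which ranges from $1/n$ (one user receives everything) to 1 (all users receive equal shares). The sketch below is a minimal illustration with a hypothetical function name; what counts as each user's allocation (for example, per-SU throughput or channel access count) is left as an assumption.

```python
import numpy as np


def jain_fairness_index(allocations):
    """Jain's Fairness Index: (sum x)^2 / (n * sum x^2)."""
    x = np.asarray(allocations, dtype=float)
    sum_of_squares = float(np.sum(x ** 2))
    if sum_of_squares == 0.0:
        # The index is undefined when no user receives any resource.
        raise ValueError("at least one allocation must be non-zero")
    return float(np.sum(x)) ** 2 / (len(x) * sum_of_squares)
```

Three Secondary Users with identical allocations give an index of 1.0, whereas a single user holding the entire allocation gives 1/3.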

4.2.5. Multi-Metric Visualisation

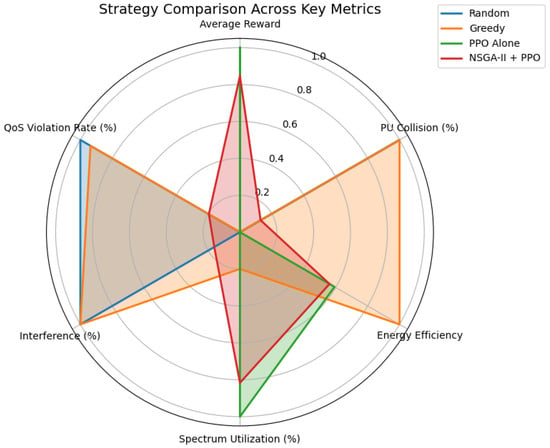

Radar plots (Figure 14) provide a holistic visual comparison of spectrum sharing strategies across multiple performance metrics, including average reward, PU collision rate, energy efficiency, spectrum utilisation, interference, and QoS violation rate. The radar chart illustrates that the NSGA-II + PPO hybrid framework consistently achieved a well-balanced performance profile, particularly in reducing PU collisions and QoS violations while maintaining fairness and spectrum utilisation levels comparable to PPO alone. This balance highlights that the hybrid model effectively manages trade-offs inherent in dynamic spectrum sharing, ensuring safety (collision avoidance and QoS compliance) without sacrificing throughput or energy efficiency. In contrast, the heuristic methods such as random and greedy showed imbalanced profiles, with notably poorer performance in critical metrics like collision, interference, and QoS violations.

Figure 14.

Radar plot comparing spectrum sharing strategies across multiple metrics.

4.3. Discussion

The evaluation demonstrates that incorporating NSGA-II’s Pareto guidance into PPO training significantly enhances learning efficiency and policy robustness. The hybrid model effectively managed multiple conflicting objectives, achieving a balanced improvement in spectrum efficiency while reducing interference and Primary User collisions, all without compromising fairness among the Secondary Users. These results directly address the research objectives of optimising dynamic spectrum sharing in 6G Cognitive Radio Networks. While our work focused on spectrum sharing in 6G CRNs, the principles underpinning the proposed NSGA-II + PPO framework extend well beyond this domain. Many real-world optimisation problems share similar characteristics: multiple objectives that compete for limited resources, rapidly evolving operating conditions, and the need to balance efficiency with fairness. Examples include dynamic task scheduling in mobile edge computing, energy-aware routing in UAV networks, and intelligent load balancing in IoT-enabled smart grids. In each of these cases, the hybrid use of evolutionary search and online policy learning can help achieve more balanced and adaptive solutions, making the framework a versatile tool for next-generation optimisation challenges.

Compared to stand-alone PPO and heuristic approaches, the hybrid framework shows marked improvements in interference mitigation and collision reduction, which are critical for protecting licensed users in shared spectrum environments. Additionally, the observed gains in energy efficiency suggest more intelligent resource allocation, contributing to the sustainability goals of next-generation wireless networks. These findings align with and extend prior work advocating the integration of evolutionary algorithms and Reinforcement Learning to tackle complex multi-objective optimisation problems more effectively [50,60].

This study shows that combining NSGA-II with PPO effectively handles the challenging trade-offs involved in spectrum sharing for 6G networks. The hybrid framework carefully balances competing goals like efficiency, fairness, and interference control. These findings provide a strong starting point for future work to build more intelligent and reliable wireless communication systems.

4.4. Limitations and Future Work

While our simulation results validate the hybrid framework’s potential, the study has limitations. It relies on synthetic and simulated datasets, which might not fully convey the features of real-world wireless environments. Additionally, the framework employs fixed scalarised weights during model training, which could restrict its flexibility and adaptability under highly dynamic network conditions.

Advancing the NSGA-II + PPO framework for 6G CRNs will require integrating realism, scalability, and resilience into both optimisation and policy learning. Future work will embed surrogate modelling techniques such as Gaussian processes or neural approximators to estimate objective functions efficiently, reducing computational overhead during NSGA-II iterations. Adaptive population pruning and elitism strategies will be explored to retain high-quality solutions while minimising redundancy. Parallelisation using GPU acceleration and distributed computing will be critical for scaling multi-objective optimisation across large, dynamic environments. On the PPO side, enhancements will include latency-aware reward shaping and QoS-constrained policy refinement to support mission-critical responsiveness. Incorporating probabilistic sensing accuracy, energy-aware scanning overhead, and mobility-aware user behaviour will improve policy realism. Security extensions will involve adversarial Reinforcement Learning to counter Spectrum Sensing Data Falsification (SSDF), Primary User Emulation (PUE), and jamming threats, alongside blockchain-based audit trails for decentralised trust. Finally, simplified objective clustering and topology evolution mechanisms will be integrated to accelerate convergence and adapt to evolving network conditions, ensuring the framework remains robust in complex, real-world deployments.

5. Conclusions

This research addressed the challenge of managing dynamic spectrum sharing in 6G CRNs, where it is crucial to find the right balance between maximising spectrum efficiency and reducing interference and energy consumption. A hybrid framework combining NSGA-II and PPO was developed and evaluated to address this problem.

Synthetic and ns-3 simulations showed that this hybrid approach performs better than standard baselines like random allocation, greedy methods, and using NSGA-II or PPO alone. The hybrid model demonstrated faster learning, more stable policies, and a better balance between exploring new strategies and exploiting known ones. It also achieved higher fairness in allocating resources while reducing interference and collisions with Primary Users, which is critical for maintaining a harmonious shared spectrum.

By bringing together the strengths of NSGA-II’s global search and PPO’s adaptive policy learning, the framework adapts well to the fast-changing conditions typical of 6G networks. These findings suggest that this hybrid solution could be practical and scalable for real-world wireless systems that manage multiple, competing objectives in real time.

However, these encouraging results are based on simulations that made some idealised assumptions. Future work should focus on testing the framework in more realistic settings, including larger and more diverse networks, and on exploring adaptive ways to balance competing goals. Comparison with other advanced algorithms and testing on real hardware will be important next steps to fully assess its potential.

Ultimately, this hybrid framework is expected to have practical implications in terms of increased spectrum efficiency, reduced interference and improved Quality of Service, energy-conscious communication, adaptive learning in dynamic environments, and scalability for large-scale IoT deployments. The framework would enhance spectrum efficiency by allocating spectrum dynamically based on real-time conditions, thereby minimising idle frequencies. At the same time, it facilitates opportunistic access by SUs in underutilised bands such as the terahertz (300 GHz–3 THz), millimetre-wave (30–300 GHz), upper mid-band (7–24 GHz), and sub-6 GHz bands. Furthermore, it minimises interference and improves QoS, leading to more stable connections and better user experiences, especially in dense urban areas. The framework also makes energy-aware communication feasible, allowing network devices to adapt transmission power intelligently, which extends battery life and supports green computing initiatives. The DRL component enables adaptive learning in dynamic network environments, allowing the system to learn from variable network conditions, user behaviour, and traffic patterns. Finally, the framework supports scalable IoT deployment by promoting multi-user coordination, making it suitable for large-scale networks: it can handle heterogeneous devices with variable spectrum demands while ensuring fair and efficient access.

Author Contributions

Conceptualization, A.W.C., S.M.N., M.V. and S.S.D.; Methodology, A.W.C., S.M.N., M.V. and S.S.D.; Software, A.W.C.; Validation, S.M.N.; Formal analysis, A.W.C.; Investigation, A.W.C. and S.M.N.; Writing—original draft, S.M.N.; Writing—review & editing, S.M.N., M.V. and S.S.D.; Supervision, S.M.N., M.V. and S.S.D.; Project administration, S.M.N., M.V. and S.S.D.; Funding acquisition, M.V. All authors have read and agreed to the published version of the manuscript.

Funding

The authors gratefully acknowledge the financial support from the National Research Foundation of South Africa (Grant Number 141918).

Data Availability Statement

The original data presented in the study are openly available in a publicly accessible repository at https://github.com/ancilla-chgs/nsga2-ppo-6g-crn (accessed on 27 August 2025).

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| 6G | Sixth Generation |
| CRN | Cognitive Radio Network |
| NSGA-II | Non-dominated Sorting Genetic Algorithm II |
| PPO | Proximal Policy Optimisation |
| IoE | Internet of Everything |
| HT | Holographic Telepresence |
| UAV | Unmanned Aerial Vehicle |
| XR | Extended Reality |
| NTN | Non-Terrestrial Network |
| AI | Artificial Intelligence |
| ML | Machine Learning |
| QoS | Quality of Service |
| PU | Primary User |
| SU | Secondary User |
| SINR | Signal-to-Interference-plus-Noise Ratio |
| DSA | Dynamic Spectrum Access |
| NOMA | Non-Orthogonal Multiple Access |
| DRL | Deep Reinforcement Learning |
| MADDPG | Multi-Agent Deep Deterministic Policy Gradient |
| MAPPO | Multi-Agent Proximal Policy Optimisation |
| RIS | Reconfigurable Intelligent Surface |
| MIMO | Multiple Input Multiple Output |
| D2D | Device-to-Device |
| IoT | Internet of Things |
| CSI | Channel State Information |
| MLP | Multi-Layer Perceptron |
| SVM | Support Vector Machine |
| KNN | K-Nearest Neighbours |
| RF | Random Forest |
| NB | Naive Bayes |
| IIoT | Industrial Internet of Things |
| IoMT | Internet of Medical Things |

References

- Huawei. 6G: The Next Horizon White Paper; Technical Report; Huawei: Shenzhen, China, 2022; Available online: https://www.huawei.com/en/huaweitech/future-technologies/6g-white-paper (accessed on 16 June 2025).

- Nleya, S.M.; Velempini, M. Industrial metaverse: A comprehensive review, environmental impact, and challenges. Appl. Sci. 2024, 14, 5736.

- Nleya, S.M.; Velempini, M. AI-Based Metaverse Cybersecurity Overview: Innovative Threats, Mitigation and Open Challenges. In Artificial Intelligence and Metaverse through Data Engineering; Patni, J.C., Choudhury, T., Eds.; Nova: Hauppauge, NY, USA, 2024; Chapter 4; pp. 59–78.

- De Alwis, C.; Kalla, A.; Pham, Q.V.; Kumar, P.; Dev, K.; Hwang, W.J.; Liyanage, M. Survey on 6G frontiers: Trends, applications, requirements, technologies and future research. IEEE Open J. Commun. Soc. 2021, 2, 836–886.

- Nleya, S.M.; Velempini, M.; Gotora, T.T. Beyond 5G: The Evolution of Wireless Networks and Their Impact on Society. In Advanced Wireless Communications and Mobile Networks-Current Status and Future Directions; IntechOpen: London, UK, 2025.

- Hassan, I.M.; Maijama’a, I.S.; Adamu, A.; Abubakar, S.B. Exploring the Social and Economic Impacts of 6G Networks and Their Potential Benefits to Society. Int. J. Sci. Eng. Technol. 2025, 13, 1–9.

- Bhadoriya, R.; Garg, N.K.; Dadoria, A.K. Chapter 18—Future opportunities toward importance of emerging technologies with 6G technology. In Human-Centric Integration of 6G-Enabled Technologies for Modern Society; Tyagi, A.K., Tiwari, S., Eds.; Academic Press: Cambridge, MA, USA, 2025; pp. 267–281.

- Chen, W.; Lin, X.; Lee, J.; Toskala, A.; Sun, S.; Chiasserini, C.F.; Liu, L. 5G-advanced toward 6G: Past, present, and future. IEEE J. Sel. Areas Commun. 2023, 41, 1592–1619.

- ITU-R. IMT Traffic Estimates for the Years 2020 to 2030; Technical Report; International Telecommunication Union (ITU): Geneva, Switzerland, 2015; Report M.2370-0.

- Ahmed, M.; Waqqas, F.; Fatima, M.; Khan, A.M.; Naz, M.A.; Ahmed, M. Enabling 6G Networks for Advances Challenges and Traffic Engineering for Future Connectivity. VFAST Trans. Softw. Eng. 2024, 12, 326–337.

- Akbar, M.S.; Hussain, Z.; Ikram, M.; Sheng, Q.Z.; Mukhopadhyay, S. On challenges of sixth-generation (6G) wireless networks: A comprehensive survey of requirements, applications, and security issues. J. Netw. Comput. Appl. 2024, 233, 104040.

- E-SPIN Group. Next 6G: Key Features, Use Cases, and Challenges of Tomorrow’s Wireless Revolution. 2023. Available online: https://www.e-spincorp.com/blogs/next-6g-key-features-use-cases-and-challenges-of-tomorrows-wireless-revolution/ (accessed on 18 June 2025).

- Nleya, S.M.; Bagula, A.; Zennaro, M.; Pietrosemoli, E. A TV white space broadband market model for rural entrepreneurs. In Proceedings of the Global Information Infrastructure Symposium-GIIS 2013, Trento, Italy, 28–31 October 2013; IEEE: Piscataway, NJ, USA, 2013; pp. 1–6.

- Nleya, S.M.; Bagula, A.; Zennaro, M.; Pietrosemoli, E. Optimisation of a TV white space broadband market model for rural entrepreneurs. J. ICT Stand. 2014, 2, 109–128.

- Sabir, B.; Yang, S.; Nguyen, D.; Wu, N.; Abuadbba, A.; Suzuki, H.; Lai, S.; Ni, W.; Ming, D.; Nepal, S. Systematic Literature Review of AI-enabled Spectrum Management in 6G and Future Networks. arXiv 2024, arXiv:2407.10981.

- Ghafoor, U.; Siddiqui, A.M. Enhancing 6G Network Security Through Cognitive Radio and Cluster-Assisted Downlink Hybrid Multiple Access. In Proceedings of the 2024 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 9–10 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Saravanan, K.; Jaikumar, R.; Devaraj, S.A.; Kumar, O.P. Connected map-induced resource allocation scheme for cognitive radio network quality of service maximization. Sci. Rep. 2025, 15, 14037.

- Digital Regulation Platform. Spectrum management: Key applications and regulatory considerations driving the future use of spectrum. Digit. Regul. Platf. 2025. Available online: https://digitalregulation.org/spectrum-management-key-applications-and-regulatory-considerations-driving-the-future-use-of-spectrum-2/ (accessed on 5 May 2025).

- Ericsson. 6G—Follow the Journey to the Next Generation Networks. 2024. Available online: https://www.ericsson.com/en/5g/6g (accessed on 17 June 2025).

- Mukherjee, A.; Patro, S.; Geyer, J.; Kumar, S.; Paikrao, P.D. The Convergence of Cognitive Radio and 6G: Opportunities, Applications, and Technical Considerations. In Proceedings of the 2025 8th International Conference on Electronics, Materials Engineering & Nano-Technology (IEMENTech), Kolkata, India, 31 January–2 February 2025; IEEE: Piscataway, NJ, USA, 2025; pp. 1–6.

- Jagatheesaperumal, S.K.; Ahmad, I.; Höyhtyä, M.; Khan, S.; Gurtov, A. Deep learning frameworks for cognitive radio networks: Review and open research challenges. J. Netw. Comput. Appl. 2024, 233, 104051.

- Samsung Electronics. AI-Native & Sustainable Communication; White Paper; Samsung: Seoul, Republic of Korea, 2025.

- NVIDIA. NVIDIA and Telecom Industry Leaders to Develop AI-Native Wireless Networks for 6G. 2025. Available online: https://nvidianews.nvidia.com/news/nvidia-and-telecom-industry-leaders-to-develop-ai-native-wireless-networks-for-6g (accessed on 16 June 2025).

- You, X. 6G extreme connectivity via exploring spatiotemporal exchangeability. Sci. China Inf. Sci. 2023, 66, 130306.

- Uusitalo, M.A.; Rugeland, P.; Boldi, M.R.; Calvanese Strinati, E.; Demestichas, P.; Ericson, M.; Fettweis, G.P.; Filippou, M.C.; Gati, A.; Hamon, M.H.; et al. 6G Vision, Value, Use Cases and Technologies from European 6G Flagship Project Hexa-X. IEEE Access 2021, 9, 160004–160020.

- Ericsson. 6G Use Cases: Beyond Communication by 2030. Ericsson White Paper, 2021. Available online: https://www.ericsson.com/en/blog/2024/12/explore-the-impact-of-6g-top-use-cases-you-need-to-know (accessed on 16 June 2025).

- Fu, Y.; Wang, X.; Fang, F. Multi-objective multi-dimensional resource allocation for categorized QoS provisioning in beyond 5G and 6G radio access networks. IEEE Trans. Commun. 2023, 72, 1790–1803.

- Lozano, A.; Rangan, S. Spectral versus energy efficiency in 6G: Impact of the receiver front-end. IEEE BITS Inf. Theory Mag. 2023, 3, 41–53.

- Semba Yawada, P.; Trung Dong, M. Tradeoff analysis between spectral and energy efficiency based on sub-channel activity index in wireless cognitive radio networks. Information 2018, 9, 323.

- Singh, S.P.; Kumar, N.; Kumar, G.; Balusamy, B.; Bashir, A.K.; Al-Otaibi, Y.D. A hybrid multi-objective optimisation for 6G-enabled Internet of Things (IoT). IEEE Trans. Consum. Electron. 2024, 71, 1307–1318.

- Lee, S. Optimizing Networks with Multiple Objectives. 2025. Available online: https://www.numberanalytics.com/blog/optimizing-networks-with-multiple-objectives (accessed on 2 July 2025).

- Liu, D.; Shi, G.; Hirayama, K. Vessel scheduling optimisation model based on variable speed in a seaport with one-way navigation channel. Sensors 2021, 21, 5478.

- Nebro, A.J.; Galeano-Brajones, J.; Luna, F.; Coello Coello, C.A. Is NSGA-II Ready for Large-Scale Multi-Objective Optimization? Math. Comput. Appl. 2022, 27, 103.

- Deb, K.; Fleming, P.; Jin, Y.; Miettinen, K.; Reed, P.M. Key issues in real-world applications of many-objective optimisation and decision analysis. In Many-Criteria Optimization and Decision Analysis: State-of-the-Art, Present Challenges, and Future Perspectives; Springer: Berlin/Heidelberg, Germany, 2023; pp. 29–57.

- Yang, B.; Chen, J.; Xiao, X.; Li, S.; Ren, T. An Enhanced NSGA-II Driven by Deep Reinforcement Learning to Mixed Flow Assembly Workshop Scheduling System with Constraints of Continuous Processing and Mold Changing. Systems 2025, 13, 659.

- Zhang, W.; Xiao, G.; Gen, M.; Geng, H.; Wang, X.; Deng, M.; Zhang, G. Enhancing multi-objective evolutionary algorithms with machine learning for scheduling problems: Recent advances and survey. Front. Ind. Eng. 2024, 2, 1337174.

- Pattanaik, A.; Ahmed, Z. Exploring Spectrum Sharing Algorithms for 6G Cellular Communication Networks. In Proceedings of the 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Ndiaye, M.; Saley, A.M.; Raimy, A.; Niane, K. Spectrum resource sharing methodology based on CR-NOMA on the future integrated 6G and satellite network: Principle and Open researches. In Proceedings of the 2022 International Conference on Smart Technologies and Systems for Next Generation Computing (ICSTSN), Villupuram, India, 25–26 March 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–7.

- Liu, X.; Lam, K.Y.; Li, F.; Zhao, J.; Wang, L.; Durrani, T.S. Spectrum sharing for 6G integrated satellite-terrestrial communication networks based on NOMA and CR. IEEE Netw. 2021, 35, 28–34.

- Wang, Q.; Sun, H.; Hu, R.Q.; Bhuyan, A. When machine learning meets spectrum sharing security: Methodologies and challenges. IEEE Open J. Commun. Soc. 2022, 3, 176–208.

- Frahan, N.; Jawed, S. Dynamics Spectrum Sharing Environment Using Deep Learning Techniques. Eur. J. Theor. Appl. Sci. 2023, 1, 6. [Google Scholar] [CrossRef] [PubMed]

- Mahmoud, H.; Baiyekusi, T.; Daraz, U.; Mi, D.; He, Z.; Lu, C.; Guan, M.; Wang, Z.; Chen, F. Machine Learning-based Spectrum Allocation using Cognitive Radio Networks. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Tavares, C.H.A.; Marinello, J.C.; Proenca Jr, M.L.; Abrao, T. Machine learning-based models for spectrum sensing in cooperative radio networks. IET Commun. 2020, 14, 3102–3109.

- Sun, H.; Hu, R.Q.; Qian, Y. Secure Spectrum Sharing with Machine Learning: An Overview. In 5G and Beyond Wireless Communication Networks; Wiley-IEEE Press: Hoboken, NJ, USA, 2024; pp. 115–134.

- Praveen Kumar, K.; Lagunas, E.; Sharma, S.K.; Vuppala, S.; Chatzinotas, S.; Ottersten, B. Margin-Based Active Online Learning Techniques for Cooperative Spectrum Sharing in CR Networks. In Cognitive Radio-Oriented Wireless Networks, Proceedings of the 14th EAI International Conference, CrownCom 2019, Poznan, Poland, 11–12 June 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 140–153.

- Saikia, P.; Pala, S.; Singh, K.; Singh, S.K.; Huang, W.J. Proximal policy optimisation for RIS-assisted full duplex 6G-V2X communications. IEEE Trans. Intell. Veh. 2023, 9, 5134–5149.

- Lotfolahi, A.; Ferng, H.W. A multi-agent proximal policy optimised joint mechanism in mmWave HetNets with CoMP toward energy efficiency maximisation. IEEE Trans. Green Commun. Netw. 2023, 8, 265–278.

- Shin, M.; Mughal, D.M.; Kim, S.H.; Chung, M.Y. Deep Reinforcement Learning Assisted Multi-Operator Spectrum Sharing in Cell-Free MIMO Networks. IEEE Commun. Lett. 2024, 28, 2894–2898.

- Huang, J.; Yang, Y.; Gao, Z.; He, D.; Ng, D.W.K. Dynamic spectrum access for D2D-enabled Internet of Things: A deep reinforcement learning approach. IEEE Internet Things J. 2022, 9, 17793–17807.

- Liu, S.; Pan, C.; Zhang, C.; Yang, F.; Song, J. Dynamic spectrum sharing based on deep reinforcement learning in mobile communication systems. Sensors 2023, 23, 2622.

- Khaf, S.; Kaddoum, G.; Evangelista, J.V. Partially cooperative RL for hybrid action CRNs with imperfect CSI. IEEE Open J. Commun. Soc. 2024, 5, 3762–3774.

- Gao, A.; Du, C.; Ng, S.X.; Liang, W. A cooperative spectrum sensing with multi-agent reinforcement learning approach in cognitive radio networks. IEEE Commun. Lett. 2021, 25, 2604–2608.

- Yang, Y.; He, X.; Lee, J.; He, D.; Li, Y. Collaborative deep reinforcement learning in 6G integrated satellite-terrestrial networks: Paradigm, solutions, and trends. IEEE Commun. Mag. 2024, 63, 188–195.

- Ding, P.; Liu, X.; Wang, Z.; Zhang, K.; Huang, Z.; Chen, Y. Distributed Q-learning-enabled multi-dimensional spectrum sharing security scheme for 6G wireless communication. IEEE Wirel. Commun. 2022, 29, 44–50.

- Dašić, D.; Ilić, N.; Vučetić, M.; Perić, M.; Beko, M.; Stanković, M.S. Distributed spectrum management in cognitive radio networks by consensus-based reinforcement learning. Sensors 2021, 21, 2970.

- Si, J.; Huang, R.; Li, Z.; Hu, H.; Jin, Y.; Cheng, J.; Al-Dhahir, N. When spectrum sharing in cognitive networks meets deep reinforcement learning: Architecture, fundamentals, and challenges. IEEE Netw. 2023, 38, 187–195.

- Zhang, J.; Qian, K.; Luo, H.; Liu, Y.; Qiao, X.; Xu, X.; Tian, J. Process monitoring for tower pumping units under variable operational conditions: From an integrated multitasking perspective. Control Eng. Pract. 2025, 156, 106229.

- Zhang, J.; Tian, J.; Yan, P.; Wu, S.; Luo, H.; Yin, S. Multi-hop graph pooling adversarial network for cross-domain remaining useful life prediction: A distributed federated learning perspective. Reliab. Eng. Syst. Saf. 2024, 244, 109950.

- Zhang, S.; Tong, X.; Chi, K.; Gao, W.; Chen, X.; Shi, Z. Stackelberg game-based multi-agent algorithm for resource allocation and task offloading in MEC-enabled C-ITS. IEEE Trans. Intell. Transp. Syst. 2025; early access.

- Kaur, A.; Kumar, K. A reinforcement learning-based evolutionary multi-objective optimisation algorithm for spectrum allocation in cognitive radio networks. Phys. Commun. 2020, 43, 101196.

- Hlophe, M.C.; Maharaj, B.T. AI meets CRNs: A prospective review on the application of deep architectures in spectrum management. IEEE Access 2021, 9, 113954–113996.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).