1. Introduction

1.1. Background

The rapid development of various commercial automated vehicle technologies, including but not limited to autonomous driving at both highway speeds and stop-and-go traffic, object detection-based automated braking, vehicle-to-vehicle (V2V), vehicle-to-infrastructure (V2I), infrastructure-to-vehicle (I2V), infrastructure-to-“everything” (I2X), and vehicle-to-“everything” (V2X) communications supporting “platooning” and other crash and traffic management functions provides unique opportunities for local, state, federal, and commercial roadway management organizations to leverage and integrate with these technologies in order to advance transportation safety objectives. Failure to support, investigate, and plan for near and long-term integration with these new technologies may result in market-driven technological fragmentation, making it difficult and/or cost-prohibitive to integrate with these technologies in the future.

1.2. Significance

Among real-world deployments of autonomous and connected vehicles, intersection safety is paramount: intersections account for roughly one-quarter of all traffic fatalities and about half of all injuries in the United States. While significant progress has been made in developing traffic control algorithms for connected and automated vehicles (CAVs), most research assumes ideal sensing conditions and complete knowledge of road participants. In real-world deployments, visibility can be reduced by weather, obstacles, and sensor limitations, and the information available to vehicles may be incomplete or delayed. This creates an urgent need for infrastructure-based sensing and communication systems that can:

Extend situational awareness beyond the on-board perception range of CAVs;

Provide reliable, low-latency I2V/I2X information to both CAVs and human-driven vehicles;

Function effectively under mixed traffic conditions and imperfect information;

Serve as a scalable integration point for evolving vehicle technologies.

1.3. Literature Review

Intersections are among the most challenging locations for drivers. According to the Federal Highway Administration (FHWA) [1], roughly one-quarter of traffic fatalities and about one half of all traffic injuries in the United States are related to intersections, which leads to huge economic and societal losses.

To improve the traffic condition at intersections, some researchers have focused on improving sensors and communication infrastructure to enable cooperative intersection management [2]. Goldhammer et al. [3] developed a comprehensive multi-sensor network and wireless communications which focused on providing an improved view of the intersection using cooperative perception strategies. Bashiri et al. [4] proposed Platoon-based Autonomous Intersection Management (PAIM) to derive optimal schedules for platoons of vehicles. Gutesa et al. [5] introduced an intersection management strategy for a corridor with automated vehicles utilizing a vehicular trajectory-driven optimization method.

Additionally, traffic signal control strategies and advanced traffic systems have been introduced to reduce traffic conflicts and maintain safe and orderly traffic streams. Conventional traffic signal control strategies, such as pre-timed control, actuated control, and adaptive control, are based on certain assumptions about the traffic flow, which simplify the complexity of real-world traffic conditions. In contrast, researchers have recently begun using reinforcement learning methods that learn directly from observations, without relying on such sometimes unrealistic assumptions [6]. For example, Gong et al. [7] proposed a safety-oriented Adaptive Traffic Signal Control (ATSC) algorithm to optimize both traffic efficiency and safety simultaneously.

Connected and Automated Vehicles (CAVs), which combine advanced vehicle-based communication technologies (e.g., connected vehicles—CVs) and control technologies (e.g., automated vehicles—AVs), provide new insights into traffic control. CVs make it possible for vehicles to share and communicate real-time information among vehicles (Vehicle to Vehicle—V2V) and surrounding infrastructure units (Vehicle to Infrastructure—V2I), whereas AVs are capable of sensing their environment and moving safely with little or no human input. Therefore, CAVs can much more accurately judge distance and speed, more comprehensively perceive the surroundings and shorten the perception–reaction time based on real-time multisource information. It is expected that this will help to reduce congestion delay, improve throughput and automation level at intersections.

A signal-free intersection control strategy, which assumes that vehicles cooperate with one another and cross intersections without physical traffic signals, has been proposed to improve intersection efficiency. In a fully CAV environment, Dresner and Stone [8] proposed signal-free autonomous intersection management (AIM) in which CAVs followed a First-Come-First-Serve (FCFS) service discipline and sent reservations requesting the space-time resources along their way to the intersection. The intersection controller decided whether to grant or reject the requested reservations. Mirheli et al. [9] proposed a signal-head-free intersection control logic (SICL) which aimed to maximize the intersection throughput. Wang et al. [10] proposed a system to control intersections for autonomous vehicles using microsimulations.

Apart from the abovementioned signal-free intersection control strategy, Li and Zhou [11] considered that the Intersection Automation Policy (IAP) with a full CAV environment could be viewed as a multiple machine scheduling problem and be formulated as a mixed integer linear programming model. Additionally, under mixed traffic conditions (CAVs and non-CAVs), they proposed a novel phase-time-traffic (PTR) hypernetwork which closely coupled traffic signal operations, heterogeneous traffic dynamics, and CAV requests for green lights to minimize the total delays. Moreover, Li et al. [12] mathematically formulated 3D CAV trajectories in combined temporal-spatial domains.

Chen et al. [13] proposed a limited solution for mixed traffic, with CAVs used as traffic regulators, while Li et al. [14] focused on signalized intersections, proposing a reinforcement learning-based system to manage mixed traffic consisting of human-driven vehicles (HVs) and CAVs. A key problem with multi-vehicle-based optimization models is that they often utilize linear car-following models to describe the driving behavior of HVs [15,16], which may lead to prediction errors. To address such issues, Zou et al. [17] proposed a two-stage hierarchical system using PMILP (Platoon-MILP) that extends the modeling of HV behavior to non-linear car-following dynamics.

All of this research assumes, however, a high level of visibility and knowledge of all participants of the events on the road, which is an unrealistic assumption in the general case.

1.4. Contribution

This paper presents a proof-of-concept framework for an Autonomous Sensing Infrastructure integrated with CAV communication capabilities to support I2V, I2X, and V2I alerting. Its key contributions are:

Design and implementation of a modular system combining computer-vision-based object detection and tracking, SAE Basic Safety Message (BSM) generation, and multi-channel broadcasting (DSRC, LTE) for infrastructure-to-vehicle (I2V) and infrastructure-to-everything (I2X) communications.

Development of a high-accuracy pipeline using fine-tuned Faster R-CNN and Deep-SORT for real-time object detection, tracking, and geospatial localization, optimized for intersection monitoring.

BSM message management under broadcast constraints—implementation of a BSM server that prioritizes and schedules message dissemination when broadcast capacity is limited, ensuring timely alerts for the most critical objects.

Mobile and in-vehicle alerting integration—creation of an Android proof-of-concept application capable of receiving real-time BSMs via LTE/Firebase and providing collision warnings.

Experimental deployment at a real intersection, quantitative evaluation against GPS ground truth, and demonstration of real-time conflict alerting in a controlled vehicle–pedestrian scenario.

This framework is designed not only to validate technological feasibility but also to inform policy and standardization efforts, thereby supporting early, coordinated adoption of CAV-integrated intersection management systems.

2. Methods

This research focused on every aspect of transportation operations, including traffic engineering and control, systems and performance analysis, data visualization, and operations management taking place on rural, arterial and urban roadways.

This effort was intended to advance the understanding of specific CAV technical elements and their potential use on roadways. This research was designed to support and inform Maryland’s existing CAV Working Group, chartered to establish Maryland at the forefront of CAV deployment opportunities and to support private sector innovations designed to improve safety on Maryland roadways.

This research focused on three primary areas of CAV technical development:

Object Detection and Tracking (ODT);

SAE (Society of Automotive Engineers)-based Safety Message Generation and Dissemination;

The alerting of Vehicles, Drivers, and Pedestrians.

This research also included the development of fully functional prototype solutions using a variety of infrastructure-to-vehicle (I2V), infrastructure-to-everything (I2X), and vehicle-to-vehicle (V2V) communication technologies, including DSRC, cellular, and 802.X. These solutions were designed to be fully integrated and capable of being deployed on roadways for further analysis and testing.

2.1. Solution Overview

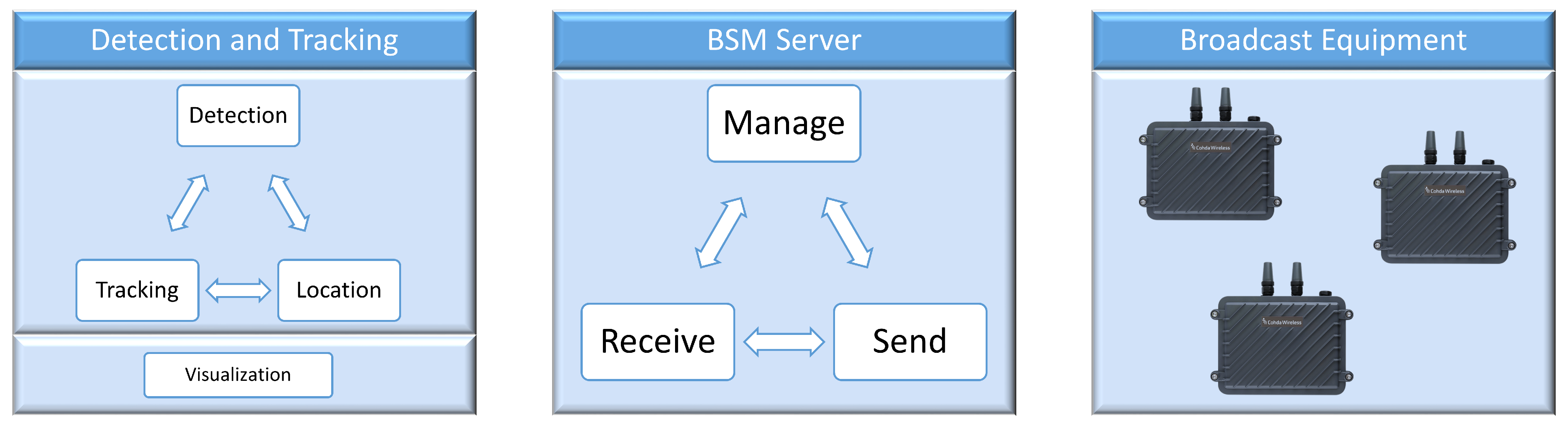

The system is built of three components presented in Figure 1: the detection and tracking system, the Basic Safety Message (BSM) server, and the broadcast equipment. The roles of the components are as follows.

Detection and tracking: given the camera footage as an input, the detection and tracking system is responsible for recognizing objects at the intersection and producing Basic Safety Messages for each object. The three main tasks of this system are to detect objects, to track objects in order to determine their speed vectors, and to locate objects in order to determine their geospatial coordinates.

BSM server: designed to manage Basic Safety Messages. The BSM server is responsible for determining the order of messages as well as distributing messages among broadcasting devices. The inputs of the BSM server are the batches of BSMs generated by the detection and tracking system, whereas the outputs are single BSMs targeted for broadcast by specific devices. The BSM server is also responsible for communication with external applications.

Broadcast equipment: devices designated to broadcast the Basic Safety Messages. Each device works in a client/server architecture as a client of the BSM server. In the implemented communication standard, each device continuously queries the BSM server for a message to broadcast and then broadcasts the message received in response. The system can work with one or multiple devices. The number of devices should be selected according to the estimated traffic and the expected speed of broadcasting.

2.2. Detection and Tracking

The first part of the framework concerns object detection and tracking, both of which are widely researched topics.

Object detection is one of the challenging computer vision problems which aims to identify different classes of objects in the image and also locate the identified objects. It helps to form a complete image understanding and has been widely applied in different areas such as face detection and face recognition, pedestrian detection, video surveillance, and autonomous vehicle systems. For example, object detection techniques can help autonomous vehicles understand the environment by precisely detecting surrounding objects such as vehicles, pedestrians, traffic signs, and buildings.

A good object detection algorithm should be both accurate and efficient. High accuracy is difficult to achieve mainly because the number of object categories can be large, and even objects of the same category may differ in appearance, viewpoint, pose, occlusion, lighting conditions, and background.

With the development of GPUs and the release of large-scale annotated datasets, this task is usually handled by deep learning-based approaches, mainly convolutional neural networks (CNNs), which are the state-of-the-art object detection tools (e.g., [18]). Their deeper architectures are capable of learning features directly from the raw input images without defining handcrafted features, which may aid in improving efficiency. One of the most established examples is Faster R-CNN [19], a two-stage architecture that combines region proposal generation with object classification and is known for its robustness to occlusion and lighting variation. Approaches such as CDCDMA (a cross-domain car detection model with integrated convolutional block attention [20]) and YOLO (You Only Look Once [21]) have emerged as state-of-the-art methods.

Another part of this component is tracking. While the detection component considers each frame separately and returns information regarding the location of each object, the detected objects are not related to the objects that have been detected in the previous frame. In order to calculate the speed vector of an object (which is a mandatory element of a BSM message), we must track the object in time. In other words, it is necessary to match the detected objects with the objects detected in the previous frame.
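As a minimal sketch of why frame-to-frame matching is needed, the speed vector can be estimated from two positions of the same tracked object in consecutive frames. The function name, the metric ground-plane coordinates, and the frame interval below are illustrative assumptions and not the project's implementation.

```python
import math


def speed_vector(prev_xy, curr_xy, dt):
    """Estimate speed (m/s) and heading (degrees clockwise from north).

    prev_xy, curr_xy: (east, north) positions in meters of the SAME tracked
    object in two consecutive frames (hypothetical ground-plane coordinates).
    dt: time between the two frames in seconds.
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    d_east = curr_xy[0] - prev_xy[0]
    d_north = curr_xy[1] - prev_xy[1]
    speed = math.hypot(d_east, d_north) / dt
    # atan2(east, north) yields a compass-style heading; wrap to [0, 360).
    heading = math.degrees(math.atan2(d_east, d_north)) % 360.0
    return speed, heading


# Object moved 1 m east and 1 m north between frames taken 0.1 s apart.
speed, heading = speed_vector((0.0, 0.0), (1.0, 1.0), 0.1)
```

Without the track identity, the two positions could belong to different objects and the resulting vector would be meaningless, which is why detection alone is not sufficient for BSM generation.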

Tracking is a subject of extensive research, and many methods are continuously developed to solve these problems, including Kernelized Correlation Filters (KCFs) [22], the Deep-Sort algorithm [23] based on Kalman Filters and Siamese Networks, vision transformers [24], or histograms of oriented gradients (HOGs) [25].

Any of these methods can be plugged into the presented pipeline, either as separate components for detection and tracking or as combined solutions, such as YOLO [21] or a combination of blob detection and the kernelized correlation filter [26].

In the reference implementation, a Faster R-CNN (FRCNN) model was used as the primary object detector. The model was initially pre-trained on the COCO dataset and then fine-tuned on the authors’ Highway Vehicles Dataset, enabling improved accuracy for roadside traffic camera footage. As a two-stage detector, FRCNN combines region proposal generation with object classification, offering high detection precision even under partial occlusion and varying lighting conditions. In addition to this reference setup, a separate branch of the project was prepared using the YOLO architecture as a foundation for future work, aiming to explore lighter, single-stage detection models that could improve inference speed and facilitate deployment in resource-constrained environments.

2.3. Location

Location is the process of retrieving the geospatial coordinates (latitude, longitude) from the pixel-based coordinates obtained from the object detection and tracking module. There are three main steps in determining the geospatial coordinates: determining one point that corresponds to the location of the object, changing the view from the camera view to a bird’s eye view, and obtaining the geospatial coordinates based on the bird’s eye view coordinates.

The first step, determining one pixel that describes the location of the object, is relatively simple. During the experiments, it turned out that the midpoint of the bottom edge of the bounding box gives the best representation of the object's position. The second step, changing the view from the camera view to a bird’s eye view, requires some preparation. From the mathematical point of view, this can be achieved through a linear transformation. However, to compute this transformation, it is necessary to have “markers”, that is, coordinates of the same points in both views. In practice, we choose two images, one from the camera view and one from the Google Hybrid map, and try to select the same points in both images. Then, we use some of the points to build the transformation matrix and the others to test how well the transformation works. This process is sensitive to small errors in point selection, so it usually takes 2–3 h of repetitive work to obtain satisfactory results. Finally, we use the Google Hybrid map again to determine the coefficients that allow transforming bird’s eye view coordinates into geospatial coordinates. This process must be repeated for each intersection. We compute only latitudes and longitudes, because the altitude is treated as constant and equal to the altitude of the intersection.
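The three steps can be sketched as follows. This is a minimal illustration under stated assumptions: the matrix `H`, the map origin, and the degrees-per-pixel coefficients are placeholder values, not calibration results from the project, and the map image is assumed to be north-up. In practice the matrix would be estimated from the marker pairs, for example with OpenCV's `cv2.findHomography`.

```python
def bottom_center(box):
    """Reference pixel for a bounding box given as (x_min, y_min, x_max, y_max)."""
    x_min, _, x_max, y_max = box
    return ((x_min + x_max) / 2.0, y_max)


def apply_homography(h, point):
    """Map a camera pixel to bird's-eye coordinates with a 3x3 matrix h."""
    x, y = point
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    if w == 0:
        raise ValueError("point maps to infinity")
    return (u / w, v / w)


def to_lat_lon(bird_xy, origin_lat_lon, deg_per_px):
    """Linear map from bird's-eye pixels to (latitude, longitude)."""
    bx, by = bird_xy
    lat0, lon0 = origin_lat_lon
    dlat_per_px, dlon_per_px = deg_per_px
    # Image rows grow downward while latitude grows northward, hence the minus.
    return (lat0 - by * dlat_per_px, lon0 + bx * dlon_per_px)


# Placeholder calibration values (hypothetical, for illustration only).
H = [[0.5, 0.0, 10.0], [0.0, 0.5, 20.0], [0.0, 0.0, 1.0]]
ORIGIN = (39.0, -76.9)        # lat/lon of the bird's-eye image top-left corner
DEG_PER_PX = (1e-6, 1.3e-6)   # degrees of latitude/longitude per map pixel

pixel = bottom_center((100, 50, 140, 90))
bird = apply_homography(H, pixel)
lat, lon = to_lat_lon(bird, ORIGIN, DEG_PER_PX)
```

Holding back some marker pairs, as described above, gives a simple check: applying `apply_homography` to the held-out camera points should reproduce their map coordinates within a small tolerance.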

2.4. BSM Server

The detection and tracking component returns a list that contains a Basic Safety Message for each object in the analyzed frame. However, due to hardware limitations, each Road Side Unit (RSU) can broadcast only one message at a time, and therefore only a limited number of messages n in the time t between two detections. This number is relatively small; the device used for the tests had a built-in software limit of 20 messages per second. If more than n objects are detected in the frame, not all the messages can be broadcast. This may lead to a situation in which some objects are completely ignored and the related messages are never broadcast. To handle this problem, we implemented the BSM Server, a piece of software that is responsible for managing the order of messages. The BSM Server uses a client–server architecture for both the detection and tracking component and the broadcasting devices. From the detection and tracking component, the BSM server receives information concerning all the detected objects. The BSM server preprocesses this information to keep only the latest BSM for each object. Additionally, for each object, the latest broadcasting time is stored. This allows the server to determine which object's data have gone unbroadcast for the longest time and, consequently, which object's data should be broadcast next. On the other side, the BSM server listens for requests from the broadcasting devices and answers each request with the message selected in this way.
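The scheduling rule described above can be sketched as a small in-memory class. This is an illustrative assumption about the data structures, not the code in the project repository: the class and method names are hypothetical, a logical counter stands in for the wall-clock broadcast time, and the removal of objects that have left the scene is omitted.

```python
import itertools


class BsmScheduler:
    """Keeps the latest BSM per object and serves the least recently broadcast one."""

    def __init__(self):
        self._latest = {}          # object_id -> latest BSM payload
        self._last_broadcast = {}  # object_id -> tick of the last broadcast
        self._clock = itertools.count(1)

    def update(self, batch):
        """Store a batch {object_id: bsm} from the detector; newer BSMs overwrite older ones."""
        for object_id, bsm in batch.items():
            self._latest[object_id] = bsm
            self._last_broadcast.setdefault(object_id, 0)  # 0 means never broadcast

    def next_message(self):
        """Return (object_id, bsm) for the object that has waited longest, or None."""
        if not self._latest:
            return None
        object_id = min(self._latest, key=lambda oid: self._last_broadcast[oid])
        self._last_broadcast[object_id] = next(self._clock)
        return object_id, self._latest[object_id]


scheduler = BsmScheduler()
scheduler.update({"car-1": {"speed": 12.0}, "ped-7": {"speed": 1.4}})
first = scheduler.next_message()   # an RSU request is answered with one BSM
second = scheduler.next_message()  # the other object is served next
```

Because each request is answered with the object that has waited longest, every detected object is eventually broadcast even when the RSU rate limit is lower than the number of objects per frame.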

2.5. Broadcasting Equipment

For broadcasting the BSM messages, a Cohda Wireless MK5 Road Side Unit (RSU) is used. The MK5 supports the DSRC/WAVE (IEEE 802.11p) communication protocol. The unit has a rugged weatherproof enclosure with integrated dual DSRC antennas and a Global Navigation Satellite System (GNSS) receiver with lane-level positioning accuracy.

MK5 SDK is used to create applications necessary to seamlessly generate and broadcast BSM messages from the output of camera-based object detection and tracking components of the system. Installation of the Cohda RSU, in this case, differs slightly from other typical installations at intersections. In our test, the Cohda RSU was connected with the BSM server via Ethernet instead of being hooked up directly to the signal controller.

2.6. Messaging Receipt and Alerting

BSM messages—representing the location and movement of relevant intersection objects (e.g., vehicles, pedestrians)—ultimately need to be disseminated to end users. Two main areas were identified to focus this task: Mobile Application and In-Vehicle (On-board) unit.

2.6.1. Mobile Application

In contrast to the DSRC-based in-vehicle approach, the mobile application uses LTE cellular communication—currently 4G LTE, and likely 5G in the future—to transmit BSM messages. The initial objective of this approach was to quickly demonstrate that BSM messages generated in real time could be received and displayed in the field. However, given that this approach does not require special hardware/software and cell phones are carried by nearly all pedestrians and cyclists, it additionally provides further opportunities to communicate with non-vehicle users.

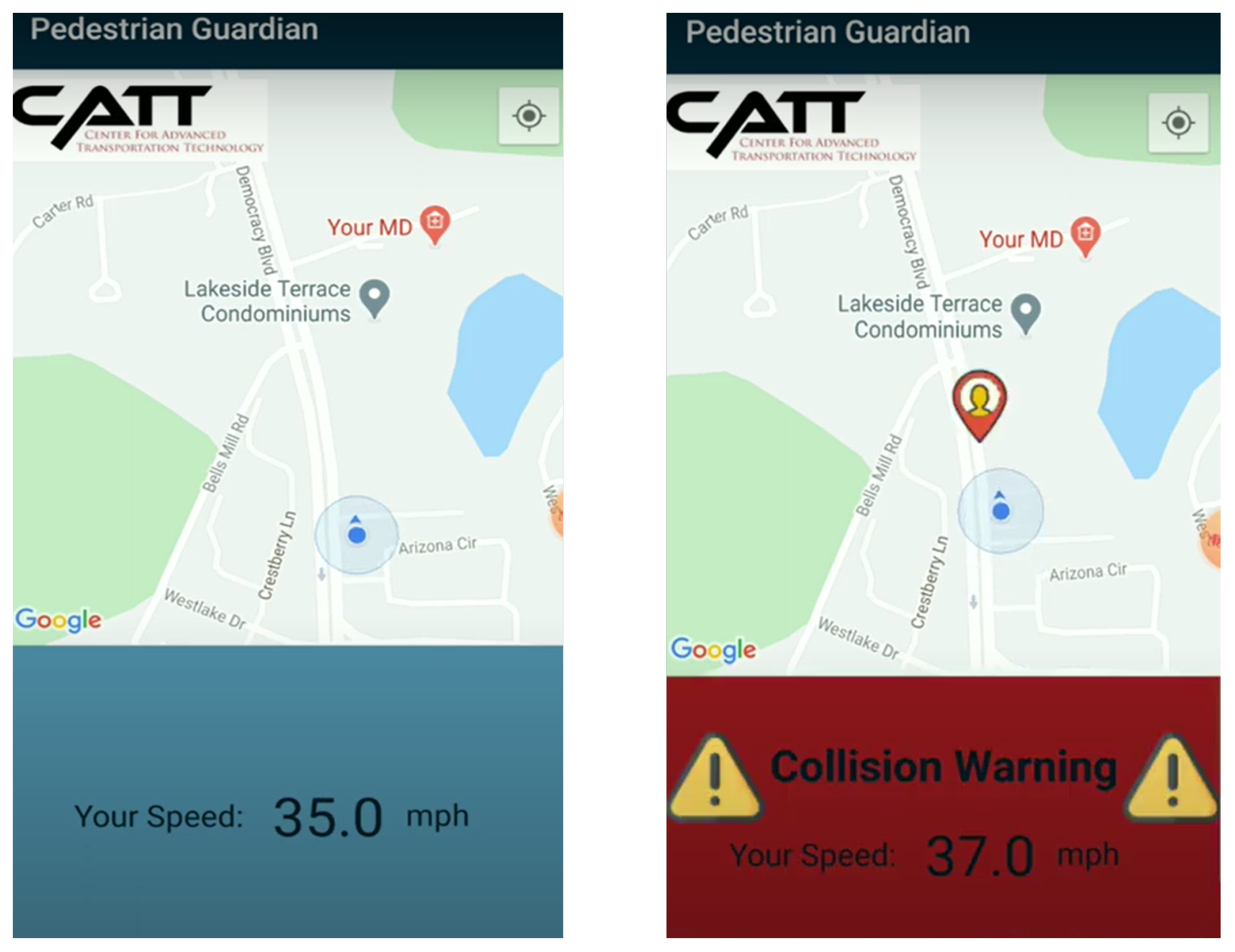

The proof-of-concept solution developed to transmit BSM messages to a mobile application contains two components: (a) a service that is responsible for monitoring the BSM server and passing the data payload to the mobile device and (b) an Android application that demonstrates how the messages are received and interpreted. These concepts are summarized in Figure 2, where Steps 1–3 show the process of passing the BSM data payload from the server to the Android device via Firebase messaging, while Steps 4–5 focus on the application’s role in receiving the message, deciding whether it is relevant, and finally alerting the user (a pedestrian or driver).

The first three steps are concerned with sending BSM messages to the Android app, and they are handled by the Firebase BSM Manager component. This is a Python 3.11 program that connects to the BSM server and continually checks for new messages (Step 1) and then forwards them via POST requests to the Firebase server (Step 2) which automatically pushes them to the Android device (Step 3). Steps 4 and 5 are handled within the Android Application. The mobile application manages the following core responsibilities:

Keeping track of the user’s position/speed/heading. The application must always understand the user’s location and trajectory so that it can predict potential conflicts with objects in or around the intersection (i.e., objects that it learns about via incoming BSM messages). Minimally, the application can directly use the device’s on-board GPS services for this purpose, but it can often achieve higher levels of accuracy by combining model estimates and sensor measurements in a Kalman filtering context.

Subscribing and listening for Firebase messages. The application subscribes to a special topic called “alerts”, which looks for special Firebase Messages with the encoded BSM messages. Although Firebase allows different types of messages (i.e., theoretically it could directly create a notification on the device), the type used in this application is specifically one with only a payload. This allows the device to decide whether the BSM objects pose a reasonable risk to the user, and it also allows irrelevant messages to be discarded.

Implementing decision logic to determine whether the user should be alerted. A simple initial implementation involves first creating a geofence around the user and automatically filtering out BSM objects outside of a given radius (e.g., 500 feet). Afterwards, the application builds a trajectory for the user and for each BSM object (keeping track of the objects’ locations, speeds, and headings over time) and uses basic kinematic equations to determine whether the user is on a collision course and, if so, when and where the collision will take place. Based on decision logic imposed for the type of object in the intersection, as well as the time and distance to collision, the application determines whether the user (either a driver or pedestrian) should be alerted.

Alert the user. Upon determining that the user is in imminent danger (or at least should be warned of a potential conflict), the application is responsible for creating an alert. Depending on the mode of operation and severity of the situation, the application can display a message on the application screen, provide haptic feedback, provide an audible warning, or create a push notification. Displaying a message is useful for debugging and demonstration purposes, but in real-life scenarios, a more urgent warning may be needed—particularly if the application is not in the foreground.
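The geofence filter and the kinematic conflict check from the list above can be sketched as follows. This is a simplified illustration, not the Android application's logic: it assumes constant velocities, local planar coordinates in meters (east, north) rather than raw latitude and longitude, and hypothetical alert thresholds `max_time_s` and `min_gap_m`.

```python
import math

GEOFENCE_RADIUS_M = 152.4  # 500 feet, the example radius from the text


def velocity(speed, heading_deg):
    """Convert speed (m/s) and compass heading (degrees) to (east, north) velocity."""
    rad = math.radians(heading_deg)
    return (speed * math.sin(rad), speed * math.cos(rad))


def closest_approach(user_pos, user_vel, obj_pos, obj_vel):
    """Return (time_s, distance_m) of closest approach assuming constant velocities."""
    rx, ry = obj_pos[0] - user_pos[0], obj_pos[1] - user_pos[1]
    vx, vy = obj_vel[0] - user_vel[0], obj_vel[1] - user_vel[1]
    rel_speed_sq = vx * vx + vy * vy
    # With no relative motion, the separation never changes.
    t = 0.0 if rel_speed_sq == 0 else max(0.0, -(rx * vx + ry * vy) / rel_speed_sq)
    return t, math.hypot(rx + vx * t, ry + vy * t)


def should_alert(user_pos, user_vel, obj_pos, obj_vel,
                 max_time_s=5.0, min_gap_m=2.0):
    """Apply the geofence filter, then the time and distance thresholds."""
    if math.dist(user_pos, obj_pos) > GEOFENCE_RADIUS_M:
        return False
    t, gap = closest_approach(user_pos, user_vel, obj_pos, obj_vel)
    return t <= max_time_s and gap <= min_gap_m


# Driver heading north at 4.44 m/s (about 16 kph); pedestrian crossing eastward.
driver_vel = velocity(4.44, 0.0)
pedestrian_vel = velocity(1.4, 90.0)
alert = should_alert((0.0, 0.0), driver_vel, (-4.2, 13.32), pedestrian_vel)
```

A production implementation would likely vary the thresholds by object type and user mode, as the decision logic in the text suggests, and would use filtered positions rather than single GPS fixes.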

Figure 3 demonstrates an alert message presented visually to a user (driver) through the application interface. The left image shows the user’s current speed when no potential conflict is detected, while the right image shows an example alert when a potential conflict with a pedestrian is identified.

2.6.2. In-Vehicle (On Board Unit)

In both cases, the raw BSMs are transmitted to user devices. At a minimum, this information can be logged or displayed visually via an application (e.g., in-vehicle tablet, mobile application) to demonstrate proof of message receipt. However, more advanced applications rely on user devices to interpret the incoming messages in the context of the user's location and movement. For example, the user device (which presumably knows its own location, speed, and heading) can determine whether the user is on a collision course with an object in the intersection and decide to provide a user alert via visual message, audio, or haptic feedback.

3. Results

All the results were validated using publicly available code. The object detection, tracking, and localization functionalities are implemented in the GitHub repository with Python 3.11 [27]. This repository has two primary branches. The “primary” branch, which was utilized for calculating the results and conducting in-field tests, contains a version that leverages a fine-tuned FRCNN model for detection [28] and Deep-Sort for tracking. To fine-tune the FRCNN model, we prepared the Highway Vehicles Dataset [29], which we share with the publication of this paper. This dataset was created specifically for this project and contains 3812 scenes from highway cameras with 91,843 objects. Additionally, we prepared a “YOLO” branch that uses YOLO models. We tested this latter branch with the most recent YOLOv11 model family and the stable YOLOv8 version.

The BSM server code, responsible for managing the message flow between the detection and tracking system and the broadcast equipment, is available in the GitHub repository [30].

3.1. Detector Evaluation

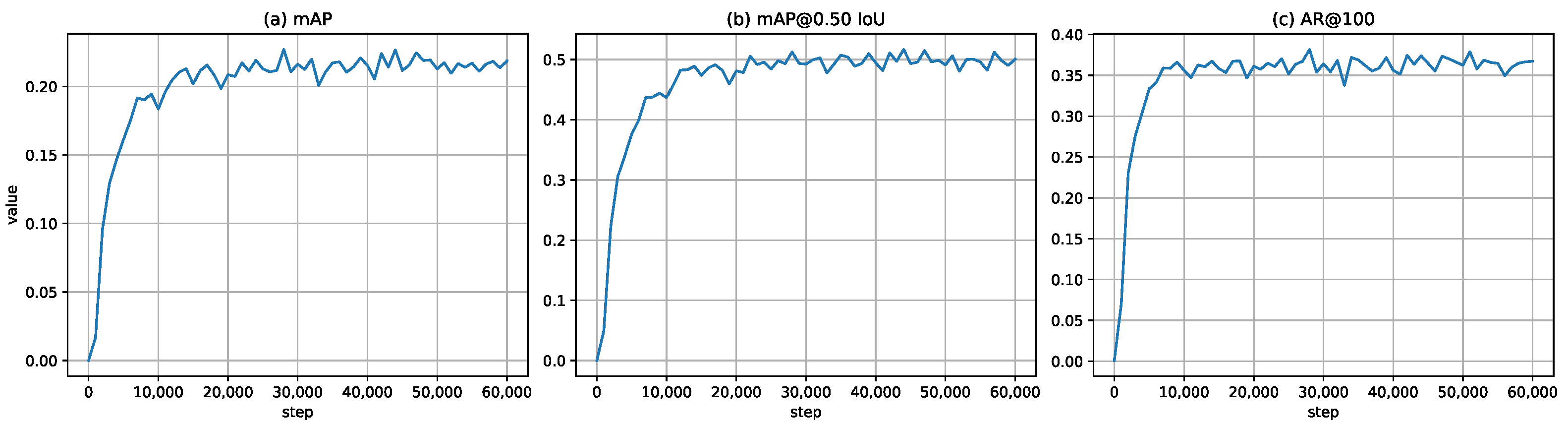

We provide a concise, COCO-style analysis of the detection subsystem and its implications for tracking. In this protocol, Average Precision (AP) is the area under the precision–recall curve at a fixed intersection-over-union (IoU) threshold, and mean AP (mAP) averages AP across classes; mAP@0.5:0.95 further averages AP over IoU thresholds from 0.50 to 0.95 in 0.05 increments. Training proceeds for 60,000 updates, and we report results for the 60k-step model, selected because training beyond this point showed signs of overfitting (declining validation performance).
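To make the role of the IoU threshold concrete, the sketch below computes the IoU of one predicted box against one ground truth box and lists the thresholds at which the prediction would count as a true positive. It is only an illustration of the threshold sweep behind mAP@0.5:0.95, not a full AP computation (which also requires ranking detections by confidence and integrating the precision–recall curve); the helper names are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix_min, iy_min = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix_max, iy_max = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds averaged by mAP@0.5:0.95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.50 + 0.05 * k, 2) for k in range(10)]


def matched_thresholds(pred_box, truth_box):
    """Thresholds at which the prediction counts as a true positive."""
    overlap = iou(pred_box, truth_box)
    return [t for t in IOU_THRESHOLDS if overlap >= t]


# A prediction shifted by 2 px against a 10x10 ground truth box (IoU = 2/3).
hits = matched_thresholds((0, 0, 10, 10), (2, 0, 12, 10))
```

A slightly misplaced box still counts at the looser thresholds but not at the stricter ones, which is why mAP@0.5:0.95 is typically lower than mAP@0.5 for the same model.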

Table 1 summarizes the core test set metrics (mAP@0.5:0.95, mAP@0.5, and AR@100) at the selected checkpoint. To contextualize these endpoint values without touching the test set, Figure 4 plots mAP@0.5:0.95, mAP@0.5, and AR@100 on the validation set versus the training step. The observed metrics fall within a reasonable range for COCO-style analysis of this task. We also confirm satisfactory model behavior through visual inspection of predicted bounding boxes on the validation set. These results characterize the detections supplied to the tracker and provide the context for the system-level accuracy reported in Section 3.2.

3.2. System Accuracy

To test the accuracy of the entire detection and tracking system, we carried out an experiment. We installed the system at an intersection and monitored the traffic. Simultaneously, we drove a car multiple times in front of the camera, measuring the location and speed of the car with both the ODT system and GPS. Assuming that the GPS provides ground truth data, it was possible to estimate the accuracy of the system. To evaluate the accuracy, we used three metrics: Mean Absolute Error ($MAE$), Symmetric Mean Absolute Percentage Error ($SMAPE$), and Free Flow Error ($FFE$). These metrics are defined as follows:

$$MAE = \frac{1}{n}\sum_{i=1}^{n}\left|v_i - \hat{v}_i\right|$$

$$SMAPE = \frac{100\%}{n}\sum_{i=1}^{n}\frac{\left|v_i - \hat{v}_i\right|}{\left(\left|v_i\right| + \left|\hat{v}_i\right|\right)/2}$$

$$FFE = \frac{100\%}{n}\sum_{i=1}^{n}\frac{\left|v_i - \hat{v}_i\right|}{v_{ff}}$$

where $v_i$ denotes the ground truth speed, $\hat{v}_i$ is the speed obtained from the system, $v_{ff}$ is the free flow speed (50 kph), $i$ denotes a single measurement, and $n$ is the number of measurements.

Overall, the median absolute error was 2.43 kph, and the median SMAPE was 5.65%. The detailed results are presented in Table 2.
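For readers who want to reproduce this kind of evaluation on their own paired speed samples, the three metrics can be computed along the following lines. This is a sketch under stated assumptions: it uses the common SMAPE form that normalizes by the mean magnitude of the two values, and it assumes that FFE normalizes the absolute error by the free flow speed. The function name and the sample values are hypothetical and are not the measurements from the experiment.

```python
def speed_errors(truth, estimate, free_flow=50.0):
    """Return (MAE in kph, SMAPE in %, FFE in %) for paired speed samples in kph."""
    if len(truth) != len(estimate) or not truth:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(truth)
    abs_err = [abs(v - v_hat) for v, v_hat in zip(truth, estimate)]
    mae = sum(abs_err) / n
    # Common SMAPE form: error relative to the mean magnitude of both values.
    # Pairs where both speeds are zero contribute no error and are skipped.
    smape = 100.0 / n * sum(
        e / ((abs(v) + abs(v_hat)) / 2.0)
        for e, v, v_hat in zip(abs_err, truth, estimate)
        if (abs(v) + abs(v_hat)) > 0
    )
    # ASSUMPTION: FFE normalizes the absolute error by the free flow speed.
    ffe = 100.0 / n * sum(e / free_flow for e in abs_err)
    return mae, smape, ffe


# Hypothetical GPS (truth) and camera-based (estimate) speeds in kph.
mae, smape, ffe = speed_errors([40.0, 50.0], [38.0, 53.0])
```

Normalizing by a fixed free flow speed keeps the relative error stable at low speeds, where percentage errors computed against the measured speed itself can become very large.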

3.3. In-Field Tests

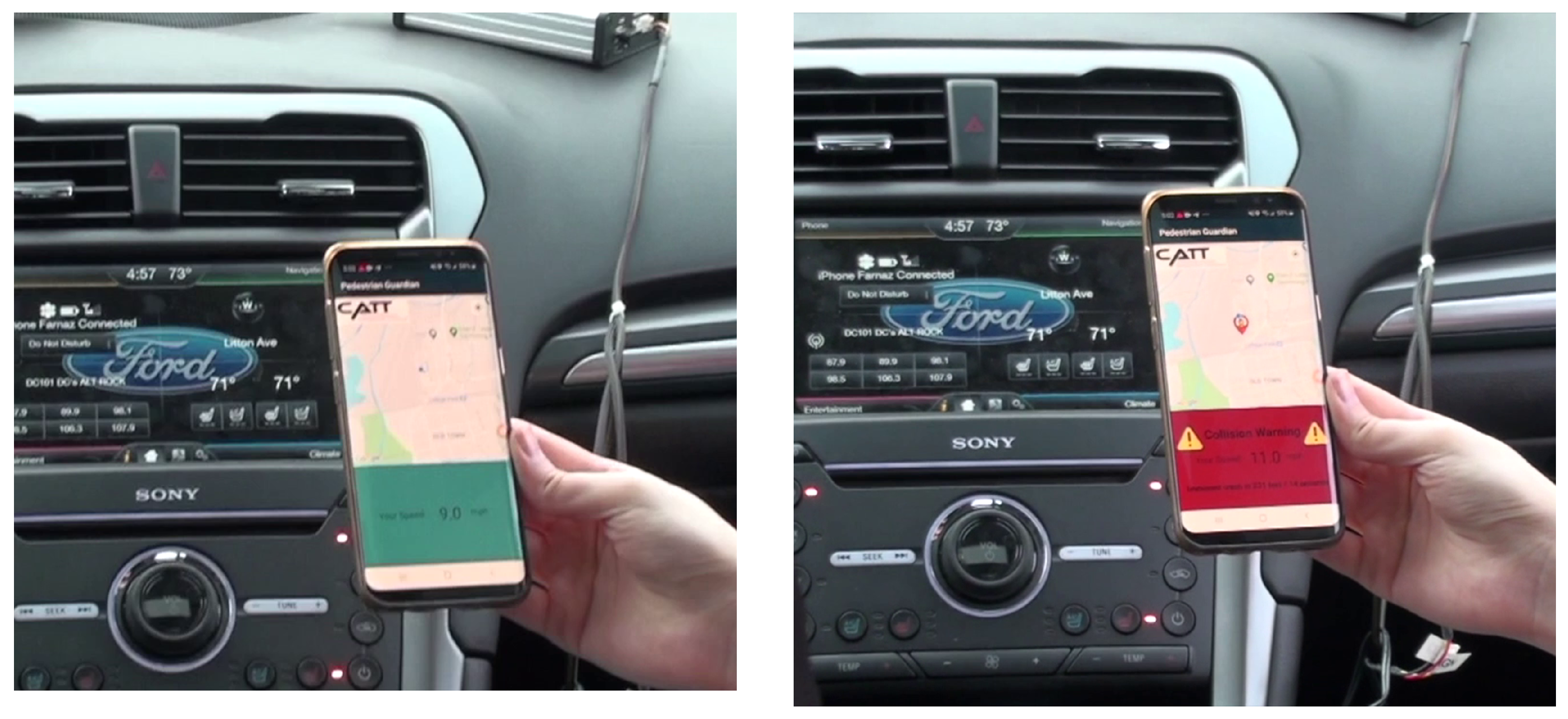

A proof-of-concept field test was conducted to demonstrate that BSM messages generated in real time from a camera feed could be successfully transmitted to users via the mobile application. To this end, the authors staged a potential conflict between a pedestrian and an oncoming vehicle in a controlled setting, as shown in Figure 5, and set up a camera with a bird’s eye view to monitor the location. The camera was connected to the BSM server, which monitored the location and produced BSM messages 10 times per second describing the location, heading, and speed of all relevant objects in its field of view. In this contrived scenario, the driver, who had a mobile phone running the previously described Android application, was driving at about 16 kph along a road when a pedestrian began to walk across the street, potentially causing a conflict if neither party yielded.

A manual review of the BSM messages produced by the BSM server showed that the object detection and tracking generally worked as expected; the server detected both vehicles and pedestrians, and the corresponding speed and heading values appeared reasonable in most cases. Furthermore, the mobile application successfully received an alert message in-vehicle during the pedestrian crossing scenario, as shown in Figure 6. This demonstrated that (a) the communication infrastructure worked as intended, meaning that the BSM messages successfully made their way from the BSM server to the mobile application via the Firebase messaging system, and (b) that the application logic identified a potential conflict based on the pedestrian and vehicle trajectories. While this preliminary field test was narrow in scope, it demonstrated the viability of the various system components.

3.4. Latency Analysis

From an operational responsiveness standpoint, the RSU path delivers alerts with sub-second end-to-end delay. We quantified the end-to-end delay from frame acquisition to BSM broadcast in the RSU path. On our GeForce RTX 2080 setup, the vision pipeline (detection + tracking) contributes ≈0.30 s per frame, while BSM server scheduling and RSU transmission add <0.10 s, yielding ≈0.40 s overall. This sub-second latency is acceptable for intersection-safety alerts: at typical urban speeds, it corresponds to only a few meters of travel and still supports multi-second warning horizons when messages are produced continuously. By contrast, the LTE/Android prototype used for pedestrian/driver alerts exhibits ≈2 s delay (server → Firebase → device), which we consider too high for imminent collision warnings and therefore a target for optimization. Future work will focus on reducing smartphone path delay via faster lightweight detection/tracking, persistent low-latency transports between the server and device, and deadline-aware message scheduling.
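The travel distance implied by each delay can be checked with simple arithmetic. The snippet below is an illustrative calculation that assumes a constant speed of 50 kph, the free flow speed used in the accuracy evaluation; the function name is hypothetical.

```python
def distance_during_delay(speed_kph, delay_s):
    """Distance in meters covered at a constant speed during a delay."""
    return speed_kph / 3.6 * delay_s


for label, delay in (("RSU path", 0.40), ("LTE/Android path", 2.0)):
    print(f"{label}: {distance_during_delay(50.0, delay):.1f} m at 50 kph")
# RSU path: 5.6 m at 50 kph
# LTE/Android path: 27.8 m at 50 kph
```

At this speed the 0.40 s RSU path costs under 6 m of travel, whereas the 2 s smartphone path costs nearly 28 m, which illustrates why the latter is treated as a target for optimization.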

4. Discussion

In this paper, we proposed a proof-of-concept solution that supports infrastructure-to-vehicle and infrastructure-to-everything communications at intersections. The primary purpose of our solution is to increase safety by alerting drivers and pedestrians. Our solution addresses important safety-related issues: roughly one-quarter of traffic fatalities and about one half of all traffic injuries in the United States are related to intersections [1]. Using open-source and commercially available application programming interfaces (APIs) and high-powered computing resources with Machine Learning (ML) functions, we created a video processing solution capable of frame-by-frame analysis of higher quality video available from roadside transportation cameras. This solution enabled the simultaneous identification of multiple object types within each video frame, including but not limited to cars, buses, pedestrians, and cyclists. Based upon these identifications, we used additional software modules to effectively track each object across multiple frames. Using this frame-by-frame tracking, coupled with detailed location data of the roadway within the camera’s view, we were able to identify the precise location and speed of each object and then calculate their estimated future locations. These estimates were then validated and updated based on subsequent frame detections while self-correcting the estimated future location of each object. The output of this tracking was a standard SAE Basic Safety Message (BSM) for each individual object identified. Additionally, we investigated options for receiving transmitted BSMs created by the object detection and tracking solution via RSU and the internet. This included the use of an on-board unit (OBU) in a test vehicle (2016 Ford Fusion with “SYNC” driver display) and an external handheld (smartphone) application developed in-house. The handheld application was able to successfully receive the generated BSMs and leverage the phone’s GPS data to create alerts based upon the downstream object detections.

While the present implementation is designed for monocular, fixed-position roadside cameras, reflecting the current deployment reality of most transportation agency camera networks, it is worth noting that increasingly available stereo vision devices (such as binocular cameras) can provide direct depth information, reducing the reliance on homography-based transformations and potentially improving accuracy in complex intersection scenes with occlusions or variable lighting. In recent years, stereo-specific approaches have been explored for intelligent transportation applications, including road condition detection in IoT contexts [31] and mobility perception systems for automotive platforms [32]. These methods integrate disparity estimation with object detection to achieve more robust three-dimensional perception. Although stereo setups were outside the scope of our proof of concept due to infrastructure constraints and our focus on retrofitting existing monocular camera installations, the presented framework is modular and could be adapted to incorporate stereo vision inputs in future iterations to enhance detection and localization performance.

4.1. Limitations

The presented work also showed drawbacks and shortcomings of the explored technologies that should be addressed in future research. The crucial issues that should be addressed are as follows:

High-quality video is necessary for detection accuracy: typical live-streamed traffic camera video does not have the resolution and/or frame rate necessary for effective object detection and tracking. This issue may also be partially addressed by leveraging more sophisticated object detection algorithms, such as EfficientDet models.

Standard BSMs are limited in their ability to convey multiple simultaneous detections: only a single object can be included in a given BSM, which severely limits the usefulness of BSMs at, for example, an intersection with multiple objects. This problem may be solved by extending the BSM standard or by building a new one that can address more complex cases.

Although commercial cellular internet connections can be used effectively for driver alerting based on an upstream driver’s location and heading, real-world testing is necessary to determine the potential impact of latency of message receipt.
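The one-object-per-message limitation noted above can be made concrete with a short sketch. The record below is a deliberately simplified stand-in for a BSM and is not the SAE encoding; the field names, the `objects_to_messages` helper, and the coordinates are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, asdict


@dataclass
class TrackedObject:
    track_id: int
    kind: str           # e.g. "car", "pedestrian", "cyclist"
    lat: float          # illustrative coordinates, not measured data
    lon: float
    speed_mps: float
    heading_deg: float


def objects_to_messages(objects, frame_time_ms):
    """Emit one simplified, BSM-like record per tracked object in a frame."""
    messages = []
    for obj in objects:
        msg = asdict(obj)
        msg["time_ms"] = frame_time_ms   # every message repeats the frame timestamp
        messages.append(msg)
    return messages


frame_objects = [
    TrackedObject(1, "car", 30.0001, -90.0001, 12.5, 90.0),
    TrackedObject(2, "pedestrian", 30.0002, -90.0003, 1.4, 180.0),
    TrackedObject(3, "cyclist", 30.0003, -90.0002, 5.0, 270.0),
]
messages = objects_to_messages(frame_objects, frame_time_ms=1000)
```

Three objects detected in a single frame yield three separate messages, so the message volume grows linearly with the number of road users in view. A multi-object message format would instead carry the full list in one payload.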

4.2. Possible Expansion

Beyond the discussed application scenario, the proposed framework can be extended to a wide range of domains. Most straightforwardly, it can be expanded to rural or arterial roadways, where detection and tracking could focus on slower-moving agricultural vehicles or wildlife crossings and where early warnings to drivers are equally critical. In pedestrian-heavy urban areas, the system could be adapted to monitor low-visibility crosswalks and generate alerts for vulnerable road users such as cyclists or children. It can also inform drivers about events on the road and the need to move over to another lane [33]. Beyond transportation, because it combines object detection, tracking, and broadcast messaging, the framework could be repurposed for public safety monitoring, such as crowd management at large events, or for industrial sites, where worker and equipment interactions must be tracked in real time to avoid accidents. By decoupling the detection-tracking subsystem from the communication and alerting subsystems, the framework offers a flexible template that can be tailored by other researchers and practitioners to fit new sensing environments, communication technologies, and safety-critical contexts. In practice, replication or extension can be achieved by swapping the detection backbone (e.g., Faster R-CNN, YOLO, or transformer-based detectors), retraining models on domain-specific datasets, or integrating with alternative V2X communication standards. This adaptability makes the framework both transferable and open to iterative improvement in future studies.
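One way to express the decoupling described above is a narrow detector interface that the rest of the pipeline depends on. The sketch below is illustrative only and does not reproduce the framework's actual code; `Detection`, `Detector`, `StubDetector`, and `detect_confident` are hypothetical names, and the stub stands in for a real model wrapper.

```python
from dataclasses import dataclass
from typing import Protocol, Sequence


@dataclass(frozen=True)
class Detection:
    label: str
    box: tuple[float, float, float, float]  # x_min, y_min, x_max, y_max in pixels
    score: float                            # detector confidence in [0, 1]


class Detector(Protocol):
    """Any backbone wrapper exposing this method can be plugged into the pipeline."""

    def detect(self, frame: object) -> Sequence[Detection]: ...


class StubDetector:
    """Stand-in for a real model; returns fixed detections regardless of the frame."""

    def detect(self, frame: object) -> Sequence[Detection]:
        return [
            Detection("car", (10.0, 20.0, 50.0, 60.0), 0.91),
            Detection("pedestrian", (70.0, 30.0, 80.0, 60.0), 0.42),
        ]


def detect_confident(detector: Detector, frame: object, min_score: float = 0.5):
    """Downstream code sees only the Detector interface, never a specific model."""
    return [d for d in detector.detect(frame) if d.score >= min_score]


kept = detect_confident(StubDetector(), frame=None)
```

Under this arrangement, swapping the backbone means writing a new class with a `detect` method that returns `Detection` records, while the tracking and messaging stages remain unchanged.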