1. Introduction

Most software development has traditionally been concerned with customer-driven or bespoke development, in which a software system is tailored to meet the needs of a single customer. A software product is a package of computer programs designed to solve specific problems for end-users, and it is typically offered for sale or license. More recently, however, offering software products to a broad, open market, an approach commonly known as market-driven development, has become increasingly popular.

Table 1 outlines key differences between traditional and market-driven software development approaches [1].

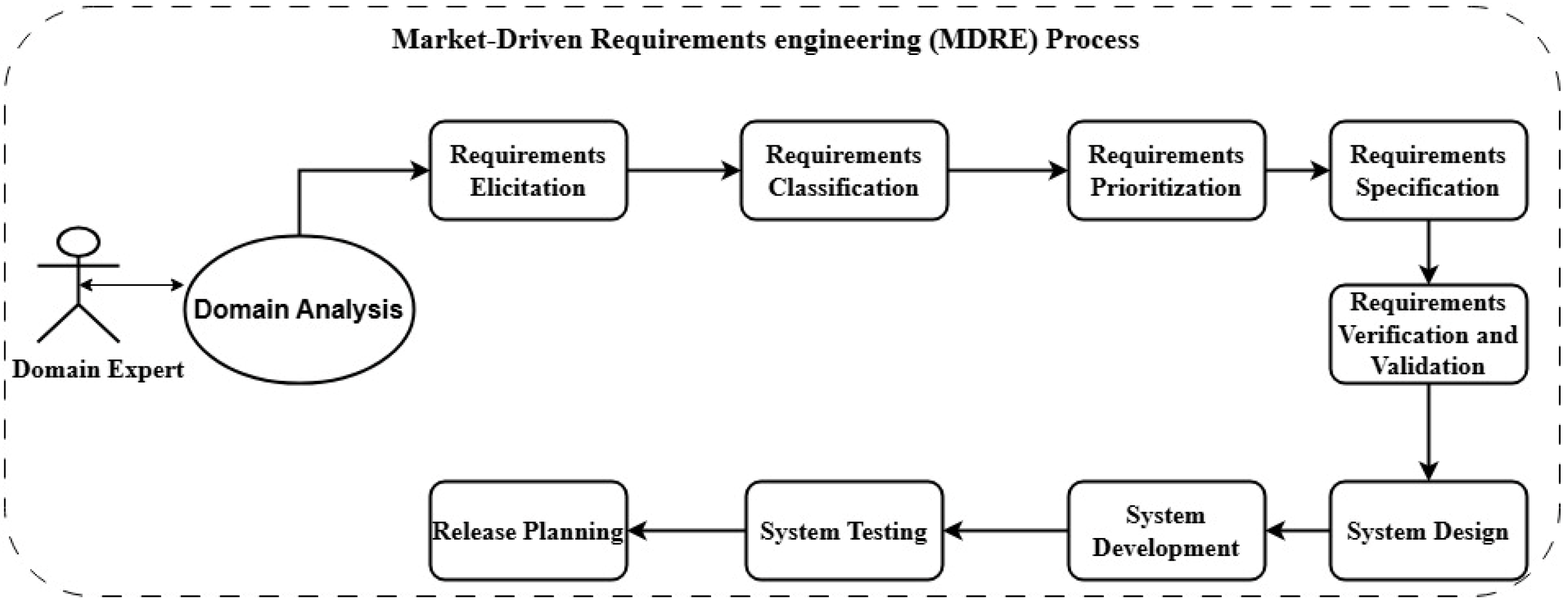

Requirements Elicitation, requirements analysis, software design, software implementation, and maintenance are the main phases of the Software Development Life Cycle (SDLC). Market-driven software development introduces several challenges to the Requirements Engineering process, particularly in carrying out practices such as Requirements Elicitation, release planning, and requirements prioritization effectively. Market-Driven Requirements Engineering (MDRE) addresses these challenges by extending traditional Requirements Engineering (RE) practices with insights from market analysis [2,3], as shown in Figure 1.

Requirements Elicitation can be defined as the process of collecting, identifying, and understanding the wishes and needs of stakeholders while building a software product [4]. Requirements Elicitation is important for every software project, but it becomes even more critical when building market-driven software for an open market with unknown users. The volume of mobile application downloads has been expanding rapidly: by the end of 2023, mobile application downloads had reached 257 billion globally, as reported by Statista [5]. Despite this extensive use, developing applications that successfully meet user expectations remains a challenge. Traditional Requirements Elicitation methods, such as interviews, workshops, questionnaires, and Joint Application Development (JAD), can be time-consuming and subjective, and they offer limited coverage of large, diverse, and geographically distributed user bases [1,6,7].

Users can write their own reviews on online platforms such as Google’s Play Store and Apple’s App Store, pointing out any issues they encounter with mobile applications. These opinions reflect the voice of the customer, which strongly affects software success [8,9]. Deploying mobile applications via app store platforms offers three main benefits: market transparency, a narrower user–developer gap, and influence on release management [10]. Consequently, a new concept called App Review Utilization (ARU) has emerged, which leverages user reviews from mobile application distribution platforms to enhance the software development process [8]. These reviews can help identify bugs, user interface problems, and security vulnerabilities, and they can also support Requirements Elicitation. In addition, user reviews play a major role in evaluating overall application quality [10], and positive reviews can be used by marketing teams in future marketing campaigns [8]. Many research papers have discussed how to automate the software Requirements Elicitation process as part of recent digital transformation efforts [9,11,12]. Extracting requirements automatically from app reviews is a complex task that presents several significant challenges, including informal natural language, noise, and domain-specific terms [11]. Unfortunately, many user reviews may contain irrelevant content, offensive language, or spam that adds no useful input. Such noise makes it challenging for developers and analysts to identify and extract meaningful information from user reviews [13]. Moreover, manually inspecting these reviews is infeasible because of the large number of reviews periodically received through app distribution platforms [14,15].

MDRE aims to resolve these challenges by studying the opinions expressed in user reviews so that they can be used for automatic Requirements Elicitation. Many existing studies rely on linguistic rules and part-of-speech (POS) analysis, which are not well suited to the informal and unstructured nature of user reviews [9,16]. Moreover, eliciting requirements from app reviews is highly context-dependent [11]. Automating app review classification is essential for developers to efficiently study and filter user reviews and obtain valuable information for the Requirements Elicitation task, since manual categorization is time-consuming [8]. Several existing methods perform automatic review classification using traditional Machine Learning (ML) models [9,17]. Recently, Deep Learning (DL) and Transformer-based techniques, such as Bidirectional Encoder Representations from Transformers (BERT), have been used and have achieved state-of-the-art results [11,18].

In this paper, we propose an automatic approach for Requirements Elicitation from app reviews that classifies the reviews into specific categories. Our proposed approach uses the pre-trained BERT model.

The remainder of this paper is structured as follows: Section 2 reviews the relevant literature, Section 3 states the problem, and Section 4 describes the proposed framework. Section 5 presents the materials and methods used, and Section 6 presents and discusses the experimental results. Section 7 presents the limitations and threats to validity. Finally, Section 8 concludes this paper and outlines directions for future work.

2. Literature Review

This section reviews the literature on how app reviews serve as a valuable source for Requirements Elicitation.

In [19], the authors performed a systematic literature review that highlighted the absence of a systematic and uniform Requirements Elicitation process framework. This research presents the most common traditional tools and techniques for Software Requirements Elicitation, such as brainstorming, document analysis, focus groups, interface analysis, interviews, process modeling, and prototyping, among others. It also highlights ongoing challenges in Requirements Elicitation, such as limited stakeholder involvement and natural language ambiguity. The authors proposed an iterative and integrated Requirements Elicitation metamodel consisting of five components: stakeholders, requirements (categorized as functional, non-functional, and domain-specific), tools, artifacts (outputs refined iteratively), and contextual factors. Two main quantitative metrics, similarity metrics and convergence rates, were used to evaluate the refined artifacts. One of the main strengths of this research is its introduction of specific quantitative metrics to assess artifact quality and the efficiency of the revisions.

The authors in [20] conducted a content analysis of user reviews of a single mobile application, “Citizen”, to confirm that user reviews may contain valuable contextual information. They carried out manual coding on random samples of reviews collected from the Google Play store and then built a seven-variable framework based on scenario-based design and Requirements Elicitation research. The proposed framework includes requirements information, software bug reports, feature requests, and context information that combines the use case scenario elements of actors, events, goals, and settings. The study concluded that classifying and analyzing contextual information in user app reviews reveals why, where, and how users use a specific mobile application. However, the approach cannot explicitly disclose requirements or support the design of potential use case scenarios that could be beneficial in application maintenance. One major limitation of this research is its reliance on data from only one application, “Citizen”, which limits the generalizability and validity of the findings.

The study in [21] presents a systematic review of Requirements Elicitation based on User-Generated Content (UGC), defined as publicly accessible media created by internet users. Common UGC sources include online user reviews, social media content, blog posts, and forum discussions, all of which provide valuable insights into possible requirements, feature requests, and bugs. The main UGC-based Requirements Elicitation approaches are rule-based methods, text clustering, topic modeling, and Machine Learning (ML) techniques. Additionally, the paper proposes an eight-phase research framework for UGC-based Requirements Elicitation to assist product developers and to offer a comprehensive guide for both beginners and practitioners. However, as a systematic review with a primarily descriptive focus, it does not provide empirical validation.

The researchers in [22] introduced a framework for classifying customer requirements using online consumer opinion data, in order to show the non-linear relationship between customer requirements and customer satisfaction and thereby support New Product Development. The proposed framework consists of three main phases: (1) extracting implicit and explicit consumer opinion data from social networks, e-commerce websites, and review sites, and then identifying product features from customer sentiment polarities; (2) using a multi-layer neural network to evaluate the impact of customer requirements on overall customer satisfaction; and (3) classifying the customer requirements into different categories using the Kano Model. The Kano Model is a well-established model for prioritizing features based on customer satisfaction, and it plays a significant role in service quality enhancement and product improvement. A case study involving mobile reviews, supported by benchmark comparisons, demonstrates the effectiveness of the proposed framework, and the study highlights its potential to provide strategic recommendations for New Product Development. However, the sentiment classification was restricted to positive and negative categories only. This binary classification neglects neutral sentiments, which may significantly affect the quality of the findings.

In [23], a qualitative analysis was conducted on 6390 low-rated user reviews of free iOS mobile applications to identify key user complaints. The study identified 12 categories of user complaints, of which feature requests, application crashes, and functional errors were the most common. The reviews were labeled manually through an iterative process in which all previously labeled reviews were re-labeled each time a new review was received. The authors revealed that complaints related to privacy, hidden costs, and ethics have a particularly strong effect on application ratings and reputation. They observed that around 11% of user complaints are related to the latest versions of applications, emphasizing the importance of rigorous testing and quality assurance during development and release planning. The authors also found that 18.8% of user reviews contain new feature requests. A potential limitation is that the findings may not be broadly applicable because the study depends on a manually labeled dataset. Furthermore, automated tools and techniques that could help scale the derived insights were not suggested.

As reported by [24], it is difficult to capture valuable information from user reviews because of the unstructured nature of the data. The authors therefore proposed a taxonomy for classifying user reviews to support the software evolution process. They combined Text Analysis (TA), Natural Language Processing (NLP), and Sentiment Analysis (SA) to identify the sentences in a review that may help developers in the maintenance phase. They concluded that training a classifier that combines NLP and SA achieved better results than applying TA alone. The proposed approach achieved 75% Precision and 74% Recall (Precision and Recall are also used as measures in this research; see Section 5.3).

An approach called RE-SWOT was introduced in [12]. RE-SWOT uses user feedback for competitor analysis, with the aim of eliciting requirements from the App Store. In RE-SWOT, NLP techniques are combined with information visualization methods. The competitor analysis proved to be a valuable contribution that positively impacts the whole Requirements Engineering process. Inspired by strategic planning analysis, RE-SWOT categorizes features into four distinct categories: Strengths, Weaknesses, Opportunities, and Threats.

In [25], the authors proposed a prototype called the User Request Referencer (URR), which uses ML and Information Retrieval (IR) to classify user reviews of mobile apps according to a specific taxonomy and to automatically suggest the source code files that need modification to address the issues raised in the reviews. Their empirical analysis covered 39 different mobile applications. The URR achieved high Precision and Recall both in categorizing reviews into the taxonomy and in linking them to the related source code files. External experts evaluated the approach and concluded that the prototype could reduce the time spent on manual review analysis by up to 75%. In our view, however, the source code files of mobile apps are not widely available for use by the URR, and linking them to bug reports is not easily achieved either.

The authors of [16] introduced QUARE, a novel question-answering model that assists software analysts in the Requirements Elicitation process. It generates questions related to the requirements of a specific software category and extracts relevant information from various requirement writing styles (structured, semi-structured, or natural language). The model includes a Named Entity Recognition and Relation Extraction system that automatically identifies key elements, such as actors, concepts, and actions, within the requirements document. Through real-world case studies, the authors demonstrated the effectiveness of QUARE in supporting several Requirements Engineering practices.

The researchers in [26] presented a Deep Learning-based method for classifying app reviews. The method inspects each app review, preprocesses the extracted information, checks its sentiment using Senti4SD, and also takes the reviewer’s history into consideration. The model achieved a Precision of 95.49%.

In [27], the authors studied the importance of analyzing online user feedback for application maintenance. They proposed an approach that investigates user feedback on low-rated applications to better understand why users give bad ratings. They conducted Sentiment Analysis using ChatGPT (exact version not stated) to prepare the input for a Deep Learning classifier: the ChatGPT API was used to identify negative user feedback, followed by manual processing to identify commonly expressed emotions. ChatGPT was then employed as a negotiator to address the causes of these negative emotions by generating a conflict-free version of the dataset. Finally, different DL classifiers were used to explore users’ emotions, and a Long Short-Term Memory (LSTM) network achieved the highest Accuracy, 93%.

In [11], the problem of extracting requirements from mobile application reviews was formulated as a word classification task. Each word in a review is assigned one of three labels: Beginning (B), Inside (I), or Outside (O). The “B” label marks the first word of a requirement, the “I” label marks the subsequent words inside the requirement, and the “O” label marks words outside any requirement. The authors proposed an approach called RE-BERT that leverages the BERT model to build semantic text representations enriched with contextual word embeddings, fine-tuning BERT to prioritize the local context of software requirement tokens. They claim that their results outperform state-of-the-art methods, as evidenced by a statistical analysis on eight applications. They compared their work with other methods, namely GuMa, SAFE, and ReUS: GuMa relies on linguistic rules, SAFE relies on part-of-speech patterns to determine software features, and ReUS uses grammatical class patterns and semantic dependencies to improve extraction performance. Experiments were conducted under two scenarios: exact matching and partial matching. The authors calculated the Mean Macro F1 score, which averages F1 scores and gives all classes equal importance. They achieved a Mean Macro F1 score of 46% for exact matching and 62% for partial matching. However, with a reported F1 score of 0.78, there is still room to improve the results of a task as critical as Requirements Elicitation.
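To make the B/I/O formulation concrete, the following Python sketch decodes a sequence of word-level tags into requirement spans and checks exact and partial matches against a gold span. The example review, its tags, and the function names (`bio_to_spans`, `exact_match`, `partial_match`) are hypothetical; in particular, treating any word overlap as a partial match is an assumption and may differ from the matching criteria used in [11].

```python
from typing import List, Optional, Tuple

Span = Tuple[int, int]  # (start, end) word indices, end exclusive


def bio_to_spans(tags: List[str]) -> List[Span]:
    """Convert a B/I/O tag sequence into (start, end) requirement spans."""
    spans: List[Span] = []
    start: Optional[int] = None
    for i, tag in enumerate(tags):
        if tag == "B":
            if start is not None:  # a new requirement closes the previous one
                spans.append((start, i))
            start = i
        elif tag == "I":
            if start is None:  # tolerate an "I" without a preceding "B"
                start = i
        else:  # "O" closes any open requirement
            if start is not None:
                spans.append((start, i))
                start = None
    if start is not None:
        spans.append((start, len(tags)))
    return spans


def exact_match(pred: Span, gold: Span) -> bool:
    return pred == gold


def partial_match(pred: Span, gold: Span) -> bool:
    # Assumption: any overlap of at least one word counts as a partial match.
    return pred[0] < gold[1] and gold[0] < pred[1]


words = ["please", "add", "dark", "mode", "to", "the", "app"]
tags = ["O", "B", "I", "I", "O", "O", "O"]
spans = bio_to_spans(tags)
print([" ".join(words[s:e]) for s, e in spans])  # ['add dark mode']
```

Under exact matching, a predicted span such as (1, 3) ("add dark") would count as a miss against the gold span (1, 4), whereas under the overlap rule assumed here it would still count as a partial hit, which is one reason partial-matching scores tend to be higher.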

In [28], the authors studied the effect of the BERT model on two different classification tasks. In the first task, “parent document classification”, BERT was fine-tuned to classify requirements according to their source project. In the second task, “functional classification”, BERT was fine-tuned to distinguish between functional and non-functional requirements. The results show that BERT outperforms the traditional Word2Vec model. The authors computed the Matthews Correlation Coefficient (MCC), a metric for evaluating classification performance that takes into account both correct and incorrect predictions (true and false positives and negatives). The first task achieved an MCC of 0.95, while the second achieved an MCC of 0.82. They employed binary classification, limiting the analysis to two predefined classes.
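For reference, the standard binary form of the MCC, written in terms of true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), is given below. It ranges from −1 (total disagreement) through 0 (no better than chance) to +1 (perfect prediction). This is the textbook definition rather than a formula reproduced from [28].

$$
\mathrm{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}
$$

As a worked example, with TP = TN = 45 and FP = FN = 5, each factor under the square root equals 50, so MCC = (2025 − 25) / √(50⁴) = 2000 / 2500 = 0.8.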

The authors in [7] studied the automatic elicitation of requirements from online app reviews. Because traditional Requirements Elicitation techniques, such as interviews and questionnaires, are time-consuming, the researchers leveraged Large Language Models (LLMs) to automate Requirements Elicitation. They used a web scraper to collect mobile app reviews from Google Play and labeled each review as either “useful” or “not useful” to application developers, where the “useful” label covers many insights, such as bug reports and new feature requests. They fine-tuned three well-known models, BERT, DistilBERT, and Gemma, on this dataset of app store reviews and used the fine-tuned models to perform binary classification with these two labels only. The BERT model produced the highest Accuracy, 92.40%. The authors recommend using LLMs because of their promising results, which offer many benefits for developing user-centric applications. However, binary classification is not sufficient for requirements engineers and developers, because reviews labeled as “useful” still require extra effort to be recategorized into more specific categories.

3. Problem Statement

Developing a successful software application requires careful consideration of user needs. Whereas customer-specific software development focuses on a single client’s specific requirements, developing an application for a broad market presents unique challenges throughout the Software Development Life Cycle (SDLC), particularly in Requirements Elicitation. Traditional elicitation techniques, such as interviews, meetings, workshops, and questionnaires, are not suitable in a market-driven development context, because they require direct communication with end users, which is impractical when the target userbase is broad and often anonymous [29]. This makes it difficult for developers and analysts to systematically and comprehensively elicit, classify, and prioritize user requirements. Therefore, alternative approaches are needed to collect and analyze information from large-scale, indirect sources, such as app reviews.

App stores, such as the Google Play Store and the Apple App Store, allow users to search for and download software applications for various purposes. Users can also express their opinions about these applications by writing text messages known as “app reviews”. App reviews have become an important source of critical information about users’ opinions, and using them as a source of requirements when planning to develop a similar software application may yield promising results. Analyzing the app reviews of competitor applications may help identify new feature requests, pain points that users face in existing applications, satisfaction trends, and more. Despite the growing volume of app reviews available on digital platforms, discovering valid and valuable requirements from them remains a challenge. Reviews are unstructured, informal, and noisy, and they often contain multiple intents, such as bug reports and feature requests. It is also not trivial to distinguish what is relevant to Requirements Engineering (RE) from what is merely a general opinion. An intelligent system is therefore needed that can automatically classify app reviews into meaningful RE-relevant categories, supporting both software product development through competitor analysis and product improvement through the study of users’ opinions.

This research focuses on the Requirements Elicitation for market-driven software products. To explore these concerns, we formulated the following research questions:

RQ1: What are the key challenges of building a market-driven software product from a Requirements Engineering (RE) perspective?

RQ2: Does incorporating the BERT model into the AREAR framework architecture improve performance compared to traditional Machine Learning techniques in app review classification tasks?

4. Proposed Framework

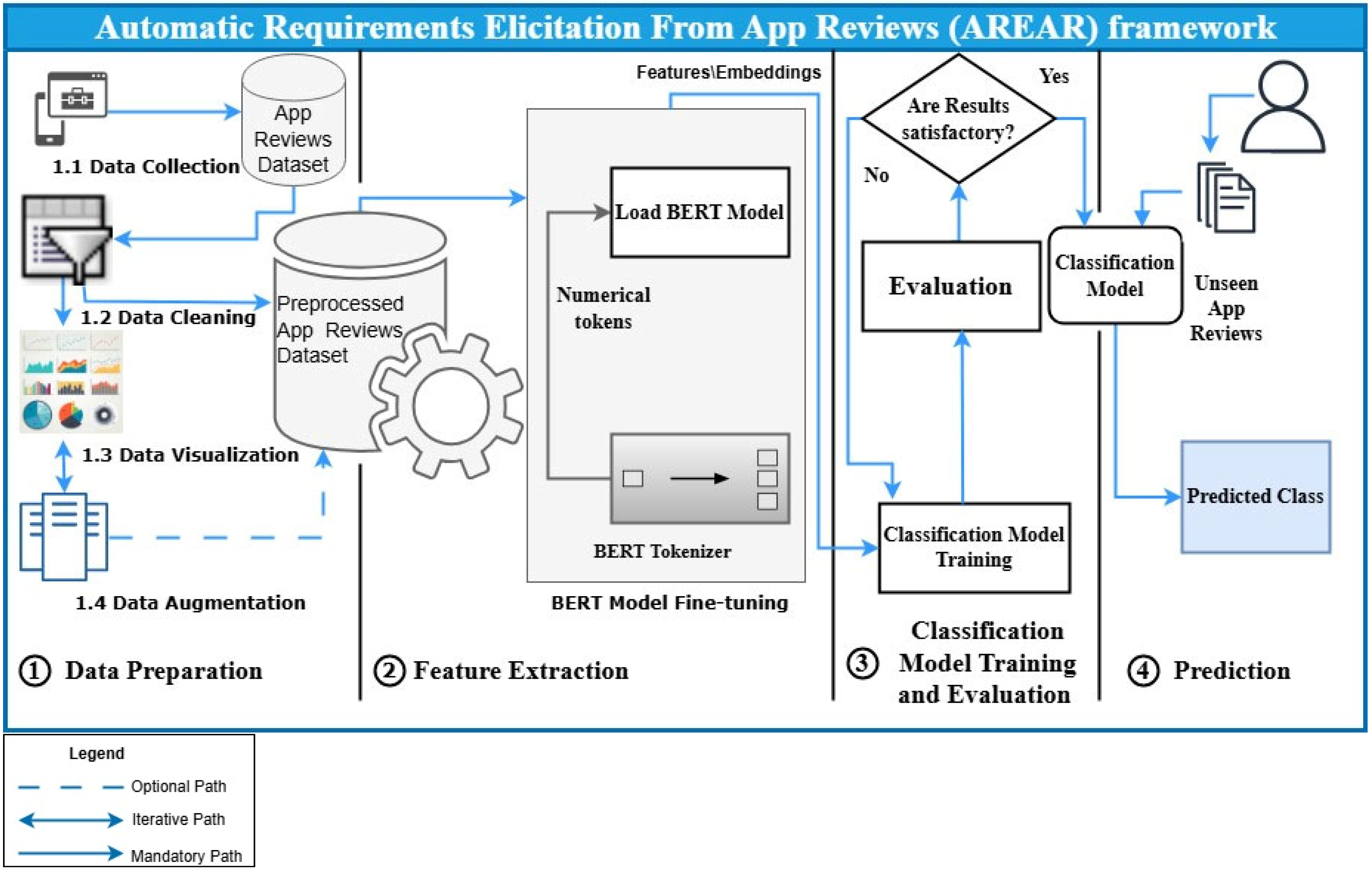

In this paper, we introduce a framework, Automatic Requirements Elicitation from the App Reviews (AREAR), designed to classify user reviews of mobile applications into different categories. Our approach applies the concept of Data-Driven Requirements Elicitation to building a software product, formulating the Requirements Elicitation process for mobile apps as a text classification problem. AREAR integrates Deep Learning (DL), Transfer Learning (TL), and NLP techniques to classify reviews into specific categories, such as “problem discovery”, “information seeking”, “feature requests”, “bug reports”, and others. The AREAR framework consists of four main phases: Data Preparation, Feature Extraction, Classification Model Training and Evaluation, and Prediction. An overview of the proposed framework is shown in Figure 2, and each phase is described in detail in the following subsections.

4.1. Data Preparation

This phase includes the essential steps for preparing the data used in all subsequent phases of the proposed framework. It consists of four sub-phases: data collection, data cleaning, dataset visualization, and data augmentation.

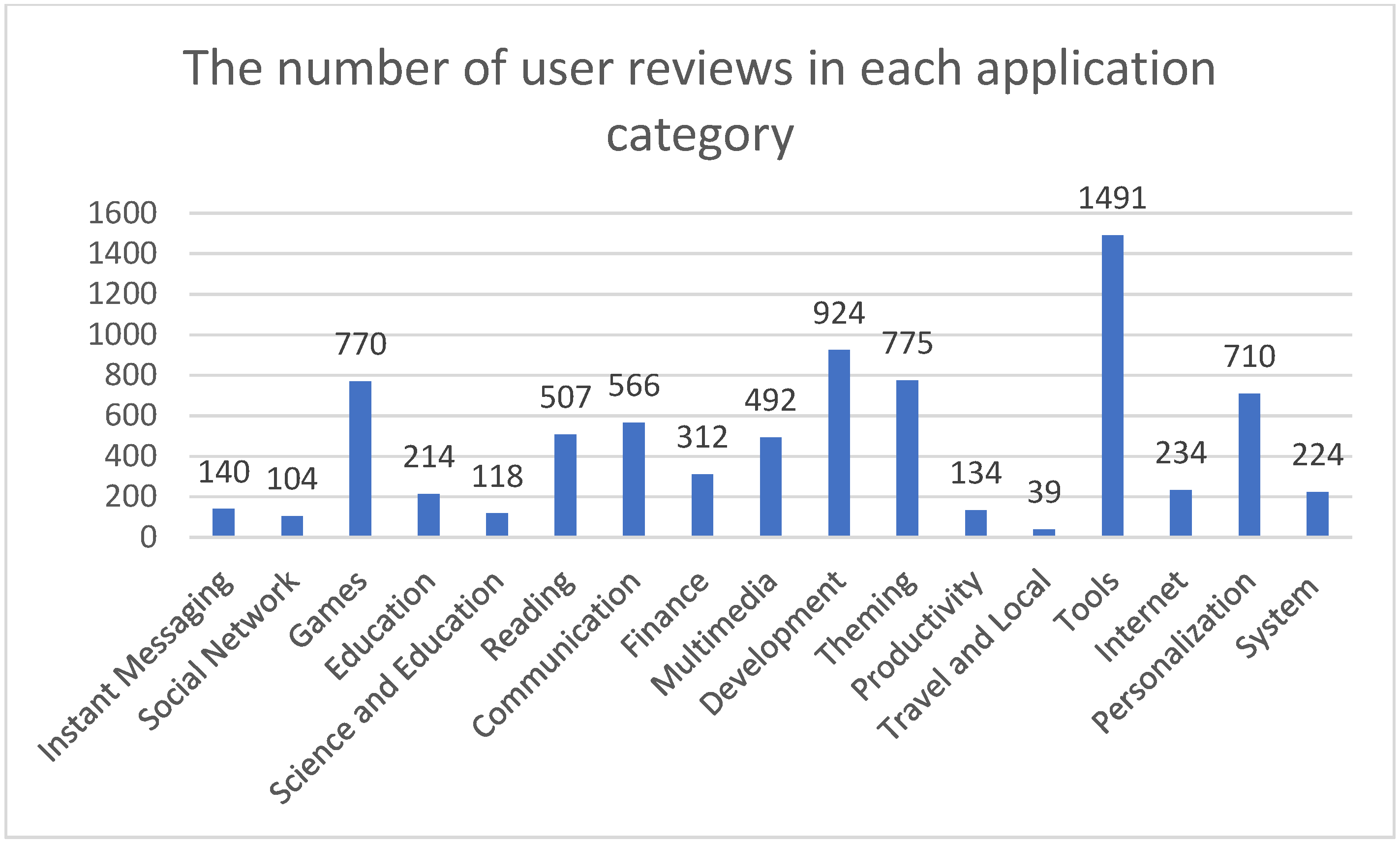

4.1.1. Data Collection

This sub-phase focuses on collecting user reviews from mobile application platforms, such as Google Play or the Apple App Store, for use in the software Requirements Elicitation process. Determining the wants and needs of stakeholders in a market-driven context requires information from diverse sources, but a large volume of information may be problematic. The MDRE process must therefore support ongoing requirements gathering to ensure that no crucial requirements are overlooked, which makes it challenging to maintain capacity and avoid requirements overflow, even during peak periods of incoming requirements [30].

The AREAR framework supports two main data collection scenarios. First, during the maintenance phase of a software product, the development team may use the framework to examine user reviews of an existing, launched application. In this case, data collection is restricted to the user reviews of that application only, and a set of filtering parameters, such as the time period, user age range, and user background, may be used to narrow down the volume of requirements gathered. Second, when planning a new software product, the framework can support broader Market Orientation. As mentioned in [30], Market Orientation is the process of gathering, sharing, and utilizing market information to make well-informed business and technical decisions. Understanding the business significance of requirements is essential when building a market-driven software product. In this second scenario, data collection covers user reviews across multiple applications in the same domain to identify common needs, pain points, and trends. These insights can guide marketing strategies, thereby providing a competitive advantage.
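The two collection scenarios differ mainly in how the collected reviews are scoped. The sketch below assumes that reviews have already been collected into simple records; the `Review` fields (`app_id`, `text`, `rating`, `posted`), the example package names, and the helper `scope_reviews` are hypothetical. Only a date window is used as a filter here, since filters such as user age range or background would require additional metadata.

```python
from dataclasses import dataclass
from datetime import date
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Review:
    app_id: str    # hypothetical store identifier of the reviewed app
    text: str
    rating: int    # star rating, 1 to 5
    posted: date


def scope_reviews(reviews: Iterable[Review],
                  app_ids: Set[str],
                  start: Optional[date] = None,
                  end: Optional[date] = None) -> List[Review]:
    """Keep reviews of the given apps, optionally within [start, end].

    Scenario 1 (maintenance): app_ids holds the single launched app.
    Scenario 2 (market orientation): app_ids holds several apps of one domain.
    """
    selected = []
    for r in reviews:
        if r.app_id not in app_ids:
            continue
        if start is not None and r.posted < start:
            continue
        if end is not None and r.posted > end:
            continue
        selected.append(r)
    return selected


corpus = [
    Review("com.example.notes", "Crashes on launch", 1, date(2024, 3, 2)),
    Review("com.example.notes", "Please add sync", 4, date(2023, 11, 20)),
    Review("com.rival.notes", "Sync works well", 5, date(2024, 1, 15)),
]
# Scenario 1: one app, reviews from 2024 onwards.
own = scope_reviews(corpus, {"com.example.notes"}, start=date(2024, 1, 1))
# Scenario 2: several apps from the same domain, no date limit.
market = scope_reviews(corpus, {"com.example.notes", "com.rival.notes"})
print(len(own), len(market))  # 1 3
```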

The output of this sub-phase is a database of collected user reviews, which then feeds directly into the next step of the AREAR framework (see Figure 2).

4.1.2. Data Cleaning

The main aim of the data cleaning sub-phase is to maintain the quality and consistency of user reviews, which often contain issues such as spelling errors, slang words, and irrelevant content like hyperlinks. Data cleaning is the process of identifying and handling errors and inconsistencies within a dataset [31]. We applied several data cleaning steps in this sub-phase, including the following:

Duplicate Removal: Eliminating identical reviews to avoid bias and redundancy.

Handling Null Values: Incomplete entries, like reviews without text, are removed to keep only the useful content.

Lowercasing: Converting all words in a review into lowercase.

Text Normalization: Removing irrelevant data, including deleting hyperlinks, informal abbreviations, and special characters to clean and standardize the text.

Stop Words Removal: Removing stop words such as “are”, “is”, and “the”.

Spell Checking: Checking the spelling of words and correcting misspelled words based on the review context to handle typographical errors effectively.
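The cleaning steps listed above can be chained into a single function. The following minimal sketch uses only the Python standard library; the stop-word list and the spelling-correction dictionary are tiny hypothetical stand-ins (a real pipeline would use a full stop-word list and a proper spell checker), the regular expressions are one possible choice for normalization, and handling of informal abbreviations is left out for brevity.

```python
import re
from typing import Dict, Iterable, List, Optional

# Hypothetical, deliberately tiny resources for illustration only.
STOP_WORDS = {"a", "an", "the", "is", "are", "to", "and", "it", "this"}
SPELL_FIXES: Dict[str, str] = {"crashs": "crashes", "notifcations": "notifications"}

URL_RE = re.compile(r"https?://\S+|www\.\S+")
NON_ALNUM_RE = re.compile(r"[^a-z0-9\s]")


def clean_review(text: Optional[str]) -> Optional[str]:
    """Lowercase, normalize, spell-fix and remove stop words from one review."""
    if text is None or not text.strip():  # handling null/empty values
        return None
    text = text.lower()                   # lowercasing
    text = URL_RE.sub(" ", text)          # remove hyperlinks
    text = NON_ALNUM_RE.sub(" ", text)    # remove special characters
    tokens = [SPELL_FIXES.get(t, t) for t in text.split()]  # spell checking
    tokens = [t for t in tokens if t not in STOP_WORDS]     # stop-word removal
    return " ".join(tokens) or None


def clean_corpus(reviews: Iterable[Optional[str]]) -> List[str]:
    """Clean every review, drop empty results, and remove duplicates in order."""
    seen = set()
    cleaned = []
    for raw in reviews:
        text = clean_review(raw)
        if text is None or text in seen:  # duplicate removal
            continue
        seen.add(text)
        cleaned.append(text)
    return cleaned


raw = [
    "The app CRASHS when I open notifcations!! https://example.com/bug",
    "the app crashs when i open notifcations",
    None,
    "   ",
]
print(clean_corpus(raw))  # ['app crashes when i open notifications']
```

Removing duplicates after cleaning, rather than before, is a design choice in this sketch: it also catches reviews that differ only in casing, punctuation, or links.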

4.1.3. Dataset Visualization

This sub-phase involves analyzing the collected dataset using visual analytics to understand its distribution and structure. Traditional visualization methods, such as bar charts, pie charts, and histograms, remain effective tools in ML tasks, and more recent methods used in NLP pipelines include word clouds and dataset exploration [32]. A key focus is assessing data balance, that is, the distribution of the different classes/labels within the dataset. A dataset is considered balanced when all classes/labels have approximately equal numbers of instances [33]. Identifying class imbalance is crucial in this sub-phase, as it directly influences classification model performance and may introduce bias. Several techniques can resolve data imbalance, such as data augmentation, which is described in detail in the next sub-phase.
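Alongside bar or pie charts, class balance can also be checked numerically. The sketch below counts labels and reports the imbalance ratio (majority count divided by minority count); the label names, the counts, and the 1.5 threshold for triggering augmentation are hypothetical choices, not values taken from this study.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def class_distribution(labels: Iterable[str]) -> Tuple[Dict[str, int], float]:
    """Return per-class counts and the majority/minority imbalance ratio."""
    counts = Counter(labels)
    if not counts:
        raise ValueError("no labels given")
    ratio = max(counts.values()) / min(counts.values())
    return dict(counts), ratio


def needs_augmentation(labels: Iterable[str], threshold: float = 1.5) -> bool:
    # Hypothetical rule: activate the optional augmentation sub-phase when the
    # largest class is more than `threshold` times the size of the smallest.
    _, ratio = class_distribution(labels)
    return ratio > threshold


labels = (["bug report"] * 60 + ["feature request"] * 25
          + ["information seeking"] * 15)
counts, ratio = class_distribution(labels)
print(counts, ratio)               # ratio = 60 / 15 = 4.0
print(needs_augmentation(labels))  # True
```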

4.1.4. Data Augmentation

This sub-phase is an optional but important component of the proposed framework, activated only when a data imbalance is detected in the previous “dataset visualization” sub-phase. Unbalanced datasets can lead to predictions biased towards the majority classes and to poor generalization in text classification tasks. Data augmentation techniques can be applied to resolve this challenge.

Data augmentation refers to a group of techniques used to generate new data samples from a given dataset, and it may be applied at the character, word, or sentence level [34]. It increases the diversity and size of datasets, thus improving model performance, especially for small datasets or unbalanced classes [34]. Data augmentation includes both resampling and text-based approaches. The Synthetic Minority Oversampling Technique (SMOTE) is a widely used method that generates synthetic data points for the minority class to balance the dataset [35]. The Synonym Replacement method creates new samples by randomly replacing words with their synonyms, with the aim of preserving the original meaning of the text. Back Translation creates a paraphrased version of a text by translating it into another language and then back into the original language [34]. The Contextual Augmentation method uses Masked Language Models, such as BERT, to replace words according to their surrounding context [35], producing more semantically accurate variants. In [36], the researchers proposed AugGPT, a ChatGPT-based method that augments training data by paraphrasing existing examples for few-shot classification. They conducted experiments on both general and medical domain text classification datasets and showed that AugGPT significantly outperforms existing data augmentation techniques [36].
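As an illustration of the simplest text-based technique, the sketch below performs synonym replacement on minority-class reviews. The synonym dictionary is a tiny hypothetical stand-in for a lexical resource such as WordNet, and the helper names (`synonym_replacement`, `augment`) and their parameters (`n`, `copies`, `seed`) are illustrative assumptions.

```python
import random
from typing import Dict, List

# Hypothetical synonym map; a real pipeline might draw synonyms from WordNet.
SYNONYMS: Dict[str, List[str]] = {
    "add": ["include", "introduce"],
    "option": ["setting", "choice"],
    "great": ["excellent", "wonderful"],
}


def synonym_replacement(text: str, n: int = 1, seed: int = 0) -> str:
    """Replace up to n distinct replaceable words with a random synonym."""
    rng = random.Random(seed)
    words = text.split()
    candidates = [i for i, w in enumerate(words) if w in SYNONYMS]
    rng.shuffle(candidates)
    for i in candidates[:n]:
        words[i] = rng.choice(SYNONYMS[words[i]])
    return " ".join(words)


def augment(texts: List[str], copies: int = 2, n: int = 1) -> List[str]:
    """Create up to `copies` variants per text, skipping unchanged variants."""
    out = []
    for t in texts:
        for k in range(copies):
            variant = synonym_replacement(t, n=n, seed=k)
            if variant != t:
                out.append(variant)
    return out


print(augment(["please add a dark mode option"], copies=2, n=2))
```

Reviews with no replaceable words yield no variants, so in practice such a step would be combined with other techniques (for example, Back Translation or Contextual Augmentation) to reach the desired class sizes.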

The result of this sub-phase is an augmented dataset, which provides more balanced and representative input for the subsequent Feature Extraction phase, as shown in Figure 2.

4.2. Feature Extraction

The Feature Extraction phase is responsible for transforming raw input into a set of meaningful numerical representations. Baseline Feature Extraction techniques include Bag-of-Words (BoW), Term Frequency (TF), and Term Frequency–Inverse Document Frequency (TF-IDF) [37]. These traditional techniques rely on word occurrence frequencies and ignore semantic meaning. The proposed AREAR framework instead uses BERT, a powerful pre-trained language model developed by Google, as its feature extractor [35]. BERT captures both the syntactic and the semantic meanings of words according to their surrounding context through its bidirectional mechanism.
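To show why frequency-based features miss context, the sketch below computes plain TF-IDF weights from scratch, using raw term counts and idf(t) = log(N / df(t)). This is one common textbook variant; libraries such as scikit-learn’s `TfidfVectorizer` apply idf smoothing and L2 normalization by default, so their numbers differ.

```python
import math
from collections import Counter
from typing import Dict, List


def tf_idf(docs: List[List[str]]) -> List[Dict[str, float]]:
    """Plain TF-IDF: raw term count times log(N / document frequency)."""
    n_docs = len(docs)
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)
        weights.append({t: tf[t] * math.log(n_docs / df[t]) for t in tf})
    return weights


reviews = [
    "app crashes after update".split(),
    "app update is great".split(),
    "crashes never happen now".split(),
]
for w in tf_idf(reviews):
    print({t: round(v, 3) for t, v in w.items()})
```

In this example, “crashes” receives the same weight in the first and third reviews, even though the third one reports that crashes no longer happen; contextual embeddings such as BERT’s are intended to capture this kind of difference.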

In this phase, we fine-tune the BERT model. First, the BERT tokenizer tokenizes the user app reviews and converts the tokens into numerical token IDs. Then, the BERT model is loaded and receives these token IDs from the tokenizer as input. In the next phase, the classification model uses the resulting BERT-derived embeddings as input features. By applying BERT in this context, the model benefits from contextual representations, which enhance its ability to handle informal and irrelevant content.
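A minimal sketch of the tokenize-then-embed step with the Hugging Face `transformers` library follows. The checkpoint name `bert-base-uncased`, the 128-token limit, and the use of mean pooling over non-padding tokens (rather than, for example, the [CLS] vector) are assumptions for illustration. This sketch extracts embeddings with frozen weights; fine-tuning would instead update BERT’s weights together with the classifier. `embed_reviews` downloads the model on first use, whereas `mean_pool` is plain NumPy and can be tried offline.

```python
from typing import List

import numpy as np


def mean_pool(last_hidden_state: np.ndarray, attention_mask: np.ndarray) -> np.ndarray:
    """Average token vectors per review, ignoring padding positions.

    last_hidden_state: (batch, seq_len, hidden); attention_mask: (batch, seq_len) of 0/1.
    """
    mask = attention_mask[..., None].astype(last_hidden_state.dtype)
    summed = (last_hidden_state * mask).sum(axis=1)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)  # avoid division by zero
    return summed / counts


def embed_reviews(texts: List[str], model_name: str = "bert-base-uncased") -> np.ndarray:
    """Tokenize reviews into token IDs, run BERT, and pool one vector per review."""
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()
    batch = tokenizer(texts, padding=True, truncation=True,
                      max_length=128, return_tensors="pt")
    with torch.no_grad():
        output = model(**batch)
    return mean_pool(output.last_hidden_state.numpy(),
                     batch["attention_mask"].numpy())
```

For BERT-base, `embed_reviews(["app crashes after update"])` would return an array of shape (1, 768), one 768-dimensional vector per review.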

4.3. Classification Model Training and Evaluation

In this phase, the preprocessed user reviews, already converted into contextual embeddings by the BERT model during the Feature Extraction phase, are used as input to a classification model. Two main approaches can be adopted to build the classification model and perform multi-label classification. The first option is to use an existing pre-built classifier, which is often faster and benefits from the robustness of established ML models. Examples of these models include the following [17]:

Naïve Bayes (NB): A Bayes theorem probabilistic classifier that assumes feature independence.

Logistic Regression (LR): A linear classification model that can be applied for multi-label classification.

Random Forest (RF): An ensemble technique that builds a large number of decision trees during training and computes the mean prediction which represent the class.

K-Nearest Neighbors (KNNs): An instance-based, non-parametric learning approach that classifies new data points based on the majority class among their “k” nearest neighbors in the feature space.

Support Vector Machine (SVM): A robust algorithm that finds the hyperplane separating the classes with the maximum margin, i.e., the largest distance to the nearest data points of each class.

Decision Tree (DT): A classification and regression ML technique that builds a tree-like model of decisions from the input. It applies decision rules learned from the features to split the data recursively into groups.
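As an illustration of the first option, the following minimal Python sketch implements K-Nearest Neighbors from scratch: a query is assigned the majority class among its k closest training points. The 2-D vectors are hypothetical stand-ins for BERT embeddings (768-dimensional for BERT-base), and in practice a library implementation would normally be used.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

def knn_predict(train: List[Tuple[Sequence[float], str]],
                query: Sequence[float], k: int = 3) -> str:
    """Classify `query` by majority vote among its k nearest training points (Euclidean distance)."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    # most_common(1) returns the label with the most votes (ties go to the first label counted)
    return votes.most_common(1)[0][0]

# Hypothetical labeled embeddings for illustration only.
train = [
    ((0.0, 0.0), "bug report"),
    ((0.1, 0.2), "bug report"),
    ((0.2, 0.1), "bug report"),
    ((1.0, 1.0), "feature request"),
    ((0.9, 1.1), "feature request"),
]

print(knn_predict(train, (0.95, 1.0), k=3))
```

KNN stores the training set instead of learning parameters, so prediction cost grows with the number of stored reviews.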

The second option is to build a custom classifier tailored to the specific characteristics of the classification task and the dataset being used. A neural network is constructed on top of BERT's final layer, which contains the embeddings. This network typically includes one or more fully connected (dense) layers. In a fully connected layer, each neuron is connected to every neuron of the previous layer; the main purpose of the dense layers is to learn patterns and relationships in the data [28]. The dense layers are followed by an activation function, such as Softmax [38], which converts the output scores into a probability distribution over the classes, or Sigmoid, which scores each label independently. The model is then trained on the multi-label classification of user reviews by applying a suitable loss function, such as cross-entropy [38], and is optimized using an optimizer such as Adam or AdamW [39]. The output layer computes a probability value for each predefined class (e.g., bug report, feature request, etc.), and the class with the highest value is selected.
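The classification head described above can be sketched in plain Python: one dense layer, Softmax, the cross-entropy loss for a single labeled example, and arg-max class selection. The embedding, weights, and dimensions are hypothetical; in a real setup the input would be an embedding produced by BERT, and the weights would be learned by an optimizer such as Adam or AdamW in a deep learning framework.

```python
import math
from typing import List

def dense(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Fully connected layer z = Wx + b: every output unit is connected to every input feature."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def softmax(z: List[float]) -> List[float]:
    """Normalize raw scores into probabilities summing to 1 (max-shift for numerical stability)."""
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs: List[float], target: int) -> float:
    """Cross-entropy loss for one example: negative log-probability of the true class."""
    return -math.log(probs[target])

# Class names taken from examples in the text; the full set of classes is defined in Table 2.
CLASSES = ["bug report", "feature request", "information giving", "other"]

# Hypothetical 3-dimensional embedding and hand-picked weights.
embedding = [0.5, -1.0, 2.0]
W = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
b = [0.0, 0.0, 0.0, 0.0]

probs = softmax(dense(embedding, W, b))                               # one probability per class
predicted = CLASSES[max(range(len(probs)), key=probs.__getitem__)]    # arg-max selection
loss = cross_entropy(probs, CLASSES.index("feature request"))         # loss if the true label is "feature request"
print(predicted, round(loss, 3))
```

During training, the gradient of this loss with respect to `W` and `b` (and, when fine-tuning, BERT's own weights) is what the optimizer uses to update the model.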

The training process begins with the embeddings extracted from the BERT model (see Section 5.2), and the model performance is then evaluated using common evaluation metrics (discussed in detail in Section 5.3). The process is iterative: as shown in Figure 2, there is a bidirectional arrow between the classification model training and the evaluation. If the evaluation results are unsatisfactory, we return to the training process and adjust some parameters (discussed in detail in Section 5.4).
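One common way to automate part of this train-evaluate loop is early stopping: record a validation metric after every epoch, keep the best epoch, and stop once the metric has not improved for a given number of epochs (the patience). The sketch below is an assumption for illustration; the framework description does not state that early stopping is used, and the accuracy values are hypothetical.

```python
from typing import List, Tuple

def early_stopping_epoch(val_accuracy: List[float], patience: int = 3) -> Tuple[int, float]:
    """Return (best_epoch, best_accuracy), stopping after `patience` epochs without improvement.

    Epochs are numbered from 1.
    """
    best_epoch, best_acc, waited = 0, float("-inf"), 0
    for epoch, acc in enumerate(val_accuracy, start=1):
        if acc > best_acc:
            best_epoch, best_acc, waited = epoch, acc, 0  # new best: reset the counter
        else:
            waited += 1
            if waited >= patience:
                break  # no improvement for `patience` consecutive epochs
    return best_epoch, best_acc

# Hypothetical per-epoch validation accuracies.
history = [0.70, 0.80, 0.85, 0.84, 0.83, 0.82, 0.90]
print(early_stopping_epoch(history, patience=3))
print(early_stopping_epoch(history, patience=5))
```

A small patience stops quickly but may miss a later improvement, as the two calls show; the patience is itself a parameter that can be adjusted between iterations.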

To evaluate the classifier model performance, we used standard multi-label classification evaluation metrics, such as Accuracy, Precision, Recall, and F1 score [40] (see Section 5.3 for more detail).
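The four metrics can be computed from true and predicted labels as in the following minimal Python sketch. Macro-averaging (averaging the per-class scores with equal weight) is our assumption, since the averaging scheme is not specified here; the labels in the usage example are hypothetical.

```python
from typing import Dict, List

def classification_metrics(y_true: List[str], y_pred: List[str]) -> Dict[str, float]:
    """Accuracy plus macro-averaged Precision, Recall, and F1 over all labels that occur."""
    labels = sorted(set(y_true) | set(y_pred))
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precisions, recalls, f1s = [], [], []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)  # correctly predicted as label
        fp = sum(t != label and p == label for t, p in pairs)  # wrongly predicted as label
        fn = sum(t == label and p != label for t, p in pairs)  # label missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(labels)
    return {
        "accuracy": accuracy,
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }

# Hypothetical labels for four reviews.
y_true = ["bug report", "bug report", "feature request", "other"]
y_pred = ["bug report", "feature request", "feature request", "other"]
print(classification_metrics(y_true, y_pred))
```

F1 is the harmonic mean of Precision and Recall, so it is low whenever either one is low; this is why it is often reported alongside Accuracy.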

4.4. Prediction

The Prediction phase is the final stage of the proposed framework, in which the trained multi-label classification model is deployed to automatically categorize new, unseen app reviews into one of the predefined classes.

In this phase, a new user review passes through the same preprocessing and Feature Extraction steps as the training data, namely tokenization and embedding via the pre-trained BERT model. The resulting embeddings are then fed into the trained classifier, which outputs a probability score for each predefined class. The classification model then selects the class with the highest probability.
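The key requirement of this phase is that a new review follows exactly the same path as the training data. The following minimal Python sketch shows that structure; `preprocess`, `toy_embed`, and `toy_classify` are hypothetical stand-ins for the actual cleaning step, the fine-tuned BERT encoder, and the trained classifier.

```python
import math
import re
from typing import Callable, Dict, List

def preprocess(review: str) -> str:
    """Hypothetical cleaning step (lowercase, collapse whitespace); reused unchanged at training and prediction time."""
    return re.sub(r"\s+", " ", review.lower()).strip()

def predict(review: str,
            embed: Callable[[str], List[float]],
            classify: Callable[[List[float]], List[float]],
            classes: List[str]) -> Dict[str, object]:
    """Preprocess, embed, score every class, and return the most probable label."""
    probs = classify(embed(preprocess(review)))
    best = max(range(len(probs)), key=probs.__getitem__)
    return {"label": classes[best], "probabilities": dict(zip(classes, probs))}

# Hypothetical stand-ins for the fine-tuned BERT encoder and the trained classifier.
LABELS = ["bug report", "feature request", "other"]

def toy_embed(text: str) -> List[float]:
    return [float("crash" in text), float("please add" in text)]

def toy_classify(embedding: List[float]) -> List[float]:
    scores = [embedding[0], embedding[1], 0.1]  # one score per label in LABELS
    total = sum(math.exp(s) for s in scores)
    return [math.exp(s) / total for s in scores]  # softmax over the scores

print(predict("The app CRASHES   on startup", toy_embed, toy_classify, LABELS)["label"])
```

Passing the encoder and classifier in as functions keeps the prediction path identical to the one used in evaluation, which avoids a mismatch between training-time and prediction-time preprocessing.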

6. Results and Discussion

In this Section, we describe our results, compare them with results from other research, and then discuss our findings. First, we discuss the results of applying data augmentation to address the input data imbalance, and then we present the results of the two case studies used to validate our approach to classifying app reviews into the aforementioned classes.

After applying the data augmentation, all classes have approximately the same number of reviews. For example, before the data augmentation, the “other” class contained 5993 reviews, which is approximately 77% of the dataset.

Figure 5 visualizes the dataset before and after applying AugGPT [36]. The “feature request” class contained only 85 of the 7753 reviews; after the augmentation, it grew to almost 6000, as shown in Figure 5.

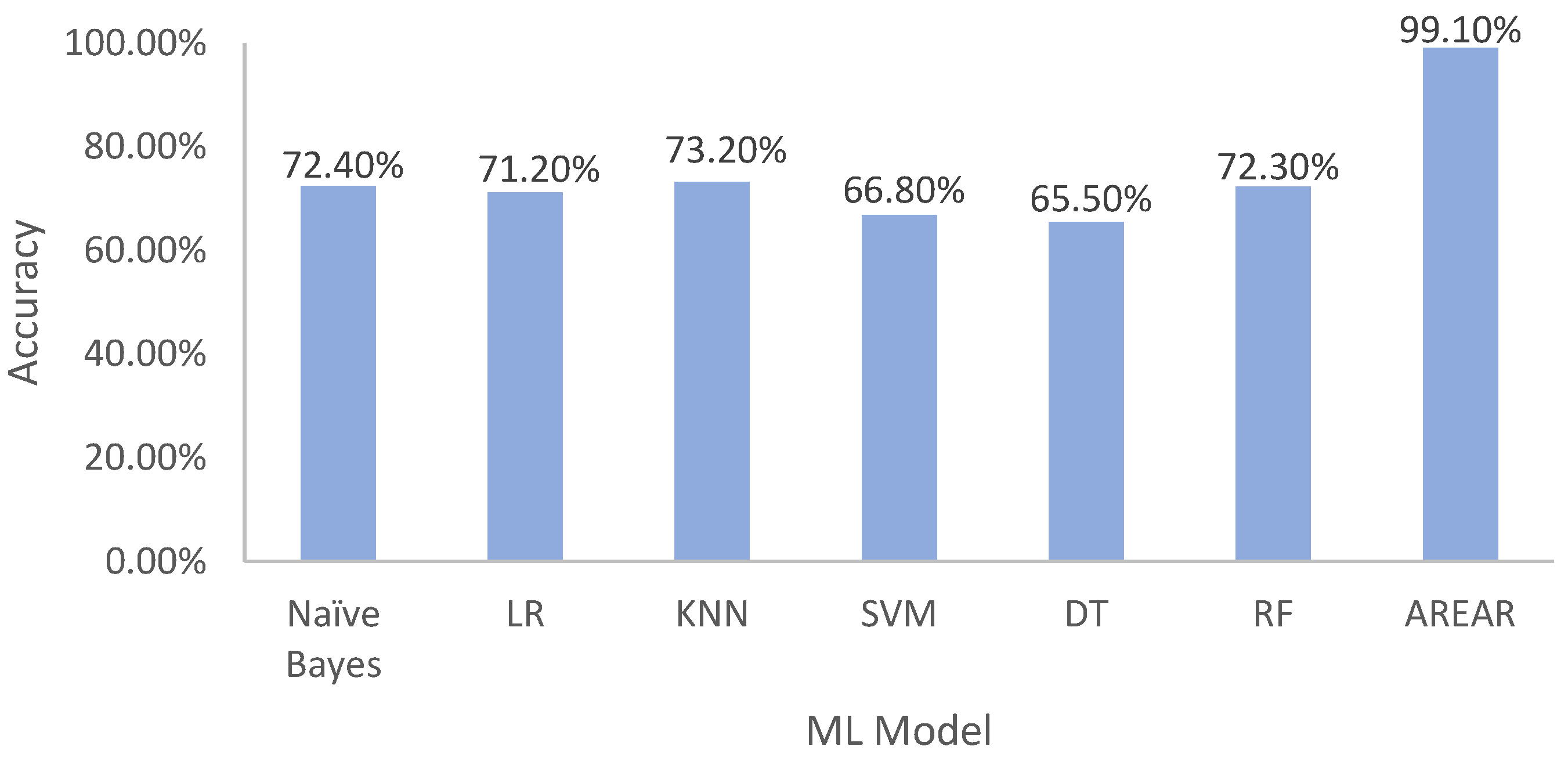
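The imbalance figures quoted above can be checked with a few lines of Python. This sketch only computes each listed class's share of the dataset and how many synthetic reviews a class would need to match the largest class; it does not implement AugGPT, and the remaining 1675 of the 7753 reviews belong to classes not listed here.

```python
from typing import Dict

def class_shares(counts: Dict[str, int], total: int) -> Dict[str, float]:
    """Fraction of the whole dataset that each listed class represents."""
    return {label: n / total for label, n in counts.items()}

def augmentation_targets(counts: Dict[str, int]) -> Dict[str, int]:
    """Synthetic reviews each class would need to reach the size of the largest class."""
    target = max(counts.values())
    return {label: target - n for label, n in counts.items()}

# Counts reported in the text.
counts = {"other": 5993, "feature request": 85}
TOTAL = 7753

shares = class_shares(counts, TOTAL)
print({label: f"{share:.1%}" for label, share in shares.items()})  # {'other': '77.3%', 'feature request': '1.1%'}
print(augmentation_targets(counts))  # {'other': 0, 'feature request': 5908}
```

Before augmentation, the majority class is about 70 times larger than the “feature request” class (5993 / 85), which is why a classifier trained on the raw data would be biased toward “other”.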

Second, we present the results of the two case studies. In the first case study, we used traditional Machine Learning techniques, including Naïve Bayes (NB), Logistic Regression (LR), K-Nearest Neighbors (KNNs), Support Vector Machine (SVMs), Decision Tree (DT), and Random Forest (RF). As shown in Figure 6, KNNs achieved the highest Accuracy among the traditional Machine Learning techniques, at 73% (excluding our proposed framework, AREAR).

In the second case study, we used BERT, a pre-trained model, for the Feature Extraction (see Figure 6). BERT has demonstrated its ability to handle noise, misspellings, ambiguity, and informal language [11]. Using a fine-tuned BERT model for the Feature Extraction, together with our customized classifier for classifying app reviews, achieved an Accuracy of 99.10%. This indicates that BERT helped achieve better results owing to its bidirectionality, which integrates the surrounding context more effectively when assigning meaning, as explained in Section 5.2 above. Thus, our proposed approach demonstrates a significant improvement in Accuracy. Although traditional ML techniques require fewer computational resources, their maximum Accuracy of 73% indicates that they are not sufficiently accurate for predicting unseen data.

Under the research environment settings (described in Section 5.4), we observed better results with the “AdamW” optimizer than with the “Adam” optimizer. We evaluated the model with varying numbers of epochs and observed a decrease in Accuracy after 50 epochs. We used the “cross-entropy” loss function, which is the preferred choice when employing the Softmax activation because of its suitability for multi-class classification tasks [38].
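To illustrate the difference between the two optimizers compared above, the following scalar Python sketch performs one update step of Adam with L2 regularization and one step of AdamW, which decouples weight decay from the adaptive gradient scaling. The hyperparameter values are illustrative defaults, not the settings used in this study.

```python
import math
from typing import Tuple

def adam_step(theta: float, grad: float, m: float, v: float, t: int,
              lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8, weight_decay: float = 0.0,
              decoupled: bool = True) -> Tuple[float, float, float]:
    """One Adam (decoupled=False, L2 penalty) or AdamW (decoupled=True) update of a scalar weight.

    Returns the new (theta, m, v); t is the 1-based step number.
    """
    if not decoupled:
        grad = grad + weight_decay * theta  # L2 penalty is folded into the gradient
    m = beta1 * m + (1 - beta1) * grad            # first-moment estimate
    v = beta2 * v + (1 - beta2) * grad * grad     # second-moment estimate
    m_hat = m / (1 - beta1 ** t)                  # bias corrections
    v_hat = v / (1 - beta2 ** t)
    step = lr * m_hat / (math.sqrt(v_hat) + eps)
    if decoupled:
        step += lr * weight_decay * theta  # decay applied directly, not rescaled by sqrt(v_hat)
    return theta - step, m, v

# Zero loss gradient, so only the regularization acts on the weight.
theta_l2, _, _ = adam_step(1.0, 0.0, 0.0, 0.0, t=1, lr=0.1, weight_decay=0.01, decoupled=False)
theta_w, _, _ = adam_step(1.0, 0.0, 0.0, 0.0, t=1, lr=0.1, weight_decay=0.01, decoupled=True)
print(theta_l2, theta_w)
```

With a zero loss gradient, the L2 variant still moves the weight by roughly `lr`, because the small penalty gradient is normalized by `sqrt(v_hat)`; AdamW shrinks it by exactly `lr * weight_decay`, so the regularization strength stays under direct control.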

Our approach incorporates contextual word embeddings, specifically BERT embeddings, which capture semantic meaning, in contrast to the Term Frequency–Inverse Document Frequency (TF-IDF) features used by [9]. The authors in [9] collected online feedback from Twitter and Facebook using the Kafka tool and a web scraper. They did not explicitly mention the Feature Extraction method used. They employed the Naïve Bayes classifier to categorize the collected feedback as either “functional” or “non-functional” requirements, achieving an Accuracy of 65%, a Precision of 51.72%, and a Recall of 81.08%. Our proposed framework achieved an Accuracy of 99.1%, a significant improvement in predicting the correct class of a user review. In [11], the RE-BERT model was proposed for requirement classification using BERT fine-tuning, resulting in an F1 measure of 62%. In contrast, our proposed framework achieved an F1 score of 99.1%, which is a significant improvement.

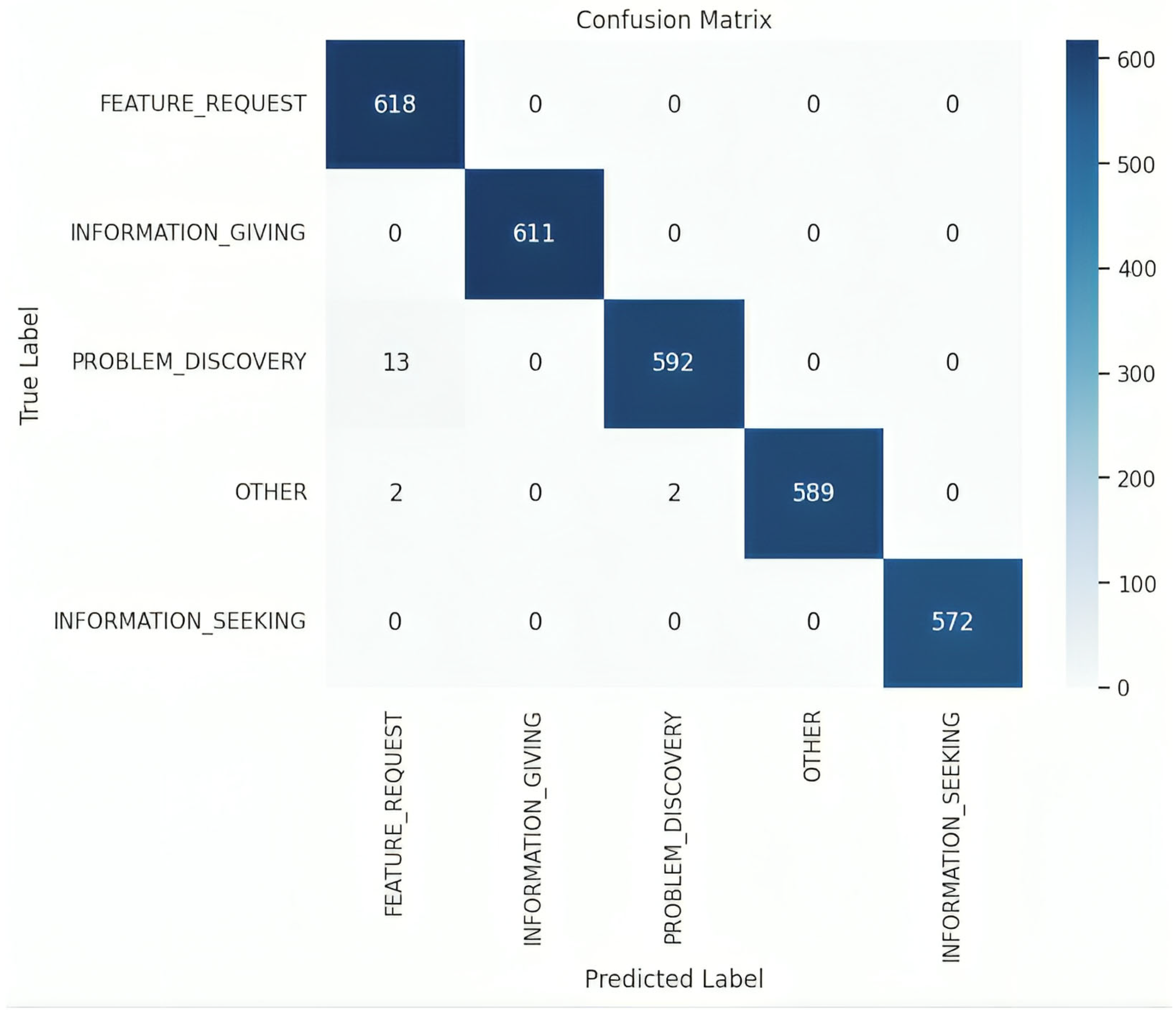

To evaluate the model performance and identify misclassifications, we built a confusion matrix (Figure 7). In Machine Learning, a confusion matrix is a visual representation of model effectiveness that cross-tabulates actual and predicted classes, often using colored boxes with different meanings [53]. As depicted in Figure 7, blue boxes indicate the number of correct predictions, while light-colored boxes represent the number of misclassified instances. For example, in the “Feature Extraction” class, only 13 instances were misclassified, while 618 instances were correctly classified. Additionally, the “information giving” class had 611 correct classifications and no misclassifications. The numbers of correct and incorrect predictions vary with the dataset splitting strategy used. The predictions correspond to the predefined classes described earlier in this paper (Table 2).
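A confusion matrix can be built directly from true and predicted labels, as in the following minimal Python sketch: rows are actual classes, columns are predicted classes, the diagonal holds correct predictions, and the off-diagonal cells of a row hold that class's misclassifications. The labels in the usage example are hypothetical.

```python
from typing import Dict, List, Tuple

def confusion_matrix(y_true: List[str], y_pred: List[str], labels: List[str]) -> List[List[int]]:
    """Rows are actual classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix

def per_class_errors(matrix: List[List[int]], labels: List[str]) -> Dict[str, Tuple[int, int]]:
    """(correct, misclassified) per actual class: the diagonal cell and the rest of its row."""
    return {label: (row[i], sum(row) - row[i])
            for i, (label, row) in enumerate(zip(labels, matrix))}

# Hypothetical labels for five reviews.
labels = ["bug report", "feature request", "other"]
y_true = ["bug report", "bug report", "feature request", "other", "other"]
y_pred = ["bug report", "other", "feature request", "other", "bug report"]

cm = confusion_matrix(y_true, y_pred, labels)
print(cm)
print(per_class_errors(cm, labels))
```

The per-class pair (correct, misclassified) corresponds to the counts read off a figure such as Figure 7.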

7. Limitations and Threats to Validity

This Section presents the limitations of the proposed AREAR framework and the threats to validity.

Construct validity: In this paper, the main purpose of the proposed framework is to study Requirements Engineering practices in market-driven software product development, especially Requirements Elicitation. Our main intent is to partially automate Requirements Elicitation using LLMs (BERT). We use user reviews to extract possible software requirements. The proposed AREAR framework utilizes user app reviews from platforms such as Google Play and the Apple App Store to help either develop a new, similar software product or improve an existing one.

Our work is in itself “constructive” in the sense that it tries to produce valid requirements, so threats to the validity of such requirements could come from “respondent bias”: a majority of comments could come from unhappy users, satisfied users may not comment, or other psychological factors may deter users from proposing requirements (such as having no real hope that their comments will be taken on board). It is also possible that some users do not comment seriously, or comment out of spite to sabotage the exercise and bring the app into disrepute. Also, some important requirements might be missed because no users, or not enough users, commented on them.

Reliability: This research was conducted by four researchers with a wide range of backgrounds and areas of expertise. The researchers’ experience spans several fields, such as academia, software development, NLP, project and product management, and design thinking. Thus, we have no reason to believe that the data collection and analysis depend on a specific researcher.

However, the LLM itself may have introduced biases in the (automated) classification/clustering of the comments, as may be expected with such models in general.

Internal validity: We have no concerns about unwarranted or overlooked causal effects among the study variables. Because we are not studying more than one variable, causal effects across the studied factors do not apply to our research.

External validity: We used a single dataset; thus, we make no claim regarding the comprehensiveness or generalizability of this research, nor do we rule out the possibility that confounding variables affected the results. Our work differs in nature from conventional empirical research; it can be classified as “accelerated” or “intensified” qualitative research, which demonstrated the efficacy of the proposed tools and methodology rather than trying to reach findings that are generalizable to other contexts.

One limitation of using the BERT model is the need for a large training dataset; moreover, fine-tuning can be computationally expensive, as we experienced. Our proposed model should be further validated using other datasets from various domains, possibly including multilingual ones.

8. Conclusions

This paper proposes a framework to enhance Requirements Elicitation, one of the most important RE practices in MDRE. Requirements Elicitation is a crucial phase in the Software Development Life Cycle (SDLC). When developing a new software product, especially a mobile application, and studying competing offerings in the market, analyzing user opinions about similar apps is an essential step. Traditional Requirements Elicitation methods are not well-suited for market-driven development. Our proposed framework, Automatic Requirements Elicitation from App Reviews (AREAR), aims to help requirements engineers elicit software requirements from market-driven data. AREAR automatically classifies user reviews into specific predefined categories. AREAR consists of four phases: Data Preparation, Feature Extraction, Classification Model Training and Evaluation, and Prediction. We fine-tuned the BERT model for Feature Extraction, and its output is used as the input for the classifier. Our findings answer the research questions stated earlier (Section 3). Regarding RQ1, the challenges have been discussed throughout this article; they include large, unknown user bases and the need to deal with various sources of requirements. Regarding RQ2, fine-tuning BERT for the Feature Extraction phase gave more effective results than traditional ML techniques. The proposed framework achieved a test Accuracy of 99.1%, surpassing the performance of the previous research cited in [9]. In general, the four phases of our proposed framework, AREAR, provide valuable information for entrepreneurs and requirements engineers.

In future work, we plan to explore more efficient fine-tuning methods, such as LoRA (Low-Rank Adaptation), a fine-tuning technique for Large Language Models (LLMs) that reduces computational and memory requirements [54]. Additionally, a comprehensive validation of our model on diverse datasets from different domains and languages is crucial.
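The source of LoRA's savings can be sketched in a few lines: the pre-trained weight matrix W (d x k) is frozen, and only a low-rank update B A, with A of size r x k and B of size d x r, is trained, giving h = W x + (alpha / r) B A x. The matrix sizes and rank below are illustrative assumptions (768 is the hidden size of BERT-base; rank 8 is an arbitrary example), not settings from this study.

```python
from typing import List, Tuple

Matrix = List[List[float]]

def matvec(m: Matrix, x: List[float]) -> List[float]:
    """Matrix-vector product."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]

def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: List[float],
                 alpha: float, r: int) -> List[float]:
    """h = W x + (alpha / r) * B (A x); W is frozen, only A and B are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, update)]

def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """(full fine-tuning, LoRA) trainable parameter counts for one d x k weight matrix."""
    return d * k, r * (d + k)

print(trainable_params(768, 768, 8))  # (589824, 12288)

# Tiny example: with B initialized to zeros, the adapted layer starts identical to the frozen one.
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[1.0, 0.0]]      # r = 1, k = 2
B_zero = [[0.0], [0.0]]  # d = 2, r = 1
print(lora_forward(W, A, B_zero, [1.0, 1.0], alpha=2.0, r=1))
```

For a single 768 x 768 matrix at rank 8, LoRA trains about 2% of the parameters that full fine-tuning would update, which is where the memory and compute savings come from.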

In future work, we also aim to expand the current requirements classification approach by applying clustering techniques. In a second phase of this research, clustering may help further group the reviews after they have been classified into the set of predefined categories (which may be proposed by the application developers). By integrating classification with clustering, we can explore underlying patterns and relationships among reviews. Additionally, we plan to extend our research to requirements prioritization, which is an essential activity of the Requirements Engineering process, especially when following non-sequential process models, such as Agile development. By integrating prioritization techniques, we aim to evaluate the classified and clustered requirements. These extensions will not only refine the classification process but also provide a more holistic framework for managing requirements when developing a new software product for the market or improving an existing one.

We would also like to explore, in future work, whether using the textual output of other Requirements Elicitation techniques, such as surveys, questionnaires, and interviews, yields different results (e.g., in terms of Accuracy), and whether AREAR can be adapted to suit the different types of textual input produced by those techniques.