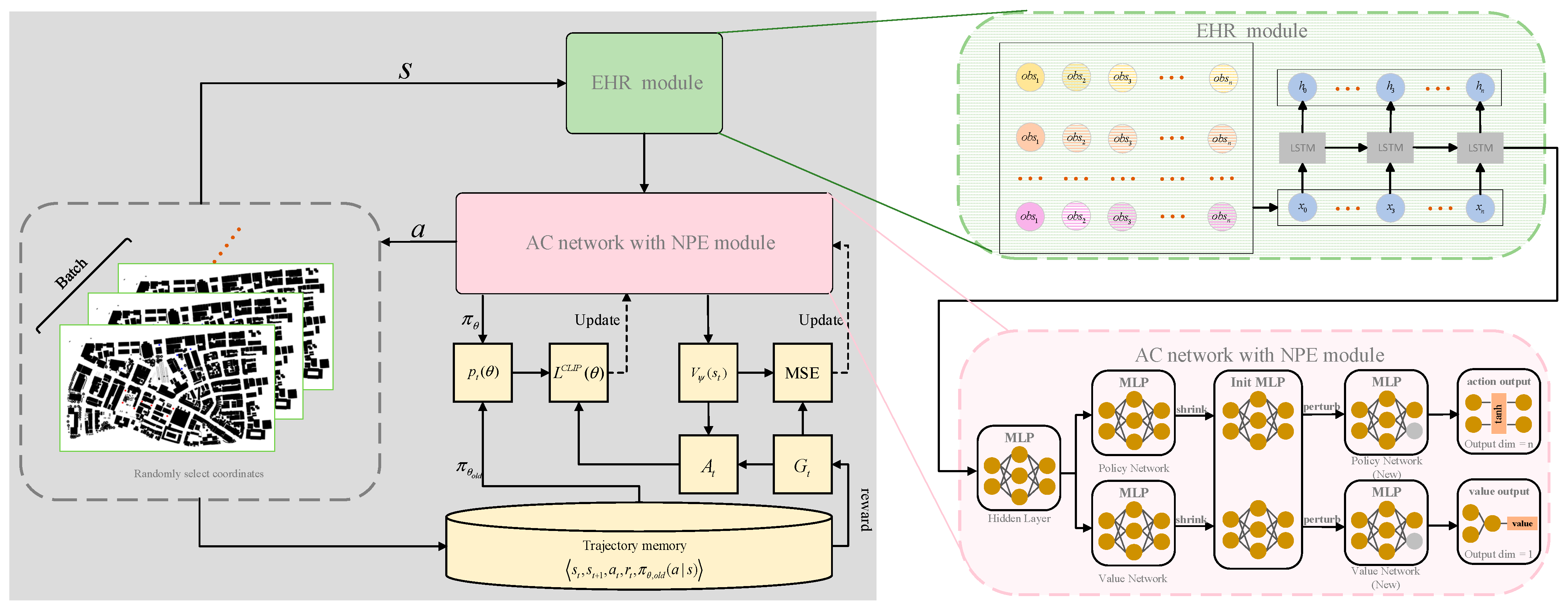

This section reports experiments on the proposed method from four perspectives. All experiments were run in Python 3.8 with PyTorch 1.8.0 and gym 0.26.2 in PyCharm 2023.1.2, on a machine with an NVIDIA Quadro 5000 GPU (16 GB) and an Intel i9-9960X CPU. First, we compared the convergence curves of several RL algorithms on this task. Second, we verified the effectiveness of each designed module through ablation experiments. Third, we evaluated the performance of our method against RL algorithms and a genetic algorithm (GA). Finally, we analyzed the impact of the reward weights on policy generalization. Together, these experiments validate the approach.

6.1. Comparative Experiment

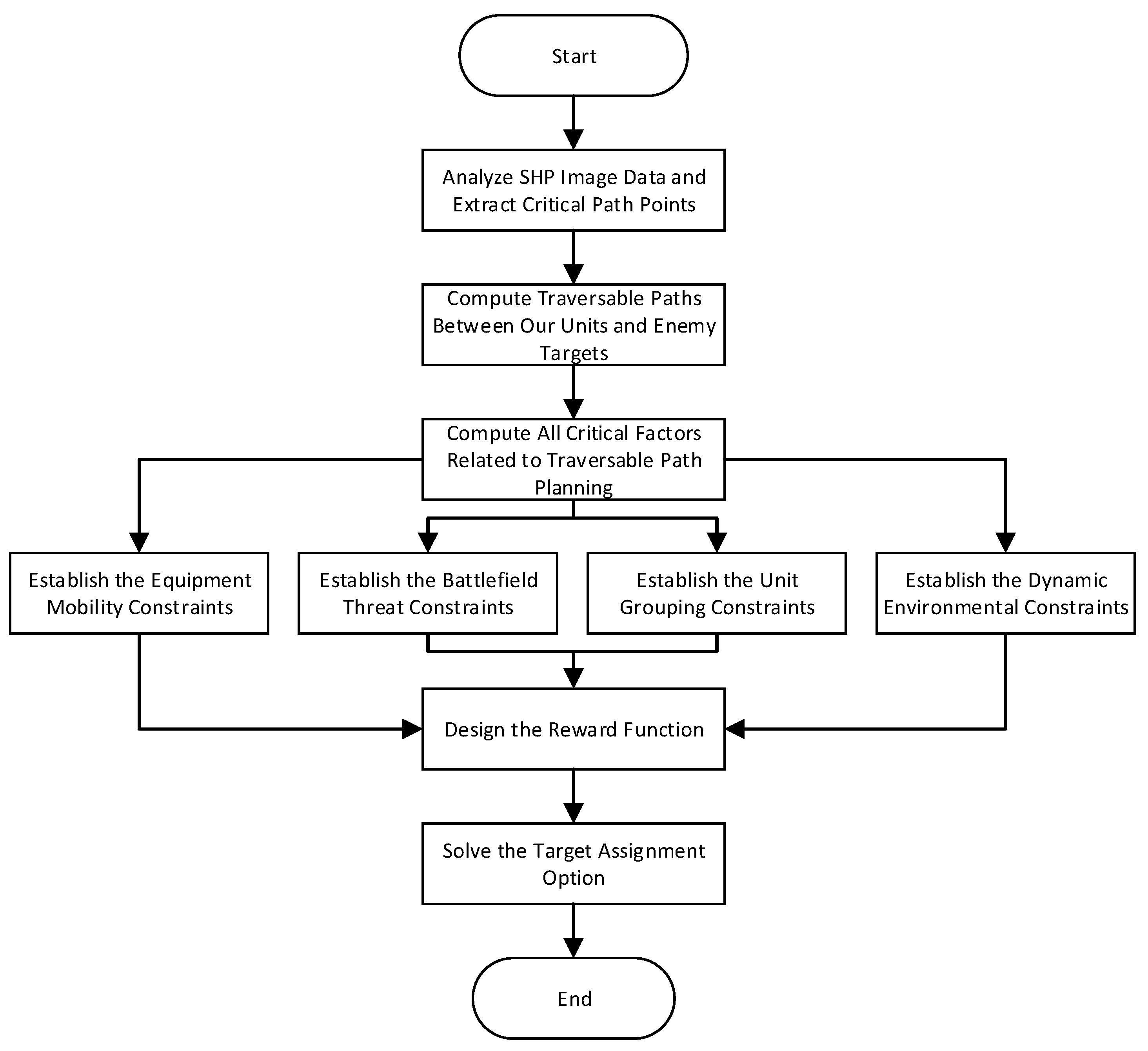

In the aforementioned urban environment scenarios, traversable paths from diverse initial states form the training dataset. The proposed GSPPO method was applied for training. We defined three adversarial scenarios. Scenario 1: 7 vs. 4 forces with 20 traversable paths per unit–target pair. Scenario 2: 10 vs. 6 forces with 20 paths per pair. Scenario 3: 7 vs. 4 forces with 30 paths per pair. These configurations test scalability and path density impacts.

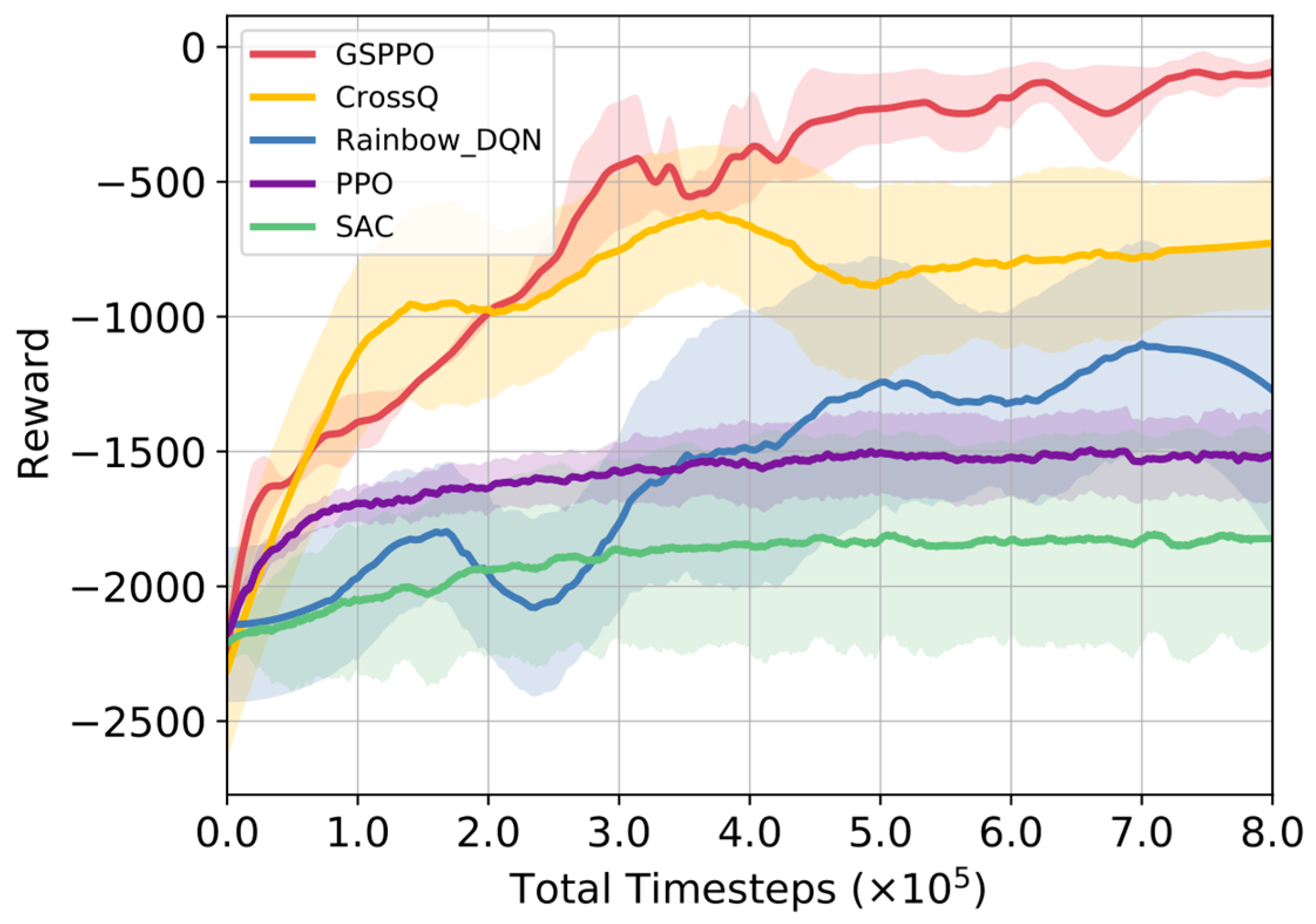

We also compared the proposed method with several RL algorithms in the experiments: the classic value-based algorithm Rainbow DQN [

49], the actor-critic based algorithm SAC [

50], and the relatively recent algorithm CrossQ [

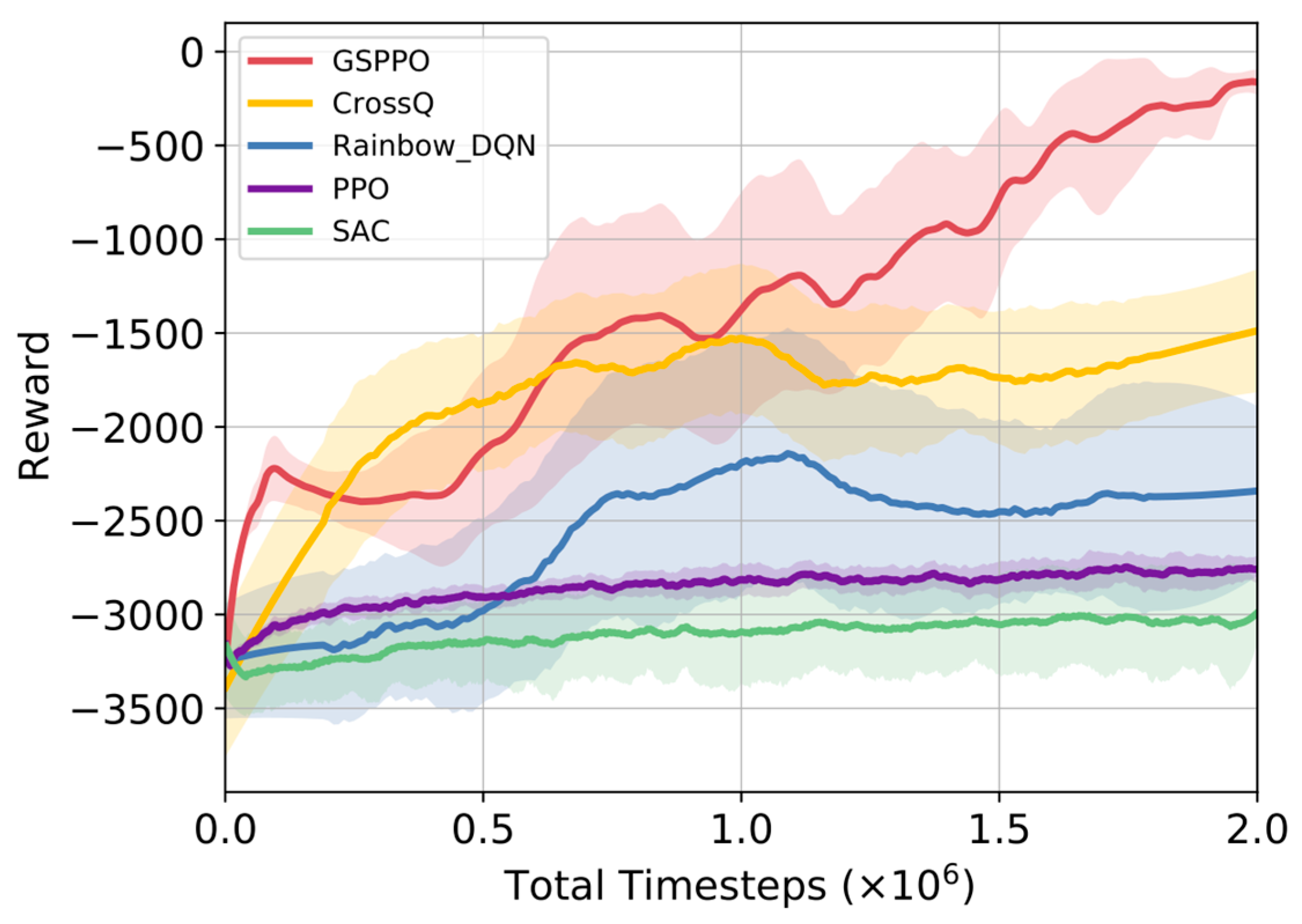

51]. The resulting learning curves after training are shown in

Figure 5,

Figure 6 and

Figure 7.

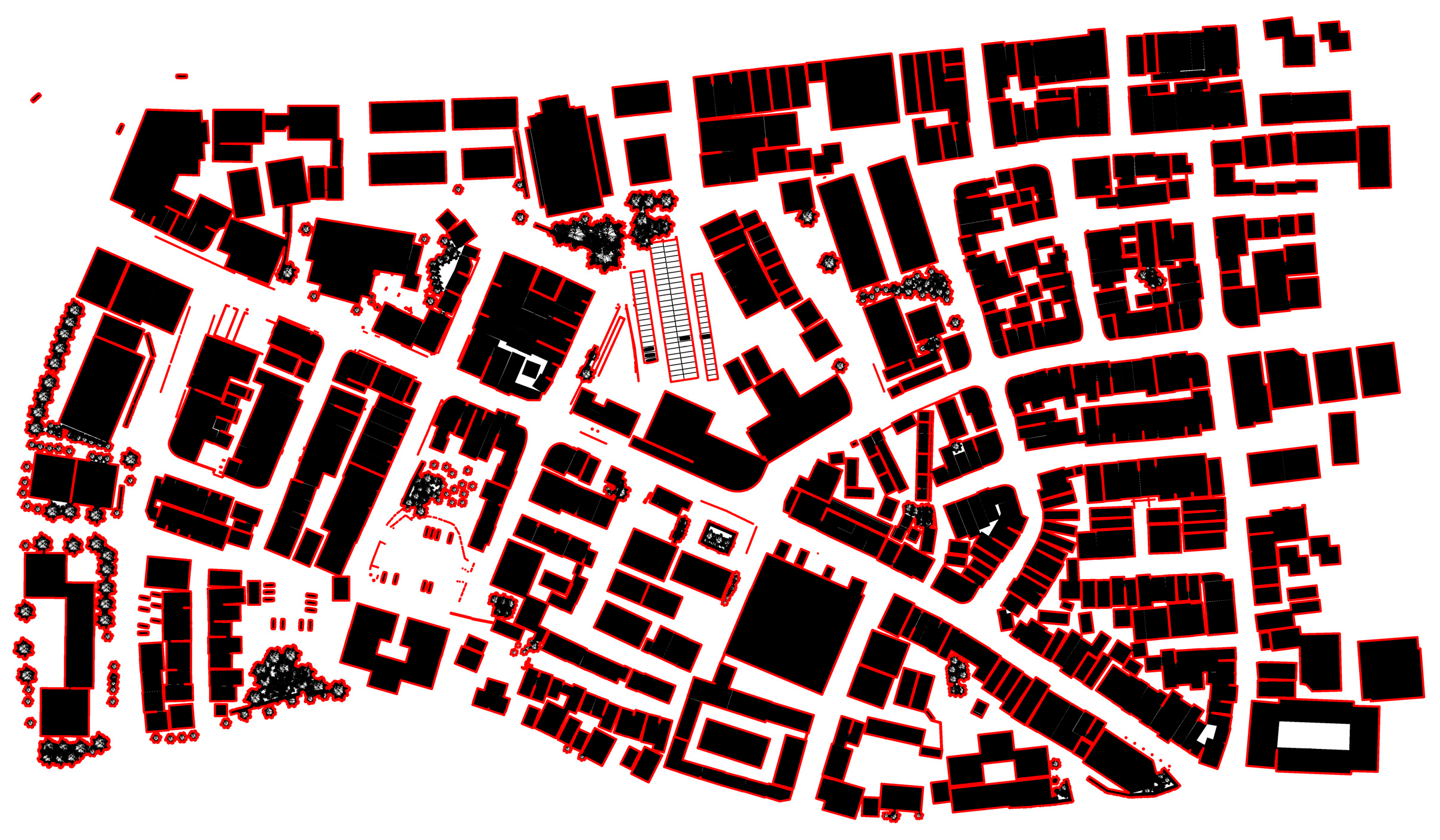

The experiments use the theoretical optimum of each reward module as the benchmark. The reward equals the negative deviation from this benchmark. Thus, the theoretical maximum total reward is 0. As shown in Figure 5, GSPPO’s average reward increases steadily during training. It then plateaus near zero. This indicates policy network convergence.
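The reward construction described above can be illustrated with a minimal sketch. It assumes that the deviation of each reward module is measured as an absolute difference from its optimum; the function and argument names are hypothetical, and the exact per-module terms used in the experiments are not reproduced here.

```python
from typing import Sequence


def deviation_reward(achieved: Sequence[float], optimum: Sequence[float]) -> float:
    """Return the negative total deviation from the per-module optimum.

    Assumption: deviation is the absolute difference per module. The
    result is never positive and equals 0 only when every module
    reaches its theoretical optimum.
    """
    if len(achieved) != len(optimum):
        raise ValueError("achieved and optimum must have the same length")
    return -sum(abs(a - o) for a, o in zip(achieved, optimum))
```

Under this assumption, a reward curve that plateaus near zero means that the learned policy is close to the benchmark on every module at once.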

Rainbow DQN, SAC, and CrossQ show low learning efficiency in urban adversarial scenarios. Their reward curves fail to approach the theoretical optimum. In contrast, GSPPO integrates two key innovations: an HER module and an NPE module. This integration improves data utilization and accelerates training convergence. It also mitigates performance disruptions from complex constraints and multi-dataset variations. Agents thus learn superior policies in challenging conditions.

Figure 6 shows that in Scenario 2, GSPPO maintains high performance as the problem scale grows, whereas the baseline algorithms degrade significantly. This confirms GSPPO’s scalability for large-scale constrained target assignment.

Figure 7 shows the effect of increasing path density. Although denser paths substantially expand the action space, GSPPO maintains effective performance. This demonstrates generalization against combinatorial complexity.

6.2. Ablation Experiment

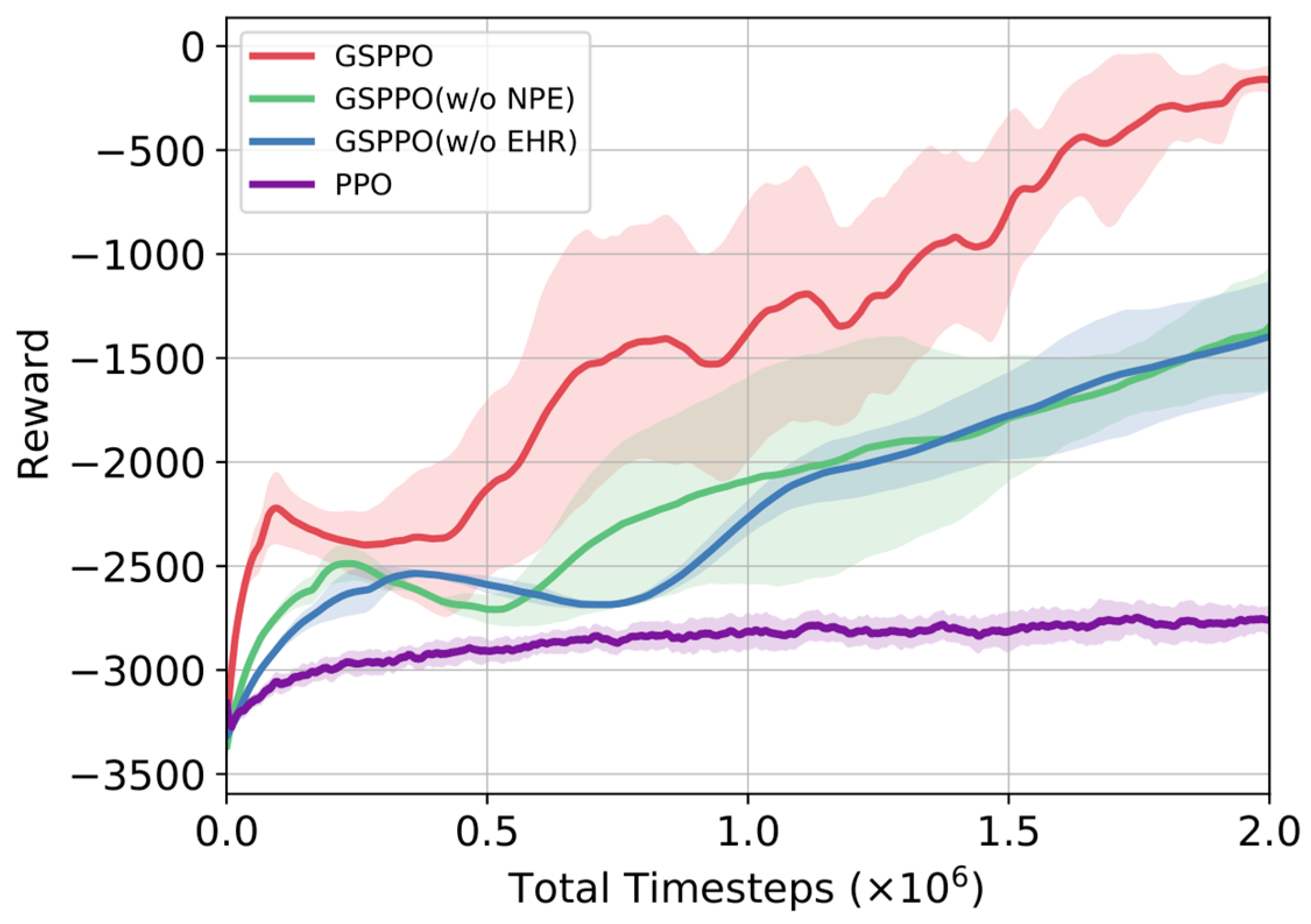

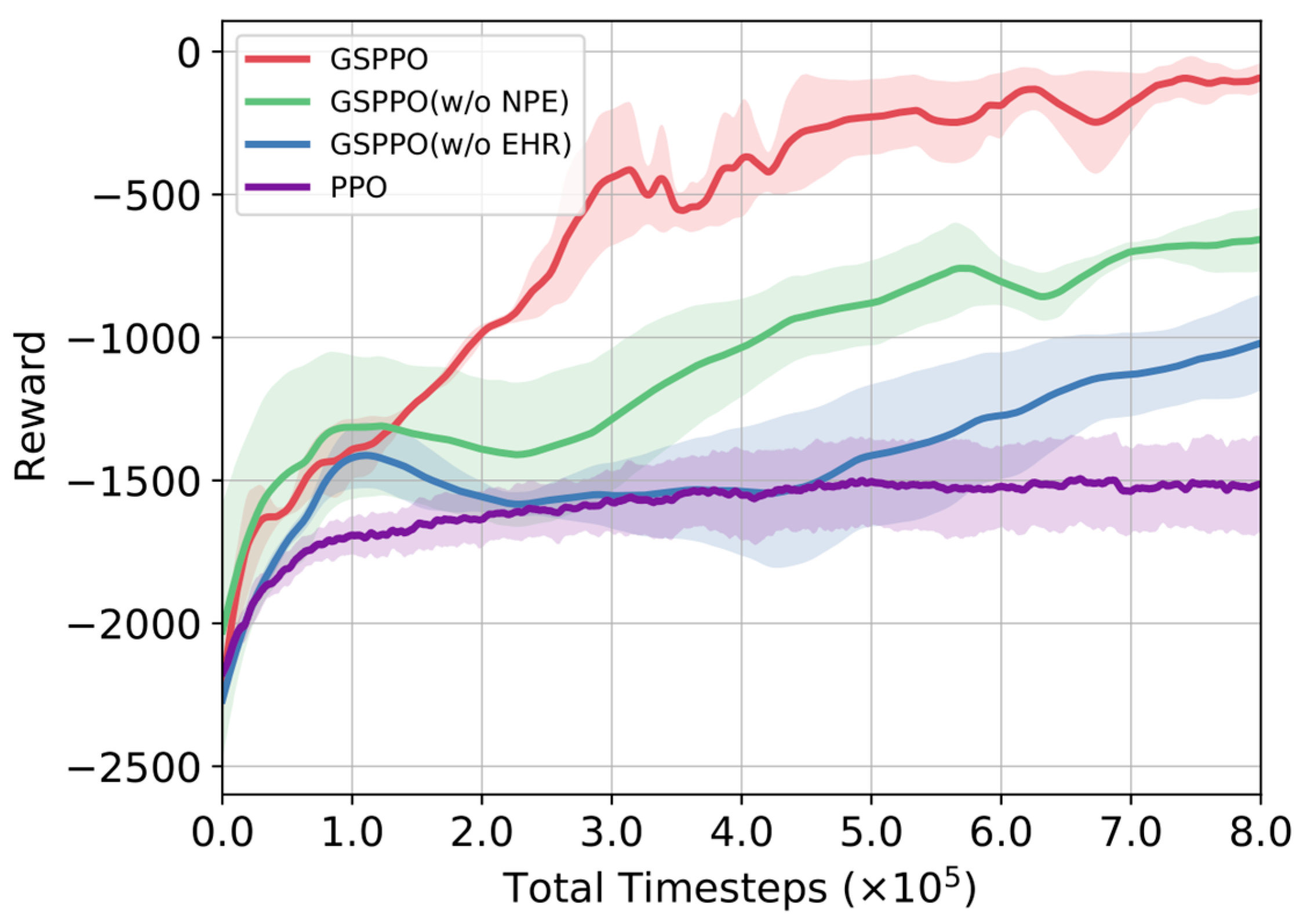

We introduced two improvements to the traditional PPO algorithm. To evaluate the contribution of each component to overall performance, we conducted ablation experiments that remove one improvement at a time and compared the resulting performance across the three application scenarios described above, as shown in Figure 8, Figure 9 and Figure 10.

The ablation results show that the full GSPPO (EHR + NPE) achieves the best performance. The partial variants (w/o EHR or w/o NPE) still significantly outperform baseline PPO: both yield higher rewards than PPO at comparable convergence speeds.

The EHR module captures temporal dependencies in urban target assignment. Its memory cells and gating mechanisms model long-term dynamics. This capability exceeds traditional PPO methods. EHR maintains decision consistency across time steps. It thereby improves cumulative rewards.

The NPE module mitigates network plasticity loss during multi-dataset training. It enhances the model’s representational capability and cross-task generalization. By injecting controlled noise into parameter updates, NPE improves generalization against input distribution shifts while preserving policy space continuity. EHR and NPE collaborate synergistically. Their integration enables strong generalization across diverse adversarial scenarios.
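The idea of injecting controlled noise into parameter updates can be sketched as follows. This is an illustrative stand-in, not the NPE module itself: it assumes plain gradient descent on a flat parameter list and zero-mean Gaussian noise, and the names `noisy_sgd_step`, `lr`, and `noise_std` are hypothetical.

```python
import random
from typing import List


def noisy_sgd_step(params: List[float], grads: List[float], lr: float,
                   noise_std: float, rng: random.Random) -> List[float]:
    """Apply one gradient-descent step, then perturb each parameter.

    Assumption: the perturbation is zero-mean Gaussian noise with
    standard deviation noise_std; noise_std = 0 recovers the plain step.
    """
    updated = []
    for p, g in zip(params, grads):
        step = p - lr * g  # ordinary gradient-descent update
        noise = rng.gauss(0.0, noise_std) if noise_std > 0 else 0.0
        updated.append(step + noise)
    return updated
```

Keeping `noise_std` small relative to the update size is what makes the noise "controlled" in this sketch: the parameters keep moving along the gradient direction while being nudged away from saturated regions.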

6.3. Policy Application Performance Comparison

We evaluated GSPPO in a 7 vs. 4 scenario with 20 traversable paths per unit–target pair. Performance metrics included path length, threat value, and total time. The results were compared with those of the RL algorithms CrossQ, Rainbow DQN, and SAC, as well as an improved genetic algorithm, AGA [52].

Each method was evaluated using 100 Monte Carlo simulations, and the values of all metrics in the results were normalized, as shown in Table 3. The experimental results show that the target assignment solution obtained using the GSPPO algorithm achieves an average path length of 3.8073, a threat value of 2.3587, and a total time of 3.3784. Compared with the RL algorithms CrossQ, Rainbow DQN, SAC, and the genetic algorithm AGA, GSPPO can find better target assignment solutions.

We also compared the solving time and CPU utilization of the proposed algorithm with those of the genetic algorithm, as shown in Table 4 and Table 5. Each method was evaluated using 10 repeated simulations. The leftmost column indicates the number of traversable paths used in the planning process. Together with the results above, these tables show that the trained model significantly reduces both planning time and CPU usage. Moreover, even when the initial situation changes, the proposed model can still be directly invoked to generate a better assignment solution without the need for replanning.
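A measurement of this kind can be sketched with the Python standard library. The helper below is hypothetical and is not the measurement code used for Table 4 and Table 5; in particular, it approximates CPU utilization as the ratio of process CPU time to wall-clock time, which is an assumption made for this example.

```python
import time
from statistics import mean
from typing import Callable, Dict


def profile_planner(plan: Callable[[], object], repeats: int = 10) -> Dict[str, float]:
    """Run a planner several times and report mean wall time and CPU time."""
    wall, cpu = [], []
    for _ in range(repeats):
        w0, c0 = time.perf_counter(), time.process_time()
        plan()  # one full planning call (model inference or GA search)
        wall.append(time.perf_counter() - w0)
        cpu.append(time.process_time() - c0)
    mean_wall, mean_cpu = mean(wall), mean(cpu)
    # Assumed proxy for utilization: CPU seconds consumed per wall second.
    share = mean_cpu / mean_wall if mean_wall > 0 else 0.0
    return {"mean_wall_s": mean_wall, "mean_cpu_s": mean_cpu, "cpu_share": share}
```

Calling `profile_planner` once with the trained policy and once with the genetic algorithm, on the same set of traversable paths, would give directly comparable numbers for each row of such a table.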

Furthermore, we found that the proposed method relies on complete UAV aerial imagery, from which traversable paths and critical path points are extracted. These elements underpin the target assignment framework. In real-world urban environments, however, geospatial data are often incomplete (for example, missing road segments or occlusions from foliage and buildings), and UAV imagery is often noisy (for example, due to inconsistent lighting). Such issues can degrade path extraction and, in turn, lead to suboptimal target assignment schemes, so the method's reliability depends on full aerial coverage.

6.4. Parameter Sensitivity Analysis

We analyzed reward weight impacts on four operational objectives: mobility efficiency, battlefield threat, remaining firepower, and dynamic environmental factors. Four weight configurations emphasize different aspects of the operational objectives. This reveals how reward shaping quantitatively influences target assignment outcomes.
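The effect of reward weights on the selected strategy can be illustrated with a small sketch. It assumes that the total reward is a weighted sum of negative per-objective deviations; the objective keys, the two candidate plans, and all numeric values are invented for illustration and are not taken from the experiments.

```python
from typing import Dict

OBJECTIVES = ("mobility", "threat", "firepower", "dynamics")


def weighted_reward(deviations: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of negative deviations; 0 is the best possible value."""
    return -sum(weights[k] * deviations[k] for k in OBJECTIVES)


def preferred_plan(plans: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> str:
    """Return the name of the plan with the highest weighted reward."""
    return max(plans, key=lambda name: weighted_reward(plans[name], weights))


# Hypothetical candidate plans: "fast" is quick but exposed, "safe" is the opposite.
plans = {
    "fast": {"mobility": 0.1, "threat": 0.8, "firepower": 0.4, "dynamics": 0.5},
    "safe": {"mobility": 0.6, "threat": 0.2, "firepower": 0.4, "dynamics": 0.5},
}
mobility_first = {"mobility": 0.7, "threat": 0.1, "firepower": 0.1, "dynamics": 0.1}
threat_first = {"mobility": 0.1, "threat": 0.7, "firepower": 0.1, "dynamics": 0.1}
```

With these illustrative numbers, `preferred_plan(plans, mobility_first)` selects `"fast"` and `preferred_plan(plans, threat_first)` selects `"safe"`, which mirrors the kind of behavioral shift examined in this subsection.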

Under the first weight configuration, the generated grouping and maneuver strategy is shown in Figure 11. Our units 0, 1, 4, and 5 form a single group and advance along the planned route to engage enemy target 2. Our unit 2 forms a separate group and advances along the planned route to engage enemy target 1. Our unit 3 forms another individual group and follows its planned route to attack enemy target 3. Our unit 6 also operates independently and advances toward enemy target 0 along its designated path.
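The grouping described for Figure 11 follows directly from the unit-to-target assignment: units that share a target form one group. A minimal sketch of that derivation is given below; the helper name and the use of `None` for an unassigned (reserve) unit are assumptions made for this example.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple


def group_by_target(
    assignment: Dict[int, Optional[int]],
) -> Tuple[Dict[int, List[int]], List[int]]:
    """Group our units by assigned enemy target; None marks a reserve unit."""
    groups: Dict[int, List[int]] = defaultdict(list)
    reserve: List[int] = []
    for unit in sorted(assignment):
        target = assignment[unit]
        if target is None:
            reserve.append(unit)
        else:
            groups[target].append(unit)
    return dict(groups), reserve


# Assignment reported for Figure 11 (our unit -> enemy target).
figure_11 = {0: 2, 1: 2, 4: 2, 5: 2, 2: 1, 3: 3, 6: 0}
groups, reserve = group_by_target(figure_11)
```

Here `groups` maps target 2 to units `[0, 1, 4, 5]` and each of targets 1, 3, and 0 to a single unit, while `reserve` is empty.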

The results indicate that, under this weight configuration, our units prioritize mobility efficiency during the offensive, with less consideration given to battlefield threat, remaining firepower, and dynamic environmental factors. This configuration emphasizes eliminating enemy units in the shortest possible time.

Under the second weight configuration, the generated grouping and maneuver strategy is shown in Figure 12. Our units 0, 3, 4, and 5 form a single group and advance along the planned route to engage enemy target 1. Our unit 1 operates independently and advances along the planned route to attack enemy target 2. Our unit 2 forms another individual group and follows its designated path to engage enemy target 3. Our unit 6 also acts alone, advancing toward enemy target 0 along its planned route.

The results indicate that, under this weight configuration, our units prioritize reducing the potential threat encountered during maneuvering, thereby minimizing losses to our assets during the offensive.

Under the third weight configuration, the generated grouping and maneuver strategy is shown in Figure 13. Our unit 0 operates independently and advances along the planned route to engage enemy target 3. Our unit 1 forms another individual group and follows its planned route to attack enemy target 2. Our unit 3 acts alone and advances toward enemy target 0. Our unit 5 also forms a separate group and moves along its designated path to engage enemy target 1. Meanwhile, units 2, 4, and 6 are not assigned any target and remain in a reserve state.

The results indicate that, under this weight configuration, our units prioritize maintaining a larger reserve force during the offensive, aiming to maximize the utilization of available firepower resources.

Under the fourth weight configuration, the generated grouping and maneuver strategy is shown in Figure 14. Our units 0, 3, and 5 form a group and advance along the planned route to engage enemy target 1. Our unit 1 operates independently and advances along the planned route to attack enemy target 3. Our units 2 and 6 form another group and follow their respective paths to engage enemy target 2. Our unit 4 acts alone and advances toward enemy target 0 along its designated route.

The results indicate that, under this weight configuration, our units prioritize strategies that are less susceptible to disruption. This configuration aims to minimize the negative impact of potential dynamic disturbances in the operational environment.

The reward weight sensitivity analysis reveals distinct behavioral shifts in target assignment strategies based on parameter configurations. Prioritizing mobility efficiency minimizes engagement time but increases vulnerability to threats. Emphasizing threat reduction enhances survivability at the cost of operational speed. Maximizing reserve firepower conserves resources but may delay mission completion. Optimizing for environmental adaptability improves disturbance resistance while requiring balanced trade-offs in other objectives. These results demonstrate that strategic preferences can be systematically encoded through weight adjustments, enabling mission-specific policy customization. The framework’s flexibility supports diverse operational requirements—from time-critical strikes to high-risk reconnaissance—by modulating the relative importance of core constraints within the reward function.