1. Introduction

Deep reinforcement learning (DRL) has made significant progress in robotic control, autonomous driving, and game-playing agents. However, its decision-making process often functions as a black box, lacking both interpretability and verifiability. In high-risk applications such as multi-robot collaboration, medical assistance, and financial decision-making, the transparency and auditability of decisions are critical [1]. Traditional visualization and local linearization reveal only limited sensitivities to input features. They fail to identify the causal impact of actions on outcomes and do not generalize well across tasks.

In robotic control, understanding the causal relationships among state, action, and reward is vital for both decision explanation and safety. Classical control methods, such as PID (Proportional–Integral–Derivative) control, Model Predictive Control (MPC), and rule-based expert systems, naturally provide strong interpretability and stability owing to their clear mathematical models or rule-based logic [2]. Their convergence and robustness can be rigorously verified using Lyapunov methods or frequency-domain analysis [3]. However, these approaches rely heavily on precise physical dynamics models and expert knowledge. In complex tasks involving high degrees of freedom or multi-robot coordination, the manual design and maintenance of controllers and rule bases become extremely costly, and such methods cannot adaptively learn and improve from operational data.

DRL is a key approach for high-dimensional, continuous control. However, its black-box nature raises concerns about interpretability and safety. For example, recent work on Interpretable Continuous Control Trees (ICCTs) has shown that tree-based models can be easier to simulate, faster to verify [4], and more interpretable than neural networks, while still delivering competitive performance.

Causal inference offers a rigorous mathematical framework for reinforcement learning by treating each agent’s action as an intervention on the environment. Structural Causal Models (SCMs) systematically capture the causal dependencies among state variables, actions, and outcomes, enabling contrastive explanations (“Why action A instead of B?”) and counterfactual reasoning to enhance explanation fidelity and trustworthiness. However, applying causal inference directly to complex multi-agent systems still faces challenges due to limited model expressiveness. Many Explainable Reinforcement Learning approaches borrow saliency or attention techniques from Explainable Artificial Intelligence [5,6]. However, these methods were not designed for sequential decision-making and struggle to account for temporal dependencies in DRL.

In recent years, Explainable Reinforcement Learning has attracted considerable research aimed at improving the interpretability of reinforcement learning. Wang [7] and Yang [8] leveraged causal inference to enhance algorithmic robustness against confounding variables and adversarial interventions. Seitzer et al. [9] improved exploration strategies by detecting the causal effects of actions, while Wang [10] and Ding [11] investigated causal world models. However, these works focus primarily on using causality to boost generalization rather than to generate explanations. True advances in explainability via causal models remain scarce. Madumal et al. [12] introduced the Action Influence Model (AIM), a causal model specifically tailored for reinforcement learning to produce explanations of agent behavior; later work combined AIM with decision trees to improve explanation quality [13]. Yet these methods require limited action spaces and a priori expert-provided causal structures, and they support only low-fidelity models unsuitable for policy learning. Volodin et al. [14] proposed an approach to abstract latent variables from high-dimensional observations and learn their sparse causal graph, deepening environmental understanding but without offering detailed insights into agent behaviors. Nevertheless, significant gaps remain in leveraging causal relationships to adjust and optimize DRL and robotic control strategies within interpretable reinforcement learning.

Subsequently, several studies have attempted to explain specific aspects of the decision-making process, such as observations, actions, policies, and rewards. However, these efforts rarely integrate explanations into the environment’s dynamics, which is crucial for understanding the long-term effects of the agent’s actions [15,16]. Traditional DRL methods often struggle to reveal the causal relationships between state variables, action choices, and final rewards in a policy. This makes it difficult for users to infer or predict the system’s behavior in complex or unusual scenarios. The lack of interpretability and safety hinders their deployment on real robots [17].

In the field of robotic control, Causal Policy Learning (CPL) can enhance robustness and interpretability [18]. Li et al. [19] used a knowledge graph to deduce relationships in reinforcement learning, where prior knowledge reveals which relationships are important. Zhu [20] used causal inference techniques to compute the importance of different samples in offline data, adjusting their influence on the loss function to ensure safety under perturbations. This method can be flexibly integrated with existing model-free DRL algorithms, such as Soft Actor-Critic and Deep Q-Learning. Tang [21] proposed CD-DRL-MP (Causal Deconfounding Deep Reinforcement Learning for Mobile Robot Motion Planning), a method that infers causal relationships via Structural Causal Models (SCMs) and blocks backdoor paths in the model using a representation set, allowing the causal effects between states and actions to be learned. Guo et al. [22] used a dual-correction-strategy-based Markov boundary (DCMB) method, based on Bayesian network (BN) datasets, to remove false positives from the currently selected features, improving the learning accuracy of the Markov boundary. These studies focus on optimizing sample data via causal inference to improve RL training efficiency. However, agents following a fixed policy still require retraining for alignment.

To avoid retraining and enhance generalization, causal correction is a feasible solution. In complex robotic tasks, action execution is influenced by multiple environmental variables. Diehl and Ramirez-Amaro [23] used Bayesian Neural Networks (BNNs) to infer causal structures, enabling robots to predict and avoid erroneous actions. This strategy builds on their earlier work [24], which improved performance by predicting and avoiding mistakes. However, it did not enhance reward guidance. Causal correction computes the causal influence weight of each action on key states and final rewards and uses this to automatically adjust errors or disturbances in action selection. This allows robots to consistently choose actions that are truly effective for task outcomes, even under perturbations, thereby improving policy convergence speed, performance, and reliability.

Against this background, an interpretable reinforcement learning framework based on the Causal World Model is introduced, with the following primary contributions:

Causal World based on GNN-NCM: To capture environment dynamics and action–reward causal relationships in RL, we propose a Causal-World-Model-based RL framework that integrates a novel GNN-NCM architecture. It replaces traditional attention mechanisms with multilayer message passing and neighborhood aggregation. The framework more precisely infers causal influence weights, thereby modeling state transitions and reward generation more effectively.

C2-Net: We propose the C2-Net correction algorithm, which incorporates causal weights inferred from GNN-NCM into dynamic action adjustment and compensation. By explicitly leveraging action–state–reward causal relationships, C2-Net enhances reward acquisition and improves policy exploration, thereby enhancing both performance and generalization.

2. Materials and Methods

2.1. Structural Causal Model

A Structural Causal Model (SCM) formalizes the causal relationships among variables by combining exogenous variables $U$, endogenous variables $V$, and a set of structural equations $F$, forming the tuple $\langle U, V, F \rangle$ [25]. Here, $F$ is the collection of structural equations, each quantitatively describing how a variable is determined by other variables. The SCM can be visualized as a directed acyclic graph in which the nodes correspond to the variables and the edges are determined by the structural equations. Edges in the graph reflect the causal relationships between nodes. For an intervention, the post-intervention distribution can be computed using the SCM’s “prune-and-replace” rule as follows:
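
In standard notation, the prune-and-replace rule is usually written as the truncated factorization below. This is the textbook form and is given here as an assumption about the intended equation; the symbols $X$ (the intervened variables), $x$ (their assigned values), $v_i$ (the value of the endogenous variable $V_i$), and $\mathrm{pa}_i$ (the values of its parents) are introduced for illustration only.

$$
P\bigl(v \mid do(X = x)\bigr) \;=\; \prod_{i \,:\, V_i \notin X} P\bigl(v_i \mid \mathrm{pa}_i\bigr) \quad \text{for all } v \text{ consistent with } X = x,
$$

and the probability is zero for any $v$ inconsistent with $X = x$. The structural equations of the intervened variables are pruned from the product and replaced by the constants $x$, while every other factor is left unchanged.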

Building upon SCM, the Action Influence Model (AIM) incorporates an action space vector into the structural equations. This explicitly models how each exogenous variable is influenced by an action. Compared to a standard SCM, AIM more directly accounts for the impact of an agent’s or robot’s actions on the entire system, enabling more effective causal action analysis. To quantify the causal effect of a single action on a future state or reward, consider a given state–action pair at time t, and let the next-step variable of interest be either a specific state dimension or the reward. The single-step causal influence of the action on this variable is defined as follows:

Finally, by ranking all possible pairs according to their influence scores and retaining only those edges whose scores exceed a threshold (usually set to 0.1), one can construct an explanatory causal subgraph.
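
The ranking-and-thresholding step can be illustrated with a minimal sketch. The function name `explanatory_subgraph`, the edge labels, and the example scores are hypothetical; only the threshold of 0.1 comes from the text.

```python
def explanatory_subgraph(influence, threshold=0.1):
    """Keep edges whose influence score exceeds the threshold, strongest first.

    `influence` maps (source, target) pairs to non-negative influence scores.
    """
    kept = [(src, dst, score)
            for (src, dst), score in influence.items()
            if score > threshold]
    # Rank the surviving edges by descending influence score.
    return sorted(kept, key=lambda edge: edge[2], reverse=True)


# Hypothetical influence scores for a lander-like task (illustrative values).
scores = {
    ("main_engine", "vertical_velocity"): 0.62,
    ("side_engine", "angle"): 0.35,
    ("side_engine", "vertical_velocity"): 0.04,  # below threshold, dropped
}
edges = explanatory_subgraph(scores)
for src, dst, score in edges:
    print(f"{src} -> {dst}: {score:.2f}")
```

Raising the threshold yields a sparser, easier-to-read graph at the cost of hiding weak but possibly real dependencies.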

2.2. Graph Neural Networks

Graph Neural Networks (GNNs) naturally excel at processing graph-structured data and can model interactions among agents and environmental elements in multi-robot systems. By treating state variables or agents as nodes and interactions or communication links as edges, GNNs use message-passing mechanisms to efficiently aggregate neighborhood information. This provides a structured foundation for causal inference. Combining causal inference with GNNs preserves both interpretability and scalability, which endows DRL with greater robustness and generalization in complex scenarios.

As mentioned above, causal models are mostly presented in the form of graphs. Graph Neural Networks excel at handling relational data, making them well-suited to model interactions in multi-agent and physics-based environments [26,27]. GNNs introduce a powerful “relational inductive bias,” enabling them to process arbitrarily structured graph data. Building on this, researchers have integrated GNNs into multi-agent reinforcement learning (MARL) frameworks to improve coordination performance and robustness. Jiang et al. [28] proposed Graph Convolutional Reinforcement Learning (GCRL) at ICLR 2020, treating the environment as a dynamic graph and using learnable relation kernels to capture agent interactions. These results indicate that GNNs are a versatile foundation for explainable RL.

Benham and Wang [29] adopted a similar mechanism for causal model interpretation, in line with the recent “causal explanation” trend in GNN research. Their method trains a GNN augmented by a small multilayer perceptron (MLP) to assign a causal score to each edge, thereby constructing a neural causal model:

In this model, each node carries a hidden representation that is updated at every message-passing iteration from the representations of its neighboring nodes. GNNs are well-suited to model data consisting of entities (nodes) and their relations (edges). This structured inductive bias allows interpretability techniques to capture the spatiotemporal dependencies an agent perceives, dependencies that attention-based methods often fail to capture. Consequently, GNNs can provide deeper insights into why an agent behaves as it does.
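
The neighborhood aggregation and edge scoring described above can be sketched in a few lines. This is a simplified illustration rather than the model of [29]: it uses mean aggregation without learned weight matrices, and `edge_score` replaces the trained MLP with a sigmoid of the embedding dot product. All names and values are hypothetical.

```python
import math


def message_passing_step(h, parents):
    """One round of mean-neighborhood aggregation followed by a ReLU update.

    `h` maps node names to embedding vectors (lists of floats);
    `parents` maps each node to the list of nodes that send it messages.
    """
    new_h = {}
    for node, vec in h.items():
        nbrs = parents.get(node, [])
        if nbrs:
            agg = [sum(h[n][k] for n in nbrs) / len(nbrs) for k in range(len(vec))]
        else:
            agg = [0.0] * len(vec)
        # Combine the node's own state with the aggregated message.
        new_h[node] = [max(0.0, x + m) for x, m in zip(vec, agg)]
    return new_h


def edge_score(h_src, h_dst):
    """Stand-in for the MLP scorer: sigmoid of the embedding dot product."""
    dot = sum(a * b for a, b in zip(h_src, h_dst))
    return 1.0 / (1.0 + math.exp(-dot))


# Toy causal graph: a state node and an action node both point to the reward.
h0 = {"state": [1.0, 0.0], "action": [0.0, 1.0], "reward": [0.0, 0.0]}
parents = {"reward": ["state", "action"]}
h1 = message_passing_step(h0, parents)
score = edge_score(h1["state"], h1["reward"])
```

Stacking several such steps lets information from multi-hop ancestors reach a node, which is the role of the multilayer message passing in the text.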

RL environments evolve through highly correlated transitions, and attention alone may miss the key spatiotemporal structure. Joint spatial–temporal encoding with GNNs better captures critical interactions and supports clearer decision explanations. Because this study must process a large number of causal structures arising from robot–environment interactions, we employ GNNs to process them. By learning and aggregating information from these structures, the GNN makes the inferred causal structures more precise.

An MLP is used to score the aggregated outputs and update the GNN parameters by minimizing the mean squared error between the long-term causal return predicted by the GNN and the Monte Carlo return:

A sparsity regularization term is also applied to the cross-step edge weights, encouraging concise causal chains:

The total loss function is then defined as follows:

By modeling and explaining the causal dependencies in the state–action–reward process, the inference network ultimately outputs an explanatory causal graph.
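
The training objective described above, a return-prediction error plus a sparsity penalty, can be sketched as follows. The L1 form of the penalty and the coefficient `sparsity_coef` are assumptions made for illustration; the text states only that a mean squared error and a sparsity term on the cross-step edge weights are combined.

```python
def total_loss(predicted_returns, mc_returns, cross_step_weights, sparsity_coef=0.01):
    """Mean squared error on returns plus an (assumed) L1 penalty on edge weights."""
    n = len(predicted_returns)
    mse = sum((p - g) ** 2 for p, g in zip(predicted_returns, mc_returns)) / n
    l1 = sum(abs(w) for w in cross_step_weights)
    return mse + sparsity_coef * l1


# Two predicted returns against their Monte Carlo targets, two edge weights.
loss = total_loss([1.0, 2.0], [1.0, 4.0], [0.5, -0.5], sparsity_coef=0.1)
```

A larger coefficient drives more cross-step weights toward zero, giving shorter causal chains at the cost of prediction accuracy.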

2.3. Causal Discovery and Inference

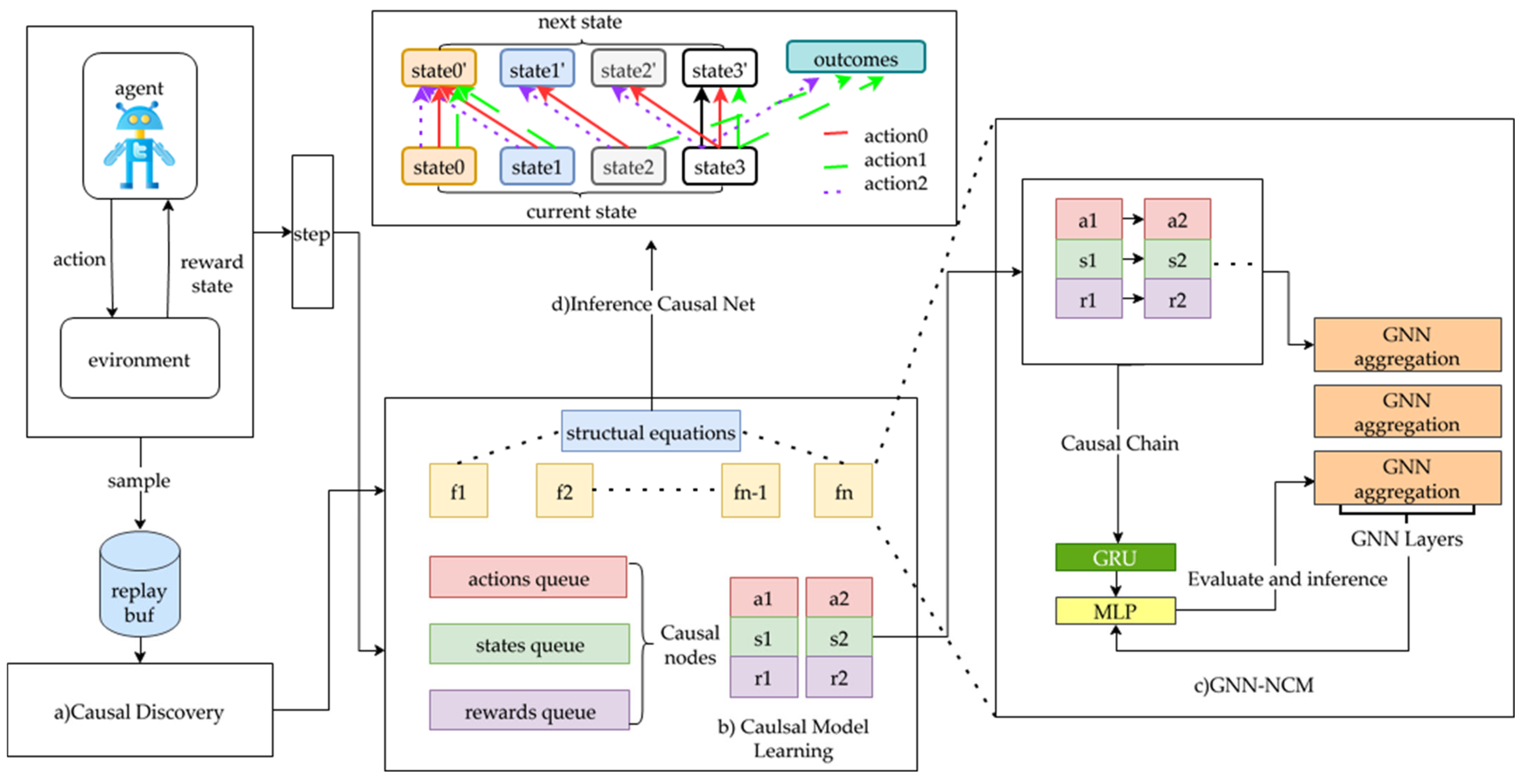

As shown in Figure 1a, experience tuples are sampled from the replay buffer for causal analysis. The state and action are treated as input variables, and the next state and outcome r as output variables. Assuming that the outputs are conditionally independent given the inputs, the system decomposes into independent components. This assumption enables the orientation of each causal edge, which may then be validated through additional constraints, expert priors, or formal causal-inference rules.

For every candidate pair of variables, a test is performed to determine the existence of a causal edge. Prior studies have demonstrated that Conditional Independence Testing (CIT) efficiently uncovers sparse causal structures [10,30]. The CIT computation is given as follows:

CIT assesses whether two variables remain statistically dependent when conditioned on another set of variables; here, the conditioning set is the set of parent variables of the variable under test. By iteratively applying CIT to remove non-existent edges, a sparse yet valid causal graph is obtained.
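
To make the idea of a conditional independence test concrete, the sketch below uses a partial-correlation test with Fisher's z-transform and a single conditioning variable. This is one common choice and is an assumption here; the specific test statistic used in this work is not recoverable from the text. Function names and the synthetic data are hypothetical.

```python
import math
import random


def pearson(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def ci_test_pvalue(x, y, z):
    """Two-sided p-value for 'x independent of y given z' (linear-Gaussian case)."""
    r_xy, r_xz, r_yz = pearson(x, y), pearson(x, z), pearson(y, z)
    r = (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))
    r = max(min(r, 0.999999), -0.999999)  # keep the log finite
    # Fisher z-statistic with one conditioning variable: sqrt(n - 1 - 3).
    stat = 0.5 * math.log((1 + r) / (1 - r)) * math.sqrt(len(x) - 4)
    return math.erfc(abs(stat) / math.sqrt(2))


random.seed(0)
n = 500
z = [random.gauss(0, 1) for _ in range(n)]
x = [zi + random.gauss(0, 0.5) for zi in z]              # driven by z
y_conf = [zi + random.gauss(0, 0.5) for zi in z]         # shares only z with x
y_direct = [2 * xi + random.gauss(0, 0.1) for xi in x]   # directly driven by x

p_confounded = ci_test_pvalue(x, y_conf, z)  # dependence explained by z
p_direct = ci_test_pvalue(x, y_direct, z)    # dependence survives conditioning
```

An edge is kept when the p-value falls below a chosen significance level. In this example `x` and `y_conf` are strongly correlated marginally, yet the edge between them is a candidate for removal once `z` is conditioned on.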

Extending the Causal World Model framework, edge strengths are estimated by learning node embeddings with a Graph Neural Network, yielding a fully differentiable, end-to-end causal evaluation mechanism. Parameterizing these edge weights makes it possible to infer causal influence paths directly from the GNN representations.

After the causal graph has been discovered and back-door adjustments applied (see Figure 1b), a GNN-based inference module is constructed (Figure 1c). At each time step, all state and action nodes are linearly projected into a shared hidden space to obtain initial embeddings, which are then propagated through the causal graph via message passing. The resulting embeddings are scored using an MLP, and the GNN parameters are updated by minimizing the mean-squared error between the GNN’s long-term causal return and the Monte Carlo return.

The entire causal graph is treated as a heterogeneous graph with “state→output” and “action→output” edges and is input to the GNN for aggregation and causal weight computation. By modeling and explaining the causal dependencies in the state–action–reward process, the inference network (Figure 1d) ultimately outputs an explanatory causal graph.

2.4. C2-Net

A direct application of discovered causal structures and causal weights might involve reward shaping or full policy retraining. However, reward shaping can introduce unintended biases into the learning objective and requires extensive manual tuning. In contrast, full policy retraining is computationally expensive and risks undermining established policy behaviors. To address these challenges, a lightweight, modular correction mechanism—the C2-Net—is introduced. C2-Net leverages precomputed causal weights to adjust policy actions dynamically at decision time, enabling precise, real-time intervention without modifying the reward function or retraining the entire policy network.

C2-Net is designed to adjust and refine policy actions through causal inference, thereby enhancing safety and interpretability in reinforcement learning. First, causal weights serve as an intuitive form of explanation: by leveraging the Structural Causal Model and GNN-NCM, C2-Net automatically identifies which state variables and action components exert genuine causal influence on the final return, rather than mere correlations. This precision ensures that corrections target only truly “harmful” or “inefficient” action elements, thereby avoiding indiscriminate intervention across the entire policy.

As shown in Figure 2, C2-Net integrates the Causal World Model with GNNs and consists of two main components:

Causal Inference with Multilayer GNN-NCM: This component learns the causal relationships among state variables, actions, and rewards by constructing a causal graph where nodes represent states and actions, and edges represent latent causal influences or interactions. Each state–action pair is linearly projected into initial node embeddings in a shared hidden space. Through several layers of message passing and neighborhood aggregation, the GNN-NCM computes causal influence weights, producing a causal weight matrix that quantifies each action’s influence on each reward variable.

Weight Partitioning, Vectorization, and Activation: The causal weight matrix is partitioned into blocks corresponding to the action dimensions. Each block is reduced to a scalar causal weight via a predefined mapping rule. These weights are then normalized by a temperature-adjusted Softmax function:

In this work, we leverage the causal weights learned by the GNN to perform the fine-grained correction of the original policy’s action outputs, aiming to better optimize the long-term cumulative return. Specifically, at time step t, the original policy outputs an action vector.

Meanwhile, the causal discovery module computes the causal contribution (or “weight”) of each action dimension to the future reward as follows:

Higher raw causal weights result in larger normalized weights. Finally, the raw action is corrected as follows:

where the interpolation coefficient controls the strength of the correction, ranging from the unmodified action to the fully causally corrected action, and 1/d, with d the number of action dimensions, serves as a de-centering baseline to maintain overall amplitude balance.
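
The normalization and correction steps can be sketched as below. The exact correction formula is not recoverable from the text, so the multiplicative form `a_i * (1 + beta * (w_i - 1/d))` is an assumption chosen to match the description: `beta = 0` leaves the action unchanged, and subtracting `1/d` makes the per-dimension scale factors average to one. The names `beta`, `temperature`, and both function names are hypothetical.

```python
import math


def softmax_with_temperature(weights, temperature=1.0):
    """Temperature-adjusted softmax; lower temperature sharpens the distribution."""
    scaled = [w / temperature for w in weights]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def correct_action(action, causal_weights, beta=0.5, temperature=1.0):
    """Rescale each action dimension by its normalized causal weight (assumed form)."""
    d = len(action)
    w = softmax_with_temperature(causal_weights, temperature)
    return [a * (1.0 + beta * (wi - 1.0 / d)) for a, wi in zip(action, w)]


raw_action = [1.0, 1.0, 1.0]
weights = [2.0, 0.0, 0.0]  # first dimension has the largest causal weight
corrected = correct_action(raw_action, weights, beta=1.0)
```

With equal causal weights every normalized weight equals `1/d`, so the action passes through unchanged; dimensions with above-average weight are amplified and the others are attenuated.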

By combining these two steps, C2-Net can identify and suppress potentially dangerous actions in real time while promoting causally beneficial behaviors, ensuring policy robustness, safety, and interpretability under high-risk and complex conditions.

3. Results

To comprehensively evaluate the performance and interpretability of the proposed C

2-Net in reinforcement learning tasks, this chapter first introduces the experimental environments and evaluation metrics, then describes the baseline algorithms and evaluation protocols. The experiments include two main categories: single-agent control tasks on OpenAI Gym’s classic continuous control benchmarks (Hopper, Walker2d, Humanoid)—these environments are all open-sourced, and the definitions and models can be found using the following link:

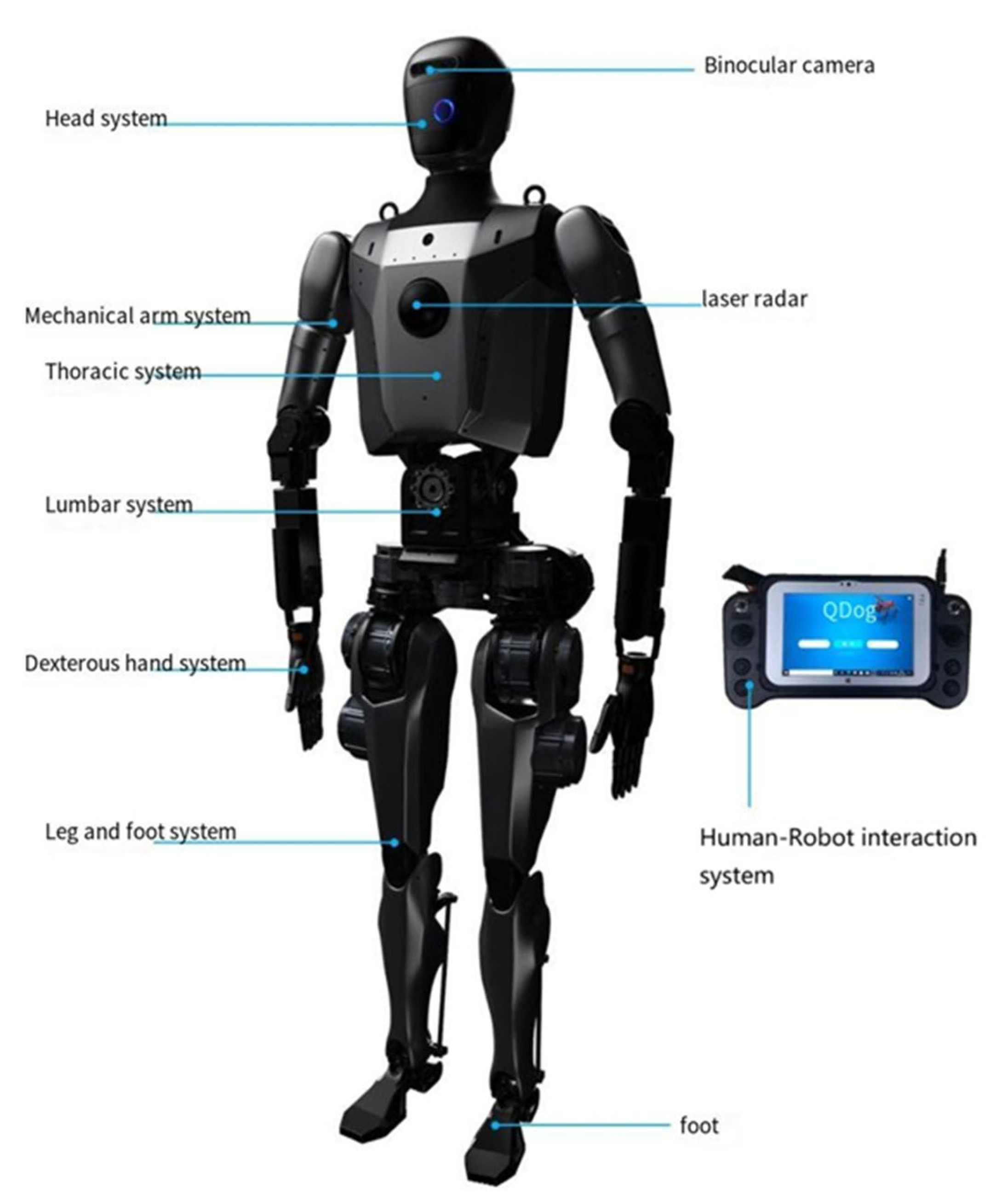

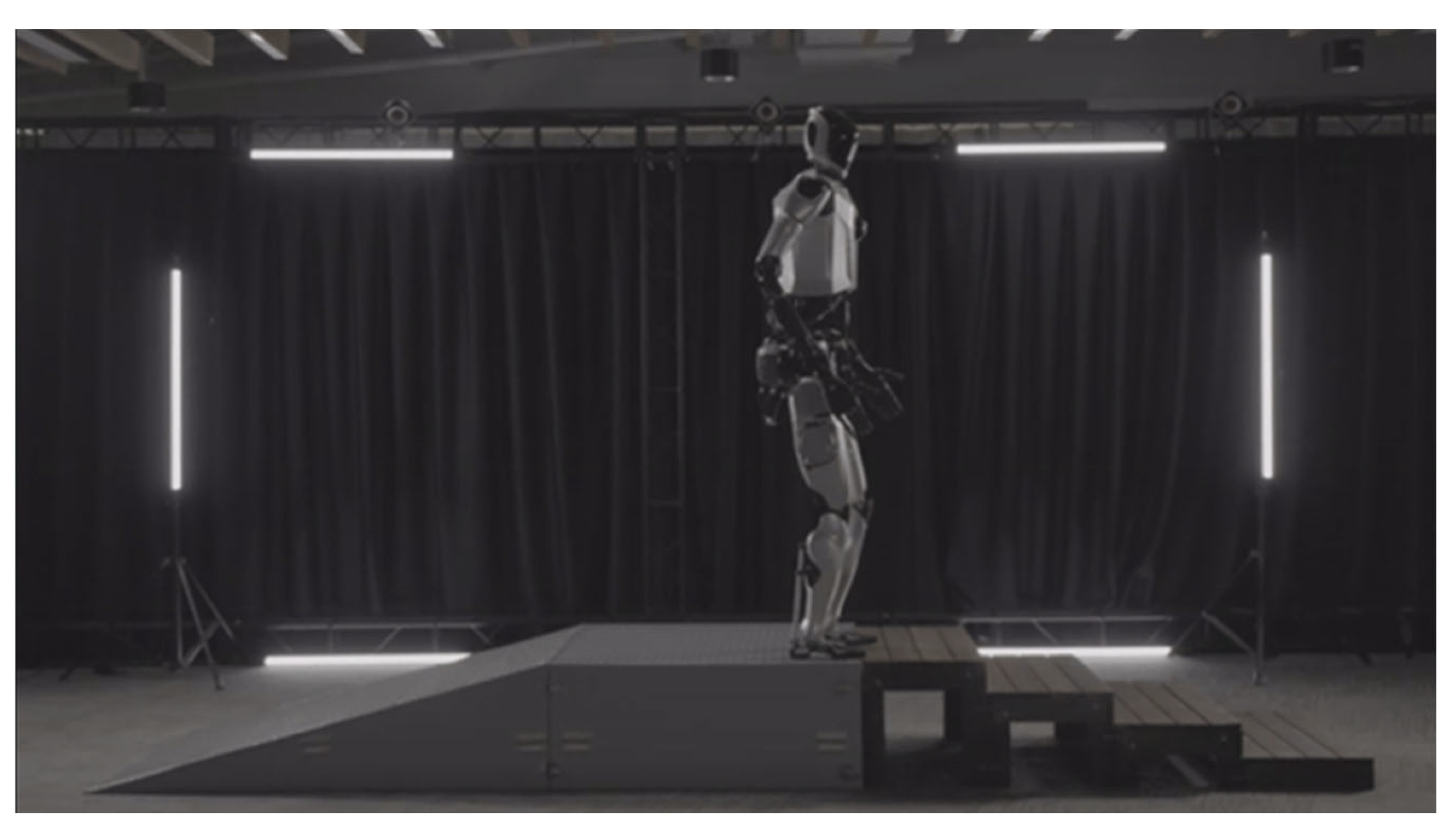

https://gymnasium.farama.org/environments/mujoco/ (accessed on 29 August 2025), used to assess convergence speed and final return. The second main category is multi-agent collaboration tasks on the AzureLoong platform built on NVIDIA Isaac Gym, used to examine coordination capabilities and explainability under complex dynamic interactions. The physical AzureLoong robot is shown in

Figure 3. All related documentation and information have been published on the open-source community at

https://www.openloong.org.cn/cn/documents (accessed on 29 August 2025). Evaluation metrics cover average return, policy entropy analysis, and explainability measures. To ensure fairness and reproducibility, all algorithms are executed under identical hyperparameter settings and random seeds, and are compared against baselines from various reinforcement learning algorithms.

We train baselines with Stable-Baselines3 for comparison. Stable-Baselines3, which is based on OpenAI Baselines, provides standardized, reliable implementations of widely used reinforcement learning algorithms (e.g., PPO, DDPG, SAC, TD3, A2C) and ensures reproducibility through unified interfaces and thorough documentation. The algorithms and parameter settings of Stable-Baselines3 can be found at https://github.com/DLR-RM/stable-baselines3 (accessed on 29 August 2025). PPO and SAC, two widely used algorithms in reinforcement learning control tasks, are adopted as baselines. After baseline training is complete, causal relationships are inferred using the GNN-NCM Causal World Model, and C2-Net is applied for correction. The performance of the baseline and the corrected model is compared under the same number of simulation steps. A t-test is then used to assess the differences between the baseline and our method. Comparing the reward values with established baselines and conducting statistical analysis demonstrates the advantages of the proposed algorithm.
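
For readers who wish to reproduce this kind of comparison, the sketch below computes the independent-samples t statistic and Cohen's d from two sets of rewards. It assumes the pooled-variance (Student) form of the test, which may differ from the settings used in GraphPad Prism; the sample values are illustrative and are not results from this study.

```python
import math
import statistics


def cohens_d(sample_a, sample_b):
    """Cohen's d using the pooled standard deviation of the two samples."""
    na, nb = len(sample_a), len(sample_b)
    va, vb = statistics.variance(sample_a), statistics.variance(sample_b)
    pooled_sd = math.sqrt(((na - 1) * va + (nb - 1) * vb) / (na + nb - 2))
    return (statistics.mean(sample_a) - statistics.mean(sample_b)) / pooled_sd


def independent_t_statistic(sample_a, sample_b):
    """Student's t statistic for two independent samples with pooled variance."""
    na, nb = len(sample_a), len(sample_b)
    return cohens_d(sample_a, sample_b) / math.sqrt(1.0 / na + 1.0 / nb)


# Illustrative rewards only.
corrected_rewards = [3.0, 4.0, 5.0]
baseline_rewards = [1.0, 2.0, 3.0]
d = cohens_d(corrected_rewards, baseline_rewards)
t = independent_t_statistic(corrected_rewards, baseline_rewards)
print(f"Cohen's d = {d:.3f}, t = {t:.3f}")
```

The sign of d follows the order of the arguments, so a positive value here means the first sample has the higher mean.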

3.1. LunarLander Environment: Causal World Model Construction

To demonstrate C2-Net’s effectiveness, it is first essential to ensure the accuracy of the causal weights produced by the Causal World Model. Accordingly, an experiment was designed to compare and validate the quality of the causal inference results.

Experience tuples sampled from the replay buffer (20,000 entries) were used to train and construct the Causal World Model via GNN-NCM in the OpenAI Gym LunarLander environment, with a causal weight threshold of 0.1. A Structural Causal Model (SCM) uncovers the causal graph linking states, actions, and rewards while computing the corresponding edge weights. The causal weights of all edges are then trained and updated using the GNN. In this work, the GNN has three layers, each with 128 dimensions and ReLU activation. The MLP scorer also uses ReLU activation; its inputs are the embedding vectors of the nodes, and its parameters are the hidden-layer weights and the bias terms.

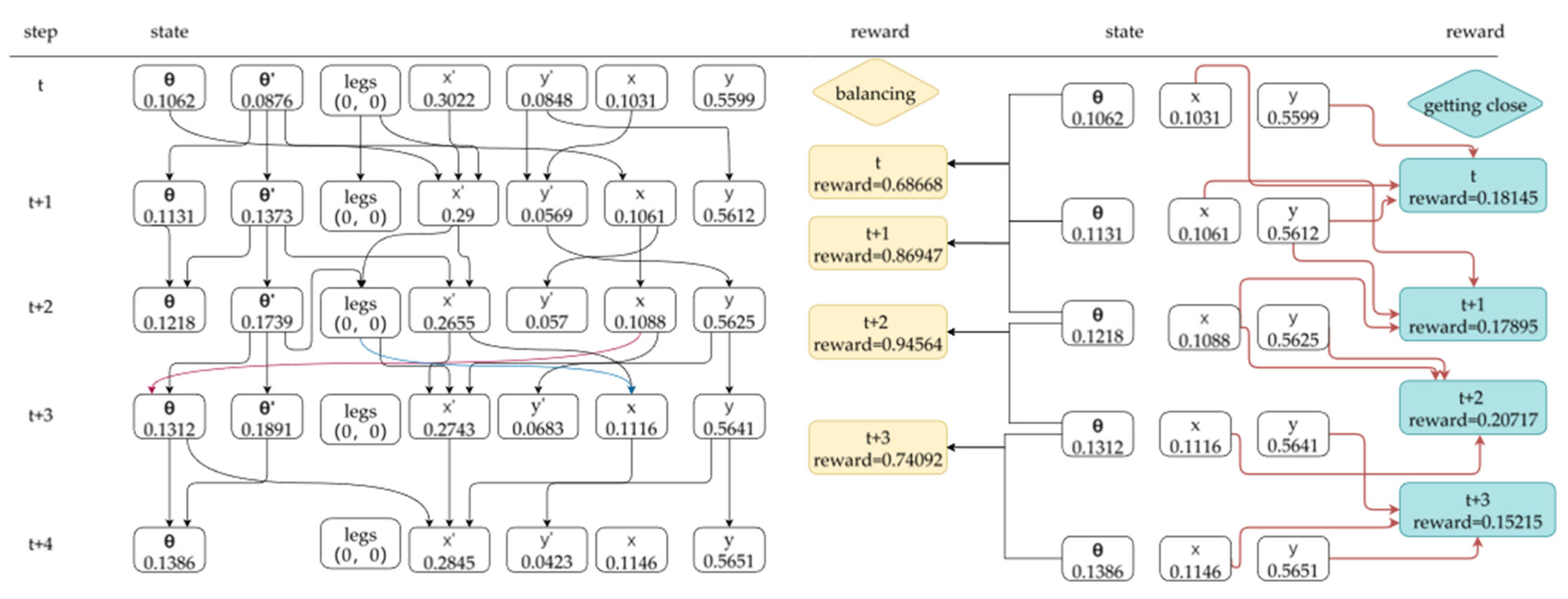

The causal chain graph inferred via GNN-NCM is shown in Figure 4, taking five time steps as an example. The state variables and legs represent: (1) Horizontal position, (2) Vertical position, (3) Horizontal velocity, (4) Vertical velocity, (5) Aircraft angle, (6) Angular velocity, and (7) Whether the two legs are in contact with the ground (1 for contact, 0 for suspended). The reward “balancing” reflects how well the agent keeps its balance, and “getting close” reflects how close the agent is to the destination. The graph illustrates the causal relationships among states, actions, and rewards in the LunarLander environment, that is, “what causes what.”

To assess both the interpretability of the inferred graph and its short-term prediction accuracy, a linear regression baseline was defined: only statistically significant causal edges were retained to form a subgraph, whose parent nodes then predicted the next-step state or reward. Prediction quality was evaluated using the root-mean-square error (RMSE) against ground truth, with comparisons made to two baselines:

Attention-Based Causal World Model [30]: uses an attention mechanism to assign causal weights;

MLP-Scored World Model: employs only a multilayer perceptron to score all candidate causal edges.

As shown in Table 1, the GNN-NCM-based Causal World Model achieves lower RMSE and provides higher-quality explanations than both baselines, demonstrating its superiority in causal inference accuracy and interpretability. Based on this inferred causal chain structure, the subsequent experiments in this study apply causal correction to other environments.
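
The evaluation protocol, in which the parent nodes of a retained subgraph predict a next-step variable and the prediction is scored by RMSE, can be illustrated with a single-parent linear regression. This is a minimal sketch with hypothetical names and toy data; the regression in this study may use several parents per target.

```python
import math


def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    slope = (sum((a - mx) * (b - my) for a, b in zip(x, y))
             / sum((a - mx) ** 2 for a in x))
    return slope, my - slope * mx


def rmse(predictions, targets):
    """Root-mean-square error between predictions and ground truth."""
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets))


# Toy data: one parent variable and the next-step value it is assumed to cause.
parent = [0.0, 1.0, 2.0, 3.0]
next_value = [1.0, 3.0, 5.0, 7.0]
slope, intercept = fit_line(parent, next_value)
predicted = [slope * p + intercept for p in parent]
error = rmse(predicted, next_value)
```

A graph that retains the true parents of a variable should yield a low RMSE, whereas missing or spurious parents increase it, which is why RMSE serves as a proxy for graph quality here.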

3.2. Task: Gym Hopper-v4

In the OpenAI Gym Hopper-v4 environment, the agent and physics simulator are initialized. The observation space comprises an 11-dimensional state vector, and the action space consists of a three-dimensional joint-torque vector. The Causal World Model is defined by the following:

Inputs: the current state and the previous action.

Outputs: the causal components of the next state and the immediate reward based on the forward distance.

A Structural Causal Model (SCM) is then employed to uncover the direct causal relationships between state variables and actions, and the resulting causal graph is used to train the GNN-NCM. All SCM and GNN-NCM training parameters match those specified in Section 3.1. Subsequently, a baseline policy is trained using Stable-Baselines3 with the parameters shown in Table 2. For each scenario, the causal inference module generates explanations that are then subjected to blind expert evaluation. Running reward curves and final returns are recorded, with their means and standard deviations reported. Two off-the-shelf algorithms from Stable-Baselines3, PPO (Proximal Policy Optimization) and SAC (Soft Actor-Critic), serve as baselines. Causal analysis reveals that the “forward” component dominates sensitivity (all other components have causal weights ≤ 0.1); therefore, comparative evaluation focuses on this metric. All charts and statistical analyses are produced in GraphPad Prism (version 9.5.1) with a 95% confidence interval.

Figure 5 and Figure 6 compare the rewards of the baseline with those obtained after correction using C2-Net; more data are displayed in Table 3 and Table 4. The experimental results demonstrate that the C2-Net-corrected policy outperforms common baselines on several key metrics. Compared with the PPO baseline, C2-Net achieves a 14.6% increase in average cumulative return; versus the SAC baseline, the gain is 20.2%.

Within a 300-step window, PPO and SAC baselines occasionally collapse. In contrast, the C2-Net-corrected agent remains upright and continues moving forward, indicating effective prevention of unsafe actions. Despite this, C2-Net’s state-prediction RMSE is slightly higher than the baselines—2% above PPO and 30% above SAC—reflecting the added variability from the causal reasoning process. Nonetheless, the overall improvements in performance and safety clearly validate the effectiveness and practical value of causal correction.

We conducted independent t-tests comparing our algorithm against the baseline data. At a 95% confidence level, the p-values for both algorithms were < 0.0001, indicating a significant difference between the C2-Net–based correction and the baseline. The differences between the means for the SAC and PPO algorithms, relative to their baselines, were 0.5663 ± 0.07400 and 0.4264 ± 0.07983, with corresponding Cohen’s d values of −0.6245 and 0.4368, respectively, indicating that the C2-Net correction has a medium-to-large effect.

3.3. Task: Gym Walker2d-v4

In the OpenAI Gym Walker2d-v4 environment, both the agent and the physics simulator are initialized. The observation space comprises a 17-dimensional state vector—including torso position, joint angles, and velocities—while the action space is a six-dimensional vector of joint torques. To construct the Causal World Model, the inputs and outputs are defined by the following:

Inputs: the current state and the previous action.

Outputs: the causal components of the next state and the immediate reward based on the forward distance.

A Structural Causal Model (SCM) is then employed to uncover the direct causal relationships between state variables and actions, and the resulting causal graph is used to train the GNN-based Neural Causal Model (GNN-NCM). All SCM and GNN-NCM training parameters match those specified in Section 3.1. Subsequently, a baseline policy is trained using Stable-Baselines3 with the parameters shown in Table 5.

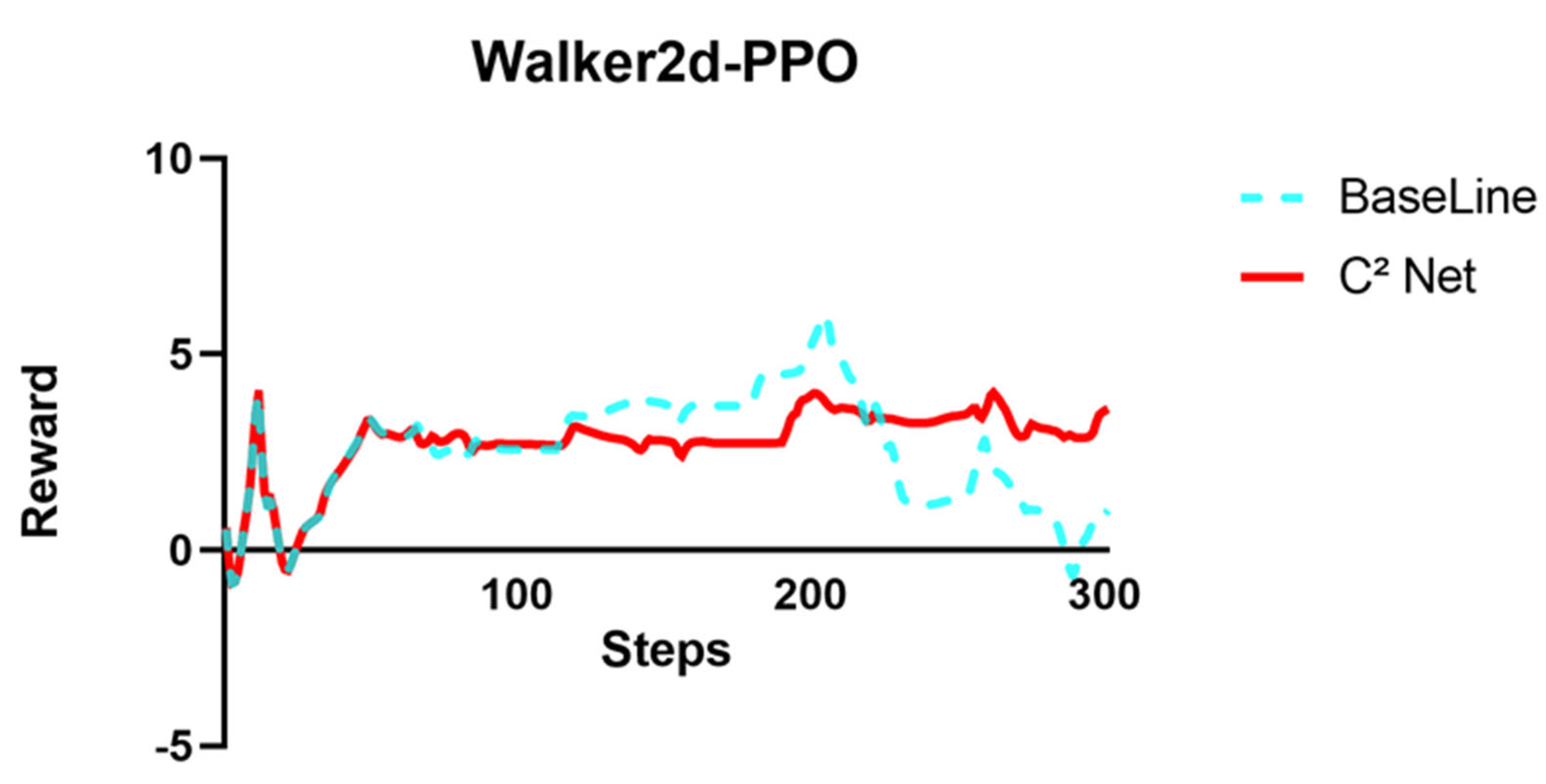

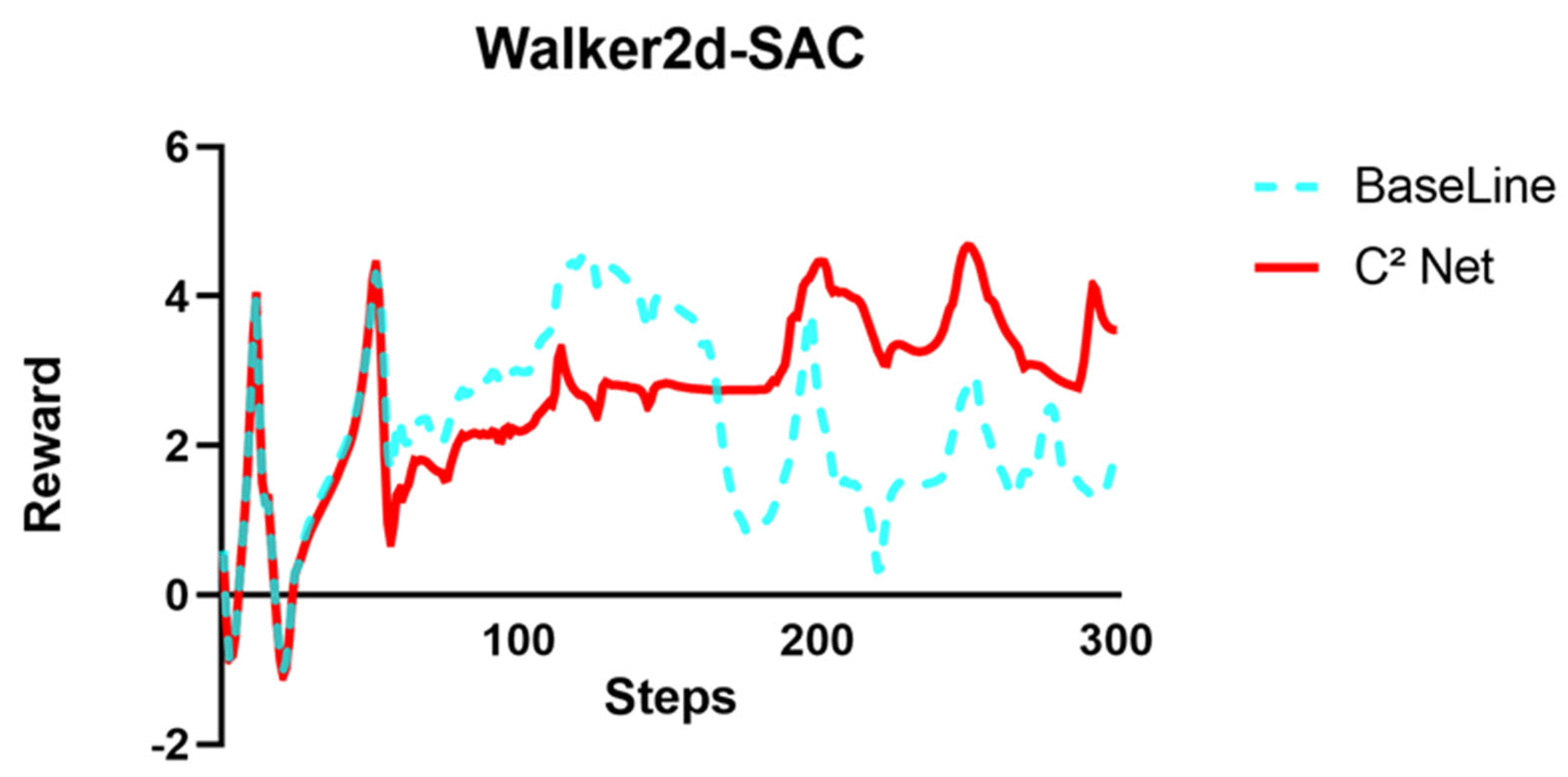

Figure 7 and Figure 8 compare the rewards of the baseline with those obtained after correction by C2-Net; more data are displayed in Table 6 and Table 7.

Interesting observations emerge during the under-converged phase of the Walker2d task. The unmodified policy routinely collapsed within approximately 300 samples due to executing unsafe actions. After applying C2-Net correction, the agent’s trajectories avoid these dangerous motions, maintain upright locomotion, and achieve higher immediate rewards. Quantitatively, the corrected policy’s reward increased by 18% compared to the baseline. This result validates both the accuracy of the causal model in inferring action–state dependencies and the effectiveness of the causally weighted compensation strategy in ensuring action safety and improving overall performance.

We conducted independent t-tests comparing our algorithm against the baseline data. At a 95% confidence level, the p-values for both algorithms were <0.0001, indicating a significant difference between the C2-Net–based correction and the baseline. The differences between the means for the SAC and PPO algorithms, relative to their baselines, were 0.4186 ± 0.09550 and 0.9144 ± 0.09541, with corresponding Cohen’s d values of 0.6068 and 0.3585, respectively, indicating that the C2-Net correction has a medium-to-large effect.

3.4. Task: Gym Humanoid-v4

In the OpenAI Gym Humanoid-v4 environment, C2-Net’s capabilities are assessed on a high-dimensional, high-degree-of-freedom control task. The observation space consists of a 376-dimensional vector (encompassing joint positions, velocities, angles, and related physical quantities), while the action space comprises a 17-dimensional joint-torque vector. The Causal World Model is constructed exactly as described in Section 3.1, using identical SCM and GNN-NCM training parameters. The environment is initialized through Gym, and the baseline policy is trained with Stable-Baselines3 using the hyperparameter settings shown in Table 8.

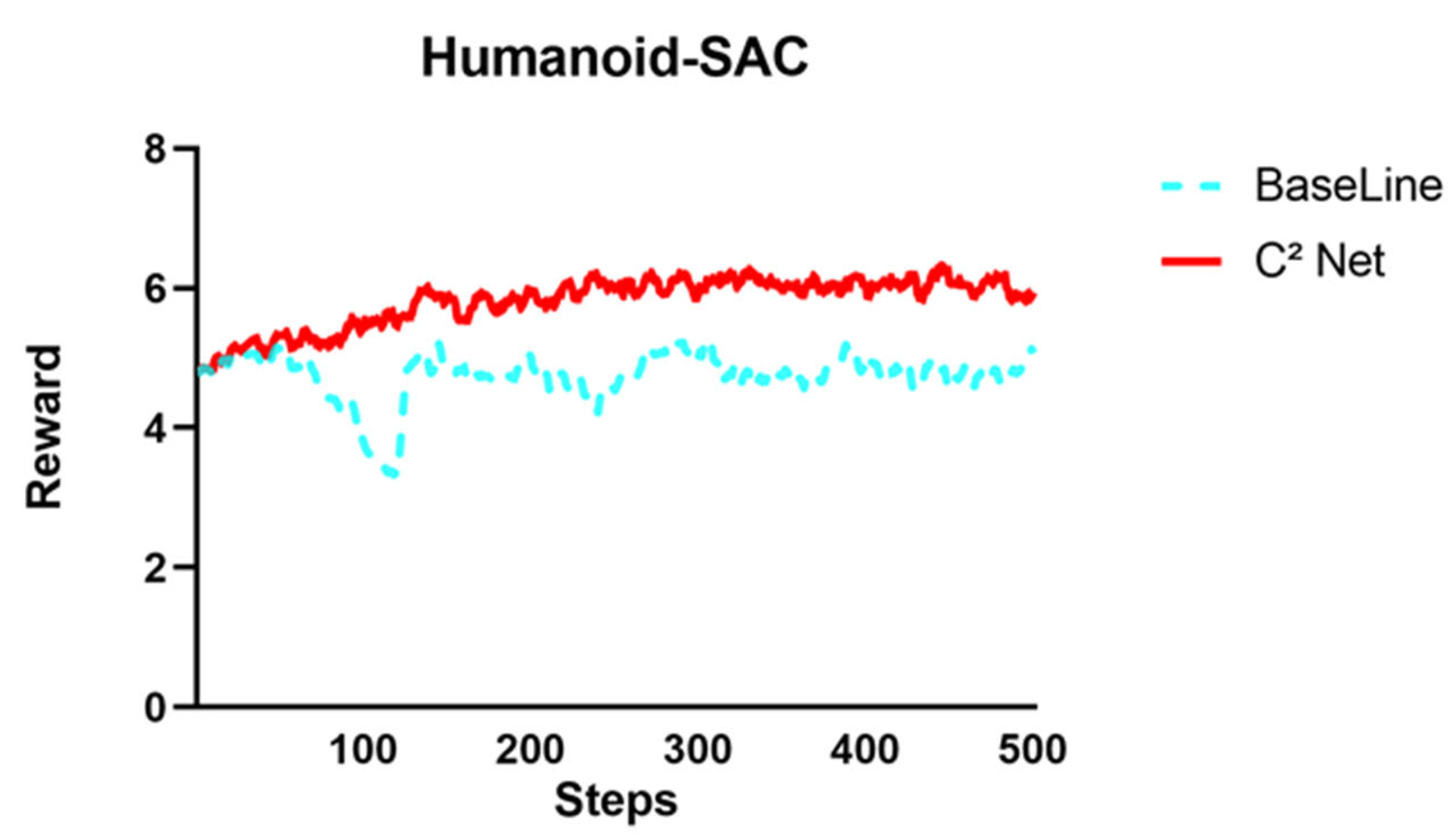

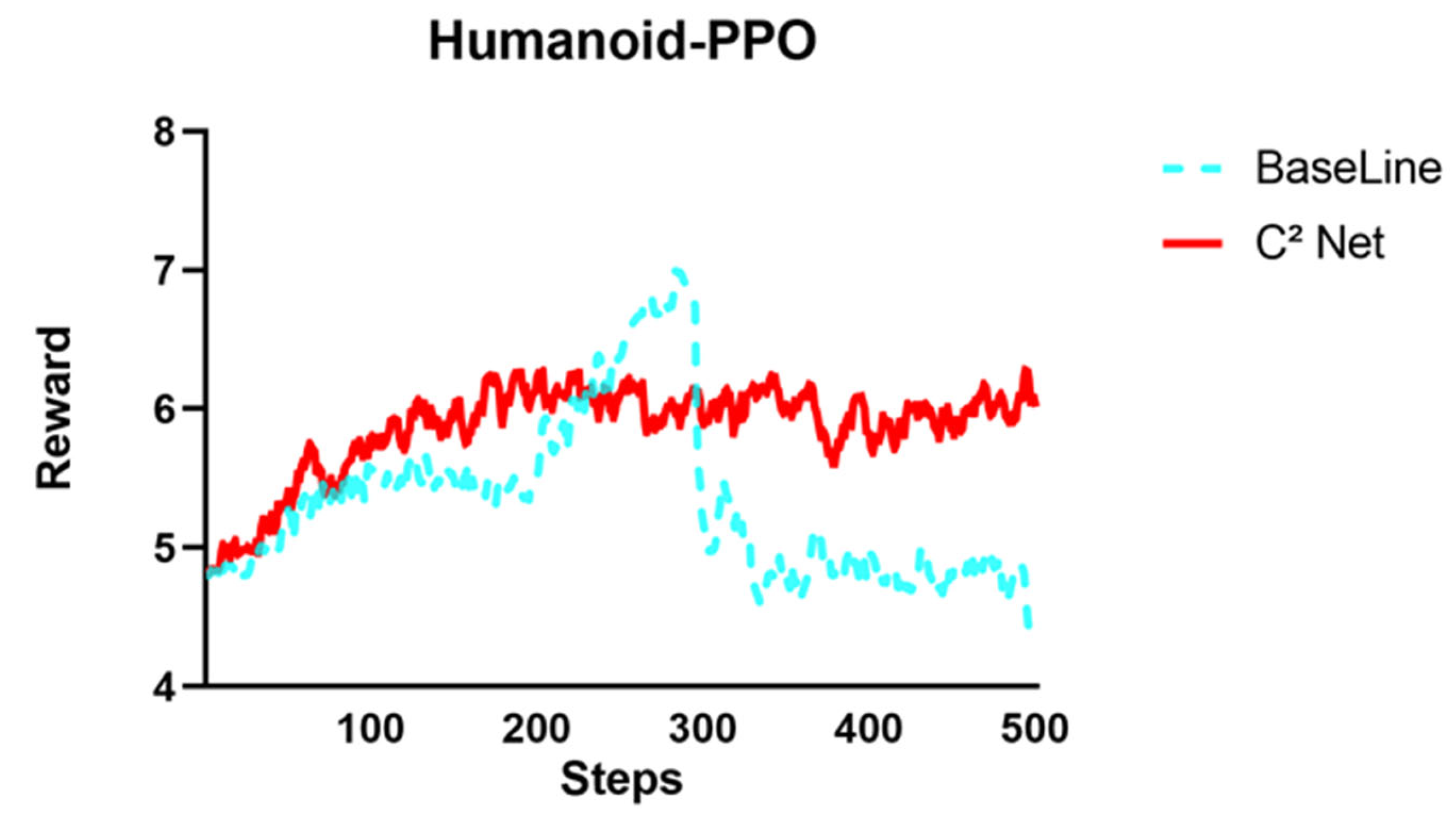

Figure 9 and Figure 10 compare the rewards of the baseline with those obtained after correction using C2-Net; more data are displayed in Table 9 and Table 10.

The results in the Humanoid-v4 environment demonstrate the effectiveness of C2-Net. Introducing C2-Net increased the average return by 9.92%. Moreover, over 500 sampling steps, the C2-Net-corrected agent never fell, confirming that the causal corrections effectively suppress dangerous actions. The variance of the corrected policy’s returns decreased by approximately 54% in the policy trained by PPO, indicating that C2-Net not only enhances performance but also significantly improves the stability and reliability of the control strategy. Furthermore, these outcomes show that C2-Net adapts well to high-dimensional continuous control scenarios—such as Humanoid with 376-dimensional observations and 17-dimensional actions—suggesting potential for scalability and generalization.

We conducted independent t-tests comparing our algorithm against the baseline data. At a 95% confidence level, the p-values for both algorithms were <0.0001, indicating a significant difference between the C2-Net–based correction and the baseline. The differences between the means for the SAC and PPO algorithms, relative to their baselines, were 1.062 ± 0.02238 and 0.5286 ± 0.03083, with corresponding Cohen’s d values of 3.0040 and 1.0857, respectively, indicating that the C2-Net correction has a large effect.

3.5. AzureLoong Simulation Environment

To further validate C

2-Net’s generality and effectiveness in complex agent scenarios, conducted a comparative experiment on the AzureLoong multi-robot collaboration environment built on NVIDIA Isaac Gym. The AzureLoong platform simulates the Chinese open-source “Qinglong” humanoid robot, which features a human-like skeletal structure with up to 43 active degrees of freedom, enabling bipedal walking, balance control, and fine manipulation. The robot stands approximately 185 cm tall, weighs around 80 kg, and delivers peak joint torques of up to 400 N·m, giving it high agility and rapid obstacle-avoidance capabilities. Developed by the Humanoid Robot Innovation Center in Shanghai, the Qinglong design—including both hardware and software parameters—has been open-sourced to the OpenLoong community.

Figure 11 shows the Qinglong robot performing DRL tasks on real objects.

In simulation, AzureLoong leverages the NVIDIA Isaac Gym/Isaac Sim platform to achieve large-scale parallel physics simulation and training. By utilizing Isaac Lab’s highly efficient GPU-accelerated physics engine, the environment supports hundreds to thousands of parallel instances, delivering high throughput without sacrificing simulation fidelity. This platform allows us to model multi-robot collaboration tasks—such as object transport, dynamic obstacle avoidance, and formation keeping—and to focus the analysis on two characteristic behaviors of the Qinglong robot: “in-place stepping” and “forward locomotion”.

First, train the multi-agent system with the PPO algorithm to achieve the baseline strategy. The training code can be obtained from the OpenLoong documentation at

https://github.com/loongOpen/Openloong-gymloong (accessed on 29 August 2025). The parameters are shown in

Table 11. In the AzureLoong environment—where the state space spans 173 dimensions and the reward derives from multiple state variables—causal analysis and correction were focused on two key metrics: body energy consumption and forward distance. With 20,000 training samples available, additional data were collected for both environment states and agent actions to construct the Causal World Model and train C

2-Net. The comparison data and rewards are displayed in

Table 12 and

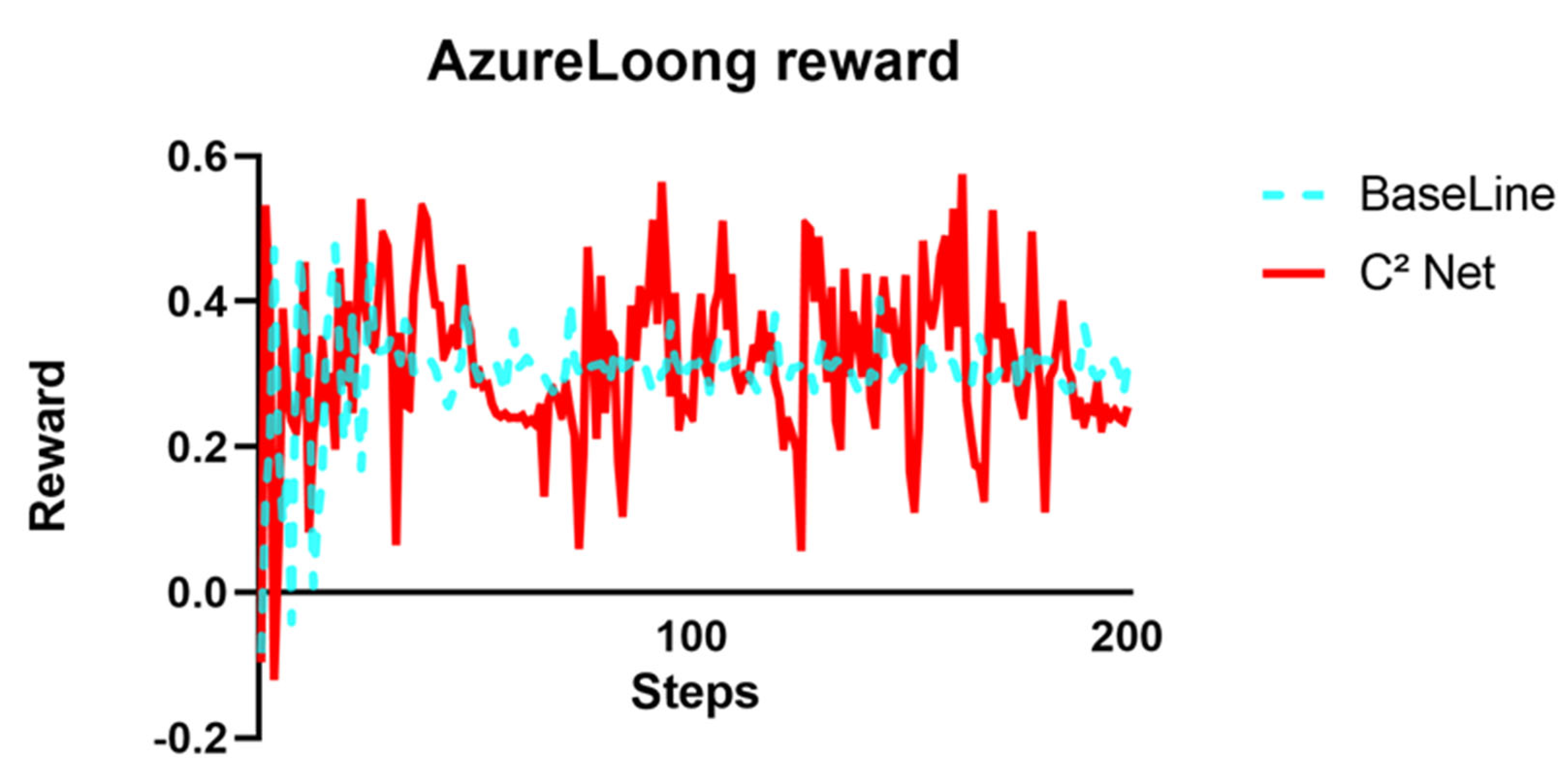

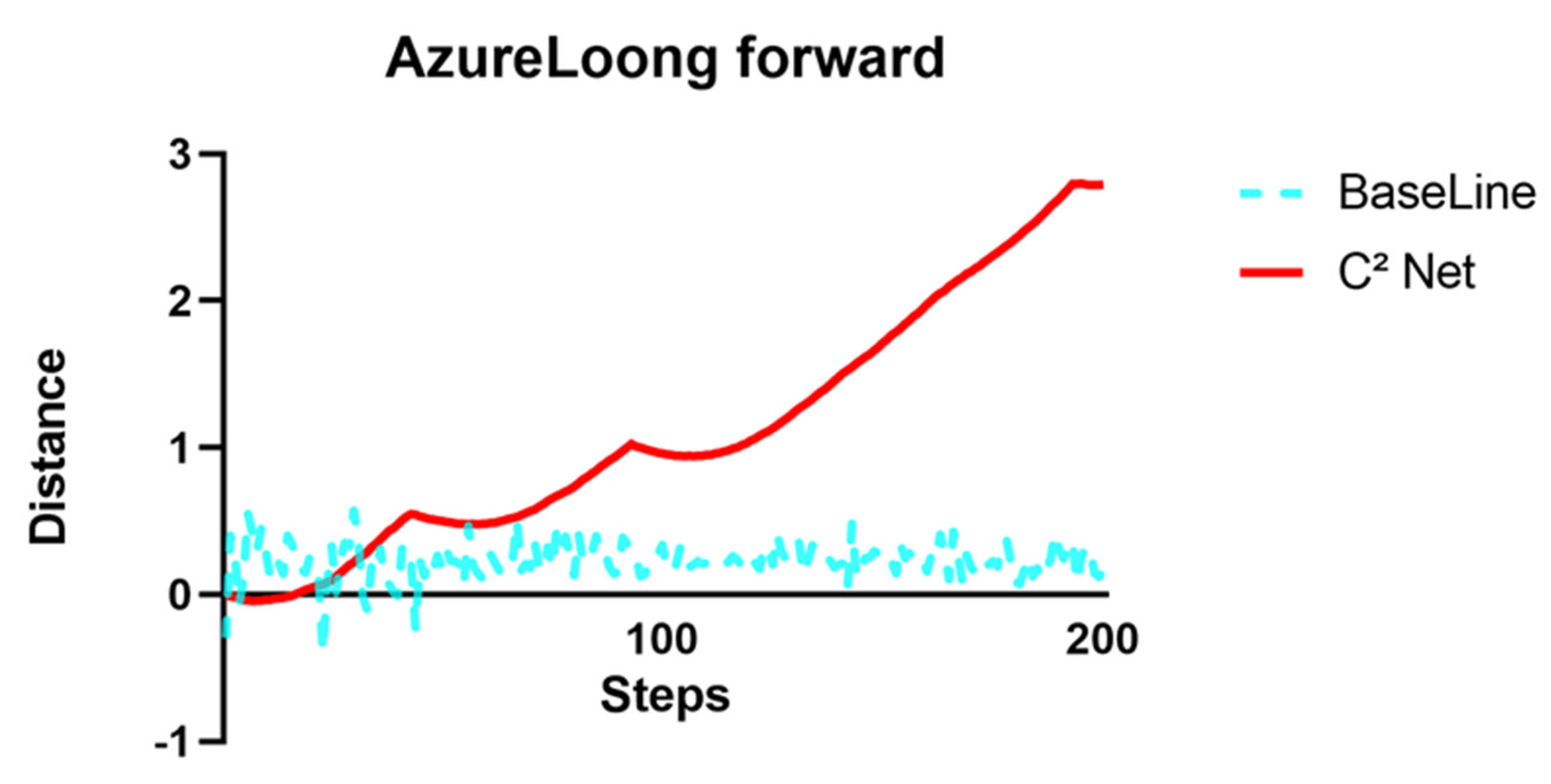

Figure 12; the rewards are given by the original AzureLoong environment.

Time-series plots of energy consumption and forward distance reveal that causal correction increased average reward by 3.6% compared to the unmodified PPO policy, albeit with a notable rise in reward variance—a reflection of the high-dimensional complexity that amplifies fluctuations in state estimation and causal inference. An examination of forward-distance curves and rendered trajectories shows that the baseline policy remains in “in-place stepping” to maintain balance, whereas the C2-Net-corrected policy, guided by the causal weight on forward distance, achieves continuous forward locomotion. These findings confirm that the causal inference and correction mechanism can substantially improve behavior even under sparse training conditions.

We conducted an independent-samples t-test comparing the C2-Net-corrected results with the baseline data. At a 95% confidence level, the p-value was < 0.0001, indicating a significant difference between the C2-Net-based correction and the baseline. The difference between means, relative to the baseline, was 0.01093 ± 0.009059, with a corresponding Cohen’s d of 0.1210, suggesting that the C2-Net correction has only a small effect by Cohen’s convention.

Figure 13 shows the displacement generated by the robot performing “forward locomotion” in the environment. The uncorrected AzureLoong robot, after an initial external perturbation, could use balance control to right itself and maintain a relatively stable reward; however, its behavior remained largely “in-place stepping” and yielded almost zero forward progress. After applying C2-Net correction, the model dynamically identified and amplified the action components positively correlated with forward distance, actively driving the robot to move ahead.
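The idea of amplifying action components that positively influence forward distance can be illustrated with a small sketch. This is not the authors' C2-Net update rule: the function `correct_action`, the multiplicative form, the `gain` parameter, and the action bounds are all hypothetical choices made only to show how per-component causal weights could scale a policy's action.

```python
def correct_action(action, causal_weights, gain=0.5, low=-1.0, high=1.0):
    """Scale each action component by its (hypothetical) causal weight.

    Components with a positive weight on the target variable are amplified;
    components with zero or negative weight are left unchanged. The result
    is clipped to the assumed action bounds [low, high].
    """
    corrected = []
    for a, w in zip(action, causal_weights):
        scale = 1.0 + gain * max(w, 0.0)  # only positive weights amplify
        corrected.append(min(high, max(low, a * scale)))
    return corrected


# Hypothetical 3-dimensional action and causal weights on forward distance.
action = [0.4, -0.2, 0.9]
weights = [0.5, -0.3, 1.0]

corrected = correct_action(action, weights)
print(corrected)
```

In this sketch the first component is amplified, the second is untouched because its weight is negative, and the third is amplified and then clipped at the upper bound.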

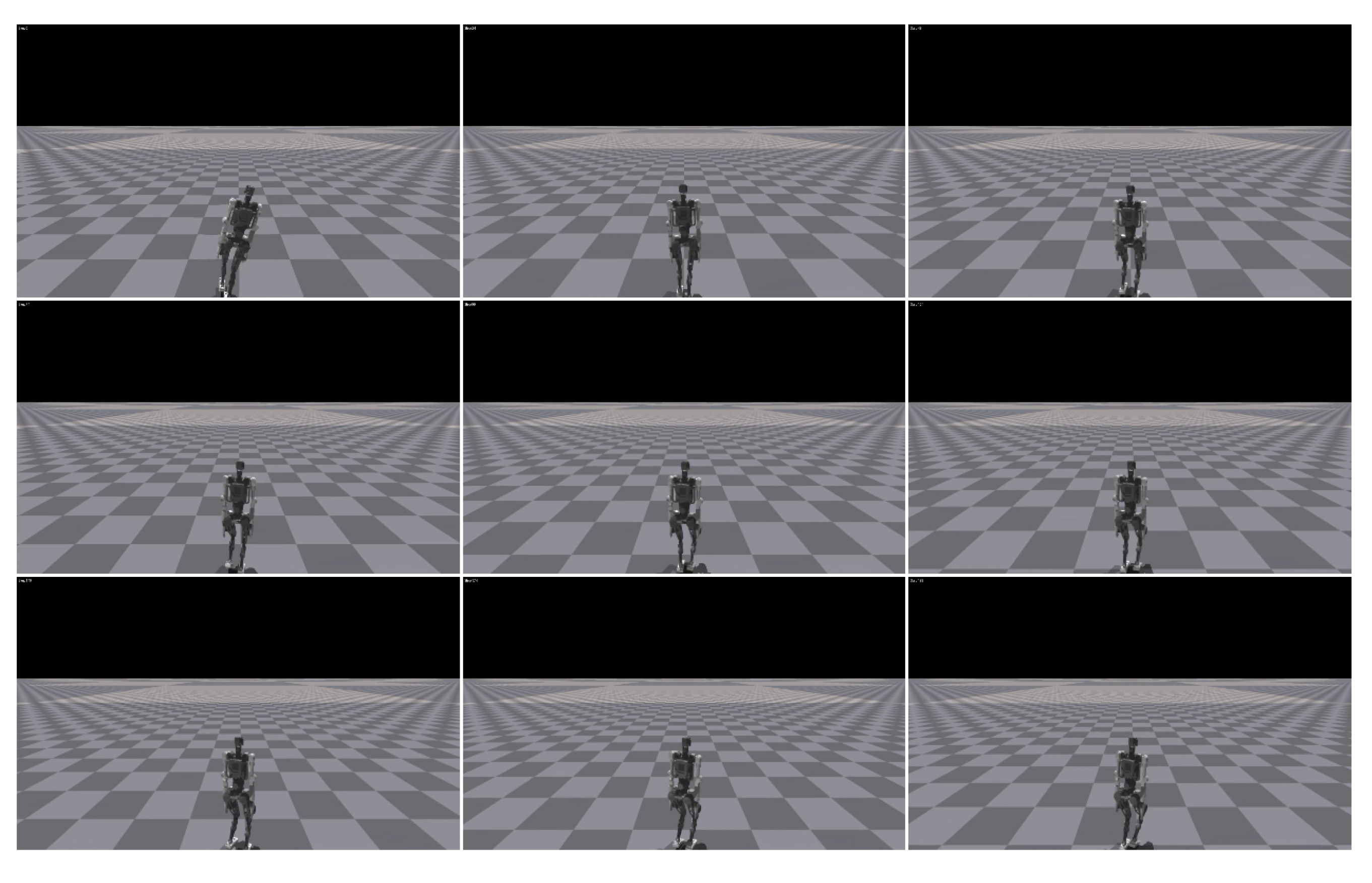

Figure 14 and Figure 15 show the key video frames before and after applying C2-Net correction in the simulation. The corrected robot exhibited a more fluid gait and clear forward propulsion; the reward curve reveals higher instantaneous peaks, indicating a more aggressive pursuit of task goals. Although the corrected policy’s reward fluctuations increased slightly, the results show that, under the learned causal model, C2-Net can guide the robot to increase a given reward component, such as “forward” in the test environment, thereby improving policy exploration.

4. Discussion

Figure 16 summarizes the results across all environments. Here, reward_raw denotes the mean return before correction, and reward_crr represents the mean return after applying C2-Net. Overall, C2-Net consistently improves the agent’s average return across all tasks. For example, in the Hopper environment with the SAC and PPO algorithms, the mean return increased by 14.6% and 20.2%, respectively, with Cohen’s d values indicating a medium-to-large effect. Similar trends are observed in Walker2d (SAC: +18.5%; PPO: +44.9%), Humanoid (SAC: +22.3%; PPO: +9.92%), and AzureLoong (+3.6%). These effect sizes range from small to large according to Cohen’s convention, underscoring the contribution of causal correction across diverse benchmarks.
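The percentage gains quoted above are relative changes in mean return. A minimal sketch of that calculation follows, assuming the improvement is measured relative to the magnitude of the uncorrected mean return; the function name and the numeric values are hypothetical and chosen only to reproduce a 14.6% gain.

```python
def relative_improvement(reward_raw, reward_crr):
    """Percentage change of the corrected mean return over the raw one.

    Dividing by abs(reward_raw) keeps the sign meaningful when the
    baseline return is negative.
    """
    if reward_raw == 0:
        raise ValueError("relative improvement is undefined for a zero baseline")
    return 100.0 * (reward_crr - reward_raw) / abs(reward_raw)


# Hypothetical mean returns, for illustration only.
gain_pct = relative_improvement(reward_raw=1000.0, reward_crr=1146.0)
print(f"{gain_pct:.1f}%")
```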

However, the T-shaped error bars indicate that the standard deviation also increased in certain scenarios. For instance, in the Hopper-SAC task, the variance rose from 0.04503 to 0.05872, and in the AzureLoong environment, it increased from 0.004507 to 0.007859. This suggests that although causal correction improves the mean performance, it also introduces greater policy variability. Such an increase in variance is expected, as C2-Net explicitly models the causal dependencies among states, actions, and rewards, providing a principled basis for the agent’s action selection. By guiding the correction process using reward information, the agent can explore multiple near-optimal behaviors under uncertainty or perturbations, thereby enhancing system robustness and adaptability. At the same time, this increased variance highlights the inherent trade-off between exploratory behavior and policy stability.

Conventional reinforcement learning methods, such as PPO and SAC, primarily regulate policy variance through reward shaping or entropy regularization. In contrast, C2-Net provides an alternative and potentially more principled approach by explicitly modeling causal dependencies among states, actions, and rewards. While this causal-driven exploration helps to interpret both the improvement in average performance and the observed variance, it also highlights a potential trade-off between adaptability and stability. Specifically, greater variance may yield diverse behavioral trajectories beneficial for long-term exploration, yet it can also increase the difficulty of convergence and control in safety-critical environments.

5. Conclusions

This paper presents an interpretable reinforcement learning framework based on the Causal Correction and Compensation Network (C2-Net) to address the ‘black box’ nature of deep RL models. The framework systematically integrates causal inference with GNNs to enhance both interpretability and policy robustness. A Structural Causal Model is employed to uncover intrinsic causal relationships among states, actions, and rewards, resulting in a Causal World Model that provides a solid theoretical foundation for subsequent inference. Building on this model, a GNN-NCM replaces traditional attention mechanisms. Through multilayer message passing, the causal influence weights of actions on key variables are computed. These weights drive the C2-Net module, which dynamically corrects and compensates the original policy’s actions, preserving performance while making the decision-making process fully interpretable.

The framework’s effectiveness and generality are validated through extensive experiments across various tasks. In single-agent continuous control benchmarks (Hopper, Walker2d, Humanoid) on OpenAI Gym, the average returns after applying C2-Net correction improved over the standard PPO and SAC baselines. In the Humanoid and Walker2d tasks, the correction further prevented falling, thereby enhancing the stability of the robot’s policy. These results demonstrate the framework’s potential in complex continuous control. On the AzureLoong multi-agent platform built on Isaac Gym, C2-Net markedly reduces “in-place stepping” behavior, increases team average return by approximately 3.6%, and drives the robot forward under the guidance of the corrective policy to obtain rewards. Causal correction of high-dimensional metrics such as body energy consumption and forward distance further demonstrates adaptability to complex systems. Although state-prediction RMSE is slightly increased by the causal compensation mechanism, the reduction in return variance observed in several tasks underscores the practical benefits of causal inference in improving performance, safety, and stability.

Future work may integrate self-supervised causal feature learning and optimize the GNN architecture to further improve inference accuracy and computational efficiency. At the same time, the framework can be extended to real-world domains—such as autonomous driving and smart grids—to explore cross-domain transfer and generalization capabilities. Interactive visualization interfaces for human–machine collaborative debugging would allow practitioners to fine-tune policies in real time using causal explanations. This approach can advance the adoption of explainable RL in both industrial and research settings. This work offers new conceptual and practical pathways for the safe and reliable deployment of high-risk continuous control and multi-agent systems, and provides potential ideas for future application exploration in real robots and industrial scenarios.