1. Introduction

The exponential growth of digital data has created a challenge for users to efficiently browse and filter valuable content. News publications, in particular, face the need to reach a wide audience amidst the dispersion of formats. Machine learning algorithms offer potential applications in text classification in various fields such as marketing, research, and medicine.

The development of a solution to classify news articles can aid in understanding current media issues and help companies adapt their marketing strategies. In addition, it can help organizations engaged in consumer behavior research, thus facilitating a more comprehensive understanding of market preferences. The automatic classification of texts can streamline article management in editorial offices and publishing houses, as well as facilitate the cataloging and categorization of publications by librarians. In the academic field, such a solution can analyze and classify scholarly articles, thereby facilitating the identification of the latest developments. In the field of medicine, the application can assist in the classification of healthcare-related publications, the identification of trends, and the advancement of the discipline.

The principal aim of the study was to perform a comprehensive review and comparative analysis of natural language processing techniques for the classification of news articles, with a particular emphasis on conventional statistical machine learning methods. The research question is whether a solution developed and tested in this way can ultimately be used in practice. By exploring the strengths and limitations of various machine learning techniques in this context, this study aims to provide a structured, evidence-based perspective on their viability for real-world use in content classification tasks across different industries.

2. Objectives

The objective of this study was to identify the optimal solution for large-scale text classification, with a particular emphasis on accuracy, performance, and the capabilities of Java-based libraries. This goal was pursued through a comprehensive comparative analysis of various natural language processing techniques, specifically evaluating their efficiency in the context of news article classification. In addition to comparing the results reported by other researchers, the study included the development of novel extensions to these techniques and a critical evaluation of their effectiveness.

It is noteworthy that languages running on the Java virtual machine are less popular in the domain of machine learning than Python (version 3.10.12). The study assumed that Python would be used exclusively for tasks that were not feasible in Java due to the unavailability of suitable libraries. This decision was made with the intention of evaluating the suitability of Java in the context of machine learning tasks and its potential capacity to effectively replace Python in these tasks. An additional motivating factor for this approach was the prospect of utilizing the resulting models in web applications, among which Java continues to exert a dominant influence, and its role is also anticipated to be significant in 2024 [1]. Despite the prevalence of Python in contemporary NLP research, owing to its extensive libraries and vibrant community support, Java-based frameworks have been shown to be particularly advantageous for integration into existing enterprise environments, server-side systems, or GUI applications. These domains demand long-term stability, portability, and memory efficiency.

The effectiveness of traditional algorithms based on mathematical statistics was examined, as well as the latest trends in deep machine learning. The performance of trained models in predicting specific topics was evaluated, and various methods for efficient feature extraction and the analysis of word vector representation were explored. The comparison also considered aspects such as hardware resource management, implementation simplicity, learning process duration, and result model detection quality.

The configuration process, data validation, and various phases of newspaper article analysis were discussed. The evaluation contrasted conventional models with transformer neural networks, also examining the preprocessing of the dataset, implementation of classification algorithms, and cross-validation stage.

To classify newspaper articles, the Apache OpenNLP, Stanford CoreNLP, and Waikato Weka libraries were employed. The experiments included an investigation of attribute selection, feature filtering, vector representation techniques, and the challenge of imbalanced datasets through data augmentation.

The study also explored advanced word selection techniques. Computational resource consumption, training process time, and quality metrics were evaluated to identify the advantages and disadvantages of each classifier. Furthermore, the models’ performance for specific topic categories was ascertained through a final evaluation on a dedicated test set.

3. Existing Solutions

A review of existing solutions for classifying news article texts was conducted to establish which systems are currently available. Two datasets from the Kaggle platform were analyzed: the News Category Dataset [2] and All the News 2.0 [3].

Studies using the first dataset, which provided only the article URL and not the full content, focused on classifying the topic based on page headings or on performing sentiment analysis. In contrast, the second dataset, which included the content of press materials, was used in studies that focused on content-based inference, specifically detecting the author rather than the topic of the article [4].

A further comparative analysis was identified, which evaluated the efficacy of a range of natural language processing techniques for article classification, utilizing a variety of Python libraries. The algorithms tested included naive Bayes, logistic regression, k-nearest neighbor, random forest, and support vector machines, in addition to a basic neural network for comparative purposes. The evaluation demonstrated that the naive Bayes classifier outperformed all the other classifiers, with an overall accuracy of 92% [5].

One particularly noteworthy project was dedicated to inferring information from BBC publications. The project was implemented in Java using the naive Bayes method and focused on binary classification of sport and business articles [6]. Another publication showed the results of the classification of text documents using generalized sequential patterns. However, this research was not concerned with the classification of newspaper articles but, rather, with the content of researchers’ and universities’ home pages [7]. Moreover, another study evaluated the performance of the support vector machine (SVM) method alone for news content analysis, concluding that SVM algorithms can outperform their conventional binary classifier counterparts by as much as 10% [8]. In addition, the comparative studies that were identified tended to focus on quality metrics of the models but did not consider other factors, such as performance.

A review of existing solutions for classifying news article texts revealed a multitude of approaches and techniques, with some specifically tailored to media publications and others focused on different types of content. While different algorithms were evaluated, most analyses were limited to Python libraries. Notably, one project implemented in Java was identified, but it did not utilize commonly used natural language processing libraries. Furthermore, the comparative studies tended to overlook factors such as computational resource consumption.

4. Materials and Methods

4.1. Dataset

The dataset employed for natural language processing was obtained from the Kaggle platform. The dataset was released under an Attribution 4.0 International (CC BY 4.0) license, which permitted the utilization, dissemination, and modification of the data, provided that appropriate attribution was maintained. It constituted one of the most extensive collections of news articles at the time, encompassing approximately 210,000 news metadata items sourced from the US liberal news platform The Huffington Post, collated between 2012 and 2022 [9].

The ZIP archive contained a single JSON file with metadata records of newspaper articles. Each line of the dataset file represented a single JSON document for an article, which included the following attributes:

Category—the category in which the article was published.

Title—the headline of the news article.

Authors—a list of authors who contributed to the article.

Link—a reference to the original news article.

Short_description—a summary of the content of the article.

Date—the date the article was published.
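The one-document-per-line layout described above can be read with the standard library alone. The following is a minimal sketch rather than the script used in the study; the file name in the comment and the lowercase key names in the usage example are assumptions that should be checked against the actual file.

```python
import json


def read_articles(lines):
    """Yield one metadata record (a dict) per non-empty JSON line."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line)


# Hypothetical usage with an assumed file name:
# with open("news_category_dataset.json", encoding="utf-8") as handle:
#     for record in read_articles(handle):
#         print(record.get("category"), record.get("link"))
```

Because the function accepts any iterable of strings, it can be fed an open file handle or an in-memory list during testing.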

A summary of the most prevalent categories is provided below, accompanied by the corresponding number of articles:

Politics: 33,119.

Wellness: 15,845.

Entertainment: 16,483.

Travel: 9393.

Style & Beauty: 8214.

Parenting: 7791.

Healthy Living: 6774.

Queer Voices: 4937.

Food & Drink: 6236.

Business: 5162.

Comedy: 5323.

Sports: 5277.

Black Voices: 4343.

Home & Living: 3320.

Parents: 4125.

4.2. Preprocessing

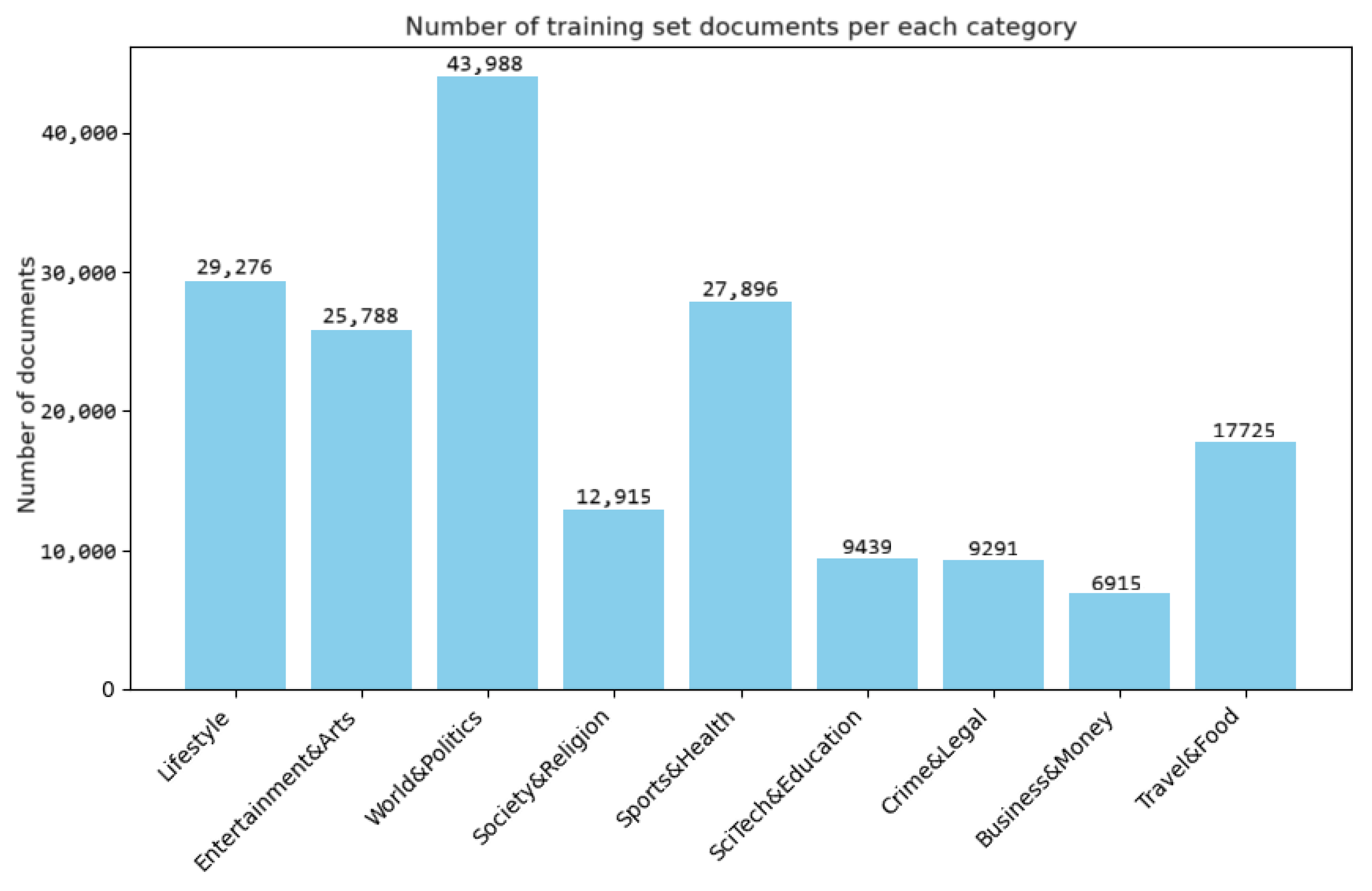

A total of 42 categories were initially identified, but the decision was made to reduce them to nine more general labels in order to achieve a better balance (Figure 1).

The categorization was as follows:

World&Politics (43,988): U.S. News (1377), World-News (3299), The World Post (3614), World-Post (2579), Politics (33,119).

Entertainment&Arts (25,788): Comedy (5323), Culture&Arts (1074), Entertainment (16,483), Arts&Culture (1399), Arts (1509).

Lifestyle (29,276): Parenting (7791), Parents (4125), Style&Beauty (8214), Style (2254), Home&Living (3320), Women (3572).

Sports&Health (27,896): Sports (5277), Healthy Living (6774), Wellness (15,845).

Society&Religion (12,915): Queer Voices (4937), Black Voices (4343), Latino Voices (1112), Religion (2523).

Travel&Food (17,725): Food&Drink (6236), Travel (9393), Taste (2096).

Business&Money (6915): Business (5162), Money (1753).

Sci/Tech&Education (9439): Science (1796), Environment (1444), Green (1937), Education (1014), College (1144), Tech (2104).

Crime&Legal (9291): Crime (2862), Divorce (3176), Weddings (3253).
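The grouping listed above amounts to a lookup from each original category to one of the nine general labels. A minimal sketch follows; the spellings are taken from the list in this section and may differ from the raw labels in the dataset file, and the names `GROUPS`, `LABEL_OF`, and `merge_label` are illustrative.

```python
# General label -> original categories, as listed in this section.
GROUPS = {
    "World&Politics": ["U.S. News", "World-News", "The World Post", "World-Post", "Politics"],
    "Entertainment&Arts": ["Comedy", "Culture&Arts", "Entertainment", "Arts&Culture", "Arts"],
    "Lifestyle": ["Parenting", "Parents", "Style&Beauty", "Style", "Home&Living", "Women"],
    "Sports&Health": ["Sports", "Healthy Living", "Wellness"],
    "Society&Religion": ["Queer Voices", "Black Voices", "Latino Voices", "Religion"],
    "Travel&Food": ["Food&Drink", "Travel", "Taste"],
    "Business&Money": ["Business", "Money"],
    "Sci/Tech&Education": ["Science", "Environment", "Green", "Education", "College", "Tech"],
    "Crime&Legal": ["Crime", "Divorce", "Weddings"],
}

# Reverse lookup: original category -> general label.
LABEL_OF = {
    category: label
    for label, categories in GROUPS.items()
    for category in categories
}


def merge_label(category):
    """Return the general label, or None for categories left out of the grouping."""
    return LABEL_OF.get(category)
```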

The remaining categories collectively contained fewer than four thousand articles, thereby underscoring the considerable disparity in the quantity of data between the different categories in the collection.

4.2.1. Data Extraction

As the dataset contained only links to the original sources, an efficient method was required to extract the full text of each article. This was achieved by utilizing libraries offering web content extraction (web scraping) functionality.

One such library, trafilatura, provides a news metadata extractor that is capable of extracting structural information from any news site. This includes the title, description, content, publication date, author, and thumbnail. The library’s objective is to eliminate superfluous and repetitive elements while including pertinent information to ensure data quality. Trafilatura operates efficiently and can process millions of documents without compromising quality or speed [10].

A script was developed to facilitate interaction with the console, passing the URL as a command-line argument to trafilatura to extract the article’s content and read the result from the standard output stream (Appendix A.2). The entire dataset was successfully reprocessed, resulting in the extraction of the core content of each publication, while information irrelevant to this research was removed.
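The console interaction described above might look roughly like the sketch below. It is not the script from Appendix A.2: it assumes the `trafilatura` command-line tool is installed and on the PATH, uses its `-u` option to pass the URL, and the function names and timeout value are illustrative choices.

```python
import subprocess


def build_command(url):
    """Build the trafilatura command line for a single URL."""
    return ["trafilatura", "-u", url]


def extract_article(url, timeout=60):
    """Run trafilatura and return the text it writes to standard output.

    Returns an empty string when the tool exits with a non-zero status.
    """
    result = subprocess.run(
        build_command(url),
        capture_output=True,
        text=True,
        timeout=timeout,
        check=False,
    )
    return result.stdout.strip() if result.returncode == 0 else ""
```

Passing the command as a list rather than a shell string avoids quoting problems with URLs that contain characters such as `&` or `?`.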

4.2.2. Data Cleaning

The pre-processing of training data is an essential step in natural language processing, as it prepares texts for analysis. Traditional NLP libraries rely heavily on lexical and grammatical dictionaries, and thus, pre-processing aims to remove anomalies and structure the text for a better understanding using language models. Techniques such as text normalization, stop-word removal, and word alignment improve the accuracy of models for sentiment analysis, topic classification, and information extraction. By eliminating noise and redundant content, pre-processing enhances model performance [11].

The principal pre-processing steps included the conversion of strings to lowercase, the expansion of abbreviations, the removal of accents and special characters, the filtering of URLs and digits, the correction of duplicate characters, lemmatization, the removal of stop words and punctuation, the rejection of single letters, and the elimination of duplicate white space characters and spaces at the beginning and end of lines. These steps ensured a coherent and uniform representation of the text, thereby enabling traditional NLP libraries to process the data more effectively.

The Python contractions library automatically recognized and expanded contracted forms and abbreviations in texts, eliminating the need for manual processing, standardizing expressions, and normalizing text, thus ensuring consistent text analysis and understanding [12].

Removing accents or diacritical marks from text data standardizes word forms, facilitating processing for language models. However, in languages where diacritical marks distinguish between sounds and meanings, they can be important. The removal of accents can also prevent interpretation errors and ensure consistent treatment of words [13]. Therefore, a function was implemented to transform the text and standardize word forms.
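One common way to implement such a function, shown here as a sketch and not necessarily the approach taken in the study, is to decompose each character with Unicode normalization and discard the combining marks. The function name is illustrative.

```python
import unicodedata


def strip_accents(text):
    """Remove diacritical marks while keeping the base characters."""
    # NFKD splits an accented character into a base character plus combining marks.
    decomposed = unicodedata.normalize("NFKD", text)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))
```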

While processing the publications, it was observed that many extracted contents contained hyperlinks and references to other sources. These elements were deemed unnecessary and potentially disruptive to data interpretation. To address this issue, several steps were implemented to filter out the URLs from the text. Firstly, words that began with www or http, or ended in .html, were identified and removed from the text. This was achieved through the use of regular expressions, which located and replaced any occurrences of URLs in the text with an empty string.
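The filtering rule described above can be expressed as a single regular expression. The pattern below is an assumption about how the rule might be written, not the expression used in the study; it removes any token that starts with http or www, or that ends in .html.

```python
import re

# Tokens starting with "http" or "www", or tokens ending in ".html".
URL_PATTERN = re.compile(r"\b(?:http|www)\S*|\S+\.html\b", re.IGNORECASE)


def remove_urls(text):
    """Replace URL-like tokens with an empty string."""
    return URL_PATTERN.sub("", text)
```

The substitution leaves the surrounding spaces in place, so the later step that collapses duplicate white space is still needed.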

To prepare the text for analysis, it is recommended that numerical values be removed from the dataset. This is because they do not contribute much to the analysis process. By excluding numbers, the focus can be placed on the linguistic aspects of the text [14]. A function was developed for this purpose, splitting the text into individual words and checking if any of the characters in a word were digits. Any words containing digits were removed, while the remaining words were reassembled into a coherent text and returned as the output of the function.
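A function of the kind described above can be sketched in a few lines; the name `drop_digit_words` is illustrative.

```python
def drop_digit_words(text):
    """Remove every whitespace-separated word that contains at least one digit."""
    kept = [word for word in text.split() if not any(ch.isdigit() for ch in word)]
    return " ".join(kept)
```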

Furthermore, the implementation also ensured that repeated characters in a given text were collapsed. Regular expressions were utilized to identify sequences where the same character appeared more than twice consecutively. The sub function from the re module replaced these sequences with a single instance of the character.
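A minimal sketch of this rule, assuming a backreference-based pattern (the exact expression used in the study is not given), could be:

```python
import re

# A character followed by two or more copies of itself, i.e. a run of three or more.
REPEATED = re.compile(r"(.)\1{2,}")


def collapse_repeats(text):
    """Replace runs of three or more identical characters with one instance."""
    return REPEATED.sub(r"\1", text)
```

Runs of exactly two characters are left untouched, so ordinary words such as "book" are preserved.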

In addition, other techniques, such as lemmatization and stemming, were employed in order to obtain the base form of derived words. Lemmatization, a more advanced method, considers the meaning of words and utilizes linguistic knowledge, whereas stemming is a rule-based approach that sacrifices precision for speed. Despite lemmatization producing more accurate results, stemming is preferred in large datasets due to its efficiency [15].

The NLTK library, a popular natural language processing tool in Python, was employed for the lemmatization process. This involved part-of-speech tagging and conversion to the WordNet convention, followed by lemmatization using the WordNetLemmatizer tool [16]. The resulting text comprised words replaced by their lemmas, thereby ensuring standardization and eliminating different word forms.
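The conversion to the WordNet convention mentioned above is typically a mapping from Penn Treebank tags to the four WordNet part-of-speech letters. The sketch below illustrates one such mapping; defaulting to the noun class is an assumption, and the `lemmatize` helper requires NLTK together with its tagger and WordNet data, which must be downloaded separately.

```python
def to_wordnet_pos(treebank_tag):
    """Map a Penn Treebank tag to a WordNet POS letter (noun by default)."""
    if treebank_tag.startswith("J"):
        return "a"  # adjective
    if treebank_tag.startswith("V"):
        return "v"  # verb
    if treebank_tag.startswith("R"):
        return "r"  # adverb
    return "n"      # noun and everything else


def lemmatize(text):
    """Tag each word, then lemmatize it with the matching WordNet POS."""
    # Imported lazily so the tag mapping can be used without NLTK installed.
    from nltk import pos_tag
    from nltk.stem import WordNetLemmatizer

    lemmatizer = WordNetLemmatizer()
    tagged = pos_tag(text.split())
    return " ".join(
        lemmatizer.lemmatize(word, to_wordnet_pos(tag)) for word, tag in tagged
    )
```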

In addition, stop-words and punctuation marks were eliminated because they do not contribute meaning to the text. The term stop-words encompasses a range of linguistic elements, including pronouns, prepositions, and conjunctions. These elements are removed to focus the analysis on more relevant elements. Similarly, punctuation marks can introduce noise and hinder understanding of sentence structure [17]. Therefore, they were also removed. To perform these tasks, the cleantext library was used, enabling the removal of extra spaces, stopwords, and punctuation marks [18].

Furthermore, superfluous one-letter words were removed, eliminating errors that might have been introduced during the initial writing or processing stage. This involved the removal of stray single characters, such as p, t, and r, with the objective of enhancing the overall accuracy and quality of the text.
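The removal of one-letter tokens, together with the elimination of duplicate and leading or trailing white space, can be sketched as follows. The function name is illustrative, and the sketch assumes that stop-words such as "a" have already been removed, since it would drop them as well.

```python
def drop_single_letters(text):
    """Remove one-character tokens and normalize white space."""
    # str.split() with no argument also discards leading, trailing,
    # and repeated white space.
    return " ".join(word for word in text.split() if len(word) > 1)
```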

4.3. Augmentation

The initial dataset exhibited an uneven distribution of data, with some categories containing a considerable number of records and others exhibiting a shortage of instances. To address this issue, an augmentation technique was employed to generate supplementary data with analogous content for the underrepresented categories. Data augmentation encompasses the generation of novel synthetic data from an existing set and may entail the replacement of words with synonyms, the introduction of noise, or the modification of sentence structure. The objective is to create a more diverse dataset, which improves the model’s ability to generalize and perform well on real test data. NLPAug, a library for text augmentation in Python, was selected for this purpose as it offered the most possibilities for augmentation among popular solutions and fully supported the contextual word embeddings technique, allowing for more advanced modifications to be made [19].

A console program was developed that allowed text to be passed along with an argument specifying the number of expected outputs. The aim was to perform augmentation for the categories with the greatest imbalance in the collection, for which it was necessary to generate several documents per item. The transformation was implemented by calling the augment function on the stream object, which was pre-configured with a specific set of augmentation methods.

The utilized techniques included the Sequential Flow mechanism, which prioritized the techniques based on the order of arguments passed. The specific pipeline for augmenting the training data included the use of the SynonymAug method with the PPDB: The Paraphrase Database dictionary, the AntonymAug method for full transformation, the BackTranslationAug method to introduce translation noise by translating from English to Japanese and back to English, as well as the ContextualWordEmbsForSentenceAug method using the GPT2 model to augment contextual sentences [20].
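The idea of a sequential flow, in which each augmentation step receives the output of the previous one in the order given, can be illustrated without the library. The sketch below is a toy stand-in and does not use NLPAug: the synonym table, the word-swap noise step, and all function names are invented for illustration only.

```python
import random

# Toy synonym table standing in for a real paraphrase dictionary.
TOY_SYNONYMS = {"big": ["large", "huge"], "quick": ["fast", "rapid"]}


def synonym_step(text, rng):
    """Replace every word found in the toy table with a random synonym."""
    words = [
        rng.choice(TOY_SYNONYMS[word]) if word in TOY_SYNONYMS else word
        for word in text.split()
    ]
    return " ".join(words)


def swap_step(text, rng):
    """Introduce noise by swapping one random pair of adjacent words."""
    words = text.split()
    if len(words) > 1:
        i = rng.randrange(len(words) - 1)
        words[i], words[i + 1] = words[i + 1], words[i]
    return " ".join(words)


def augment(text, n, steps, seed=0):
    """Produce n variants by applying the steps in the order given."""
    rng = random.Random(seed)
    variants = []
    for _ in range(n):
        variant = text
        for step in steps:
            variant = step(variant, rng)
        variants.append(variant)
    return variants
```

The `n` argument plays the role of the number of expected outputs passed to the console program, so that several documents can be generated per item for the most underrepresented categories.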

To address the limitations of the ContextualWordEmbsForSentenceAug technique, the text was segmented into sentences using the PunktSentenceTokenizer class from the NLTK library. However, an attempt to implement a contextual word augmentation method was found to be unstable when tested on a small set of samples. The method encountered issues with words that had multiple meanings, resulting in incorrect replacements that disrupted the context of the sentence. Replacing the default model with other models did not improve the method’s performance.

Two further techniques were rejected in their default form. The TfIdfAug technique was prone to generating errors, and the SynonymAug method in its default configuration had difficulty with context, for example replacing pronouns such as it with inaccurate interpretations and converting words such as who to unrelated phrases. To address these issues, the SynonymAug class was configured to use the PPDB paraphrase database, which yielded better replacements without changing the original meaning. The full version of the dictionary was not used due to long loading times, and a basic version was chosen instead [21]. Additionally, the AntonymAug technique demonstrated positive results.

5. Technology Stack

The study undertook a comparative analysis of conventional natural language processing (NLP) methods with those based on deep neural networks. Conventional NLP methods employed tools such as the Apache OpenNLP, Stanford CoreNLP, and Waikato Weka libraries in Java, whereas deep learning models were developed using Python and the HuggingFace ecosystem libraries with a PyTorch (version 2.1.0) backend (Appendix A.1).

The Apache OpenNLP platform provides a range of tools for text analysis, including named entity recognition, tokenization, sentence segmentation, language detection, and syntactic analysis [22]. The Stanford CoreNLP system is particularly adept at semantic and syntactic analysis, offering a range of sophisticated features, including word relation recognition or grammar tree generation. It is a widely utilized tool for the completion of complex NLP tasks and document pre-processing [23]. Weka is a data analysis and machine learning library that provides tools for the analysis of numerical data in the context of text analysis [24]. The library offers a variety of algorithms, including naive Bayes, decision trees, and support vector machines.

HuggingFace Datasets is a Python library that provides access to machine learning datasets and integrates with the Transformers library, which enables the utilization of transformer-based models such as BERT and GPT [25]. This integration is supported by PyTorch, a powerful library that is renowned for its dynamic graph computing capabilities and versatility in a range of machine learning domains. The Trainer class of the Transformers library, which runs on the PyTorch backend, facilitates the efficient training and tuning of models [26].

5.1. Natural Language Processing

In the field of text classification, two main approaches can be distinguished: conventional statistical algorithms and deep machine learning. Conventional methods are based on mathematical principles and are generally known for achieving satisfactory results even with limited training data. They can be used on less advanced machines and are relatively straightforward to implement.

In contrast, deep models require a significant amount of training data and the presence of a GPU for computational intensity. They are capable of analyzing global relationships and complex linguistic structures, which is important for contextual meaning. Although deep models perform better for extensive texts, they require expensive re-training and are challenging to interpret [27].

To test traditional methods, a naive Bayesian classifier was chosen as the training algorithm. Models based on the principle of maximum entropy were used for word partitioning and language detection. These models were trained using supervised learning, where labeled input data was provided to the model to identify patterns and relationships between the data.

Conventional methods, however, may not be as effective as more sophisticated neural network models. To examine this, one of the most prevalent transformer-type models, DistilBERT, was selected. This model is frequently employed for text sequence classification tasks and is renowned for its efficient processing of data sequences. The popularity of these networks stems from their ability to eliminate the need for the recurrent mechanisms found in earlier models such as simple recurrent neural networks (RNNs), long short-term memory (LSTM), or gated recurrent unit (GRU) cells. By fine-tuning DistilBERT, the study aimed to verify whether neural networks outperform classical approaches in AI [28].

The objective was to assess the efficacy of traditional statistical approaches and advanced neural network approaches, utilizing DistilBERT, and to provide insights into the performance of diverse machine learning models when applied to text classification tasks, particularly in the context of constrained computational resources.

5.1.1. Conventional Statistical Algorithms

The Bayesian classifier is a supervised classification method based on Bayesian statistics. It predicts category membership using probability information and Bayes’ rule. The naive Bayesian classifier assumes that attributes used for classification are independent, although this is rarely the case in practice. Despite this simplification, the naive Bayes classifier is effective in applications such as image recognition, spam filtering, and sentiment analysis [29].

The classification process involves calculating attribute frequencies, building a table of probabilities, and assigning appropriate weights. The conditional probability of each class given the observed features is then calculated using Bayes’ rule. The label with the highest probability is considered the result [30].
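In symbols, the decision rule described above can be written as follows, where $C$ is the set of labels, $x_1, \dots, x_n$ are the observed features of a document, and the product form relies on the independence assumption. The notation is introduced here for illustration and does not appear in the cited sources.

```latex
\hat{c} \;=\; \arg\max_{c \in C} P(c \mid x_1, \dots, x_n)
        \;=\; \arg\max_{c \in C} P(c) \prod_{i=1}^{n} P(x_i \mid c)
```

The denominator $P(x_1, \dots, x_n)$ of Bayes' rule is the same for every class and can therefore be dropped when only the highest-scoring label is needed.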

When a feature observed at prediction time is absent from the training data for a class, its estimated probability is zero (the zero-frequency problem); this is resolved using smoothing techniques. Laplace estimation, also known as add-1 smoothing, is a commonly used method to address zero frequency in machine learning libraries [31]. Weka employs Laplace smoothing, whereas the CoreNLP library utilizes a variant of this technique, termed add-k, which involves adjusting the sigma and epsilon parameters to regulate the degree of smoothing.
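To make the effect of smoothing concrete, the following is a minimal multinomial naive Bayes sketch with additive smoothing. It is written for illustration and does not reproduce the Weka or CoreNLP implementations; the parameter `k` is the smoothing constant (`k=1` gives Laplace smoothing), and the function names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels, k=1.0):
    """Count documents per class and word occurrences per class."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for tokens, label in zip(docs, labels):
        word_counts[label].update(tokens)
        vocab.update(tokens)
    return class_counts, word_counts, vocab, k


def predict_nb(model, tokens):
    """Return the label with the highest smoothed log-probability."""
    class_counts, word_counts, vocab, k = model
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        score = math.log(n_docs / total_docs)  # log prior
        # Additive smoothing: (count + k) / (total words in class + k * |V|).
        denom = sum(word_counts[label].values()) + k * len(vocab)
        for token in tokens:
            if token in vocab:  # words never seen in training are skipped
                score += math.log((word_counts[label][token] + k) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label
```

Without the `+ k` term, a single word that never occurred in a class during training would drive that class's probability to zero regardless of the remaining evidence.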

Maximum entropy methodology is employed in supervised classification to reduce noise and enhance adaptability to varying training data. This approach is particularly beneficial when it is challenging to assume conditional independence between features. This methodology is based on the assumption that the optimal model maximizes entropy while adhering to the constraints imposed by the training data. The use of entropy as a measure of uncertainty ensures that the model is not biased towards certain features or classes, which is particularly important for small datasets [32].

The classification process using maximum entropy involves defining the set of features and imposing constraints based on the expected attribute values in the training data. The model then adjusts the weights assigned to each feature through an optimization process, aiming to meet the constraints while maximizing entropy. This optimization process involves finding an extremum using methods such as the Lagrange multiplier method. The weights are adjusted iteratively until an optimal solution is achieved [33].
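In the standard formulation, which is given here as background and with notation chosen for illustration, each feature function $f_i(d, c)$ is constrained so that its expectation under the model equals its empirical expectation in the training data. Solving the constrained problem with Lagrange multipliers $\lambda_i$ yields a model of exponential form:

```latex
\mathbb{E}_{p}\left[f_i\right] = \mathbb{E}_{\tilde{p}}\left[f_i\right] \quad \text{for all } i,
\qquad
P(c \mid d) = \frac{\exp\left(\sum_i \lambda_i f_i(d, c)\right)}
                   {\sum_{c'} \exp\left(\sum_i \lambda_i f_i(d, c')\right)}
```

Here $d$ is a document, $c$ a class, $\tilde{p}$ the empirical distribution, and the multipliers $\lambda_i$ are the feature weights that the iterative optimization adjusts.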

Both the naive Bayesian classifier and the maximum entropy algorithm are used to classify different types of data sets. However, they differ in how they model relationships between features and predict classes. The naive Bayesian classifier assumes feature independence, making the model simple and easy to understand. However, this assumption may not always hold in reality. The Bayes method uses theoretical probabilities for class prediction, which is particularly useful when there is limited training data available. Additionally, the naive Bayes algorithm is faster in both the learning and prediction processes [34].

In contrast, the maximum entropy algorithm is more effective in modeling complex relationships between data, as it does not impose strict constraints on feature independence. However, it necessitates a greater quantity of training data to achieve a balanced distribution, and the learning process can be time-consuming, particularly with large datasets. The choice between the two approaches depends on the specific problem. The naive Bayesian classifier is effective for simple problems where feature independence does not significantly affect the model’s effectiveness. In contrast, the maximum entropy algorithm is preferred for more complex relationships between features when longer learning times are acceptable [35].

5.1.2. Transformer Neural Networks

Transformers are distinguished by their reliance on the attention mechanism, which enables them to focus on pertinent aspects of a sequence and to capture long-range dependencies within the data. In contrast to traditional recurrent models, transformers do not suffer from information loss over time and are capable of processing entire sequences of data in a single pass, thereby reducing the time required for learning [36]. They are employed in a variety of fields, including natural language processing, image recognition, and machine translation, particularly in the context of problems with important contextual dependencies [37].

The encoder and decoder play a pivotal role in transformer networks. The encoder processes the input data sequence and creates a context representation, while the decoder generates the output sequence based on this representation. The model’s functioning can be divided into five stages: calculating scores for encoder states, determining attention weights, calculating context vectors, updating the context vector, and generating the output signal. The input embedding process converts words into vector representations, incorporating information about word order using positional encodings. The attention mechanism determines the relevance of words in the sequence, and the attention layer in the encoder is followed by a feed-forward layer. The decoder operates sequentially, taking into account the information from the encoder and applying masking to focus attention on previous time steps. The output vectors are combined and transformed using perceptron layers and a softmax activation function [38].
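The first three stages listed above (scores, attention weights, context vectors) correspond to scaled dot-product attention. The sketch below implements that computation for small lists of vectors in plain Python; it omits the learned projection matrices, multiple heads, and masking of a real transformer, and the function names are illustrative.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Return one context vector per query."""
    dim = len(keys[0])
    contexts = []
    for query in queries:
        # Stage 1: similarity score between the query and every key.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in keys
        ]
        # Stage 2: attention weights that sum to one.
        weights = softmax(scores)
        # Stage 3: context vector as the weighted sum of the value vectors.
        contexts.append([
            sum(w * value[col] for w, value in zip(weights, values))
            for col in range(len(values[0]))
        ])
    return contexts
```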

A variety of models based on the transformer neural network architecture were reviewed, including BERT, RoBERTa, Transformer-XL, and models from the GPT family. BERT, the most popular of these, is distinguished by its capacity to process context in sentences bidirectionally and its exemplary performance in natural language processing tasks. A distilled version of BERT, called DistilBERT, addresses the computational and time limitations of the original model. Despite having fewer parameters, DistilBERT is capable of maintaining high-quality representation. The model was subjected to pre-training on a substantial linguistic dataset with the objective of acquiring an understanding of general language patterns and subtle word relationships. The utilization of a pre-trained model enables the transfer of knowledge, obviating the necessity to learn from the outset and reducing the demand for considerable hardware resources [39].

5.2. Validation

The analysis of classifier quality represents a crucial stage in the assessment of algorithmic performance. This entails the utilization of techniques such as cross-validation and the comparison of evaluation metrics, including support, sensitivity, precision, accuracy, and the F1 measure. By analyzing these aspects, insights can be gained into a model’s ability to generalize and avoid overfitting. Tools such as confusion tables and classification reports can be employed to identify areas for improvement and eliminate models with low performance.
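The metrics named above follow directly from per-class counts of true positives, false positives, and false negatives. The sketch below computes them without external libraries; it is an illustration of the definitions and not the reporting code used in the study, and the function names are hypothetical.

```python
def per_class_metrics(y_true, y_pred):
    """Return precision, recall (sensitivity), F1, and support for each label."""
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (
            2 * precision * recall / (precision + recall)
            if precision + recall
            else 0.0
        )
        report[label] = {
            "precision": precision,
            "recall": recall,
            "f1": f1,
            "support": tp + fn,  # number of true instances of the label
        }
    return report


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)
```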

For conventional machine learning algorithms, Java virtual machine libraries with built-in mechanisms can assist in analyzing classifier quality statistics. Conversely, deep neural network models necessitate a greater degree of programming involvement, although the Python language offers a range of tools, including scikit-learn, which provide support in areas not covered by PyTorch.

Cross-validation is a valuable tool for evaluating the efficacy of machine learning models. It entails partitioning the data into subsets for testing and training, thereby facilitating a more reliable estimation of algorithm performance. One prevalent approach is k-fold cross-validation, wherein the data is divided into k folds and the model is trained k times, with each fold serving once as the test set. The value of k should be appropriately adjusted based on the characteristics of the dataset [40]. It is crucial to utilize a stratified version of cross-validation to account for the distinctive characteristics of the data, particularly if the classes are unevenly distributed [41]. Finally, the results are averaged to evaluate the model’s performance.
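The stratified splitting described above can be sketched as follows. This is an illustrative plain-Python version under simplifying assumptions (no shuffling, round-robin assignment per class); the function name `stratified_k_fold` is hypothetical and does not correspond to any library used in the study.

```python
from collections import defaultdict

def stratified_k_fold(labels, k):
    """Yield (train_indices, test_indices) pairs whose test folds mirror the class proportions."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        # Deal the samples of each class round-robin across the k folds.
        for position, index in enumerate(indices):
            folds[position % k].append(index)

    for i in range(k):
        test = sorted(folds[i])
        train = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        yield train, test

# Hypothetical imbalanced toy data: six samples of class "a", three of class "b".
labels = ["a"] * 6 + ["b"] * 3
splits = list(stratified_k_fold(labels, k=3))
```

Each of the three test folds here contains two "a" samples and one "b" sample, so the 2:1 class ratio of the full set is preserved in every fold. A per-fold score would then be computed on each split and averaged.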

5.3. Data Quality and Bias Control

Ensuring data integrity was a crucial step in the study. Following the automated extraction of article content, the dataset underwent multiple stages of cleaning, including removal of incomplete records, filtering of hyperlinks, the normalization of text, and the validation of category labels. The application of augmentation procedures was exclusive to underrepresented classes, with the objective of avoiding any alteration to the original category balance.

The resulting distributions of categories were monitored to ensure that the synthetic examples preserved the semantic characteristics of their respective classes. Furthermore, the augmentation pipeline was configured to introduce only minor lexical variations, thus avoiding excessive paraphrasing that could potentially distort meaning. Although a formal quantitative bias analysis (e.g., KL divergence) was not conducted, careful inspection of representative samples and post-augmentation histograms confirmed the preservation of class proportions.

5.4. Naive Bayes

The study focused on various conventional techniques used in natural language processing before the emergence of deep machine learning. Feature extraction methods, including the bag-of-words, the n-gram approach, and various other pattern extraction techniques, were analyzed. Feature selection strategies, such as the cut-off technique and minimum attribute weighting, were also investigated. Furthermore, the study explored the TF-IDF method and the use of Apache OpenNLP, Stanford CoreNLP, and Weka tools to reduce the number of features in text classification.

Before the rise of deep machine learning, NLP researchers commonly employed feature selection techniques. These techniques involved identifying the most significant elements and assigning weights to words for determining their meaning. One widely used method is the bag-of-words approach, which creates a dictionary of unique words from a training set. Each document is then represented as a vector with elements corresponding to the frequency of each word in the document. However, this method may not capture important contextual information [42].
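As an illustration of the bag-of-words representation, the sketch below builds a dictionary from a toy training set and vectorizes a document by word frequency. Whitespace tokenization, lowercasing, and the function names are simplifying assumptions, not the behavior of any of the libraries evaluated.

```python
from collections import Counter

def build_vocabulary(documents):
    """Map every unique lowercase token in the training documents to a vector index."""
    tokens = sorted({token for doc in documents for token in doc.lower().split()})
    return {token: index for index, token in enumerate(tokens)}

def bag_of_words(document, vocabulary):
    """Represent a document as a vector of word frequencies over the vocabulary."""
    counts = Counter(document.lower().split())
    vector = [0] * len(vocabulary)
    for token, count in counts.items():
        if token in vocabulary:  # tokens unseen during training are ignored
            vector[vocabulary[token]] = count
    return vector

# Hypothetical toy training set.
training = ["the match ended in a draw", "the senate passed the bill"]
vocabulary = build_vocabulary(training)
vector_a = bag_of_words("the bill the senate", vocabulary)
vector_b = bag_of_words("senate the bill the", vocabulary)
```

The two example documents differ only in word order and receive identical vectors, which is the loss of contextual information noted above.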

The n-gram approach is a more advanced technique that considers sequences of words to capture the structure and relationships between lexical units. It involves dividing the text into n-grams and creating a dictionary of unique n-grams in the training set. Each document is represented as a vector with the frequency of each n-gram. This technique is especially useful when the word order is significant [43].
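A minimal sketch of word n-gram extraction is given below. The function name `word_ngrams` and its parameters are assumptions for illustration; the evaluated libraries expose their own n-gram generators.

```python
def word_ngrams(tokens, n_min=1, n_max=2):
    """Return all contiguous word n-grams with n_min <= n <= n_max, joined by spaces."""
    grams = []
    for n in range(n_min, n_max + 1):
        for start in range(len(tokens) - n + 1):
            grams.append(" ".join(tokens[start:start + n]))
    return grams

tokens = ["stocks", "fell", "sharply"]
unigrams_and_bigrams = word_ngrams(tokens)           # n = 1..2
up_to_trigrams = word_ngrams(tokens, n_max=3)        # n = 1..3
```

For three tokens, moving from bigrams to trigrams adds only one feature, but on a real corpus the number of distinct n-grams grows quickly with n, and many of them occur only once.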

Pattern extraction techniques aim to identify distinctive structures in a text for classification purposes. This may involve analyzing word arrangements, specific character sequences, or repeated letters. Techniques like prefix–suffix n-grams, character-sequence n-grams, and n-grams of the first and last words are commonly used. Regular expressions can also be used to extract specific words [44].
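Two of the pattern features named above can be sketched as follows. The feature-name format (`first=`, `prefix=`, and so on) and both function names are hypothetical choices for this illustration, not the encoding used by any particular library.

```python
def first_last_word_features(tokens):
    """Emit features for the first and last word of a document."""
    if not tokens:
        return []
    return [f"first={tokens[0]}", f"last={tokens[-1]}"]

def prefix_suffix_ngrams(word, n):
    """Emit the leading and trailing character n-gram of a word."""
    if len(word) < n:
        return []
    return [f"prefix={word[:n]}", f"suffix={word[-n:]}"]

document_features = first_last_word_features(["senate", "passes", "budget"])
word_features = prefix_suffix_ngrams("election", 3)
```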

The study tested the bag-of-words method and analyzed the impact of n-grams on classification accuracy. It also attempted to find similarities between words related to specific article topics and explored parameter variations and modifications within techniques.

Feature selection is crucial in machine learning to enhance model performance. By eliminating redundant expressions, feature selection reduces model complexity and enhances performance. It also increases the interpretability of the model by highlighting key factors for specific classes [45]. The study investigated two feature selection strategies: cutting off features that occur sporadically and utilizing minimum attribute weighting.

TF-IDF (Term frequency–inverse document frequency), a statistical term weighting method, was also examined. It considers both the frequency of a term in a document and its importance in the whole dataset. The TF component quantifies the term’s frequency, while the IDF component measures its relevance based on its occurrence in documents. TF-IDF values reflect the local frequency and global relevance of a term [46].
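The weighting can be illustrated with one common TF-IDF variant: relative term frequency multiplied by the natural logarithm of the inverse document frequency. This exact formula, the whitespace tokenization, and the function name are assumptions of the sketch; the libraries evaluated in the study may apply different normalizations.

```python
import math
from collections import Counter

def tf_idf(documents):
    """Return one {term: weight} mapping per document using tf * ln(N / df)."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term appears at least once.
    df = Counter(token for tokens in tokenized for token in set(tokens))
    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        weights.append({
            term: (count / len(tokens)) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights

# Hypothetical toy corpus.
documents = ["the team won the match", "the court heard the case"]
weights = tf_idf(documents)
```

The word "the" appears in every document, so its IDF is ln(1) = 0 and its weight vanishes, whereas "team" occurs in only one document and keeps a positive weight.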

5.5. BERT

The Hugging Face platform ecosystem is employed for the training of artificial intelligence models utilizing pre-trained transformer neural networks. The Trainer class obviates the necessity of low-level functions and automatically adjusts hyperparameters, thereby optimizing them for the model. The Evaluate module is responsible for generating statistical data regarding the quality of the classifier following the training phase.

BERT models have marked a turning point in the field of natural language processing, as they make it possible to analyze each word in the context of its sequence. Nevertheless, the quadratic complexity of self-attention makes processing lengthy texts a significant challenge [47]. Techniques such as trimming the document to the maximum length may result in the loss of important information.

The BigBird and Longformer models, which were introduced in 2020, demonstrate enhanced performance for long text sequences. In comparison to BERT, which has a maximum processing capacity of 512 tokens, Longformer employs sparse attention and is capable of handling up to 4096 tokens. However, these models have been found to encounter certain limitations, including lengthy training periods, the necessity of smaller batch sizes, and a lack of notable accuracy enhancements in comparison to BERT. It is evident that advanced models require a considerably greater investment of time and GPU memory, yet it is not always the case that they demonstrate superior accuracy in comparison to their more basic equivalents [48].

Modifications to the model configuration to accommodate the maximum sequence length have been investigated. Nevertheless, retraining may not be the optimal solution when limited resources are available, as the advantages of pre-trained models are negated [49].

A potential solution to the limitations of BERT is to segment the text, analyze each segment separately, and aggregate the results. One method proposed by an author of BERT involves dividing the text into segments of 200 words, with an overlap of 50 words, and combining the predictions using the softmax activation function. Special tokens, such as [SEP] for sentence separation, are incorporated into the model, and fixed-length sequences are passed through it. Subsequently, an attention mask is generated, represented by an array of zeros and ones, to distinguish between genuine tokens and padding. The predictions from each segment are obtained and integrated into a unified output through the softmax activation function. It is noteworthy that the maximum sequence length handling can be modified by adjusting the model configuration. However, this necessitates retraining and may not be optimal with limited computational resources, as it forgoes the advantages of a pre-trained model [50]. An examination of the internal logic of the DocumentCategorizerDL class in OpenNLP’s deep learning inference module reveals that the library also utilizes this method to process lengthy input sequences.
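The segmentation scheme can be sketched in plain Python as follows. The windowing follows the 200-word segments with a 50-word overlap mentioned above; averaging the per-segment softmax probabilities is an assumption of this sketch, since the text only states that predictions are combined through softmax. The function names and the example logits are hypothetical.

```python
import math

def split_with_overlap(words, size=200, overlap=50):
    """Split a word list into windows of `size` words that share `overlap` words."""
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(words[start:start + size])
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]

def aggregate(chunk_logits):
    """Average the per-chunk probabilities (assumed rule) and return (class index, probabilities)."""
    probabilities = [softmax(logits) for logits in chunk_logits]
    n_classes = len(probabilities[0])
    mean = [sum(p[c] for p in probabilities) / len(probabilities) for c in range(n_classes)]
    return max(range(n_classes), key=mean.__getitem__), mean

words = [f"w{i}" for i in range(500)]
chunks = split_with_overlap(words)
# Hypothetical per-chunk logits for a two-class problem, standing in for model outputs.
predicted, mean_probabilities = aggregate([[2.0, 0.0], [0.0, 1.0], [3.0, 0.0]])
```

A 500-word article yields three windows starting at words 0, 150, and 300, so every word is seen by the model and no window exceeds the length limit.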

Another method for dealing with text length limitations is truncation. However, simply truncating from the beginning may result in the loss of important information. The head + tail approach, as outlined in the research paper Fine-Tune BERT for Text Classification?, whereby the article is divided into sections and subsequently merged, demonstrated superior outcomes compared to other techniques [51].
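The truncation strategies can be compared with a small sketch operating on a token list. The function name, the strategy labels, and the default split of 128 head tokens plus 382 tail tokens (510 tokens, leaving room for two special tokens) are assumptions of this illustration rather than settings confirmed by the study.

```python
def truncate(tokens, max_length=510, strategy="head_tail", head=128):
    """Shorten a token list to at most max_length tokens using the chosen strategy."""
    if len(tokens) <= max_length:
        return list(tokens)
    if strategy == "right":
        # Drop tokens on the right: keep the beginning of the text.
        return list(tokens[:max_length])
    if strategy == "left":
        # Drop tokens on the left: keep the end of the text.
        return list(tokens[-max_length:])
    if strategy == "head_tail":
        tail = max_length - head
        tail_tokens = list(tokens[-tail:]) if tail > 0 else []
        return list(tokens[:head]) + tail_tokens
    raise ValueError(f"unknown strategy: {strategy}")

tokens = list(range(1000))
kept_right = truncate(tokens, strategy="right")
kept_left = truncate(tokens, strategy="left")
kept_head_tail = truncate(tokens, strategy="head_tail")
```

All three results have the same length; they differ only in which part of the article survives, which is what determines how much category-bearing text reaches the model.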

In Java-based solutions, the handling features in OpenNLP’s transformer library facilitate the configuration of the model to process text exceeding BERT’s maximum token limit. Padding can be applied to ensure a uniform input size. This is achieved by setting the padding parameter to the defined max_length value and specifying the appropriate truncation_side parameter.

In the context of the case study, modifying the model configuration by changing the method of truncating redundant text was identified as the optimal solution. This approach enabled the transfer of text fragments with strong indicative value, thereby enhancing the accuracy of the model. The transfer of text in smaller units was also implemented to facilitate effective classification during the prediction stage. A variety of batch-size and learning-rate configurations were tested, along with a gradient accumulation technique to mitigate the negative consequences of a large batch size. Early stopping was employed to prevent exceeding the recommended number of epochs. The performance of classifiers trained on minimally cleaned data and lemmatized data was compared.

6. Results

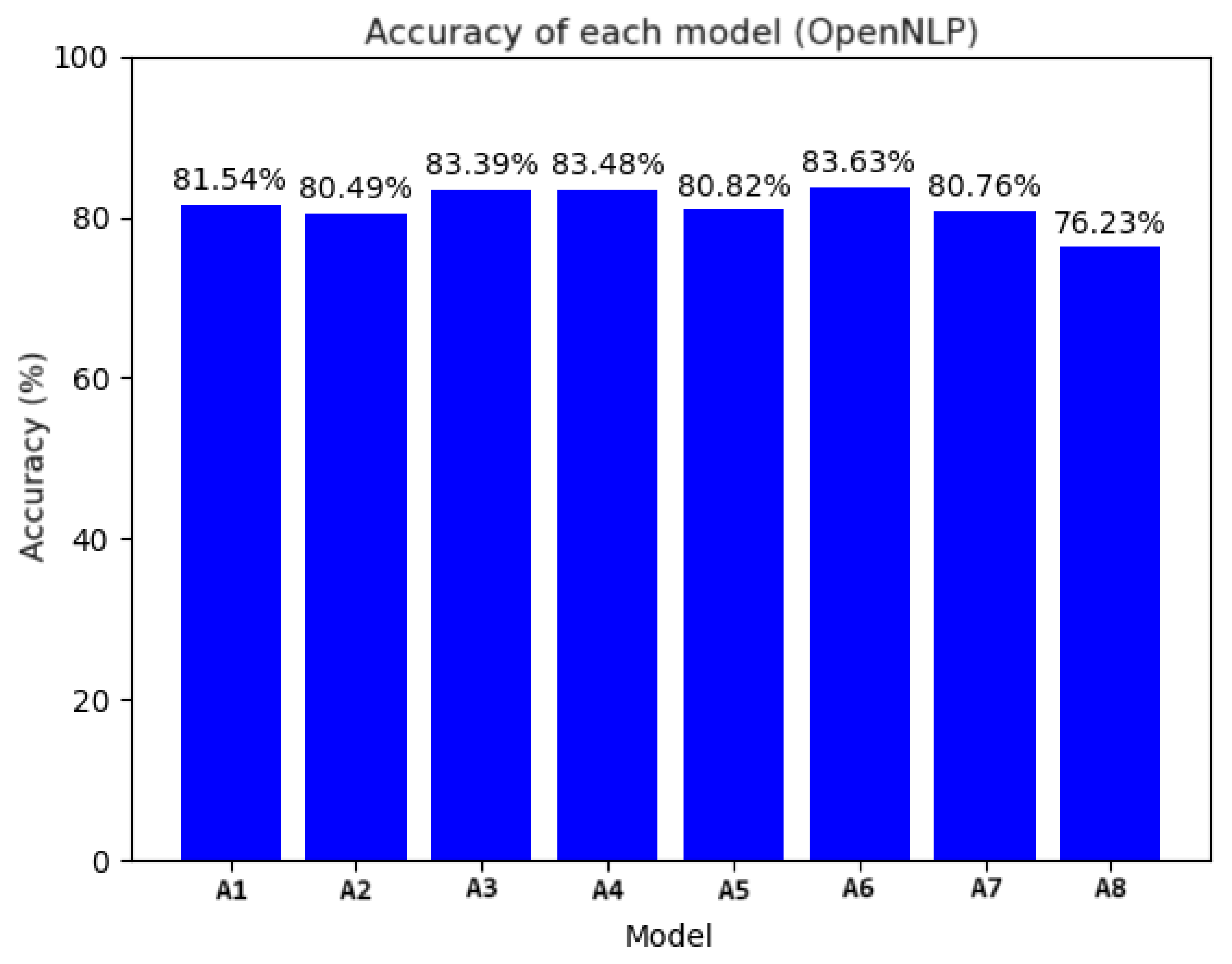

6.1. OpenNLP

The preliminary testing was conducted on the initial training set without any modifications (Figure 2). A bag-of-words method was employed in conjunction with an n-gram generator to represent the input sequence. The resulting feature set was then transformed into vectorized word embeddings, taking into account the frequency statistics of the expressions. The model achieved an accuracy of almost 82% (Model A1).

In order to enhance the conversion of word frequency statistics, a technique was implemented to convert units from specific categories into fixed expressions. However, contrary to expectations, this resulted in a deterioration of results, with the model’s F1 measure dropping to 80%. The implementation of Named Entity Recognition (NER) for feature selection also did not result in the anticipated improvement in model metrics (Model A2).

Consequently, a novel methodology was implemented, incorporating three-word assemblies and an augmented number of n-grams. This time, a larger augmented dataset, comprising 395,892 documents, was employed. This modification resulted in a notable enhancement in the model’s efficiency, with an accuracy of 83.39% (Model A3).

Furthermore, a comparison was made between the quality metrics of training on the augmented data and the original learning set. The analysis demonstrated that, prior to augmentation, the model exhibited low sensitivity, particularly in the Business & Money category. Following augmentation, sensitivity increased from 67% to 87%. A similar pattern was observed in other categories, including SciTech & Education (21%) and Crime & Legal (15%). The recall metric also demonstrated improvement in categories such as Society & Religion (16%) and Travel & Food (3%).

Nevertheless, an enhancement in sensitivity did not invariably lead to an improvement in model quality. In the case of World & Politics, despite an 8% improvement in recall, there was a concomitant decrease in accuracy, which fell to 80%. A similar decline was observed in the Lifestyle and Sports & Health categories, although the impact was less pronounced than in World & Politics.

In certain instances, a reduction in sensitivity was associated with an enhancement in precision, culminating in a favorable impact on the classifier’s efficacy. Notwithstanding the adverse effects on specific categories, the overall balance of gains and losses underscored the significance of augmentation in enhancing model quality.

In a series of additional experiments, the maximum n-gram length was reduced to two while maintaining the augmented set, resulting in an accuracy of 83.48%, exceeding the outcomes achieved with longer word sequences (Model A4). This finding corroborates the observation that increasing the n-gram length does not invariably result in enhanced performance. The abundance of trigrams in the validation set, without a counterpart in the training data, resulted in a zero probability of their occurrence, which explains the counter-intuitive result [52]. This finding is at odds with previous studies that have suggested the benefits of increasing n-grams [53]. Other studies, however, have indicated that trigrams, 4-grams, and 5-grams yielded worse results than bigrams or unigrams [54].
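The zero-probability effect for unseen n-grams can be reproduced with a small multinomial naive Bayes likelihood. This is a generic sketch with additive (Laplace-style) smoothing; the function name, the counts, and the vocabulary size are hypothetical values chosen for illustration.

```python
import math
from collections import Counter

def log_likelihood(features, class_counts, vocabulary_size, alpha):
    """Log P(features | class) for multinomial naive Bayes with additive smoothing alpha."""
    total = sum(class_counts.values())
    score = 0.0
    for feature in features:
        numerator = class_counts.get(feature, 0) + alpha
        if numerator == 0:
            # An unseen feature without smoothing has probability zero.
            return float("-inf")
        score += math.log(numerator / (total + alpha * vocabulary_size))
    return score

# Hypothetical n-gram counts observed for one class during training.
counts = Counter({"rate cut": 3, "central bank": 2})
document = ["rate cut", "bank raises rates"]  # the second n-gram never occurred in training

without_smoothing = log_likelihood(document, counts, vocabulary_size=10, alpha=0.0)
with_smoothing = log_likelihood(document, counts, vocabulary_size=10, alpha=1.0)
```

Without smoothing, a single unseen trigram drives the whole document likelihood to zero regardless of the other evidence, whereas a positive smoothing value keeps the score finite.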

Building on the configuration that demonstrated the highest performance, subsequent tests incorporated two additional word extraction techniques that were not available in the library. The first modification incorporated both the initial and concluding words of the document, utilizing a range of letter n-gram lengths. The second modification involved the analysis of both prefix and suffix compounds within words, as well as strings occurring within words. The configuration combining both modifications yielded an accuracy of 80.82% (Model A5), while the experiments evaluating each technique separately demonstrated that the inclusion of the first and last words produced the most effective results, achieving an accuracy of 84% (Model A6). However, the inclusion of prefix–suffix assemblies resulted in a regression of performance (Model A7).

In addition to the exploration of different configurations, the impact of the CUTOFF_PARAM parameter on model effectiveness was analyzed. This parameter serves to filter out attributes that occur infrequently or have a weak correlation with the target variable. The analysis commenced with a value of 2, which signified that an attribute had to occur at least twice to be included. This resulted in a slight increase in precision, although there was a significant decrease in sensitivity, which led to a drastic deterioration of the F1 measure (Model A8). This finding challenges the assumption that the use of an attribute frequency threshold invariably leads to enhanced metrics. It underscores the significance of considering the characteristics of the problem [55].
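The effect of a frequency cut-off can be sketched as a simple filter over extracted features. Counting total occurrences across the training set is an assumption of this sketch, as is the function name; it is not a reproduction of OpenNLP's internal logic.

```python
from collections import Counter

def apply_cutoff(documents_features, cutoff):
    """Keep only features whose total count across all documents is at least `cutoff`."""
    totals = Counter(feature for features in documents_features for feature in features)
    kept = {feature for feature, count in totals.items() if count >= cutoff}
    return [[feature for feature in features if feature in kept]
            for features in documents_features]

# Hypothetical toy feature lists for two documents.
features = [["rate", "cut", "rate"], ["cut", "bank"]]
filtered = apply_cutoff(features, cutoff=2)
```

With a cut-off of 2, the feature "bank" disappears because it occurs only once. If such rare features happen to be strong indicators of a small class, removing them can lower sensitivity, which is consistent with the behavior reported for Model A8.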

The optimal OpenNLP model (Model A6) exhibited an accuracy of 84% and employed unigrams, bigrams, and the first and last words of the document as input string representation. The model did not include any feature selection and utilized TF (Term Frequency) as its vector representation.

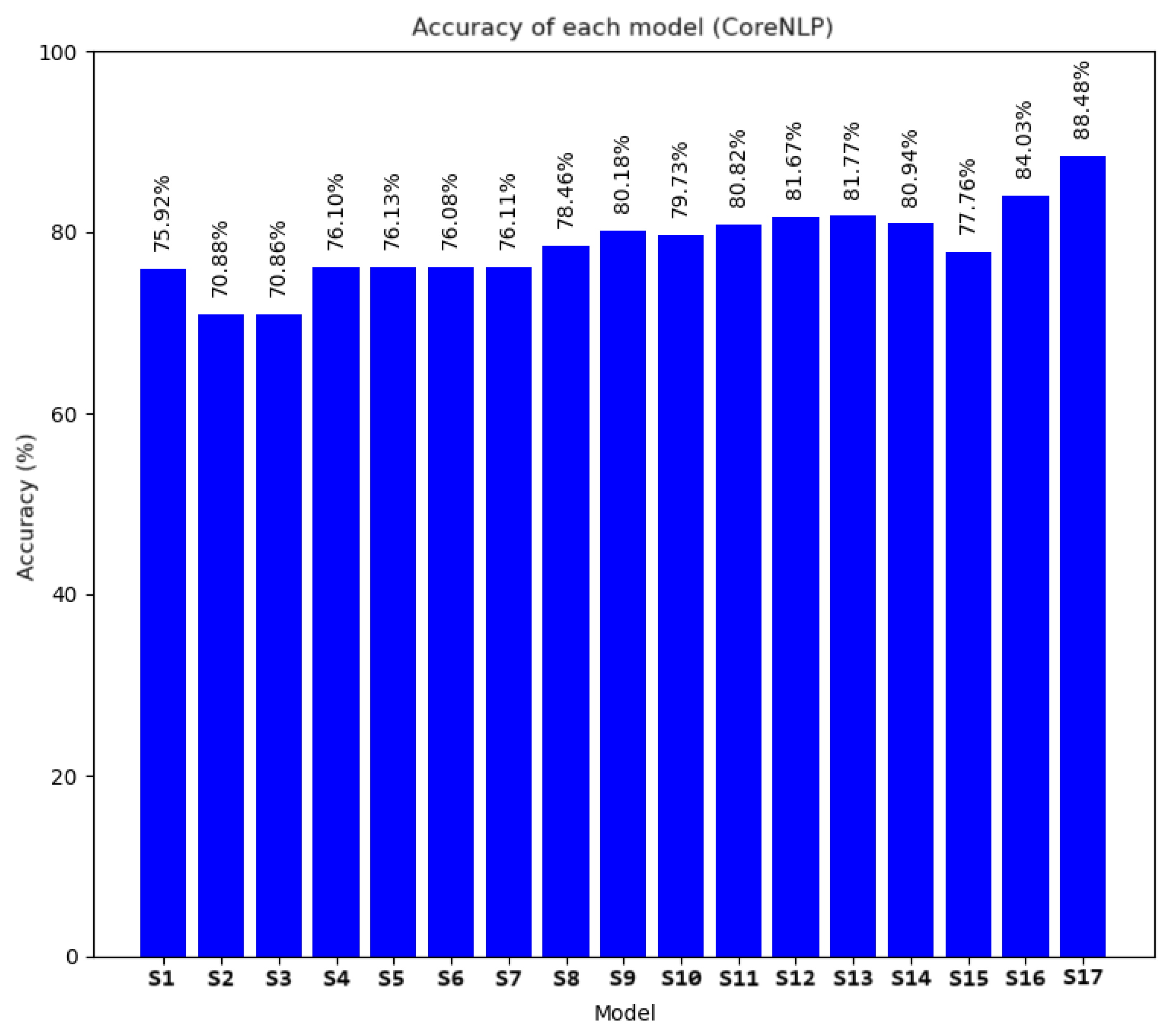

6.2. CoreNLP

The behavior of different configurations of the CoreNLP library was examined through seventeen experiments (Figure 3). The models were trained using an augmented dataset, which had shown effectiveness in previous tests. The experiments began by selecting unigrams and bigrams in the feature selection process, similar to the experiments conducted with the OpenNLP tool. Surprisingly, the accuracy achieved was 76% (Model S1), which was 6 percentage points lower than an identical configuration using the Apache library (Model A1).

Subsequently, the use of trigrams resulted in a further reduction of 5% (Model S2), which supports the hypothesis that increasing the complexity of n-grams may have the opposite effect [56]. Further experiments focused on the use of a naive Bayesian classifier with a maximum complexity of 2. It was found that the use of bigrams in conjunction with unigrams, in place of single words, has an impact on the F1 measure (Model S4).

The importance of including the first and last words of the document in the attribute vector was verified, which resulted in a slight improvement in the F1 metric (Model S5). However, the addition of attributes reflecting the total word count or the logarithm of this value did not have any significant impact. Moreover, this necessitated an increase in the available random-access memory (RAM) to 64 gigabytes (GB).

The experiments also examined the featureMinimumSupport parameter, which determined the minimum threshold of word occurrences to be included in the word embeddings. It was observed that increasing this value improved model performance, contrary to the behavior of the Apache tool (Model S8).

Furthermore, the experiments investigated the influence of the minimum weighting threshold and the degree of additive smoothing. It has been demonstrated that selecting an appropriate lambda value is crucial to avoiding a data mismatch and promoting generalization to new information. Nevertheless, alterations to these values did not exhibit a straightforward proportional relationship with model performance (Model S10). However, certain configurations did result in improvements (Model S12).

With regard to quality metrics, no notable deviations were identified that could indicate overfitting. The metrics remained within a consistent range of 81% to 97% throughout the analysis, with no discernible variations from the results obtained prior to any modifications.

The final CoreNLP model (Model S17) demonstrated an accuracy of 88%, utilizing unigrams, bigrams, the initial and concluding words of the document, word prefixes, and letter suffixes as input string representation. The feature selection process employed specific parameters, including the minimum number of word occurrences (2), minimum weight threshold (0.2), and sigma for additive smoothing control (0.1). The vector representation employed was that of TF-IDF.

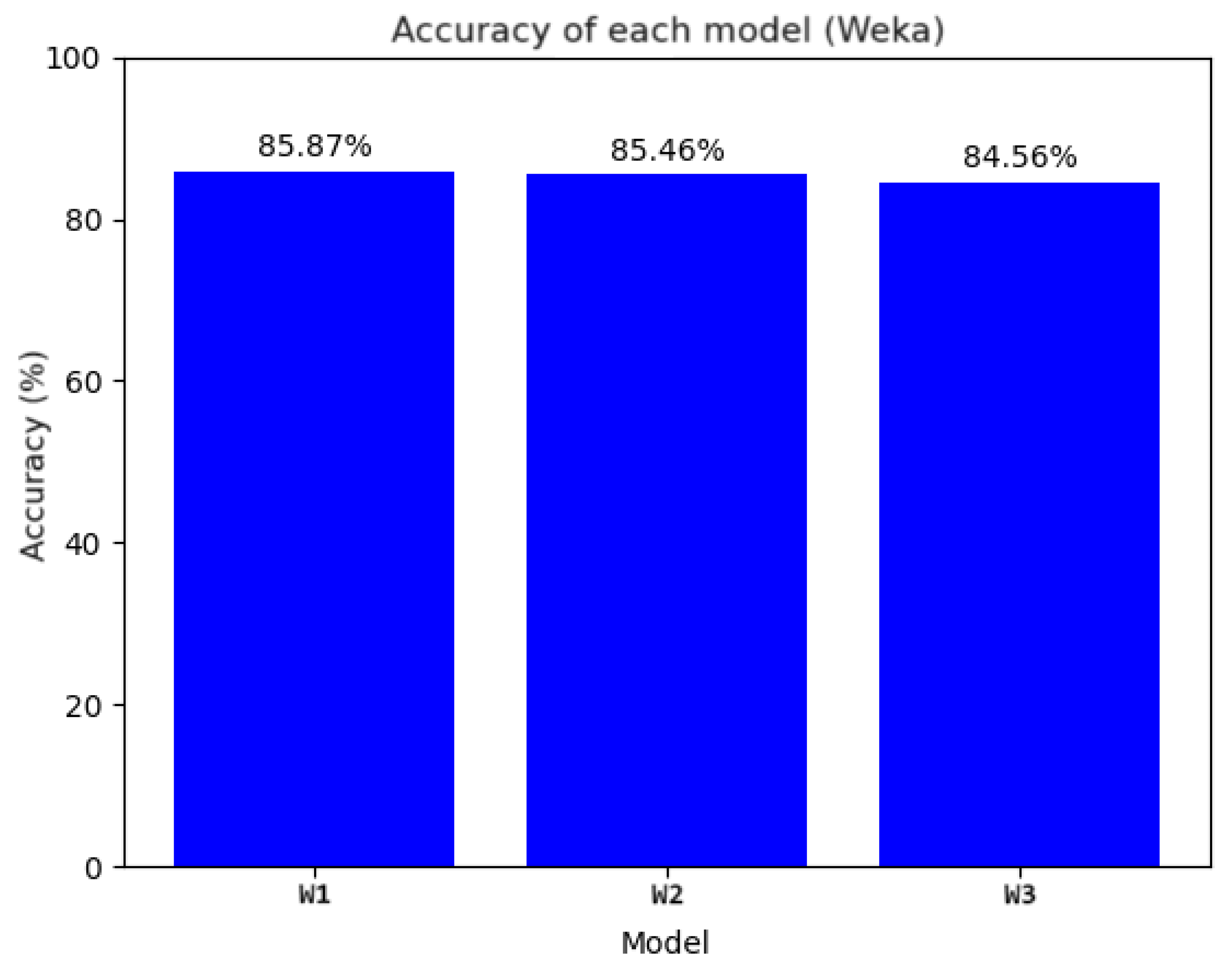

6.3. Weka

The experiments conducted utilizing the Weka library were designed to assess the efficacy of Weka’s integrated TF-IDF methodology in comparison to the configuration employed by Stanford CoreNLP. Additionally, the impact of word frequency normalization on probability vector weights was investigated, as well as the potential of advanced word selection techniques.

The experiments yielded three models (Figure 4). The initial model demonstrated an accuracy of 86% (Model W1), which was unexpected, given that the algorithm and feature extraction methods remained consistent with those employed in the model of the preceding library. The second iteration, which imposed a constraint on the minimum frequency of word occurrences, exhibited a slight decline in accuracy (Model W2). A comparison of the results with tests conducted using OpenNLP revealed that changing the threshold did not result in a deterioration of the classifier’s quality (Model W3). However, normalizing the representation of word frequencies had a significant impact on the F1 measure, demonstrating the importance of maintaining an even distribution of word statistics.

Notwithstanding the encouraging outcomes, the primary obstacle encountered with Weka was its considerable demand for computational resources. On average, Weka required three times more memory than Apache OpenNLP and exhibited notable time discrepancies when implementing the information gain technique or modifying algorithms. It was reported by other researchers that the learning process would take several weeks to complete [57]. In light of these observations, it was concluded that certain algorithms implemented in Weka may not be suitable for production applications, particularly when dealing with large datasets. Moreover, an investigation demonstrated that the Evaluation class, particularly the deep copy executed prior to model construction in the crossValidateModel method, was a significant contributor to the observed high memory consumption.

The optimal Weka model exhibited an accuracy rate of nearly 86% (Model W1). The input string representation included unigrams and bigrams. No feature selection was conducted, and the vector representation technique employed was TF-IDF.

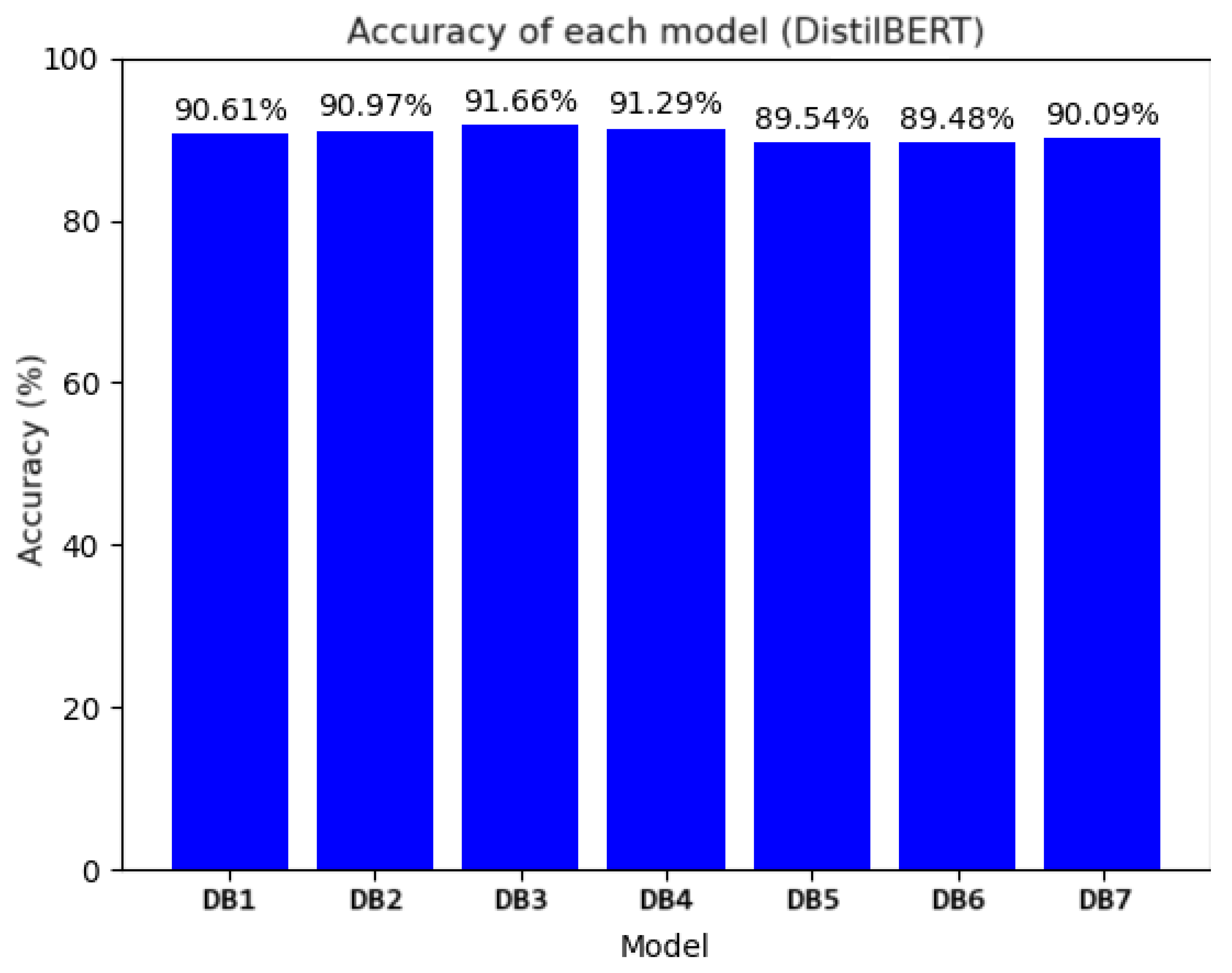

6.4. DistilBERT

The experiments with DistilBERT produced seven models through three main stages of testing (Figure 5). These stages entailed an investigation into the significance of pre-processing training data, a modification of the mechanism for splitting text sequences into words, and an adjustment of various hyperparameters (Table 1).

It is noteworthy that the previously tested conventional text classification methods included the pre-cleaning of the training data in order to remove the noise introduced via HTML tags, hyperlinks, and other elements. Furthermore, more advanced techniques such as stemming and lemmatization were employed in order to reduce words to their basic form. However, transformer-based deep learning models, such as DistilBERT, utilize sophisticated text segmentation techniques that safeguard linguistic meanings and obviate the necessity for such pre-processing [58]. The WordPiece technique, employed in the BERT family of models, involves the iterative merging of repeated word fragments to create a dictionary. This process allows for the representation of subwords and minimizes information loss. Moreover, Transformer-type models, such as BERT, employ contextual information in sentences through the analysis of punctuation, stop words, and the utilization of multi-headed self-attention mechanisms [59]. Multiple sources have indicated that the removal of this contextual data from the text may not only be unnecessary but may potentially result in a reduction in the quality of the predictions generated via the model [60].

A study was conducted to compare two approaches to text processing for machine learning. The initial approach involved comprehensive cleaning procedures, whereas the subsequent approach only subjected texts to minimal processing, solely encompassing the removal of diacritical marks and URLs. The results were somewhat unexpected, as they indicated that the absence of advanced text cleaning did not significantly enhance the model’s comprehension of context or lead to an increase in classification performance. Model DB1, which underwent a comprehensive text cleaning process encompassing lemmatization and the removal of punctuation words, demonstrated superior accuracy (91%) compared to Model DB6 (90%), which underwent minimal treatment. In light of these findings, it was decided that further experiments should continue to utilize lemmatization and the removal of punctuation words.

Hugging Face provides two tokenization mechanisms, namely slow and fast. The latter is implemented in Rust in order to facilitate the segmentation of text for large datasets. Additionally, the fast version offers advanced mapping techniques [61]. A series of tests were conducted on nearly 400,000 newspaper articles, with the DistilBertTokenizerFast reducing processing time from 50 min to less than 5 min compared to the DistilBertTokenizer. Consequently, the 10-fold reduction in processing time enables more efficient testing of learning parameter configurations.

The experiment also examined the impact of truncating excess text when the input exceeds the limit of 512 tokens. A comparison of the two truncation settings revealed that the left option yielded superior results, with an accuracy rate of 92% (Model DB3) compared to 91% with the default setting of right (Model DB2). This phenomenon can be attributed to the structural characteristics of newspaper articles. The initial passages of these articles commonly contain introductory or generic information, such as background, context, or quotations. In contrast, the subsequent sections of the article typically comprise domain-specific vocabulary, factual details, and key entities that are pertinent for categorization. DistilBERT has been shown to allocate greater representational capacity to tokens retained in the input sequence. Consequently, preserving the article’s ending provides richer and more discriminative features for the model to exploit. The correlation analysis between text position and classification outcomes confirms this tendency: tokens located in the final sections of articles exhibit a stronger association with target categories than those from the opening paragraphs, which explains the performance improvement observed with left truncation.

A study was conducted to examine the impact of the learning rate on the training of a BERT model, with a particular focus on the values of 2 ×

(Model DB6), 3 ×

(Model DB5), and 5 ×

(Model DB7). The results demonstrated that the prevailing assumption that higher learning rates lead to faster training times was not substantiated [62]. The discrepancy in training times between the values was insignificant. However, the study did identify a correlation between higher learning rates and lower loss values, indicating enhanced performance. A comparison of the 2 ×

and 5 ×

learning rates revealed that the latter exhibited superior accuracy, contrary to the prevailing assumption that lower learning rates lead to higher accuracy [63]. Furthermore, increasing the number of epochs for the 2 ×

learning rate model did not enhance performance, as it resulted in overfitting [64]. These findings underscore the significance of the learning rate in training BERT models and highlight the necessity of careful monitoring to prevent overfitting when utilizing higher learning rates.

A further study was conducted with the objective of investigating the impact of varying the number of epochs on the efficacy of a model. The study employed optimal parameters, including a learning rate of 5 ×

and a truncation_side setting of left. The optimal range for the number of epochs, as recommended by the BERT authors, is 2 to 4 [65]. The use of three epochs yielded positive results, with an accuracy of 92% (Model DB3), while the use of four epochs resulted in a lower accuracy of 91% (Model DB4). This discrepancy was caused by the load_best_model_at_end mechanism, which finalized the model after three epochs and did not allow the final learning stage to be reached.
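The interaction between early stopping and keeping the best checkpoint can be illustrated with a small, library-independent sketch. It is not the Hugging Face implementation; the function name, the patience value, and the validation losses are hypothetical and chosen only to mirror a run in which the third epoch is the best one.

```python
def early_stopping(eval_losses, patience=1):
    """Return (best_epoch, last_epoch_run) for a sequence of per-epoch validation losses."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(eval_losses, start=1):
        if loss < best_loss:
            # A new best checkpoint: remember it and reset the patience counter.
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, len(eval_losses)

# Hypothetical validation losses for a four-epoch run (not measured values).
best_epoch, last_epoch = early_stopping([0.40, 0.31, 0.28, 0.30], patience=1)
```

In this toy run, training proceeds to the fourth epoch, but the checkpoint that is kept comes from the third, so scheduling more epochs changes the training schedule without necessarily changing which weights are finally used.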

Furthermore, the modification to the method of eliminating the redundant text sequence influenced the outcomes of epochs 1–3 in comparison to Model DB2. An increase in the number of epochs can reduce the learning error, but it also increases the risk of overfitting and reduces the model’s ability to generalize to new data [66]. Consequently, the default setting of three epochs was identified as the optimal choice, and there was no justification for further increases in the number of epochs. The study also demonstrated that an increase in the number of epochs resulted in a prolonged learning process and testing time [67].

A series of experiments was conducted to investigate the impact of modifying the batch size on training performance. The initial batch size parameter was set to 8, resulting in an accuracy rate of 90% for Model DB7. Increasing the batch size to 32 accelerated the learning process, reducing the processing time per epoch from 11 h to 6 h. To further optimize the learning process, the number of gradient accumulation steps was increased to two. This resulted in enhanced learning outcomes, although it also led to an increase in GPU memory usage.
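The principle behind gradient accumulation can be shown on a one-parameter linear model: averaging the gradients of equally sized micro-batches reproduces the gradient of the combined batch, so a batch size of 32 with two accumulation steps behaves like an effective batch of 64 at the time of the weight update. The model, data, and function names below are hypothetical and unrelated to the DistilBERT training code.

```python
def gradient(w, batch):
    """Gradient of the mean squared error of y ~ w * x with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)

def accumulated_gradient(w, micro_batches):
    """Accumulate scaled micro-batch gradients before a single weight update."""
    steps = len(micro_batches)
    total = 0.0
    for batch in micro_batches:
        # Each micro-batch contributes 1/steps of its mean gradient.
        total += gradient(w, batch) / steps
    return total

# Hypothetical toy data: (x, y) pairs roughly following y = 2x.
data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
full_batch = gradient(0.5, data)
accumulated = accumulated_gradient(0.5, [data[:2], data[2:]])
```

The two values agree up to floating-point rounding, which holds here because the micro-batches have equal size.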

There was a divergence of opinion regarding the impact of batch size on model quality. Some argued that a larger batch size could increase convergence and inference performance [68], while others suggested that it could lead to poorer performance on test sets [69]. However, the tests demonstrated no issues with generalizability, and the validation loss decreased steadily over the three epochs. The accuracy of the DB3 model remained consistent and even improved by 2% compared to the DB7 model, as indicated by the F1 measure. These results contradict common assumptions and emphasize the complexity of the issue and the importance of considering the specific problem and dataset at hand [70].

6.5. Real-World Dataset

In order to assess the efficiency of various models developed for text classification, a final experiment was conducted utilizing a distinct corpus of news articles. The corpus, designated All the News 2.0, comprised metadata from 2.7 million news publications by 27 distinct US publishers, collected between 2016 and 2020. For each year, the number of articles was approximately 600,000, with the exception of the final year, which exhibited a figure exceeding 200,000 articles [3]. The distribution by press agency is presented in Table 2.

Each article was characterized by a number of data points, including the date of publication, the author, the title, the text of the article, the URL, and the section or publication name. The articles were assigned to nine categories and underwent a manual review, whereby 600 articles were selected from each category for processing, according to identical techniques to those applied to the training dataset, prior to classification via each of the four models. The four models were OpenNLP, CoreNLP, Weka, and DistilBERT.

The experiment yielded F1 measure values of 87% for DistilBERT, 83% for CoreNLP, 81% for Weka, and 78% for OpenNLP. These values were lower than those obtained during cross-validation, indicating the importance of testing on articles from different sources rather than just one. The discrepancies in text structure, style, and other characteristics between the various sources resulted in a notable degree of variation in the predictions. OpenNLP, which also had the weakest validation metrics at the learning stage, performed worst. The lowest accuracy was observed in the detection of the Society & Religion category, suggesting a lack of generalization of the models in this area. Somewhat unexpectedly, the Lifestyle category also exhibited low results for the CoreNLP model, with an accuracy of only 67%. Conversely, the World & Politics category exhibited one of the highest prediction rates, possibly due to its substantial presence in the original training dataset.

The models exhibited enhanced performance in identifying specific topics. The DistilBERT model demonstrated remarkable efficacy in identifying articles pertaining to sports, health, entertainment, and politics. However, it encountered challenges in identifying lifestyle publications, where the CoreNLP model demonstrated satisfactory performance. The OpenNLP and Weka models demonstrated difficulty in identifying publications related to criminal and legal topics.

The observed decrease in accuracy can be attributed to several factors:

Domain shift—differences in writing style, vocabulary, and article structure across publishers.

Temporal differences—the training dataset covered 2012–2022, whereas the external dataset represented a partially overlapping but distinct time frame with potential topical shifts.

Label ambiguity—variations in editorial guidelines and category definitions between sources may have led to discrepancies in how certain topics were classified.

7. Discussion

OpenNLP library experiments demonstrated that the number of n-grams does not always determine model performance. Furthermore, the inclusion of certain word extraction techniques can improve accuracy. Additionally, the optimal configuration may vary, depending on the problem, and attribute filtering should be carefully considered to avoid negative impacts on model outcomes.

On the other hand, the experimentation with the CoreNLP library demonstrated the impact of various configurations on model accuracy. The inclusion of specific features, such as bigrams and IDF statistics, was found to be beneficial. The experiments also emphasized the importance of selecting appropriate thresholds and smoothing values to optimize model performance. Overall, the final model developed using the CoreNLP library achieved a 4% higher accuracy (Model S17) compared to the model developed using the Apache OpenNLP library (Model A6).

The experiments with Weka investigated the influence of word frequency normalization and advanced word selection techniques. The outcomes demonstrated encouraging accuracy, yet they also revealed the difficulties associated with the high computational resource usage and time differences that arise when certain algorithms and techniques are employed. Further investigation into memory consumption revealed that the cross-validation execution was inefficient for large datasets. These findings indicate that, while Weka has certain strengths, it may not be the optimal choice for production applications that require the handling of large amounts of data.

The findings emphasize the significance of utilizing a diverse range of datasets for training and testing in text classification tasks. The performance of the models varied, depending on the topic and source of the articles, indicating the necessity for further improvements in the techniques employed for generalization and model augmentation. In general, DistilBERT demonstrated encouraging outcomes and proved to be effective in identifying specific topics. In contrast, CoreNLP, Weka, and OpenNLP exhibited varying degrees of accuracy across different categories.

A qualitative inspection of misclassified samples suggests that articles with overlapping themes (lifestyle and entertainment, politics and society) were the primary source of confusion. Future work should include fine-tuning on a small subset of the target dataset, or the application of domain adaptation techniques, with a view to enhancing generalization.
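The inspection of misclassified samples described above can be supported by a simple count of which category pairs are confused most often. The following is a minimal sketch, not the procedure used in the study; the function name `top_confusions` and the sample labels are hypothetical.

```python
from collections import Counter

def top_confusions(y_true, y_pred, k=3):
    """Return the k most frequent (true label, predicted label) error pairs."""
    pairs = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    return pairs.most_common(k)

# Hypothetical labels, for illustration only.
y_true = ["lifestyle", "lifestyle", "politics", "sport", "lifestyle"]
y_pred = ["entertainment", "entertainment", "society", "sport", "lifestyle"]

for (true_label, predicted_label), count in top_confusions(y_true, y_pred):
    print(f"{true_label} -> {predicted_label}: {count}")
```

Correct predictions are excluded, so the output lists only the error pairs, ordered by frequency.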

8. Observations

Experimentation and research have demonstrated that traditional machine learning models implemented for Java Virtual Machine (JVM)-based languages suffer from elevated computational cost and memory usage. This impedes the learning process, particularly for large datasets, as it results in errors and protracted training times. OpenNLP utilizes the least memory, yet has the longest learning time. In contrast, Weka consumes significantly more resources but has a shorter learning time. These limitations impede the use of more advanced techniques, such as maximum entropy (logistic regression) models or decision trees, in Java-based libraries.

Consequently, there is a tendency to rely on computationally less intensive methods such as naive Bayes. An alternative approach would be to leverage Python’s machine learning tools, which are more optimized and offer greater flexibility, particularly for large datasets. This indicates that Java-based libraries may not always be the optimal choice and that exploring Python’s offerings could potentially yield superior results.

The varying capabilities of the different feature extraction methods provided by the tools were analyzed. The OpenNLP library was found to have a limited range of features, with the ability to create a bag of words based on unigrams and bigrams being the only option available. In contrast, CoreNLP offered a diverse range of techniques for attribute generation, encompassing a dozen distinct methods. Weka offered built-in support for TF-IDF vector representation, while CoreNLP provided basic support in this area, and OpenNLP lacked this capability entirely. The availability of feature extraction methods was a significant factor in the decision-making process when selecting a tool for text data analysis.
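To make the two representations discussed above concrete, the following minimal sketch builds a bag of unigrams and bigrams and then weights it with TF-IDF. It is an illustration in plain Python, not the implementation used by OpenNLP, CoreNLP, or Weka; the function names and sample documents are hypothetical, and the unsmoothed formula idf = ln(N / df) is an assumption (libraries typically add smoothing and normalization).

```python
import math
from collections import Counter

def ngram_counts(tokens, max_n=2):
    """Count all n-grams of length 1..max_n in a list of tokens."""
    counts = Counter()
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            counts[" ".join(tokens[i:i + n])] += 1
    return counts

def tfidf(docs, max_n=2):
    """Return one {ngram: weight} dict per document.

    Assumes raw term frequency and idf = ln(N / df), without smoothing.
    """
    bags = [ngram_counts(doc.lower().split(), max_n) for doc in docs]
    df = Counter()
    for bag in bags:
        df.update(bag.keys())  # document frequency: one count per document
    n_docs = len(docs)
    return [
        {term: tf * math.log(n_docs / df[term]) for term, tf in bag.items()}
        for bag in bags
    ]

# Hypothetical documents, for illustration only.
docs = ["stocks rise on earnings", "stocks fall on rate fears", "team wins final"]
weights = tfidf(docs)
```

Terms that occur in many documents (here "stocks" and "on") receive lower weights than terms specific to one document, which is the effect the TF-IDF representation is meant to achieve.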

In terms of text processing, OpenNLP had the fewest built-in solutions, while CoreNLP offered advanced capabilities such as word splitting configurations and named entity recognition (NER) options. Weka supported stop word removal and stemming, but lacked lemmatization, which was available in the other tools. OpenNLP was suitable only for simpler problems that did not require advanced word extraction or attribute filtering techniques.

All the solutions encountered difficulties in handling contractions, which necessitated custom handling analogous to the solutions available in Python. Despite the use of analogous methodologies, the behavior and quality of the resulting models differed among the libraries, suggesting that they apply differing internal strategies that may not be fully documented.
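One common way to handle contractions is a lookup table applied before tokenization. The sketch below illustrates the idea under stated assumptions: the table `CONTRACTIONS` is a hypothetical, deliberately tiny sample, the output is lowercased for matched words, and ambiguous forms (for example "it's" as "it has") are not resolved. It is not the approach used in the study.

```python
import re

# Hypothetical, intentionally small lookup table; a real one would be larger.
CONTRACTIONS = {
    "can't": "cannot",
    "won't": "will not",
    "don't": "do not",
    "it's": "it is",
    "i'm": "i am",
}

_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(key) for key in CONTRACTIONS) + r")\b",
    re.IGNORECASE,
)

def expand_contractions(text):
    """Replace known contractions with their expanded (lowercase) forms."""
    text = text.replace("\u2019", "'")  # normalize typographic apostrophes
    return _PATTERN.sub(lambda m: CONTRACTIONS[m.group(0).lower()], text)

print(expand_contractions("It's clear they won't and can't agree"))
```

Normalizing the typographic apostrophe first matters for news text, where both apostrophe forms occur and would otherwise require duplicate table entries.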

A notable improvement was observed when a deep learning model based on transformer neural networks was employed. The learning process could be executed on a GPU, which resolved the memory management issues encountered with the Java libraries. The implementation in Python with the Hugging Face library was also more concise and efficient than the Java solution. Although five-fold cross-validation of the deep learning model required more than one day of processing time, the transformer-based approach markedly increased classifier accuracy.

9. Conclusions

In conclusion, DistilBERT demonstrated superior overall prediction accuracy compared to the other models. Transformer-based models typically outperform traditional statistical algorithms while relying less on custom implementation, because they build on pre-trained models with configurable base settings and adjustable hyperparameters. In this study, they also offered the best performance-to-training-time ratio and required significantly fewer resources than the traditional statistical methods. It is, however, important to note that support for transformer models in the Java ecosystem is somewhat limited, which is an important consideration for server-side or production GUI applications developed in this language. In contrast, machine learning libraries for traditional algorithms, such as Apache OpenNLP, Stanford CoreNLP, and Weka, are well suited to the Java environment and may serve as effective alternatives. Nonetheless, a rigorous evaluation of performance and quality is essential, as the findings indicate that traditional models can occasionally produce predictions slightly faster than deep learning models.

The study employed a multifaceted dataset, characterized by a diverse range of themes, a representative sample of authors, linguistic variability, and a temporal scope spanning ten years. The extensive nature of the dataset permitted the derivation of insights applicable to a broad spectrum of challenges in artificial intelligence. To ensure the robustness and generalizability of the findings, the model’s performance was evaluated on a separate, independent dataset. This validation provided an objective assessment and reinforced the credibility of the research outcomes by demonstrating their applicability beyond the initial dataset.

The study identified significant shortcomings of conventional machine learning algorithms, including high computational costs, restricted feature extraction capabilities, and the insufficient handling of text-specific challenges such as contractions and lemmatization. In contrast, transformer models implemented in Python, such as DistilBERT, exhibit superior performance due to their utilization of pre-trained architectures and GPU acceleration. These findings underscore the pivotal role of programming ecosystems in influencing the performance and usability of NLP tools.

From a pragmatic perspective, the findings of this study suggest that lightweight transformer models, such as DistilBERT, are particularly well-suited for organizations that require efficient deployment under limited computational resources. This is exemplified in newsroom workflows or real-time media monitoring systems. In instances where enhanced accuracy is prioritized over efficiency, the deployment of larger models is advocated, despite the attendant increase in inference costs. Furthermore, the findings on truncation strategies suggest that practitioners should carefully evaluate text preprocessing choices, depending on the document structure. For domains in which crucial information is located towards the end of the text (e.g., news or scientific articles), left truncation may improve classification performance. Conversely, for short-form content such as social media posts, default right truncation should be sufficient.
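The difference between the two truncation strategies can be sketched on a plain list of token IDs. This is a simplified illustration that ignores special tokens, and the function name `truncate` is hypothetical; in the Hugging Face Transformers library the corresponding behavior is, to our knowledge, controlled by the tokenizer's `truncation_side` setting.

```python
def truncate(token_ids, max_length, side="right"):
    """Shorten a token sequence to at most max_length items.

    side="right" keeps the beginning of the text (drops the end);
    side="left" keeps the end of the text (drops the beginning).
    """
    if max_length <= 0:
        raise ValueError("max_length must be positive")
    if side not in ("left", "right"):
        raise ValueError("side must be 'left' or 'right'")
    if len(token_ids) <= max_length:
        return list(token_ids)
    if side == "right":
        return list(token_ids[:max_length])
    return list(token_ids[-max_length:])

# Hypothetical token IDs standing in for a long article.
article = list(range(10))
print(truncate(article, 4, side="right"))  # keeps the opening tokens
print(truncate(article, 4, side="left"))   # keeps the closing tokens
```

Sequences that already fit within `max_length` are returned unchanged, so the choice of side only affects documents longer than the model's input limit.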

It is important to note that the discrepancy in performance between Java-based libraries and transformer models in Python can be attributed to several factors:

Optimization of libraries—Python ecosystems (e.g., Hugging Face, PyTorch) are optimized for GPU acceleration, whereas Java libraries often rely more heavily on CPU-based computation.

Pre-trained model availability—Python provides access to a wider range of pre-trained transformer models, enabling efficient transfer learning.

Tokenization and feature extraction—Java libraries implement simpler tokenization and vectorization pipelines, which can limit their ability to capture contextual information.

These limitations do not preclude Java libraries from achieving competitive results in environments with limited resources, as demonstrated by their performance in this study. Researchers selecting NLP frameworks should nevertheless consider carefully whether the programming environment suits large-scale or resource-intensive tasks. Python-based solutions are recommended for their adaptability and efficiency, particularly in high-accuracy applications. Conversely, Java-based tools may be advantageous where resources are limited, for example in server-side or GUI applications. A hybrid approach that leverages the strengths of both ecosystems has the potential to further optimize NLP workflows. For instance, this could involve prototyping and fine-tuning models in Python while deploying them in production environments using Java.

10. Study Contributions

The present study makes several contributions that extend beyond the replication of existing approaches. Firstly, it systematically evaluates Java-based NLP libraries such as OpenNLP, CoreNLP, and Weka. These are rarely considered in contemporary research, yet remain relevant in enterprise environments. Secondly, the work introduces methodological extensions to traditional models, including alternative feature extraction strategies and parameter configurations. These provide new insights into the effectiveness of conventional algorithms. Thirdly, the study provides an original analysis of truncation strategies in transformer models, demonstrating that left truncation yields superior results for news classification compared to the default right-side approach. Moreover, by integrating accuracy metrics with assessments of computational cost and ease of deployment, the study addresses the prevailing gap between experimental performance and practical applicability. Finally, the validation of the models on an external corpus supports the robustness and generalizability of the findings. Collectively, these contributions underscore the novelty of the study and its significance for researchers and practitioners in the domain of natural language processing.

11. Avenues for Future Work

In the context of the project’s further development, a significant step forward would be to support additional languages in the document classification process. This would likely require a dedicated model training process on a dataset of articles in the new language.

In the present study, statistical significance testing of differences between models was not performed. This decision was driven by the fact that the differences in performance were sufficiently large to be interpreted as practically meaningful, especially considering the size of the dataset and the consistency of results across cross-validation folds. The primary aim of the study was to provide a comparative evaluation of different NLP libraries and techniques, rather than to establish fine-grained statistical differences between closely performing models. Future work could incorporate McNemar’s test or paired t-tests on prediction outcomes to confirm whether the observed differences reach formal significance thresholds.
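As an illustration of the kind of test suggested above, the following sketch computes an exact (binomial) variant of McNemar's test for two classifiers evaluated on the same samples. It was not run in this study; the function name `mcnemar_exact` and the sample predictions are hypothetical, and the two-sided p-value is taken as twice the lower binomial tail, capped at 1.

```python
from math import comb

def mcnemar_exact(y_true, pred_a, pred_b):
    """Exact McNemar test on paired predictions.

    Returns (b, c, p_value), where b counts samples that only model A
    classified correctly and c counts samples that only model B did.
    """
    b = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a == t and m != t)
    c = sum(1 for t, a, m in zip(y_true, pred_a, pred_b) if a != t and m == t)
    n = b + c
    if n == 0:
        return b, c, 1.0  # the models never disagree on correctness
    k = min(b, c)
    # Two-sided p-value under the null hypothesis X ~ Binomial(n, 0.5).
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return b, c, min(1.0, 2 * tail)

# Hypothetical predictions, for illustration only.
y_true = [1] * 10
pred_a = [1] * 10            # model A: all correct
pred_b = [0] * 8 + [1] * 2   # model B: eight errors
print(mcnemar_exact(y_true, pred_a, pred_b))
```

Only the discordant samples (those on which exactly one model is correct) enter the statistic, which is why the test is suited to comparing two classifiers on a shared test set.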

The study applied several augmentation strategies (e.g., synonym replacement, back-translation, contextual embedding) but did not perform a systematic comparison of their individual contributions. The decision was based on computational constraints and the fact that the augmentation pipeline was primarily designed to mitigate class imbalance, rather than to benchmark augmentation strategies. Nonetheless, a qualitative inspection indicated that contextual embedding and back-translation preserved semantic coherence more effectively than simple synonym-based methods. Future studies could systematically compare augmentation techniques with respect to both accuracy and computational efficiency to determine optimal trade-offs.
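The simplest of the augmentation strategies mentioned above, synonym replacement, can be sketched as follows. This is an illustration only and not the pipeline used in the study: the synonym table `SYNONYMS` is a hypothetical stand-in for a real lexical resource, and the function name and `rate` parameter are assumptions.

```python
import random

# Hypothetical synonym table; a real pipeline would use a lexical resource.
SYNONYMS = {
    "big": ["large", "huge"],
    "said": ["stated", "declared"],
    "rise": ["increase", "climb"],
}

def synonym_replace(tokens, rate=0.3, rng=None):
    """Replace each token that has known synonyms with probability `rate`."""
    rng = rng or random.Random()
    augmented = []
    for token in tokens:
        options = SYNONYMS.get(token.lower())
        if options and rng.random() < rate:
            augmented.append(rng.choice(options))
        else:
            augmented.append(token)
    return augmented

sentence = "the big bank said rates will rise".split()
print(" ".join(synonym_replace(sentence, rate=0.5, rng=random.Random(0))))
```

Because the replacement ignores context, it can pick a synonym that fits poorly in the sentence, which is consistent with the observation above that context-aware methods preserved semantic coherence more effectively.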

Furthermore, adversarial testing could be employed to identify potential security threats, thereby enhancing the system’s overall robustness. The simulation of attacks and the subsequent assessment of their impact on the model’s performance, in conjunction with the implementation of preventive measures, could prove an effective means of enhancing the algorithm’s resilience to threats.

The final evaluation step would be the integration of the developed model into a production application with a graphical user interface (GUI), combined with the regular collection of user feedback. This would enable the identification of areas requiring further development. Additionally, documents entered by users could enrich the training collection, contributing to the further improvement of the model.