1. Introduction

Sculpture embodies the artistic ideas of its creators and records human development across different historical periods, regions, and cultural phenomena. As a collective cultural heritage of humanity, sculpture connects the past and the future and plays an indispensable role in human civilization [1,2,3]. Historical sculptures provide archaeologists, historians, and other cultural researchers with material evidence for studies of religion, the humanities, customs, political entities, and economies. Although such material remains cannot convey information as directly as written records, they allow historians to establish a historical framework, especially for eras or events that are poorly documented in writing [4,5]. The artist’s use of line, shape, and texture gives each sculpture intrinsic artistic value; the work reflects the artist’s aspirations, confidence in life, and love of the world, and passes the creator’s spiritual expression and pursuit of life on to future generations [6,7,8]. ‘Using bronze as a mirror, one can straighten one’s clothes; using history as a mirror, one can know the rise and change of dynasties; using people as a mirror, one can reflect on successes and failures’. Exhibiting works of art in museums, heritage galleries, and other venues allows people to understand history and to see the cultural achievements of their country and nation in different periods. At the individual level, visitors can draw on the wisdom of their predecessors to guide their own path in life; at the national level, such exhibitions can strengthen national self-confidence and thereby improve national identity and cohesion [9,10]. Therefore, the protection and repair of damaged sculptures is of great significance to historians and cultural workers, the general public, and the nation as a whole.

Many sculptures are damaged by material aging, unfavorable storage conditions, dust, relative humidity, and oxidation, which cause color distortion, blurring, peeling, blistering, cracking of the surface layer, and paint loss [11,12]. Traditional physical restoration methods are risky and irreversible. Digitizing sculptures and applying AI technology enables the visualization and adjustment of restoration results. These digital outcomes can guide physical restoration efforts while preserving the artwork’s original style and semantics [13,14]. It is therefore necessary to develop a systematic, high-precision digital restoration technique that recreates a sculpture’s original appearance while accounting for multi-dimensional information such as artistic style, semantics, and material characteristics, thereby providing a solid theoretical foundation and technical support for sculpture restoration. Traditional digital restoration methods fill damaged areas by synthesizing texture from neighboring pixels, which is mainly suitable for small patches where the texture varies little. However, these methods struggle to repair larger damaged areas with complex structures and have difficulty capturing the artist’s style and the semantics intended in the artwork [15,16,17]. Deep learning and transfer learning algorithms have been applied to digital image restoration, using the feature extraction and style transfer capabilities of Convolutional Neural Networks (CNNs) to detect cracks and fill in missing parts of the image. Although CNN methods have advantages in restoring highly structured images, artworks such as sculptures usually have unique textures and complex structures, and their artistic style and semantics place higher demands on the restoration process, which limits the application of CNN methods to sculpture restoration [18,19,20]. Generative Adversarial Network (GAN) algorithms are trained adversarially: the generator and the discriminator compete with each other, which improves the generator’s capability, and this training mode is suitable for the digital restoration of structurally complex images. To make GANs more effective for the digital restoration of damaged sculpture images, Zhao et al. proposed a high-resolution damaged-image restoration method based on a convolutional autoencoder Generative Adversarial Network (DCGAN) [11]. Wang et al. suggested using recurrent GANs to improve the realism of restored sculpture images [21]. Zou et al. used the pix2pix GAN algorithm for sculpture restoration, which improved the clarity and color of the restored images [22]. Kumar et al. proposed a generative adversarial method with a five-layer encoder network that effectively repairs point-like damage on sculptures [23]. Cao et al. proposed a GAN algorithm based on a pre-trained residual network, which yields better sculpture restoration results than the traditional GAN algorithm [24]. However, the architectures of existing GAN-based sculpture restoration methods consist only of convolutional and residual layers, which learn local relationships between neighboring pixels but not global relationships between image features; consequently, existing techniques still suffer from local blurring and texture loss in sculpture restoration.

The DCGAN (Deep Convolutional Generative Adversarial Network) generator uses transposed convolutions to progressively upsample low-dimensional noise into color images with high-dimensional features. This structure can effectively capture the color distribution and local texture of sculpture images, fully restoring the sculpture’s color and surface texture details [25,26]. In addition, DCGAN uses batch normalization layers to stabilize training, mitigate vanishing gradients, and accelerate convergence, enabling clear image restoration within a limited number of training epochs. Its nonlinear activation functions, such as ReLU and LeakyReLU, enhance the model’s nonlinear expressive ability, which can further improve the structural accuracy of the restored image [27,28]. However, the traditional DCGAN architecture is dominated by convolutional layers, which learn only local image features and struggle to establish global semantic associations across regions [29,30,31]. The complex textures and color schemes of sculptures and the variety of semantic expressions of cultural symbols require the restoration model to perceive the overall composition, stylistic consistency, and spatial structure globally. Because DCGAN training is unsupervised, the lack of high-quality paired datasets can lead to color deviation, texture repetition, and even structural distortion in the generated images, affecting the realism and artistry of the restored images [32,33,34]. In view of the above analysis, improvements in the following aspects are needed to enhance the practical value of DCGAN in sculpture image restoration: (1) introducing an attention mechanism to strengthen the model’s focus on key areas of the sculpture image, improving local restoration accuracy and the stylistic consistency of texture and structure; (2) integrating a Transformer structure or global feature modeling module to capture cross-region global semantic information and better understand complex artistic styles; (3) constructing a multi-style, multi-material data augmentation strategy to improve the model’s style transfer ability and adaptability; and (4) designing a finer multi-scale loss function that accounts for pixel-level error, differences in perceptual features, and artistic style consistency, ensuring that the restoration results are faithful, accurate, and consistent.

To address the limitations of existing restoration methods, this paper proposes a Generative Adversarial Network (GAN) for the digital restoration of damaged sculpture images, incorporating dual (spatial and channel) attention modules and a channel converter. To achieve high-fidelity image restoration, the proposed network integrates spatial and channel attention layers into the encoder part of the generator, enhancing the understanding of global relationships among image features across both spatial and channel dimensions, thereby enabling fine-grained restoration of sculpture images. To verify the effectiveness and superiority of the method, this study focuses on two core research questions: (RQ1) Can integrating dual attention mechanisms and a channel converter into the DCGAN framework improve the accuracy and stylistic consistency of sculpture image restoration? (RQ2) Does the proposed improved DCGAN outperform traditional DCGAN and other mainstream restoration methods in terms of SSIM, PSNR, and MSE metrics across different types of sculpture damage? For this purpose, the proposed method is compared with multiple mainstream algorithms on sculpture samples of various styles and damage types and evaluated comprehensively using both qualitative visual assessment and quantitative metrics. The results demonstrate that the proposed method achieves significant advantages in maintaining overall artistic style coherence, restoring fine texture details, and enhancing semantic consistency, highlighting its broad application prospects in digital heritage restoration and sculpture art conservation.
The proposed model integrates theories and methods from multiple disciplines, including deep learning, computer vision, and art restoration, and can be widely applied in intelligent restoration workflows such as virtual museums and cultural heritage databases, with strong potential to promote the practical implementation of AI technologies in heritage conservation.

3. Improved DCGAN Model Construction

Figure 2 illustrates the overall workflow of the proposed I-DCGAN model and its structural improvements over the traditional DCGAN framework. The traditional DCGAN generates local texture mainly by stacking convolutional layers but lacks the capability to model global semantic structure and maintain style consistency. In this study, the generator is enhanced with a dual-attention mechanism (comprising spatial attention and channel attention) and a channel conversion module, which enable perceptual modeling of the global context while improving local detail restoration through feature and style alignment. Specifically, the generator takes a damaged sculpture image $x$ (optionally with a mask) as input and processes it through an encoder (Conv blocks + BN + ReLU/LeakyReLU), dual-attention modules, a channel converter, and a decoder (transposed convolutions with skip connections) to produce the restored image $G(x)$. The output is optimized using reconstruction losses, including L1/SSIM, perceptual (VGG), and optional style/TV losses, with gradients back-propagated to update the generator parameters. The discriminator, implemented as a PatchGAN or multi-scale structure, distinguishes between real images $y$ and generated images $G(x)$ and computes an adversarial loss $\mathcal{L}_{\mathrm{adv}}$. The total generator loss $\mathcal{L}_G$ combines the adversarial, reconstruction, and perceptual losses, and both generator and discriminator parameters are updated via backpropagation. Together, these improvements to the generator, discriminator, and loss function enhance restoration performance. Pseudo-code for the core modules of the proposed I-DCGAN is presented in Appendix A.

Based on previous studies on GAN-based image restoration [11,21,22,23,24] and the theoretical advantages of dual attention mechanisms in enhancing both local and global feature learning [25,27,29], we formulate the following hypotheses: H1: The improved DCGAN (I-DCGAN) achieves significantly higher SSIM values than the traditional DCGAN in sculpture image restoration; H2: The improved DCGAN achieves significantly higher PSNR values than the traditional DCGAN in sculpture image restoration; H3: The improved DCGAN achieves significantly lower MSE values than the traditional DCGAN in sculpture image restoration; H4: The improved DCGAN consistently outperforms other mainstream restoration models (CA, EC, RN, LGNet, CTSDG) across different sculpture damage types in terms of SSIM, PSNR, and MSE.
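MSE and PSNR, used in H2 and H3, have standard definitions: MSE is the mean squared pixel difference, and PSNR = 10·log10(MAX² / MSE) in decibels. The minimal NumPy sketch below assumes 8-bit images (MAX = 255); the function names are illustrative, and for images scaled to [0, 1] `max_value` should be set to 1.

```python
import numpy as np


def mse(reference: np.ndarray, restored: np.ndarray) -> float:
    """Mean squared error over all pixels (and channels)."""
    diff = reference.astype(np.float64) - restored.astype(np.float64)
    return float(np.mean(diff ** 2))


def psnr(reference: np.ndarray, restored: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    err = mse(reference, restored)
    if err == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / err))


# Usage: every pixel of the restoration is off by 10 grey levels.
reference = np.full((4, 4), 100, dtype=np.uint8)
restored = np.full((4, 4), 110, dtype=np.uint8)
print(mse(reference, restored))             # 100.0
print(round(psnr(reference, restored), 2))  # 28.13
```

Casting to `float64` before subtracting avoids unsigned-integer wrap-around when the restored pixel is darker than the reference.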

Table 1 presents the structural comparison between the traditional DCGAN (T-DCGAN) and the improved DCGAN (I-DCGAN).

In summary, the proposed improvements to the DCGAN framework—including dual attention modules, channel conversion between encoder/decoder layers, a patch-based discriminator, and a multi-objective loss function—address the core limitations of traditional DCGAN models in sculpture restoration. These enhancements collectively improve the model’s ability to handle complex textures, preserve structural and stylistic integrity, and achieve more realistic restoration outcomes.

3.1. Generator Architecture Improvement

The generator is designed to progressively recover data features and create ‘fake’ sample images. Specifically, the generator first receives a 100-dimensional random noise vector and then upsamples it through successive transposed convolutions, obtaining feature maps at different scales. Each upsampling operation is followed by a batch normalization layer and a ReLU activation, except for the last layer, which uses the Tanh activation function. After seven upsampling operations, the input noise vector is transformed into a grey-scale image with a resolution of 256 × 256 × 1.
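The seven upsampling steps can be checked with the standard output-size formula for a transposed convolution, $H_{out} = (H_{in} - 1)s - 2p + k$ for stride $s$, padding $p$, and kernel size $k$. The sketch assumes a DCGAN-style schedule (kernel 4 throughout, one stride-1 layer, then stride-2 layers with padding 1); these values are illustrative and are not reported in this study.

```python
def conv_transpose_out(size: int, kernel: int, stride: int, padding: int) -> int:
    """Output height/width of a 2D transposed convolution (dilation 1, no output padding)."""
    return (size - 1) * stride - 2 * padding + kernel


# Hypothetical schedule (DCGAN-style, not taken from this study):
# step 1 projects the 1 x 1 noise map to 4 x 4 (kernel 4, stride 1, padding 0);
# steps 2-7 each double the resolution (kernel 4, stride 2, padding 1).
schedule = [(4, 1, 0)] + [(4, 2, 1)] * 6

size = 1  # the 100-dimensional noise vector viewed as a 100 x 1 x 1 tensor
sizes = []
for kernel, stride, padding in schedule:
    size = conv_transpose_out(size, kernel, stride, padding)
    sizes.append(size)

print(len(schedule), sizes)  # 7 [4, 8, 16, 32, 64, 128, 256]
```

Under these assumptions, the first layer turns the 1 × 1 noise map into a 4 × 4 map and the remaining six layers each double the resolution, which is one way seven upsampling operations can reach 256 × 256.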

In constructing the DCGAN image restoration model for sculpture color restoration, the generator adopts an encoder/decoder-based U-Net architecture. Because both the input and the output of this architecture are images, it is well suited to this image-to-image restoration task. In the encoder, the convolutional modules learn local dependencies between image features, while the dual attention module, consisting of spatial and channel attention layers, learns global spatial and inter-channel dependencies between image features, as shown in Figure 3. The decoder then generates high-quality restored images from both local and global image features through attention and convolutional layers. Furthermore, a channel converter inserted in the skip connections supports multi-scale feature fusion and narrows the semantic gap between the encoder and decoder layers, further improving the quality of the generated image.

The encoder of the generator contains three parts: the feature map module, the convolutional module, and the dual attention module. The feature map module maps the 3-channel input image to a 64-channel feature map, which serves as input to the convolutional layers. The convolutional part consists of four convolutional modules that downsample the image features and learn local dependencies between them, improving learning ability and efficiency. Each convolutional module is immediately followed by a dual attention module consisting of spatial and channel attention, which learns global spatial and channel dependencies between image features. The feature map module also maps the final feature map back to three channels to output the restored image. In addition, the channel converter added to the skip connections from the encoder to the decoder both supports multi-scale feature fusion between them and bridges their semantic gap. The convolutional encoder consists of three 3 × 3 convolutional modules with padding 1 and convolutional kernel sizes of 2 × 2, 3 × 3, and 2 × 2, in that order, followed by batch normalization, ReLU activation, dropout, and max pooling, which together perform feature encoding.

As shown in Figure 4, the dual attention module contains channel and spatial attention modules in the form of a feed-forward convolutional neural network, which exploits the global correlations and feature weights of the channels to enhance relevant features and suppress weaker ones. In the channel attention module, the input features undergo max pooling and average pooling and are then passed through a shared multilayer perceptron; a Sigmoid activation function is then applied to generate channel attention weights for the corresponding elements of the input features. The spatial attention module generates spatial features by max pooling and average pooling, uses 3 × 3 dilated convolutions to efficiently aggregate contextual information, and then applies a Sigmoid function to generate spatial attention features. Finally, the spatial and channel attention are combined with the input features to form a dual attention that enhances detailed feature information. In addition, a channel converter is used in the skip connections to ensure effective feature fusion from the encoder to the decoder module. The channel converter has two subcomponents: the inter-channel cross-fusion converter and the inter-channel cross-attention.
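To make the dual attention module concrete, the following NumPy sketch implements channel attention (a shared MLP over max- and average-pooled descriptors, then Sigmoid) and spatial attention (channel-wise max and average pooling, a 3 × 3 dilated convolution, then Sigmoid). It is a sketch under assumptions, not the authors' implementation: the MLP reduction ratio, the dilation rate of 2, the random weights, and the sequential channel-then-spatial ordering (as in CBAM) are not specified in the text.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def channel_attention(feat, w1, w2):
    """feat: (C, H, W). A shared two-layer MLP (w1: (C/r, C), w2: (C, C/r)) is applied to the
    average- and max-pooled channel descriptors; the sum passes through a sigmoid."""
    def mlp(v):
        return w2 @ np.maximum(w1 @ v, 0.0)  # ReLU in the hidden layer

    weights = sigmoid(mlp(feat.mean(axis=(1, 2))) + mlp(feat.max(axis=(1, 2))))
    return weights[:, None, None]  # (C, 1, 1), broadcast over H and W


def dilated_conv3x3(maps, kernel, dilation=2):
    """maps: (2, H, W), kernel: (2, 3, 3). Zero-padded 3 x 3 dilated convolution
    (cross-correlation, as in deep learning frameworks) producing one (H, W) map."""
    _, h, w = maps.shape
    padded = np.pad(maps, ((0, 0), (dilation, dilation), (dilation, dilation)))
    out = np.zeros((h, w))
    for i in range(3):
        for j in range(3):
            window = padded[:, i * dilation:i * dilation + h, j * dilation:j * dilation + w]
            out += np.einsum("c,chw->hw", kernel[:, i, j], window)
    return out


def spatial_attention(feat, kernel, dilation=2):
    """Max- and average-pool across channels, then aggregate context with a dilated 3 x 3 conv."""
    pooled = np.stack([feat.max(axis=0), feat.mean(axis=0)])  # (2, H, W)
    return sigmoid(dilated_conv3x3(pooled, kernel, dilation))[None]  # (1, H, W)


def dual_attention(feat, w1, w2, kernel):
    """Channel attention, then spatial attention (sequential ordering is an assumption)."""
    refined = feat * channel_attention(feat, w1, w2)
    return refined * spatial_attention(refined, kernel)


rng = np.random.default_rng(0)
C, H, W, r = 8, 16, 16, 4  # r: hypothetical MLP reduction ratio
feat = rng.standard_normal((C, H, W))
w1 = 0.1 * rng.standard_normal((C // r, C))
w2 = 0.1 * rng.standard_normal((C, C // r))
kernel = 0.1 * rng.standard_normal((2, 3, 3))
out = dual_attention(feat, w1, w2, kernel)
print(out.shape)  # (8, 16, 16)
```

The dilation widens the 3 × 3 kernel's footprint to 5 × 5 without adding weights, which is how the spatial branch aggregates more context cheaply.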

As shown in Figure 5, the channel converter works in three steps. First, the skip-connection features are converted into tokens using multi-scale feature embeddings. Second, these tokens pass through multiple inter-channel cross-attention layers, whose outputs are refined by a multilayer perceptron. Finally, inter-channel cross-attention is applied to the refined features. The channel converter thus ensures multi-scale fusion of encoder and decoder features and reduces the semantic gap between them.
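The three steps of the channel converter can be sketched in NumPy as follows. The patch average-pooling embedding, the single-head channel-wise attention in which each scale's channels query the concatenated channels of all scales, the residual connections, the MLP width, and the random weights are illustrative assumptions; normalization layers and the mapping of tokens back to decoder feature maps are omitted.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def embed(feat, patch):
    """Multi-scale embedding: (C, H, W) -> (N, C) tokens by average-pooling
    non-overlapping patch x patch blocks, so every scale yields the same N."""
    c, h, w = feat.shape
    pooled = feat.reshape(c, h // patch, patch, w // patch, patch).mean(axis=(2, 4))
    return pooled.reshape(c, -1).T


def channel_cross_attention(tokens, wq, wk, wv):
    """tokens: list of (N, C_i). Channels of each scale attend to the channels of all
    scales concatenated (C_sum); a residual connection keeps the (N, C_i) shape."""
    t_all = np.concatenate(tokens, axis=1)  # (N, C_sum)
    k, v = t_all @ wk, t_all @ wv           # (N, C_sum) each
    c_sum = t_all.shape[1]
    out = []
    for t, w in zip(tokens, wq):
        q = t @ w                                  # (N, C_i)
        attn = softmax(q.T @ k / np.sqrt(c_sum))   # (C_i, C_sum) channel-to-channel weights
        out.append(t + (attn @ v.T).T)             # (N, C_i)
    return out


def mlp(t, w1, w2):
    """Token-wise feed-forward refinement with a residual connection."""
    return t + np.maximum(t @ w1, 0.0) @ w2


rng = np.random.default_rng(0)


def init(*shape):
    return 0.1 * rng.standard_normal(shape)


# Step 1: embed two hypothetical skip features into the same 4 x 4 = 16 tokens.
skips = [rng.standard_normal((16, 32, 32)), rng.standard_normal((32, 16, 16))]
tokens = [embed(skips[0], 8), embed(skips[1], 4)]
dims = [t.shape[1] for t in tokens]  # [16, 32], so C_sum = 48

# Step 2: a cross-attention layer, then MLP refinement per scale.
tokens = channel_cross_attention(tokens, [init(c, c) for c in dims], init(48, 48), init(48, 48))
tokens = [mlp(t, init(c, 2 * c), init(2 * c, c)) for t, c in zip(tokens, dims)]
# Step 3: final inter-channel cross-attention on the refined tokens.
tokens = channel_cross_attention(tokens, [init(c, c) for c in dims], init(48, 48), init(48, 48))
print([t.shape for t in tokens])  # [(16, 16), (16, 32)]
```

Because attention here runs over channels rather than spatial positions, the attention matrix is only C_i × C_sum, which keeps the cost independent of image resolution once tokens are embedded.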

3.2. Discriminator Improvement

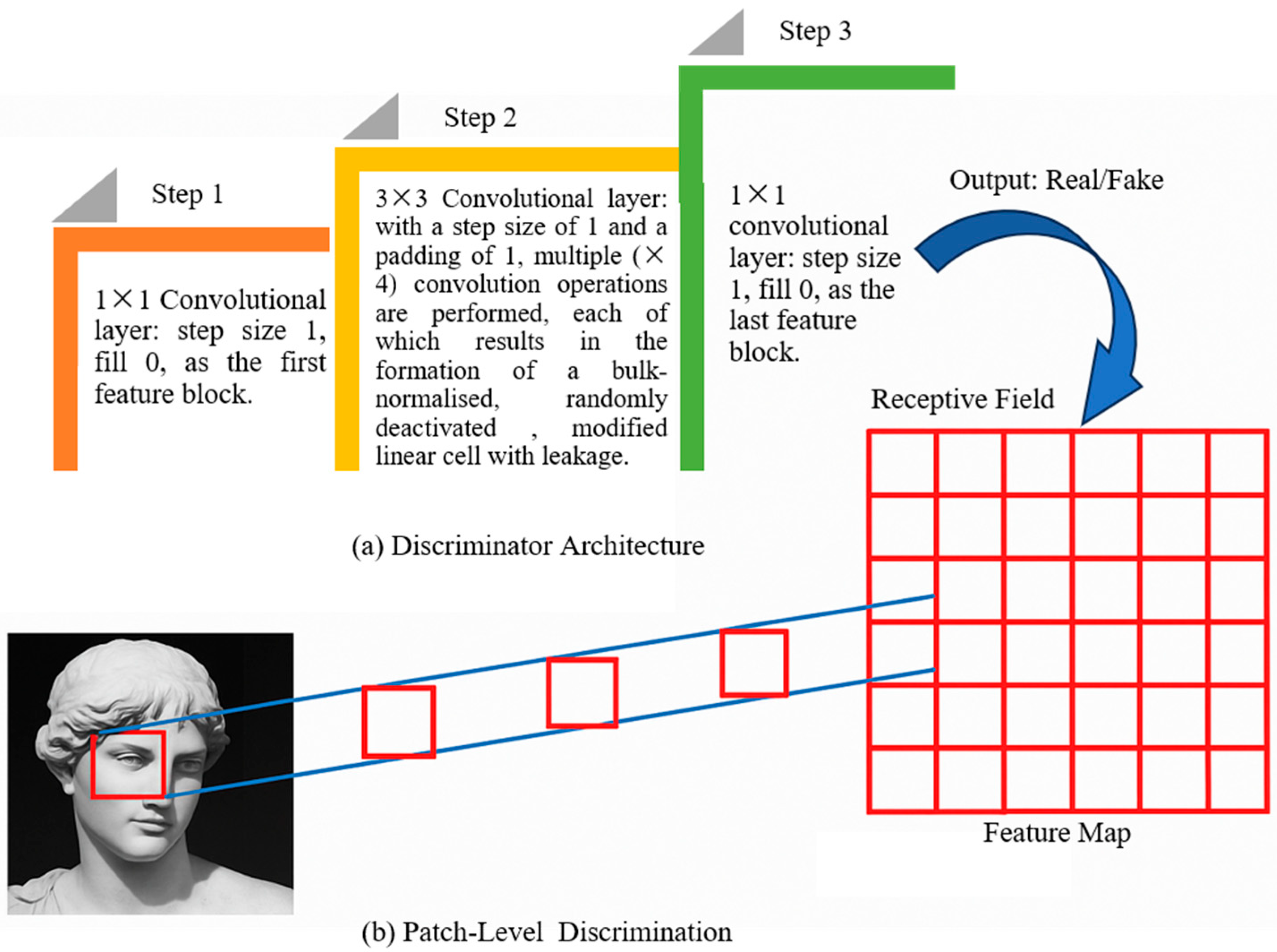

When classifying an input image, the traditional Generative Adversarial Network (GAN) discriminator directly determines whether the entire image is real or fake. To improve the accuracy of restored details, this study proposes a region-based scheme in which the discriminator does not judge the whole image as real or fake but instead divides the input image into numerous patches and classifies each patch as real or fake. The discriminator applies convolutions to the image to obtain an output matrix of predicted values, one per patch, where each value represents the probability that the corresponding image patch is real. All the responses are then averaged to obtain the discriminator's final real/fake prediction. The working principle is shown in Figure 6.
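The patch-averaging rule can be illustrated with a minimal sketch. It assumes that the final convolution of the discriminator produces one logit per patch and that a Sigmoid converts each logit into a probability; the function names are hypothetical.

```python
import numpy as np


def patch_probabilities(logit_map: np.ndarray) -> np.ndarray:
    """Sigmoid turns each per-patch logit into the probability that the patch is real."""
    return 1.0 / (1.0 + np.exp(-logit_map))


def image_score(logit_map: np.ndarray) -> float:
    """Average all patch responses into the discriminator's final real/fake prediction."""
    return float(patch_probabilities(logit_map).mean())


# Usage: a 2 x 2 prediction map in which only the top-left patch looks clearly real.
logits = np.zeros((2, 2))
logits[0, 0] = np.log(3.0)  # sigmoid(ln 3) = 0.75; the other patches stay at 0.5
print(image_score(logits))   # approximately 0.5625
```

Because each patch contributes its own term, a single implausible region lowers the score even when the rest of the image looks real, which is what pushes the generator toward locally convincing texture.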

The discriminator consists of a series of convolutional layers that downsample the three-channel input image and eventually transform it into a one-channel prediction map. Its initial feature map block is a 1 × 1 convolutional layer that maps the 3-channel input image to a 64-channel feature map. The second block contains four contraction blocks, which progressively downsample the image. Each contraction block consists of two convolutional layers: the first applies a 3 × 3 convolution followed by batch normalization, dropout, and a leaky rectified linear unit (Leaky ReLU) activation; the second applies a 3 × 3 convolution followed by batch normalization, Leaky ReLU, and max pooling. The architecture thus comprises the initial feature block, the contraction blocks, and a final feature block, as shown in Figure 7.
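Since every value of the output matrix corresponds to an image region, it helps to know how large that region is. The sketch below computes the receptive field and output size of the discriminator; the 1 × 1 initial layer and the 3 × 3 convolutions come from the text, while padding 1 on the 3 × 3 convolutions, 2 × 2 max pooling with stride 2, a 1 × 1 final block, and a 256 × 256 input are assumptions.

```python
def receptive_field(layers):
    """layers: (kernel, stride) pairs in order. Returns the receptive field of one output
    value and the cumulative stride between neighbouring outputs, in input pixels."""
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf, jump


# Assumed layer list: an initial 1 x 1 conv, four contraction blocks
# (two 3 x 3 convs + 2 x 2 max pooling with stride 2), and a final 1 x 1 conv.
contraction_block = [(3, 1), (3, 1), (2, 2)]
layers = [(1, 1)] + contraction_block * 4 + [(1, 1)]

rf, stride = receptive_field(layers)
input_size = 256                 # hypothetical input resolution
map_size = input_size // stride  # 3 x 3 convs with padding 1 preserve size; each pool halves it
print(rf, stride, map_size)      # 76 16 16
```

Under these assumptions, the discriminator outputs a 16 × 16 prediction matrix in which each value judges a 76 × 76 pixel region, and neighboring regions overlap because their centers are only 16 pixels apart.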

3.3. Loss Function Design

The generator is trained with a loss function that linearly combines the adversarial loss, the perceptual loss, and the structural similarity index (SSIM) loss.

Figure 8 shows a schematic of the generator training process. First, the restored sculpture image is concatenated with the damaged sculpture image and input to the patch discriminator, which outputs a matrix of predictions in which each value represents the probability that a patch is real. This predicted matrix is compared with an all-one matrix, and the difference between the two is used as the adversarial loss for generator training. The perceptual loss is computed as the smooth L1 loss between the hidden-layer activations of a VGG-16 network for the original and the generated sculpture images. Similarly, the SSIM loss measures the structural similarity between the original and the generated sculpture images. The generator loss can be expressed as:

where $\lambda_1$ and $\lambda_2$ are empirical weights, set to 200 and 10, respectively, in this study. The smooth $L_1$ loss can be calculated by the following formula:

where the threshold hyperparameter is set to 1, $x$ and $y$ represent the original and restored sculpture images, respectively, and $n$ is the instance index.
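A minimal NumPy sketch of the smooth L1 and perceptual losses follows. It assumes the standard smooth L1 definition with threshold β (the hyperparameter set to 1 above) and mean reduction; the VGG-16 feature extractor is not included, so the perceptual loss takes precomputed hidden-layer activations, and weighting all layers equally is an assumption.

```python
import numpy as np


def smooth_l1(x, y, beta=1.0):
    """Mean smooth L1 loss: 0.5 * d**2 / beta where |d| < beta, otherwise |d| - 0.5 * beta."""
    d = np.abs(np.asarray(x, dtype=np.float64) - np.asarray(y, dtype=np.float64))
    per_element = np.where(d < beta, 0.5 * d ** 2 / beta, d - 0.5 * beta)
    return float(per_element.mean())


def perceptual_loss(features_original, features_restored, beta=1.0):
    """Perceptual loss as smooth L1 between hidden-layer activations (e.g. from VGG-16)
    of the original and restored images, averaged with equal weight over the given layers."""
    per_layer = [smooth_l1(a, b, beta) for a, b in zip(features_original, features_restored)]
    return float(np.mean(per_layer))


print(smooth_l1([0.0, 0.0], [0.5, 3.0]))  # (0.125 + 2.5) / 2 = 1.3125
```

The quadratic branch keeps gradients small for nearly matching activations, while the linear branch limits the influence of large outliers, which is the usual reason for preferring smooth L1 over plain L2 here.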

The adversarial loss can be calculated by the following formula:

The structural similarity index measure (SSIM) loss can be calculated by the following formula:

where $\mu_x$, $\mu_y$ and $\sigma_x^2$, $\sigma_y^2$ are the means and variances of $x$ and $y$, respectively, and $\sigma_{xy}$ is the covariance of $x$ and $y$.
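As a reference point for the SSIM loss, the following sketch uses the standard SSIM definition, ((2μxμy + C1)(2σxy + C2)) / ((μx² + μy² + C1)(σx² + σy² + C2)), with the usual constants C1 = (0.01L)² and C2 = (0.03L)² for data range L. Two simplifications are assumptions: the statistics are computed over the whole image as one window (common implementations use local Gaussian windows), and the loss is taken as 1 − SSIM.

```python
import numpy as np


def ssim_global(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM computed over the whole image as a single window.
    mu: means, var: variances, cov_xy: covariance; c1, c2 are the usual stabilizing constants."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


def ssim_loss(x, y, data_range=1.0):
    """Assumed loss form: 1 - SSIM, so identical images give zero loss."""
    return 1.0 - ssim_global(x, y, data_range)


rng = np.random.default_rng(0)
original = rng.uniform(0.0, 1.0, size=(32, 32))
print(ssim_loss(original, original))        # ~0 for identical images
print(ssim_loss(original, 0.5 * original))  # > 0: darker, lower-contrast restoration
```

The first factor compares luminance (means) and the second compares contrast and structure (variances and covariance), so the loss penalizes brightness shifts and loss of detail separately from pixel-wise error.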

Since the discriminator outputs, for each patch, the probability that the patch is real, the patch-based discriminator is trained using the binary cross-entropy (BCE) loss, with its output compared against an all-zero or all-one matrix for the fake or real class, respectively. Thus, for fake outputs produced by the generator, the patch discriminator's predictions are compared with the all-zero matrix, and for original artwork images, they are compared with the all-one matrix.
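The BCE objectives for the patch discriminator can be sketched as follows. The discriminator part follows the description above (all-one targets for original images, all-zero targets for restored ones); using BCE against the all-one matrix for the generator's adversarial term, and summing rather than averaging the two discriminator terms, are assumptions.

```python
import numpy as np


def bce(pred, target, eps=1e-7):
    """Mean binary cross-entropy between predicted probabilities and 0/1 targets."""
    p = np.clip(pred, eps, 1.0 - eps)  # avoid log(0)
    return float(-np.mean(target * np.log(p) + (1.0 - target) * np.log(1.0 - p)))


def discriminator_loss(d_real, d_fake):
    """d_real: patch probabilities for (damaged, original) pairs, compared with an all-one matrix;
    d_fake: patch probabilities for (damaged, restored) pairs, compared with an all-zero matrix."""
    return bce(d_real, np.ones_like(d_real)) + bce(d_fake, np.zeros_like(d_fake))


def generator_adversarial_loss(d_fake):
    """The generator is rewarded when every patch of its output is judged real (all-one target)."""
    return bce(d_fake, np.ones_like(d_fake))


undecided = np.full((16, 16), 0.5)  # discriminator cannot tell real from fake
print(discriminator_loss(undecided, undecided))  # 2 ln 2, about 1.386
print(generator_adversarial_loss(undecided))     # ln 2, about 0.693
```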

Figure 9 shows a schematic of the discriminator training process. Similar to the conditional Generative Adversarial Network proposed by Mirza and Osindero, the damaged artwork is first input to the generator as a condition, which then produces a restored image. The restored image is fed into the discriminator for feedback. Using a binary cross-entropy (BCE) loss function, the discriminator parameters are updated to classify the restored image as fake and the original image as real. Based on the discriminator's feedback, the generator parameters are then optimized using a linear combination of the adversarial, perceptual, and SSIM losses.
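Taken together, the two alternating objectives can be summarized as below, using the notation of the workflow description ($x$: damaged input, $y$: original image, $G(x)$: restored image, $D(\cdot,\cdot)$: per-patch probabilities for a conditioned pair). Attaching $\lambda_1$ to the perceptual term and $\lambda_2$ to the SSIM term follows the order in which the losses are listed and is an assumption, as is the BCE form of the generator's adversarial term.

```latex
\begin{aligned}
\mathcal{L}_D &= \mathrm{BCE}\big(D(x, y), \mathbf{1}\big) + \mathrm{BCE}\big(D(x, G(x)), \mathbf{0}\big),\\
\mathcal{L}_G &= \mathrm{BCE}\big(D(x, G(x)), \mathbf{1}\big)
  + \lambda_1 \, \mathcal{L}_{\mathrm{per}}\big(y, G(x)\big)
  + \lambda_2 \, \mathcal{L}_{\mathrm{SSIM}}\big(y, G(x)\big),
  \qquad \lambda_1 = 200,\ \lambda_2 = 10.
\end{aligned}
```

Each training iteration first minimizes $\mathcal{L}_D$ with the generator fixed, then minimizes $\mathcal{L}_G$ with the discriminator fixed.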

5. Discussion

5.1. Potential Applications and Implications of This Study

The proposed improved DCGAN (I-DCGAN) model, incorporating dual attention mechanisms and a channel converter, demonstrates substantial potential in advancing both academic research and practical applications within the field of digital cultural heritage restoration. Its ability to accurately reconstruct fine-grained texture details while preserving the overall stylistic integrity of artworks provides a robust foundation for multiple downstream applications.

From an academic perspective, the I-DCGAN offers a technically rigorous framework that bridges computer vision, deep learning, and art conservation. The architectural and methodological innovations introduced in this study may inform future work in other domains requiring high-fidelity texture synthesis and style preservation, such as historical document restoration, archaeological image reconstruction, and medical imaging enhancement. The model’s design also serves as a valuable reference for integrating hybrid attention mechanisms into GAN-based frameworks under data-limited conditions.

Beyond its academic significance, the I-DCGAN demonstrates considerable promise in real-world deployment scenarios. It can be directly embedded into virtual museum platforms to provide automated, high-fidelity previews of restored sculptures. Integrating the model into interactive exhibition systems would allow visitors to dynamically switch between original damaged artifacts and their digitally restored counterparts, thereby enhancing public engagement and educational outreach. In large-scale cultural heritage databases, the model could function as a backend service for automated batch restoration, annotation, and condition assessment of visual records—including sculptures, murals, and archaeological artifacts—significantly reducing manual processing time while improving the consistency of archival data. Furthermore, the I-DCGAN can form the core of a human–AI collaborative restoration workflow, where professional conservators review, refine, and approve AI-generated restorations. The system could present multiple candidate outputs ranked by structural similarity and stylistic fidelity, enabling experts to select, adjust, or merge results. This collaborative approach combines the efficiency of automated processing with the domain expertise of conservators, thereby improving both technical accuracy and cultural authenticity.

The implications of this study extend beyond technical performance gains. By enabling scalable, high-quality digital restoration, the proposed model supports the preservation of fragile cultural artifacts without physical intervention. It also provides curators, researchers, and the public with unprecedented access to historically accurate reconstructions, fostering deeper cultural understanding and appreciation while ensuring the long-term safeguarding of heritage assets.

5.2. Limitations and Constraints of This Study

While the improved DCGAN (I-DCGAN) model demonstrates notable academic and practical value, with promising applications in virtual museums, cultural heritage databases, and human–AI collaborative restoration workflows, it is equally essential to critically examine its inherent limitations. Despite the model’s clear advantages in restoring the color and texture of sculptures, several constraints remain that warrant careful consideration. The following section provides a balanced assessment of these limitations and positions the I-DCGAN within the broader landscape of alternative restoration methodologies.

First, the model’s performance is constrained by the lack of large-scale, high-quality paired datasets of damaged and original sculpture images. This scarcity limits its capacity to generalize across diverse cultural contexts, material types, and complex texture styles. Moreover, the acquisition of such datasets in the heritage restoration domain remains both labor-intensive and costly, posing a substantial barrier to further advancements. Second, while the integration of a dual attention mechanism and channel converter enhances both fine-grained local texture reconstruction and overall stylistic coherence, the model can still produce stylistic deviations or structural misinterpretations when dealing with severely degraded or semantically abstract artworks. In such cases, achieving an optimal balance between local detail fidelity and global style preservation remains challenging. Third, although Vision Transformer (ViT) architectures are recognized for their strong capability in modeling long-range dependencies, a pure ViT-based approach was not adopted in this study for three main reasons.

- (1) ViTs require extensive, high-quality paired datasets for supervised training, which are currently unavailable for sculpture restoration.

- (2) The proposed I-DCGAN already incorporates hybrid attention modules and a channel converter, enabling partial global feature modeling within a GAN framework better suited for limited-data conditions.

- (3) For restoration tasks, GAN-based methods are inherently more effective at producing high-fidelity local details, whereas unmodified ViT architectures tend to prioritize global relationships at the expense of detailed local synthesis.

Nevertheless, the I-DCGAN itself has shortcomings that alternative approaches, such as ViTs or other advanced architectures, could potentially address. While the dual attention mechanism allows for partial capture of global stylistic cues, its long-range dependency modeling capability remains inferior to that of Transformer-based methods, making it less effective for artworks with widely dispersed or highly interdependent stylistic elements. In addition, as a GAN-based model, the I-DCGAN is susceptible to training instability and mode collapse, occasionally resulting in artifacts or inconsistencies in fine detail synthesis. Beyond ViTs, other paradigms offer instructive contrasts. Traditional CNN-based inpainting methods are efficient in learning localized structural patterns but generally fail to capture the broader semantic composition required for the restoration of complex heritage artworks. Recent advances in diffusion models have delivered state-of-the-art performance in image generation and restoration, producing highly realistic outputs; however, they typically demand significantly greater computational resources and training time, and their effectiveness in fine-grained detail reconstruction for severely degraded cultural heritage data remains less explored. Within the GAN family, CycleGAN excels in unpaired image-to-image translation but can introduce structural artifacts, while StyleGAN achieves high realism but often alters the original artistic style excessively—an undesirable outcome in cultural heritage preservation. Compared with these approaches, the I-DCGAN strikes a favorable balance between local detail fidelity, global style preservation, and computational efficiency, though it still falls short of the global semantic modeling capacity and ultra-high-fidelity synthesis achieved by certain newer architectures.

5.3. Future Research Directions for AI-Based Sculpture Restoration

While the I-DCGAN model demonstrates notable strengths in balancing fidelity, coherence, and efficiency, the constraints discussed above highlight areas where its performance could be further improved. Accordingly, several targeted research avenues can be pursued to advance the technical capabilities, cultural sensitivity, and practical applicability of AI-driven sculpture restoration.

- (1) Expanding the training corpus to include a broad spectrum of materials, artistic styles, cultural origins, and damage types would enhance both model generalization and transferability. Incorporating high-resolution imagery with standardized metadata would also facilitate reproducibility and enable cross-domain benchmarking.

- (2) Combining 2D visual data with 3D scanning, hyperspectral imagery, and textual descriptions could provide richer semantic and structural representations of artworks. Such multimodal fusion is particularly beneficial for sculptures with intricate surface details, symbolic motifs, or subtle stylistic cues.

- (3) Leveraging Transformer-based or hybrid architectures capable of modeling long-range dependencies and complex spatial compositions may improve the restoration of artworks with widely dispersed or interdependent stylistic elements. Integrating these capabilities into GAN or diffusion frameworks could offer a balanced synthesis of fine local detail and coherent global style.

- (4) Embedding the model into interactive workflows where professional conservators review, refine, and approve AI-generated outputs would ensure both technical accuracy and cultural authenticity. Iterative feedback loops between experts and the system could also serve as a mechanism for continuous improvement.

- (5) Conducting comprehensive comparative analyses against alternative state-of-the-art methods, such as diffusion models, StyleGAN, CycleGAN, and CNN–Transformer hybrids, would clarify the trade-offs between accuracy, computational efficiency, and stylistic fidelity, enabling optimal model selection for specific restoration contexts.

By pursuing these directions, future research can address current methodological gaps, broaden the applicability of AI-based restoration techniques, and contribute to the sustainable preservation and dissemination of cultural heritage.

6. Conclusions

Sculpture represents a significant component of human cultural heritage. To facilitate the digital restoration of damaged sculptures, this study proposes a novel image restoration method based on generative artificial intelligence technology. This approach enables the digital restoration of artworks while preserving their original artistic style and semantics, providing valuable guidance and reference for art conservators and museums in conducting physical restoration. In this study, a Generative Adversarial Network (GAN) model incorporating dual attention modules and channel converters is introduced to achieve digital restoration of damaged sculptures. The proposed neural network is trained using a linear combination of perceptual loss, adversarial loss, and structural similarity index (SSIM) loss, enabling high-precision image restoration. A comparative analysis was conducted between traditional sculpture image restoration methods and the improved DCGAN approach. The results demonstrate that the proposed method outperforms the compared restoration methods in terms of SSIM, mean squared error (MSE), and peak signal-to-noise ratio (PSNR) metrics. The enhanced DCGAN model effectively refines texture details while maintaining overall stylistic consistency between pre- and post-restoration images. Given its superior performance in restoring sculpture color and texture with high structural and stylistic fidelity, the proposed I-DCGAN model holds strong potential for integration into digital heritage management systems. It can be embedded into web- or cloud-based platforms to support automated pre-restoration previews in virtual museums, enabling curators to preview high-fidelity restorations of damaged artworks without physical intervention. In large-scale heritage databases containing visual records such as murals, sculptures, or archaeological images, the model may function as a backend service for batch restoration, annotation, or condition assessment.
When integrated with user-friendly interfaces, it further allows restoration professionals, art historians, and museum staff to interactively explore restoration scenarios, thereby enhancing accuracy and efficiency in decision-making.