1. Introduction

As the world’s largest agricultural producer, China relies on agriculture as a key pillar of its economy, and the sector is crucial for national economic development [1,2]. However, during cultivation, environmental factors make crops highly susceptible to various diseases [3]. These diseases not only degrade crop quality but also cause more serious problems such as reduced food production. Among common crop diseases, fungal diseases lower the market value of crops by damaging their leaves, stems, or fruits and may introduce harmful substances, while insect pests directly damage various parts of the plant, causing injury or even plant death. In practical agricultural production, both types cause significant losses. Accurately detecting crop diseases is therefore essential for ensuring the quality of agricultural products and reducing economic losses.

Driven by advances in deep learning, the integration of agricultural engineering and artificial intelligence has become a key trend in modern agriculture [4,5,6,7,8,9,10,11]. Image-based object detection and localization methods have been widely applied across many areas of agricultural monitoring [12,13,14,15,16,17,18,19,20]. Rahman, C.R. et al. [21] introduced a CNN-based framework for rice pest and disease identification by fine-tuning established models such as VGG16 and InceptionV3, which demonstrated strong classification performance. They also developed a compact two-stage CNN architecture that reduced the parameter count by 99% relative to VGG16 while achieving a classification accuracy of 93.3%, enabling efficient execution on mobile platforms. However, the model was trained on a single specific dataset, which may limit its adaptability to cross-regional or diverse field conditions, so its generalization performance needs further improvement. Mathew, M. P. et al. [22] proposed a modified YOLOv5 architecture to identify bacterial spot disease on sweet pepper foliage, enabling rapid identification in large-scale farmland. Field images are captured with smartphones and YOLOv5 performs real-time detection with both high accuracy and speed, allowing farmers to detect and manage the disease promptly. Despite its efficiency, the study addressed only a single disease (bacterial spot) and lacks multi-disease recognition capability. Xue, Z. Y. et al. [23] proposed YOLO-Tea, an improved pest and disease detection model for tea plants that integrates ACmix, CBAM, and RFB modules into YOLOv5 and employs GCNet to reduce resource consumption. The model substantially improves detection accuracy and efficiency for tea leaf diseases and pests under complex natural conditions: YOLO-Tea outperforms Faster R-CNN by 5.5%, 1.8%, and 7.0% in AP0.5, APTLB, and APGMB, respectively, and outperforms SSD by 7.7%, 7.8%, and 5.2% on the same metrics, with overall performance improvements ranging from 0.3% to 15.0%. One limitation, however, is that the dataset was collected only during well-lit afternoon hours, without low-light conditions in the early morning or at night, so the model’s adaptability to different lighting environments needs further improvement. Zhao, S. Y. et al. [24] proposed a Faster R-CNN model with multi-scale feature fusion for detecting multiple diseases in greenhouse-grown strawberries. By integrating ResNet, FPN, and CBAM modules, the model improves recognition of small lesions against complex backgrounds. However, its high architectural complexity and substantial computational demands hinder its use in resource-constrained settings such as lightweight or edge computing scenarios.

Zhao, Y. F. et al. [25] introduced SPD-YOLOv7, an enhanced detection framework for maize pest identification under challenging conditions such as small object size, image blur, low resolution, and interspecies variation. Based on YOLOv7, the model incorporates a Space-to-Depth Convolution (SPD-Conv) module to retain small-target features and integrates ELAN-W with CBAM to improve feature extraction efficiency. Combined with data augmentation strategies, including Gaussian noise and brightness adjustment, the framework improves robustness and generalization. Experimental results show that SPD-YOLOv7 achieves an accuracy of 98.38% and an average accuracy of 99.4%, outperforming the original YOLOv7 by 2.46% and 3.19%, respectively. The model maintains real-time detection performance; however, its architecture is more complex than that of the original YOLOv7, which complicates deployment on embedded devices. Sun, D. Z. et al. [26] proposed an improved YOLOv8 model for pest detection in tobacco under complex environmental conditions. The model incorporates the AFPN structure, the VoV-GSCSP module, and the parameter-free SimAM attention module, lowering computational complexity and model size while preserving high detection accuracy. However, the accuracy gains are modest: mAP@0.5 increases by only 1%, recall by 2.7%, and precision by 2.4%.

The above studies show that object detection technology has matured considerably in crop disease identification and offers strong technical support for precision agriculture. However, most existing studies remain confined to specific diseases of individual crops [27,28,29,30,31,32,33,34,35], and the resulting models are typically optimized for particular data distributions and application scenarios. Their generalization capability is therefore limited, which makes it difficult to adapt them to the diverse conditions of real-world fields and significantly hinders large-scale deployment in agriculture. To overcome this bottleneck and further improve the precision of plant disease identification, this paper proposes an improved object detection framework named YOLO-MSCM. The framework is designed to improve detection accuracy and robustness in complex natural scenes involving multiple crop diseases, moving intelligent plant protection technology toward greater generality and practicality.

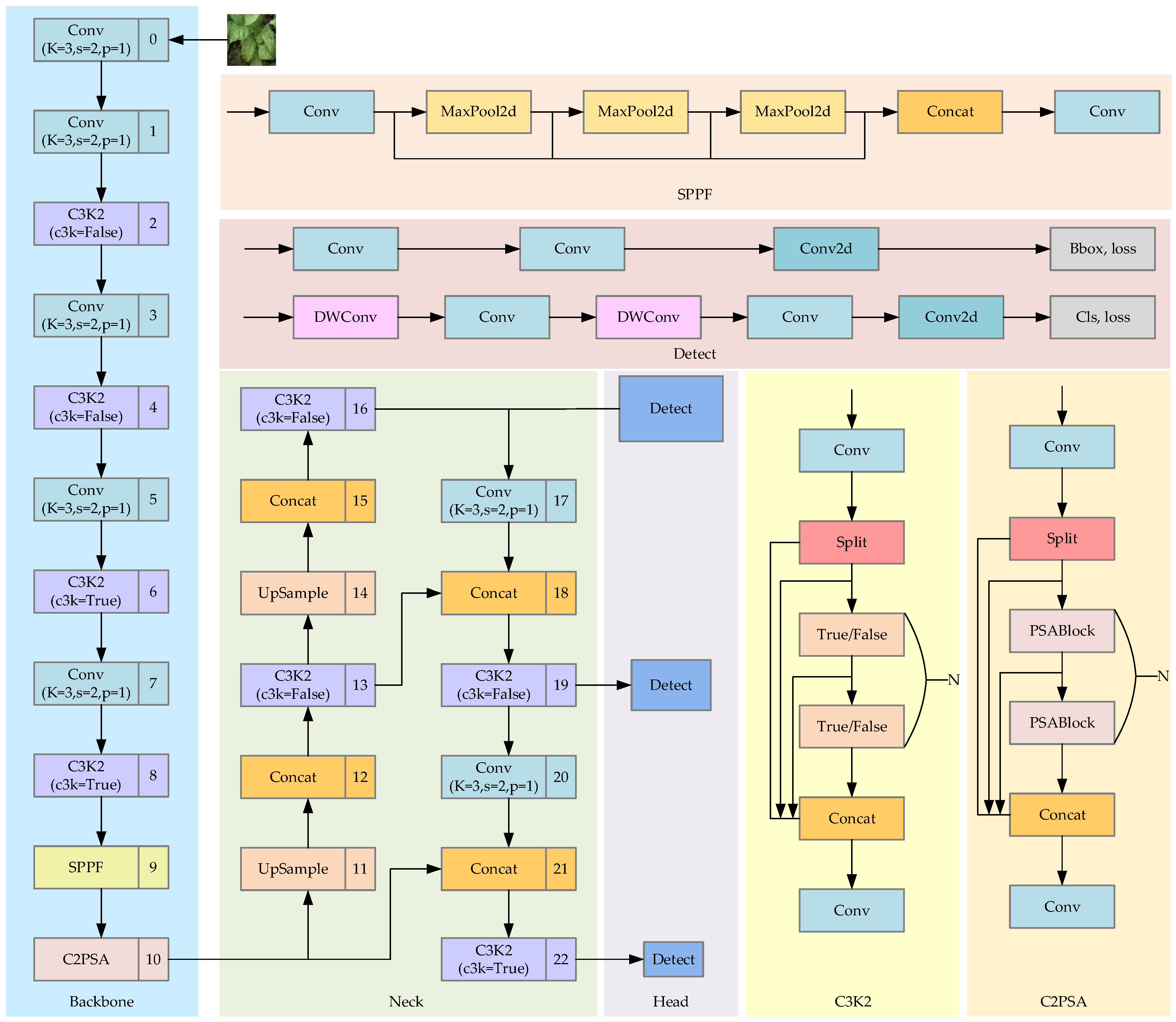

The improvements in this method are mainly reflected in the following four aspects:

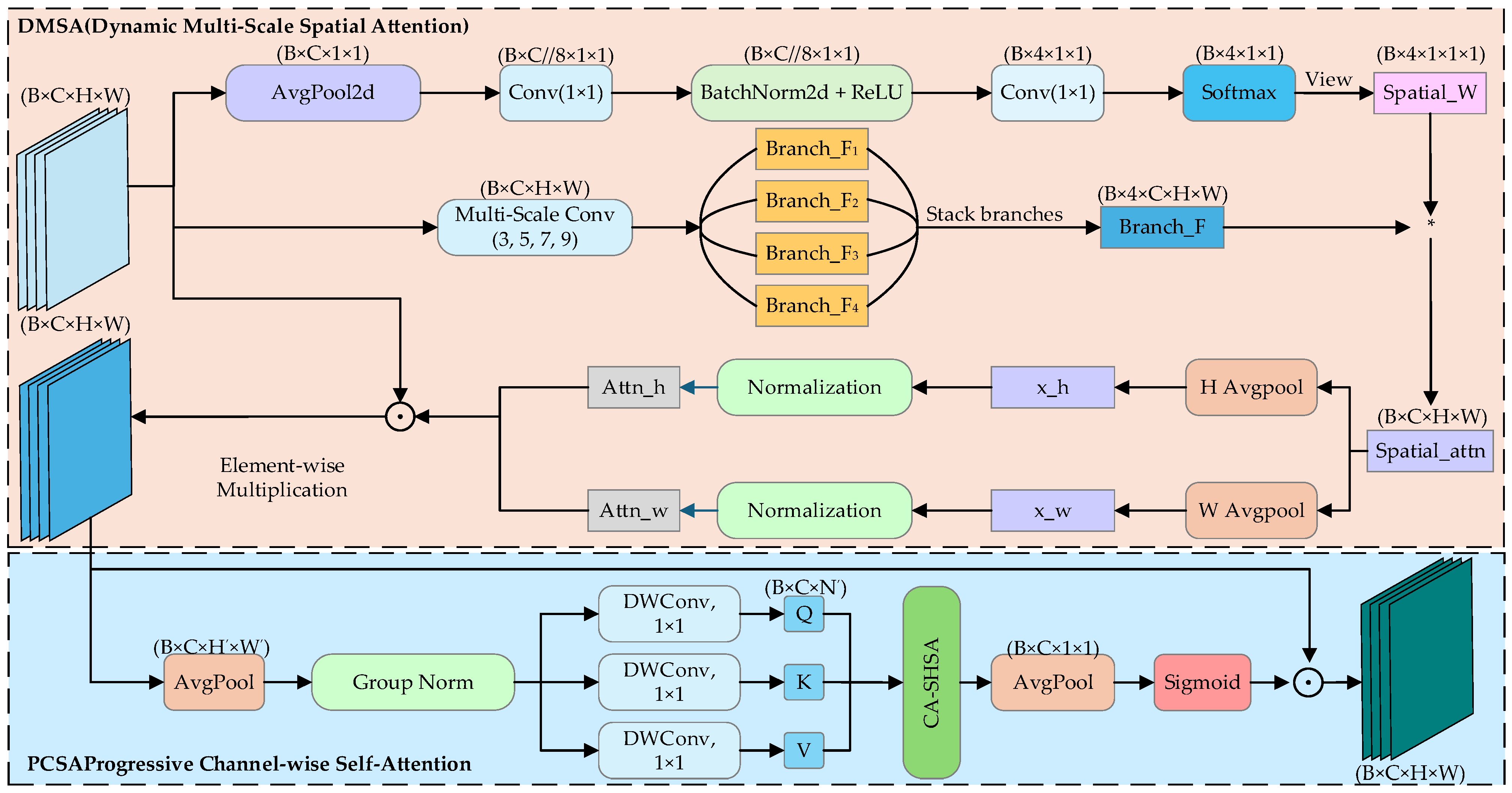

We propose MCSA, an attention module incorporating multi-scale spatial perception. MCSA fuses multi-scale spatial information through parallel branches and uses a lightweight gating network to dynamically adjust the weight of each branch, sharpening the focus of spatial attention.

We propose SimRepHMS, a context-aware feature enhancement module. SimRepHMS introduces a multi-branch depthwise separable bottleneck structure that captures contextual dependencies through cascaded multi-scale receptive fields. It then adaptively enhances the fused feature maps to highlight key regions, improving feature fusion across different levels.

To further improve the adaptability and representational power of the features, we introduce the DynamicConv dynamic convolution mechanism. It generates attention weights from the feature vector obtained by global average pooling and uses them to dynamically select and fuse multiple expert convolution kernels, improving robustness and generalization under varying environmental conditions.

WIoUv3 is adopted as the loss function to enhance the model’s emphasis on localization accuracy and mitigate the negative impact of low-quality anchor boxes by suppressing harmful gradients in the later training stages, ultimately improving training stability.
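The DynamicConv mechanism summarized among the improvements above (pool the input, score a set of expert kernels, mix them) can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors’ implementation: it uses one input and one output channel, K expert 3×3 kernels, and a hypothetical linear router (`router_w`, `router_b`) followed by a softmax. Published dynamic-convolution variants differ in routing details (CondConv, for example, uses sigmoid rather than softmax routing) and operate on multi-channel tensors.

```python
import math
from typing import List

Kernel = List[List[float]]


def softmax(scores: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    m = max(scores)
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_kernel(feature: List[List[float]], experts: List[Kernel],
                   router_w: List[float], router_b: List[float],
                   temperature: float = 1.0) -> Kernel:
    """Mix K expert kernels into one input-dependent kernel (single channel).

    router_w / router_b form a hypothetical linear router: one score per expert.
    """
    # 1) Global average pooling -> one descriptor value for this channel
    pooled = sum(map(sum, feature)) / (len(feature) * len(feature[0]))
    # 2) Router scores -> attention weights over experts (non-negative, sum to 1)
    weights = softmax([w * pooled + b for w, b in zip(router_w, router_b)], temperature)
    # 3) Weighted sum of the expert kernels, element by element
    kh, kw = len(experts[0]), len(experts[0][0])
    return [[sum(a * k[i][j] for a, k in zip(weights, experts)) for j in range(kw)]
            for i in range(kh)]
```

Because convolution is linear in its kernel, convolving once with the mixed kernel gives the same result as mixing the outputs of the K expert convolutions, which is why this design adds capacity at little extra inference cost.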

3. Results

This section presents a comprehensive evaluation of the proposed YOLO-MSCM framework, including the experimental setup, performance metrics, ablation studies, and comparisons with state-of-the-art models. The results are organized to systematically demonstrate the effectiveness, efficiency, and generalization capability of the improved architecture in multi-crop disease detection tasks.

3.1. Experimental Environment

The experiments were conducted on Ubuntu 22.04 with an AMD Ryzen 5 3600 CPU and an NVIDIA GeForce RTX 2080 Ti GPU (11 GB). Models were built with Python 3.9.0, CUDA 11.8, and PyTorch 2.1.0. No pre-trained weights were used in any experiment. Detailed parameter configurations are listed in Table 2.

To ensure a fair comparison, all models are trained with the same hyperparameters, training strategy, and data partitioning. To account for the inherent randomness of deep neural network training (e.g., random seeds and weight initialization), each comparative experiment is independently repeated three times, and the results reported in this paper are the averages of the three runs, which makes the performance evaluation more reliable.
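The averaging protocol described above is easy to script. The sketch below uses Python’s standard `statistics` module to report the mean of repeated runs together with the sample standard deviation; the paper reports only the mean, so the spread is an optional addition, and the three values in the usage line are hypothetical, not results from this study.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def summarize_runs(values: Sequence[float]) -> Tuple[float, float]:
    """Return (mean, sample standard deviation) over independent training runs."""
    if len(values) < 2:
        raise ValueError("need at least two runs to estimate the spread")
    return mean(values), stdev(values)


# Hypothetical metric values from three seeds, for illustration only
print("%.1f ± %.1f" % summarize_runs([80.0, 81.0, 82.0]))  # 81.0 ± 1.0
```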

3.2. Experiment Metrics

A set of well-established evaluation metrics is used to assess detection performance from multiple perspectives. Mean average precision (mAP) measures how accurately the model detects and classifies objects. Precision (P) is the proportion of positive predictions that are correct. Recall (R) is the proportion of actual positive samples that the model correctly predicts as positive. Parameter count (Params) is a key measure of model complexity [38]. Frames per second (FPS) evaluates real-time inference capability; it is inversely proportional to latency and provides a practical measure of runtime performance [39,40]. Computational cost (GFLOPs) measures the model’s computational complexity during inference and reflects its potential burden when deployed on edge devices.

Assuming there are $N$ categories and the average precision of the $i$-th category is $AP_i$, $P$, $R$, $mAP$, and $FPS$ are calculated as follows:

$$P = \frac{TP}{TP + FP}$$

$$R = \frac{TP}{TP + FN}$$

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

$$FPS = \frac{1}{T}$$

Here, $TP$ denotes the number of true-positive predictions made by the model, $FP$ the number of false-positive predictions, $FN$ the number of false-negative predictions, and $T$ the inference time required by the model per image.
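The formulas above translate directly into code. The pure-Python sketch below computes P, R, per-class AP, mAP, mAP@50:95, and FPS. Two assumptions are worth stating: AP is computed here with all-point interpolation of the precision–recall curve (one common convention), and mAP@50:95 is taken as the mean of mAP over the ten IoU thresholds 0.50, 0.55, …, 0.95. The exact evaluation code used for this paper is not given here, so treat this as an illustration rather than a reproduction of the reported numbers.

```python
from typing import Sequence


def precision(tp: int, fp: int) -> float:
    """P = TP / (TP + FP); 0.0 when nothing was predicted positive."""
    return tp / (tp + fp) if tp + fp else 0.0


def recall(tp: int, fn: int) -> float:
    """R = TP / (TP + FN); 0.0 when there are no ground-truth positives."""
    return tp / (tp + fn) if tp + fn else 0.0


def average_precision(recalls: Sequence[float], precisions: Sequence[float]) -> float:
    """All-point interpolated AP for one class.

    recalls/precisions are PR-curve points ordered by decreasing confidence
    threshold, so recalls are non-decreasing.
    """
    r = [0.0, *recalls, 1.0]
    p = [0.0, *precisions, 0.0]
    # Precision envelope: make p non-increasing from right to left
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum rectangle areas wherever recall increases
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_ap(ap_per_class: Sequence[float]) -> float:
    """mAP = (1/N) * sum of AP_i over the N categories."""
    return sum(ap_per_class) / len(ap_per_class)


def map_50_95(map_per_threshold: Sequence[float]) -> float:
    """Mean of mAP at IoU thresholds 0.50, 0.55, ..., 0.95 (ten values)."""
    if len(map_per_threshold) != 10:
        raise ValueError("expected mAP at 10 IoU thresholds")
    return sum(map_per_threshold) / 10


def fps(seconds_per_image: float) -> float:
    """FPS = 1 / T, with T the inference time per image in seconds."""
    return 1.0 / seconds_per_image
```

For example, the 181.8 FPS reported for YOLO-MSCM in Section 3.7 corresponds to T ≈ 1/181.8 s, or about 5.5 ms per image. Detection toolkits often sample the precision–recall curve differently (for instance with 101-point, COCO-style interpolation), so this sketch may not match a framework’s reported AP exactly.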

3.3. Attention Mechanism Comparison Experiment

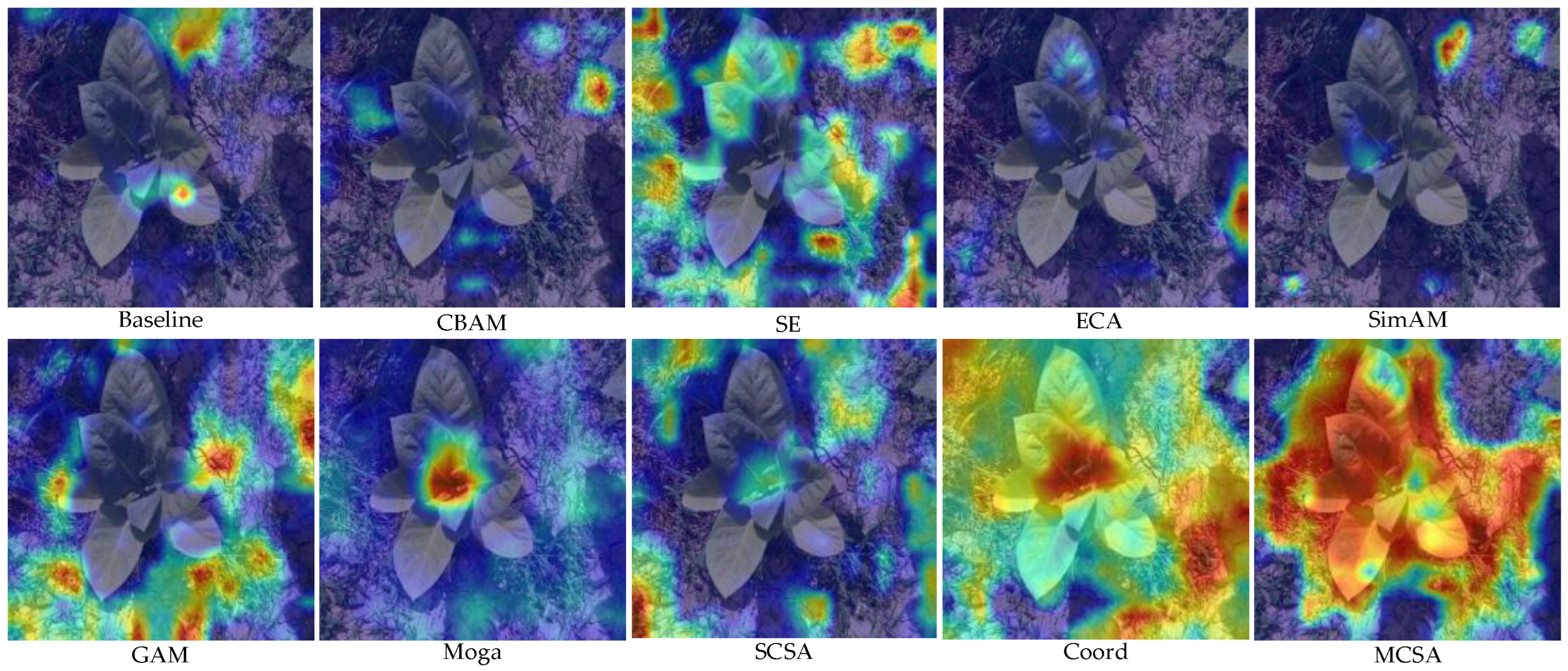

To evaluate the effectiveness of different attention mechanisms in the crop disease detection task and further validate the performance advantages of the proposed MCSA module, we incorporated several mainstream attention modules—including CBAM, SE, SimAM, ECA, GAM, Coord, Moga, and SCSA—into the YOLO11 baseline model. All models were compared under the same experimental conditions to ensure fairness.

Table 3 presents a summary of the experimental outcomes.

As shown in the table, the baseline model achieves an mAP@50 of 85.7% and an mAP@50:95 of 56.6% on this task. Adding attention mechanisms generally improves detection performance to some extent. However, the gains from CBAM and SE are limited, and in some cases performance declines slightly, indicating weaker adaptability to fine-grained crop disease detection. In contrast, SimAM and Coord show stronger feature enhancement, raising mAP@50 to 88.6% and 88.9%, respectively. Moga and SCSA perform particularly well in recall (R), reaching 79.3% and 80.6%, respectively, which suggests advantages in complex backgrounds and small-target recognition. The proposed MCSA module achieves the best precision, mAP@50, and mAP@50:95 of all compared modules, with a precision (P) of 89.3%, an mAP@50 of 89.9%, and an mAP@50:95 of 59.9%; its recall (R) of 80.4% is second only to that of SCSA (80.6%). We attribute this improvement to the core idea of MCSA, multi-scale spatial perception: by fusing multi-scale convolution branches, MCSA captures spatial information across different object scales and strengthens the model’s focus on key regions in complex backgrounds. Its adaptive weight allocation mechanism further adjusts the contribution of each scale branch dynamically, enabling more accurate target localization and feature representation. In terms of computational cost, most attention modules do not increase computational complexity; the exceptions are GAM, Moga, and MCSA. MCSA adds a slight computational burden because of its additional convolution operations but remains lightweight.
In summary, systematic comparisons with multiple mainstream attention mechanisms show that MCSA delivers the largest accuracy gains in this crop disease detection task and improves detection performance in complex environments, indicating promising application potential.
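The two ingredients credited above, multi-scale branches and adaptive weight allocation, can be illustrated with a minimal pure-Python sketch. Assumptions (not taken from the paper): each branch’s single-channel spatial response map is given as input (in the real module these would come from convolutions with different kernel sizes), the gate is a hypothetical one-input linear layer on the globally pooled feature (`gate_weights`, `gate_bias`), and the fused map passes through a sigmoid to rescale the input. The function names are illustrative and do not come from the authors’ code.

```python
import math
from typing import List

Map = List[List[float]]  # single-channel H x W map


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gated_multiscale_attention(x: Map, branch_maps: List[Map],
                               gate_weights: List[float],
                               gate_bias: List[float]) -> Map:
    """Fuse per-branch spatial responses with a softmax gate and reweight x."""
    h, w = len(x), len(x[0])
    pooled = sum(map(sum, x)) / (h * w)  # global context fed to the gate
    # One logit per branch from the hypothetical linear gate -> weights summing to 1
    alphas = softmax([gw * pooled + gb for gw, gb in zip(gate_weights, gate_bias)])
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            fused = sum(a * m[i][j] for a, m in zip(alphas, branch_maps))
            attention = 1.0 / (1.0 + math.exp(-fused))  # sigmoid -> (0, 1)
            row.append(x[i][j] * attention)
        out.append(row)
    return out
```

When the gate strongly favors one branch, the output follows that branch’s spatial map; when the gate is uncertain, the branches are blended. In a real network the same data flow would run on multi-channel tensors in a framework such as PyTorch.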

To visually assess the effectiveness of MCSA in feature extraction, Grad-CAM++ [41] was used to generate class activation maps for comparison. Nine attention mechanisms (CBAM, SE, SimAM, ECA, GAM, Moga, SCSA, Coord, and the proposed MCSA) were analyzed, and their heatmaps are shown in Figure 9. Warmer (redder) regions indicate higher model sensitivity and focus during detection. Most conventional attention modules highlight not only the target areas but also irrelevant background regions, which may mislead the model and impair its detection performance [42]. By contrast, MCSA produces a more precise and focused attention distribution, suppressing activations outside the target region and concentrating on key characteristics. These visualizations suggest that MCSA offers better localization and greater interpretability than the other attention mechanisms.
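Grad-CAM++ weights each activation channel by its positive gradients, scaled by pixel-wise coefficients. Under the exponential-score assumption used in the Grad-CAM++ paper, the higher-order derivatives reduce to powers of the first-order gradient g, giving α = g² / (2g² + ΣA·g³). The pure-Python sketch below computes the resulting map for one class from given activations and gradients. It illustrates that closed form and is not the visualization pipeline used in this study; in practice the gradients come from backpropagation in a deep learning framework.

```python
from typing import List

Map = List[List[float]]


def gradcam_plus_plus(activations: List[Map], grads: List[Map],
                      eps: float = 1e-7) -> Map:
    """Grad-CAM++ map for one class from K activation maps and their gradients.

    activations[k][i][j] is A^k at (i, j); grads[k][i][j] is dY^c / dA^k_ij.
    Uses the closed form obtained under the exponential-score assumption.
    """
    h, w = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for a_k, g_k in zip(activations, grads):
        sum_a = sum(map(sum, a_k))  # sum of A^k over all spatial positions
        weight = 0.0
        for i in range(h):
            for j in range(w):
                g = g_k[i][j]
                if g == 0.0:
                    continue  # alpha is taken as 0 where the gradient vanishes
                alpha = g * g / (2.0 * g * g + sum_a * g ** 3 + eps)
                weight += alpha * max(g, 0.0)  # only positive gradients count
        for i in range(h):
            for j in range(w):
                cam[i][j] += weight * a_k[i][j]
    # ReLU, then scale to [0, 1] for display as a heatmap
    cam = [[max(v, 0.0) for v in row] for row in cam]
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam
```

Because only positive gradients contribute to each channel weight, regions that lower the class score cannot light up, which is why warm regions in such heatmaps are read as evidence the model used for its decision.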

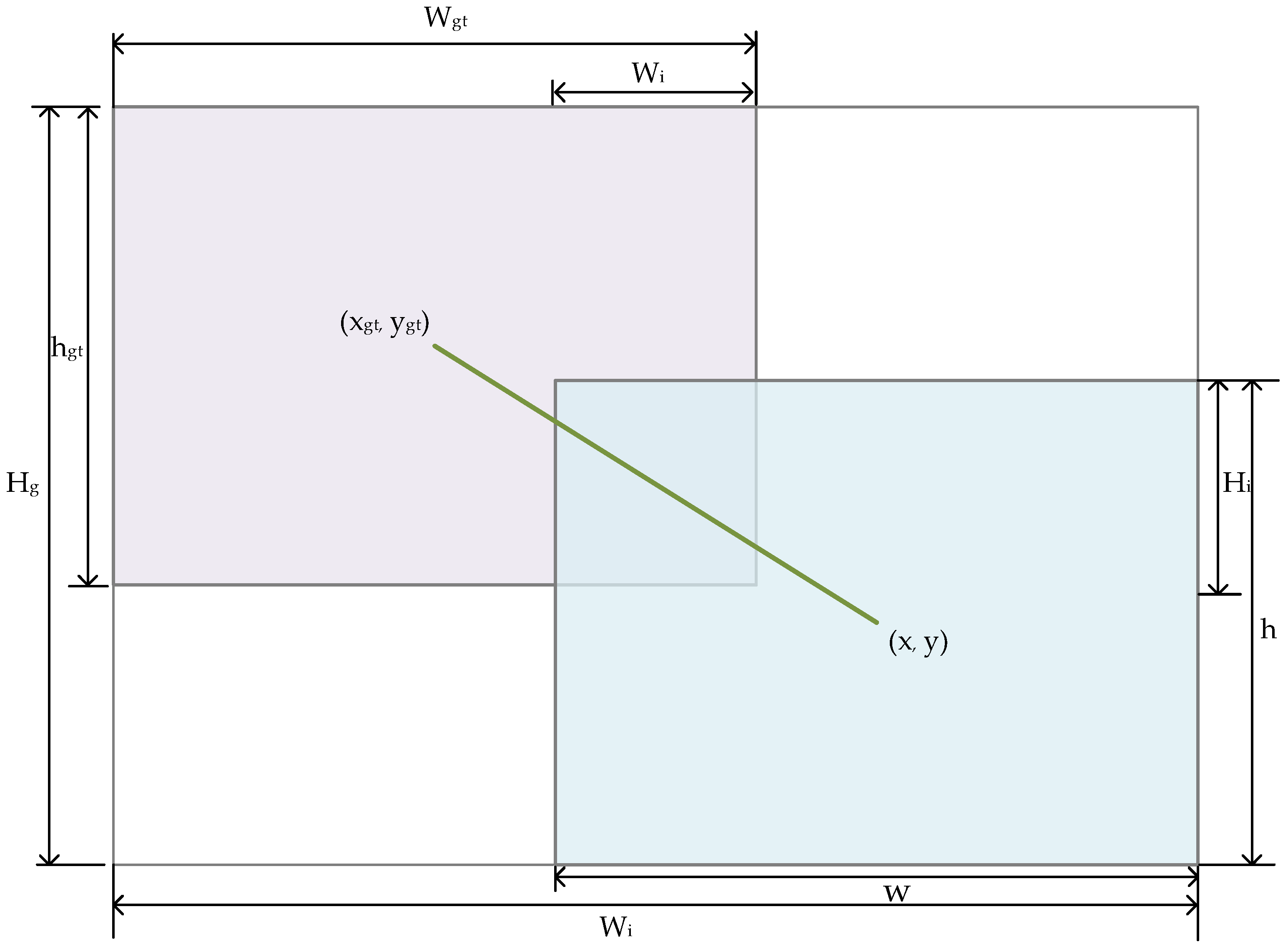

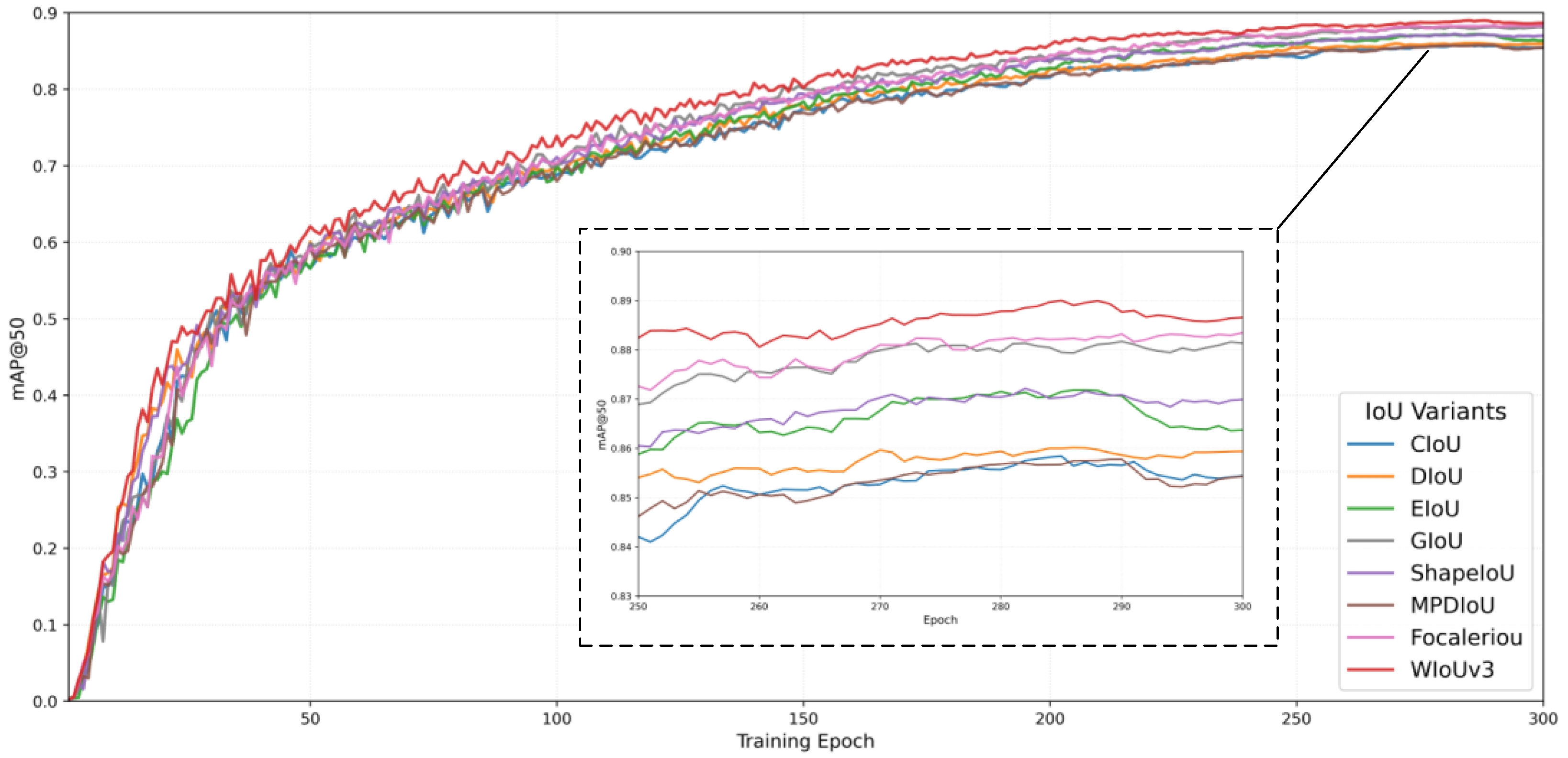

3.4. Comparative Experiment of Loss Functions

To analyze how different loss functions affect the detection performance of YOLO11n, and to assess the advantage of WIoUv3 in target localization and bounding box regression, we replaced the model’s original CIoU loss in turn with several advanced bounding box regression losses: GIoU, DIoU, EIoU, ShapeIoU, MPDIoU, FocalerIoU, and WIoUv3. All variants were evaluated under the same experimental conditions on the same dataset. Table 4 reports the precision, recall, mAP@50, and mAP@50:95 obtained with each loss function.
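To make the comparison concrete, the sketch below computes three of the compared losses for axis-aligned boxes given as (x1, y1, x2, y2): GIoU, DIoU, and the CIoU baseline, following their standard published definitions. It is a plain-Python illustration, not the loss code of the YOLO11 training framework, which operates on batched tensors and adds its own numerical safeguards.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def _iou_and_enclosure(p: Box, g: Box):
    """Return IoU, union area, and the width/height of the smallest enclosing box."""
    iw = max(0.0, min(p[2], g[2]) - max(p[0], g[0]))
    ih = max(0.0, min(p[3], g[3]) - max(p[1], g[1]))
    inter = iw * ih
    union = (p[2] - p[0]) * (p[3] - p[1]) + (g[2] - g[0]) * (g[3] - g[1]) - inter
    cw = max(p[2], g[2]) - min(p[0], g[0])
    ch = max(p[3], g[3]) - min(p[1], g[1])
    return inter / union, union, cw, ch


def giou_loss(p: Box, g: Box) -> float:
    """1 - IoU + (enclosing area - union) / enclosing area."""
    iou, union, cw, ch = _iou_and_enclosure(p, g)
    return 1.0 - iou + (cw * ch - union) / (cw * ch)


def diou_loss(p: Box, g: Box) -> float:
    """1 - IoU + squared centre distance / squared enclosing-box diagonal."""
    iou, _, cw, ch = _iou_and_enclosure(p, g)
    rho2 = ((p[0] + p[2] - g[0] - g[2]) ** 2 + (p[1] + p[3] - g[1] - g[3]) ** 2) / 4.0
    return 1.0 - iou + rho2 / (cw ** 2 + ch ** 2)


def ciou_loss(p: Box, g: Box) -> float:
    """DIoU plus an aspect-ratio consistency term alpha * v."""
    iou, _, _, _ = _iou_and_enclosure(p, g)
    wp, hp = p[2] - p[0], p[3] - p[1]
    wg, hg = g[2] - g[0], g[3] - g[1]
    v = (4.0 / math.pi ** 2) * (math.atan(wg / hg) - math.atan(wp / hp)) ** 2
    alpha = v / ((1.0 - iou) + v) if v > 0 else 0.0
    return diou_loss(p, g) + alpha * v
```

For two non-overlapping unit boxes one unit apart, plain IoU loss is stuck at 1 regardless of distance, while GIoU (4/3 here) and DIoU (1.4 here) still provide a signal that pulls the prediction toward the target.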

The results show that WIoUv3 achieves the best overall performance. It reaches an mAP@50 of 88.9% and an mAP@50:95 of 58.6%, improvements of 3.2 and 2.0 percentage points, respectively, over the CIoU baseline. Although its mAP@50 gain over GIoU and FocalerIoU is modest (approximately 0.7 percentage points) and its recall is 0.2 percentage points lower than that of EIoU, WIoUv3 achieves the highest precision (P) of 88.3%, 1.8 percentage points above the second-best loss, FocalerIoU. This gain matters because higher precision means fewer false positives, so more of the predicted boxes correspond to real lesions. Thus, while some individual gains are marginal, WIoUv3 strikes the best balance among precision, recall, and detection accuracy, making it the most well-rounded loss function among those evaluated.
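For reference, WIoU v3 as defined in the Wise-IoU paper by Tong et al. multiplies the IoU loss by a distance-based attention term R_WIoU and a non-monotonic focusing coefficient r = β / (δ·α^(β−δ)), where the outlier degree β is the box’s IoU loss divided by the running mean of the IoU loss. The pure-Python sketch below follows that definition under these assumptions: boxes are (x1, y1, x2, y2), the running mean is passed in rather than tracked with momentum, and α = 1.9, δ = 3 are used as defaults (values commonly used with WIoU v3; the hyperparameters chosen for YOLO-MSCM are not stated in this section).

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union


def wiou_v3(pred: Box, gt: Box, mean_l_iou: float,
            alpha: float = 1.9, delta: float = 3.0) -> float:
    """WIoU v3 = r * R_WIoU * L_IoU for one box pair.

    mean_l_iou is the running mean of L_IoU (tracked with momentum during
    training, passed in here). In a real training loop the enclosing-box term
    and the numerator of beta are detached from the gradient.
    """
    l_iou = 1.0 - iou(pred, gt)
    # Distance attention: centre offset over the enclosing box's squared diagonal
    dx = (pred[0] + pred[2] - gt[0] - gt[2]) / 2.0
    dy = (pred[1] + pred[3] - gt[1] - gt[3]) / 2.0
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    r_wiou = math.exp((dx * dx + dy * dy) / (wg * wg + hg * hg))
    # Outlier degree: > 1 for boxes worse than average, < 1 for better ones
    beta = l_iou / mean_l_iou
    # Non-monotonic focusing coefficient; equals 1 when beta == delta
    r = beta / (delta * alpha ** (beta - delta))
    return r * r_wiou * l_iou
```

The coefficient r tends to zero both for very high-quality boxes (β near 0) and for extreme outliers (large β), so boxes of ordinary quality receive the largest gradient gain. This is the mechanism behind the suppression of harmful gradients from low-quality boxes described in the introduction.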

To compare intuitively how the loss functions affect model accuracy, their accuracy curves are plotted in Figure 10. After the same number of training epochs, WIoUv3 shows a more stable convergence trend and reaches higher final accuracy than the other loss functions, indicating that it improves the model’s learning efficiency while maintaining training stability.

3.5. Ablation Experiment

To further evaluate the contribution of each component, this section conducts a series of ablation experiments with YOLO11 as the baseline. Eight configurations were tested on the same dataset to assess the effects of the proposed MCSA and SimRepHMS modules, the introduced DynamicConv module, and the WIoUv3 loss function on detection performance. The results are summarized in Table 5.

Table 5 shows that the unmodified baseline achieves an mAP@50 of 85.7% and an mAP@50–95 of 56.6%, with 2.58M parameters and 6.3 GFLOPs. Adding only the MCSA module raises mAP@50 to 89.9% and mAP@50–95 to 59.9%, a substantial accuracy gain, while the parameter count and computational cost remain unchanged, demonstrating that the module is lightweight. Introducing only the SimRepHMS module yields an mAP@50 of 89.2% and an mAP@50–95 of 59.5%; this considerable gain comes with a slight increase in both parameter count and computational cost. The DynamicConv module alone has a smaller effect: mAP@50 rises to 86.5%, while mAP@50–95 falls slightly to 56.3%, and the model largely retains its lightweight advantage.

When MCSA is combined with SimRepHMS, mAP@50 decreases by 0.2 percentage points and mAP@50–95 drops by 1.1 percentage points. Similarly, combining SimRepHMS with DynamicConv lowers mAP@50 and mAP@50–95 by 2.1 and 2.4 percentage points, respectively. Structural analysis indicates that in both cases the decline stems from the multi-branch architecture of SimRepHMS. MCSA selectively enhances key features and suppresses irrelevant responses through channel and spatial attention; when it is paired with SimRepHMS, however, the independent convolutions in the SimRepHMS branches reintroduce new feature response patterns, and some branches reactivate noisy regions that MCSA had suppressed. A similar issue arises with DynamicConv, which relies on the multi-branch structure of SimRepHMS to generate dynamic convolution kernels: once SimRepHMS is reparameterized into a single convolution kernel, its feature processing changes, so the features produced by DynamicConv no longer match the actual processing path, and performance drops. When MCSA, SimRepHMS, and DynamicConv are used together, MCSA first purifies the input features, which makes the dynamic kernels generated by DynamicConv more reliable and the features it produces more discriminative. These features are then passed to SimRepHMS, whose multi-branch structure fuses them efficiently, further expanding the receptive field and contextual information and ultimately improving detection performance.
As a result, with MCSA, SimRepHMS, and DynamicConv combined, mAP@50 increases to 90.4%, although mAP@50–95 improves by only 0.6 percentage points. Finally, integrating all four components, including the WIoUv3 loss function, gives the best overall performance: mAP@50 reaches 91.9% and mAP@50–95 reaches 60.4%, with 2.88M parameters and 7.8 GFLOPs. This indicates that WIoUv3 improves the precision of bounding box regression and thereby further enhances overall detection performance.
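The reparameterization issue discussed above rests on linearity: parallel convolution branches applied to the same input can be folded into a single kernel by zero-padding the smaller kernels to a common size and summing them. The pure-Python sketch below checks this for a 3×3 branch plus a 1×1 branch on a single-channel input with zero “same” padding. It illustrates the general structural-reparameterization idea popularized by RepVGG and omits the batch-normalization folding and multi-channel handling that a real SimRepHMS implementation would need.

```python
from typing import List

Mat = List[List[float]]


def conv2d_same(x: Mat, k: Mat) -> Mat:
    """Single-channel cross-correlation, stride 1, zero padding, odd kernel."""
    h, w, kh, kw = len(x), len(x[0]), len(k), len(k[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for u in range(kh):
                for v in range(kw):
                    ii, jj = i + u - ph, j + v - pw
                    if 0 <= ii < h and 0 <= jj < w:
                        s += x[ii][jj] * k[u][v]
            out[i][j] = s
    return out


def pad_to(k: Mat, size: int) -> Mat:
    """Zero-pad an odd square kernel to size x size, keeping it centred."""
    off = (size - len(k)) // 2
    out = [[0.0] * size for _ in range(size)]
    for u, row in enumerate(k):
        for v, val in enumerate(row):
            out[u + off][v + off] = val
    return out


def merge_branches(kernels: List[Mat]) -> Mat:
    """Fold parallel branches into one kernel: pad to the largest size, then add."""
    size = max(len(k) for k in kernels)
    padded = [pad_to(k, size) for k in kernels]
    return [[sum(p[u][v] for p in padded) for v in range(size)] for u in range(size)]
```

After merging, inference runs a single convolution instead of several branches. The flip side, as the ablation discussion notes, is that anything that depended on the separate branches no longer sees them once they are fused.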

In summary, the stepwise integration of MCSA, SimRepHMS, DynamicConv, and WIoUv3 validates the contribution of each component to detection accuracy and their ability to work synergistically. The model achieves higher detection accuracy while keeping computational cost under control, which supports future deployment on edge devices and makes it well suited to high-precision, real-time tasks such as crop disease identification.

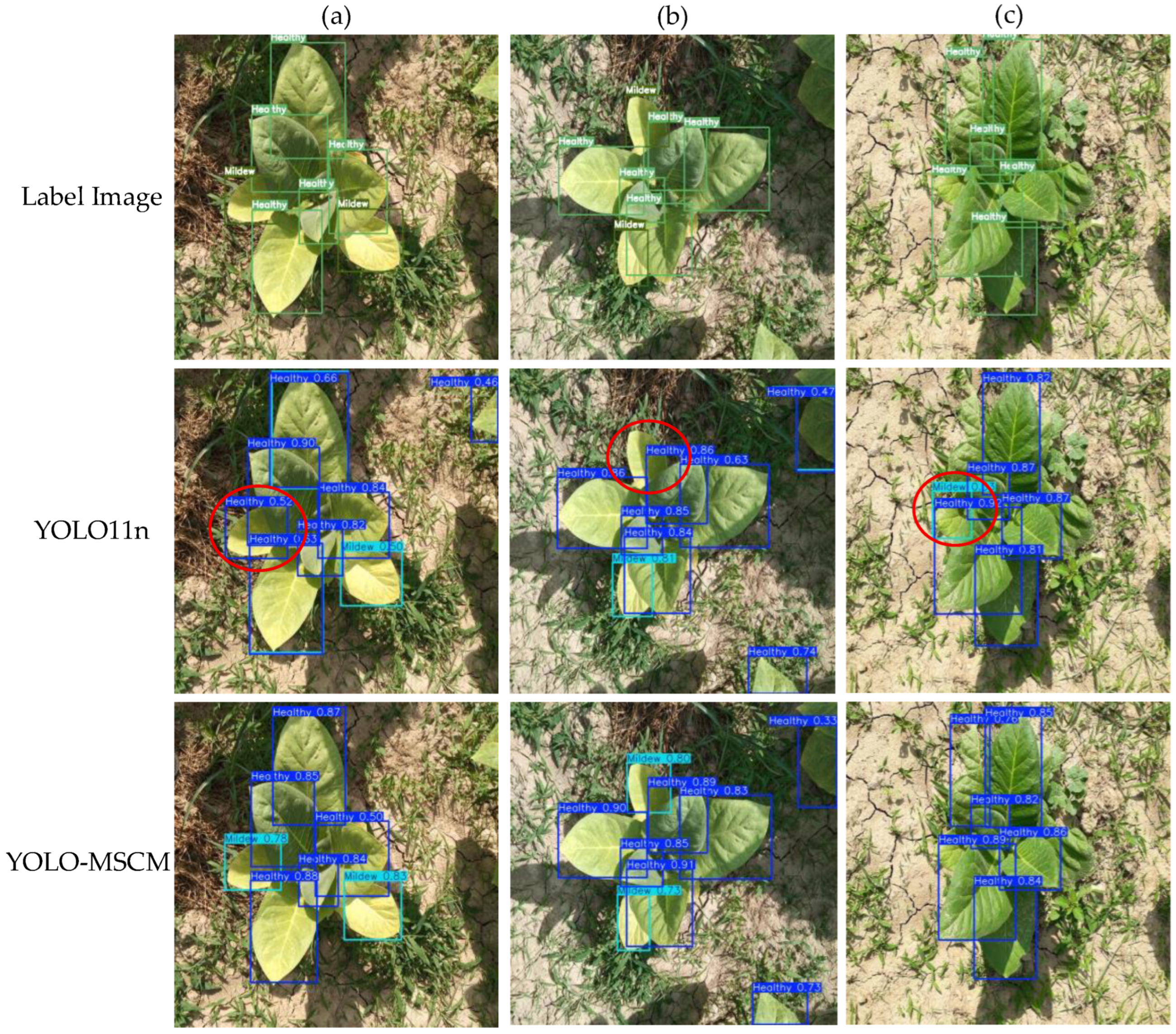

3.6. Improved YOLO-MSCM Comparison Experiment

To evaluate YOLO-MSCM for crop disease detection, we compared it comprehensively with the lightweight object detection model YOLO11n. As shown in Table 6, under the same experimental conditions YOLO-MSCM outperforms YOLO11n on all key evaluation metrics, achieving a precision (P) of 88.8%, a recall (R) of 85.5%, an mAP@50 of 91.9%, and an mAP@50:95 of 60.3%. These are improvements of 6.1, 9.1, 6.2, and 3.7 percentage points, respectively, over YOLO11n, demonstrating better target recognition and higher detection accuracy. In terms of model complexity, YOLO-MSCM has 2.88M parameters and a computational cost of 7.8 GFLOPs; compared with YOLO11n (2.58M parameters, 6.3 GFLOPs), it adds a modest computational overhead while delivering a significant gain in detection performance. This indicates that, by integrating multi-level spatial perception with contextual aggregation, YOLO-MSCM increases the model’s sensitivity to lesion regions and yields more reliable and generalizable feature representations while remaining lightweight.
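Because the gains quoted above are differences between two percentages, they are absolute changes in percentage points; a relative change divides by the baseline instead. The short computation below applies both views to values stated in the paper (the baseline mAP@50 of 85.7% reported in Sections 3.3 and 3.5, and the complexity figures above), which helps put “modest overhead” and “significant gain” on the same footing.

```python
def pp_change(baseline: float, new: float) -> float:
    """Absolute change in percentage points between two percentages."""
    return new - baseline


def relative_change(baseline: float, new: float) -> float:
    """Relative change, in percent of the baseline value."""
    return (new - baseline) / baseline * 100.0


# mAP@50 (%) of YOLO11n and YOLO-MSCM, as reported in the paper
print(round(pp_change(85.7, 91.9), 1))        # 6.2 percentage points
print(round(relative_change(85.7, 91.9), 1))  # 7.2 (% relative gain)
# Relative overhead in parameters (M) and computation (GFLOPs)
print(round(relative_change(2.58, 2.88), 1))  # 11.6 (% more parameters)
print(round(relative_change(6.3, 7.8), 1))    # 23.8 (% more GFLOPs)
```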

In field environments, the main factors affecting detection performance include insufficient lighting and occlusion of the target objects. To analyze the model’s performance in complex agricultural scenarios, we selected representative images from the test set, shown in Figure 11, to evaluate the model’s accuracy and stability in realistic application scenarios. As panels (a) and (b) show, YOLO11 tends to miss lesion areas on crop leaves under low-light conditions, especially with strong background interference, leading to incorrect or missed detections. In comparison, YOLO-MSCM incorporates SimRepHMS, which uses local context modeling to combine information from the target and its surrounding regions; this enriches the feature representation and helps the model capture subtle or hard-to-distinguish details in low light. Panel (c) shows that when the target is heavily occluded, YOLO11 fails to extract sufficient effective features, so lesion recognition deteriorates and detections are incorrect. In contrast, YOLO-MSCM employs the MCSA mechanism, which strengthens detection across multiple scales, and maintains relatively good detection performance even under partial occlusion. These results indicate that YOLO-MSCM is more robust and practical in complex field environments and adapts better to real-world farming contexts with varying lighting or occlusion.
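How much surrounding context a cascade of small convolutions can see follows from standard receptive-field arithmetic: with stride 1, a layer with kernel size k and dilation d enlarges the receptive field by (k − 1)·d, and depthwise separability does not change this. The sketch below returns the receptive field after each stage of a cascade, i.e., the scales available to a multi-branch design that taps intermediate outputs, as the “cascaded multi-scale receptive fields” of SimRepHMS suggest. The kernel sizes and dilations in the example are hypothetical; this section does not specify the configuration of SimRepHMS.

```python
from typing import List, Sequence, Tuple


def cascade_receptive_fields(layers: Sequence[Tuple[int, int]]) -> List[int]:
    """Receptive field (pixels) after each stage of stacked stride-1 convolutions.

    layers: (kernel_size, dilation) pairs in application order. Each stage adds
    (kernel_size - 1) * dilation to the receptive field of the previous stage.
    """
    fields, rf = [], 1
    for kernel_size, dilation in layers:
        rf += (kernel_size - 1) * dilation
        fields.append(rf)
    return fields


# Hypothetical cascade: three 3x3 convolutions with dilations 1, 2, and 3
print(cascade_receptive_fields([(3, 1), (3, 2), (3, 3)]))  # [3, 7, 13]
```

Tapping the three stages gives context windows of 3, 7, and 13 pixels from small kernels alone, which is one way a module can relate a lesion to its surroundings without a large kernel.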

3.7. Comparison of Different Detection Models

To assess YOLO-MSCM for crop disease detection, a range of advanced detection architectures were selected as benchmarks, including Faster R-CNN-VGG, RT-DETR-R50, the YOLOv8 to YOLOv13 family, and the recently introduced domain-specific YOLO-Tobacco. All models were trained and evaluated on the same dataset, with the same training strategy and experimental environment, to ensure a fair and comprehensive comparison. As shown in Table 7, YOLO-MSCM achieved superior performance across all accuracy-related evaluation metrics.

In terms of detection accuracy, YOLO-MSCM achieved a precision of 88.9% and a recall of 85.4%, the highest among all compared models. This indicates that the model keeps the false-positive rate relatively low while reliably identifying infected areas, reducing missed detections. It also achieved the highest mAP@50 (91.9%) and mAP@50:95 (60.4%) among all compared models, indicating robust detection of crop diseases across varying object scales and occlusion levels.

YOLO-MSCM also shows notable advantages in efficiency and deployment feasibility. It contains only 2.88M parameters and requires 7.8 GFLOPs for inference, significantly less than most high-performance models such as YOLO11s and YOLOv13s. At the same time, it reaches an inference speed of 181.8 FPS, indicating strong potential for real-time use on resource-constrained edge platforms. By comparison, YOLO-Tobacco has a similar parameter count (2.47M), but its mAP@50:95 is only 52.5%, leaving considerable room for improvement in detection accuracy.

4. Discussion

Strict prevention and control of crop diseases is a prerequisite for the economic returns of crop cultivation. However, complex field environments pose significant challenges to automatic plant disease detection. To improve detection accuracy and robustness under complex agricultural conditions, we introduced YOLO-MSCM, an optimized lightweight object detection framework. In comparative experiments, YOLO-MSCM outperformed current mainstream detection models on multiple accuracy-related metrics. However, the added modules deepen the network, which increases inference time and correspondingly lowers the frame rate (FPS). Although YOLO-MSCM still runs at 181.8 FPS, meeting basic real-time requirements, deployment in real agricultural environments remains challenging because of hardware limitations such as constrained processing power and memory throughput, so its parameter count and computational cost may affect deployment efficiency and system stability. In addition, the model’s generalization across climatic conditions and crop growth stages requires further validation. The dataset used in this study was collected mainly in clear weather, with relatively consistent lighting and fairly fixed capture angles, and it does not fully cover crops at different growth stages. These factors limit the environmental and visual diversity of the data. Obtaining accurately labeled images of diverse crop diseases under varied field conditions is difficult, and our data collection was constrained by practical conditions.
Nevertheless, the dataset was carefully curated to include key disease types of major crops, making it a valid benchmark for model evaluation under controlled yet representative agricultural conditions. Experimental results show that, with targeted data augmentation and attention mechanisms, YOLO-MSCM still detects diseases well despite the limited data diversity. The lack of environmental variation nevertheless remains an objective limitation of this study and a challenge that must be addressed in practical agricultural deployment. Future work will focus on model compression and dataset expansion.

5. Conclusions

In the context of the intelligent development of modern agriculture, automated crop disease detection has become an important means of improving agricultural production efficiency and ensuring food security. Because crop diseases are common and highly damaging, this paper proposes YOLO-MSCM, an improved lightweight object detection model that aims to make existing detectors more accurate and robust in complex field environments. Built on YOLO11n, YOLO-MSCM integrates multi-scale spatial perception and local context modeling through the proposed MCSA and SimRepHMS modules, which improve the model’s feature extraction and fusion. In addition, DynamicConv is introduced to further enhance feature representation, and the WIoUv3 loss function is adopted to optimize bounding box regression. On the dataset used in this study, YOLO-MSCM achieves a precision (P) of 88.9%, a recall (R) of 85.4%, an mAP@50 of 91.9%, and an mAP@50:95 of 60.4%. Compared with the baseline YOLO11n, it improves precision by 6.1 percentage points, recall by 9.1 percentage points, mAP@50 by 6.2 percentage points, and mAP@50:95 by 3.7 percentage points, verifying the effectiveness of the improvements. Moreover, comparative experiments show that YOLO-MSCM surpasses all compared mainstream models in detection accuracy, demonstrating its advancement in crop disease detection.

Although YOLO-MSCM detects crop diseases accurately, some limitations remain. To further optimize deployment efficiency, future work will apply compression methods such as pruning and distillation to reduce parameter count and computational overhead while increasing frame processing speed and real-time responsiveness. In addition, we plan to expand the current dataset with disease images captured under different weather conditions (such as cloudy, rainy, and low-light conditions) and from various perspectives (such as oblique shots and close-ups). We also intend to collect field data across multiple regions and growing seasons to build a larger-scale, real-world scenario dataset. The new dataset will gradually include more crops and disease types and will be annotated with disease severity levels. On this expanded dataset, we will perform a comprehensive Grad-CAM++ analysis to visualize and interpret model attention patterns across diverse and challenging conditions. Insights from these visualizations, particularly regarding false positives, false negatives, and misaligned feature activations, will be used to iteratively refine the existing modules of YOLO-MSCM. This data-driven refinement will not only enhance the model’s cross-species generalization but also help YOLO-MSCM evolve from a specialized detection framework into a more universal and robust solution for real-world plant disease detection.

At the same time, we acknowledge that the current evaluation primarily relies on theoretical computational load and average inference speed and has yet to encompass more granular system-level metrics such as memory usage, power consumption, and hardware utilization. In future work, we plan to introduce more advanced performance evaluation metrics—such as energy efficiency ratio (FLOPs/Watt), memory bandwidth utilization, and core occupancy—and conduct in-depth analyses across various target hardware platforms (e.g., Jetson series and Raspberry Pi) to further quantify the model’s comprehensive performance in real-world deployment environments. Furthermore, to enhance the statistical rigor of our experimental comparisons, we will adopt advanced validation methods, including paired hypothesis testing (e.g., paired t-test and Wilcoxon signed-rank test) and effect size analysis, to systematically assess model performance in subsequent experiments. This combined approach will not only improve the reliability and reproducibility of our results but also provide a more holistic and scientifically robust evaluation framework for edge-aware plant disease detection models.
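As an illustration of the paired testing and effect-size analysis planned above, the sketch below computes the paired t statistic for matched scores of two models (for example, the same seeds or folds), t = mean(d) / (s_d / √n), where d holds the paired differences, together with the paired effect size d_z = mean(d) / s_d. It uses only the standard library and stops at the statistic; converting t to a p-value requires the Student t distribution with n − 1 degrees of freedom, which SciPy’s `scipy.stats.ttest_rel` provides along with the full test (`scipy.stats.wilcoxon` covers the signed-rank alternative).

```python
import math
from statistics import mean, stdev
from typing import Sequence


def paired_t_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Paired t statistic for matched scores a[i], b[i] (same seed or fold)."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long samples with at least two pairs")
    d = [x - y for x, y in zip(a, b)]
    sd = stdev(d)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return mean(d) / (sd / math.sqrt(len(d)))


def cohens_dz(a: Sequence[float], b: Sequence[float]) -> float:
    """Paired effect size: mean difference divided by its standard deviation."""
    d = [x - y for x, y in zip(a, b)]
    return mean(d) / stdev(d)
```

With only three runs per model, as in the current protocol, the test has few degrees of freedom, so reporting the effect size alongside t gives a more informative picture than a p-value alone.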