1. Introduction

Urbanization and industrialization have fueled economic growth in recent decades, but they have also caused a growing number of air pollution problems [1]. The Air Pollution Prevention and Control Action Plan (APPCAP) is one of the policies the state has implemented since 2013 to address air pollution, and an increasing number of people are deeply concerned about the sustainable and healthy development of the human environment [2]. The World Health Organization (WHO) has determined that, of all air pollutants, PM2.5 poses the greatest risk to human health because it causes a number of severe illnesses, including lung cancer and cardiovascular disease [3,4]. Accurate PM2.5 concentration prediction can give citizens and the government early notice so that they can take preventive action. Achieving long-term, accurate prediction of PM2.5 concentrations is therefore a critical step in advancing society’s sustainable growth and in fostering peaceful coexistence between humans and the natural world [5].

Air pollutant concentration data take the form of time series. Methods for predicting air pollutant concentrations have evolved through three stages: statistical techniques based on meteorological principles, simple machine learning methods, and deep learning methods, which have drawn the attention of numerous researchers in recent years [6]. Statistical methods often derive complex mathematical and physical equation models from atmospheric dynamics and chemical reactions [7]. The two most popular statistical prediction techniques are multiple linear regression models [8] and Bayesian statistical models [9]. However, these models usually assume ideal conditions, which makes them unsuitable for the complex, nonlinear problems encountered in real-world applications. Furthermore, statistical models predict poorly and require a large amount of computation [10]. Better methodologies are therefore required to increase prediction accuracy and efficiency and to adapt to real prediction scenarios.

The second class of methods comprises simple machine learning methods, which use historical data analysis, pattern recognition, and manual feature engineering to estimate air pollutant concentrations. Common machine learning methods include decision trees [11], k-means [12], and support vector machines (SVM) [13]. In a recent study, Yang et al. [14] used a Bootstrap-XGBoost model in conjunction with a resampling technique to forecast the air quality index (AQI) of Xi’an over the following 15 days and found that the model predicted well. Zheng et al. [15] proposed 16 distinct ensemble learning methods that combine logistic regression (LR), CatBoost, random forest (RF), and other individual learners. Comparison experiments demonstrated that ensemble models typically outperform individual machine learning models. In real-world applications, machine learning methods outperform traditional statistical methods on the nonlinear problem of air pollutant concentration prediction. However, they still fall short when dealing with more complicated nonlinear interactions and larger datasets. Moreover, most machine learning methods depend on manual feature engineering to extract useful features from the data, which results in poor generalization ability [16].

Deep learning methods have gained popularity in many domains because of their strengths in nonlinear modeling and data processing. They have proven highly effective in time series prediction, particularly for air pollutant concentration prediction [17]. Ragab et al. [18] proposed a one-dimensional deep convolutional neural network (1D-CNN) optimized by exponential adaptive gradient (EAG) and showed that it can effectively predict the air pollution index (API). Chang et al. [19] aggregated three long short-term memory (LSTM) models into a single prediction model called ALSTM, which improved prediction accuracy compared with SVM, GBTR, and other models. The gated recurrent unit (GRU) is a simplified version of LSTM whose optimized structure allows it to extract temporal information more efficiently. Similarly, temporal convolutional networks (TCNs) are CNN variants designed specifically for time series modeling. Saif-ul-Allah et al. [20] combined the model planar projection data analysis method (PMP) with GRUs to build a prediction model, and Samal et al. [21] built a multi-output temporal convolutional network autoencoder model (MO-TCNA); both deep learning models achieved good results in predicting air pollutant concentrations. On big data problems, deep learning models typically perform better than statistical models and basic machine learning models. However, deep learning models with only a single structure perform poorly on large spatiotemporal datasets that are rich in long-term and spatial features [22].

Hybrid deep learning models can reach a higher performance ceiling by combining the benefits of several single deep learning models, and they have emerged in recent years for training on spatiotemporal big data. To extract spatiotemporal features from air quality data, Zhu et al. [23] developed a hybrid model named APSO-CNN-Bi-LSTM, which combines a CNN for spatial features with an LSTM for temporal features. Faraji et al. [24] developed a combined 3D CNN-GRU model; according to their experiments, it can identify long-term temporal dependence in air pollutant data and therefore has better application prospects for large spatiotemporal datasets. Zhang et al. [25] built a hybrid deep learning model, RCL-Learning, that takes the spatiotemporal feature extraction of pollutant and meteorological data as its starting point and performed well in a variety of prediction tasks. RCL-Learning combines the Convolutional Long Short-Term Memory Network (ConvLSTM) with the Residual Network (ResNet). By concentrating on the temporal and spatial correlation of feature sequences, it offers researchers a new research direction.

Additionally, a growing number of researchers are applying attention mechanisms to improve the performance and accuracy of deep learning models. Chen et al. [26] proposed STA-ResConvLSTM, a prediction model enhanced by a spatio-temporal attention (STA) mechanism. Their experiments demonstrate that this attention mechanism improves the model’s perception of spatio-temporal distribution features and thereby increases prediction accuracy. Li et al. [27] proposed a new hybrid model, CBAM-CNN-Bi LSTM, which uses the convolutional block attention module (CBAM) to extract the spatial distribution features of PM2.5. The ability of CBAM to adaptively adjust feature weights greatly helps to increase prediction accuracy. In summary, current deep learning-based models for predicting air pollutant concentrations face two challenges: (1) the models become more complex as the prediction task grows, which leads to low operational efficiency and high overhead; and (2) the models struggle to extract spatiotemporal features from the data. Both challenges limit improvements in prediction performance.

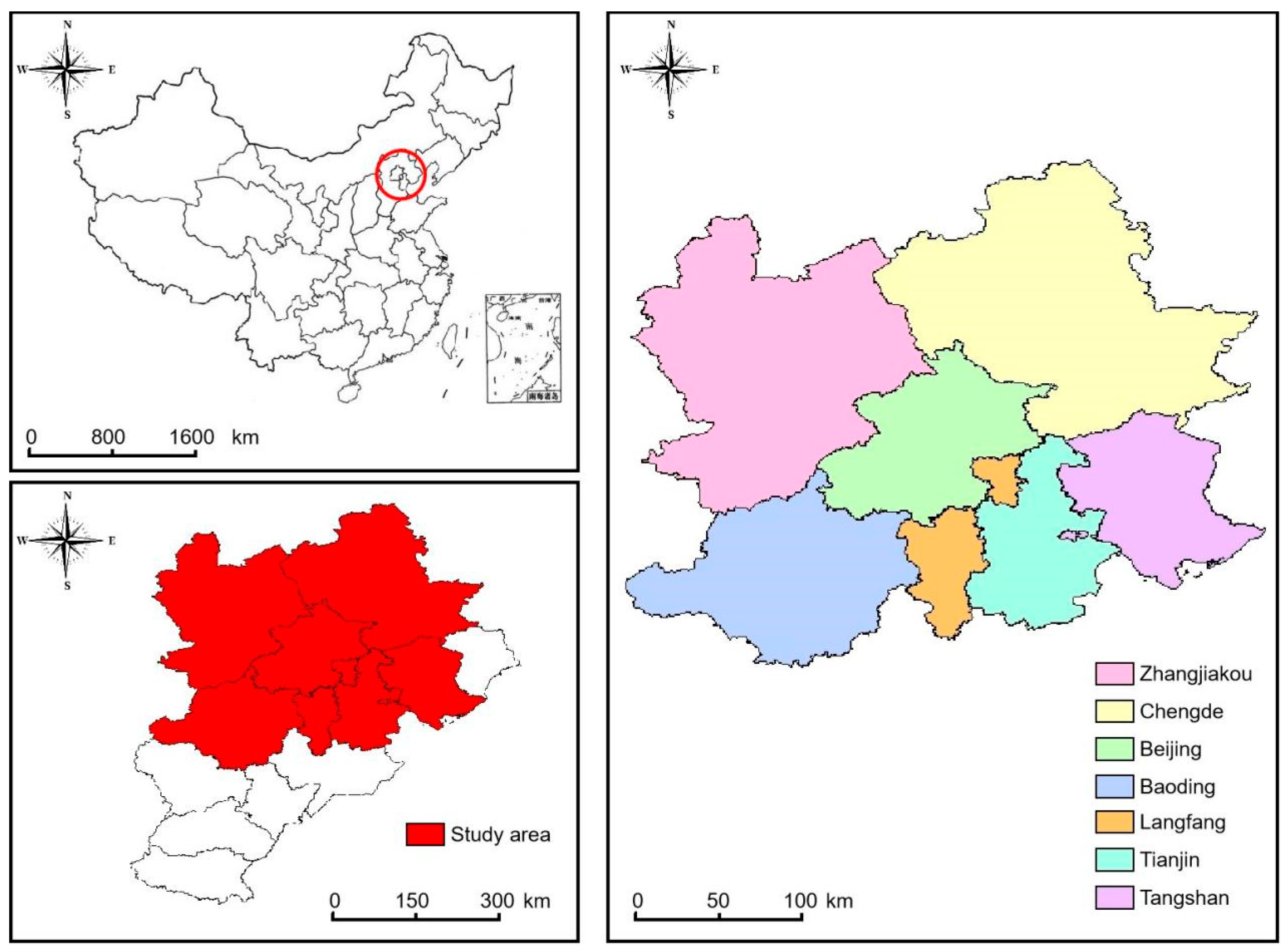

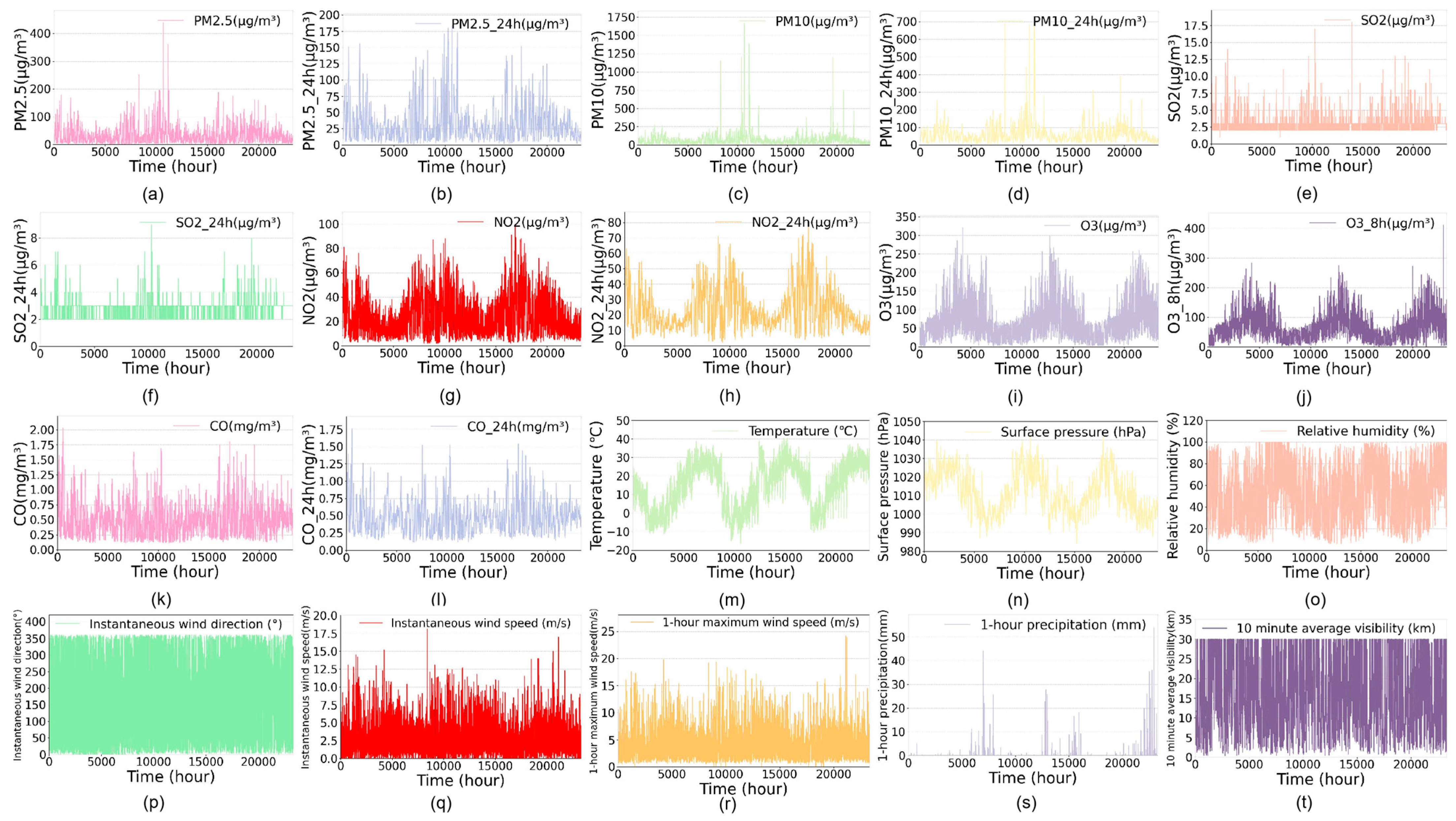

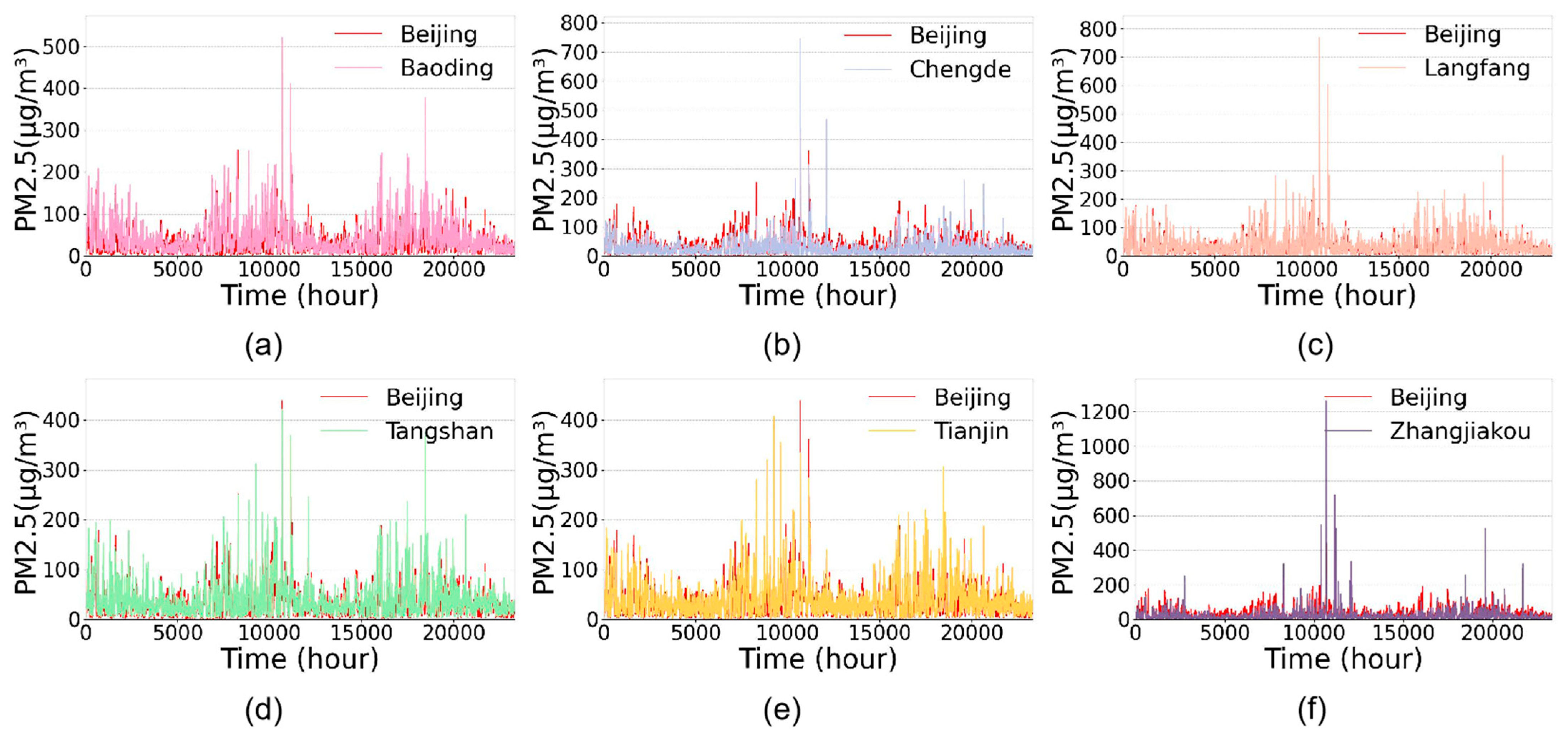

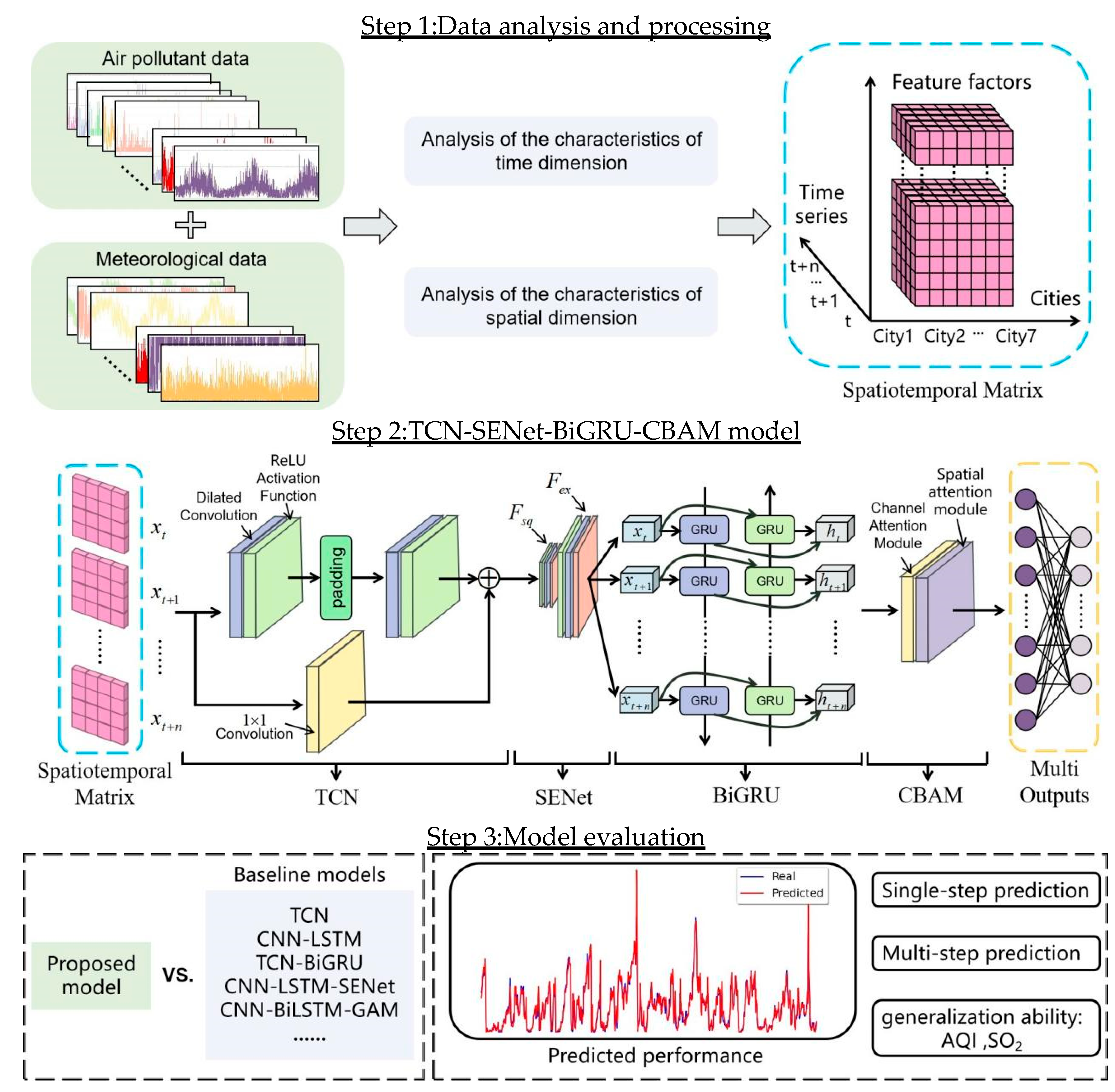

In light of the discussion above, the effectiveness of air pollutant concentration prediction is closely linked to the extraction of spatiotemporal features. To address this issue and increase prediction accuracy, we propose a new hybrid deep learning prediction model called TCN-SENet-BiGRU-CBAM. An improved temporal convolutional network (TCN) and a bi-directional gated recurrent unit (BiGRU) serve as its backbone, and it incorporates two attention mechanisms, SENet and CBAM. The novelty and primary contributions of this work are summarized as follows.

- (1) A method for building datasets based on spatiotemporal correlation analysis. Input feature selection considers not only the meteorological conditions and air pollutants in the forecast area but also highly correlated air pollutants in cities outside the prediction area, which makes dataset construction more rational and scientific;

- (2) Improvement of the traditional TCN: the residual connection layer in the network is moved forward and replaced by a preprocessing operation. Handling the residual connection in advance, during model initialization, reduces runtime overhead, improves the model’s performance and operational efficiency, and avoids repeatedly checking whether the number of channels is unchanged;

- (3) Introduction of the channel attention mechanism SENet after the TCN layer to effectively extract the long-term dependent features of pollutant and meteorological data. SENet dynamically learns the dependencies between channels and selects and enhances the TCN output so as to better capture long-term dependencies;

- (4) Introduction of the hybrid attention mechanism CBAM. The spatial attention module of CBAM operates on the spatial dimension of the feature map, helping the model focus on more discriminative local regions within the output sequence of the BiGRU.
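As an illustration of the correlation-based feature selection in contribution (1), the following is a minimal sketch rather than the procedure used in this work. The function name `select_features_by_pearson`, the threshold of 0.5, the series names, and the synthetic data are all assumptions made for the example.

```python
import numpy as np


def select_features_by_pearson(features, target, threshold=0.5):
    """Keep candidate series whose |Pearson r| with the target meets the threshold.

    `features` maps a (hypothetical) series name to a 1-D array aligned with `target`.
    The threshold value is an assumption, not a figure taken from the paper.
    """
    selected = {}
    for name, series in features.items():
        r = np.corrcoef(series, target)[0, 1]  # Pearson correlation coefficient
        if abs(r) >= threshold:
            selected[name] = float(r)
    return selected


# Synthetic data for illustration only; these are not measurements.
rng = np.random.default_rng(0)
pm25_target = rng.normal(60.0, 20.0, size=500)
candidates = {
    # Pollutant series from a neighbouring city, strongly tied to the target.
    "pm25_neighbor_city": 0.8 * pm25_target + rng.normal(0.0, 5.0, size=500),
    # Meteorological series with a strong negative relationship.
    "wind_speed": -0.05 * pm25_target + rng.normal(3.0, 0.5, size=500),
    # Series unrelated to the target.
    "unrelated_noise": rng.normal(0.0, 1.0, size=500),
}

selected = select_features_by_pearson(candidates, pm25_target, threshold=0.5)
print(selected)
```

Using the absolute value of the coefficient keeps strongly negative relationships as well as strongly positive ones.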

The TCN-SENet-BiGRU-CBAM model can recognize patterns of long-term spatial and temporal change and thereby predict air pollutant concentrations. Experiments conducted on the dataset demonstrate that the proposed model outperforms other advanced deep learning prediction models in terms of prediction accuracy and performance.
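The channel attention described in contribution (3) can be sketched with the generic squeeze-and-excitation pattern. This is a NumPy illustration under stated assumptions, not the authors' implementation: the tensor shapes, the reduction ratio of 4, and the random placeholder weights (which would be learned in a real model) are all hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def se_channel_attention(x, w1, w2):
    """Generic squeeze-and-excitation over a (channels, time) feature map.

    w1 has shape (channels // r, channels) and w2 has shape (channels, channels // r),
    where r is the reduction ratio. Returns the reweighted map and the channel gates.
    """
    squeezed = x.mean(axis=1)                # squeeze: average each channel over time
    hidden = np.maximum(0.0, w1 @ squeezed)  # excitation bottleneck with ReLU
    gates = sigmoid(w2 @ hidden)             # one gate per channel, between 0 and 1
    return x * gates[:, None], gates         # rescale every channel by its gate


# Placeholder input standing in for a TCN output; all values are random.
rng = np.random.default_rng(0)
channels, steps, reduction = 8, 24, 4        # assumed sizes, not from the paper
tcn_output = rng.normal(size=(channels, steps))
w1 = rng.normal(scale=0.5, size=(channels // reduction, channels))
w2 = rng.normal(scale=0.5, size=(channels, channels // reduction))

reweighted, gates = se_channel_attention(tcn_output, w1, w2)
print(reweighted.shape, gates.round(3))
```

The gates depend on all channels through the bottleneck, so each channel's weight reflects its relationship to the others, which is the sense in which the mechanism selects and enhances channels.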

6. Conclusions

A novel hybrid deep learning model is developed in this study to predict air pollutant concentrations. First, Pearson correlation analysis of the study area’s air pollutant and meteorological data guides the selection of model input features. Then, the TCN-SENet-BiGRU-CBAM prediction model is built to make full use of the spatiotemporal feature extraction capabilities of the attention mechanisms SENet and CBAM. Compared with the conventional TCN, the model’s TCN module is improved by converting the network’s residual connection into a preprocessing step, which boosts the model’s performance and operational efficiency. According to the results of single-step and multi-step prediction experiments, the proposed model outperforms other advanced baseline models in prediction accuracy and performance. With SENet and CBAM added, the model captures and localizes deeper features better than single-structure models such as CNN and TCN. It also predicts better during periods of intense concentration fluctuation, at peaks and troughs, and at abrupt change points. In the PM2.5 prediction task for Beijing, the root mean square error (RMSE) and mean absolute error (MAE) of the proposed model range from 5.309 to 14.043 and from 3.507 to 9.200, respectively. Lastly, the model’s strong performance in predicting the concentrations of other air pollutants demonstrates its robustness and generalization ability. The overall improvement in accuracy and performance achieved by the TCN-SENet-BiGRU-CBAM model provides a strong technical foundation for building a more reliable regional air pollution prediction and warning system, and the model is expected to become a basis for air pollution warning and prevention. The primary contributions of this paper are summarized as follows:

- (1) A scientific dataset construction method based on spatio-temporal correlation analysis is demonstrated, which takes into account highly correlated air pollutants in cities outside the prediction area;

- (2) Improvement of the traditional TCN: the network’s residual connection layer is moved forward and transformed into a preprocessing function, which enhances the model’s performance and operational efficiency while lowering runtime overhead;

- (3) Introduction of the channel attention mechanism SENet after the TCN layer, which enhances prediction performance and accuracy by efficiently extracting the long-term dependent features of pollutant and meteorological data;

- (4) Introduction of the hybrid attention mechanism CBAM. The spatial attention module of CBAM, which operates on the spatial dimension of the feature map, helps the model focus on key local patterns in the output sequence of the BiGRU.
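The RMSE and MAE values reported in this section are assumed to follow the standard definitions of these metrics. The short sketch below shows those definitions; the observed and predicted values are invented for illustration and are not results from this study.

```python
import numpy as np


def rmse(y_true, y_pred):
    """Root mean square error: square root of the mean squared difference."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))


def mae(y_true, y_pred):
    """Mean absolute error: mean of the absolute differences."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return float(np.mean(np.abs(y_true - y_pred)))


# Invented example values, not experimental data.
observed = [35.0, 50.0, 80.0, 120.0]
predicted = [30.0, 55.0, 70.0, 130.0]

rmse_value = rmse(observed, predicted)
mae_value = mae(observed, predicted)
print(rmse_value, mae_value)
```

Because RMSE squares the errors before averaging, it penalizes large misses at peaks and abrupt changes more heavily than MAE, which is why the two metrics are usually reported together.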

Although the prediction method proposed in this research has been shown to be effective and applicable, there is still room for improvement. The next step will be to add data from multiple monitoring stations in each region as independent features in order to introduce richer spatial information.