Drowsiness Detection in Drivers: A Systematic Review of Deep Learning-Based Models

Abstract

1. Introduction

2. Methods

2.1. Protocol

2.2. Eligibility Criteria

2.3. Search Strategy

2.4. Data Collection and Extraction

2.5. Data Synthesis

3. Results

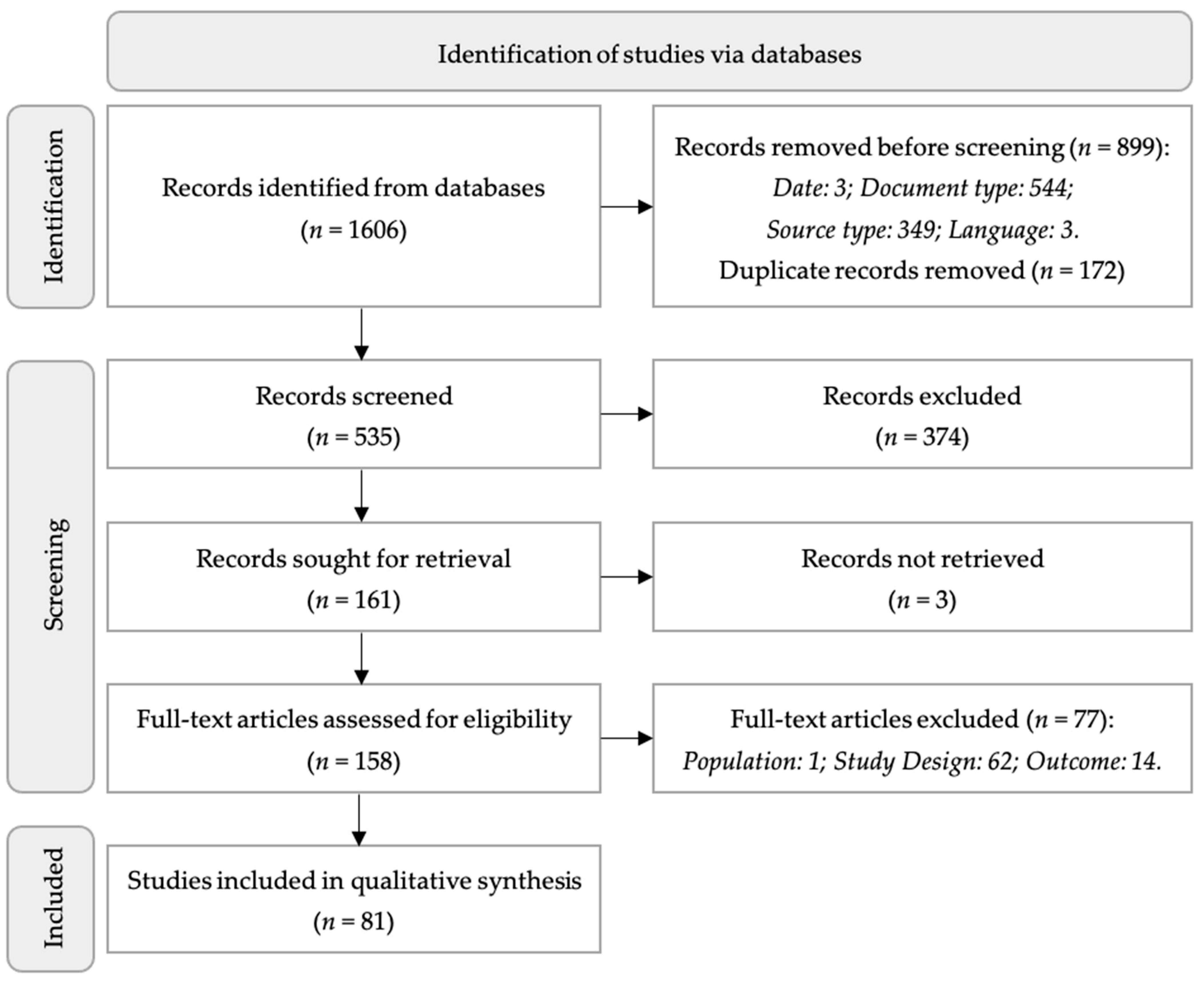

3.1. Study Selection

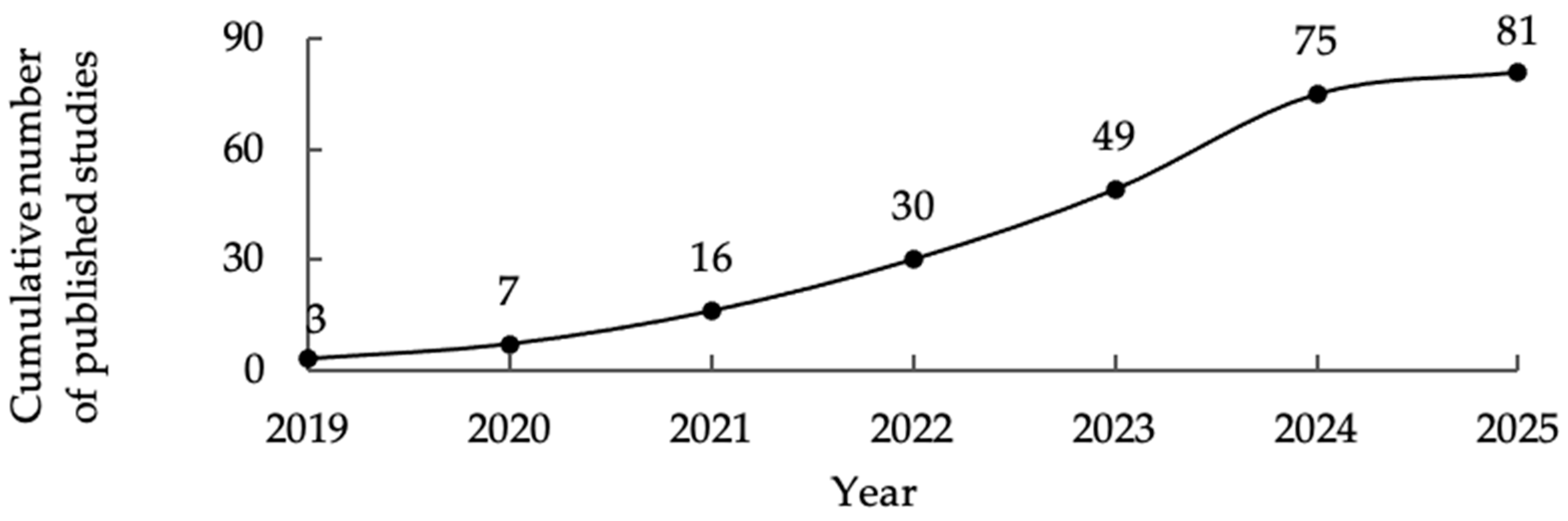

3.2. Overview of Included Studies

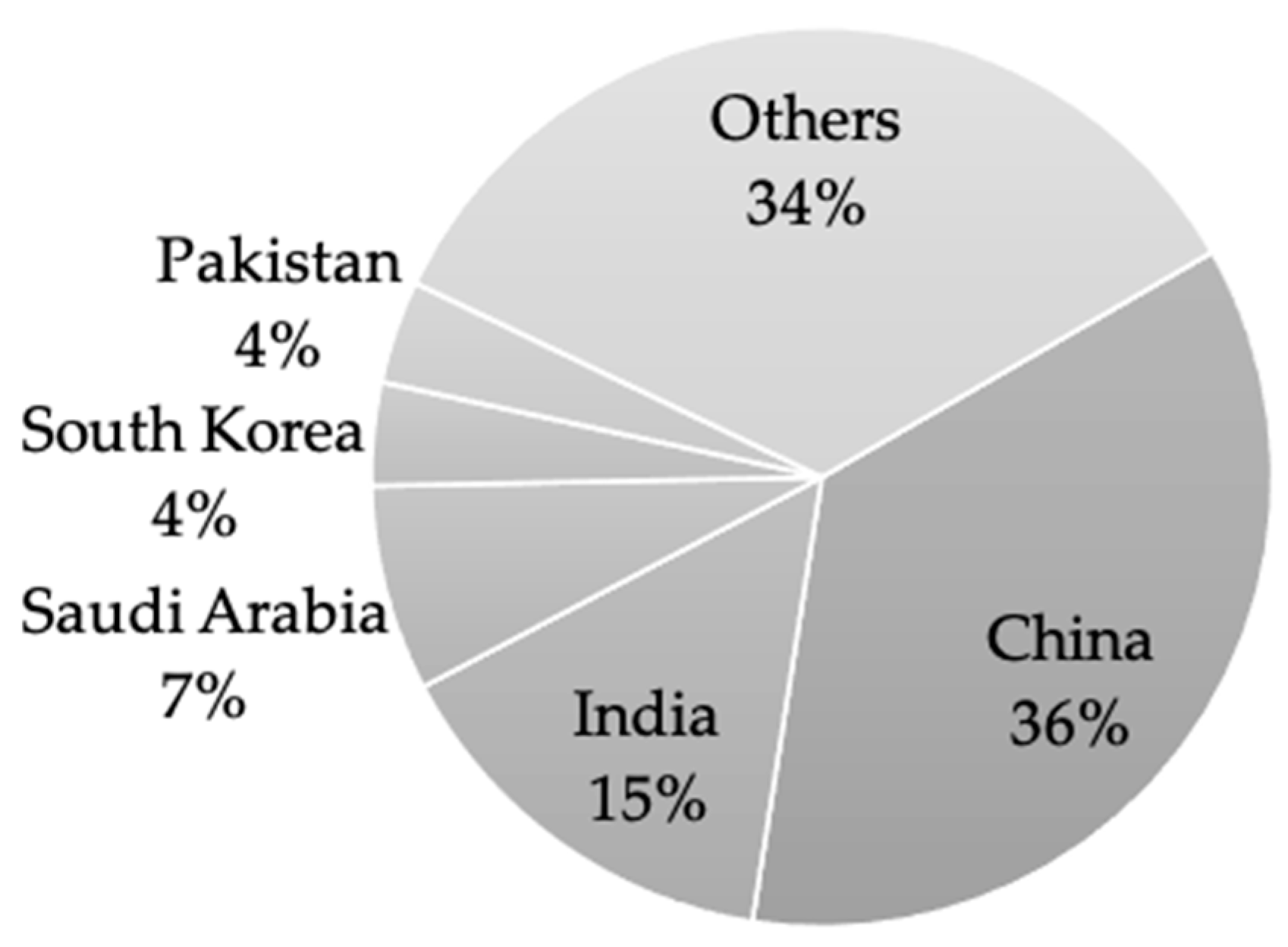

3.2.1. Characteristics of Studies

3.2.2. Contexts of Model Evaluation

3.2.3. Summary of Datasets Used

3.3. Deep Learning Models for Drowsiness Detection (RQ1)

3.3.1. Types and Frequencies of Architectures

3.3.2. Model Objective: Real-Time vs. Offline Detection

3.3.3. Computational Requirements and Feasibility of Deployment

3.4. Model Accuracy and Reliability (RQ2)

3.4.1. Accuracy, Precision, Recall, F1-Score, AUC-ROC Benchmarks

3.4.2. Performance in Different Testing

3.4.3. Handling of False Positives and Negatives

3.4.4. Model Adaptability to Demographics and Driving Scenarios

3.5. Datasets and Data Characteristics (RQ3)

3.5.1. Dataset Sources

3.5.2. Data Modalities

3.5.3. Dataset Size and Diversity

3.5.4. Annotation and Ground Truth Methods

3.6. Challenges and Limitations (RQ4)

3.6.1. Technical Challenges

3.6.2. Real-World Implementation Barriers

3.6.3. Ethical and Privacy Considerations

3.6.4. Strategies to Enhance Model Robustness and Real-World Applicability

4. Discussion

4.1. Key Findings

4.2. Strengths and Limitations of the Review

4.3. Policy Implications

4.4. Future Research Directions

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ADAS | Advanced driver assistance system |
| AI | Artificial intelligence |
| AUC-ROC | Area under the receiver operating characteristic curve |
| CNN | Convolutional neural network |
| DL | Deep learning |
| ECG | Electrocardiogram |
| EEG | Electroencephalogram |
| EOG | Electrooculography |
| ESRA | E-survey of road users’ attitudes |
| GDPR | General data protection regulation |
| GPU | Graphics processing unit |
| GRU | Gated recurrent unit |
| KSS | Karolinska sleepiness scale |
| LSTM | Long short-term memory |
| NTHU-DDD | National Tsing Hua University driver drowsiness detection |
| PRISMA | Preferred reporting items for systematic reviews and meta-analyses |
| PROSPERO | International prospective register of systematic reviews |
| RNN | Recurrent neural network |
| RQ | Research question |
| SADT | Sustained-attention driving task |
| YawDD | Yawning detection dataset |

Appendix A

| Study | Country | Driving Context | DL Model Type(s) | Inference Mode |
|---|---|---|---|---|
| Adhithyaa et al. (2023) [22] | India | Simulated | Multistage Adaptive 3D-CNN | Real-time |
| Ahmed et al. (2022) [23] | India | Simulated | Ensemble CNN with two InceptionV3 modules | Real-time |
| Akrout & Fakhfakh (2023) [24] | Saudi Arabia | Simulated | MobileNetV3 + Deep LSTM | Real-time |
| Alameen & Alhothali (2023) [25] | Saudi Arabia | Simulated | 3D-CNN + LSTM | Real-time |
| Alghanim et al. (2024) [26] | Jordan | Simulated | Inception-ResNetV2 (hybrid CNN with dilated convolutions) | Offline |
| Alguindigue et al. (2024) [27] | Canada | Simulated | SNN, 1D-CNN, CRNN | Real-time |
| Almazroi et al. (2023) [28] | Saudi Arabia | Simulated | MobileNetV3 + SSD + CNN | Real-time |
| Anber et al. (2022) [29] | Saudi Arabia | Simulated | AlexNet (fine-tuned and feature extractor) + SVM + NMF | Offline |
| Ansari et al. (2022) [30] | Australia | Simulated | ReLU-BiLSTM | Offline |
| Arefnezhad et al. (2020) [31] | Austria | Simulated | CNN, CNN-LSTM, CNN-GRU | Offline |
| Bearly & Chitra (2024) [32] | India | Simulated | 3DDGAN-TLALSTM (3D Dependent GAN + Three-Level Attention LSTM) | Offline |
| Bekhouche et al. (2022) [33] | France | Simulated | ResNet-50 + FCFS + SVM | Offline |
| Benmohamed & Zarzour (2024) [34] | Algeria | Simulated | AlexNet (global features) + LSTM + handcrafted structural features | Offline |
| J. Chen, Wang, Wang et al. (2022) [35] | China | Simulated | CNN with transfer learning (AlexNet, ResNet18) | Offline |
| J. Chen et al. (2021) [36] | China | Real-world | 12-layer ConvNet (end-to-end CNN) | Offline |
| J. Chen, Wang, He et al. (2022) [37] | China | Real-world | CNN (6 architectures tested) using PLI-based functional brain network images | Offline |
| C. Chen et al. (2023) [38] | China | Simulated | SACC-CapsNet (Capsule Network with temporal-channel and channel-connectivity attention) | Offline |
| Chew et al. (2024) [16] | Malaysia | Real-world | CNN (ResNet + DenseNet) + rPPG (HR monitoring) | Real-time |
| Civik & Yuzgec (2023) [39] | Turkey | Real-world | CNN (eye model + mouth model) | Real-time |
| Cui et al. (2022) [40] | Singapore | Simulated | CNN (custom, compact) | Offline |
| Ding et al. (2024) [41] | United States | Simulated | Few-shot attention-based neural network | Offline |
| Dua et al. (2021) [42] | India | Simulated | AlexNet, VGG-FaceNet, FlowImageNet, ResNet | Offline |
| Ebrahimian et al. (2022) [43] | Finland | Simulated | CNN, CNN-LSTM | Offline |
| Fa et al. (2023) [44] | China | Simulated | MS-STAGCN (Multiscale Spatio-Temporal Attention Graph Convolutional Network) | Real-time |
| X. Feng, Guo et al. (2024) [45] | China | Simulated | ID3RSNet (interpretable residual shrinkage network) | Real-time |
| X. Feng, Dai et al. (2025) [46] | China | Simulated | PASAN-CA (Pseudo-label-assisted subdomain adaptation network with coordinate attention) | Real-time |
| W. Feng et al. (2025) [47] | China | Simulated | Separable CNN + Gumbel-Softmax channel selection | Real-time |
| Florez et al. (2023) [21] | Peru | Real-world | InceptionV3, VGG16, ResNet50V2 (Transfer Learning) | Real-time |
| Gao et al. (2019) [13] | China | Simulated | Recurrence Network + CNN (RN-CNN) | Offline |
| Guo & Markoni (2019) [48] | Taiwan | Simulated | Hybrid CNN + LSTM | Offline |
| C. He et al. (2024) [15] | China | Real-world | Attention-BiLSTM | Real-time |
| H. He et al. (2020) [49] | China | Real-world | Two-Stage CNN (YOLOv3-inspired detection + State Recognition Network) | Real-time |
| L. He et al. (2024) [50] | China | Simulated | ARMFCN-LSTM, GARMFCN-LSTM (CNN + LSTM + attention + WGAN-GP) | Offline |
| Nguyen et al. (2023) [18] | South Korea | Simulated | MLP, CNN | Real-time |
| Hu et al. (2024) [17] | China | Real-world | STFN-BRPS (CNN-BiLSTM + GCN + Channel Attention Fusion) | Offline and pseudo-online |
| Huang et al. (2022) [51] | China | Simulated | RF-DCM (CNN with Feature Recalibration and Fusion + LSTM) | Real-time |
| Hultman et al. (2021) [52] | Sweden | Real-world and simulated | CNN-LSTM | Offline |
| Iwamoto et al. (2021) [53] | Japan | Simulated | LSTM-Autoencoder | Offline |
| Jamshidi et al. (2021) [54] | Iran | Simulated | Hierarchical Deep Neural Network (ResNet + VGG16 + LSTM) | Real-time |
| Jarndal et al. (2025) [55] | United Arab Emirates | Real-world | Vision Transformers (ViT) | Real-time |
| Jia et al. (2022) [56] | China | Simulated | M1-FDNet + M2-PENet + M3-SJNet + MF-Algorithm | Real-time |
| Jiao & Jiang (2022) [57] | China | Simulated | Bimodal-LSTM | Offline |
| Jiao et al. (2020) [14] | China | Simulated | LSTM + CWGAN | Offline |
| Jiao et al. (2023) [58] | China | Simulated | MS-1D-CNN (Multi-scale 1D CNN) | Offline |
| Kielty et al. (2023) [59] | Ireland | Simulated | CNN + Self-Attention + BiLSTM | Real-time |
| Kır Savaş & Becerikli (2022) [60] | Turkey | Real-world and simulated | Deep Belief Network (DBN) | Offline |
| Kumar et al. (2023) [61] | India | Simulated | Modified InceptionV3 + LSTM | Offline |
| Lamaazi et al. (2023) [62] | United Arab Emirates | Real-world | VGG16-based CNN (face/eye/mouth) + two-layer LSTM (driving behavior) | Real-time |
| Latreche et al. (2025) [63] | Algeria | Simulated | CNN (optimized) + Hybrid ML classifiers (CNN-SVM, CNN-RF, etc.) | Offline |
| Q. Li et al. (2024) [64] | United States | Simulated | FD-LiteNet (NAS-derived CNN) | Offline |
| T. Li & Li (2024) [65] | China | Simulated | PFLD + ViT + LSTM (multi-granularity temporal model) | Offline |
| Lin et al. (2025) [66] | China | Simulated | CNN | Offline |
| Majeed et al. (2023) [67] | Pakistan | Simulated | CNN, CNN-RNN | Offline |
| Mate et al. (2024) [68] | India | Simulated | VGG19, ResNet50V2, MobileNetV2, Xception, InceptionV3, DenseNet169, InceptionResNetV2 | Offline |
| Min et al. (2023) [69] | China | Simulated | SVM (linear, RBF), BiLSTM | Real-time |
| Mukherjee & Roy (2024) [70] | India | Simulated | Stacked Autoencoder + TLSTM + Attention mechanism | Offline |
| Nandyal & Sharanabasappa (2024) [71] | India | Simulated | CNN-EFF-ResNet 18 | Offline |
| Obaidan et al. (2024) [72] | Saudi Arabia | Simulated | Deep Multi-Scale CNN | Real-time |
| Paulo et al. (2021) [73] | Portugal | Simulated | CNN (custom, shallow, single conv. layer) | Offline |
| Peng et al. (2024) [74] | China | Simulated | 3D-CNN (video) + 1D-CNN (signals) + Fusion network | Real-time |
| Priyanka et al. (2024) [75] | India | Simulated | CNN + LSTM | Offline |
| Quddus et al. (2021) [76] | Canada | Simulated | R-LSTM and C-LSTM (Recurrent and Convolutional LSTM) | Offline |
| Ramzan et al. (2024) [77] | Pakistan | Real-world | Custom 30-layer CNN (CDLM) + PCA + HOG + ML classifiers (XGBoost, SVM, RF) | Real-time |
| Sedik et al. (2023) [78] | Saudi Arabia | Real-world | 3D CNN, 2D CNN, SVM, RF, DT, KNN, QDA, MLP, LR | Offline |
| Shalash (2021) [79] | Egypt | Simulated | CNN (custom, 18-layer architecture) | Offline |
| Sharanabasappa & Nandyal (2022) [80] | India | Simulated | Ensemble Learning (DT, KNN, ANN, SVM) with handcrafted features and feature selection (ReliefF, Infinite, Correlation, Term Variance) | Offline |
| Sohail et al. (2024) [81] | Pakistan | Real-world | Custom CNN architecture | Real-time |
| Soman et al. (2024) [19] | India | Simulated | CNN-LSTM hybrid | Real-time |
| Sun et al. (2023) [82] | South Korea | Simulated | Facial Feature Fusion CNN (FFF-CNN) | Real-time |
| Tang et al. (2024) [83] | China | Simulated | MSCNN + CAM (Attention-Guided Multiscale CNN) | Offline |
| Turki et al. (2024) [84] | Tunisia | Real-world | VGG16, VGG19, ResNet50 (Transfer Learning) | Real-time |
| Vijaypriya & Uma (2023) [85] | India | Real-world and simulated | Multi-Scale CNN with Flamingo Search Optimization (MCNN + FSA) | Real-time |
| Wang et al. (2025) [86] | China | Simulated | CNN-LSTM with multi-feature fusion (RWECN + DE + SQ) | Offline |
| Wijnands et al. (2020) [87] | Australia | Simulated | Depthwise separable 3D CNN | Real-time |
| H. Yang et al. (2021) [88] | China | Simulated | 3D Convolution + BiLSTM (3D-LTS) | Offline |
| E. Yang & Yi (2024) [89] | South Korea | Simulated | ShuffleNet + ELM | Real-time |
| K. Yang et al. (2025) [90] | China | Simulated | Adaptive multi-branch CNN (adMBCNN: CNN + handcrafted features + functional network) | Offline |
| You et al. (2019) [91] | China | Simulated | Deep Cascaded CNN (DCCNN) + SVM classifier (custom EAR-based) | Real-time |
| Yu et al. (2024) [20] | China | Real-world | LSTM | Offline |
| Zeghlache et al. (2022) [92] | France | Simulated | Bayesian LSTM Autoencoder + XGBoost | Offline |
| Zhang et al. (2023) [93] | China | Simulated | Multi-granularity CNN + LSTM (LMDF) | Real-time |
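
Several of the vision-based entries above work from facial landmarks, and You et al. [91] explicitly pair a cascaded CNN with an eye-aspect-ratio (EAR) based classifier. As an illustration only, the sketch below computes the widely used six-landmark EAR, EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖), which falls toward zero as the eyelids close, and counts sustained closure episodes. The landmark ordering, the 0.2 threshold, and the 15-frame minimum are assumptions chosen for the example, not values taken from any reviewed study, and the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR from six landmarks p1..p6 (assumed ordering): p1 and p4 are the
    eye corners, p2/p3 lie on the upper lid and p6/p5 on the lower lid."""
    if len(eye) != 6:
        raise ValueError("expected six eye landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)  # two lid-to-lid distances
    horizontal = math.dist(p1, p4)                     # corner-to-corner eye width
    return vertical / (2.0 * horizontal)


def count_closure_episodes(ears: Sequence[float], threshold: float = 0.2,
                           min_frames: int = 15) -> int:
    """Count runs of at least `min_frames` consecutive frames whose EAR is
    below `threshold` (both defaults are illustrative assumptions)."""
    episodes, streak = 0, 0
    for ear in ears:
        if ear < threshold:
            streak += 1
            continue
        if streak >= min_frames:
            episodes += 1
        streak = 0
    if streak >= min_frames:  # a closure still in progress at the last frame
        episodes += 1
    return episodes
```

With an open-eye layout such as `[(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]` the ratio is 4/6 (about 0.67), and squeezing the lids to ±0.1 drops it to about 0.07. Requiring a minimum run length is one simple way to separate ordinary blinks from prolonged closures; the reviewed studies use a variety of learned temporal models (e.g. LSTM layers) for the same purpose.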

| Study | Accuracy | Precision (PPV) | Recall (Sensitivity) | F1-Score | AUC-ROC |
|---|---|---|---|---|---|
| Adhithyaa et al. (2023) [22] | 0.774 | NR | NR | 0.781 | 0.8005 |
| Ahmed et al. (2022) [23] | 0.971 | NR | NR | NR | NR |
| Akrout & Fakhfakh (2023) [24] | 0.984 | 0.929 (YawDD), 0.956 (DEAP), 0.984 (MiraclHB) | 0.933 (YawDD), 0.962 (DEAP), 0.984 (MiraclHB) | 0.931 (YawDD), 0.959 (DEAP), 0.984 (MiraclHB) | NR |
| Alameen & Alhothali (2023) [25] | 0.96 (YawDD), 0.93 (Side-3MDAD), 0.90 (Front-3MDAD) | 0.93 (YawDD), 0.90 (Side-3MDAD), 0.90 (Front-3MDAD) | 1.00 (YawDD), 0.95 (Side-3MDAD), 0.90 (Front-3MDAD) | 0.96 (YawDD), 0.93 (Side-3MDAD), 0.90 (Front-3MDAD) | NR |
| Alghanim et al. (2024) [26] | 0.9887 (Figshare), 0.8273 (SEED-VIG) | NR | NR | NR | NR |
| Alguindigue et al. (2024) [27] | 0.9828 (HRV), 0.9632 (EDA), 0.90 (Eye) | 0.9828 (HRV), 0.9632 (EDA), 0.90 (Eye) | 0.98 (HRV), 0.96 (EDA), 0.90 (Eye) | 0.98 (HRV), 0.96 (EDA) | NR |
| Almazroi et al. (2023) [28] | 0.97 | 0.992 | 0.994 | 0.997 | NR |
| Anber et al. (2022) [29] | 0.957 (Transfer Learning), 0.9965 (Hybrid) | 0.957 (Transfer Learning), 0.9965 (Hybrid) | 0.958 (Transfer Learning), 0.9965 (Hybrid) | 0.958 (Transfer Learning), 0.9965 (Hybrid) | NR |
| Ansari et al. (2022) [30] | 0.976 (Subject1), 0.979 (Subject2) | 0.9738 | 0.9754 | 0.9746 | NR |
| Arefnezhad et al. (2020) [31] | 0.9504 (CNN-LSTM) | 0.95 (CNN-LSTM) | 0.94 (CNN-LSTM) | 0.94 (CNN-LSTM) | NR |
| Bearly & Chitra (2024) [32] | 0.9182 | NR | 0.913 | NR | NR |
| Bekhouche et al. (2022) [33] | 0.8763 | NR | NR | 0.8641 | NR |
| Benmohamed & Zarzour (2024) [34] | 0.9012 | NR | NR | NR | NR |
| J. Chen, Wang, Wang et al. (2022) [35] | 0.9844 (AlexNet relu4), 0.9313 (ResNet18) | 0.9680 (AlexNet relu4), 0.9114 (ResNet18) | 1.000 (AlexNet relu4), 0.9474 (ResNet18) | NR | NR |
| J. Chen et al. (2021) [36] | 0.9702 | 0.9674 | 0.9776 | 0.9719 | NR |
| J. Chen, Wang, He et al. (2022) [37] | 0.954 (Model 4) | 0.955 (Model 4) | 0.939 (Model 4) | 0.947 (Model 4) | 0.9953 (Model 4) |
| C. Chen et al. (2023) [38] | 0.9417 (session 1), 0.9059 (session 2) | NR | 0.9591 (session 1), 0.9382 (session 2) | 0.9594 (session 1), 0.9399 (session 2) | NR |
| Chew et al. (2024) [16] | 0.9421 | NR | NR | 0.97 | NR |
| Civik & Yuzgec (2023) [39] | 0.96 | 0.8333 | 1.00 | 0.9091 | NR |
| Cui et al. (2022) [40] | 0.7322 | NR | NR | NR | NR |
| Ding et al. (2024) [41] | 0.86 | NR | NR | 0.86 | NR |
| Dua et al. (2021) [42] | 0.8500 | 0.8630 | 0.8200 | 0.8409 | NR |
| Ebrahimian et al. (2022) [43] | 0.91 (3-level), 0.67 (5-level) | 0.87 (3-level), 0.66 (5-level) | 0.87 (3-level), 0.67 (5-level) | NR | NR |
| Fa et al. (2023) [44] | 0.924 | 0.924 | 0.924 | 0.924 | NR |
| X. Feng, Guo et al. (2024) [45] | 0.7472 (unbalanced), 0.7716 (balanced) | NR | NR | 0.7127 (unbalanced), 0.7717 (balanced) | NR |
| X. Feng, Dai et al. (2025) [46] | 0.8585 (SADT), 0.9465 (SEED-VIG) | NR | NR | NR | NR |
| W. Feng et al. (2025) [47] | 0.8084 | 0.8601 | 0.7448 | 0.7965 | NR |
| Florez et al. (2023) [21] | 0.9927 (InceptionV3), 0.9939 (VGG16), 0.9971 (ResNet50V2) | 0.9957 (InceptionV3), 0.9941 (VGG16), 0.9994 (ResNet50V2) | 0.9908 (InceptionV3), 0.9937 (VGG16), 0.9947 (ResNet50V2) | 0.9927 (InceptionV3), 0.9939 (VGG16), 0.9971 (ResNet50V2) | NR |
| Gao et al. (2019) [13] | 0.9295 | NR | NR | NR | NR |
| Guo & Markoni (2019) [48] | 0.8485 | NR | NR | NR | NR |
| C. He et al. (2024) [15] | 0.9921 | 0.8444 | 0.8201 | 0.8321 | NR |
| H. He et al. (2020) [49] | 0.947 | NR | NR | NR | NR |
| L. He et al. (2024) [50] | 0.9584 (ARMFCN-LSTM), 0.8470 (GARMFCN-LSTM) | 0.98 (GARMFCN-LSTM), 0.93 (ARMFCN-LSTM) | 0.97 (GARMFCN-LSTM), 0.81 (ARMFCN-LSTM) | 0.97 (GARMFCN-LSTM), 0.85 (ARMFCN-LSTM) | 1.00 (GARMFCN-LSTM), 0.96 (ARMFCN-LSTM) |
| Nguyen et al. (2023) [18] | 0.9487 (MLP), 0.9624 (CNN) | NR | 0.9624 (MLP), 0.9718 (CNN) | 0.9497 (MLP), 0.9626 (CNN) | NR |
| Hu et al. (2024) [17] | 0.9243 | 0.9152 | 0.9289 | 0.927 | 0.957 |
| Huang et al. (2022) [51] | NR | NR | NR | 0.8942 | NR |
| Hultman et al. (2021) [52] | 0.82 | NR | NR | NR | NR |
| Iwamoto et al. (2021) [53] | NR | NR | 0.81 | NR | 0.88 |
| Jamshidi et al. (2021) [54] | 0.8719 | NR | NR | NR | NR |
| Jarndal et al. (2025) [55] | 0.9889 (NTHU-DDD), 0.994 (UTA-RLDD) | NR | NR | NR | NR |
| Jia et al. (2022) [56] | 0.978 | NR | NR | NR | NR |
| Jiao & Jiang (2022) [57] | 0.969 (HEOG + HSUM) | 0.634 (HEOG + HSUM) | 0.978 (HEOG + HSUM) | 0.765 (HEOG + HSUM) | NR |
| Jiao et al. (2020) [14] | 0.9814 | NR | NR | 0.946 | NR |
| Jiao et al. (2023) [58] | 0.993 | 0.989 | 0.995 | 0.991 | NR |
| Kielty et al. (2023) [59] | NR | 0.959 (internal test), 0.899 (YawDD) | 0.947 (internal test), 0.910 (YawDD) | 0.953 (internal test), 0.904 (YawDD) | NR |
| Kır Savaş & Becerikli (2022) [60] | 0.86 | NR | NR | NR | NR |
| Kumar et al. (2023) [61] | 0.9136 | 0.74 | 0.92 | 0.82 | NR |
| Lamaazi et al. (2023) [62] | 0.973 (CNN-eye), 0.982 (CNN-mouth), 0.93 (LSTM) | 0.93 (LSTM) | 0.89 (LSTM) | 0.91 (LSTM) | NR |
| Latreche et al. (2025) [63] | 0.99 (CNN-SVM) | 0.98 (CNN-SVM) | 0.99 (CNN-SVM) | 0.99 (CNN-SVM) | 0.99 (CNN-SVM) |
| Q. Li et al. (2024) [64] | 0.9705 (FD-LiteNet1), 0.9972 (FD-LiteNet2) | NR | NR | NR | NR |
| T. Li & Li (2024) [65] | 0.9315 | NR | NR | NR | NR |
| Lin et al. (2025) [66] | 0.8756 | 0.9169 | 0.8995 | 0.9016 | NR |
| Majeed et al. (2023) [67] | 0.9669 (CNN-2), 0.9564 (CNN-RNN) | 0.9569 (CNN-2), 0.9541 (CNN-RNN) | 0.9558 (CNN-2), 0.9186 (CNN-RNN) | 0.9563 (CNN-2), 0.9360 (CNN-RNN) | NR |
| Mate et al. (2024) [68] | 0.9651 (VGG19) | 0.9814 (VGG19) | 0.9536 (VGG19) | 0.9673 (VGG19) | NR |
| Min et al. (2023) [69] | 0.9941 (linear), 0.7449 (RBF), 0.7365 (BiLSTM) | NR | 0.9897 (linear), 0.6170 (RBF), 0.7404 (BiLSTM) | 0.9900 (linear), 0.5933 (RBF), 0.6630 (BiLSTM) | 0.692 (RBF), 0.704 (BiLSTM) |
| Mukherjee & Roy (2024) [70] | 0.9863 | 0.986 | 0.986 | 0.983 | NR |
| Nandyal & Sharanabasappa (2024) [71] | 0.9130 | NR | 0.9210 | NR | NR |
| Obaidan et al. (2024) [72] | 0.9703 | 0.9553 | 0.9554 | 0.9553 | NR |
| Paulo et al. (2021) [73] | 0.7587 | NR | NR | NR | NR |
| Peng et al. (2024) [74] | 0.9315 | NR | 0.9171 | NR | NR |
| Priyanka et al. (2024) [75] | 0.9600 | 0.9500 | 0.9500 | 0.9500 | NR |
| Quddus et al. (2021) [76] | 0.8797 (R-LSTM), 0.9787 (C-LSTM) | NR | 0.9531 (R-LSTM), 0.9941 (C-LSTM) | NR | NR |
| Ramzan et al. (2024) [77] | 0.997 (CNN), 0.982 (Hybrid), 0.984 (ML with XGBoost) | 0.998 (CNN), 0.726 (Hybrid), 0.985 (ML with XGBoost) | 0.999 (CNN), 0.861 (Hybrid), 0.985 (ML with XGBoost) | 0.992 (CNN), 0.787 (Hybrid), 0.985 (ML with XGBoost) | NR |
| Sedik et al. (2023) [78] | 0.98 (3D CNN on Combined Dataset) | 0.98 (3D CNN on Combined Dataset) | 0.98 (3D CNN on Combined Dataset) | 0.98 (3D CNN on Combined Dataset) | NR |
| Shalash (2021) [79] | 0.9436 (FP1), 0.9257 (T3), 0.9302 (Oz) | NR | NR | NR | 0.9798 (FP1), 0.97 (T3), 0.9746 (Oz) |
| Sharanabasappa & Nandyal (2022) [80] | 0.9419 | NR | 0.9858 | 0.9764 | NR |
| Sohail et al. (2024) [81] | 0.950 | NR | 0.940 | NR | NR |
| Soman et al. (2024) [19] | 0.98 | 0.95 | 0.93 | 0.94 | 0.99 |
| Sun et al. (2023) [82] | 0.9489 (DFD), 0.9835 (CEW) | NR | NR | 0.9479 (DFD), 0.9832 (CEW) | 0.9882 (DFD), 0.9979 (CEW) |
| Tang et al. (2024) [83] | 0.905 | 0.867 | NR | 0.824 | NR |
| Turki et al. (2024) [84] | 0.9722 (VGG16), 0.9630 (VGG19), 0.9838 (ResNet50) | 0.9724 (VGG16), 0.9658 (VGG19), 0.9842 (ResNet50) | 0.9720 (VGG16), 0.9624 (VGG19), 0.9837 (ResNet50) | 0.9722 (VGG16), 0.9641 (VGG19), 0.9839 (ResNet50) | NR |
| Vijaypriya & Uma (2023) [85] | 0.9838 (YawDD), 0.9826 (NTHU-DDD) | 0.9707 (YawDD), 0.9945 (NTHU-DDD) | 0.9785 (YawDD), 0.9811 (NTHU-DDD) | 0.9746 (YawDD), 0.9878 (NTHU-DDD) | NR |
| Wang et al. (2025) [86] | 0.9657 (SEED-VIG), 0.9923 (Mendeley) | 0.9601 | 0.9512 | 0.9554 | NR |
| Wijnands et al. (2020) [87] | 0.739 | NR | NR | NR | NR |
| H. Yang et al. (2021) [88] | 0.834 (YawDDR), 0.805 (MFAY) | NR | NR | NR | NR |
| E. Yang & Yi (2024) [89] | 0.9705 | 0.9587 | 0.9269 | 0.9553 | 0.9705 |
| K. Yang et al. (2025) [90] | 0.9602 (SEED-VIG), 0.9184 (Cui) | 0.9514 (SEED-VIG), 0.9250 (Cui) | 0.9241 (SEED-VIG), 0.9117 (Cui) | 0.9351 (SEED-VIG), 0.9179 (Cui) | NR |
| You et al. (2019) [91] | 0.948 | NR | NR | NR | NR |
| Yu et al. (2024) [20] | 0.9736 | 0.9781 | 0.9778 | 0.9780 | 0.99 |
| Zeghlache et al. (2022) [92] | NR | 0.84 | 0.75 | 0.76 | NR |
| Zhang et al. (2023) [93] | 0.9005 | NR | NR | NR | NR |
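
The performance table reports accuracy, precision (PPV), recall (sensitivity), F1-score, and AUC-ROC, with many cells marked NR. For readers comparing rows, the sketch below restates the standard binary definitions from confusion-matrix counts (TP, FP, FN, TN, with "drowsy" as the positive class) and adds two checks that follow from them: for a single positive class, F1 is the harmonic mean of precision and recall and so must lie between the two, and AUC-ROC equals the probability that a randomly chosen positive sample scores higher than a randomly chosen negative one (ties counted as one half). The function names and the 0.001 rounding tolerance are assumptions made for this illustration; the reviewed studies may use other averaging schemes.

```python
from typing import Dict, Sequence


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (defined as 0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def binary_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Accuracy, precision (PPV), recall (sensitivity), and F1 for one positive class."""
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("empty confusion matrix")
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1_score(precision, recall),
    }


def f1_within_bounds(precision: float, recall: float, f1: float,
                     tol: float = 0.001) -> bool:
    """True if a reported F1 lies between precision and recall; `tol`
    (an assumed value) absorbs rounding to about three decimals."""
    low, high = min(precision, recall), max(precision, recall)
    return low - tol <= f1 <= high + tol


def auc_roc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """Rank-based (Mann-Whitney) AUC: fraction of positive/negative pairs in
    which the positive sample scores higher; ties count as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

As a usage example, `f1_score(0.8333, 1.0)` rounds to 0.9091, matching the F1 reported for Civik & Yuzgec [39], whereas `f1_within_bounds(0.992, 0.994, 0.997)` returns `False` for the Almazroi et al. [28] row, because a harmonic mean cannot exceed both of its inputs. Rows like that may merit checking against the original paper's evaluation protocol before being compared directly. The pairwise AUC loop is quadratic in sample count; it is written for clarity, not for large evaluation sets.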

| Study | Dataset Source | Data Type | Technical Challenges | Recommendations |

|---|---|---|---|---|

| Adhithyaa et al. (2023) [22] | Open-source + proprietary | Behavioral (facial landmarks) | Hardware constraints, facial region variability, lighting changes | Adaptive architecture (fusion and sub-models) reduces overfitting; augmentation and pyramid input improve stability |

| Ahmed et al. (2022) [23] | Open-source | Behavioral (facial landmarks: eyes and mouth regions) | Lighting variation, facial occlusion, smiling confused with eye closure | Facial subsampling and weighted ensemble improved robustness and reduced false detections |

| Akrout & Fakhfakh (2023) [24] | Open-source + proprietary | Behavioral (facial landmarks: iris, eyelids, head pose) | Lighting variation, facial occlusion, fatigue state subjectivity | Iris normalization, MediaPipe landmarks, multi-source feature fusion improved robustness |

| Alameen & Alhothali (2023) [25] | Open-source | Behavioral (RGB video) | Illumination variance; distractions from background | BN layer placement affects generalization per dataset |

| Alghanim et al. (2024) [26] | Open-source | Physiological (EEG spectrogram images) | Nonstationarity of EEG; data augmentation not fully effective; high training time | Use of Inception and dilated ResNet blocks; 30–50% overlap improves robustness |

| Alguindigue et al. (2024) [27] | Proprietary | Physiological (HRV, EDA), Behavioral (Eye tracking) | Class imbalance (especially in Eye model); device calibration | Use ensemble methods; improve minority class detection |

| Almazroi et al. (2023) [28] | Proprietary | Behavioral (facial landmarks: eye, mouth; objects; seatbelt use) | Occlusion, mouth covered by hand, low-light performance, alert timing threshold sensitivity | Eye/mouth ratio and MobileNet SSD integration improves accuracy and speed |

| Anber et al. (2022) [29] | Open-source | Behavioral (head position, mouth movement; face-based behavioral cues) | Lighting sensitivity; eye occlusion; limited generalizability | Combining AlexNet with NMF and SVM improves performance over transfer learning alone |

| Ansari et al. (2022) [30] | Proprietary | Behavioral (head posture) | Limited dataset; subjectivity in labeling; variability in fatigue behavior | Future use of smart seats and clothing; address data limitations with unsupervised clustering |

| Arefnezhad et al. (2020) [31] | Proprietary | Vehicle-based (steering wheel angle, velocity, yaw rate, lateral deviation, acceleration) | Noisy signals; high intra-class variability; difficult to differentiate moderate vs. extreme drowsiness | Use of CNN and RNN improves temporal modeling and detection accuracy |

| Bearly & Chitra (2024) [32] | Open-source | Behavioral (face: eyes, mouth, head position; RGB/NIR) | Noise in individual frame decisions; resolved using temporal smoothing | Combining GAN with multilevel attention improved robustness and reduced false alarms |

| Bekhouche et al. (2022) [33] | Open-source | Behavioral (video frames) | Class imbalance; scenario dependency; facial variation at night | Use of FCFS reduced features from 4096 to ~253 with better performance |

| Benmohamed & Zarzour (2024) [34] | Open-source | Behavioral (facial structural metrics and CNN features) | Low quality of IR video at night reduces feature extraction reliability | Combining CNN and structural fusion; frame aggregation improved detection |

| J. Chen, Wang, Wang et al. (2022) [35] | Proprietary | Physiological (EEG phase coherence images) | Small dataset, inter-subject variability, data imbalance | Use of relu4 layer and SVM improves results; extract features from shallow layers |

| J. Chen et al. (2021) [36] | Proprietary | Physiological (EEG—14-channel Emotiv EPOC, 128 Hz) | Signal noise, inter-subject variability, small sample size | End-to-end learning on raw EEG improved generalization and accuracy versus handcrafted features |

| J. Chen, Wang, He et al. (2022) [37] | Proprietary | Physiological (EEG—14 channels, Emotiv EPOC, 128 Hz) | EEG drift, signal noise, intra-subject variability, small dataset | Use of PLI adjacency matrices and multi-frequency band fusion improved CNN performance |

| C. Chen et al. (2023) [38] | Proprietary | Physiological (EEG—24-channel wireless dry EEG, 250 Hz) | EEG noise, inter-subject variability, data correlation due to continuous signals | Combining temporal-channel attention, covariance matrix, and capsule routing improved generalization and interpretability |

| Chew et al. (2024) [16] | Open-source + proprietary | Multimodal (behavioral: face images; physiological: rPPG, HR) | Lighting sensitivity; camera angle dependency; noise in rPPG signal | Use of top-center camera angle and 4K webcam; optimize for embedded deployment |

| Civik & Yuzgec (2023) [39] | Open-source | Behavioral (facial landmarks: eyes and mouth) | Lighting variation, eye occlusion, mouth coverage, system delay under low light | Separate CNNs for eye and mouth improve detection of complex fatigue states |

| Cui et al. (2022) [40] | Open-source | Physiological (single-channel EEG from Oz) | EEG variability, low SNR, inter-subject drift | Use of GAP layer, CAM visualization to enhance interpretability |

| Ding et al. (2024) [41] | Open-source | Physiological (EEG) | Limited training data, subject variability, anomaly detection, similarity measures | Use of attention-based feature extraction and anomaly detection block improves robustness |

| Dua et al. (2021) [42] | Open-source | Behavioral (RGB video frames and optical flow) | Class imbalance; occlusion from sunglasses or hand gestures | Use of ensemble to balance strengths of each model |

| Ebrahimian et al. (2022) [43] | Proprietary | Physiological (ECG, respiration via thermal imaging) | Signal variability, mechanical latency in physiological response, labeling subjectivity | Multi-signal fusion (HRV, PSD, RR); CNN-LSTM superior to CNN in most tasks |

| Fa et al. (2023) [44] | Open-source | Behavioral (facial landmarks via OpenPose) | Occlusion, inter-subject variation, facial landmark misalignment | Multi-scale graph aggregation and coordinate attention improved spatial-temporal robustness |

| X. Feng, Guo et al. (2024) [45] | Open-source | Physiological (EEG—Oz channel) | Signal noise, inter-subject variability, cross-subject generalization challenge | Adaptive thresholding, GAP, and ECAM modules enhanced robustness and interpretability |

| X. Feng, Dai et al. (2025) [46] | Open-source | Physiological (EEG—30 channels for SADT, 17 channels for SEED-VIG) | EEG noise, EMG interference, inter-subject variability | Coordinate attention, LMMD, and curriculum pseudo labeling improved generalization across subjects |

| W. Feng et al. (2025) [47] | Open-source | Physiological (EEG—30 channels, 128 Hz) | Channel duplication, subject variability, noisy signals | Gumbel-Softmax improves channel selection; separable CNN reduces complexity and increases accuracy |

| Florez et al. (2023) [21] | Open-source | Behavioral (eye region video frames) | Small dataset; limited generalization; image redundancy | ROI correction using MediaPipe and CNN; Grad-CAM for interpretability |

| Gao et al. (2019) [13] | Proprietary | Physiological (multichannel EEG) | EEG autocorrelation; subject variability; feature interpretability | Uses multiplex recurrence network and mutual information matrix for CNN input |

| Guo & Markoni (2019) [48] | Open-source | Behavioral (RGB facial landmarks) | Limited demographic diversity; expression similarity among classes | Use temporal context (LSTM) improved stability over single-frame CNNs |

| C. He et al. (2024) [15] | Proprietary | Multimodal (physiological: PPG, heart rate, GSR, wrist acceleration; vehicle-based: velocity, acceleration, direction, slope, load; temporal: time, rest/work duration) | Class imbalance, subjectivity in video labeling, small sample | Fusion of diverse features improved robustness; dropout and focal loss used to stabilize training |

| H. He et al. (2020) [49] | Open-source + proprietary | Behavioral (facial regions: eyes, mouth) | Illumination variation; need for gamma correction | Use of gamma correction, lightweight CNN design, real-time test |

| L. He et al. (2024) [50] | Open-source | Physiological (EEG, EOG) | Class imbalance, overfitting, small datasets | GAN-based data augmentation (WGAN-GP); adaptive convolution; attention mechanisms |

| Nguyen et al. (2023) [18] | Proprietary | Physiological (wireless EEG: behind-the-ear, single-channel, real-time) | Low resolution EEG; motion artifacts; noise filtering challenges | Dropout, quantization, batch normalization improve lightweight model performance in embedded setup |

| Hu et al. (2024) [17] | Proprietary | Physiological (EEG, 14 channels) | EEG artifacts, subjectivity in annotation, class imbalance | Functional brain region partitioning, multi-branch fusion, focal loss for imbalance |

| Huang et al. (2022) [51] | Open-source | Behavioral (facial landmarks: global face, eyes, mouth, glabella) | Pose variation, occlusion, ambiguous yawning vs. speaking | Combining multi-granularity input, FRN, and FFN improved accuracy and robustness in head pose variation |

| Hultman et al. (2021) [52] | Proprietary | Physiological (EEG, EOG, ECG) | Sensor noise; inter-subject variability; class imbalance | Combining EEG and ECG features helps accuracy; early fusion preferred |

| Iwamoto et al. (2021) [53] | Proprietary | Physiological (ECG—RRI sequences) | Ambiguity in expert scoring, inter-participant variability, simulator realism gap | LSTM-AE improved anomaly detection over PCA and HRV-based models |

| Jamshidi et al. (2021) [54] | Open-source | Behavioral (facial landmarks) | Occlusion, overfitting on training data, temporal labeling noise | Combination of spatial and temporal phases improved detection; situation-specific training helped generalization |

| Jarndal et al. (2025) [55] | Open-source | Behavioral (face video; full facial image) | Hardware resource constraints; lighting variation; face obstruction | Uses entire face with ViT, improving robustness in occluded or dark scenes |

| Jia et al. (2022) [56] | Proprietary | Behavioral (facial landmarks: eyes, mouth, head pose) | Facial occlusion (glasses, masks), lighting variability, real-time inference delay | Multi-module design with feature fusion increased robustness to occlusion and inconsistent signals |

| Jiao & Jiang (2022) [57] | Proprietary | Physiological (EOG, EEG via O2 and HSUM) | Data imbalance; low SEM frequency; limited samples for DL | Combine with SMOTE or GAN to improve sample size and reduce FP |

| Jiao et al. (2020) [14] | Proprietary | Physiological (EEG, EOG) | Temporal imprecision in labeling; signal variability; class imbalance | CWGAN improved data balance; sliding window settings boosted stability |

| Jiao et al. (2023) [58] | Open-source | Physiological (EOG) | Data imbalance; noise in physiological signals; variability across subjects | Multi-scale convolution improves feature representation |

| Kielty et al. (2023) [59] | Open-source + proprietary | Behavioral (event-based facial sequences, seatbelt motion) | Event sparsity in static scenes; hand-over-mouth occlusion; class imbalance in seatbelt transitions | Event fusion, attention maps, and recurrent layers improve robustness under occlusion and motion variance |

| Kır Savaş & Becerikli (2022) [60] | Open-source + proprietary | Behavioral (RGB face images: eyes and mouth) | Reconstruction error and model depth tuning; sensitivity to lighting and occlusion | DBN used for unsupervised feature learning; performance improved with deeper layers and symptom-specific models |

| Kumar et al. (2023) [61] | Open-source | Behavioral (RGB facial landmarks) | Overfitting risk; image quality issues; lighting variation | Dropout and global average pooling layers added for better generalization |

| Lamaazi et al. (2023) [62] | Open-source + proprietary | Multimodal (behavioral: eyes, mouth; vehicle-based: acceleration x/y/z) | Class confusion between yawning vs. mouth open, head tilt, lighting variation, accelerometer sequence noise | Multistage detection (vision and sensors) reduced false positives and improved detection latency |

| Latreche et al. (2025) [63] | Open-source | Physiological (32-channel EEG) | Small sample size; lack of generalization; manual label noise | Optimization with RS and Optuna improved precision and reduced overfitting |

| Q. Li et al. (2024) [64] | Proprietary | Physiological (EEG from 32-channel cap, regions A–D) | High search cost; model performance sensitive to EEG region used | NAS enables optimal trade-off between performance and efficiency |

| T. Li & Li (2024) [65] | Open-source | Behavioral (face video; EAR, MAR, head pose, ViT output) | Occlusion (glasses), pose variation, detection failures, dataset limitations | ViT adds global semantics; LSTM captures temporal trends in drowsiness |

| Lin et al. (2025) [66] | Open-source + proprietary | Physiological (EEG) | EEG noise, individual variability, fine-grained imbalance | Combining attention, fusion, and channel selection improves generalization; focal loss addresses imbalance |

| Majeed et al. (2023) [67] | Open-source | Behavioral (video frames) | Potential overfitting; effect of data augmentation; occlusion challenges | Augmentation helps generalization; CNN-RNN for spatiotemporal features |

| Mate et al. (2024) [68] | Open-source | Behavioral (images extracted from videos) | Overfitting risk; limited generalization; tuning challenges | Use of multiple architectures improves comparability; augmentation used |

| Min et al. (2023) [69] | Open-source | Multimodal (physiological: EEG; behavioral: eye images via video) | Facial and muscle artifacts; low-channel EEG; inter-subject generalization | Fusion of EEG and SIFT-based eye features improves detection robustness |

| Mukherjee & Roy (2024) [70] | Proprietary | Multimodal (physiological: EEG, EMG, pulse, respiration, GSR; behavioral: head movement) | Signal noise, subjectivity in labeling; short windows improve detection | Use of TLSTM and attention for temporal relevance; 250 ms window practical |

| Nandyal & Sharanabasappa (2024) [71] | Open-source | Behavioral (video/image) | Class imbalance; vanishing gradient; overfitting risks | Use of the firefly optimization algorithm (FA) to avoid local optima |

| Obaidan et al. (2024) [72] | Open-source | Physiological (EEG) | Limited training data, inter-subject variability, non-stationary EEG signals | Multi-scale CNN architecture, DE preprocessing, and spatial-spectral learning improve robustness |

| Paulo et al. (2021) [73] | Open-source | Physiological (EEG) | Inter-subject variability, low SNR, generalization challenges | Explore RNNs, reduce channels, apply attention mechanisms |

| Peng et al. (2024) [74] | Proprietary | Multimodal (behavioral: RGB face video; physiological: HR, EDA, BVP) | Signal noise, subjectivity in self-labeling, imbalance of fatigue levels | Combines facial and physiological data; short-window detection; attention maps |

| Priyanka et al. (2024) [75] | Proprietary | Multimodal (behavioral, physiological, vehicle-based) | Imbalanced dataset; need for SMOTE | Personalized models; real-world testing suggested |

| Quddus et al. (2021) [76] | Proprietary | Behavioral (eye image patches 48×48 from 2 cameras) | Facial occlusion, lighting variation, mismatch between EEG and video timestamps | C-LSTM outperforms eye-tracking methods with lower error and better generalizability |

| Ramzan et al. (2024) [77] | Open-source | Behavioral (video: eye, face, mouth regions) | Training time (CNN: ~603 s/epoch; hybrid: ~206 s); overfitting managed by dropout | Combining hybrid CNN, PCA, and HOG boosts accuracy and training efficiency |

| Sedik et al. (2023) [78] | Open-source | Behavioral (RGB facial landmarks, eye and mouth region, NIR for DROZY) | DROZY limitations (lighting, occlusion); overfitting risk mitigated by augmentation | Combining image and video datasets improves robustness across symptoms |

| Shalash (2021) [79] | Open-source | Physiological (single-channel EEG converted to spectrogram) | Overfitting; small dataset; high computational cost | Use of reassignment spectrogram, dropout, and L2 regularization |

| Sharanabasappa & Nandyal (2022) [80] | Open-source | Behavioral (RGB images: eye, face, mouth regions) | Manual annotation burden; subjectivity in labeling; variance in image quality | Feature selection improves accuracy over deep CNNs; ensemble stabilizes prediction |

| Sohail et al. (2024) [81] | Open-source | Behavioral (face images: eyes open/closed, yawn/no yawn) | Lighting conditions; camera placement; lack of occlusion handling | SMOTE used for class balance; CNN with max-pooling layers and softmax output |

| Soman et al. (2024) [19] | Open-source + proprietary | Behavioral (facial landmarks: EAR, MAR, PUC, MOE from camera) | Pupil detection errors, lighting variation, cultural facial trait diversity | Facial ratio fusion (EAR, MAR, PUC, MOE), Jetson Nano optimization, dropout and early stopping improve robustness |

| Sun et al. (2023) [82] | Open-source + proprietary | Behavioral (facial landmarks) | Low-quality inputs, occlusion, inconsistent eye states, data imbalance | FAM and SIM improve feature fusion on noisy inputs |

| Tang et al. (2024) [83] | Open-source | Physiological (21-channel EEG: forehead, temporal, posterior) | EEG noise, inter-subject variability, impact of CAM module placement | CAM placement after MSCNN improves feature quality and channel weighting |

| Turki et al. (2024) [84] | Open-source | Behavioral (face video; eye/mouth landmarks) | Image quality, illumination, face rotation, occlusion, false positives | Ensemble of CNNs and Chebyshev distance improves robustness and reduces false alerts |

| Vijaypriya & Uma (2023) [85] | Open-source | Behavioral (facial landmarks) | Low data variety; synthetic augmentation mentioned but not detailed | Use of Flamingo Search Optimization and wavelet feature fusion improves accuracy |

| Wang et al. (2025) [86] | Open-source | Physiological (EEG) | EEG signal noise, incomplete data, zero padding effects | Fusing RWECN, DE, and SQ features improved accuracy; missing values were filled |

| Wijnands et al. (2020) [87] | Open-source | Behavioral (facial video: yawning, blinking, nodding, head pose) | Small dataset size; weak segment-level labels; slow inference on mobile devices | Temporal fusion and 3D depthwise convolutions improved robustness to occlusion and blinking patterns |

| H. Yang et al. (2021) [88] | Open-source + proprietary | Behavioral (RGB video frames) | Low resolution; camera vibration; similar facial actions | Use additional features for diverse lighting; improve resolution/deblurring |

| E. Yang & Yi (2024) [89] | Open-source | Behavioral (facial landmarks) | Small dataset size, simplified binary labeling, generalization challenges | Use of NGO for hyperparameter tuning and ShuffleNet for efficient extraction |

| K. Yang et al. (2025) [90] | Open-source | Physiological (EEG: DE, PSD, FE, SCC functional network) | EEG noise; differences in feature impact by dataset | Feature-level fusion improves classification versus single-feature GCNs |

| You et al. (2019) [91] | Open-source + proprietary | Behavioral (RGB facial video; EAR from eye landmarks) | Illumination variation, landmark errors, small eye sizes | Individualized EAR classifier; uses PERCLOS and a head-position fallback |

| Yu et al. (2024) [20] | Proprietary | Multimodal (physiological: PPG; behavioral: facial features, head pose) | Signal noise, alignment of video and PPG, fusion across modalities | Fusion of PPG, facial, and head-pose features improves accuracy; ensemble needed |

| Zeghlache et al. (2022) [92] | Open-source | Physiological (EEG, EOG) | Trade-off with encoding loss; tuning of the latent dimension (zdim); noisy EEG channels | Dimensionality reduction improves classification; LSTM-VAE offers balanced features |

| Zhang et al. (2023) [93] | Open-source | Behavioral (facial landmarks: local patches, eyes, mouth, nose, full face) | Head pose variation, occlusion, ambiguous blinking vs. closing eyes | Multi-granularity representation and LSTM fusion improve spatial-temporal robustness |
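
Several behavioral studies in the table (e.g., [19], [65], [91]) build on the eye aspect ratio (EAR) and PERCLOS. The following minimal sketch shows how these two features are typically derived from landmarks. It makes several assumptions. First, it uses the common six-landmark EAR formulation, EAR = (‖p2 − p6‖ + ‖p3 − p5‖) / (2‖p1 − p4‖). Second, the landmark ordering is assumed rather than taken from any specific study. Third, PERCLOS is approximated as the fraction of frames whose EAR falls below a closure threshold; the classic definition instead uses the proportion of time the eyelids are at least 80% closed. The 0.2 threshold and the window length are illustrative values, not parameters reported by the reviewed studies.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """Six-landmark EAR: (|p2-p6| + |p3-p5|) / (2 * |p1-p4|).

    Assumed ordering: p1 and p4 are the eye corners; (p2, p6) and (p3, p5)
    are vertically opposed points on the upper and lower eyelid.
    """
    if len(eye) != 6:
        raise ValueError("expected exactly six eye landmarks")
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    if horizontal == 0:
        raise ValueError("degenerate eye: corner landmarks coincide")
    return vertical / (2.0 * horizontal)


def perclos(ear_window: Sequence[float], threshold: float = 0.2) -> float:
    """Fraction of frames whose EAR is below the (illustrative) closure threshold."""
    if len(ear_window) == 0:
        raise ValueError("empty window")
    closed = sum(1 for ear in ear_window if ear < threshold)
    return closed / len(ear_window)


def sliding_perclos(ear_series: Sequence[float], window: int,
                    threshold: float = 0.2) -> List[float]:
    """PERCLOS over every full window of `window` consecutive frames (stride 1)."""
    if not 0 < window <= len(ear_series):
        raise ValueError("window must be between 1 and the series length")
    return [perclos(ear_series[i:i + window], threshold)
            for i in range(len(ear_series) - window + 1)]


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
    print(sliding_perclos([0.3, 0.1, 0.1, 0.3], window=2))  # [0.5, 1.0, 0.5]
```

In a real pipeline, the landmarks would come from a face-landmark detector. The fixed threshold would also usually be calibrated per driver, which is the motivation behind individualized EAR classifiers such as [91].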

References

- Grandjean, E. Fatigue in industry. Br. J. Ind. Med. 1979, 36, 175–186. [Google Scholar] [CrossRef]

- Williamson, A.; Lombardi, D.A.; Folkard, S.; Stutts, J.; Courtney, T.K.; Connor, J.L. The link between fatigue and safety. Accid. Anal. Prev. 2011, 43, 498–515. [Google Scholar] [CrossRef]

- Tefft, B.C. Drowsy Driving in Fatal Crashes, United States, 2017–2021; AAA Foundation for Traffic Safety: Washington, DC, USA, 2024. [Google Scholar]

- Areal, A.; Pires, C.; Pita, R.; Marques, P.; Trigoso, J. Distraction (Mobile Phone Use) & Fatigue; ESRA3 Thematic report Nr. 3; ESRA: Utrecht, The Netherlands, 2024. [Google Scholar]

- Ebrahim Shaik, M. A systematic review on detection and prediction of driver drowsiness. Transp. Res. Interdiscip. Perspect. 2023, 21, 100864. [Google Scholar] [CrossRef]

- El-Nabi, S.A.; El-Shafai, W.; El-Rabaie, E.S.M.; Ramadan, K.F.; Abd El-Samie, F.E.; Mohsen, S. Machine learning and deep learning techniques for driver fatigue and drowsiness detection: A review. Multimed. Tools Appl. 2024, 83, 9441–9477. [Google Scholar] [CrossRef]

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71. [Google Scholar] [CrossRef]

- Fonseca, T.; Ferreira, S. Drowsiness Detection in Drivers: A Systematic Review of Deep Learning-Based Models. Available online: https://www.crd.york.ac.uk/PROSPERO/view/CRD420251078841 (accessed on 12 July 2025).

- Weng, C.-H.; Lai, Y.-H.; Lai, S.-H. Driver Drowsiness Detection via a Hierarchical Temporal Deep Belief Network. In Asian Conference on Computer Vision Workshop on Driver Drowsiness Detection from Video; Springer: Taipei, Taiwan, 2016. [Google Scholar]

- Abtahi, S.; Omidyeganeh, M.; Shirmohammadi, S.; Hariri, B. YawDD: A yawning detection dataset. In Proceedings of the 5th ACM Multimedia Systems Conference, Singapore, 19 March 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 24–28. [Google Scholar]

- Zheng, W.L.; Lu, B.L. A multimodal approach to estimating vigilance using EEG and forehead EOG. J. Neural Eng. 2017, 14, 026017. [Google Scholar] [CrossRef]

- Cao, Z.; Chuang, C.H.; King, J.K.; Lin, C.T. Multi-channel EEG recordings during a sustained-attention driving task. Sci. Data 2019, 6, 19. [Google Scholar] [CrossRef] [PubMed]

- Gao, Z.K.; Li, Y.L.; Yang, Y.X.; Ma, C. A recurrence network-based convolutional neural network for fatigue driving detection from EEG. Chaos 2019, 29, 113126. [Google Scholar] [CrossRef] [PubMed]

- Jiao, Y.; Deng, Y.; Luo, Y.; Lu, B.L. Driver sleepiness detection from EEG and EOG signals using GAN and LSTM networks. Neurocomputing 2020, 408, 100–111. [Google Scholar] [CrossRef]

- He, C.; Xu, P.; Pei, X.; Wang, Q.; Yue, Y.; Han, C. Fatigue at the wheel: A non-visual approach to truck driver fatigue detection by multi-feature fusion. Accid. Anal. Prev. 2024, 199, 107511. [Google Scholar] [CrossRef]

- Chew, Y.X.; Razak, S.F.A.; Yogarayan, S.; Ismail, S.N.M.S. Dual-Modal Drowsiness Detection to Enhance Driver Safety. Comput. Mater. Contin. 2024, 81, 4397–4417. [Google Scholar] [CrossRef]

- Hu, F.; Zhang, L.; Yang, X.; Zhang, W.A. EEG-Based Driver Fatigue Detection Using Spatio-Temporal Fusion Network with Brain Region Partitioning Strategy. IEEE Trans. Intell. Transp. Syst. 2024, 25, 9618–9630. [Google Scholar] [CrossRef]

- Nguyen, H.T.; Mai, N.D.; Lee, B.G.; Chung, W.Y. Behind-the-Ear EEG-Based Wearable Driver Drowsiness Detection System Using Embedded Tiny Neural Networks. IEEE Sens. J. 2023, 23, 23875–23892. [Google Scholar] [CrossRef]

- Soman, S.P.; Kumar, G.S.; Nuthalapati, S.B.; Zafar, S.; Abubeker, K.M. Internet of things assisted deep learning enabled driver drowsiness monitoring and alert system using CNN-LSTM framework. Eng. Res. Express 2024, 6, 045239. [Google Scholar] [CrossRef]

- Yu, L.; Yang, X.; Wei, H.; Liu, J.; Li, B. Driver fatigue detection using PPG signal, facial features, head postures with an LSTM model. Heliyon 2024, 10, e39479. [Google Scholar] [CrossRef] [PubMed]

- Florez, R.; Palomino-Quispe, F.; Coaquira-Castillo, R.J.; Herrera-Levano, J.C.; Paixão, T.; Alvarez, A.B. A CNN-Based Approach for Driver Drowsiness Detection by Real-Time Eye State Identification. Appl. Sci. 2023, 13, 7849. [Google Scholar] [CrossRef]

- Adhithyaa, N.; Tamilarasi, A.; Sivabalaselvamani, D.; Rahunathan, L. Face Positioned Driver Drowsiness Detection Using Multistage Adaptive 3D Convolutional Neural Network. Inf. Technol. Control 2023, 52, 713–730. [Google Scholar] [CrossRef]

- Ahmed, M.; Masood, S.; Ahmad, M.; Abd El-Latif, A.A. Intelligent Driver Drowsiness Detection for Traffic Safety Based on Multi CNN Deep Model and Facial Subsampling. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19743–19752. [Google Scholar] [CrossRef]

- Akrout, B.; Fakhfakh, S. How to Prevent Drivers before Their Sleepiness Using Deep Learning-Based Approach. Electronics 2023, 12, 965. [Google Scholar] [CrossRef]

- Alameen, S.A.; Alhothali, A.M. A Lightweight Driver Drowsiness Detection System Using 3DCNN with LSTM. Comput. Syst. Sci. Eng. 2022, 44, 895–912. [Google Scholar] [CrossRef]

- Alghanim, M.; Attar, H.; Rezaee, K.; Khosravi, M.; Solyman, A.; Kanan, M.A. A Hybrid Deep Neural Network Approach to Recognize Driving Fatigue Based on EEG Signals. Int. J. Intell. Syst. 2024, 2024, 9898333. [Google Scholar] [CrossRef]

- Alguindigue, J.; Singh, A.; Narayan, A.; Samuel, S. Biosignals Monitoring for Driver Drowsiness Detection Using Deep Neural Networks. IEEE Access 2024, 12, 93075–93086. [Google Scholar] [CrossRef]

- Almazroi, A.A.; Alqarni, M.A.; Aslam, N.; Shah, R.A. Real-Time CNN-Based Driver Distraction & Drowsiness Detection System. Intell. Autom. Soft Comput. 2023, 37, 2153–2174. [Google Scholar] [CrossRef]

- Anber, S.; Alsaggaf, W.; Shalash, W. A Hybrid Driver Fatigue and Distraction Detection Model Using AlexNet Based on Facial Features. Electronics 2022, 11, 285. [Google Scholar] [CrossRef]

- Ansari, S.; Naghdy, F.; Du, H.; Pahnwar, Y.N. Driver Mental Fatigue Detection Based on Head Posture Using New Modified reLU-BiLSTM Deep Neural Network. IEEE Trans. Intell. Transp. Syst. 2022, 23, 10957–10969. [Google Scholar] [CrossRef]

- Arefnezhad, S.; Samiee, S.; Eichberger, A.; Frühwirth, M.; Kaufmann, C.; Klotz, E. Applying deep neural networks for multi-level classification of driver drowsiness using Vehicle-based measures. Expert Syst. Appl. 2020, 162, 113778. [Google Scholar] [CrossRef]

- Bearly, E.M.; Chitra, R. Automatic drowsiness detection for preventing road accidents via 3dgan and three-level attention. Multimed. Tools Appl. 2024, 83, 48261–48274. [Google Scholar] [CrossRef]

- Bekhouche, S.E.; Ruichek, Y.; Dornaika, F. Driver drowsiness detection in video sequences using hybrid selection of deep features. Knowl. Based Syst. 2022, 252, 109436. [Google Scholar] [CrossRef]

- Benmohamed, A.; Zarzour, H. A Deep Learning-Based System for Driver Fatigue Detection. Ing. Des Syst. D’information 2024, 29, 1779–1788. [Google Scholar] [CrossRef]

- Chen, J.; Wang, H.; Wang, S.; He, E.; Zhang, T.; Wang, L. Convolutional neural network with transfer learning approach for detection of unfavorable driving state using phase coherence image. Expert Syst. Appl. 2022, 187, 116016. [Google Scholar] [CrossRef]

- Chen, J.; Wang, S.; He, E.; Wang, H.; Wang, L. Recognizing drowsiness in young men during real driving based on electroencephalography using an end-to-end deep learning approach. Biomed. Signal. Process. Control 2021, 69, 102792. [Google Scholar] [CrossRef]

- Chen, J.; Wang, S.; He, E.; Wang, H.; Wang, L. Two-dimensional phase lag index image representation of electroencephalography for automated recognition of driver fatigue using convolutional neural network. Expert Syst. Appl. 2022, 191, 116339. [Google Scholar] [CrossRef]

- Chen, C.; Ji, Z.; Sun, Y.; Bezerianos, A.; Thakor, N.; Wang, H. Self-Attentive Channel-Connectivity Capsule Network for EEG-Based Driving Fatigue Detection. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 3152–3162. [Google Scholar] [CrossRef]

- Civik, E.; Yuzgec, U. Real-time driver fatigue detection system with deep learning on a low-cost embedded system. Microprocess. Microsyst. 2023, 99, 104851. [Google Scholar] [CrossRef]

- Cui, J.; Lan, Z.; Liu, Y.; Li, R.; Li, F.; Sourina, O.; Müller-Wittig, W. A compact and interpretable convolutional neural network for cross-subject driver drowsiness detection from single-channel EEG. Methods 2022, 202, 173–184. [Google Scholar] [CrossRef]

- Ding, N.; Zhang, C.; Eskandarian, A. EEG-fest: Few-shot based attention network for driver’s drowsiness estimation with EEG signals. Biomed. Phys. Eng. Express 2024, 10, 015008. [Google Scholar] [CrossRef] [PubMed]

- Dua, M.; Shakshi; Singla, R.; Raj, S.; Jangra, A. Deep CNN models-based ensemble approach to driver drowsiness detection. Neural Comput. Appl. 2021, 33, 3155–3168. [Google Scholar] [CrossRef]

- Ebrahimian, S.; Nahvi, A.; Tashakori, M.; Salmanzadeh, H.; Mohseni, O.; Leppänen, T. Multi-Level Classification of Driver Drowsiness by Simultaneous Analysis of ECG and Respiration Signals Using Deep Neural Networks. Int. J. Environ. Res. Public Health 2022, 19, 10736. [Google Scholar] [CrossRef]

- Fa, S.; Yang, X.; Han, S.; Feng, Z.; Chen, Y. Multi-scale spatial–temporal attention graph convolutional networks for driver fatigue detection. J. Vis. Commun. Image Represent. 2023, 93, 103826. [Google Scholar] [CrossRef]

- Feng, X.; Guo, Z.; Kwong, S. ID3RSNet: Cross-subject driver drowsiness detection from raw single-channel EEG with an interpretable residual shrinkage network. Front. Neurosci. 2024, 18, 1508747. [Google Scholar] [CrossRef] [PubMed]

- Feng, X.; Dai, S.; Guo, Z. Pseudo-label-assisted subdomain adaptation network with coordinate attention for EEG-based driver drowsiness detection. Biomed. Signal. Process. Control 2025, 101, 107132. [Google Scholar] [CrossRef]

- Feng, W.; Wang, X.; Xie, J.; Liu, W.; Qiao, Y.; Liu, G. Real-Time EEG-Based Driver Drowsiness Detection Based on Convolutional Neural Network With Gumbel-Softmax Trick. IEEE Sens. J. 2025, 25, 1860–1871. [Google Scholar] [CrossRef]

- Guo, J.M.; Markoni, H. Driver drowsiness detection using hybrid convolutional neural network and long short-term memory. Multimed. Tools Appl. 2019, 78, 29059–29087. [Google Scholar] [CrossRef]

- He, H.; Zhang, X.; Jiang, F.; Wang, C.; Yang, Y.; Liu, W.; Peng, J. A Real-time Driver Fatigue Detection Method Based on Two-Stage Convolutional Neural Network. IFAC-PapersOnLine 2020, 53, 15374–15379. [Google Scholar] [CrossRef]

- He, L.; Zhang, L.; Sun, Q.; Lin, X.T. A generative adaptive convolutional neural network with attention mechanism for driver fatigue detection with class-imbalanced and insufficient data. Behav. Brain Res. 2024, 464, 114898. [Google Scholar] [CrossRef]

- Huang, R.; Wang, Y.; Li, Z.; Lei, Z.; Xu, Y. RF-DCM: Multi-Granularity Deep Convolutional Model Based on Feature Recalibration and Fusion for Driver Fatigue Detection. IEEE Trans. Intell. Transp. Syst. 2022, 23, 630–640. [Google Scholar] [CrossRef]

- Hultman, M.; Johansson, I.; Lindqvist, F.; Ahlström, C. Driver sleepiness detection with deep neural networks using electrophysiological data. Physiol. Meas. 2021, 42, 034001. [Google Scholar] [CrossRef] [PubMed]

- Iwamoto, H.; Hori, K.; Fujiwara, K.; Kano, M. Real-driving-implementable drowsy driving detection method using heart rate variability based on long short-term memory and autoencoder. IFAC-PapersOnLine 2021, 54, 526–531. [Google Scholar] [CrossRef]

- Jamshidi, S.; Azmi, R.; Sharghi, M.; Soryani, M. Hierarchical deep neural networks to detect driver drowsiness. Multimed. Tools Appl. 2021, 80, 16045–16058. [Google Scholar] [CrossRef]

- Jarndal, A.; Tawfik, H.; Siam, A.I.; Alsyouf, I.; Cheaitou, A. A Real-Time Vision Transformers-Based System for Enhanced Driver Drowsiness Detection and Vehicle Safety. IEEE Access 2025, 13, 1790–1803. [Google Scholar] [CrossRef]

- Jia, H.; Xiao, Z.; Ji, P. Real-time fatigue driving detection system based on multi-module fusion. Comput. Graph. 2022, 108, 22–33. [Google Scholar] [CrossRef]

- Jiao, Y.; Jiang, F. Detecting slow eye movements with bimodal-LSTM for recognizing drivers’ sleep onset period. Biomed. Signal. Process. Control 2022, 75, 103608. [Google Scholar] [CrossRef]

- Jiao, Y.; He, X.; Jiao, Z. Detecting slow eye movements using multi-scale one-dimensional convolutional neural network for driver sleepiness detection. J. Neurosci. Methods 2023, 397, 109939. [Google Scholar] [CrossRef]

- Kielty, P.; Dilmaghani, M.S.; Shariff, W.; Ryan, C.; Lemley, J.; Corcoran, P. Neuromorphic Driver Monitoring Systems: A Proof-of-Concept for Yawn Detection and Seatbelt State Detection using an Event Camera. IEEE Access 2023, 11, 96363–96373. [Google Scholar] [CrossRef]

- Savaş, B.K.; Becerikli, Y. Behavior-based driver fatigue detection system with deep belief network. Neural Comput. Appl. 2022, 34, 14053–14065. [Google Scholar] [CrossRef]

- Kumar, V.; Sharma, S.; Ranjeet. Driver drowsiness detection using modified deep learning architecture. Evol. Intell. 2023, 16, 1907–1916. [Google Scholar] [CrossRef]

- Lamaazi, H.; Alqassab, A.; Fadul, R.A.; Mizouni, R. Smart Edge-Based Driver Drowsiness Detection in Mobile Crowdsourcing. IEEE Access 2023, 11, 21863–21872. [Google Scholar] [CrossRef]

- Latreche, I.; Slatnia, S.; Kazar, O.; Harous, S. An optimized deep hybrid learning for multi-channel EEG-based driver drowsiness detection. Biomed. Signal. Process. Control 2025, 99, 106881. [Google Scholar] [CrossRef]

- Li, Q.; Luo, Z.; Qi, R.; Zheng, J. Automatic Searching of Lightweight and High-Performing CNN Architectures for EEG-Based Driving Fatigue Detection. IEEE Trans. Instrum. Meas. 2024, 73, 1–11. [Google Scholar] [CrossRef]

- Li, T.; Li, C. A Deep Learning Model Based on Multi-Granularity Facial Features and LSTM Network for Driver Drowsiness Detection. J. Appl. Sci. Eng. 2024, 27, 2799–2811. [Google Scholar] [CrossRef]

- Lin, X.; Huang, Z.; Ma, W.; Tang, W. EEG-based driver drowsiness detection based on simulated driving environment. Neurocomputing 2025, 616, 128961. [Google Scholar] [CrossRef]

- Majeed, F.; Shafique, U.; Safran, M.; Alfarhood, S.; Ashraf, I. Detection of Drowsiness among Drivers Using Novel Deep Convolutional Neural Network Model. Sensors 2023, 23, 8741. [Google Scholar] [CrossRef] [PubMed]

- Mate, P.; Apte, N.; Parate, M.; Sharma, S. Detection of driver drowsiness using transfer learning techniques. Multimed. Tools Appl. 2024, 83, 35553–35582. [Google Scholar] [CrossRef]

- Min, J.; Cai, M.; Gou, C.; Xiong, C.; Yao, X. Fusion of forehead EEG with machine vision for real-time fatigue detection in an automatic processing pipeline. Neural Comput. Appl. 2023, 35, 8859–8872. [Google Scholar] [CrossRef]

- Mukherjee, P.; Roy, A.H. A novel deep learning-based technique for driver drowsiness detection. Hum. Factors Ergon. Manuf. Serv. Ind. 2024, 34, 667–684. [Google Scholar] [CrossRef]

- Nandyal, S.; Sharanabasappa, S. Deep ResNet 18 and enhanced firefly optimization algorithm for on-road vehicle driver drowsiness detection. J. Auton. Intell. 2024, 7. [Google Scholar] [CrossRef]

- Bin Obaidan, H.; Hussain, M.; AlMajed, R. EEG_DMNet: A Deep Multi-Scale Convolutional Neural Network for Electroencephalography-Based Driver Drowsiness Detection. Electronics 2024, 13, 2084. [Google Scholar] [CrossRef]

- Paulo, J.R.; Pires, G.; Nunes, U.J. Cross-Subject Zero Calibration Driver’s Drowsiness Detection: Exploring Spatiotemporal Image Encoding of EEG Signals for Convolutional Neural Network Classification. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 905–915. [Google Scholar] [CrossRef] [PubMed]

- Peng, Y.; Deng, H.; Xiang, G.; Wu, X.; Yu, X.; Li, Y.; Yu, T. A Multi-Source Fusion Approach for Driver Fatigue Detection Using Physiological Signals and Facial Image. IEEE Trans. Intell. Transp. Syst. 2024, 25, 16614–16624. [Google Scholar] [CrossRef]

- Priyanka, S.; Shanthi, S.; Saran Kumar, A.; Praveen, V. Data fusion for driver drowsiness recognition: A multimodal perspective. Egypt. Inform. J. 2024, 27, 100529. [Google Scholar] [CrossRef]

- Quddus, A.; Shahidi Zandi, A.; Prest, L.; Comeau, F.J.E. Using long short term memory and convolutional neural networks for driver drowsiness detection. Accid. Anal. Prev. 2021, 156, 106107. [Google Scholar] [CrossRef]

- Ramzan, M.; Abid, A.; Fayyaz, M.; Alahmadi, T.J.; Nobanee, H.; Rehman, A. A Novel Hybrid Approach for Driver Drowsiness Detection Using a Custom Deep Learning Model. IEEE Access 2024, 12, 126866–126884. [Google Scholar] [CrossRef]

- Sedik, A.; Marey, M.; Mostafa, H. An Adaptive Fatigue Detection System Based on 3D CNNs and Ensemble Models. Symmetry 2023, 15, 1274. [Google Scholar] [CrossRef]

- Shalash, W.M. A Deep Learning CNN Model for Driver Fatigue Detection Using Single EEG Channel. J. Theor. Appl. Inf. Technol. 2021, 31, 462–477. [Google Scholar]

- Sharanabasappa; Nandyal, S. An ensemble learning model for driver drowsiness detection and accident prevention using the behavioral features analysis. Int. J. Intell. Comput. Cybern. 2022, 15, 224–244. [Google Scholar] [CrossRef]

- Sohail, A.; Shah, A.A.; Ilyas, S.; Alshammry, N. A CNN-based Deep Learning Framework for Driver’s Drowsiness Detection. Int. J. Adv. Comput. Sci. Appl. 2024, 15, 169–178. [Google Scholar] [CrossRef]

- Sun, Z.; Miao, Y.; Jeon, J.Y.; Kong, Y.; Park, G. Facial feature fusion convolutional neural network for driver fatigue detection. Eng. Appl. Artif. Intell. 2023, 126, 106981. [Google Scholar] [CrossRef]

- Tang, J.; Zhou, W.; Zheng, W.; Zeng, Z.; Li, J.; Su, R.; Adili, T.; Chen, W.; Chen, C.; Luo, J. Attention-Guided Multiscale Convolutional Neural Network for Driving Fatigue Detection. IEEE Sens. J. 2024, 24, 23280–23290. [Google Scholar] [CrossRef]

- Turki, A.; Kahouli, O.; Albadran, S.; Ksantini, M.; Aloui, A.; Ben Amara, M. A sophisticated Drowsiness Detection System via Deep Transfer Learning for real time scenarios. AIMS Math. 2024, 9, 3211–3234. [Google Scholar] [CrossRef]

- Vijaypriya, V.; Uma, M. Facial Feature-Based Drowsiness Detection with Multi-Scale Convolutional Neural Network. IEEE Access 2023, 11, 63417–63429. [Google Scholar] [CrossRef]

- Wang, K.; Mao, X.; Song, Y.; Chen, Q. EEG-based fatigue state evaluation by combining complex network and frequency-spatial features. J. Neurosci. Methods 2025, 416, 110385. [Google Scholar] [CrossRef]

- Wijnands, J.S.; Thompson, J.; Nice, K.A.; Aschwanden, G.D.P.A.; Stevenson, M. Real-time monitoring of driver drowsiness on mobile platforms using 3D neural networks. Neural Comput. Appl. 2020, 32, 9731–9743. [Google Scholar] [CrossRef]

- Yang, H.; Liu, L.; Min, W.; Yang, X.; Xiong, X. Driver Yawning Detection Based on Subtle Facial Action Recognition. IEEE Trans. Multimed. 2021, 23, 572–583. [Google Scholar] [CrossRef]

- Yang, E.; Yi, O. Enhancing Road Safety: Deep Learning-Based Intelligent Driver Drowsiness Detection for Advanced Driver-Assistance Systems. Electronics 2024, 13, 708. [Google Scholar] [CrossRef]

- Yang, K.; Zhang, K.; Hu, Y.; Xu, J.; Yang, B.; Kong, W.; Zhang, J. Adaptive multi-branch CNN of integrating manual features and functional network for driver fatigue detection. Biomed. Signal. Process. Control 2025, 102, 107262. [Google Scholar] [CrossRef]

- You, F.; Li, X.; Gong, Y.; Wang, H.; Li, H. A Real-time Driving Drowsiness Detection Algorithm with Individual Differences Consideration. IEEE Access 2019, 7, 179396–179408. [Google Scholar] [CrossRef]

- Zeghlache, R.; Labiod, M.A.; Mellouk, A. Driver vigilance estimation with Bayesian LSTM Auto-encoder and XGBoost using EEG/EOG data. IFAC-PapersOnLine 2022, 55, 89–94. [Google Scholar] [CrossRef]

- Zhang, H.; Liu, T.; Lyu, J.; Chen, D.; Yuan, Z. Integrate memory mechanism in multi-granularity deep framework for driver drowsiness detection. Intell. Robot. 2023, 3, 614–631. [Google Scholar] [CrossRef]

| Quartile Ranking | Number of Studies |
|---|---|
| Q1 | 48 |
| Q2 | 20 |
| Q3 | 11 |
| Q4 | 2 |

| Metric | Median | Std. Dev. | Q1 (25%) | Q3 (75%) |
|---|---|---|---|---|
| Accuracy | 0.952 | 0.072 | 0.904 | 0.979 |
| Precision | 0.956 | 0.077 | 0.912 | 0.980 |
| Recall | 0.953 | 0.077 | 0.918 | 0.980 |
| F1-score | 0.953 | 0.083 | 0.903 | 0.976 |
| AUC-ROC | 0.975 | 0.101 | 0.957 | 0.990 |
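
The pooled benchmarks above are descriptive statistics computed over per-study metrics, and each per-study metric in turn derives from confusion-matrix counts. The sketch below illustrates both steps under stated assumptions. It treats "drowsy" as the positive class, and the `ConfusionCounts` container and helper names are hypothetical. For quartiles it uses Python's `statistics.quantiles`, whose default "exclusive" interpolation may differ from the method used in this review. AUC-ROC is omitted because it requires continuous classifier scores rather than counts.

```python
import statistics
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # drowsy windows correctly flagged
    fp: int  # alert windows wrongly flagged (false alarms)
    fn: int  # drowsy windows missed
    tn: int  # alert windows correctly left unflagged


def classification_metrics(c: ConfusionCounts) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for the 'drowsy' (positive) class."""
    total = c.tp + c.fp + c.fn + c.tn
    if total == 0:
        raise ValueError("no samples")
    precision = c.tp / (c.tp + c.fp) if c.tp + c.fp else 0.0
    recall = c.tp / (c.tp + c.fn) if c.tp + c.fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def summarize(per_study: Sequence[float]) -> Dict[str, float]:
    """Median, sample SD, and quartiles across studies (needs >= 2 values).

    statistics.quantiles uses the 'exclusive' method by default; other tools
    may interpolate quartiles differently.
    """
    q1, _, q3 = statistics.quantiles(per_study, n=4)
    return {
        "median": statistics.median(per_study),
        "std": statistics.stdev(per_study),
        "q1": q1,
        "q3": q3,
    }


if __name__ == "__main__":
    m = classification_metrics(ConfusionCounts(tp=90, fp=5, fn=10, tn=95))
    print({k: round(v, 3) for k, v in m.items()})
    # {'accuracy': 0.925, 'precision': 0.947, 'recall': 0.9, 'f1': 0.923}
```

Keeping false positives and false negatives as separate counts makes the trade-off explicit. A detector can show high accuracy on a balanced test set while still producing many false alarms, and only precision reveals this.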

| Metric | Simulated | Real-World |
|---|---|---|
| Accuracy (median) | 0.958 | 0.977 |
| F1-score (median) | 0.948 | 0.972 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fonseca, T.; Ferreira, S. Drowsiness Detection in Drivers: A Systematic Review of Deep Learning-Based Models. Appl. Sci. 2025, 15, 9018. https://doi.org/10.3390/app15169018
