Neural–Computer Interfaces: Theory, Practice, Perspectives

Abstract

1. Introduction

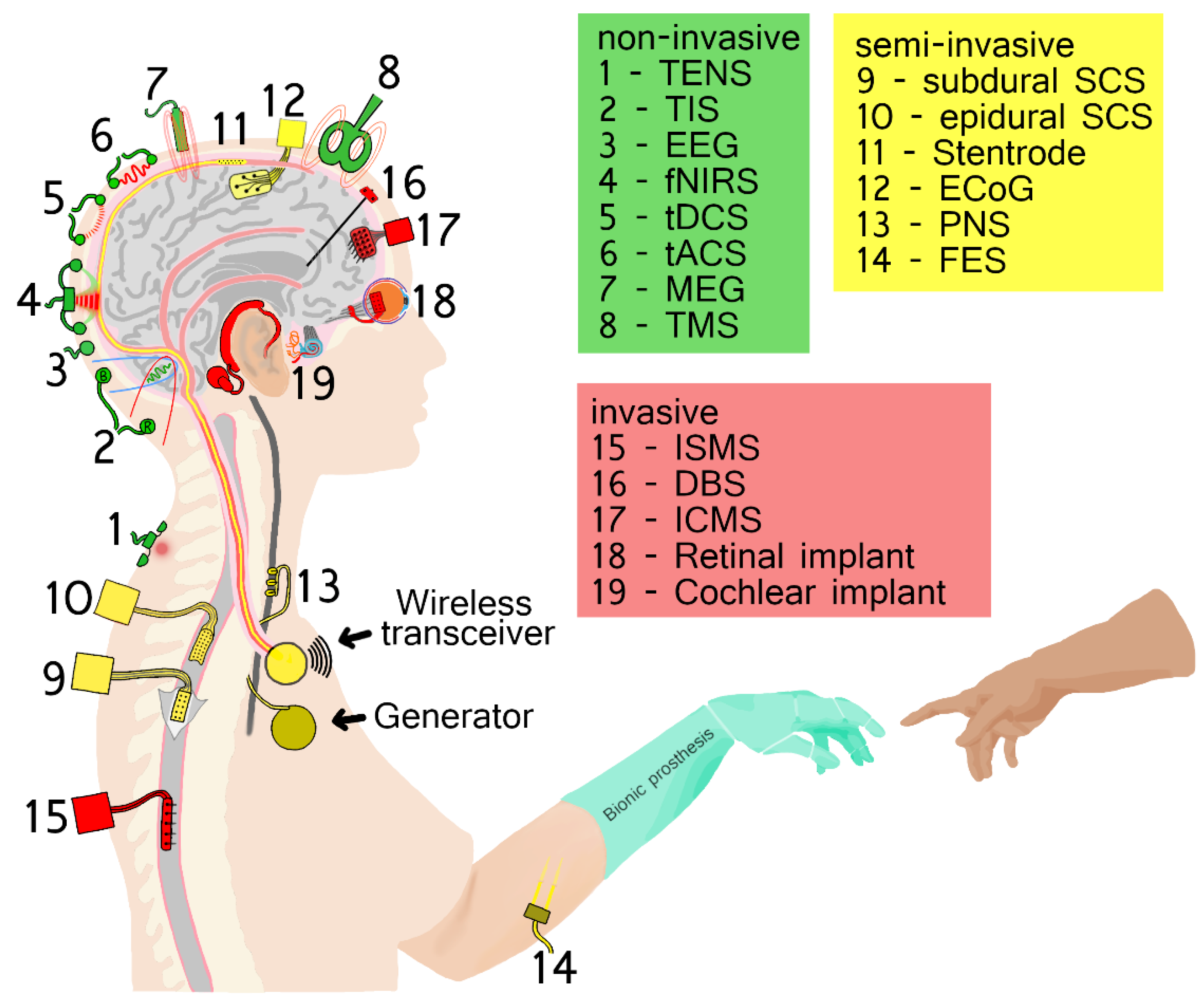

1.1. Justification for the Neural–Computer Interface Category

1.2. Main Types and Design Principles of Neural–Computer Interfaces (NCIs)

2. Basic Principles of Signal Conversion and Processing for NCIs

2.1. Signal Processing Pipeline in BCIs

- (a) Signal conversion

- (b) Signal transmission

- (c) Data preprocessing

- (d) Data extraction

- (e) Classification

- (f) Command execution

- (g) Feedback
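The seven stages listed above can be read as a chain of functions, each consuming the output of the previous one. The sketch below is only an illustration of that ordering: every function name, the 0.5 µV quantization step, the mean-power feature, and the fixed threshold are assumptions made for the example, not details given in the text.

```python
from statistics import fmean


def convert(analog_uv, lsb_uv=0.5):
    """(a) Signal conversion: quantize microvolt samples to integer ADC codes."""
    return [round(v / lsb_uv) for v in analog_uv]


def transmit(codes):
    """(b) Signal transmission: stand-in for a wired or wireless link."""
    return list(codes)


def preprocess(codes, lsb_uv=0.5):
    """(c) Data preprocessing: rescale to microvolts and remove the DC offset."""
    samples = [c * lsb_uv for c in codes]
    offset = fmean(samples)
    return [s - offset for s in samples]


def extract(samples):
    """(d) Data extraction: a single feature, the mean signal power."""
    return fmean([s * s for s in samples])


def classify(power, threshold=25.0):
    """(e) Classification: a fixed threshold stands in for a trained model."""
    return "active" if power > threshold else "rest"


def execute(label):
    """(f) Command execution: map the class label to an actuator command."""
    return {"active": "MOVE", "rest": "HOLD"}[label]


def run_pipeline(analog_uv):
    """Run stages (a) to (f) and return the result shown to the user."""
    label = classify(extract(preprocess(transmit(convert(analog_uv)))))
    command = execute(label)
    # (g) Feedback: the returned record is what the user would see or feel.
    return {"label": label, "command": command}


strong = run_pipeline([10.0, -10.0, 10.0, -10.0])
weak = run_pipeline([1.0, -1.0, 1.0, -1.0])
```

In a real system each stage would be far richer (filtering, artifact rejection, a trained classifier), but the data flow between stages would keep this shape.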

2.2. Basic Principles of Data Processing for CBIs

- (a) Data transformation and encoding modules

- (b) Stimulation module

2.3. Data Processing Specifics for BBIs

3. Brain–Computer Interfaces (BCIs) by Degree of Invasiveness with Examples

3.1. Non-Invasive BCIs

- (a) BCIs based on electroencephalography (EEG) and magnetoencephalography (MEG)

- (b) Motor Imagery (MI) BCI

- (c) P300 Speller

- (d) BCIs Based on SSVEP(F)s (Steady-State Visual Evoked Potentials (Fields))

- (e) Passive and Hybrid BCIs

- (f) MEG as a Method of Non-Invasive Brain Activity Recording for BCI

- (g) BCI Based on Near-Infrared Spectroscopy (NIRS)

- (h) Eye–Brain–Computer Interfaces (EBCIs)

3.2. Semi-Invasive BCIs

- (a) Epidural Electrocorticography (eECoG)

- (b) Subdural Electrocorticography (sECoG)

- (c) Stentrodes

3.3. Invasive BCIs

- (a) BrainGate

- (b) BrainGate2: Speech recognition

- (c) Neuroport arrays: BCI-based Virtual Environment/Object Control

- (d) BCI (Neuroport arrays) + Functional Electrical Stimulation (FES)

- (e) Neuralink

- (f) Paradromics

4. Computer–Brain Interfaces (CBIs) by Degree of Invasiveness with Examples

4.1. Non-Invasive CBIs

- (a) Transcranial Magnetic Stimulation (TMS)

- (b) Transcranial Electrical Stimulation (TES)

- (c) Transcranial Focused Ultrasound Stimulation (tFUS)

4.2. Semi-Invasive CBIs

- (a) Peripheral Nerve Stimulation (PNS)

- (b) Epidural Spinal Cord Stimulation (SCS)

4.3. Invasive CBIs

- (a) Cochlear Implants

- (b) Retinal implants

- (c) Intracortical Microstimulation (ICMS)

5. Brain-to-Brain Interfaces (BBIs)

6. Modeling Neural–Computer Interfaces

6.1. Modeling BCIs

- (a) Feature analysis and optimization methods

- (b) Classification

6.2. Modeling CBIs

6.3. Modeling BBIs

7. Evaluation of Neural–Computer Interface Effectiveness

- (a) Accuracy measures the proportion of correct predictions (both detected commands and correctly recognized absence of a command) among all classified cases, whether labeled “positive” or “negative.”

- where:

- (b) Precision indicates the proportion of correctly recognized target commands among all cases where the system detected a command. This metric is useful when commands are rare; with balanced classes, accuracy may be the better measure.

- where:

- (c) Sensitivity (recall) is the proportion of neural events corresponding to intended commands that the system correctly recognizes; every missed command event lowers it. This metric is crucial in tasks where missing user intentions is unacceptable, such as prosthetic control.

- where:

- (d) Specificity (selectivity) is the proportion of neural events unrelated to commands that the system correctly rejects; every event erroneously identified as a command lowers it. This metric is important in tasks where false activations must be minimized.

- where:
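The four metrics in items (a) to (d) are all ratios over confusion-matrix counts. A minimal sketch, assuming the conventional symbols TP, TN, FP, and FN (true/false positives/negatives; these names and the `ConfusionCounts` container are assumptions for illustration, not notation taken from the text):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int  # commands correctly detected
    tn: int  # non-command events correctly rejected
    fp: int  # non-command events mistaken for commands
    fn: int  # commands that were missed


def _ratio(numerator: int, denominator: int) -> float:
    # An undefined ratio (empty denominator) is reported as NaN rather than 0.
    return numerator / denominator if denominator else float("nan")


def accuracy(c: ConfusionCounts) -> float:
    return _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn)


def precision(c: ConfusionCounts) -> float:
    return _ratio(c.tp, c.tp + c.fp)


def sensitivity(c: ConfusionCounts) -> float:
    return _ratio(c.tp, c.tp + c.fn)


def specificity(c: ConfusionCounts) -> float:
    return _ratio(c.tn, c.tn + c.fp)


# Hypothetical session: 50 intended commands and 50 non-command events.
session = ConfusionCounts(tp=40, tn=45, fp=5, fn=10)
```

For this hypothetical session the decoder misses 10 of 50 commands (sensitivity 0.8) and fires falsely on 5 of 50 non-command events (specificity 0.9), which shows why the two are reported separately.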

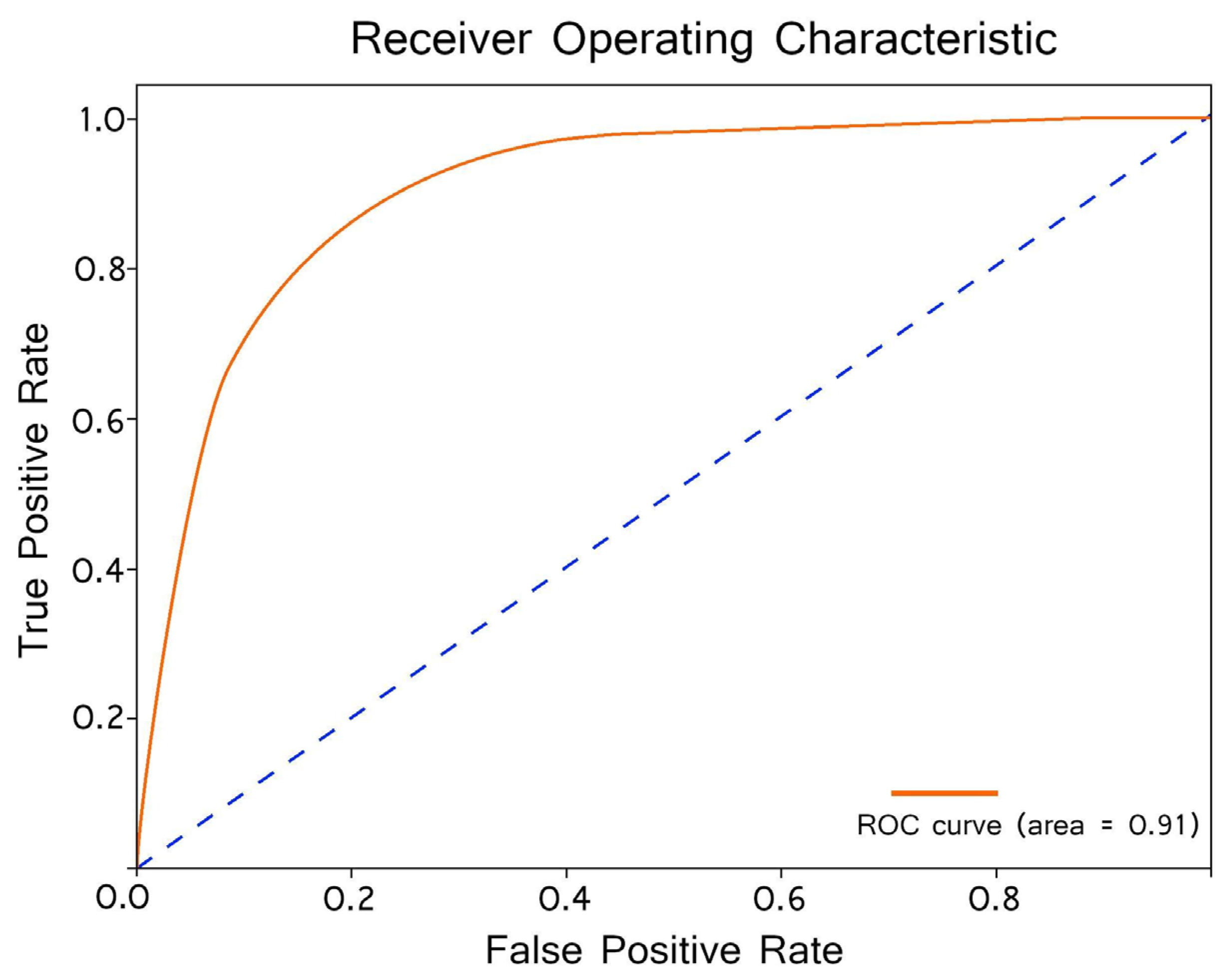

- AUC (Area Under Curve)
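One common way to compute the AUC of a ROC curve is the rank interpretation: the probability that a randomly chosen command event receives a higher classifier score than a randomly chosen non-command event, with ties counted as one half. The sketch below assumes binary labels (1 for command, 0 for non-command) and real-valued scores; the function name `roc_auc` is hypothetical.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison of scores."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # tie counts as half a win
    return wins / (len(positives) * len(negatives))


example_auc = roc_auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
```

The pairwise loop is quadratic in the number of events, which is fine for illustration; a sort-based version would be preferable for long recordings.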

- (e) The speed of a neural–computer interface encompasses two aspects: latency and operational throughput. Latency is the time between signal registration and command issuance. Operational throughput is the amount of information transmitted per unit of time. Speed is determined by every stage in the chain: the analog-to-digital converter of neural signals, the transmission segment, the processing module, the software-hardware processor, the translator into commands for the actuator, and the actuator itself. Throughput is generally expressed as the information transfer rate (ITR) [216,217], measured in bits per second or per minute; it does not account for semantic load, which is entirely defined by the developer (6).

- where:
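As an illustration of how ITR is typically computed, the sketch below assumes the widely used Wolpaw definition, in which N equally likely targets are selected with accuracy P and errors are spread evenly over the remaining N − 1 targets. Whether this matches the expression referred to as (6) cannot be confirmed from the text, and the function and parameter names are hypothetical.

```python
import math


def bits_per_selection(n_targets: int, p_correct: float) -> float:
    """Wolpaw-style information per selection, in bits."""
    if n_targets < 2:
        raise ValueError("need at least two targets")
    if not 0.0 <= p_correct <= 1.0:
        raise ValueError("p_correct must lie in [0, 1]")
    bits = math.log2(n_targets)
    if p_correct > 0.0:  # the term P*log2(P) tends to 0 as P tends to 0
        bits += p_correct * math.log2(p_correct)
    if p_correct < 1.0:  # the error term vanishes when P = 1
        bits += (1.0 - p_correct) * math.log2((1.0 - p_correct) / (n_targets - 1))
    return bits


def itr_bits_per_minute(n_targets: int, p_correct: float,
                        seconds_per_selection: float) -> float:
    """Scale bits per selection by the number of selections per minute."""
    if seconds_per_selection <= 0.0:
        raise ValueError("seconds_per_selection must be positive")
    return bits_per_selection(n_targets, p_correct) * 60.0 / seconds_per_selection
```

Under this definition a four-target interface operating at chance level (P = 0.25) transfers zero bits, while a perfect one transfers log2(4) = 2 bits per selection, so both accuracy and selection time bound the achievable rate.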

- (f) Criteria for Evaluating the Effectiveness of CBIs and BBIs

- (g) Clinical Criteria for Evaluating Motor NCIs

8. Perspectives for the Development of Neural–Computer Interfaces

- (a) Minimally invasive and targeted stimulation technologies

- (b) Acoustic and optical imaging for decoding and stimulation

- (c) Autonomous, wireless, and energy-efficient NCI systems

- (d) Accelerated and neuromorphic computation

- (e) Multimodal electrochemical interfaces and personalized implants

- (f) Toward bidirectional chemical-electrical NCIs

- (g) Cognitive-adaptive NCI systems and intention decoding

- (h) Energy autonomy and high-resolution interfaces

9. Conclusions

- (a) NCIs as bridges between psychophysiological worlds

- (b) Current limitations and future directions in decoding and stimulation

- (c) Inter-agent BBI and collective intelligence

- (d) Neurofantasia: The Mind Expanded—Towards Hypersenses and Neuroethics

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| ACSP | Adaptive Common Spatial Pattern |
| ADC | Analog-to-Digital Converter |
| ALS | Amyotrophic Lateral Sclerosis |
| AMD | Age-Related Macular Degeneration |
| ARAT | Action Research Arm Test |
| ASIA | American Spinal Injury Association |
| AUC | Area Under Curve |
| BBI | Brain–Brain Interface |
| BCBI | Brain–Computer–Brain Interface |
| BCI | Brain–Computer Interface |
| BSI | Brain–Spine Interface |
| CBI | Computer–Brain Interface |
| CCPM | Correct Characters Per Minute |
| CNN | Convolutional Neural Network |
| CNS | Central Nervous System |
| CSP | Common Spatial Pattern |
| DBS | Deep Brain Stimulation |
| DL | Deep Learning |
| DSP | Digital Signal Processor |
| EBCI | Eye–Brain–Computer Interface |
| ECoG | Electrocorticography |
| EEG | Electroencephalography |
| EMG | Electromyography |
| ERD | Event-Related Desynchronization |
| ERS | Event-Related Synchronization |
| ESN | Echo State Network |
| FEM | Finite-Element Modeling |
| FES | Functional Electrical Stimulation |
| FFT | Fast Fourier Transform |
| FM-stimulus | Frequency-Modulated Stimulus |
| (f)NIRS | (functional) Near-Infrared Spectroscopy |
| FPGA | Field-Programmable Gate Array |
| fUS | functional Ultrasound |
| GAIT | Gait Assessment and Intervention Tool |
| GMFCS | Gross Motor Function Classification System |
| GRU | Gated Recurrent Unit |
| IADL | Instrumental Activities of Daily Living |
| ICA | Independent Component Analysis |
| ICMS (ISMS) | Intracortical Microstimulation (Intraspinal Microstimulation) |
| ITR | Information Transfer Rate |
| LDA | Linear Discriminant Analysis |
| LFP | Local Field Potential |
| LSTM | Long Short-Term Memory |
| MDM | Memory Decoding Model |
| MEG | Magnetoencephalography |
| MI | Motor Imagery |
| MIMO | Multi-Input, Multi-Output |
| MIP | Molecular Imprinting Polymer |
| MISO | Multi-Input, Single-Output |
| MRI | Magnetic Resonance Imaging |
| NCI | Neural–Computer Interface |
| OPM | Optically Pumped Magnetometer |
| PCA | Principal Component Analysis |
| PNS | Peripheral Nerve Stimulation |
| PSoC | Programmable System on a Chip |
| RC | Reservoir Computing |
| RNN | Recurrent Neural Network |
| SCI | Spinal Cord Injury |
| SFR | Stimulation Failure Rate |
| SIN-stimulus | Sinusoidal Stimulus |
| SMR | Sensorimotor Rhythm |
| SQUID | Superconducting Quantum Interference Device |
| SSVEP(F) | Steady-State Visual Evoked Potential (Field) |
| SVM | Support Vector Machine |
| tACS (tDCS) | transcranial Alternating (Direct) Current Stimulation |
| TENS | Transcutaneous Electrical Nerve Stimulation |
| TES | Transcranial Electrical Stimulation |
| (t)FUS | (transcranial) Focused Ultrasound |
| TIS | Temporal Interference Stimulation |
| TMS | Transcranial Magnetic Stimulation |
| tRNS | transcranial Random Noise Stimulation |
| (t)SCS | (transcutaneous) Spinal Cord Stimulation |
| ts-MS | trans-spinal Magnetic Stimulation |
| WRF | Weighted Random Forests |

References

- Capogrosso, M.; Milekovic, T.; Borton, D.; Wagner, F.; Moraud, E.M.; Mignardot, J.-B.; Buse, N.; Gandar, J.; Barraud, Q.; Xing, D.; et al. A Brain–Spine Interface Alleviating Gait Deficits after Spinal Cord Injury in Primates. Nature 2016, 539, 284–288.

- Insausti-Delgado, A.; López-Larraz, E.; Nishimura, Y.; Ziemann, U.; Ramos-Murguialday, A. Non-Invasive Brain-Spine Interface: Continuous Control of Trans-Spinal Magnetic Stimulation Using EEG. Front. Bioeng. Biotechnol. 2022, 10, 975037.

- Lorach, H.; Galvez, A.; Spagnolo, V.; Martel, F.; Karakas, S.; Intering, N.; Vat, M.; Faivre, O.; Harte, C.; Komi, S.; et al. Walking Naturally after Spinal Cord Injury Using a Brain–Spine Interface. Nature 2023, 618, 126–133.

- Lakshmipriya, T.; Gopinath, S.C.B. Brain-Spine Interface for Movement Restoration after Spinal Cord Injury. Brain Spine 2024, 4, 102926.

- Capogrosso, M.; Gandar, J.; Greiner, N.; Moraud, E.M.; Wenger, N.; Shkorbatova, P.; Musienko, P.; Minev, I.; Lacour, S.; Courtine, G. Advantages of Soft Subdural Implants for the Delivery of Electrochemical Neuromodulation Therapies to the Spinal Cord. J. Neural Eng. 2018, 15, 026024.

- Mead, C. Neuromorphic Electronic Systems. Proc. IEEE 1990, 78, 1629–1636.

- Nicolelis, M.A.L. Brain–Machine Interfaces to Restore Motor Function and Probe Neural Circuits. Nat. Rev. Neurosci. 2003, 4, 417–422.

- Nicolelis, M.A.L.; Lebedev, M.A. Principles of Neural Ensemble Physiology Underlying the Operation of Brain–Machine Interfaces. Nat. Rev. Neurosci. 2009, 10, 530–540.

- Lebedev, M.A.; Nicolelis, M.A.L. Brain-Machine Interfaces: From Basic Science to Neuroprostheses and Neurorehabilitation. Physiol. Rev. 2017, 97, 767–837.

- Vidal, J.J. Toward Direct Brain-Computer Communication. Annu. Rev. Biophys. Bioeng. 1973, 2, 157–180.

- O’Doherty, J.E.; Lebedev, M.A.; Ifft, P.J.; Zhuang, K.Z.; Shokur, S.; Bleuler, H.; Nicolelis, M.A.L. Active Tactile Exploration Using a Brain–Machine–Brain Interface. Nature 2011, 479, 228–231.

- LaRocco, J.; Paeng, D.-G. Optimizing Computer–Brain Interface Parameters for Non-Invasive Brain-to-Brain Interface. Front. Neuroinform. 2020, 14, 1.

- Vakilipour, P.; Fekrvand, S. Brain-to-Brain Interface Technology: A Brief History, Current State, and Future Goals. Int. J. Dev. Neurosci. 2024, 84, 351–367.

- Haggard, P.; Chambon, V. Sense of Agency. Curr. Biol. 2012, 22, R390–R392.

- Ramakrishnan, A.; Ifft, P.J.; Pais-Vieira, M.; Byun, Y.W.; Zhuang, K.Z.; Lebedev, M.A.; Nicolelis, M.A.L. Computing Arm Movements with a Monkey Brainet. Sci. Rep. 2015, 5, 10767.

- Zander, T.O.; Jatzev, S. Detecting Affective Covert User States with Passive Brain-Computer Interfaces. In Proceedings of the 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops, Amsterdam, The Netherlands, 10–12 September 2009.

- Aricò, P.; Borghini, G.; Di Flumeri, G.; Sciaraffa, N.; Babiloni, F. Passive BCI beyond the Lab: Current Trends and Future Directions. Physiol. Meas. 2018, 39, 08TR02.

- Alimardani, M.; Hiraki, K. Passive Brain-Computer Interfaces for Enhanced Human-Robot Interaction. Front. Robot. AI 2020, 7, 125.

- Pisarchik, A.N.; Kurkin, S.A.; Shusharina, N.N.; Hramov, A.E. Passive Brain–Computer Interfaces for Cognitive and Pathological Brain Physiological States Monitoring and Control. In Brain-Computer Interfaces; Elsevier: Amsterdam, The Netherlands, 2025; pp. 345–388. ISBN 978-0-323-95439-6.

- Alrumiah, S.S.; Alhajjaj, L.A.; Alshobaili, J.F.; Ibrahim, D.M. A Review on Brain-Computer Interface Spellers: P300 Speller. Biosci. Biotechnol. Res. Commun. 2020, 13, 1191–1199.

- Schalk, G.; Mellinger, J. A Practical Guide to Brain–Computer Interfacing with BCI2000; Springer: London, UK, 2010; ISBN 978-1-84996-091-5.

- Lindgren, J.; Lecuyer, A. OpenViBE and Other BCI Software Platforms. In Brain–Computer Interfaces 2; Clerc, M., Bougrain, L., Lotte, F., Eds.; Wiley: Hoboken, NJ, USA, 2016; pp. 179–198. ISBN 978-1-84821-963-2.

- Limerick, H.; Coyle, D.; Moore, J.W. The Experience of Agency in Human-Computer Interactions: A Review. Front. Hum. Neurosci. 2014, 8, 643.

- Mohan, V.; Tay, W.P.; Basu, A. Towards Neuromorphic Compression Based Neural Sensing for Next-Generation Wireless Implantable Brain Machine Interface. Neuromorphic Comput. Eng. 2025, 5, 014004.

- Ajiboye, A.B.; Willett, F.R.; Young, D.R.; Memberg, W.D.; Murphy, B.A.; Miller, J.P.; Walter, B.L.; Sweet, J.A.; Hoyen, H.A.; Keith, M.W.; et al. Restoration of Reaching and Grasping in a Person with Tetraplegia through Brain-Controlled Muscle Stimulation: A Proof-of-Concept Demonstration. Lancet 2017, 389, 1821–1830.

- McFarland, D.J.; McCane, L.M.; David, S.V.; Wolpaw, J.R. Spatial Filter Selection for EEG-Based Communication. Electroencephalogr. Clin. Neurophysiol. 1997, 103, 386–394.

- Wang, P.T.; Gandasetiawan, K.; McCrimmon, C.M.; Karimi-Bidhendi, A.; Liu, C.Y.; Heydari, P.; Nenadic, Z.; Do, A.H. Feasibility of an Ultra-Low Power Digital Signal Processor Platform as a Basis for a Fully Implantable Brain-Computer Interface System. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 4491–4494.

- Guo, L.; Weiße, A.; Zeinolabedin, S.M.A.; Schüffny, F.M.; Stolba, M.; Ma, Q.; Wang, Z.; Scholze, S.; Dixius, A.; Berthel, M.; et al. 68-Channel Highly-Integrated Neural Signal Processing PSoC with On-Chip Feature Extraction, Compression, and Hardware Accelerators for Neuroprosthetics in 22nm FDSOI. arXiv 2024, arXiv:2407.09166.

- Bousseta, R.; El Ouakouak, I.; Gharbi, M.; Regragui, F. EEG Based Brain Computer Interface for Controlling a Robot Arm Movement Through Thought. IRBM 2018, 39, 129–135.

- Xie, P.; Men, Y.; Zhen, J.; Shao, X.; Zhao, J.; Chen, X. The Supernumerary Robotic Limbs of Brain-Computer Interface Based on Asynchronous Steady-State Visual Evoked Potential. Available online: https://english.biomedeng.cn/article/10.7507/1001-5515.202312056 (accessed on 10 February 2025).

- Wu, S.; Bhadra, K.; Giraud, A.-L.; Marchesotti, S. Adaptive LDA Classifier Enhances Real-Time Control of an EEG Brain–Computer Interface for Decoding Imagined Syllables. Brain Sci. 2024, 14, 196.

- Cajigas, I.; Davis, K.C.; Prins, N.W.; Gallo, S.; Naeem, J.A.; Fisher, L.; Ivan, M.E.; Prasad, A.; Jagid, J.R. Brain-Computer Interface Control of Stepping from Invasive Electrocorticography Upper-Limb Motor Imagery in a Patient with Quadriplegia. Front. Hum. Neurosci. 2023, 16, 1077416.

- Davis, K.C.; Wyse-Sookoo, K.R.; Raza, F.; Meschede-Krasa, B.; Prins, N.W.; Fisher, L.; Brown, E.N.; Cajigas, I.; Ivan, M.E.; Jagid, J.R.; et al. 5-Year Follow-up of a Fully Implanted Brain–Computer Interface in a Spinal Cord Injury Patient. J. Neural Eng. 2025, 22, 026050.

- Korovesis, N.; Kandris, D.; Koulouras, G.; Alexandridis, A. Robot Motion Control via an EEG-Based Brain–Computer Interface by Using Neural Networks and Alpha Brainwaves. Electronics 2019, 8, 1387.

- Willett, F.R.; Kunz, E.M.; Fan, C.; Avansino, D.T.; Wilson, G.H.; Choi, E.Y.; Kamdar, F.; Glasser, M.F.; Hochberg, L.R.; Druckmann, S.; et al. A High-Performance Speech Neuroprosthesis. Nature 2023, 620, 1031–1036.

- Gomez-Rivera, Y.A.; Cardona-Álvarez, Y.; Gomez-Morales, O.W.; Alvarez-Meza, A.M.; Castellanos-Domínguez, G. BCI-Based Real-Time Processing for Implementing Deep Learning Frameworks Using Motor Imagery Paradigms. J. Appl. Res. Technol. 2024, 22, 646–653.

- An, Y.; Mitchell, D.; Lathrop, J.; Flynn, D.; Chung, S.-J. Motor Imagery Teleoperation of a Mobile Robot Using a Low-Cost Brain-Computer Interface for Multi-Day Validation. arXiv 2024, arXiv:2412.08971.

- Zrenner, E.; Bartz-Schmidt, K.U.; Benav, H.; Besch, D.; Bruckmann, A.; Gabel, V.-P.; Gekeler, F.; Greppmaier, U.; Harscher, A.; Kibbel, S.; et al. Subretinal Electronic Chips Allow Blind Patients to Read Letters and Combine Them to Words. Proc. R. Soc. B Biol. Sci. 2011, 278, 1489–1497.

- Valle, G. Peripheral Neurostimulation for Encoding Artificial Somatosensations. Eur. J. Neurosci. 2022, 56, 5888–5901.

- Dendys, K.; Bieniasz, J.; Bigos, P.; Kuźnicki, W.; Matkowski, I.; Potyrała, P. Cochlear Implants—An Overview. Are CIs World’s Most Successful Sensory Prostheses? J. Educ. Health Sport 2023, 24, 126–142.

- Van Boxel, S.C.J.; Vermorken, B.L.; Volpe, B.; Guinand, N.; Perez-Fornos, A.; Devocht, E.M.J.; Van De Berg, R. The Vestibular Implant: Effects of Stimulation Parameters on the Electrically-Evoked Vestibulo-Ocular Reflex. Front. Neurol. 2024, 15, 1483067.

- Hao, M.; Chou, C.-H.; Zhang, J.; Yang, F.; Cao, C.; Yin, P.; Liang, W.; Niu, C.M.; Lan, N. Restoring Finger-Specific Sensory Feedback for Transradial Amputees via Non-Invasive Evoked Tactile Sensation. IEEE Open J. Eng. Med. Biol. 2020, 1, 98–107.

- Barss, T.S.; Parhizi, B.; Mushahwar, V.K. Transcutaneous Spinal Cord Stimulation of the Cervical Cord Modulates Lumbar Networks. J. Neurophysiol. 2020, 123, 158–166.

- Atkinson, C.; Lombardi, L.; Lang, M.; Keesey, R.; Hawthorn, R.; Seitz, Z.; Leuthardt, E.C.; Brunner, P.; Seáñez, I. Development and Evaluation of a Non-Invasive Brain-Spine Interface Using Transcutaneous Spinal Cord Stimulation. bioRxiv 2024.

- Lim, R.Y.; Jiang, M.; Ang, K.K.; Lin, X.; Guan, C. Brain-Computer-Brain System for Individualized Transcranial Alternating Current Stimulation with Concurrent EEG Recording: A Healthy Subject Pilot Study. In Proceedings of the 2024 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 15 July 2024; IEEE: Piscataway, NJ, USA; pp. 1–4.

- Terney, D.; Chaieb, L.; Moliadze, V.; Antal, A.; Paulus, W. Increasing Human Brain Excitability by Transcranial High-Frequency Random Noise Stimulation. J. Neurosci. 2008, 28, 14147–14155.

- McLaren, R.; Smith, P.F.; Taylor, R.L.; Ravindran, S.; Rashid, U.; Taylor, D. Efficacy of nGVS to Improve Postural Stability in People with Bilateral Vestibulopathy: A Systematic Review and Meta-Analysis. Front. Neurosci. 2022, 16, 1010239.

- Jovellar, D.B.; Roy, O.; Belardinelli, P.; Ziemann, U. Real-Time Brain State-Coupled Network-Targeted Transcranial Magnetic Stimulation to Enhance Working Memory. In Brain-Computer Interface Research; Guger, C., Azorin, J., Korostenskaja, M., Allison, B., Eds.; SpringerBriefs in Electrical and Computer Engineering; Springer Nature: Cham, Switzerland, 2025; pp. 67–79. ISBN 978-3-031-80496-0.

- Valle, G.; Alamri, A.H.; Downey, J.E.; Lienkämper, R.; Jordan, P.M.; Sobinov, A.R.; Endsley, L.J.; Prasad, D.; Boninger, M.L.; Collinger, J.L.; et al. Tactile Edges and Motion via Patterned Microstimulation of the Human Somatosensory Cortex. Science 2025, 387, 315–322.

- Tawakol, O.; Herman, M.D.; Foxley, S.; Mushahwar, V.K.; Towle, V.L.; Troyk, P.R. In-vivo Testing of a Novel Wireless Intraspinal Microstimulation Interface for Restoration of Motor Function Following Spinal Cord Injury. Artif. Organs 2024, 48, 263–273.

- Wang, Y.; Zeng, G.Q.; Wang, M.; Zhang, M.; Chang, C.; Liu, Q.; Wang, K.; Ma, R.; Wang, Y.; Zhang, X. The Safety and Efficacy of Applying a High-Current Temporal Interference Electrical Stimulation in Humans. Front. Hum. Neurosci. 2024, 18, 1484593.

- Lee, W.; Kim, H.-C.; Jung, Y.; Chung, Y.A.; Song, I.-U.; Lee, J.-H.; Yoo, S.-S. Transcranial Focused Ultrasound Stimulation of Human Primary Visual Cortex. Sci. Rep. 2016, 6, 34026.

- Lee, W.; Kim, S.; Kim, B.; Lee, C.; Chung, Y.A.; Kim, L.; Yoo, S.-S. Non-Invasive Transmission of Sensorimotor Information in Humans Using an EEG/Focused Ultrasound Brain-to-Brain Interface. PLoS ONE 2017, 12, e0178476.

- Sahel, J.-A.; Boulanger-Scemama, E.; Pagot, C.; Arleo, A.; Galluppi, F.; Martel, J.N.; Esposti, S.D.; Delaux, A.; de Saint Aubert, J.-B.; de Montleau, C.; et al. Partial Recovery of Visual Function in a Blind Patient after Optogenetic Therapy. Nat. Med. 2021, 27, 1223–1229.

- Song, D.; Hampson, R.E.; Robinson, B.S.; Marmarelis, V.Z.; Deadwyler, S.A.; Berger, T.W. Decoding Memory Features from Hippocampal Spiking Activities Using Sparse Classification Models. In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 1620–1623.

- Song, D.; Wang, H.; Tu, C.Y.; Marmarelis, V.Z.; Hampson, R.E.; Deadwyler, S.A.; Berger, T.W. Identification of Sparse Neural Functional Connectivity Using Penalized Likelihood Estimation and Basis Functions. J. Comput. Neurosci. 2013, 35, 335–357.

- Roeder, B.M.; She, X.; Dakos, A.S.; Moore, B.; Wicks, R.T.; Witcher, M.R.; Couture, D.E.; Laxton, A.W.; Clary, H.M.; Popli, G.; et al. Developing a Hippocampal Neural Prosthetic to Facilitate Human Memory Encoding and Recall of Stimulus Features and Categories. Front. Comput. Neurosci. 2024, 18, 1263311.

- Bozinovski, S.; Sestakov, M.; Bozinovska, L. Using EEG Alpha Rhythm to Control a Mobile Robot. In Proceedings of the Annual International Conference of the IEEE Engineering in Medicine and Biology Society, New Orleans, LA, USA, 4–7 November 1988; IEEE: Piscataway, NJ, USA; Volume 3, pp. 1515–1516.

- Arroyo, S.; Lesser, R.P.; Gordon, B.; Uematsu, S.; Jackson, D.; Webber, R. Functional Significance of the Mu Rhythm of Human Cortex: An Electrophysiologic Study with Subdural Electrodes. Electroencephalogr. Clin. Neurophysiol. 1993, 87, 76–87.

- Pfurtscheller, G.; Neuper, C. Motor Imagery Activates Primary Sensorimotor Area in Humans. Neurosci. Lett. 1997, 239, 65–68.

- Guger, C.; Ramoser, H.; Pfurtscheller, G. Real-Time EEG Analysis with Subject-Specific Spatial Patterns for a Brain-Computer Interface (BCI). IEEE Trans. Rehabil. Eng. 2000, 8, 447–456.

- Blankertz, B.; Dornhege, G.; Krauledat, M.; Muller, K.-R.; Kunzmann, V.; Losch, F.; Curio, G. The Berlin Brain-Computer Interface: EEG-Based Communication without Subject Training. IEEE Trans. Neural Syst. Rehabil. Eng. 2006, 14, 147–152.

- Blankertz, B.; Tangermann, M.; Vidaurre, C.; Fazli, S.; Sannelli, C.; Haufe, S.; Maeder, C.; Ramsey, L.; Sturm, I.; Curio, G.; et al. The Berlin Brain–Computer Interface: Non-Medical Uses of BCI Technology. Front. Neurosci. 2010, 4, 198.

- Choi, J.; Kim, K.T.; Jeong, J.H.; Kim, L.; Lee, S.J.; Kim, H. Developing a Motor Imagery-Based Real-Time Asynchronous Hybrid BCI Controller for a Lower-Limb Exoskeleton. Sensors 2020, 20, 7309.

- Zhang, J.; Xu, B.; Lou, X.; Wu, Y.; Shen, X. MI-Based BCI with Accurate Real-Time Three-Class Classification Processing and Light Control Application. Proc. Inst. Mech. Eng. Part H 2023, 237, 1017–1028.

- Ma, Z.-Z.; Wu, J.-J.; Cao, Z.; Hua, X.-Y.; Zheng, M.-X.; Xing, X.-X.; Ma, J.; Xu, J.-G. Motor Imagery-Based Brain–Computer Interface Rehabilitation Programs Enhance Upper Extremity Performance and Cortical Activation in Stroke Patients. J. Neuroeng. Rehabil. 2024, 21, 91.

- Kim, M.S.; Park, H.; Kwon, I.; An, K.-O.; Kim, H.; Park, G.; Hyung, W.; Im, C.-H.; Shin, J.-H. Efficacy of Brain-Computer Interface Training with Motor Imagery-Contingent Feedback in Improving Upper Limb Function and Neuroplasticity among Persons with Chronic Stroke: A Double-Blinded, Parallel-Group, Randomized Controlled Trial. J. Neuroeng. Rehabil. 2025, 22, 1.

- Russo, J.S.; Shiels, T.A.; Lin, C.-H.S.; John, S.E.; Grayden, D.B. Decoding Imagined Movement in People with Multiple Sclerosis for Brain–Computer Interface Translation. J. Neural Eng. 2025, 22, 016012.

- Sutton, S.; Braren, M.; Zubin, J.; John, E.R. Evoked-Potential Correlates of Stimulus Uncertainty. Science 1965, 150, 1187–1188.

- Bhandari, V.; Londhe, N.D.; Kshirsagar, G.B. A Systematic Review of Computational Intelligence Techniques for Channel Selection in P300-Based Brain Computer Interface Speller. Artif. Intell. Appl. 2024, 2, 155–164.

- Van Der Tweel, L.H.; Lunel, H.F. Human Visual Responses To Sinusoidally Modulated Light. Electroencephalogr. Clin. Neurophysiol. 1965, 18, 587–598.

- Regan, D. Some Characteristics of Average Steady-State and Transient Responses Evoked by Modulated Light. Electroencephalogr. Clin. Neurophysiol. 1966, 20, 238–248.

- Ji, D.; Xiao, X.; Wu, J.; He, X.; Zhang, G.; Guo, R.; Liu, M.; Xu, M.; Lin, Q.; Jung, T.-P.; et al. A User-Friendly Visual Brain-Computer Interface Based on High-Frequency Steady-State Visual Evoked Fields Recorded by OPM-MEG. J. Neural Eng. 2024, 21, 036024.

- Wang, Y.; Gao, X.; Hong, B.; Jia, C.; Gao, S. Brain-Computer Interfaces Based on Visual Evoked Potentials. IEEE Eng. Med. Biol. Mag. 2008, 27, 64–71.

- Zhang, Y.; Xu, P.; Liu, T.; Hu, J.; Zhang, R.; Yao, D. Multiple Frequencies Sequential Coding for SSVEP-Based Brain-Computer Interface. PLoS ONE 2012, 7, e29519.

- Allison, B.Z.; Brunner, C.; Altstätter, C.; Wagner, I.C.; Grissmann, S.; Neuper, C. A Hybrid ERD/SSVEP BCI for Continuous Simultaneous Two Dimensional Cursor Control. J. Neurosci. Methods 2012, 209, 299–307.

- Dreyer, A.M.; Herrmann, C.S.; Rieger, J.W. Tradeoff between User Experience and BCI Classification Accuracy with Frequency Modulated Steady-State Visual Evoked Potentials. Front. Hum. Neurosci. 2017, 11, 391.

- Chen, X.; Zhao, B.; Wang, Y.; Xu, S.; Gao, X. Control of a 7-DOF Robotic Arm System With an SSVEP-Based BCI. Int. J. Neural Syst. 2018, 28, 1850018.

- Maksimenko, V.A.; Hramov, A.E.; Frolov, N.S.; Lüttjohann, A.; Nedaivozov, V.O.; Grubov, V.V.; Runnova, A.E.; Makarov, V.V.; Kurths, J.; Pisarchik, A.N. Increasing Human Performance by Sharing Cognitive Load Using Brain-to-Brain Interface. Front. Neurosci. 2018, 12, 949.

- Dehais, F.; Ladouce, S.; Darmet, L.; Nong, T.-V.; Ferraro, G.; Torre Tresols, J.; Velut, S.; Labedan, P. Dual Passive Reactive Brain-Computer Interface: A Novel Approach to Human-Machine Symbiosis. Front. Neuroergon. 2022, 3, 824780.

- Mellinger, J.; Schalk, G.; Braun, C.; Preissl, H.; Rosenstiel, W.; Birbaumer, N.; Kübler, A. An MEG-Based Brain–Computer Interface (BCI). NeuroImage 2007, 36, 581–593.

- Hramov, A.; Pitsik, E.; Chholak, P.; Maksimenko, V.; Frolov, N.; Kurkin, S.; Pisarchik, A. A MEG Study of Different Motor Imagery Modes in Untrained Subjects for BCI Applications. In Proceedings of the 16th International Conference on Informatics in Control, Automation and Robotics, Prague, Czech Republic, 29–31 July 2019; SCITEPRESS—Science and Technology Publications: Setúbal, Portugal, 2019; pp. 188–195.

- Xu, H.; Gong, A.; Ding, P.; Luo, J.; Chen, C.; Fu, Y. Key Technologies for Intelligent Brain-Computer Interaction Based on Magnetoencephalography. Sheng Wu Yi Xue Gong Cheng Xue Za Zhi (J. Biomed. Eng.) 2022, 39, 198–206.

- Wittevrongel, B.; Holmes, N.; Boto, E.; Hill, R.; Rea, M.; Libert, A.; Khachatryan, E.; Van Hulle, M.M.; Bowtell, R.; Brookes, M.J. Practical Real-Time MEG-Based Neural Interfacing with Optically Pumped Magnetometers. BMC Biol. 2021, 19, 158.

- Brickwedde, M.; Anders, P.; Kühn, A.A.; Lofredi, R.; Holtkamp, M.; Kaindl, A.M.; Grent-‘t-Jong, T.; Krüger, P.; Sander, T.; Uhlhaas, P.J. Applications of OPM-MEG for Translational Neuroscience: A Perspective. Transl. Psychiatry 2024, 14, 341.

- Fedosov, N.; Medvedeva, D.; Shevtsov, O.; Ossadtchi, A. Low Count of Optically Pumped Magnetometers Furnishes a Reliable Real-Time Access to Sensorimotor Rhythm. arXiv 2024, arXiv:2412.18353.

- Mokienko, O.A.; Lyukmanov, R.K.; Bobrov, P.D.; Isaev, M.R.; Ikonnikova, E.S.; Cherkasova, A.N.; Suponeva, N.A.; Piradov, M.A. Brain-computer interfaces based on near-infrared spectroscopy and electroencephalography registration in post-stroke rehabilitation: A comparative study. Neurol. Neuropsychiatry Psychosom. 2024, 16, 17–23.

- Edelman, B.J.; Zhang, S.; Schalk, G.; Brunner, P.; Müller-Putz, G.; Guan, C.; He, B. Non-Invasive Brain-Computer Interfaces: State of the Art and Trends. IEEE Rev. Biomed. Eng. 2025, 18, 26–49.

- Reddy, G.S.R.; Proulx, M.J.; Hirshfield, L.; Ries, A.J. Towards an Eye-Brain-Computer Interface: Combining Gaze with the Stimulus-Preceding Negativity for Target Selections in XR. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; Association for Computing Machinery: New York, NY, USA, 2024.

- Charvet, G.; Foerster, M.; Chatalic, G.; Michea, A.; Porcherot, J.; Bonnet, S.; Filipe, S.; Audebert, P.; Robinet, S.; Josselin, V.; et al. A Wireless 64-Channel ECoG Recording Electronic for Implantable Monitoring and BCI Applications: WIMAGINE. In Proceedings of the 2012 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, San Diego, CA, USA, 28 August–1 September 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 783–786.

- Mestais, C.S.; Charvet, G.; Sauter-Starace, F.; Foerster, M.; Ratel, D.; Benabid, A.L. WIMAGINE: Wireless 64-Channel ECoG Recording Implant for Long Term Clinical Applications. IEEE Trans. Neural Syst. Rehabil. Eng. 2015, 23, 10–21.

- Sauter-Starace, F.; Ratel, D.; Cretallaz, C.; Foerster, M.; Lambert, A.; Gaude, C.; Costecalde, T.; Bonnet, S.; Charvet, G.; Aksenova, T.; et al. Long-Term Sheep Implantation of WIMAGINE®, a Wireless 64-Channel Electrocorticogram Recorder. Front. Neurosci. 2019, 13, 847.

- Bellicha, A.; Struber, L.; Pasteau, F.; Juillard, V.; Devigne, L.; Karakas, S.; Chabardès, S.; Babel, M.; Charvet, G. Depth-Sensor-Based Shared Control Assistance for Mobility and Object Manipulation: Toward Long-Term Home-Use of BCI-Controlled Assistive Robotic Devices. J. Neural Eng. 2025, 22, 016045.

- Liu, D.; Shan, Y.; Wei, P.; Li, W.; Xu, H.; Liang, F.; Liu, T.; Zhao, G.; Hong, B. Reclaiming Hand Functions after Complete Spinal Cord Injury with Epidural Brain-Computer Interface. medRxiv 2024.

- Luo, S.; Angrick, M.; Coogan, C.; Candrea, D.N.; Wyse-Sookoo, K.; Shah, S.; Rabbani, Q.; Milsap, G.W.; Weiss, A.R.; Anderson, W.S.; et al. Stable Decoding from a Speech BCI Enables Control for an Individual with ALS without Recalibration for 3 Months. Adv. Sci. 2023, 10, 2304853.

- Silva, A.B.; Liu, J.R.; Metzger, S.L.; Bhaya-Grossman, I.; Dougherty, M.E.; Seaton, M.P.; Littlejohn, K.T.; Tu-Chan, A.; Ganguly, K.; Moses, D.A.; et al. A Bilingual Speech Neuroprosthesis Driven by Cortical Articulatory Representations Shared between Languages. Nat. Biomed. Eng. 2024, 8, 977–991.

- Rapoport, B.; Hettick, M.; Ho, E.; Poole, A.; Mongue, M.; Papageorgiou, D.; LaMarca, M.; Trietsch, D.; Reed, K.; Murphy, M.; et al. High-Resolution Cortical Mapping with Conformable Microelectrodes on a Thousand-Electrode Scale: A First-in-Human Study (S23.008). Neurology 2024, 102, 6531.

- Konrad, P.E.; Gelman, K.R.; Lawrence, J.; Bhatia, S.; Dister, J.; Sharma, R.; Ho, E.; Byun, Y.W.; Mermel, C.H.; Rapoport, B.I. First-in-Human Experience Performing High-Resolution Cortical Mapping Using a Novel Microelectrode Array Containing 1,024 Electrodes. J. Neural Eng. 2025, 22, 026009.

- Oxley, T.J.; Yoo, P.E.; Rind, G.S.; Ronayne, S.M.; Lee, C.M.S.; Bird, C.; Hampshire, V.; Sharma, R.P.; Morokoff, A.; Williams, D.L.; et al. Motor Neuroprosthesis Implanted with Neurointerventional Surgery Improves Capacity for Activities of Daily Living Tasks in Severe Paralysis: First in-Human Experience. J. Neurointerv. Surg. 2021, 13, 102–108.

- Oxley, T. A 10-Year Journey towards Clinical Translation of an Implantable Endovascular BCI: A Keynote Lecture Given at the BCI Society Meeting in Brussels. J. Neural Eng. 2025, 22, 013001. [Google Scholar] [CrossRef]

- Collinger, J.L.; Wodlinger, B.; Downey, J.E.; Wang, W.; Tyler-Kabara, E.C.; Weber, D.J.; McMorland, A.J.C.; Velliste, M.; Boninger, M.L.; Schwartz, A.B. High-Performance Neuroprosthetic Control by an Individual with Tetraplegia. The Lancet 2013, 381, 557–564. [Google Scholar] [CrossRef]

- Rubin, D.B.; Ajiboye, A.B.; Barefoot, L.; Bowker, M.; Cash, S.S.; Chen, D.; Donoghue, J.P.; Eskandar, E.N.; Friehs, G.; Grant, C.; et al. Interim Safety Profile From the Feasibility Study of the BrainGate Neural Interface System. Neurology 2023, 100, e1177–e1192. [Google Scholar] [CrossRef]

- Willsey, M.S.; Shah, N.P.; Avansino, D.T.; Hahn, N.V.; Jamiolkowski, R.M.; Kamdar, F.B.; Hochberg, L.R.; Willett, F.R.; Henderson, J.M. A High-Performance Brain–Computer Interface for Finger Decoding and Quadcopter Game Control in an Individual with Paralysis. Nat. Med. 2025, 31, 96–104. [Google Scholar] [CrossRef] [PubMed]

- Musk, E.; Neuralink. An Integrated Brain-Machine Interface Platform With Thousands of Channels. J. Med. Internet Res. 2019, 21, e16194. [Google Scholar] [CrossRef]

- Parikh, P.M.; Venniyoor, A. Neuralink and Brain–Computer Interface—Exciting Times for Artificial Intelligence. S. Asian J. Cancer 2024, 13, 63–65. [Google Scholar] [CrossRef]

- Mokienko, O.A. Brain–Computer Interfaces with Intracortical Implants for Motor and Communication Functions Compensation: Review of Recent Developments. Sovrem. Tehnol. V Med. 2024, 16, 78. [Google Scholar] [CrossRef]

- Waisberg, E.; Ong, J.; Lee, A.G. Ethical Considerations of Neuralink and Brain-Computer Interfaces. Ann. Biomed. Eng. 2024, 52, 1937–1939. [Google Scholar] [CrossRef]

- Lee, L. Imaging the Effects of 1 Hz Repetitive Transcranial Magnetic Stimulation During Motor Behaviour. Ph.D. Thesis, University College London, London, UK, 2004. [Google Scholar]

- Lefaucheur, J.-P.; Aleman, A.; Baeken, C.; Benninger, D.H.; Brunelin, J.; Di Lazzaro, V.; Filipović, S.R.; Grefkes, C.; Hasan, A.; Hummel, F.C.; et al. Evidence-Based Guidelines on the Therapeutic Use of Repetitive Transcranial Magnetic Stimulation (rTMS): An Update (2014–2018). Clin. Neurophysiol. 2020, 131, 474–528. [Google Scholar] [CrossRef] [PubMed]

- Hsu, G.; Shereen, A.D.; Cohen, L.G.; Parra, L.C. Robust Enhancement of Motor Sequence Learning with 4 mA Transcranial Electric Stimulation. Brain Stimulat. 2023, 16, 56–67. [Google Scholar] [CrossRef] [PubMed]

- Legon, W.; Sato, T.F.; Opitz, A.; Mueller, J.; Barbour, A.; Williams, A.; Tyler, W.J. Transcranial Focused Ultrasound Modulates the Activity of Primary Somatosensory Cortex in Humans. Nat. Neurosci. 2014, 17, 322–329. [Google Scholar] [CrossRef] [PubMed]

- Lee, W.; Kim, H.; Jung, Y.; Song, I.-U.; Chung, Y.A.; Yoo, S.-S. Image-Guided Transcranial Focused Ultrasound Stimulates Human Primary Somatosensory Cortex. Sci. Rep. 2015, 5, 8743. [Google Scholar] [CrossRef]

- Kosnoff, J.; Yu, K.; Liu, C.; He, B. Transcranial Focused Ultrasound to V5 Enhances Human Visual Motion Brain-Computer Interface by Modulating Feature-Based Attention. Nat. Commun. 2024, 15, 4382. [Google Scholar] [CrossRef]

- Soghoyan, G.; Biktimirov, A.; Matvienko, Y.; Chekh, I.; Sintsov, M.; Lebedev, M.A. Peripheral Nerve Stimulation Enables Somatosensory Feedback While Suppressing Phantom Limb Pain in Transradial Amputees. Brain Stimulat. 2023, 16, 756–758. [Google Scholar] [CrossRef]

- Valle, G.; Katic Secerovic, N.; Eggemann, D.; Gorskii, O.; Pavlova, N.; Petrini, F.M.; Cvancara, P.; Stieglitz, T.; Musienko, P.; Bumbasirevic, M.; et al. Biomimetic Computer-to-Brain Communication Enhancing Naturalistic Touch Sensations via Peripheral Nerve Stimulation. Nat. Commun. 2024, 15, 1151. [Google Scholar] [CrossRef]

- Várkuti, B.; Halász, L.; Hagh Gooie, S.; Miklós, G.; Smits Serena, R.; Van Elswijk, G.; McIntyre, C.C.; Lempka, S.F.; Lozano, A.M.; Erőss, L. Conversion of a Medical Implant into a Versatile Computer-Brain Interface. Brain Stimulat. 2024, 17, 39–48. [Google Scholar] [CrossRef]

- Bach-y-Rita, P.; Kercel, S.W. Sensory Substitution and the Human–Machine Interface. Trends Cogn. Sci. 2003, 7, 541–546. [Google Scholar] [CrossRef]

- Novich, S.D.; Eagleman, D.M. Using Space and Time to Encode Vibrotactile Information: Toward an Estimate of the Skin’s Achievable Throughput. Exp. Brain Res. 2015, 233, 2777–2788. [Google Scholar] [CrossRef]

- Zou, X.; Chen, B.; Li, Y. Research Status and Progress of Bilateral Cochlear Implantation. J. Clin. Otorhinolaryngol. Head Neck Surg. (Lin Chuang Er Bi Yan Hou Tou Jing Wai Ke Za Zhi) 2024, 38, 666–670. [Google Scholar]

- Vesper, E.O.; Sun, R.; Della Santina, C.C.; Schoo, D.P. Vestibular Implantation. Curr. Otorhinolaryngol. Rep. 2024, 12, 50–60. [Google Scholar] [CrossRef]

- Fernández, E.; Alfaro, A.; Soto-Sánchez, C.; Gonzalez-Lopez, P.; Lozano, A.M.; Peña, S.; Grima, M.D.; Rodil, A.; Gómez, B.; Chen, X.; et al. Visual Percepts Evoked with an Intracortical 96-Channel Microelectrode Array Inserted in Human Occipital Cortex. J. Clin. Investig. 2021, 131, e151331. [Google Scholar] [CrossRef]

- Muqit, M.M.K.; Le Mer, Y.; Olmos de Koo, L.; Holz, F.G.; Sahel, J.A.; Palanker, D. Prosthetic Visual Acuity with the PRIMA Subretinal Microchip in Patients with Atrophic Age-Related Macular Degeneration at 4 Years Follow-Up. Ophthalmol. Sci. 2024, 4, 100510. [Google Scholar] [CrossRef]

- Shelchkova, N.D.; Valle, G.; Hobbs, T.G.; Verbaarschot, C.; Downey, J.E.; Gaunt, R.A.; Bensmaia, S.J.; Greenspon, C.M. Multi-Electrode ICMS Enables Dexterous Use of Bionic Hands. In Brain-Computer Interface Research: A State-of-the-Art Summary 12; Guger, C., Azorin, J., Korostenskaja, M., Allison, B., Eds.; Springer Nature: Cham, Switzerland, 2025; pp. 29–37. ISBN 978-3-031-80497-7. [Google Scholar]

- Greenspon, C.M.; Valle, G.; Shelchkova, N.D.; Hobbs, T.G.; Verbaarschot, C.; Callier, T.; Berger-Wolf, E.I.; Okorokova, E.V.; Hutchison, B.C.; Dogruoz, E.; et al. Evoking Stable and Precise Tactile Sensations via Multi-Electrode Intracortical Microstimulation of the Somatosensory Cortex. Nat. Biomed. Eng. 2024, 9, 935–951. [Google Scholar] [CrossRef] [PubMed]

- Jiang, L.; Stocco, A.; Losey, D.M.; Abernethy, J.A.; Prat, C.S.; Rao, R.P.N. BrainNet: A Multi-Person Brain-to-Brain Interface for Direct Collaboration Between Brains. Sci. Rep. 2019, 9, 6115. [Google Scholar] [CrossRef] [PubMed]

- Ye, Y.; Wang, Z.; Tian, Y.; Zhou, T.; Zhou, Z.; Wei, X.; Tao, T.H.; Sun, L. A Brain-to-Brain Interface with a Flexible Neural Probe for Mouse Turning Control by Human Mind. IEEJ Trans. Electr. Electron. Eng. 2024, 19, 819–823. [Google Scholar] [CrossRef]

- Hampson, R.E.; Song, D.; Robinson, B.S.; Fetterhoff, D.; Dakos, A.S.; Roeder, B.M.; She, X.; Wicks, R.T.; Witcher, M.R.; Couture, D.E.; et al. Developing a Hippocampal Neural Prosthetic to Facilitate Human Memory Encoding and Recall. J. Neural Eng. 2018, 15, 036014. [Google Scholar] [CrossRef] [PubMed]

- Hildt, E. Multi-Person Brain-To-Brain Interfaces: Ethical Issues. Front. Neurosci. 2019, 13, 1177. [Google Scholar] [CrossRef] [PubMed]

- Vivancos, D.; Cuesta, F. MindBigData 2022: A Large Dataset of Brain Signals. arXiv 2022, arXiv:2212.14746. [Google Scholar]

- Liu, B.; Huang, X.; Wang, Y.; Chen, X.; Gao, X. BETA: A Large Benchmark Database Toward SSVEP-BCI Application. Front. Neurosci. 2020, 14, 627. [Google Scholar] [CrossRef]

- Rathee, D.; Raza, H.; Roy, S.; Prasad, G. A Magnetoencephalography Dataset for Motor and Cognitive Imagery-Based Brain-Computer Interface. Sci. Data 2021, 8, 120. [Google Scholar] [CrossRef] [PubMed]

- Subash, P.; Gray, A.; Boswell, M.; Cohen, S.L.; Garner, R.; Salehi, S.; Fisher, C.; Hobel, S.; Ghosh, S.; Halchenko, Y.; et al. A Comparison of Neuroelectrophysiology Databases. Sci. Data 2023, 10, 719. [Google Scholar] [CrossRef]

- Wahid, M.F.; Tafreshi, R. Improved Motor Imagery Classification Using Regularized Common Spatial Pattern with Majority Voting Strategy. IFAC-PapersOnLine 2021, 54, 226–231. [Google Scholar] [CrossRef]

- Al-Qazzaz, N.K.; Aldoori, A.A.; Ali, S.H.B.M.; Ahmad, S.A.; Mohammed, A.K.; Mohyee, M.I. EEG Signal Complexity Measurements to Enhance BCI-Based Stroke Patients’ Rehabilitation. Sensors 2023, 23, 3889. [Google Scholar] [CrossRef]

- Batistić, L.; Sušanj, D.; Pinčić, D.; Ljubic, S. Motor Imagery Classification Based on EEG Sensing with Visual and Vibrotactile Guidance. Sensors 2023, 23, 5064. [Google Scholar] [CrossRef]

- Hashem, H.A.; Abdulazeem, Y.; Labib, L.M.; Elhosseini, M.A.; Shehata, M. An Integrated Machine Learning-Based Brain Computer Interface to Classify Diverse Limb Motor Tasks: Explainable Model. Sensors 2023, 23, 3171. [Google Scholar] [CrossRef] [PubMed]

- Degirmenci, M.; Yuce, Y.K.; Perc, M.; Isler, Y. EEG Channel and Feature Investigation in Binary and Multiple Motor Imagery Task Predictions. Front. Hum. Neurosci. 2024, 18, 1525139. [Google Scholar] [CrossRef]

- Kabir, M.H.; Akhtar, N.I.; Tasnim, N.; Miah, A.S.M.; Lee, H.-S.; Jang, S.-W.; Shin, J. Exploring Feature Selection and Classification Techniques to Improve the Performance of an Electroencephalography-Based Motor Imagery Brain–Computer Interface System. Sensors 2024, 24, 4989. [Google Scholar] [CrossRef]

- Lakshminarayanan, K.; Ramu, V.; Shah, R.; Haque Sunny, M.S.; Madathil, D.; Brahmi, B.; Wang, I.; Fareh, R.; Rahman, M.H. Developing a Tablet-Based Brain-Computer Interface and Robotic Prototype for Upper Limb Rehabilitation. PeerJ Comput. Sci. 2024, 10, e2174. [Google Scholar] [CrossRef]

- Miladinović, A.; Accardo, A.; Jarmolowska, J.; Marusic, U.; Ajčević, M. Optimizing Real-Time MI-BCI Performance in Post-Stroke Patients: Impact of Time Window Duration on Classification Accuracy and Responsiveness. Sensors 2024, 24, 6125. [Google Scholar] [CrossRef]

- Zhong, Y.; Yao, L.; Pan, G.; Wang, Y. Cross-Subject Motor Imagery Decoding by Transfer Learning of Tactile ERD. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 662–671. [Google Scholar] [CrossRef]

- Gulyás, D.; Jochumsen, M. Detection of Movement-Related Brain Activity Associated with Hand and Tongue Movements from Single-Trial Around-Ear EEG. Sensors 2024, 24, 6004. [Google Scholar] [CrossRef]

- Guerrero-Mendez, C.D.; Blanco-Diaz, C.F.; Rivera-Flor, H.; Fabriz-Ulhoa, P.H.; Fragoso-Dias, E.A.; de Andrade, R.M.; Delisle-Rodriguez, D.; Bastos-Filho, T.F. Influence of Temporal and Frequency Selective Patterns Combined with CSP Layers on Performance in Exoskeleton-Assisted Motor Imagery Tasks. NeuroSci 2024, 5, 169–183. [Google Scholar] [CrossRef]

- Kojima, S.; Kanoh, S. Four-Class ASME BCI: Investigation of the Feasibility and Comparison of Two Strategies for Multiclassing. Front. Hum. Neurosci. 2024, 18, 1461960. [Google Scholar] [CrossRef] [PubMed]

- Suresh, R.E.; Zobaer, M.S.; Triano, M.J.; Saway, B.F.; Grewal, P.; Rowland, N.C. Exploring Machine Learning Classification of Movement Phases in Hemiparetic Stroke Patients: A Controlled EEG-tDCS Study. Brain Sci. 2024, 15, 28. [Google Scholar] [CrossRef] [PubMed]

- Liu, Y.; Yu, S.; Li, J.; Ma, J.; Wang, F.; Sun, S.; Yao, D.; Xu, P.; Zhang, T. Brain State and Dynamic Transition Patterns of Motor Imagery Revealed by the Bayes Hidden Markov Model. Cogn. Neurodyn. 2024, 18, 2455–2470. [Google Scholar] [CrossRef] [PubMed]

- Martinez-Peon, D.; Garcia-Hernandez, N.V.; Benavides-Bravo, F.G.; Parra-Vega, V. Characterization and Classification of Kinesthetic Motor Imagery Levels. J. Neural Eng. 2024, 21, 046024. [Google Scholar] [CrossRef]

- Mebarkia, K.; Reffad, A. Multi Optimized SVM Classifiers for Motor Imagery Left and Right Hand Movement Identification. Australas. Phys. Eng. Sci. Med. 2019, 42, 949–958. [Google Scholar] [CrossRef]

- Novičić, M.; Djordjević, O.; Miler-Jerković, V.; Konstantinović, L.; Savić, A.M. Improving the Performance of Electrotactile Brain–Computer Interface Using Machine Learning Methods on Multi-Channel Features of Somatosensory Event-Related Potentials. Sensors 2024, 24, 8048. [Google Scholar] [CrossRef]

- Antony, M.J.; Sankaralingam, B.P.; Mahendran, R.K.; Gardezi, A.A.; Shafiq, M.; Choi, J.-G.; Hamam, H. Classification of EEG Using Adaptive SVM Classifier with CSP and Online Recursive Independent Component Analysis. Sensors 2022, 22, 7596. [Google Scholar] [CrossRef]

- De Brito Guerra, T.C.; Nóbrega, T.; Morya, E.; Martins, A.d.M.; de Sousa, V.A. Electroencephalography Signal Analysis for Human Activities Classification: A Solution Based on Machine Learning and Motor Imagery. Sensors 2023, 23, 4277. [Google Scholar] [CrossRef] [PubMed]

- Zhang, J.; Yang, X.; Liang, Z.; Lou, H.; Cui, T.; Shen, C.; Gao, Z. A Brain–Computer Interface System for Lower-Limb Exoskeletons Based on Motor Imagery and Stacked Ensemble Approach. Rev. Sci. Instrum. 2025, 96, 015114. [Google Scholar] [CrossRef] [PubMed]

- Soangra, R.; Smith, J.A.; Rajagopal, S.; Yedavalli, S.V.R.; Anirudh, E.R. Classifying Unstable and Stable Walking Patterns Using Electroencephalography Signals and Machine Learning Algorithms. Sensors 2023, 23, 6005. [Google Scholar] [CrossRef] [PubMed]

- Akram, F.; Alwakeel, A.; Alwakeel, M.; Hijji, M.; Masud, U. A Symbols Based BCI Paradigm for Intelligent Home Control Using P300 Event-Related Potentials. Sensors 2022, 22, 10000. [Google Scholar] [CrossRef]

- Ravi, A.; Beni, N.H.; Manuel, J.; Jiang, N. Comparing User-Dependent and User-Independent Training of CNN for SSVEP BCI. J. Neural Eng. 2020, 17, 026028. [Google Scholar] [CrossRef]

- Zhao, X.; Du, Y.; Zhang, R. A CNN-Based Multi-Target Fast Classification Method for AR-SSVEP. Comput. Biol. Med. 2022, 141, 105042. [Google Scholar] [CrossRef]

- Bhuvaneshwari, M.; Grace Mary Kanaga, E.; George, S.T. Classification of SSVEP-EEG Signals Using CNN and Red Fox Optimization for BCI Applications. Proc. Inst. Mech. Eng. Part H 2023, 237, 134–143. [Google Scholar] [CrossRef]

- Xu, D.; Tang, F.; Li, Y.; Zhang, Q.; Feng, X. FB-CCNN: A Filter Bank Complex Spectrum Convolutional Neural Network with Artificial Gradient Descent Optimization. Brain Sci. 2023, 13, 780. [Google Scholar] [CrossRef]

- Li, X.; Yang, S.; Fei, N.; Wang, J.; Huang, W.; Hu, Y. A Convolutional Neural Network for SSVEP Identification by Using a Few-Channel EEG. Bioengineering 2024, 11, 613. [Google Scholar] [CrossRef]

- Li, M.; Han, J.; Yang, J. Automatic Feature Extraction and Fusion Recognition of Motor Imagery EEG Using Multilevel Multiscale CNN. Med. Biol. Eng. Comput. 2021, 59, 2037–2050. [Google Scholar] [CrossRef] [PubMed]

- Salimpour, S.; Kalbkhani, H.; Seyyedi, S.; Solouk, V. Stockwell Transform and Semi-Supervised Feature Selection from Deep Features for Classification of BCI Signals. Sci. Rep. 2022, 12, 11773. [Google Scholar] [CrossRef]

- Yang, J.; Gao, S.; Shen, T. A Two-Branch CNN Fusing Temporal and Frequency Features for Motor Imagery EEG Decoding. Entropy 2022, 24, 376. [Google Scholar] [CrossRef]

- Fan, Z.; Xi, X.; Gao, Y.; Wang, T.; Fang, F.; Houston, M.; Zhang, Y.; Li, L.; Lü, Z. Joint Filter-Band-Combination and Multi-View CNN for Electroencephalogram Decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 2101–2110. [Google Scholar] [CrossRef] [PubMed]

- Lun, X.; Zhang, Y.; Zhu, M.; Lian, Y.; Hou, Y. A Combined Virtual Electrode-Based ESA and CNN Method for MI-EEG Signal Feature Extraction and Classification. Sensors 2023, 23, 8893. [Google Scholar] [CrossRef]

- Chen, G.; Zhang, X.; Zhang, J.; Li, F.; Duan, S. A Novel Brain-Computer Interface Based on Audio-Assisted Visual Evoked EEG and Spatial-Temporal Attention CNN. Front. Neurorobot. 2022, 16, 995552. [Google Scholar] [CrossRef] [PubMed]

- Ma, T.; Wang, S.; Xia, Y.; Zhu, X.; Evans, J.; Sun, Y.; He, S. CNN-Based Classification of fNIRS Signals in Motor Imagery BCI System. J. Neural Eng. 2021, 18, 056019. [Google Scholar] [CrossRef]

- Dale, R.; O’Sullivan, T.D.; Howard, S.; Orihuela-Espina, F.; Dehghani, H. System Derived Spatial-Temporal CNN for High-Density fNIRS BCI. IEEE Open J. Eng. Med. Biol. 2023, 4, 85–95. [Google Scholar] [CrossRef] [PubMed]

- Hamid, H.; Naseer, N.; Nazeer, H.; Khan, M.J.; Khan, R.A.; Shahbaz Khan, U. Analyzing Classification Performance of fNIRS-BCI for Gait Rehabilitation Using Deep Neural Networks. Sensors 2022, 22, 1932. [Google Scholar] [CrossRef]

- Lawhern, V.J.; Solon, A.J.; Waytowich, N.R.; Gordon, S.M.; Hung, C.P.; Lance, B.J. EEGNet: A Compact Convolutional Neural Network for EEG-Based Brain–Computer Interfaces. J. Neural Eng. 2018, 15, 056013. [Google Scholar] [CrossRef] [PubMed]

- Mwata-Velu, T.; Niyonsaba-Sebigunda, E.; Avina-Cervantes, J.G.; Ruiz-Pinales, J.; Velu-A-Gulenga, N.; Alonso-Ramírez, A.A. Motor Imagery Multi-Tasks Classification for BCIs Using the NVIDIA Jetson TX2 Board and the EEGNet Network. Sensors 2023, 23, 4164. [Google Scholar] [CrossRef] [PubMed]

- Rao, Y.; Zhang, L.; Jing, R.; Huo, J.; Yan, K.; He, J.; Hou, X.; Mu, J.; Geng, W.; Cui, H.; et al. An Optimized EEGNet Decoder for Decoding Motor Image of Four Class Fingers Flexion. Brain Res. 2024, 1841, 149085. [Google Scholar] [CrossRef]

- Wang, H.; Wang, Z.; Sun, Y.; Yuan, Z.; Xu, T.; Li, J. A Cascade xDAWN EEGNet Structure for Unified Visual-Evoked Related Potential Detection. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 2270–2280. [Google Scholar] [CrossRef]

- Yao, H.; Liu, K.; Deng, X.; Tang, X.; Yu, H. FB-EEGNet: A Fusion Neural Network across Multi-Stimulus for SSVEP Target Detection. J. Neurosci. Methods 2022, 379, 109674. [Google Scholar] [CrossRef]

- Riyad, M.; Khalil, M.; Adib, A. MI-EEGNET: A Novel Convolutional Neural Network for Motor Imagery Classification. J. Neurosci. Methods 2021, 353, 109037. [Google Scholar] [CrossRef]

- Park, D.; Park, H.; Kim, S.; Choo, S.; Lee, S.; Nam, C.S.; Jung, J.-Y. Spatio-Temporal Explanation of 3D-EEGNet for Motor Imagery EEG Classification Using Permutation and Saliency. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 4504–4513. [Google Scholar] [CrossRef]

- Wu, X.; Chu, Y.; Li, Q.; Luo, Y.; Zhao, Y.; Zhao, X. AMEEGNet: Attention-Based Multiscale EEGNet for Effective Motor Imagery EEG Decoding. Front. Neurorobot. 2025, 19, 1540033. [Google Scholar] [CrossRef]

- Wu, X.; Shi, C.; Yan, L. Driving Attention State Detection Based on GRU-EEGNet. Sensors 2024, 24, 5086. [Google Scholar] [CrossRef]

- Shi, R.; Zhao, Y.; Cao, Z.; Liu, C.; Kang, Y.; Zhang, J. Categorizing Objects from MEG Signals Using EEGNet. Cogn. Neurodyn. 2022, 16, 365–377. [Google Scholar] [CrossRef]

- Li, H.; Yin, F.; Zhang, R.; Ma, X.; Chen, H. Motor Imagery Electroencephalogram Classification Based on Sparse Spatiotemporal Decomposition and Channel Attention. J. Biomed. Eng. (Sheng Wu Yi Xue Gong Cheng Xue Za Zhi) 2022, 39, 488–497. [Google Scholar] [CrossRef]

- Chunduri, V.; Aoudni, Y.; Khan, S.; Aziz, A.; Rizwan, A.; Deb, N.; Keshta, I.; Soni, M. Multi-Scale Spatiotemporal Attention Network for Neuron Based Motor Imagery EEG Classification. J. Neurosci. Methods 2024, 406, 110128. [Google Scholar] [CrossRef] [PubMed]

- Liang, G.; Cao, D.; Wang, J.; Zhang, Z.; Wu, Y. EISATC-Fusion: Inception Self-Attention Temporal Convolutional Network Fusion for Motor Imagery EEG Decoding. IEEE Trans. Neural Syst. Rehabil. Eng. 2024, 32, 1535–1545. [Google Scholar] [CrossRef]

- Qin, Y.; Li, B.; Wang, W.; Shi, X.; Wang, H.; Wang, X. ETCNet: An EEG-Based Motor Imagery Classification Model Combining Efficient Channel Attention and Temporal Convolutional Network. Brain Res. 2024, 1823, 148673. [Google Scholar] [CrossRef] [PubMed]

- Xie, X.; Chen, L.; Qin, S.; Zha, F.; Fan, X. Bidirectional Feature Pyramid Attention-Based Temporal Convolutional Network Model for Motor Imagery Electroencephalogram Classification. Front. Neurorobot. 2024, 18, 1343249. [Google Scholar] [CrossRef]

- AL-Quraishi, M.S.; Tan, W.H.; Elamvazuthi, I.; Ooi, C.P.; Saad, N.M.; Al-Hiyali, M.I.; Karim, H.A.; Azhar Ali, S.S. Cortical Signals Analysis to Recognize Intralimb Mobility Using Modified RNN and Various EEG Quantities. Heliyon 2024, 10, e30406. [Google Scholar] [CrossRef] [PubMed]

- Abdulghani, M.M.; Walters, W.L.; Abed, K.H. Imagined Speech Classification Using EEG and Deep Learning. Bioengineering 2023, 10, 649. [Google Scholar] [CrossRef]

- Ma, X.; Qiu, S.; Du, C.; Xing, J.; He, H. Improving EEG-Based Motor Imagery Classification via Spatial and Temporal Recurrent Neural Networks. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1903–1906. [Google Scholar] [CrossRef]

- Akhter, J.; Naseer, N.; Nazeer, H.; Khan, H.; Mirtaheri, P. Enhancing Classification Accuracy with Integrated Contextual Gate Network: Deep Learning Approach for Functional Near-Infrared Spectroscopy Brain-Computer Interface Application. Sensors 2024, 24, 3040. [Google Scholar] [CrossRef] [PubMed]

- Shelishiyah, R.; Thiyam, D.B.; Margaret, M.J.; Banu, N.M.M. A Hybrid CNN Model for Classification of Motor Tasks Obtained from Hybrid BCI System. Sci. Rep. 2025, 15, 1360. [Google Scholar] [CrossRef]

- Tanaka, G.; Yamane, T.; Héroux, J.B.; Nakane, R.; Kanazawa, N.; Takeda, S.; Numata, H.; Nakano, D.; Hirose, A. Recent Advances in Physical Reservoir Computing: A Review. Neural Netw. 2019, 115, 100–123. [Google Scholar] [CrossRef] [PubMed]

- Rusev, G.; Yordanov, S.; Nedelcheva, S.; Banderov, A.; Sauter-Starace, F.; Koprinkova-Hristova, P.; Kasabov, N. Decoding Brain Signals in a Neuromorphic Framework for a Personalized Adaptive Control of Human Prosthetics. Biomimetics 2025, 10, 183. [Google Scholar] [CrossRef]

- Braun, J.-M.; Fauth, M.; Berger, M.; Huang, N.-S.; Simeoni, E.; Gaeta, E.; Rodrigues Do Carmo, R.; García-Betances, R.I.; Arredondo Waldmeyer, M.T.; Gail, A.; et al. A Brain Machine Interface Framework for Exploring Proactive Control of Smart Environments. Sci. Rep. 2024, 14, 11054. [Google Scholar] [CrossRef]

- Aberra, A.S.; Peterchev, A.V.; Grill, W.M. Biophysically Realistic Neuron Models for Simulation of Cortical Stimulation. J. Neural Eng. 2018, 15, 066023. [Google Scholar] [CrossRef]

- Hines, M.L.; Carnevale, N.T. The NEURON Simulation Environment. Neural Comput. 1997, 9, 1179–1209. [Google Scholar] [CrossRef]

- Luff, C.E.; Dzialecka, P.; Acerbo, E.; Williamson, A.; Grossman, N. Pulse-Width Modulated Temporal Interference (PWM-TI) Brain Stimulation. Brain Stimulat. 2024, 17, 92–103. [Google Scholar] [CrossRef]

- Wenger, N.; Moraud, E.M.; Gandar, J.; Musienko, P.; Capogrosso, M.; Baud, L.; Le Goff, C.G.; Barraud, Q.; Pavlova, N.; Dominici, N.; et al. Spatiotemporal Neuromodulation Therapies Engaging Muscle Synergies Improve Motor Control after Spinal Cord Injury. Nat. Med. 2016, 22, 138–145. [Google Scholar] [CrossRef] [PubMed]

- Capogrosso, M.; Wagner, F.B.; Gandar, J.; Moraud, E.M.; Wenger, N.; Milekovic, T.; Shkorbatova, P.; Pavlova, N.; Musienko, P.; Bezard, E.; et al. Configuration of Electrical Spinal Cord Stimulation through Real-Time Processing of Gait Kinematics. Nat. Protoc. 2018, 13, 2031–2061. [Google Scholar] [CrossRef]

- Westwick, D.T.; Kearney, R.E. Identification of Nonlinear Physiological Systems; John Wiley & Sons: Hoboken, NJ, USA, 2003; ISBN 978-0-471-27456-8. [Google Scholar]

- Wagner, T.; Valero-Cabre, A.; Pascual-Leone, A. Noninvasive Human Brain Stimulation. Annu. Rev. Biomed. Eng. 2007, 9, 527–565. [Google Scholar] [CrossRef] [PubMed]

- Traub, R.D.; Wong, R.K.; Miles, R.; Michelson, H. A Model of a CA3 Hippocampal Pyramidal Neuron Incorporating Voltage-Clamp Data on Intrinsic Conductances. J. Neurophysiol. 1991, 66, 635–650. [Google Scholar] [CrossRef]

- Izhikevich, E.M. Simple Model of Spiking Neurons. IEEE Trans. Neural Netw. 2003, 14, 1569–1572. [Google Scholar] [CrossRef]

- Gerstner, W.; Kistler, W.M.; Naud, R.; Paninski, L. Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition, 1st ed.; Cambridge University Press: Cambridge, UK, 2014; ISBN 978-1-107-06083-8. [Google Scholar]

- Davies, M.; Srinivasa, N.; Lin, T.-H.; Chinya, G.; Cao, Y.; Choday, S.H.; Dimou, G.; Joshi, P.; Imam, N.; Jain, S.; et al. Loihi: A Neuromorphic Manycore Processor with On-Chip Learning. IEEE Micro 2018, 38, 82–99. [Google Scholar] [CrossRef]

- Paknahad, J.; Loizos, K.; Humayun, M.; Lazzi, G. Targeted Stimulation of Retinal Ganglion Cells in Epiretinal Prostheses: A Multiscale Computational Study. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 2548–2556. [Google Scholar] [CrossRef]

- Grossman, N.; Bono, D.; Dedic, N.; Kodandaramaiah, S.B.; Rudenko, A.; Suk, H.-J.; Cassara, A.M.; Neufeld, E.; Kuster, N.; Tsai, L.-H.; et al. Noninvasive Deep Brain Stimulation via Temporally Interfering Electric Fields. Cell 2017, 169, 1029–1041.e16. [Google Scholar] [CrossRef]

- Wu, C.-W.; Lin, B.-S.; Zhang, Z.; Hsieh, T.-H.; Liou, J.-C.; Lo, W.-L.; Li, Y.-T.; Chiu, S.-C.; Peng, C.-W. Pilot Study of Using Transcranial Temporal Interfering Theta-Burst Stimulation for Modulating Motor Excitability in Rat. J. NeuroEng. Rehabil. 2024, 21, 147. [Google Scholar] [CrossRef]

- Rampersad, S.; Roig-Solvas, B.; Yarossi, M.; Kulkarni, P.P.; Santarnecchi, E.; Dorval, A.D.; Brooks, D.H. Prospects for Transcranial Temporal Interference Stimulation in Humans: A Computational Study. NeuroImage 2019, 202, 116124. [Google Scholar] [CrossRef] [PubMed]

- Caldas-Martinez, S.; Goswami, C.; Forssell, M.; Cao, J.; Barth, A.L.; Grover, P. Cell-Specific Effects of Temporal Interference Stimulation on Cortical Function. Commun. Biol. 2024, 7, 1076. [Google Scholar] [CrossRef]

- Shahdoost, S.; Frost, S.B.; Guggenmos, D.J.; Borrell, J.A.; Dunham, C.; Barbay, S.; Nudo, R.J.; Mohseni, P. A Brain-Spinal Interface (BSI) System-on-Chip (SoC) for Closed-Loop Cortically-Controlled Intraspinal Microstimulation. Analog Integr. Circuits Signal Process. 2018, 95, 1–16. [Google Scholar] [CrossRef] [PubMed]

- Capogrosso, M.; Wenger, N.; Raspopovic, S.; Musienko, P.; Beauparlant, J.; Bassi Luciani, L.; Courtine, G.; Micera, S. A Computational Model for Epidural Electrical Stimulation of Spinal Sensorimotor Circuits. J. Neurosci. 2013, 33, 19326–19340. [Google Scholar] [CrossRef] [PubMed]

- Song, D.; Chan, R.H.M.; Marmarelis, V.Z.; Hampson, R.E.; Deadwyler, S.A.; Berger, T.W. Nonlinear Dynamic Modeling of Spike Train Transformations for Hippocampal-Cortical Prostheses. IEEE Trans. Biomed. Eng. 2007, 54, 1053–1066. [Google Scholar] [CrossRef] [PubMed]

- Peterson, W.; Birdsall, T.; Fox, W. The Theory of Signal Detectability. Trans. IRE Prof. Group Inf. Theory 1954, 4, 171–212. [Google Scholar]

- Green, D.M.; Swets, J.A. Signal Detection Theory and Psychophysics; Wiley: New York, NY, USA, 1966; Volume 1. [Google Scholar]

- Lopes-Dias, C.; Sburlea, A.I.; Müller-Putz, G.R. Online Asynchronous Decoding of Error-Related Potentials during the Continuous Control of a Robot. Sci. Rep. 2019, 9, 17596. [Google Scholar] [CrossRef]

- Soriano-Segura, P.; Ortiz, M.; Iáñez, E.; Azorín, J.M. Design of a Brain-Machine Interface for Reducing False Activations of a Lower-Limb Exoskeleton Based on Error Related Potential. Comput. Methods Programs Biomed. 2024, 255, 108332. [Google Scholar] [CrossRef]

- Hand, D.J.; Till, R.J. A Simple Generalisation of the Area Under the ROC Curve for Multiple Class Classification Problems. Mach. Learn. 2001, 45, 171–186. [Google Scholar] [CrossRef]

- McFarland, D.J.; Sarnacki, W.A.; Wolpaw, J.R. Brain–Computer Interface (BCI) Operation: Optimizing Information Transfer Rates. Biol. Psychol. 2003, 63, 237–251. [Google Scholar] [CrossRef]

- Schlögl, A.; Keinrath, C.; Scherer, R.; Pfurtscheller, G. Information Transfer of an EEG-Based Brain Computer Interface. In Proceedings of the First International IEEE EMBS Conference on Neural Engineering, Capri Island, Italy, 20–22 March 2003; IEEE: Piscataway, NJ, USA, 2003; pp. 641–644. [Google Scholar]

- Wolpaw, J.R.; Birbaumer, N.; McFarland, D.J.; Pfurtscheller, G.; Vaughan, T.M. Brain-Computer Interfaces for Communication and Control. Clin. Neurophysiol. 2002, 113, 767–791. [Google Scholar] [CrossRef] [PubMed]

- Vissarionov, S.V.; Baindurashvili, A.G.; Kryukova, I.A. International Standards for Neurological Classification of Spinal Cord Injuries (ASIA/ISNCSCI Scale, Revised 2015). Pediatr. Traumatol. Orthop. Reconstr. Surg. 2016, 4, 67–72. [Google Scholar] [CrossRef]

- Morris, C.; Bartlett, D. Gross Motor Function Classification System: Impact and Utility. Dev. Med. Child Neurol. 2004, 46, 60–65. [Google Scholar] [CrossRef]

- Yozbatiran, N.; Der-Yeghiaian, L.; Cramer, S.C. A Standardized Approach to Performing the Action Research Arm Test. Neurorehabil. Neural Repair 2008, 22, 78–90. [Google Scholar] [CrossRef] [PubMed]

- Burton, Q.; Lejeune, T.; Dehem, S.; Lebrun, N.; Ajana, K.; Edwards, M.G.; Everard, G. Performing a Shortened Version of the Action Research Arm Test in Immersive Virtual Reality to Assess Post-Stroke Upper Limb Activity. J. NeuroEng. Rehabil. 2022, 19, 133. [Google Scholar] [CrossRef]

- Daly, J.J.; Nethery, J.; McCabe, J.P.; Brenner, I.; Rogers, J.; Gansen, J.; Butler, K.; Burdsall, R.; Roenigk, K.; Holcomb, J. Development and Testing of the Gait Assessment and Intervention Tool (G.A.I.T.): A Measure of Coordinated Gait Components. J. Neurosci. Methods 2009, 178, 334–339. [Google Scholar] [CrossRef]

- Lawton, M.P.; Brody, E.M. Assessment of Older People: Self-Maintaining and Instrumental Activities of Daily Living. Gerontologist 1969, 9, 179–186. [Google Scholar] [CrossRef]

- Jutten, R.J.; Peeters, C.F.W.; Leijdesdorff, S.M.J.; Visser, P.J.; Maier, A.B.; Terwee, C.B.; Scheltens, P.; Sikkes, S.A.M. Detecting Functional Decline from Normal Aging to Dementia: Development and Validation of a Short Version of the Amsterdam IADL Questionnaire. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2017, 8, 26–35. [Google Scholar] [CrossRef] [PubMed]

- Dubbelman, M.A.; Verrijp, M.; Facal, D.; Sánchez-Benavides, G.; Brown, L.J.E.; Der Flier, W.M.; Jokinen, H.; Lee, A.; Leroi, I.; Lojo-Seoane, C.; et al. The Influence of Diversity on the Measurement of Functional Impairment: An International Validation of the Amsterdam IADL Questionnaire in Eight Countries. Alzheimer’s Dement. Diagn. Assess. Dis. Monit. 2020, 12, e12021. [Google Scholar] [CrossRef]

- Ditunno, J.; Ditunno, P.; Graziani, V.; Scivoletto, G.; Bernardi, M.; Castellano, V.; Marchetti, M.; Barbeau, H.; Frankel, H.; D’Andrea Greve, J.; et al. Walking Index for Spinal Cord Injury (WISCI): An International Multicenter Validity and Reliability Study. Spinal Cord 2000, 38, 234–243. [Google Scholar] [CrossRef]

- Fugl-Meyer, A.R.; Jääskö, L.; Leyman, I.; Olsson, S.; Steglind, S. The Post-Stroke Hemiplegic Patient. 1. a Method for Evaluation of Physical Performance. Scand. J. Rehabil. Med. 1975, 7, 13–31. [Google Scholar] [CrossRef]

- Dominici, N.; Keller, U.; Vallery, H.; Friedli, L.; van den Brand, R.; Starkey, M.L.; Musienko, P.; Riener, R.; Courtine, G. Versatile Robotic Interface to Evaluate, Enable and Train Locomotion and Balance after Neuromotor Disorders. Nat. Med. 2012, 18, 1142–1147. [Google Scholar] [CrossRef]

- Smith, M.M.; Rao, R.P.N.; Olson, J.D.; Darvas, F. Utilizing Subdermal Electrodes as a Noninvasive Alternative for Motor-Based BCIs. In Brain–Computer Interfaces Handbook; CRC Press: Boca Raton, FL, USA, 2018; pp. 269–277. [Google Scholar]

- Maren, E.V.; Alnes, S.L.; Cruz, J.R.D.; Sobolewski, A.; Friedrichs-Maeder, C.; Wohler, K.; Barlatey, S.L.; Feruglio, S.; Fuchs, M.; Vlachos, I.; et al. Feasibility, Safety, and Performance of Full-Head Subscalp EEG Using Minimally Invasive Electrode Implantation. Neurology 2024, 102, e209428. [Google Scholar] [CrossRef]

- Mirzakhalili, E.; Barra, B.; Capogrosso, M.; Lempka, S.F. Biophysics of Temporal Interference Stimulation. Cell Syst. 2020, 11, 557–572.e5. [Google Scholar] [CrossRef]

- Zhu, Z.; Xiong, Y.; Chen, Y.; Jiang, Y.; Qian, Z.; Lu, J.; Liu, Y.; Zhuang, J. Temporal Interference (TI) Stimulation Boosts Functional Connectivity in Human Motor Cortex: A Comparison Study with Transcranial Direct Current Stimulation (tDCS). Neural Plast. 2022, 2022, 7605046. [Google Scholar] [CrossRef]

- Violante, I.R.; Alania, K.; Cassarà, A.M.; Neufeld, E.; Acerbo, E.; Carron, R.; Williamson, A.; Kurtin, D.L.; Rhodes, E.; Hampshire, A.; et al. Non-Invasive Temporal Interference Electrical Stimulation of the Human Hippocampus. Nat. Neurosci. 2023, 26, 1994–2004. [Google Scholar] [CrossRef]

- Wessel, M.J.; Beanato, E.; Popa, T.; Windel, F.; Vassiliadis, P.; Menoud, P.; Beliaeva, V.; Violante, I.R.; Abderrahmane, H.; Dzialecka, P.; et al. Noninvasive Theta-Burst Stimulation of the Human Striatum Enhances Striatal Activity and Motor Skill Learning. Nat. Neurosci. 2023, 26, 2005–2016. [Google Scholar] [CrossRef]

- De Paz, J.M.M.; Macé, E. Functional Ultrasound Imaging: A Useful Tool for Functional Connectomics? NeuroImage 2021, 245, 118722. [Google Scholar] [CrossRef]

- Norman, S.L.; Maresca, D.; Christopoulos, V.N.; Griggs, W.S.; Demene, C.; Tanter, M.; Shapiro, M.G.; Andersen, R.A. Single-Trial Decoding of Movement Intentions Using Functional Ultrasound Neuroimaging. Neuron 2021, 109, 1554–1566.e4. [Google Scholar] [CrossRef]

- Zheng, H.; Niu, L.; Qiu, W.; Liang, D.; Long, X.; Li, G.; Liu, Z.; Meng, L. The Emergence of Functional Ultrasound for Noninvasive Brain–Computer Interface. Research 2023, 6, 0200. [Google Scholar] [CrossRef] [PubMed]

- Nolan, M. New Neurotech Eschews Electricity for Ultrasound—IEEE Spectrum. Available online: https://spectrum.ieee.org/bci-ultrasound (accessed on 3 March 2025).

- Lefebvre, A.T.; Rodriguez, C.L.; Bar-Kochba, E.; Steiner, N.E.; Mirski, M.; Blodgett, D.W. High-Resolution Transcranial Optical Imaging of in Vivo Neural Activity. Sci. Rep. 2024, 14, 24756. [Google Scholar] [CrossRef] [PubMed]

- Oh, S.; Jekal, J.; Won, J.; Lim, K.S.; Jeon, C.-Y.; Park, J.; Yeo, H.-G.; Kim, Y.G.; Lee, Y.H.; Ha, L.J.; et al. A Stealthy Neural Recorder for the Study of Behaviour in Primates. Nat. Biomed. Eng. 2024, 9, 882–895. [Google Scholar] [CrossRef] [PubMed]

- Ivanov, D.; Chezhegov, A.; Kiselev, M.; Grunin, A.; Larionov, D. Neuromorphic Artificial Intelligence Systems. Front. Neurosci. 2022, 16, 959626. [Google Scholar] [CrossRef]

- Musienko, P.; Van Den Brand, R.; Maerzendorfer, O.; Larmagnac, A.; Courtine, G. Combinatory Electrical and Pharmacological Neuroprosthetic Interfaces to Regain Motor Function After Spinal Cord Injury. IEEE Trans. Biomed. Eng. 2009, 56, 2707–2711. [Google Scholar] [CrossRef]

- Minev, I.R.; Musienko, P.; Hirsch, A.; Barraud, Q.; Wenger, N.; Moraud, E.M.; Gandar, J.; Capogrosso, M.; Milekovic, T.; Asboth, L.; et al. Electronic Dura Mater for Long-Term Multimodal Neural Interfaces. Science 2015, 347, 159–163. [Google Scholar] [CrossRef]

- Bloch, J.; Lacour, S.P.; Courtine, G. Electronic Dura Mater Meddling in the Central Nervous System. JAMA Neurol. 2017, 74, 470–475. [Google Scholar] [CrossRef] [PubMed]

- Deriabin, K.V.; Kirichenko, S.O.; Lopachev, A.V.; Sysoev, Y.; Musienko, P.E.; Islamova, R.M. Ferrocenyl-Containing Silicone Nanocomposites as Materials for Neuronal Interfaces. Compos. Part B Eng. 2022, 236, 109838. [Google Scholar] [CrossRef]

- Afanasenkau, D.; Kalinina, D.; Lyakhovetskii, V.; Tondera, C.; Gorsky, O.; Moosavi, S.; Pavlova, N.; Merkulyeva, N.; Kalueff, A.V.; Minev, I.R.; et al. Rapid Prototyping of Soft Bioelectronic Implants for Use as Neuromuscular Interfaces. Nat. Biomed. Eng. 2020, 4, 1010–1022. [Google Scholar] [CrossRef]

- Boufidis, D.; Garg, R.; Angelopoulos, E.; Cullen, D.K.; Vitale, F. Bio-Inspired Electronics: Soft, Biohybrid, and “Living” Neural Interfaces. Nat. Commun. 2025, 16, 1861. [Google Scholar] [CrossRef]

- Tian, M.; Ma, Z.; Yang, G.-Z. Micro/Nanosystems for Controllable Drug Delivery to the Brain. The Innovation 2024, 5, 100548. [Google Scholar] [CrossRef]

- Qian, T.; Yu, C.; Zhou, X.; Ma, P.; Wu, S.; Xu, L.; Shen, J. Ultrasensitive Dopamine Sensor Based on Novel Molecularly Imprinted Polypyrrole Coated Carbon Nanotubes. Biosens. Bioelectron. 2014, 58, 237–241. [Google Scholar] [CrossRef] [PubMed]

- Chen, J.; Huang, H.; Zeng, Y.; Tang, H.; Li, L. A Novel Composite of Molecularly Imprinted Polymer-Coated PdNPs for Electrochemical Sensing Norepinephrine. Biosens. Bioelectron. 2015, 65, 366–374. [Google Scholar] [CrossRef]

- Alsharabi, R.M.; Pandey, S.K.; Singh, J.; Kayastha, A.M.; Saxena, P.S.; Srivastava, A. Ultra-Sensitive Electrochemical Detection of Glutamate Based on Reduced Graphene Oxide/Ni Foam Nanocomposite Film Fabricated via Electrochemical Exfoliation Technique Using Waste Batteries Graphite Rods. Microchem. J. 2024, 199, 110055. [Google Scholar] [CrossRef]

- Han, J.; Stine, J.M.; Chapin, A.A.; Ghodssi, R. A Portable Electrochemical Sensing Platform for Serotonin Detection Based on Surface-Modified Carbon Fiber Microelectrodes. Anal. Methods 2023, 15, 1096–1104. [Google Scholar] [CrossRef] [PubMed]

- Johnson, M.D.; Franklin, R.K.; Gibson, M.D.; Brown, R.B.; Kipke, D.R. Implantable Microelectrode Arrays for Simultaneous Electrophysiological and Neurochemical Recordings. J. Neurosci. Methods 2008, 174, 62–70. [Google Scholar] [CrossRef] [PubMed]

- Zebda, A.; Cosnier, S.; Alcaraz, J.-P.; Holzinger, M.; Le Goff, A.; Gondran, C.; Boucher, F.; Giroud, F.; Gorgy, K.; Lamraoui, H.; et al. Single Glucose Biofuel Cells Implanted in Rats Power Electronic Devices. Sci. Rep. 2013, 3, 1516. [Google Scholar] [CrossRef] [PubMed]

- Xu, C.; Song, Y.; Han, M.; Zhang, H. Portable and Wearable Self-Powered Systems Based on Emerging Energy Harvesting Technology. Microsyst. Nanoeng. 2021, 7, 25. [Google Scholar] [CrossRef]

| Method | Spatial resolution (mm) | Temporal resolution (ms) | Depth (mm) | Invasiveness | Frequency (Hz) | Type of neural activity | Cost | NCI use | NCI compatibility |
|---|---|---|---|---|---|---|---|---|---|
| TENS | ~20 | ~5 | Surface, 5–20 | N | 1–150 | Gate control hypothesis | L | Pain, sensory mod CBI | Med |
| TIS | ~10 | ~5–10 | Deep, 30–50 | N | 2000–5000 (carrier) | Selective neuromodulation | L | Experimental CBI | Low |
| EEG | 10–20 | ~1 | Cortex, subcortical | N | 0.05–35 (practical) | PSP (distorted LFP) | M | P300, SSVEP, MI BCIs | High |
| fNIRS | 10–30 | ~1000 | ~10–20 | N | 0.01–2 | Hemodynamics | M | Hybrid BCIs | Med |
| tDCS | ~10 | ~1000 | Superficial | N | DC | Membrane polarization | L | Hybrid BCIs | Med |
| tACS | ~10 | ~1000 | Superficial | N | 0.1–5000 | Oscillatory entrainment | L | Rhythmic neuromodulation | Med |
| MEG | ~5–10 | ~1 | Superficial | N | 0.1–100 | PSF | VH | Research BCIs | Med |
| TMS | ~5–10 | ~10 | Cortex | N | Single/series | Depolarization of cortical pyramidal neurons | M | Stimulation, phosphenes (BBI) | Med |
| Subdural SCS | ~0.5–2 | ~1 | Spinal/subdural | S | 40–10000 | Dorsal column afferents, interneurons | H | BSI, motor recovery | Med |
| Epidural SCS | ~5–10 | ~1 | Spinal/epidural | S | 20–10000 | Dorsal column afferents, interneurons | H | BSI, motor recovery | Med |
| Stentrode | ~1–2 | ~1 | Venous, 2–3 | S | 0.5–200 | LFP | H | Endovascular BCIs | High |
| ECoG | ~1 | ~1 | Cortex, 1–2 | S | 0.5–5000 | PSP, LFP | H | High-res BCI, CBI | High |
| PNS | ~1–5 | ~1 | Peripheral | S | 1–1000 | Stimulation of afferent fibers | M | Sensorimotor feedback | Med |
| FES | ~10 | ~1 | Muscle/nerve | S | 10–100 | Stimulation of efferent fibers | M | Neurorehab, BSI | Med |
| ISMS | ~0.1 | ~0.1 | Spinal, 1–3 | I | 10–100 | Stimulation of motoneurons | M | Motor recovery, BSI | High |
| DBS | ~1–4 | ~1 | Deep nuclei, 60–80 | I | 1–200 | Stimulation of neural ensembles | H | Therapeutic CBI | High |
| ICMS | ~0.05–0.1 | ~0.1 | Cortex, 0.5–2.5 | I | 10–300 | Neuronal cell bodies, apical dendrites | VH | High-res motor/sensory NCI | High |
| Retinal implant | Low | ~10 | Retina, 0.2–0.5 | I | 10–50 | Ganglion/bipolar cell stimulation | H | Vision, sensory CBI | High |
| Cochlear implant | Medium | ~10 | Cochlea, 25–30 | I | 100–10000 | Afferents of the auditory nerve | H | Hearing, speech CBI | High |

| Type of Methods | Data Source | Platform | Algorithms | Key Features | CC | RT |
|---|---|---|---|---|---|---|
| Feature analysis and optimization methods | | | | | | |
| Temporal analysis | EEG, ECoG, MEG (time series) | PCs, servers (general-purpose CPU) | Statistical characteristics: mean, standard deviation, skewness, etc. | Variable acc. depending on task; generally low lat. | L | Y |
| Temporal analysis | EEG, ECoG, MEG (time series) | PCs, servers (general-purpose CPU) | Entropy measures: Shannon, Rényi, Sample Entropy, etc. | Medium or high acc.; medium lat., though higher for Sample Entropy. | L, but H for Sample Entropy | Y |
| Temporal analysis | EEG, ECoG, MEG (time series) | PCs (general-purpose CPU), FPGA | Hjorth parameters, mean absolute value, zero crossings, slope sign changes, waveform length, maximum fractal length, Willison amplitude, root mean square (RMS), autoregressive and adaptive autoregressive (AAR) coefficients | Low, medium, or high acc. and lat. for different applications; higher for AAR. | L, but M-H for AAR | Y |
| Time-frequency analysis | EEG, ECoG, MEG (time series) | PCs (general-purpose CPU), FPGA | Fast Fourier Transform (FFT) and Short-Time Fourier Transform (STFT) | Lower acc. for non-phase-locked responses (ERD/ERS) compared to phase-locked (SSVEP). | L | Y |
| Time-frequency analysis | EEG, ECoG, MEG (time series) | PCs (general-purpose CPU), FPGA | Power Spectral Density (PSD) | Analysis of dominant rhythms in the resting state, detection of SSVEP, and assessment of neurometabolic activity. Acc. is high for EEG, very low for ERD/ERS/P300. Low lat. | L | Y |
| Time-frequency analysis | EEG, ECoG, MEG (time series) | PCs (general-purpose CPU), FPGA | Synchrosqueezing transform, Hilbert–Huang transform, wavelet transforms | Inevitable lat. due to data segmentation requirements. | M/H | Y |
| Spatial methods | EEG, ECoG, MEG, fNIRS (multichannel) | PCs (general-purpose CPU), FPGA, ASIC | Common Spatial Pattern (CSP) and its modifications, EEG source localization, and inverse model-based feature extraction | Extraction of informative spatial patterns, enhancing differences between classes; dimensionality reduction; high acc.; lat. is inevitable due to data segmentation requirements. | L/M | Y |
| Component analysis | EEG, ECoG, fMRI, MEG, fNIRS | PCs (general-purpose CPU) | PCA, ICA | Dimensionality reduction, artifact removal, and separation of mixed signals. Acc. is high for ICA, moderate for PCA. Lat. is low. | H for ICA, L/M for PCA | Y |
| Information-theoretic methods | EEG, ECoG, MEA, MEG, fNIRS | PCs, servers (general-purpose CPU), CPP | Mutual information-based best individual feature, mutual information-based rough set reduction, integral square descriptor | Extraction of the most relevant features. Reaches theoretical limits of BCI performance. High acc., lat., robustness to noise, and versatility. | H | P |