1. Introduction

The preservation and care of endangered species is an ongoing concern for different sectors of society; the honeybee, Apis mellifera, is not exempt from the risk of extinction, for a variety of reasons [1]. One of these is attack and invasion by the Varroa mite, which is a continuing concern for beekeepers and environmentalists and is capable of devastating entire colonies [2,3,4,5,6]. The Varroa mite has been identified as the most destructive ectoparasite of the honeybee [2]. It is considered one of the greatest biological threats to bee health [6], and it can leave foraging bees unable to fly and cause disorientation, paralysis, and the death of individuals in the colony [7]. The prevalence of this mite has been studied in agro-ecological regions of different countries [3,4]. The presence and effects of the Varroa mite in the hives where it infects and destroys honeybees have been analyzed in different areas of scientific and technological research [2,3]; however, despite the use of insecticides, acarologists and beekeepers have not yet produced long-term solutions for the control of Varroa [6]. Proposed alternatives, such as better beekeeping practices [5], integrated pest management (IPM) [6], international monitoring, and therapeutic alternatives [7], all seek to make Varroa control more effective.

Currently, beekeepers detect the Varroa mite through two types of visual checks [8,9]: double-sieve sampling and powdered sugar sampling. Both checks have been documented by the Food and Agriculture Organization of the United Nations (FAO) [10]. When carried out in apiaries, both checks require opening the hive at a time chosen by the beekeeper. Once the hive is open, hundreds of bees are collected and killed with isopropyl alcohol, and the sample is then passed through filters that reveal the presence or absence of the Varroa ectoparasite [8,9,11]. Manual collection of the ectoparasite [12], using substances applied to a group of approximately 100 bees, allows the infestation in the hive to be detected. If the ectoparasite is detected, effective acaricides (tau-fluvalinate, coumaphos, or amitraz) [7] are applied. Another method for detecting the Varroa mite, described in [11], consists of a visual analysis of the hive, followed by detecting and counting the mites on a sliding unit and then estimating the possible level of infestation from the count with a heuristic calculation.
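For illustration only: mite counts such as those described above are commonly expressed as an infestation percentage, that is, mites per 100 bees sampled. The sketch below assumes that convention; the function name `infestation_percent` is hypothetical, and the heuristic actually used in [11] may differ.

```python
def infestation_percent(mites_counted: int, bees_sampled: int) -> float:
    """Return mites per 100 bees (an assumed convention, not the method of [11])."""
    if bees_sampled <= 0:
        raise ValueError("bees_sampled must be a positive number of bees")
    if mites_counted < 0:
        raise ValueError("mites_counted cannot be negative")
    return 100.0 * mites_counted / bees_sampled


if __name__ == "__main__":
    # Hypothetical sample: 3 mites dislodged from about 100 bees.
    print(infestation_percent(3, 100))  # 3.0
```

Because the sample size is roughly 100 bees in the checks described above, the percentage is numerically close to the raw mite count; the division matters when the actual number of bees collected differs from 100.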

These controls have three main disadvantages: first, opening the hive causes stress in the colony, loss of the queen, and loss of developing larvae; second, every manual Varroa check sacrifices hundreds of productive-age bees in each hive; and third, the effectiveness of the control is questionable, as it depends solely on how often the beekeeper carries out the checks in the apiary. Thus, if few visual checks are carried out per year, the risk of Varroa attack increases; conversely, if many checks are carried out, the risk of stress, queen escape, and damage to larvae (unborn bees) increases.

Automating Varroa mite verification in hives is a feasible application of automatic recognition systems (ARS). ARS, which consist of intelligent software systems that control miniaturized electrical, electronic, and mechanical devices, have emerged as an alternative to manual visual inspection. Pattern-recognition methods that enable automated visual analysis have proven effective in different areas of scientific research. Several works have been proposed in the search for solutions to the Varroa ectoparasite [8,9,13,14,15,16,17,18,19,20,21,22]. These works focus on developing computer systems that detect the Varroa ectoparasite and reduce its harmful effects, applying different image-analysis and object-recognition techniques in real environments.

For the development of automatic recognition systems, three areas of research must be considered: first, the real environment in which the system must operate; second, the digital image-processing techniques used to extract image features; and finally, the methods for recognizing objects in the images. Each of these areas is discussed below in light of the research carried out on visual-inspection systems in apiaries.

Real outdoor environments add complexity to the implementation of computer systems, since the images are influenced by factors such as lighting, the area of the environment being observed, and the movement of objects [15,23], and data-processing speeds higher than conventional ones are required [24]. Digital image-processing techniques for identifying regions and extracting object features from images [25] have been used successfully in automatic recognition systems, including systems for video inspection of beehives. For example, ref. [14] measured activity at the hive entrance by processing a set of frames, ref. [15] detected pollen-carrying bees, parasites were detected in [8], and Varroa mites were detected in [9,19,20,21,22].

In bee surveillance systems, two families of methods have been used for recognition: Gaussian-based methods [14,15] and neural networks [8,15,21,22]. The neural-network models used successfully include the following: ref. [9] used a pre-trained Convolutional Neural Network (CNN) model to detect bees; ref. [20] used YOLO, which predicts bounding boxes with a single neural network; ref. [21] used a CNN for bee detection and Varroa identification; and ref. [22] used a deep-learning model based on the Faster R-CNN architecture to detect Varroa mites.

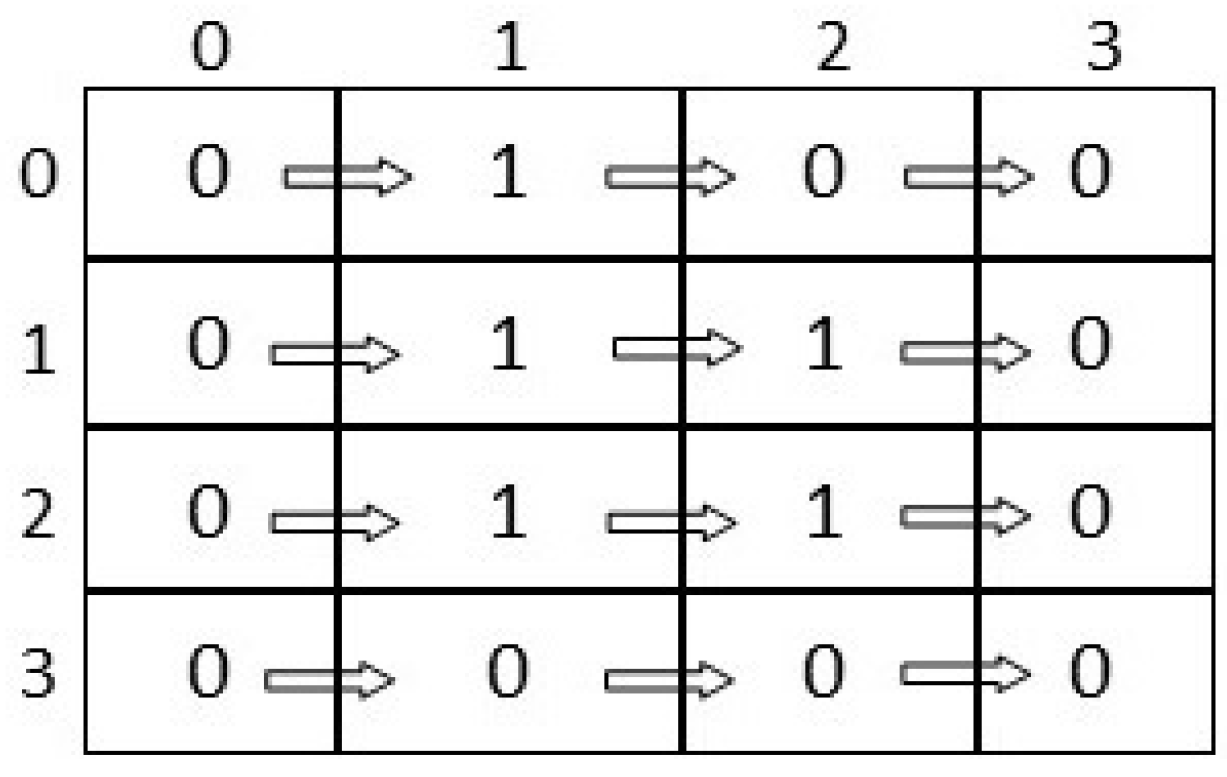

A neural-network model different from the previous ones is the Graph Neural Network (GNN), initially proposed in [26]; this type of network naturally represents data from various application areas through graph structures [27]. GNNs are widely used for object recognition in images. For example, in [28,29] they are used for image classification; in [30], edge features extracted from objects in images are used for object recognition with GNNs; in [31], GNNs are used to extract planar roof structures from high-resolution images; and in [32], GNNs are used for symbol detection in document images. GNN applications are not limited to images; they have also been used for graph representation learning [33].
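To make the idea of message passing over a graph concrete, the sketch below implements one generic GCN-style layer in plain Python: each node averages its own feature vector with those of its neighbours, then applies a linear map and a ReLU. It is an illustration of the general technique, not the architecture of [26] or the network trained in this work; the function `gnn_layer`, the three-region graph, the features, and the weights are all hypothetical (in a trained GNN the weights would be learned).

```python
from typing import Dict, List

Graph = Dict[int, List[int]]        # node id -> ids of adjacent nodes
Features = Dict[int, List[float]]   # node id -> feature vector
Weights = List[List[float]]         # output_dim rows, each of length input_dim


def gnn_layer(graph: Graph, feats: Features, weights: Weights) -> Features:
    """One message-passing layer: mean over the node and its neighbours,
    then a linear map followed by ReLU (a generic GCN-style update)."""
    out: Features = {}
    for node, neighbours in graph.items():
        group = [feats[node]] + [feats[n] for n in neighbours]
        dim = len(feats[node])
        # Aggregate: element-wise mean of the node's own vector and its neighbours'.
        agg = [sum(vec[i] for vec in group) / len(group) for i in range(dim)]
        # Update: linear transform with the weight matrix, then ReLU.
        out[node] = [max(0.0, sum(row[i] * agg[i] for i in range(dim))) for row in weights]
    return out


if __name__ == "__main__":
    # Hypothetical region-adjacency graph: 3 image regions in a chain 0-1-2.
    graph = {0: [1], 1: [0, 2], 2: [1]}
    feats = {0: [1.0, 0.0], 1: [0.0, 1.0], 2: [1.0, 1.0]}
    identity = [[1.0, 0.0], [0.0, 1.0]]
    print(gnn_layer(graph, feats, identity))
```

Stacking several such layers lets information propagate further across the graph, which is why graph representations suit image regions whose meaning depends on their neighbours.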

Although various neural-network models have been applied to the detection of Varroa mites in bees, the problem remains open. Therefore, considering the examples from the literature reviewed, the presence of the Varroa mite in the environment, and the traditional practices of Varroa control (both described in the previous paragraphs), in this work we propose a noninvasive visual-inspection system that respects bee-care standards, can remain in an apiary for an indefinite period of time, and detects the Varroa parasite.

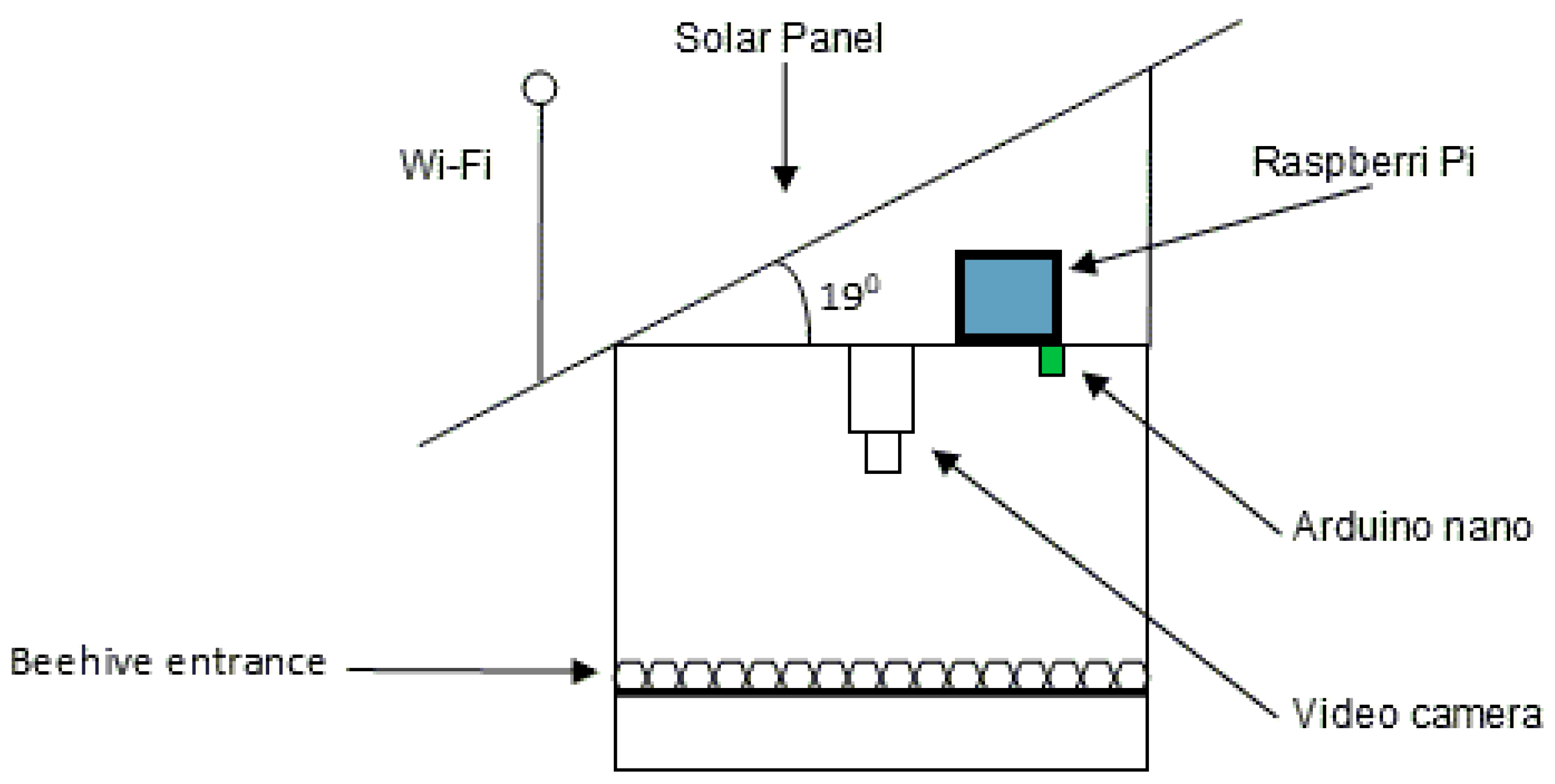

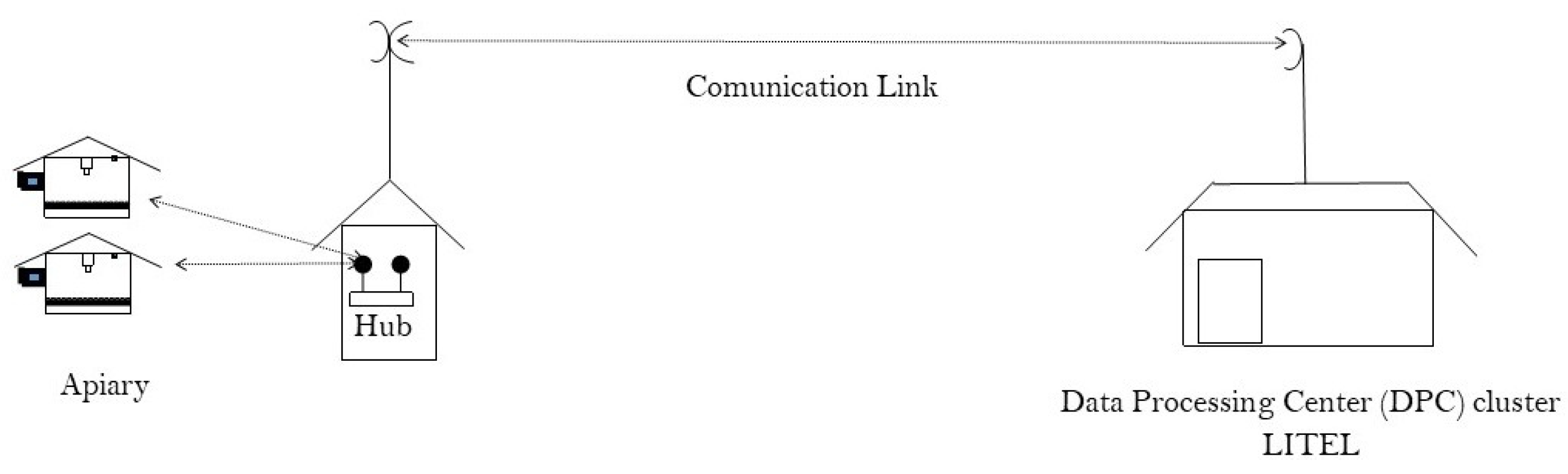

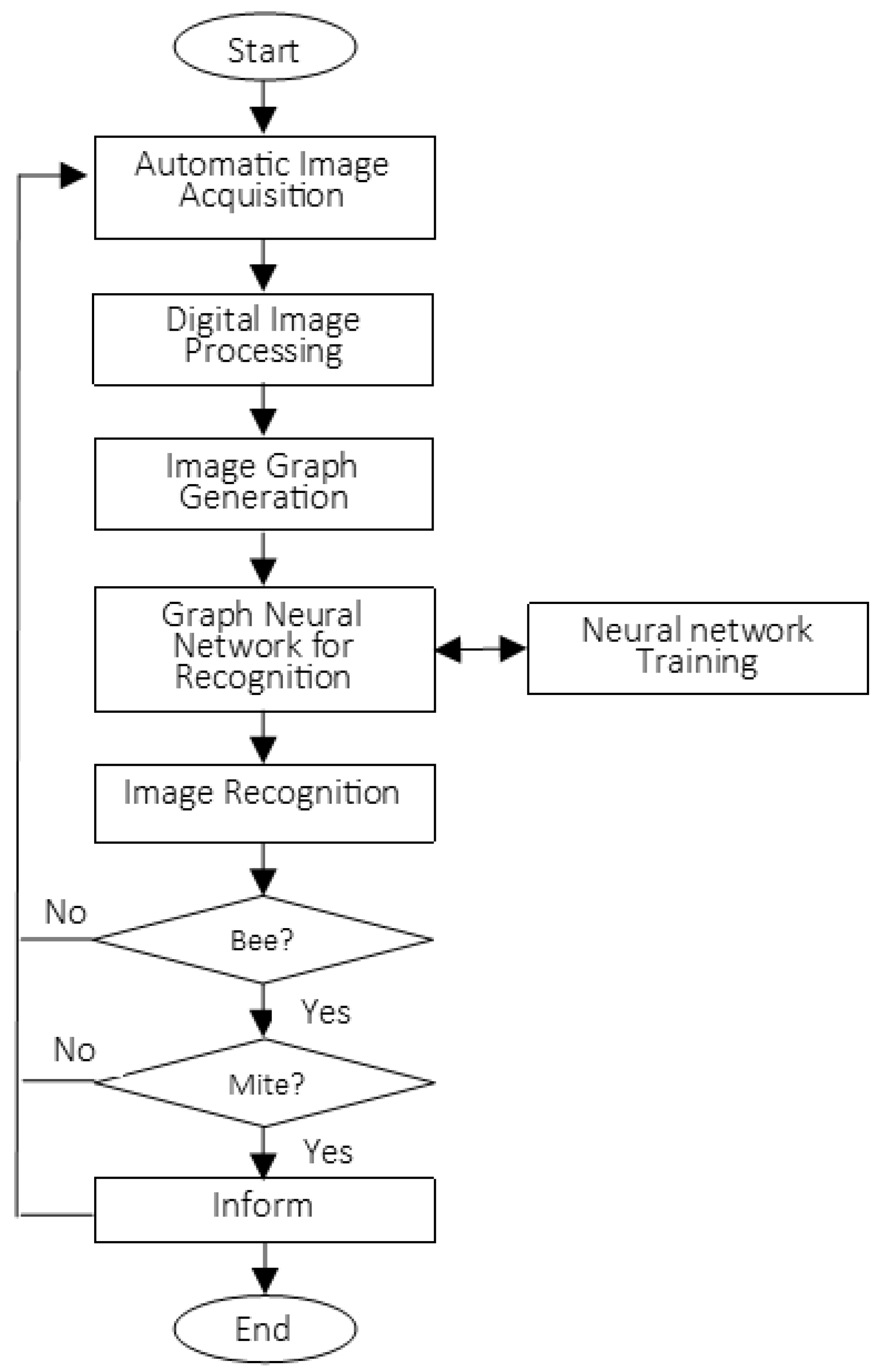

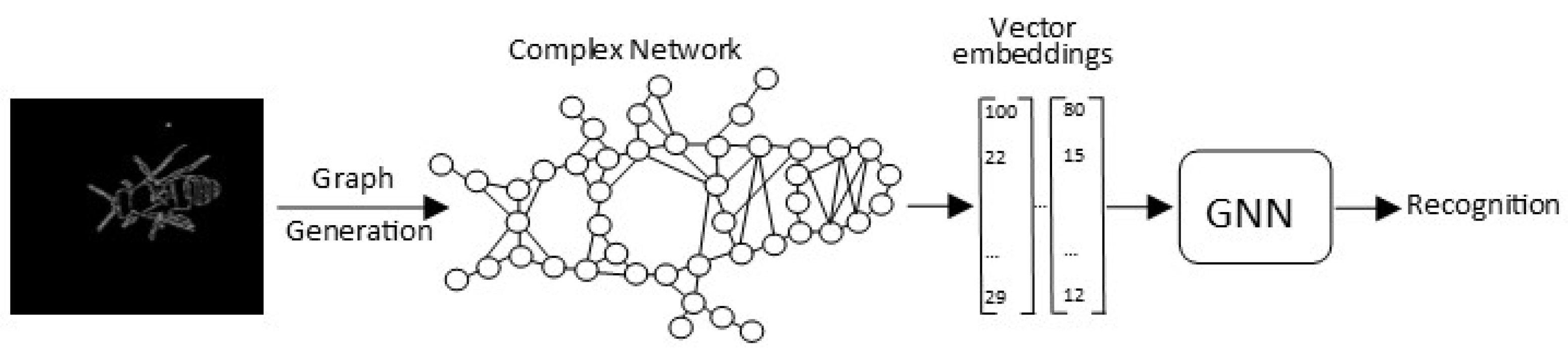

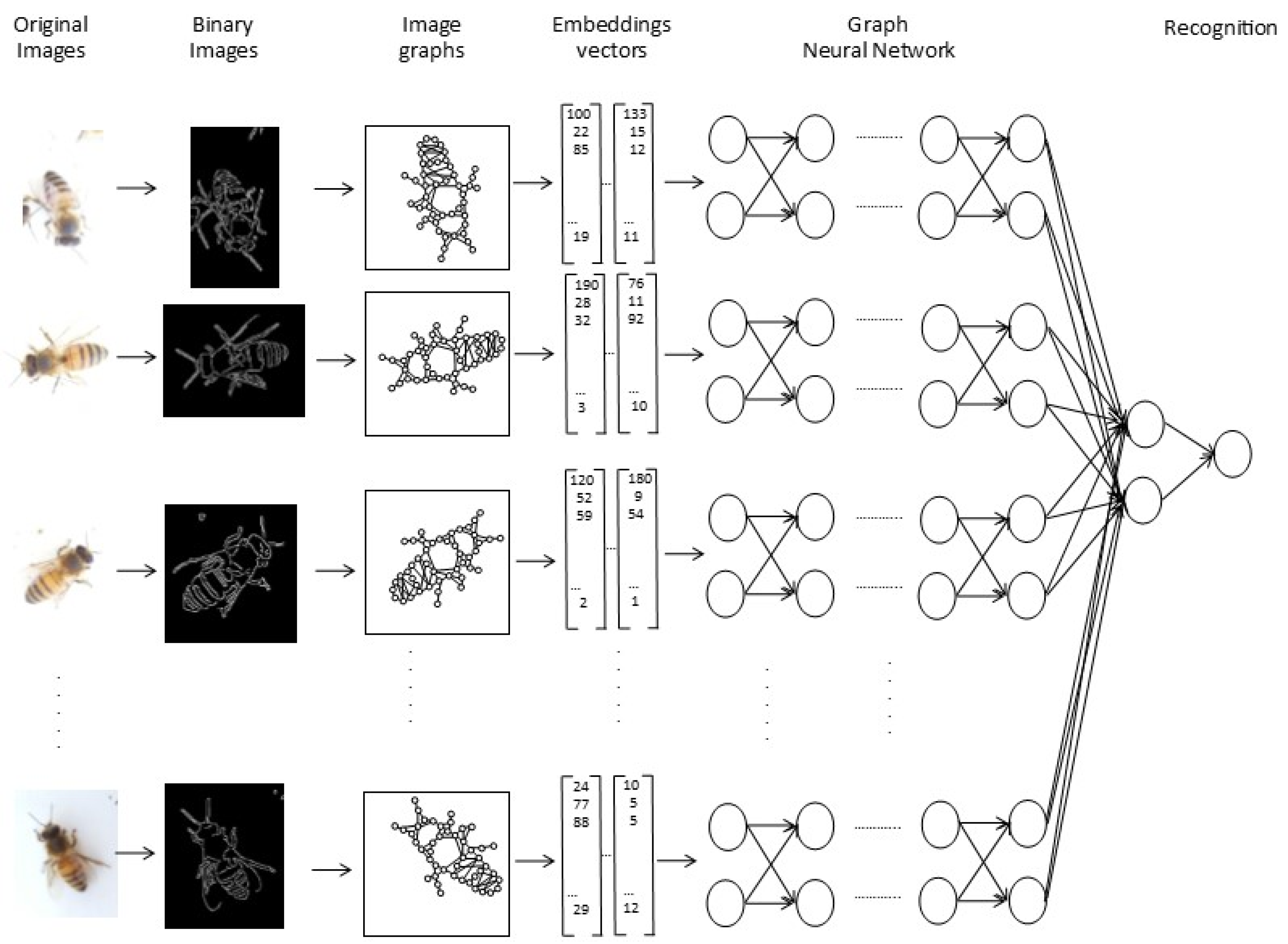

This work describes the implementation of an automatic recognition system that acquires real images from test apiaries during all four seasons of the year, using Internet of Things image-acquisition equipment placed at the hive entrance; the images are sent through a telecommunications system to a server cluster for processing and analysis. There, the bees are recognized in the images using digital image processing (DIP) and GNN techniques, and the abdomen of each recognized Apis mellifera bee is then analyzed to detect the ectoparasitic Varroa mite.

This research paper is an extension of two previously published papers, namely Varroa Mite Detection in Honeybees Using Computer Vision [13] and Characterization of Honey Bee Anatomy for Recognition and Analysis Using Image Regions [34]. Both articles address the problem of the Varroa mite and describe the development of an automatic recognition system in apiaries. Based on these two studies, this manuscript describes new experiments conducted in other apiaries and hives.

This work is organized as follows: Section 2 describes a set of works related to research using automated visual analysis methods in bee colonies; for a better understanding of the rest of this work, Section 3 defines some basic terms; Section 4 presents the problem statement of this research; Section 5 describes the materials and method used in this research and the complexity of developing automated visual-inspection systems in real environments; Section 6 explains the experiments carried out in different apiaries; the limitations of the research are discussed in Section 7; Sections 8 and 9 present the conclusions and future work, respectively; and, finally, the discussion is presented in Section 10.

7. Experiments

This section presents the methodology followed to carry out the experiments. It is organized into two subsections, listed and explained below.

- (a)

The first subsection defines a set of terms used in the experiments; these terms are placed here so that the reader can better understand the experiments.

- (b)

The second subsection describes the experiments performed. It uses the four sets of images (training datasets) presented in Section 6.2.4: the first dataset is "bees in different positions for recognition", the second is "bees infected with the Varroa mite", the third is "bees in positions that are considered complex for recognition", and the fourth is "intruders that may appear at the entrance of the hive". All four datasets are used to train the Graph Neural Network, following the procedures described in Section 6.2.5.

7.1. Terms Used

This subsection lists and defines a set of terms used in the process of describing the experiments performed.

Researcher. The people responsible for visually verifying the images and checking them against the results of the software system.

False positives. Results in which the software system reports a bee in the image, but the researcher's verification shows that no bee is present. A false positive is also counted when the system detects a bee in the image, analyzes it, and reports a Varroa mite that is not actually present on the bee.

False negatives. Results in which the software system does not report a bee in the image, but the researcher's verification shows that a bee is present.
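The definitions above amount to comparing each system output with the researcher's verdict. As a minimal sketch, assuming each verified image is recorded as a pair (system reports a bee, researcher confirms a bee), the outcomes can be tallied as follows; the record format and the name `tally_outcomes` are hypothetical. The same logic applies to Varroa detections if the pair records "mite reported" and "mite confirmed" instead.

```python
from typing import Dict, Iterable, Tuple


def tally_outcomes(results: Iterable[Tuple[bool, bool]]) -> Dict[str, int]:
    """Count outcomes from (system_reports_bee, researcher_confirms_bee) pairs.

    fp: the system reports a bee that the researcher does not find.
    fn: the system misses a bee that the researcher does find.
    """
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for reported, confirmed in results:
        if reported and confirmed:
            counts["tp"] += 1
        elif reported:
            counts["fp"] += 1
        elif confirmed:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical verification log for five images.
    log = [(True, True), (True, False), (False, True), (False, False), (True, True)]
    print(tally_outcomes(log))  # {'tp': 2, 'fp': 1, 'fn': 1, 'tn': 1}
```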

Images of Apis mellifera bees. Table 5 shows a set of images of bees at the beehive entrance, illustrating some of the positions that bees adopt there.

Images of Apis mellifera bees with the Varroa mite. Table 6 contains a set of images of bees with the Varroa mite at the beehive entrance, illustrating some of the positions of infected bees there.

7.2. Experiments

The experiments were designed to measure two parameters: first, the speed with which the bee is recognized at the hive entrance, and second, the recognition accuracy of the evaluated techniques. The following two items explain each parameter and some considerations.

- (a)

The speed with which the bee is recognized at the entrance of the hive. With this experiment, we measure the time each technique takes to recognize the bee at the hive entrance, since we are evaluating a real-time recognition system. Object occlusion in the image is not considered in this experiment, because the camera placed at the hive entrance has the focus needed to acquire individual bees and because the acquired images are cropped during segmentation; this process is explained in detail in Section 6.1.1. Recognition speed is measured in milliseconds so that the times of the techniques can be compared.

- (b)

The recognition accuracy of the evaluated techniques. With this parameter, we evaluate how accurately the object in the image is recognized, in order to determine the percentage of correct results of each evaluated technique.

With these two measurements, we can compare the speed and accuracy of each technique, bearing in mind that kNN is a fast classifier that needs few parameters and little processing time, whereas GNN is a recognition technique that requires more parameters and more processing time.
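To illustrate why kNN needs so few parameters, here is a minimal k-nearest-neighbours classifier in plain Python. It is a generic sketch, not the implementation used in this work: the feature vectors, the labels "bee" and "intruder", and the function name `knn_predict` are hypothetical. Its only parameter is k; there is no training phase, and each query computes the distance to every stored sample, so its cost grows with the size of the training set.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, class label)


def knn_predict(train: List[Sample], query: Sequence[float], k: int = 3) -> str:
    """Classify `query` by majority vote among its k nearest training samples."""
    if not 1 <= k <= len(train):
        raise ValueError("k must be between 1 and the number of training samples")
    # Euclidean distance from the query to every stored sample.
    nearest = sorted(train, key=lambda s: math.dist(s[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical 2-D shape descriptors for segmented objects at the entrance.
    train = [
        ([0.9, 0.8], "bee"), ([1.0, 0.9], "bee"), ([0.8, 1.0], "bee"),
        ([0.1, 0.2], "intruder"), ([0.2, 0.1], "intruder"),
    ]
    print(knn_predict(train, [0.85, 0.9]))   # bee
    print(knn_predict(train, [0.15, 0.15]))  # intruder
```

An odd k with two classes avoids tied votes; with more classes, ties would need an explicit rule.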

The following nine items are the list of experiments performed.

- (a)

Section 7.2.1—Bee recognition at the beehive entrance experiment.

- (b)

- (c)

- (d)

- (e)

Section 7.2.5—False positives in bees infected with the Varroa ectoparasite.

- (f)

Section 7.2.6—Percentages of false negatives in bees infected with the Varroa ectoparasite.

- (g)

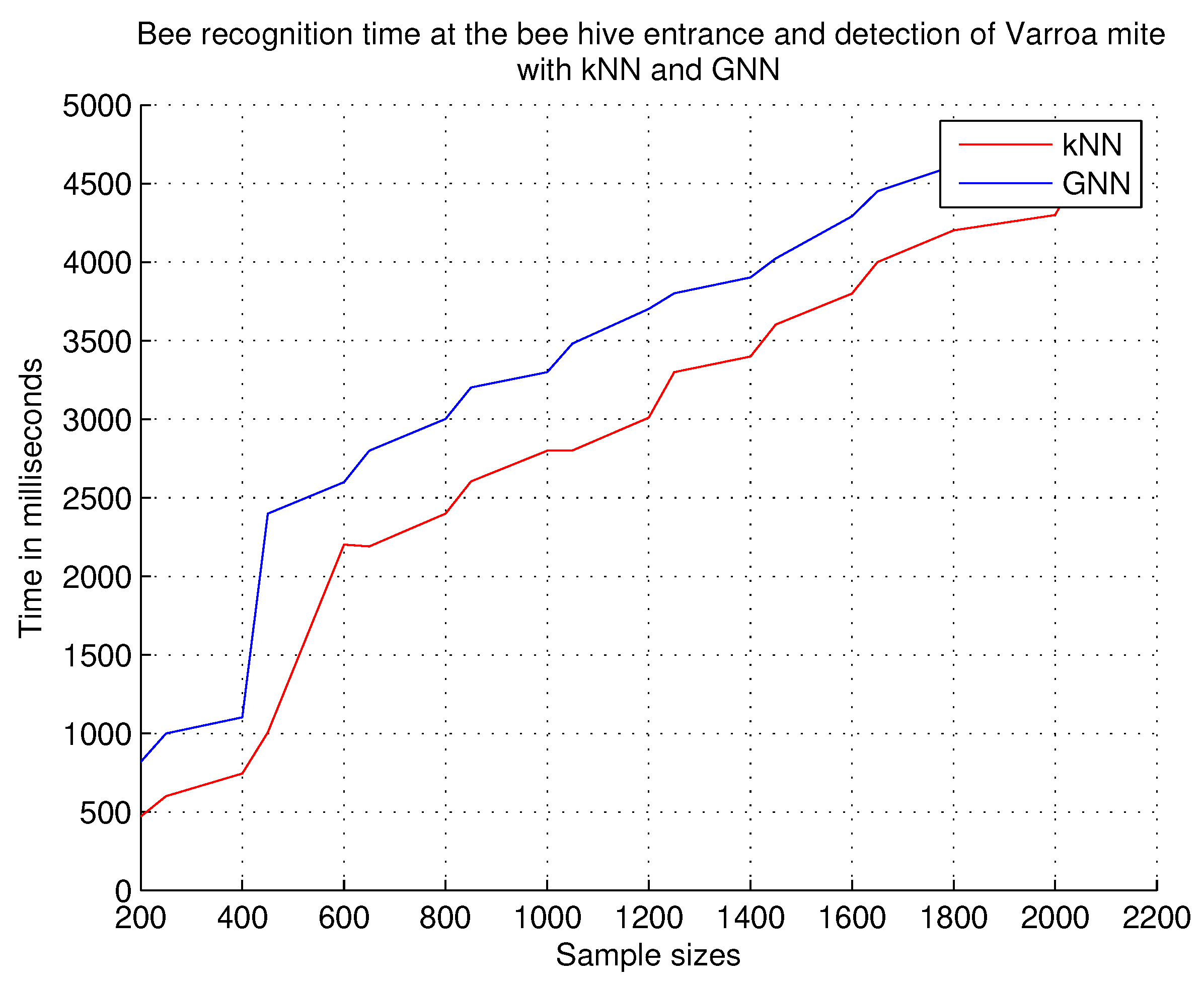

Section 7.2.7—Recognition time of the bee at the beehive entrance and detection of Varroa mite.

- (h)

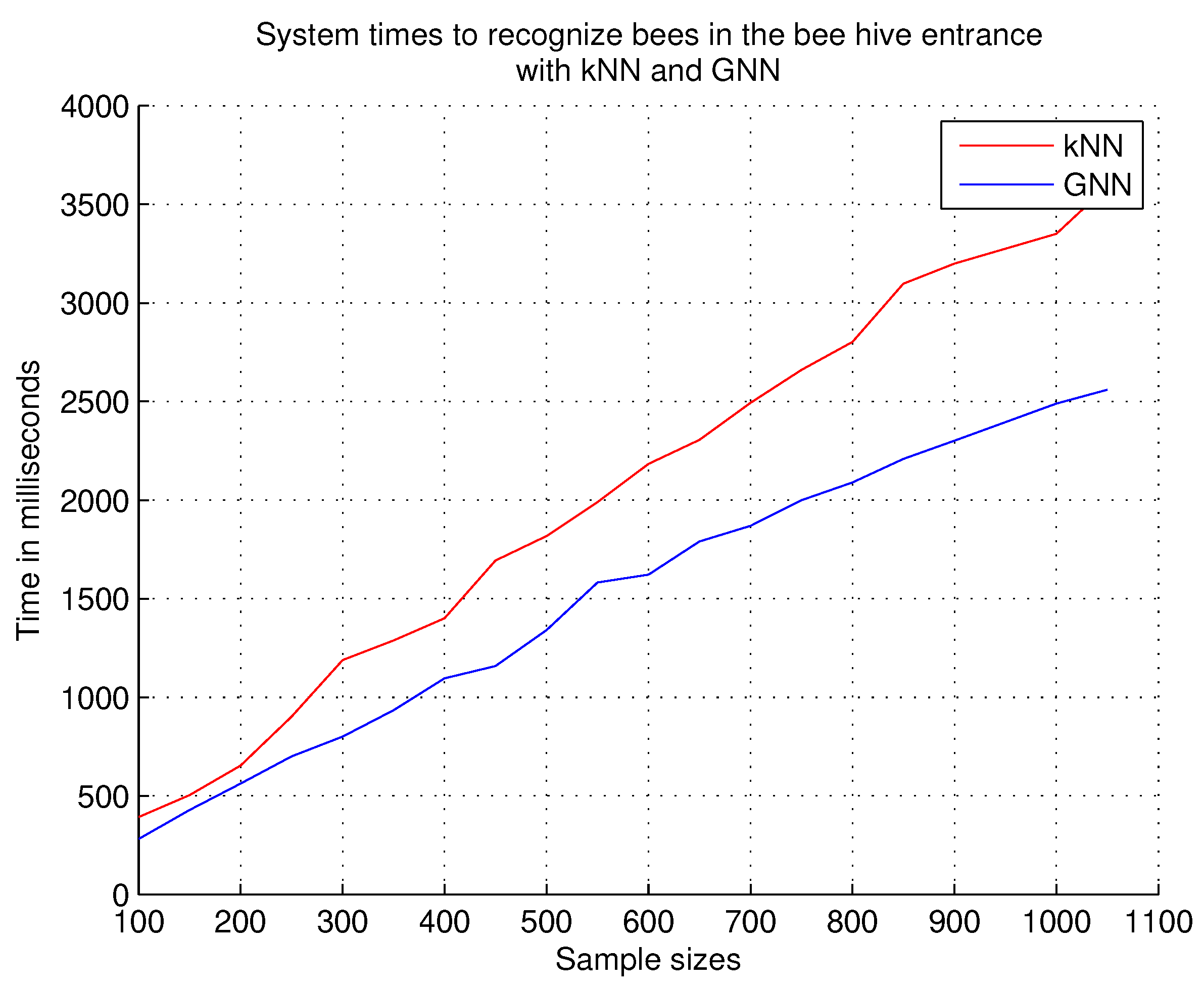

Section 7.2.8—System times to recognize bees in the beehive entrance.

- (i)

Experiments (a), (b), and (c) are performed with datasets containing healthy bees and bees infected with the Varroa mite; recognition accuracy is evaluated with the kNN technique and with the GNN technique, and the results of both techniques are compared.

Experiments (d), (e), and (f) are performed with bees infected with the Varroa mite; the accuracy of the two techniques, kNN and GNN, is evaluated and compared.

Once accuracy has been evaluated for both techniques, we evaluate recognition speed with experiments (g) and (h); i.e., we want to know which technique offers the best times for recognizing the object in the image. The open research question is whether a single technique can offer both speed and accuracy in a real-time recognition system.

Finally, in experiment (i), we evaluated the recognition of bees in different positions (see the images in Table 7); the parameter evaluated in this experiment is recognition accuracy.

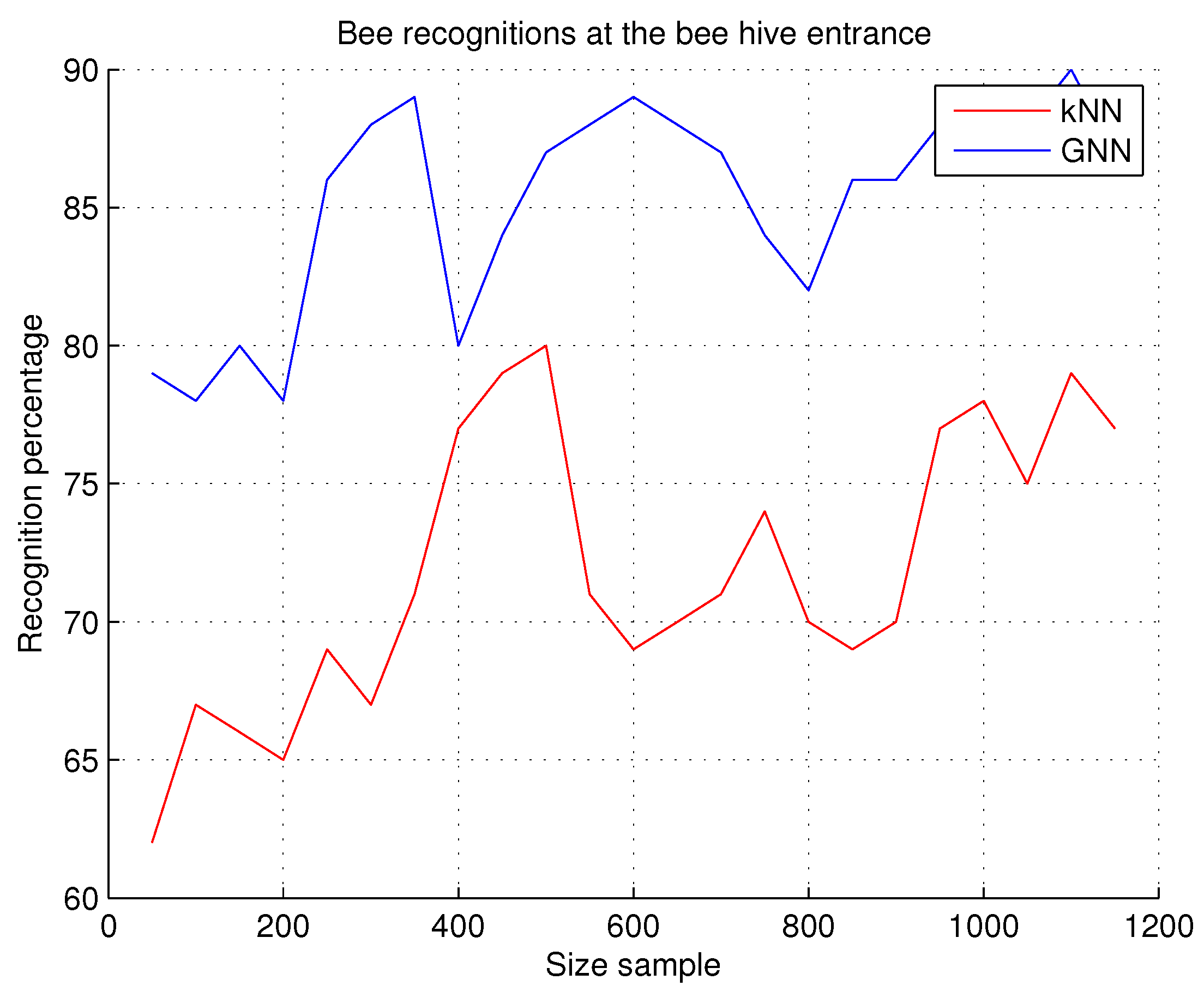

7.2.1. Bee Recognition at the Beehive Entrance

This experiment measures the accuracy with which the software system recognizes bees at the beehive entrance; a result is considered accurate when the system reports a bee at the entrance and the researcher confirms it. The experiment is performed every time an image is transmitted from the apiary to the data-processing center (DPC), because the system must verify whether the object in the image is a bee: other insects, such as wasps, ants, or beetles, may also appear in the image (see the table of images of intruders that may appear at the hive entrance). The recognition percentage is computed over incrementally growing sets of 50 images, by dividing the number of times the system accurately recognized the bee at the beehive entrance by the number of verified images. Each set includes the previously verified images: the first 50 images are taken and the recognition percentage is obtained; then 50 more images are added to those first 50 and the percentage is computed again, and so on.
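The incremental procedure just described can be sketched as follows, assuming the researcher's verification is stored as one boolean per image, in acquisition order (True when the system correctly recognized the bee). The function name `cumulative_recognition` and the example log are hypothetical.

```python
from typing import List, Sequence


def cumulative_recognition(verified: Sequence[bool], step: int = 50) -> List[float]:
    """Recognition percentage over the first step, 2*step, 3*step, ... images.

    verified[i] is True when the researcher confirms that the system correctly
    recognized the bee in image i. A trailing partial set is ignored.
    """
    percentages = []
    for end in range(step, len(verified) + 1, step):
        hits = sum(verified[:end])  # True counts as 1, False as 0
        percentages.append(100.0 * hits / end)
    return percentages


if __name__ == "__main__":
    # Hypothetical log: 45 of the first 50 and 48 of the next 50 images correct.
    log = [True] * 45 + [False] * 5 + [True] * 48 + [False] * 2
    print(cumulative_recognition(log))  # [90.0, 93.0]
```

Because every set contains all earlier images, each new percentage is a running average, so later points on the curve change more slowly than early ones.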

Observations. GNN provides higher recognition rates as the sample size increases. As shown in Figure 9, its recognition rates reach almost 90%, but its processing time is higher. The image transmission time between the apiary and the data-processing center is 1 min, which gives the system time to respond; however, if 100 images are processed per minute, the response time per image is not acceptable. With the kNN technique, the recognition rates are lower, but the recognition speed is acceptable. Weighing precision against speed, GNN gives better recognition results, but at the cost of high processing times, as verified in the experiment in Section 7.2.7, explained below.
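The real-time constraint in these observations reduces to simple arithmetic. Assuming the 1 min transmission interval is the window available to process a batch, 100 images per minute leave 60,000 ms / 100 = 600 ms per image. The helper names below are hypothetical, and the mean recognition times in the usage lines are illustrative values, not measurements from this work.

```python
def per_image_budget_ms(images: int, window_s: float = 60.0) -> float:
    """Milliseconds available per image if `images` must be processed in `window_s`."""
    if images <= 0:
        raise ValueError("images must be positive")
    return window_s * 1000.0 / images


def keeps_up(mean_time_ms: float, images: int, window_s: float = 60.0) -> bool:
    """True if the mean per-image recognition time fits within the budget."""
    return mean_time_ms <= per_image_budget_ms(images, window_s)


if __name__ == "__main__":
    print(per_image_budget_ms(100))  # 600.0
    # Hypothetical mean recognition times, for illustration only.
    print(keeps_up(450.0, 100), keeps_up(750.0, 100))  # True False
```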

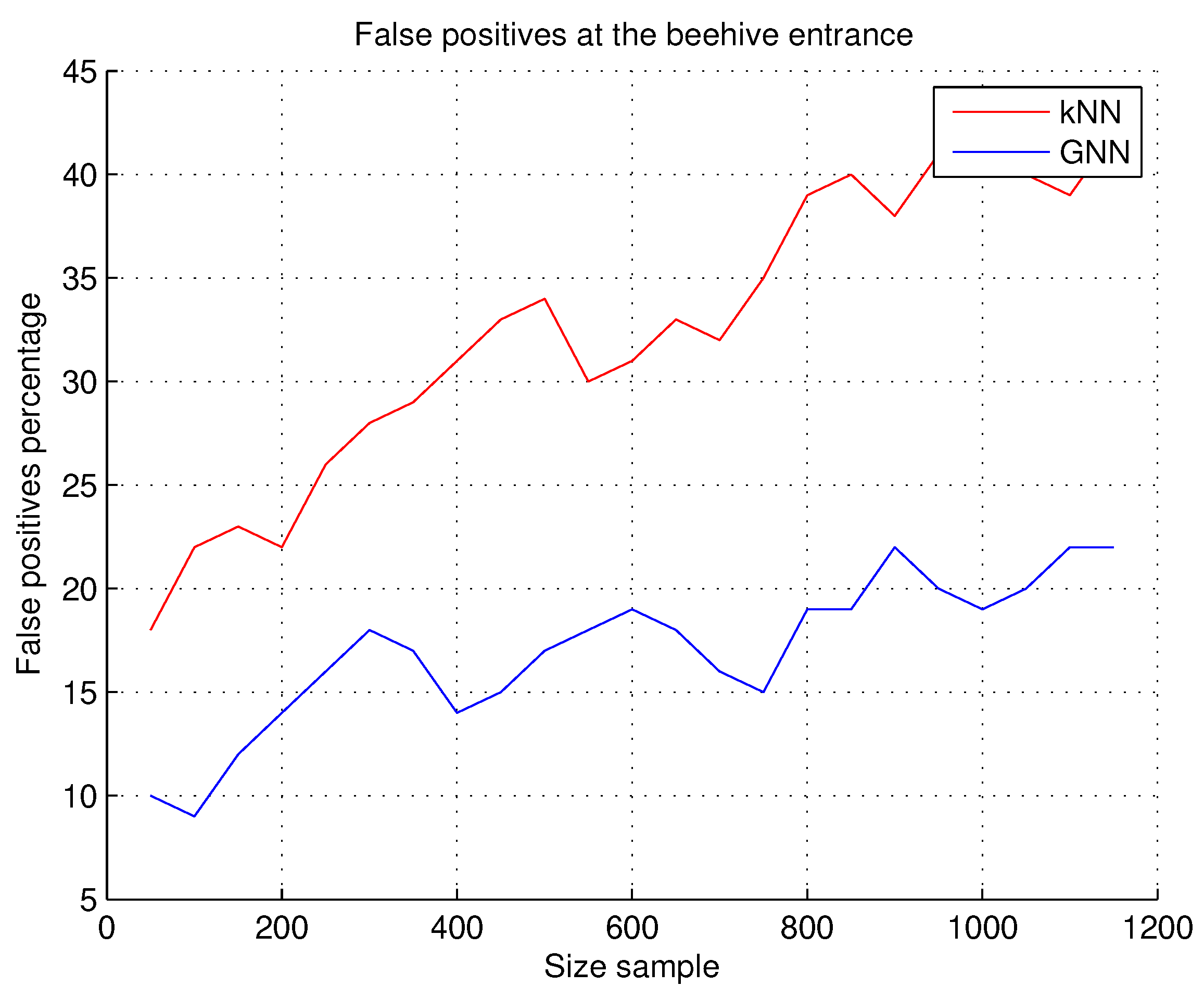

7.2.2. False Positives at the Beehive Entrance

A false positive occurs when there is no bee in the image, but the system detects one, i.e., it recognizes a bee that does not exist in the image. This experiment is necessary because the images are acquired in real environments, where noise is always present; the shapes generated by the segmentation algorithms can resemble the shapes of bees and generate false positives; see Table 7.

Observations. Figure 10 shows a lower percentage of false positives with the GNN technique than with the kNN technique. During the experiments, GNN performed better on images that do not contain bees; therefore, its recognition accuracy is considered better.

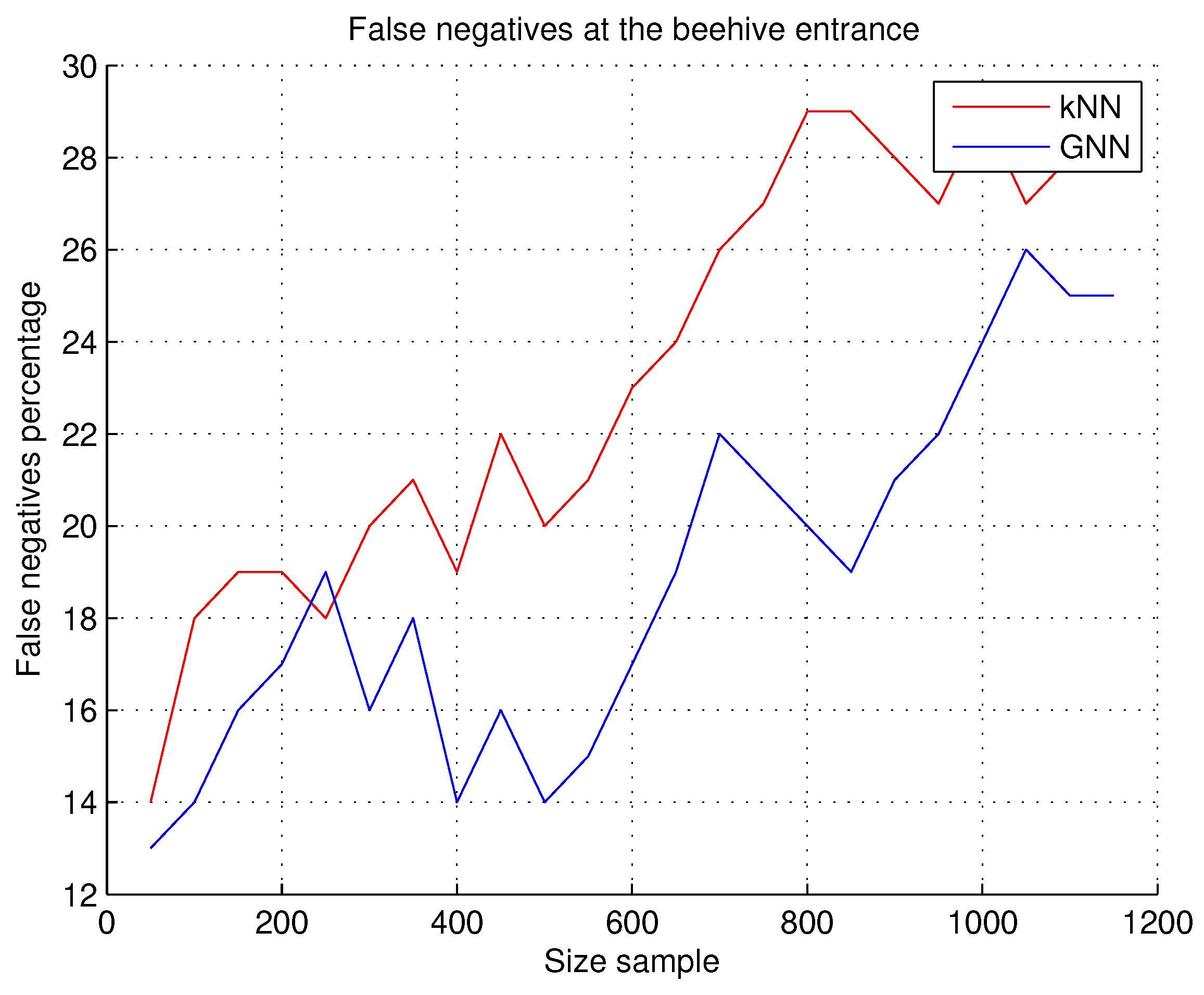

7.2.3. False Negatives at the Beehive Entrance

A false negative occurs when there is a bee in the image, but the system does not detect it, i.e., it fails to recognize a bee that does exist in the image. Since the project uses images of real environments, bees may appear in different positions at the hive entrance, the images may contain incomplete bees, and bees may appear at different sizes depending on the position of the camera on the hive; for reference, Table 7 contains images of bees in positions that are considered complex for recognition.

Observations. The results show fewer false negatives with the GNN technique, as can be seen in Figure 11, although the system response times remain longer with GNN. Lighting during image acquisition is an important factor: the system gives better results on cloudy days because the shadow of the hive is not projected onto the hive entrance. No experiments of any kind were carried out on rainy days.

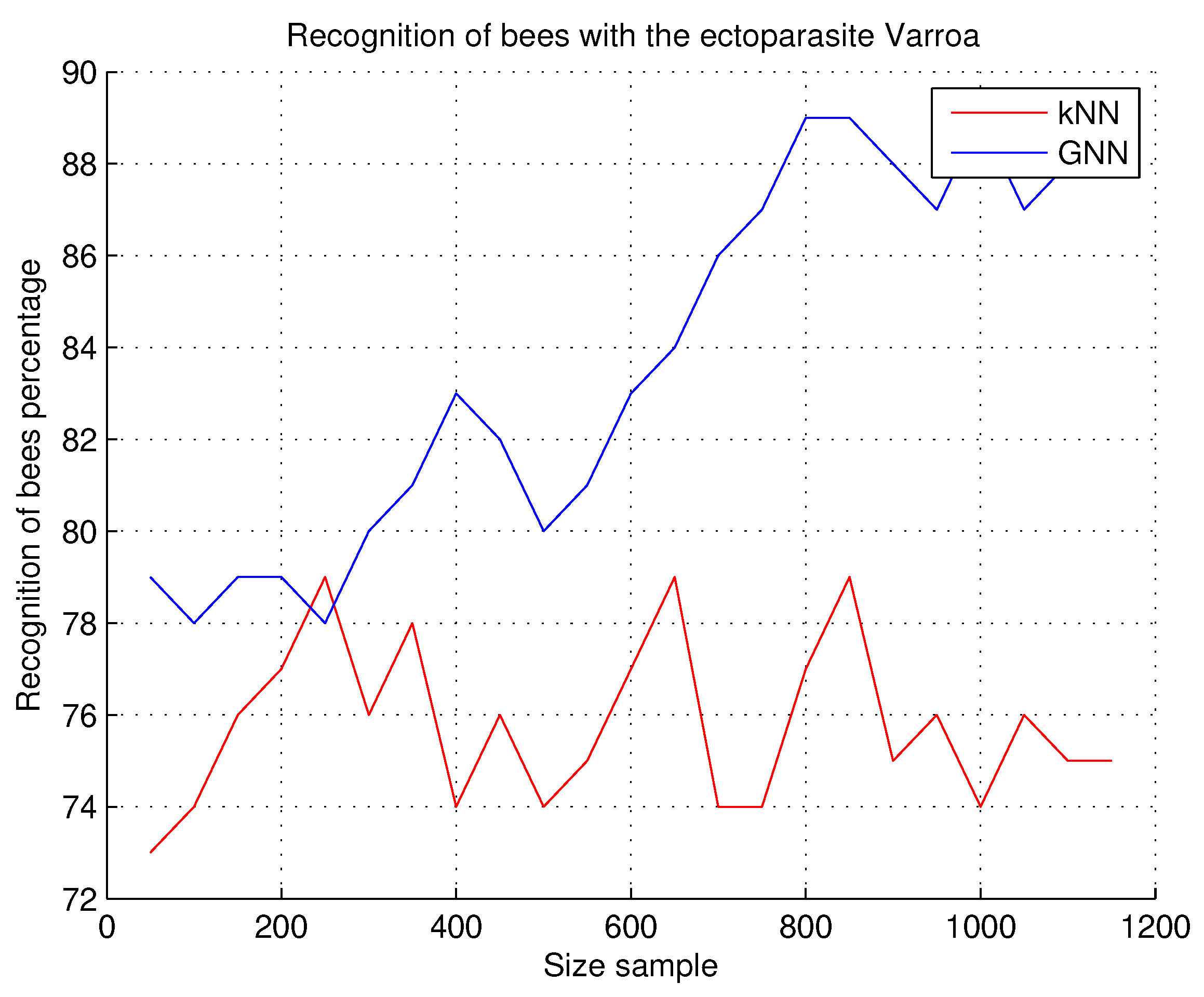

7.2.4. Recognition of Bees with the Ectoparasite Varroa

This experiment is the first of three experiments whose objective is to detect the presence of the ectoparasitic Varroa mite after the bee has been recognized at the hive entrance. The results of this experiment do not consider false positives or false negatives, only the output of the system, i.e., whether the bee recognized in the image is infected with the Varroa mite or healthy.

Observations. In this research work, we seek precision both in recognizing the honeybee at the hive entrance and in detecting the Varroa parasite on the recognized bee. Figure 12 shows the results of this experiment; GNN achieved better precision, but not very different from that of kNN. kNN divides the feature space into regions according to the nearest training samples and can be effective when the feature vectors are well defined. For this study, we consider that both techniques work correctly in real environments, with very acceptable results.

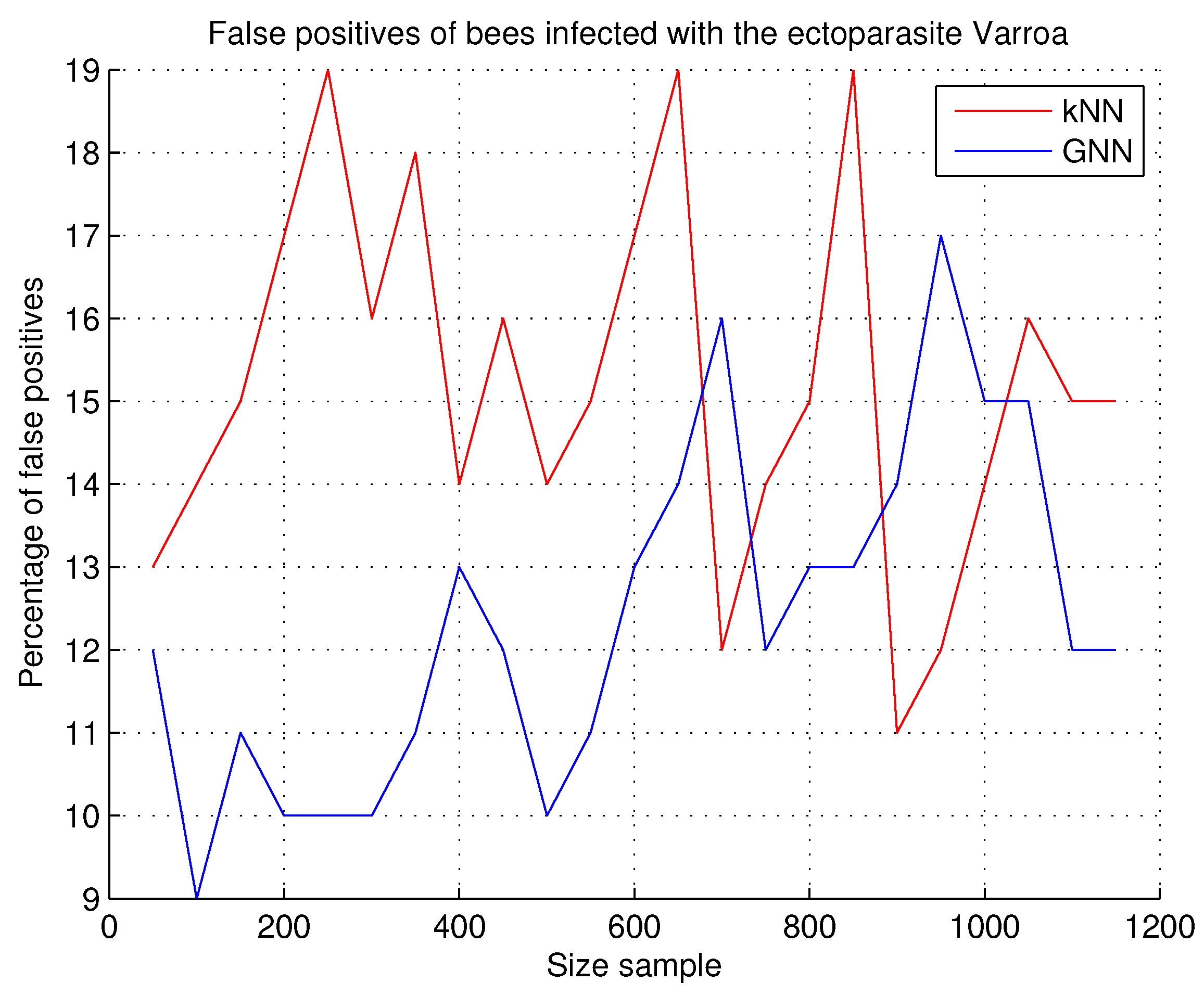

7.2.5. False Positives in Bees Infected with the Varroa Ectoparasite

A false positive occurs when no bee in the image is infected with the Varroa parasite, but the system reports an infected bee, i.e., it issues a recognition of an infected bee that does not exist. This experiment was carried out with the support of the beekeeper as follows: each time the system reports a bee with a Varroa mite, the response and the image are sent to the researcher and the beekeeper, who verify the result; a false positive is recorded when the response is incorrect.

Observations. The results of the experiment are shown in Figure 13. With small samples, the results of the two techniques differ considerably, but as the number of images increases they tend to converge; as the figure shows, kNN gives better results when the sample size is between 800 and 1000 bees. We inspected the outputs of both techniques to find the causes of these results and observed that the samples did not have enough variety to achieve the expected results. It was therefore necessary to enlarge the dataset, so that both techniques could be tested with more images and evaluated properly.
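Because only positive Varroa alerts are sent for manual verification in this protocol, one natural way to express the result is the share of alerts rejected by the researcher and beekeeper (equivalently, 100% minus the precision of the alerts). Whether the percentages in Figure 13 are normalized this way is not stated, so this is an assumption; the function name `false_alert_percentage` and the outcomes are hypothetical.

```python
from typing import Sequence


def false_alert_percentage(confirmed: Sequence[bool]) -> float:
    """Percentage of positive Varroa alerts rejected on manual verification.

    confirmed[i] is True when the researcher and the beekeeper agree that the
    bee in the i-th alert really carries a Varroa mite.
    """
    if not confirmed:
        raise ValueError("no alerts to evaluate")
    rejected = sum(1 for ok in confirmed if not ok)
    return 100.0 * rejected / len(confirmed)


if __name__ == "__main__":
    # Hypothetical verification outcomes for eight alerts.
    outcomes = [True, True, False, True, True, True, False, True]
    print(false_alert_percentage(outcomes))  # 25.0
```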

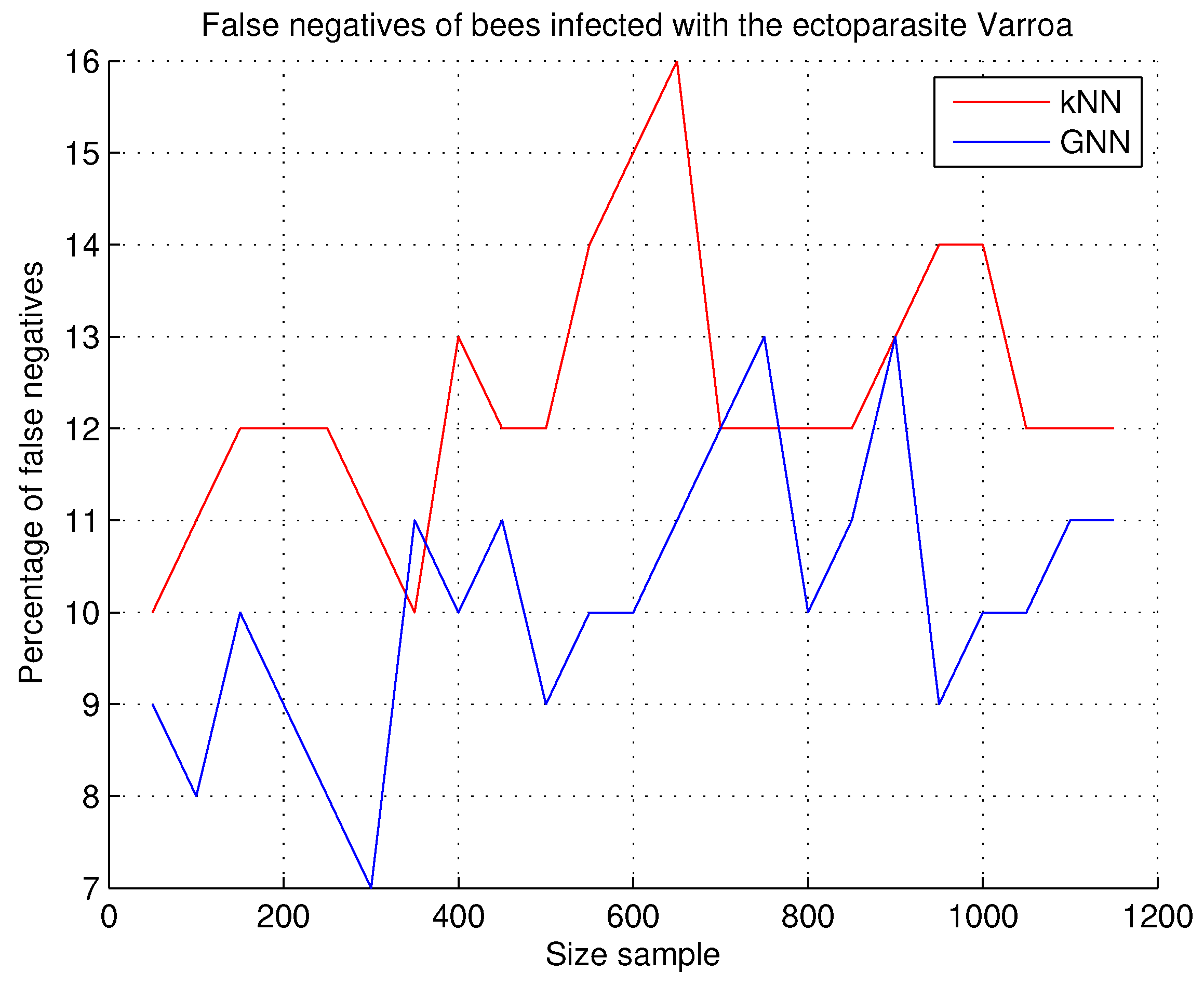

7.2.6. Percentages of False Negatives in Bees Infected with the Varroa Mite

This experiment determines the percentage of images that the system rejects, that is, does not recognize as showing a bee infected with the Varroa mite, even though the image does contain the mite, as verified by the researcher and the beekeeper (i.e., the presence of the parasite on the bee is certain). For this experiment, the sets of images of bees carrying the Varroa mite are used; some examples from this dataset appear in Table 6, and the resulting percentages are shown in Figure 14.

Observations. With the developed software system, we consider the false negatives obtained with both techniques to be within an acceptable range for this research. As shown in Figure 14, the kNN technique gave better results in this experiment, with fewer false negatives; that is, kNN classification was more accurate here.

7.2.7. Recognition Time of Bees at the Beehive Entrance and Detection of the Varroa Mite

This experiment measures the time taken by the software system to recognize bees at the hive entrance and detect the Varroa mite; the experiment determines the amount of time needed by the system to generate the recognition result. False negatives and false positives are not considered in this experiment.

Observations. As shown in Figure 15, the recognition time of bees at the hive entrance and the Varroa detection time are lower with the kNN technique, but when false positives and false negatives are considered, the best results are obtained with GNN. This indicates that kNN classifies faster but less accurately, whereas GNN classifies more precisely but consumes the most time per sample.

7.2.8. System Times to Recognize Bees at the Beehive Entrance

The objective of this experiment is to measure the time the system takes to recognize the bee at the hive entrance for each sample size presented to it; the sample size starts at 100 images and increases incrementally up to 1100 images. Other research works state that techniques based on graph structures are not suitable for real-time recognition because of the time they consume; with this experiment, we demonstrate the opposite. False negatives and false positives are not considered, i.e., the experiment measures the complete recognition time of the bee at the hive entrance.
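A timing protocol of this kind can be sketched with Python's monotonic `time.perf_counter` clock, measuring the total wall-clock time to classify the first n images for n = 100, 200, ..., 1100. The harness below is a hypothetical illustration: `time_by_sample_size` is our own name, and the placeholder classifier stands in for the kNN or GNN recognizer, which is not reproduced here.

```python
import time
from typing import Callable, Dict, Sequence, TypeVar

T = TypeVar("T")


def time_by_sample_size(classify: Callable[[T], object], images: Sequence[T],
                        sizes: Sequence[int]) -> Dict[int, float]:
    """Total wall-clock time (ms) to classify the first n images, for each n in sizes."""
    if max(sizes) > len(images):
        raise ValueError("not enough images for the largest sample size")
    timings: Dict[int, float] = {}
    for n in sizes:
        start = time.perf_counter()
        for image in images[:n]:
            classify(image)
        timings[n] = (time.perf_counter() - start) * 1000.0
    return timings


def placeholder_classifier(image: int) -> bool:
    """Stand-in for a real recognizer (not kNN or GNN)."""
    return image % 2 == 0


if __name__ == "__main__":
    sizes = list(range(100, 1101, 100))  # 100, 200, ..., 1100 images
    fake_images = list(range(1100))      # stand-ins for real images
    for n, ms in time_by_sample_size(placeholder_classifier, fake_images, sizes).items():
        print(f"{n:5d} images: {ms:8.3f} ms")
```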

Observations. Figure 16 shows the results; the shortest response times are obtained with the GNN technique rather than the kNN technique. All the images of the dataset were used in this experiment.

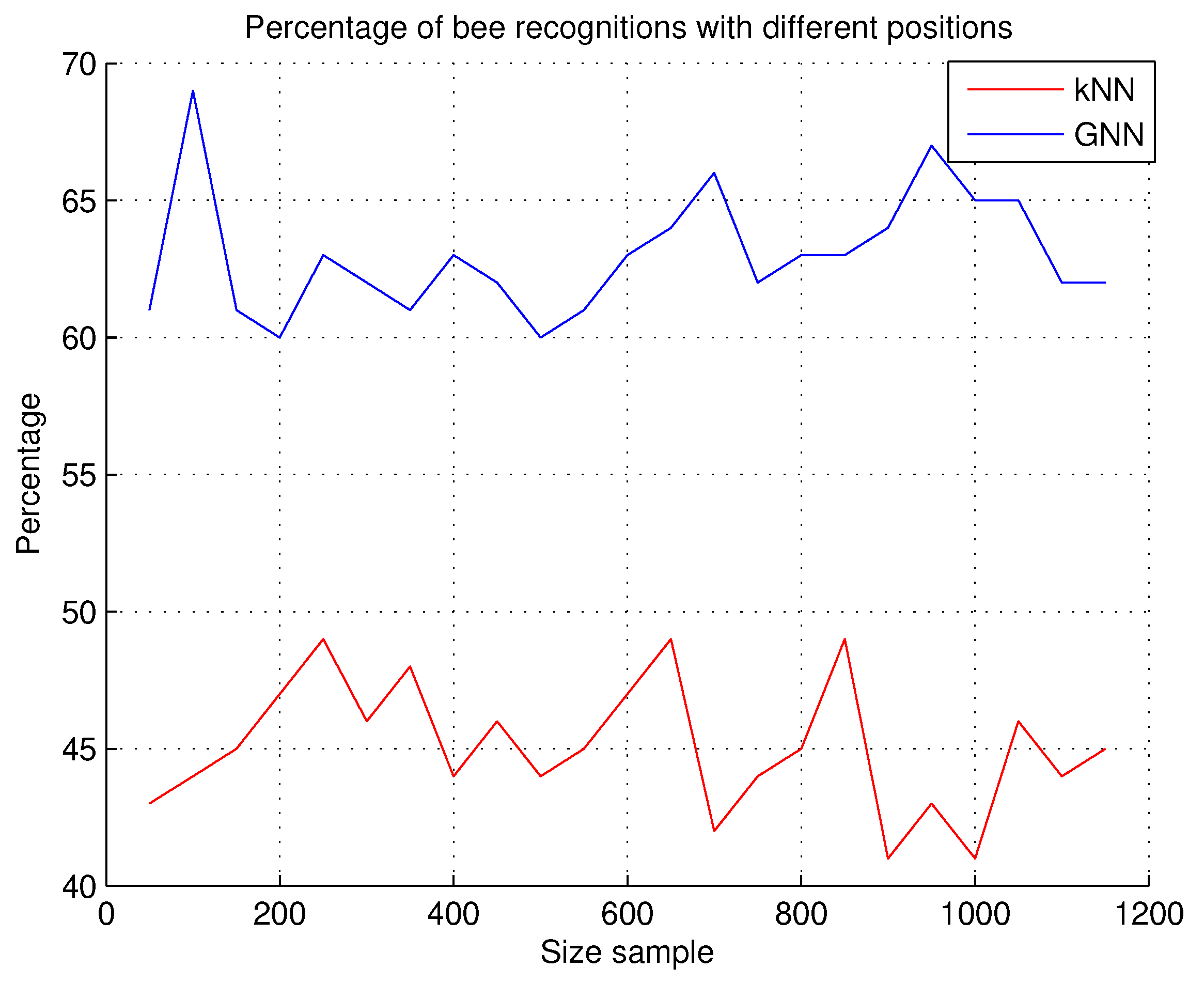

7.2.9. Recognition of Bees with Different Positions

A determining factor for the system to recognize the bee and locate the Varroa mite is the position of the bee at the hive entrance. The bee can adopt many different positions there, as shown in some examples from the dataset in Table 7, and the software system must handle all of them; in this experiment, we therefore used the kNN and GNN techniques to evaluate the recognition percentages of bees in different positions.
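A per-position breakdown of this kind can be sketched as follows, assuming each verified image carries a position label and a flag indicating whether the researcher confirmed the recognition. The label names and the function `accuracy_by_position` are hypothetical; the dataset's actual position categories may differ.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def accuracy_by_position(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Recognition percentage per position label.

    Each record is (position_label, recognized), where recognized is True when
    the researcher confirms that the system recognized the bee.
    """
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for position, recognized in records:
        totals[position] += 1
        hits[position] += int(recognized)
    return {pos: 100.0 * hits[pos] / totals[pos] for pos in totals}


if __name__ == "__main__":
    # Hypothetical position labels, for illustration only.
    log = [("frontal", True), ("frontal", True), ("lateral", True), ("lateral", False)]
    print(accuracy_by_position(log))  # {'frontal': 100.0, 'lateral': 50.0}
```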

Observations. The results are shown in Figure 17; as the plotted percentages show, recognition accuracy is higher with the GNN technique and lower with the kNN technique.

11. Discussion

This section discusses the feasibility of the project and our contributions to the state of the art in Varroa detection in honeybees, the criteria used to evaluate the proposed methodology, and the practical limitations we faced in developing this research work applied to beekeeping.

We pose the following question: how do our experimental results demonstrate the feasibility of using digital image processing for bee recognition in real apiary environments? The results obtained with the applied techniques and the method proposed in this research work demonstrate the feasibility of developing an automatic recognition system in real honeybee environments; based on the experiments proposed and discussed in this work, we consider the results obtained with both techniques (even those below 80%) to be acceptable for real environments.

Compared with the results reported in other works in the literature, the results presented in this work are acceptable and demonstrate the feasibility of developing the automatic recognition system and applying it in real environments; the criteria we used to establish that our research is feasible are listed below.

The development of a real-time system. We do not use images acquired in controlled environments, as many other works do to report very high recognition results; instead, we extract the visual information from the apiary under different weather conditions, excluding only rainy days to protect our image- and video-capture equipment.

We present a noninvasive system in the hive. The main focus of our system was to develop noninvasive technology for the hive, to avoid stressing the bees and to avoid sacrificing any insect in the colony.

We developed an economical and fast system with efficient devices. The beekeeping community is not willing to spend large amounts of money on new technology, which led us to develop a system with low-cost, accessible, and fast devices.

The software is our own creation, developed with freely available technologies such as the C language and OpenCV; it runs on free operating systems, such as the various Linux distributions, which allows the university's student community to use it as a source of examples.

The creation of four datasets that keep growing as new images are acquired from the test apiaries. With these datasets, we have trained the proposed neural network on a large number of possible occurrences of the Varroa mite, which allows us to inform the beekeeping community of possible cases of infection with the Varroa parasite.

The community of beekeepers in the region where the project is being carried out has requested a demonstration of the project's functionality because of the results published in different international scientific events and journals, such as [13,34].

In previous reviews of this work, we have been asked what the contributions are to the state of the art of the problem of Varroa mite detection in honeybees; we consider that this research contributes to two areas of applied research:

- (a)

The development of an automatic recognition system that includes advanced techniques of artificial intelligence and the Internet of Things, and

- (b)

experiments in uncontrolled natural environments of honeybees that allow the discovery and documentation of the factors that affect image acquisition in automatic recognition.

Both areas allow us to develop technology in a field that has not been documented in the literature in Mexico.

Based on the above, we consider our work to be innovative and a contribution to the care and preservation of honeybees in our country. No other work in this area has presented an innovation of this magnitude, providing both an application model and an academic model for university students in our country in the areas of agricultural biotechnology and information and communications technologies.