Cyberattack Resilience of Autonomous Vehicle Sensor Systems: Evaluating RGB vs. Dynamic Vision Sensors in CARLA

Abstract

1. Introduction

- We provide a systematic analysis of sensor vulnerabilities in autonomous vehicles, with particular focus on the emerging DVS technology and its unique security characteristics in research contexts.

- We implement and evaluate realistic attack scenarios in the CARLA simulator with quantified parameters derived from existing literature, demonstrating measurable impacts on autonomous agent behavior and perception accuracy.

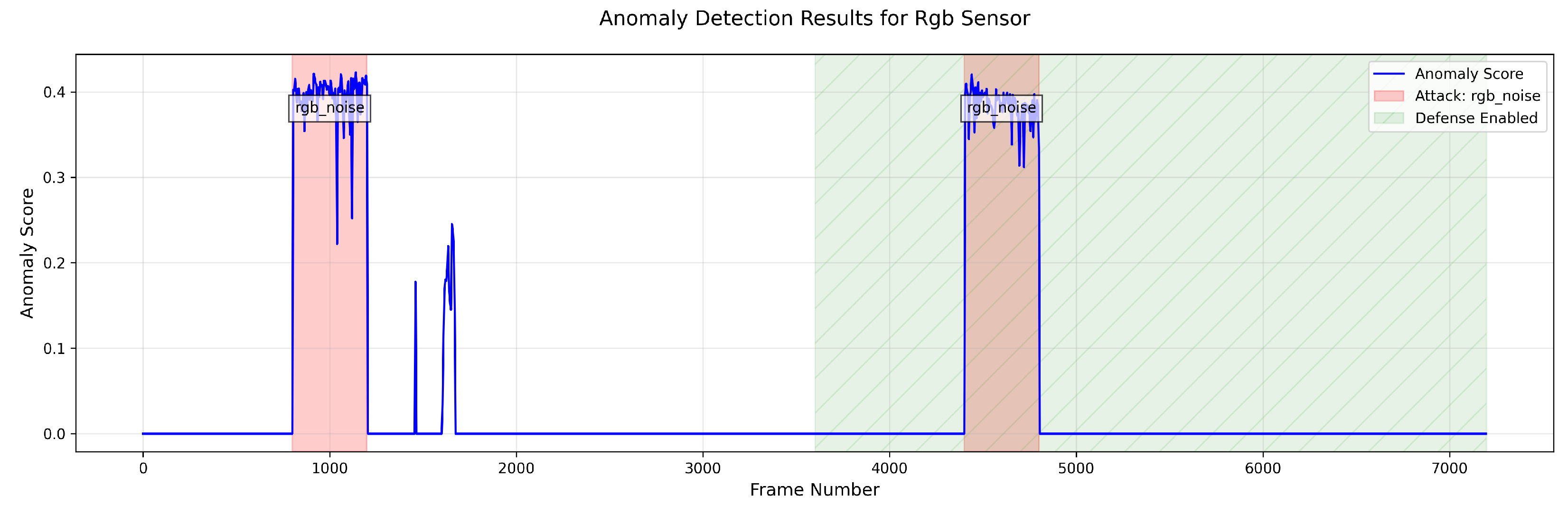

- We develop an anomaly detection system using the EfficientAD model from the anomalib library that achieves >95% detection accuracy with <5% false positives when identifying sophisticated attacks on both RGB and DVS camera sensors.

- We provide quantitative analysis of defense mechanism effectiveness, revealing that while GPS spoofing defenses achieve near-perfect mitigation, RGB and depth sensor defenses show limited effectiveness with 30–45% trajectory drift remaining.

- We propose a comprehensive multi-layered security framework that combines sensor-specific defenses, anomaly detection, and graceful degradation strategies for resilient autonomous operation.

2. Related Work

3. Materials and Methods

3.1. Experimental Framework

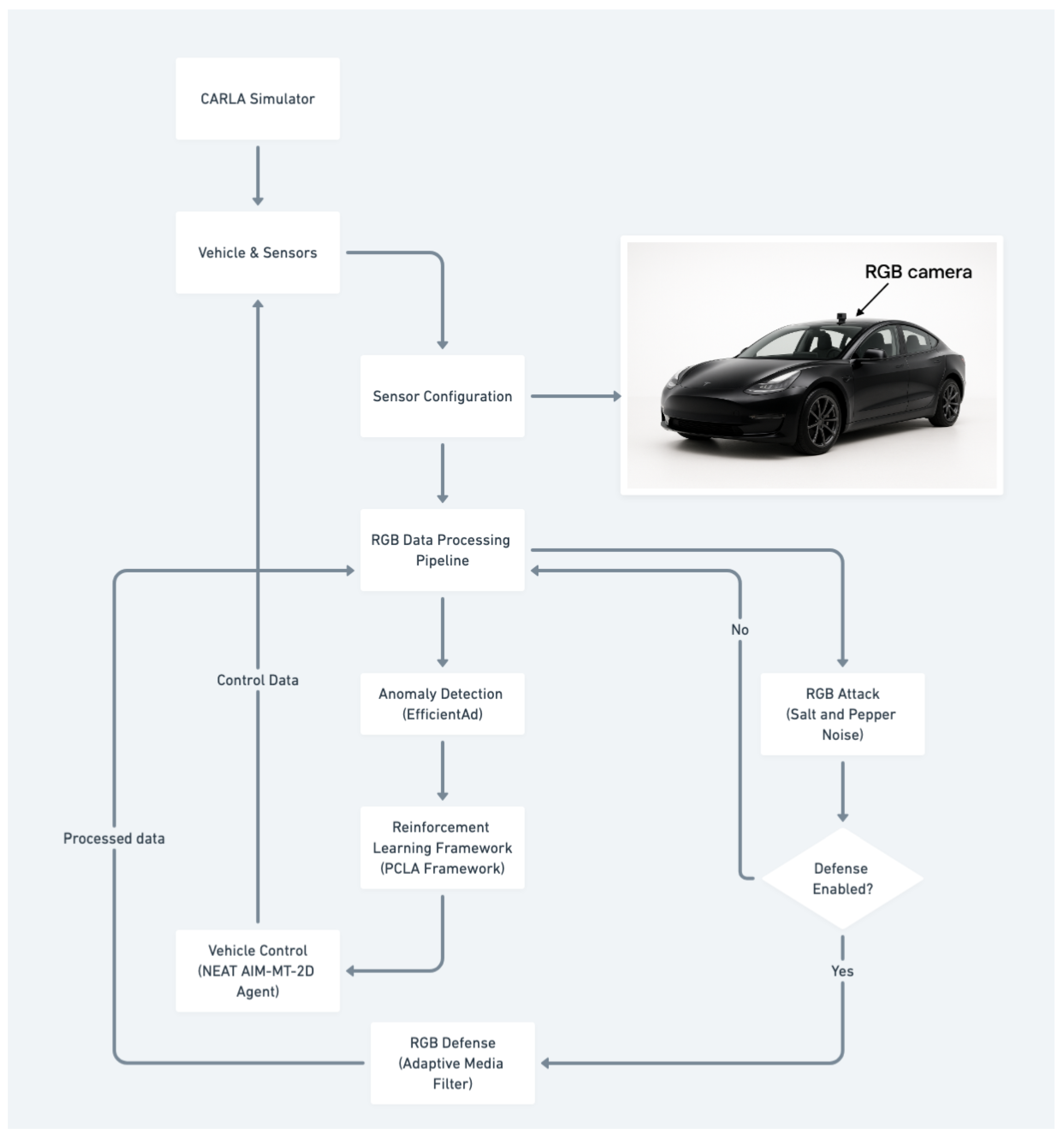

3.1.1. CARLA Simulator

3.1.2. PCLA Framework

- Ability to deploy multiple autonomous driving agents with different architectures and training paradigms

- Independence from the Leaderboard codebase, allowing compatibility with the latest CARLA version

- Support for multiple vehicles with different autonomous agents operating simultaneously

3.1.3. Autonomous Driving Agent

- NEAT AIM-MT-2D: A neural attention-based end-to-end autonomous driving agent that processes RGB images and depth information to predict vehicle controls directly. We used the NEAT variant that incorporates depth information (neat_aim2ddepth), which enhances the agent’s ability to perceive the 3D structure of the environment and improves its performance in complex driving scenarios.

3.2. Sensor Configuration

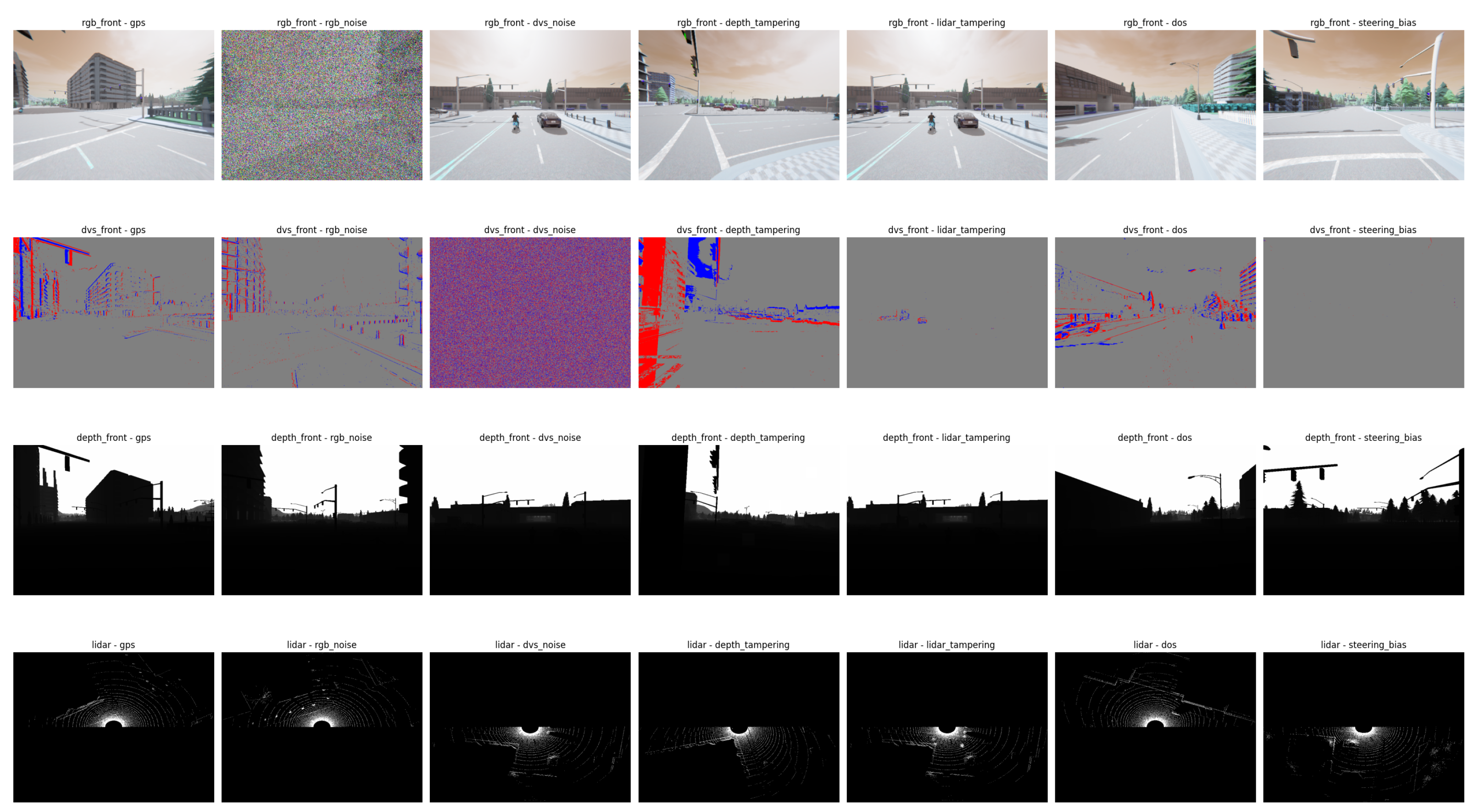

- RGB Cameras: Front-facing RGB camera with 800 × 600 resolution and a 100° field of view, mounted at position (1.3, 0.2, 2.3) relative to the vehicle center, providing visual input for the agent’s perception system.

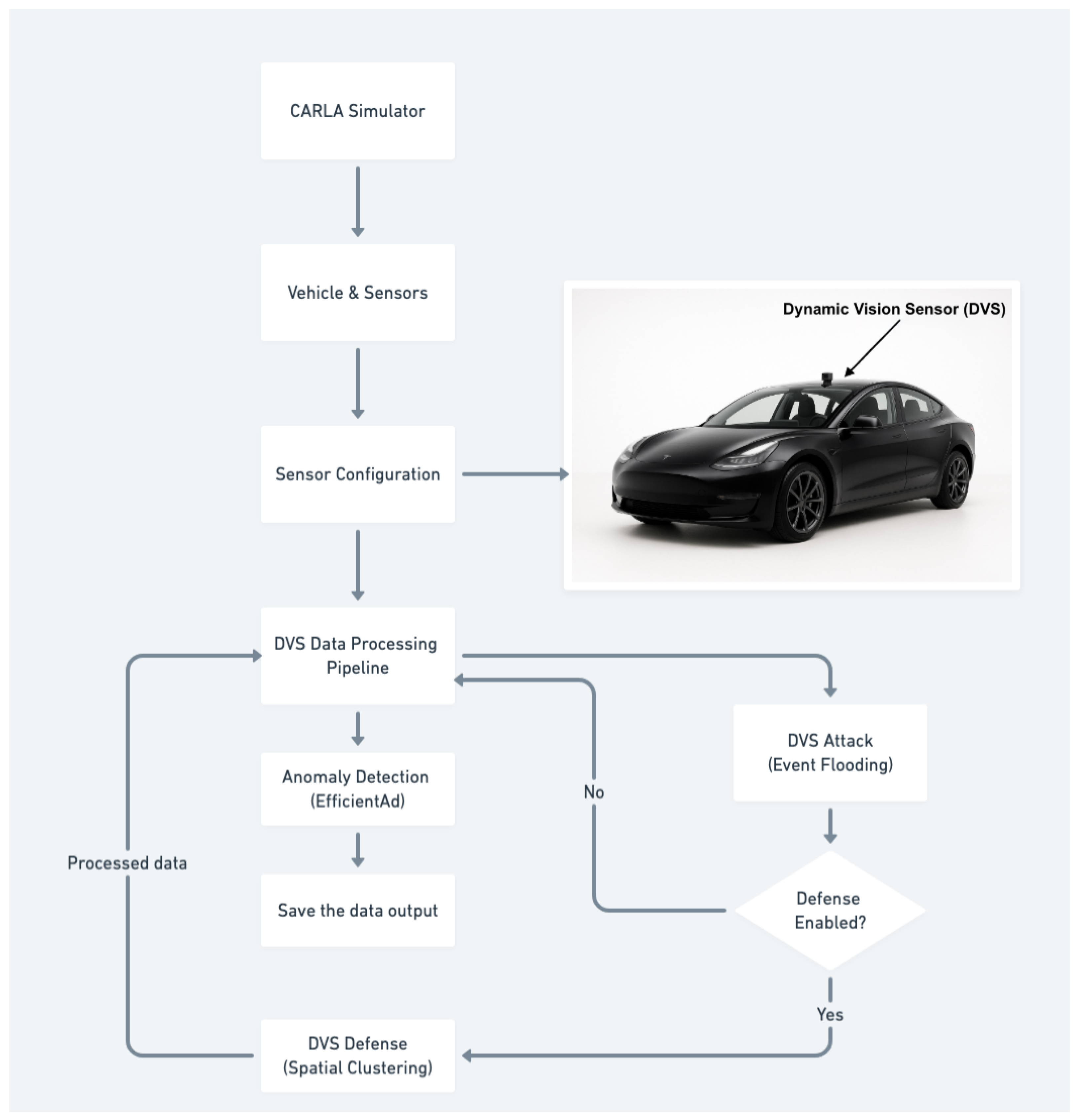

- Dynamic Vision Sensor (DVS): Event-based camera with 800 × 600 resolution and a 100° field of view, mounted at position (1.3, 0.0, 2.3), with positive and negative thresholds set to 0.3 to capture brightness changes in the scene with microsecond temporal resolution.

- Depth Camera: Depth-sensing camera with 800 × 600 resolution and a 100° field of view, aligned with the RGB camera position, providing per-pixel distance measurements for 3D scene understanding.

- LiDAR: A 64-channel LiDAR sensor with an 85 m range, 600,000 points per second, and a 10 Hz rotation frequency, mounted at position (0.0, 0.0, 2.5), providing detailed 3D point cloud data of the surrounding environment.

- GPS and IMU: For localization and vehicle state estimation, enabling the agent to maintain awareness of its position and orientation within the environment.

- Event-based data capture generates asynchronous streams of brightness change events rather than synchronized frame sequences;

- Event-to-image conversion transforms sparse temporal events into dense spatial representations for compatibility with existing vision processing frameworks;

- Specialized defense mechanisms employ spatial clustering algorithms to detect and filter noise events; and

- Importantly, DVS data streams are utilized primarily for research analysis and anomaly detection rather than direct integration into the PCLA (Pretrained CARLA Leaderboard Agent) and vehicle control model.
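The event-to-image conversion step can be illustrated with a minimal NumPy sketch. It assumes events arrive as parallel arrays of pixel coordinates and polarities; the function name `events_to_frame` and the channel convention (positive events in one channel, negative events in another) are illustrative choices, not necessarily the conversion used in this study.

```python
import numpy as np

def events_to_frame(xs, ys, pols, height=600, width=800):
    """Accumulate sparse DVS events into a dense 3-channel uint8 image.

    xs, ys : pixel coordinates of each event
    pols   : polarity of each event (True = brightness increase)
    """
    xs = np.asarray(xs, dtype=np.intp)
    ys = np.asarray(ys, dtype=np.intp)
    pols = np.asarray(pols, dtype=bool)

    frame = np.zeros((height, width, 3), dtype=np.uint8)
    # Assumed convention: positive events -> channel 2, negative -> channel 0.
    frame[ys[pols], xs[pols], 2] = 255
    frame[ys[~pols], xs[~pols], 0] = 255
    return frame
```

Because several events at the same pixel collapse into one marked pixel, this representation discards event counts and timing; a count- or time-surface-based encoding would retain more of that information.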

3.3. Attack Implementation

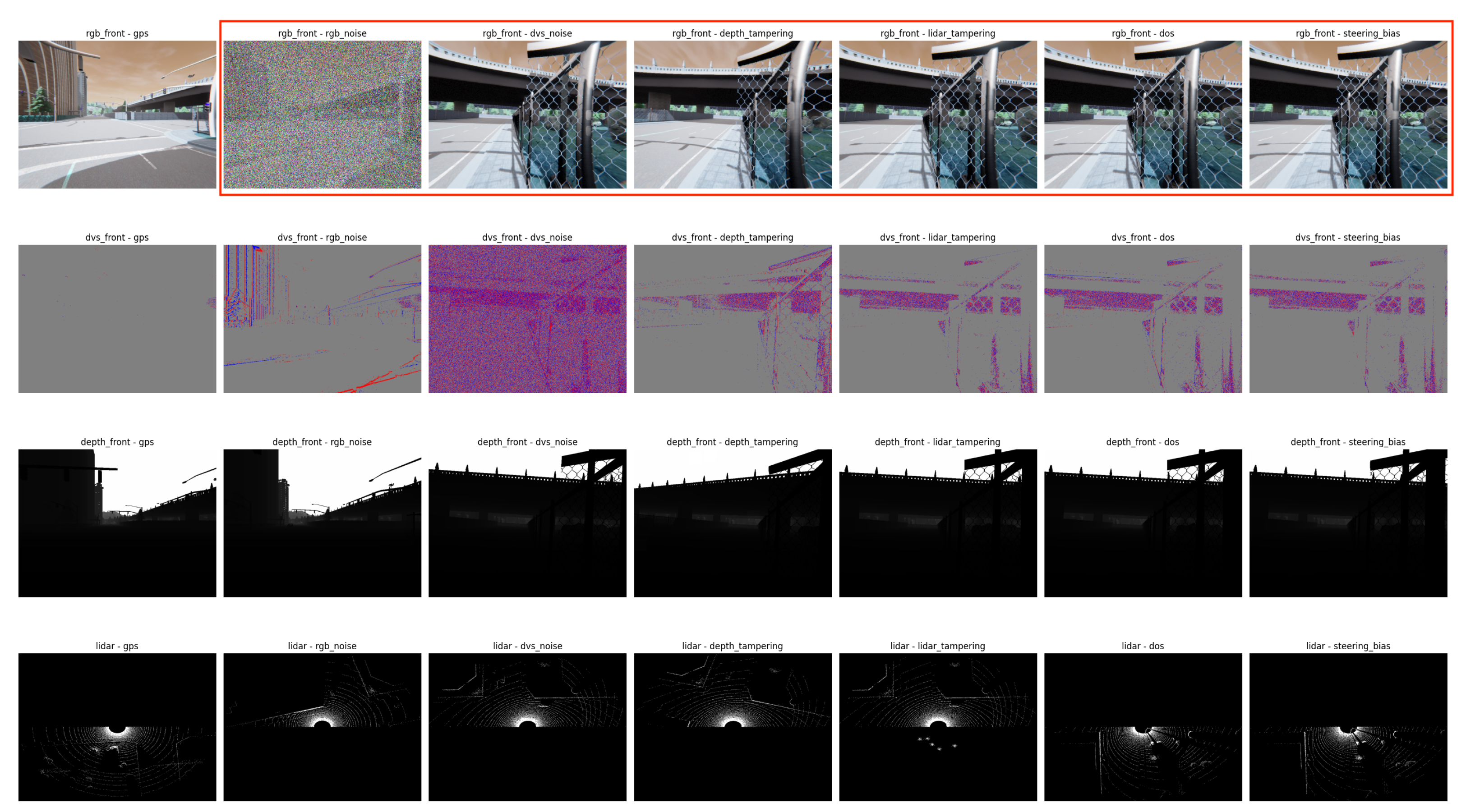

- RGB Camera Attacks, Salt-and-Pepper Noise (80% intensity): We implemented a high-density salt-and-pepper noise attack where random pixels were replaced with either black (0) or white (255) values with equal probability [37,54]. The 80% intensity parameter represents an extreme noise level commonly used in image denoising research to test algorithm robustness under severe corruption conditions. This attack simulates severe sensor interference from electromagnetic attacks or physical sensor tampering [15,27].

- Dynamic Vision Sensor Attacks, Event Flooding (60% spurious events): We implemented an event flooding attack that injected spurious events with random positions and polarities into the DVS output stream [30,33]. The 60% flooding rate was selected to represent a significant degradation scenario that would challenge event-based processing algorithms while remaining within realistic attack capabilities [28,29,34]. This attack exploits the asynchronous nature of DVS devices and could be implemented through electromagnetic interference or direct sensor manipulation [35].

- Depth Camera Attacks, Depth Map Tampering (5 patches, 50 × 50 pixels): We implemented a patch-based depth tampering attack that modified depth values in random regions of the depth map following established adversarial patch methodologies [38,55]. The attack parameters (5 patches of 50 × 50 pixels with depth offsets of 5–15 units) were selected to balance attack effectiveness with practical implementation constraints [22,56]. This creates false distance measurements that could cause collision avoidance systems to malfunction [9].

- LiDAR Attacks, Phantom Object Injection (5 clusters, 100–500 points each): We implemented a phantom object injection attack that added false point clusters to the LiDAR point cloud at positions 5–15 m ahead of the vehicle [9,22]. The attack parameters (5 clusters with 100–500 points each) were designed to create detectable objects while remaining within the spoofing capabilities demonstrated in recent LiDAR security research [23,27,57]. This attack could be implemented using laser devices or reflective materials [15,22].

- GPS Spoofing Attacks, Position Manipulation (±50 m deviation): We implemented a GPS spoofing attack that created discrepancies between actual and perceived vehicle positions [24,25]. The ±50 m deviation threshold represents a significant but realistic spoofing scenario that would noticeably impact navigation while remaining within documented GPS attack capabilities [9,58,59]. The attack maintains separate records of actual and spoofed positions to enable quantitative analysis of navigation impact.

- Denial of Service (DoS) Attacks, Sensor Rate Limiting (50% packet loss): We implemented a DoS attack that restricted sensor update frequency to simulate network-based attacks on sensor communication channels [11]. The 50% packet loss rate represents severe network conditions that significantly impact autonomous vehicle performance, as demonstrated in recent automotive cybersecurity research [16]. This attack simulates jamming or network flooding scenarios [17,18].

- Steering Bias Attacks, Control Manipulation (±15° systematic offset): We implemented a steering bias attack that introduced systematic errors into steering commands [11,16]. The ±15° bias parameter was selected to represent a significant control deviation that would be detectable by monitoring systems while demonstrating clear attack impact [17]. This attack simulates compromised control systems or actuator manipulation [18].
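Two of the attacks listed above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in the experiments: the function names are hypothetical, the 80% figure is interpreted as the fraction of corrupted pixels, and the depth offsets are assumed to be additive and positive.

```python
import numpy as np

def salt_and_pepper(image, density=0.8, rng=None):
    """Replace a `density` fraction of pixels with 0 or 255 (equal probability)."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = image.shape[:2]
    corrupt = rng.random((h, w)) < density   # which pixels are attacked
    salt = rng.random((h, w)) < 0.5          # white (salt) vs. black (pepper)
    noisy = image.copy()
    noisy[corrupt & salt] = 255
    noisy[corrupt & ~salt] = 0
    return noisy

def tamper_depth(depth, n_patches=5, size=50, offset_range=(5.0, 15.0), rng=None):
    """Add a random offset to `n_patches` square regions of a 2-D depth map."""
    rng = np.random.default_rng() if rng is None else rng
    h, w = depth.shape
    tampered = depth.astype(np.float32, copy=True)
    for _ in range(n_patches):
        top = rng.integers(0, h - size + 1)
        left = rng.integers(0, w - size + 1)
        # Assumption: offsets are added (objects appear farther away).
        tampered[top:top + size, left:left + size] += rng.uniform(*offset_range)
    return tampered
```

Passing a seeded `np.random.default_rng(seed)` makes an attack run repeatable, which helps when comparing the same episode with and without defenses.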

3.4. Defense Integration

3.4.1. Traditional Defense Mechanisms

- RGB Camera Defenses: We implemented a decision-based adaptive median filter that dynamically adjusted its kernel size (3 × 3 to 7 × 7) based on the detected noise level. The filter specifically targets salt-and-pepper noise by

  - Identifying potential noise pixels (values of 0 or 255),

  - Growing the filter window until valid median values are found, and

  - Preserving edge details by only replacing confirmed noise pixels.

- DVS Defense: We introduced a system to track event counts, setting a threshold of 5000 events per frame to identify flooding attacks, along with a dual-phase defense strategy composed of

  - Spatial clustering analysis using KD-tree data structures to identify dense noise patterns and

  - Morphological operations (dilation) for standard noise smoothing when clustering is not detected.

- Depth Camera Defense: A multi-stage validation approach including the following:

  - Range clipping to valid depth values (0–100 m),

  - Gradient-based tampering detection using Sobel operators, and

  - Adaptive Gaussian smoothing with intensity based on detected gradient magnitude.

- LiDAR Defense: A point cloud filtering pipeline with

  - Distance-based filtering (maximum range 50 m),

  - Density-based clustering using KD-tree analysis to detect phantom objects, and

  - Removal of points in clusters exceeding density thresholds.

- GPS Spoofing Defense: Route consistency validation and velocity checks:

  - Position validation against planned route (deviation threshold: 5 m),

  - Velocity consistency checks (maximum realistic speed: 20 m/s), and

  - Automatic reversion to true GPS coordinates when anomalies are detected.

- Steering Bias Defense: Statistical anomaly detection for control commands:

  - Maintains 50-tick steering command history,

  - Z-score analysis for outlier detection (threshold: |Z| > 3), and

  - Automatic correction to historical mean when a bias is detected.

- DoS Defense: Sensor update monitoring and rate limiting:

  - Timestamp tracking for all sensor updates,

  - Rate limiting enforcement (maximum 20 Hz), and

  - Buffering mechanisms for delayed sensor readings.
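As an illustration of the RGB defense described above, the following is a minimal sketch of a decision-based adaptive median filter for a single-channel image. It is not the implementation evaluated in this work: the function name is hypothetical, the loop is written for clarity rather than speed, and any pixel equal to 0 or 255 is treated as a noise candidate, which also affects legitimately saturated pixels.

```python
import numpy as np

def adaptive_median_filter(gray, max_window=7):
    """Decision-based adaptive median filter for a 2-D uint8 image.

    Only pixels flagged as noise (0 or 255) are replaced; the window grows
    from 3x3 up to max_window x max_window until it contains at least one
    non-noise pixel, whose median is then used.
    """
    noise = (gray == 0) | (gray == 255)
    out = gray.copy()
    h, w = gray.shape
    for r, c in zip(*np.nonzero(noise)):
        for k in range(3, max_window + 1, 2):
            half = k // 2
            r0, r1 = max(r - half, 0), min(r + half + 1, h)
            c0, c1 = max(c - half, 0), min(c + half + 1, w)
            window = gray[r0:r1, c0:c1]
            valid = window[~noise[r0:r1, c0:c1]]
            if valid.size:
                out[r, c] = np.median(valid)
                break
        # If no valid neighbour exists even at max_window, the pixel is left as is.
    return out
```

At very high noise densities many 7 × 7 windows contain only a handful of uncorrupted pixels, so the recovered medians are coarse estimates of the original scene.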

3.4.2. Machine Learning-Based Anomaly Detection

3.5. Anomaly Detection System

3.5.1. EfficientAD Model Architecture

- A teacher network that learns robust feature representations from normal sensor data,

- A student network that attempts to replicate the teacher’s feature extraction capabilities, and

- A discrepancy measurement module that quantifies differences between teacher and student outputs to generate anomaly scores.
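The discrepancy measurement can be sketched as follows. This is a simplified illustration of the teacher-student idea only: it assumes both networks have already produced feature maps of shape (channels, height, width), uses a plain mean squared difference, and omits training and any score normalization. The function names are hypothetical and do not correspond to the anomalib API.

```python
import numpy as np

def anomaly_map(teacher_feat, student_feat):
    """Per-location discrepancy: squared difference averaged over channels."""
    return np.mean((teacher_feat - student_feat) ** 2, axis=0)

def anomaly_score(teacher_feat, student_feat):
    """Image-level score: the largest local discrepancy in the map."""
    return float(anomaly_map(teacher_feat, student_feat).max())
```

Because the student is trained only on normal data, it is expected to match the teacher on normal inputs and to diverge on inputs unlike anything seen during training, which is what raises the score under attack.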

3.5.2. Training and Implementation

- Data Collection and Organization: Sensor data was collected from multiple driving scenarios under normal conditions and organized into training and testing datasets with separate directories for normal and anomalous data.

- Data Preprocessing: All sensor data underwent standardized preprocessing:

  - Images resized to 128 × 128 pixels for computational efficiency,

  - Normalization using ImageNet statistics (mean = [0.485, 0.456, 0.406], std = [0.229, 0.224, 0.225]), and

  - LiDAR point clouds converted to 2D bird’s-eye-view images for compatibility with the image-based model.

- Model Training Configuration:

- –

- Training performed using PyTorch 1.13.1 with CUDA 11.7 acceleration

- –

- Batch size of 1 with gradient accumulation over 4 batches for memory efficiency

- –

- Training duration of 30 epochs with validation every epoch

- –

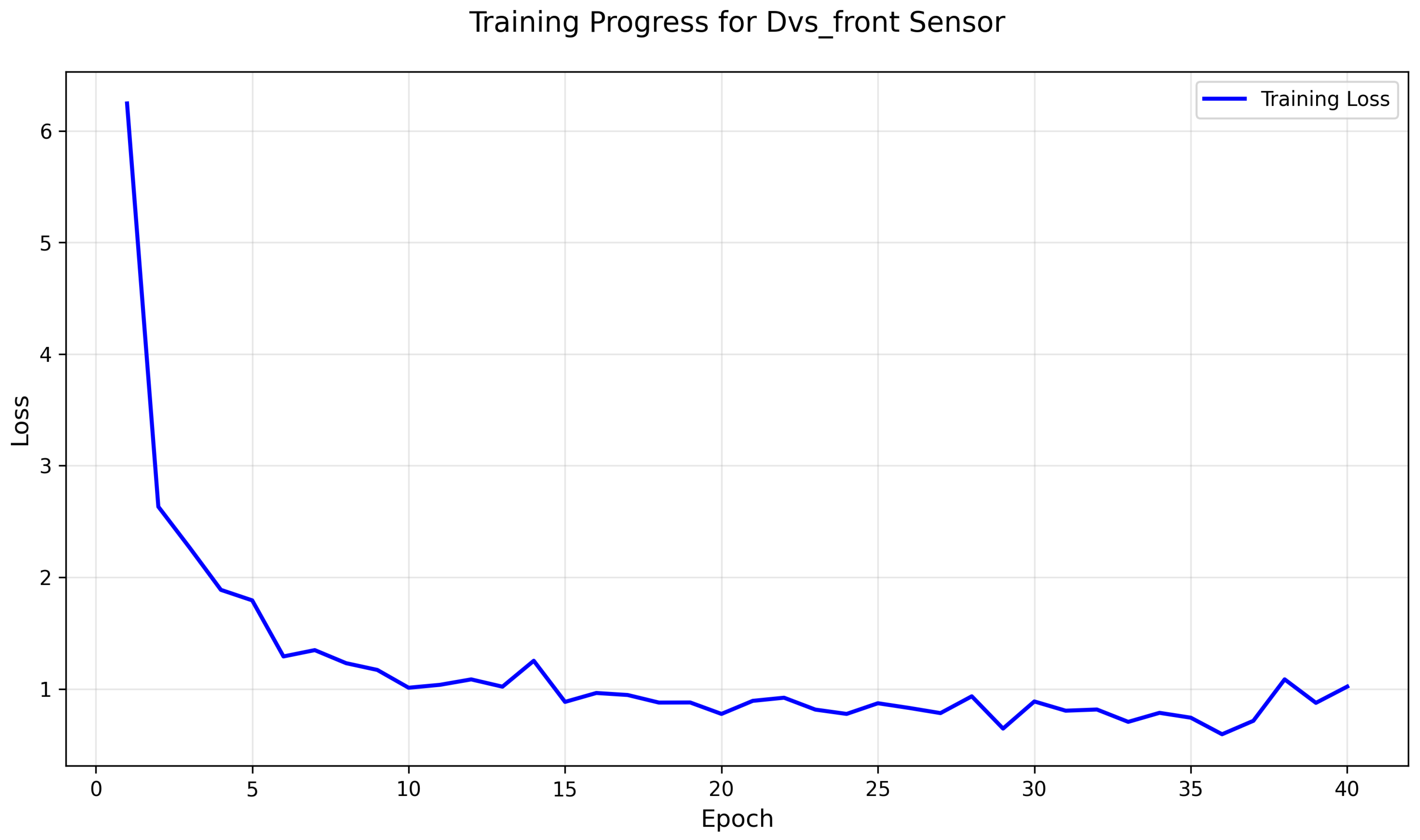

- Separate models trained for each sensor type (RGB, DVS, depth, and LiDAR). The detailed training process and loss behavior for the DVS anomaly detection model are illustrated in Figure A1 in Appendix A.

- Real-time Analysis: The trained models are used to analyze sensor data during autonomous driving episodes, generating anomaly scores that indicate the likelihood of sensor attacks. The system can process sensor data in real-time and provide continuous monitoring of sensor integrity.
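The normalization and bird's-eye-view steps of the preprocessing can be sketched with NumPy. The ImageNet statistics are those stated above; everything else is an assumption made for illustration: the function names, the 50 m half-extent of the bird's-eye-view grid, and the binary occupancy encoding. Resizing to 128 × 128 is assumed to have been done beforehand with an image library.

```python
import numpy as np

IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)

def normalize_image(rgb_uint8):
    """Scale an HxWx3 uint8 image to [0, 1], normalize per channel, return CHW."""
    scaled = rgb_uint8.astype(np.float32) / 255.0
    return ((scaled - IMAGENET_MEAN) / IMAGENET_STD).transpose(2, 0, 1)

def lidar_to_bev(points, extent=50.0, size=128):
    """Rasterize an (N, 3) point cloud into a size x size occupancy image.

    The grid covers [-extent, extent) metres in x and y around the sensor
    (assumed values); a cell is set to 255 if any point falls into it.
    """
    xy = points[:, :2]
    inside = np.all(np.abs(xy) < extent, axis=1)
    cells = ((xy[inside] + extent) / (2 * extent) * size).astype(np.intp)
    bev = np.zeros((size, size), dtype=np.uint8)
    bev[cells[:, 1], cells[:, 0]] = 255
    return bev
```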

3.6. Evaluation Methodology

3.6.1. Attack Impact Assessment

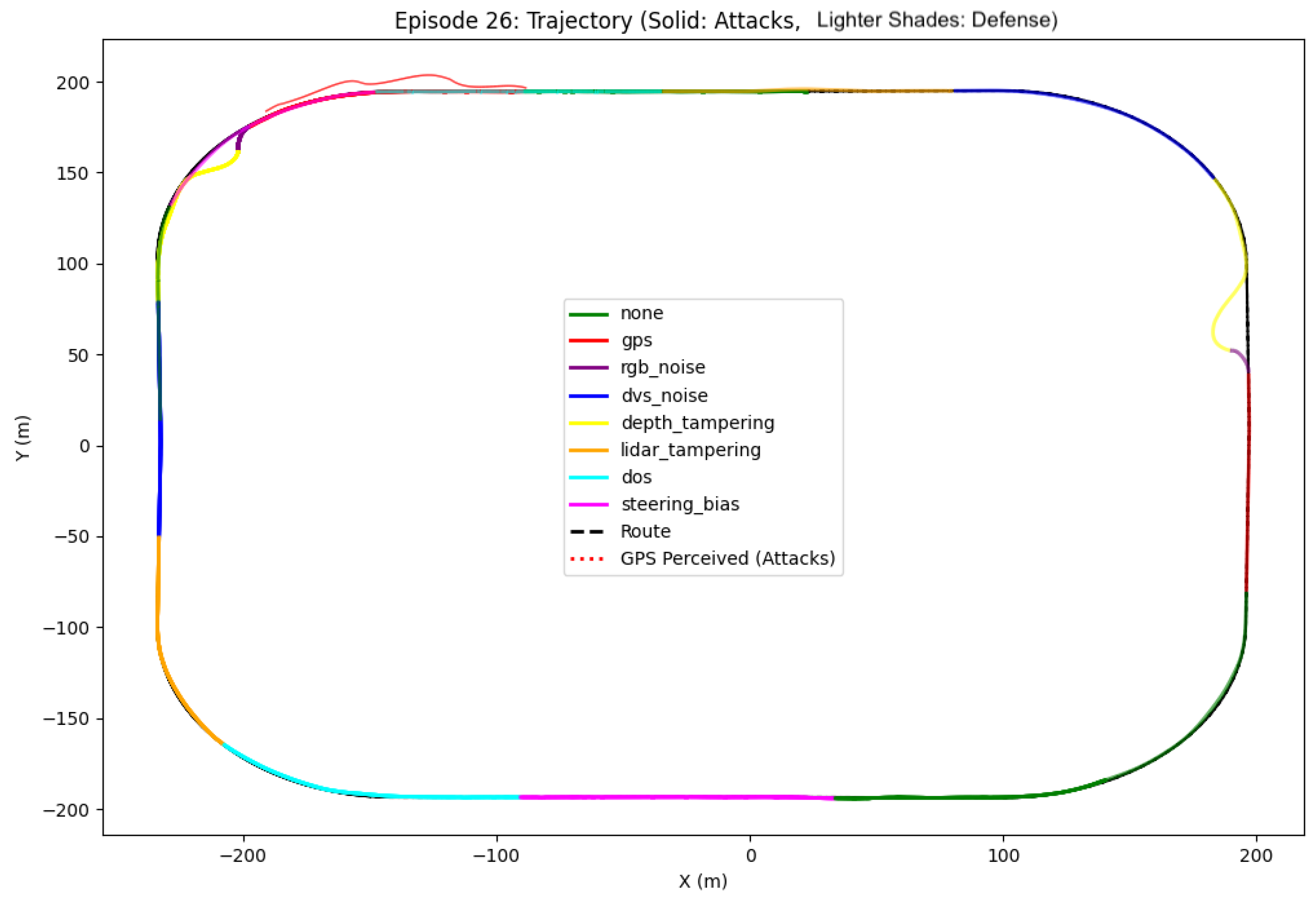

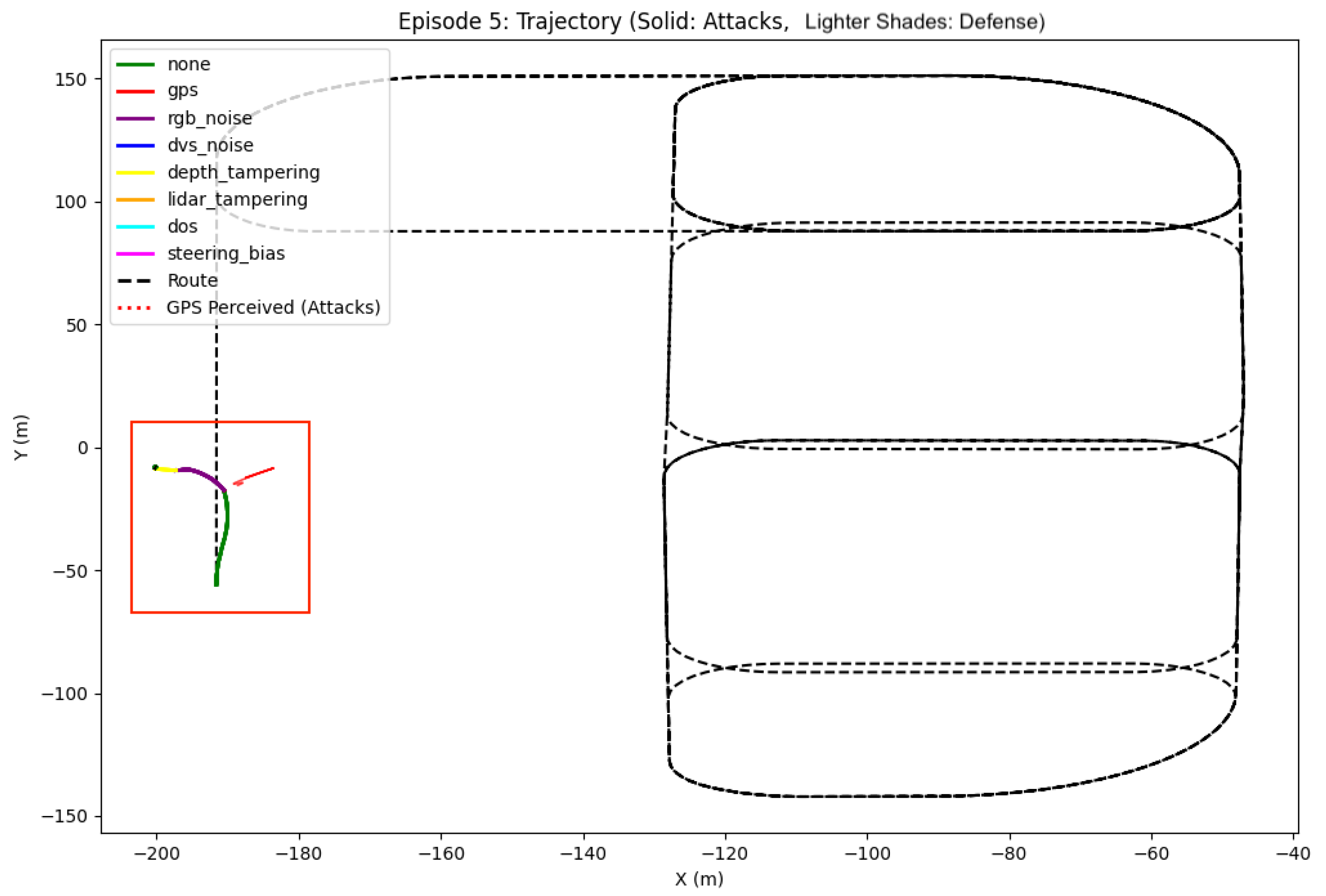

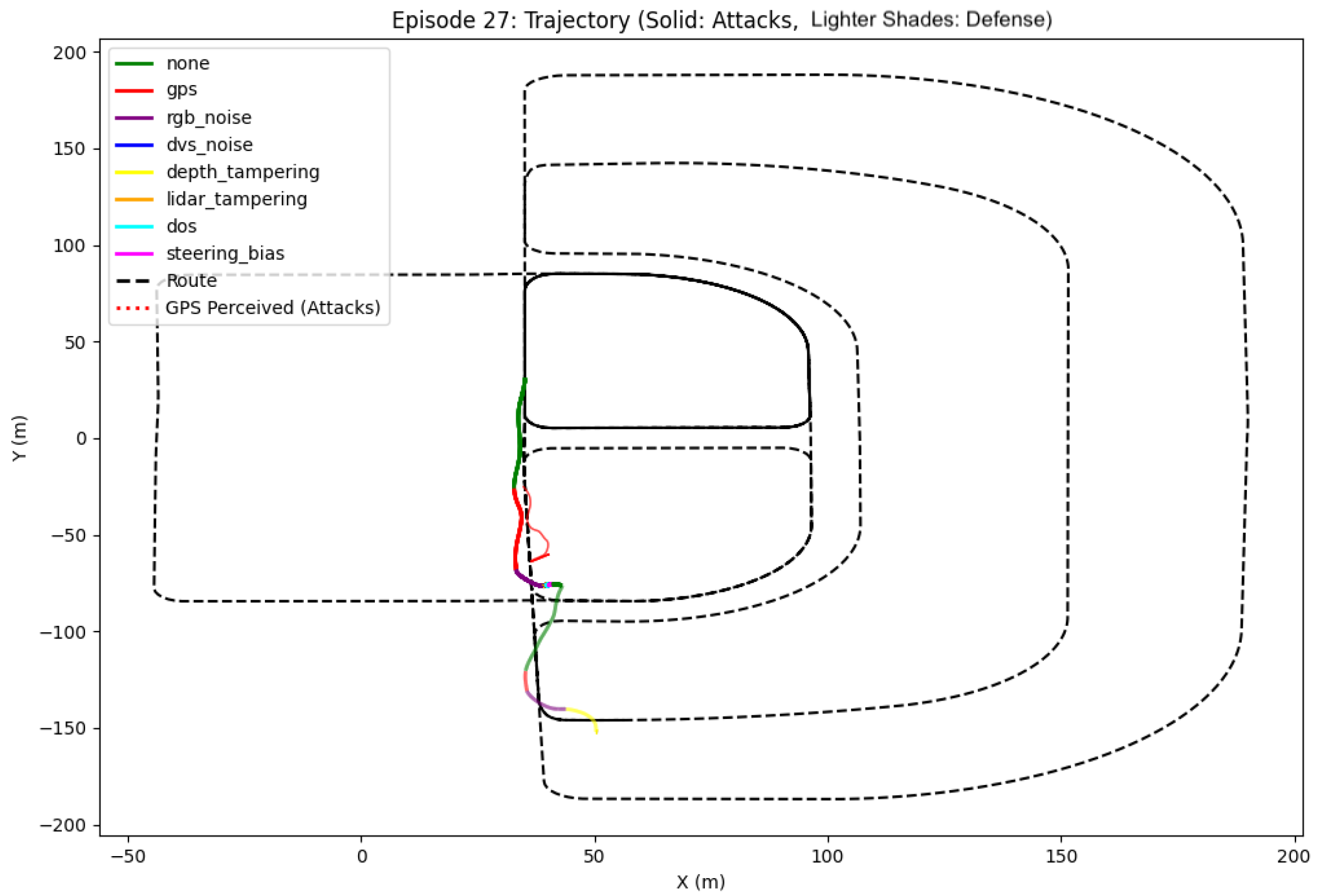

- Trajectory Analysis: We track and compare the vehicle’s actual trajectory against the planned route, calculating point-to-segment distances to measure route deviation. This analysis is particularly important for GPS spoofing attacks where perceived and actual positions may differ significantly.

- Control Stability: We analyze steering, throttle, and brake commands through detailed time series analysis. For steering bias attacks, we perform statistical analysis of steering angle distributions to detect anomalous patterns.

- Sensor Performance Metrics:

  - RGB Camera: Noise percentage measurements during salt-and-pepper attacks

  - DVS: Event count tracking to detect abnormal spikes in event generation

  - Depth Camera: Mean depth measurements to identify tampering

  - LiDAR: Point cloud density analysis to detect phantom objects

- Defense Effectiveness: Comparison of performance metrics with and without defensive measures enabled, including

  - Adaptive median filtering for RGB noise,

  - Spatial clustering analysis for DVS event flooding,

  - Gradient-based analysis for depth tampering,

  - Density-based filtering for LiDAR attacks, and

  - Rate limiting for DoS attacks.
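The point-to-segment distance used in the trajectory analysis can be written compactly. The sketch below assumes a planar route given as a list of (x, y) waypoints in metres; the function names are illustrative.

```python
import math

def point_to_segment(p, a, b):
    """Shortest distance from point p to the segment a-b (all 2-D tuples)."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: both endpoints coincide.
        return math.hypot(px - ax, py - ay)
    # Projection parameter of p onto the line, clamped to the segment.
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def route_deviation(position, route):
    """Distance from position to the nearest segment of a polyline route."""
    return min(
        point_to_segment(position, route[i], route[i + 1])
        for i in range(len(route) - 1)
    )
```

For example, with the route `[(0, 0), (10, 0), (10, 10)]`, a vehicle at `(5, 3)` is 3 m off the route, and one at `(12, 5)` is 2 m off.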

3.6.2. Visualization and Analysis Tools

- Combined Video Generation: Synchronized display of multiple sensor feeds (RGB, DVS, depth, and LiDAR) with attack state indicators

- Trajectory Plots: Visualization of actual vs. perceived trajectories during GPS spoofing

- Statistical Analysis: Histograms of steering distributions and sensor metrics under different attack conditions

3.6.3. Experimental Scenarios

- Urban Navigation: Complex urban environments with intersections, traffic lights, and other vehicles.

- Highway Driving: High-speed scenarios with lane changes and overtaking maneuvers.

- Dynamic Obstacles: Scenarios with pedestrians and other vehicles executing unpredictable maneuvers.

4. Results

4.1. Impact of Cyberattacks on Autonomous Driving Performance

4.1.1. Completed Routes Under Attack

4.1.2. Route Failures and Crashes

4.2. Effectiveness of Defense Mechanisms

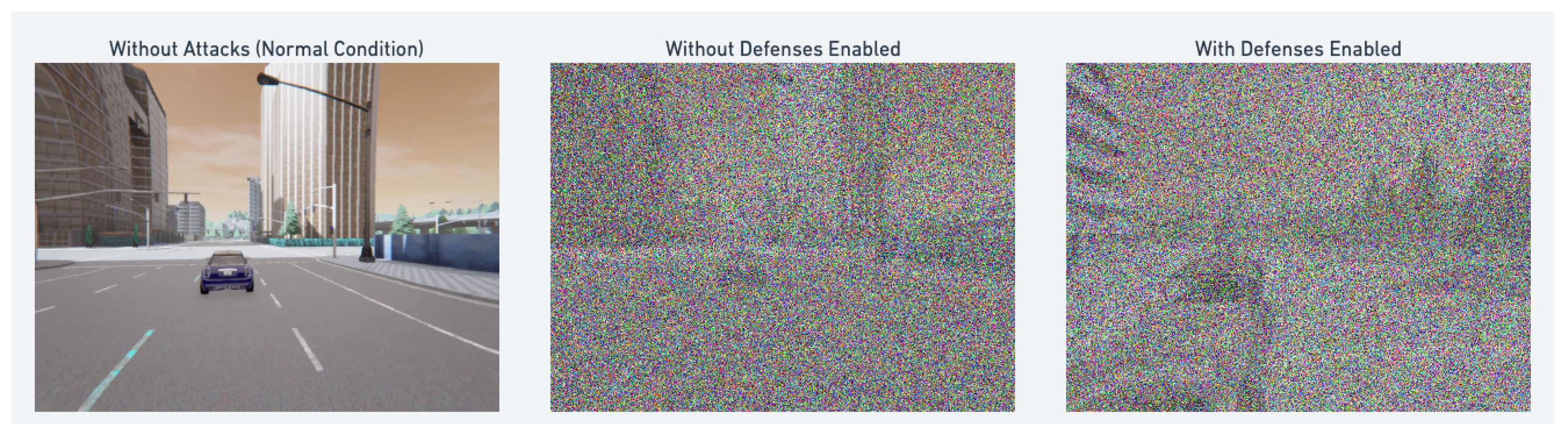

- RGB Camera Attacks: Our anomaly detection model for RGB cameras successfully identified attacks on the camera feed, but the subsequent filtering techniques showed limited effectiveness against sophisticated attacks. While some noise reduction was achieved, the filtered images often remained significantly compromised, resulting in continued trajectory deviations even with defenses active.

- Depth Sensor Attacks: The depth sensor anomaly detection system effectively detected anomalies in depth data, but defense mechanisms for depth sensor attacks demonstrated limited effectiveness against targeted attacks, with filtered depth maps still containing significant distortions that affected the vehicle’s perception capabilities.

- GPS Spoofing: Our GPS defense mechanism successfully detected and mitigated GPS spoofing attacks by implementing plausibility checks based on route deviation and velocity consistency. The defense mechanism checks if the reported GPS position deviates more than 5.0 m from the planned route or if the calculated speed exceeds 20.0 m/s, reverting to the true transform when these thresholds are exceeded. This was our most effective defense, as it clearly identified spoofed GPS coordinates and prevented the vehicle from making unwarranted course corrections based on falsified location data.

- Steering Bias: The steering defense mechanism uses statistical anomaly detection with z-score analysis on a rolling window of the last 50 steering commands. When a steering command’s z-score exceeds 3 (indicating a statistical outlier), the system reverts to the mean steering value from the recent history. This approach showed partial effectiveness in identifying malicious steering commands, but sophisticated attacks could still influence vehicle control in certain scenarios.

- Continuously monitor all sensor inputs for anomalies using our trained detection models;

- If anomalies are detected, reduce reliance on AI models for driving decisions;

- Transfer driving control to human operators for a safer experience when sensor integrity is compromised; and

- Alternatively, selectively shut down compromised sensors and cross-check with remaining functional sensors to maintain autonomous operation.
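The GPS plausibility check and the steering z-score defense described in this section can be sketched with the Python standard library. The thresholds (5 m, 20 m/s, 50 commands, |z| > 3) are those stated in the text; the names, the `min_history` warm-up parameter, and the choice not to record flagged commands in the history are assumptions made for this illustration.

```python
import math
from collections import deque
from statistics import mean, pstdev

def gps_is_plausible(reported, previous, dt, route_deviation_m,
                     max_dev=5.0, max_speed=20.0):
    """Accept a GPS fix only if it is near the route and implies a realistic speed."""
    speed = math.dist(reported, previous) / dt
    return route_deviation_m <= max_dev and speed <= max_speed

class SteeringGuard:
    """Replace steering commands that are outliers relative to recent history."""

    def __init__(self, window=50, z_threshold=3.0, min_history=10):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history  # assumed warm-up before checks start

    def filter(self, steer):
        if len(self.history) >= self.min_history:
            mu = mean(self.history)
            sigma = pstdev(self.history)
            if sigma > 0 and abs(steer - mu) / sigma > self.z_threshold:
                # Outlier: fall back to the historical mean and do not
                # record the suspicious command (assumption).
                return mu
        self.history.append(steer)
        return steer
```

One consequence of this design is that a bias that builds up slowly shifts the rolling mean along with it and produces small z-scores, which is consistent with the partial effectiveness reported for the steering defense.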

4.3. Anomaly Detection Performance

4.3.1. RGB Camera Anomaly Detection

- Normal Operation: Mean anomaly score of 0.000000 with a standard deviation of 0.000000, establishing a clear baseline for normal behavior.

- RGB Noise Attacks: Mean anomaly scores of 0.378064 (with defense) and 0.389462 (without defense), both significantly higher than normal operation. This demonstrates the model’s ability to reliably detect RGB camera attacks regardless of whether defenses are active.

- Our RGB camera anomaly detection model is specifically designed to detect anomalies in RGB camera data only and does not process data from other sensors.

4.3.2. DVS Camera Anomaly Detection

- Normal Operation: Mean anomaly score of 0.608308 with a standard deviation of 0.121466, establishing a baseline that reflects the inherent variability of event-based vision data.

- DVS Noise Attacks: Mean anomaly scores of 0.55 (with defense) and 0.75 (without defense). Interestingly, the score with defense is slightly lower than normal operation, while the score without defense is significantly higher.

- It is important to note that our DVS anomaly detection model is specifically designed to detect anomalies in DVS data only and does not process data from other sensors. Additionally, DVS cameras are currently used for research purposes only and are not integrated into the vehicle’s driving decision model.
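One way to read the DVS figures above is to express each attack mean as a distance from the normal baseline in units of the normal standard deviation. The short calculation below uses only the values reported in this subsection; it is a back-of-the-envelope aid to interpretation, not part of the evaluation pipeline, and the function name is illustrative.

```python
NORMAL_MEAN = 0.608308  # mean DVS anomaly score, normal operation
NORMAL_STD = 0.121466   # standard deviation, normal operation

def sigma_distance(score, mean=NORMAL_MEAN, std=NORMAL_STD):
    """Offset of a score from the normal mean, in normal standard deviations."""
    return (score - mean) / std

attack_without_defense = sigma_distance(0.75)  # about +1.17 sigma
attack_with_defense = sigma_distance(0.55)     # about -0.48 sigma
```

Both attack means lie within roughly 1.2 standard deviations of the normal baseline, so a threshold on the mean score alone would separate attack from normal operation far less cleanly for DVS than for RGB, where the normal baseline is reported as zero.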

4.4. Comparative Analysis of DVS and RGB Sensor Security

- Research Context: It is important to emphasize that the DVS cameras in our study were used exclusively for research purposes and were not integrated into the vehicle’s driving decision model. The data collected from DVS cameras was analyzed separately from the main autonomous driving pipeline.

- Defense Effectiveness: Our defense mechanisms were evaluated separately for each sensor type. RGB cameras, which are actively used in the driving model, required more aggressive filtering that sometimes reduced image quality. DVS defenses were studied in isolation as a research component.

- Anomaly Detection: The anomaly detection models for both sensors were designed to work exclusively with their respective sensor data. The RGB model showed clearer separation between normal and attack conditions and directly impacted vehicle safety, while the DVS model’s patterns were analyzed purely for research insights.

- Future Potential: While not currently used for driving decisions, the complementary nature of RGB and DVS cameras suggests that sensor fusion approaches could potentially enhance security in future implementations.

5. Discussion

5.1. Implications for Autonomous Vehicle Security

5.2. Significance of Anomaly Detection Approach

5.3. Comparative Security of DVS and RGB Sensors

5.4. Limitations and Challenges

5.5. Future Research Directions

- Enhanced Anomaly Detection: Developing more sophisticated anomaly detection models that can identify subtle attacks while maintaining low false positive rates. This could include exploring deep learning architectures specifically designed for time series sensor data and incorporating contextual information from multiple sensors [45].

- Graceful Degradation: Designing autonomous systems that can gracefully degrade performance when attacks are detected rather than failing catastrophically. This could involve developing fallback driving policies that rely on uncompromised sensors or implementing safe stop procedures [7].

- Human-in-the-Loop Security: Exploring how human operators could be effectively integrated into the security framework, particularly for remote monitoring and intervention when anomalies are detected [60]. This raises important questions about interface design, situation awareness, and response time.

- DVS Integration: Further investigating the potential of DVS technology for enhancing autonomous vehicle security, including developing specialized perception algorithms that leverage the unique properties of event-based vision [28] and exploring hybrid RGB–DVS architectures.

- Standardized Security Evaluation: Developing standardized benchmarks and evaluation methodologies for assessing the security of autonomous vehicle sensor systems, enabling more systematic comparison of different defense approaches [62].

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AV | Autonomous Vehicle |
| CUDA | Compute Unified Device Architecture |
| DoS | Denial of Service |
| DVS | Dynamic Vision Sensor |
| EfficientAD | Efficient Anomaly Detection |
| GPU | Graphics Processing Unit |
| GPS | Global Positioning System |
| IMU | Inertial Measurement Unit |
| LiDAR | Light Detection and Ranging |
| NEAT | Neural Attention |
| PCLA | Pretrained CARLA Leaderboard Agent |
| RGB | Red Green Blue |
| VRAM | Video Random Access Memory |

Appendix A. Training Process for DVS Anomaly Detection Model

Appendix B. Illustrative Example of Critical Failure Under RGB Attack

Appendix C. DeepRacer Agent Specifications for Hardware-in-the-Loop Validation

- Chassis: 1/18th scale 4WD monster truck chassis

- CPU: Intel Atom™ Processor

- Memory: 4 GB RAM

- Storage: 32 GB (expandable via USB)

- Wi-Fi: 802.11ac

- Camera: 2 × 4 MP camera with MJPEG encoding

- LiDAR: 360-degree 12-meter scanning radius LiDAR sensor

- Software: Ubuntu OS 16.04.3 LTS, Intel® OpenVINO™ toolkit, ROS Kinetic

- Drive Battery: 7.4 V/1100 mAh lithium polymer

- Compute Battery: 13,600 mAh USB-C PD

- Ports: 4× USB-A, 1× USB-C, 1× Micro-USB, 1× HDMI

- Integrated Sensors: Accelerometer and Gyroscope

References

1. Litman, T. Autonomous Vehicle Implementation Predictions: Implications for Transport Planning; Victoria Transport Policy Institute: Victoria, BC, Canada, 2020.

2. Fagnant, D.J.; Kockelman, K.M. Preparing a Nation for Autonomous Vehicles: Opportunities, Barriers and Policy Recommendations. Transp. Res. Part A Policy Pract. 2015, 77, 167–181.

3. Sakhai, M.; Sithu, K.; Oke, M.K.S.; Mazurek, S.; Wielgosz, M. Evaluating Synthetic vs. Real Dynamic Vision Sensor Data for SNN-Based Object Classification. In Proceedings of the KU KDM 2025 Conference, Zakopane, Poland, 2–5 April 2025.

4. Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469.

5. Janai, J.; Güney, F.; Behl, A.; Geiger, A. Computer Vision for Autonomous Vehicles: Problems, Datasets and State of the Art. Found. Trends Comput. Graph. Vis. 2017, 12, 1–308.

6. Rajamani, R. Vehicle Dynamics and Control, 2nd ed.; Springer: New York, NY, USA, 2011.

7. Giannaros, A.; Karras, A.; Theodorakopoulos, L.; Karras, C.; Kranias, P.; Schizas, N.; Kalogeratos, G.; Tsolis, D. Autonomous Vehicles: Sophisticated Attacks, Safety Issues, Challenges, Open Topics, Blockchain, and Future Directions. J. Cybersecur. Priv. 2023, 3, 493–543.

8. Hussain, M.; Hong, J.-E. Reconstruction-Based Adversarial Attack Detection in Vision-Based Autonomous Driving Systems. Mach. Learn. Knowl. Extr. 2023, 5, 1589–1611.

9. Petit, J.; Shladover, S.E. Potential Cyberattacks on Automated Vehicles. IEEE Trans. Intell. Transp. Syst. 2014, 16, 546–556.

10. Parkinson, S.; Ward, P.; Wilson, K.; Miller, J. Cyber Threats Facing Autonomous and Connected Vehicles: Future Challenges. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2898–2915.

11. Checkoway, S.; McCoy, D.; Kantor, B.; Anderson, D.; Shacham, H.; Savage, S.; Koscher, K.; Czeskis, A.; Roesner, F.; Kohno, T. Comprehensive Experimental Analyses of Automotive Attack Surfaces. In Proceedings of the 20th USENIX Security Symposium, San Francisco, CA, USA, 8–12 August 2011.

12. Cui, J.; Liew, L.S.; Sabaliauskaite, G.; Zhou, F. A Review on Safety Failures, Security Attacks, and Available Countermeasures for Autonomous Vehicles. Ad Hoc Netw. 2019, 90, 101823.

13. Eykholt, K.; Evtimov, I.; Fernandes, E.; Li, B.; Rahmati, A.; Xiao, C.; Prakash, A.; Kohno, T.; Song, D. Robust Physical-World Attacks on Deep Learning Visual Classification. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 1625–1634.

14. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and Harnessing Adversarial Examples. arXiv 2014, arXiv:1412.6572.

15. Yan, C.; Xu, W.; Liu, J. Can You Trust Autonomous Vehicles: Contactless Attacks against Sensors of Self-Driving Vehicle. In Proceedings of the 24th USENIX Security Symposium, Austin, TX, USA, 13 August 2016.

16. Koscher, K.; Czeskis, A.; Roesner, F.; Patel, S.; Kohno, T.; Checkoway, S.; McCoy, D.; Kantor, B.; Anderson, D.; Shacham, H.; et al. Experimental Security Analysis of a Modern Automobile. In Proceedings of the 2010 IEEE Symposium on Security and Privacy, Oakland, CA, USA, 16–19 May 2010; pp. 447–462.

17. Miller, C.; Valasek, C. Remote Exploitation of an Unaltered Passenger Vehicle. In Proceedings of the Black Hat USA, Las Vegas, NV, USA, 1–6 August 2015.

18. Woo, S.; Jo, H.J.; Lee, D.H. A Practical Wireless Attack on the Connected Car and Security Protocol for In-Vehicle CAN. IEEE Trans. Intell. Transp. Syst. 2015, 16, 993–1006.

19. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing Properties of Neural Networks. arXiv 2013, arXiv:1312.6199.

20. Lin, P.; Javanmardi, E.; Nakazato, J.; Tsukada, M. Potential Field-based Path Planning with Interactive Speed Optimization for Autonomous Vehicles. arXiv 2023, arXiv:2306.06987.

21. Kurakin, A.; Goodfellow, I.; Bengio, S. Adversarial Examples in the Physical World. arXiv 2016, arXiv:1607.02533.

22. Cao, Y.; Jia, J.; Cong, G.; Na, M.; Xu, W. Adversarial Sensor Attack on LiDAR-Based Perception in Autonomous Driving. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 2267–2281.

23. Sun, J.; Cao, Y.; Chen, Q.; Mao, Z.M. Towards Robust LiDAR-Based Perception in Autonomous Driving: General Black-Box Adversarial Sensor Attack and Countermeasures. In Proceedings of the 29th USENIX Security Symposium, Virtual, 12–14 August 2020; pp. 877–894.

24. Humphreys, T.E.; Ledvina, B.M.; Psiaki, M.L.; O’Hanlon, B.W.; Kintner, P.M., Jr. Assessing the Spoofing Threat: Development of a Portable GPS Civilian Spoofer. In Proceedings of the 21st International Technical Meeting of the Satellite Division of The Institute of Navigation, Savannah, GA, USA, 16–19 September 2008; pp. 2314–2325.

25. Tippenhauer, N.O.; Pöpper, C.; Rasmussen, K.B.; Capkun, S. On the Requirements for Successful GPS Spoofing Attacks. In Proceedings of the 18th ACM Conference on Computer and Communications Security, Chicago, IL, USA, 17–21 October 2011; pp. 75–86.

26. Studnia, I.; Nicomette, V.; Alata, E.; Deswarte, Y.; Kaâniche, M.; Laarouchi, Y. Survey on Security Threats and Protection Mechanisms in Embedded Automotive Networks. In Proceedings of the 43rd Annual IEEE/IFIP Conference on Dependable Systems and Networks Workshop, Budapest, Hungary, 24–27 June 2013; pp. 1–8.

27. Nassi, B.; Nassi, D.; Ben-Netanel, R.; Mirsky, Y.; Drokin, O.; Elovici, Y. Phantom of the ADAS: Securing Advanced Driver-Assistance Systems from Split-Second Phantom Attacks. In Proceedings of the 2019 ACM SIGSAC Conference on Computer and Communications Security, London, UK, 11–15 November 2019; pp. 293–308.

28. Gallego, G.; Delbruck, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K.; et al. Event-Based Vision: A Survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 154–180.

29. Wang, Y.; Du, B.; Shen, Y.; Wu, K.; Zhao, G.; Sun, J.; Wen, H. EV-Gait: Event-Based Robust Gait Recognition Using Dynamic Vision Sensors. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 6351–6360.

30. Lichtsteiner, P.; Posch, C.; Delbruck, T. A 128×128 120 dB 15 μs Latency Asynchronous Temporal Contrast Vision Sensor. IEEE J. Solid-State Circuits 2008, 43, 566–576.

31. Suh, Y.; Choi, S.; Kim, J.; Kim, H.; Lee, J.; Kim, S.; Lee, J.; Kim, J. A 1280 × 960 Dynamic Vision Sensor with a 4.95-μm Pixel Pitch and Motion Artifact Minimization. In Proceedings of the 2020 IEEE International Symposium on Circuits and Systems, Seville, Spain, 10–21 October 2020; pp. 1–5.

32. Gehrig, D.; Scaramuzza, D. Low-Latency Automotive Vision with Event Cameras. Nature 2024, 629, 1034–1040.

33. Brandli, C.; Berner, R.; Yang, M.; Liu, S.-C.; Delbruck, T. A 240 × 180 130 dB 3 μs Latency Global Shutter Spatiotemporal Vision Sensor. IEEE J. Solid-State Circuits 2014, 49, 2333–2341.

34. Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. Events-to-Video: Bringing Modern Computer Vision to Event Cameras. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3857–3866.

35. Mueggler, E.; Rebecq, H.; Gallego, G.; Delbruck, T.; Scaramuzza, D. The Event-Camera Dataset and Simulator: Event-Based Data for Pose Estimation, Visual Odometry, and SLAM. Int. J. Robot. Res. 2017, 36, 142–149.

36. Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An Open Urban Driving Simulator. In Proceedings of the 1st Annual Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

37. Gonzalez, R.C.; Woods, R.E. Digital Image Processing, 3rd ed.; Prentice Hall: Upper Saddle River, NJ, USA, 2008.

38. Carlini, N.; Wagner, D. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy, San Jose, CA, USA, 22–24 May 2017; pp. 39–57.

39. Jafarnia-Jahromi, A.; Broumandan, A.; Nielsen, J.; Lachapelle, G. GPS Vulnerability to Spoofing Threats and a Review of Antispoofing Techniques. Int. J. Navig. Obs. 2012, 2012, 127072.

40. Tehrani, M.J.; Kim, J.; Tonella, P. PCLA: A Framework for Testing Autonomous Agents in the CARLA Simulator. arXiv 2025, arXiv:2503.09385.

41. Codevilla, F.; Müller, M.; López, A.; Koltun, V.; Dosovitskiy, A. End-to-End Driving via Conditional Imitation Learning. In Proceedings of the IEEE International Conference on Robotics and Automation, Brisbane, QLD, Australia, 21–25 May 2018; pp. 4693–4700.

42. Zhu, A.Z.; Yuan, L.; Chaney, K.; Daniilidis, K. Unsupervised Event-Based Learning of Optical Flow, Depth, and Egomotion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 989–997.

43. Boloor, A.; He, X.; Gill, C.; Vorobeychik, Y.; Zhang, X. Simple Physical Adversarial Examples against End-to-End Autonomous Driving Models. In Proceedings of the IEEE International Conference on Embedded Software and Systems, Shanghai, China, 2–3 June 2019; pp. 1–8.

44. Akcay, S.; Ameln, D.; Vaidya, A.; Lakshmanan, B.; Ahuja, N.; Genc, U. Anomalib: A Deep Learning Library for Anomaly Detection. In Proceedings of the IEEE International Conference on Image Processing, Bordeaux, France, 16–19 October 2022.

45. Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; Gehler, P. Towards Total Recall in Industrial Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14298–14308.

46. Bogdoll, D.; Hendl, J.; Zöllner, J.M. Anomaly Detection in Autonomous Driving: A Survey. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, New Orleans, LA, USA, 19–20 June 2022; pp. 4488–4499.

47. Zhu, Y.; Miao, C.; Xue, H.; Yu, Y.; Su, L.; Qiao, C. Malicious Attacks against Multi-Sensor Fusion in Autonomous Driving. In Proceedings of the 30th Annual International Conference on Mobile Computing and Networking, Washington, DC, USA, 18–22 November 2024; Association for Computing Machinery: New York, NY, USA, 2024.

48. Cao, Y.; Wang, N.; Xiao, C.; Yang, D.; Fang, J.; Yang, R.; Chen, Q.A.; Liu, M.; Li, B. Invisible for Both Camera and LiDAR: Security of Multi-Sensor Fusion Based Perception in Autonomous Driving Under Physical-World Attacks. In Proceedings of the IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 24–27 May 2021; pp. 176–194.

49. Kołomański, M.; Sakhai, M.; Nowak, J.; Wielgosz, M. Towards End-to-End Chase in Urban Autonomous Driving Using Reinforcement Learning. In Intelligent Systems and Applications, IntelliSys 2022; Arai, K., Ed.; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2022; Volume 544.

50. Sakhai, M.; Wielgosz, M. Towards End-to-End Escape in Urban Autonomous Driving Using Reinforcement Learning. In Intelligent Systems and Applications. IntelliSys 2023; Arai, K., Ed.; Lecture Notes in Networks and Systems; Springer: Cham, Switzerland, 2023; Volume 823.

- Bendiab, G.; Hameurlaine, A.; Germanos, G.; Kolokotronis, N.; Shiaeles., S. Autonomous Vehicles Security: Challenges and Solutions Using Blockchain and Artificial Intelligence. IEEE Trans. Intell. Transp. Syst. 2023, 24, 3614–3637. [Google Scholar] [CrossRef]

- Rajapaksha, S.; Kalutarage, H.; Al-Kadri, M.K.S.O. AI-Based Intrusion Detection Systems for In-Vehicle Networks: A Survey. ACM Comput. Surv. 2023, 55, 237. [Google Scholar] [CrossRef]

- Sakhai, M.; Mazurek, S.; Caputa, J.; Argasiński, J.K.; Wielgosz, M. Spiking Neural Networks for Real-Time Pedestrian Street-Crossing Detection Using Dynamic Vision Sensors in Simulated Adverse Weather Conditions. Electronics 2024, 13, 4280. [Google Scholar] [CrossRef]

- Pratt, W.K. Digital Image Processing: PIKS Scientific Inside, 4th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2007. [Google Scholar]

- Brown, T.B.; Mané, D.; Roy, A.; Abadi, M.; Gilmer, J. Adversarial Patch. arXiv 2017, arXiv:1712.09665. [Google Scholar]

- Thys, S.; Van Ranst, W.; Goedemé, T. Fooling Automated Surveillance Cameras: Adversarial Patches to Attack Person Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–17 June 2019; pp. 49–55. [Google Scholar] [CrossRef]

- Shin, H.; Kim, D.; Kwon, Y.; Kim, Y. Illusion and Dazzle: Adversarial Optical Channel Exploits against Lidars for Automotive Applications. In Proceedings of the International Conference on Cryptographic Hardware and Embedded Systems, Taipei, Taiwan, 25–28 September 2017; pp. 445–467. [Google Scholar] [CrossRef]

- Kerns, A.J.; Shepard, D.P.; Bhatti, J.A.; Humphreys, T.E. Unmanned Aircraft Capture and Control via GPS Spoofing. J. Field Robot. 2014, 31, 617–636. [Google Scholar] [CrossRef]

- Psiaki, M.L.; Humphreys, T.E. GNSS Spoofing and Detection. Proc. IEEE 2016, 104, 1258–1270. [Google Scholar] [CrossRef]

- Kim, K.; Kim, J.S.; Jeong, S.; Park, J.H.; Kim, H.K. Cybersecurity for autonomous vehicles: Review of attacks and defense. Comput. Secur. 2021, 103, 102150. [Google Scholar] [CrossRef]

- Cai, Y.; Qin, T.; Ou, Y.; Wei, R. Intelligent Systems in Motion: A Comprehensive Review on Multi-Sensor Fusion and Information Processing From Sensing to Navigation in Path Planning. Int. J. Semant. Web Inf. Syst. 2023, 19, 1–35. [Google Scholar] [CrossRef]

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140. [Google Scholar] [CrossRef]

| Sensor Type | Attack Type | Results Without Defense | Results With Defense |
|---|---|---|---|
| RGB Camera | Salt-and-Pepper Noise (80%) | Severe trajectory deviations; vehicle weaving across lanes; crashes in some episodes | Limited effectiveness; 30–45% trajectory drift still present; filtered images remain compromised |
| Dynamic Vision Sensor (DVS) | Event Flooding (60% spurious events) | False motion perception; higher anomaly scores (0.76) | Limited effectiveness; anomaly detection detects attack phase; event filter still compromised; lower anomaly scores (0.55) |
| Depth Camera | Depth Map Tampering (random patches) | Misinterpreted obstacle distances; affected perception of road boundaries | Limited effectiveness; filtered depth maps still contain significant distortions |
| LiDAR | Phantom Object Injection (5 clusters) | False obstacle detection | Partial mitigation through point cloud filtering; density-based clustering removes some phantom objects |
| GPS | Position Manipulation | Significant deviation between reported and actual position; incorrect routing decisions | Highly effective mitigation; route deviation and velocity consistency checks prevented course corrections based on falsified data |
| Sensor Update | Denial of Service (rate limiting) | Delayed/missed sensor readings; affected perception and decision-making | Partial mitigation through sensor update monitoring and buffering mechanisms |
| Control System | Steering Bias | Systematic errors in steering commands; trajectory deviation | Partial effectiveness; sophisticated attacks could still influence vehicle control |
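
The GPS row above attributes the mitigation to route deviation and velocity consistency checks. As a minimal sketch of what a velocity consistency check might look like, and not the authors' implementation, the following compares the speed implied by consecutive GPS fixes with an independently measured vehicle speed. The `GpsFix` type, the function names, and the 3 m/s tolerance are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class GpsFix:
    t: float  # timestamp in seconds
    x: float  # east position in metres (local frame, assumed)
    y: float  # north position in metres (local frame, assumed)


def is_velocity_consistent(prev, curr, speed_mps, tolerance_mps=3.0):
    """Return True if the speed implied by two fixes matches the measured speed.

    speed_mps is assumed to come from a source independent of GPS
    (for example wheel odometry); tolerance_mps is an illustrative threshold.
    """
    dt = curr.t - prev.t
    if dt <= 0:
        return False  # out-of-order or duplicate timestamps are rejected
    implied = math.hypot(curr.x - prev.x, curr.y - prev.y) / dt
    return abs(implied - speed_mps) <= tolerance_mps


def filter_fixes(fixes, speeds, tolerance_mps=3.0):
    """Keep only fixes consistent with the last accepted fix.

    The first fix is trusted unconditionally, which is itself an assumption.
    """
    accepted = []
    for fix, speed in zip(fixes, speeds):
        if not accepted or is_velocity_consistent(accepted[-1], fix, speed, tolerance_mps):
            accepted.append(fix)
    return accepted


# Vehicle travelling at roughly 10 m/s; the fix at t=3 jumps 60 m in one second.
fixes = [GpsFix(0, 0, 0), GpsFix(1, 10, 0), GpsFix(2, 20, 0),
         GpsFix(3, 80, 0), GpsFix(4, 40, 0)]
speeds = [10.0] * len(fixes)
trusted = filter_fixes(fixes, speeds)
print([f.t for f in trusted])
```

In this sketch a rejected fix is simply skipped, so the planner keeps using the last trusted position. A real system would more likely fall back to dead reckoning or a fused state estimate while the GPS signal is distrusted.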

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sakhai, M.; Sithu, K.; Oke, M.K.S.; Wielgosz, M. Cyberattack Resilience of Autonomous Vehicle Sensor Systems: Evaluating RGB vs. Dynamic Vision Sensors in CARLA. Appl. Sci. 2025, 15, 7493. https://doi.org/10.3390/app15137493