Abstract

The technological growth in the automation of manufacturing processes, as seen in Industry 4.0, is characterized by constant revolution and evolution in small- and medium-sized factories. As basic and advanced technologies from the pillars of Industry 4.0 are gradually incorporated into their value chains, these factories can achieve adaptive technological transformation. This article presents a practical solution for companies seeking to evolve their production processes during the expansion phase of their manufacturing. Starting from a base architecture with Industry 4.0 features, specific tools are integrated and implemented to facilitate the duplication of installed capacity. This enables the development of a manufacturing execution system (MES) for each production line and of a fog computing node, which optimizes the load balance of order requests coming from the cloud and also acts as an intermediary between the MESs and the cloud. In addition, legacy Machine Learning (ML) inference acceleration modules were integrated into the single-board computers of the MESs to improve workflow across the new architecture. Compared with the initial architecture, these improvements and integrations give the value chain of the expanded architecture lower latency, greater scalability, optimized resource utilization, and improved resistance to network service failures.

1. Introduction

For more than a decade, productive sectors have been gradually incorporating different technologies and innovative tools throughout the supply chain, enabling more efficient, flexible, and sustainable production that benefits stakeholders such as manufacturing companies, production managers, engineers, maintenance operators, customers, suppliers, government authorities, society, and the environment. Technological solutions such as MESs and enterprise resource planning (ERP) systems can incorporate several advanced tools, such as the internet of things (IoTs), edge computing, fog computing (FC), cloud computing, artificial intelligence (AI), digital twins, digital shadows, databases, holograms, and immersive mixed and virtual reality. These tools can be integrated under an architectural reference framework for Industry 4.0, depending on the needs of the manufacturing, communication, and data management departments of a business. This technological integration targets both native smart factories and factories in need of digital transformation.

The digital migration of modern automation brings several benefits as well as challenges that must be resolved. For example, [1] notes that digitized factories already generate a large flow of data which, when processed in the cloud, can delay production execution and cause conflicts in applications that require ultra-low latency. This degrades the quality of service (QoS) and quality of experience (QoE), mainly because of the distance between end users and centralized cloud servers. In such cases, FC emerges as an innovative solution that, owing to its operating characteristics, such as its reference architecture under the IEEE 1934 standard and its infrastructure for running industrial IoT services at the edge of a production plant, can solve these and other problems [2,3,4]. Along these lines, ref. [5] mentions that, given the network density that FC can offer in response to the large volumes of data generated by innovative environments such as cities, transportation, healthcare, and industry, integrating this technology would, for example, reduce the response time of cyber-physical systems (CPS), improve the predictive maintenance of actuators, and improve task distribution and product traceability. Traceability is a crucial part of the life cycle of an asset and its value chain, a fundamental aspect specified by the reference architectural model for Industry 4.0 (RAMI 4.0). Likewise, ref. [6] indicates that applications running on IoT systems must be managed from an FC network to support the mobility of heterogeneous, wireless, and geographically distributed IoT devices.

In the context of intelligent manufacturing, various studies highlight the integration of FC as an effective solution to the issues described above. However, implementing it in practice raises several obstacles when integrating advanced and emerging Industry 4.0 technologies. Some of these stem from the limited processing capacity available for performing different tasks, reducing latency, improving energy consumption, and managing load and resource distribution. These situations present opportunities to leverage the potential of fog and cloud computing, particularly in expandable architectures that enable scalability, interoperability, flexibility, and modularity [7,8,9,10,11,12]. In [13], the authors integrate a data gateway architecture into fog computing, consisting of two subordinate edge devices that collect, filter, and send data to the cloud from two mobile robots belonging to different owners, using Docker and Kubernetes on a low-cost single-board computer (SBC). In [14], the authors develop an Integer Linear Programming (ILP) model to optimize the location of key nodes (edge nodes, fog nodes, and gateways) in an innovative factory environment with a five-layer architecture. Its implementation aims to provide complete coverage of mobile (autonomous vehicles) and fixed devices in a laboratory-scale modular production system while reducing cost and improving coverage and QoS. Going a step further, ref. [15] notes, from a conceptual perspective, that MESs generally have a cloud architecture and therefore depend on QoS to maintain a continuous flow of information. To address this, the authors propose, as future work, an additional FC layer located beneath the hybrid cloud MES layer to ensure continuous factory operation.
In this context, complementary frameworks have been proposed that delve deeper into the coordination between distributed industrial environments and MES models, highlighting the relevance of FC. A representative example is the work of [16], which proposes a model that detects cascading failures of the nodes in an IIoTs architecture. That is, it detects distribution nodes with anomalies (overload) caused by cyberattacks, together with the loss of interconnection with MES service nodes, which in turn fail due to interdependence, collapsing the entire network vertically and horizontally. The authors address this situation by detecting and isolating the infected nodes and then stabilizing the infrastructure by identifying the nodes that are neither overloaded nor isolated. This approach increases the resilience of distributed systems by stabilizing the I4.0 network against possible attacks.

1.1. Rationale for the Current Study

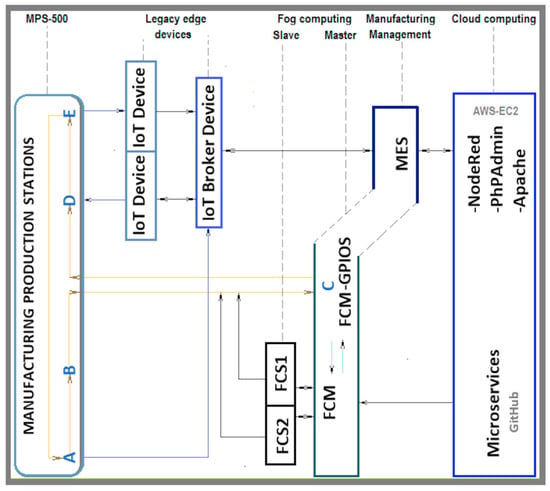

In a previous study [17], a FESTO MPS 500 modular production process was digitally transformed to move from Industry 3.0 to Industry 4.0. Its production line consists of five workstations: distribution (A), machining (B), quality control (C), storage (D), and classification (E). The transformation required integrating specialized hardware and software at different levels and layers of the RAMI 4.0 reference model, together with lightweight communication protocols, cloud and fog computing, an MES, and several advanced technologies: artificial intelligence, local and cloud database management, computer vision for product quality control, computer vision for facial biometric operation, and, from the Fog Computing Master (FCM), temperature management of the fog computing agent nodes (FCS), which safeguards the processing resources available to the MES, as shown in Figure 1. This study demonstrated the feasibility of integrating several emerging technologies into an Industry 3.0 process to scale it up to Industry 4.0.

Figure 1.

Initial connectivity and workflow architecture of an MPS500-FESTO with I4.0 technology.

Overall, the digital transformation developed by the authors represents a significant advance in the practical implementation of Industry 4.0 processes for SMEs. It is supported by the DIN SPEC 91345 (RAMI 4.0) reference model, whose flexibility allows advanced and emerging technologies to be adopted in favor of digital transformation, connectivity, and interoperability, thus facilitating the horizontal and vertical integration of processes [18].

1.2. Implementation Proposal

Given the characteristics of industrial evolution described above, this study considers a scenario in which a factory expanding due to growing demand for its products must act to avoid production interruptions caused by future bottlenecks. Since its production line was already well structured and optimized before demand grew, the proposal is to increase production capacity by replicating this line without redesigning its workflow. This approach reduces vulnerability to failures in a single production line, minimizes operational risks, and speeds up final product delivery. Accordingly, this study uses a second MPS as a secondary production line and integrates various basic and advanced technologies to develop an extended architecture that builds upon the initial one. The following points describe the specific goals considered fundamental to this development.

- The provision of a manufacturing production process in the operational technology (OT) domain with characteristics similar to FESTO’s first MPS-500 production line, together with the business requirements for adopting and adapting advanced and emerging information technology (IT) within the same flexible RAMI 4.0 reference framework. Consequently, a second MES is developed for the second production line.

- With one MES for each of the two MPS-500s, a procedure is needed to dynamically allocate the order requests hosted on an Amazon Elastic Compute Cloud (Amazon EC2) instance. For this purpose, a fog computing node is added to the architecture between the two MESs, providing load balancing based on order distribution and thereby avoiding overloading one production line while the other is available.

- Currently, mini-batch orders on the first production line are requested by remote users via a website.

Section 2 examines the advantages and limitations of current technologies, establishing the context for the approach presented in our research. Section 3 describes the steps that improved interoperability and connectivity between two MESs through an intermediary FC node, which provides a new management function, load distribution, and a real-time plant-to-cloud gateway. Section 4 evaluates the performance of the FC node in terms of response time and effective and efficient dynamic load distribution. Section 5, the Discussion, analyzes the results obtained, highlighting the advantages that FC adds within an established structure to improve manufacturing production. It also addresses the benefits of integrating advanced technologies to include a specific segment of the population and the limitations that SMEs may face when adopting these technologies, and it identifies gaps for future research that remain open or await synergistic integration with the proposal in this study. Finally, Section 6 summarizes the key results of the case study, highlighting the potential impact of integrating advanced technologies into parallel modular production processes and suggesting future research directions to further explore the technologies and methods employed.

2. Literature Review and State of the Art

This section presents a systematic literature review (SLR) of practical implementation studies related to the proposal of this article, focusing on the following aspects: the needs of SMEs whose market participation is continuous, parallel production lines that support sustained expansion, and the implementation of FC in parallel production line operations. The review followed a structured and replicable approach using academic databases and sources, including IEEE, Crossref, ProQuest, ResearchGate, and SCOPUS. Recent research was prioritized to ensure the relevance and timeliness of the results.

Technology Integration: MES, Cloud, and Fog Computing to Boost Production

When a company reaches a growth turning point in production, operational logistics suggests that various lean manufacturing tools, such as process optimization, resource management, capacity expansion, and technology integration, can be applied to achieve a demanding production rate. In this regard, ref. [19], in its systematic review, and ref. [20], in its implementation of production optimization and resource management strategies, note that this path is viable for improving the balance of task allocation within an assembly line. Similarly, ref. [21] indicates that mass production lines are rigid in allocating tasks across stations, making it difficult to adapt to more flexible schemes; it therefore proposes adjusting the installed capacity by operating in parallel with a production line that has process optimization characteristics. However, these studies focused on companies already established in the market, leaving aside those still in development. In this respect, ref. [22] describes SMEs in Ibero-America as somewhat fragmented, because they are not supported by the economic focus of their governments; as a result, research and development (R&D) becomes entirely disconnected from their production processes, leaving them caught in what is metaphorically described as a revolving door.

Furthermore, ref. [23] indicates that, if a lack of strategic planning for marketing and expansion is added to these aspects, such companies would be pushed out of the market. These characteristics lead this study to focus on a structural solution that integrates flexible technology with affordable hardware for process digitization. In the same vein, refs. [24,25] highlight the benefit of distributed investment (gradual implementation, lower initial investment, and easier continuous improvement) that companies can achieve by adopting an MES; that is, a company's expansion rests on key functional dimensions of maturity such as computerization, connectivity, visibility, transparency, predictability, and adaptability. Similarly, ref. [26] implements algorithms for parallel processes in multi-process, multi-order production lines managed from an MES, scaling up a production line to cope with the growing instability of the current market, marked by a greater variety of products and smaller production quantities per variant. Going a step further and building on the intrinsic adaptability of these management systems, ref. [27] integrates a technological module (edge node) into such a system for visual inspection tasks with Machine Learning (ML) algorithms. Its decisions are sent to an MES to execute tasks such as predictive maintenance, quality control, energy consumption optimization, or a combination of these, depending on the needs of the plant or the cloud. A similar approach was implemented in [28] in a laboratory that simulates a manufacturing line for quality control. The authors incorporated a legacy broker device that links the plant via OPC UA or MQTT to the edge MES, which in turn links to an ERP. The edge management system executes user orders via state machines.
Given the advantages of edge-based process management systems mentioned so far (low latency, local autonomy, security, and cost), refs. [29,30] propose a model that transfers specific capabilities of a cloud-based MES (CM) to production processes located in different regions near the production plant through fog computing nodes, which route the load balance of production lines running in parallel. Expanding on the potential of fog computing within the Industrial Internet of Things (IIoTs), ref. [30] presents a theoretical framework that could be implemented in future studies, proposing the optimization of fog computing nodes, complemented by distributed intelligent agents, under a service-oriented architecture (SOA). The literature reviewed thus reveals various methodologies whose primary objective is to improve or optimize workflows to ensure efficiency in advanced industrial systems such as Industry 4.0. Nevertheless, ref. [31] indicates that, regardless of the architectural reference framework or methodology used, it is important to maintain the best available practices for industrial cybersecurity and IoTs system security. Following these guidelines, the authors simulate an ERP through hybrid private–public services for big data management and decision-making, with bidirectional communication through an MES.

3. Materials and Methods

The purpose of this study is to extend the integration of disruptive tools within the initial architecture, with a focus on promoting sustained production growth and optimizing production processes in micro, small, and medium-sized enterprises (MSMEs and SMEs). As in the baseline study, this evolutionary architecture focuses on production processes and uses the fundamental principles of RAMI 4.0 as its structural framework.

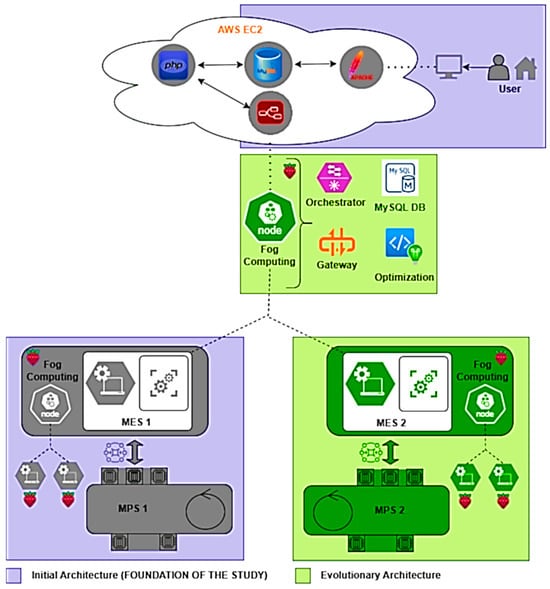

Based on the fundamental assumption that a company is expanding its installed capacity, this study uses two FESTO MPS-500s within the same laboratory environment, with identically configured workstations. The need to integrate new technologies arises once an MES is added to each modular production system to take advantage of cloud computing. A central FC node is then required to link the edge MESs with cloud services and to assign tasks based on operating cost, processing time, and energy consumption. Figure 2 shows the base system of this study in gray and the technologies adopted and implemented as part of the extended architecture in green boxes.

Figure 2.

Fundamentals of the current study and technological deployment in extended architecture.

The viability of this technological expansion rests on the flexibility of the initial study's architecture: the system's capacity was scaled to handle a higher production load while maintaining open standards and common communication protocols between the levels and layers of the architectural reference model, which makes it easy to adapt to emerging technological trends.

3.1. Expansion of Installed Capacity with Second MPS-500 Production Line

The second production line, MPS2, enters the architecture as a horizontal scaling step and has the same flexible production characteristics as MPS1: five manufacturing stations and a conveyor belt that process three types of products (Part A in red, Part B in black, and Part C in silver). The process begins at the distribution station, where the requested part is placed on a pallet on the conveyor belt and transported to the machining station, where rotary power tools are activated according to the product type. The part is then sent to the quality control station and then to the storage station, where parts are sorted and stored. Table 1 details, for each MPS, the average time each part spends at each station and the associated average energy consumption, as well as the average time and electrical energy required to transport a part from one station to the next. This information is used as optimization criteria in the intelligent orchestration scheme of the FC node.

Table 1.

Cycle times for preparing and replenishing orders and parts in the production plant.

The time and energy consumption values of the manufacturing process in Table 1 are considered to have a significant impact on the task orchestration performed by the FC node over the MESs. These data are therefore used as optimization criteria when weighting production costs. The implementation of the FC node is detailed in later subsections.

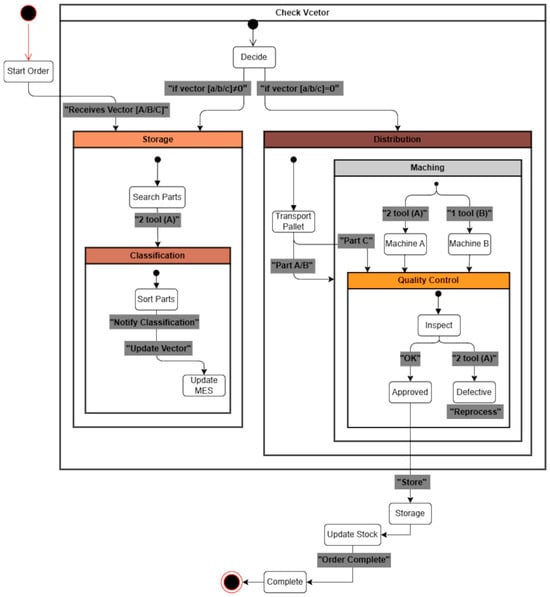

3.2. MES System Logic for Order Management

With a second MES, requests can be processed immediately and sent to MPS2 to accommodate possible market changes. This new MES was developed with the same characteristics as the initial MES, whose state-based control flow was designed to optimize order management. The process begins when the MES's order buffer receives an order vector from the FC node, in which the quantity and type of parts to be processed are identified by index and value (Status: Order Start). The MES then asks the warehouse to locate the requested part type (Status: Warehouse Locates Parts). Once located, the part is sent to the next station for classification, which immediately notifies the MES of the current status (Status: Parts Classification) so that the vector is updated by subtracting the processed units. If the remaining quantity of that product type is not zero, the request to the warehouse is repeated; once it reaches zero, the pallet is transferred to the distribution station to begin replenishing parts in the warehouse (Status: Distribute Pallet). When the pallet reaches the machining station, the corresponding tools are activated according to the part type: two tools for Part A, one tool for Part B, and none for Part C (Status: Machining Parts). The parts are then sent to the quality control station (Status: Quality Control). Parts that pass verification are stored in the warehouse (Status: Storage); otherwise, they are reprocessed. When the MES has completed the order vector, it captures images of the warehouse shelf and runs machine vision to determine the stock of products in the plant (Status: Update Stock), thus completing the cycle (Status: Complete Order). The transitions between states are governed by the order vector, which acts as the central control axis between the MES and the sorting and distribution stations.
Figure 3 shows a diagram based on the states executed by the MESs, indicating the order preparation process and the parts replenishment process.

Figure 3.

Fundamentals of the current study and technological deployment in expandable architecture.
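The order-handling cycle described in Section 3.2 can be sketched as a small state machine. This is a minimal illustration under our own assumptions, not the authors' MES code: the state names mirror the statuses in the text, but run_order, the qc_passes callback, the retry limit max_attempts, and the choice to replenish as many units as were dispatched are hypothetical, and no station I/O is performed.

```python
from enum import Enum, auto
from typing import Callable, Dict, List


class State(Enum):
    ORDER_START = auto()
    WAREHOUSE_LOCATES_PARTS = auto()
    PARTS_CLASSIFICATION = auto()
    DISTRIBUTE_PALLET = auto()
    MACHINING_PARTS = auto()
    QUALITY_CONTROL = auto()
    STORAGE = auto()
    UPDATE_STOCK = auto()
    COMPLETE_ORDER = auto()


def run_order(order: Dict[str, int],
              qc_passes: Callable[[str, int], bool] = lambda part, attempt: True,
              max_attempts: int = 3) -> List[State]:
    """Return the sequence of states visited while serving one order vector."""
    remaining = dict(order)  # local copy of the order vector from the FC node
    trace = [State.ORDER_START]
    for part in remaining:
        # Order preparation: repeat warehouse requests until this entry reaches zero.
        dispatched = 0
        while remaining[part] > 0:
            trace += [State.WAREHOUSE_LOCATES_PARTS, State.PARTS_CLASSIFICATION]
            remaining[part] -= 1  # subtract the processed unit
            dispatched += 1
        # Replenishment (hypothetical policy): produce as many units as were dispatched.
        for _ in range(dispatched):
            trace.append(State.DISTRIBUTE_PALLET)
            for attempt in range(1, max_attempts + 1):
                # Machining would activate 2 tools for A, 1 for B, and none for C.
                trace += [State.MACHINING_PARTS, State.QUALITY_CONTROL]
                if qc_passes(part, attempt):
                    trace.append(State.STORAGE)
                    break  # stored; otherwise the part is reprocessed
    trace += [State.UPDATE_STOCK, State.COMPLETE_ORDER]
    return trace
```

Keeping the trace as a plain list makes it easy to check that every order ends in Update Stock and Complete Order, and that a part failing quality control loops back through machining before it is stored.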

The processes described above take place within a continuous workflow in the plant. Even so, knowing the communication latency between the edge devices involved and the execution time of recurring processes is necessary to ensure the quality of the infrastructure. According to time records from the baseline study, updating the stock in the cloud takes approximately 21 s from the moment the image is captured, an unfavorable situation caused by the type of SBC used. To keep the technology evolving without significant economic impact, Coral USB Accelerator (Edge TPU) devices were integrated as ML model accelerators, offloading YOLOv5 inference to them. However, the accelerator only supports lightweight models, so the model was converted from PyTorch v2.1.2 (.pt) to TensorFlow Lite v2.15 (.tflite). To do so, the YOLOv5 model (Ultralytics repository version 7.0, GitHub repository ultralytics/yolov5) was first trained and then exported to ONNX using export.py. In a virtual environment, the ONNX model was converted to TensorFlow with an ONNX-to-TensorFlow converter and subsequently to TensorFlow Lite (.tflite) with TFLiteConverter. Finally, the tflite_runtime and pycoral libraries were installed on the SBC to run the model and, after detection, the segmentation algorithm, allowing the type of each part and its location within the warehouse station's rack to be identified.
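After inference, detections still have to be turned into a stock count per part type and rack position. The text does not specify how locations are derived, so the following is only a hypothetical sketch: it assumes YOLO-style normalized box centres already decoded from the Edge TPU output (decoding and non-maximum suppression are omitted), a rack laid out as a regular grid of slots, and an illustrative function name, rack_stock.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical detection format: (label, confidence, x_center, y_center),
# with box centres normalized to [0, 1] relative to the shelf image.
Detection = Tuple[str, float, float, float]
Slot = Tuple[int, int]


def rack_stock(detections: Iterable[Detection], rows: int, cols: int,
               min_conf: float = 0.5) -> Tuple[Dict[Slot, str], Dict[str, int]]:
    """Assign detections to a rows x cols rack grid and count parts per type."""
    best: Dict[Slot, Tuple[float, str]] = {}
    for label, conf, x, y in detections:
        if conf < min_conf:
            continue  # discard low-confidence detections
        # Map the box centre to a slot; clamp so x or y == 1.0 stays on the grid.
        slot = (min(int(y * rows), rows - 1), min(int(x * cols), cols - 1))
        # If two detections fall in the same slot, keep the more confident one.
        if slot not in best or conf > best[slot][0]:
            best[slot] = (conf, label)
    slots = {slot: label for slot, (_, label) in best.items()}
    return slots, dict(Counter(slots.values()))
```

The slot map is what a warehouse station would need to locate a part, while the per-type counts correspond to the stock value that the MES publishes upstream.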

3.3. Fog Computing as an Intelligent Intermediary Between MESs and Cloud

Because of the horizontal scaling described in the previous subsections, an additional layer is needed at the edge to act as an intermediary between the MESs and the cloud and to orchestrate order requests. This layer meets the requirements of an SME's productive expansion while maintaining the flexibility of the architecture, improving local management, reducing dependence on the cloud, and shortening decision times for task distribution.

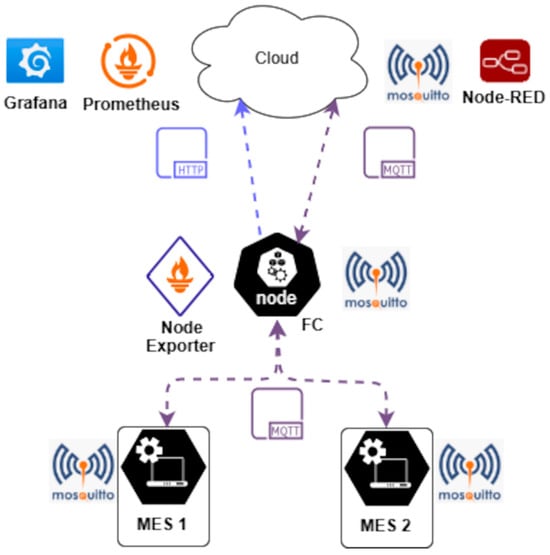

The operational functionalities of the cloud computing platform and the MESs from the initial study are summarized below. On the Amazon Elastic Compute Cloud (EC2) instance, several software tools (Node-RED, MySQL, phpMyAdmin, and Apache) were installed, allowing users to generate orders remotely and store them in a database. When the FC node requests the download of stored orders, the MQTT/TLS Mosquitto broker (Node-RED) publishes this information, and the FC node stores it locally. Communication is bidirectional: the stock levels of the parts stored in the warehouses are displayed to users in real time. These data are published by the MQTT clients (MESs) to the MQTT broker on the FC node, which in turn publishes them to the cloud, maintaining the cycle of distributed interoperability between heterogeneous devices (MESs–FC node–Cloud), as shown in Figure 4.

Figure 4.

Interoperable distributed architecture: MESs–FC node–Cloud.
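The MES to FC node to cloud relay can be illustrated with a self-contained Python sketch. It replaces the real Mosquitto broker and TLS transport with an in-memory stand-in so that only the routing logic is visible: MQTT topic-filter wildcards ('+' for exactly one level, '#' for all remaining levels) and an FC-node callback that forwards MES stock updates to the cloud. The topic names (plant/<mes>/stock, cloud/stock/<mes>), the JSON payload, and all class and function names are hypothetical; in a deployment, an MQTT client library connected over TLS on port 8883 would take the place of InMemoryBroker.

```python
import json
from typing import Callable, List, Tuple

Callback = Callable[[str, bytes], None]


def topic_matches(topic_filter: str, topic: str) -> bool:
    """MQTT topic-filter matching: '+' matches one level, '#' matches the rest.

    Simplified: the special handling of topics that start with '$' is omitted.
    """
    f_levels, t_levels = topic_filter.split("/"), topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # multi-level wildcard (valid only as the last level)
        if i >= len(t_levels) or (level != "+" and level != t_levels[i]):
            return False
    return len(f_levels) == len(t_levels)


class InMemoryBroker:
    """Stand-in for an MQTT broker: no network, TLS, QoS, or retained messages."""

    def __init__(self) -> None:
        self.subscriptions: List[Tuple[str, Callback]] = []

    def subscribe(self, topic_filter: str, callback: Callback) -> None:
        self.subscriptions.append((topic_filter, callback))

    def publish(self, topic: str, payload: bytes) -> None:
        for topic_filter, callback in self.subscriptions:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)


def attach_fc_relay(fc_broker: InMemoryBroker, cloud_broker: InMemoryBroker) -> None:
    """FC node role: forward stock updates from any MES to the cloud."""
    def on_stock(topic: str, payload: bytes) -> None:
        mes_id = topic.split("/")[1]  # e.g. "plant/mes1/stock" -> "mes1"
        stock = json.loads(payload)   # parse (and thereby validate) the JSON
        cloud_broker.publish(f"cloud/stock/{mes_id}", json.dumps(stock).encode())
    fc_broker.subscribe("plant/+/stock", on_stock)
```

A single '+' subscription lets the FC node serve any number of MESs without reconfiguration, which matches the horizontal-scaling goal of the extended architecture.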

This process is implemented by a Python v3.9.16 script on the FC node that runs several tasks concurrently using the threading module: downloading orders from the cloud and storing them in a local database (port 3306 enabled); orchestrating tasks according to a hybrid algorithm based on integer linear programming (ILP); and monitoring information from the MESs (stock, energy consumption, and production time). In addition, a Node Exporter tool (port 9100 enabled) measures the FC node's performance metrics, and MQTT/TLS communication (port 8883 enabled) is used between the MESs, the FC node, and the cloud, in a manner consistent with the IEC 62443 standard for industrial automation system network security [32]. Interoperability with the cloud is possible through the available endpoints, namely Node-RED, Prometheus, and Grafana. Only Node-RED is part of the extended architecture; Prometheus and Grafana (ports 9090 and 3000 enabled) are used exclusively to visualize the metrics of the FC node targets and thus analyze its performance.
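The concurrent structure of this script can be sketched with Python's standard threading and queue modules. This is a hypothetical skeleton, not the authors' code: start_fc_workers, fetch_orders, and dispatch are illustrative names, the cloud download and MySQL storage are reduced to a callable, and a monitoring thread for MES data would follow the same loop pattern. Node Exporter runs as a separate process and is not part of the sketch.

```python
import queue
import threading
from typing import Callable, Iterable, List, Tuple


def start_fc_workers(fetch_orders: Callable[[], Iterable[dict]],
                     dispatch: Callable[[dict], None],
                     poll_interval: float = 0.05
                     ) -> Tuple[threading.Event, List[threading.Thread]]:
    """Start a downloader and an orchestrator thread sharing one order queue."""
    orders: "queue.Queue[dict]" = queue.Queue()
    stop = threading.Event()

    def downloader() -> None:
        # Poll the cloud for new orders and enqueue them (local DB write omitted).
        while not stop.is_set():
            for order in fetch_orders():
                orders.put(order)
            stop.wait(poll_interval)  # sleep, but wake early if asked to stop

    def orchestrator() -> None:
        # Take queued orders one at a time and pass them to the allocation logic.
        while not stop.is_set():
            try:
                order = orders.get(timeout=poll_interval)
            except queue.Empty:
                continue
            dispatch(order)
            orders.task_done()

    threads = [threading.Thread(target=fn, name=fn.__name__, daemon=True)
               for fn in (downloader, orchestrator)]
    for thread in threads:
        thread.start()
    return stop, threads
```

The queue decouples downloading from orchestration, so a slow cloud request does not block the allocation of orders already received, and setting the stop event ends both loops cleanly.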

Functional Architecture of the FC Node with Multi-Objective Optimization

Task orchestration begins when the FC node processes the data published by the MESs (the number of parts of the order still to be processed). This is a cumulative calculation of the remaining total of the order vector explained in Section 3.2, which yields a dynamic load balance based on production status. Although this logic is a form of intelligent orchestration, it is rigid with respect to current needs: it does not consider production variables as strategic decision factors, as is typical of highly dynamic markets. To address this, a hybrid algorithm based on integer linear programming (ILP) and heuristic rules was developed to optimize task management decisions and streamline load balancing. This is where the values shown in Table 1 become relevant.
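The baseline rule described in this paragraph, which balances load only on the remaining parts of each order vector, can be sketched as follows. It assumes each MES reports its order vector as a mapping from part type to remaining quantity; the message format and function names are hypothetical.

```python
from typing import Dict


def remaining_load(order_vectors: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Total parts still pending per MES: the sum of each MES's order-vector entries."""
    return {mes: sum(vector.values()) for mes, vector in order_vectors.items()}


def assign_by_remaining(order_vectors: Dict[str, Dict[str, int]]) -> str:
    """Baseline rule: route the next order to the MES with the fewest pending parts.

    On a tie, min() keeps the first MES in insertion order.
    """
    loads = remaining_load(order_vectors)
    return min(loads, key=loads.get)
```

Because only part counts enter the decision, two lines with very different time or energy profiles look identical to this rule, which is the rigidity that motivates the weighted cost function.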

The implemented algorithm is considered hybrid because it combines weighted estimates of production time and energy consumption with cost projections and adaptive rules. The production cost for any MES is the sum of a time weight and an energy weight (Equation (1)). The time weight is obtained by multiplying the number of parts of each type, the production time of that part type on the corresponding line, and the time weight α. The energy weight is expressed in monetary units by applying the energy cost per kWh to the energy consumed by each part type p on the corresponding line, scaled by the energy weight β. Within the cost function, α and β act as a “trade-off;” that is, they are adjustable parameters that reflect operational priorities according to each company's logistical context. These values are not static; the plant manager defines them based on actual conditions such as variations in electricity rates, resource availability, failure rates, stock levels, environmental impact, and delivery requirements. In this case study, for example, weights were assigned to energy consumption and to the time required to manufacture a part. In a scenario where energy rates increase, a greater weight could be assigned to energy (β > α), even if this leads to longer production times. This flexibility allows the load allocation to be tailored to the strategic criteria of each industrial context.
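One way to write Equation (1) is shown below, as a sketch under notation introduced here for illustration, since the original symbols did not survive extraction: n_p is the number of parts of type p in the order, t_{p,m} and e_{p,m} are the production time and energy consumption of part type p on the line managed by MES_m (the kind of values listed in Table 1), and c_E is the energy price per kWh.

```latex
C_m = \alpha \sum_{p \in \{A,B,C\}} n_p \, t_{p,m}
    \; + \; \beta \, c_E \sum_{p \in \{A,B,C\}} n_p \, e_{p,m},
\qquad m \in \{1, 2\}
```

Because the first term is in time units and the second in monetary units, α and β also serve to make the two terms commensurable; raising β relative to α shifts allocation toward the line that consumes less energy.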

To avoid imbalance during FC node decision-making, a practical heuristic rule is applied to the summed costs above. The rule responds quickly and avoids complex calculations, which matters because the FC node has limited resources by design. It assigns tasks to the MESs based on a threshold that can be modified according to production needs. For example, if the cost of MES1 exceeds this threshold, the FC node orchestrates the task to MES2 (Equation (2)), which relates the current load of each MES to the maximum capacity that the production lines can process using the MPSs.
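A possible form of the threshold rule in Equation (2), with hypothetical symbols: C_1 is the Equation (1) cost of MES1 for the incoming order o, τ is the plant-defined cost threshold, L_1 is the current load of MES1, n_o is the number of parts in the order, and L_max is the maximum capacity of a line. Folding the capacity check into the same rule is our reading of the text, not a confirmed detail.

```latex
\mathrm{MES}(o) =
\begin{cases}
\mathrm{MES}_2, & \text{if } C_1 > \tau \;\text{ or }\; L_1 + n_o > L_{\max},\\
\mathrm{MES}_1, & \text{otherwise.}
\end{cases}
```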

If the heuristic threshold does not yield a clear allocation decision, which may occur when the costs of both MESs are balanced, the algorithm assigns the task to the MES with the lowest current load (see Equation (3)); that is, the algorithm asks "Which MES attains the minimum?" rather than "What is the minimum value?" This ensures load balancing at the FC node.

Here, the selected MES is the result of the argmin operator (argument of the minimum); that is, the rule searches MES1 and MES2 for the one with the lowest current load. The algorithm can also be adapted to incorporate more part types and to scale to additional MES modules if required.
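The decision logic of Equations (1) to (3) can be sketched in Python as follows. This is a minimal illustration under our own assumptions, not the authors' script: the LineProfile fields, the function names cost and allocate, the capacity check, and the choice of MES1 as the default line while its cost stays within the threshold are all hypothetical, and the ILP component of the hybrid algorithm is not reproduced. The tie-break implements an argmin over the current loads of the two MESs.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class LineProfile:
    """Hypothetical per-line data in the spirit of Table 1, plus the current load."""
    time_s: Dict[str, float]      # production time per part type (s)
    energy_kwh: Dict[str, float]  # energy per part type (kWh)
    load: int                     # parts currently pending on this line
    capacity: int                 # maximum parts the line can accept


def cost(order: Dict[str, int], line: LineProfile,
         alpha: float, beta: float, price_per_kwh: float) -> float:
    """Equation (1) sketch: weighted production time plus weighted energy cost."""
    time_term = sum(n * line.time_s[p] for p, n in order.items())
    energy_term = sum(n * line.energy_kwh[p] for p, n in order.items())
    return alpha * time_term + beta * price_per_kwh * energy_term


def allocate(order: Dict[str, int], mes1: LineProfile, mes2: LineProfile,
             threshold: float, alpha: float = 1.0, beta: float = 1.0,
             price_per_kwh: float = 1.0, tie_tol: float = 1e-9) -> Optional[str]:
    """Return "MES1", "MES2", or None if neither line can take the order."""
    size = sum(order.values())
    c1 = cost(order, mes1, alpha, beta, price_per_kwh)
    c2 = cost(order, mes2, alpha, beta, price_per_kwh)
    fits1 = mes1.load + size <= mes1.capacity
    fits2 = mes2.load + size <= mes2.capacity
    if not (fits1 or fits2):
        return None  # both lines full: the order stays queued at the FC node
    if fits1 and fits2 and abs(c1 - c2) <= tie_tol:
        # Balanced costs (Equation (3)): argmin of the current load; MES1 wins exact ties.
        return "MES1" if mes1.load <= mes2.load else "MES2"
    if fits2 and (c1 > threshold or not fits1):
        return "MES2"  # heuristic threshold rule (Equation (2))
    return "MES1"      # MES1 is within the threshold and has room
```

In this reading, MES1 acts as the default line until its cost crosses the threshold; a variant could instead pick the cheaper line whenever the costs differ, which would make the threshold matter only as a safety cap.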

4. Analysis of Results

Next, we highlight the behavior of the two Industry 4.0 architectures implemented to scale up Industry 3.0 manufacturing processes. The first corresponds to the base study of this article, in which the MES communicates with the cloud to manage plant processes through cloud computing. The second, following the proposed framework, builds on the initial architecture by adding a second production line to the plant, which leads to the integration of an FC node that acts as an intermediary between the MESs and the cloud. In this context, we analyze the performance of the distributed network system, including communication delays between layers, the processing load and response time of the manufacturing management system's hardware, and the effectiveness of load balancing during the orchestration of order requests.

Because the base architecture of this study used RAMI 4.0 as its reference framework, more advanced technologies could be added to it. The integration layer (MES connected to legacy integration devices and PLCs) showed no change in performance, whereas the functional layer (MES with AI) and the communication layer (MES–cloud connection) improved. In the initial study, a bidirectional exchange between the MES and the cloud for requesting and downloading orders took approximately 1.7 [s]; with the FC node integrated into the architecture, the time the MESs need to request the same order data falls to approximately 22 [ms]. Table 2 compares the data transfer and connection times of the initial and extended architectures, measured during the order request and download processes.

Table 2.

Comparison of transmission latency and resilience in the communication layer of the base and extended architectures.

The experimental validation shows that the FC system maintains an efficient workflow and, because it is located close to the edge, keeps operations running smoothly even during network interruptions. These results were obtained with an internet connection of 30.62 [Mbps] upload and 69.48 [Mbps] download. To avoid any ambiguity in Table 2, note that the two-way communication time between the fog node and the cloud is independent of the connection between the MESs and the fog node. If connectivity is lost, the MESs continue to manage orders for their respective MPSs, while the FC node keeps sending requests to the cloud-based MQTT broker to download orders, which also lets it check the connection status. To assess the system’s resilience, the internet service was intentionally interrupted several times and the average reconnection time was estimated.
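The reconnection measurement described above can be sketched as a polling loop that times each outage until the broker is reachable again. This is a minimal sketch, assuming a plain TCP reachability check stands in for the FC node's MQTT requests; `tcp_probe`, `measure_reconnection`, and `average_reconnection` are hypothetical helpers, not the authors' code, and the clock and sleep functions are injectable so the loop can be tested without waiting.

```python
import socket
import time
from typing import Callable, List, Optional


def tcp_probe(host: str, port: int, timeout_s: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout_s):
            return True
    except OSError:
        return False


def measure_reconnection(probe: Callable[[], bool],
                         interval_s: float = 1.0,
                         timeout_s: float = 300.0,
                         clock: Callable[[], float] = time.monotonic,
                         sleep: Callable[[float], None] = time.sleep) -> Optional[float]:
    """Poll `probe` until it succeeds; return elapsed seconds, or None on timeout."""
    start = clock()
    while clock() - start < timeout_s:
        if probe():
            return clock() - start
        sleep(interval_s)
    return None


def average_reconnection(samples: List[Optional[float]]) -> float:
    """Mean reconnection time over the outages that did recover."""
    recovered = [s for s in samples if s is not None]
    if not recovered:
        raise ValueError("no outage recovered within the timeout")
    return sum(recovered) / len(recovered)
```

On the FC node, `probe` could be `lambda: tcp_probe("broker.example.com", 8883)`, where the host name is a placeholder and 8883 is the conventional port for MQTT over TLS. A TCP check only confirms reachability; a full MQTT client would also confirm that the broker session is re-established.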

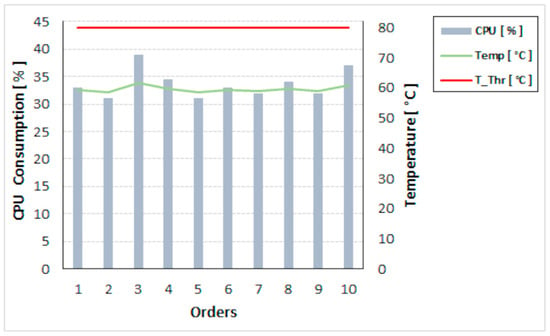

The averaged performance metrics of the FC node were monitored on its hardware, a Raspberry Pi 4 (RPi4) with 4 GB of RAM. Data were collected while order requests were processed, so the FC node was running several tasks concurrently: downloading requests, storing them in a local database, orchestrating orders, and processing data published by the MESs. Treating these tasks as a single global process, the following average metrics were recorded during the orchestration of 10 orders (see Figure 5): a CPU usage of 36% and a temperature of 61 °C, which is below the thermal throttling (T_Thr) threshold, so the node operated stably. Nevertheless, to keep well clear of this critical temperature, forced ventilation was maintained throughout the orchestration of all order requests to the MESs. The average execution time of the task assignment decision was 900 [ms].
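Metrics like these can be sampled on a Linux single-board computer from two kernel interfaces: the aggregate `cpu` line of `/proc/stat` and the thermal-zone file, which reports millidegrees Celsius. The sketch below assumes those standard paths; the article does not say which monitoring tool was used, so the function names here are illustrative only.

```python
import time
from pathlib import Path
from typing import Tuple

# On Raspberry Pi OS the SoC temperature is exposed here in millidegrees Celsius.
THERMAL_ZONE = Path("/sys/class/thermal/thermal_zone0/temp")
PROC_STAT = Path("/proc/stat")


def parse_temp_c(raw: str) -> float:
    """Convert the millidegree reading of a thermal zone to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def parse_cpu_times(cpu_line: str) -> Tuple[int, int]:
    """Return (idle, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    # Fields used: user nice system idle iowait irq softirq steal. Guest fields are
    # skipped because the kernel already counts guest time inside user/nice.
    fields = [int(x) for x in cpu_line.split()[1:9]]
    idle = fields[3] + fields[4]  # idle + iowait
    return idle, sum(fields)


def cpu_percent(before: str, after: str) -> float:
    """CPU usage (%) between two snapshots of the aggregate 'cpu' line."""
    idle0, total0 = parse_cpu_times(before)
    idle1, total1 = parse_cpu_times(after)
    elapsed = total1 - total0
    if elapsed <= 0:
        return 0.0
    return 100.0 * (1.0 - (idle1 - idle0) / elapsed)


def sample(interval_s: float = 1.0) -> Tuple[float, float]:
    """Read (CPU %, temperature in °C) on a Linux host such as the RPi4."""
    first = PROC_STAT.read_text().splitlines()[0]
    time.sleep(interval_s)
    second = PROC_STAT.read_text().splitlines()[0]
    return cpu_percent(first, second), parse_temp_c(THERMAL_ZONE.read_text())
```

Averaging repeated calls to `sample()` while orders are being orchestrated gives figures comparable in kind to those in Figure 5.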

Figure 5.

Thermal and computational performance of the FC node during its orchestration process.

This decision time for load distribution is acceptable in light of strategic business expansion needs, the dynamism of current markets, and the optimization of production processes.

Table 3 shows how the implemented algorithm assigns five tasks to the MESs. Before executing them, the FC node must acquire specific dynamic plant parameters and store them in an array, namely the time the plant needs to prepare and replenish each type of part and the energy consumed by these actions. According to Table 1, the times are 218 [s] (MPS1) and 156 [s] (MPS2) for Part A, 156 [s] (MPS1) and 154 [s] (MPS2) for Part B, and 128 [s] (MPS1) and 127 [s] (MPS2) for Part C. The corresponding energy consumption values are 843 [W] (MPS1) and 562 [W] (MPS2) for Part A, 569 [W] (MPS1) and 558 [W] (MPS2) for Part B, and 572 [W] (MPS1) and 571 [W] (MPS2) for Part C.

Table 3.

Validation of a hybrid algorithm based on integer linear programming (ILP) and a heuristic rule for load balancing during task distribution.

The maximum capacity of each production line was set at 40 units, and the heuristic limit was set at 1.3 times the lower cost; that is, the higher of the two MPS operating costs is compared against this threshold. If the threshold is exceeded, the task is assigned to the lower-cost MPS; otherwise, the MPS with the lower load is used. Finally, weights for production time and energy consumption were included so that the algorithm can adapt to business management priorities in sales, production, and energy.
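To make the parameters above concrete, the following sketch allocates a short sequence of orders using the Table 1 values, a capacity of 40 units, and a threshold of 1.3. It is a hypothetical, greedy stand-in for the hybrid ILP–heuristic algorithm, not the authors' code: the cost is assumed to be a plain weighted sum of the raw time and energy values (the weights absorb the unit difference; a production version would likely normalize them), `w_time` and `w_energy` are illustrative names whose mapping to the article's α and β is not restated in this section, and each order is assumed to be one unit.

```python
from typing import Dict, List, Tuple

# Table 1 values as quoted in the text: (time [s], energy value [W]) per part and MPS.
PARTS: Dict[str, Dict[str, Tuple[float, float]]] = {
    "A": {"MPS1": (218, 843), "MPS2": (156, 562)},
    "B": {"MPS1": (156, 569), "MPS2": (154, 558)},
    "C": {"MPS1": (128, 572), "MPS2": (127, 571)},
}
CAPACITY = 40    # maximum units per production line
THRESHOLD = 1.3  # heuristic limit relative to the lower cost


def cost(part: str, mps: str, w_time: float, w_energy: float) -> float:
    """Hypothetical weighted cost: plain weighted sum of the raw Table 1 values."""
    t, e = PARTS[part][mps]
    return w_time * t + w_energy * e


def allocate(orders: List[str], w_time: float = 1.0, w_energy: float = 1.0,
             threshold: float = THRESHOLD) -> Tuple[List[Tuple[str, str]], Dict[str, int]]:
    """Assign each order (assumed to be one unit) to MPS1 or MPS2."""
    load = {"MPS1": 0, "MPS2": 0}
    plan: List[Tuple[str, str]] = []
    for part in orders:
        open_lines = [m for m in load if load[m] < CAPACITY]
        if not open_lines:
            raise RuntimeError("both production lines are at capacity")
        costs = {m: cost(part, m, w_time, w_energy) for m in open_lines}
        cheaper = min(costs, key=costs.get)
        if len(open_lines) == 1 or max(costs.values()) > threshold * costs[cheaper]:
            choice = cheaper  # clear cost gap, or only one line left
        else:
            choice = min(open_lines, key=load.get)  # balance by current load
        load[choice] += 1
        plan.append((part, choice))
    return plan, load


plan, load = allocate(["A", "B", "C", "A", "B"])
print(plan)
print(load)
```

With unit weights, Part A always goes to MPS2 (its cost ratio exceeds 1.3), while Parts B and C fall within the threshold and alternate by load. This illustrates the rule only; it is not a reproduction of Table 3.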

The configuration above deliberately includes an unfavorable case, Part A on MPS1, which was designed to exceed the manufacture of Part A on MPS2 by about 40% in time and 50% in energy consumption, in order to validate the implemented algorithm. A closer look at the algorithm’s sensitivity starts from its heuristic threshold, initially set at 1.3, which determines whether the cost difference between MPS1 and MPS2 is large enough to justify reassignment. If the higher cost does not exceed the lower cost by a factor of 1.3, the MPS with the lowest accumulated load is prioritized. With the threshold at 1.3, the values of α and β change the cost of each MPS, but order allocation does not change significantly because the cost differences remain within the threshold; the heuristic thus acts as a regulator that damps fluctuations. In contrast, when the tolerance is removed by setting the threshold to 1, the same values of α and β produce significant redistribution between the MPSs, since minor cost differences are enough to change the assignment. When time weighting is prioritized over energy (α = 1 and β = 2), costs rise sharply on MPS1, yet the algorithm continues to assign orders in a balanced way while the threshold stays at 1.3. Conversely, with the threshold reduced to 1, the algorithm becomes highly sensitive, and even minor differences redirect the assignment toward the relatively cheaper MPS. Table 4 summarizes the sensitivity analysis of the hybrid ILP–heuristic algorithm for different configurations of α and β and different heuristic thresholds.
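The qualitative effect of the threshold can be reproduced with a small sweep. The sketch below reuses the same hypothetical greedy rule (raw weighted sum of the Table 1 values, one unit per order, capacity 40) and counts how many of ten illustrative orders each MPS receives for several weightings and for thresholds of 1.3 and 1. The weight names `w_time`/`w_energy` and the order sequence are assumptions, and the results are not those of Table 4.

```python
from itertools import product
from typing import Dict, Iterable, List, Tuple

# (time [s], energy value [W]) per part and MPS, as quoted from Table 1.
PARTS = {
    "A": {"MPS1": (218, 843), "MPS2": (156, 562)},
    "B": {"MPS1": (156, 569), "MPS2": (154, 558)},
    "C": {"MPS1": (128, 572), "MPS2": (127, 571)},
}


def allocate(orders: List[str], w_time: float, w_energy: float,
             threshold: float, capacity: int = 40) -> Dict[str, int]:
    """Return how many orders each MPS receives under one configuration."""
    load = {"MPS1": 0, "MPS2": 0}
    for part in orders:
        open_lines = [m for m in load if load[m] < capacity]
        if not open_lines:
            raise RuntimeError("both production lines are at capacity")
        costs = {m: w_time * PARTS[part][m][0] + w_energy * PARTS[part][m][1]
                 for m in open_lines}
        cheaper = min(costs, key=costs.get)
        if len(open_lines) == 1 or max(costs.values()) > threshold * costs[cheaper]:
            choice = cheaper  # cost gap exceeds the tolerance
        else:
            choice = min(open_lines, key=load.get)  # within tolerance: balance load
        load[choice] += 1
    return load


def sweep(orders: List[str], weights: Iterable[Tuple[float, float]],
          thresholds: Iterable[float]) -> Dict[Tuple[float, float, float], Dict[str, int]]:
    """Allocation counts for every (w_time, w_energy, threshold) combination."""
    return {(wt, we, h): allocate(orders, wt, we, h)
            for (wt, we), h in product(weights, thresholds)}


orders = list("ABC" * 3 + "A")  # ten hypothetical orders
for config, counts in sweep(orders, [(1, 1), (1, 2), (2, 1)], [1.3, 1.0]).items():
    print(config, counts)
```

In this toy run, a threshold of 1.3 gives an even 5/5 split for all three weightings, whereas a threshold of 1 sends all ten orders to MPS2, because MPS2 is marginally cheaper for every part. The tolerance, not the weights, dominates the outcome, which matches the regulating role described above.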

Table 4.

Comparison of allocation under different configurations of α, β, and heuristic threshold.

Section 3.2 discusses the processes executed by the MESs and the processing load borne by their microprocessors (MPUs). Table 2, however, does not include the time the MES takes to execute the stock update; the base study reports that this process caused a delay of 21 [s], which points indirectly to high CPU usage and the possibility of reaching the thermal throttling threshold. To address this, a Coral USB Accelerator (Edge TPU) was integrated to speed up inference of the YOLOv5 model. Offloading inference from the CPU improved the performance of each MES and significantly reduced both the temperature and the time the RPi4 needed to send the stock update to the FC node. Table 5 shows the average performance variables of the MESs during the stock update process and other concurrent processes.

Table 5.

Comparison of MES performance variables executed on initial and evolutionary architecture.

5. Discussion

This study applied advanced technologies to an initial architecture with the aim of a phased transition from a traditional Industry 3.0 infrastructure to a more digitized Industry 4.0 environment. Along the way, two issues relevant to SME ecosystems were identified: how flexibly the initial architecture behaves when production capacity is expanded, and the operational latency of the MES with embedded artificial intelligence. In response, a second MES was adopted to manage the second production line, coordinated by an FC node that runs an optimization algorithm for load balancing, and an inference accelerator was coupled to each MES to free up computing capacity. Unlike other studies, this work shows the practical benefits of architectures with flexibility features: advanced technologies were deployed in stages, and methods were incorporated to ensure a smooth workflow throughout the supply chain, with the contribution aimed specifically at SMEs’ research and development (R&D) needs. We emphasize this approach because our review of similar studies found solutions that depend heavily on robust technological infrastructure and therefore involve a high level of complexity in implementing advanced and emerging solutions, as well as high integration costs. These factors discourage small businesses from pursuing R&D.

This study demonstrated the practical benefits of FC: the FC node communicated directly with the MESs and provided resilience during internet service interruptions. More importantly, it orchestrated tasks with stable load balancing, because it runs a hybrid algorithm based on ILP and heuristic rules whose parameters can be adjusted to the business’s operational needs. At the same time, equipping an SME’s R&D architecture with advanced technologies requires an analysis of the computational power of the management and distribution nodes, which is limited. Without modifying the technologies of the initial architecture, the computational load was reduced, keeping temperatures within tolerance, by adding an edge acceleration module that preserves connectivity and interoperability throughout the architecture.

Extending the base study’s architecture produced substantial improvements throughout the production chain. The bidirectional communication latency seen by the MES edge devices fell from approximately 1.7 [s] (MES–cloud, base architecture) to 22 [ms] (MES–FC node), and the computational processing time of the MESs for the stock update fell from approximately 21 [s] to 6 [s]. These results bear directly on key optimization factors, including energy consumption, responsiveness, thermal stability, scalability, and flexibility. One layer higher in the extended architecture, the FC node orchestrates tasks according to the plant’s operational weights (energy consumption and part manufacturing time).
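As a quick arithmetic check of the figures above, the relative reductions can be computed directly. They give the 98.7% latency reduction reported in the Conclusions and about 71% for the stock update, consistent with the roughly 70% stated there.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return 100.0 * (before - after) / before


latency = percent_reduction(1.7, 0.022)  # order request/download: 1.7 s -> 22 ms
stock = percent_reduction(21.0, 6.0)     # MES stock update: 21 s -> 6 s
print(f"latency: {latency:.1f} %, stock update: {stock:.1f} %")
```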

Furthermore, because the algorithm does not rely on computationally heavy optimization, it conserves the FC node’s resources and supports adaptable growth and a flexible architecture for future scenarios. The literature does include many advanced approaches to load balancing in FC nodes based on metaheuristics, neural networks, or intelligent hybrid systems [33,34,35,36,37]. These methods differ from the approach proposed in this study, particularly in their computational requirements and their viability in real industrial environments. They require advanced computational infrastructure, which makes them difficult to implement in SME environments that rely on low-cost hardware. Moreover, their architectures focus on theoretical efficiency without integrating industrial agents such as MESs or the direct control of manufacturing production lines, relying instead on generic IoT or edge devices. In contrast, the proposed hybrid ILP–heuristic algorithm is designed for resource-constrained environments and prioritizes robustness, simplicity of implementation, and near-real-time response, which makes it viable on FC nodes that are sensitive to computational load.

From the above, this contribution addresses several challenges faced by SMEs and MSMEs. However, the situation of these companies varies widely and depends on government policies for economic support and R&D. Nevertheless, the study offers a practical reference for the use of flexible architectures that have actually been implemented. On the academic side, it presents an architecture that allows optimization methods and techniques to be applied across different layers and levels of the infrastructure, so that new technologies and Industry 4.0 pillars can be adopted as business needs arise. Several directions for future work would be evident to experts after a systematic literature review; above all, we propose more studies that implement these contextualizations, models, and simulations so that they can be applied to real-world business scenarios. From a critical point of view, the implemented architecture still needs resilient systems operated from FC nodes to manage different types of process variables, thus ensuring end-to-end, vertical, and horizontal interconnection. Similarly, it does not yet cover the life cycle of sold products or the return of recyclable inputs. These gaps show that the work still leaves several research niches open.

6. Conclusions

Depending on the business ecosystem in which they operate, SMEs and micro-SMEs can produce value-added manufactured goods for both domestic and international markets. In developing countries, however, this prospect often seems utopian because of a lack of government support, which in turn hinders innovation and development. Against this background, and building on the flexibility and modularity of the RAMI 4.0 reference framework discussed in the study’s rationale, this study integrates advanced and emerging technologies into an initial Industry 4.0 architecture. The result is an extended architecture with features suited to implementation in SMEs, whose performance metrics can be compared directly with those of the initial architecture. By placing an FC node close to the MESs, the extended architecture reduced request and data download latency by 98.7%. In addition, order orchestration was optimized according to business needs, specifically production costs such as energy consumption and the time required for product preparation and manufacture, going beyond the dynamic load balancing based on production status used in the initial architecture. The FC implementation thus provides low latency, scalability to additional MESs, optimized resource use, and greater resilience of load balancing to network service failures.
In addition, to improve the performance of the hardware that supports the MES plant management system of the initial architecture and, indirectly, the latency of stock updates to the cloud, an ML inference acceleration module was integrated into each SBC, reducing CPU usage by approximately 60% and temperature by approximately 20%. This avoided a computational bottleneck and, consequently, the propagation of latency throughout the supply chain: reducing the latency of stock updates to the cloud by about 70% also reduces the management time of each MES. This solution is particularly suitable for companies with limited resources and can be an effective strategy for optimizing technology costs.

Author Contributions

Conceptualization, W.O. and R.S.; methodology, W.O.; software, W.O.; validation, W.O.; formal analysis, W.O.; investigation, W.O. and R.S.; writing–original draft preparation, W.O. and R.S.; writing–review and editing, W.O.; data curation, W.O.; visualization, W.O.; supervision, R.S.; review, R.S.; project administration, W.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Bouzarkouna, I.; Sahnoun, M.; Sghaier, N.; Baudry, D.; Gout, C. Challenges facing the industrial implementation of fog computing. In Proceedings of the 2018 IEEE 6th International Conference on Future Internet of Things and Cloud (FiCloud), Barcelona, Spain, 6–8 August 2018; pp. 341–348.

- Singh, G.; Singh, J. A cost-effective IoT-assisted framework coupled with fog computing for smart agriculture. In Proceedings of the 2023 IEEE 8th International Conference for Convergence in Technology (I2CT), Lonavla, India, 7–9 April 2023; pp. 1–8.

- IEEE 1934–2018; IEEE Standard for Adoption of OpenFog Reference Architecture for Fog Computing. IEEE Standards Association: Piscataway, NJ, USA, 2018. Available online: https://standards.ieee.org/standard/1934-2018.html (accessed on 25 January 2025).

- Chang, C.; Srirama, S.; Buyya, R. Indie Fog: An efficient fog-computing infrastructure for the Internet of Things. Computer 2017, 50, 92–98.

- Mana, C.S.; Samhitha, B.; Deepa, D.; Vignesh, R. Analysis on application of fog computing in Industry 4.0 and smart cities. In Energy Conservation Solutions for Fog-Edge Computing Paradigms; Tiwari, R., Mittal, M., Goyal, L.M., Eds.; Lecture Notes on Data Engineering and Communications Technologies; Springer: Singapore, 2022; Volume 74, pp. 87–105.

- Hazra, A.; Rana, P.; Adhikari, M.; Amgoth, T. Fog computing for next-generation Internet of Things: Fundamental, state-of-the-art and research challenges. Comput. Sci. Rev. 2023, 48, 100549.

- Yousefpour, A.; Fung, C.; Nguyen, T.; Kadiyala, K.; Jalali, F.; Niakanlahiji, A.; Kong, J.; Jue, J.P. All one needs to know about fog computing and related edge computing paradigms: A complete survey. J. Syst. Archit. 2019, 98, 289–330.

- Mori, T.; Utsunomiya, Y.; Tian, X.; Okuda, T. Optimal Design of Fog Cloud Computing System Using Mixed Integer Linear Programming. IEEJ Trans. Electron. Inf. Syst. 2019, 139, 648–657.

- Kopras, B.; Bossy, B.; Idzikowski, F.; Kryszkiewicz, P.; Bogucka, H. Task allocation for energy optimization in fog computing networks with latency constraints. IEEE Trans. Commun. 2022, 70, 8229–8243.

- Sun, W.-B.; Xie, J.; Yang, X.; Wang, L.; Meng, W.-X. Efficient computation offloading and resource allocation scheme for opportunistic access fog-cloud computing networks. IEEE Trans. Cogn. Commun. Netw. 2023, 9, 521–533.

- Bogucka, H.; Kopras, B.; Idzikowski, F.; Bossy, B.; Kryszkiewicz, P. Green time-critical fog communication and computing. IEEE Commun. Mag. 2023, 61, 40–45.

- Oñate, W.; Sanz, R. Analysis of Architectures Implemented for IIoT. Heliyon 2023, 9, e12868.

- Daghayeghi, A.; Nickray, M. Delay-aware and energy-efficient task scheduling using Strength Pareto Evolutionary Algorithm II in fog-cloud computing paradigm. Wirel. Pers. Commun. 2024, 138, 409–457.

- Bouzarkouna, I.; Sahnoun, M.; Bettayeb, B.; Baudry, D.; Gout, C. Optimal deployment of fog-based solution for connected devices in smart factory. IEEE Trans. Ind. Inform. 2024, 20, 5137–5146.

- Omar, A.; Bouzarkouna, I.; Sahnoun, M.; Brik, B.; Baudry, D. Deployment of fog computing platform for cyber physical production system based on Docker technology. In Proceedings of the 2019 3rd International Conference on Applied Automation and Industrial Diagnostics (ICAAID), Elazig, Turkey, 25–27 September 2019; p. 8934949.

- Zhu, L.; Fu, X.; Liu, X.; Du, S. Modeling and Analysis of Cascade Failures in Industrial Internet of Things Based on Task Decomposition and Service Communities. Comput. Ind. Eng. 2025, 206, 111177.

- Oñate, W.; Sanz, R. Integration of Fog Computing in a Distributed Manufacturing Execution System Under the RAMI 4.0 Framework. Appl. Sci. 2024, 14, 10539.

- Nagorny, K.; Scholze, S.; Colombo, A.W.; Oliveira, J.B. A DIN Spec 91345 RAMI 4.0 Compliant Data Pipelining Model: An Approach to Support Data Understanding and Data Acquisition in Smart Manufacturing Environments. IEEE Access 2020, 8, 223114–223129.

- Eghtesadifard, M.; Khalifeh, M.; Khorram, M. A Systematic Review of Research Themes and Hot Topics in Assembly Line Balancing through the Web of Science within 1990–2017. Comput. Ind. Eng. 2020, 139, 106182.

- Al-Msary, A.J.K.; Talib, A.H.; Kadhim, B.S. The Impact of Concurrent Engineering Techniques on Assembly Line Redesign: An Applied Study. J. Eur. Syst. Autom. 2024, 57, 945–952. Available online: https://api.semanticscholar.org/CorpusID:272157303 (accessed on 16 February 2025).

- Aguilar Gamarra, H.N. Equilibrado de Líneas de Montaje en Paralelo con Estaciones Multilínea y Dimensionado de Buffers. Ph.D. Thesis, Departament d’Organització d’Empreses, Universitat Politècnica de Catalunya, Barcelona, Spain, 2022. Available online: http://hdl.handle.net/2117/380365 (accessed on 12 March 2025).

- Salcedo-Pérez, C.; Contreras, A.C. Instrumentos financieros para el crecimiento sostenible de las pequeñas y medianas empresas: Estrategias para las economías emergentes de América Latina. In Manual de Investigación Sobre Intraemprendimiento y Sostenibilidad Organizacional en Pymes; Pérez-Uribe, R., Salcedo-Pérez, C., Ocampo-Guzmán, D., Eds.; IGI Global Scientific Publishing: Hershey, PA, USA, 2018; pp. 276–293.

- Tong, L.Z.; Wang, J.; Pu, Z. Sustainable supplier selection for SMEs based on an extended PROMETHEE II approach. J. Clean. Prod. 2022, 330, 129830.

- Fischer, M.; Schuh, G.; Stich, V. The Impact of Manufacturing Execution Systems on the Digital Transformation of Production Systems—A Maturity Based Approach. In Proceedings of the Conference on Production Systems and Logistics, Online, 10–11 August 2021; pp. 446–456.

- Waschull, S.; Wortmann, J.C.; Bokhorst, J.A.C. Identifying the Role of Manufacturing Execution Systems in the IS Landscape: A Convergence of Multiple Types of Application Functionalities. IFIP Adv. Inf. Commun. Technol. 2019, 567, 502–510.

- Xia, Y.; Guo, X. Intelligent Manufacturing Line Design Based on Parallel Collaboration. In Proceedings of the 2022 5th World Conference on Mechanical Engineering and Intelligent Manufacturing (WCMEIM), Ma’anshan, China, 18–20 November 2022; pp. 1149–1152.

- El Zant, C.; Charrier, Q.; Benfriha, K.; Le Men, P. Enhanced Manufacturing Execution System “MES” Through a Smart Vision System. In Advances on Mechanics, Design Engineering and Manufacturing III; Lecture Notes in Mechanical Engineering; Springer: Cham, Switzerland, 2021; pp. 329–334.

- Garcia, A.; Oregui, X.; Ojer, M. Edge Architecture for the Automation and Control of Flexible Manufacturing Lines. Procedia Comput. Sci. 2024, 237, 305–312.

- Furmanek, L.; Lins, S.; Blume, M.; Sunyaev, A. Developing a hybrid deployment model for highly available manufacturing execution systems. In Proceedings of the 2024 47th ICT and Electronics Convention (MIPRO), Opatija, Croatia, 20–24 May 2024; pp. 2095–2100.

- Gharbi, I.; Barkaoui, K.; Samir, B.A. An Intelligent Agent-Based Industrial IoT Framework for Time-Critical Data Stream Processing. In Mobile, Secure, and Programmable Networking; Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Cham, Switzerland, 2021; Volume 12605, pp. 195–208.

- Chiu, P.C.; Rana, M.E.; Hameed, V.A.; Seeralan, A. Cloud Manufacturing in the Semiconductor Industry: Navigating Benefits, Risks, and Hybrid Solutions in the Era of Industry 4.0. In Proceedings of the 2023 IEEE 21st Student Conference on Research and Development, SCOReD, Kuala Lumpur, Malaysia, 13–14 December 2023; pp. 616–621.

- IEC 62443-4-1:2018; Industrial Communication Networks—Network and System Security. International Electrotechnical Commission (IEC): Geneva, Switzerland, 2018.

- Singh, S.P. Effective Load Balancing Strategy Using Fuzzy Golden Eagle Optimization in Fog Computing Environment. Sustain. Comput. Inform. Syst. 2022, 35, 100766.

- Tahmasebi-Pouya, N.; Sarram, M.A.; Mostafavi, S. A Reinforcement Learning-Based Load Balancing Algorithm for Fog Computing. Telecommun. Syst. 2023, 84, 321–339.

- Shaik, M.B.; Reddy, K.S.; Chokkanathan, K.; Biabani, S.A.A.; Shanmugaraja, P.; Brabin, D.R.D. A Hybrid Particle Swarm Optimization and Simulated Annealing with Load Balancing Mechanism for Resource Allocation in Fog-Cloud Environments. IEEE Access 2024, 12, 172439–172450.

- Nayak, A.; Tripathy, S.S.; Bebortta, S.; Tripathy, B. An Intelligent Study towards Nature-Inspired Load Balancing Framework for Fog-Cloud Environments. In Proceedings of the 2024 IEEE International Conference for Women in Innovation, Technology & Entrepreneurship (ICWITE), Bangalore, India, 16–17 February 2024; pp. 168–173.

- Alkayal, E.S.; Alharbi, N.M.; Alwashmi, R.; Ali, W. Improving Fog Resource Utilization with a Dynamic Round-Robin Load Balancing Approach. Int. J. Adv. Appl. Sci. 2024, 11, 196–205.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).