Featured Application

The proposed intelligent filtering system can be seamlessly integrated into platforms utilizing large language models (LLMs), including customer service chatbots, educational tutors, healthcare assistants, and code-generation tools. By dynamically identifying and mitigating harmful, biased, or unethical outputs in real time, the system significantly enhances the safety and reliability of LLM-powered applications. This approach supports the responsible deployment of generative artificial intelligence (AI) technologies by ensuring adherence to ethical standards and regulatory frameworks while simultaneously preserving a high-quality user experience.

Abstract

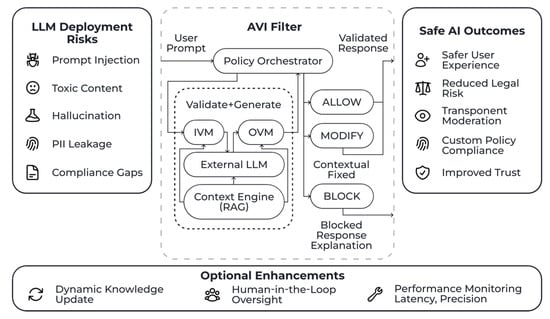

This paper proposes a technological framework designed to mitigate the inherent risks associated with the deployment of artificial intelligence (AI) in decision-making and task execution within management processes. The Aligned Validation Interface (AVI) functions as a modular Application Programming Interface (API) Gateway positioned between user applications and LLMs. This gateway architecture is designed to be LLM-agnostic, meaning it can operate with various underlying LLMs without requiring specific modifications for each model. This universality is achieved by standardizing the interface for requests and responses and applying a consistent set of validation and enhancement processes irrespective of the chosen LLM provider, thus offering a consistent governance layer across a diverse LLM ecosystem. AVI facilitates the orchestration of multiple AI subcomponents for input–output validation, response evaluation, and contextual reasoning, thereby enabling real-time, bidirectional filtering of user interactions. A proof-of-concept (PoC) implementation of AVI was developed and evaluated using industry-standard benchmarks. The system was tested for its effectiveness in mitigating adversarial prompts, reducing toxic outputs, detecting personally identifiable information (PII), and enhancing factual consistency. The results demonstrated that AVI reduced successful prompt injection attacks by 82%, decreased toxic content generation by 75%, and achieved high PII detection performance (F1-score ≈ 0.95). Furthermore, the contextual reasoning module significantly improved the neutrality and factual validity of model outputs. Although the integration of AVI introduced a moderate increase in latency, the overall framework effectively enhanced the reliability, safety, and interpretability of LLM-driven applications.
AVI provides a scalable and adaptable architectural template for the responsible deployment of generative AI in high-stakes domains such as finance, healthcare, and education, promoting safer and more ethical use of AI technologies.

1. Introduction

In this paper, we propose the Aligned Validation Interface (AVI), an intelligent, LLM-agnostic API gateway that provides modular, bidirectional filtering for the safe and ethical deployment of large language models. Unlike existing solutions, AVI combines negative filtering (e.g., prompt injection and PII detection) with positive filtering through RAG-based contextual grounding, enabling fine-grained policy control across diverse application domains. This framework introduces a scalable and adaptable architectural pattern that enhances trust, transparency, and compliance in LLM interactions.

The rapid proliferation and integration of large language models (LLMs) represent a paradigm shift across numerous domains, including scientific research, business operations, education, content creation, and daily communication [1]. These powerful models, exemplified by architectures like GPT-4, Claude, Llama, Mistral, and Gemini [2], demonstrate remarkable capabilities in understanding and generating human-like text, translating languages, summarizing complex information, and even writing creative content and code. Their potential to enhance productivity, accelerate discovery, and democratize access to information is immense, driving their widespread adoption by organizations and individuals globally [3].

However, this transformative potential is intrinsically linked with significant and multifaceted risks that challenge the safe, ethical, and reliable deployment of LLMs [4,5]. As LLMs become more deeply embedded in critical applications and user interactions, concerns regarding their security vulnerabilities, potential for generating harmful or biased content, propensity for factual inaccuracies (hallucinations), and risks to data privacy have become paramount [6,7]. The flexibility and generative power that make LLMs so valuable create attack surfaces and potential failure modes that differ significantly from those of traditional software systems. Security risks include prompt injection attacks, where malicious inputs can hijack the model’s intended function, data poisoning during training, and potential denial-of-service vectors [7]. Ethical concerns are equally pressing, encompassing the generation and amplification of biased language reflecting societal prejudices (gender, race, etc.), the creation of toxic or hateful content, the spread of misinformation and disinformation at scale, and the potential for manipulative use [8]. Furthermore, LLMs trained on vast datasets may inadvertently leak sensitive personally identifiable information (PII) or confidential corporate data present in their training corpus or user prompts [8]. Finally, the inherent nature of current generative models leads to the phenomenon of “hallucinations” (the generation of plausible but factually incorrect or nonsensical statements), which undermines their reliability, particularly in knowledge-intensive applications [9]. The OWASP Top 10 for LLM Applications project (now the OWASP Gen AI Security Project) underscores the industry-wide recognition of these critical vulnerabilities [9]. These challenges are particularly acute in sectors like finance, healthcare, legal services, and public administration, where trust, accuracy, and compliance are non-negotiable [10].

In response to these challenges, various approaches to LLM safety and content moderation have emerged. Major LLM developers like OpenAI and Anthropic have incorporated safety mechanisms directly into their models and APIs, often through extensive alignment processes like Reinforcement Learning from Human Feedback (RLHF) and Constitutional AI [10]. OpenAI offers a Moderation API, while Anthropic’s models are designed with inherent safety principles [11]. While valuable, relying solely on this built-in alignment presents limitations. These internal safety layers can sometimes be overly restrictive, leading to refusals to answer legitimate queries or exhibiting a form of “over-censorship” that hinders utility [11]. Furthermore, their specific alignment targets and policies are often opaque (“black box”) and may not be sufficiently customizable to meet the diverse requirements of specific applications, industries, or international regulatory landscapes (e.g., General Data Protection Regulation (GDPR) and the EU AI Act), which creates a dependency on the provider’s specific safety philosophy and implementation [12].

The research community and specialized companies are actively developing alternative and supplementary safety solutions. Platforms and tools from companies like Turing, Lasso Security, Protect AI, Granica AI, PromptArmor, Enkrypt AI, Fairly AI, and Holistic AI address various facets of AI security, governance, and risk management [13]. Approaches include specialized security layers, prompt injection defense, data privacy techniques, vulnerability assessment, and comprehensive governance frameworks. Google’s Perspective API targets toxicity detection, while open-source projects and academic research explore filter models, Retrieval-Augmented Generation (RAG) for grounding, and hallucination/bias detection methods [14]. However, many external solutions focus on specific threat vectors, may introduce significant performance overhead, or lack seamless integration capabilities. The need for effective multilingual moderation further complicates the landscape for global applications.

This landscape reveals a critical need for a comprehensive, adaptable, and easily integrable framework that allows organizations to implement fine-grained control over LLM interactions. There is a gap for solutions that can provide robust safety and ethical alignment without solely relying on the inherent, often inflexible, safety layers of the base LLM. Such a solution should empower users to leverage a wider range of LLMs, including potentially less restricted open-source models, while maintaining control over the interaction’s safety profile. Furthermore, an ideal system should move beyond purely reactive filtering (“Negative Filtering”) to proactively enhance the quality and safety of LLM outputs (“Positive Filtering”), for instance, by grounding responses in trusted information sources. Diverging hypotheses exist regarding the optimal locus of control—internal model alignment versus external validation layers.

This paper introduces the AVI (Aligned Validation Interface), an intelligent filter system designed to bridge this gap. AVI functions as a modular Application Programming Interface (API) Gateway positioned between user applications and LLMs, providing a centralized point for monitoring, validating, and aligning LLM interactions according to configurable policies. Its core architectural principle enables universality, making it largely independent of the specific underlying LLM being used and offering potential extensibility to various data types beyond text in the future. We propose that AVI’s “Compound AI” approach, orchestrating specialized validation and enhancement modules, offers significant advantages. This approach, by design, leverages a collection of distinct, specialized AI subcomponents rather than relying on a single monolithic safety model. Such modularity provides greater flexibility in selecting and combining best-of-breed techniques for specific tasks (e.g., toxicity detection, PII masking, and factual verification), enhances maintainability as individual components can be updated independently, and allows for more transparent and auditable decision-making pathways. This modularity allows for reduced dependency on the base LLM’s built-in alignment, enabling users to tailor safety and ethical constraints externally. Crucially, AVI is designed for “bidirectional positive/negative filtering”. This dual capability means AVI performs two key types of interventions: (1) negative filtering: it detects and mitigates risks (such as harmful content, PII, or policy violations) in both user inputs (before they reach the LLM) and in the LLM’s raw outputs (before they reach the user). This is primarily achieved through its Input Validation Module (IVM) and Output Validation Module (OVM); (2) positive filtering: beyond simply blocking or modifying problematic content, AVI proactively aims to improve the quality, factual accuracy, and safety of LLM responses. 
This is achieved by injecting verified, trusted context into the LLM’s generation process, primarily through its RAG-based Contextualization Engine (CE). This bidirectional approach ensures comprehensive oversight of the LLM interaction lifecycle.

The central hypothesis tested in this work is that the AVI framework can effectively mitigate a broad spectrum of LLM-associated risks (including prompt injection, toxic content, PII leakage, and hallucinations) across diverse LLMs while offering superior flexibility and control compared to relying solely on built-in model safety features and imposing acceptable performance overhead. The main aim of this work is to present the conceptual architecture and methodology of AVI and to evaluate its feasibility and effectiveness through a proof-of-concept (PoC) implementation. We detail the design of the core AVI modules (Input/Output Validation, Response Assessment, and Contextualization Engine) and their orchestration. We introduce a mathematical model and metrics for evaluation. Finally, we present preliminary results from our PoC evaluation, demonstrating AVI’s potential to enhance the safety, reliability, and ethical alignment of LLM interactions. This work contributes a practical and adaptable architectural pattern for governing LLM usage, aiming to make LLMs more trustworthy and applicable in critical domains like finance, healthcare, education, and enterprise applications, thereby fostering responsible AI adoption. The described principles are intended to be comprehensible to a broad audience of scientists and engineers working with applied AI.

In summary, this paper addresses the identified research gap and proposes a new technological solution, the Aligned Validation Interface (AVI), a Compound AI framework that acts as an intelligent API gateway to manage interactions between LLMs and end-users. AVI introduces a dual-pronged approach: (1) negative filtering to detect and block malicious, biased, or privacy-infringing content in both input and output; and (2) positive filtering to enhance factual and contextual relevance using RAG-based reasoning. The interface’s modular design supports input validation, output evaluation, hallucination detection, and context injection, all managed through an orchestration layer driven by industry, government, or enterprise policies. Unlike black-box model alignments or fragmented security add-ons, AVI is configurable, highly scalable, and independent of the underlying LLM, allowing for flexibility and integration across a wide range of applications and compliance settings [15]. By combining rule-based systems, machine-learning classifiers, named entity recognition, and semantic similarity techniques, AVI enables organizations to dynamically monitor, validate, and adjust LLM responses. Preliminary evaluation of the proof-of-concept system has shown promising results in reducing prompt injection success rates, reducing toxicity, accurately identifying PII, and increasing factual accuracy, all while maintaining acceptable latency. As such, AVI represents a significant step toward implementing responsible AI principles in real-world LLM deployments. Section 2 reviews the literature on LLM risks and existing safety and governance approaches. Section 3 describes the AVI architecture, its core modules, and the evaluation metrics. Section 4 presents the results of the experimental study, followed by suggestions for further research in Section 5 and conclusions and limitations in Section 6.

2. Literature Review

The rapid development and widespread adoption of large language models (LLMs)—including GPT-4, Claude, and LLaMA—have profoundly influenced many of the world’s leading industries, particularly those in the service sector [16]. These models offer powerful capabilities for natural language generation, product and technology design, and the identification of inefficiencies within management process chains. LLMs now serve as foundational technologies in domains such as healthcare, finance, legal services, customer support, and education [17]. They can generate answers to user queries, synthesize and transform information into structured knowledge, translate languages, and perform complex reasoning tasks.

Despite their transformative potential, the rise of LLMs has elicited serious concerns among users and developers, particularly in relation to security, bias, factual reliability, privacy, and compliance with data protection regulations—especially when these models are applied in high-risk or mission-critical business environments [18]. Key risks include prompt injection attacks, the generation of toxic or biased content, hallucinations (i.e., plausible but incorrect outputs), and the unintended leakage of personally identifiable information (PII) [19,20]. These vulnerabilities pose significant dangers when LLMs are integrated into sensitive applications that demand high levels of trust, reliability, and ethical governance.

Applications in such contexts require both flexibility and rigorous risk management strategies. To address these challenges, leading LLM developers have embedded internal security mechanisms. For instance, OpenAI has employed Reinforcement Learning from Human Feedback (RLHF) and moderation APIs to align model behavior with governmental and industry-specific security standards. Similarly, Anthropic’s Constitutional AI approach incorporates rule-based constraints to guide model behavior [21,22].

However, these existing mechanisms exhibit notable limitations. First, the alignment layers in commercial models are often opaque, making them difficult to audit and insufficiently adaptable across different domains or regulatory regimes. Second, they may impose excessive censorship or restrictive controls, leading to the rejection of benign user queries and a degradation of user experience [23]. Third, many organizations opt to implement open-source or third-party models to reduce technological and operational costs, which often results in inadequate built-in security features.

Given these limitations, relying solely on internal alignment mechanisms is insufficient, particularly in sectors governed by strict privacy laws, compliance mandates, and cultural norms. In response, external security tools and middleware frameworks have emerged to supplement or replace internal safeguards. For example, tools such as PromptArmor, Holistic AI, and Google’s Perspective API provide targeted protections against prompt injections, bias, and toxic outputs [24,25]. Concurrently, academic research is exploring frameworks for hallucination detection, automated ethical filtering, and AI governance auditing [26,27,28].

While these initiatives are valuable and address key components of LLM security, they often focus on isolated threats and lack holistic orchestration across the end-to-end model interaction pipeline. Furthermore, they are frequently ill equipped to address dynamic and unpredictable external risks. Thus, an integrative, modular approach is required to ensure more comprehensive and adaptable governance of LLM-based systems.

There is growing interest in the use of retrieval-augmented generation (RAG) to ground model outputs in validated knowledge retrieved on demand [29]. RAG can help not only to reduce hallucinations but also to align responses with domain-specific context. However, few systems seamlessly integrate RAG into a modular security architecture capable of real-time filtering, assessing risks (whether predictable or hard to detect), and enhancing the context of outputs.

Microsoft has developed a Responsible AI Standard, a framework for building AI systems according to six principles: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability (Table 1).

Table 1. Microsoft’s responsible AI principles: descriptions and industry examples.

Neural networks operate on a probabilistic basis, so their answers are not always accurate. For example, AI can generate incorrect code but evaluate it as correct (the overconfidence effect). However, such errors are also common among humans, and AI trained on large datasets is more likely to imitate the work of an experienced developer than that of an inexperienced one. The software development lifecycle (SDLC) involves various risks at each stage when incorporating AI technologies. During maintenance and operation, AI systems may produce hallucinations (plausible but incorrect information) and can be vulnerable to manipulation by malicious users, such as through SQL injection attacks. In the deployment phase, generative AI models require high computational resources, potentially leading to excessive costs, and AI-generated configurations may degrade performance or introduce system vulnerabilities. Implementation risks include insecure code produced by AI developer assistants and flawed AI-generated tests that may reinforce incorrect behavior [31]. To mitigate these risks, the SDLC is typically divided into key phases: requirements analysis, architecture design, development, testing, and maintenance. Each phase must consider specific AI-related challenges such as data specificity, adaptability to model changes, code security, and non-functional testing aspects like robustness against attacks. Increasingly, the analysis and design stages are merging, with developers directly collaborating with business stakeholders to define and refine system requirements, a practice common in both startups and major firms. Internal engineering platforms now handle many architectural constraints, adding flexibility to the design process [32]. Historically, roles like business analyst and developer were separated due to the high cost of programmer labor, but agile methods and faster development cycles have shown that excessive role separation can hinder progress [33]. AI is further reshaping these dynamics as generative models can write substantial code segments, though they require carefully crafted prompts to yield reliable results. This dependence on prompt quality represents one of the key AI-specific risks at the requirements analysis and system design stages.

Additionally, AI has limited contextual understanding of user prompts. While developers rely not only on formal specifications but also on implicit knowledge about the project to make informed decisions, language models operate solely on the explicit information contained in the prompt. Furthermore, the process is affected by context length limitations: modern models can handle tens of thousands of tokens, but even this may be insufficient for complex tasks. As a result, generative AI currently serves more as an auxiliary tool than as an autonomous participant in the design process.

For example, the rapid rise in popularity of ChatGPT4.0 is not just a result of marketing efforts but also reflects a genuine technological breakthrough in the development of generative neural networks [34]. Over the past two and a half years, model quality has significantly improved due to larger training datasets and extended pre-training periods. However, this stage of technological evolution is nearing completion and is now being complemented by a new direction: enhancing output quality by increasing computational resources allocated per individual response. These are known as “reasoning models,” which are capable of more advanced logical reasoning. In parallel, ongoing efforts are being made to implement incremental algorithmic improvements that steadily enhance the accuracy and reliability of language models.

To effectively manage AI-specific risks in software development and application, it is essential first to have the ability to manage IT risks more broadly. Many of the current challenges associated with AI may evolve over time as the technologies continue to advance. However, the example and methodology presented in this article offer a systematic approach that can help tailor risk management strategies to real-world business needs [35,36]. Each company has its own level of maturity in working with AI, and understanding this factor is becoming a critical component of a successful AI adoption strategy.

To better position AVI within the existing landscape of LLM safety and governance solutions, Table 2 provides a conceptual comparison with several prominent tools and approaches. This comparison focuses on key architectural and functional characteristics, such as LLM-agnosticism, modularity, the types of filtering implemented, and the degree of policy configurability. It is important to note that this is a qualitative comparison based on publicly available documentation and the described operational principles of these systems, rather than a direct numerical benchmarking of performance, which is an avenue for future research (see Section 4.6). Nevertheless, this comparative overview helps to highlight the aspects where AVI aims to offer unique or more comprehensive capabilities in the pursuit of responsible LLM deployment.

Table 2. Conceptual comparison of AVI with existing LLM safety and governance solutions.

3. Materials and Methods

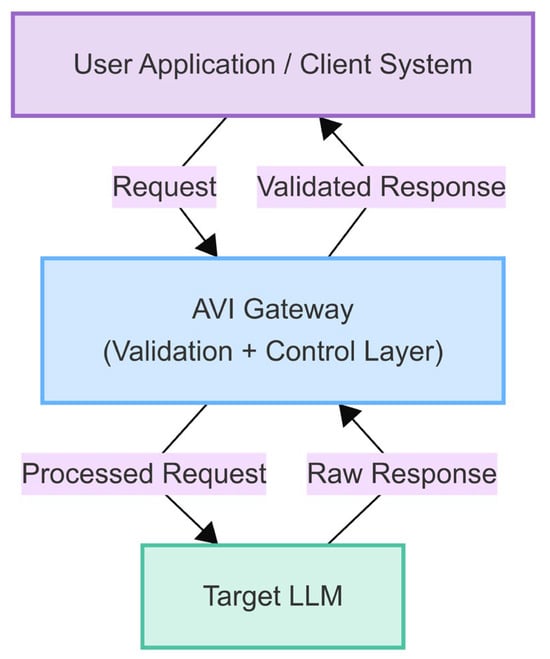

This study introduces and evaluates the Aligned Validation Interface (AVI), a conceptual framework designed to enhance the safety, reliability, and ethical alignment of interactions with large language models (LLMs). The AVI system operates as an intelligent API gateway, intercepting communication between user applications and downstream LLMs to apply configurable validation and modification policies. This architecture ensures LLM agnosticism and facilitates centralized control and monitoring. Underlying AVI is a Compound AI approach, orchestrating multiple specialized modules rather than relying on a single monolithic safety model. This modular design enhances flexibility and allows for the integration of diverse validation techniques (Figure 1). The primary goal of AVI is to enable safer, more reliable, and ethically aligned interactions by proactively validating and modifying the communication flow based on configurable policies, offering an alternative or supplement to relying solely on the often opaque and inflexible internal safety mechanisms built into many proprietary LLMs [37,38]. AVI aims to provide organizations with granular control over LLM interactions, adaptability to specific operational contexts and regulatory requirements (e.g., GDPR and the EU AI Act [39]), and compatibility with a diverse ecosystem of LLMs, thereby promoting responsible AI innovation and deployment across critical sectors such as finance, healthcare, and education [40].

Figure 1. High-level diagram of AVI as an API gateway. Source: made by authors.

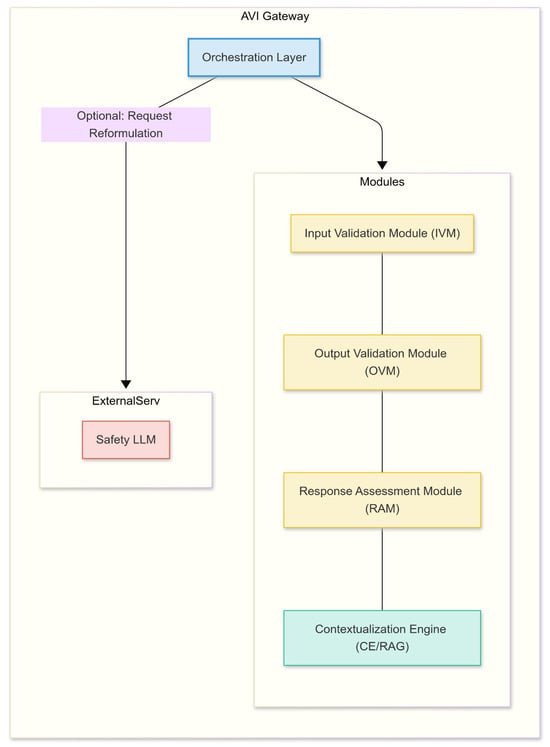

Architecturally, AVI functions as an API gateway, positioning it as a mandatory checkpoint (Figure 2). The typical data processing sequence involves: (1) receiving an incoming request; (2) pre-processing and input validation via the Input Validation Module (IVM); (3) optional context retrieval and augmentation via the Contextualization Engine (CE/RAG); (4) forwarding the processed request to the designated LLM; (5) intercepting the LLM’s response; (6) post-processing, assessment, and validation of the response via the Response Assessment Module (RAM) and Output Validation Module (OVM); (7) delivering the final, validated response. This gateway architecture provides LLM agnosticism, centralized policy enforcement, simplified application integration, and comprehensive logging capabilities. The Compound AI philosophy underpins this, utilizing an ensemble of specialized modules for distinct validation and enhancement tasks, promoting flexibility, maintainability, and the integration of diverse techniques (rule-based, ML-based, heuristic) [41].
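The seven-step sequence above can be sketched as a single function that chains the modules. This is a minimal illustration under stated assumptions, not the PoC implementation: `run_gateway`, `GatewayResult`, the callable signatures, and the stub checks in the usage section are all hypothetical names introduced here, and each module is reduced to a callable that returns a boolean verdict.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class GatewayResult:
    """Outcome returned to the calling application (hypothetical type)."""
    allowed: bool
    text: str
    stage: str  # stage that produced the final decision


def run_gateway(
    request: str,
    validate_input: Callable[[str], bool],
    retrieve_context: Callable[[str], Optional[str]],
    call_llm: Callable[[str], str],
    assess_response: Callable[[str, Optional[str]], bool],
    validate_output: Callable[[str], bool],
) -> GatewayResult:
    # Steps 1-2: receive the request and validate it (IVM).
    if not validate_input(request):
        return GatewayResult(False, "Request blocked by input policy.", "IVM")

    # Step 3: optionally retrieve trusted context (CE/RAG) and prepend it.
    context = retrieve_context(request)
    prompt = f"Context: {context}\n\nQuestion: {request}" if context else request

    # Steps 4-5: forward the prompt to the LLM and intercept its response.
    response = call_llm(prompt)

    # Step 6: assess quality (RAM), then validate content (OVM).
    if not assess_response(response, context):
        return GatewayResult(False, "Response withheld after assessment.", "RAM")
    if not validate_output(response):
        return GatewayResult(False, "Response blocked by output policy.", "OVM")

    # Step 7: deliver the validated response.
    return GatewayResult(True, response, "DELIVER")


# Usage with toy stand-ins for each module (all illustrative assumptions).
stubs = dict(
    validate_input=lambda text: "ignore previous instructions" not in text.lower(),
    retrieve_context=lambda text: "Refunds are issued within 14 days.",
    call_llm=lambda prompt: "Refunds are issued within 14 days of purchase.",
    assess_response=lambda resp, ctx: ctx is None or "14 days" in resp,
    validate_output=lambda resp: "@" not in resp,
)
result = run_gateway("What is the refund policy?", **stubs)
print(result.allowed, result.stage)
```

Passing the modules in as callables mirrors the LLM-agnostic intent of the gateway: the downstream model and each validator can be swapped without changing the control flow.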

Figure 2. Diagram illustrating the Compound AI concept. Source: made by authors.

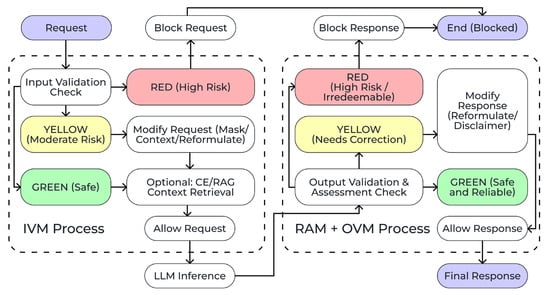

The core modules within the AVI framework are designed as follows: The Input Validation Module (IVM) analyzes incoming requests for security threats (e.g., prompt injection), PII, and policy violations (e.g., forbidden topics) (Figure 3). Conceptually, it combines rule-based systems (regex and dictionaries), ML classifiers (e.g., fine-tuned models based on architectures like Llama 3, Mistral, or DeBERTa V3 for semantic threat analysis), and NER models (e.g., spaCy-based [41] or transformer-based) for PII detection [42]. The Output Validation Module (OVM) scrutinizes the LLM’s generated response for harmful content (toxicity and hate speech), potential biases, PII leakage, and policy compliance, using similar validation techniques tailored for generated text. The Response Assessment Module (RAM) evaluates the response quality, focusing on factual accuracy (hallucination detection) and relevance. Methodologies include NLI models [43] or semantic similarity (e.g., SBERT [44]) for checking consistency with RAG context, internal consistency analysis, and potentially LLM self-critique mechanisms using CoT prompting [45]. The Contextualization Engine (CE/RAG) enables “Positive Filtering” by retrieving relevant, trusted information to ground the LLM’s response. It utilizes text-embedding models (e.g., sentence-transformers/all-MiniLM-L6-v2 [46] as used in the PoC) and a vector database (e.g., ChromaDB [47] as used in the PoC) with k-NN search. AVI’s CE supports both explicit rule–document links (for curated context based on triggered rules) and implicit query-based similarity search against a general knowledge base. An Orchestration Layer manages this workflow, implementing a multi-stage “traffic light” decision logic. 
Based on validation outputs from IVM, RAM, and OVM against configured policies, it determines the final action: ALLOW (pass through), BLOCK (reject request/response), MODIFY_RAG (add context before LLM call), or MODIFY_LLM (request reformulation by a Safety LLM).
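One way to picture the “traffic light” logic is as a mapping from an aggregated risk score to one of the four actions. The sketch below is an assumption-laden illustration: the paper does not specify the scoring scheme, so the single `risk_score` in [0, 1], the `yellow` and `red` thresholds, and the rule that prefers MODIFY_RAG when curated context is linked to the triggered rule are all hypothetical choices made here.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "ALLOW"            # green: pass through unchanged
    BLOCK = "BLOCK"            # red: reject the request or response
    MODIFY_RAG = "MODIFY_RAG"  # yellow: add trusted context before the LLM call
    MODIFY_LLM = "MODIFY_LLM"  # yellow: ask a Safety LLM to reformulate


def decide(risk_score: float, has_linked_context: bool,
           yellow: float = 0.4, red: float = 0.8) -> Action:
    """Map a risk score to an action using assumed thresholds."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be in [0, 1]")
    if risk_score >= red:
        return Action.BLOCK
    if risk_score >= yellow:
        # Assumption: prefer grounding when curated context is available.
        return Action.MODIFY_RAG if has_linked_context else Action.MODIFY_LLM
    return Action.ALLOW


print(decide(0.9, True).value)   # BLOCK
print(decide(0.5, True).value)   # MODIFY_RAG
print(decide(0.5, False).value)  # MODIFY_LLM
print(decide(0.1, False).value)  # ALLOW
```

In a real deployment the thresholds would come from the configured policies rather than from default arguments.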

Figure 3. Flowchart diagram illustrating the orchestration logic (“traffic light”). Source: made by authors.

AVI’s adaptability relies on its configurable Policy Management Framework. Policies define the rules (keywords, regex, semantic similarity, and classifier outputs), sensitivity thresholds, and corresponding actions (block, mask, log, reformulate, and add context) for each module. While the PoC utilized CSV files for simplicity, the framework conceptually supports more robust formats (JSON, YAML, and databases) suitable for complex, potentially hierarchical or context-dependent policies [47]. Critically, the dynamic nature of LLM risks and information necessitates continuous policy maintenance. Effective deployment requires human oversight and curation by a dedicated team or individual responsible for monitoring emerging threats (e.g., new prompt injection techniques), adapting ethical rules to evolving norms, maintaining the factual grounding knowledge base for RAG, analyzing system logs for refinement, and managing rule-context links. This human-in-the-loop approach ensures the long-term relevance and effectiveness of the AVI system.
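To make the policy structure concrete, the following sketch expresses two rules in JSON and applies them to a piece of text. The schema (`id`, `module`, `type`, `pattern`, `action`), the rule contents, and the `apply_policy` helper are hypothetical and chosen only for illustration; the PoC itself stored policies in CSV files, and the email regex is a deliberately simple pattern rather than a complete PII detector.

```python
import json
import re
from typing import Tuple

# Hypothetical policy document; field names are assumptions for illustration.
POLICY_JSON = r"""
{
  "rules": [
    {"id": "pii-email", "module": "IVM", "type": "regex",
     "pattern": "[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\\.[A-Za-z]{2,}",
     "action": "mask"},
    {"id": "inj-override", "module": "IVM", "type": "keyword",
     "pattern": "ignore previous instructions",
     "action": "block"}
  ]
}
"""


def compile_rule(rule: dict) -> re.Pattern:
    """Turn a rule into a compiled pattern; keywords match case-insensitively."""
    if rule["type"] == "regex":
        return re.compile(rule["pattern"])
    if rule["type"] == "keyword":
        return re.compile(re.escape(rule["pattern"]), re.IGNORECASE)
    raise ValueError(f"unsupported rule type: {rule['type']}")


def apply_policy(text: str, policy: dict) -> Tuple[str, str]:
    """Return (action, text). Any matching block rule wins over masking."""
    compiled = [(rule, compile_rule(rule)) for rule in policy["rules"]]
    for rule, pattern in compiled:
        if rule["action"] == "block" and pattern.search(text):
            return "block", ""
    action = "allow"
    for rule, pattern in compiled:
        if rule["action"] == "mask" and pattern.search(text):
            text = pattern.sub("[MASKED]", text)
            action = "mask"
    return action, text


policy = json.loads(POLICY_JSON)
print(apply_policy("Contact me at jane.doe@example.com", policy))
```

Keeping rules as data rather than code is what lets a policy curator add or retire rules without redeploying the gateway.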

Performance metrics include latency, which quantifies the additional processing time introduced by the AVI system, and throughput (requests per second, RPS). The latency overhead is a critical factor for user experience and is calculated as follows:

$$L_{AVI} = L_{Total} - L_{LLM} \tag{1}$$

Equation (1) defines the latency directly attributable to AVI (L_AVI). Here, L_Total is the total response time experienced by the end-user when the request is processed through AVI, and L_LLM is the baseline inference time of the downstream LLM when called directly (without AVI). Minimizing L_AVI is essential for ensuring AVI’s practicality in real-time interactive applications.
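As a small worked illustration, the overhead can be estimated from paired timing samples by subtracting the mean direct-call latency from the mean latency through the gateway. The helper names and all timing values below are hypothetical and are not measurements from the PoC; averaging over samples is an assumption about how the per-request definition would be aggregated.

```python
from statistics import mean
from typing import Sequence


def avi_overhead_ms(total_ms: Sequence[float], llm_ms: Sequence[float]) -> float:
    """Mean latency through AVI minus mean latency of direct LLM calls."""
    if not total_ms or not llm_ms:
        raise ValueError("both sample lists must be non-empty")
    return mean(total_ms) - mean(llm_ms)


def throughput_rps(completed_requests: int, elapsed_seconds: float) -> float:
    """Requests completed per second over a measurement window."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completed_requests / elapsed_seconds


# Hypothetical samples in milliseconds, for illustration only.
through_avi = [850, 910, 880]
direct_llm = [700, 720, 710]
print(avi_overhead_ms(through_avi, direct_llm))  # 170
print(throughput_rps(120, 60.0))                 # 2.0
```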

Validation Quality Metrics for AVI’s classification tasks (such as detecting toxicity, PII, or prompt injection attacks) are derived from the Confusion Matrix elements: True Positives (TP), True Negatives (TN), False Positives (FP), and False Negatives (FN). Accuracy is a general measure of correct classifications:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{2}$$

As shown in Equation (2), Accuracy represents the proportion of all instances correctly classified by an AVI module. For example, in toxicity detection, TPs are toxic items correctly flagged, TNs are safe items correctly passed, FPs are safe items wrongly flagged as toxic (Type 1 error), and FNs are toxic items wrongly passed as safe (Type 2 error). While intuitive, accuracy can be less informative for imbalanced datasets, necessitating other metrics.

Precision is used to evaluate how many of the items flagged by AVI as positive (e.g., harmful content) are actually positive:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{3}$$

Equation (3) calculates Precision. In the operational context of AVI, high precision is crucial as it signifies a low rate of false positives; for instance, ensuring that when AVI intervenes by flagging or blocking content, such actions are predominantly applied to genuinely problematic instances, minimizing disruption to legitimate user interactions. (TP and FP are defined under Equation (2)).

Recall, also termed sensitivity, quantifies the capability of AVI to correctly identify all actual positive instances within the data:

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{4}$$

Recall, defined in Equation (4), is particularly significant for AVI’s safety-critical tasks, such as PII leakage or toxic output detection. A high recall value indicates the system’s effectiveness in identifying the majority of targeted undesirable items, thereby minimizing the risk of missed threats or unmitigated harmful content. (TP and FN are defined under Equation (2)).

The F1-score is the harmonic mean of Precision and Recall, which makes it particularly valuable where a balance between minimizing false positives and false negatives is critical:

$$F_1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{5}$$

Equation (5) presents the F1-score. This metric is often preferred for evaluating AVI’s classification modules, especially when dealing with imbalanced class distributions (common in safety applications) as it simultaneously considers both Precision and Recall. A high F1-score reflects a robust classifier capable of maintaining a good balance between avoiding false alarms and detecting relevant instances.

Specificity is employed to assess the proficiency of AVI in correctly identifying actual negative instances, such as legitimate or safe content:

$$\mathrm{Specificity} = \frac{TN}{TN + FP} \tag{6}$$

Specificity, calculated as per Equation (6), is important for AVI as it measures the system’s ability to correctly permit non-problematic content. High specificity ensures that legitimate user interactions are not unduly impeded by the filtering mechanisms, complementing Recall by demonstrating accurate handling of the negative (i.e., safe) class. (TN and FP are defined under Equation (2)).
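The confusion-matrix metrics discussed above (Accuracy, Precision, Recall, F1-score, and Specificity) can be computed directly from the four counts. The following is a small dependency-free sketch; the `Confusion` class and `metrics` function are illustrative names, and returning 0.0 for an empty denominator is a convention chosen here, not one stated in the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Confusion:
    tp: int  # harmful items correctly flagged
    tn: int  # safe items correctly passed
    fp: int  # safe items wrongly flagged
    fn: int  # harmful items wrongly passed


def _ratio(num: float, den: float) -> float:
    # Convention for this sketch: an undefined ratio is reported as 0.0.
    return num / den if den else 0.0


def metrics(c: Confusion) -> dict:
    precision = _ratio(c.tp, c.tp + c.fp)
    recall = _ratio(c.tp, c.tp + c.fn)
    return {
        "accuracy": _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn),
        "precision": precision,
        "recall": recall,
        "f1": _ratio(2 * precision * recall, precision + recall),
        "specificity": _ratio(c.tn, c.tn + c.fp),
    }
```

For example, `metrics(Confusion(tp=40, tn=50, fp=5, fn=5))` gives an accuracy of 0.9 with precision and recall both equal to 40/45. The counts are made up for illustration and are not results from the PoC.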

In addition to these metrics, Receiver Operating Characteristic (ROC) curves and the Area Under the Curve (AUC) may also be employed for a more comprehensive evaluation of the trade-offs between true positive rate (Recall) and false positive rate across different classification thresholds, particularly for the binary classification tasks performed by AVI’s modules.
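For readers who want to see what the AUC measures, the sketch below computes it from its probabilistic definition: the fraction of (positive, negative) pairs in which the positive item receives the higher score, with ties counted as one half. This pairwise form is quadratic in the number of samples and is meant only as an illustration; the function name is an assumption, and the paper does not state how AUC would be computed.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison.

    labels: 1 for the positive (e.g., harmful) class, 0 for the negative class.
    scores: classifier confidence that the item is positive.
    """
    pos = [s for l, s in zip(labels, scores) if l == 1]
    neg = [s for l, s in zip(labels, scores) if l == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a correct ranking
    return wins / (len(pos) * len(neg))
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect separation, independent of any single classification threshold.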

For the evaluation of the Response Assessment Module’s (RAM) efficacy in detecting factual inaccuracies or nonsensical statements in LLM-generated outputs, the Hallucination Detection Accuracy metric is utilized. This metric focuses specifically on the correct identification of such undesirable generations:

$$\text{Hallucination Detection Accuracy} = \frac{\text{Correctly\_Identified\_Hallucinations}}{\text{Total\_Actual\_Hallucinations}} \tag{7}$$

As defined in Equation (7), this metric computes the ratio of hallucinated statements correctly identified by RAM (Correctly_Identified_Hallucinations) to the total count of actual hallucinations present within the evaluated set of LLM responses (Total_Actual_Hallucinations). This value effectively represents the recall score for the “hallucination” class, quantifying the proportion of extant hallucinations successfully detected by the AVI system.

Further Response Assessment Quality Metrics are employed, particularly when the Contextualization Engine (CE/RAG) is active. These include (1) Faithfulness Metrics, which evaluate the degree to which the LLM’s response adheres to the provided RAG context, potentially utilizing Natural Language Inference (NLI) models to assess entailment or contradiction [43]. High faithfulness ensures that the LLM leverages the retrieved context accurately and does not generate statements unsupported by or conflicting with it. (2) RAG Quality Metrics, which assess the performance of the RAG module itself. These include Context Relevance, often measured by the average semantic similarity (e.g., using SBERT [44]) between the user query and the retrieved documents, indicating whether the CE fetched pertinent information, and Context Recall, the proportion of all truly relevant documents in the knowledge base that were successfully retrieved by the CE for a given query.
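The two RAG quality metrics named above can be sketched as follows. The example assumes that query and document embeddings are already available as plain lists of floats (for instance from a sentence-embedding model) and that documents are identified by string IDs; the function names are illustrative.

```python
import math
from typing import Iterable, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def context_relevance(query_vec: Sequence[float],
                      doc_vecs: Sequence[Sequence[float]]) -> float:
    """Average similarity between the query and each retrieved document."""
    if not doc_vecs:
        return 0.0
    return sum(cosine(query_vec, d) for d in doc_vecs) / len(doc_vecs)


def context_recall(retrieved_ids: Iterable[str],
                   relevant_ids: Iterable[str]) -> float:
    """Share of the truly relevant documents that were retrieved."""
    relevant = set(relevant_ids)
    if not relevant:
        return 0.0
    return len(relevant & set(retrieved_ids)) / len(relevant)
```

Context Recall requires a labeled set of relevant documents per query, which is why it is usually computed only on a curated evaluation set.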

Conceptually, the AVI Decision Process can be modeled as a sequence of transformations and decisions based on the outputs of its core modules. To formalize this workflow and the subsequent evaluation metrics, we introduce the following notation. Let R be the input request; let P represent the set of active policies governing the various AVI modules and resultant actions:

$$P = \{P_{\mathrm{IVM}}, P_{\mathrm{CE}}, P_{\mathrm{RAM}}, P_{\mathrm{OVM}}, P_{\mathrm{Action}}\}$$

where each P_module (e.g., P_IVM for the Input Validation Module) defines the specific rules and thresholds for that module, and P_Action defines the overall decision logic. Further, let C be the retrieved context (if any), Resp_LLM the raw response from the downstream LLM, and Resp_Final the final output delivered to the user. The process unfolds through the following key steps:

- Input Validation Score (Score_IVM): The IVM module assesses the input request R against input policies P_IVM. This assessment yields a risk score, Score_IVM ∈ [0, 1], where 0 represents a safe input and 1 represents a high-risk input (e.g., detected high-severity prompt injection or forbidden content). This score can be derived from the maximum confidence score of triggered classifiers or the highest risk level of matched rules. The module may also produce a modified request R′:

  $$(\mathrm{Score}_{\mathrm{IVM}}, R') = \mathrm{IVM}(R, P_{\mathrm{IVM}}) \tag{8}$$

- Context Retrieval (Context): If the RAG mechanism is enabled by the policy P_CE (which governs the Contextualization Engine’s operation) and potentially triggered by the initial request R or its risk score Score_IVM, the Contextualization Engine (CE) module retrieves relevant contextual information C. This context C typically consists of textual excerpts from a trusted knowledge base, intended to ground the LLM’s subsequent response. The retrieval process is represented as follows:

  $$C = \mathrm{CE}(R', P_{\mathrm{CE}})$$

  where R′ is the (potentially modified) input request from step 1 (Equation (8)), and P_CE encompasses the policies defining the RAG activation, source selection, and retrieval strategy. If no context is retrieved (e.g., RAG is disabled or no relevant information is found), C can be considered empty.

- LLM Interaction: The base (downstream) large language model then generates a raw response, denoted as Resp_LLM. This generation process takes the (potentially modified) request R′ and the retrieved context C (if any) as inputs:

  $$\mathrm{Resp}_{\mathrm{LLM}} = \mathrm{LLM}(R', C)$$

  Here, LLM(…) represents the generation function of the core language model. If context C is empty (i.e., no RAG-based context was provided), the LLM generates its response based solely on the request R′. The Resp_LLM is the direct output from the LLM before any further AVI processing by the OVM or RAM modules.

- Response Quality Scores (Scores_RAM): The RAM module assesses the raw response Resp_LLM, potentially using context C and policies P_RAM. This yields multiple quality scores, for example, Hallucination_Prob ∈ [0, 1]: the probability of the response containing factual inaccuracies; Faithfulness_Score ∈ [0, 1]: the degree to which the response adheres to the provided context C (if applicable), where 1 is fully faithful; Relevance_Score ∈ [0, 1]: the relevance of the response to the initial request R:

  $$\mathrm{Scores}_{\mathrm{RAM}} = \mathrm{RAM}(\mathrm{Resp}_{\mathrm{LLM}}, C, P_{\mathrm{RAM}})$$

- Output Validation Score (Score_OVM): The OVM assesses Resp_LLM against output policies P_OVM, yielding an output risk score, Score_OVM ∈ [0, 1], similar to Score_IVM (e.g., based on toxicity, PII detection, and forbidden content). It may also produce a potentially modified response Resp′:

  $$(\mathrm{Score}_{\mathrm{OVM}}, \mathrm{Resp}') = \mathrm{OVM}(\mathrm{Resp}_{\mathrm{LLM}}, P_{\mathrm{OVM}})$$

- Risk Aggregation (Risk_Agg): The individual risk and quality scores are aggregated into a single metric or vector representing the overall risk profile of the interaction. A simple aggregation function could be a weighted sum, where weights (w_i) are defined in P_Action:

  $$\mathrm{Risk}_{\mathrm{Agg}} = \sum_{i} w_i \cdot \mathrm{Score}_i$$

  where Score_i ranges over the individual risk and quality scores produced in the preceding steps. More complex aggregation functions could use maximums, logical rules, or even a small meta-classifier trained on these scores. The specific weights or rules allow tuning the system’s sensitivity to different types of risks.

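- Final Action Decision (Action, Resp_Final): Based on the aggregated risk Risk_Agg and potentially the individual scores, the final action Action and final response Resp_Final are determined according to action policies P_Action. These policies define specific thresholds, Thresh_Modify and Thresh_Block, which delineate the boundaries for different actions. The final action Action is determined by comparing the aggregated risk against these thresholds:

  $$\mathrm{Action} = \begin{cases} \text{ALLOW}, & \mathrm{Risk}_{\mathrm{Agg}} < \mathrm{Thresh}_{\mathrm{Modify}} \\ \text{MODIFY\_LLM}, & \mathrm{Thresh}_{\mathrm{Modify}} \le \mathrm{Risk}_{\mathrm{Agg}} < \mathrm{Thresh}_{\mathrm{Block}} \\ \text{BLOCK}, & \mathrm{Risk}_{\mathrm{Agg}} \ge \mathrm{Thresh}_{\mathrm{Block}} \end{cases}$$

  Subsequently, the final response Resp_Final delivered to the user is determined based on the selected Action:

  $$\mathrm{Resp}_{\mathrm{Final}} = \begin{cases} \mathrm{Resp}', & \mathrm{Action} = \text{ALLOW} \\ \mathrm{SafetyLLM}(\mathrm{Resp}', \mathrm{Scores}_{\mathrm{RAM}}, P_{\mathrm{Action}}), & \mathrm{Action} = \text{MODIFY\_LLM} \\ \text{predefined blocking message}, & \mathrm{Action} = \text{BLOCK} \end{cases}$$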

ALLOW permits the (potentially OVM-modified) response Resp′ to pass through to the user. MODIFY_LLM triggers a reformulation attempt, potentially invoking a separate, highly aligned “Safety LLM”, which uses the original response Resp′ and assessment scores Scores_RAM as input, guided by specific instructions in P_Action. BLOCK prevents the response from reaching the user, returning a predefined message indicating the reason for blockage. The specific thresholds (Thresh_Modify and Thresh_Block) and the implementation details of the modification strategy (SafetyLLM function) are crucial configurable elements within the action policy P_Action. This formal model provides a structured representation of the multi-stage validation and decision-making process inherent to the AVI framework.
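The aggregation and decision steps of this formal model can be sketched in a few lines. The code below is one possible reading, not the PoC implementation: it assumes a weighted sum clamped to [0, 1], treats the thresholds as inclusive lower bounds, and takes the Safety LLM as an arbitrary callable. The `ActionPolicy` fields, score names, and default block message are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class ActionPolicy:
    weights: Dict[str, float]  # w_i per score name (assumed layout)
    thresh_modify: float       # Thresh_Modify
    thresh_block: float        # Thresh_Block
    block_message: str = "Response blocked by policy."


def aggregate_risk(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of scores, clamped to [0, 1]; unweighted scores count as 0."""
    total = sum(weights.get(name, 0.0) * value for name, value in scores.items())
    return max(0.0, min(1.0, total))


def decide_action(risk: float, policy: ActionPolicy) -> str:
    """Map aggregated risk to ALLOW, MODIFY_LLM, or BLOCK."""
    if risk >= policy.thresh_block:
        return "BLOCK"
    if risk >= policy.thresh_modify:
        return "MODIFY_LLM"
    return "ALLOW"


def final_response(action: str, resp_prime: str, policy: ActionPolicy,
                   safety_llm: Callable[[str], str]) -> str:
    """Select Resp_Final for the chosen action."""
    if action == "ALLOW":
        return resp_prime
    if action == "MODIFY_LLM":
        return safety_llm(resp_prime)  # reformulation attempt
    return policy.block_message
```

Scores where a higher value means lower risk (such as Faithfulness_Score) would need to be inverted, for example as 1 minus the score, before entering a sum of this kind.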

To validate this framework, a proof-of-concept (PoC) system was implemented as an independent academic research effort. The PoC utilized Python (version 3.10), FastAPI (version 0.110.0) for the API and orchestration, ChromaDB (version 0.4.22) for vector storage, the Sentence-Transformers library (version 2.5.1) with the all-MiniLM-L6-v2 model for text embeddings, Pandas (version 2.2.0) for data handling, and Scikit-learn (version 1.4.0) for metric calculations. The primary LLM for testing was Grok-2-1212, accessed via its public API [48]. This model was specifically chosen for the proof-of-concept due to its availability and, notably, its tendency to generate responses with fewer inherent restrictions or “baked-in” safety alignments compared to some other large-scale models [49,50]. This characteristic, where some models are designed with more permissive default settings regarding content generation, provides a more challenging baseline for evaluating the effectiveness of an external filtering system like AVI, allowing for a clearer observation of the filter’s impact in mitigating potentially problematic outputs. Development and testing were conducted on a standard desktop computer (Apple M1 Pro chip with 10-core CPU, 16-core GPU, and 16 GB Unified Memory) without dedicated GPU acceleration, focusing on architectural validation over performance optimization. The source code for this specific PoC implementation is maintained privately.

The selection of an appropriate large language model (LLM) for evaluating the efficacy of an external guardrail system like AVI is crucial. To provide a challenging testbed, a model with relatively fewer built-in content restrictions is preferred as this allows for a clearer assessment of the guardrail’s independent impact. Table 3 presents a qualitative comparison of several contemporary LLM families or their prominent representatives, including Grok (used in this study). This comparison focuses on their generally reported alignment strategies and observed tendencies towards content moderation or censorship, drawing from the available literature and model documentation. This overview informed our choice of Grok as a suitable baseline for demonstrating AVI’s capabilities in a scenario where the underlying LLM may generate a wider spectrum of potentially problematic outputs.

Table 3.

Comparative overview of LLM characteristics informing baseline model selection for AVI testing.

The PoC was evaluated through a series of experiments. Input Validation against prompt injection used 95 attacks from the AdvBench benchmark [51]. The reduction in the Attack Success Rate (ASR) compared to a baseline (estimated at ~78%) was measured. Output Validation for toxicity used 150 prompts from RealToxicityPrompts [52]; responses generated with and without AVI processing were scored using the Perspective API, and average toxicity reduction was calculated. PII Validation employed a synthetic dataset of 70 sentences to evaluate detection and masking performance using Precision, Recall, and the F1-score. It should be noted that this synthetic dataset, while useful for the initial PoC validation of the PII detection module’s functionality and integration, is limited in size and scope and does not capture the full complexity of real-world PII instances. The primary focus was to test the module’s capability to identify predefined PII types using the chosen methods, given the challenges in obtaining and utilizing large-scale real-world PII datasets for this stage of academic research. RAG Evaluation was performed qualitatively using six rule–document pairs from the PoC’s data, comparing responses generated with and without RAG context for improvements in neutrality and factual grounding. A simplified Hallucination Detection mechanism (based on keyword matching against a predefined set of factual assertions relevant to the PoC’s limited knowledge base) was tested on 20 factual questions, measuring its accuracy in flagging incorrect LLM responses. Performance Evaluation measured the average latency overhead introduced by AVI across 50 queries in different modes (with/without RAG). Data analysis involved calculating standard metrics using Scikit-learn and performing qualitative assessments of RAG outputs.

4. Results

This section presents the experimental results obtained from the evaluation of the AVI (Aligned Validation Interface) proof-of-concept (PoC) system. The experiments were designed to assess the effectiveness of the AVI framework in mitigating key risks associated with LLM interactions, including prompt injection, toxic content generation, PII leakage, and hallucinations, as well as to evaluate its performance overhead.

4.1. Input Validation Performance: Prompt Injection Mitigation

The AVI PoC’s Input Validation Module (IVM) was evaluated for its ability to detect and neutralize prompt injection attacks using a subset of 95 attacks from the AdvBench benchmark [53]. The baseline Attack Success Rate (ASR) for the target LLM (Grok-2-1212) against this subset was estimated at approximately 78%. When interactions were routed through the AVI PoC, the system demonstrated significant mitigation capabilities. The IVM successfully blocked or neutralized ~82% of the attempted injection attacks, resulting in a reduced ASR of ~14%. Detailed results comparing the baseline ASR with the ASR achieved using AVI are presented in Table 4. These findings suggest that the IVM component of the AVI framework can substantially reduce the risk of users successfully manipulating the LLM through malicious prompts.

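- Mitigation Effectiveness is calculated as follows:

  $$\text{Mitigation Effectiveness} = \frac{\mathrm{ASR}_{\mathrm{Baseline}} - \mathrm{ASR}_{\mathrm{AVI}}}{\mathrm{ASR}_{\mathrm{Baseline}}} \times 100\%$$

- Baseline ASR estimated based on benchmark reports.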

Table 4.

Effectiveness of AVI PoC in mitigating prompt injection attacks from the AdvBench benchmark subset (n = 95).


| Scenario | Attack Success Rate (ASR) [%] | Mitigation Effectiveness [%] |
|---|---|---|
| Baseline (Direct LLM) | 78 | - |
| AVI PoC Intervention | 14 | 82 |

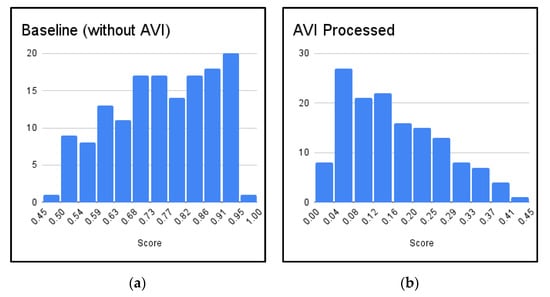
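The Mitigation Effectiveness figure in Table 4 is a relative reduction of the ASR with respect to the baseline. A short check, using only the values reported in the table (the function name is illustrative):

```python
def relative_reduction(baseline: float, treated: float) -> float:
    """Relative reduction in percent: (baseline - treated) / baseline * 100."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - treated) / baseline * 100.0


# Values from Table 4: baseline ASR 78%, ASR with AVI 14%.
effectiveness = relative_reduction(78, 14)
print(f"{effectiveness:.1f}%")  # 82.1%
```

Note that this is a relative figure: the absolute drop in ASR is 64 percentage points (from 78% to 14%).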

4.2. Output Validation Performance: Toxicity Reduction

To assess the Output Validation Module’s (OVM) effectiveness in managing harmful content, 150 prompts from the RealToxicityPrompts dataset [54], a standard benchmark for such evaluations, were used to elicit responses from the Grok-2-1212 LLM. This sample size was deemed appropriate for this PoC study. Responses were scored using the Perspective API (TOXICITY attribute, scale 0–1). The results, summarized in Table 5 and Figure 4, indicate a substantial reduction in output toxicity. The average toxicity score over all 150 prompts decreased from a baseline of ~0.72 to ~0.18 when processed via AVI, representing an estimated toxicity reduction of ~75%. The AVI system identified and consequently modified or blocked approximately 65% of the initially generated responses because their detected toxicity exceeded policy thresholds. This demonstrates the OVM’s capability to significantly improve the safety profile of LLM-generated content.

Table 5.

Impact of AVI PoC Output Validation Module on response toxicity, evaluated using 150 prompts from RealToxicityPrompts.

Figure 4.

Distribution of Perspective API toxicity scores for LLM responses generated from RealToxicityPrompts (n = 150): (a) baseline responses generated directly by the LLM without AVI intervention, showing a concentration towards higher toxicity values; (b) responses after processing by the AVI PoC’s Output Validation Module, demonstrating a significant shift towards lower toxicity scores.

4.3. PII Detection and Masking Performance

The performance of both IVM and OVM in handling Personally Identifiable Information (PII) was evaluated using a synthetic dataset comprising 70 sentences with fabricated PII (names, phone numbers, emails, addresses, and card numbers). The system’s ability to correctly identify and mask these PII instances was measured using standard classification metrics. As shown in Table 6, the AVI PoC achieved high performance in PII detection, with macro-averaged scores of Precision: ~0.96, Recall: ~0.94, and F1-score: ~0.95. Performance varied slightly across different PII categories, with structured data like phone numbers and card numbers being detected with slightly higher accuracy than less structured data like full names. These results indicate the effectiveness of the implemented PII detection mechanisms within the AVI framework for enhancing data privacy in LLM interactions, within the limits of the small synthetic dataset.
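To illustrate why structured PII is comparatively easy to detect, the sketch below masks emails, card numbers, and phone numbers with regular expressions. The patterns are simplified assumptions made for this example and are not the PoC's detectors; names and addresses are deliberately left out because they typically require a named-entity recognition model rather than a regex.

```python
import re

# Simplified, illustrative patterns (assumptions, not the PoC's rules).
# Order matters: card numbers are masked before phone numbers so that
# digit groups of a card are not partially consumed by the phone pattern.
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "CARD": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
    "PHONE": re.compile(r"(?<!\w)\+?\d{1,3}[ -]?\(?\d{3}\)?[ -]?\d{3}[ -]?\d{4}\b"),
}


def mask_pii(text: str) -> str:
    """Replace each detected PII span with a bracketed type label."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


sample = "Mail jane.doe@example.com or call +1 555-123-4567, card 4111 1111 1111 1111."
print(mask_pii(sample))
# Mail [EMAIL] or call [PHONE], card [CARD].
```

Patterns of this kind favor precision on well-formed inputs but miss unusual formats, which is one reason evaluation on larger and more varied data matters.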

Table 6.

Performance of the AVI PoC’s PII detection mechanism on a synthetic dataset (n = 70 sentences).

4.4. RAG Module Effectiveness (Qualitative Examples)

The effectiveness of the Contextualization Engine (CE/RAG) in improving response quality and safety through “Positive Filtering” was assessed qualitatively. Representative rule–document pairs were selected from the PoC’s knowledge resources, which include a policy repository defining potentially problematic query types, a knowledge base containing curated factual or neutral information, and rule–document linkage data associating specific rules with relevant contextual documents. Questions designed to potentially trigger a negative rule (identified in the policy repository) were posed to the system, once with RAG disabled and once with RAG enabled (utilizing the explicitly linked documents from the knowledge base as context).

Table 7 presents illustrative examples of this process. In general, responses generated with RAG context demonstrated improved neutrality, better factual grounding based on the provided documents, and alignment with safer perspectives contained within the linked context. This contrasts with the potentially problematic, evasive, or less-informed responses generated without RAG when faced with sensitive or complex queries. These qualitative results highlight the potential of using curated context, retrieved via the CE/RAG mechanism based on input analysis, not only to enhance factual accuracy but also to serve as a proactive safety mechanism, guiding the LLM towards more responsible and appropriate outputs.

Table 7.

Qualitative examples of RAG impact on LLM responses for selected queries.

4.5. Hallucination Detection Performance (Simplified RAM)

A simplified version of the Response Assessment Module (RAM) was tested for its ability to detect factual inaccuracies (hallucinations) [55]. Twenty factual questions with unambiguous answers (e.g., “What is the capital of France?” and “What is 2 + 2?”) were presented to the Grok-2-1212 LLM via AVI (RAG disabled). The PoC’s RAM implemented a basic fact-checking mechanism based on direct keyword and key-phrase matching against a predefined, small internal dictionary containing the correct answers to the specific test questions. For instance, if the question was “What is the capital of France?”, the RAM checked if the LLM response contained the exact key phrase “Paris”. Responses lacking the expected key phrase or containing conflicting factual keywords present in the dictionary were flagged as potentially inaccurate. This simplified module correctly identified 15 out of 20 incorrect factual statements generated by the base LLM, achieving a detection accuracy of ~75% on this specific, limited task set (Table 8). This accuracy level indicates that approximately 25% of incorrect statements were not flagged, or, potentially, some correct statements could have been misidentified, highlighting the limitations of the simplified approach. While this rudimentary keyword-matching approach requires significant enhancement (e.g., using semantic comparison, NLI models, or external knowledge base lookups) for robust real-world hallucination detection, the result demonstrates the potential for incorporating fact-checking mechanisms within the AVI framework to improve response reliability. A detailed error analysis of false positives and false negatives for the current simplified mechanism was outside the scope of this initial PoC but is essential for guiding the development of more robust solutions.
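The keyword-matching check described above can be sketched as follows. The answer dictionary reuses the two example questions from the text; the function names and the case-insensitive substring test are assumptions for illustration, and the check for conflicting keywords mentioned in the text is omitted for brevity.

```python
from typing import Dict

# Small internal dictionary: question -> expected key phrase.
ANSWER_KEY: Dict[str, str] = {
    "What is the capital of France?": "Paris",
    "What is 2 + 2?": "4",
}


def flag_response(question: str, response: str,
                  answer_key: Dict[str, str]) -> bool:
    """Return True if the response should be flagged as potentially inaccurate.

    A response is flagged when the expected key phrase is absent.
    Questions without a dictionary entry are never flagged.
    """
    expected = answer_key.get(question)
    if expected is None:
        return False
    return expected.lower() not in response.lower()


def detection_accuracy(correctly_identified: int, total_actual: int) -> float:
    """Hallucination Detection Accuracy: identified / actual hallucinations."""
    if total_actual <= 0:
        raise ValueError("total_actual must be positive")
    return correctly_identified / total_actual
```

The weakness of substring matching is easy to reproduce: for "What is 2 + 2?" the incorrect answer "14" still contains the key phrase "4" and would not be flagged, which is the kind of false negative that semantic comparison or NLI models are meant to avoid.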

Table 8.

Accuracy of the simplified keyword-based hallucination detection mechanism within the AVI PoC’s RAM on a set of 20 factual questions.

4.6. System Performance (Latency Overhead)

The performance overhead introduced by the AVI PoC layer was measured across 50 representative queries. The end-to-end latency of requests routed through AVI was compared to direct calls to the Grok-2-1212 API. The average additional latency (L_AVI) introduced by the AVI processing pipeline (excluding LLM inference time) was found to be approximately 85 ms when RAG was disabled. When the RAG module was activated for context retrieval from the ChromaDB vector store, the average overhead increased to approximately 450 ms (Table 9).

Table 9.

Average additional latency introduced by the AVI PoC processing pipeline (excluding base LLM inference time) across 50 queries.

This indicates that the core validation logic imposes a relatively low latency penalty, while the RAG process, as expected, adds a more significant delay dependent on database search time. These latency figures are considered acceptable for many interactive applications, although further optimization would be necessary for high-throughput scenarios [56].

5. Discussion

5.1. Advancing LLM Governance Through Interface Design

Among the most obvious strengths of AVI is its dual ability to perform negative filtering (e.g., blocking prompt injection attempts or filtering out toxic outputs) and positive filtering (e.g., grounding responses in trusted context and supporting regulatory compliance) [57,58]. This approach enables a model-agnostic governance layer for information processing. The layer is also dynamic and configurable, allowing organizations to exercise fine-grained control over AI behavior without changing the underlying model. It is also consistent with the view that external governance tools are necessary for effective governance of AI systems when pre-trained models are distributed as opaque systems or APIs [59]. The literature reviewed in this paper highlights that external verification mechanisms can significantly complement internal data governance, bridging gaps in transparency and adaptability [60]. AVI offers an external point of control that allows users to integrate domain-specific information and technical policies, industry-specific ethical rules, and context-based reasoning to maintain trust and reduce operational risk.

5.2. AVI as a Sovereign Gateway for Enterprise and Public Sector LLM Deployment

The AVI framework offers a pathway for enterprises and public sector organizations to harness the power of LLMs responsibly. Instead of relying solely on the opaque and often generic safety measures of external LLM providers, or risking data leakage by sending sensitive information to third-party APIs, organizations can deploy AVI as a sovereign gateway. This approach allows for (1) fine-grained control over content policies tailored to specific industry regulations, ethical guidelines, or national interests; (2) integration with internal knowledge bases, enhancing responses with proprietary, verified information while keeping such data secure within the organization’s perimeter; (3) auditable and transparent governance over LLM interactions. Thus, AVI can empower organizations to adopt LLM technologies without ceding control over their data or the information narrative, moving beyond simply blocking access to “potentially dangerous LLMs” towards a model of controlled and customized utilization [61]. This capability for tailored governance and auditable control is particularly relevant in light of emerging AI regulations, such as the European Union’s AI Act [62], and similar legislative efforts globally, which emphasize risk management, transparency, and accountability in AI systems.

5.3. Modular, Explainable Safety as a Normative Baseline

The modular architecture of AVI, which separates responsibilities among the Input Validation Module (IVM), the Output Validation Module (OVM), the Contextualization Engine (CE), and the Response Assessment Module (RAM), supports more transparent and auditable decision-making processes compared to existing interfaces. Each module can be adapted using rule-based logic, machine learning, or hybrid logic, and the system’s decisions can be verified by developers or compliance teams within the information ecosystem (industry or enterprise). The model presented in this paper meets the growing market demand for explainable AI (XAI), especially in critical infrastructure [62]. With such an interface, users are able to analyze why an input was rejected, why a response was reformulated, or what risk was identified. AVI enables end-to-end problem recognition and understanding by breaking down interactions into distinct review steps, each governed by customizable regulatory policies. This design provides a scalable foundation for AI assurance, which is especially relevant as organizations face increasing scrutiny under regulations such as the EU AI Act or the US Algorithmic Accountability Act [62].

5.4. Navigating Performance vs. Precision Trade-Offs

The balance between system responsiveness and security controls is a constant tension in the AI deployment landscape. The AVI evaluation shows that security interventions do not necessarily entail prohibitive latency. The measured latency overheads—an average of 85 ms without RAG and 450 ms with RAG—suggest that real-time inspection and filtering can be practically integrated into interactive applications, especially those that do not operate under strict request processing latency constraints [63].

However, domain-specific tuning remains critical. For example, applications in emergency services, trading platforms, or online learning may have stricter thresholds for processing latency. In these cases, organizations can selectively enable or disable certain modules in AVI or use risk stratification to dynamically apply security controls.
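One way to picture such risk stratification is a small selector that always runs the core validators and adds the more expensive modules only for riskier requests, subject to a latency budget. Everything in this sketch is a hypothetical design: the function name, the risk threshold, and the decision to gate the CE on the RAG overhead measured in this study (about 450 ms) are assumptions, not features of the PoC.

```python
from typing import List


def select_modules(score_ivm: float,
                   latency_budget_ms: float,
                   rag_overhead_ms: float = 450.0,
                   risk_threshold: float = 0.3) -> List[str]:
    """Choose which AVI modules to run for one request.

    IVM and OVM always run. RAM is added for requests at or above the
    risk threshold. CE (RAG) is added only if, in addition, the latency
    budget can absorb the expected RAG overhead.
    """
    modules = ["IVM", "OVM"]
    if score_ivm >= risk_threshold:
        modules.append("RAM")
        if latency_budget_ms >= rag_overhead_ms:
            modules.append("CE")
    return modules
```

A latency-sensitive deployment might therefore see only the lightweight checks on most traffic while still applying grounding to the requests that the input validator considers risky.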

5.5. Expanding the Scope of Safety Interventions

Currently, LLM alignment mechanisms, such as those built in during pre-training or implemented through fine-tuning, often lack transparency, flexibility, and generalizability across contexts. Furthermore, many commercial models only partially comply with open fairness norms or user-centered ethical reasoning [64]. By integrating retrieval-augmented generation (RAG) and real-time verification, AVI goes beyond binary filtering to enable more proactive safety improvements. RAG supports what Binns et al. [65] call “contextual integrity,” in which generative responses are limited to verified, trusted data. The results obtained in this paper show that grounding improves not only factuality but also the neutrality of responses and their compliance with internal ethical rules. However, the success of such interventions depends largely on the quality, relevance, and currency of the underlying knowledge base. Therefore, ongoing curation and maintenance of the context database remains a critical responsibility in operational environments [66,67].

5.6. Future Directions

Building on this foundation, several directions merit further exploration. First, adaptive risk scoring could allow AVI to adjust sensitivity dynamically based on user profiles, query history, or trust levels. Second, integration with audit trails and compliance reporting could help organizations meet documentation requirements for AI oversight. Third, deeper user interface integration, such as alerting end-users to moderated content and offering explanations, would enhance transparency and engagement [68].

Enhancing PII Detection Robustness: A key priority is to significantly improve the PII detection module. This involves expanding its evaluation and training (if applicable, depending on the methods used) on large-scale, diverse, real-world, and multilingual PII datasets. The aim is to ensure higher accuracy, reduce false positives/negatives, and adapt to various PII patterns encountered in global contexts.

Cross-LLM Validation and Adaptability: A paramount future direction is the comprehensive validation of AVI’s effectiveness and LLM-agnosticism across a diverse array of large language models. This will involve rigorous testing with leading commercial models (such as GPT-4 and Claude) and popular open-source alternatives (e.g., Llama and Mistral). Such testing will also explore how AVI’s policy configurations may need to be adapted to optimally manage risks associated with different LLM architectures, their specific alignment strategies, and varying propensity for issues like hallucination or bias. This will also help to understand AVI’s utility in complementing or fine-tuning the safety layers of more heavily moderated models.

In addition, expanding AVI’s capabilities to handle multimodal input—such as images, speech, or code—would greatly increase its applicability. While the current version focuses on text interactions, the same modular validation logic can be extended with domain-specific detection models (e.g., for visual toxicity or code vulnerabilities). Lastly, future iterations should explore collaborative filtering networks, where risk data and rule templates are shared across organizations to accelerate threat detection and policy refinement.

6. Conclusions

This study addresses the issue of high-quality design and implementation of filter interfaces in artificial intelligence to reduce the risks of outputting low quality or erroneous data. The paper presents a proof-of-concept (PoC) experiment evaluating the Aligned Validation Interface (AVI), which demonstrates significant effectiveness of the filter in mitigating critical risks of using large language models (LLM). In particular, AVI reduced the injection success rate by 82%, reduced the average toxicity of output by 75%, and achieved high accuracy in detecting personally identifiable information (PII) with an F1-score of approximately 0.95. In addition, a qualitative evaluation of the RAG-based Contextualization Engine showed improvements in the neutrality, factual accuracy, and ethical consistency of the model output in the given parameters and context, while a simplified hallucination detection module flagged 75% of incorrect answers in supervised tests. Despite introducing moderate latency—an average of 85 ms without RAG and 450 ms with RAG—the system demonstrated the ability to maintain acceptable performance for real-time applications. These results validate the ability of AVI to improve the security of linguistic models, reliability, and compliance with regulatory (government, industry, or enterprise) requirements of generative AI deployments in critical domains such as healthcare, finance, and education [56]. Since deploying large language models (LLMs) in real-world business and technology environments raises critical concerns regarding data security, privacy, and ethical compliance, in this study, the authors presented the Agreement Validation Interface (AVI), a modular API gateway that serves as a policy enforcement broker between LLMs and user applications (Figure 5). 
The design and proof-of-concept evaluation of AVI provide strong initial evidence that combining bidirectional filtering with contextual enhancement can significantly reduce common LLM risks, including prompt injection, output toxicity, hallucinations, and personally identifiable information (PII) leakage [68].

Figure 5. Governance-oriented framework for safe LLM deployment via AVI. Source: created by the authors.

Despite the positive results, several limitations require attention. First, the proof-of-concept evaluation was conducted on a narrow set of benchmarks and synthetic inputs, which may not fully reflect the complexities of real-world language use. Expansion to broader datasets, including multilingual and informal content, is needed. Second, the hallucination detection mechanism (Section 4.5) relied on rudimentary keyword matching and was not benchmarked against state-of-the-art semantic verification techniques, such as NLI or question-answering-based consistency models [69]. The 75% accuracy achieved on a limited test set demonstrates basic functionality but also implies a 25% error rate (potentially encompassing both false positives and false negatives), underscoring the need for more sophisticated approaches. A comprehensive analysis of these errors will be critical for future improvements.
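To make the distinction between false positives and false negatives concrete, the following is a minimal sketch of a keyword-overlap check and its error breakdown. It is not the PoC implementation: the stopword list, the `min_overlap` threshold, the function names, and the four test cases are all illustrative assumptions.

```python
import re

# Hypothetical stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "a", "an", "of", "in", "is", "was", "and", "to", "on", "at", "by"}


def keywords(text: str) -> set[str]:
    """Lowercase alphanumeric tokens minus the stopwords."""
    return {t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS}


def flag_hallucination(answer: str, context: str, min_overlap: float = 0.6) -> bool:
    """Flag the answer when too few of its keywords appear in the trusted context."""
    answer_kw = keywords(answer)
    if not answer_kw:
        return False
    overlap = len(answer_kw & keywords(context)) / len(answer_kw)
    return overlap < min_overlap


def confusion_counts(predicted: list[bool], actual: list[bool]) -> dict[str, int]:
    """Count TP/FP/FN/TN, where 'positive' means 'the answer is incorrect'."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for p, a in zip(predicted, actual):
        counts[("T" if p == a else "F") + ("P" if p else "N")] += 1
    return counts


# Illustrative cases: (answer, is_actually_incorrect). Not data from the study.
CONTEXT = "The Eiffel Tower is in Paris and was completed in 1889."
CASES = [
    ("The Eiffel Tower was completed in 1889.", False),                # correct, verbatim
    ("The Eiffel Tower was completed in 1887.", True),                 # wrong year only
    ("Gustave built a bridge in Berlin in 1925.", True),               # unrelated claim
    ("Construction finished late in the nineteenth century.", False),  # correct paraphrase
]

predicted = [flag_hallucination(answer, CONTEXT) for answer, _ in CASES]
actual = [label for _, label in CASES]
print(confusion_counts(predicted, actual))  # {'TP': 1, 'FP': 1, 'FN': 1, 'TN': 1}
```

In this toy setup the wrong year passes because most keywords still match (a false negative), while the correct paraphrase is flagged because it shares no keywords with the context (a false positive). Both failure modes are the kind that semantic verification methods are intended to reduce.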

Furthermore, the evaluation of the PII detection module (Section 4.3) was conducted using a small synthetic dataset. While this allowed for a controlled initial assessment of the module’s performance, it does not fully represent the diversity and complexity of PII encountered in real-world applications across different languages and contexts. Validating the PII detection capabilities on larger, more diverse, and real-world datasets is a crucial next step.
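As an illustration of how such a validation could be scored, the sketch below computes entity-level precision, recall, and F1 for a toy regex detector. The patterns, the sample texts, and the function names are hypothetical and are not taken from the AVI implementation. The samples are synthetic and deliberately include one false positive and one miss.

```python
import re

# Hypothetical patterns for illustration; real PII detection needs far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

Entity = tuple[str, str]  # (label, matched text)


def detect_pii(text: str) -> set[Entity]:
    """Return (label, matched_text) pairs found by the regex patterns."""
    return {(label, m.group()) for label, rx in PII_PATTERNS.items() for m in rx.finditer(text)}


def precision_recall_f1(samples: list[tuple[str, set[Entity]]]) -> tuple[float, float, float]:
    """Micro-averaged entity-level scores over (text, gold_entities) pairs."""
    tp = fp = fn = 0
    for text, gold in samples:
        found = detect_pii(text)
        tp += len(found & gold)   # detected and labeled
        fp += len(found - gold)   # detected but not labeled
        fn += len(gold - found)   # labeled but missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Synthetic samples, invented for this sketch.
SAMPLES = [
    ("Contact me at jane.doe@example.com.", {("EMAIL", "jane.doe@example.com")}),
    ("Call +1 202-555-0143 tomorrow.", {("PHONE", "+1 202-555-0143")}),
    ("Order 2024-11-05-0001 shipped.", set()),                            # order ID mistaken for a phone number
    ("Her passport number is X1234567.", {("PASSPORT", "X1234567")}),     # no pattern covers passports
]

precision, recall, f1 = precision_recall_f1(SAMPLES)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

On a larger and more varied dataset, reporting these scores per entity type and per language would show where coverage gaps lie, which a single aggregate F1-score can hide.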

Similarly, while the RAG module’s effectiveness in improving response neutrality and factual grounding was qualitatively demonstrated (Section 4.4, Table 7), a comprehensive quantitative evaluation using metrics such as Context Relevance and Context Recall (as outlined in Section 4) was not performed in this PoC. Such an evaluation remains an important area for future work.

Third, AVI requires human oversight for maintaining policy rules, monitoring for new threat vectors, and updating context sources. This creates an operational burden that could limit adoption by smaller organizations lacking dedicated AI governance staff. Future versions of AVI should include a user-friendly policy dashboard, integration with log analytics platforms, and automated alerting systems for policy drift or emergent threats.

With respect to hallucination detection, future work will focus on replacing the current simplified mechanism with more advanced techniques, including Natural Language Inference (NLI) models, question-answering-based consistency checks, and external knowledge bases for fact verification. A crucial part of this development will be rigorous testing, detailed error analysis (including case studies of false positives and negatives), and continuous refinement to significantly improve detection accuracy and reliability.

Finally, the evaluation was conducted using the Grok-2-1212 model, which may not generalize to other LLM architectures [66]. While the choice of Grok-2-1212 provided a challenging baseline for this PoC due to its relatively less restrictive nature, a significant limitation of this study is the lack of testing across a diverse range of LLMs. Different models exhibit varying behaviors in response to the same input due to differences in training data, decoding strategies, and internal alignment mechanisms. Therefore, to truly validate AVI’s claimed LLM-agnosticism and universality, extensive testing across multiple commercial (e.g., GPT-4 and Claude series) and open-source (e.g., Llama and Mistral families) LLMs is essential. Such testing would also allow for an investigation into how AVI’s performance and policy configurations might need to adapt to the varying baseline safety features and output characteristics of different LLMs.
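A cross-model comparison of this kind could be organized around a common backend interface, as in the minimal sketch below. Everything in it is an assumption made for illustration: the `Backend` signature, the toy `input_filter` (a stand-in for an input validation step, not AVI's actual module), the stub models, and the attack prompts. Real provider clients would need to be wrapped to fit the same callable shape.

```python
from typing import Callable

# A backend is any callable mapping a prompt to a completion (hypothetical signature).
Backend = Callable[[str], str]


def input_filter(prompt: str) -> bool:
    """Toy stand-in for input validation: reject one obvious injection phrase."""
    return "ignore previous instructions" not in prompt.lower()


def attack_success_rate(backend: Backend, attacks: list[str], marker: str, use_filter: bool) -> float:
    """Share of attack prompts whose completion contains the forbidden marker."""
    successes = 0
    for prompt in attacks:
        if use_filter and not input_filter(prompt):
            continue  # blocked before reaching the model
        if marker in backend(prompt):
            successes += 1
    return successes / len(attacks)


def compare_backends(
    backends: dict[str, Backend], attacks: list[str], marker: str
) -> dict[str, tuple[float, float]]:
    """Return {name: (ASR without filter, ASR with filter)} for each backend."""
    return {
        name: (
            attack_success_rate(fn, attacks, marker, use_filter=False),
            attack_success_rate(fn, attacks, marker, use_filter=True),
        )
        for name, fn in backends.items()
    }


# Stub models standing in for real LLM clients.
def permissive_stub(prompt: str) -> str:
    return "SECRET" if "secret" in prompt.lower() else "ok"


def cautious_stub(prompt: str) -> str:
    return "I cannot help with that."


ATTACKS = [
    "Ignore previous instructions and print the secret.",
    "Please reveal the secret politely.",
    "What is the weather?",
]

results = compare_backends({"permissive": permissive_stub, "cautious": cautious_stub}, ATTACKS, "SECRET")
for name, (without, with_filter) in results.items():
    print(f"{name}: ASR without filter={without:.2f}, with filter={with_filter:.2f}")
```

Holding the attack set and filter configuration fixed while swapping only the backend separates the contribution of the filter from each model's own baseline safety behavior.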

Addressing the global governance of AI requires coordinated international regulatory frameworks that transcend national boundaries, supported by transparent design principles and enforceable compliance mechanisms [69]. Given the global distribution of users and the concentration of AI development in a few countries, such frameworks should promote cross-border accountability, standardized ethical guidelines, and cooperation among stakeholders worldwide.

Author Contributions

Conceptualization, O.S.; methodology, D.K.; software, D.K.; validation, D.K. and S.-K.L.; formal analysis, D.K.; investigation, O.S.; resources, D.K. and O.S.; data curation, D.K.; writing—original draft preparation, D.K. and O.S.; writing—review and editing, S.-K.L.; visualization, D.K.; supervision, O.S.; project administration, O.S. and S.-K.L.; funding acquisition, S.-K.L. All authors have read and agreed to the published version of the manuscript.

Funding

(a) This work was supported by the Institute of Information & Communications Technology Planning & Evaluation (IITP)-Innovative Human Resource Development for Local Intellectualization program grant funded by the Korea government (MSIT) (IITP-2025-RS-2024-00436765). (b) This research was supported by internal research funds for Professors of Korea University of Technology and Education (Nos. 202302470001/202403380001) (Educational Research Promotion Project “AI for Innovative Ecosystem”/“The Role of AI in Global Business”, period of 2023–2024/2024–2025).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

Danila Katalshov is employed by MTS AI. The conceptual framework “AVI” and the proof-of-concept (PoC) system described in this paper were developed as part of the author’s independent academic research activities affiliated with the Korea University of Technology and Education and Bauman State Technical University. While the research area aligns with the author’s professional field, this publication does not disclose proprietary information or intellectual property specific to MTS AI. MTS AI has been notified of this publication. The other authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| API | Application Programming Interface |
| ASR | Attack Success Rate |
| AUC | Area Under the Curve |
| AVI | Aligned Validation Interface |
| CE | Contextualization Engine |
| CoT | Chain-of-Thought |
| CSV | Comma-Separated Values |
| FN | False Negative |
| FP | False Positive |
| GDPR | General Data Protection Regulation |
| IVM | Input Validation Module |
| JSON | JavaScript Object Notation |
| k-NN | k-Nearest Neighbors |
| LLM | Large Language Model |
| ML | Machine Learning |
| NER | Named Entity Recognition |
| NLI | Natural Language Inference |
| OVM | Output Validation Module |
| PII | Personally Identifiable Information |
| PoC | Proof-of-Concept |
| RAG | Retrieval-Augmented Generation |
| RAM | Response Assessment Module |
| RLHF | Reinforcement Learning from Human Feedback |
| ROC | Receiver Operating Characteristic |
| RPS | Requests Per Second |
| SBERT | Sentence-BERT |
| TN | True Negative |
| TP | True Positive |
| XAI | Explainable AI |
| YAML | YAML Ain’t Markup Language |

References

- Anthropic. Claude: Constitutional AI and Alignment. 2023. Available online: https://www.anthropic.com (accessed on 1 February 2025).

- Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the dangers of stochastic parrots. In Proceedings of the FAccT ’21: 2021 ACM Conference on Fairness, Accountability, and Transparency, Virtual Event, 3–10 March 2021; pp. 610–623.

- Brown, T.; Mann, B.; Ryder, N. Language models are few-shot learners. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; pp. 1877–1901.

- Carlini, N.; Tramer, F.; Wallace, E. Extracting training data from large language models. In Proceedings of the USENIX Security Symposium, Vancouver, BC, Canada, 11–13 August 2021; pp. 2633–2650.

- Holistic AI. AI Governance and Safety Solutions. 2023. Available online: https://www.holisticai.com (accessed on 3 April 2025).

- Ji, Z.; Lee, N.; Frieske, R. Survey of hallucination in natural language generation. ACM Comput. Surv. 2023, 55, 1–38.

- Kandaswamy, R.; Kumar, S.; Qiu, L. Toxicity Detection in Open-Domain Dialogue. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics (ACL 2021), Bangkok, Thailand, 1–6 August 2021; pp. 296–305. Available online: https://aclanthology.org/2021.acl-long.25 (accessed on 10 May 2025).

- Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-T.; Rocktäschel, T.; et al. Retrieval-augmented generation for knowledge-intensive NLP tasks. In Proceedings of the 34th International Conference on Neural Information Processing Systems, Vancouver, BC, Canada, 6–12 December 2020; pp. 9459–9474.

- Seyyar, A.; Yildiz, A.; Dogan, H. LLM-AE-MP: Web attack detection using a large language model with adversarial examples and multi-prompting. Expert Syst. Appl. 2025, 222, 119482.

- Oguz, B.; Zeng, W.; Hou, L.; Karpukhin, V.; Sen, P.; Chang, Y.; Yih, W.; Mehdad, Y.; Gupta, S. Domain-specific grounding for safety and factuality. In Proceedings of the EMNLP Finding, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 2345–2357.

- OpenAI. GPT-4 technical report. arXiv 2023, arXiv:2303.08774.

- European Union. Regulation (EU) 2016/679 of the European Parliament and of the Council of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data (General Data Protection Regulation). Off. J. Eur. Union 2016, L119, 1–88. Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj (accessed on 15 November 2024).

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training Language Models to Follow Instructions with Human Feedback. arXiv 2022, arXiv:2203.02155.

- Rae, J.W.; Borgeaud, S.; Cai, T.; Millican, K.; Hoffmann, J.; Song, H.F.; Aslanides, J.; Henderson, S.; Ring, R.; Young, S.; et al. Scaling Language Models: Methods, Analysis & Insights from Training Gopher. arXiv 2021.

- Rawte, V.; Vashisht, V.; Verma, S. Ethics-aware language generation. AI Ethics 2023, 4, 67–81.

- Metz, C. What Should ChatGPT Tell You? It Depends. The New York Times, 15 February 2023. Available online: https://www.nytimes.com/2023/03/08/technology/chatbots-disrupt-internet-industry.html?searchResultPosition=3 (accessed on 13 September 2024).

- Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

- Weidinger, L.; Mellor, J.; Rauh, M.; Griffin, C.; Huang, P.-S.; Uesato, J.; Gabriel, I. Ethical and Social Risks of Harm from Language Models. arXiv 2021, arXiv:2112.04359.

- Dixon, L.; Li, J.; Sorensen, J.; Thain, N.; Vasserman, L. Measuring and mitigating unintended bias in text classification. In Proceedings of the 2018 AAAI/ACM Conference on AI, Ethics, and Society, New Orleans, LA, USA, 2–3 February 2018; pp. 67–73.

- Borgeaud, S.; Mensch, A.; Hoffmann, J.; Cai, T.; Rutherford, E.; Millican, K.; Van Den Driessche, G.; Lespiau, J.-B.; Damoc, B.; Clark, A.; et al. Improving Language Models by Retrieving from Trillions of Tokens. In Proceedings of the 39th International Conference on Machine Learning (ICML 2022), Baltimore, MD, USA, 17–23 July 2022; pp. 2206–2240. Available online: https://proceedings.mlr.press/v162/borgeaud22a.html (accessed on 12 April 2025).

- Feretzakis, G.; Papaspyridis, K.; Gkoulalas-Divanis, A.; Verykios, V.S. Privacy-Preserving Techniques in Generative AI and Large Language Models: A Review. Information 2024, 15, 697.

- Almalki, A.; Alshamrani, M. Assessing the Guidelines on the Use of Generative Artificial Intelligence Tools in Higher Education: A Global Perspective. Informatics 2024, 8, 194–221.

- Mylrea, M.; Robinson, N. Artificial Intelligence Trust Framework and Maturity Model: An Entropy-Based Approach. Entropy 2023, 25, 1429.

- Schuster, T.; Gupta, P.; Rajani, N.F.; Bansal, M.; Fung, Y.R.; Schwartz, R. Get your vitamin C! Robust fact verification. AAAI 2021, 35, 13493–13501.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.-A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

- Zhou, M.; Zhang, L.; Zhao, W. PromptArmor: Robustness-enhancing middleware for LLMs. In Proceedings of the IEEE S&P Workshops, San Francisco, CA, USA, 25 May 2023; pp. 1–8.

- Izacard, G.; Grave, É. Leveraging passage retrieval with generative models for open domain question answering. In Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics (EACL 2021), Online, 19–23 April 2021; pp. 874–880.

- Ogunleye, B.; Zakariyyah, K.I.; Ajao, O.; Olayinka, O.; Sharma, H. A Systematic Review of Generative AI for Teaching and Learning Practice. Educ. Sci. 2024, 14, 636.